Enhanced Multi-Model Machine Learning-Based Dementia Detection Using a Data Enrichment Framework: Leveraging the Blessing of Dimensionality

Abstract

1. Introduction

2. Related Studies

2.1. ML-Based Predictive Models with Feature Selection

2.2. ML-Based Predictive Models with Increased Data Dimensionality

2.3. Research Highlights and Proposed Work

3. Research Methodology

3.1. Data Collection

3.2. Data Preprocessing

3.2.1. Outlier Handling

3.2.2. Train/Test Split

3.2.3. Data Imputation

3.2.4. Comorbidity Index Mapping

3.2.5. Prior Knowledge-Based Feature Creation

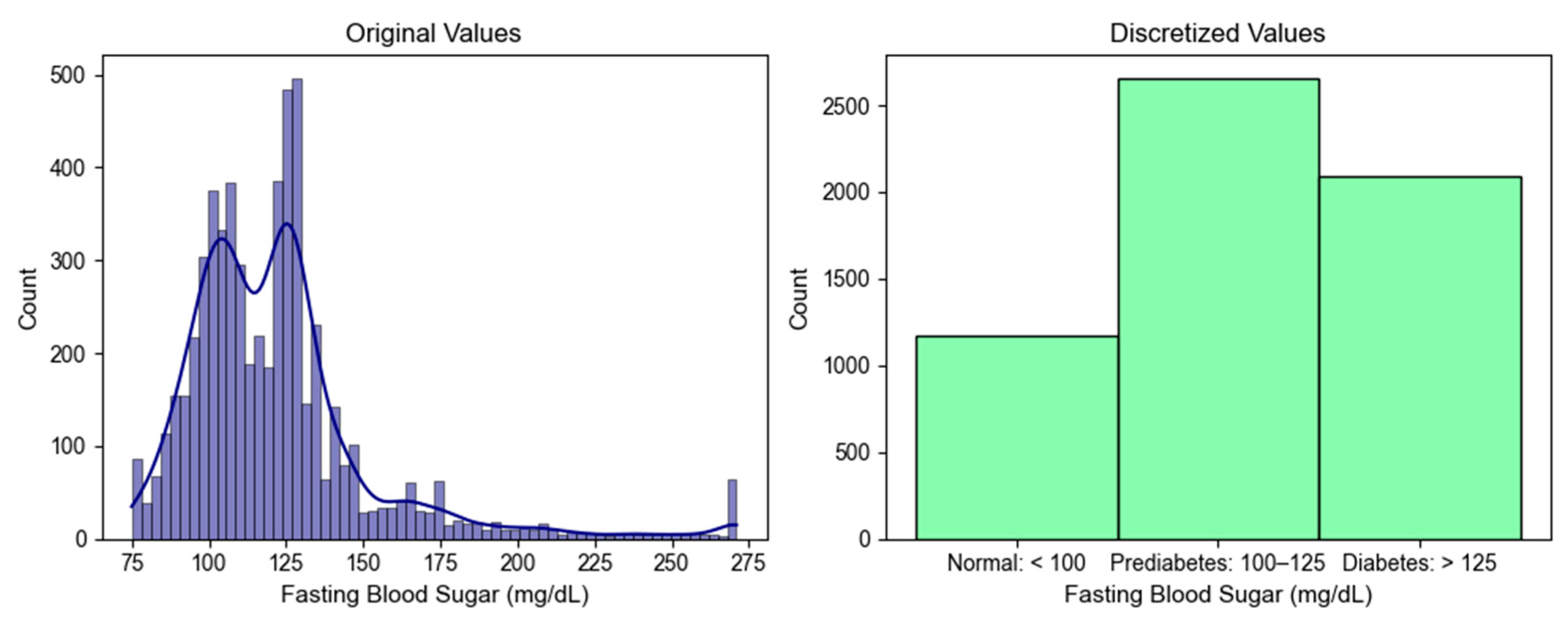

3.2.6. Data Discretization

3.2.7. Feature Scaling

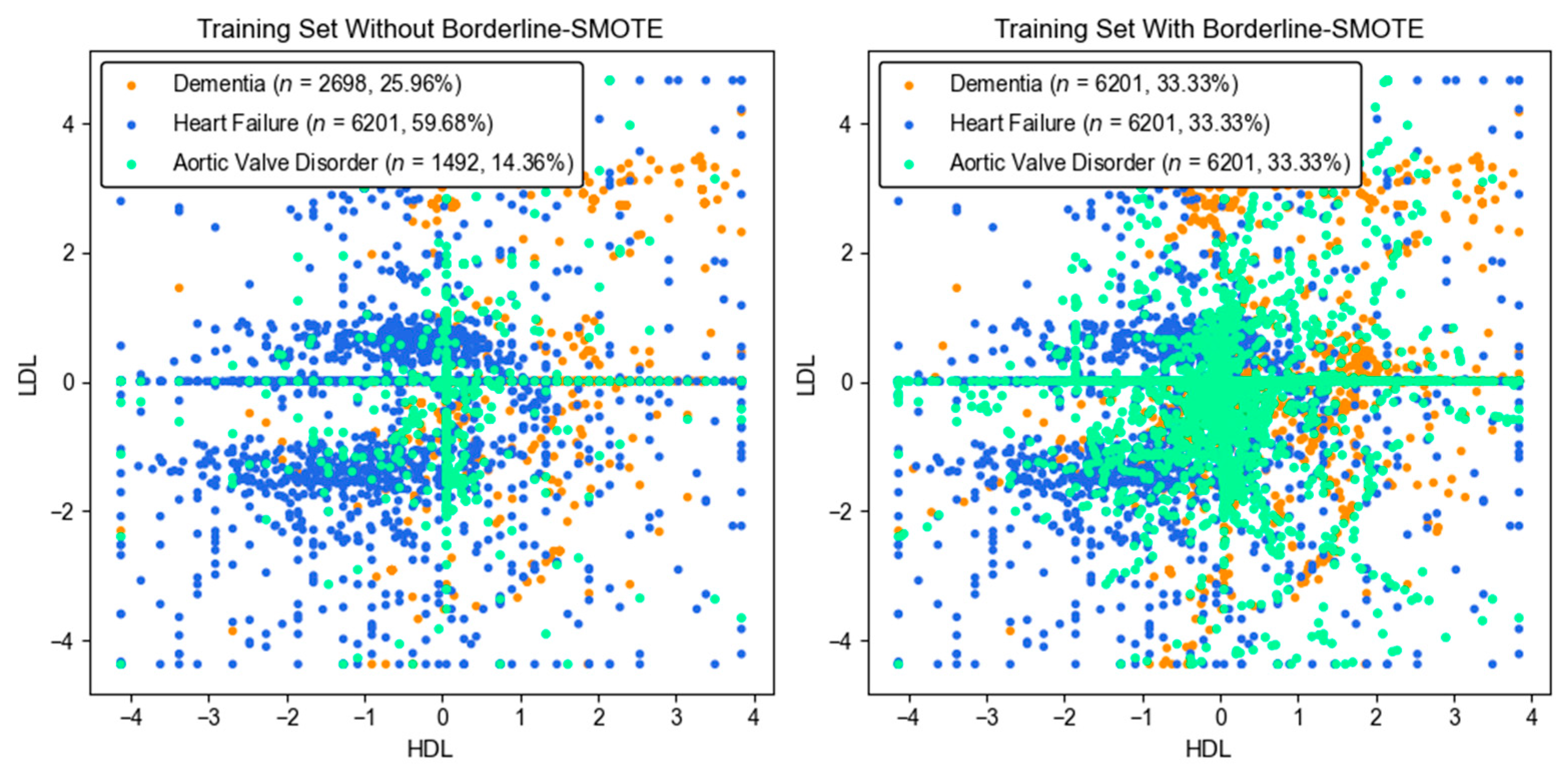

3.2.8. Imbalanced Data Handling

3.3. Model Training and Testing

3.4. Performance Metrics

3.5. Hyperparameter Optimization

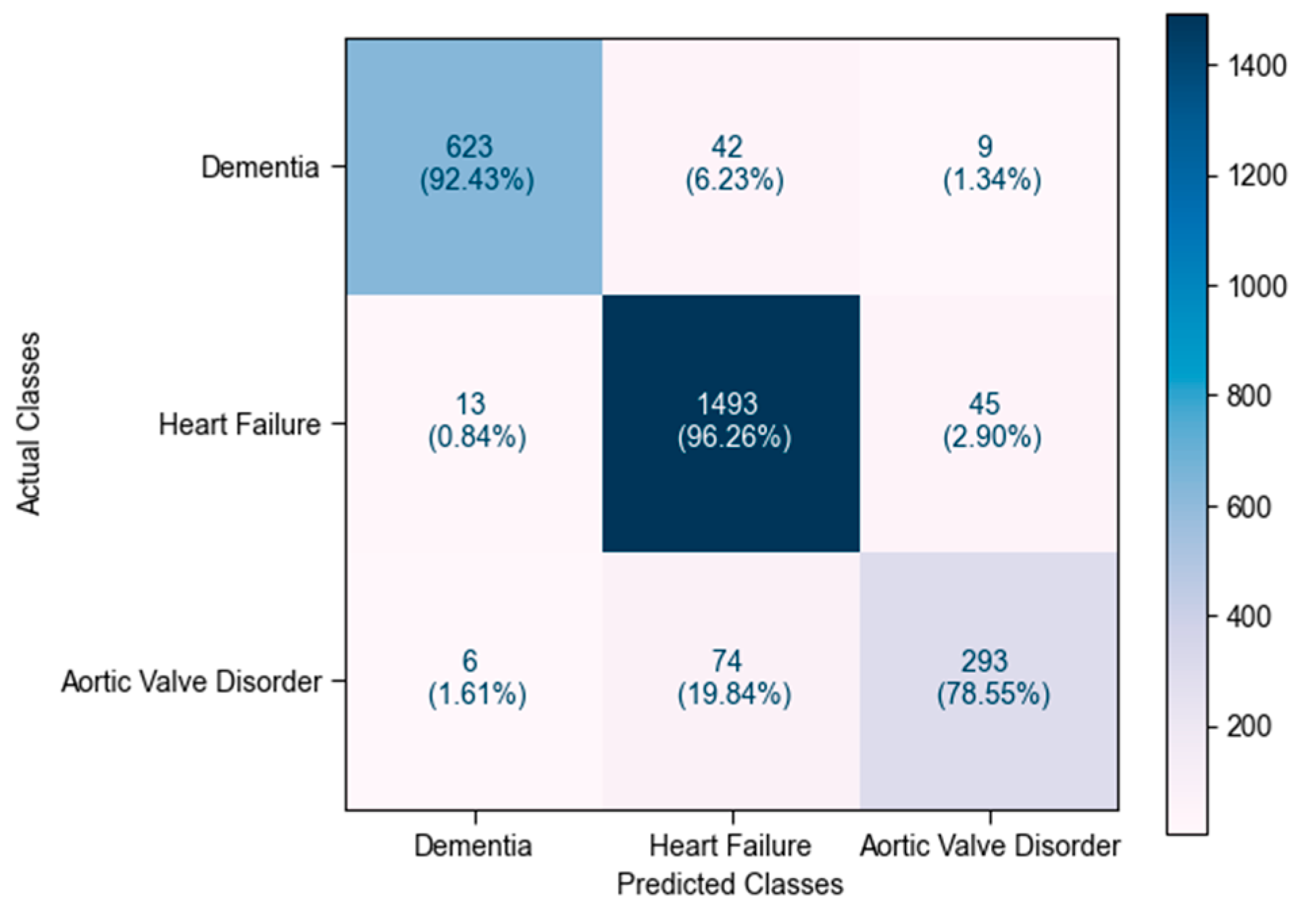

4. Experimental Results and Discussion

4.1. Model Comparison Based on Precision

4.2. Model Comparison Based on Recall

4.3. Model Comparison Based on F1 Score

4.4. Model Comparison Based on Accuracy

4.5. Model Comparison Based on ROC Curve

4.6. Model Comparison Based on PR Curve

4.7. Discussion of Overall Results

4.8. Feature Importance Based on SHAP Values

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement


| Feature | Description | Dementia (n = 3372) | Heart Failure (n = 7752) | Aortic Valve Disorder (n = 1865) | p-Value |
|---|---|---|---|---|---|
| Age | Age in years | 74.83 ± 8.38 | 73.43 ± 8.61 | 71.50 ± 7.83 | 1.88 × 10⁻⁴¹ |
| Weight | Body weight in kg | 51.86 ± 7.94 | 58.25 ± 8.56 | 53.64 ± 8.01 | 6.82 × 10⁻⁸⁶ |
| Height | Height in cm | 155.29 ± 7.86 | 154.80 ± 8.59 | 156.23 ± 8.03 | 2.57 × 10⁻¹¹ |
| Male (n, %) | Biological characteristics of sex | 1551 (46.00) | 3307 (42.66) | 1014 (54.37) | 4.79 × 10⁻¹⁹ |
| Female (n, %) | Biological characteristics of sex | 1821 (54.00) | 4445 (57.34) | 851 (45.63) | 4.79 × 10⁻¹⁹ |
| SBP | Systolic blood pressure in mmHg | 128.63 ± 20.59 | 127.36 ± 16.46 | 131.02 ± 14.95 | 2.85 × 10⁻⁸ |
| DBP | Diastolic blood pressure in mmHg | 70.09 ± 12.36 | 68.10 ± 10.85 | 67.39 ± 9.56 | 3.41 × 10⁻⁶ |
| FBS | Fasting blood sugar in mg/dL | 121.22 ± 24.48 | 125.80 ± 34.26 | 106.18 ± 20.08 | 3.92 × 10⁻⁷² |
| Cholesterol | Total cholesterol in mg/dL | 183.52 ± 34.00 | 163.64 ± 27.87 | 163.21 ± 23.69 | 6.89 × 10⁻¹²⁴ |
| HDL | High-density lipoprotein (HDL) cholesterol in mg/dL | 48.30 ± 8.54 | 40.81 ± 8.54 | 44.64 ± 7.66 | 8.10 × 10⁻²⁰⁰ |
| LDL | Low-density lipoprotein (LDL) cholesterol in mg/dL | 120.09 ± 27.40 | 111.16 ± 23.46 | 107.59 ± 17.96 | 4.17 × 10⁻³⁵ |
| Triglyceride | Triglyceride level in mg/dL | 116.18 ± 26.59 | 117.57 ± 39.72 | 111.41 ± 35.43 | 2.59 × 10⁻⁶ |
| BUN | Blood urea nitrogen (BUN) in mg/dL | 19.65 ± 8.13 | 29.65 ± 20.02 | 20.61 ± 10.32 | 1.98 × 10⁻¹⁰⁸ |
| Creatinine | Serum creatinine in mg/dL | 1.26 ± 0.52 | 1.72 ± 1.58 | 1.28 ± 0.95 | 3.55 × 10⁻⁴⁶ |
| Hb | Hemoglobin in g/dL | 11.44 ± 1.49 | 11.19 ± 2.11 | 11.55 ± 1.27 | 9.72 × 10⁻¹¹ |
| Platelet | Platelet count in platelets/µL | 232,564.16 ± 56,834.95 | 240,627.10 ± 66,390.62 | 244,594.27 ± 52,312.73 | 3.50 × 10⁻⁷ |
| WBC | White blood cell count in cells/µL | 7591.22 ± 1591.14 | 9718.22 ± 3056.44 | 8036.06 ± 1626.68 | 9.85 × 10⁻²¹⁷ |
| Lymphocyte | Lymphocyte in % | 22.08 ± 6.56 | 16.60 ± 8.54 | 18.98 ± 7.38 | 7.23 × 10⁻¹²⁹ |
| Neutrophil | Neutrophil in % | 65.72 ± 8.19 | 78.07 ± 14.59 | 73.40 ± 11.29 | 6.52 × 10⁻²³⁵ |
| K | Potassium in mEq/L | 3.97 ± 0.32 | 3.92 ± 0.59 | 4.06 ± 0.50 | 2.68 × 10⁻¹⁷ |
| Na | Sodium in mEq/L | 137.75 ± 1.96 | 137.71 ± 3.22 | 138.25 ± 2.74 | 1.05 × 10⁻⁶ |
| Smoker (n, %) | Daily or occasional smoker | 14 (0.42) | 150 (1.93) | 16 (0.86) | 1.40 × 10⁻¹ |
| Drinker (n, %) | Daily or occasional drinker | 23 (0.68) | 139 (1.79) | 8 (0.43) | 6.83 × 10⁻⁴ |

| Derived Feature | Description | Dementia | Heart Failure | Aortic Valve Disorder | p-Value |
|---|---|---|---|---|---|
| Body mass index (BMI) | Body fat measurement based on weight in kg divided by the square of height in m | 23.37 ± 1.78 | 24.17 ± 3.37 | 22.65 ± 2.39 | 1.13 × 10⁻¹⁰⁴ |
| Mid blood pressure | The mean of SBP and DBP | 98.36 ± 6.56 | 97.89 ± 9.03 | 98.61 ± 6.61 | 3.43 × 10⁻⁴ |
| Mean arterial pressure | The average blood pressure over a complete cardiac cycle calculated by adding DBP to one-third of the difference between SBP and DBP | 88.45 ± 5.98 | 87.98 ± 8.37 | 88.36 ± 6.05 | 4.74 × 10⁻³ |
| BUN-to-creatinine ratio | The ratio of blood urea nitrogen-to-creatinine | 18.36 ± 5.57 | 20.39 ± 12.94 | 16.04 ± 6.52 | 5.44 × 10⁻⁶¹ |
| Plasma osmolality | The body’s electrolyte–water balance measurement calculated by (2Na) + (FBS/18) + (BUN/2.8) | 291.04 ± 3.36 | 292.66 ± 8.16 | 290.09 ± 4.79 | 4.39 × 10⁻⁶² |
| Na-to-K ratio | The ratio of sodium and potassium | 34.94 ± 2.53 | 35.85 ± 5.38 | 34.65 ± 3.68 | 1.72 × 10⁻³⁴ |
| LDL-to-HDL ratio | The ratio of LDL cholesterol-to-HDL cholesterol | 2.51 ± 0.36 | 2.7 ± 0.49 | 2.52 ± 0.41 | 4.54 × 10⁻¹¹¹ |
| Cholesterol-to-HDL ratio | The ratio of total cholesterol-to-HDL cholesterol | 3.84 ± 0.54 | 3.98 ± 0.64 | 3.79 ± 0.5 | 9.20 × 10⁻⁵¹ |
| Triglyceride-to-HDL ratio | The ratio of triglyceride level to HDL cholesterol | 2.57 ± 0.71 | 2.82 ± 0.81 | 2.62 ± 0.8 | 2.22 × 10⁻⁵⁹ |
| Non-HDL ratio | Total cholesterol minus HDL cholesterol | 129.77 ± 22.48 | 123.89 ± 18.45 | 121.36 ± 17.3 | 8.71 × 10⁻⁶⁴ |
| Neutrophil count | The estimated neutrophil count calculated using the rule of three applied to the total white blood cell count | 5967.54 ± 1297.72 | 7620.04 ± 2941.34 | 6196.43 ± 1548.1 | 2.32 × 10⁻²⁷¹ |
| Lymphocyte count | The estimated lymphocyte count calculated using the rule of three applied to the total white blood cell count | 1633.2 ± 347.41 | 1507.72 ± 548.78 | 1514.33 ± 448.91 | 2.04 × 10⁻³⁵ |
| Neutrophil-to-lymphocyte ratio | The ratio of the estimated neutrophil count to the estimated lymphocyte count | 3.78 ± 1.12 | 5.71 ± 3.25 | 4.4 ± 1.61 | 1.16 × 10⁻²⁸² |
| MDRD | The estimated glomerular filtration rate using the MDRD formula | 47.07 ± 18.28 | 47.08 ± 22.35 | 49.07 ± 20.42 | 7.94 × 10⁻⁴ |
| CKD-EPI | The estimated glomerular filtration rate using the CKD-EPI formula | 46.63 ± 17.79 | 46.97 ± 21.9 | 49.11 ± 19.8 | 5.83 × 10⁻⁵ |
| CVD risk score | The estimated 10-year risk score for atherosclerotic CVD | 2.33 ± 0.77 | 2.21 ± 0.79 | 2.09 ± 0.69 | 4.52 × 10⁻²⁶ |
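
The arithmetic behind most of the derived features in the table above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `derive_features` and all dictionary keys are hypothetical, units are assumed to match the baseline feature table (mg/dL, mEq/L, cells/µL, %), and the "rule of three" is read here as total WBC count multiplied by the differential percentage. MDRD, CKD-EPI, and the CVD risk score are omitted because the table does not state which equation variants were used.

```python
from typing import Dict


def derive_features(r: Dict[str, float]) -> Dict[str, float]:
    """Compute derived features from one patient record (hypothetical key names)."""
    height_m = r["height_cm"] / 100.0

    # Assumed reading of the "rule of three": absolute count = WBC x percentage / 100.
    neutrophil_count = r["wbc"] * r["neutrophil_pct"] / 100.0
    lymphocyte_count = r["wbc"] * r["lymphocyte_pct"] / 100.0

    return {
        "bmi": r["weight_kg"] / height_m ** 2,
        "mid_bp": (r["sbp"] + r["dbp"]) / 2.0,
        # DBP plus one-third of the pulse pressure (SBP - DBP).
        "map": r["dbp"] + (r["sbp"] - r["dbp"]) / 3.0,
        "bun_creatinine_ratio": r["bun"] / r["creatinine"],
        # (2Na) + (FBS/18) + (BUN/2.8), as given in the table.
        "plasma_osmolality": 2.0 * r["na"] + r["fbs"] / 18.0 + r["bun"] / 2.8,
        "na_k_ratio": r["na"] / r["k"],
        "ldl_hdl_ratio": r["ldl"] / r["hdl"],
        "chol_hdl_ratio": r["cholesterol"] / r["hdl"],
        "tg_hdl_ratio": r["triglyceride"] / r["hdl"],
        "non_hdl": r["cholesterol"] - r["hdl"],
        "neutrophil_count": neutrophil_count,
        "lymphocyte_count": lymphocyte_count,
        "nlr": neutrophil_count / lymphocyte_count,
    }


# Illustrative record with made-up values (not taken from the study data).
sample = {
    "weight_kg": 60.0, "height_cm": 160.0, "sbp": 120.0, "dbp": 80.0,
    "fbs": 90.0, "cholesterol": 200.0, "hdl": 50.0, "ldl": 125.0,
    "triglyceride": 100.0, "bun": 14.0, "creatinine": 1.0,
    "wbc": 8000.0, "neutrophil_pct": 60.0, "lymphocyte_pct": 20.0,
    "na": 140.0, "k": 4.0,
}
derived = derive_features(sample)
```

Because every derived value is a deterministic function of the baseline measurements, such features add no new measurements; they only re-express existing ones in forms that clinical practice already uses.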

| Model | Baseline Feature Set (n = 22) | | | | | | Feature Set (n = 108) | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) | AUC | AUPRC | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) | AUC | AUPRC |
| XGBoost | 90.28 ± 0.91 | 87.53 ± 1.20 | 88.79 ± 0.99 | 91.75 ± 0.64 | 0.9730 ± 0.0042 | 0.9452 ± 0.0050 | 91.31 ± 0.84 | 88.68 ± 1.15 | 89.90 ± 0.92 | 92.56 ± 0.67 | 0.9806 ± 0.0030 | 0.9552 ± 0.0050 |
| GB | 88.00 ± 1.11 | 86.82 ± 1.01 | 87.37 ± 0.97 | 90.64 ± 0.68 | 0.9665 ± 0.0040 | 0.9336 ± 0.0061 | 89.59 ± 0.83 | 88.26 ± 1.17 | 88.87 ± 0.79 | 91.72 ± 0.49 | 0.9762 ± 0.0030 | 0.9469 ± 0.0045 |
| RF | 88.52 ± 0.77 | 87.45 ± 0.82 | 87.95 ± 0.63 | 90.95 ± 0.59 | 0.9708 ± 0.0046 | 0.9393 ± 0.0065 | 88.68 ± 0.71 | 87.53 ± 0.65 | 88.07 ± 0.50 | 91.02 ± 0.48 | 0.9761 ± 0.0029 | 0.9455 ± 0.0050 |
| ET | 86.05 ± 0.90 | 86.26 ± 0.83 | 86.12 ± 0.76 | 89.40 ± 0.64 | 0.9666 ± 0.0038 | 0.9325 ± 0.0047 | 86.51 ± 0.94 | 86.10 ± 0.51 | 86.28 ± 0.52 | 89.63 ± 0.38 | 0.9703 ± 0.0032 | 0.9348 ± 0.0046 |
| SVM | 78.61 ± 1.30 | 80.55 ± 1.40 | 79.43 ± 1.26 | 83.27 ± 0.94 | 0.9282 ± 0.0047 | 0.8674 ± 0.0097 | 80.01 ± 1.04 | 81.61 ± 1.31 | 80.48 ± 0.78 | 84.64 ± 0.65 | 0.9407 ± 0.0036 | 0.8727 ± 0.0093 |
| TabNet | 75.36 ± 1.88 | 79.28 ± 1.47 | 76.88 ± 1.76 | 81.00 ± 1.84 | 0.9173 ± 0.0061 | 0.8463 ± 0.0136 | 81.04 ± 1.58 | 81.40 ± 1.03 | 81.28 ± 1.25 | 85.43 ± 1.06 | 0.9250 ± 0.0055 | 0.8558 ± 0.0109 |
| DT | 80.06 ± 1.24 | 83.06 ± 1.08 | 81.33 ± 1.03 | 85.14 ± 0.93 | 0.8753 ± 0.0069 | 0.7231 ± 0.0123 | 79.75 ± 1.53 | 82.44 ± 1.57 | 80.92 ± 1.50 | 84.89 ± 1.26 | 0.8707 ± 0.0110 | 0.7167 ± 0.0189 |
| KNN | 76.82 ± 1.15 | 82.63 ± 1.11 | 78.95 ± 1.16 | 82.17 ± 1.05 | 0.9105 ± 0.0065 | 0.7961 ± 0.0088 | 77.37 ± 1.36 | 83.22 ± 1.30 | 79.49 ± 1.35 | 82.70 ± 1.21 | 0.9178 ± 0.0078 | 0.8090 ± 0.0154 |

| Model | Baseline Feature Set (n = 22) | | | | | | Full Feature Set (n = 108) | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) | AUC | AUPRC | Precision (%) | Recall (%) | F1 Score (%) | Accuracy (%) | AUC | AUPRC |
| XGBoost | 90.20 | 87.88 | 88.97 | 91.76 | 0.9754 | 0.9481 | 91.42 | 89.08 | 90.19 | 92.73 | 0.9816 | 0.9570 |
| GB | 87.70 | 87.46 | 87.57 | 90.72 | 0.9695 | 0.9387 | 89.34 | 88.23 | 88.77 | 91.69 | 0.9765 | 0.9480 |
| RF | 88.11 | 87.68 | 87.88 | 90.69 | 0.9719 | 0.9423 | 88.52 | 88.12 | 88.32 | 90.99 | 0.9747 | 0.9448 |
| ET | 85.64 | 86.72 | 86.12 | 89.15 | 0.9684 | 0.9369 | 86.48 | 85.76 | 86.11 | 89.22 | 0.9689 | 0.9341 |
| SVM | 79.31 | 81.97 | 80.47 | 83.95 | 0.9377 | 0.8762 | 79.83 | 82.84 | 81.10 | 84.72 | 0.9435 | 0.8771 |
| TabNet | 76.42 | 80.42 | 78.05 | 81.79 | 0.9142 | 0.8469 | 78.93 | 80.66 | 79.72 | 83.83 | 0.9255 | 0.8554 |
| DT | 77.66 | 81.73 | 79.28 | 82.99 | 0.8633 | 0.6979 | 78.60 | 82.69 | 80.32 | 83.91 | 0.8709 | 0.7099 |
| KNN | 74.38 | 81.26 | 76.60 | 79.75 | 0.9074 | 0.7862 | 76.60 | 82.97 | 78.75 | 81.60 | 0.9132 | 0.7929 |
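
To make the baseline-versus-full comparison in the table above easier to read, the short sketch below computes the per-model change in F1 score when moving from 22 to 108 features. The F1 values are copied from that table; the variable names are illustrative, and the snippet is only a reading aid, not part of the original analysis.

```python
# F1 scores (%) from the results table above: (baseline n = 22, full n = 108).
f1_scores = {
    "XGBoost": (88.97, 90.19),
    "GB": (87.57, 88.77),
    "RF": (87.88, 88.32),
    "ET": (86.12, 86.11),
    "SVM": (80.47, 81.10),
    "TabNet": (78.05, 79.72),
    "DT": (79.28, 80.32),
    "KNN": (76.60, 78.75),
}

# Gain in percentage points from adding the enriched features.
gains = {
    model: round(full - baseline, 2)
    for model, (baseline, full) in f1_scores.items()
}

largest_gain_model = max(gains, key=gains.get)
improved_models = [model for model, gain in gains.items() if gain > 0]

for model, gain in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:8s} {gain:+.2f} pp")
```

On these numbers, seven of the eight models gain F1 with the larger feature set, and ET is essentially unchanged (a difference of 0.01 percentage points).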

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yongcharoenchaiyasit, K.; Arwatchananukul, S.; Hristov, G.; Temdee, P. Enhanced Multi-Model Machine Learning-Based Dementia Detection Using a Data Enrichment Framework: Leveraging the Blessing of Dimensionality. Bioengineering 2025, 12, 592. https://doi.org/10.3390/bioengineering12060592