1. Introduction

Esophagogastroduodenoscopy (EGD) serves as a paramount diagnostic and therapeutic modality for upper gastrointestinal disorders, with millions of procedures performed worldwide every year. Ensuring the quality of EGD is of utmost importance to accurately diagnose and effectively manage patients’ conditions [1]. In addition to the technical and non-technical skills required for adept endoscope manipulation, EGD training necessitates cognitive acumen and quality control measures, such as minimizing blind spots during examination [2], a factor that profoundly influences the quality of EGD [3].

At present, it is necessary to designate an expert endoscopist as the training director in each training program. Their responsibilities include regularly monitoring trainees’ acquisition of technical and cognitive skills, maintaining comprehensive records of the trainees’ procedural experience (including indications, findings, and adverse events), assessing their performance against defined objective standards, integrating teaching resources into the program, reviewing and updating the training methodology and program quality, discussing evaluation forms with trainers and trainees, and continuously reviewing and updating the training curriculum [4]. However, in clinical practice, there has been a shortage of expert endoscopists with adequate time to supervise and train novice endoscopists.

In recent years, artificial intelligence (AI) has emerged as a transformative force across various medical disciplines, offering new possibilities to enhance clinical practice and medical education [5]. AI-assisted training systems have the potential to revolutionize EGD education by addressing the limitations of traditional training approaches [6]. The main tasks performed by AI are real-time detection, also known as computer-aided detection (CADe), and characterization, referred to as computer-aided diagnosis (CADx) [7]. Notably, AI excels at identifying blind spots during EGD procedures and reminding endoscopists to improve the quality of examination [8,9]. Additionally, this technology can enhance the completeness of photodocumentation. Inspired by these applications, we believe that AI systems can serve as training directors during novice EGD training [10], helping inexperienced endoscopists improve their gastroscopy technique while avoiding blind spots. If effectively applied, such a system could significantly alleviate the training burden on expert endoscopists and enable the training of more qualified endoscopists to meet the demand for gastrointestinal endoscopy [11], thus improving access to high-quality endoscopic services, ensuring accurate diagnoses, and optimizing patient care [11].

Despite existing AI tools for quality control in endoscopy, there remains a lack of systems specifically designed to support novice training in a structured and real-time manner. Without expert supervision, novice endoscopists often struggle to recognize anatomical landmarks and to ensure complete mucosal inspection. This not only leads to variability in training outcomes but also increases the risk of blind spots and missed lesions. A prior study has demonstrated that AI-based systems can significantly improve novice trainees’ performance in EGD, particularly by reducing blind spots and enhancing mucosal visualization [4]. Similarly, systematic reviews have established the value of virtual reality (VR) simulators in accelerating the acquisition of technical skills and reducing patient discomfort, although these technologies often lack real-time AI guidance and are commonly limited to simulated settings [12,13]. Moreover, international position statements and multi-center surveys, including those by the European Society of Gastrointestinal Endoscopy (ESGE), have emphasized the importance of standardized curricula, simulation-based learning, and competency-based assessment in endoscopy training [14,15]. However, most prior AI-focused studies have been limited to single centers, single phases, or simulated environments, and have not integrated real-time AI guidance, structured feedback, and longitudinal assessment within real-world, multi-phase clinical training [4,12,16,17].

Recognizing these challenges, we were motivated to develop an AI-based assistant that could serve as a real-time training director, offering immediate feedback on anatomical coverage, blind spot detection, and photodocumentation completeness. Such a system aims to enhance procedural learning, reduce reliance on expert trainers, and promote standardization in endoscopy education.

2. Materials and Methods

2.1. Development of the EndoAdd Teaching System

To aid novice trainees, a deep learning model based on a convolutional neural network (CNN) was developed to classify EGD images into 26 predefined categories representing upper gastrointestinal tract sites, as depicted in Figure 1.

2.2. Dataset

A dataset comprising EGD images from 5000 patients was constructed to train and validate the EndoAdd system. In vitro images were selected by a junior endoscopist for further annotation. Two senior endoscopists independently labeled these images into 27 categories, including 26 different upper gastrointestinal tract sites and “NA” (not applicable). Another senior endoscopist reviewed the images and labels to ensure quality control and resolve any disagreements between the two endoscopists. Images from 500 patients were randomly divided into training (80%, 400 patients, 35,974 images), validation (10%, 50 patients, 5415 images), and test (10%, 50 patients, 5564 images) sets at the patient level.
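Splitting at the patient level keeps every image from a given patient in exactly one subset, so near-identical frames from the same examination cannot appear in both the training and test data. The following is a minimal Python sketch, not the study's code: the `(patient_id, image)` record format, the helper name `split_by_patient`, and the fixed seed are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_by_patient(records, fractions=(0.8, 0.1, 0.1), seed=42):
    """Hypothetical helper: split (patient_id, image) records at the patient level.

    Returns {"train" | "val" | "test": {patient_id: [images]}}; every patient
    (and therefore every image of that patient) lands in exactly one subset.
    """
    by_patient = defaultdict(list)
    for patient_id, image in records:
        by_patient[patient_id].append(image)

    patients = sorted(by_patient)          # deterministic order before shuffling
    random.Random(seed).shuffle(patients)  # seed is an illustrative assumption

    n = len(patients)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],  # remainder goes to the test set
    }
    return {name: {p: by_patient[p] for p in ids} for name, ids in groups.items()}
```

With 500 patients and the default 80/10/10 fractions, the sketch yields 400/50/50 patients, matching the patient-level counts reported above; the number of images per subset then depends on how many images each patient contributed.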

2.3. Network Architecture

The EndoAdd system employed XceptionNet [11] as the backbone network for EGD frame classification. This convolutional neural network was selected for its efficiency and strong performance in medical image recognition tasks. As shown in Figure 1A, the architecture consists of three main parts: (1) the entry flow, which extracts low-level features such as edges and contours from input images (Figure 1C); (2) the middle flow, repeated eight times, which uses depthwise separable convolutions to extract higher-level semantic features; and (3) the exit flow, which fuses features through global average pooling and a fully connected layer to generate final predictions (Figure 1D). The core building block is the depthwise separable convolution module (Figure 1B), an enhanced version of the Inception module [18], designed to reduce the number of learnable parameters while maintaining feature extraction capability.

In the EndoAdd system, each incoming EGD video frame is resized and preprocessed before being fed into the XceptionNet model. The model outputs a probability distribution across 26 predefined anatomical classes and one “NA” (not applicable) class. These outputs are used to determine the current anatomical region being visualized in real time. The classification results are continuously logged and aggregated to track which anatomical sites have been inspected. Based on this, the system dynamically generates a real-time blind spot map and provides both live procedural guidance and post-procedure summaries. The entire classification system was implemented using PyTorch 1.8 and deployed on a workstation configured for real-time inference (~25 fps), enabling seamless integration into the clinical endoscopy workflow. This classification component forms the core of EndoAdd’s functionality in training support and procedural quality assurance.
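The frame-level logging and aggregation step can be sketched as follows. This is a hypothetical illustration, not the EndoAdd implementation: it assumes the classifier returns one probability vector per frame over the 26 site classes plus “NA” (index 26), and it counts a site as inspected once it has been the top prediction, above an assumed confidence threshold `min_prob`, in an assumed minimum number of frames `min_frames`. Both values are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Set

NUM_SITES = 26          # anatomical sites tracked for blind-spot detection
NA_INDEX = NUM_SITES    # index 26 is the "NA" (not applicable) class


@dataclass
class BlindSpotTracker:
    """Hypothetical aggregator turning per-frame predictions into a blind-spot map.

    min_prob and min_frames are illustrative placeholders, not EndoAdd settings.
    """
    min_prob: float = 0.5
    min_frames: int = 3
    hits: List[int] = field(default_factory=lambda: [0] * NUM_SITES)

    def update(self, probs: Sequence[float]) -> None:
        """Log one frame: count it toward its top-scoring site if confident and not NA."""
        if len(probs) != NUM_SITES + 1:
            raise ValueError(f"expected {NUM_SITES + 1} class probabilities")
        top = max(range(len(probs)), key=lambda k: probs[k])
        if top != NA_INDEX and probs[top] >= self.min_prob:
            self.hits[top] += 1

    def observed(self) -> Set[int]:
        """Sites seen in at least min_frames confident frames."""
        return {site for site, n in enumerate(self.hits) if n >= self.min_frames}

    def blind_spots(self) -> List[int]:
        """Sites not yet confirmed as inspected (the current blind-spot map)."""
        seen = self.observed()
        return [site for site in range(NUM_SITES) if site not in seen]
```

Requiring several confident frames per site is one simple way to keep a single misclassified frame from clearing a blind spot; a production system might instead smooth predictions over time.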

2.4. Network Training

EGD images were input into the network, and the outputs were probabilities corresponding to 26 different upper gastrointestinal tract sites and “NA”. The network weights pretrained on ImageNet [19] were adapted and fine-tuned using our in-house EGD dataset. The binary cross-entropy loss function was utilized:

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{M}\left[y_{ij}\log p_{ij} + \left(1 - y_{ij}\right)\log\left(1 - p_{ij}\right)\right]$$

where $N$ is the number of samples, $M$ is the number of classes, $p_{ij}$ is the predicted probability that sample $i$ belongs to class $j$, and $y_{ij}$ is the corresponding ground-truth label annotated by endoscopists. The neural network was implemented using PyTorch 1.8 on a workstation with an Intel Core i7-6700K CPU, 32 GB RAM, and an NVIDIA GTX 1060 GPU with 6 GB memory.
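As a check on the notation, the binary cross-entropy loss described above can be written directly in plain Python (no PyTorch). This is a sketch under stated assumptions: the function name is hypothetical, and the clipping constant `eps` is an added safeguard against log(0) that the text does not mention. It averages over the N samples and sums over the M classes.

```python
import math
from typing import Sequence


def binary_cross_entropy(
    probs: Sequence[Sequence[float]],
    labels: Sequence[Sequence[int]],
    eps: float = 1e-12,
) -> float:
    """Mean over N samples of the per-class binary cross-entropy summed over M classes.

    probs[i][j]  -> p_ij, predicted probability of class j for sample i
    labels[i][j] -> y_ij, ground-truth label (0 or 1)
    """
    n_samples = len(probs)
    total = 0.0
    for p_row, y_row in zip(probs, labels):
        for p, y in zip(p_row, y_row):
            p = min(max(p, eps), 1.0 - eps)  # clip so log() never receives 0
            total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / n_samples
```

Note on the normalization: `torch.nn.BCELoss` with its default `reduction='mean'` averages over all N × M elements, so its value equals this one divided by M; the two differ only by a constant scale and yield the same gradients up to that factor.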

2.4.1. Data Augmentation

To enhance model generalizability and reduce the risk of overfitting, we implemented comprehensive data augmentation strategies during training. These included random rotations within ±15°, horizontal and vertical flipping, scaling between 95% and 105%, random cropping, and color jitter (adjustment of brightness and contrast within ±10%). Each augmentation was applied with a defined probability to each training image, ensuring a diverse training dataset.

2.4.2. Hyperparameter Optimization

Model hyperparameters (learning rate, batch size, number of epochs, and dropout rate) were optimized using a grid search approach on the training dataset. The grid search covered learning rates from 1 × 10⁻⁵ to 1 × 10⁻³, batch sizes of 16 and 32, and dropout rates ranging from 0.2 to 0.5. The final hyperparameter set was selected based on the highest performance metrics obtained on the validation set during cross-validation.
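The search space above can be enumerated as a Cartesian product. A minimal sketch, assuming the learning-rate range is sampled at one point per decade (1 × 10⁻⁵, 1 × 10⁻⁴, 1 × 10⁻³) and dropout in steps of 0.1; the source gives only the ranges. `evaluate` is a hypothetical callback that trains with one configuration and returns a validation score (higher is better).

```python
from itertools import product
from typing import Callable, Dict, List

LEARNING_RATES = [1e-5, 1e-4, 1e-3]    # assumed sampling of the 1e-5 .. 1e-3 range
BATCH_SIZES = [16, 32]
DROPOUT_RATES = [0.2, 0.3, 0.4, 0.5]   # assumed 0.1 step within 0.2 .. 0.5


def build_grid() -> List[Dict[str, float]]:
    """All hyperparameter combinations in the (assumed) search space."""
    return [
        {"lr": lr, "batch_size": bs, "dropout": dr}
        for lr, bs, dr in product(LEARNING_RATES, BATCH_SIZES, DROPOUT_RATES)
    ]


def grid_search(evaluate: Callable[[Dict[str, float]], float]) -> Dict[str, float]:
    """Return the configuration with the highest validation score from `evaluate`."""
    return max(build_grid(), key=evaluate)
```

Under these assumptions the grid has 3 × 2 × 4 = 24 configurations; each evaluation involves a full training run, which is why grid search is usually kept to a few values per hyperparameter.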

2.4.3. Model Validation

Model performance and stability were assessed using stratified five-fold cross-validation within the training set, with hyperparameters tuned on the validation results of each fold and early stopping applied based on validation loss to prevent overfitting. For final evaluation, the model was retrained on the combined training and validation data using the optimized hyperparameters and tested on the independent held-out test set. Accuracy, sensitivity, specificity, and the area under the receiver operating characteristic curve (AUC) were calculated to comprehensively assess the model’s effectiveness, and the final model’s test-set performance was reported as accuracy and AUC.
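Stratified k-fold assignment keeps the class proportions of every fold close to those of the full training set. A plain-Python sketch of five-fold assignment, assuming one anatomical-site label per image; the function names are hypothetical, and the source does not state whether folds were also grouped by patient, which would require a grouped variant to avoid leakage between folds.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_folds(labels: Sequence[Hashable], k: int = 5, seed: int = 0) -> List[int]:
    """Assign each item to one of k folds, dealing each class out round-robin.

    Returns fold_of, where fold_of[i] in range(k) is the fold of item i.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    fold_of = [0] * len(labels)
    offset = 0
    for label in sorted(by_class, key=str):  # deterministic class order
        indices = by_class[label]
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            fold_of[idx] = (offset + pos) % k
        # Continue the round-robin so small classes do not all start at fold 0.
        offset += len(indices)
    return fold_of


def train_val_indices(fold_of: Sequence[int], fold: int) -> Tuple[List[int], List[int]]:
    """Split item indices into (train, validation) for one cross-validation round."""
    train = [i for i, f in enumerate(fold_of) if f != fold]
    val = [i for i, f in enumerate(fold_of) if f == fold]
    return train, val
```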

2.5. Trial Design

This study was designed as a prospective, randomized controlled trial consisting of three phases: the training phase, the practicing phase, and the testing phase. The entire study protocol was approved by the institutional review board of Zhongshan Hospital (B2021-805R) and registered at the Chinese Clinical Trial Registry (ChiCTR2200062730). All authors had access to the study data and reviewed and approved the final manuscript.

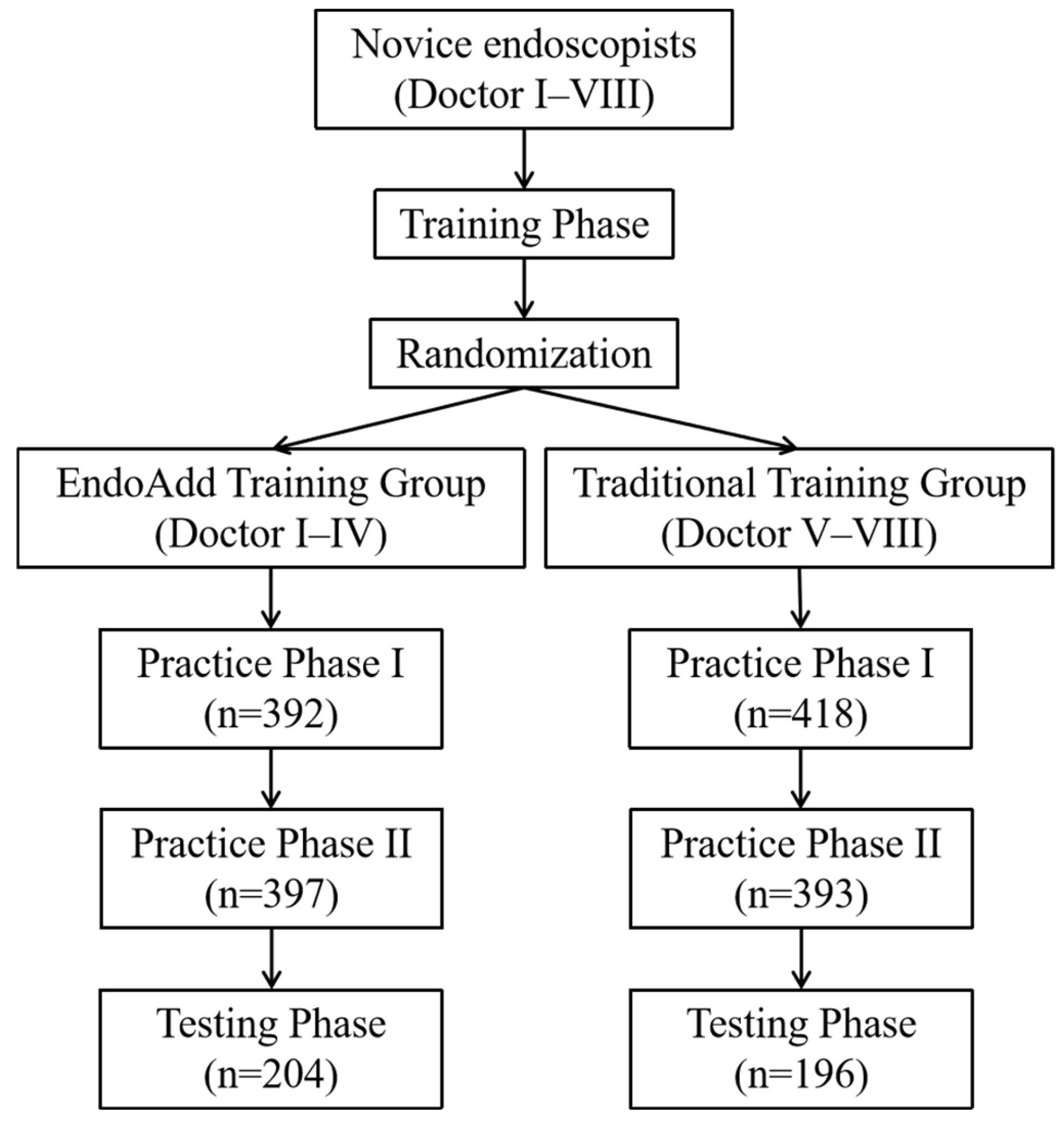

Eight novice trainees without prior EGD operational experience were recruited for this research between 8 August 2022 and 31 January 2023. The flow chart of the study is depicted in Figure 2. All endoscopic images used for model development and clinical procedures were obtained using the Olympus GIF-H290 HD endoscope system (Olympus Medical Systems, Tokyo, Japan) under standard white-light imaging mode.

2.6. Training Phase

Before performing EGD procedures, the eight novice trainees completed a comprehensive training course covering the indications and contraindications for EGD, the EGD procedure, operating demonstrations, an introduction to common endoscopic instruments, and the diagnosis of upper gastrointestinal diseases. The course lasted approximately 10 h, with five experienced endoscopists and one nurse as instructors. Subsequently, the trainees were allowed to observe EGD procedures in the examination room and to perform five EGDs with the assistance of senior endoscopists. Before concluding the training phase, the trainees were required to take an exam consisting of twenty multiple-choice and five short-answer questions assessing their knowledge of basic EGD concepts, anatomical structures, and common lesions.

2.7. Randomization and Blinding Procedures for the Practicing and Testing Phases

Before the practicing and testing phases began, each of the eight novice trainees drew an envelope containing a group assignment and was thereby randomly assigned to either the EndoAdd group or the control group, with four trainees in each group.

During outpatient visits to schedule EGD appointments, patients were interviewed by a research assistant, who explained the aims of this study and collected demographic and medical information using a data collection sheet. Written informed consent was obtained from all patients. Eligible participants were randomized into the EndoAdd group and the control group in a 1:1 ratio through block randomization with stratification by center. The random allocation table was generated using SAS 9.4 software, and allocation was concealed using opaque envelopes. Patients remained blinded to their group assignment.
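Stratified permuted-block randomization can be sketched as below. The block size of 4, the seed, and the center names are assumptions for illustration; the source reports only 1:1 block randomization stratified by center, generated in SAS 9.4. Each block contains equal numbers of both arms in random order, so allocation stays balanced within every center after each completed block.

```python
import random
from typing import Dict, List

ARMS = ("EndoAdd", "Control")


def block_randomization(
    n_per_stratum: Dict[str, int],
    block_size: int = 4,   # assumed; not reported in the source
    seed: int = 2022,      # assumed; fixes the list for reproducibility
) -> Dict[str, List[str]]:
    """Generate a 1:1 allocation list for each stratum (center) using permuted blocks."""
    if block_size % len(ARMS):
        raise ValueError("block size must be a multiple of the number of arms")
    rng = random.Random(seed)
    schedule: Dict[str, List[str]] = {}
    for stratum, n in n_per_stratum.items():
        allocations: List[str] = []
        while len(allocations) < n:
            block = list(ARMS) * (block_size // len(ARMS))
            rng.shuffle(block)            # random order within each block
            allocations.extend(block)
        schedule[stratum] = allocations[:n]  # truncate the final block if needed
    return schedule
```

Truncating the final block can leave a small imbalance within a stratum; the resulting sequence is what would then be placed in numbered opaque envelopes.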

To minimize performance and assessment bias, senior trainers who supervised the EGD procedures were not informed of the group assignments (EndoAdd or control) of the novice trainees during the testing phase. During this phase, the EndoAdd system did not display real-time feedback, ensuring that both groups underwent procedures under identical visual and procedural conditions. Furthermore, all procedural recordings were anonymized and coded before being reviewed by independent assessors who were blinded to group allocation. During the practicing phases, while it was necessary for trainers to be aware of the intervention due to the visible presence of the EndoAdd system in the AI group, direct trainer input was restricted by protocol. Trainers were instructed not to intervene or provide feedback during the examination unless patient safety was at risk. Their role was limited to observation and emergency intervention, thereby reducing the risk of introducing bias based on group knowledge.

2.8. Patient Recruitment for the Practicing and Testing Phases

A cohort of outpatients aged 18 to 75 years who were scheduled to undergo routine diagnostic EGD was enrolled in this study. Participants were required to provide informed consent voluntarily. To maintain the study’s internal validity, the following exclusion criteria were applied: (1) history of prior surgical interventions related to esophageal, gastric, duodenal, small intestinal, or colorectal cancer; (2) gastroparesis or gastric outlet obstruction; (3) severe chronic renal failure, defined as a creatinine clearance below 30 mL/min; (4) severe congestive heart failure (New York Heart Association class III or IV); (5) ongoing pregnancy or breastfeeding; (6) toxic colitis or megacolon; (7) poorly controlled hypertension, indicated by systolic blood pressure exceeding 180 mm Hg and/or diastolic blood pressure exceeding 100 mm Hg; (8) moderate or substantial active gastrointestinal bleeding, quantified as greater than 100 mL per day; and (9) major psychiatric illness.

2.9. Interventions of the Practicing and Testing Phase

Patients were randomly assigned to either the control group or the EndoAdd group, and all EGD examinations took place between 8:30 and 11:30 or between 13:30 and 16:30. All EGD procedures in this study were performed under deep sedation with intravenous propofol, administered by anesthesiologists according to institutional protocols, to ensure consistent baseline conditions across all participants. In addition, scopolamine butylbromide was routinely administered before the examination unless contraindicated. Examinations were supervised by senior trainers, each with experience of more than 5000 EGD procedures.

During the practicing phase, trainees in the EndoAdd group performed examinations with the assistance of the EndoAdd system. Real-time blind spots were displayed on the screen, and trainees could refer to this information during the EGD procedure. If any lesions were detected, trainees were allowed to perform biopsies before concluding the procedure. Once trainees confirmed completion of the EGD, the screen displayed their performance, including remaining blind spots, the procedure route, and the photodocumentation recorded during the EGD. Senior trainers were not permitted to provide additional information.

During the practicing phase, patients in the control group were examined by novice trainees using a routine inspection process under the guidance of senior endoscopists. No additional information was shown on the screen. If any lesions were detected, trainees were allowed to perform biopsies before concluding the procedure. Trainers could provide instructions on EGD procedures.

During the testing phase, trainees in both groups performed examinations under the supervision of senior endoscopists, without the assistance of the EndoAdd system.

The time allotted for the EGD by novice trainees was limited to 10 min, excluding biopsy time, to ensure patient well-being. Senior trainers had the authority to stop the EGD procedure if they anticipated adverse events. All patients underwent a repeat EGD performed by a senior trainer to prevent missed diagnoses.

2.10. Outcome

The primary outcome of the study was the number of blind spots in the control and EndoAdd groups. The secondary outcomes included: (1) the blind spot rate, calculated as (number of unobserved sites per patient / 26) × 100%; (2) inspection time; (3) lesion detection rate; and (4) completeness of photodocumentation produced by endoscopists.
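The per-patient blind spot count and rate defined above reduce to simple set arithmetic. A sketch, assuming each patient's examination is recorded as a hypothetical set of observed site indices 0 to 25; the helper names are illustrative, and group summaries use mean ± SD as in the statistical analysis.

```python
from statistics import mean, stdev
from typing import Iterable, List, Set, Tuple

TOTAL_SITES = 26


def blind_spots(observed_sites: Set[int]) -> int:
    """Number of the 26 sites (indexed 0..25) that were never observed."""
    return TOTAL_SITES - len(set(observed_sites) & set(range(TOTAL_SITES)))


def blind_spot_rate(observed_sites: Set[int]) -> float:
    """Blind spot rate in percent: (unobserved sites / 26) x 100%."""
    return blind_spots(observed_sites) / TOTAL_SITES * 100.0


def group_summary(patients: Iterable[Set[int]]) -> Tuple[float, float]:
    """Mean and sample SD of blind spot counts across a group of patients."""
    counts: List[int] = [blind_spots(p) for p in patients]
    return mean(counts), stdev(counts)
```

For example, a patient in whom 20 of the 26 sites were observed has 6 blind spots, a blind spot rate of 6/26 × 100% ≈ 23.1%.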

2.11. Statistical Analysis and Sample Size Calculation

The number of blind spots observed among trainees at our endoscopic centers was approximately 5.8. We hypothesized that the EndoAdd system would reduce the number of blind spots to 2. To detect this difference with a two-tailed significance level (α) of 0.05 and a power of 80%, the sample size for this study was calculated to be approximately 322 patients. Considering that approximately 20% of patients may cancel their EGD appointments, we estimated that a total of 400 patients would be necessary to detect a statistically significant difference in the primary outcome.
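For a two-sample comparison of means, the usual normal-approximation formula gives a per-group size of n = 2 × (z(1 − α/2) + z(1 − β))² × σ² / Δ², which is then inflated for expected attrition. The source does not report the standard deviation σ it assumed, so in this sketch `sigma` is an explicit, hypothetical input; only Δ = 5.8 − 2 = 3.8, α = 0.05, 80% power, and the 20% attrition figure come from the text.

```python
import math
from statistics import NormalDist


def per_group_n(delta: float, sigma: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided, two-sample comparison of means."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)            # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2)


def inflate_for_attrition(n_total: int, attrition: float) -> int:
    """Number to enrol so that about n_total remain after the expected attrition."""
    return math.ceil(n_total / (1 - attrition))
```

The attrition step uses only figures from the text: 322 / (1 − 0.20) = 402.5, which corresponds to the roughly 400 patients planned.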

All statistical analyses were performed using SAS software (version 9.4). To address the risk of inflated type I error due to multiple comparisons across anatomical regions and time points, we applied the Benjamini–Hochberg procedure to control the false discovery rate (FDR) for these comparisons. Adjusted p-values are reported, and a two-tailed adjusted p-value of <0.05 was considered statistically significant. Because the secondary outcomes were considered exploratory, no correction was applied for multiplicity arising from the number of outcomes.
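The Benjamini–Hochberg step-up adjustment can be illustrated with the standard library alone. This is a sketch of the procedure, not the SAS code used in the study: each sorted p-value p₍ᵢ₎ is scaled by m / i, and a running minimum taken from the largest p-value downward keeps the adjusted values monotone and capped at 1.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float]) -> List[float]:
    """Return BH-adjusted p-values in the original order.

    adjusted p_(i) = min over j >= i of m * p_(j) / j, capped at 1.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

Comparisons whose adjusted value falls below 0.05 are declared significant, which controls the expected proportion of false discoveries among them at 5%.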

Continuous variables were presented as mean ± standard deviation (SD) and compared using Student’s t-test. Categorical variables were presented as numbers (percentages) and analyzed using either the chi-square test or Fisher’s exact test. The rate and its 95% confidence interval (CI) for each group were estimated using the Clopper–Pearson method. Additionally, we calculated the rate difference between the two groups and its 95% CI using the Newcombe–Wilson method with a continuity correction. In certain subgroups, we also compared the rate of adequate bowel preparation between the study group and the control group.
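The interval methods named above can be sketched with the Python standard library. This is an illustrative re-implementation, not the SAS procedures used in the study: exact Clopper–Pearson limits are found by bisection on the binomial tail probabilities, and the rate-difference interval follows Newcombe's hybrid score method, combining the two groups' continuity-corrected Wilson intervals. Function names are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Callable, Tuple


def _binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), 0 < p < 1, with terms computed in log space."""
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log1p(-p))
        total += math.exp(log_pmf)
    return total


def _root_increasing(f: Callable[[float], float], iters: int = 100) -> float:
    """Bisection root of an increasing function f on (0, 1)."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact (Clopper–Pearson) confidence interval for a binomial proportion x/n."""
    half = alpha / 2
    # Lower limit: p where P(X >= x) = alpha/2 (this tail probability rises with p).
    lower = 0.0 if x == 0 else _root_increasing(lambda p: 1 - _binom_cdf(x - 1, n, p) - half)
    # Upper limit: p where P(X <= x) = alpha/2 (this tail probability falls with p).
    upper = 1.0 if x == n else _root_increasing(lambda p: half - _binom_cdf(x, n, p))
    return lower, upper


def wilson_cc(x: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Wilson score interval with continuity correction for x/n."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    p, q = x / n, 1 - x / n
    denom = 2 * (n + z * z)
    lower = 0.0 if x == 0 else (
        2 * n * p + z * z - 1 - z * math.sqrt(z * z - 2 - 1 / n + 4 * p * (n * q + 1))
    ) / denom
    upper = 1.0 if x == n else (
        2 * n * p + z * z + 1 + z * math.sqrt(z * z + 2 - 1 / n + 4 * p * (n * q - 1))
    ) / denom
    return max(0.0, lower), min(1.0, upper)


def newcombe_diff(x1: int, n1: int, x2: int, n2: int,
                  alpha: float = 0.05) -> Tuple[float, float]:
    """Newcombe hybrid score CI for p1 - p2 from continuity-corrected Wilson intervals."""
    p1, p2 = x1 / n1, x2 / n2
    l1, u1 = wilson_cc(x1, n1, alpha)
    l2, u2 = wilson_cc(x2, n2, alpha)
    delta = math.sqrt((p1 - l1) ** 2 + (u2 - p2) ** 2)
    epsilon = math.sqrt((u1 - p1) ** 2 + (p2 - l2) ** 2)
    return p1 - p2 - delta, p1 - p2 + epsilon
```

For illustrative counts of 56/70 versus 48/80 (a difference of 0.20), `newcombe_diff` gives approximately (0.043, 0.342), and `clopper_pearson(5, 10)` gives approximately (0.187, 0.813).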

3. Results

3.1. Performance of the EndoAdd System on Image Classification

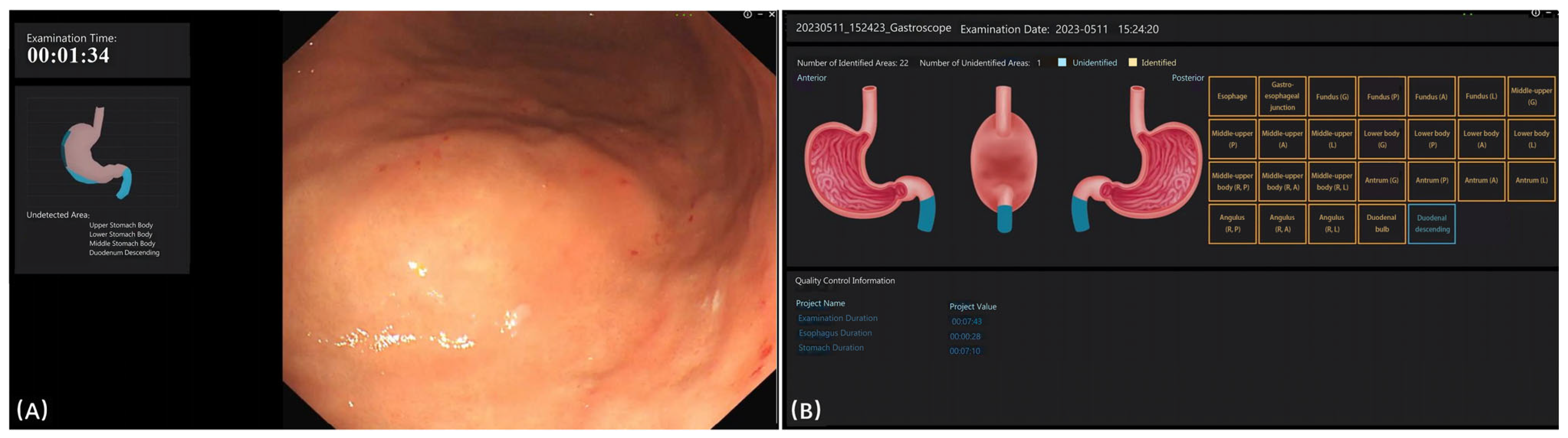

Figure 3 displays the interface of the EndoAdd system during and after examination. During the examination, blind spots were presented on the left side of the screen (Figure 3A); after the examination, any missed anatomical sites were displayed on the screen (Figure 3B). The EndoAdd system demonstrated robust performance in EGD image classification: after approximately 300 training epochs, the model achieved an overall accuracy of 98.0% and a mean area under the curve (AUC) of 0.984 on the test set. Detailed performance metrics for each anatomical category are available in Supplementary Table S1.

3.2. Trainee Characteristics and Knowledge Assessment

After completing the training phase, the eight trainees took an exam consisting of twenty multiple-choice questions and five short-answer questions designed to assess their knowledge of basic concepts, anatomical structures, and common lesions related to EGD. The two groups of trainees demonstrated comparable understanding of the material covered during the training phase (25.5 ± 2.38 vs. 26 ± 2.16, p = 0.31; Supplementary Tables S2 and S3). However, this exam primarily assessed theoretical knowledge, and its results may not directly correlate with practical performance.

3.3. Post-Training Outcomes

Baseline information of patients is shown in Table 1. The comparison of pre- and post-training outcomes for the eight endoscopists (Supplementary Tables S2 and S3) revealed a statistically significant decrease in average examination time for most endoscopists in both the EndoAdd and traditional training groups (Doctors I, II, III, IV, VII, and VIII; p < 0.01). Additionally, a statistically significant reduction in blind spots was observed across all endoscopists (p < 0.01), and most endoscopists also demonstrated improvements in the completeness of photodocumentation (p < 0.01).

The EndoAdd group exhibited significant reductions in omission rates across various anatomical areas, including the Middle-upper, Middle-upper body, and Angulus, as well as selected areas of the Antrum and Fundus. These significant improvements ranged from 4 to 10 per physician, totaling 28. In contrast, the traditional training group showed fewer significant improvements, ranging from 3 to 7 per physician and totaling 19, which were primarily concentrated in the Middle-upper and Middle-upper body areas.

3.4. Analysis of the Practicing Phases and Testing Phase

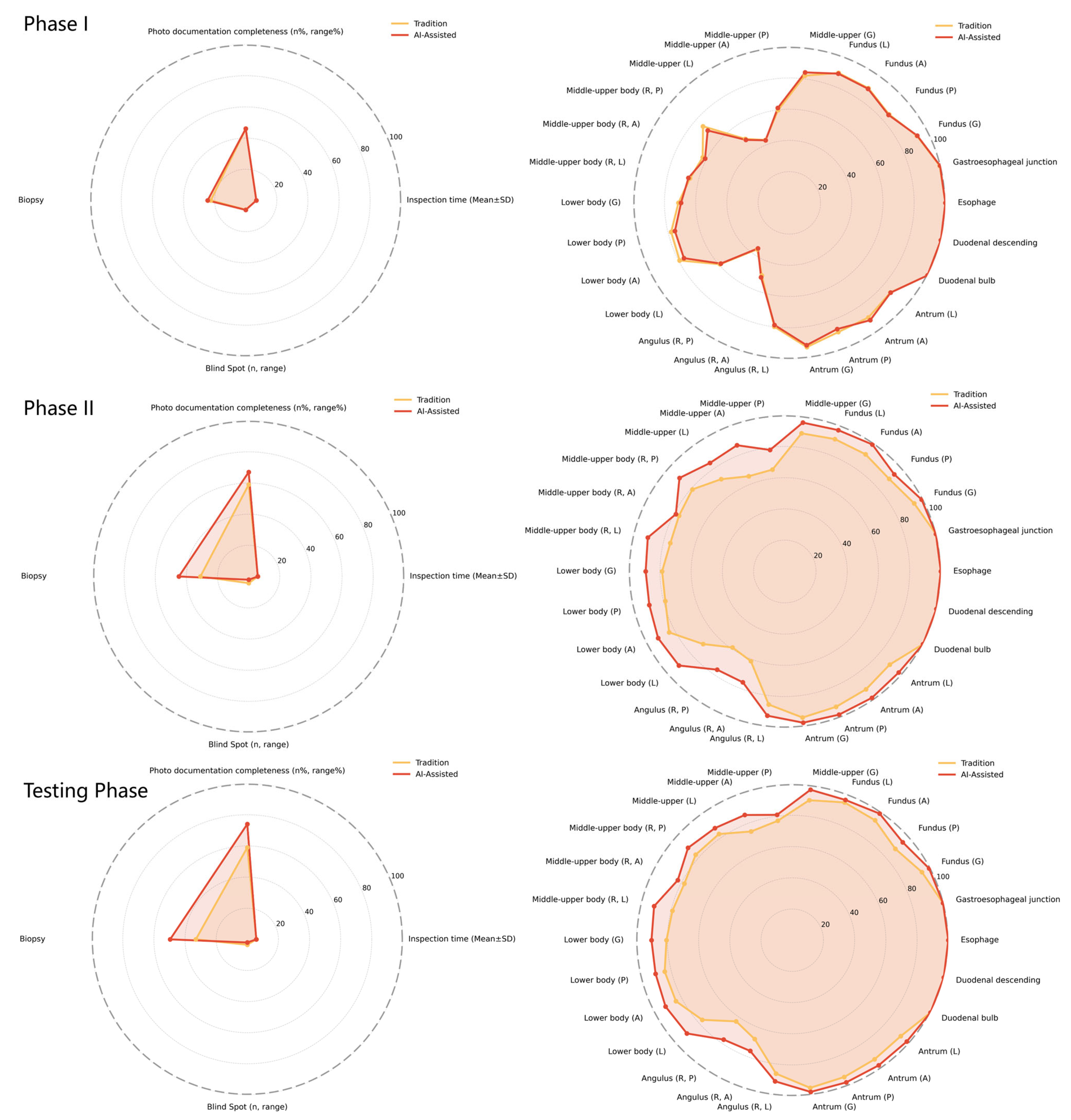

Further analysis was conducted across three distinct phases: practicing phase I, practicing phase II, and the testing phase.

Table 2 and Figure 4 present a comprehensive comparison of outcomes for both the EndoAdd and traditional training groups. Prior to training, no statistically significant differences were observed between the EndoAdd group and the traditional training group regarding examination time, missed diagnosis rates in various anatomical areas, or biopsy rates.

During practicing phase II, the EndoAdd group demonstrated superior performance across multiple indicators compared to the traditional training group. Notable improvements included enhanced completeness of photodocumentation (67 (45, 88) vs. 59 (30, 79), p < 0.01), higher biopsy rates (44.84% vs. 31.04%, p < 0.01), and reduced omission rates in specific regions such as the Fundus, Middle-upper, Middle-upper body, Lower body, Angulus, and selected areas of the Antrum (p < 0.01). Similar trends persisted during the testing phase.

Notably, during practicing phase II, the EndoAdd group achieved a level of examination proficiency comparable to that of experienced endoscopists. In the subsequent testing phase, the EndoAdd group demonstrated significant advantages, including improved photodocumentation completeness (74 (18, 92) vs. 59 (28, 82), p < 0.01).

3.5. Diagnostic Rates and Lesion Detection

To evaluate diagnostic rates and lesion detection, we compared the performance of the EndoAdd group and the traditional training group in diagnosing benign and malignant lesions, using the findings of the experienced endoscopists as the gold standard. Both groups achieved diagnostic rates comparable to those of the experienced endoscopists in identifying conditions such as H. pylori infection, ulcers, polyps, submucosal elevations, and early-stage gastrointestinal cancers. There were no significant differences in lesion detection rates between the two groups (Table 3).

4. Discussion

The growing demand for EGD services amid a shortage of skilled endoscopists underscores the limitations of traditional training methods, which are often inefficient, variably safe, and dependent on individual trainers’ expertise [20,21,22]. Emerging evidence demonstrates that AI-assisted training can achieve diagnostic accuracy comparable to that of experienced endoscopists [20,21], suggesting its potential to address these challenges. This study systematically evaluated the efficacy of EndoAdd, an AI-assisted training system, compared with conventional methods in novice endoscopist education. Both training approaches improved endoscopist performance across multiple metrics, but EndoAdd demonstrated distinct advantages. Notably, AI guidance reduced blind spots more effectively than traditional training (p < 0.05). The real-time feedback mechanism likely improved anatomical awareness and procedural consistency, particularly in complex regions such as the Angulus, parts of the Antrum, and the Fundus [23]. These findings align with prior reports on AI’s capacity to standardize gastrointestinal endoscopy practices [22]. By bridging the gap between algorithmic development and practical, standardized deployment of AI in endoscopy education, our study contributes new evidence and a practical framework for future research and clinical adoption of AI-guided training.

Photodocumentation completeness, a critical factor for accurate diagnosis and interdisciplinary communication, improved significantly in both groups, with EndoAdd showing superior enhancement (p < 0.01). The system’s automated imaging guidance ensured comprehensive visualization of the upper gastrointestinal tract, facilitating precise documentation without compromising procedural efficiency. Both groups achieved comparable reductions in examination time, indicating that AI integration does not impede workflow. During practicing phase II, EndoAdd trainees attained proficiency levels equivalent to those of experienced endoscopists in both blind spot reduction and photodocumentation (p < 0.05), whereas traditional trainees progressed more slowly. This accelerated skill acquisition translated into fewer required training cases for AI-assisted learners (median 28 vs. 41 cases; p = 0.003). Importantly, neither method compromised diagnostic accuracy for common lesions (p > 0.05), suggesting that AI-assisted training does not detract from the development of core competencies. Informal qualitative feedback was consistent with these quantitative findings: EndoAdd users reported heightened confidence in independent procedure execution due to real-time blind spot visualization, while traditional trainees emphasized the value of mentorship despite challenges in self-directed error correction [21]. These observations suggest that AI systems may function best as adjuncts to human supervision, providing standardized, objective feedback to complement experiential learning.

While a formal cost-effectiveness analysis was beyond the scope of this study, implementing the EndoAdd system would involve costs related to hardware, software licensing, and user training. These initial infrastructure investments may be offset by increased training efficiency, reduced demand for direct expert supervision, shorter learning curves, and increased procedural throughput, which could translate into long-term cost savings for training programs. However, successful clinical integration requires addressing technical compatibility with existing endoscopic platforms, workflow optimization, and regulatory compliance, including GDPR-compliant data handling protocols. Prospective validation under formal regulatory frameworks remains essential to ensure safety and accountability in AI-driven medical education.

The integration of artificial intelligence (AI) into medical training also introduces several important ethical considerations that must be thoughtfully addressed to ensure safe, effective, and equitable practice. While AI systems offer valuable real-time feedback and standardized guidance, there is a risk that over-reliance may undermine the development of independent decision-making and critical thinking in trainees, making it essential for training programs to foster core competencies and professional judgment with AI serving as a supportive tool rather than a replacement for human expertise. Additionally, the use of AI-assisted systems raises important questions regarding patient consent, as patients should be fully informed when AI is involved in their care, including the potential benefits, limitations, and data privacy considerations, to maintain transparency and trust. The introduction of AI may also affect the doctor-patient relationship, as technology can enhance procedural quality and patient safety, but may also lead to concerns about depersonalization if not carefully managed. Therefore, open communication and a commitment to maintaining empathy and interpersonal connection are critical to ensuring that AI enhances rather than diminishes the human aspect of care. Overall, the responsible adoption of AI in endoscopy training depends on proactive attention to these ethical issues, with ongoing dialogue among educators, clinicians, patients, and ethicists guiding the integration of technology into medical education.

This study demonstrates that AI-assisted training effectively addresses key limitations of traditional endoscopy education while maintaining diagnostic rigor. Future research should investigate longitudinal skill retention and broader implementation strategies across diverse healthcare settings.

5. Limitations

The EndoAdd system demonstrates notable advantages in minimizing blind spots and improving photodocumentation quality; however, several critical limitations require consideration.

Firstly, the training dataset’s single-center origin and East Asian predominance may compromise external validity across ethnically diverse populations and clinical settings with differing disease prevalence patterns. Moreover, because the study mainly encompassed common pathologies, diagnostic performance approached ceiling levels in both the intervention and control groups for many lesion types. This ceiling effect likely attenuated our ability to detect statistically meaningful differences attributable to AI assistance, potentially underestimating the intervention’s incremental value. These findings underscore the necessity for human oversight in clinical deployment, particularly for rare or complex lesions.

Secondly, the limited sample size of this pilot study constrains generalizability. The small cohort fails to capture inter-individual variability in baseline competencies and learning trajectories, and the visible presence of the EndoAdd system made complete blinding of trainers during the practicing phases unfeasible. This potential source of performance bias may have influenced trainee behavior or supervision intensity despite protocol restrictions on direct intervention. Additionally, the absence of longitudinal follow-up precludes assessment of skill retention and autonomous application after AI-assisted training.

Thirdly, no formal cost-benefit analysis was performed. Although potential long-term gains in procedural quality exist, substantial upfront implementation costs may hinder adoption in resource-constrained settings.

Fourthly, qualitative feedback from trainees was collected only informally after the intervention. Key themes included increased confidence in anatomical recognition, perceived usefulness of real-time guidance (AI group), and suggestions for more interactive features. However, this feedback was anecdotal and not obtained through a structured questionnaire. Future studies should incorporate standardized, quantitative measures to more rigorously capture trainee perspectives.

Fifthly, AI integration in early training raises concerns regarding overdependence. Prolonged reliance on automated guidance might attenuate the development of critical competencies, including independent clinical judgment, adaptive decision-making, and pattern recognition skills.

Future technical refinements should prioritize enhanced lesion characterization algorithms, real-time procedural guidance, and optimized user interface design. Addressing real-world implementation challenges requires standardized interoperability protocols, reduced computational latency under clinical workload pressures, and seamless integration with heterogeneous hospital IT infrastructures. Particular attention must be given to mitigating cognitive burden on endoscopists through streamlined workflow integration. To address the ceiling effect, future studies should incorporate more challenging or subtle lesion cases (e.g., flat neoplasms, early cancers) and employ granular assessment methodologies such as confidence scoring, lesion conspicuity metrics, or eye-tracking analysis to better quantify diagnostic improvements. Multicenter trials with extended follow-up periods are essential to validate the system’s scalability, cost-effectiveness, and educational sustainability. Complementary developments should focus on multimodal data fusion strategies, algorithmic improvements in detection specificity, and bidirectional human-AI interaction frameworks to enhance training efficacy.