Abstract

Obstructive sleep apnea (OSA) is characterized by frequent episodes of sleep-disordered breathing (SDB), which are often accompanied by leg movement (LM) events, especially periodic limb movements during sleep (PLMS). Traditional single-event detection methods often overlook the dynamic interactions between SDB and LM, failing to capture their temporal overlap and differences in duration. To address this, we propose Attention-enhanced CRF with U-Net (AttenCRF-U), a novel joint detection framework that integrates multi-head self-attention (MHSA) within an encoder–decoder architecture to model long-range dependencies between overlapping events and employs multi-scale convolutional encoding to extract discriminative features across different temporal scales. The model further incorporates a conditional random field (CRF) to refine event boundaries and enhance temporal continuity. Evaluated on clinical PSG recordings from 125 OSA patients, the model with CRF improved the average F1 score from 0.782 to 0.788 and reduced temporal alignment errors compared with CRF-free baselines. The joint detection strategy distinguished respiratory-related leg movements (RRLMs) from PLMS, boosting the PLMS detection F1 score from 0.756 to 0.778 and the SDB detection F1 score from 0.709 to 0.728. By integrating MHSA into a CRF-augmented U-Net framework and enabling joint detection of multiple event types, this study presents a novel approach to modeling temporal dependencies and event co-occurrence patterns in sleep disorder diagnosis.

1. Introduction

Obstructive sleep apnea (OSA) is a common sleep disorder primarily characterized by snoring, sleep fragmentation, and frequent nocturnal awakenings. OSA impairs sleep quality and is closely associated with a range of serious health conditions, including hypertension, coronary artery disease, pulmonary heart disease, and stroke [1]. The core feature of OSA is the frequent occurrence of sleep-disordered breathing (SDB) events, including apnea and hypopnea, which are characterized by intermittent complete or partial airway collapse [2]. A key metric used to assess the severity of OSA is the apnea–hypopnea index (AHI), defined as the number of SDB events per hour of sleep. According to the AHI classification criteria, OSA can be classified as mild, moderate, or severe [3]. Accurate detection of SDB events is essential for the clinical assessment of OSA, as AHI directly influences decisions regarding treatment interventions, such as continuous positive airway pressure (CPAP) [3].

In OSA patients, SDB events often co-occur with leg movements (LM). LM typically manifests as involuntary muscle contractions, commonly observed in the toes, ankles, knees, and hips, primarily involving contractions of the tibialis anterior muscle [4]. When LM occurs in a periodic manner, it is referred to as periodic limb movements during sleep (PLMS), which may disrupt sleep architecture, leading to sleep fragmentation and daytime functional impairments [5]. Although patients are often unaware of the presence of PLMS, it can lead to difficulties falling asleep, excessive daytime sleepiness, reduced attention, and decreased work efficiency [6]. A key parameter for evaluating PLMS is the PLMS index (PLMI), which quantifies the number of PLMS events per hour. OSA patients with comorbid PLMS exhibit worse disease outcomes and daytime symptoms, including a higher risk of adverse cardiovascular events [7]. Epidemiological data show that 14.8% of OSA patients have a PLMI exceeding 15/h, underscoring the clinical importance of PLMS in OSA progression [8].

In current clinical practice, the annotation of sleep events is primarily based on whole-night polysomnography (PSG), with manual scoring performed by specialists according to the American Academy of Sleep Medicine (AASM) guidelines. PSG includes multiple physiological signals such as electroencephalogram (EEG), electrooculogram (EOG), electromyogram (EMG), and electrocardiogram (ECG) [9].

SDB events include apnea, defined as a ≥90% airflow reduction lasting ≥10 s during sleep, and hypopnea, defined as a ≥30% reduction for ≥10 s, accompanied by either a ≥3% oxygen desaturation or an arousal [10].

LM events are annotated using tibialis anterior EMG signals, with the onset marked by an amplitude >8 μV above the baseline and offset as a sustained drop <2 μV for ≥0.5 s, with durations ranging from 0.5 to 10 s. A PLMS series is identified when ≥4 LMs occur with inter-movement intervals of 5–90 s [11].

SDB events can elicit respiratory-related leg movements (RRLMs), defined as LMs occurring within 0.5 s before to 0.5 s after an SDB event. RRLMs should be excluded from PLMS annotations to prevent misclassification [11].

Although manual scoring remains the clinical gold standard, it imposes a significant clinical burden, including an average of 1–3 h of labor per PSG recording for expert annotation and inter-rater variability rates of 15–20% in event classification [9]. Extensive research has been conducted on single-event automatic detection, including studies targeting LM [12,13] and SDB [14,15,16].

To meet the growing clinical need for comprehensive evaluation, the joint detection of multiple sleep events has become a key research direction, offering richer diagnostic insights and supporting personalized interventions. Furthermore, LM and SDB events frequently coexist in the same patient, especially among those with OSA, where LM incidence is significantly higher and closely related to sleep quality deterioration caused by OSA [17].

Therefore, previous works have leveraged multi-modal physiological signals and various modeling strategies for joint LM and SDB detection. For instance, Waltisberg et al. [18] combined minimum redundancy maximum relevance (mRMR) for feature selection with a Bayesian classifier to classify 60-s segments into normal, LM, and SDB events. Biswal et al. [19] employed a recursive convolutional neural network (CNN) for sleep staging and LM/SDB recognition. They divided events into 1 s segments for classification across large-scale PSG datasets. Zahid et al. [20] proposed a Multi-Modal Sleep Event Detection (MSED) model that combines a CNN, bidirectional gated recurrent unit (biGRU), and attention mechanism to extract event features through multiple processing streams. Their model outperformed single-event detection models. The DeepSDBPLM model designed by Almutairi et al. [21] integrates empirical mode decomposition with attention for the joint classification of SDB and PLM events within 30 s signal segments. Despite these advances, most existing methods still rely on fixed-time windows, limiting temporal resolution and clinical applicability. To overcome this, recent studies have shifted towards event-based modeling. Seeuws et al. [22] proposed learning directly from event annotations without post-processing, and later introduced a human-in-the-loop annotation framework [23] to improve adaptability with minimal manual effort.

The key challenges of joint detection lie in the temporal overlap between LM and SDB events, as well as their differing durations, both of which require models capable of capturing fine-grained and long-range temporal dependencies. Moreover, engineering constraints such as multi-channel signal synchronization and limitations of conventional acquisition systems pose barriers to scalable deployment. To address these issues, this study proposes an automatic joint detection framework for LM and SDB events in OSA patients, aiming to enhance diagnostic reliability, reduce clinical burden, and enable future integration into wearable or bedside monitoring systems.

This study makes three key contributions. First, a joint detection strategy is proposed to reduce isolated event errors by reinforcing the associations between LM and SDB events, enabling accurate recognition of RRLM and PLMS series. Second, temporal dependency is enhanced through multi-head self-attention (MHSA) to capture long-range temporal relationships, while a conditional random field (CRF) refines prediction continuity to reduce fragmentation in event sequences. Third, an encoder–decoder structure with multi-scale convolutional operations is designed to extract discriminative patterns across different temporal scales, improving detection granularity and adapting to heterogeneous event durations.

2. Materials and Methods

2.1. Datasets

Data collection was conducted at Huashan Hospital from August 2021 to April 2024 with ethical approval (Approval No. 2021-811). All 125 adult participants provided written informed consent and were diagnosed with OSA (). The cohort spanned mild to severe cases and included common comorbidities such as hypertension, obesity, and insomnia (Table 1).

Table 1.

Demographics and PSG parameters of the Huashan dataset (Mean ± SD, [Min, Max]).

Whole-night PSG recordings included six EEG channels (F3/M2, F4/M1, C3/M2, C4/M1, O1/M2, O2/M1), three chin EMG channels (Chin1, Chin2, Chin3), one ECG channel (ECG), two EOG channels (E1, E2), left and right tibialis anterior EMG channels (Leg/L and Leg/R), and multiple respiratory signals, including nasal airflow pressure (Nasal), oxygen saturation, thoracic effort (Thor), and abdominal effort (Abdo). All signals were sampled at 1024 Hz. Patients refrained from taking LM-affecting medications for at least 2 weeks prior to the recording. Events were re-annotated by trained technicians following AASM guidelines, covering LM, PLMS, apnea, and hypopnea.

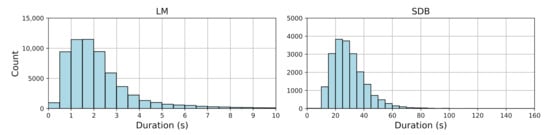

Only sleep epochs were included. Figure 1 shows the duration distribution of LM and SDB events. Table 2 provides descriptive statistics, including event counts, median durations with interquartile ranges (IQRs), and mean durations with standard deviations and ranges for both LM and SDB events. LM events mostly ranged from 0.5 to 2.5 s, indicating a clear clustering pattern, while SDB events exhibited greater variability (10–151.52 s), reflecting their complexity.

Figure 1.

Histogram of LM and SDB event durations.

Table 2.

Descriptive statistics of LM and SDB events (duration in seconds).

2.2. Overview of AttenCRF-U (Attention-Enhanced CRF with U-Net)

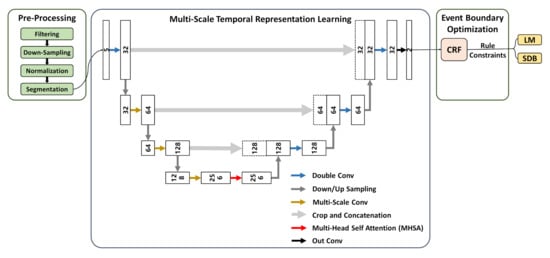

The framework consists of three major components, as illustrated in the following Figure 2:

Figure 2.

Overview of AttenCRF-U for detecting LM and SDB.

Data pre-processing. Raw signals are filtered, down-sampled, normalized, and segmented to improve signal quality and remove noise, resulting in reliable input.

Multi-scale temporal representation learning. A multi-scale deep learning architecture is employed to enhance the representation of event features across varying temporal scales. Specifically, the U-Net backbone improves temporal resolution through an encoder–decoder structure. MHSA captures long-range dependencies and strengthens global semantic perception. Multi-scale convolution adapts to both short- and long-duration events using receptive fields of different sizes, which improves the recognition of diverse event types.

Event boundary optimization. CRF is introduced to refine event boundaries, enhancing continuity and consistency in event sequences. Physiology-based rule constraints are incorporated to filter out implausible predictions, thereby enhancing the medical interpretability of detection results.

2.3. Data Pre-Processing

Five PSG signal channels were selected as input. The Leg/L and Leg/R channels are used to detect LM events, while the Abdo, Thor, and Nasal channels are used as the reference channels for SDB events. The pre-processing pipeline consists of filtering, down-sampling, normalization, and segmentation. The filtering process includes the use of a fourth-order Butterworth band-pass filter with cutoff frequencies of 10 Hz and 50 Hz for the EMG signals [10]. For the thoracic effort and abdominal effort signals, second-order Butterworth band-pass filters with cutoff frequencies of 0.1 Hz and 15 Hz are applied to emphasize respiratory frequency characteristics. The nasal airflow pressure signal is filtered within the range of 0.03 Hz to 50 Hz to eliminate low-frequency drift and high-frequency noise [10]. All channels are down-sampled to 100 Hz to ensure consistency and comparability across signals. Z-score normalization is applied individually to each channel for each patient. The normalization is performed on the time sequence , and is defined as

The signal is segmented into 60 s epochs, offering a trade-off between temporal granularity and global contextual awareness. To ensure model relevance, the framework is trained only on segments containing at least one event. To enable precise point-wise detection and account for potential temporal overlaps between LM and SDB events, event labels are generated by aligning manually annotated event intervals with the sampling resolution of the signals to produce point-wise label sequences. No event prioritization is applied during this process, and all annotated events are retained. Each time point is assigned a two-dimensional label vector [LM_label, SDB_label], where each element is either 1 (presence) or 0 (absence).

2.4. Multi-Scale Temporal Representation Learning

This module adopts an encoder–decoder structure that strengthens temporal representation through a hierarchical structure: the encoder compresses input to extract high-level features, while the decoder restores fine-grained details to ensure accurate boundary reconstruction. Skip connections are used to fuse shallow local features with deep global semantics, effectively reducing information loss [24].

- (1)

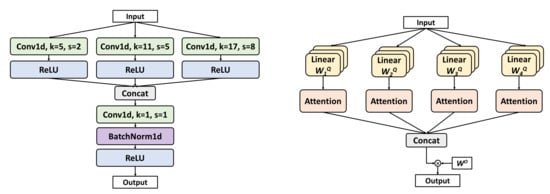

- Multi-Scale Conv

Each encoder layer employs parallel multi-scale convolution, as shown in Figure 3 (left). The kernel sizes (5, 11, 17) are selected to capture short, medium, and long temporal patterns to match the durations of LM (0.5–10 s) and SDB (>10 s) events. Smaller kernels are better at detecting transient EMG bursts (LM), while larger kernels capture sustained respiratory patterns (SDB).

Figure 3.

Implementation details of Multi-Scale Conv and MHSA.

- (2)

- MHSA

The bottleneck layer incorporates MHSA, a mechanism originally proposed by Vaswani et al. [25], as shown in Figure 3 (right). Four self-attention heads are employed. The computation of MHSA proceeds as follows:

The input is linearly projected into query (Q), key (K), and value (V) vectors using head-specific weights , , and for each attention head, as follows:

where , and h is the number of heads. Each head computes its respective attention weights according to the following formula:

where is the dimensionality of the K vectors, and serves as a scaling factor. Finally, the outputs from all attention heads are concatenated and passed through a linear transformation using a weight matrix to produce the final output, as follows:

MHSA offers two key benefits: it captures multi-scale temporal dependencies, facilitating the extraction of features from LM and SDB events of varying durations; and it enables efficient parallel processing of long sequences (e.g., 60 s), outperforming traditional methods such as long short-term memory (LSTM) in both accuracy and computational speed.

2.5. Event Boundary Optimization

Point-wise predictions from temporal representation learning may result in short, isolated events or imprecise boundaries, compromising detection consistency. To address this issue, an optimization module that combines CRF [26] with rule-based constraints is introduced. The CRF captures temporal dependencies to globally refine event boundaries, reducing fragmentation and boundary shifts. It smooths predictions, enforces continuity at event onsets and offsets, and eliminates implausible patterns (e.g., rapid LM fluctuations), thus ensuring physiological plausibility [27,28]. The CRF workflow is presented in Algorithm 1.

| Algorithm 1: CRF-Based Temporal Smoothing for LM and SDB Detection |

|

Rule-based constraints, guided by AASM guidelines, further refine predictions. Adjacent LM events less than 0.5 s apart are merged, and thresholding removes events that are too short or excessively long, reducing false detections due to noise or abnormal patterns. Together, CRF and rule-based refinement yield temporally coherent outputs that better align with true event boundaries, enhancing both prediction consistency and clinical interpretability.

2.6. Experimental Settings and Evaluation Metrics

Patients were randomly split in a 9:1 ratio, and only segments with stable signal quality and complete annotations were used. Experiments were conducted on a Linux system equipped with an Intel® Xeon® Gold 5218 CPU (Intel Corporation, Santa Clara, CA, USA) (2.30 GHz) and two NVIDIA GeForce RTX 3090 GPUs (NVIDIA Corporation, Santa Clara, CA, USA). The models were implemented in Python 3.8.12 with PyTorch 1.10.1. The framework was trained using the Adam optimizer [29] with a learning rate of 0.001 and a weight decay of 0.0001, over 50 epochs with a batch size of 128. The loss function was the average Dice loss across all classes, as defined in Equation (5), which is suitable for multi-class outputs. Compared with cross-entropy, Dice loss more effectively addresses class imbalance, particularly when foreground events (e.g., LM and SDB) are sparse [30,31].

where and denote the predicted and ground truth labels for class i, respectively. A Laplace smoothing term of is added to prevent division by zero [31].

The detection framework is evaluated based on point-wise predictions, comparing the output with ground truth labels at each time point. The performance for LM and SDB event detection is assessed using the following metrics:

where C denotes the number of classes, and , , and represent the true positives, false positives, and false negatives for class i, respectively.

For a more comprehensive assessment, event-level evaluation is introduced, treating entire events as the evaluation unit, unlike point-wise metrics. The match between predicted and ground truth events is determined using the Intersection over Union (IoU) [32]. A predicted event is considered correctly detected if its IoU with the corresponding ground truth event exceeds a threshold of 0.3. The IoU is defined as

where X and Y are the temporal intervals of the predicted and ground truth events, respectively. In addition to standard event-level precision, recall, and F1 score, the evaluation includes temporal alignment errors to quantify accuracy in locating event boundaries. These errors consist of onset error, offset error, and duration error, defined as the difference between predicted and actual time points. A positive value indicates a delayed prediction, while a negative value indicates an early prediction.

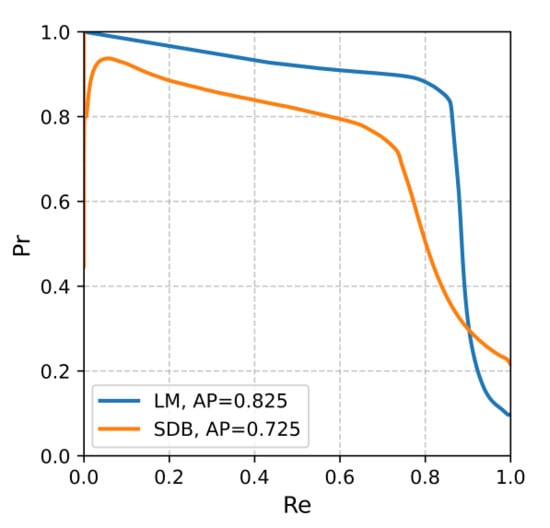

3. Results

The performance of the proposed AttenCRF-U framework was systematically evaluated on the Huashan dataset for both LM and SDB event detection (Table 3). For LM events, the F1 score was 0.848, with a precision of 0.862 and a recall of 0.834, indicating strong stability and accuracy. For SDB events, the F1 score of 0.728 was lower than that of LM, which can be attributed to two primary factors. First, SDB events are less frequent, while Dice loss assigns equal weight across classes. Moreover, SDB events often exhibit subtle and gradual onset/offset transitions, making their boundaries more difficult to localize than the abrupt EMG bursts characteristic of LM. The overall average F1 score reached 0.788, demonstrating robust and balanced detection performance across event categories. Precision–recall curves (Figure A1) show that LM and SDB events achieved average precision (AP) values of 0.825 and 0.725, respectively.

Table 3.

Performance evaluation results on the Huashan dataset.

Further event-level evaluation results are summarized in Table 4. With the IoU threshold set at 0.3, the F1 scores for LM and SDB events improved to 0.863 and 0.739, respectively, which are higher than the point-wise results, indicating more temporally coherent predictions at the event level. In terms of temporal alignment error, LM events showed a slight onset error of 0.034 s, an offset error of −0.051 s, and a duration error of −0.085 s, suggesting a conservative tendency. In contrast, SDB events exhibited more pronounced boundary errors, with an onset error of 2.264 s, an offset error of −2.202 s, and a duration error of −4.466 s. This is likely due to the longer duration and more variable patterns of SDB events. Although a lower IoU threshold increases tolerance and reduces false negatives, it can also compromise boundary precision, which affects longer events like SDB more evidently.

Table 4.

Event-level evaluation results on the Huashan dataset.

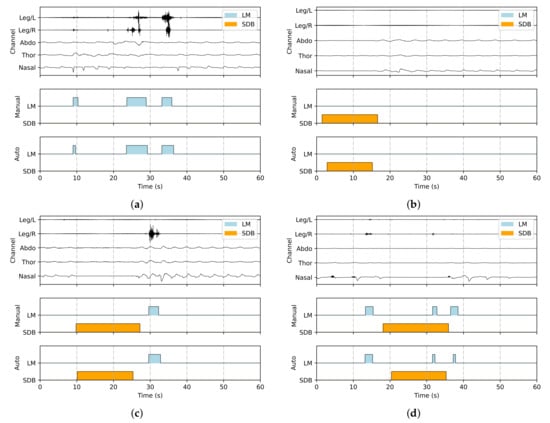

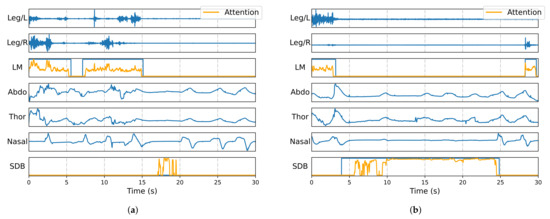

Figure 4 visualizes annotated signal segments, showing all five input channels (Leg/L, Leg/R, Abdo, Thor, and Nasal), along with manual and automatic annotations of LM and SDB events. The predictions align closely with the ground truth, especially in onset and offset timing. Furthermore, the framework effectively distinguishes overlapping events, ensuring both accurate classification and precise duration estimation.

Figure 4.

Representative visualizations of joint detection results, with LM and SDB events marked in blue and orange, respectively: (a) only LM events, (b) only SDB events, (c) co-occurrence of LM and SDB events without overlap, and (d) co-occurrence of LM and SDB events with overlap.

4. Discussion

4.1. Comparative Analysis of the Effect of Multi-Scale Temporal Representation Learning

To illustrate how the attention module attends to relevant temporal segments when identifying events, gradient-weighted class activation mapping (Grad-CAM) [33] was employed for interpretability analysis of the trained model. Grad-CAM generated attention heatmaps for the two event classes, with values exceeding 1 truncated to 1, and overlaid them onto the original signals and labels for visualization. These visualizations help interpret the features that most contribute to each class of events and reveal different attention patterns. As shown in Figure 5, the attention values of the framework increase significantly in the temporal locations corresponding to event occurrences, indicating that the model effectively focuses on the signal regions associated with the target events.

Figure 5.

Grad-CAM-based attention visualization of PSG signal model for LM and SDB event detection, where orange represents attention values, and blue represents different signals and predicted events: (a) example 1 and (b) example 2.

An ablation study (Table A1) compared convolutional kernel combinations (5, 11, 17). Single-kernel performance degraded with increasing size, as smaller kernels excelled at capturing boundary details. The triple-kernel setup achieved the best avg F1 (0.788), confirming that multi-scale fusion is critical for detecting LM/SDB patterns.

4.2. Comparative Analysis of the Effect of Event Boundary Optimization

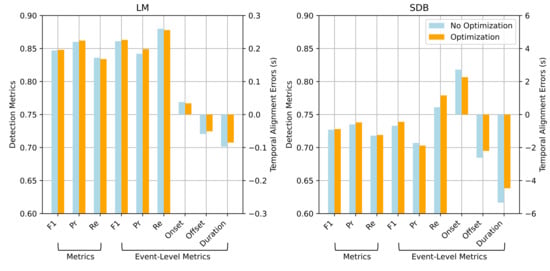

Key metrics before and after event boundary optimization were compared to assess the effect of this module. As shown in Figure 6, although the overall improvements are modest, it consistently enhances precision and temporal alignment.

Figure 6.

Comparison of detection performance and temporal alignment errors for LM and SDB events with and without event boundary optimization.

For LM events, the point-wise F1 score improved slightly from 0.847 to 0.848, with precision increasing from 0.860 to 0.862 and a minor drop in recall. At the event level, the F1 score increased from 0.861 to 0.863, precision changed from 0.842 to 0.849, and recall slightly decreased to 0.878. This suggests that event boundary optimization reduces false positives by refining boundary estimates. Moreover, the onset and offset errors decreased from 0.038 s to 0.034 s and from s to s, respectively, indicating improved temporal alignment with the ground truth. Similar trends were observed for SDB detection. Event boundary optimization improved all major metrics and notably reduced boundary errors, demonstrating its effectiveness in handling long-duration and complex events.

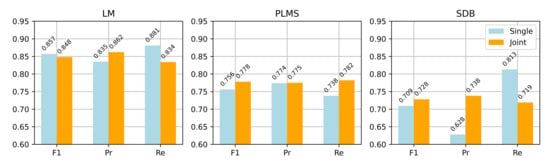

4.3. Comparative Analysis of Single and Joint Detection Strategies for Multi-Event Detection

A comparative analysis was conducted between single and joint detection strategies for LM, PLMS, and SDB events. In the single detection strategy, the network architecture remained unchanged, but input channels and loss functions were adjusted for each event type: Leg/L and Leg/R for LM, and Abdo, Thor, and Nasal for SDB. The same training and pre-processing protocols were applied to ensure fair comparison. PLMS detection in the single strategy relied on periodicity analysis, while the joint strategy excluded RRLM based on automatically detected SDB events and identified PLMS from the remaining LM events.

Figure 7 compares the two strategies across the three event types in terms of point-wise F1 score, precision, and recall. The joint detection strategy achieved better recall and stability for PLMS and SDB events, while maintaining strong LM detection performance. Specifically, the PLMS F1 score improved from 0.756 to 0.778, and SDB from 0.709 to 0.728, indicating enhanced generalization for complex event patterns. From a theoretical perspective, joint detection leverages shared features among related events, leading to better discrimination and aligning more closely with clinical needs for integrated multi-event analysis.

Figure 7.

Performance comparison between single and joint detection across LM, PLM, and SDB events (point-wise F1 score, precision, and recall).

4.4. Performance in Comparison with Other Proposed Methods

To validate the effectiveness of the proposed AttenCRF-U framework, we compared its performance against two representative baselines: MSED [20] and Event-Based Modeling [22]. Since MSED originally includes arousal events in its detection pipeline, we modified its input configuration to include only the two relevant information streams (leg and respiratory signals), while keeping the structure unchanged. Event-Based Modeling follows the design proposed by Seeuws et al. [22], which uses a U-Net backbone to predict event centers and durations. All methods were evaluated on the Huashan dataset, with the results summarized in Table 5.

Table 5.

Point-wise F1 scores of different methods on the Huashan dataset.

For LM event detection, AttenCRF-U achieved a substantially higher F1 score of 0.848, compared with 0.756 for Event-Based Modeling and 0.691 for MSED. Similarly, for SDB events, AttenCRF-U attained an F1 score of 0.728, outperforming Event-Based Modeling (0.705) and MSED (0.633). These results demonstrate that AttenCRF-U offers significant improvements in both event categories.

The superior performance of AttenCRF-U can be attributed to its integrated design, which combines multi-scale temporal feature extraction via U-Net, enhanced contextual representation through multi-head attention, and structured sequence inference with CRF. However, one limitation of the proposed method lies in its reliance on post-processing to further optimize event boundaries.

4.5. Evaluation of Detection Performance Based on Respiratory Channel Combinations

This study used three respiratory signal channels—Abdo, Thor, and Nasal—which collectively capture respiratory motion patterns and rate variability during sleep. To investigate the impact of these channels on detection performance, we designed comparative experiments using (1) single-channel input (e.g., Nasal only), (2) dual-channel combinations (e.g., Abdo + Nasal), and (3) a three-channel fusion (Abdo + Thor + Nasal). The EMG channels (Leg/L and Leg/R) were held constant across all configurations. The evaluation results are presented in Table 6, including point-wise F1 score, precision, and recall for different event types. The results indicate that the configuration of the respiratory channels significantly affects the detection of both SDB and LM events.

Table 6.

Effect of respiratory signal channels on SDB and LM event evaluation.

Among single-channel inputs, the Nasal channel achieved the highest average F1 score (0.775), with an F1 score of 0.711 for SDB events, demonstrating strong discriminative power for respiratory event detection. However, its F1 score for LM events (0.838) was not superior to other channels. In contrast, the Abdo and Thor channels yielded slightly lower average F1 scores, with SDB F1 scores of 0.631 and 0.612, respectively, but slightly better LM detection (F1 scores of 0.847 and 0.851, respectively). This may be due to subtle postural changes during LM events, which affect thoracoabdominal respiratory signals. Although these signals are not direct indicators of LM, they provide useful auxiliary cues.

In dual-channel combinations, Abdo + Thor improved the average F1 score to 0.764, with an SDB F1 score of 0.682, showing that fusing thoracoabdominal motion signals enhances respiratory anomaly detection. When Nasal was combined with either Abdo or Thor, SDB detection further improved, with F1 scores of 0.718 and 0.706, respectively, while LM detection remained stable (F1 scores of 0.839 and 0.848). This indicates a complementary effect between airflow and respiratory motion signals, enhancing the ability to identify SDB events.

The three-channel fusion configuration (Abdo + Thor + Nasal) achieved the best overall performance, with the highest average F1 score (0.788). SDB event detection reached its peak F1 score of 0.728, while LM detection remained robust (F1 score = 0.848). These results demonstrate that multi-channel integration maximizes the advantages of individual signals, improving detection accuracy and stability.

Notably, SDB detection is primarily driven by the Nasal channel. Even in the Nasal-only setting, its F1 score (0.711) closely approached the optimal performance achieved with the three-channel combination (0.728). This can be attributed to the fact that nasal airflow directly reflects respiratory flow and aligns well with manual annotations, making it more sensitive to apnea and hypopnea events than the Abdo and Thor channels. When Abdo and Thor signals are incorporated, the model benefits from additional information on chest and abdominal movements, which helps capture diverse respiratory patterns and improves robustness under signal variability or partial signal loss.

Although respiratory signals do not directly capture limb activity, LM detection is influenced by their choice. The Thor-only configuration achieved the highest F1 score (0.851) for LM detection, likely due to physiological coupling and signal crosstalk. LMs, especially periodic ones, often involve subtle postural shifts or respiratory modulation, leading to correlated thoracic movements. Moreover, muscle crosstalk may produce detectable low-frequency changes in thoracic effort signals. In contrast, abdominal and nasal channels provide limited relevance. Specifically, abdominal movements are more closely associated with breathing effort rather than limb activity, while nasal airflow often remains stable during LM. Including these channels may introduce noise that masks the informative Thor patterns. This observation highlights the need for further investigation using more precise biomechanical data.

From a signal acquisition perspective, the Nasal channel uses a nasal cannula connected to a pressure sensor to estimate airflow via pressure fluctuations, offering high sensitivity for SDB detection [34]. However, it may interfere with natural sleep and reduce user comfort. In comparison, thoracoabdominal signals are collected via respiratory inductive plethysmography (RIP) using inductive belts, which measure magnetic flux changes to reflect breathing effort [35]. This setup is more wearable and better suited for long-term monitoring, particularly in dynamic environments, with reduced sensitivity to body position. In summary, while the three-channel fusion yields the best detection performance, real-world applications require balancing accuracy with usability and comfort. For example, in home or mobile settings, thoracoabdominal signals may be preferable for their comfort and stability, whereas in clinical contexts where detection precision is essential, incorporating nasal airflow can enhance SDB detection. Therefore, input channel selection should be guided by specific use cases and detection goals.

4.6. Limitations

Despite promising results, this study has several limitations, which are as follows:

- Incomplete representation of clinical variability in training data: The current framework is trained primarily on event-containing segments. Including more event-free data could improve robustness and reduce false positives.

- Indirect identification of PLMS: PLMS detection relies on identifying LM and SDB events, followed by the exclusion of RRLM based on AASM guidelines. A dedicated mechanism for RRLM detection has yet to be developed. Future studies may explore a direct approach using respiratory inputs to enhance detection completeness and clinical relevance.

- In addition to model-level limitations, sensor-level considerations are crucial for practical deployment in sleep monitoring. While our study employed standard thoracic, abdominal, nasal airflow, and EMG signals, future work could benefit from more advanced sensing modalities for detecting both SDB and LM. One promising direction is the resonant force sensor based on ionic polymer metal composites (IPMCs), as proposed by Bonomo et al. [36]. These flexible sensors can detect millinewton-scale forces with DC response, making them well suited for capturing subtle physiological signals such as leg movements and thoracoabdominal respiration. Moreover, IPMCs can be fabricated into plastic microelectromechanical systems (PMEMS), enabling seamless integration into wearable devices or bedding for unobtrusive, home-based sleep monitoring.

5. Conclusions

We present AttenCRF-U, a joint detection framework for LM and SDB events in OSA patients. By integrating multi-scale feature extraction, MHSA, and CRF, AttenCRF-U leverages the temporal associations between LM and SDB to reduce isolated detection errors and improve the distinction between RRLM and PLMS. MHSA captures long-range dependencies, while CRF enhances sequence coherence. The U-Net backbone with multi-scale convolution further improves sensitivity and boundary accuracy across events of varying durations. Experiments on the Huashan dataset demonstrate strong performance, indicating clinical potential.

Beyond model performance, this study provides biomedical engineering insights. The joint modeling of LM and SDB using standard PSG signals, including thoracic, abdominal, nasal airflow, and EMG channels, reflects their physiological coupling and informs the design of multimodal diagnostic systems. This approach supports simplified sensor configurations and improved diagnostic efficiency. Given its compatibility with streaming architectures, AttenCRF-U is also suitable for integration into wearable or home-based sleep monitoring systems.

By jointly detecting LM and SDB events, the proposed framework improves understanding of their interaction, which is clinically relevant in comorbid PLMS and OSA. Notably, it may inform CPAP titration strategies by identifying RRLMs that influence treatment response, thereby supporting individualized OSA management. This study excluded wake epochs to focus on sleep-specific detection, which may limit applicability in home settings with frequent wake–sleep transitions. Future work will incorporate wake periods and explore generalization across populations and broader sleep event types to enable real-time, low-burden assessment and personalized intervention.

Author Contributions

Methodology, Q.L.; resources, K.L. and C.F.; data curation, K.L. and C.F.; writing—review and editing, Q.L., Y.Z., C.F., H.Y., C.C. and W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Huashan Hospital (protocol code 2021-811, approved in 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to ethical reasons.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| OSA | obstructive sleep apnea |

| SDB | sleep-disordered breathing |

| AHI | apnea–hypopnea index |

| LM | leg movement |

| PLMS | periodic limb movements during sleep |

| PLMI | PLMS index |

| AASM | American Academy of Sleep Medicine |

| RRLM | respiratory-related leg movement |

| MHSA | multi-head self-attention |

| CRF | conditional random field |

Appendix A. Precision–Recall Curves

Figure A1.

Precision–recall curves for LM and SDB event detection.

Appendix B. Ablation Study on Convolutional Kernel Combinations

Table A1.

Ablation study on convolutional kernel combinations in AttenCRF-U.

Table A1.

Ablation study on convolutional kernel combinations in AttenCRF-U.

| Kernel Size = 5 | Kernel Size = 11 | Kernel Size = 17 | Avg F1 |

|---|---|---|---|

| 🗸 | 0.762 | ||

| 🗸 | 0.754 | ||

| 🗸 | 🗸 | 🗸 | 0.695 |

| 🗸 | 🗸 | 0.773 | |

| 🗸 | 0.763 | ||

| 🗸 | 🗸 | 🗸 | 0.760 |

| 0.788 |

References

- Young, T.; Skatrud, J.; Peppard, P.E. Risk factors for obstructive sleep apnea in adults. JAMA 2004, 291, 2013–2016. [Google Scholar] [CrossRef] [PubMed]

- McNicholas, W.; McNicholas, W.T.; Levy, P.; Backer, W.D.; Douglas, N.; Marrone, O.; Montserrat, J.; Peter, J.H.; Rodenstein, D.; ERS Task Force; et al. Public health and medicolegal implications of sleep apnoea. Eur. Respir. J. 2002, 20, 1594–1609. [Google Scholar] [CrossRef]

- Kainulainen, S.; Töyräs, J.; Oksenberg, A.; Korkalainen, H.; Sefa, S.; Kulkas, A.; Leppänen, T. Severity of desaturations reflects OSA-related daytime sleepiness better than AHI. J. Clin. Sleep Med. 2019, 15, 1135–1142. [Google Scholar] [CrossRef] [PubMed]

- Scofield, H.; Roth, T.; Drake, C. Periodic limb movements during sleep: Population prevalence, clinical correlates, and racial differences. Sleep 2008, 31, 1221–1227. [Google Scholar] [CrossRef]

- Ferri, R.; Koo, B.B.; Picchietti, D.L.; Fulda, S. Periodic leg movements during sleep: Phenotype, neurophysiology, and clinical significance. Sleep Med. 2017, 31, 29–38. [Google Scholar] [CrossRef]

- Picchietti, D.L.; Stevens, H.E. Early manifestations of restless legs syndrome in childhood and adolescence. Sleep Med. 2008, 9, 770–781. [Google Scholar] [CrossRef]

- Zinchuk, A.V.; Jeon, S.; Koo, B.B.; Yan, X.; Bravata, D.M.; Qin, L.; Selim, B.J.; Strohl, K.P.; Redeker, N.S.; Concato, J.; et al. Polysomnographic phenotypes and their cardiovascular implications in obstructive sleep apnoea. Thorax 2018, 73, 472–480. [Google Scholar] [CrossRef]

- Budhiraja, R.; Javaheri, S.; Pavlova, M.K.; Epstein, L.J.; Omobomi, O.; Quan, S.F. Prevalence and correlates of periodic limb movements in OSA and the effect of CPAP therapy. Neurology 2020, 94, e1820–e1827. [Google Scholar] [CrossRef]

- Levin, K.H.; Chauvel, P. Chapter 25-Polysomnography. In Clinical Neurophysiology: Basis and Technical Aspects; Handbook of Clinical Neurology; Elsevier: Amsterdam, The Netherlands, 2019; Volume 160, pp. 381–392. [Google Scholar] [CrossRef]

- Berry, R.B.; Budhiraja, R.; Gottlieb, D.J.; Gozal, D.; Iber, C.; Kapur, V.K.; Marcus, C.L.; Mehra, R.; Parthasarathy, S.; Quan, S.F.; et al. Rules for scoring respiratory events in sleep: Update of the 2007 AASM manual for the scoring of sleep and associated events: Deliberations of the sleep apnea definitions task force of the American Academy of Sleep Medicine. J. Clin. Sleep Med. 2012, 8, 597–619. [Google Scholar] [CrossRef]

- Berry, R.B.; Brooks, R.; Gamaldo, C.; Harding, S.M.; Lloyd, R.M.; Quan, S.F.; Troester, M.T.; Vaughn, B.V. AASM scoring manual updates for 2017 (version 2.4). J. Clin. Sleep Med. 2017, 13, 6576. [Google Scholar] [CrossRef]

- Iv, H.M.; Leary, E.; Lee, S.Y.; Carrillo, O.; Stubbs, R.; Peppard, P.; Young, T.; Widrow, B.; Mignot, E. Design and Validation of a Periodic Leg Movement Detector. PLoS ONE 2014, 9, e114565. [Google Scholar] [CrossRef]

- Carvelli, L.; Olesen, A.N.; Brink-Kjær, A.; Leary, E.B.; Peppard, P.E.; Mignot, E.; Sørensen, H.B.D.; Jennum, P. Design of a deep learning model for automatic scoring of periodic and non-periodic leg movements during sleep validated against multiple human experts. Sleep Med. 2020, 69, 109–119. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.; Biswas, S.K.; Chunka, C.; Kumar Das, A. A Machine Learning Model for Obstructive Sleep Apnea Detection Using Ensemble Learning and Single-Lead EEG Signal Data. IEEE Sensors J. 2024, 24, 20266–20273. [Google Scholar] [CrossRef]

- Alić, B.; Wiede, C.; Viga, R.; Seidl, K. Feature-Based Detection and Classification of Sleep Apnea and Hypopnea Using Multispectral Imaging. IEEE J. Biomed. Health Inform. 2025, 29, 2074–2087. [Google Scholar] [CrossRef]

- Zhang, Z.; Wu, W.; Fu, Z.; Han, Z.; Zhang, J.; Sun, C.; Ma, D.; Wang, C. Real-Time Sleep Apnea Detection and Prediction From Single-Channel Airflow. IEEE Trans. Instrum. Meas. 2025, 74, 1–9. [Google Scholar] [CrossRef]

- Manconi, M.; Zavalko, I.; Fanfulla, F.; Winkelman, J.W.; Fulda, S. An evidence-based recommendation for a new definition of respiratory-related leg movements. Sleep 2015, 38, 295–304. [Google Scholar] [CrossRef]

- Waltisberg, D.; Amft, O.; Brunner, D.P.; Tröster, G. Detecting disordered breathing and limb movement using in-bed force sensors. IEEE J. Biomed. Health Inform. 2016, 21, 930–938. [Google Scholar] [CrossRef]

- Biswal, S.; Sun, H.; Goparaju, B.; Westover, M.B.; Sun, J.; Bianchi, M.T. Expert-level sleep scoring with deep neural networks. J. Am. Med Inform. Assoc. 2018, 25, 1643–1650. [Google Scholar] [CrossRef]

- Zahid, A.N.; Jennum, P.; Mignot, E.; Sorensen, H.B. MSED: A multi-modal sleep event detection model for clinical sleep analysis. IEEE Trans. Biomed. Eng. 2023, 70, 2508–2518. [Google Scholar] [CrossRef]

- Almutairi, H.; Hassan, G.M.; Datta, A. Detecting Sleep Disorders from NREM Using DeepSDBPLM. In Proceedings of the International Conference on Robotics, Vision, Signal Processing and Power Applications, Penang, Malaysia, 5–6 April 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 459–467. [Google Scholar] [CrossRef]

- Seeuws, N.; De Vos, M.; Bertrand, A. Avoiding Post-Processing With Event-Based Detection in Biomedical Signals. IEEE Trans. Biomed. Eng. 2024, 71, 2442–2453. [Google Scholar] [CrossRef]

- Seeuws, N.; De Vos, M.; Bertrand, A. A Human-in-the-Loop Method for Annotation of Events in Biomedical Signals. IEEE J. Biomed. Health Inform. 2025, 29, 95–106. [Google Scholar] [CrossRef] [PubMed]

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.u.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30. [Google Scholar]

- Krähenbühl, P.; Koltun, V. Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials. In Proceedings of the Advances in Neural Information Processing Systems; Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira,, F., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2011; Volume 24. [Google Scholar] [CrossRef]

- Sutton, C.; McCallum, A. An introduction to conditional random fields. Found. Trends® Mach. Learn. 2012, 4, 267–373. [Google Scholar] [CrossRef]

- Wallach, H.M.; Conditional random fields: An introduction. Technical Report MS-CIS-04-21, University of Pennsylvania, Department of Computer and Information Science. 2004. Available online: https://nemenmanlab.org/ilya/images/d/d4/Wallach-04.pdf (accessed on 24 February 2004).

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2017, arXiv:cs.LG/1412.6980. [Google Scholar]

- Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2017; pp. 240–248. [Google Scholar] [CrossRef]

- Zhang, Y.; Liu, S.; Li, C.; Wang, J. Rethinking the dice loss for deep learning lesion segmentation in medical images. J. Shanghai Jiaotong Univ. (Sci.) 2021, 26, 93–102. [Google Scholar] [CrossRef]

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, New York, NY, USA, 15–19 October 2016; pp. 516–520. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Sabil, A.; Glos, M.; Günther, A.; Schöbel, C.; Veauthier, C.; Fietze, I.; Penzel, T. Comparison of apnea detection using oronasal thermal airflow sensor, nasal pressure transducer, respiratory inductance plethysmography and tracheal sound sensor. J. Clin. Sleep Med. 2019, 15, 285–292. [Google Scholar] [CrossRef]

- Kogan, D.; Jain, A.; Kimbro, S.; Gutierrez, G.; Jain, V. Respiratory Inductance Plethysmography Improved Diagnostic Sensitivity and Specificity of Obstructive Sleep Apnea. Respir. Care 2016, 61, 1033–1037. [Google Scholar] [CrossRef]

- Bonomo, C.; Fortuna, L.; Giannone, P.; Graziani, S.; Strazzeri, S. A resonant force sensor based on ionic polymer metal composites. Smart Mater. Struct. 2007, 17, 015014. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).