Triplanar Point Cloud Reconstruction of Head Skin Surface from Computed Tomography Images in Markerless Image-Guided Surgery

Abstract

1. Introduction

- This work presents a novel method for extracting the outer skin layer from CT scans that can handle interference from external objects. The contour points of each valid slice are projected into 3D space using slice metadata to form uniplanar point clouds, which are fused via geometric addition into a triplanar point cloud with greater density and detail.

- To the best of our knowledge, no existing studies focus on extracting only the skin surface using DICOM metadata for registration purposes. We propose a novel approach that addresses this gap, resulting in a triplanar point cloud that structurally and visually resembles those captured intraoperatively by 3D cameras. This similarity enhances mutual compatibility and enables precise and robust markerless image-to-patient registration.

Related Work

2. Materials and Methods

2.1. Data Preprocessing

| Algorithm 1: CT slice to binary mask conversion |

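The HU-based thresholding named in the title of Algorithm 1 can be illustrated with a minimal sketch. It assumes the raw pixel values are converted to Hounsfield units with the DICOM rescale slope and intercept, and that a single lower threshold separates air from tissue. The function name `slice_to_binary_mask` and the threshold of -300 HU are hypothetical choices for illustration, not values taken from the paper.

```python
import numpy as np


def slice_to_binary_mask(raw_pixels, slope=1.0, intercept=-1024.0, hu_threshold=-300.0):
    """Convert one CT slice to a binary mask (255 = tissue, 0 = background).

    slope / intercept play the role of the DICOM RescaleSlope and
    RescaleIntercept attributes; hu_threshold is an assumed value.
    """
    # Raw stored values -> Hounsfield units (air is about -1000 HU, water is 0 HU).
    hu = np.asarray(raw_pixels, dtype=np.float32) * slope + intercept
    # Everything at or above the threshold is treated as part of the head.
    return (hu >= hu_threshold).astype(np.uint8) * 255


# Example: two air-like pixels and two tissue-like pixels.
example = slice_to_binary_mask([[0, 1024], [1100, 500]])
```

The same function would be applied to axial, sagittal, and coronal slices alike, since the thresholding does not depend on slice orientation.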

2.2. Image Processing

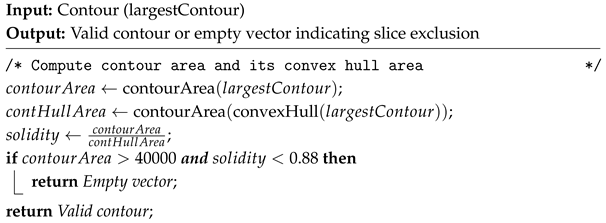

| Algorithm 2: Contour Solidity Validation |

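Solidity is conventionally defined as the ratio of a contour's area to the area of its convex hull, so a smooth head outline scores close to 1 while a contour with deep concavities scores lower. The sketch below computes that ratio without external dependencies. In OpenCV the same quantities are available through `cv2.contourArea` and `cv2.convexHull`. The function names and the acceptance threshold of 0.9 are assumptions for illustration, since the threshold used in Algorithm 2 is not given here.

```python
def polygon_area(pts):
    """Shoelace formula for a closed polygon given as [(x, y), ...]."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices in order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def solidity(contour):
    """Contour area divided by convex hull area (0.0 for degenerate input)."""
    pts = [tuple(p) for p in contour]
    hull_area = polygon_area(convex_hull(pts))
    return polygon_area(pts) / hull_area if hull_area > 0 else 0.0


def passes_solidity(contour, min_solidity=0.9):
    """min_solidity is a hypothetical threshold, not the paper's value."""
    return solidity(contour) >= min_solidity
```

For example, a square has a solidity of 1.0, whereas an L-shaped outline with vertices (0,0), (4,0), (4,2), (2,2), (2,4), (0,4) has an area of 12 against a hull area of 14 and would be rejected at the assumed threshold.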

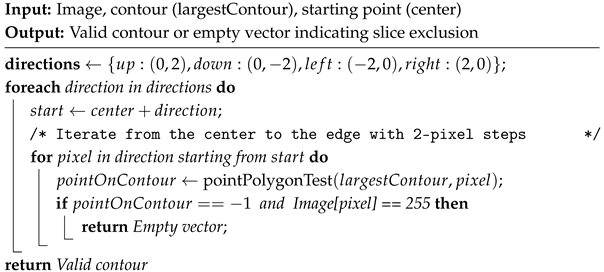

| Algorithm 3: Four-Direction Contour Validation |


The final filter is applied based on heuristically determined locations of medical equipment in the lower region of the image.

2.3. Spatial Reconstruction

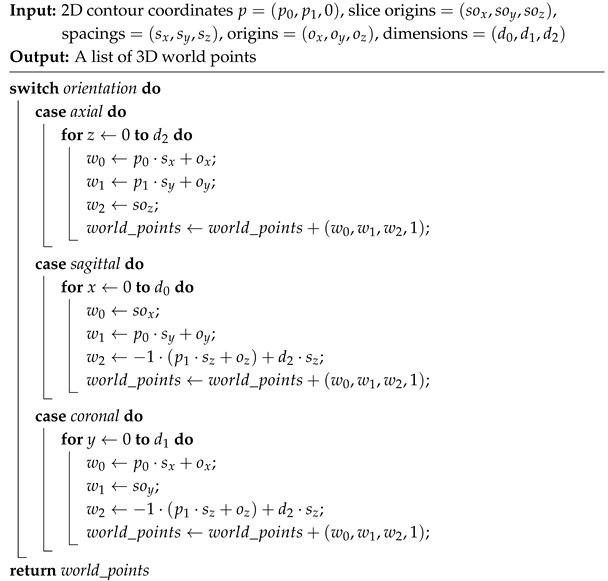

| Algorithm 4: 2D Slice Contour Point to 3D Space |

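A common way to map a 2D contour point to 3D patient coordinates is the image plane equation from the DICOM standard, which combines the slice origin, the two in-plane direction cosines, and the pixel spacing. The sketch below assumes that the slice metadata referred to in this work are the `ImagePositionPatient`, `ImageOrientationPatient`, and `PixelSpacing` attributes. The function name and argument layout are hypothetical.

```python
import numpy as np


def contour_to_patient_space(points_cr, image_position, image_orientation, pixel_spacing):
    """Map (column, row) pixel indices of one slice to 3D patient coordinates in mm.

    image_position    : ImagePositionPatient, centre of the first pixel (x, y, z)
    image_orientation : ImageOrientationPatient, six direction cosines
    pixel_spacing     : PixelSpacing as (row spacing, column spacing)
    """
    pts = np.asarray(points_cr, dtype=float)                   # shape (N, 2)
    origin = np.asarray(image_position, dtype=float)
    row_dir = np.asarray(image_orientation[:3], dtype=float)   # direction of increasing column index
    col_dir = np.asarray(image_orientation[3:], dtype=float)   # direction of increasing row index
    row_spacing, col_spacing = pixel_spacing
    return (origin
            + np.outer(pts[:, 0] * col_spacing, row_dir)
            + np.outer(pts[:, 1] * row_spacing, col_dir))


# Example: an axial slice at z = 50 mm with 0.5 mm pixels.
xyz = contour_to_patient_space(
    [(10, 20)],
    image_position=(-100.0, -120.0, 50.0),
    image_orientation=(1, 0, 0, 0, 1, 0),
    pixel_spacing=(0.5, 0.5),
)
```

Applying the function to every valid contour of one view and stacking the results would give that view's uniplanar point cloud. Sagittal and coronal slices only differ in the orientation and position values passed in.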

3. Results

3.1. Datasets

3.2. Identifying Unique Points

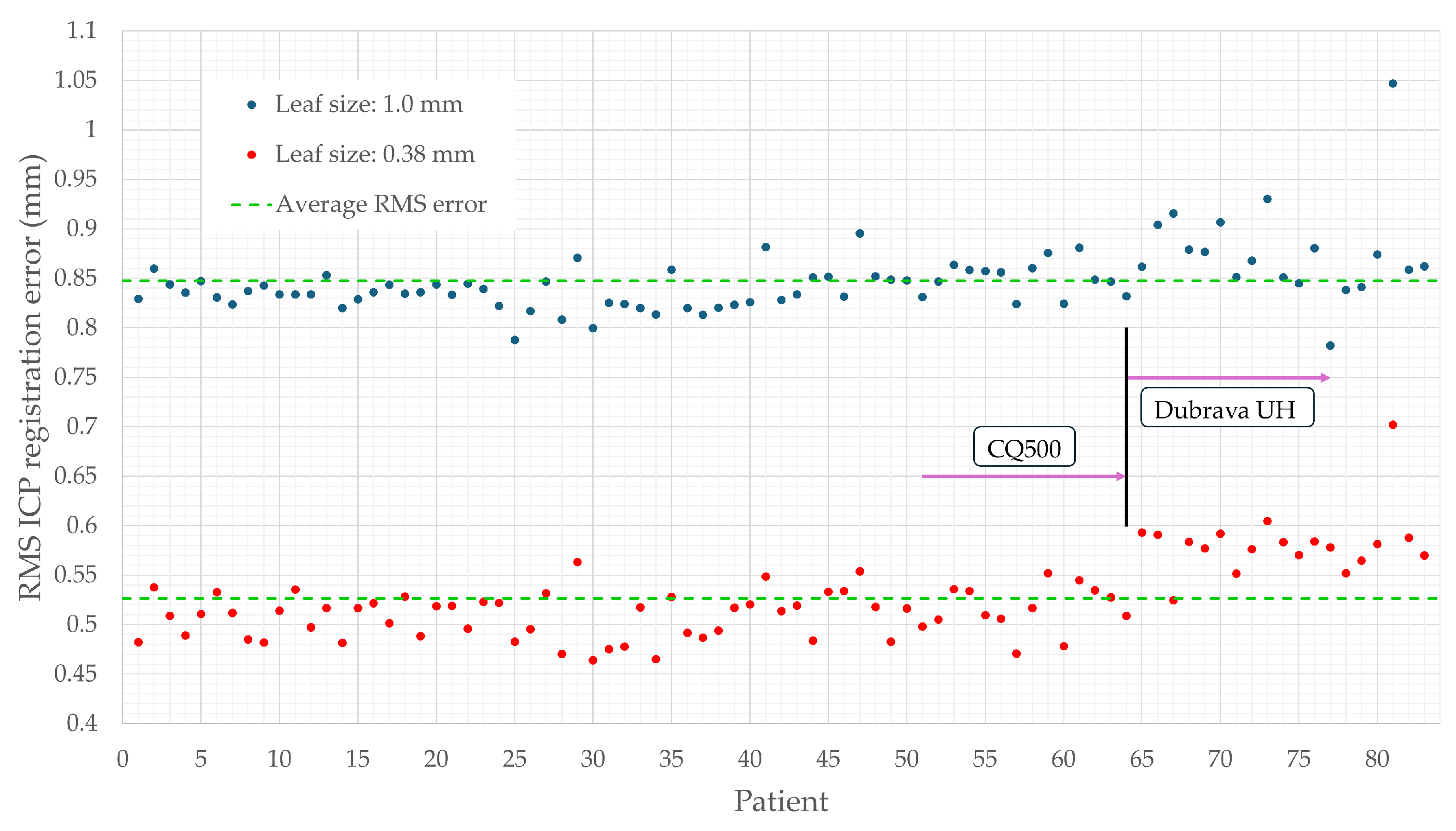

3.3. Positional Accuracy Analysis

3.4. Assessing Triplanar Point Cloud Density

3.5. Execution Time

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CT | Computed Tomography |

| RMS | Root Mean Square |

| IGS | Image-Guided Surgery |

| MRI | Magnetic Resonance Imaging |

| 3D | Three-Dimensional |

| 2D | Two-Dimensional |

| HU | Hounsfield Unit |

| CTA | Computed Tomography Angiography |

| FCM | Fuzzy C-Means |

| DICOM | Digital Imaging and Communications in Medicine |

| VTK | Visualization Toolkit |

| ITK | Insight Segmentation and Registration Toolkit |

| MIMICS | Materialise Interactive Medical Image Control System |

| PCL | Point Cloud Library |

| CNN | Convolutional Neural Network |

| OpenCV | Open Source Computer Vision Library |

| GT | Ground Truth |

| ICP | Iterative Closest Point |

References

1. Unger, M.; Berger, J.; Melzer, A. Robot-Assisted Image-Guided Interventions. Front. Robot. AI 2021, 8, 664622.

2. Jerbić, B.; Švaco, M.; Chudy, D.; Šekoranja, B.; Šuligoj, F.; Vidaković, J.; Dlaka, D.; Vitez, N.; Župančić, I.; Drobilo, L.; et al. RONNA G4—Robotic Neuronavigation: A Novel Robotic Navigation Device for Stereotactic Neurosurgery. In Handbook of Robotic and Image-Guided Surgery; Abedin-Nasab, M.H., Ed.; Elsevier: Amsterdam, The Netherlands, 2020; pp. 599–625.

3. Taleb, A.; Guigou, C.; Leclerc, S.; Lalande, A.; Bozorg Grayeli, A. Image-to-Patient Registration in Computer-Assisted Surgery of Head and Neck: State-of-the-Art, Perspectives, and Challenges. J. Clin. Med. 2023, 12, 5398.

4. Ulinuha, M.A.; Yuniarno, E.M.; Hariadi, M.; Eddy Purnama, I.K. Extraction of Skull and Face Surfaces from CT Images. In Proceedings of the International Conference of Artificial Intelligence and Information Technology (ICAIIT), Yogyakarta, Indonesia, 13–15 March 2019; pp. 37–40.

5. Ulinuha, M.A.; Yuniarno, E.M.; Nugroho, S.M.S.; Hariadi, M. Outer contour extraction of skull from CT scan images. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 185, p. 012028.

6. Wu, Z.; Wang, L.; Li, Y.; Dai, S.; Zhang, D. Head CT Image Segmentation and Three-Dimensional Reconstruction Technology Based on Human Anatomy. Comput. Intell. Neurosci. 2022, 2022, 7091476.

7. Tuan, T.A.; Kim, J.Y.; Bao, P.T. Adaptive Region Growing for Skull, Brain, and Scalp Segmentation from 3D MRI. Biomed. Eng. Appl. Basis Commun. 2019, 31, 1950033.

8. Zhou, Z.; Yang, Z.; Jiang, S.; Zhu, T.; Ma, S.; Li, Y.; Zhuo, J. Design and validation of a navigation system of multimodal medical images for neurosurgery based on mixed reality. Vis. Inform. 2023, 7, 64–71.

9. Chen, Y.W.; Shih, C.T.; Cheng, C.Y.; Lin, Y.C. The Development of Skull Prosthesis Through Active Contour Model. J. Med. Syst. 2017, 41, 164.

10. Roser, P.; Birkhold, A.; Preuhs, A.; Stimpel, B.; Syben, C.; Strobel, N.; Kowarschik, M.; Fahrig, R.; Maier, A. Fully-Automatic CT Data Preparation for Interventional X-Ray Skin Dose Simulation. In Bildverarbeitung für die Medizin 2020; Tolxdorff, T., Deserno, T., Handels, H., Maier, A., Maier-Hein, K., Palm, C., Eds.; Springer Vieweg: Wiesbaden, Germany, 2020; pp. 251–257.

11. Hokmabadi, E.; Abrishami Moghaddam, H.; Mohtasebi, M.; Kazemloo, A.; Gity, M.; Wallois, F. Subject-Specific Probability Maps of Scalp, Skull and Cerebrospinal Fluid for Cranial Bones Segmentation in Neonatal Cerebral MRIs. IRBM 2024, 45, 100844.

12. Dangi, S.; Shah, H.; Porras, A.; Paniagua, B.; Linte, C.; Linguraru, M.; Enquobahrie, A. Robust head CT image registration pipeline for craniosynostosis skull correction surgery. Healthc. Technol. Lett. 2017, 4, 174–178.

13. Gupta, P.; Jain, N. Segmentation-Based Fusion of CT and MR Images. J. Digit. Imaging Inform. Med. 2024, 37, 2635–2648.

14. Dai, Z.; Yang, R.; Hang, F.; Zhuang, J.; Lin, Q.; Wang, Z.; Lao, Y. Neurosurgical Craniotomy Localization Using Interactive 3D Lesion Mapping for Image-Guided Neurosurgery. IEEE Access 2019, 7, 10606–10616.

15. Deepika, P.; Marisetty, S.; Pavan, K.; Vinay, C.K.; Srikanth, T.K.; Vazhayil, V.; Rao, M. Autonomous Neuro-Navigation System for Neurosurgical Robotics. In Proceedings of the 2020 IEEE SENSORS, Rotterdam, The Netherlands, 25–28 October 2020; pp. 1–4.

16. Yoo, H.; Sim, T. A Deep Learning-Based Approach for Automated Coarse Registration (ACR) of Image-Guided Surgical Navigation. IEEE Access 2022, 10, 115884–115894.

17. Lechelek, L.; Horna, S.; Zrour, R.; Naudin, M.; Guillevin, C. A Hybrid Method for 3D Reconstruction of MR Images. J. Imaging 2022, 8, 103.

18. Tiwary, P.; Delbos, B.; Chalard, R.; Moreau, R.; Lelevé, A.; Cheng, I. 3D Segmentation and Visualization of Human Brain CT Images for Surgical Training—A VTK Approach. In Smart Multimedia; Berretti, S., Su, G.M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; Volume 13497, pp. 202–212.

19. Eley, K.; Delso, G. Automated 3D MRI rendering of the craniofacial skeleton: Using ZTE to drive the segmentation of black bone and FIESTA-C images. Neuroradiology 2021, 63, 91–98.

20. Gsaxner, C.; Pepe, A.; Wallner, J.; Schmalstieg, D.; Egger, J. Markerless Image-to-Face Registration for Untethered Augmented Reality in Head and Neck Surgery. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019; Shen, D., Liu, T., Peters, T.M., Staib, L.H., Essert, C., Zhou, S., Yap, P.T., Khan, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11768.

21. Mazzocchetti, S.; Spezialetti, R.; Bevini, M.; Badiali, G.; Lisanti, G.; Salti, S.; Di Stefano, L. Neural shape completion for personalized Maxillofacial surgery. Sci. Rep. 2024, 14, 19810.

22. Vu, B.T.D.; Kamona, N.; Kim, Y.; Ng, J.J.; Jones, B.C.; Wehrli, F.W.; Song, H.K.; Bartlett, S.P.; Lee, H.; Rajapakse, C.S. Three contrasts in 3 min: Rapid, high-resolution, and bone-selective UTE MRI for craniofacial imaging with automated deep-learning skull segmentation. Magn. Reson. Med. 2025, 93, 245–260.

23. Matzkin, F.; Newcombe, V.; Stevenson, S.; Khetani, A.; Newman, T.; Digby, R.; Stevens, A.; Glocker, B.; Ferrante, E. Self-supervised Skull Reconstruction in Brain CT Images with Decompressive Craniectomy. In Medical Image Computing and Computer-Assisted Intervention; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12262, pp. 390–399.

24. Sarmah, M.; Arambam, N. CCCD: Corner detection and curve reconstruction for improved 3D surface reconstruction from 2D medical images. Turk. J. Electr. Eng. Comput. Sci. 2023, 31, 928–950.

25. Zhang, R.; Lee, H.; Zhao, X.; Song, H.K.; Vossough, A.; Wehrli, F.W.; Bartlett, S.P. Bone-Selective MRI as a Nonradiative Alternative to CT for Craniofacial Imaging. Acad. Radiol. 2020, 27, 1515–1522.

26. Hu, D.; Liang, H.; Qu, S.; Han, C.; Jiang, Y. A fast and accurate brain extraction method for CT head images. BMC Med. Imaging 2023, 23, 124.

27. Stephan, C.; Meikle, B.; Freudenstein, N.; Taylor, R.; Claes, P. Facial soft tissue thicknesses in craniofacial identification: Data collection protocols and associated measurement errors. Forensic Sci. Int. 2019, 304, 109965.

28. Lev, M.; Gonzalez, R. CT Angiography and CT Perfusion Imaging. In Brain Mapping: The Methods, 2nd ed.; Elsevier Science: San Diego, CA, USA, 2002; pp. 427–484.

29. Dlaka, D.; Švaco, M.; Chudy, D.; Jerbić, B.; Šekoranja, B.; Šuligoj, F.; Vidaković, J.; Romić, D.; Raguž, M. Frameless stereotactic brain biopsy: A prospective study on robot-assisted brain biopsies performed on 32 patients by using the RONNA G4 system. Int. J. Med. Robot. Comput. Assist. Surg. 2021, 17, e2245.

30. Chilamkurthy, S.; Ghosh, R.; Tanamala, S.; Biviji, M.; Campeau, N.G.; Venugopal, V.K.; Mahajan, V.; Rao, P.; Warier, P. Deep learning algorithms for detection of critical findings in head CT scans: A retrospective study. Lancet 2018, 392, 2388–2396.

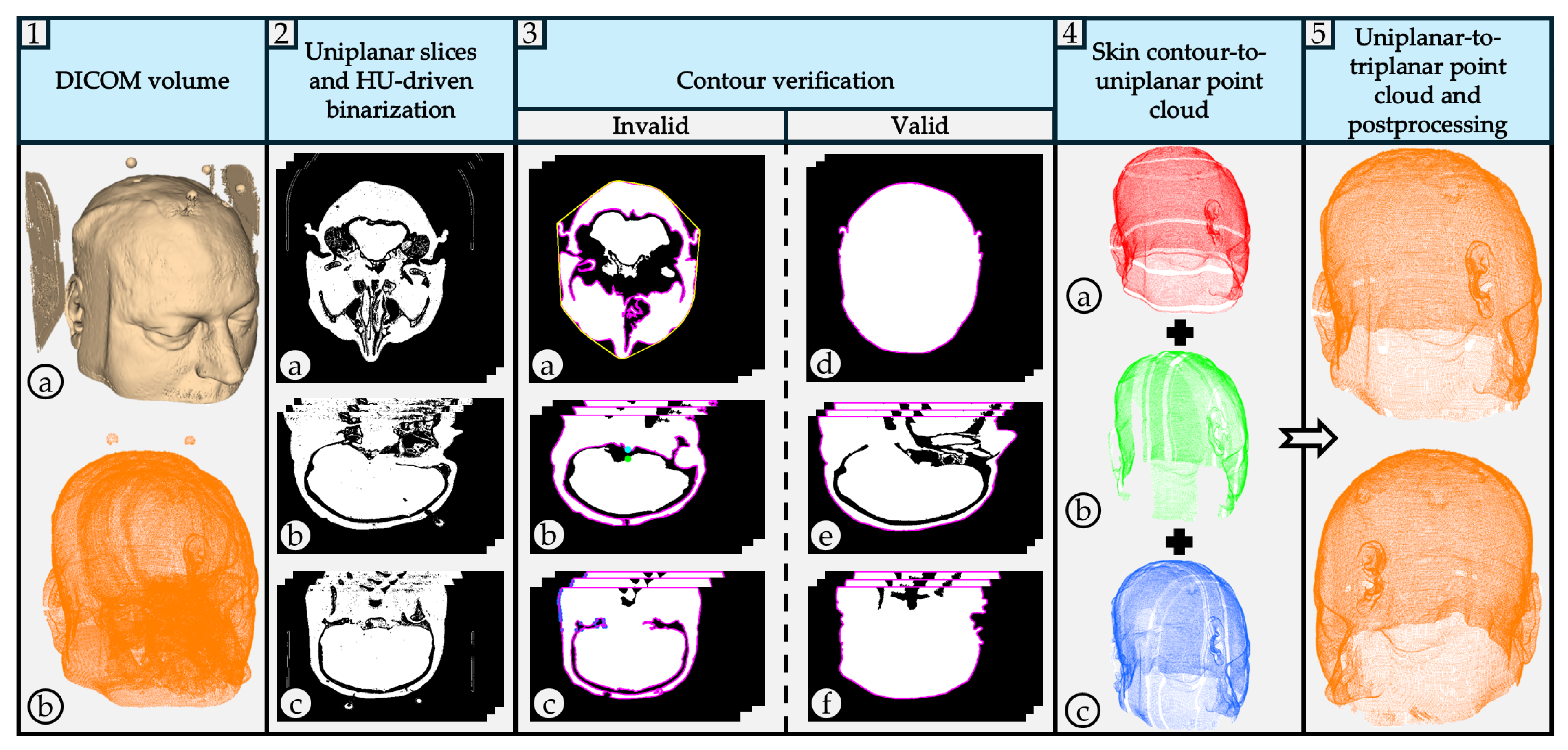

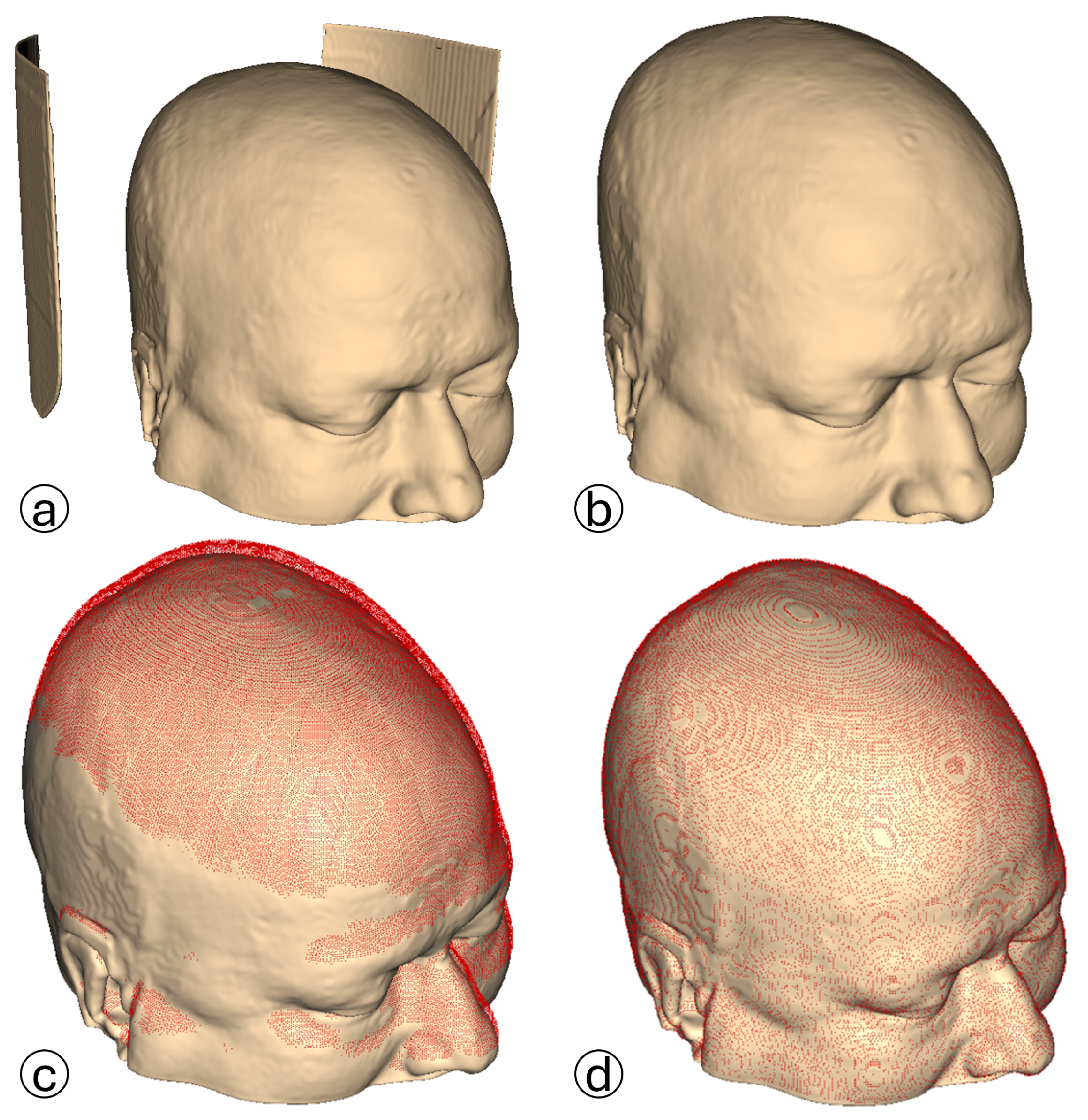

The CT scan model is processed in 3D Slicer using the CT soft tissue segmentation preset. Medical equipment artifacts and fiducial markers are visible.

The 3D reconstructed point cloud, generated using the proposed algorithm, is presented without filters to emphasize external and intracranial noise.

The acquired uniplanar slices (axial, sagittal, coronal) undergo HU-based thresholding to generate binary images: axial binary images; sagittal binary images; coronal binary images. The pseudocode is given in Algorithm 1.

Valid contour extraction is performed on the binary images to delineate the skin layer. Several filters are applied to exclude images containing invalid contours:

Contour solidity validation filter. The pink contour represents the largest contour found using OpenCV’s findContours function, while the yellow contour represents the contour’s convex hull. The pseudocode is given in Algorithm 2.

Four-direction contour validation filter. The pink contour represents the largest contour found. The blue circle represents the contour’s center of mass, while the green circle represents the pixel that satisfies both conditions presented in Algorithm 3.

Pixel counting filter. The pink contour represents the largest contour found. The algorithm iterates over image rows in steps of 4, evaluating each column using an OpenCV method to identify contour pixels. A contour pixel is considered valid if it is at least 4 pixels away from the last detected one, in which case a contour pixel counter is incremented. If this condition is met at least 4 times in a row, a row pixel counter is also incremented. Images in which the row pixel counter exceeds a threshold of 4 are excluded. Blue points indicate detected contour pixels.

Valid axial slice contour. Valid sagittal slice contour. Valid coronal slice contour.

Valid contours are used to generate 3D point cloud reconstructions from each view, yielding three uniplanar point clouds: axial point cloud; sagittal point cloud; coronal point cloud.

The generated uniplanar point clouds are merged into a triplanar point cloud to maximize structural detail and density. The triplanar point cloud is downsampled to eliminate redundant points and processed using two distinct outlier removal filters.

The same triplanar skin surface point cloud is presented from two perspectives, with medical equipment artifacts, fiducial markers, and intracranial noise eliminated.
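The pixel counting filter described in the captions above can be sketched as follows. The caption does not name the OpenCV method used to detect contour pixels, so this sketch assumes the contour has already been drawn into a binary mask (non-zero = contour pixel). It also assumes that the distance is measured from the previous contour pixel in the same row and that the first pixel in a row counts as valid. All function and parameter names are hypothetical.

```python
import numpy as np


def count_busy_rows(contour_mask, row_step=4, min_gap=4, min_hits=4):
    """Count sampled rows that contain at least min_hits well-separated contour pixels."""
    mask = np.asarray(contour_mask)
    busy_rows = 0
    for r in range(0, mask.shape[0], row_step):      # every 4th row
        hits = 0
        last_col = None
        for c in np.flatnonzero(mask[r]):            # columns holding contour pixels
            # Valid only if far enough from the previously detected contour pixel.
            if last_col is None or c - last_col >= min_gap:
                hits += 1
            last_col = c
        if hits >= min_hits:
            busy_rows += 1
    return busy_rows


def is_excluded(contour_mask, max_busy_rows=4):
    """Exclude the image when more than max_busy_rows sampled rows are busy."""
    return count_busy_rows(contour_mask) > max_busy_rows
```

The intuition is that a clean head outline crosses each image row only about twice, so rows with four or more separated crossings suggest extra structures such as equipment or internal edges.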

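The merging and downsampling of the three uniplanar point clouds can be illustrated with a short sketch. It assumes that "geometric addition" means concatenating the point sets in a shared coordinate frame and that redundant points are removed with a voxel grid that keeps one point per occupied voxel. The voxel size of 1.0 mm and the function name are hypothetical, and the two outlier removal filters are not reproduced because they are not specified here.

```python
import numpy as np


def merge_and_downsample(clouds, voxel_size=1.0):
    """Concatenate point clouds and keep the first point found in each voxel."""
    merged = np.vstack([np.asarray(c, dtype=float) for c in clouds])
    # Integer voxel coordinates of every point.
    voxel_idx = np.floor(merged / voxel_size).astype(np.int64)
    # Index of the first point that falls into each distinct voxel.
    _, first = np.unique(voxel_idx, axis=0, return_index=True)
    return merged[np.sort(first)]


# Example: points that land in the same 1 mm voxel are collapsed to one.
axial = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
sagittal = [[0.2, 0.1, 0.3], [0.0, 10.0, 0.0]]
coronal = [[10.4, 0.4, 0.4]]
triplanar = merge_and_downsample([axial, sagittal, coronal])
```

Because the three views sample the same surface along different slicing directions, many points coincide after merging. The voxel grid removes those near-duplicates while retaining points that only one view contributes.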

| Study | Modality | Segmentation Method | Reconstruction/Output | ML/AI Used | Skin Extraction Focus |
|---|---|---|---|---|---|
| Ulinuha et al. [4,5] | CT | Thresholding + Scanning | Face + skull reconstruction | No | Yes [4]; No [5] |
| Wu et al. [6] | CT | Morphology + Region Growing + Level Set | Skull reconstruction | No | No |
| Tuan et al. [7] | MRI | Otsu Thresholding + Pixel Clustering | Skull segmentation | No | No |
| Zhou et al. [8] | CTA | Manual Seed + Region Growing | Skull pixel removal | No | No |
| Chen et al. [9] | CT | Binary Mask + Active Contour | Skull reconstruction | No | No |
| Roser et al. [10] | CT | HU Filtering + Canny Edge + Connected Components | Skin layer segmentation | No | Yes |
| Hokmabadi et al. [11] | MRI | Histogram Thresholding + Morphology + Coupled Level Sets | Skull + scalp segmentation | No | Yes |
| Dangi et al. [12] | CT | Multi-threshold + Connected Components | Skull segmentation | No | No |
| Gupta et al. [13] | CT + MRI | Fuzzy C-means Clustering | Bone structure segmentation | No | No |
| Dai et al. [14] | CT | Fast Marching + Thresholding + Filtering | Scalp reconstruction | No | Yes |
| Deepika et al. [15] | MRI | Thresholding + Marching Cubes | Skin reconstruction | No | Yes |
| Yoo et al. [16] | CT | Marching Cubes | Skin reconstruction | No | Yes |
| Lechelek et al. [17] | MRI | FE + MPU | 3D brain reconstruction | No | No |
| Tiwary et al. [18] | CT | HU-based Segmentation (VTK) | Soft tissue + skull rendering | No | Yes |
| Eley et al. [19] | MRI | Automated Segmentation (ZTE) | 3D craniofacial reconstruction | Yes | Yes |
| Gsaxner et al. [20] | CT | Studierfenster + Marching Cubes | Skin reconstruction | No | Yes |
| Mazzocchetti et al. [21] | CT | Depth Maps + MIMICS | 3D merged point clouds | Yes | Yes |
| Vu et al. [22] | CT + MRI | Thresholding + U-Net | Skull reconstruction (MRI + CT) | Yes | No |
| Matzkin et al. [23] | CT | Thresholding + U-Net | Skull reconstruction | Yes | No |
| Sarmah et al. [24] | CT | Edge Detection + Curvature + GNN | 3D skull models | Yes | No |
| Zhang et al. [25] | CT | Threshold + ML Classification | Skull reconstruction | Yes | No |
| Hu et al. [26] | CT | Threshold + CNN Closure + Morphology | Skull segmentation | Yes | No |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cvetić, J.; Šekoranja, B.; Švaco, M.; Šuligoj, F. Triplanar Point Cloud Reconstruction of Head Skin Surface from Computed Tomography Images in Markerless Image-Guided Surgery. Bioengineering 2025, 12, 498. https://doi.org/10.3390/bioengineering12050498
