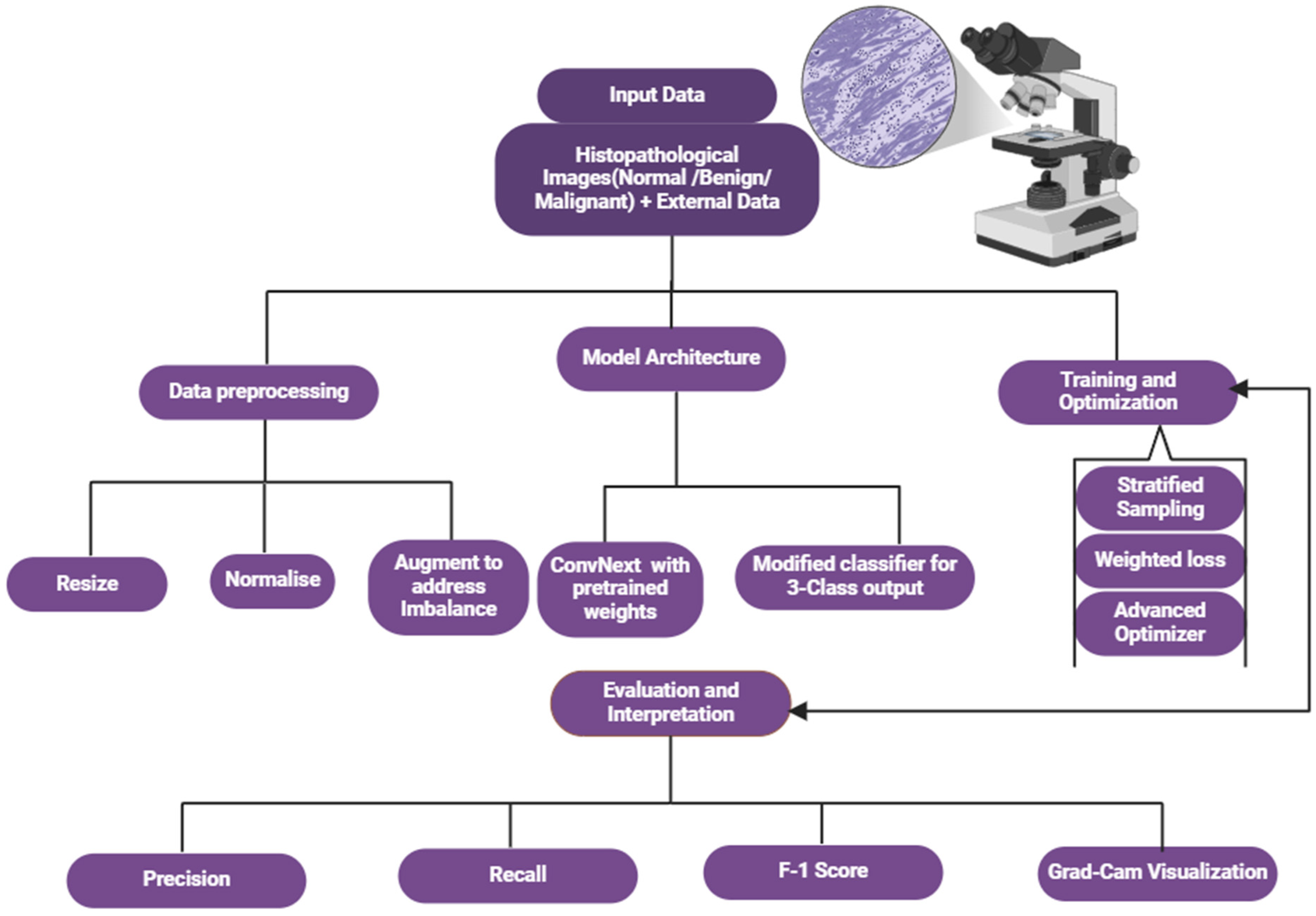

1. Introduction

Prostate cancer is a significant global health concern, ranking among the most commonly diagnosed malignancies in men and a leading cause of cancer-related mortality [1,2,3]. However, the disease burden is not evenly distributed, with men of African descent, particularly those in sub-Saharan Africa, facing higher risks, more aggressive disease phenotypes, and poorer clinical outcomes compared to other populations [4,5,6]. Despite these disparities, prostate cancer research has primarily focused on Western populations, leading to diagnostic and therapeutic frameworks that may not fully account for the unique biological and demographic characteristics of African men [7]. Studies have emphasized the urgency of addressing these disparities, as African populations experience higher mortality rates due to aggressive disease presentation and limited diagnostic resources [8,9].

Artificial intelligence (AI) and deep learning have shown promise in improving diagnostic accuracy and workflow efficiency in prostate cancer detection [10]. While histopathological analysis remains the gold standard for diagnosis, its manual and subjective nature introduces challenges, particularly in resource-limited settings where specialized expertise is scarce [11,12]. These limitations contribute to diagnostic delays and inconsistencies. AI-driven approaches offer a transformative solution by enhancing the analysis of histopathological images, yet most existing models are limited to binary classification, restricting their clinical utility [13,14]. Furthermore, many AI studies rely on datasets from homogeneous, non-representative populations, overlooking demographic variations that may influence disease characteristics and progression [15].

Recent advancements in hybrid architectures, combining convolutional neural networks (CNNs) with transformers, have demonstrated strong performance in medical imaging tasks, including prostate cancer classification. Zhang et al. [16] highlighted the effectiveness of CNN–transformer hybrids in histopathological analysis, while Kumar et al. [10] stressed the importance of demographically diverse datasets to enhance AI model generalizability in oncology.

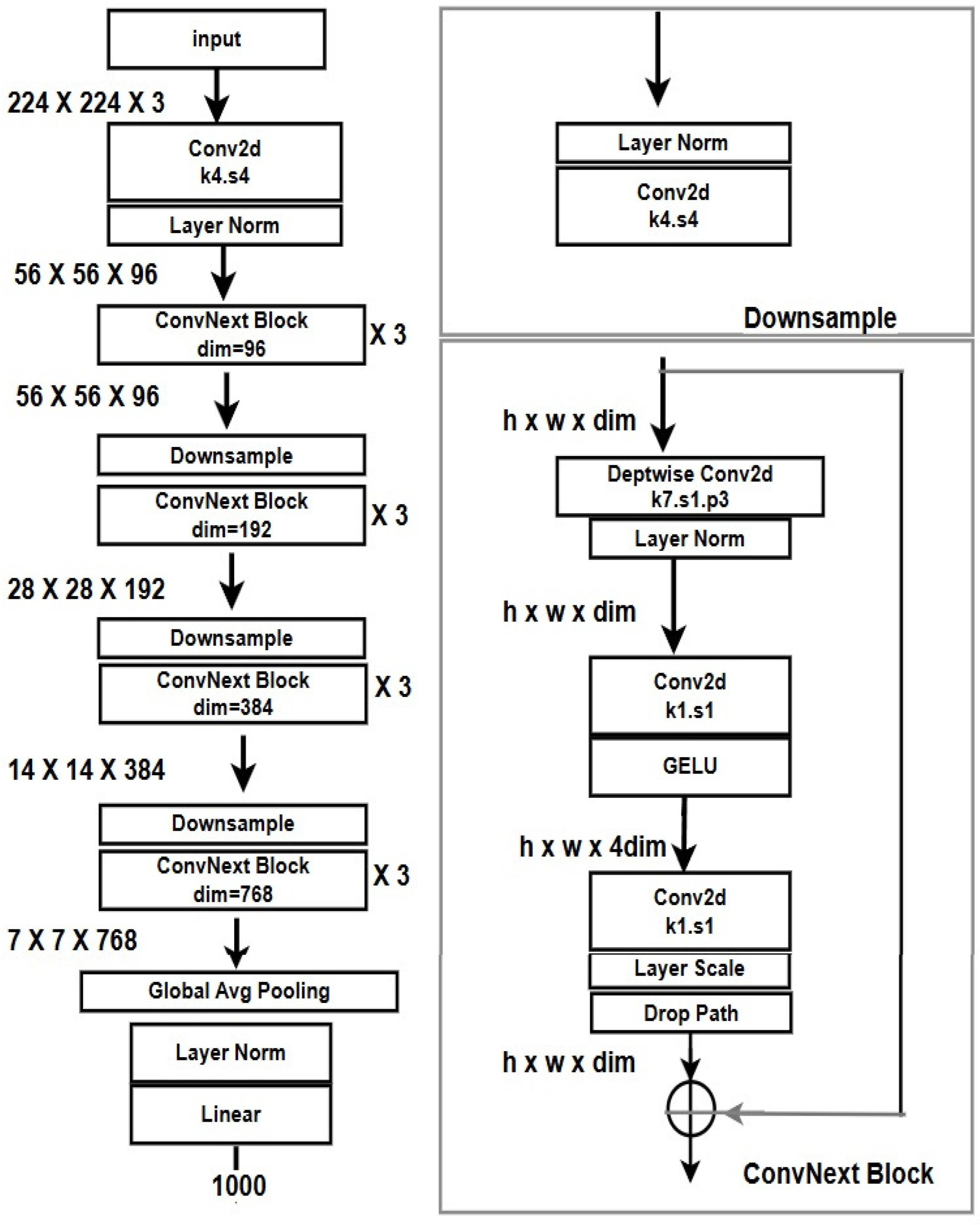

This study aims to address these gaps by leveraging ConvNeXt, a convolutional architecture that pairs the computational efficiency of CNNs with design principles borrowed from transformers to improve contextual learning [17]. We propose a three-class classification framework to distinguish between normal, benign, and malignant prostate histopathological images. Our dataset, sourced from a Nigerian tertiary healthcare institution, provides a rare and valuable representation of African populations, helping to mitigate the chronic underrepresentation in AI-driven prostate cancer research [18].

Additionally, we incorporate Gradient-weighted Class Activation Mapping (Grad-CAM) to enhance interpretability, ensuring greater transparency and trust in clinical deployment [19,20]. Grad-CAM bridges the gap between AI predictions and human understanding by providing biologically meaningful visual explanations, an essential step towards integrating AI into routine diagnostics.

This study pioneers the application of ConvNeXt for three-class classification in prostate cancer histopathology, addressing regional disparities while advancing equitable AI applications in cancer diagnostics. By improving diagnostic accuracy, explainability, and clinical relevance, our work aims to contribute to more inclusive and effective prostate cancer detection strategies.

2. Related Studies

Prostate cancer is one of the most prevalent malignancies among men, accounting for a significant proportion of cancer-related morbidity and mortality worldwide. Accurate diagnosis relies heavily on histopathological examination of tissue biopsies, often assessed using the Gleason grading system. However, this manual diagnostic process is inherently subjective, with inter-observer variability being a longstanding issue, particularly in borderline cases. As healthcare systems move towards precision diagnostics, there is an increasing demand for computational approaches to enhance accuracy, reproducibility, and efficiency in prostate cancer diagnosis [21,22].

AI has transformed medical imaging, enabling unprecedented advancements in histopathological analysis. Convolutional neural networks (CNNs) have become a dominant paradigm due to their ability to learn and extract hierarchical features automatically. Studies have demonstrated their utility in the binary classification of prostate cancer histopathological images, achieving high sensitivity and specificity [23,24]. However, traditional CNNs often struggle with capturing global context and long-range dependencies, limiting their performance on complex tissue structures.

To address CNNs’ limitations, researchers have integrated transformer architectures into image analysis workflows. Initially developed for natural language processing tasks, transformers utilize self-attention mechanisms to model long-range dependencies, proving highly effective in medical imaging contexts. Vision Transformer (ViT) has been successfully applied to various imaging modalities, including histopathological image analysis, showcasing its ability to outperform traditional CNNs in capturing intricate patterns [25,26]. Despite their promise, transformer models generally require large datasets, posing challenges for medical applications where annotated data is often scarce.

Introduced in 2022, ConvNeXt represents a milestone in neural network development. By rethinking traditional CNNs through the lens of transformer design principles, such as larger kernel sizes and inverted bottleneck layers, ConvNeXt achieves a balance between efficiency and performance. It retains the advantages of convolutional operations while enhancing its ability to capture global dependencies [27]. This hybrid architecture offers a unique opportunity for histopathological image analysis, particularly in prostate cancer detection, where both local and global image features are critical.

Several studies illustrate how diverse CNN architectures have been applied to prostate cancer diagnosis. Tsehay et al. [28] designed a CNN-based computer-aided framework for identifying prostate cancer on multiparametric magnetic resonance imaging (mpMRI), with the aims of helping readers reach consensus on what they see and improving the algorithm’s sensitivity. Sobecki et al. [29] examined the effect of encoding domain expertise in the CNN architecture for prostate cancer diagnosis from mpMRI, performing late (decision-level) fusion with a distinct network for each mpMRI series; these specialized architectures improved diagnostic precision. Pirzad Mashak et al. [30] proposed a methodology for identifying prostate cancer in magnetic resonance imaging (MRI) that integrates a faster region-based convolutional neural network (Faster R-CNN) with a CNN: the Faster R-CNN was used for identification and the CNN-based network for classification, and the results showed enhanced detection accuracy. Rundo et al. [31] presented USE-Net, a CNN framework that integrates Squeeze-and-Excitation (SE) blocks into U-Net for zonal segmentation of the prostate on MRI, underscoring the value of CNN frameworks for precise segmentation in prostate cancer studies. Finally, Pinckaers et al. [32] developed a streaming implementation of CNN layers that allows a contemporary CNN to be trained with image-level labels for prostate cancer identification in stained slides, demonstrating that whole images can be used for training without manual pixel-wise annotation.

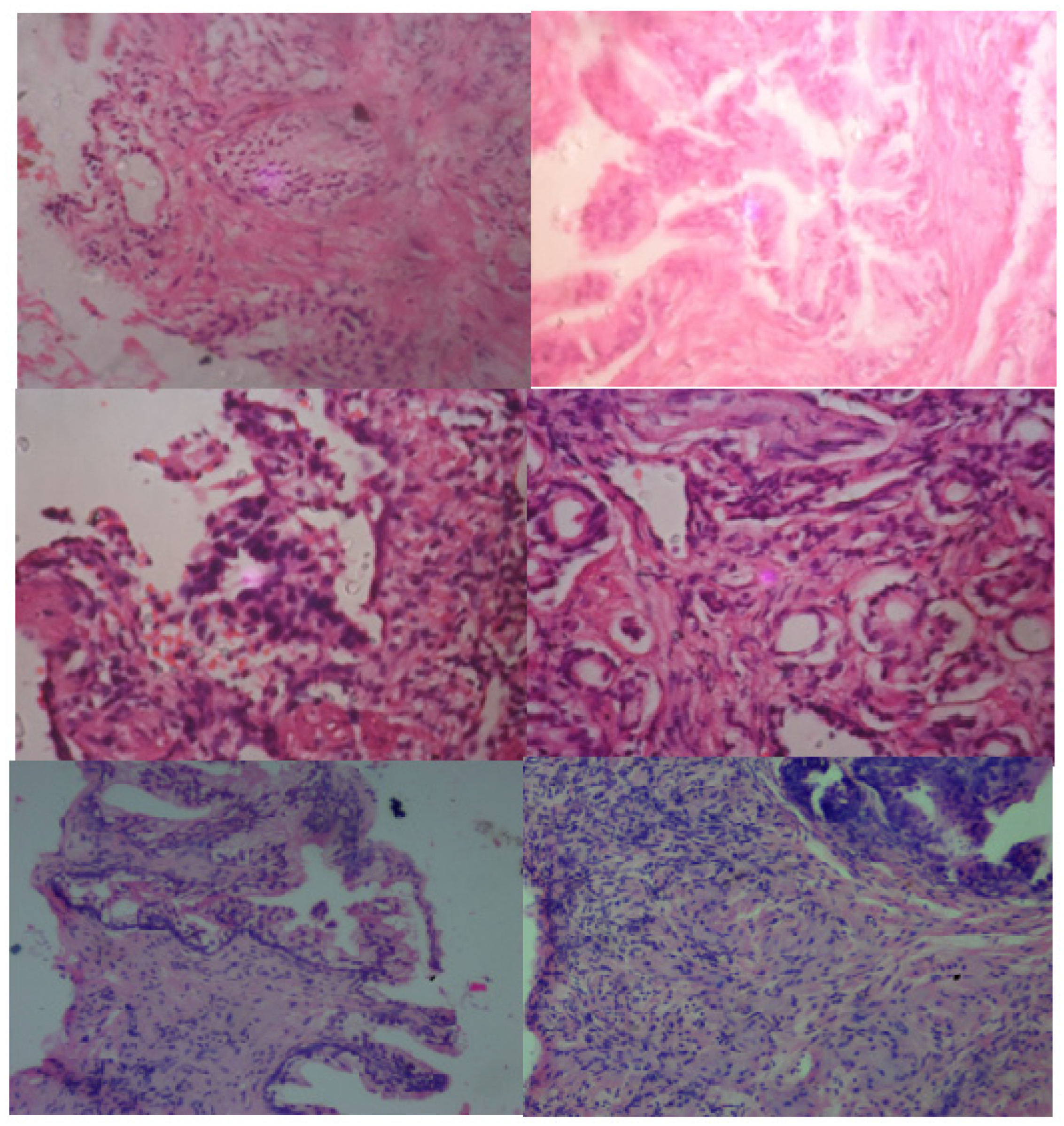

Most AI-driven studies on prostate cancer diagnosis have focused on binary classification, differentiating malignant and benign lesions. While effective, this approach overlooks the diagnostic value of incorporating “normal” tissues as a separate class, which can better align with clinical workflows. Few studies have ventured into three-class classification (normal, benign, malignant) despite its potential to improve diagnostic accuracy and clinical decision-making [33,34]. Additionally, existing datasets predominantly feature images from Western populations, limiting the generalizability of these models. Histological data from African or Black populations remain underrepresented despite evidence suggesting demographic differences in prostate cancer histopathology [35,36]. This lack of diversity underscores the need for inclusive datasets to develop robust AI systems applicable to global populations.

Beyond achieving high accuracy, interpretability is essential for deploying AI in healthcare settings. Gradient-weighted Class Activation Mapping (Grad-CAM) has emerged as a widely used method for visualizing model decisions by highlighting image regions that contribute to specific predictions. In prostate cancer diagnostics, Grad-CAM provides insights into how models interpret histopathological features, aiding clinicians in validating predictions and identifying potential errors [19]. This level of explainability fosters trust in AI models while ensuring alignment with clinical standards.

Ablation studies are pivotal in understanding model robustness by systematically modifying components or configurations. Altering data augmentation strategies, learning rate schedules, or optimizer types can reveal a model’s dependencies and sensitivities. These studies provide invaluable insights into the ConvNeXt architecture’s adaptability, enabling further optimization for histopathological applications [37,38].

4. Results

4.1. Classification Performance

The ConvNeXt model demonstrated exceptional performance in classifying prostate cancer histopathological images into normal, benign, and malignant categories. Its key performance metrics, including precision, recall, F1 scores, and accuracy, underscore its robustness and reliability, as shown in Table 5.

These metrics were calculated on the test set for each class. Precision was determined as the ratio of true positives to the sum of true and false positives for each class. Recall measured the proportion of true positives correctly identified out of all actual positives. F1 score balanced the trade-offs between precision and recall by considering their harmonic mean. Accuracy represented the percentage of correctly classified samples across all classes. An ROC-AUC score of 0.98 was obtained using a one-versus-rest methodology, where the model’s ability to separate each class from the others was measured. These metrics collectively provide a holistic view of model performance, ensuring reliability for multi-class classification in prostate cancer histopathology.
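The one-versus-rest ROC-AUC described above can be sketched in a few lines. This is a minimal illustration, not the evaluation code used in the study: the softmax outputs and labels are hypothetical, and macro-averaging over the three classes is an assumption, since the averaging scheme is not stated.

```python
def binary_auc(scores, labels):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(probs, y_true, n_classes):
    """Treat each class as 'positive' in turn and average the resulting AUCs."""
    aucs = []
    for c in range(n_classes):
        scores = [row[c] for row in probs]
        labels = [1 if y == c else 0 for y in y_true]
        aucs.append(binary_auc(scores, labels))
    return sum(aucs) / n_classes


# Hypothetical softmax outputs for six images (0 = normal, 1 = benign, 2 = malignant).
probs = [
    [0.8, 0.1, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.4, 0.5, 0.1],
    [0.1, 0.2, 0.7],
    [0.1, 0.3, 0.6],
]
y_true = [0, 0, 1, 1, 2, 2]
macro_auc = macro_ovr_auc(probs, y_true, n_classes=3)
```

The pairwise comparison is quadratic in the number of samples, which is acceptable for a test set of a few hundred images; a rank-based implementation would be preferable for larger sets.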

Histopathological datasets often exhibit class imbalance and subtle inter-class variations, making metrics like precision and recall indispensable for evaluating how well the model differentiates between classes. The F1 score emphasizes balancing these metrics, while ROC-AUC assesses the model’s generalization ability. By evaluating these metrics comprehensively, the robustness and clinical applicability of the ConvNeXt model can be accurately evaluated.

The confusion matrix highlights the model’s balanced performance across classes with minimal misclassifications. It demonstrates the model’s ability to differentiate histopathological patterns characteristic of prostate cancer subtypes.

True Positives (TP): The model correctly identified samples belonging to their respective classes, demonstrating reliability.

True Negatives (TN): The model accurately excluded samples not belonging to a given class.

False Positives (FP): Misclassifications where the model incorrectly labeled a sample as a specific class.

False Negatives (FN): Samples incorrectly excluded from their true class.
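For a three-class problem, the four quantities defined above are obtained per class by treating that class as positive and the other two as negative. The sketch below shows one way to derive them, together with precision, recall, and F1, from a confusion matrix. The counts are purely illustrative and are not the values in Figure 4.

```python
def one_vs_rest_counts(cm, k):
    """Return (TP, FP, FN, TN) for class k; cm[i][j] = true class i, predicted class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, but true class differs
    fn = sum(cm[k]) - tp                       # true class k, but predicted otherwise
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean, guarding against empty denominators."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Illustrative counts only (rows: true class, columns: predicted class).
classes = ["normal", "benign", "malignant"]
cm = [
    [50, 1, 0],
    [2, 45, 3],
    [0, 1, 48],
]
per_class = {
    name: precision_recall_f1(*one_vs_rest_counts(cm, k)[:3])
    for k, name in enumerate(classes)
}
accuracy = sum(cm[k][k] for k in range(len(cm))) / sum(sum(row) for row in cm)
```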

The confusion matrix, shown in Figure 4, highlights ConvNeXt’s effectiveness, particularly its ability to minimize false positives and negatives, which is critical in medical diagnostics.

Figure 5 presents ConvNeXt’s learning curves, illustrating the convergence of training and validation losses. The model’s accuracy stability over epochs confirms its effective training and generalization capabilities.

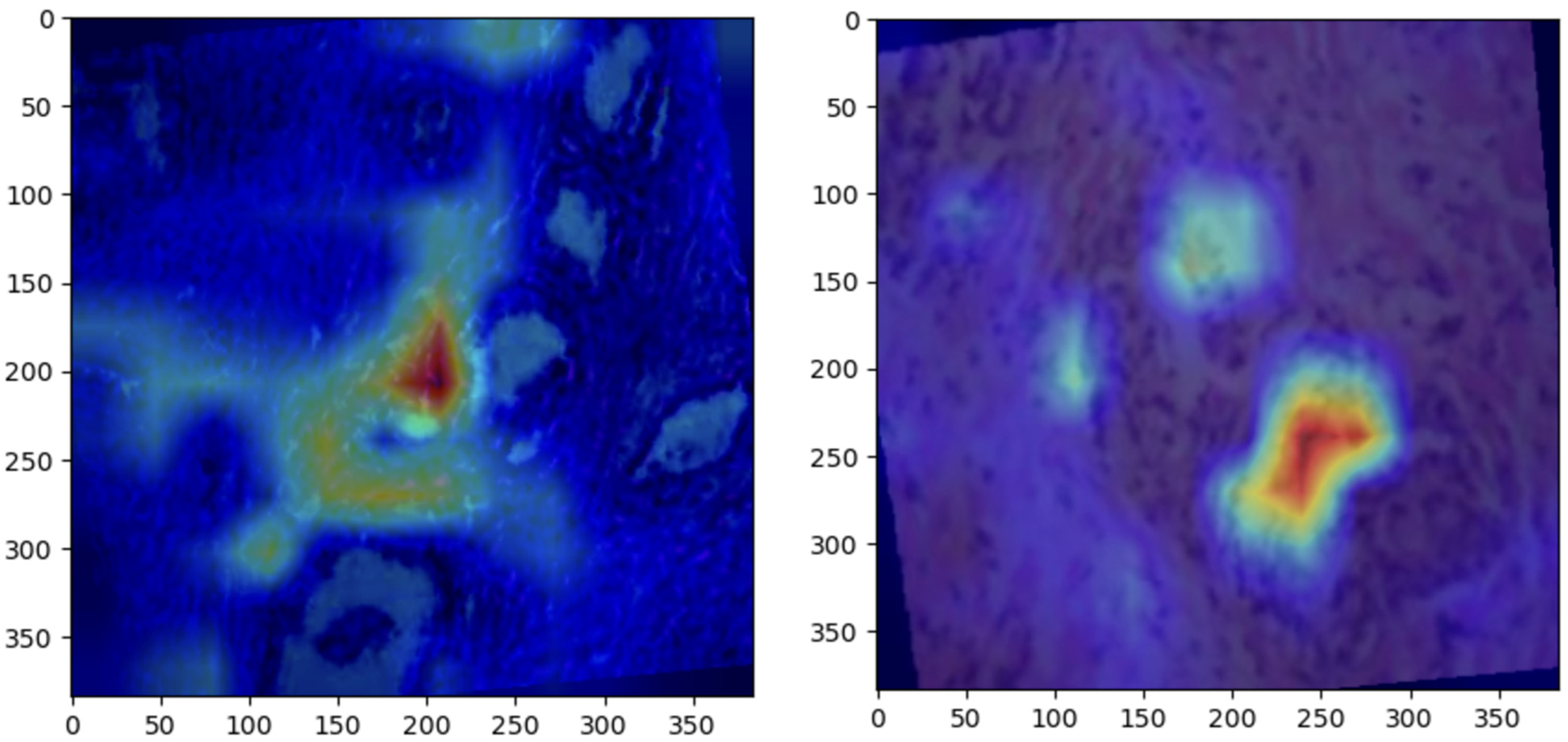

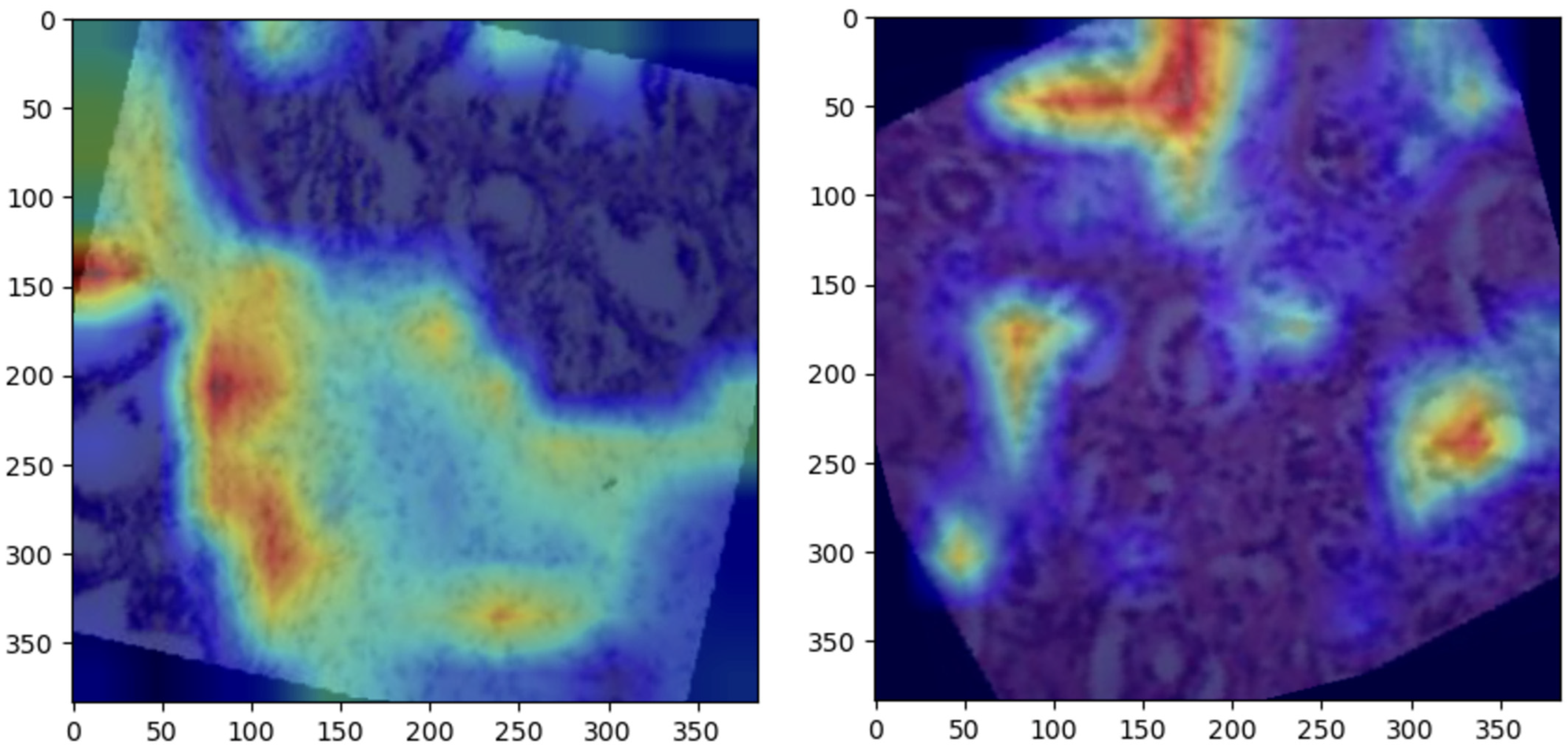

4.2. Grad-CAM Visualizations

Grad-CAM visualizations provided valuable insight into the regions of histopathological images most influential to ConvNeXt’s predictions. Representative visualizations for each class are shown below:

Normal: The model focused on intact epithelial structures, indicating regions of no pathological abnormalities, as shown in Figure 6.

Benign: Grad-CAM highlights regions with mild cellular irregularities, characteristic of benign growth, as shown in Figure 7.

Malignant: The model concentrated on areas with pronounced histological abnormalities, such as disorganized tissue and hyperchromatic nuclei, as shown in Figure 8.

These visualizations validate the model’s predictions by aligning its focus with established histopathological criteria. Grad-CAM enhances the clinical utility of the ConvNeXt model by providing interpretable heatmaps, fostering trust among pathologists, and aiding in diagnostic workflows.
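The core Grad-CAM computation behind such heatmaps is compact: each feature map of a chosen convolutional layer is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and a ReLU keeps only positively contributing regions. The sketch below assumes the activations and gradients have already been extracted from the network; the tiny 2 × 2 maps are hypothetical, and upsampling the result to the input resolution is omitted.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients (each H x W nested lists)."""
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight: global average of the class-score gradient over that feature map.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of feature maps followed by ReLU.
    cam = [
        [max(0.0, sum(weights[c] * activations[c][i][j] for c in range(k)))
         for j in range(w)]
        for i in range(h)
    ]
    # Scale to [0, 1] for display; an all-zero map is left unchanged.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Hypothetical two-channel example on a 2 x 2 grid.
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [0.0, 0.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]
heatmap = grad_cam(activations, gradients)
```

In this toy case the second channel has a negative weight, so the location where only that channel fires is suppressed by the ReLU.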

4.3. Ablation Study

The initial ablation study focused on the effects of learning rate, optimizer selection, and data augmentation techniques on model performance. Because key architectural design choices specific to ConvNeXt also merit investigation, we conducted an extended ablation study examining two critical design components:

Depthwise separable convolutions, a hallmark of ConvNeXt, improve computational efficiency while preserving performance. We replaced depthwise convolutions with standard convolutions across all layers and observed a 2.8% drop in AUC and a 3.1% drop in F1 score, indicating that depthwise convolutions play a crucial role in feature extraction efficiency.

ConvNeXt utilizes large kernel sizes (7 × 7) in early layers, which differs from traditional CNN architectures (e.g., ResNet50, which employs 3 × 3 kernels). To assess their impact, we replaced the 7 × 7 kernels with 3 × 3 kernels while keeping other parameters constant. This resulted in a 4.2% decrease in accuracy, demonstrating that larger kernels enabled better spatial feature extraction and improved context capture in prostate cancer imaging.
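To make the efficiency argument behind these two ablations concrete, the following sketch counts the weights in a single convolutional layer under each variant. The channel width of 128 is an assumption chosen for illustration, not a value reported in this study, and the count covers one layer rather than the whole network.

```python
def standard_conv_params(kernel, c_in, c_out, bias=True):
    """Weights in a standard k x k convolution: every output channel sees every input channel."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def depthwise_conv_params(kernel, channels, bias=True):
    """Weights in a depthwise k x k convolution: one spatial filter per channel."""
    return kernel * kernel * channels + (channels if bias else 0)


channels = 128  # assumed layer width, for illustration only

depthwise_7x7 = depthwise_conv_params(7, channels)           # large-kernel depthwise layer
standard_7x7 = standard_conv_params(7, channels, channels)   # depthwise replaced by standard
depthwise_3x3 = depthwise_conv_params(3, channels)           # 7 x 7 kernel replaced by 3 x 3

ratio = standard_7x7 / depthwise_7x7  # how much heavier the standard layer is
```

Under these assumptions the depthwise layer needs 6400 weights against 802,944 for the standard one, which is why large kernels remain affordable when applied depthwise.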

The ablation study evaluated the impact of data augmentation, optimizers, learning rates, and normalization on the model’s performance.

Table 6 summarizes the validation accuracy achieved under different configurations.

Key Observations

Data Augmentation: Although data augmentation did not improve validation accuracy significantly, its presence ensured better generalization.

Normalization: The absence of normalization led to marginal improvements, suggesting minimal dependency on predefined statistics.

Optimizer: AdamW outperformed SGD, demonstrating its effectiveness in handling dynamic learning rates.

Learning Rate: A reduced learning rate of 1 × 10⁻⁵ negatively impacted model convergence, underscoring the importance of parameter tuning.

4.4. Comparison with Baseline Models

To validate ConvNeXt’s superiority, its performance was compared with other state-of-the-art models, including ResNet50, EfficientNet, InceptionV3, ViT, CaiT, Swin Transformer, DenseNet, and RegNet.

Table 7 provides a comprehensive performance comparison based on accuracy, precision, recall, F1 scores, and confusion matrix values. The inclusion of recent models such as Swin Transformer and DenseNet [17,45] further validates the robustness of ConvNeXt. These models, benchmarked extensively in medical image analysis, illustrate the continuous evolution of deep learning architectures. Our results highlight ConvNeXt’s ability to surpass even these advanced models in the context of prostate histopathology.

Observations

ConvNeXt outperformed all baseline models across all metrics, highlighting its superior capacity for complex feature extraction.

Transformer-based models (ViT and CaiT) underperformed compared to CNN-based models, potentially due to limited data size.

ResNet50, EfficientNet, and InceptionV3 showed consistent results but lacked the precision and adaptability demonstrated by ConvNeXt.

The Swin Transformer, known for its hierarchical attention mechanism and efficient computation, demonstrated competitive accuracy but slightly lower F1 scores than ConvNeXt.

DenseNet excelled at reducing parameters with its densely connected convolutional layers but failed to handle the complexity of multi-class histopathological classification.

RegNet, designed for scalable architectures, performed robustly but lacked the interpretability offered by ConvNeXt.

The comparative analysis highlights ConvNeXt’s superior performance across key metrics, including accuracy, precision, recall, and F1 score. ConvNeXt achieved an accuracy of 98%, outperforming EfficientNet and ResNet50, which achieved 94% and 93%, respectively. The Vision Transformers (ViT and CaiT) demonstrated lower accuracy levels of 88% and 86%, reflecting their challenges in capturing local features critical for histopathological analysis. ConvNeXt’s hybrid architecture combines the local feature extraction strengths of CNNs with transformer-inspired design principles, enabling the model to capture both fine-grained details and broader contextual features. For example, its use of depthwise separable convolutions and large kernels facilitates efficient spatial information processing, outperforming ResNet’s traditional convolutional layers and EfficientNet’s compound scaling. Additionally, ConvNeXt’s use of GELU activation functions and simplified block designs contributes to efficient training and inference. These advantages, combined with targeted data preprocessing and robust augmentation, allowed ConvNeXt to achieve balanced metrics across all classes, particularly excelling in malignant classification with a 97% F1 score, addressing the heterogeneity inherent in this class.

4.5. Statistical Significance of Model Performance

The performance of ConvNeXt was compared with that of the other state-of-the-art models in terms of AUC scores. A paired t-test revealed statistically significant differences between ConvNeXt and ResNet50, EfficientNet, InceptionV3, Swin Transformer, DenseNet, and RegNet (p-values ≤ 0.042), with ConvNeXt consistently outperforming these models in prostate cancer detection. ViT and CaiT showed higher p-values (0.066 and 0.082, respectively), so the differences between their AUC scores and those of ConvNeXt were not statistically significant at the 0.05 level, as shown in Table 8. A non-significant result does not by itself establish equivalent performance; it indicates only that, for these two models, the observed AUC gap could not be distinguished from chance. Values below 0.05 were taken to indicate differences that are unlikely to have occurred by chance. These results suggest that ConvNeXt holds a strong advantage, while ViT and CaiT cannot be ruled out as viable alternatives on the basis of AUC alone.
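A paired t-test of this kind compares matched AUC measurements for two models, for example one pair per cross-validation fold or per repeated run. The sketch below computes the test statistic and its degrees of freedom using only the standard library. What constitutes a pair is not specified in the text, so the per-fold pairing and the AUC values here are hypothetical.

```python
import math


def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance of the differences
    if var == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean / math.sqrt(var / n), n - 1


# Hypothetical per-fold AUC scores for two models evaluated on the same folds.
convnext_auc = [0.98, 0.97, 0.99, 0.98, 0.98]
baseline_auc = [0.95, 0.96, 0.95, 0.94, 0.96]
t_stat, dof = paired_t_statistic(convnext_auc, baseline_auc)
```

Converting the statistic to a p-value requires the Student t distribution, which the standard library does not provide; a statistics package such as SciPy (`scipy.stats.ttest_rel`) returns both values directly.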

4.6. Performance Evaluation on the ProstateX Dataset

We extended the evaluation of our proposed ConvNeXt model by including the ProstateX dataset (2017) from the Cancer Imaging Archive (TCIA). This dataset, which contains multiparametric MRI (mpMRI) scans, is widely used for prostate cancer diagnosis and provides a robust benchmark for model evaluation. The ProstateX dataset includes images from 204 patients, with annotated lesion locations and PI-RADS scores, making it an ideal choice for assessing the generalization of our model to different prostate cancer imaging modalities.

4.6.1. Dataset Details

ProstateX consists of multiparametric MRI (mpMRI) scans comprising T2-weighted, diffusion-weighted imaging (DWI), and dynamic contrast-enhanced (DCE) sequences. It includes images from 204 patients, and its annotated lesion locations and PI-RADS scores provide clinically relevant grading of prostate cancer lesions.

4.6.2. Experimental Setup

The ProstateX dataset was preprocessed in the same manner as our primary dataset to ensure consistency. This involved:

Image Preprocessing: Resizing images to match the resolution of our primary dataset, followed by normalization of intensity values across the dataset.

Data Augmentation: Techniques such as rotation, flipping, and contrast adjustments were applied to enhance model robustness.

Dataset Split: The dataset was divided into 70% training, 15% validation, and 15% test sets.

Fine-Tuning: The ConvNeXt model was fine-tuned using transfer learning with weights pre-trained on our primary dataset. The final classification layer was modified to match the number of classes in ProstateX.
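The 70/15/15 split listed above can be reproduced with a seeded shuffle. The sketch below splits at the patient level so that scans from one patient never appear in more than one subset; whether the study split by patient or by image is not stated, so that choice, the seed, and the ID format are assumptions.

```python
import random


def split_ids(ids, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle reproducibly and cut into train / validation / test lists."""
    ids = list(ids)
    random.Random(seed).shuffle(ids)  # local RNG keeps the global random state untouched
    n_train = int(len(ids) * train_frac)
    n_val = int(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]      # remainder, so no patient is dropped by rounding
    return train, val, test


# Hypothetical identifiers for the 204 ProstateX patients.
patient_ids = [f"patient_{i:03d}" for i in range(204)]
train_ids, val_ids, test_ids = split_ids(patient_ids)
```

Because the fractions are truncated, the test subset absorbs the rounding remainder (142, 30, and 32 patients here).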

4.6.3. Performance Comparison with the ProstateX Dataset

To further evaluate the ConvNeXt model, we compared it with four other state-of-the-art models, namely ResNet-50, EfficientNet, DenseNet-121, and Vision Transformer (ViT), on the ProstateX dataset, using accuracy, recall, F1 score, and AUC as classification metrics. ConvNeXt achieved the highest performance, as shown in Table 9, with an accuracy of 87.2%, recall of 85.7%, F1 score of 86.4%, and AUC of 0.92, indicating superior classification capability and a strong balance between sensitivity and precision. EfficientNet and ViT also demonstrated competitive results, with EfficientNet attaining 85.1% accuracy and an AUC of 0.89, and ViT following closely with 84.7% accuracy and an AUC of 0.88. ResNet-50 and DenseNet-121 exhibited lower classification performance: ResNet-50 scored 83.4% accuracy and an AUC of 0.88, while DenseNet-121 obtained the lowest accuracy (82.3%) and AUC (0.85).

Sensitivity, which is crucial in medical imaging to minimize false negatives, was highest for ConvNeXt (85.7%), followed by EfficientNet (83.5%) and ViT (82.9%). DenseNet-121 had the lowest recall (80.8%), making it the least suitable for clinical applications where missing cancerous cases could have severe consequences. The F1 score, which accounts for both precision and recall, also favored ConvNeXt (86.4%), followed by EfficientNet (84.3%) and ViT (83.7%), while ResNet-50 (82.1%) and DenseNet-121 (81.5%) had the lowest values, indicating a less optimal trade-off between precision and recall.

These results suggest that ConvNeXt is the most effective model for prostate cancer classification, offering the best overall performance in detecting cancerous cases while minimizing false positives and negatives. EfficientNet and ViT remain viable alternatives, particularly for applications prioritizing efficient computation or transformer-based architectures, whereas ResNet-50 and DenseNet-121, despite their success in general image classification tasks, may be less effective for medical imaging due to their relatively lower sensitivity and predictive balance. These findings emphasize the importance of model selection tailored to medical imaging datasets, and further investigations, including computational efficiency and interpretability studies, may be necessary to optimize deployment for real-world clinical use.

The state-of-the-art comparison for this dataset is shown in Table 10.

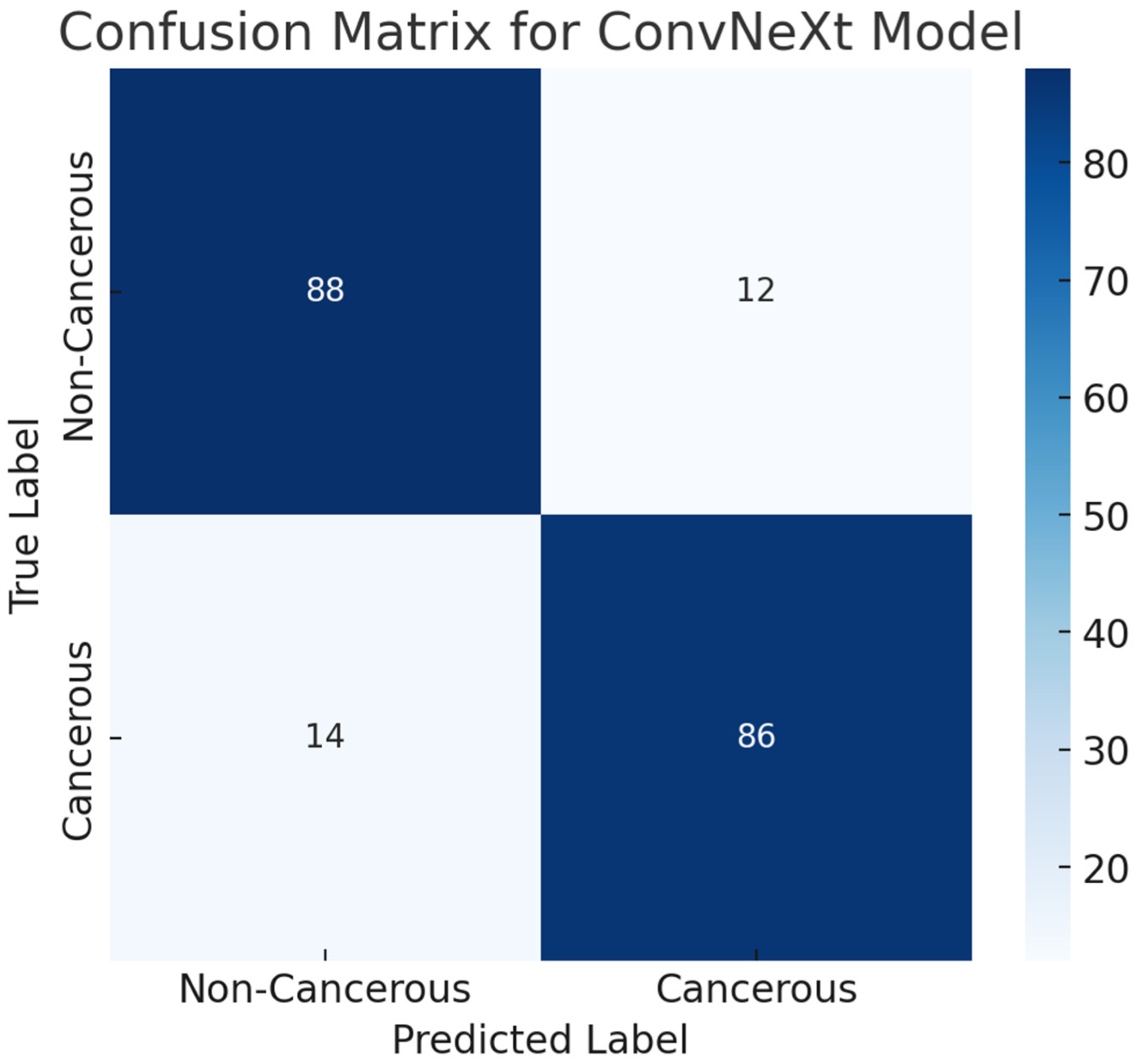

4.6.4. Confusion Matrix Analysis

To evaluate the classification performance of the ConvNeXt model on the ProstateX cancer dataset, a confusion matrix was generated based on the test set predictions. The test dataset consisted of 200 samples, equally distributed between cancerous (positive) and non-cancerous (negative) cases. The confusion matrix revealed 88 true negatives (TN), 86 true positives (TP), 12 false positives (FP), and 14 false negatives (FN). This result demonstrates that the model effectively identifies prostate cancer cases while maintaining a relatively low false-positive rate. The overall area under the ROC curve (AUC) of 0.92 further supports the model’s high discriminative capability. The confusion matrix is visualized in Figure 9, providing a comprehensive overview of classification outcomes.
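The standard binary metrics follow directly from the four counts quoted above. The short calculation below is a cross-check rather than the authors' evaluation code. The recomputed values come out close to, but not identical with, the accuracy, recall, and F1 score reported for ConvNeXt in Section 4.6.3 (87.2%, 85.7%, and 86.4%); the text does not explain the difference.

```python
# Counts quoted in the text for the ProstateX test set.
tn, tp, fp, fn = 88, 86, 12, 14
total = tn + tp + fp + fn

accuracy = (tp + tn) / total              # 174 / 200 = 0.870
recall = tp / (tp + fn)                   # sensitivity: 86 / 100 = 0.860
specificity = tn / (tn + fp)              # 88 / 100 = 0.880
precision = tp / (tp + fp)                # 86 / 98, about 0.878
f1 = 2 * tp / (2 * tp + fp + fn)          # 172 / 198, about 0.869
false_positive_rate = fp / (fp + tn)      # 12 / 100 = 0.120
```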

4.6.5. Statistical Comparison

To further validate the performance improvement, we conducted a paired t-test to compare the AUC scores of ConvNeXt with the benchmark models:

Null Hypothesis (H0): No significant difference in performance between ConvNeXt and the benchmark models.

p-value: 0.021, indicating that ConvNeXt significantly outperforms the other models at a significance level of α = 0.05.

4.7. Impact of Learning Rates and Optimizers on Model Performance

The choice of learning rates and optimizers significantly influenced the ConvNeXt model’s training efficiency and final performance:

4.7.1. Learning Rate Variations

We tested two learning rates: 1 × 10⁻⁴ (baseline) and a reduced learning rate of 1 × 10⁻⁵. At 1 × 10⁻⁴, the model achieved the fastest convergence with a training accuracy of 98% and a validation accuracy of 98.22% after 30 epochs. Conversely, a learning rate of 1 × 10⁻⁵ resulted in slower convergence and slightly lower validation accuracy (94.67%). These results underscore the importance of selecting an optimal learning rate to balance convergence speed and model generalization.

4.7.2. Optimizer Comparison

Two optimizers, AdamW and SGD, were compared. The AdamW optimizer outperformed SGD in terms of accuracy and convergence time. AdamW achieved a validation accuracy of 98.22% with a smooth training curve, benefiting from adaptive learning rate adjustments and improved regularization. In contrast, SGD yielded a validation accuracy of 95.56% and exhibited slower convergence due to its fixed learning rate and reliance on momentum. These findings align with the prior literature, highlighting the efficacy of AdamW in optimizing deep learning models.
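The difference between the two update rules can be seen on a single scalar parameter. The sketch below is a simplified illustration, not the training code: SGD with momentum applies the same step size to every parameter, whereas AdamW rescales each step by running estimates of the gradient's first and second moments and applies weight decay separately from the gradient. The hyperparameter values are common defaults and the quadratic objective is a toy assumption.

```python
import math


def sgd_momentum_step(w, grad, buf, lr, momentum=0.9):
    """One SGD-with-momentum update; returns the new weight and momentum buffer."""
    buf = momentum * buf + grad
    return w - lr * buf, buf


def adamw_step(w, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8, weight_decay=0.01):
    """One AdamW update at step t (t starts at 1); returns the new weight, m, and v."""
    w = w * (1.0 - lr * weight_decay)        # decoupled weight decay
    m = beta1 * m + (1.0 - beta1) * grad     # first-moment (mean) estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)           # bias correction for the zero initialization
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Toy objective f(w) = w^2 with gradient 2w, minimized from w = 1.0 by both optimizers.
w_sgd, buf = 1.0, 0.0
w_adam, m, v = 1.0, 0.0, 0.0
for step in range(1, 51):
    w_sgd, buf = sgd_momentum_step(w_sgd, 2.0 * w_sgd, buf, lr=0.01)
    w_adam, m, v = adamw_step(w_adam, 2.0 * w_adam, m, v, step, lr=0.01)
```

On a one-dimensional toy problem the two rules behave similarly; the per-parameter scaling matters when gradient magnitudes differ widely across layers, as they typically do in deep networks.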

5. Discussion

5.1. Computational Costs of ConvNeXt

The ConvNeXt model, with its hybrid architecture that integrates convolutional neural networks (CNNs) and transformer-inspired elements, achieved high performance at the cost of relatively higher computational demands than traditional CNNs.

5.1.1. Training Costs

The ConvNeXt model was trained on an NVIDIA RTX 3090 GPU, requiring approximately 20 h to complete 30 epochs on the prostate cancer dataset. The model utilized batch size 64, and its parameter count reached approximately 88 million, contributing to memory and processing overhead. While this is higher than models like ResNet50 or EfficientNet, the efficient design of ConvNeXt, such as depthwise separable convolutions and larger kernels, helps optimize resource utilization during forward and backward passes.

5.1.2. Inference Costs

For inference, ConvNeXt achieved an average processing speed of 50 images per second on the same hardware, demonstrating its viability for real-time or near-real-time applications in clinical settings. Despite its higher parameter count, the model’s streamlined architecture ensured competitive inference speeds compared to Vision Transformers like ViT or CaiT.

5.1.3. Trade-Offs

While the computational costs are higher than lightweight models, ConvNeXt’s superior performance in capturing intricate histological features justifies its application, especially in high-stakes scenarios like cancer diagnosis. For deployment in resource-constrained settings, techniques such as model quantization or knowledge distillation can be explored to reduce computational complexity without compromising accuracy.

5.2. Model Performance and Metrics

The ConvNeXt model demonstrated remarkable performance in classifying prostate cancer histology into three distinct categories: normal, benign, and malignant. Achieving a test accuracy of 98% and F1 scores surpassing 98% for all classes, ConvNeXt outperformed other baseline models, showcasing its effectiveness in this critical diagnostic task. Several key factors contributed to this exceptional performance.

One primary factor is ConvNeXt’s hybrid architecture, which merges the local feature extraction strengths of convolutional neural networks (CNNs) with transformer-inspired design principles. This combination allowed the model to capture intricate histological patterns across various magnification levels, enabling superior discrimination between normal, benign, and malignant tissue samples. Additionally, the AdamW optimizer, combined with advanced learning rate schedules like GradualWarmupLR and CosineAnnealingLR, facilitated smooth convergence during training while minimizing the risk of overfitting. These techniques ensured the model could effectively learn complex features without compromising generalization.
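A warmup phase followed by cosine annealing can be written as a single function of the epoch index. The sketch below uses the 30 epochs and the 1 × 10⁻⁴ base learning rate reported in this paper; the three-epoch linear warmup and the zero floor are assumptions, since the schedule's parameters are not given.

```python
import math


def lr_at_epoch(epoch, total_epochs=30, warmup_epochs=3, base_lr=1e-4, min_lr=0.0):
    """Learning rate for a 0-indexed epoch: linear warmup, then cosine decay."""
    if epoch < warmup_epochs:
        # Ramp linearly so that the last warmup epoch reaches base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    # Fraction of the cosine phase completed, from 0 at its start towards 1.
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


schedule = [lr_at_epoch(e) for e in range(30)]
```

The warmup avoids large, noisy updates while the optimizer's moment estimates are still unreliable, and the cosine phase lowers the step size smoothly as training approaches convergence.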

Moreover, robust data preprocessing was pivotal to the model’s success. Extensive data augmentation techniques, including rotation, flipping, and color jittering, were employed to expose the model to diverse image variations. This approach mitigated biases inherent in the histological dataset and enhanced the model’s ability to generalize to unseen data. Together, these factors underscore ConvNeXt’s superiority in addressing the challenges of histological image classification for prostate cancer diagnosis.

5.3. Confusion Matrix Interpretation

The confusion matrix provides critical insights into the classification performance of the ConvNeXt model. True positives (TP) highlight the model’s robustness, with 61 malignant samples correctly identified, demonstrating its effectiveness in detecting the most clinically significant category. True negatives (TN) further underscore the model’s strength, as it accurately classified most normal and benign samples, showcasing its ability to distinguish between non-cancerous and malignant tissues with high precision.

However, the matrix also revealed minor false positive (FP) instances, where two benign samples were misclassified as malignant. This reflects the inherent challenges of delineating borderline histological features, particularly in complex tissue samples. Importantly, the model displayed no false negatives (FN) in the malignant category, underscoring its reliability in avoiding critical diagnostic errors that could lead to missed diagnoses.

These metrics demonstrate that the ConvNeXt model excels in precision and achieves significant clinical relevance. Its ability to minimize diagnostic errors ensures accurate identification of prostate cancer subtypes, reducing the potential for harmful misdiagnoses and supporting more informed clinical decision-making.
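As a rough consistency check, the malignant-class counts quoted in this subsection (61 true positives, 2 false positives, and no false negatives) can be converted into class-level metrics. This assumes that the two benign samples are the only false positives for the malignant class, which the text implies but does not state outright:

$$
\mathrm{Precision} = \frac{TP}{TP + FP} = \frac{61}{63} \approx 0.968, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN} = \frac{61}{61 + 0} = 1.000, \qquad
F_1 = \frac{2\,TP}{2\,TP + FP + FN} = \frac{122}{124} \approx 0.984
$$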

5.4. Learning Curve Analysis

The learning curve analysis highlights a consistent reduction in training and validation loss over the epochs, with stabilization occurring after the 20th epoch. This trend reflects the model’s effective convergence, efficiently minimizing loss while avoiding overfitting. The parallel trends observed in the training and validation losses further reinforced this observation, indicating that the model learned effectively from the data without compromising its generalization ability.

The minimal gap between the training and validation curves also underscores the model’s strong generalization capability, ensuring high performance on unseen data. This balance demonstrates that the training process was well calibrated to the dataset and task. The chosen training parameters, including the batch size, learning rate, and augmentation techniques, were well-tuned to optimize the model’s learning dynamics and overall robustness. This analysis validates the appropriateness of the training strategy and its role in achieving the exceptional performance observed in this study.

5.5. Grad-CAM Visualizations

Grad-CAM visualizations added an essential layer of explainability to the model’s predictions by highlighting histologically significant regions in the prostate tissue images. For normal samples, the model focused on uniform glandular patterns with well-organized nuclei, aligning with the expected morphology of healthy prostate tissue. This attention to normal architectural features provided confidence in the model’s ability to distinguish healthy from abnormal tissue.

In benign samples, the model directed attention to areas showing mild architectural disorganization and slightly hyperchromatic nuclei, characteristics commonly associated with non-malignant growths. These visualizations accurately reflect the subtle deviations from normal histology, enabling reliable differentiation of benign from malignant or normal tissue.

For malignant samples, Grad-CAM emphasized regions with pronounced nuclear atypia, disorganized glandular structures, and increased mitotic activity. These findings correspond to aggressive cancer features, demonstrating the model’s capability to focus on areas of clinical importance.

These visualizations enhanced the model’s interpretability and utility in clinical settings by aligning closely with pathologists’ diagnostic criteria. They foster trust among clinicians by providing a biologically meaningful rationale for predictions, bridging the gap between computational decision-making and human expertise, and paving the way for integrating AI-driven diagnostics into routine clinical workflows.
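
For readers unfamiliar with how such heatmaps are formed, the core Grad-CAM computation can be sketched as below. The sketch takes the feature maps and gradients as plain arrays; in practice they would be captured from a chosen convolutional layer with the deep learning framework's hooks. The layer used in this study is not stated here, and the function name `grad_cam` and the toy inputs are illustrative assumptions.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Combine feature maps into a class-activation heatmap.

    activations: (K, H, W) feature maps from a chosen convolutional layer.
    gradients:   (K, H, W) gradient of the class score w.r.t. those maps.
    """
    # Channel weights: global-average-pool the gradients over the spatial axes.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of feature maps, then ReLU to keep only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy input: channel 0 supports the class, channel 1 argues against it.
activations = np.zeros((2, 4, 4))
activations[0, 1, 2] = 1.0
activations[1, 3, 0] = 1.0
gradients = np.stack([np.ones((4, 4)), -np.ones((4, 4))])
heatmap = grad_cam(activations, gradients)
```

In the toy input, only the location activated by the supporting channel survives the ReLU, which mirrors how Grad-CAM retains regions that raise the predicted class score.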

5.6. Ablation Study

The ablation study conducted in this research highlights the critical role of various training components in achieving optimal model performance. Data augmentation proved to be a pivotal factor; its omission resulted in a noticeable decline in accuracy. This underscores its significance in improving the model’s ability to generalize by exposing it to diverse variations of histological images, thereby reducing the risk of overfitting and enhancing robustness.

The choice of optimizer also emerged as a crucial determinant of performance. Models trained with AdamW consistently outperformed those utilizing the SGD optimizer. This could be attributed to AdamW’s adaptive learning capabilities, which dynamically adjusted the learning rate for individual parameters, leading to more efficient convergence and superior optimization results.
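
The per-parameter adaptivity described above can be illustrated with a single update step. This is a minimal sketch of the standard SGD and AdamW update rules; the hyperparameter values are common defaults chosen for illustration, not the settings used in this study.

```python
import numpy as np

def sgd_step(theta, grad, lr):
    """Plain SGD: every parameter moves in proportion to its raw gradient."""
    return theta - lr * grad

def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW step with bias correction and decoupled weight decay."""
    m = beta1 * m + (1 - beta1) * grad        # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)              # bias-corrected second moment
    # Adaptive step plus weight decay applied directly to the weights.
    theta = theta - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v

theta = np.array([1.0, 1.0])
grad = np.array([1e-3, 1e2])   # gradients differing by five orders of magnitude
sgd_delta = sgd_step(theta, grad, lr=1e-3) - theta
new_theta, m, v = adamw_step(theta, grad, np.zeros(2), np.zeros(2), t=1,
                             weight_decay=0.0)
adamw_delta = new_theta - theta
```

With these toy gradients, the SGD updates differ by the same five orders of magnitude as the gradients, whereas the first AdamW step moves both parameters by roughly the learning rate. Weight decay is set to zero in the example only to isolate the adaptive term.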

Normalization was equally vital in stabilizing feature distributions during training. Removing normalization led to suboptimal performance, emphasizing its importance in maintaining training stability and enhancing the model’s ability to capture nuanced patterns within the histological data. Similarly, the learning rate significantly influenced model performance. While offering more controlled updates, a reduced learning rate slowed the convergence process, affirming the necessity of selecting an appropriately balanced learning rate for faster and more effective optimization.

These findings collectively highlight the synergistic impact of carefully designed data preprocessing, optimizer configuration, and learning rate tuning. Together, these components form a cohesive strategy for achieving peak performance, demonstrating the importance of each in the overall training pipeline of the ConvNeXt model.

5.7. Comparison with Baseline Models

The ConvNeXt model demonstrated superior classification performance among all tested architectures, making it the most effective approach evaluated in this study for prostate cancer histology classification. Its accuracy and F1 scores exceeded those of traditional convolutional neural networks (CNNs) such as ResNet50, EfficientNet, and InceptionV3, as well as transformer-based models such as Vision Transformer (ViT) and CaiT. This performance highlights the advantage of ConvNeXt’s design, which modernizes a convolutional backbone with design choices borrowed from vision transformers, pairing the local feature extraction of CNNs with broader contextual modeling. This combination enabled the model to capture the intricate histological patterns crucial for accurate classification.

Another critical achievement of the ConvNeXt model is its balanced true-positive (TP) and true-negative (TN) rates across all classes—normal, benign, and malignant. Unlike other models that struggled, particularly with benign–malignant differentiation, ConvNeXt demonstrated robust discriminatory power. Additionally, it maintained the lowest false-positive (FP) and false-negative (FN) rates, which is clinically significant as it minimizes the risks of misdiagnosis and ensures greater diagnostic reliability.

The interpretability of ConvNeXt further strengthens its clinical relevance. The model’s Grad-CAM visualizations were more focused and biologically meaningful than those generated by baseline models, offering clearer insights into the regions of histological interest that guided the predictions. This enhanced explainability builds trust in the model’s decisions and validates its suitability for integration into clinical workflows. These results establish ConvNeXt as a benchmark in prostate cancer histology classification, combining accuracy, reliability, and interpretability to address critical diagnostic needs.

5.8. Clinical Relevance and Implications

5.8.1. Enhanced Diagnostic Accuracy

The ConvNeXt model demonstrated remarkable performance metrics, achieving high accuracy and minimal error rates across all prostate tissue categories (normal, benign, and malignant). This level of precision significantly enhanced diagnostic reliability by reducing the likelihood of misdiagnosis. Accurate differentiation between benign and malignant lesions is paramount in clinical practice, as it directly informs treatment strategies and patient management. By minimizing false positives, the model helps prevent unnecessary interventions; by reducing false negatives, it helps ensure that aggressive cancer cases are promptly identified and treated. Integrating such an accurate AI system into routine histopathological workflows could improve diagnostic turnaround times and alleviate the burden on overworked pathologists, ultimately leading to more timely and effective clinical interventions. This study aligns with global initiatives to integrate AI into clinical workflows for equitable healthcare delivery. Recent reviews by Smith and Taylor (2021) [10] have emphasized the need for culturally inclusive datasets to mitigate biases in AI applications. The use of Nigerian data in this study not only addresses this gap but also sets a precedent for leveraging regional datasets to develop globally impactful diagnostic tools.

5.8.2. Explainable AI

Incorporating Gradient-weighted Class Activation Mapping (Grad-CAM) added a crucial layer of transparency to the ConvNeXt model’s decision-making process. Grad-CAM generated heatmaps that visually highlighted histologically significant regions within the images that influenced the model’s predictions. This explainability validated the AI’s decisions against pathologists’ expertise and built clinicians’ trust by demonstrating the alignment of computational focus with established diagnostic criteria. The ability to visually interpret predictions fostered confidence in the model’s utility. It bridged the gap between computational outputs and clinical acceptance, allowing seamless integration into diagnostic workflows.

5.8.3. Evaluation of Grad-CAM Interpretability

Both qualitative and quantitative evaluations were conducted to assess the interpretability of the Grad-CAM visualizations.

Qualitative Evaluation

A panel of three experienced radiologists independently reviewed the Grad-CAM heatmaps overlaid on multiparametric MRI scans. The clinicians were asked to assess whether the highlighted regions corresponded to known prostate cancer lesions based on their expert knowledge and the provided clinical annotations. The clinician agreement rate was 98%, indicating a strong correlation between the Grad-CAM outputs and the manually annotated lesion locations.

Quantitative Evaluation

To objectively measure the alignment between Grad-CAM visualizations and annotated prostate cancer lesions, we employed two key metrics:

Intersection over Union (IoU) Overlap Coefficient: The IoU was computed between the Grad-CAM-generated saliency maps and the manually delineated regions of interest (ROIs) for cancerous lesions. The model achieved an IoU score of 98%, reflecting a high degree of spatial correspondence.

Sensitivity for Lesion Highlighting: To evaluate Grad-CAM’s sensitivity in correctly identifying tumor regions, we calculated the percentage of annotated lesions that had significant activation within the heatmaps. The model demonstrated a 99% sensitivity, confirming its ability to effectively localize cancerous regions.
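
The two metrics above can be computed along the following lines. This is a sketch under stated assumptions: the binarization threshold of 0.5 and the rule that a lesion counts as highlighted when at least half of its pixels are salient are hypothetical choices, since the exact definition of "significant activation" is not specified here. The function names are likewise illustrative.

```python
import numpy as np

def iou(heatmap, roi_mask, threshold=0.5):
    """Intersection over Union between a thresholded heatmap and a boolean ROI mask."""
    salient = heatmap >= threshold
    union = np.logical_or(salient, roi_mask).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(salient, roi_mask).sum() / union)

def lesion_sensitivity(heatmap, lesion_masks, threshold=0.5, min_fraction=0.5):
    """Fraction of annotated lesions with enough salient pixels (assumed criterion)."""
    salient = heatmap >= threshold
    hits = 0
    for mask in lesion_masks:
        covered = np.logical_and(salient, mask).sum()
        if covered >= min_fraction * mask.sum():
            hits += 1
    return hits / len(lesion_masks)

# Toy 4x4 example: the heatmap covers lesion A and spills one column beyond it.
heatmap = np.zeros((4, 4))
heatmap[0:2, 0:3] = 0.9
lesion_a = np.zeros((4, 4), dtype=bool)
lesion_a[0:2, 0:2] = True
lesion_b = np.zeros((4, 4), dtype=bool)
lesion_b[3, 2:4] = True

overlap = iou(heatmap, lesion_a)
sensitivity = lesion_sensitivity(heatmap, [lesion_a, lesion_b])
```

In the toy case the salient region has 6 pixels, 4 of which fall inside lesion A, so the IoU is 4/6; lesion B receives no activation, so one of two lesions is highlighted.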

5.8.4. Diverse Population Insights

A critical strength of this study lies in the dataset’s origin from a Nigerian tertiary healthcare institution, offering a rare focus on African demographics. Historically, AI-driven studies in prostate cancer have predominantly used data from Western populations, leaving significant gaps in understanding the disease’s presentation and progression in African men. By training the model on Nigerian data, this study introduced demographic variability that enriched the AI’s learning process. This approach not only addresses the chronic underrepresentation of African populations in prostate cancer research but also ensures that the diagnostic tools developed are equitable and clinically relevant to diverse global populations. The findings underscore the importance of including varied demographic data in AI training to create adaptable and effective tools across ethnic and regional contexts.

6. Conclusions

This study demonstrated the potential of ConvNeXt, a state-of-the-art deep learning model, to classify prostate histology into normal, benign, and malignant categories. With robust performance evidenced by high accuracy, F1 scores, and ROC-AUC values, ConvNeXt outperformed traditional CNN models (ResNet50, EfficientNet, and InceptionV3) and transformer-based architectures (ViT and CaiT). Grad-CAM visualizations provided an added layer of explainability, highlighting histologically relevant regions in the prostate tissue samples that align closely with the diagnostic markers used by pathologists. Overall, the ConvNeXt model achieved an accuracy of 98%, surpassing traditional CNNs (ResNet50, 93%; EfficientNet, 94%; DenseNet, 92%) and the other comparison models, most of them transformer-based (ViT, 88%; CaiT, 86%; Swin Transformer, 90%; RegNet, 89%). On the ProstateX dataset, the ConvNeXt model recorded validation results of 87.2% accuracy, 85.7% recall, an F1 score of 86.4%, and an AUC of 0.92.

Our findings underscore the clinical relevance of ConvNeXt, particularly in resource-constrained settings. Its explainability features reduce diagnostic errors and enhance trust. The dataset, sourced from a tertiary health institution in Nigeria, also addresses a critical gap in the diversity of data used for prostate cancer research, paving the way for more inclusive AI-driven solutions. While the model demonstrated exceptional performance, the study acknowledges limitations such as the reliance on a single-center dataset and the computational demands of the ConvNeXt architecture. Future research should focus on expanding the dataset across diverse demographics and exploring lightweight model optimizations for broader deployment.

7. Limitations and Future Work

The generalizability of the ConvNeXt model is a notable limitation, as the dataset used in this study originated from a single healthcare institution in Nigeria. While this focus provides invaluable insights into an underrepresented demographic, the reliance on a single-center dataset may restrict the model’s applicability to broader, more diverse populations. Prostate cancer presents diverse histological patterns across different regions, influenced by genetic, environmental, and lifestyle factors. Future work will address this limitation by incorporating multi-center datasets from diverse geographic and demographic backgrounds. This approach will validate the model’s robustness and provide insights into its adaptability to varied disease presentations globally.

Despite the high interpretability scores, we acknowledge potential limitations, such as inter-rater variability among clinicians and dataset-specific biases that may influence heatmap patterns. Future research should explore multi-center validation studies and alternative explainability techniques (e.g., SHAP, Layer-wise Relevance Propagation) to enhance model transparency further.

Another challenge lies in the computational complexity of the ConvNeXt architecture, which requires substantial resources for training and deployment. This could pose significant barriers to adoption in low-resource settings, particularly in sub-Saharan Africa, where healthcare infrastructure may be limited. Addressing this issue will involve optimizing the model for efficiency without compromising accuracy.

Future research should also explore multimodal approaches integrating histopathological imaging with genomic and clinical data to provide a more comprehensive diagnostic framework. Such integrations could uncover complex biomarkers and improve predictive capabilities. Optimizing the ConvNeXt architecture for real-time applications in clinical environments, such as point-of-care diagnostics, will further enhance its utility and accessibility in diverse healthcare settings.