A Synergistic Multi-Scale Attention and Composite Feature Extraction Network for Coronary Artery Segmentation

Abstract

1. Introduction

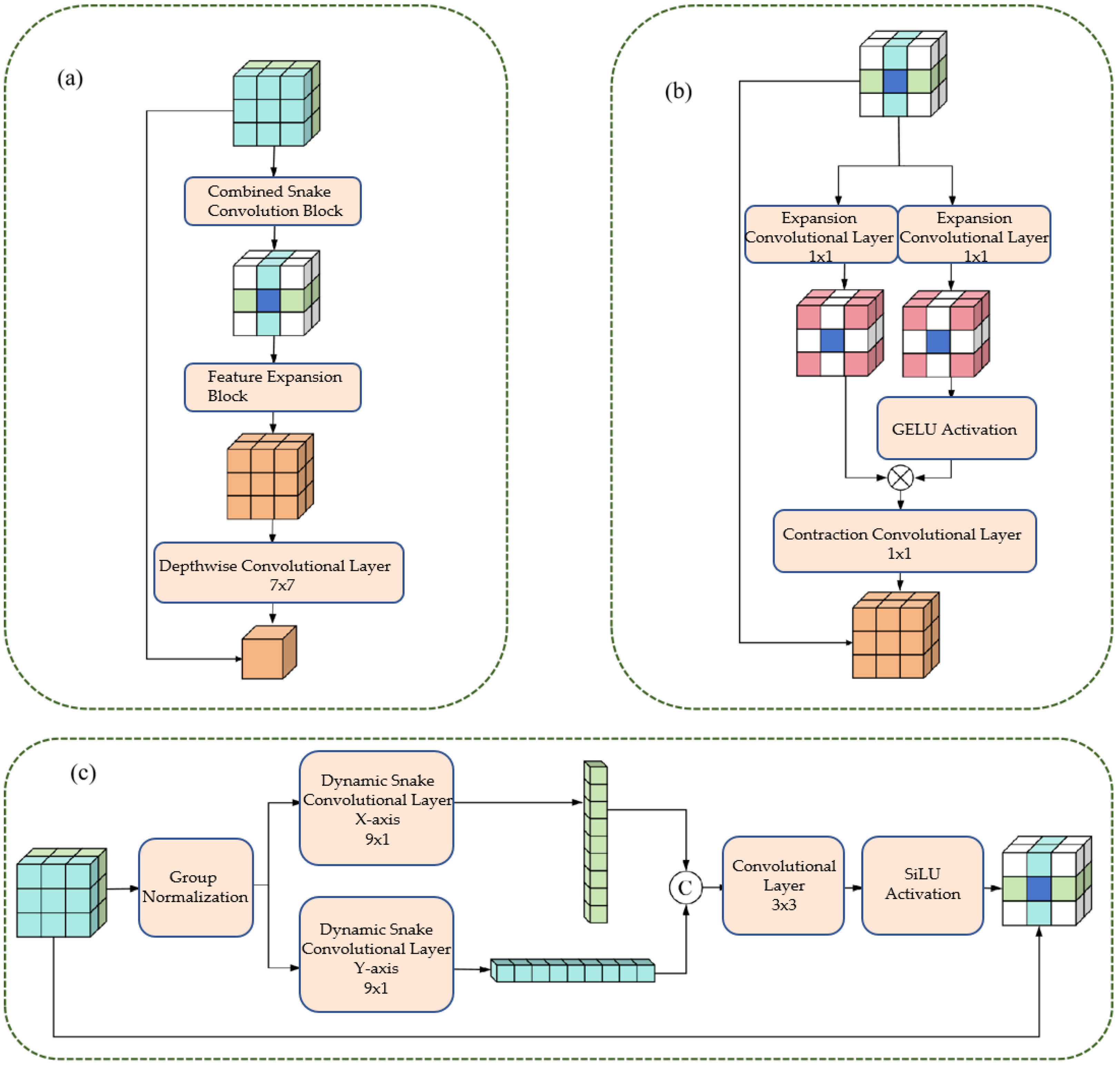

- Composite Feature Extraction Module (CFEM): Combines dynamic snake convolution with dual-path scaling to effectively capture tubular vascular features through directional convolutions, while employing expansion-contraction operations to enhance multi-scale vessel adaptability.

- Multi-scale Composite Attention Module (MCAM): Integrates multi-scale convolution with spatial and lightweight channel attention mechanisms to improve perception of intricate vascular branches and model long-range dependencies, thereby enhancing topological connectivity.

- Hybrid Dice-Focal Loss: Strategically combines Dice and Focal losses to optimize region overlap while addressing class imbalance, particularly benefiting challenging cases like fine branches and vascular intersections.

- Comprehensive Validation: Extensive experiments on the public ARCADE dataset demonstrate statistically significant improvements in segmentation accuracy, topological connectivity, and boundary precision, validating the method’s clinical potential.
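As a rough illustration of the hybrid loss named in the contributions above, the sketch below combines a soft Dice term with a binary focal term on flattened per-pixel probabilities. The equal weights `w_dice` and `w_focal` and the focal parameters `gamma` and `alpha` are illustrative assumptions, not values reported by the authors.

```python
import math


def dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss: 1 - 2*sum(p*t) / (sum(p) + sum(t))."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def focal_loss(probs, targets, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss averaged over pixels; gamma and alpha are assumed values."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)          # clamp to keep log() finite
        p_t = p if t == 1 else 1.0 - p           # probability of the true class
        a_t = alpha if t == 1 else 1.0 - alpha   # class-balancing weight
        total += -a_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


def dice_focal_loss(probs, targets, w_dice=0.5, w_focal=0.5):
    """Weighted sum of the two terms; the 0.5/0.5 weighting is an assumption."""
    return w_dice * dice_loss(probs, targets) + w_focal * focal_loss(probs, targets)


if __name__ == "__main__":
    truth = [1, 0, 1, 0]
    print(dice_focal_loss([0.9, 0.1, 0.8, 0.2], truth))  # confident, mostly correct
    print(dice_focal_loss([0.1, 0.9, 0.2, 0.8], truth))  # confident, mostly wrong
```

The Dice term rewards region overlap, while the focal term down-weights easy pixels so that hard ones (for example thin branches) contribute more to the gradient.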

2. Related Works

2.1. Analysis of Morphological Characteristics of Coronary Vessels

Cylindrical Feature

2.2. Key Issues in Coronary Vessel Segmentation

2.2.1. Accuracy Issue

2.2.2. Connectivity Issues

2.3. Classification of Existing Segmentation Methods

1. Traditional segmentation methods: Traditional methods rely mainly on low-level features such as image gray values and textures, and include threshold segmentation, edge detection, and morphological operations. For instance, Hassouna et al. [23] segmented cerebral vessels with a stochastic model, but the approach was sensitive to noise; Sun et al. [24,25,26] extracted vascular trees by combining morphological multi-scale enhancement with the watershed algorithm, but the method struggled with complex background artifacts.

2. Deep learning-based segmentation methods: Thanks to their end-to-end learning ability [27], deep learning methods have become the mainstream technology for coronary artery segmentation. U-Net [28], the benchmark model for medical image segmentation, integrates high- and low-level features through skip connections; however, its adaptability to multi-scale blood vessels is insufficient. MedNeXt [29] employs a scaling architecture to expand the receptive field, thereby enhancing its ability to segment complex structures; however, it has not been optimized for tubular vascular features. DSCNet [30] uses dynamic snake convolution to capture tubular features and achieves relatively high segmentation accuracy; however, it cannot model long-range dependencies and is prone to connectivity issues.

3. Transformer-based segmentation methods: The Transformer architecture models long-range dependencies through the self-attention mechanism, providing a new approach to the vascular connectivity problem. TransUNet [31] combines the Transformer and U-Net architectures, enhancing its ability to perceive the overall structure. Swin-Unet [32] reduces computational cost through hierarchical window attention; however, it still faces challenges such as high data requirements and slow inference speed, which make it difficult to meet real-time navigation requirements in clinical settings.

2.4. U-Net in Broader Biomedical Segmentation Context

3. Methods

3.1. Overall Network Architecture

1. Encoder: The encoder comprises four sequential stages, each consisting of a CFEM followed by a downsampling block. Each downsampling block is a convolution with a stride of 2 that halves the feature map resolution while doubling the channel count, thereby expanding the receptive field and extracting hierarchical global features.

2. Decoder: The decoder has four corresponding stages, each consisting of an upsampling block followed by a CFEM. Upsampling is performed by transposed convolution, which restores the feature map resolution. Multi-scale features from the encoder reach the decoder via skip connections, where the MCAM refines them before they are fused with the upsampled features.

3. Bottleneck: A single CFEM is positioned at the network’s bottleneck, where it extracts the highest-level global features and provides essential contextual information to the decoder.

4. Output Layer: A 1 × 1 convolutional layer maps the feature channels to two (foreground vessel and background), and a sigmoid activation function then produces the final segmentation probability map.
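The shape bookkeeping implied by the four components above can be traced with a short sketch. The input size (512 × 512), the base channel width of 32, and the assumption that each decoder stage halves the channel count are hypothetical choices made only for illustration; the text specifies just the halving of resolution and doubling of channels in the encoder.

```python
def trace_shapes(height, width, base_channels=32, num_stages=4):
    """Return (name, channels, height, width) for each stage of the encoder-decoder."""
    factor = 2 ** num_stages
    if height % factor or width % factor:
        raise ValueError(f"height and width must be divisible by {factor}")

    c, h, w = base_channels, height, width
    shapes = []

    # Encoder: CFEM at the current resolution, then a stride-2 convolution.
    for stage in range(1, num_stages + 1):
        shapes.append((f"encoder {stage} CFEM (skip source)", c, h, w))
        c, h, w = c * 2, h // 2, w // 2

    shapes.append(("bottleneck CFEM", c, h, w))

    # Decoder: transposed convolution, then CFEM on the fused features.
    # Halving the channels here is an assumption that mirrors the encoder.
    for stage in range(num_stages, 0, -1):
        c, h, w = c // 2, h * 2, w * 2
        shapes.append((f"decoder {stage} CFEM", c, h, w))

    # Output layer: 1 x 1 convolution to two channels, then sigmoid.
    shapes.append(("1x1 conv + sigmoid", 2, h, w))
    return shapes


if __name__ == "__main__":
    for name, c, h, w in trace_shapes(512, 512):
        print(f"{name:36s} C={c:4d}  H={h:4d}  W={w:4d}")
```

Under these assumptions each decoder stage ends at the same resolution and width as the encoder stage whose skip connection it receives, which is what allows the MCAM-refined features to be fused directly.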

3.2. Composite Feature Extraction Module (CFEM)

3.2.1. Cylindrical Feature Extraction Layer

1. Group Normalization: Group Normalization (GN) is applied to the input feature maps to reduce the impact of batch size on training and to stabilize the training process.

2. Directional convolution: The normalized feature maps are fed into dynamic snake convolutions along the x-axis (9 × 1 convolution kernel) and the y-axis (1 × 9 convolution kernel), producing two directional tubular feature maps, Fx and Fy.

3. Feature Integration: Fx and Fy are concatenated along the channel dimension, and a standard 3 × 3 convolution integrates the features from both directions to capture tubular features comprehensively.

4. Activation function: Group normalization is applied to the integrated feature map, followed by the SiLU activation function to enhance the non-linear expressiveness of the features; the result is the output tubular feature map.
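The normalization and activation used in steps 1 and 4 can be illustrated in plain Python. This is a minimal sketch that omits the learnable affine parameters of Group Normalization and does not attempt to reproduce the dynamic snake convolution itself; the list-of-lists layout (one flattened map per channel) is an assumption chosen for readability.

```python
import math


def group_norm(channels, num_groups, eps=1e-5):
    """Normalize each group of channels to zero mean and unit variance.

    `channels` is a list of C flattened channel maps for a single sample.
    The statistics depend only on that sample, not on the batch.
    """
    if len(channels) % num_groups:
        raise ValueError("channel count must be divisible by num_groups")
    size = len(channels) // num_groups
    out = []
    for g in range(num_groups):
        group = channels[g * size:(g + 1) * size]
        values = [v for ch in group for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in ch] for ch in group])
    return out


def silu(x):
    """SiLU activation x * sigmoid(x), written to avoid overflow for large |x|."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


if __name__ == "__main__":
    maps = [[1.0, 2.0], [3.0, 4.0], [10.0, 20.0], [30.0, 40.0]]
    normalized = group_norm(maps, num_groups=2)
    activated = [[silu(v) for v in ch] for ch in normalized]
    print(activated)
```

Because the mean and variance are computed per sample and per group, the result is the same for any batch size, which is the property the first step relies on.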

3.2.2. Dual-Path Scaling Architecture

1. Feature routing: The tubular feature map (Fconcat) is routed into two parallel paths, each of which feeds an expansion convolution (Exp) layer.

2. Expansion convolution: A 1 × 1 convolution with a 4-fold expansion ratio expands the channel dimension by a factor of 4, giving the block a wider feature space in which to capture large-scale vascular features.

3. Feature Interaction: The GELU activation function is applied to the output of one path, and the result is multiplied with the output of the other path to achieve feature interaction and scale adaptation.

4. Contraction convolution: A 1 × 1 convolution with a 4-fold contraction ratio restores the channel dimension to its original size, ensuring that the output feature map is consistent with the input size.

5. Depthwise convolution: A 7 × 7 depthwise convolution integrates the features while reducing the number of parameters and improving computational efficiency.

6. Residual Connection: Before the module output, a residual connection adds the input feature map (Fin) to the processed feature map, enhancing the robustness of the network and mitigating vanishing gradients.
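The parameter savings of the 7 × 7 depthwise convolution, and the channel bookkeeping of the 1 × 1 layers, can be checked with simple arithmetic. The sketch assumes that the 4-fold rate applies to the channel count and uses a hypothetical width of 64 channels; `conv_params` is an illustrative helper, not part of the authors' code.

```python
def conv_params(in_ch, out_ch, kernel, groups=1, bias=True):
    """Number of learnable parameters in a 2D convolution layer."""
    if in_ch % groups or out_ch % groups:
        raise ValueError("channels must be divisible by groups")
    weights = (in_ch // groups) * out_ch * kernel * kernel
    return weights + (out_ch if bias else 0)


if __name__ == "__main__":
    channels = 64   # hypothetical channel width
    ratio = 4       # 4-fold expansion / contraction

    expand = conv_params(channels, channels * ratio, kernel=1)
    contract = conv_params(channels * ratio, channels, kernel=1)
    standard_7x7 = conv_params(channels, channels, kernel=7)
    depthwise_7x7 = conv_params(channels, channels, kernel=7, groups=channels)

    print("1x1 expansion   :", expand)
    print("1x1 contraction :", contract)
    print("standard 7x7    :", standard_7x7)
    print("depthwise 7x7   :", depthwise_7x7)
    print("reduction factor:", round(standard_7x7 / depthwise_7x7, 1))
```

With one filter per channel, the depthwise layer's weight count grows linearly with the channel width instead of quadratically, which is why a large 7 × 7 kernel remains affordable.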

3.2.3. Multi-Scale Composite Attention Module (MCAM)

4. Results

4.1. Dataset Introduction

4.2. Experimental Environment and Parameter Settings

4.2.1. Evaluation Metrics

1. Dice Similarity Coefficient (Dice): Measures the overlap between the segmented region and the ground-truth region, with a range of [0, 1]. The higher the value, the higher the segmentation accuracy [26].

2. Precision: Measures the proportion of pixels predicted as blood vessels that are actually blood vessels. The value ranges from 0 to 1. The higher the value, the lower the false detection rate.

3. Recall: Measures the proportion of actual blood vessel pixels that are correctly predicted. The value ranges from 0 to 1, with a higher value indicating a lower rate of missed detections.

4. Centerline Dice (clDice): Measures the overlap between the segmented vessel centerline and the true centerline. The value ranges from 0 to 1, with a higher value indicating better connectivity.

5. 95% Hausdorff Distance (HD95): Measures the 95th percentile of the distances between the segmentation boundary and the ground-truth boundary, in pixels. Using the 95th percentile rather than the maximum makes the metric less sensitive to isolated outliers. The smaller the value, the higher the boundary accuracy.
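The overlap metrics and HD95 can be written down compactly. The sketch below works on flattened binary masks and on lists of boundary pixel coordinates; it takes the larger of the two directed 95th-percentile distances, which is one common HD95 convention and an assumption here, since the text does not state which variant was used. clDice is omitted because it additionally requires skeletonization.

```python
import math


def confusion_counts(pred, truth):
    """True positives, false positives and false negatives for binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    return tp, fp, fn


def dice(pred, truth):
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def precision(pred, truth):
    tp, fp, _ = confusion_counts(pred, truth)
    return tp / (tp + fp) if tp + fp else 0.0


def recall(pred, truth):
    tp, _, fn = confusion_counts(pred, truth)
    return tp / (tp + fn) if tp + fn else 0.0


def percentile(values, q):
    """Linearly interpolated percentile for q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def hd95(boundary_a, boundary_b):
    """95th-percentile Hausdorff distance between two non-empty point sets."""
    def directed(src, dst):
        return [min(math.dist(s, d) for d in dst) for s in src]
    return max(percentile(directed(boundary_a, boundary_b), 95),
               percentile(directed(boundary_b, boundary_a), 95))


if __name__ == "__main__":
    pred = [1, 1, 0, 0, 1]
    truth = [1, 0, 0, 1, 1]
    print(dice(pred, truth), precision(pred, truth), recall(pred, truth))
    print(hd95([(0, 0), (0, 1)], [(0, 0), (3, 4)]))
```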

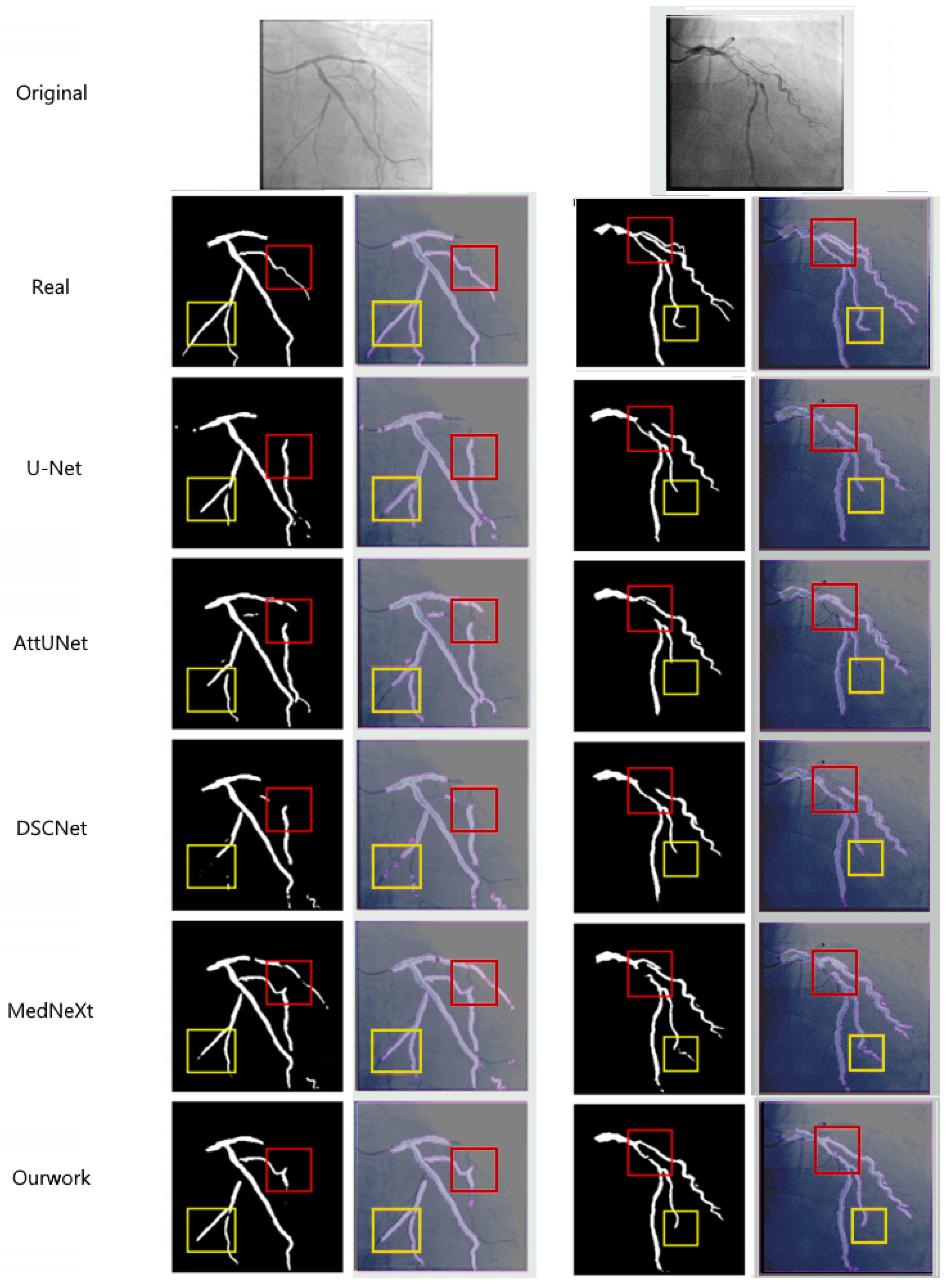

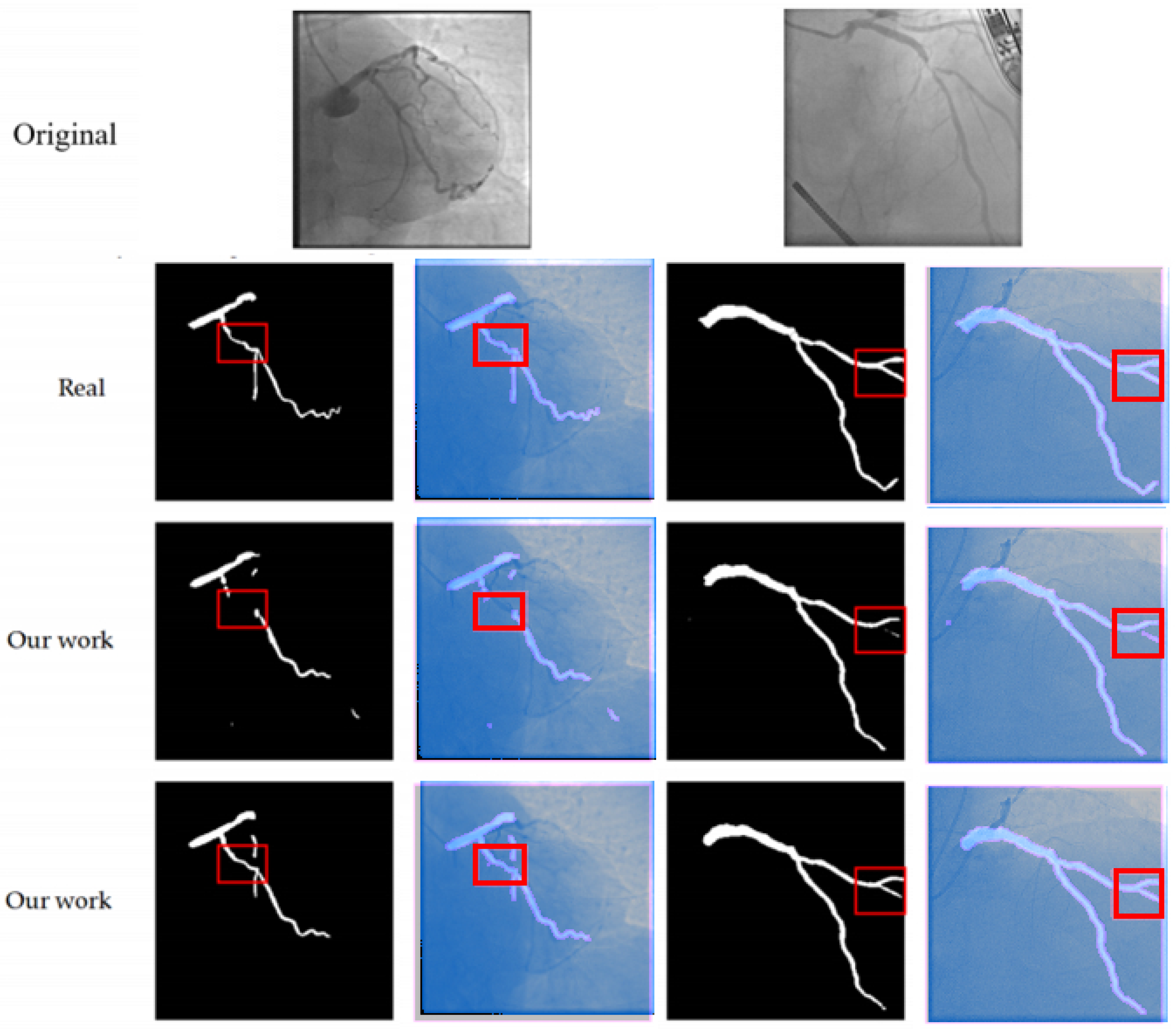

4.2.2. Comparison of Experimental Results and Analysis

- Dice coefficient: The proposed method reaches 76.74%, which is 4.48, 4.23, 1.64, 3.26, and 20.84 percentage points higher than U-Net (72.26%), AttUNet (72.51%), MedNeXt (75.10%), DSCNet (73.48%), and SPNet (55.90%), respectively. This indicates that the proposed method has a significant advantage in the overlap of the segmented regions.

- clDice coefficient: The proposed method achieves 50.30%, an improvement of 1.54 percentage points over the best-performing comparison method (MedNeXt, 48.76%), indicating that the multi-scale composite attention module effectively improves segmentation connectivity.

- HD95 distance: The proposed method achieves 57.8358 pixels, which is 18.18 pixels lower than U-Net (76.0132), 14.18 pixels lower than AttUNet (72.0205), 4.12 pixels lower than MedNeXt (61.9603), 11.69 pixels lower than DSCNet (69.5269), and 21.56 pixels lower than SPNet (79.3913). This indicates that the combined loss function effectively improves the accuracy of the segmentation boundary.

- Precision and Recall: The proposed method reaches a Precision of 80.66% and a Recall of 74.87%, both higher than those of all comparison methods. This indicates that the proposed method is effective in reducing both the false detection rate and the missed detection rate.
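The margins quoted above follow directly from the reported Dice and HD95 values. The short sketch below recomputes them; the numbers are copied from the results table, while the dictionary layout and the `improvements` helper are only illustrative.

```python
# (Dice, HD95 in pixels) as reported for each comparison method.
BASELINES = {
    "U-Net": (0.7226, 76.0132),
    "AttUNet": (0.7251, 72.0205),
    "MedNeXt": (0.7510, 61.9603),
    "DSCNet": (0.7348, 69.5269),
    "SPNet": (0.5590, 79.3913),
}
PROPOSED = (0.7674, 57.8358)


def improvements(proposed, baselines):
    """Dice gain in percentage points and HD95 reduction in pixels, per baseline."""
    dice_p, hd_p = proposed
    return {
        name: (round((dice_p - dice_b) * 100, 2), round(hd_b - hd_p, 2))
        for name, (dice_b, hd_b) in baselines.items()
    }


if __name__ == "__main__":
    for name, (dice_gain, hd_drop) in improvements(PROPOSED, BASELINES).items():
        print(f"{name:8s} Dice +{dice_gain:5.2f} pp   HD95 -{hd_drop:5.2f} px")
```

Expressing the Dice gains in percentage points avoids confusing them with relative improvements.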

5. Discussion

5.1. Methodological Performance Advantages and Clinical Significance

5.2. Methodological Limitations

- Insufficient model lightweighting: Although the computational complexity of this method is lower than that of most comparison methods, for resource-constrained embedded devices (such as local computing units of surgical robots), the model parameter size and computational load still need to be further reduced.

- Lack of multimodal data fusion: The current method only performs segmentation based on two-dimensional DSA images, lacking information on the microscopic structure of the vessel wall (such as plaque properties), making it challenging to meet the precise segmentation requirements of the lesion area.

- Generalization ability needs to be improved: The performance of the method depends on the distribution characteristics of the ARCADE dataset. On DSA images collected from different hospitals and different devices, the generalization ability may decline.

5.3. Future Research Directions

- Model lightweight optimization: Techniques such as model pruning, quantization, and knowledge distillation, combined with lightweight network architectures (such as MobileNet and EfficientNet), could further reduce the model’s parameter count and inference time while maintaining accuracy, in order to meet the requirements of embedded devices.

- Multi-modal data fusion: Modalities such as intravascular ultrasound (IVUS) and optical coherence tomography (OCT) could be combined with DSA, integrating macroscopic vascular structure (DSA) with microscopic vessel-wall information (IVUS/OCT) to improve the segmentation accuracy of lesion areas (such as stenosis and calcification).

- Generalized segmentation model adaptation: Generalized segmentation models pre-trained on large-scale medical images (such as SAM-Med2D) could be adapted to the coronary artery segmentation task through transfer learning, reducing the reliance on labeled data and enhancing generalization across datasets.

- Multi-task joint learning: Coronary artery segmentation could be combined with functions such as stenosis detection and surgical path planning in a multi-task joint learning model, working toward an end-to-end surgical navigation system that further improves surgical efficiency and safety.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. World Health Statistics 2020: Monitoring Health for the SDGs, Sustainable Development Goals, 1st ed.; World Health Organization: Geneva, Switzerland, 2021.

- World Health Organization. Global Health Estimates: Life Expectancy and Leading Causes of Death and Disability; World Health Organization: Geneva, Switzerland, 2024.

- Goldstein, J.A.; Balter, S.; Cowley, M.; Hodgson, J.; Klein, L.W. Occupational hazards of interventional cardiologists: Prevalence of orthopedic health problems in contemporary practice. Catheter. Cardiovasc. Interv. 2004, 63, 407–411.

- Piayda, K.; Kleinebrecht, L.; Afzal, S.; Bullens, R.; ter Horst, I.; Polzin, A.; Veulemans, V.; Dannenberg, L.; Wimmer, A.C.; Jung, C.; et al. Dynamic coronary roadmapping during percutaneous coronary intervention: A feasibility study. Eur. J. Med. Res. 2018, 23, 36.

- Solomon, R.; Dauerman, H.L. Contrast-Induced Acute Kidney Injury. Circulation 2010, 122, 2451–2455.

- Wang, R.; Li, C.; Wang, J.; Wei, X.; Li, Y.; Zhu, Y.; Zhang, S. Threshold segmentation algorithm for automatic extraction of cerebral vessels from brain magnetic resonance angiography images. J. Neurosci. Methods 2015, 241, 30–36.

- Orujov, F.; Maskeliūnas, R.; Damaševičius, R.; Wei, W. Fuzzy based image edge detection algorithm for blood vessel detection in retinal images. Appl. Soft Comput. 2020, 94, 106452.

- Sun, K.; Chen, Z.; Jiang, S.; Wang, Y. Morphological multiscale enhancement, fuzzy filter and watershed for vascular tree extraction in angiogram. J. Med. Syst. 2011, 35, 811–824.

- Cinsdikici, M.G.; Aydın, D. Detection of blood vessels in ophthalmoscope images using MF/ant (matched filter/ant colony) algorithm. Comput. Methods Programs Biomed. 2009, 96, 85–95.

- Martinez-Perez, M.E.; Hughes, A.D.; Thom, S.A.; Bharath, A.A.; Parker, K.H. Segmentation of blood vessels from red-free and fluorescein retinal images. Med. Image Anal. 2007, 11, 47–61.

- Hassouna, M.S.; Farag, A.A.; Hushek, S.; Moriarty, T. Cerebrovascular segmentation from TOF using stochastic models. Med. Image Anal. 2006, 10, 2–18.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Jin, Q.; Meng, Z.; Pham, T.D.; Chen, Q.; Wei, L.; Su, R. DUNet: A deformable network for retinal vessel segmentation. Knowl.-Based Syst. 2019, 178, 149–162.

- Shen, Y.; Fang, Z.; Gao, Y.; Xiong, N.; Zhong, C.; Tang, X. Coronary arteries segmentation based on 3D FCN with attention gate and level set function. IEEE Access 2019, 7, 42826–42835.

- Chen, J.; Wan, J.; Fang, Z.; Wei, L. LMSA-net: A lightweight multi-scale aware network for retinal vessel segmentation. Int. J. Imaging Syst. Technol. 2023, 33, 1515–1530.

- Chen, J.; Mei, J.; Li, X.; Lu, Y.; Yu, Q.; Wei, Q.; Luo, X.; Xie, Y.; Adeli, E.; Wang, Y.; et al. TransUNet: Rethinking the U-net architecture design for medical image segmentation through the lens of transformers. Med. Image Anal. 2024, 97, 103280.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Liu, X.; Zhang, D.; Yao, J.; Tang, J. Transformer and convolutional based dual branch network for retinal vessel segmentation in OCTA images. Biomed. Signal Process. Control 2023, 83, 104604.

- Xu, H.; Wu, Y. G2ViT: Graph neural network-guided vision transformer enhanced network for retinal vessel and coronary angiograph segmentation. Neural Netw. 2024, 176, 106356.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2014; Volume 27.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 6840–6851.

- Chen, Z.; Xie, L.; Chen, Y.; Zeng, Q.; ZhuGe, Q.; Shen, J.; Wen, C.; Feng, Y. Generative adversarial network based cerebrovascular segmentation for time-of-flight magnetic resonance angiography image. Neurocomputing 2022, 488, 657–668.

- Abdollahi, A.; Pradhan, B.; Alamri, A. VNet: An end-to-end fully convolutional neural network for road extraction from high-resolution remote sensing data. IEEE Access 2020, 8, 179424–179436.

- Li, J.; Wu, Q.; Wang, Y.; Zhou, S.; Zhang, L.; Wei, J.; Zhao, D. DiffCAS: Diffusion based multi-attention network for segmentation of 3D coronary artery from CT angiography. Signal Image Video Process. 2024, 18, 7487–7498.

- Hoover, A.D.; Kouznetsova, V.; Goldbaum, M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med. Imaging 2000, 19, 203–210.

- Staal, J.; Abramoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

- Zhang, B.; Li, D.; Wang, D. DCT based multi-head attention-BiGRU model for EEG source location. Biomed. Signal Process. Control 2024, 93, 106171.

- Vega, F. Adverse reactions to radiological contrast media: Prevention and treatment. Radiologia 2024, 66 (Suppl. 2), S98–S109.

- Wu, B.; Kheiwa, A.; Swamy, P.; Mamas, M.A.; Tedford, R.J.; Alasnag, M.; Parwani, P.; Abramov, D. Clinical significance of coronary arterial dominance: A review of the literature. J. Am. Heart Assoc. Cardiovasc. Cerebrovasc. Dis. 2024, 13, e032851.

- Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic Snake Convolution based on Topological Geometric Constraints for Tubular Structure Segmentation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 6047–6056.

- Popov, M.; Amanturdieva, A.; Zhaksylyk, N.; Alkanov, A.; Saniyazbekov, A.; Aimyshev, T.; Ismailov, E.; Bulegenov, A.; Kuzhukeyev, A.; Kulanbayeva, A.; et al. Dataset for automatic region-based coronary artery disease diagnostics using X-ray angiography images. Sci. Data 2024, 11, 20.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-Like Pure Transformer for Medical Image Segmentation. In Proceedings of the Computer Vision—ECCV 2022 Workshops, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2023; pp. 205–218.

- Cihan, P.; Saygili, A.; Akyuzlu, M.; Özmen, N.E.; Ermutlu, C.Ş.; Aydın, U.; Yılmaz, A.; Aksoy, Ö. U-Net-Based Approaches for Biometric Identification and Recognition in Cattle. J. Fac. Vet. Med. Kafkas Univ. 2025, 31, 425.

- Wang, C.; Zhao, Z.; Ren, Q.; Xu, Y.; Yu, Y. Dense U-net based on patch-based learning for retinal vessel segmentation. Entropy 2019, 21, 168.

- Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

- Hendrycks, D.; Gimpel, K. Gaussian Error Linear Units (GELUs). arXiv 2023, arXiv:1606.08415.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Roy, S.; Koehler, G.; Ulrich, C.; Baumgartner, M.; Petersen, J.; Isensee, F.; Jaeger, P.F.; Maier-Hein, K.H. MedNeXt: Transformer-Driven Scaling of ConvNets for Medical Image Segmentation. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2023, Vancouver, BC, Canada, 8–12 October 2023; Springer Nature: Cham, Switzerland, 2023; pp. 405–415.

- Hou, Q.; Zhang, L.; Cheng, M.M.; Feng, J. Strip Pooling: Rethinking Spatial Pooling for Scene Parsing. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4002–4011.

| Hyperparameter | Value/Configuration |
|---|---|
| Optimizer | Adam |
| Learning Rate | |
| Weight Decay | |
| Training Epochs | 300 |
| Early Stopping Patience | 20 epochs |
| Batch Size | 4 |
| Loss Function | Combined Dice-Focal Loss |
| Data Normalization | [0, 1] |
| Input Image Size (Training) | (Random Crop) |
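The interaction between the 300-epoch budget and the early-stopping patience of 20 epochs can be sketched as follows. The monitored quantity (a validation score where higher is better), the `min_delta` threshold, and the `EarlyStopping` class itself are assumptions for illustration; the table states only the patience and the maximum number of epochs.

```python
class EarlyStopping:
    """Stop training once the monitored score has not improved for `patience` epochs."""

    def __init__(self, patience=20, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = None
        self.bad_epochs = 0

    def step(self, score):
        """Record one epoch's validation score; return True if training should stop."""
        if self.best is None or score > self.best + self.min_delta:
            self.best = score
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


if __name__ == "__main__":
    max_epochs = 300
    stopper = EarlyStopping(patience=20)
    for epoch in range(1, max_epochs + 1):
        # Placeholder score: improves for 50 epochs, then plateaus.
        val_score = min(epoch, 50) / 100.0
        if stopper.step(val_score):
            print(f"stopped at epoch {epoch}, best score {stopper.best:.2f}")
            break
```

In this toy run the score stops improving after epoch 50, so training halts at epoch 70, well before the 300-epoch limit.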

| Method | Dice (↑) | Precision (↑) | Recall (↑) | clDice (↑) | HD95 (↓) |
|---|---|---|---|---|---|
| U-Net | 0.7226 | 0.7788 | 0.6963 | 0.4817 | 76.0132 |
| AttUNet | 0.7251 | 0.7812 | 0.7001 | 0.4793 | 72.0205 |
| MedNeXt | 0.7510 | 0.8002 | 0.7250 | 0.4876 | 61.9603 |
| DSCNet | 0.7348 | 0.7524 | 0.7360 | 0.4741 | 69.5269 |
| SPNet | 0.5590 | 0.5417 | 0.5992 | 0.1683 | 79.3913 |
| Ours + Att | 0.7539 | 0.7964 | 0.7320 | 0.5002 | 61.8773 |
| Ours − Att | 0.7674 | 0.8066 | 0.7487 | 0.5030 | 57.8358 |

| Comparison (Our Method vs.) | Mean Dice Difference (↑) | Effect Size (Cohen’s d) | Estimated p-Value |
|---|---|---|---|
| U-Net | 0.0448 | Medium to Large (≈0.45–) | |
| AttUNet | 0.0423 | Medium to Large (≈0.42–) | |
| MedNeXt | 0.0164 | Small to Medium (≈0.16–) | |
| DSCNet | 0.0326 | Medium (≈0.33–) | |
| SPNet | 0.2084 | Very Large (≈1.7–) | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, L.; Du, Y.; Lin, Y.; Cheng, Z.; Li, Y.; Zhang, B.; Zhou, S. A Synergistic Multi-Scale Attention and Composite Feature Extraction Network for Coronary Artery Segmentation. Bioengineering 2025, 12, 1247. https://doi.org/10.3390/bioengineering12111247
