Generative Adversarial Networks in Dermatology: A Narrative Review of Current Applications, Challenges, and Future Perspectives

Abstract

1. Introduction

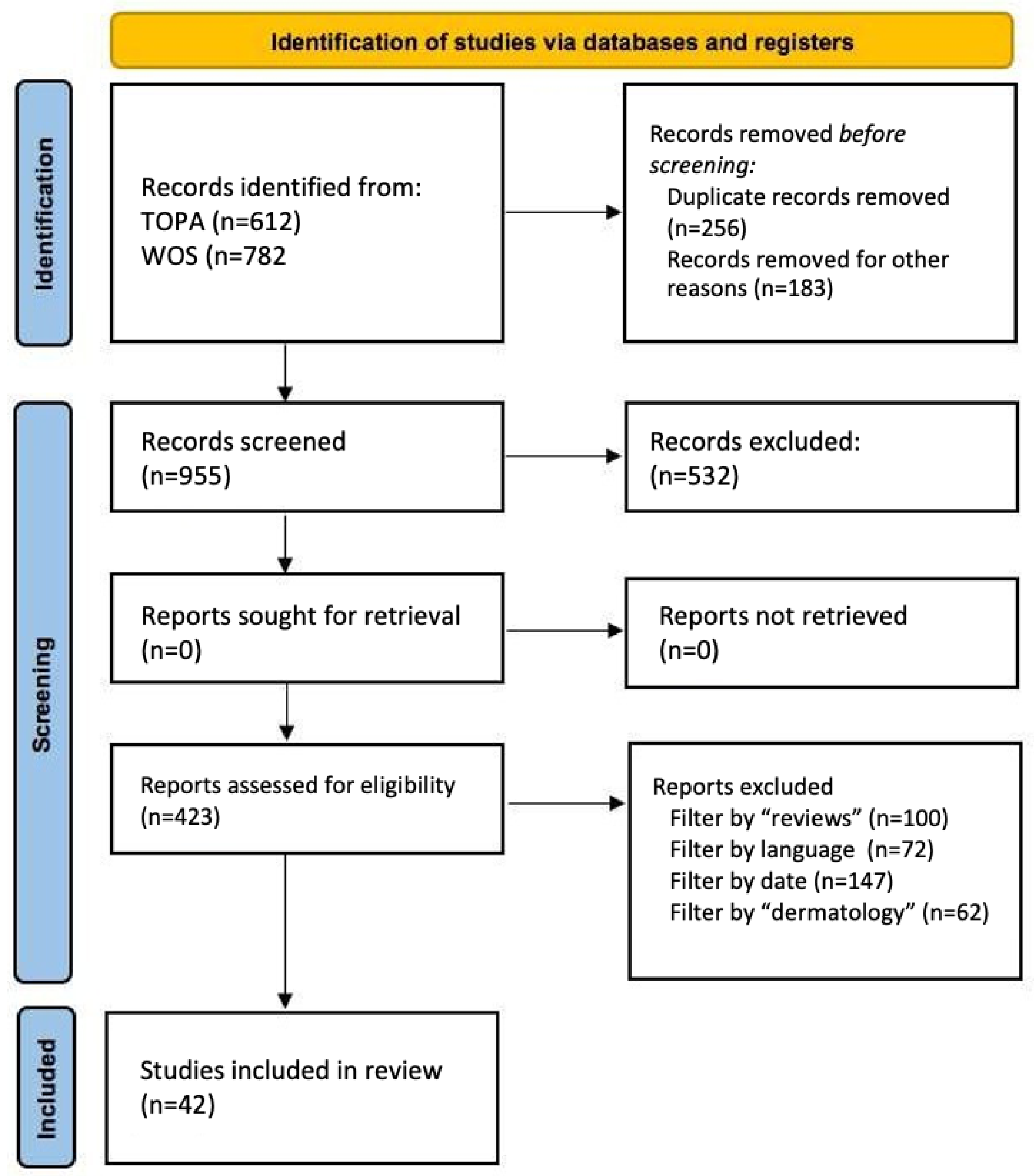

2. Materials and Methods

2.1. Objectives of the Work

- To review fundamental concepts of AI in dermatology, with a focus on the role of GANs in this field.

- To identify current applications of GANs for the generation, augmentation, and enhancement of dermatological images.

- To analyze the technical, ethical, and regulatory challenges associated with the implementation of GANs in dermatological practice and research.

- To explore the future perspectives of GANs and their potential for clinical integration.

2.2. Bibliographic Search Methodology

- Articles published in indexed journals or academic works (e.g., Final Degree Project (FDP) or Master’s Thesis (MT)) focused on AI and dermatology, prioritizing review articles.

- Publications from the last five years (2020–2025) to ensure relevance and currency, although some foundational studies published earlier were also included.

- Full-text availability.

- Articles in English or Spanish.

- Identification: A preliminary search was conducted in selected databases.

- Screening: Duplicates were removed, and titles/abstracts were reviewed for relevance.

- Eligibility: Full texts were evaluated according to predefined inclusion and exclusion criteria.

- Inclusion: Only studies that met all criteria were included, ensuring methodological rigor and thematic relevance.

2.3. Methodological Quality Assessment of Included Articles

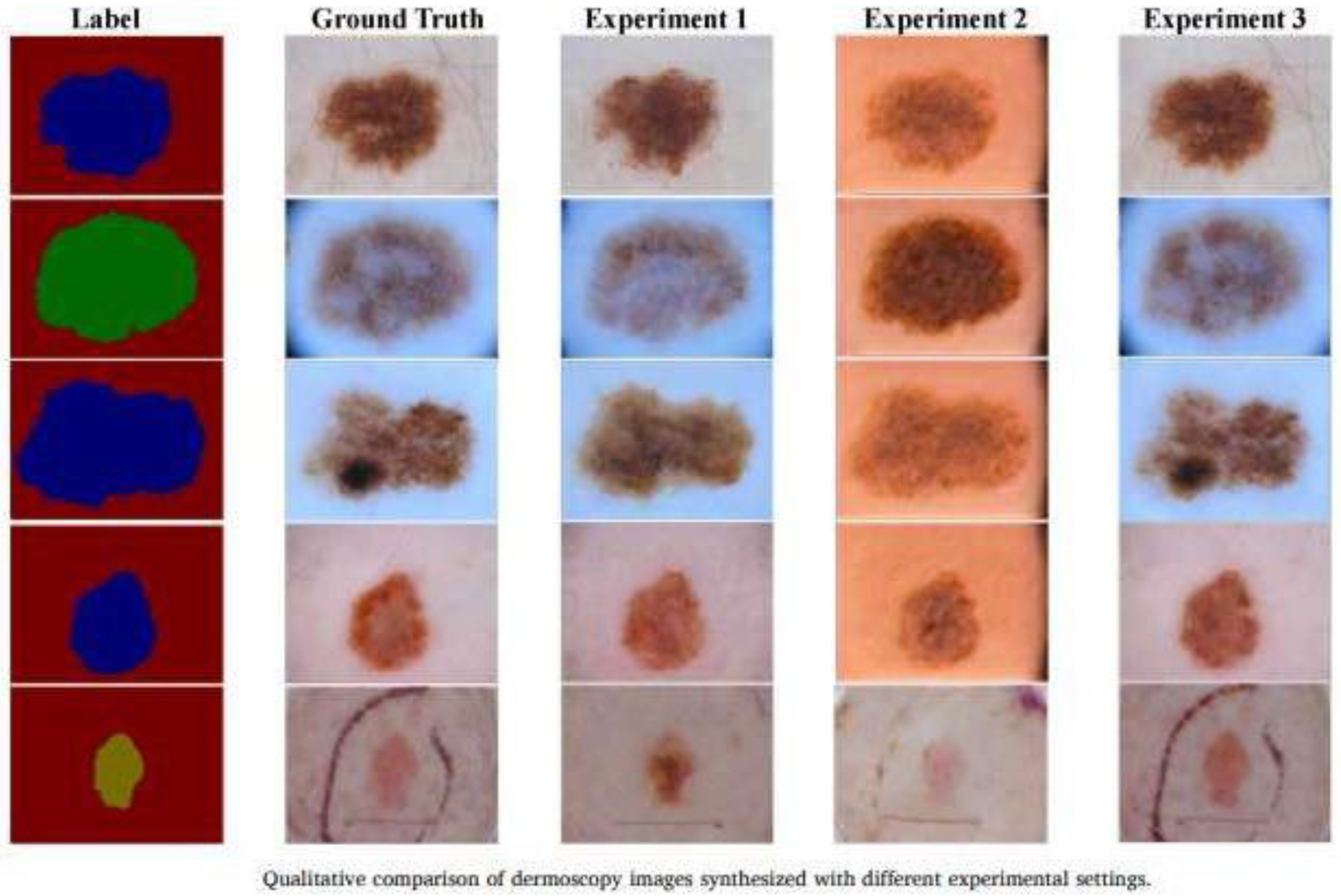

- Type of study (experimental, comparative, methodological, review, etc.).

- Objective and methodology (clarity of aim, GAN architecture, dataset characteristics, and validation strategy).

- Level of evidence, adapted from AI-in-health classification systems:

  - Level I–II: Studies with clinical validation or comparative trials against a reference standard.

  - Level III: Methodological studies using retrospective data or public datasets.

  - Level IV–V: Foundational theoretical studies or narrative reviews without experimental validation.

- Strengths (e.g., innovation, external validation, reproducibility, applicability).

- Limitations (e.g., small sample size, lack of clinical testing, limited generalizability).

3. Results

3.1. AI in Dermatology

3.1.1. Basic Concepts of AI in Medical Imaging

- Convolutional layers: These are the core of the CNN, where the main operations are performed. Each layer slides a set of filters (kernels) across the input image, computing weighted sums that produce activation maps highlighting specific features (such as edges, textures, anomalies, or simple shapes) relevant for classification. The resulting feature maps represent the regions activated by each filter.

- Pooling layers: They reduce the spatial dimensions of the feature maps, keeping only the most relevant information and reducing computational complexity. The most common techniques are max pooling (selecting the maximum value within a region) and average pooling (averaging the values within a region).

- Fully connected layers: After multiple convolutional and pooling layers, these layers receive the flattened feature maps and integrate all previously extracted features to make the final prediction, such as a specific diagnosis or classification.

- Automation in feature extraction: CNNs eliminate the need to manually design filters to identify patterns in images.

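- Spatial invariance: They can recognize patterns largely regardless of their position within an image (translation invariance); robustness to changes in orientation usually requires training with rotated examples (data augmentation).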

- Hierarchy in learning: They learn representations that progress from simple features (edges) to complex ones (whole objects).
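To make the three layer types concrete, the following minimal NumPy sketch passes a toy 8 × 8 grayscale patch through one convolution (followed by a ReLU), one 2 × 2 max-pooling step, and one fully connected softmax layer. The random image, the hand-written vertical-edge kernel, and the two output classes are hypothetical illustrations; in a real CNN the kernels and weights are learned by backpropagation and many filters are applied in parallel.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, which is what CNN convolution layers compute."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h2, w2 = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h2 * size, :w2 * size]
    return trimmed.reshape(h2, size, w2, size).max(axis=(1, 3))

def fully_connected(features, weights, bias):
    """Flatten the feature maps, apply a linear layer, and convert scores to probabilities."""
    logits = features.ravel() @ weights + bias
    exp = np.exp(logits - logits.max())  # subtract max for numerical stability
    return exp / exp.sum()

rng = np.random.default_rng(0)
image = rng.random((8, 8))                          # hypothetical grayscale patch
edge_kernel = np.array([[1.0, 0.0, -1.0]] * 3)      # hand-made vertical-edge filter
fmap = np.maximum(conv2d(image, edge_kernel), 0.0)  # ReLU activation map, 6 x 6
pooled = max_pool(fmap)                             # 3 x 3 after 2 x 2 pooling
weights = rng.normal(size=(pooled.size, 2))         # 2 hypothetical classes
probs = fully_connected(pooled, weights, np.zeros(2))
```

Each stage shrinks the spatial representation (8 × 8 → 6 × 6 → 3 × 3 → 2 class scores), mirroring the progression from local features to a global decision described above.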

3.1.2. AI Applications in Dermatology

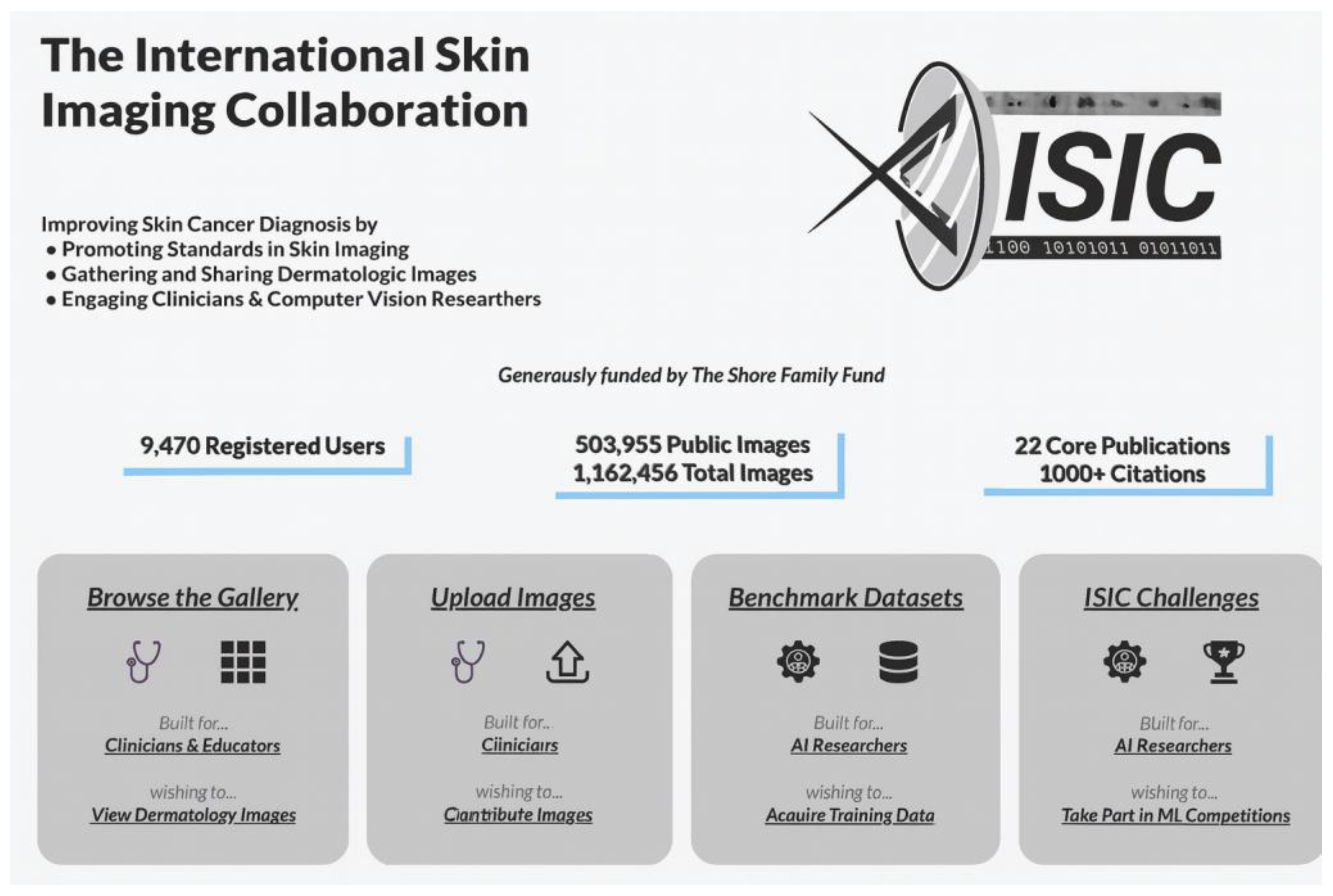

International Skin Imaging Collaboration (ISIC—New York, NY, USA)

- Public Image Archive: ISIC maintains an open-access public archive containing tens of thousands of dermatologic images. This resource is invaluable for teaching, research, and the development of artificial intelligence algorithms for skin disease diagnosis and is used in most AI projects in dermatological imaging.

- Standards Development: The collaboration works on the creation and promotion of standards for imaging in dermatology, addressing aspects such as technology, technique, terminology, privacy, and the interoperability of dermatological imaging.

- Machine Learning Challenges (ISIC Challenges): ISIC organizes annual competitions that invite artificial intelligence researchers to develop and evaluate algorithms for the analysis of skin lesion images. These challenges have contributed significantly to the advancement of computer-aided diagnostic techniques in dermatology, and some of them have included the use of GANs.

AI in Dermatology

- AI-assisted diagnosis: Models such as the one developed by Han et al. [44] have demonstrated greater than 90% accuracy in detecting melanomas from dermoscopy images.

- Detection of basal cell and squamous cell carcinomas: The algorithm of Han et al. [44] also achieved an accuracy of 94.8% in identifying malignant skin tumors.

- Segmentation of dermatological images: Several ISIC Challenges have driven the development of accurate segmentation algorithms that delineate pigmented lesions and tumor margins in dermoscopic images and improve the quality of dermatological image analysis [5].

- Follow-up of disease progression: Longitudinal follow-up tools, such as DermTrainer® (Silverchair Information Systems, Charlottesville, VA, USA), allow us to assess the evolution of skin lesions by comparing serial images, which is very useful, e.g., to evaluate the efficacy of treatments (https://www.silverchair.com/news/silverchair-launches-dermtrainer/ accessed on 12 May 2025).

- AI-assisted teledermatology: AI-enabled mobile apps allow patients to obtain a pre-assessment of skin lesions without the need for an in-person consultation. The SkinVision® app (SkinVision BV, Amsterdam, The Netherlands) analyzes images of moles and suspicious lesions using AI and provides a risk recommendation to the patient. The First Derm® platform (iDoc24 AB, Gothenburg, Sweden) uses AI algorithms to assist in the triage of patient-referred dermatology cases (https://www.firstderm.com/ai-dermatology/ accessed on 12 May 2025).

- Medical education and training: Simulators such as VisualDx’s Virtual Patient® (VisualDx, Rochester, NY, USA) use AI to generate clinical cases and evaluate students’ diagnostic reasoning.

- Dermatological research: Analysis of large volumes of images from the HAM10000 project (Medical University of Vienna, Vienna, Austria) has advanced the study of patterns in common pigmented lesions [46].

- Cosmetic applications and esthetic dermatology: Tools such as YouCam® (Perfect Corp., Taipei, Taiwan) or Modiface® (Modiface Inc., Toronto, ON, Canada) use AI to simulate cosmetic treatments, analyze skin aging, and predict esthetic results.

3.1.3. Current Challenges and Limitations

- Biases in AI Models

- Model Interpretability

- Regulatory and Legal Considerations

- Integration with Clinical Practice

- There is a need for interoperability with electronic medical record systems.

- Professionals are reluctant to rely on automated models. In addition, clinical validation is needed, which is difficult to achieve given the lack of prospective multicenter studies (i.e., clinical trials demonstrating a real benefit in daily practice are essential).

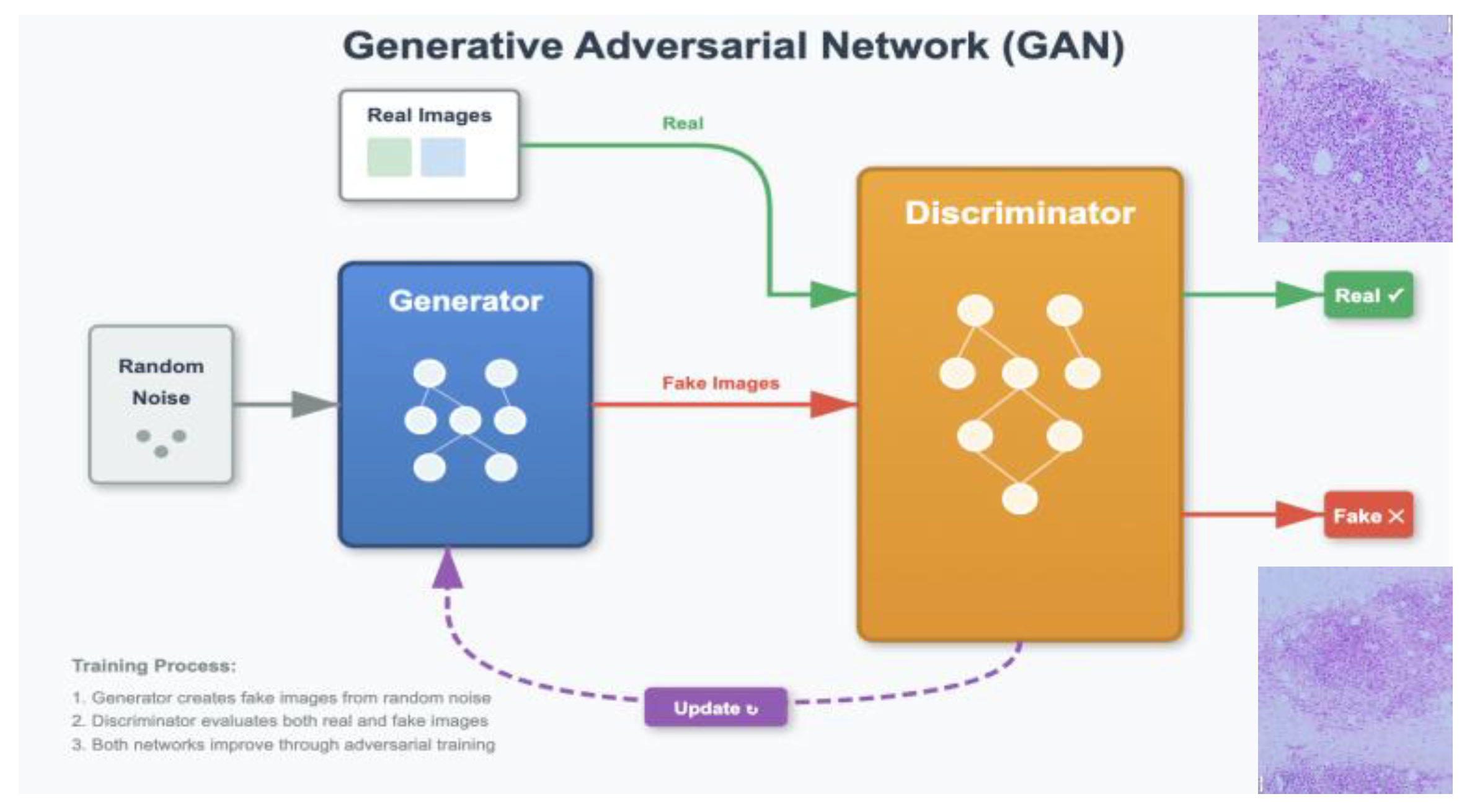

3.2. Generative Adversarial Networks (GANs)

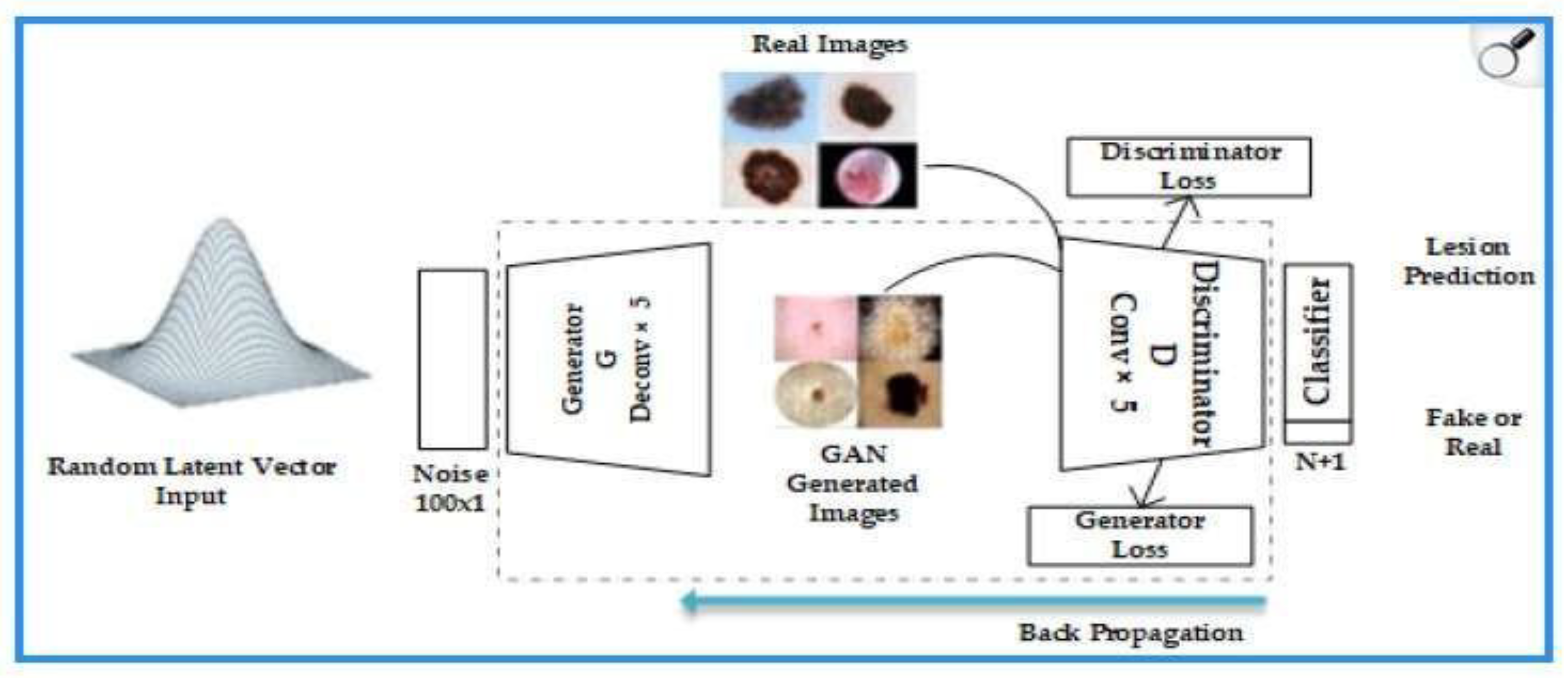

3.2.1. Principles and Functions of GANs

- Generator: transforms random noise vectors (z) into synthetic images. In dermatology, it is optimized to replicate skin textures (e.g., pores or vascularization) using deep convolutional layers [50].

- Discriminator: evaluates whether an image is real or artificially generated, using adversarial loss functions.
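The adversarial game between these two networks was formalized by Goodfellow et al. [6] as min_G max_D E_x[log D(x)] + E_z[log(1 − D(G(z)))]. The sketch below expresses that objective as the two losses usually minimized in practice: binary cross-entropy for the discriminator and the "non-saturating" variant for the generator (maximizing log D(G(z)) instead of minimizing log(1 − D(G(z))), also proposed in [6]). It operates only on hypothetical discriminator output probabilities; the networks themselves and the optimization loop are omitted.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite when D outputs exactly 0 or 1

def discriminator_loss(d_real, d_fake):
    """BCE for D: push D(x) -> 1 on real images and D(G(z)) -> 0 on synthetic ones."""
    d_real = np.clip(np.asarray(d_real, dtype=float), EPS, 1 - EPS)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), EPS, 1 - EPS)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))

def generator_loss(d_fake):
    """Non-saturating loss for G: reward synthetic images that D rates as real."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), EPS, 1 - EPS)
    return float(-np.mean(np.log(d_fake)))

# Hypothetical discriminator outputs (probability that each image is real).
confident = discriminator_loss([0.9, 0.8], [0.1, 0.2])    # D separates the sets well
equilibrium = discriminator_loss([0.5, 0.5], [0.5, 0.5])  # D cannot tell them apart
```

At the theoretical optimum of the game, D outputs 0.5 for every image, so the discriminator loss equals 2 ln 2 ≈ 1.386 and the generator loss equals ln 2.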

3.2.2. Relevant GAN Architectures in Medical Imaging

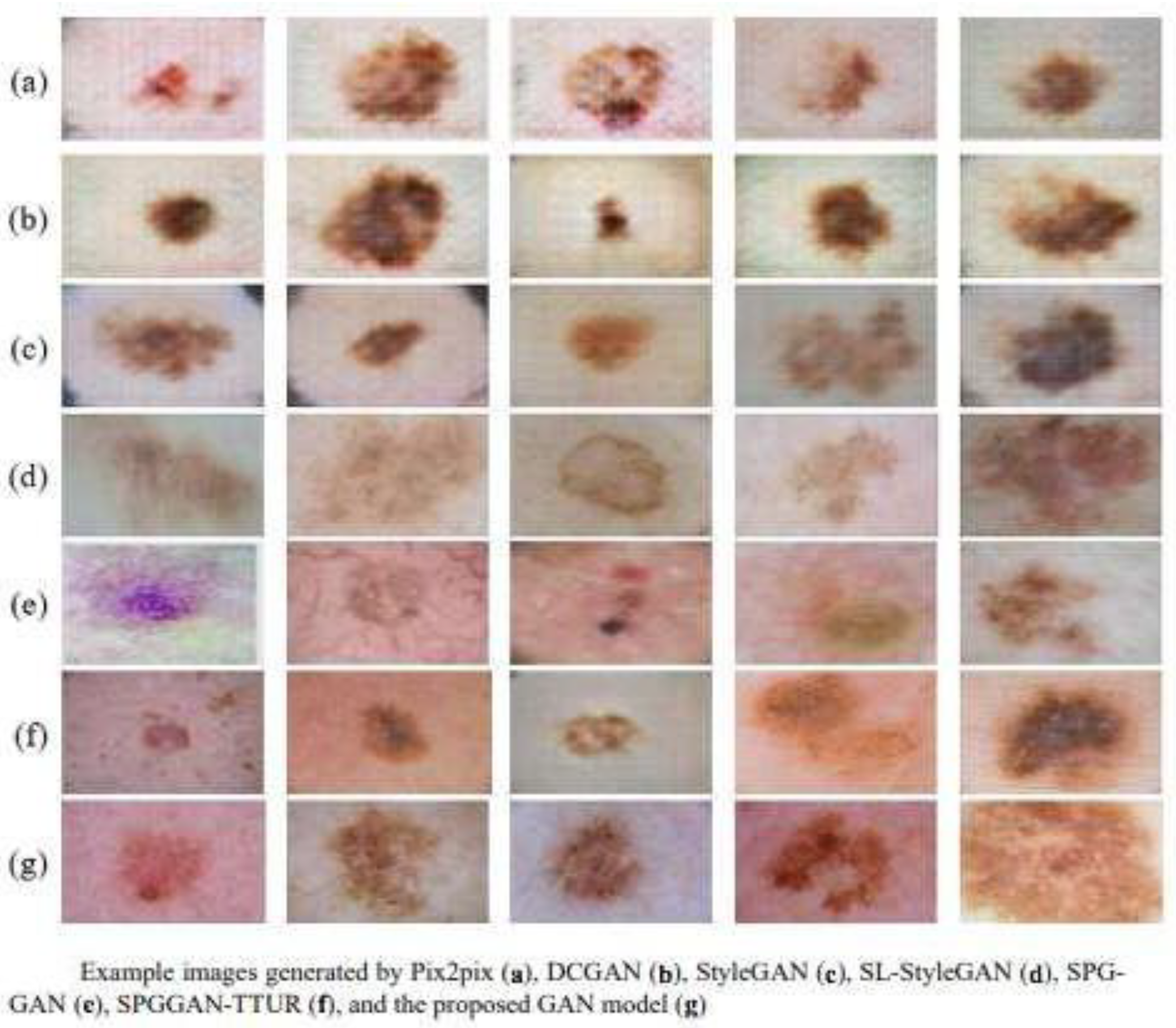

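- DCGAN (Deep Convolutional GANs): Introduces deep convolutional architectures to improve the quality of the generated images [51]. For example, it established design guidelines, such as batch normalization, that stabilize training, and DCGAN-based models have been used to generate images for analyzing melanoma patterns [8,39]. The original DCGAN produced 64 × 64-pixel images; resolutions of 1024 × 1024 pixels were reached by later architectures such as progressive GANs. DCGANs are often used to synthesize dermoscopic patches that mimic lesion texture, helping to balance datasets and improve classifier training.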

- Pix2Pix: A conditional GAN for paired image-to-image translation, used, for example, to convert grayscale dermatological images to color [52]. It is useful whenever paired data exist (e.g., clinical versus dermoscopic views of the same lesion), supporting reconstruction and education.

- CycleGAN: Converts images between domains without the need for paired images, which is useful for synthesizing dermoscopic images from clinical images [53]. It enables unpaired domain transfer (e.g., standardizing images from different cameras or centers), which can reduce device-related variability before analysis. The study by Han et al. [44] achieved a Structural Similarity Index (SSIM) of 0.89 in this task.
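CycleGAN can learn from unpaired data because, in addition to its adversarial losses, it penalizes translations that cannot be undone: mapping an image to the other domain and back should recover the original. The sketch below computes only this L1 cycle-consistency term, with the weight λ = 10 used in the original CycleGAN paper. The two "translators" are hypothetical linear brightness/contrast maps standing in for trained generator networks, and the adversarial terms are omitted.

```python
import numpy as np

def cycle_consistency_loss(x, y, G, F, lam=10.0):
    """L1 cycle loss: lam * (mean|F(G(x)) - x| + mean|G(F(y)) - y|).

    G maps domain X -> Y (e.g., clinical -> dermoscopic) and F maps Y -> X.
    """
    forward = np.mean(np.abs(F(G(x)) - x))   # X -> Y -> X round trip
    backward = np.mean(np.abs(G(F(y)) - y))  # Y -> X -> Y round trip
    return float(lam * (forward + backward))

def G(img):
    """Toy X -> Y mapping (hypothetical stand-in for a trained generator)."""
    return 1.2 * img + 0.05

def F_inverse(img):
    """Exact inverse of G, so both round trips recover the input."""
    return (img - 0.05) / 1.2

def F_identity(img):
    """Ignores the round trip, so the cycle loss stays large."""
    return img

rng = np.random.default_rng(1)
x = rng.random((4, 32, 32, 3))  # hypothetical image batch from domain X
y = rng.random((4, 32, 32, 3))  # hypothetical image batch from domain Y

loss_good = cycle_consistency_loss(x, y, G, F_inverse)   # close to 0
loss_bad = cycle_consistency_loss(x, y, G, F_identity)   # clearly positive
```

During real training this term is added to the two adversarial losses, discouraging the generators from producing realistic-looking images that have lost the content of the input lesion.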

3.2.3. Evolution and Improvements in GAN Models

1. Training stability

2. Image quality and resolution

3. Semantic control

4. Conditionality

5. Multimodality

3.2.4. Limitations and Challenges in Implementing GANs

- Lack of explainability and interpretability: The “black box” nature of generative models makes their clinical validation difficult [3,9,46]. It is hard to explain how a GAN produces a specific synthetic image. In the medical context, this lack of interpretability can be a major obstacle to clinical adoption, as practitioners need to understand how the images are produced in order to rely on them as diagnostic tools.

- Possibility of bias in the generated data: If the training dataset is limited or not representative, GANs can amplify existing biases [47]. Moreover, medical imaging data can vary significantly depending on the quality of the equipment used, the experience of the technician acquiring the images, and the presence of artifacts. These inconsistencies can contaminate the data and compromise the accuracy of the trained models [18,30].

- Regulation and clinical approval: The lack of specific regulatory standards for generative models poses obstacles to their clinical implementation. Moreover, although these images do not correspond to real patients, their misuse could raise privacy and security issues. In addition, there is a risk of GANs being used to create false or misleading medical content [35].

- Costs and operational complexity: The creation and maintenance of standardized databases to train GANs is a resource-intensive process. This includes technical harmonization between different sources, accurate annotation, and compliance with legal and ethical regulations. These difficulties limit the availability and representativeness of the data needed to develop reliable tools [9,41].

3.3. GANs in Dermatology

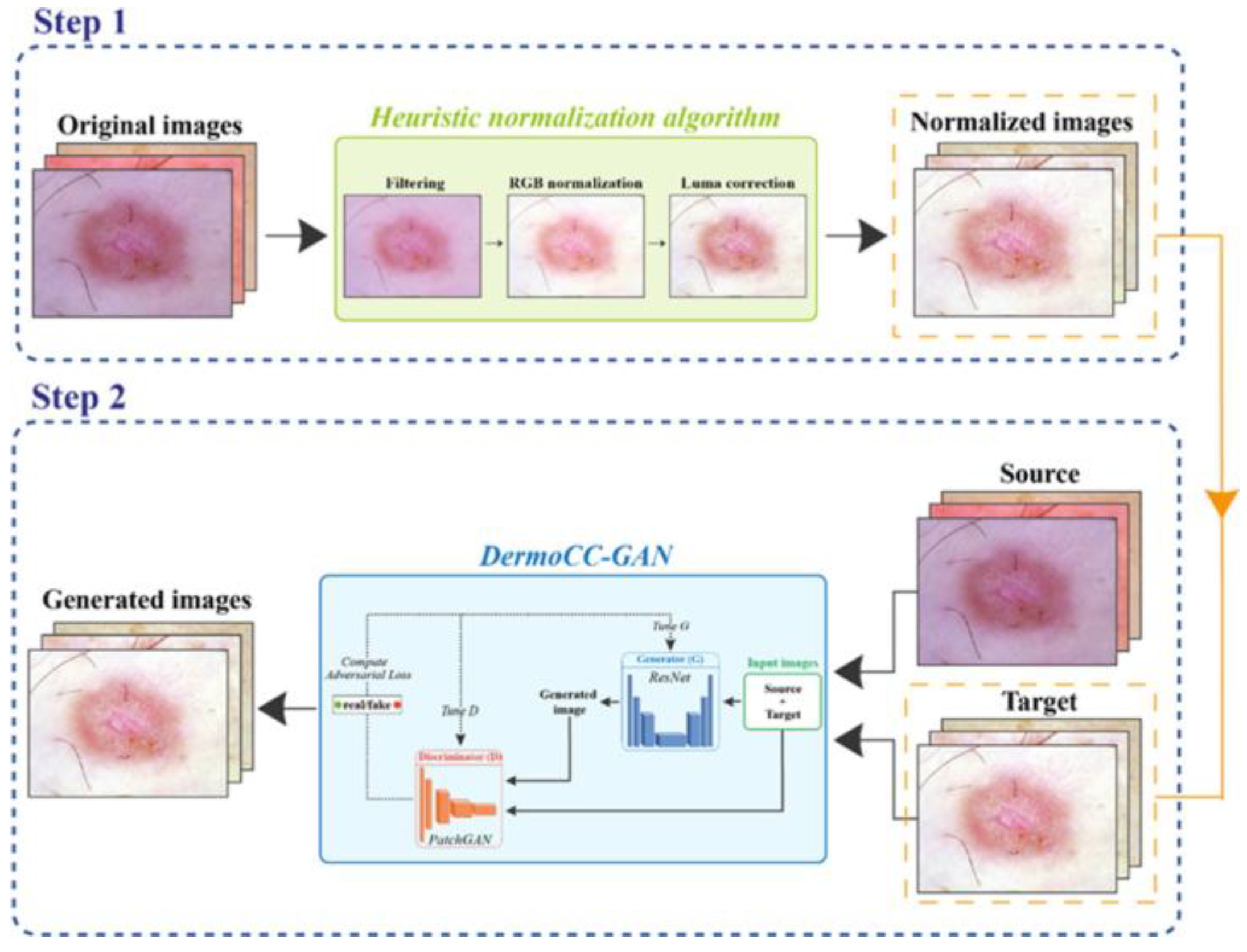

- DermoCC-GAN [29]:

- 2.

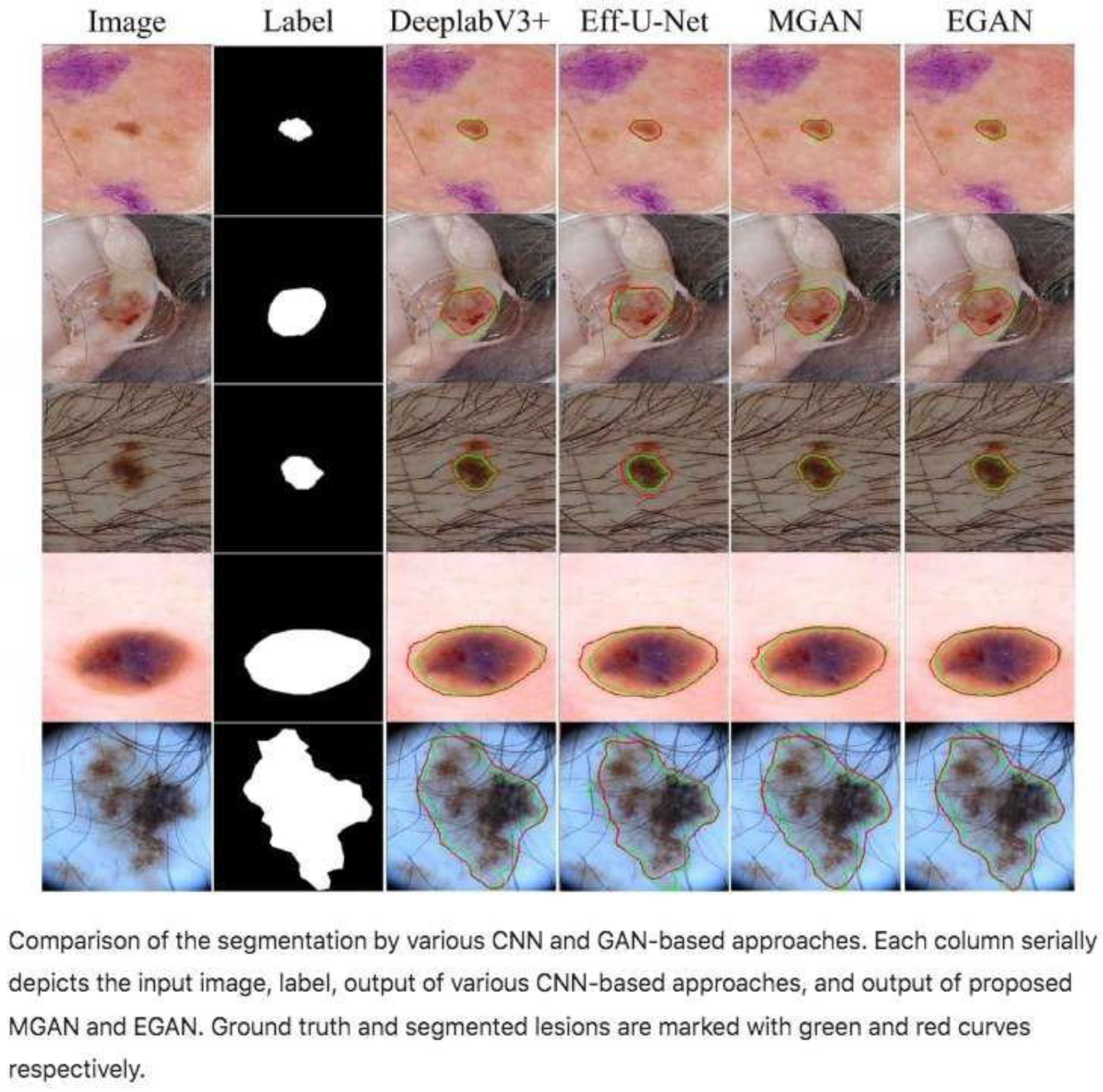

- Efficient-GAN (EGAN) [24]

- 3.

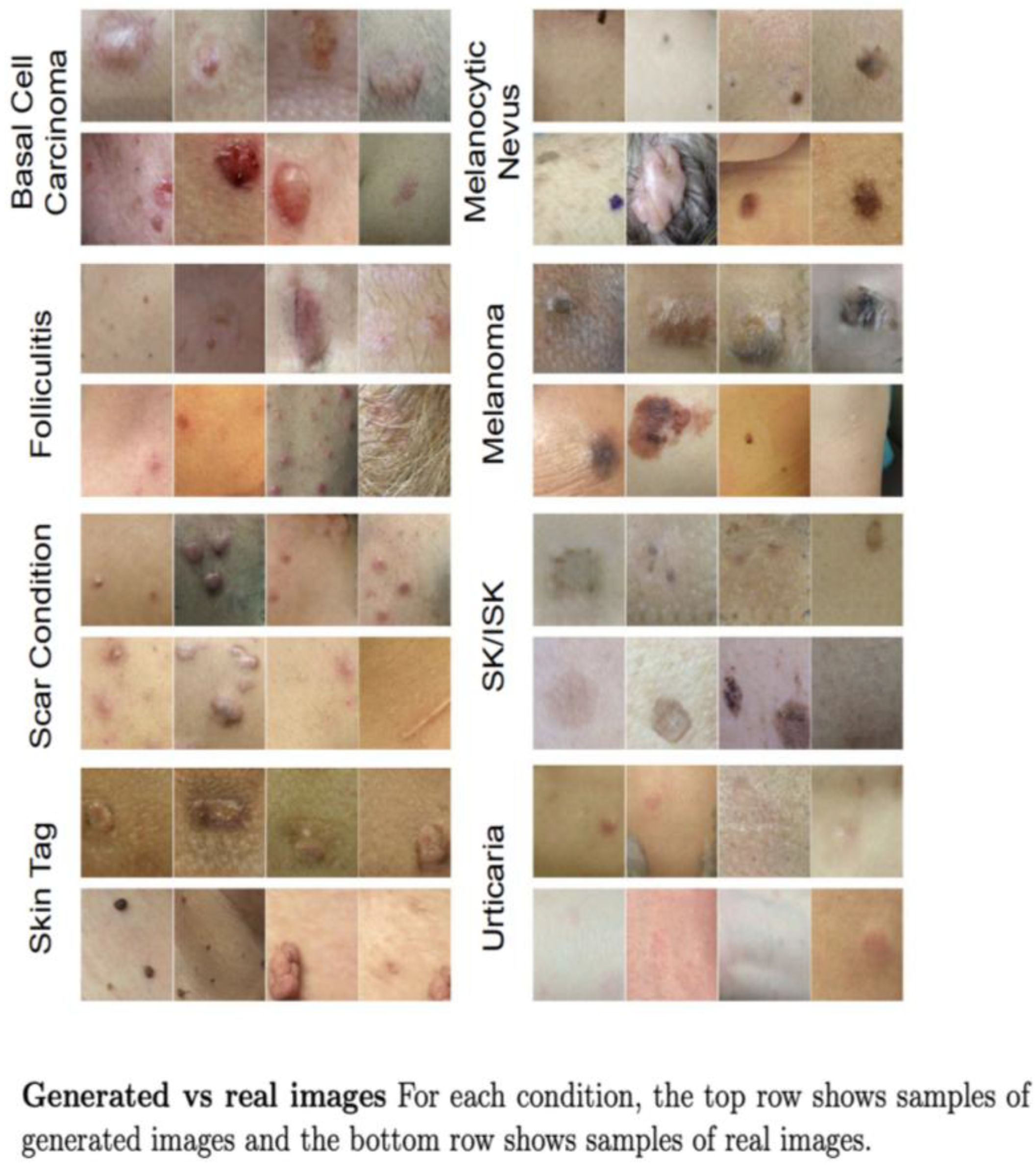

- DermGAN [22]

- 4.

- Skin Lesion Style-Based GANs [17]

- 5.

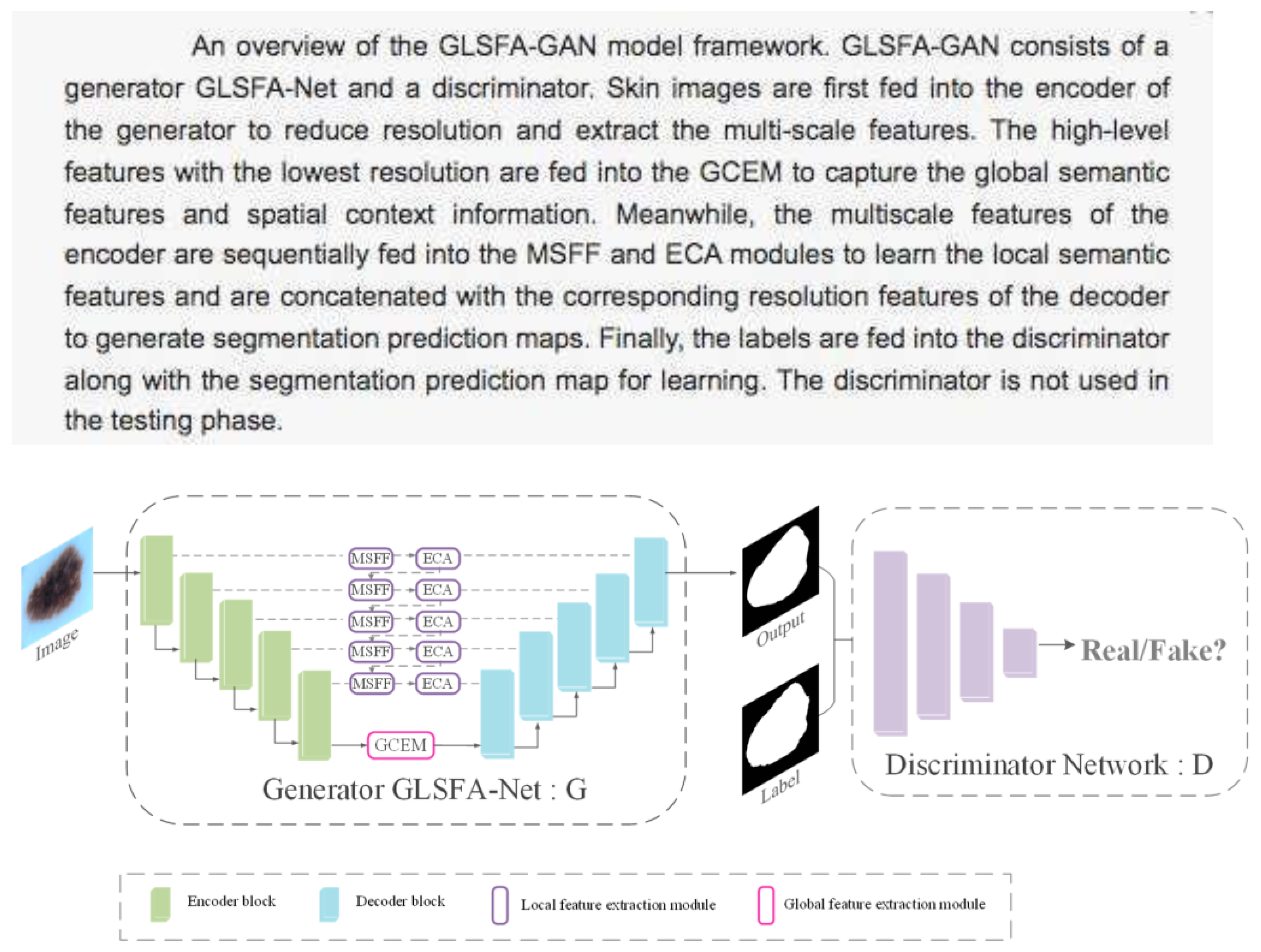

- GLSFA-GAN (Generative Adversarial Network with Global and Local Semantic Feature Awareness) [34].

- 6.

- GAN with Hybrid Loss Function [23].

- 7.

- “Conditional GANs for Dermatological Imaging [19].

3.4. Practical Applications in Clinical Dermatology

- Clinical support in consultation or automated triage: We could augment datasets by generating realistic synthetic images of skin lesions (melanoma, psoriasis, acne, etc.) to improve the training of AI algorithms when data are scarce [16]. This could improve the accuracy of diagnostic models.

- Simulation of disease progression [19]: We could show how a lesion would evolve over time if left untreated (melanoma, ulcers, psoriasis, etc.). This could provide valuable support for clinical decision-making and for visual explanations to patients, and could guide the choice of more aggressive treatments if an unfavorable evolution is predicted.

- Reconstruction of poor-quality images: We could improve blurred, pixelated, or poorly illuminated photos (e.g., sent by patients). This would allow us to make better remote diagnoses (teledermatology), even with suboptimal images.

- Standardization of color and image conditions [30]: We could automatically correct differences in lighting, apparent skin tone, and contrast between images taken under different conditions. This would facilitate comparisons between visits and longitudinal follow-up, and improve the accuracy of both AI and our own clinical assessment.

- Lesion segmentation: We could automatically separate the lesion from the background (healthy skin) in an image. This could enable more objective measurement of the affected area (ulcer, psoriasis, eczema, etc.), follow-up of the response to treatment, and more accurate clinical documentation.

- AI-assisted classification [25,27]: We could generate images to train systems that then classify lesions (melanoma vs. nevus [25], types of acne, etc.). This could be a source of diagnostic support in consultation, which is especially useful in patients with multiple lesions, or in the triaging of suspicious cases.

- Medical education and training: We could create synthetic didactic images of rare cases or cases at different stages. These could serve as an educational tool for students, residents, or even other specialists (e.g., primary care physicians).

- Improve balance in clinical datasets: We could generate images of under-represented populations (e.g., dark skin tones [37]). This could contribute to more inclusive AI systems and avoid bias in automated diagnostics.

- Creation of interactive tools for patients: We could show the patient how their skin will look with certain treatments or if left untreated (e.g., progression of rosacea, acne, vitiligo). This could improve adherence to treatment programs and understanding of the disease, especially in digital natives or young patients.

- Simulation of esthetic or surgical results: We could generate simulated images of how the skin will look after an intervention (laser, cryotherapy, surgical resection). This could lead to clearer communication with the patient, the management of expectations, and support for informed consent.

- Development of AI clinical applications: We could use generated images to create or enhance automatic assessment apps (e.g., for acne patients who send weekly photos). This could assist in the remote monitoring of chronic patients and the optimization of office resources and lead to more accurate referrals.
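Two of the applications listed above, objective measurement of a segmented lesion and color standardization, reduce to simple array operations once a model has produced its output. The sketch below assumes a binary lesion mask (as a segmentation network would output) and a known physical pixel size, which is a hypothetical calibration value; it computes the affected area and the IoU and Dice overlap with a reference mask. For color, it shows a classical per-channel mean/standard-deviation transfer (a simplified RGB version of Reinhard-style color transfer). This is not a GAN; it is the kind of simple baseline against which GAN-based standardization, such as the approaches in [30], can be compared.

```python
import numpy as np

def lesion_area_mm2(mask, mm_per_pixel):
    """Area of a binary mask, given an assumed physical size of one pixel side."""
    return float(mask.sum() * mm_per_pixel ** 2)

def iou_and_dice(pred, ref):
    """Overlap between a predicted and a reference binary mask."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    inter = np.logical_and(pred, ref).sum()
    union = np.logical_or(pred, ref).sum()
    total = pred.sum() + ref.sum()
    iou = inter / union if union else 1.0     # two empty masks count as a match
    dice = 2 * inter / total if total else 1.0
    return float(iou), float(dice)

def match_color_stats(image, reference):
    """Shift and scale each RGB channel of `image` to the mean/std of `reference` (values in [0, 1])."""
    out = np.empty_like(image, dtype=float)
    for c in range(image.shape[-1]):
        src = image[..., c].astype(float)
        ref = reference[..., c].astype(float)
        std = src.std()
        scale = ref.std() / std if std > 0 else 1.0
        out[..., c] = (src - src.mean()) * scale + ref.mean()
    return np.clip(out, 0.0, 1.0)

# Toy 10 x 10 masks: the prediction is the reference shifted one pixel to the right.
ref_mask = np.zeros((10, 10), dtype=bool)
ref_mask[2:6, 2:6] = True                  # 16 lesion pixels
pred_mask = np.roll(ref_mask, 1, axis=1)   # also 16 pixels, 12 of them overlapping
scores = iou_and_dice(pred_mask, ref_mask) # (0.6, 0.75)
```

The one-pixel shift gives IoU = 12/20 = 0.6 and Dice = 24/32 = 0.75, illustrating that Dice is never lower than IoU for the same pair of masks.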

3.5. Reliability Assessment and Ethical Challenges

3.5.1. Validation of Images Generated by GANs

Comparison with Real Images Using Metrics:

- Fréchet Inception Distance (FID) [23,58]: FID measures how similar the images generated by a GAN are to real images by comparing deep features extracted by a pretrained neural network (usually Inception v3) rather than raw pixels. Both the real and the generated images are passed through the Inception network, which extracts “features” (internal representations) from each set; the statistical distributions of those features (mean and covariance) are then compared using the Fréchet distance. The lower the FID, the better: a lower value indicates that the generated images are more similar to the real ones. An FID of 0 would be ideal, meaning that the feature statistics of the synthetic and real images are identical.

- Structural Similarity Index (SSIM) [19,33]: SSIM compares two images and evaluates their structural similarity. It is very useful, for example, to check whether a generated image is visually similar to an original image (e.g., in super-resolution or denoising tasks). It compares three properties of the two images: luminance, contrast, and structure. The resulting value usually lies between 0 and 1, where 1 means identical images and values close to 0 indicate little structural similarity (negative values are possible for anti-correlated images). A value greater than 0.9 is often considered to represent high structural similarity.

- Quality and realism metrics:

- Perceptual metrics:

- Learned Perceptual Image Patch Similarity (LPIPS) [26,34,48]: Measures perceptual similarity by computing the distance between the activations that two images produce in a deep neural network (such as AlexNet or VGG). It correlates better with human perception than SSIM or PSNR.

- Diversity Metrics:

- Mode Score [26]: An extension of the Inception Score that penalizes the lack of class coverage; it is used to evaluate class diversity (e.g., coverage of the digits 0 to 9 in MNIST).

- Specific metrics in medical imaging:
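The FID and SSIM definitions given earlier in this subsection can be made concrete with a short NumPy sketch. It assumes that Inception-v3 feature vectors have already been extracted for each image set (the extraction step is omitted, and the random arrays below merely stand in for such features), and it computes FID as ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). The SSIM function is a deliberate simplification: it evaluates the luminance, contrast, and structure terms once over the whole image with the usual constants C1 = (0.01 L)² and C2 = (0.03 L)², whereas standard SSIM averages the same formula over local, typically Gaussian-weighted, windows.

```python
import numpy as np

def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def fid(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two (n_images, n_features) arrays."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) equals the sum of square roots of the eigenvalues of the
    # symmetric PSD matrix cov_r^(1/2) cov_g cov_r^(1/2), which is numerically stable.
    root_r = _sqrtm_psd(cov_r)
    eig = np.linalg.eigvalsh(root_r @ cov_g @ root_r)
    tr_covmean = np.sqrt(np.clip(eig, 0.0, None)).sum()
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_covmean)

def ssim_global(x, y, data_range=1.0):
    """Single-window SSIM (simplified; standard SSIM averages this over local windows)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)

rng = np.random.default_rng(0)
real_feats = rng.normal(size=(500, 8))          # stand-in for real-image features
gen_feats = rng.normal(loc=0.5, size=(500, 8))  # stand-in for shifted synthetic features
img = rng.random((64, 64))                      # hypothetical grayscale image in [0, 1]
noisy = np.clip(img + rng.normal(scale=0.1, size=img.shape), 0.0, 1.0)
```

Comparing a feature set with itself gives an FID of (numerically) 0, while the shifted "synthetic" features give a clearly positive value. Likewise, `ssim_global(img, img)` returns 1, the noisy copy scores lower, and the inverted image `1 - img` scores below 0.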

Evaluations by Clinical Experts

Testing on Classification Models

3.5.2. Biases and Problems in Generative Models

- Limitation in the representation of skin phototypes: One of the most prominent problems in the use of GANs for medical imaging is the lack of representativeness in the training data, especially in relation to skin phototypes. Most of the datasets used to train these models are biased towards lighter skin tones, which may reduce the effectiveness of the images generated for patients with darker skin. According to Adamson and Smith [47], this bias limits diagnostic and therapeutic capability in under-represented populations, perpetuating inequities in access to advanced artificial intelligence-based tools. This problem is especially critical in dermatology, where the visual characteristics of skin lesions can vary significantly depending on the patient’s skin tone. The Fitzpatrick scale [60], used to classify phototypes according to the amount of melanin and sensitivity to the sun, could serve as a benchmark for developing more diverse and representative datasets. However, its implementation requires a coordinated effort to collect clinical images that include all phototypes, from I (very light skin) to VI (very dark skin).

- Risk of overfitting to specific patterns, which may reduce the variability of the generated images: Overfitting is another critical challenge in GAN training. It occurs when the model learns specific patterns from the training dataset and loses the ability to generalize to new data. In the medical context, this can result in synthetic images that only reproduce features present in the original data, reducing the variability needed to adequately represent different clinical cases [61].

- Difficulties in model calibration: Although GANs are capable of generating highly realistic images, they may contain incorrect or irrelevant clinical information. Yi et al. [8] point out that GANs may generate artifacts or anatomical inconsistencies that do not correspond to real features observed in patients. This represents a significant risk for clinical applications, as synthetic images could induce diagnostic errors if used without proper validation.

3.5.3. Ethics and Regulations in the Use of Synthetic Imaging

Ethical Considerations

Regulatory Landscape

Summary and Perspectives

3.6. Future Perspectives and Clinical Applications

3.6.1. Integration into Clinical Practice

- Clinical and regulatory validation: Before being incorporated into routine care, GAN-based applications must undergo rigorous clinical trials that evaluate their safety, diagnostic efficacy, and added value over conventional methods. In addition, they must comply with regulatory standards, such as the European Union’s Medical Device Regulation (MDR) or FDA approval in the USA.

- Acceptance by healthcare personnel: For these technologies to be adopted, it is essential that dermatologists understand how they work, their limitations and their clinical applicability. Training in artificial intelligence (AI) and interpretation of results is key to fostering effective human–machine collaboration [46].

- Technological infrastructure and digitization: Implementation of GAN models requires robust computing, storage, and networking infrastructure. Hospitals must have interoperable and secure systems that allow for the seamless integration of these tools [40].

- Interoperability with electronic health record systems (EHRs): GAN-based models can be integrated with EHRs to facilitate automated documentation, improve continuity of care, and provide more accurate assisted diagnoses [5]. This could also streamline screening for suspicious lesions through automatically generated clinical alerts.

Potential Impact on Assisted Diagnosis by AI

- Generation of extended and balanced datasets [59]: GANs can synthesize high-quality dermatological images with a variety of pathologies, which allows for the balancing of datasets with a scarcity of examples (e.g., images of rare diseases), reducing bias in deep learning models.

- Enhanced teledermatology: The combination of GANs with telemedicine platforms allows for the simulation of diverse clinical cases, facilitating the training of models operating in remote contexts. This is especially useful for regions with limited access to dermatologists [20].

- Support in differential diagnosis: GANs can generate images that represent different stages of a disease or atypical variations, helping dermatologists to recognize complex patterns and improve their clinical judgment. This could help reduce diagnostic errors in difficult cases [32].
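The dataset-balancing idea above amounts to simple bookkeeping: for each under-represented class, count how many synthetic images a GAN would need to contribute to reach a target size. The sketch assumes the target defaults to the size of the largest class; the class names and counts are hypothetical. In practice, synthetic images should typically be added only to the training split, so that evaluation is still performed on real images.

```python
from collections import Counter

def synthetic_quota(labels, target=None):
    """Number of synthetic images per class needed to reach `target` (default: largest class)."""
    counts = Counter(labels)
    goal = max(counts.values()) if target is None else target
    return {cls: max(goal - n, 0) for cls, n in sorted(counts.items())}

# Hypothetical, highly imbalanced training labels.
labels = ["nevus"] * 600 + ["melanoma"] * 100 + ["dermatofibroma"] * 10
quota = synthetic_quota(labels)
# {'dermatofibroma': 590, 'melanoma': 500, 'nevus': 0}
```

Passing an explicit `target` (e.g., `synthetic_quota(labels, target=300)`) caps augmentation for very rare classes, where a handful of real examples may not be enough for a GAN to learn a faithful distribution.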

3.6.2. Future Lines of Research

- Improving explainability: One of the main criticisms of AI systems is their opacity. Therefore, GAN models that integrate visual interpretation mechanisms, such as attention maps or Grad-CAM, are being developed [48]; these mechanisms help identify which regions of the image influenced the model’s decision.

- Equity and inclusion in datasets: To prevent algorithms from perpetuating inequalities, synthetic generation strategies focused on under-represented populations, including different ethnic groups and skin phototypes, are being promoted. Diversity in training data is essential to ensure truly equitable AI [47,60].

- Personalized medicine [2]: GANs could be used to predict treatment response in chronic inflammatory diseases such as psoriasis or severe acne by simulating clinical evolution with and without treatment. This would open the door to individualized therapeutic decision models.

- Collaboration between dermatologists and engineers will be key to ensure clinically relevant applications.

4. Conclusions

4.1. Summary of Findings

4.2. Limitations of the Study

4.3. Recommendations for Future Research

- Formation of multidisciplinary teams: It is essential to foster collaboration between dermatologists, computer engineers, biomedical engineers, statisticians, and artificial intelligence experts. The correct implementation of GAN models in clinical settings requires not only sound technical development but also a thorough understanding of the dermatologic diagnostic processes, clinical needs, and ethical issues associated with the use of synthetic medical images. The presence of multidisciplinary teams ensures a more robust experimental design, a validation more in line with clinical practice, and a proper interpretation of the results.

- Increasing the diversity and representativeness of the data: Many current studies use public databases in which higher phototypes (e.g., Fitzpatrick V–VI) or rare pathologies are under-represented. It is crucial to encourage the collection and sharing of diverse and well-annotated dermatologic data to improve the generalizability of models and avoid algorithmic biases.

- Clinical validation and comparison with specialists: It is recommended that the generated models be evaluated not only by quantitative metrics (FID, IS, IoU…) but also through comparative studies with dermatologic clinical practice. This includes the participation of specialists to assess the diagnostic utility of the synthetic images or of the segmentation or classification models trained with them.

- Exploration of new clinical applications: Beyond diagnosis, GANs could have applications in medical education, image quality improvement, dataset generation for rare diseases, or pre-surgical simulations. Future studies should explore these possibilities.

- Development of accessible and explainable tools: It is recommended to prioritize the development of models that are not only accurate but also interpretable and understandable for clinicians. The algorithmic transparency and the possibility of auditing the model’s decisions will facilitate its acceptance and use in practice.

- Ethical, legal, and regulatory considerations: Any development should incorporate from its early stages an assessment of the ethical, legal, and privacy risks, especially in relation to the generation of synthetic images of patients. Future studies should align with emerging regulatory frameworks on AI in healthcare.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| CDRH | Center for Devices and Radiological Health |
| cGAN | Conditional Generative Adversarial Network |
| CNN | Convolutional Neural Network |
| DCGAN | Deep Convolutional GAN |
| DL | Deep Learning |
| EGAN | Efficient-GAN |
| EMA | European Medicines Agency |
| EU | European Union |
| FDA | Food and Drug Administration |
| FDP | Final Degree Project |
| FID | Fréchet Inception Distance |
| FLAIR | Fluid Attenuated Inversion Recovery |
| GAN | Generative Adversarial Network |
| GDPR | General Data Protection Regulation |
| GLSFA | Global and Local Semantic Feature Awareness |
| GMLP | Good Machine Learning Practice |
| HIPAA | Health Insurance Portability and Accountability Act |
| IoU | Intersection over Union |
| IS | Inception Score |
| LPIPS | Learned Perceptual Image Patch Similarity |
| MDR | Medical Device Regulation |
| MELIIGAN | Melanoma Lesion Image Improvement GAN |
| MSE | Mean Squared Error |
| MT | Master’s Thesis |
| PMA | Premarket Approval |
| PPL | Perceptual Path Length |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PSNR | Peak Signal-to-Noise Ratio |
| ROC | Receiver Operating Characteristic |
| SaMD | Software as a Medical Device |
| SSIM | Structural Similarity Index |
| VAE | Variational Autoencoder |
| VGG | Visual Geometry Group |
| WGAN | Wasserstein GAN |

References

- Annals RANM. Artificial Intelligence, Medical Imaging, and Precision Medicine: Advances and Perspectives. 2024. Available online: https://analesranm.es/revista/2024/141_02/14102_rev02 (accessed on 15 August 2025).

- Kourounis, G.; Elmahmudi, A.A.; Thomson, B.; Hunter, J.; Ugail, H.; Wilson, C. Computer image analysis with artificial intelligence: A practical introduction for medical professionals. Postgrad. Med. J. 2023, 99, 1287–1294. [Google Scholar] [CrossRef]

- Omiye, J.A.; Gui, H.; Daneshjou, R.; Cai, Z.R.; Muralidharan, V. Principles, applications, and future of artificial intelligence in dermatology. Front. Med. 2023, 10, 1278232. [Google Scholar] [CrossRef] [PubMed]

- Wongvibulsin, S.; Yan, M.J.; Pahalyants, V.; Murphy, W.; Daneshjou, R.; Rotemberg, V. Current State of Dermatology Mobile Applications With Artificial Intelligence Features. JAMA Dermatol. 2024, 160, 646–650. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Koban, K.C.; Schenck, T.L.; Giunta, R.E.; Li, Q.; Sun, Y. Artificial Intelligence in Dermatology Image Analysis: Current Developments and Future Trends. J. Clin. Med. 2022, 11, 6826. [Google Scholar] [CrossRef] [PubMed]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680. [Google Scholar] [CrossRef]

- Daneshjou, R.; Vodrahalli, K.; Novoa, R.A.; Jenkins, M.; Liang, W.; Rotemberg, V.; Ko, J.; Swetter, S.M.; Bailey, E.E.; Gevaert, O.; et al. Disparities in dermatology AI performance on a diverse, curated clinical image set. Sci. Adv. 2022, 8, eabq6147. [Google Scholar] [CrossRef]

- Yi, X.; Walia, E.; Babyn, P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019, 58, 101552. [Google Scholar] [CrossRef]

- Behara, K.; Bhero, E.; Agee, J.T. AI in dermatology: A comprehensive review into skin cancer detection. PeerJ Comput. Sci. 2024, 10, e2530. [Google Scholar] [CrossRef]

- Furriel, B.C.R.S.; Oliveira, B.D.; Prôa, R.; Paiva, J.Q.; Loureiro, R.M.; Calixto, W.P.; Reis, M.R.C.; Giavina-Bianchi, M. Artificial intelligence for skin cancer detection and classification for clinical environment: A systematic review. Front. Med. 2024, 10, 1305954. [Google Scholar] [CrossRef]

- Hermosilla, P.; Soto, R.; Vega, E.; Suazo, C.; Ponce, J. Skin cancer detection and classification using neural network algorithms: A systematic review. Diagnostics 2024, 14, 454. [Google Scholar] [CrossRef]

- Iglesias-Puzas, A.; Boixeda, P. Deep learning and mathematical models in dermatology. Actas Dermosifiliogr. 2020, 111, 192–195. [Google Scholar] [CrossRef]

- Liu, Y.; Li, C.; Li, F.; Lin, R.; Zhang, D.; Lian, Y. Advances in deep learning-facilitated early detection of melanoma. Brief. Funct. Genom. 2025, 24, elaf002. [Google Scholar] [CrossRef]

- Nazari, S.; Garcia, R. Automatic skin cancer detection using clinical images: A comprehensive review. Life 2023, 13, 2123. [Google Scholar] [CrossRef] [PubMed]

- Vardasca, R.; Reddy, G.; Sanches, J.; Moreira, D. Skin cancer image classification using AI: A systematic review. J. Imaging 2024, 10, 265. [Google Scholar] [CrossRef]

- Alshardan, A.; Alahmari, S.; Alghamdi, M.; AL Sadig, M.; Mohamed, A.; Mohammed, G.P. GAN-based synthetic medical image augmentation for class imbalanced dermoscopic image analysis. Fractals 2025, 33, 2540039. [Google Scholar] [CrossRef]

- Behara, K.; Bhero, E.; Agee, J.T. Skin Lesion Synthesis and Classification Using an Improved DCGAN Classifier. Diagnostics 2023, 13, 2635. [Google Scholar] [CrossRef] [PubMed]

- Carrasco Limeros, S.; Camacho Vallejo, T.; Escudero, V.; Pérez, L.; Pomares, H.; Rojas, I. GAN-based generative modelling for dermatological applications: Comparative study. arXiv 2022. [Google Scholar] [CrossRef]

- Ding, S.; Zheng, J.; Liu, Z.; Zheng, Y.; Chen, Y.; Xu, X.; Lu, J.; Xie, J. High-resolution dermoscopy image synthesis with conditional generative adversarial networks. Biomed. Signal Process. Control 2021, 64, 102224. [Google Scholar] [CrossRef]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef]

- Efimenko, M.; Ignatev, A.; Koshechkin, K. Review of medical image recognition technologies to detect melanomas using neural networks. BMC Bioinform. 2020, 21 (Suppl. 11), 270. [Google Scholar] [CrossRef]

- Ghorbani, A.; Natarajan, V.; Coz, D.; Liu, Y. DermGAN: Synthetic Generation of Clinical Skin Images with Pathology. Proc. Mach. Learn. Health NeurIPS Workshop 2020, 116, 155–170. Available online: https://proceedings.mlr.press/v116/ghorbani20a.html (accessed on 12 May 2025).

- Goceri, E. GAN-based augmentation using a hybrid loss function for dermoscopy images. Artif. Intell. Rev. 2024, 57, 234. [Google Scholar] [CrossRef]

- Innani, S.; Dutande, P.; Baid, U.; Pokuri, V.; Bakas, S.; Talbar, S.; Baheti, B.; Guntuku, S.C. Generative adversarial networks based skin lesion segmentation. Sci. Rep. 2023, 13, 13467. [Google Scholar] [CrossRef] [PubMed]

- Heenaye-Mamode Khan, M.; Gooda Sahib-Kaudeer, N.; Dayalen, M.; Mahomedaly, F.; Sinha, G.R.; Nagwanshi, K.K.; Taylor, A. Multi-class skin problem classification using deep generative adversarial network (DGAN). Comput. Intell. Neurosci. 2022, 2022, 9480139. [Google Scholar] [CrossRef]

- Perez, E.; Ventura, S. Progressive growing of GANs for improving data augmentation and skin cancer diagnosis. Artif. Intell. Med. 2023, 141, 102556. [Google Scholar] [CrossRef]

- Qin, Z.; Song, K.; Leng, J.; Cheng, X.; Zheng, J. A GAN-based image synthesis method for skin lesion classification. Comput. Methods Programs Biomed. 2020, 195, 105568. [Google Scholar] [CrossRef]

- Ren, Z.; Liang, J.; Wan, W.; Wang, D.; Wang, Y.; Zhong, Y. Controllable medical image generation via GAN. J. Percept. Imaging 2022, 5, 5021. [Google Scholar] [CrossRef]

- Salvi, M.; Molinari, F.; Havaei, M.; Depeursinge, A. DermoCC-GAN: A new approach for standardizing dermatological images using generative adversarial networks. Comput. Methods Programs Biomed. 2022, 225, 107040. [Google Scholar] [CrossRef]

- Salvi, M.; Bassoli, S.; Molinari, F.; Havaei, M.; Depeursinge, A. Generative models for color normalization in pathology and dermatology. Expert Syst. Appl. 2024, 245, 123105. [Google Scholar] [CrossRef]

- Sharafudeen, M.; Andrew, J.; Chandra, S.S.V. Leveraging Vision Attention Transformers for Detection of Artificially Synthesized Dermoscopic Lesion Deepfakes Using Derm-CGAN. Diagnostics 2023, 13, 825. [Google Scholar] [CrossRef]

- Jütte, L.; González-Villà, S.; Quintana, J.; Steven, M.; Garcia, R.; Roth, B. Integrating generative AI with ABCDE rule analysis for enhanced skin cancer diagnosis, dermatologist training and patient education. Front. Med. 2024, 11, 1445318. [Google Scholar] [CrossRef] [PubMed]

- Veeramani, N.; Jayaraman, P. A promising AI based super resolution image reconstruction technique for early diagnosis of skin cancer. Sci. Rep. 2025, 15, 5084. [Google Scholar] [CrossRef] [PubMed]

- Zou, R.; Zhang, J.; Wu, Y. Skin Lesion Segmentation through Generative Adversarial Networks with Global and Local Semantic Feature Awareness. Electronics 2024, 13, 3853. [Google Scholar] [CrossRef]

- Albisua, J.; Pacheco, P. Límites éticos en el uso de la inteligencia artificial (IA) en medicina. Open Respir. Arch. 2025, 7, 100383. [Google Scholar] [CrossRef]

- Bleher, H.; Braun, M. Reflections on putting AI ethics into practice: How three AI ethics approaches conceptualize theory and practice. Sci. Eng. Ethics 2023, 29, 21. [Google Scholar] [CrossRef]

- Rezk, E.; Eltorki, M.; El-Dakhakhni, W. Leveraging Artificial Intelligence to Improve the Diversity of Dermatological Skin Color Pathology: Protocol for an Algorithm Development and Validation Study. JMIR Res. Protoc. 2022, 11, e34896. [Google Scholar] [CrossRef]

- Higuera González, I.D. Evaluación de la Precisión y Automatización de Técnicas de Machine Learning en la Predicción de Enfermedades Mediante Imágenes Médicas. Undergraduate Monograph, Universidad Nacional Abierta y a Distancia, Bogotá, Colombia, 2025. [Google Scholar]

- Redondo Hernández, S. Deep Learning para la Detección de Patologías de Cáncer de piel y Generación de Imágenes de Tejidos Humanos. Master’s Thesis, Universitat Politècnica de Catalunya and Universitat de Barcelona, Barcelona, Spain, 2020. Available online: https://upcommons.upc.edu/entities/publication/58aa1e22-041a-4456-bee0-f8fb040fad62 (accessed on 15 August 2025).

- Puri, P.; Shunatona, S.; Rosenbach, M.; Swerlick, R.; Anolik, R.; Chen, S.T.; Ko, C.J. Deep learning for dermatologists: Part II. Current applications. J. Am. Acad. Dermatol. 2022, 87, 1352–1360. [Google Scholar] [CrossRef]

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018. Available online: https://github.com/tkarras/progressive_growing_of_gans (accessed on 12 May 2025).

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014. [Google Scholar] [CrossRef]

- Paladugu, P.S.; Ong, J.; Nelson, N.; Kamran, S.A.; Waisberg, E.; Zaman, N.; Kumar, R.; Dias, R.D.; Lee, A.G.; Tavakkoli, A. Generative Adversarial Networks in Medicine: Important Considerations for this Emerging Innovation in Artificial Intelligence. Ann. Biomed. Eng. 2023, 51, 2130–2142. [Google Scholar] [CrossRef]

- Han, S.S.; Kim, M.S.; Lim, W.; Park, G.H.; Park, I.; Chang, S.E. Classification of the clinical images for benign and malignant cutaneous tumors using a deep learning algorithm. J. Investig. Dermatol. 2018, 138, 1529–1538. [Google Scholar] [CrossRef]

- AlSuwaidan, L. Deep learning based classification of dermatological disorders. Biomed. Eng. Comput. Biol. 2023, 14, 11795972221138470. [Google Scholar] [CrossRef]

- Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161. [Google Scholar] [CrossRef] [PubMed]

- Adamson, A.S.; Smith, A. Machine learning and health care disparities in dermatology. JAMA Dermatol. 2018, 154, 1247–1248. [Google Scholar] [CrossRef] [PubMed]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

- Sengupta, D. Artificial Intelligence in Diagnostic Dermatology: Challenges and the Way Forward. Indian Dermatol. Online J. 2023, 14, 782–787. [Google Scholar] [CrossRef] [PubMed]

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119. [Google Scholar]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2016, arXiv:1511.06434. [Google Scholar] [CrossRef]

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134. [Google Scholar]

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv 2017, arXiv:1701.07875. [Google Scholar]

- Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4217–4228. [Google Scholar] [CrossRef]

- Schlegl, T.; Seeböck, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. Inf. Process. Med. Imaging 2017, 10265, 146–157. [Google Scholar]

- Chartsias, A.; Joyce, T.; Dharmakumar, R.; Tsaftaris, S.A. Multimodal MR synthesis via modality-invariant latent representation. Med. Image Anal. 2018, 48, 118–131. [Google Scholar] [CrossRef]

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6626–6637. [Google Scholar]

- Bissoto, A.; Valle, E.; Avila, S. GAN-Based Data Augmentation and Anonymization for Skin-Lesion Analysis: A Critical Review. arXiv 2021, arXiv:2104.10603. [Google Scholar]

- Kim, Y.H.; Kobic, A.; Vidal, N.Y. Distribution of race and Fitzpatrick skin types in datasets for dermatology deep learning: A systematic review. J. Am. Acad. Dermatol. 2022, 87, 460–463. [Google Scholar] [CrossRef]

- Eken, E. Determining overfitting and underfitting in generative adversarial networks using Fréchet distance. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 1524–1538. [Google Scholar] [CrossRef]

- European Commission. Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act). 2021. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206 (accessed on 5 June 2025).

- European Parliament and Council. Regulation (EU) 2017/745 on Medical Devices. 2017. Available online: https://eur-lex.europa.eu/eli/reg/2017/745/oj/eng (accessed on 5 June 2025).

- US Food & Drug Administration. Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices (accessed on 5 June 2025).

- US Food & Drug Administration. Good Machine Learning Practice for Medical Device Development: Guiding Principles. 2021. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/good-machine-learning-practice-medical-device-development-guiding-principles (accessed on 5 June 2025).

- Fei, Z.; Ryeznik, Y.; Sverdlov, O.; Tan, C.W.; Wong, W.K. An overview of healthcare data analytics with applications to the COVID-19 pandemic. IEEE Trans. Big Data 2022, 8, 1463–1480. [Google Scholar] [CrossRef]

| Type of Study | Number of Articles | Authors and Year |
|---|---|---|
| Systematic and narrative reviews | 13 | Behara et al. (2024) [9], Daneshjou et al. (2022) [7], Furriel et al. (2024) [10], Hermosilla et al. (2024) [11], Iglesias-Puzas and Boixeda (2020) [12], Wongvibulsin et al. (2024) [4], Kourounis et al. (2023) [2], Liu et al. (2025) [13], Li et al. (2022) [5], Nazari and Garcia (2023) [14], Omiye et al. (2023) [3], Vardasca et al. (2024) [15], Yi et al. (2019) [8] |
| Methodological studies (development and validation of algorithms) | 20 | Alshardan et al. (2025) [16], Behara et al. (2023) [17], Carrasco Limeros et al. (2022) [18], Ding et al. (2021) [19], Esteva et al. (2017) [20], Efimenko et al. (2020) [21], Ghorbani et al. (2020) [22], Goceri (2024) [23], Innani et al. (2023) [24], Heenaye-Mamode Khan et al. (2022) [25], Perez and Ventura (2023) [26], Qin et al. (2020) [27], Ren et al. (2022) [28], Salvi et al. (2022) [29], Salvi et al. (2024) [30], Sharafudeen et al. (2023) [31], Jütte et al. (2024) [32], Veeramani and Jayaraman (2025) [33], Yi et al. (2019) [8], Zou et al. (2024) [34] |
| Ethical or regulatory studies | 3 | Albisua and Pacheco (2025) [35], Bleher and Braun (2023) [36], Rezk et al. (2022) [37] |
| Academic papers (FDP/MT) | 2 | Higuera González (2025) [38], Redondo Hernández (2020) [39] |
| Others (commentaries, proposals, and hybrid studies not focused on GANs) | 4 | Puri et al. (2022) [40], Karras et al. (2018) [41], Mirza and Osindero (2014) [42], Paladugu et al. (2023) [43] |

| Article | Type of Study | Objective | Method | Level of Evidence | Strengths | Limitations |
|---|---|---|---|---|---|---|
| Alshardan et al. (2025) [16] | Methodological study | Improve the analysis of class-imbalanced dermoscopic images | DCGAN to generate synthetic images; evaluation with ResNet50 | III | Good performance on minority classes | Only one dataset used; limited external validation |
| Behara et al. (2023) [17] | Methodological study | Synthesis and classification of skin lesions using an improved DCGAN | DCGAN with modified architecture + CNN classifier | III | Good classification performance after augmentation; tested on multiple lesion types | Limited dataset; no external validation |
| Carrasco Limeros et al. (2022) [18] | Comparative study | Evaluate different GAN models in dermatology | Comparison of StyleGAN and CycleGAN architectures | III | Extensive comparison using quantitative and qualitative metrics | Preprint, not peer reviewed |
| Ding et al. (2021) [19] | Methodological study | High-resolution dermoscopic image synthesis | cGAN with improved architecture for dermoscopic imaging | III | High quality of generated images; robust metrics | Evaluation limited to synthetic image quality, not clinical diagnosis |
| Ghorbani et al. (2020) [22] | Methodological study | Synthetic generation of clinical skin images with pathology | DermGAN (customized GAN with regularization to preserve pathological features) | III | Preservation of relevant clinical features; morphological diversity; educational potential | Not clinically validated; dataset limited to Stanford images |
| Goceri (2024) [23] | Methodological study | Augmentation of dermoscopy images using a hybrid loss function | GAN with combined loss function (pixel + perceptual) | III | Improved class balance; directly applicable to classifier models | Limited to a single GAN type and database |
| Innani et al. (2023) [24] | Methodological study | Lesion segmentation with GANs | Custom GAN with encoder–decoder architecture | III | Better delineation accuracy than U-Net | No comparison with models other than U-Net |
| Heenaye-Mamode Khan et al. (2022) [25] | Methodological study | Multiclass classification of skin diseases | DGAN (modified GAN) combined with CNN | III | Good multiclass performance; comprehensive metrics | No external validation |
| Karras et al. (2018) [41] | Foundational study | Improve the quality and stability of GAN training | Progressive GAN, in which generator and discriminator are grown progressively during training | IV | High synthetic image quality; major methodological impact | Not focused on dermatology |
| Mirza and Osindero (2014) [42] | Foundational study | Introduce the concept of the conditional GAN | Conditional GAN with class vector | IV | Cornerstone of cGANs | No direct application in health |
| Paladugu et al. (2023) [43] | Methodological review | Review of the use of cGANs in medicine | Narrative review with classification of applications | V | Broad topic coverage; useful for developers | Does not evaluate empirical evidence |
| Perez and Ventura (2023) [26] | Methodological study | Data augmentation for skin cancer diagnosis | Progressive growing GAN for dermoscopic image generation | III | Improved diagnostic accuracy using synthetic images | Assessment based on simulation only |
| Qin et al. (2020) [27] | Methodological study | Skin lesion classification using synthetic images | GAN for generation + CNN for classification | III | Improved sensitivity in classes with less data | Limited data; no clinical validation |
| Ren et al. (2022) [28] | Methodological study | Controllable generation of medical images | GAN with attribute control | III | Explicit control of variables in synthetic images | Not exclusively focused on dermatology |
| Salvi et al. (2022) [29] | Methodological study | Color standardization of dermatological images | DermoCC-GAN for visual standardization | III | Improved comparability of images across devices | Direct clinical impact not assessed |
| Salvi et al. (2024) [30] | Methodological study | Color standardization in pathology and dermatology | GANs with style transfer networks | III | Cross-cutting application in digital medicine | Not specific to dermatology |
| Sharafudeen et al. (2023) [31] | Methodological study | Detection of synthetic dermoscopic images (deepfakes) | Vision Transformer applied to images generated with Derm-CGAN | III | High precision; novel combination of technologies | Small sample size |
| Veeramani and Jayaraman (2025) [33] | Methodological study | High-resolution reconstruction of melanoma images | MELIIGAN (enhanced GAN model with residual blocks) | III | Preservation of fine detail; high objective metrics | Not tested in a clinical setting |
| Yi et al. (2019) [8] | Narrative review | Review of the use of GANs in medical imaging | Narrative review organized by task and architecture | V | Detailed overview; basis for future research | Does not directly evaluate clinical efficacy |
| Zou et al. (2024) [34] | Methodological study | Skin lesion segmentation using GANs with semantic awareness | GANs with global and local semantic feature-awareness modules | III | Significant improvement in segmentation metrics; innovative architecture | Validated on only two databases; no comparison with non-GAN methods |
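
The conditional GAN of Mirza and Osindero [42], listed above, conditions both networks on extra information such as a class label. The minimal sketch below illustrates only that conditioning step, assuming the simplest scheme: a one-hot class vector y appended to the latent noise z (generator input) and to a flattened image x (discriminator input). It is not the architecture of any study in the table; the function names, the 7 lesion classes, and the 100-dimensional latent space are hypothetical choices for illustration, and no network is trained.

```python
import random
from typing import List


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode a class index (e.g., a lesion type) as a one-hot vector y."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} outside [0, {num_classes})")
    y = [0.0] * num_classes
    y[label] = 1.0
    return y


def sample_noise(dim: int, rng: random.Random) -> List[float]:
    """Draw a latent vector z from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def generator_input(z: List[float], label: int, num_classes: int) -> List[float]:
    """The generator receives [z ; y]: noise plus the requested class."""
    return list(z) + one_hot(label, num_classes)


def discriminator_input(pixels: List[float], label: int, num_classes: int) -> List[float]:
    """The discriminator receives [x ; y]: a flattened image plus its class."""
    return list(pixels) + one_hot(label, num_classes)


if __name__ == "__main__":
    # Hypothetical setup: 7 lesion classes, 100-dimensional latent space.
    rng = random.Random(42)
    z = sample_noise(100, rng)
    g_in = generator_input(z, label=1, num_classes=7)
    print(len(g_in))  # 107 = 100 noise values + 7 class indicators
```

Because the same label vector accompanies both real and generated images, the discriminator judges image-label pairs rather than images alone, which is what allows a trained cGAN to be asked for images of a specific class, such as an under-represented lesion type.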

| Clinical Task | How a GAN Helps | Clinical Benefit | Examples of Apps and Tools |
|---|---|---|---|
| Data augmentation for AI | Generates realistic synthetic lesion images | Improves the performance of AI models even when training data are scarce | SkinVision, DermaSensor |
| Progression simulation | Visually predicts lesion progression | Assists in treatment planning and patient education | FotoFinder, 3Derm |
| Image reconstruction | Restores blurred or poorly illuminated images | Improves remote diagnosis and diagnosis from suboptimal images | ModMed EMA, Skinive |
| Image standardization | Corrects differences in illumination and contrast | Facilitates tracking and comparison between consultations | DermoCC-GAN, DermEngine |
| Lesion segmentation | Distinguishes the lesion from the background | Allows objective measurement of the affected area and clinical follow-up | SkinIO, SLSNet |
| AI-assisted classification | Improves the datasets used to train classifiers | Supports automated diagnosis and triage systems | SkinVision, DermaSensor |
| Medical education | Creates didactic images of rare cases | Enhances training of students and residents | Crisalix, TouchMD |
| Skin tone inclusion | Generates images of diverse skin tones | Reduces bias in AI and improves diagnostic fairness | Fitzpatrick 17k, SkinIO |
| Patient tools | Simulates evolution or improvement with treatment | Increases therapeutic adherence and patient understanding | Crisalix, ModMed Patient Portal |
| Esthetic/surgical simulation | Visualizes the effects of surgery or treatments | Improves communication and informed consent | Crisalix, MirrorMe3D |
| Clinical apps | Provides a basis for monitoring or self-monitoring | Supports chronic patient management and remote monitoring | Skinive, DermaSensor |
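
For the data augmentation and AI-assisted classification rows, a practical first step is deciding how many synthetic images to request per class. The sketch below assumes one common strategy, topping each minority class up to a target size (by default, the size of the largest class), plus an optional cap, `max_ratio`, on synthetic images per real image in a class. The function name, the cap, and the class counts are hypothetical and illustrative; they are not taken from any cited study or dataset.

```python
from typing import Dict, Optional


def synthetic_quota(
    class_counts: Dict[str, int],
    target: Optional[int] = None,
    max_ratio: Optional[float] = None,
) -> Dict[str, int]:
    """Number of GAN-generated images to add to each class.

    class_counts: real images available per class.
    target: desired images per class after augmentation; defaults to
        the size of the largest class.
    max_ratio: optional cap on synthetic images as a multiple of the real
        images in that class (a hypothetical safeguard, because a GAN
        trained on very few images has limited diversity).
    """
    if not class_counts:
        return {}
    if target is None:
        target = max(class_counts.values())
    quota = {}
    for name, n_real in class_counts.items():
        needed = max(0, target - n_real)  # classes at or above target get none
        if max_ratio is not None:
            needed = min(needed, int(max_ratio * n_real))
        quota[name] = needed
    return quota


if __name__ == "__main__":
    counts = {"nevus": 1200, "melanoma": 300, "dermatofibroma": 40}  # illustrative
    print(synthetic_quota(counts))
    # {'nevus': 0, 'melanoma': 900, 'dermatofibroma': 1160}
    print(synthetic_quota(counts, max_ratio=10))
    # {'nevus': 0, 'melanoma': 900, 'dermatofibroma': 400}
```

The cap reflects a design trade-off rather than a rule: filling a 40-image class with over a thousand synthetic images risks training a classifier on near-copies of the same few lesions, so a partially balanced dataset may generalize better than a perfectly balanced one.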

| Metric | What It Measures | Advantages | Limitations | Application in Dermatology |
|---|---|---|---|---|
| FID | Fréchet distance between Inception-v3 feature distributions of real and generated images | Sensitive to both quality and diversity; correlates with human perception | Depends on the feature extractor (Inception-v3 is trained on natural images, not medical data) | Widely used in dermatology GAN studies, although not domain-specific |
| SSIM | Structural similarity between two images | Captures differences in luminance, contrast, and structure | Does not consider semantic content or overall realism | Useful for direct comparison (e.g., reconstruction or super-resolution) |
| IS | Realism and diversity, estimated from a pretrained classifier’s predictions | Easy to implement | Does not compare with real data; biased by the base network | Less used in medicine than in natural imaging |
| PSNR | Pixel-wise error expressed as a peak signal-to-noise ratio | Simple and well known | Not perceptual; very sensitive to small pixel differences | Used in reconstruction and resolution enhancement tasks |
| MSE | Mean squared pixel difference between images | Intuitive and mathematically simple | Correlates poorly with perceived image quality | Similar to PSNR; useful when ground truth is available |
| LPIPS | Distance between deep network activations (perceptual) | High correlation with human perception; robust to small deformations | More computationally expensive | Well suited for comparing the realism of synthetic lesions |
| PPL | Perceptual path length, i.e., smoothness of the latent space | Evaluates interpolations in latent space, which matters for GANs | More useful for model evaluation than for direct image comparison | Indirect; useful during GAN development (e.g., StyleGAN) |
| Precision/Recall (distributional) | Fidelity (precision) vs. diversity (recall) of generated samples | Allows detection of mode collapse and overfitting | More complex to calculate | Useful when a variety of lesion types is sought |
| Mode Score | Realism and class coverage | Evaluates diversity when clear labels exist | Requires class annotations | Applicable to annotated datasets (e.g., lesion types) |
| Dice/Jaccard | Spatial overlap between masks or structures | Widely used in medical image segmentation | Only applicable when segmentation masks are available | Well suited to images with defined anatomical regions |
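
Several of the simpler metrics in the table reduce to a few lines of arithmetic. The pure-Python sketch below implements MSE, PSNR (assuming 8-bit images, so a peak value of 255), Dice, and Jaccard on flattened images or binary masks, plus a deliberately simplified one-dimensional analogue of the Fréchet distance behind FID. FID itself fits multivariate Gaussians to 2048-dimensional Inception-v3 features and computes ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^½); with a single feature this reduces to (μ_r − μ_g)² + (σ_r − σ_g)², which is what the toy function computes. Function names are illustrative, and the convention that two empty masks score 1 is an assumption; for published results, established library implementations are preferable.

```python
import math
import statistics
from typing import Sequence


def _check_same_length(a: Sequence, b: Sequence) -> None:
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared pixel difference between two flattened images."""
    _check_same_length(a, b)
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a: Sequence[float], b: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(a, b)
    return math.inf if err == 0 else 10.0 * math.log10(max_value ** 2 / err)


def dice(a: Sequence[int], b: Sequence[int]) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks (two empty masks score 1)."""
    _check_same_length(a, b)
    inter = sum(1 for x, y in zip(a, b) if x and y)
    size = sum(1 for x in a if x) + sum(1 for y in b if y)
    return 1.0 if size == 0 else 2.0 * inter / size


def jaccard(a: Sequence[int], b: Sequence[int]) -> float:
    """Jaccard = |A ∩ B| / |A ∪ B| for binary masks (two empty masks score 1)."""
    _check_same_length(a, b)
    inter = sum(1 for x, y in zip(a, b) if x and y)
    union = sum(1 for x, y in zip(a, b) if x or y)
    return 1.0 if union == 0 else inter / union


def frechet_1d(real: Sequence[float], fake: Sequence[float]) -> float:
    """Toy 1-D analogue of FID: Fréchet distance between Gaussians fitted to
    two samples of one feature, (mu_r - mu_g)^2 + (sd_r - sd_g)^2."""
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(fake)
    # Sample standard deviation; each input needs at least two values.
    sd_r, sd_g = statistics.stdev(real), statistics.stdev(fake)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


if __name__ == "__main__":
    print(mse([0, 0, 0, 0], [0, 0, 0, 2]))             # 1.0
    print(round(psnr([0, 0, 0, 0], [0, 0, 0, 2]), 2))  # 48.13
    print(dice([1, 1, 0, 0], [1, 0, 1, 0]))            # 0.5
    print(jaccard([1, 1, 0, 0], [1, 0, 1, 0]))         # 0.3333333333333333
    print(frechet_1d([0, 1, 2], [1, 2, 3]))            # 1.0
```

Lower MSE and FID and higher PSNR, Dice, and Jaccard indicate closer agreement. Dice and Jaccard are monotonically related (J = D / (2 − D)), so reporting both adds little information beyond either one.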

| Domain | European Union (EU) | United States (U.S.) |
|---|---|---|
| Primary Regulation | AI Act (2024, pending full implementation); Medical Device Regulation (MDR, 2017/745) | FDA regulations under the Federal Food, Drug, and Cosmetic Act and the Software as a Medical Device (SaMD) framework |
| Regulatory Authority | European Commission; National Competent Authorities; European Medicines Agency (EMA) | Food and Drug Administration (FDA); Center for Devices and Radiological Health (CDRH) |
| AI-specific Framework | AI Act classifies AI systems by risk (unacceptable, high, limited, minimal); GANs used in diagnosis are high-risk | The FDA does not yet have a specific AI law but follows guidance on Good Machine Learning Practice (GMLP) and SaMD categories |
| Classification Approach | Risk-based: GANs used for clinical decision-making would be “high-risk” under the AI Act and Class IIa or higher under the MDR | Function-based: depends on intended use, risk level, and degree of autonomy; GANs used in diagnosis likely fall under Class II or III |
| Validation and Safety | Strict MDR requirements for clinical evaluation, safety, and post-market surveillance | Requires premarket clearance or approval (510(k), De Novo, PMA) and validation through clinical evidence |
| Ethical Guidelines | European Ethics Guidelines for Trustworthy AI (2019); human oversight and transparency mandatory | AI-based tools must demonstrate safety, effectiveness, and transparency; bias mitigation is a growing focus (FDA AI/ML Action Plan) |
| Data Privacy and Governance | GDPR compliance required, including data minimization and patient consent | HIPAA compliance governs medical data use and patient privacy protection |
| Ongoing Initiatives | EU AI Liability Directive; European Health Data Space; EUDAMED platform | Digital Health Center of Excellence; AI/ML SaMD Action Plan; real-world performance monitoring frameworks |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Izu-Belloso, R.M.; Ibarrola-Altuna, R.; Rodriguez-Alonso, A. Generative Adversarial Networks in Dermatology: A Narrative Review of Current Applications, Challenges, and Future Perspectives. Bioengineering 2025, 12, 1113. https://doi.org/10.3390/bioengineering12101113