Multi-Shared-Task Self-Supervised CNN-LSTM for Monitoring Free-Body Movement UPDRS-III Using Wearable Sensors

Abstract

1. Introduction

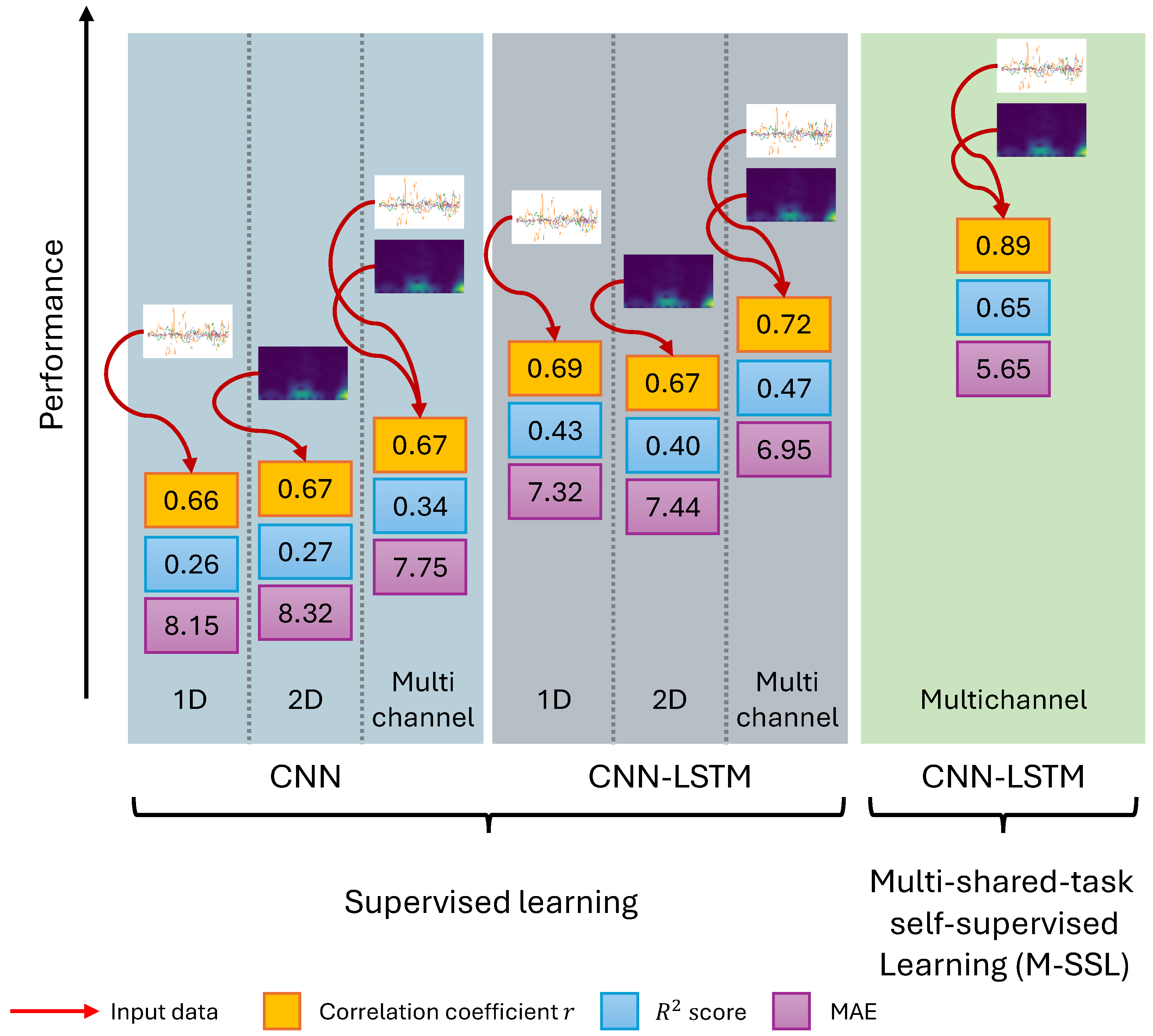

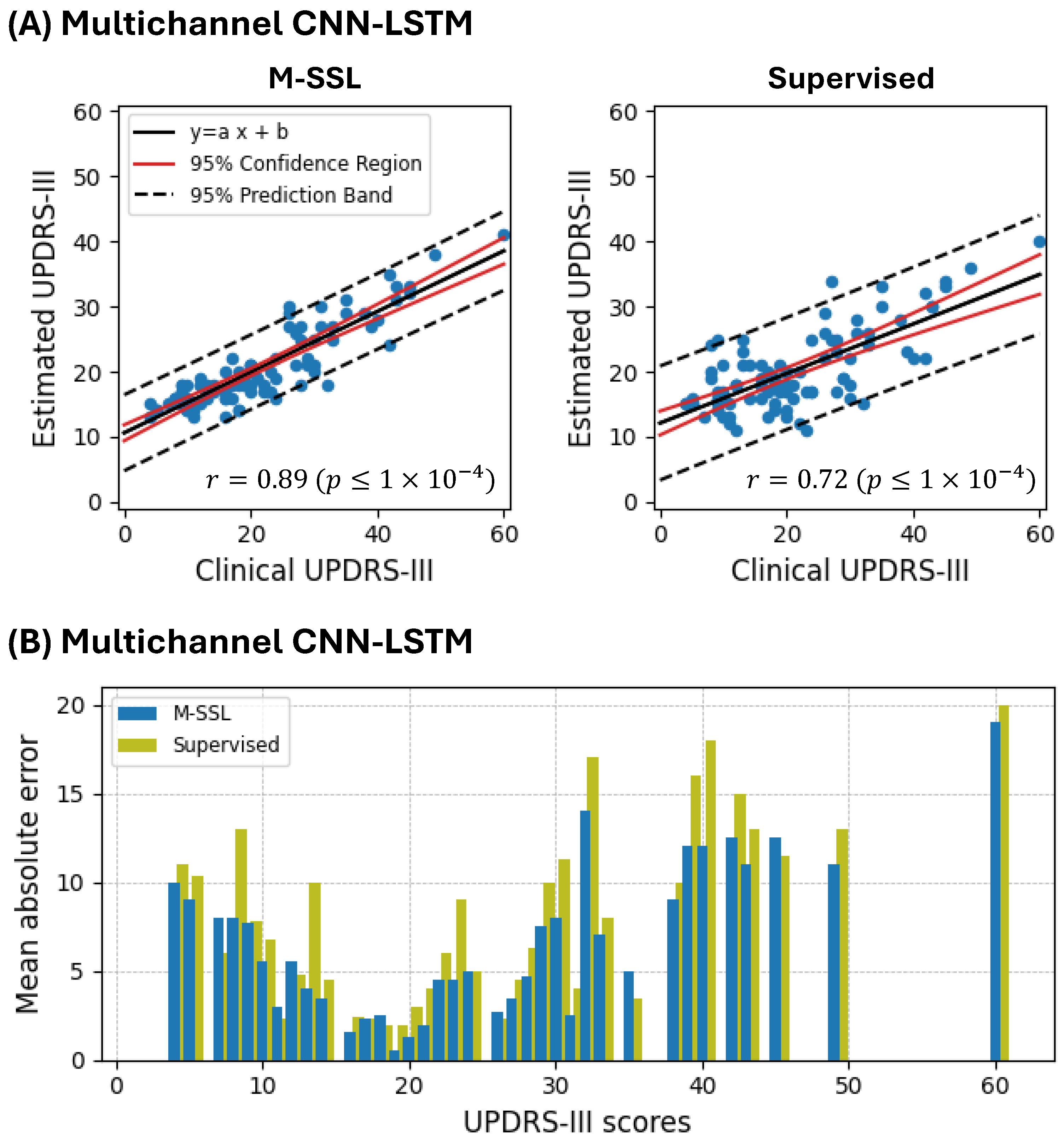

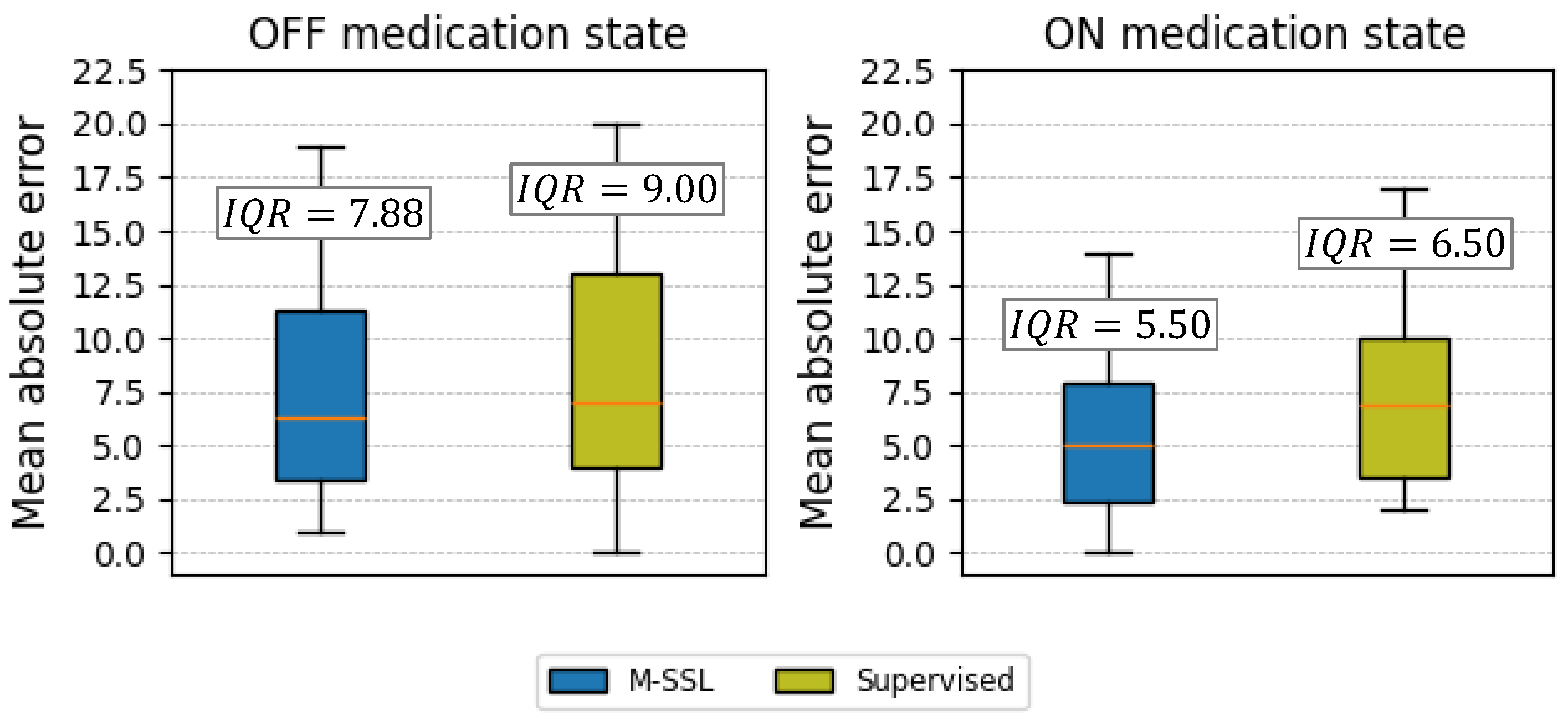

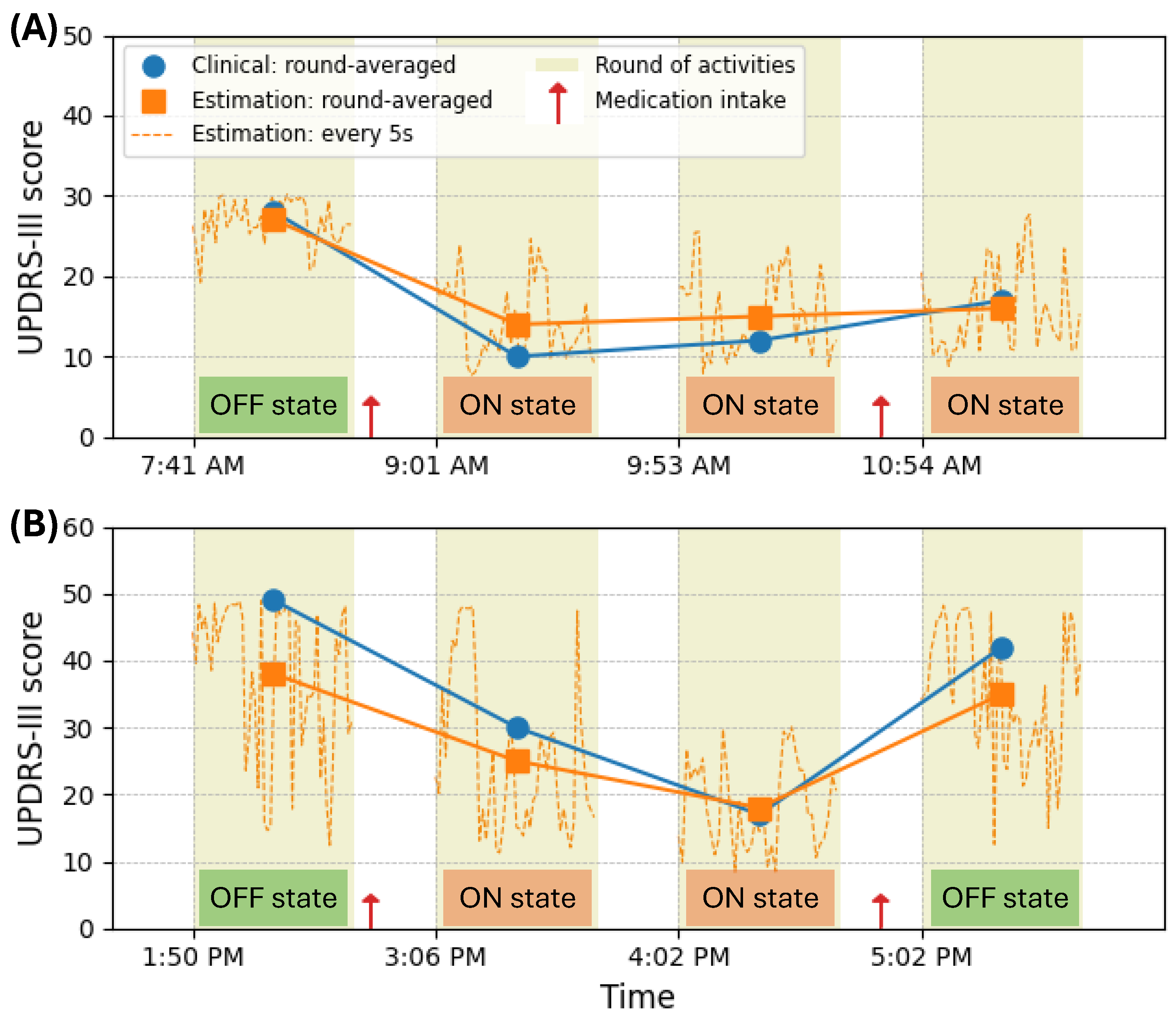

- We introduce a multichannel CNN-LSTM framework that processes raw gyroscope signals and their spectrogram representations in parallel. A 1D CNN branch handles the raw signals, a 2D CNN branch handles the spectrograms, and LSTM layers capture long-term dependencies across the extracted features. Extracting spatial and temporal features from both representations of PD patients’ movement data is intended to improve the accuracy of UPDRS-III score estimation and to address the complexity of PD symptom manifestation in sensor data.

- We extend SSL with a Multi-shared-task SSL (M-SSL) strategy. M-SSL uses unlabeled data to pre-train the multichannel CNN on several signal transformation recognition tasks, so that the model learns meaningful features from PD motion data without human annotation. For each transformation recognition task, layers are shared between the branches of the CNN, based on the congruence of spectrograms and raw signals. This sharing provides an additional mechanism for feature learning beyond traditional SSL applications in bioengineering.

- We systematically configure the multichannel CNN-LSTM network, including its convolutional blocks and LSTM layers, using Bayesian optimization. The configuration covers convolutional kernel sizes, pooling layers, dropout rates, and the LSTM layers used for sequence modeling. It is tailored to two objectives: learning signal representations and estimating UPDRS-III scores from complex sensor data.

2. Related Work

3. Materials and Methods

3.1. The Parkinson’s Disease Dataset

3.2. Data Preprocessing

3.3. The Utilized Deep Neural Networks Architectures

3.3.1. Convolutional Processing Branches

- Raw Signal Branch (ConvR): This branch processes the raw gyroscope signal component of the input. Its layers use 1D convolutional kernels, allowing the network to learn patterns directly from the raw signal data.

- Spectrogram Signal Branch (ConvS): This branch processes the spectrograms generated from the input gyroscope signal. Its layers use 2D convolutional kernels to extract features from the spectrograms, which represent the signal’s frequency content over time.
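The two branch inputs described above can be illustrated with a minimal NumPy sketch. It is not the authors' preprocessing: the sampling rate, window duration, STFT window length, and hop size are hypothetical values chosen for illustration, and the gyroscope data are synthetic. The sketch only shows how one signal window yields a raw array for a 1D-convolutional branch and a spectrogram array for a 2D-convolutional branch.

```python
import numpy as np

def spectrogram(signal, fs, win_len, hop):
    """Magnitude spectrogram of a 1-D signal via a Hann-windowed short-time FFT."""
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + win_len] * window for i in range(n_frames)]
    )
    mag = np.abs(np.fft.rfft(frames, axis=1))      # (n_frames, win_len // 2 + 1)
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)   # frequency of each bin in Hz
    return mag.T, freqs                            # (freq bins, time frames)

# Hypothetical settings, not taken from the paper.
fs = 64                                  # sampling rate in Hz
t = np.arange(0, 5, 1 / fs)              # one 5 s window -> 320 samples

# Synthetic 3-axis "gyroscope" window: a 5 Hz oscillation plus noise.
rng = np.random.default_rng(0)
raw = np.stack([np.sin(2 * np.pi * 5 * t + p) for p in (0, 1, 2)], axis=1)
raw = raw + 0.1 * rng.standard_normal(raw.shape)

# Input for a 1D-convolutional branch: (time steps, axes).
conv_r_input = raw

# Input for a 2D-convolutional branch: one spectrogram per axis,
# stacked as channels -> (freq bins, time frames, axes).
conv_s_input = np.stack(
    [spectrogram(raw[:, k], fs, win_len=64, hop=16)[0] for k in range(raw.shape[1])],
    axis=-1,
)

print(conv_r_input.shape, conv_s_input.shape)
```

With these assumed settings each frequency bin spans 1 Hz, so the synthetic 5 Hz oscillation concentrates its energy around bin 5 of every spectrogram.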

3.3.2. Multichannel CNN

3.3.3. LSTM Integration for Temporal Analysis

3.4. Multi-Shared-Task Self-Supervised Learning

3.4.1. Signal Representation Learning

- Rotation: This transformation applies a rotation with a random angle to the data. This enables the network to learn about different sensor placements.

- Permutation: This transformation randomly disrupts the temporal sequence within a data window by rearranging its segments. This allows the network to learn about the varying temporal positions of symptoms within the window.

- Time warping: This transformation perturbs the temporal pattern of the data using a smooth warping path or a randomly located fixed window, which distorts the time intervals between samples. This allows the network to learn about changes in the temporal spacing of the samples.
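The three transformations listed above can be sketched in NumPy as follows. This is a sketch under stated assumptions, not the authors' implementation: the function names, the segment count, the warping strength `sigma`, the knot count, and the binary "original vs. transformed" labeling of each recognition task are all hypothetical choices for illustration. Only the smooth-path variant of time warping is shown.

```python
import numpy as np

def rotate(x, rng, max_angle=np.pi):
    """Rotate a (T, 3) window about a random axis by a random angle (Rodrigues' formula)."""
    axis = rng.standard_normal(3)
    axis /= np.linalg.norm(axis)
    angle = rng.uniform(-max_angle, max_angle)
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    R = np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * (K @ K)
    return x @ R.T

def permute(x, rng, n_segments=4):
    """Split the window into segments along time and shuffle their order."""
    segments = np.array_split(x, n_segments, axis=0)
    order = rng.permutation(n_segments)
    return np.concatenate([segments[i] for i in order], axis=0)

def time_warp(x, rng, sigma=0.2, n_knots=4):
    """Resample the window along a smooth, monotonically increasing random time path."""
    T = x.shape[0]
    knot_pos = np.linspace(0, T - 1, n_knots + 2)
    knot_speed = np.clip(rng.normal(1.0, sigma, n_knots + 2), 0.1, None)
    speed = np.interp(np.arange(T), knot_pos, knot_speed)     # positive local speed
    path = np.cumsum(speed)
    path = (path - path[0]) / (path[-1] - path[0]) * (T - 1)  # rescale to [0, T-1]
    return np.stack(
        [np.interp(path, np.arange(T), x[:, k]) for k in range(x.shape[1])], axis=1
    )

TRANSFORMS = {"rotation": rotate, "permutation": permute, "time_warping": time_warp}

def make_pretext_pairs(windows, rng):
    """Per task, return (inputs, labels); label 0 = original, 1 = transformed (assumed scheme)."""
    tasks = {}
    for name, fn in TRANSFORMS.items():
        transformed = np.stack([fn(w, rng) for w in windows])
        inputs = np.concatenate([windows, transformed])
        labels = np.concatenate([np.zeros(len(windows)), np.ones(len(windows))])
        tasks[name] = (inputs, labels)
    return tasks

# Usage on synthetic data: 4 unlabeled windows of 320 samples x 3 axes.
rng = np.random.default_rng(0)
windows = rng.standard_normal((4, 320, 3))
tasks = make_pretext_pairs(windows, rng)
for name, (inputs, labels) in tasks.items():
    print(name, inputs.shape, labels.shape)
```

Because the labels come from the transformations themselves, no clinical annotation is needed to build these training pairs, which is the property that allows pre-training on unlabeled recordings.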

3.4.2. Target Task: The Estimation of UPDRS-III Score

3.5. Model Hyperparameters

3.5.1. Signal Representation Learning Network

3.5.2. UPDRS-III Estimation Network

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PD | Parkinson’s Disease |
| UPDRS | Unified Parkinson’s Disease Rating Scale |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| SSL | Self-supervised Learning |
| M-SSL | Multi-shared-task Self-supervised Learning |
| ADL | Activities of Daily Living |
| FIR | Finite Impulse Response |
| MAE | Mean Absolute Error |
| IQR | Interquartile Range |

References

- Bloem, B.R.; Okun, M.S.; Klein, C. Parkinson’s disease. Lancet 2021, 397, 2284–2303.

- Sveinbjornsdottir, S. The clinical symptoms of Parkinson’s disease. J. Neurochem. 2016, 139, 318–324.

- Ramaker, C.; Marinus, J.; Stiggelbout, A.M.; Van Hilten, B.J. Systematic evaluation of rating scales for impairment and disability in Parkinson’s disease. Mov. Disord. Off. J. Mov. Disord. Soc. 2002, 17, 867–876.

- Goyal, J.; Khandnor, P.; Aseri, T.C. Classification, prediction, and monitoring of Parkinson’s disease using computer assisted technologies: A comparative analysis. Eng. Appl. Artif. Intell. 2020, 96, 103955.

- Shuqair, M.; Jimenez-Shahed, J.; Ghoraani, B. Incremental learning in time-series data using reinforcement learning. In Proceedings of the 2022 IEEE International Conference on Data Mining Workshops (ICDMW), Orlando, FL, USA, 28 November–1 December 2022; pp. 868–875.

- Moshfeghi, S.; Jan, M.T.; Conniff, J.; Ghoreishi, S.G.A.; Jang, J.; Furht, B.; Yang, K.; Rosselli, M.; Newman, D.; Tappen, R.; et al. In-Vehicle Sensing and Data Analysis for Older Drivers with Mild Cognitive Impairment. In Proceedings of the 2023 IEEE 20th International Conference on Smart Communities: Improving Quality of Life Using AI, Robotics and IoT (HONET), Boca Raton, FL, USA, 4–6 December 2023; pp. 140–145.

- Espay, A.J.; Hausdorff, J.M.; Sánchez-Ferro, Á.; Klucken, J.; Merola, A.; Bonato, P.; Paul, S.S.; Horak, F.B.; Vizcarra, J.A.; Mestre, T.A.; et al. A roadmap for implementation of patient-centered digital outcome measures in Parkinson’s disease obtained using mobile health technologies. Mov. Disord. 2019, 34, 657–663.

- Memar, S.; Delrobaei, M.; Pieterman, M.; McIsaac, K.; Jog, M. Quantification of whole-body bradykinesia in Parkinson’s disease participants using multiple inertial sensors. J. Neurol. Sci. 2018, 387, 157–165.

- Dai, H.; Cai, G.; Lin, Z.; Wang, Z.; Ye, Q. Validation of inertial sensing-based wearable device for tremor and bradykinesia quantification. IEEE J. Biomed. Health Inform. 2020, 25, 997–1005.

- Mahadevan, N.; Demanuele, C.; Zhang, H.; Volfson, D.; Ho, B.; Erb, M.K.; Patel, S. Development of digital biomarkers for resting tremor and bradykinesia using a wrist-worn wearable device. NPJ Digit. Med. 2020, 3, 5.

- Fiska, V.; Giannakeas, N.; Katertsidis, N.; Tzallas, A.T.; Kalafatakis, K.; Tsipouras, M.G. Motor data analysis of Parkinson’s disease patients. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; pp. 946–950.

- Kim, H.B.; Lee, W.W.; Kim, A.; Lee, H.J.; Park, H.Y.; Jeon, H.S.; Kim, S.K.; Jeon, B.; Park, K.S. Wrist sensor-based tremor severity quantification in Parkinson’s disease using convolutional neural network. Comput. Biol. Med. 2018, 95, 140–146.

- Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Wearable sensors for estimation of parkinsonian tremor severity during free body movements. Sensors 2019, 19, 4215.

- Moon, S.; Song, H.J.; Sharma, V.D.; Lyons, K.E.; Pahwa, R.; Akinwuntan, A.E.; Devos, H. Classification of Parkinson’s disease and essential tremor based on balance and gait characteristics from wearable motion sensors via machine learning techniques: A data-driven approach. J. Neuroeng. Rehabil. 2020, 17, 1–8.

- Vescio, B.; De Maria, M.; Crasà, M.; Nisticò, R.; Calomino, C.; Aracri, F.; Quattrone, A.; Quattrone, A. Development of a New Wearable Device for the Characterization of Hand Tremor. Bioengineering 2023, 10, 1025.

- Rodríguez-Molinero, A.; Pérez-López, C.; Samà, A.; Rodríguez-Martín, D.; Alcaine, S.; Mestre, B.; Quispe, P.; Giuliani, B.; Vainstein, G.; Browne, P.; et al. Estimating dyskinesia severity in Parkinson’s disease by using a waist-worn sensor: Concurrent validity study. Sci. Rep. 2019, 9, 13434.

- Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Dyskinesia estimation during activities of daily living using wearable motion sensors and deep recurrent networks. Sci. Rep. 2021, 11, 7865.

- Zampogna, A.; Borzì, L.; Rinaldi, D.; Artusi, C.A.; Imbalzano, G.; Patera, M.; Lopiano, L.; Pontieri, F.; Olmo, G.; Suppa, A. Unveiling the Unpredictable in Parkinson’s Disease: Sensor-Based Monitoring of Dyskinesias and Freezing of Gait in Daily Life. Bioengineering 2024, 11, 440.

- Nilashi, M.; Ibrahim, O.; Ahmadi, H.; Shahmoradi, L.; Farahmand, M. A hybrid intelligent system for the prediction of Parkinson’s Disease progression using machine learning techniques. Biocybern. Biomed. Eng. 2018, 38, 1–15.

- Zhan, A.; Mohan, S.; Tarolli, C.; Schneider, R.B.; Adams, J.L.; Sharma, S.; Elson, M.J.; Spear, K.L.; Glidden, A.M.; Little, M.A.; et al. Using smartphones and machine learning to quantify Parkinson disease severity: The mobile Parkinson disease score. JAMA Neurol. 2018, 75, 876–880.

- Butt, A.H.; Rovini, E.; Fujita, H.; Maremmani, C.; Cavallo, F. Data-driven models for objective grading improvement of Parkinson’s disease. Ann. Biomed. Eng. 2020, 48, 2976–2987.

- Sotirakis, C.; Su, Z.; Brzezicki, M.A.; Conway, N.; Tarassenko, L.; FitzGerald, J.J.; Antoniades, C.A. Identification of motor progression in Parkinson’s disease using wearable sensors and machine learning. Npj Park. Dis. 2023, 9, 142.

- Ghoraani, B.; Galvin, J.E.; Jimenez-Shahed, J. Point of view: Wearable systems for at-home monitoring of motor complications in Parkinson’s disease should deliver clinically actionable information. Park. Relat. Disord. 2021, 84, 35–39.

- Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Ensemble deep model for continuous estimation of Unified Parkinson’s Disease Rating Scale III. Biomed. Eng. Online 2021, 20, 1–20.

- Rehman, R.Z.U.; Rochester, L.; Yarnall, A.J.; Del Din, S. Predicting the progression of Parkinson’s disease MDS-UPDRS-III motor severity score from gait data using deep learning. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 249–252.

- Mera, T.O.; Burack, M.A.; Giuffrida, J.P. Objective motion sensor assessment highly correlated with scores of global levodopa-induced dyskinesia in Parkinson’s disease. J. Park. Dis. 2013, 3, 399–407.

- Pulliam, C.L.; Heldman, D.A.; Brokaw, E.B.; Mera, T.O.; Mari, Z.K.; Burack, M.A. Continuous assessment of levodopa response in Parkinson’s disease using wearable motion sensors. IEEE Trans. Biomed. Eng. 2017, 65, 159–164.

- Dai, H.; Zhang, P.; Lueth, T.C. Quantitative assessment of parkinsonian tremor based on an inertial measurement unit. Sensors 2015, 15, 25055–25071.

- Shawen, N.; O’Brien, M.K.; Venkatesan, S.; Lonini, L.; Simuni, T.; Hamilton, J.L.; Ghaffari, R.; Rogers, J.A.; Jayaraman, A. Role of data measurement characteristics in the accurate detection of Parkinson’s disease symptoms using wearable sensors. J. Neuroeng. Rehabil. 2020, 17, 1–14.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Brownlee, J. Deep Learning for Natural Language Processing: Develop Deep Learning Models for Your Natural Language Problems; Machine Learning Mastery: Vermont, Australia, 2017.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. IEEE Trans. Knowl. Data Eng. 2021, 35, 857–876.

- Saeed, A.; Ozcelebi, T.; Lukkien, J. Multi-task self-supervised learning for human activity detection. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–30.

- Um, T.T.; Pfister, F.M.; Pichler, D.; Endo, S.; Lang, M.; Hirche, S.; Fietzek, U.; Kulić, D. Data augmentation of wearable sensor data for parkinson’s disease monitoring using convolutional neural networks. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 216–220.

- Owen, A.B. A robust hybrid of lasso and ridge regression. Contemp. Math. 2007, 443, 59–72.

- Shuqair, M. Multi-Shared-Task Self-Supervised CNN-LSTM. 2024. Available online: https://github.com/mshuqair/Multi-shared-task-Self-supervised-CNN-LSTM (accessed on 28 June 2024).

- Pfister, F.M.; Um, T.T.; Pichler, D.C.; Goschenhofer, J.; Abedinpour, K.; Lang, M.; Endo, S.; Ceballos-Baumann, A.O.; Hirche, S.; Bischl, B.; et al. High-resolution motor state detection in Parkinson’s disease using convolutional neural networks. Sci. Rep. 2020, 10, 5860.

- Mekruksavanich, S.; Jitpattanakul, A. A multichannel cnn-lstm network for daily activity recognition using smartwatch sensor data. In Proceedings of the 2021 Joint International Conference on Digital Arts, Media and Technology with ECTI Northern Section Conference on Electrical, Electronics, Computer and Telecommunication Engineering, Cha-am, Thailand, 3–6 March 2021; pp. 277–280.

- Koşar, E.; Barshan, B. A new CNN-LSTM architecture for activity recognition employing wearable motion sensor data: Enabling diverse feature extraction. Eng. Appl. Artif. Intell. 2023, 124, 106529.

- Parisi, F.; Ferrari, G.; Giuberti, M.; Contin, L.; Cimolin, V.; Azzaro, C.; Albani, G.; Mauro, A. Body-sensor-network-based kinematic characterization and comparative outlook of UPDRS scoring in leg agility, sit-to-stand, and gait tasks in Parkinson’s disease. IEEE J. Biomed. Health Inform. 2015, 19, 1777–1793.

- Goetz, C.G.; Tilley, B.C.; Shaftman, S.R.; Stebbins, G.T.; Fahn, S.; Martinez-Martin, P.; Poewe, W.; Sampaio, C.; Stern, M.B.; Dodel, R.; et al. Movement Disorder Society-sponsored revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS): Scale presentation and clinimetric testing results. Mov. Disord. Off. J. Mov. Disord. Soc. 2008, 23, 2129–2170.

- Merello, M.; Gerschcovich, E.R.; Ballesteros, D.; Cerquetti, D. Correlation between the Movement Disorders Society Unified Parkinson’s Disease rating scale (MDS-UPDRS) and the Unified Parkinson’s Disease rating scale (UPDRS) during L-dopa acute challenge. Park. Relat. Disord. 2011, 17, 705–707.

| Participant Attributes | Value/Mean ± std |
|---|---|
| Total number | 24 |
| Sex (male, female) | |
| Age (years) | |
| Disease duration (years) | |
| UPDRS-III prior to medication | |
| UPDRS-III after medication | |
| Levodopa equivalent daily dose LEDD (mg) | |

| Method | Dataset | No. of Sensors | Method’s Input | Activities | r | MAE | RMSE |
|---|---|---|---|---|---|---|---|
| Zhan et al. [20] | Theirs | 1 | Features extracted from smartphone data | 5 smartphone tasks | − | − | − |
| Butt et al. [21] | Theirs | 2 | Features extracted from accelerometer and gyroscope | 12 MDS-UPDRS-III-specific tasks | − | − | − |
| Sotirakis et al. [22] | Theirs | 6 | Features extracted from accelerometer and gyroscope | Walking and postural sway | − | − | − |
| Rehman et al. [25] | Theirs | 1 | Accelerometer raw | Walking | − | − | − |
| Hssayeni et al. [24] | Ours | 2 | Gyroscope raw and spectrograms | 7 ADL | | | |
| Rehman et al. [25] | Ours | 2 | Accelerometer raw | 7 ADL | | | |
| Proposed M-SSL multichannel CNN-LSTM | Ours | 2 | Gyroscope raw and spectrograms | 7 ADL | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shuqair, M.; Jimenez-Shahed, J.; Ghoraani, B. Multi-Shared-Task Self-Supervised CNN-LSTM for Monitoring Free-Body Movement UPDRS-III Using Wearable Sensors. Bioengineering 2024, 11, 689. https://doi.org/10.3390/bioengineering11070689
