Pixel-Wise Interstitial Lung Disease Interval Change Analysis: A Quantitative Evaluation Method for Chest Radiographs Using Weakly Supervised Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Datasets

2.3. Image Acquisition

2.4. Reference Standard

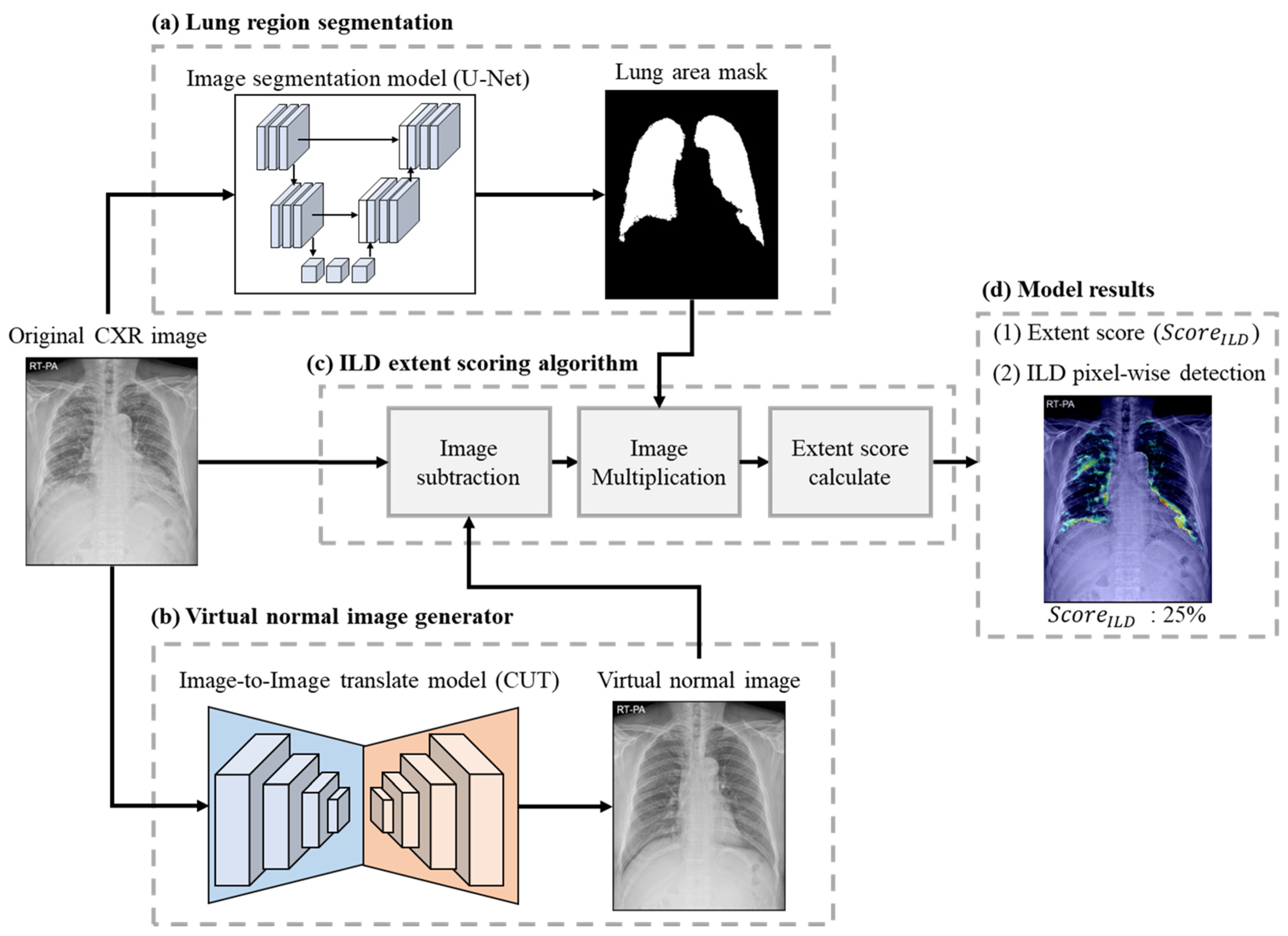

2.5. Model Structure

2.5.1. Lung Area Segmentation

2.5.2. Virtual Normal Image Generator

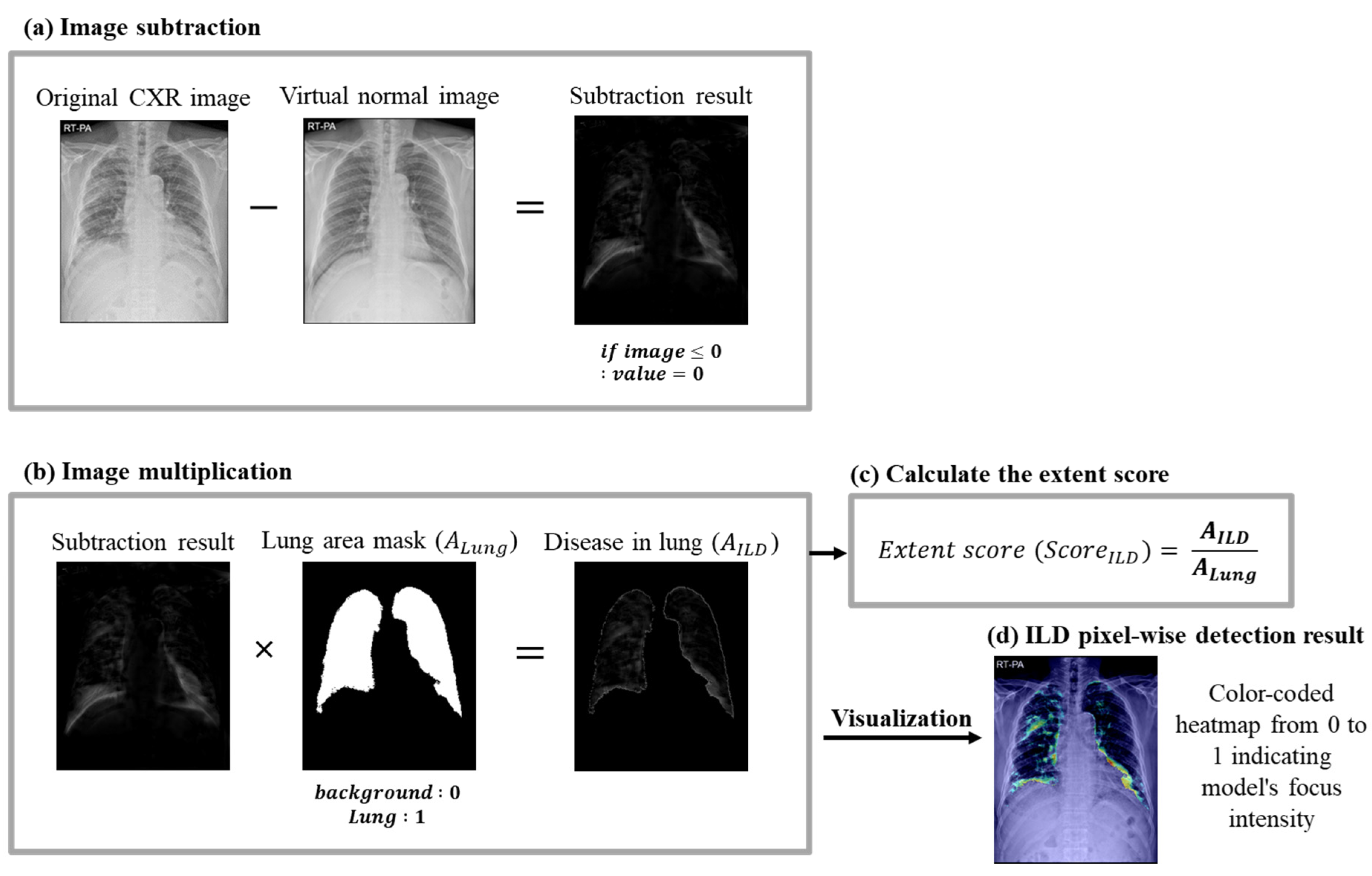

2.5.3. ILD Extent Scoring Algorithm

2.6. Development Environment

2.7. Model Evaluation

3. Results

3.1. Lung Area Segmentation Performance

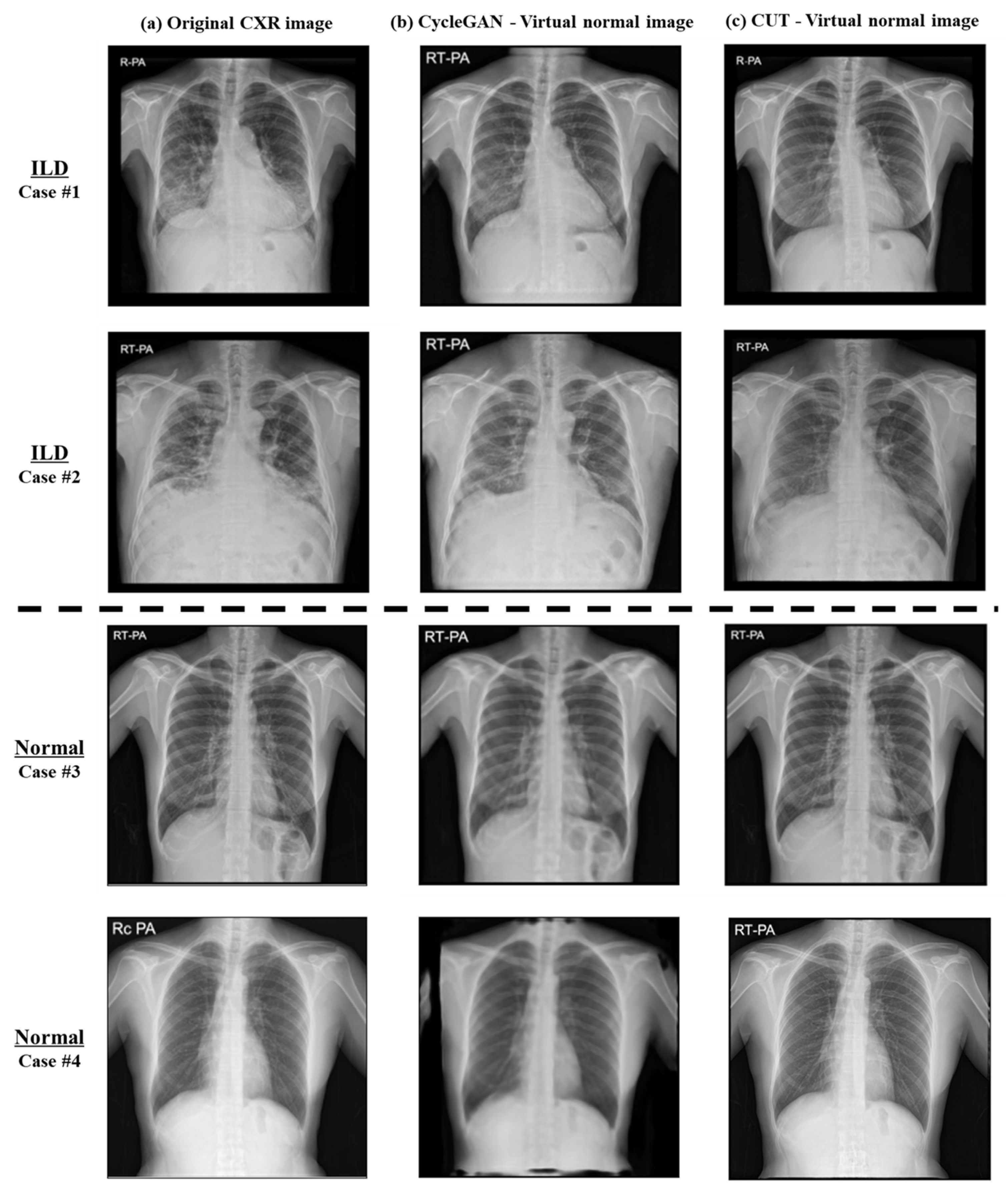

3.2. Image-to-Image Translation Fidelity

3.3. ILD Classification Accuracy

3.4. ILD Interval Change Classification Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kolb, M.; Vašáková, M. The natural history of progressive fibrosing interstitial lung diseases. Respir. Res. 2019, 20, 57.

2. Raghu, G.; Remy-Jardin, M.; Richeldi, L.; Thomson, C.C.; Inoue, Y.; Johkoh, T.; Kreuter, M.; Lynch, D.A.; Maher, T.M.; Martinez, F.J.; et al. Idiopathic Pulmonary Fibrosis (an Update) and Progressive Pulmonary Fibrosis in Adults: An Official ATS/ERS/JRS/ALAT Clinical Practice Guideline. Am. J. Respir. Crit. Care Med. 2022, 205, e18–e47.

3. Flaherty, K.R.; Wells, A.U.; Cottin, V.; Devaraj, A.; Walsh, S.L.F.; Inoue, Y.; Richeldi, L.; Kolb, M.; Tetzlaff, K.; Stowasser, S.; et al. Nintedanib in Progressive Fibrosing Interstitial Lung Diseases. N. Engl. J. Med. 2019, 381, 1718–1727.

4. Ghodrati, S.; Pugashetti, J.V.; Kadoch, M.A.; Ghasemiesfe, A.; Oldham, J.M. Diagnostic Accuracy of Chest Radiography for Detecting Fibrotic Interstitial Lung Disease. Ann. Am. Thorac. Soc. 2022, 19, 1934–1937.

5. Exarchos, K.P.; Gkrepi, G.; Kostikas, K.; Gogali, A. Recent Advances of Artificial Intelligence Applications in Interstitial Lung Diseases. Diagnostics 2023, 13, 2303.

6. Park, S.; Lee, S.M.; Lee, K.H.; Jung, K.H.; Bae, W.; Choe, J.; Seo, J.B. Deep learning-based detection system for multiclass lesions on chest radiographs: Comparison with observer readings. Eur. Radiol. 2020, 30, 1359–1368.

7. Nam, J.G.; Kim, M.; Park, J.; Hwang, E.J.; Lee, J.H.; Hong, J.H.; Goo, J.M.; Park, C.M. Development and validation of a deep learning algorithm detecting 10 common abnormalities on chest radiographs. Eur. Respir. J. 2021, 57, 2003061.

8. Sung, J.; Park, S.; Lee, S.M.; Bae, W.; Park, B.; Jung, E.; Seo, J.B.; Jung, K.H. Added Value of Deep Learning-based Detection System for Multiple Major Findings on Chest Radiographs: A Randomized Crossover Study. Radiology 2021, 299, 450–459.

9. Kim, W.; Lee, S.M.; Kim, J.I.; Ahn, Y.; Park, S.; Choe, J.; Seo, J.B. Utility of a Deep Learning Algorithm for Detection of Reticular Opacity on Chest Radiography in Patients with Interstitial Lung Disease. AJR Am. J. Roentgenol. 2022, 218, 642–650.

10. Nishikiori, H.; Kuronuma, K.; Hirota, K.; Yama, N.; Suzuki, T.; Onodera, M.; Onodera, K.; Ikeda, K.; Mori, Y.; Asai, Y.; et al. Deep-learning algorithm to detect fibrosing interstitial lung disease on chest radiographs. Eur. Respir. J. 2023, 61, 2102269.

11. Barnes, H.; Humphries, S.M.; George, P.M.; Assayag, D.; Glaspole, I.; Mackintosh, J.A.; Corte, T.J.; Glassberg, M.; Johannson, K.A.; Calandriello, L. Machine learning in radiology: The new frontier in interstitial lung diseases. Lancet Digit. Health 2023, 5, e41–e50.

12. Kim, G.H.J.; Goldin, J.G.; Hayes, W.; Oh, A.; Soule, B.; Du, S. The value of imaging and clinical outcomes in a phase II clinical trial of a lysophosphatidic acid receptor antagonist in idiopathic pulmonary fibrosis. Ther. Adv. Respir. Dis. 2021, 15, 17534666211004238.

13. Lancaster, L.; Goldin, J.; Trampisch, M.; Kim, G.H.; Ilowite, J.; Homik, L.; Hotchkin, D.L.; Kaye, M.; Ryerson, C.J.; Mogulkoc, N. Effects of nintedanib on quantitative lung fibrosis score in idiopathic pulmonary fibrosis. Open Respir. Med. J. 2020, 14, 22.

14. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In 2017 IEEE International Conference on Computer Vision (ICCV); IEEE: Piscataway, NJ, USA, 2017.

15. Moujahid, H.; Cherradi, B.; Al-Sarem, M.; Bahatti, L.; Eljialy, A.B.A.M.Y.; Alsaeedi, A.; Saeed, F. Combining CNN and Grad-Cam for COVID-19 Disease Prediction and Visual Explanation. Intell. Autom. Soft Comput. 2022, 32, 723–745.

16. Oh, Y.; Park, S.; Ye, J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imaging 2020, 39, 2688–2700.

17. Devnath, L.; Fan, Z.; Luo, S.; Summons, P.; Wang, D. Detection and visualisation of pneumoconiosis using an ensemble of multi-dimensional deep features learned from Chest X-rays. Int. J. Environ. Res. Public Health 2022, 19, 11193.

18. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

19. DeGrave, A.J.; Janizek, J.D.; Lee, S.-I. AI for radiographic COVID-19 detection selects shortcuts over signal. Nat. Mach. Intell. 2021, 3, 610–619.

20. Li, C.; Zhang, Y.; Li, J.; Huang, Y.; Ding, X. Unsupervised anomaly segmentation using image-semantic cycle translation. arXiv 2021, arXiv:2103.09094.

21. Park, T.; Efros, A.A.; Zhang, R.; Zhu, J.-Y. Contrastive Learning for Unpaired Image-to-Image Translation. 2020. Available online: https://arxiv.org/abs/2007.15651 (accessed on 1 March 2024).

22. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. 2015. Available online: https://arxiv.org/abs/1505.04597 (accessed on 1 March 2024).

23. Pizer, S.M.; Johnston, R.E.; Ericksen, J.P.; Yankaskas, B.C.; Muller, K.E. Contrast-limited adaptive histogram equalization: Speed and effectiveness. In Proceedings of the First Conference on Visualization in Biomedical Computing, Atlanta, GA, USA, 22–25 May 1990; Available online: https://ieeexplore.ieee.org/document/109340 (accessed on 1 March 2024).

24. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

25. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

26. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

28. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

29. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

30. Hobbs, S.; Chung, J.H.; Leb, J.; Kaproth-Joslin, K.; Lynch, D.A. Practical Imaging Interpretation in Patients Suspected of Having Idiopathic Pulmonary Fibrosis: Official Recommendations from the Radiology Working Group of the Pulmonary Fibrosis Foundation. Radiol. Cardiothorac. Imaging 2021, 3, e200279.

31. Akram, F.; Hussain, S.; Ali, A.; Javed, H.; Fayyaz, M.; Ahmed, K. Diagnostic Accuracy Of Chest Radiograph In Interstitial Lung Disease As Confirmed By High Resolution Computed Tomography (HRCT) Chest. J. Ayub Med. Coll. Abbottabad 2022, 34 (Suppl. S1), S1008–S1012.

32. Hoyer, N.; Prior, T.S.; Bendstrup, E.; Wilcke, T.; Shaker, S.B. Risk factors for diagnostic delay in idiopathic pulmonary fibrosis. Respir. Res. 2019, 20, 103.

| Study | Clinical Application | Machine Learning Method |
|---|---|---|
| Park et al. [6] | Feasibility of DL-based detection system for multiclass lesions (nodule/mass, interstitial opacity, pleural effusion, and pneumothorax) | Multitask CNN |
| Nam et al. [7] | DL algorithm detecting 10 common abnormalities (pneumothorax, mediastinal widening, pneumoperitoneum, nodule/mass, consolidation, pleural effusion, linear atelectasis, fibrosis, calcification, and cardiomegaly) | ResNet34-based deep CNN |
| Sung et al. [8] | Comparison of observer performance in detecting and localizing major abnormal findings (nodules, consolidation, interstitial opacity, pleural effusion, and pneumothorax) with/without DL-based detection system | DL algorithm (VUNO Med-Chest X-ray, version 1.0.0) |
| Kim et al. [9] | Evaluation of the utility of a DL algorithm for detection of reticular opacity on chest radiographs of patients with surgically confirmed ILD | DL algorithm (VUNO Med-Chest X-ray, version 1.0.0) |
| Nishikiori et al. [10] | DL algorithm to detect chronic fibrosing ILDs | DenseNet-based deep CNN |

| Characteristic | Training: ILD | Training: Normal | Evaluation: ILD (F/U) | Evaluation: Normal |
|---|---|---|---|---|
| Number of cases | 5266 | 5266 | 397 | 500 |
| Sex (F/M) | 2284/2982 | 3264/2002 | 183/214 | 257/243 |
| Age, years (mean ± SD) | 65.5 ± 10.4 | 52.7 ± 15.6 | 64.1 ± 8.5 | 52.9 ± 15.7 |

| Dataset category | CycleGAN SSIM | CycleGAN PSNR | CUT SSIM | CUT PSNR |
|---|---|---|---|---|
| Normal | 0.88 | 23.68 | 0.97 | 36.43 |
| ILD | 0.71 | 18.46 | 0.90 | 26.61 |
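The SSIM and PSNR values above are image-similarity measures. As a rough illustration of what they quantify, the following is a minimal sketch under stated assumptions, not the evaluation code used in the study. The function names `psnr` and `global_ssim` are hypothetical, 8-bit images (`data_range=255`) are assumed, and the SSIM here is a simplified single-window (global) variant. Standard SSIM averages the same expression over local windows, and the source does not state which image pairs or window settings were used.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    x = reference.astype(np.float64)
    y = test.astype(np.float64)
    mse = np.mean((x - y) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(reference: np.ndarray, test: np.ndarray, data_range: float = 255.0) -> float:
    """Simplified SSIM computed once over the whole image (assumption:
    no sliding window, unlike the usual windowed SSIM)."""
    x = reference.astype(np.float64)
    y = test.astype(np.float64)
    # Conventional stabilising constants (K1 = 0.01, K2 = 0.03).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    # Synthetic stand-ins for an input radiograph and a translated image.
    rng = np.random.default_rng(0)
    original = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
    noise = rng.normal(0.0, 5.0, size=original.shape)
    translated = np.clip(original + noise, 0, 255).astype(np.uint8)
    print("PSNR (dB):", psnr(original, translated))
    print("global SSIM:", global_ssim(original, translated))
```

Higher values of both measures indicate that the translated image stays closer to its input, which is the sense in which the table compares CycleGAN and CUT.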

| Task | Model | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|---|

| Classification model | VGG16 | 68.65% | 72.82% | 71.19% | 68.45% |

| ResNet-34 | 92.11% | 92.03% | 91.84% | 91.93% | |

| EfficientNet-B0 | 91.57% | 91.89 | 90.93% | 91.30% | |

| ViT | 76.97% | 76.62% | 77.05% | 76.72% | |

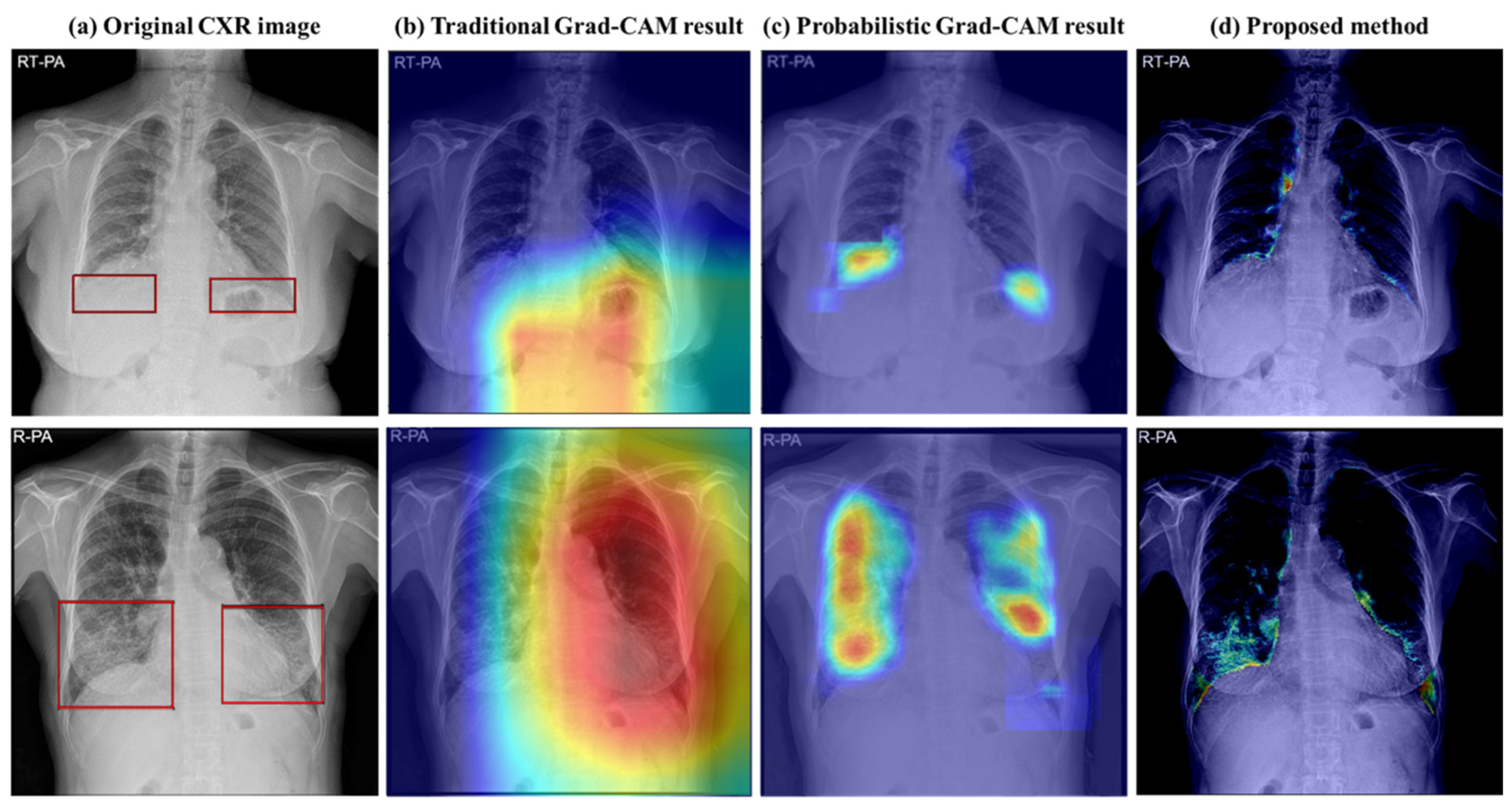

| Abnormal area detection | Probabilistic Grad-CAM [16] | 84.76% | 85.65% | 83.40% | 84.02% |

| Image-to-Image translation model | CycleGAN [18] with extent scoring algorithm | 81.20% | 73.14% | 89.16% | 76.96% |

| CUT [21] with extent scoring algorithm | 92.98% | 98.54% | 85.13% | 95.68% |

| Interval Class | Accuracy | Precision | Recall | F1-Score | Specificity |
|---|---|---|---|---|---|
| Aggravation | 88.24% | 72.58% | 80.35% | 76.27% | 90.66% |
| No change | 85.29% | 93.08% | 86.04% | 89.42% | 83.33% |
| Improvement | 97.06% | 58.82% | 100% | 74.07% | 96.93% |
| Total class | 85.29% | 85.29% | 88.24% | 85.29% | 92.65% |
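Per-class figures of this kind are commonly obtained by treating each interval class in turn as the positive class (one-vs-rest) in a multiclass confusion matrix. The source does not state how its values were computed, so the sketch below is an assumption about the general technique rather than the authors' procedure. The helper `one_vs_rest_metrics` is hypothetical, and the confusion matrix is invented toy data that does not reproduce the study's counts.

```python
import numpy as np


def one_vs_rest_metrics(confusion: np.ndarray, labels: list[str]) -> dict[str, dict[str, float]]:
    """Per-class metrics from a confusion matrix with rows = true class
    and columns = predicted class."""
    total = confusion.sum()
    results = {}
    for i, name in enumerate(labels):
        tp = confusion[i, i]
        fn = confusion[i, :].sum() - tp   # true class i, predicted as another class
        fp = confusion[:, i].sum() - tp   # another class, predicted as class i
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if (precision + recall) else 0.0)
        specificity = tn / (tn + fp) if (tn + fp) else 0.0
        results[name] = {
            "accuracy": float((tp + tn) / total),
            "precision": float(precision),
            "recall": float(recall),
            "f1": float(f1),
            "specificity": float(specificity),
        }
    return results


# Toy confusion matrix (invented for illustration only).
LABELS = ["Aggravation", "No change", "Improvement"]
TOY_CONFUSION = np.array([
    [4, 1, 0],
    [1, 10, 1],
    [0, 0, 3],
])

if __name__ == "__main__":
    for label, metrics in one_vs_rest_metrics(TOY_CONFUSION, LABELS).items():
        print(label, {k: round(v, 4) for k, v in metrics.items()})
```

Under this scheme a small class can show perfect recall alongside modest precision when every true case is found but a few cases from a larger class are also assigned to it.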

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Park, S.; Kim, J.H.; Woo, J.H.; Park, S.Y.; Cha, Y.K.; Chung, M.J. Pixel-Wise Interstitial Lung Disease Interval Change Analysis: A Quantitative Evaluation Method for Chest Radiographs Using Weakly Supervised Learning. Bioengineering 2024, 11, 562. https://doi.org/10.3390/bioengineering11060562
