Abstract

Background and objective: Locally advanced rectal cancer (LARC) poses significant treatment challenges due to its location and high recurrence rates. Accurate early detection is vital for treatment planning. With magnetic resonance imaging (MRI) being resource-intensive, this study explores using artificial intelligence (AI) to interpret computed tomography (CT) scans as an alternative, providing a quicker, more accessible diagnostic tool for LARC. Methods: In this retrospective study, CT images of 1070 T3–4 rectal cancer patients from 2010 to 2022 were analyzed. AI models, trained on 739 cases, were validated using two test sets of 134 and 197 cases. By utilizing techniques such as nonlocal means filtering, dynamic histogram equalization, and the EfficientNetB0 algorithm, we identified images featuring characteristics of a positive circumferential resection margin (CRM) for the diagnosis of LARC. Importantly, this study employs both hard and soft voting systems in the second stage to ascertain the LARC status of cases, emphasizing the novelty of the soft voting system for improved case identification accuracy. The local recurrence rates and overall survival of the cases predicted by our model were assessed to underscore its clinical value. Results: The AI model exhibited high accuracy in identifying CRM-positive images, achieving an area under the curve (AUC) of 0.89 in the first test set and 0.86 in the second. In a patient-based analysis, the model reached AUCs of 0.84 and 0.79 using a hard voting system. Employing a soft voting system, the model attained AUCs of 0.93 and 0.88, respectively. Notably, AI-identified LARC cases exhibited a significantly higher five-year local recurrence rate and displayed a trend towards increased mortality across various thresholds. Furthermore, the model’s capability to predict adverse clinical outcomes was superior to that of traditional assessments.
Conclusion: AI can precisely identify CRM-positive LARC cases from CT images, and these cases show increased local recurrence and mortality rates. Our study presents a swifter and more reliable method for detecting LARC compared to traditional CT or MRI techniques.

1. Introduction

Rectal cancer, a significant subset of colorectal malignancies, presents distinct diagnostic and therapeutic challenges, especially in its advanced stages. The treatment approach for rectal cancer is primarily stage-dependent. Stage IV cancer, marked by metastasis to other organs, necessitates systemic therapy. Conversely, Stages I–III are considered localized diseases, and among the treatment modalities, the total mesorectal excision (TME) technique for complete removal of the tumor within the rectal mesentery, introduced by Heald, remains the most effective method to date [1]. According to the research, if a complete excision can be achieved, the likelihood of local recurrence is very low, at 2.6%. A key measure of a complete excision is the pathological absence of cancer cells at the circumferential resection margins (pCRMs). The presence of cancer cells at these margins (pCRM+) is associated with a poor prognosis, with higher risks of local recurrence and mortality, and often results from extensive tumor invasion or inadequate surgical technique [2]. To reduce the tumor size and facilitate a clean resection, the introduction of radio(chemo)therapy (neoadjuvant therapy) before total mesorectal excision has led to an improved prognosis worldwide [3].

Among rectal cancers, locally advanced rectal cancer (LARC) is notably challenging due to its deep pelvic location and proximity to critical organ structures. LARC is defined by tumors located within 1 mm of the planned circumferential resection margin (CRM) on imaging, indicating an advanced disease stage. This condition leads to a 26.3% local recurrence rate and a 37.8% mortality rate over a five-year follow-up period, thus complicating treatment efforts [4]. To decrease the local recurrence rate of the disease, current guidelines suggest applying neoadjuvant chemoradiation therapy before radical resection surgery. The more intensive regimen, “total neoadjuvant therapy”, which includes standard radiation therapy with six-cycle chemotherapy, is suggested especially for LARC cases [5,6]. However, neoadjuvant treatment increases the expense and the rate of surgical complications, such as an increased rate of anastomotic stricture [7]. The rate of permanent stoma following low anterior resection is 13%. Reducing neoadjuvant therapy to chemotherapy with FOLFOX alone [8] or opting for direct surgery [9] for rectal cancer with an adequate surgical margin has been proven to offer comparable efficacy. Hence, the rapid identification of LARC with margin-threatening characteristics is crucial at the outset of rectal cancer therapy.

Current guidelines recommend magnetic resonance imaging (MRI) for staging rectal cancer; however, the limited availability of MRI resources limits the timeliness of this diagnostic method. Studies have indicated that CT, interpreted by experienced physicians, can achieve comparable efficacy to MRI in assessing the circumferential resection margin (CRM), a crucial factor in LARC diagnosis [10,11]. The rapid advancements in artificial intelligence (AI) image interpretation could significantly speed up and improve diagnostic accuracy for physicians [12,13,14,15,16]. This progress opens a promising avenue for utilizing AI to detect features of LARC that threaten the CRM from CT images.

Currently, the application of AI in cutting-edge methodologies for single-image recognition has shown promising results across numerous studies [17,18]. However, the comprehensive evaluation of case datasets remains an underexplored area in the literature. If AI were capable of identifying cases of LARC with an accuracy comparable to that of specialists, it could markedly accelerate the treatment initiation process. Moreover, in the context of large-scale, multi-institutional trials for LARC treatment, AI has the potential to minimize diagnostic variations among clinicians, significantly improving the objectivity and fairness of trial outcomes.

Our study explores the use of AI in identifying LARC cases with CRM-threatening features from CT images, aiming to facilitate a rapid diagnosis of LARC. This approach, if successful, could provide a timely and effective tool for the management of rectal cancer in healthcare settings. It could also provide a fair and objective method to define LARC cases in research settings.

2. Materials and Methods

2.1. Dataset

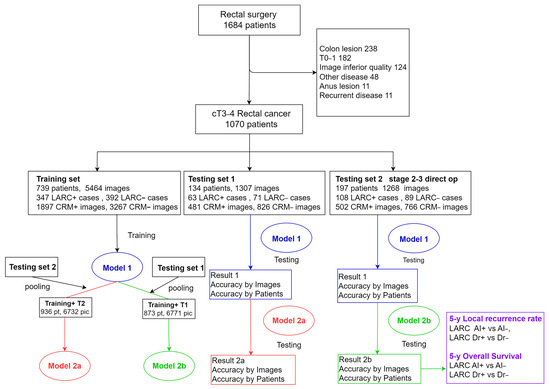

We compiled a comprehensive dataset of rectal surgeries conducted from 1 October 2010 to 31 December 2022. We focused on first-diagnosed rectal cancer cases in clinical stages T3–4, prioritizing CT images of high quality and with contrast. Exclusions included cases with primary tumors in other organs, non-adenocarcinoma pathology, tumors located in the sigmoid colon or lower rectum, early-stage T0–2 tumors, and CT images lacking contrast or compromised by prosthesis noise. The CT images around the rectal tumor segment were harvested. This selection yielded data from 1070 patients, providing 7739 CT images. These images were categorized into three sets: 739 cases for training, 134 for test set 1, and 197 for test set 2 consisting of stage-2–3 patients who underwent direct surgery (Figure 1, Table 1). Ethical approval was obtained (IRB number: CE21235B), and this study was registered at ClinicalTrials.gov (NCT05723965) accessed on 9 February 2023.

Figure 1.

Diagrammatic representation of study material workflow. This figure outlines the systematic process used in this study, tracing the flow of materials from initial data collection through to their final application within the research framework.

Table 1.

Demographic, clinical, and pathological characteristics of patients in study group.

2.2. CT Imaging Methods

CT scans were conducted at diagnosis and pre-surgery using Philips Healthcare scanners (Brilliance series, Cleveland, OH, USA) at 100, 120, and 130 kV, or with automatic mA control, and without additional noise reduction. Images were captured at a slice thickness of 0.7 to 1.0 mm and a resolution of 512 × 512 pixels. The contrast medium dosage was calculated based on body weight, with a maximum limit of 100 mL. All images were reconstructed into 5 mm slices for detailed interpretation and analysis.

2.3. Annotation of CRM-Threatening LARC Cases

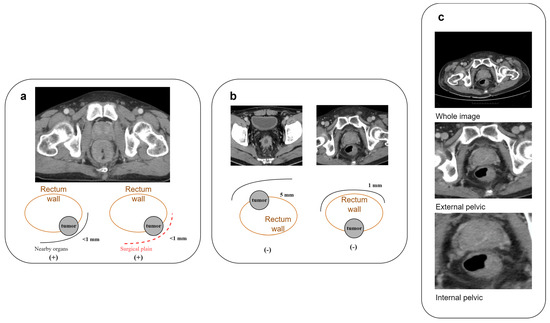

We defined LARC as tumor invasion within 1 mm of the circumferential resection margin (CRM) in MRI or CT images (Figure 2a,b). To validate the reliability of CT, fifty rectal cancer patients underwent both CT and MRI, and three specialists (CY Lin, 7 years of proctology; YC Liu, 8 years of radio-oncology; PY Chang, 12 years of radiology) assessed the LARC status. The consistency between CT and MRI interpretations was assessed using Cohen’s kappa (IBM SPSS version 22.0); the resulting value of 0.728 indicates substantial agreement.

Figure 2.

Characterization and processing of rectal cancer features in CT imaging. (a) Criteria for identifying CRM-threatening features associated with locally advanced rectal cancer in CT scans; (b) illustrative cases of rectal cancer in CT imagery lacking CRM-threatening attributes; (c) comparative illustration of three cropping techniques for rectal cancer image preparation: full image, external pelvic boundary, and internal pelvic boundary.

2.4. Image Processing

We used the data collected above to train the deep learning algorithm model. The training materials included the original DICOM files downloaded from the PACS system. We utilized 3D Slicer (version 5.2.2) software to crop images along the outer pelvic edge and the inner pelvic edge. After training and testing, we found that using only the images cropped along the inner pelvic edge yielded the highest preliminary AUC (Figure 2c, Table 2).

Table 2.

Prediction rates of CRM-positive images in test set 1 derived from various image material sources.

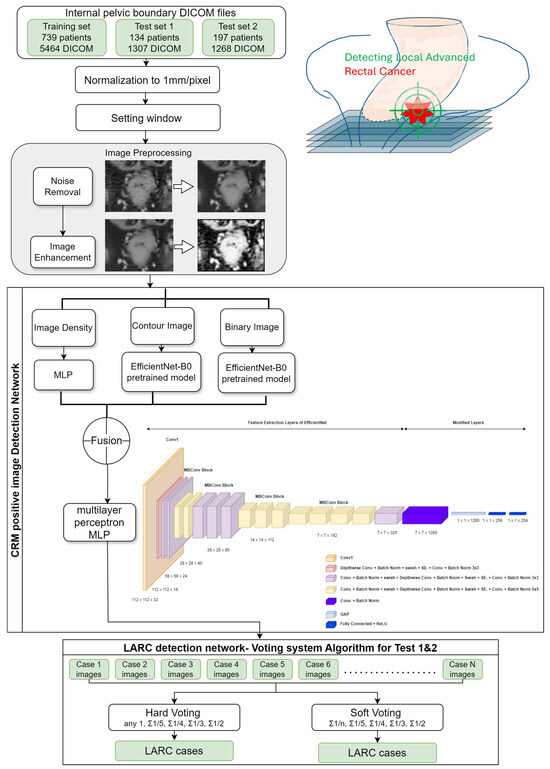

First, we normalized the CT images. Since the CT observation range was too large, we limited its range by normalizing the image with a window level of 50 and a window width of 400. This corresponds to a maximum value of 250 and a minimum value of −150, which are the standard observation values for the abdomen. Although these values may vary by institution and provider, the window width and level are usually very similar. After normalization, we scaled the values to a range of 0–255 to match the storage range of grayscale images. In addition to the normalization of CT values, we also normalized the unit distance of CT images. Different CT images may have different unit distances due to different operators, so we corrected the unit distance of all images to 1 mm × 1 mm per pixel, ensuring that all images had the same unit size.

Improving image quality, specifically through noise reduction, is a crucial step prior to performing CT image prediction. The literature [19,20,21,22] confirms that the noise in CT images is typically additive white Gaussian noise. We selected nonlocal means filtering for denoising, as it searches for similar areas in the image in units of image blocks and then averages these areas, which can better filter out the Gaussian noise in the image. We set the search area to 21 × 21 pixels and the similarity comparison block to 7 × 7 pixels, and we used the square of the pixel brightness difference to estimate the similarity (Figure 3).
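The intensity windowing described above can be sketched as follows. This is a minimal illustration rather than the study's code: it assumes the input is already in Hounsfield units, and the function name `apply_window` and its parameters are hypothetical.

```python
import numpy as np

def apply_window(hu, level=50.0, width=400.0):
    """Clip Hounsfield units to a display window and rescale to 0-255.

    With level=50 and width=400 the window spans -150 HU to 250 HU,
    matching the abdominal window described in the text.
    """
    lo = level - width / 2.0  # lower bound: 50 - 200 = -150 HU
    hi = level + width / 2.0  # upper bound: 50 + 200 = 250 HU
    clipped = np.clip(np.asarray(hu, dtype=np.float32), lo, hi)
    # Map [lo, hi] linearly onto the 8-bit grayscale range.
    scaled = (clipped - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)

# Example: air, window minimum, window centre, window maximum, dense metal.
pixels = apply_window(np.array([-1000, -150, 50, 250, 3000]))
```

Values below −150 HU map to 0 and values above 250 HU map to 255. A denoising step could then follow, for example with OpenCV's `cv2.fastNlMeansDenoising` using `templateWindowSize=7` and `searchWindowSize=21`; whether the study used that particular function is not stated in the text.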

Figure 3.

AI-based diagnostic framework for detecting locally advanced rectal cancer: architectural blueprint of the AI model for LARC identification. This diagram illustrates the methodological approach and the deep learning architecture utilized in this study for the detection of locally advanced rectal cancer (LARC) from CT scans. The framework encapsulates the AI’s training and predictive process, detailing the progression from image preprocessing to the final LARC classification.

After removing the noise from the image, we then applied dynamic histogram equalization to improve its quality [23]. It first applies a smoothing process to the histogram using a 1 × 3 filter, and then searches for the locations of the troughs in the histogram to perform the first histogram division. To ensure that there were no dominant pixel values within each division (i.e., to prevent high-frequency occurrences of luminance values from overriding low-frequency occurrences), an evaluation of the segments was performed, followed by a second histogram division. During the second division, the mean and standard deviation of each segment were calculated. If the standard deviation was more than twice the 68.3% probability threshold, no further division was performed. If the condition was not met, the segment was divided into three subsegments.
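The first stage of this procedure, smoothing the histogram with a 1 × 3 filter and locating its troughs, can be sketched as below. This covers only the initial partitioning step under simplifying assumptions (8-bit input, a plain moving-average kernel, strict local minima); the function name `histogram_troughs` is hypothetical and the later re-division and equalization steps are omitted.

```python
import numpy as np

def histogram_troughs(image, bins=256):
    """Smooth the gray-level histogram and return candidate split points.

    Returns the smoothed histogram and the indices of its local minima.
    On a flat plateau only the first index of the plateau is reported.
    """
    values = np.asarray(image, dtype=np.uint8).ravel()
    hist = np.bincount(values, minlength=bins).astype(float)
    # 1 x 3 moving-average filter, centred on each bin.
    smooth = np.convolve(hist, np.ones(3) / 3.0, mode="same")
    troughs = [
        i for i in range(1, bins - 1)
        if smooth[i] < smooth[i - 1] and smooth[i] <= smooth[i + 1]
    ]
    return smooth, troughs

# Toy image with two gray levels: 100 pixels at 50 and 100 pixels at 200.
toy = np.array([50] * 100 + [200] * 100, dtype=np.uint8)
smooth, troughs = histogram_troughs(toy)
```

Each pair of neighbouring troughs bounds one sub-histogram, which would then be examined for dominant gray levels before equalization.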

Finally, histogram equalization was applied. This processing method solves the problem of high-frequency luminance values overpowering low-frequency luminance values during histogram equalization, thereby achieving a superior contrast enhancement effect. The aim is to locate the tumor in the resulting image after final processing. Since the image is cropped around the tumor, we assume that the contour closest to the center is the contour of the tumor. Therefore, we started searching for contours from the center of the image outward. We used the OpenCV package to find all the contours by checking the distance from the contour’s centroid to the center of the image. We continuously updated and iterated until we found the closest contour. We drew the contour on the original image and used it as one of the inputs to our model [24].
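The centre-outward contour search can be sketched as a nearest-centroid selection. This is a simplified illustration, not the study's code: it assumes contours are arrays of (x, y) points, such as those returned by OpenCV's `cv2.findContours`, and it approximates each centroid by the mean of the contour points rather than by image moments. The function name `closest_contour` is hypothetical.

```python
import numpy as np

def closest_contour(contours, image_shape):
    """Return (index, distance) of the contour nearest the image centre.

    contours: sequence of point arrays, each reshapeable to (N, 2) as (x, y).
    image_shape: (height, width) of the cropped image.
    """
    height, width = image_shape[:2]
    centre = np.array([width / 2.0, height / 2.0])
    best_index, best_dist = None, float("inf")
    for index, contour in enumerate(contours):
        points = np.asarray(contour, dtype=float).reshape(-1, 2)
        if len(points) == 0:
            continue  # skip degenerate contours
        # Approximate the centroid by the mean of the contour points.
        dist = float(np.linalg.norm(points.mean(axis=0) - centre))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index, best_dist

# Two square contours in a 100 x 100 image: one in a corner, one central.
corner = np.array([[5, 5], [15, 5], [15, 15], [5, 15]])
central = np.array([[45, 45], [55, 45], [55, 55], [45, 55]])
index, dist = closest_contour([corner, central], (100, 100))
```

The selected contour would then be drawn on the original image to form one of the model inputs, as described above.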

2.5. Deep Learning Algorithm for CRM-Positive Image Identification

This study employed the deep learning architecture EfficientNetB0, recognized for its efficiency and high performance despite a small parameter count, making it well suited to fine-tuning with the limited yet high-quality data collected in the hospital setting. We used EfficientNetB0 as a pre-trained model and placed a global average pooling layer immediately before the classification layer, replacing the traditional fully connected layer [25]. The loss was binary cross-entropy, with class weights set to adjust for data imbalance. We used Adam as the optimizer, which adapts the update for each parameter according to its gradient history. We set the learning rate to 0.001 and the batch size to 32, trained the model for 500 epochs, and selected the model with the best validation accuracy. We evaluated the impact of the architectural decisions on the overall performance of the pipeline by monitoring performance in the training set, and we investigated the impact of the hyperparameter values on the generalization capabilities of the models in an ablation study by monitoring performance in the validation and test sets. We divided the training set data into 64%, 16%, and 20% for training, validation, and testing, respectively, splitting by patient. Because each patient contributed a different number of images, the class proportions could drift after splitting. We therefore used a hash table to record the number of positive and negative images for each patient and recursively divided the data until the class proportions in each subset deviated minimally from the target (Figure 3).
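A patient-level split that keeps the class proportions close across subsets can be sketched as below. Note that this swaps in a simple repeated random search for the recursive division described above, so it illustrates the goal rather than the study's procedure. The function name `split_by_patient` and the parameters `trials` and `seed` are hypothetical.

```python
import random

def split_by_patient(counts, fractions=(0.64, 0.16, 0.20), trials=200, seed=0):
    """Split patients into train/validation/test with similar positive ratios.

    counts: dict mapping patient id -> (n_positive_images, n_negative_images).
    Returns the best (train, val, test) id lists and their total ratio error.
    """
    ids = sorted(counts)
    total_pos = sum(counts[p][0] for p in ids)
    total_img = sum(sum(counts[p]) for p in ids)
    target = total_pos / total_img  # overall share of positive images
    n_train = round(fractions[0] * len(ids))
    n_val = round(fractions[1] * len(ids))
    rng = random.Random(seed)
    best, best_err = None, float("inf")
    for _ in range(trials):
        order = ids[:]
        rng.shuffle(order)
        parts = (order[:n_train],
                 order[n_train:n_train + n_val],
                 order[n_train + n_val:])
        err = 0.0
        for part in parts:
            pos = sum(counts[p][0] for p in part)
            img = sum(sum(counts[p]) for p in part)
            # Penalise empty subsets; otherwise measure the ratio deviation.
            err += abs(pos / img - target) if img else 1.0
        if err < best_err:
            best, best_err = parts, err
    return best, best_err

# Toy data: 50 patients with varying numbers of positive/negative images.
toy_counts = {i: (i % 4, 3 + i % 5) for i in range(50)}
(train, val, test), err = split_by_patient(toy_counts)
```

Because all images of a patient stay in the same subset, no patient contributes to both training and evaluation.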

2.6. Determining LARC Cases through Series of Images

We used a voting system to gather statistics and determine the presence of LARC based on the proportion of CRM-positive images in each case. The reference determination of whether a case was considered LARC was based on the collective clinical judgment of three specialists. Hard voting was used at first; that is, if the probability of the model judging the image as positive was greater than or equal to 0.5, the image was considered positive, and whether the patient had LARC was determined by comparing the proportion of positive images with a preset threshold.

Hard voting

Any one positive: a case is classified as LARC if any image in its series is predicted to be CRM-positive.

Subsequently, soft voting was adopted, averaging the probability values across all images of a patient. The optimal threshold was determined using the Youden index on ROC curves, combining sensitivity and specificity to assess the diagnostic effectiveness.
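Threshold selection with the Youden index (J = sensitivity + specificity − 1) can be sketched as follows. This is an illustrative sketch over a fixed list of candidate thresholds; the function name `youden_threshold` and the toy scores are hypothetical and are not study data.

```python
def youden_threshold(scores, labels, thresholds):
    """Return the (threshold, J) pair that maximises the Youden index.

    scores: case-level scores; labels: 1 for LARC, 0 otherwise.
    A case is called positive when its score is >= the threshold.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes are required")
    best_t, best_j = None, float("-inf")
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
        j = tp / n_pos + tn / n_neg - 1.0  # sensitivity + specificity - 1
        if j > best_j:  # ties keep the earliest threshold in the list
            best_t, best_j = t, j
    return best_t, best_j

toy_scores = [0.1, 0.2, 0.3, 0.6, 0.7, 0.9]
toy_labels = [0, 0, 0, 1, 1, 1]
best_t, best_j = youden_threshold(toy_scores, toy_labels, [0.2, 0.33, 0.5])
```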

Soft voting
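The two voting rules can be sketched as below, based on the description in this section: hard voting compares the fraction of images with probability at least 0.5 against a case threshold, and soft voting compares the mean image probability against a case threshold. The function names and the toy probabilities are hypothetical, and the exact equations used in the study may differ in detail.

```python
def hard_vote(probs, case_threshold, image_cutoff=0.5):
    """LARC if the fraction of CRM-positive images reaches the threshold."""
    positives = sum(1 for p in probs if p >= image_cutoff)
    return positives / len(probs) >= case_threshold

def any_positive(probs, image_cutoff=0.5):
    """LARC if at least one image in the series is CRM-positive."""
    return any(p >= image_cutoff for p in probs)

def soft_vote(probs, case_threshold):
    """LARC if the mean image probability reaches the threshold."""
    return sum(probs) / len(probs) >= case_threshold

# One case with five images: a single confident positive and several
# borderline images that fall just below the 0.5 image cutoff.
case = [0.9, 0.45, 0.45, 0.45, 0.1]
hard_fifth = hard_vote(case, 1 / 5)   # 1 of 5 images positive
hard_third = hard_vote(case, 1 / 3)
soft_third = soft_vote(case, 1 / 3)   # mean probability is about 0.47
```

In this toy case, hard voting at a one-third threshold discards the borderline images, whereas soft voting retains their contribution through the mean probability.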

2.7. Local Recurrence Rate Analysis

Training AI models to expert-level judgment is not sufficient to demonstrate clinical value; we aimed to understand the clinical predictive power of the decisions made. In rectal cancer, LARC has significantly higher local recurrence (LR) and mortality rates. Therefore, we selected 197 stage-2–3 rectal cancer patients who underwent direct surgery (whose survival rates are not influenced by stage or treatment) from test set 2, followed for a mean of 49.3 months. We conducted survival analysis based on the AI model’s prediction to compare the predictive strength of AI and specialist physicians’ interpretations.

2.8. Statistical Analysis

Data were analyzed from 1 May 2022 to 31 May 2023, using Cohen’s kappa statistics (SPSS version 22.0) for CT and MRI inter-rater reliability, chi-square tests, and ANOVA for patient characteristic comparisons. Survival curves were evaluated using the Kaplan–Meier method and log-rank tests, employing IBM SPSS version 22.0.
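For readers unfamiliar with the Kaplan–Meier method, the product-limit estimator can be sketched as follows. The study's analysis was run in SPSS; this is only an illustrative implementation, with a hypothetical function name and toy follow-up data.

```python
def kaplan_meier(times, events):
    """Product-limit survival estimate.

    times: follow-up time for each patient (e.g. months).
    events: 1 if the event (recurrence or death) occurred, 0 if censored.
    Returns a list of (time, survival_probability) at each event time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        n_leaving = 0
        # Group all patients sharing the same follow-up time.
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            n_leaving += 1
            i += 1
        if n_events:
            survival *= 1.0 - n_events / at_risk
            curve.append((t, survival))
        at_risk -= n_leaving  # events and censored cases leave the risk set
    return curve

# Toy follow-up data in months: three events and two censored patients.
curve = kaplan_meier([5, 8, 12, 12, 20], [1, 0, 1, 1, 0])
```

In the toy data, survival drops to 0.8 at 5 months (one event among five at risk) and to about 0.27 at 12 months (two events among three at risk).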

This methodology ensured a robust and comprehensive approach to evaluating AI’s capability in accurately identifying LARC cases through CT imaging, potentially transforming diagnostic processes in oncological care.

3. Results

3.1. Training Set and Test Set Materials

In our training set, we compiled 739 cases, including 347 (47.0%) identified as LARC. From these cases, we obtained 5464 CT images, with 1897 (36.7%) classified as CRM-positive and 3267 (63.3%) as CRM-negative. Using the cropping method focused on the inner pelvic edge, we processed these images for training using the ResNet50 model, a multiscale squeeze and excitation model. For test set 1, we gathered 134 cases with 63 (47.0%) LARC instances, resulting in 1307 CT images (481 CRM-positive and 826 CRM-negative). Test set 2 comprised 197 cases, including 108 (54.8%) LARC cases, yielding 1268 images (502 CRM-positive, 766 CRM-negative) (Table 1).

3.2. Model Performance by Image

Upon completing image processing, we fed the data into our deep learning algorithm. In test set 1, the AI model achieved a sensitivity, specificity, accuracy, and balanced accuracy of 0.81, with an AUC of 0.89. For test set 2, the model achieved a sensitivity of 0.75, specificity of 0.81, accuracy of 0.79, balanced accuracy of 0.78, and AUC of 0.86. These results illustrate the AI’s capability in accurately interpreting CRM-positive CT images, closely aligning with the performance of experienced specialists (Table 3, Supplement S1).

Table 3.

Prediction of CRM-positive images by Model 1.

3.3. Model Performance by Patient

We next assessed whether the AI’s ability to interpret LARC cases paralleled that of specialists. A voting system was employed for this purpose, initially using hard voting (binary classification of images). The optimal AI performance in test set 1 was achieved with a one-fifth threshold, resulting in an AUC of 0.84 and a balanced accuracy (BA) of 0.84. In test set 2, the best performance was at a one-fourth threshold, with an AUC of 0.79 and a BA of 0.79. Switching to soft voting, which averages the image-level probabilities for each case, improved the AI’s performance: the highest performance was at a one-third threshold, achieving an AUC of 0.93 and BA of 0.88 in test set 1 and an AUC of 0.88 and BA of 0.83 in test set 2 (Table 4, Supplement S2).

Table 4.

Prediction of LARC cases using Model 1 with hard and soft voting systems.

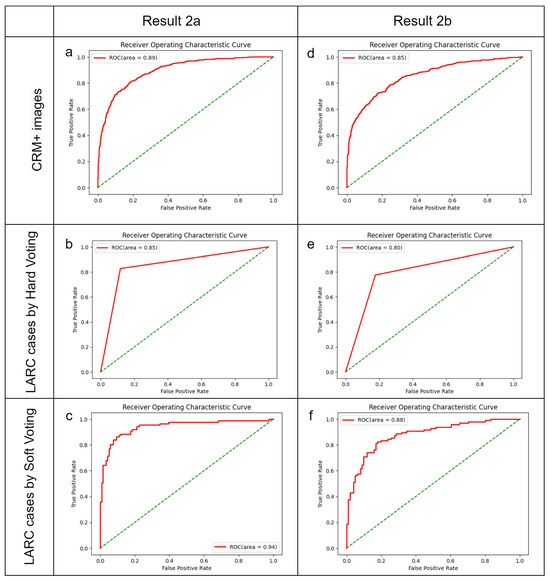

3.4. Expanding the Training Set

To enhance the model’s capabilities, we added one of the test sets to the original training set to form new training sets for Models 2a and 2b. Model 2a (training set plus test set 2) was trained with 936 patients (6732 images, 123.2% of the original training set) and Model 2b (training set plus test set 1) with 873 patients (6771 images, 123.9% of the original training set). For Model 2a in test set 1, we noted an image identification BA of 0.83 and an AUC of 0.89. The best LARC identification was achieved at a one-third threshold in hard voting (BA: 0.85, AUC: 0.86) and a half threshold in soft voting (BA: 0.89, AUC: 0.94). In test set 2, Model 2b’s image identification showed a BA of 0.77 and AUC of 0.85. The optimal LARC identification occurred at a one-fourth threshold in hard voting (BA: 0.80, AUC: 0.80) and at one-third and one-fifth thresholds in soft voting (BA: 0.82/0.83, AUC: 0.88) (Figure 4, Table 5, Supplement S3).

Figure 4.

Optimal diagnostic outcomes using Models 2a and 2b. The green dotted line indicates a random classifier. (a–c) Performance of Model 2a, amalgamating data from the training set and test set 2, when applied to test set 1. (a) Identification of CRM-threatening features indicative of LARC; (b) determination of LARC status using a hard voting threshold of one-third; (c) assessment of LARC cases employing a soft voting threshold of a half. (d–f) Efficacy of Model 2b, integrating data from the training set and test set 1, utilized on test set 2. (d) Image analysis for CRM-threatening features associated with LARC; (e) LARC case adjudication based on a hard voting threshold of one-fourth; (f) LARC case determination via a soft voting threshold of one-third.

Table 5.

Identification of CRM-positive images and LARC cases by Model 2a (trained with training set + test set 2) and Model 2b (trained with training set + test set 1).

3.5. Prediction Results and Survival Analysis in Test Set 2

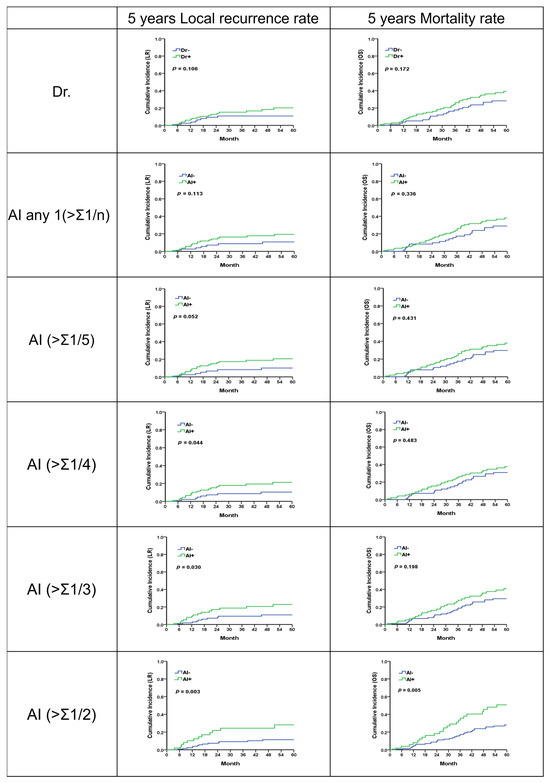

Among patients with stage 2 or 3 rectal cancer who underwent surgical treatment, over a mean follow-up period of 49.3 months, locally advanced rectal cancer (LARC), as interpreted by physicians, was associated with higher rates of local recurrence (LR) and mortality, though these differences were not statistically significant (p = 0.106 and 0.172, respectively).

When LARC was identified using artificial intelligence (AI), the number of LARC cases identified decreased as the risk threshold was increased (from 108 cases at a threshold of 0.20 to 51 cases at a threshold of 0.50). Despite the reduction in identified cases, there was a consistent trend of higher LR rates among the identified LARC cases across all risk thresholds. Notably, the trend of increased LR risk became significantly more pronounced when the threshold was raised from 0.20 to 0.50 (p = 0.052 to p = 0.003). The predictive value for mortality, when compared with physician interpretation, showed a similar trend towards higher rates but was not statistically significant (Figure 5, Table 6, Supplement S4). This result demonstrates that within the threshold range of 0.20 to 0.50, AI’s predictions consistently showed clinical significance, indicating that AI can reliably interpret meaningful outcomes.

Figure 5.

Five-year local recurrence and overall survival rates for LARC cases identified by physicians and by different AI methodologies.

Table 6.

Summary of disease survival time according to physician interpretation and AI interpretation at each threshold using soft voting. The disease survival status was determined using the time to local recurrence and the overall survival time.

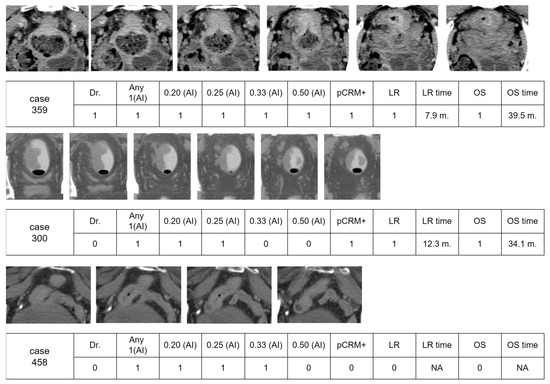

3.6. Visual Examples of Interpretation by AI and Doctor

Figure 6 presents a series of image cases with interpretations from both a doctor and artificial intelligence (AI) across various thresholds. The evaluated clinical outcomes include the positive pathological circumferential margin (pCRM), the time until local recurrence (LR), and overall survival (OS).

Figure 6.

Three visual examples of interpretation results by a physician and AI. The surgical outcome (positive pathological circumferential margin), the time to local recurrence, and the overall survival time were also recorded. The following abbreviations are used: pCRM+, positive pathological circumferential margin; LR, local recurrence; OS, overall survival.

For Case 359, interpretations by both the doctor and the AI were positive; the patient had a positive pCRM, experienced local recurrence at 7.9 months, and had an overall survival time of 39.5 months. Case 300 highlights a scenario where the AI identified a few images with a positive CRM that were overlooked by the doctor. This patient experienced a local recurrence at 12.3 months and succumbed to the disease at 34.0 months.

In Case 458, which featured a higher-positioned rectal cancer near the small intestine, the physician determined that surgical resection would not pose a risk of positive circumferential resection margin (pCRM), suggesting confidence in achieving clear margins and thus reducing the likelihood of local recurrence. Despite this professional assessment, an artificial intelligence (AI) system identified the cancer as locally advanced rectal cancer (LARC) at risk thresholds ranging from 0.20 to 0.33. This classification by the AI was likely influenced by the visual proximity of the rectum to the small intestine in several images, which could be interpreted as a more aggressive or advanced disease. However, following surgery, the patient was found to have stage II cancer and did not experience any disease recurrence over a prolonged period.

4. Discussion

4.1. Integration of Key Results with Existing Research

Our study’s crucial discovery is that AI can accurately interpret CT images for the diagnosis of locally advanced rectal cancer (LARC), despite the absence of a comparative model. By conducting a comparative analysis with the results from the MERCURY trial [4], which used MRI to assess rectal cancer, we found that local recurrence rates for LARC were comparable between our study and the MERCURY trial (22.9% vs. 26.3%). This comparison emphasizes the AI’s predictive accuracy, showcasing its potential value even without direct model comparisons. Moreover, our AI model’s high sensitivity, specificity, accuracy, and predictive value in differentiating CRM-positive from CRM-negative images are comparable to the performance of experienced radiologists. This underscores the substantial promise of AI in enhancing medical diagnostics.

4.2. Significant Achievements and Contributions

This research makes a substantial contribution by applying AI in series analysis of medical images, a domain traditionally reliant on human expertise. The use of hard and soft voting techniques enables the AI model to interpret multiple images for a comprehensive diagnosis, highlighting its potential in complex diagnostic scenarios. This extends AI’s application beyond state-of-the-art single-image analysis methods [26,27] to more intricate diagnostic tasks.

Moreover, this study’s findings on the AI model’s ability to predict local recurrence risks in LARC cases underscore the potential role of AI in clinical decision making and prognostication, aligning with recent advances in AI for personalized treatment planning [28,29,30,31,32].

4.3. Combining Current Findings with Original Study Aspects

For newly diagnosed rectal cancer, understanding whether there is organ metastasis and evaluating local staging are crucial. LARC, defined as CRM-threatening, necessitates neoadjuvant chemoradiation therapy [3,5]. While MRI is the recommended imaging modality [4,10], its limited availability has necessitated reliance on CT scans. Our study’s use of CT interpretations by experienced physicians for deep learning algorithm training shows that AI can quickly detect LARC with enough data, providing a viable alternative to MRI.

Identifying LARC involves understanding the spatial relationship between the cancer and other pelvic structures. Our approach, using 2D image feature recognition and voting systems, successfully tackles this challenge, offering a method that closely matches the accuracy of professional physicians’ interpretations.

4.4. State-of-the-Art Method for CRM+ Images and LARC Cases

Our study introduces an adaptation of the EfficientNetB0 architecture and a novel voting mechanism for detecting locally advanced rectal cancer (LARC) via CT scans. EfficientNetB0 is engineered to maximize accuracy with minimal parameters, tackling the challenge of scarce high-quality medical imaging data and representing state-of-the-art methods in recognizing CRM-positive images. To further enhance LARC case identification, we employed our innovative voting system, combining hard and soft voting approaches. Typically, a case consists of 3 to 10 images, and the challenge lies in determining LARC from a series of image results. Accurately interpreting CRM+ images does not automatically equate to identifying an LARC case, a finding corroborated by our study results; indeed, any single prediction result is often the least accurate. We discovered that employing a voting system for comprehensive risk assessment yields consistent and precise predictive outcomes. This system closely mirrors expert decision making by comprehensively evaluating image series, thereby providing consistent results across different thresholds and ensuring reliable LARC identification. Given the absence of comparative methods for LARC case identification, our CRM+ identification approach with the soft voting system is considered a state-of-the-art method. Importantly, this study goes beyond mere diagnostic accuracy to demonstrate the model’s clinical utility in predicting local recurrence rates and overall survival, bridging the gap between technical accuracy and meaningful clinical outcomes. The strategic data management and model training approach further ensure the system’s operational efficiency and reliability. This integration of innovative architecture and practical clinical relevance marks a significant step forward in leveraging AI for medical diagnostics.

4.5. How to Utilize the AI Prediction Results

In the last of the three example cases (Case 458), there was a significant discrepancy between the AI’s predictive assessment and the actual clinical course. This underscores the critical need for the careful evaluation of AI recommendations, especially when they might lead to the overestimation of disease severity based on imaging alone. While AI can provide valuable insights, particularly in complex cases, this scenario highlights its limitations and the irreplaceable value of human clinical judgment in making final treatment decisions. These case outcomes exemplify the importance of integrating AI tools with comprehensive clinical evaluation to ensure accurate diagnosis and appropriate treatment planning.

4.6. Limitations and Future Directions

This study utilized CT images from a single institution, and the limited number of cases could potentially restrict the robustness of its findings. A larger, well-annotated database could enhance the outcomes, offering a richer dataset for analysis and potentially improving the accuracy of AI predictions. Furthermore, this research could be expanded to include MRI imaging in the future. With precise annotation, the superior detail offered by MRI images could lead to the more accurate identification of locally advanced rectal cancer (LARC). Moreover, MRI’s enhanced imaging capabilities could also contribute to the investigation of lymph node involvement, significantly aiding in disease staging.

4.7. Possible Applications of this Research

Utilizing this approach, it may be possible to develop a real-time monitoring system that alerts physicians to the presence of locally advanced rectal cancer (LARC) while they are reviewing CT images. This system could offer objective and immediate feedback, serving as an invaluable tool in clinical settings. Furthermore, during multicenter studies, it could act as an objective reference standard for diagnosing LARC.

Expanding on this foundation, future research that integrates semi-automated segmentation tools [33], colorectal cancer localization [34], and colon polyp detection [35] in contrast-enhanced CT could lead to automated imaging-based staging for colorectal cancer. AI has the potential to provide an experienced and objective perspective, and such a system could support clinical treatment by providing consistent and accurate staging information. In the future, generative adversarial networks (GANs) [36] may make it possible to simulate the relationship between rectal tumors and nearby organs more accurately, thus helping to define LARC. By automating the staging process and integrating real-time risk assessment, clinicians can improve the precision of their diagnoses and treatment strategies, leading to better patient outcomes. This illustrates the promise of combining AI with medical expertise to advance healthcare delivery.

5. Conclusions

This study highlights the potential of AI in accurately interpreting CT images for diagnosing locally advanced rectal cancer (LARC), rivaling the precision of experienced radiologists. It underscores the need for further automation and broader datasets in AI diagnostics, particularly in settings where MRI is less accessible. Ultimately, these findings pave the way for integrating AI into routine oncological care, enhancing diagnostic efficiency and accuracy.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bioengineering11040399/s1, Supplement S1: Model 1 CRM positive Images result; Supplement S2: Model 1 LARC case result; Supplement S3: Model 2a 2b LARC case result; Supplement S4: Test two LR OS survival to sta.

Author Contributions

Conceptualization, C.-Y.L., H.H.-S.L. and O.K.-S.L.; methodology, C.-Y.L., J.C.-H.W., H.H.-S.L. and O.K.-S.L.; software, J.C.-H.W. and Y.-M.K.; validation, J.C.-H.W. and Y.-M.K.; formal analysis, C.-Y.L., Y.-C.L. and P.-Y.C.; investigation, C.-Y.L., Y.-C.L. and P.-Y.C.; resources, C.-Y.L., Y.-C.L. and H.H.-S.L.; data curation, Y.-C.L. and P.-Y.C.; writing—original draft preparation, C.-Y.L., J.C.-H.W., P.-Y.C. and J.-P.C.; writing—review and editing, O.K.-S.L. and H.H.-S.L.; visualization, J.C.-H.W., Y.-M.K. and J.-P.C.; supervision, O.K.-S.L. and H.H.-S.L.; project administration, C.-Y.L. and O.K.-S.L.; funding acquisition, H.H.-S.L., C.-Y.L. and Y.-C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work received funding from various sources, including the National Science and Technology Council (Grants: 110-2811-M-A49-550-MY2, 110-2118-M-A49-002-MY3, 111-2634-F-A49-014-, 112-2321-B-075-002-, 112-2321-B-182A-004), the Taichung Veterans General Hospital (Grants: TCVGH-1125301B, TCVGH-1127102B), the Higher Education Sprout Project of the National Yang Ming Chiao Tung University from the Ministry of Education, and the Yushan Scholar Program of the Ministry of Education, Taiwan.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board I&II of Taichung Veterans General Hospital (CE21235B). The study was registered at ClinicalTrials.gov (NCT05723965; accessed on 9 February 2023).

Data Availability Statement

The research data supporting the findings of this publication are available upon reasonable request. Interested parties may contact either the corresponding author or the first author for access to these materials.

Acknowledgments

We thank Wan-Yi Tai for her valuable assistance and acknowledge the National Center for High-performance Computing for providing computing resources.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have influenced the work reported in this study.

References

- Heald, R.J.; Ryall, R.D. Recurrence and survival after total mesorectal excision for rectal cancer. Lancet 1986, 1, 1479–1482.

- Nagtegaal, I.D.; Quirke, P. What Is the Role for the Circumferential Margin in the Modern Treatment of Rectal Cancer? J. Clin. Oncol. 2008, 26, 303–312.

- van Gijn, W.; Marijnen, C.A.; Nagtegaal, I.D.; Kranenbarg, E.M.; Putter, H.; Wiggers, T.; Rutten, H.J.; Påhlman, L.; Glimelius, B.; van de Velde, C.J. Preoperative radiotherapy combined with total mesorectal excision for resectable rectal cancer: 12-year follow-up of the multicentre, randomised controlled TME trial. Lancet Oncol. 2011, 12, 575–582.

- Taylor, F.G.; Quirke, P.; Heald, R.J.; Moran, B.J.; Blomqvist, L.; Swift, I.R.; Sebag-Montefiore, D.; Tekkis, P.; Brown, G. Preoperative magnetic resonance imaging assessment of circumferential resection margin predicts disease-free survival and local recurrence: 5-year follow-up results of the MERCURY study. J. Clin. Oncol. 2014, 32, 34–43.

- Bahadoer, R.R.; Dijkstra, E.A.; van Etten, B.; Marijnen, C.A.M.; Putter, H.; Kranenbarg, E.M.; Roodvoets, A.G.H.; Nagtegaal, I.D.; Beets-Tan, R.G.H.; Blomqvist, L.K.; et al. Short-course radiotherapy followed by chemotherapy before total mesorectal excision (TME) versus preoperative chemoradiotherapy, TME, and optional adjuvant chemotherapy in locally advanced rectal cancer (RAPIDO): A randomised, open-label, phase 3 trial. Lancet Oncol. 2021, 22, 29–42.

- Conroy, T.; Bosset, J.-F.; Etienne, P.-L.; Rio, E.; François, É.; Mesgouez-Nebout, N.; Vendrely, V.; Artignan, X.; Bouché, O.; Gargot, D.; et al. Neoadjuvant chemotherapy with FOLFIRINOX and preoperative chemoradiotherapy for patients with locally advanced rectal cancer (UNICANCER-PRODIGE 23): A multicentre, randomised, open-label, phase 3 trial. Lancet Oncol. 2021, 22, 702–715.

- Kim, Y.I.; Hong, S.W.; Lim, S.B.; Yang, D.H.; Kim, E.B.; Kim, M.H.; Kim, C.W.; Lee, J.L.; Yoon, Y.S.; Park, I.J.; et al. Risk factors for the failure of endoscopic balloon dilation to manage anastomotic stricture from colorectal surgery: Retrospective cohort study. Surg. Endosc. 2024, 38, 1775–1783.

- Schrag, D.; Shi, Q.; Weiser, M.R.; Gollub, M.J.; Saltz, L.B.; Musher, B.L.; Goldberg, J.; Al Baghdadi, T.; Goodman, K.A.; McWilliams, R.R.; et al. Preoperative Treatment of Locally Advanced Rectal Cancer. N. Engl. J. Med. 2023, 389, 322–334.

- Lee, J.B.; Kim, H.S.; Ham, A.; Chang, J.S.; Shin, S.J.; Beom, S.H.; Koom, W.S.; Kim, T.; Han, Y.D.; Han, D.H.; et al. Role of Preoperative Chemoradiotherapy in Clinical Stage II/III Rectal Cancer Patients Undergoing Total Mesorectal Excision: A Retrospective Propensity Score Analysis. Front. Oncol. 2020, 10, 609313.

- Maizlin, Z.V.; Brown, J.A.; So, G.; Brown, C.; Phang, T.P.; Walker, M.L.; Kirby, J.M.; Vora, P.; Tiwari, P. Can CT replace MRI in preoperative assessment of the circumferential resection margin in rectal cancer? Dis. Colon Rectum 2010, 53, 308–314.

- Heo, S.H.; Kim, J.W.; Shin, S.S.; Jeong, Y.Y.; Kang, H.K. Multimodal imaging evaluation in staging of rectal cancer. World J. Gastroenterol. 2014, 20, 4244–4255.

- Liew, W.S.; Tang, T.B.; Lin, C.H.; Lu, C.K. Automatic colonic polyp detection using integration of modified deep residual convolutional neural network and ensemble learning approaches. Comput. Methods Programs Biomed. 2021, 206, 106114.

- Muniz, F.B.; Baffa, M.F.O.; Garcia, S.B.; Bachmann, L.; Felipe, J.C. Histopathological diagnosis of colon cancer using micro-FTIR hyperspectral imaging and deep learning. Comput. Methods Programs Biomed. 2023, 231, 107388.

- Deng, Y.; Lan, L.; You, L.; Chen, K.; Peng, L.; Zhao, W.; Song, B.; Wang, Y.; Ji, Z.; Zhou, X. Automated CT Pancreas Segmentation for Acute Pancreatitis Patients by combining a Novel Object Detection Approach and U-Net. Biomed. Signal Process. Control 2023, 81, 104430.

- Wong, P.K.; Yan, T.; Wang, H.; Chan, I.N.; Wang, J.; Li, Y.; Ren, H.; Wong, C.H. Automatic detection of multiple types of pneumonia: Open dataset and a multi-scale attention network. Biomed. Signal Process. Control 2022, 73, 103415.

- Wang, G.; Guo, S.; Han, L.; Cekderi, A.B. Two-dimensional reciprocal cross entropy multi-threshold combined with improved firefly algorithm for lung parenchyma segmentation of COVID-19 CT image. Biomed. Signal Process. Control 2022, 78, 103933.

- Zhang, Y.D.; Zhang, Z.; Zhang, X.; Wang, S.H. MIDCAN: A multiple input deep convolutional attention network for COVID-19 diagnosis based on chest CT and chest X-ray. Pattern Recognit. Lett. 2021, 150, 8–16.

- Bhatt, C.; Kumar, I.; Vijayakumar, V.; Singh, K.U.; Kumar, A. The state of the art of deep learning models in medical science and their challenges. Multimed. Syst. 2021, 27, 599–613.

- Skiadopoulos, S.; Karatrantou, A.; Korfiatis, P.; Costaridou, L.; Vassilakos, P.; Apostolopoulos, D.; Panayiotakis, G. Evaluating image denoising methods in myocardial perfusion single photon emission computed tomography (SPECT) imaging. Meas. Sci. Technol. 2009, 20, 104023.

- Hanzouli, H.; Lapuyade-Lahorgue, J.; Monfrini, E.; Delso, G.; Pieczynski, W.; Visvikis, D.; Hatt, M. PET/CT image denoising and segmentation based on a multi observation and multi scale Markov tree model. In Proceedings of the IEEE Nuclear Science Symposium Conference Record 2013, Seoul, Republic of Korea, 27 October–2 November 2013.

- Shao, W.J.; Ni, J.; Zhu, C. A Hybrid Method of Image Restoration and Denoise of CT Images. In Proceedings of the 2012 Sixth International Conference on Internet Computing for Science and Engineering, Washington, DC, USA, 21–23 April 2012; pp. 117–121.

- Guo, S.; Wang, G.; Han, L.; Song, X.; Yang, W. COVID-19 CT image denoising algorithm based on adaptive threshold and optimized weighted median filter. Biomed. Signal Process. Control 2022, 75, 103552.

- Abdullah-Al-Wadud, M.; Kabir, M.H.; Dewan, M.A.A.; Chae, O. A Dynamic Histogram Equalization for Image Contrast Enhancement. IEEE Trans. Consum. Electron. 2007, 53, 593–600.

- Lee, Y.; Hwang, H.; Shin, J.; Oh, B.T. Pedestrian detection using multi-scale squeeze-and-excitation module. Mach. Vis. Appl. 2020, 31, 55.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- James, A.P.; Dasarathy, B.V. Medical image fusion: A survey of the state of the art. Inf. Fusion 2014, 19, 4–19.

- Rana, M.; Bhushan, M. Machine learning and deep learning approach for medical image analysis: Diagnosis to detection. Multimed. Tools Appl. 2022, 82, 26731–26769.

- Susič, D.; Syed-Abdul, S.; Dovgan, E.; Jonnagaddala, J.; Gradišek, A. Artificial intelligence based personalized predictive survival among colorectal cancer patients. Comput. Methods Programs Biomed. 2023, 231, 107435.

- Yang, C.H.; Chen, W.C.; Chen, J.B.; Huang, H.C.; Chuang, L.Y. Overall mortality risk analysis for rectal cancer using deep learning-based fuzzy systems. Comput. Biol. Med. 2023, 157, 106706.

- Morís, D.I.; de Moura, J.; Marcos, P.J.; Rey, E.M.; Novo, J.; Ortega, M. Comprehensive analysis of clinical data for COVID-19 outcome estimation with machine learning models. Biomed. Signal Process. Control 2023, 84, 104818.

- Li, Z.; Raldow, A.C.; Weidhaas, J.B.; Zhou, Q.; Qi, X.S. Prediction of Radiation Treatment Response for Locally Advanced Rectal Cancer via a Longitudinal Trend Analysis Framework on Cone-Beam CT. Cancers 2023, 15, 5142.

- Shi, L.; Zhang, Y.; Hu, J.; Zhou, W.; Hu, X.; Cui, T.; Yue, N.J.; Sun, X.; Nie, K. Radiomics for the Prediction of Pathological Complete Response to Neoadjuvant Chemoradiation in Locally Advanced Rectal Cancer: A Prospective Observational Trial. Bioengineering 2023, 10, 634.

- Hamabe, A.; Ishii, M.; Kamoda, R.; Sasuga, S.; Okuya, K.; Okita, K.; Akizuki, E.; Sato, Y.; Miura, R.; Onodera, K.; et al. Artificial intelligence-based technology for semi-automated segmentation of rectal cancer using high-resolution MRI. PLoS ONE 2022, 17, e0269931.

- Sahoo, P.K.; Gupta, P.; Lai, Y.-C.; Chiang, S.-F.; You, J.-F.; Onthoni, D.D.; Chern, Y.-J. Localization of Colorectal Cancer Lesions in Contrast-Computed Tomography Images via a Deep Learning Approach. Bioengineering 2023, 10, 972.

- Manjunath, K.N.; Siddalingaswamy, P.C.; Prabhu, G.K. Domain-Based Analysis of Colon Polyp in CT Colonography Using Image-Processing Techniques. Asian Pac. J. Cancer Prev. 2019, 20, 629–637.

- Ferreira, A.; Li, J.; Pomykala, K.L.; Kleesiek, J.; Alves, V.; Egger, J. GAN-based generation of realistic 3D volumetric data: A systematic review and taxonomy. Med. Image Anal. 2024, 93, 103100.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).