Contactless Blood Oxygen Saturation Estimation from Facial Videos Using Deep Learning

Abstract

1. Introduction

- It is trained and evaluated on a large-scale multi-modal public benchmark dataset of facial videos.

- It outperforms conventional contactless SpO2 measurement approaches, showing potential for applications in real-world scenarios.

- It provides a deep learning baseline for contactless SpO2 measurement. With this baseline, future research can be benchmarked fairly, facilitating progress in this important emerging field.

2. Literature Review

2.1. Contact-Based SpO2 Measurement

2.2. SpO2 Measurement with RGB Cameras

2.3. Deep Learning-Based Remote Vital Sign Monitoring

2.4. Spatial–Temporal Representation for Vital Sign Estimation

3. Materials and Methods

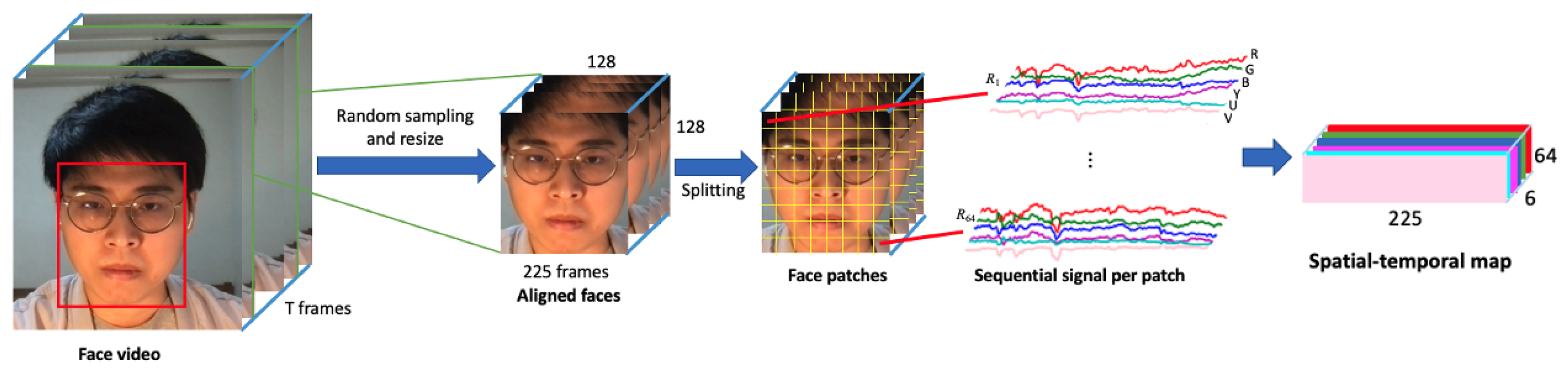

3.1. Spatial–Temporal Map Generation

3.2. SpO2 Estimation Using CNNs

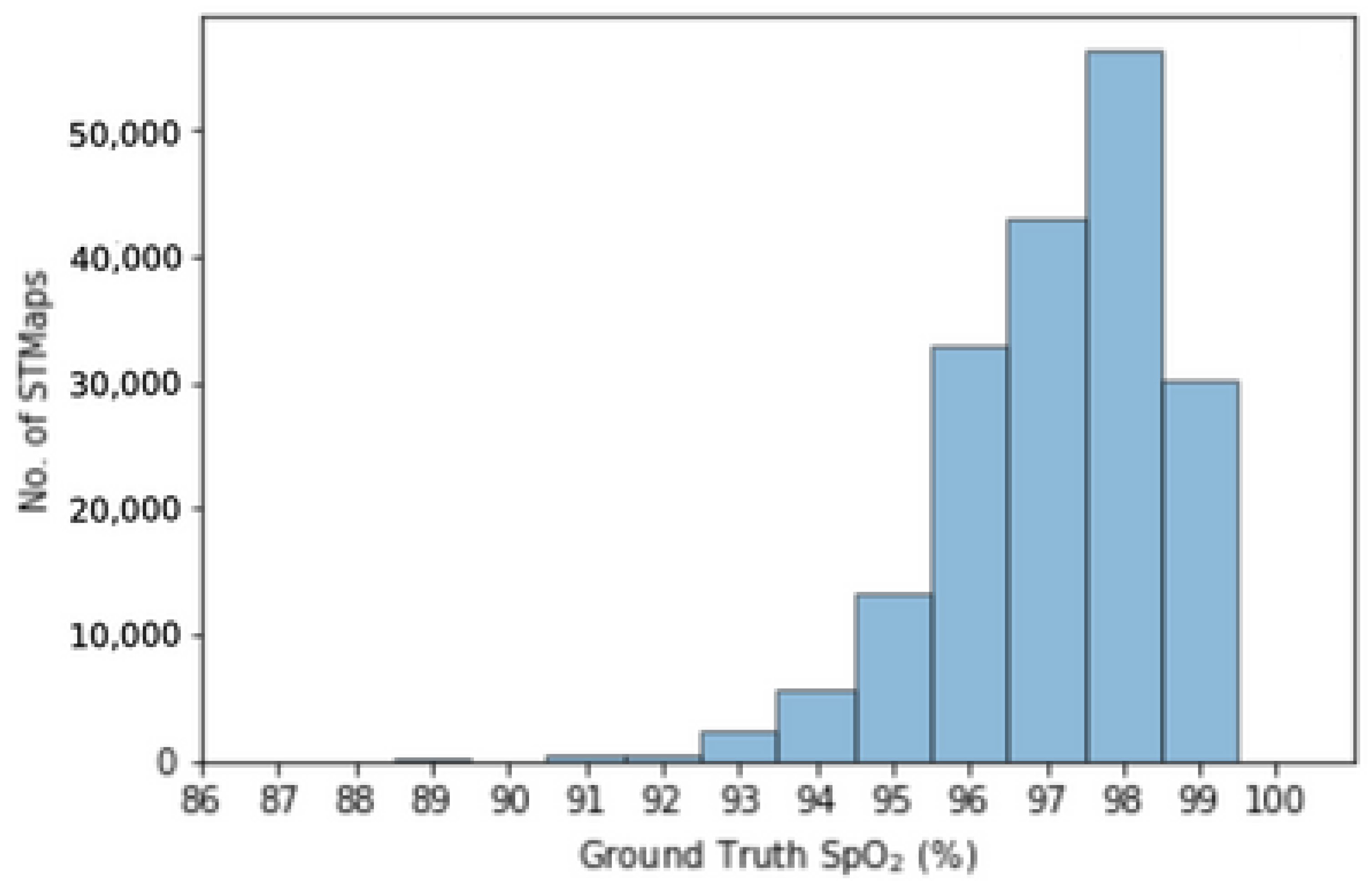

3.3. Dataset

3.4. Evaluation Metrics

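- Mean absolute error (MAE) $= \frac{1}{N}\sum_{i=1}^{N}\left|\hat{y}_i - y_i\right|$;

- Root mean square error (RMSE) $= \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^{2}}$,

where $\hat{y}_i$ is the estimated SpO2 for the $i$-th test sample, $y_i$ is the corresponding reference SpO2, and $N$ is the number of test samples.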
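The two metrics listed above can be computed as in the following minimal sketch. It assumes the standard definitions of MAE and RMSE over paired predicted and reference SpO2 values. The function names and the sample values are hypothetical and for illustration only; they are not data or code from this study.

```python
import math
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Average absolute difference between predicted and reference SpO2 (percentage points)."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def root_mean_square_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Square root of the mean squared difference; penalizes large errors more than MAE."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - r) ** 2 for p, r in zip(predicted, reference))
    return math.sqrt(squared / len(predicted))


# Hypothetical values for illustration only (not measurements from the dataset).
predicted_spo2 = [97.0, 95.0, 99.0, 92.0]
reference_spo2 = [98.0, 96.0, 98.0, 96.0]

mae = mean_absolute_error(predicted_spo2, reference_spo2)
rmse = root_mean_square_error(predicted_spo2, reference_spo2)
print(f"MAE = {mae:.3f}%, RMSE = {rmse:.3f}%")
```

Because RMSE squares each error before averaging, a single large deviation (here, the 4-point miss on the last sample) raises RMSE more than MAE, which is why reporting both gives a fuller picture of estimation quality.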

3.5. Training Settings

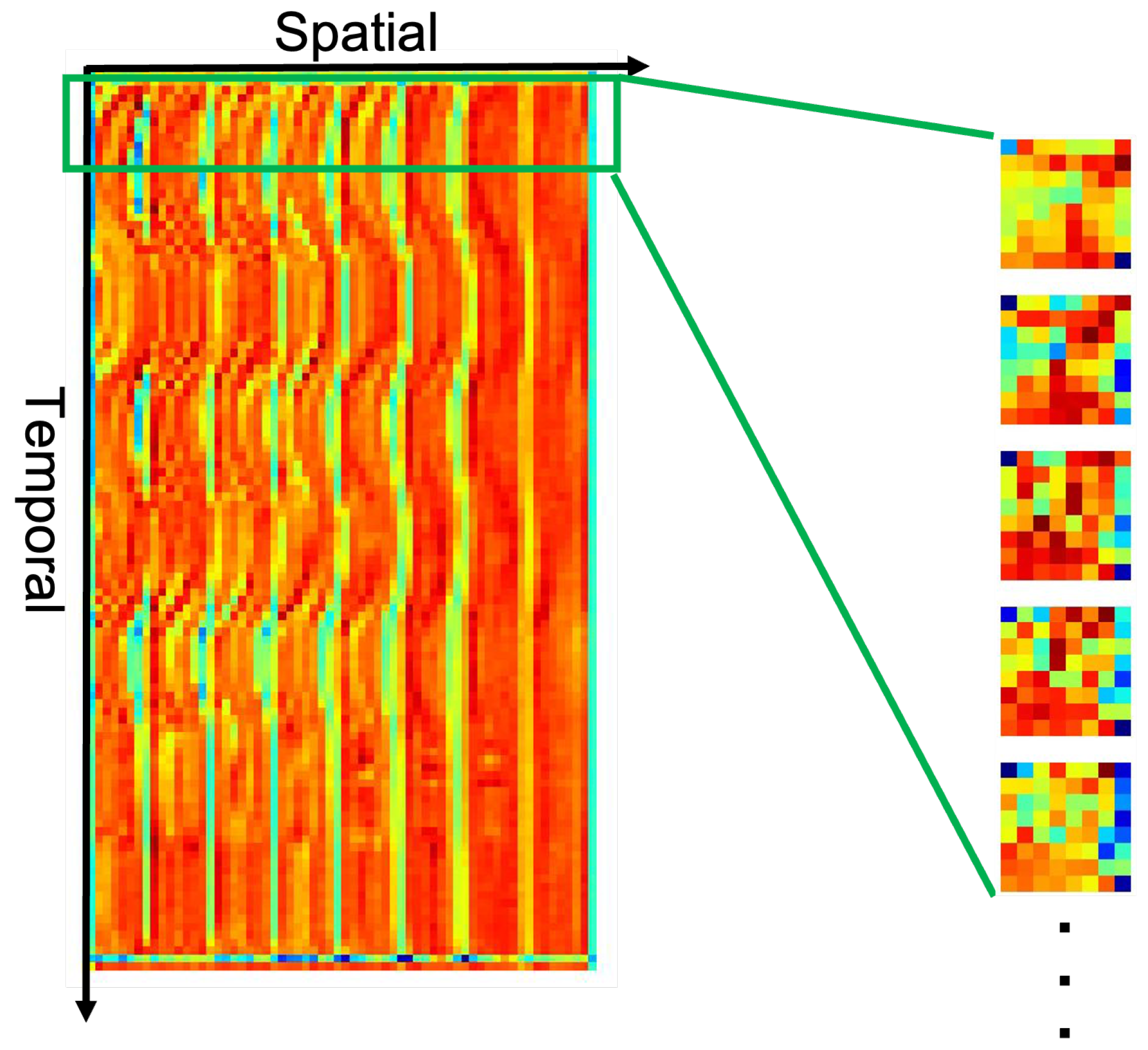

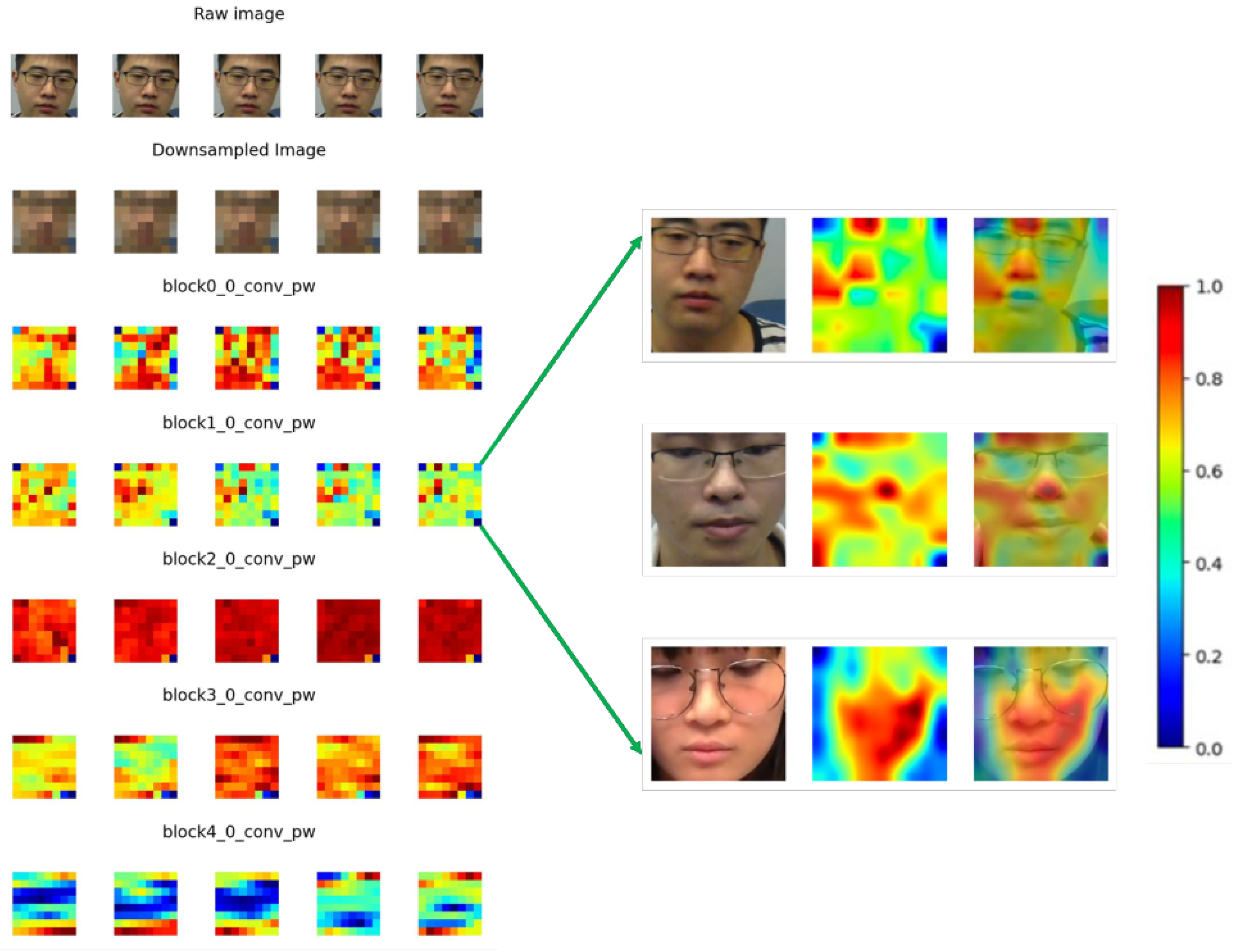

3.6. Feature Map Visualization

4. Results and Discussion

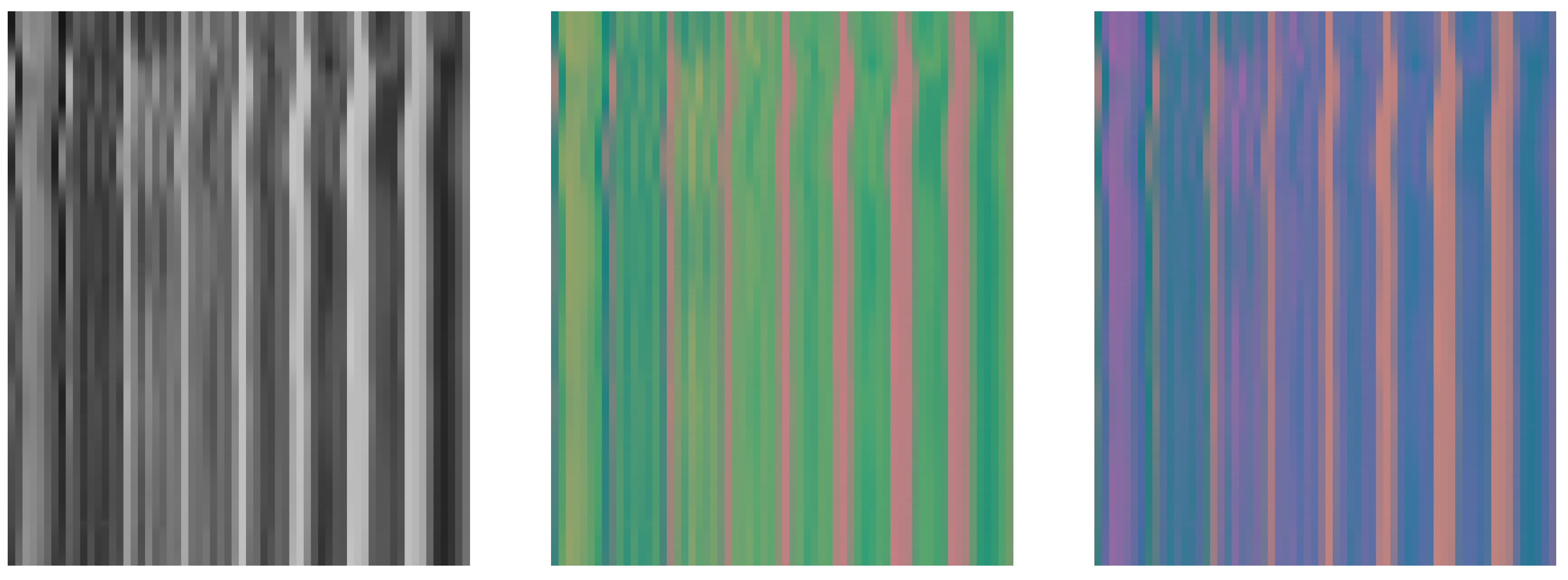

4.1. Performance on STMaps Generated from Different Color Spaces

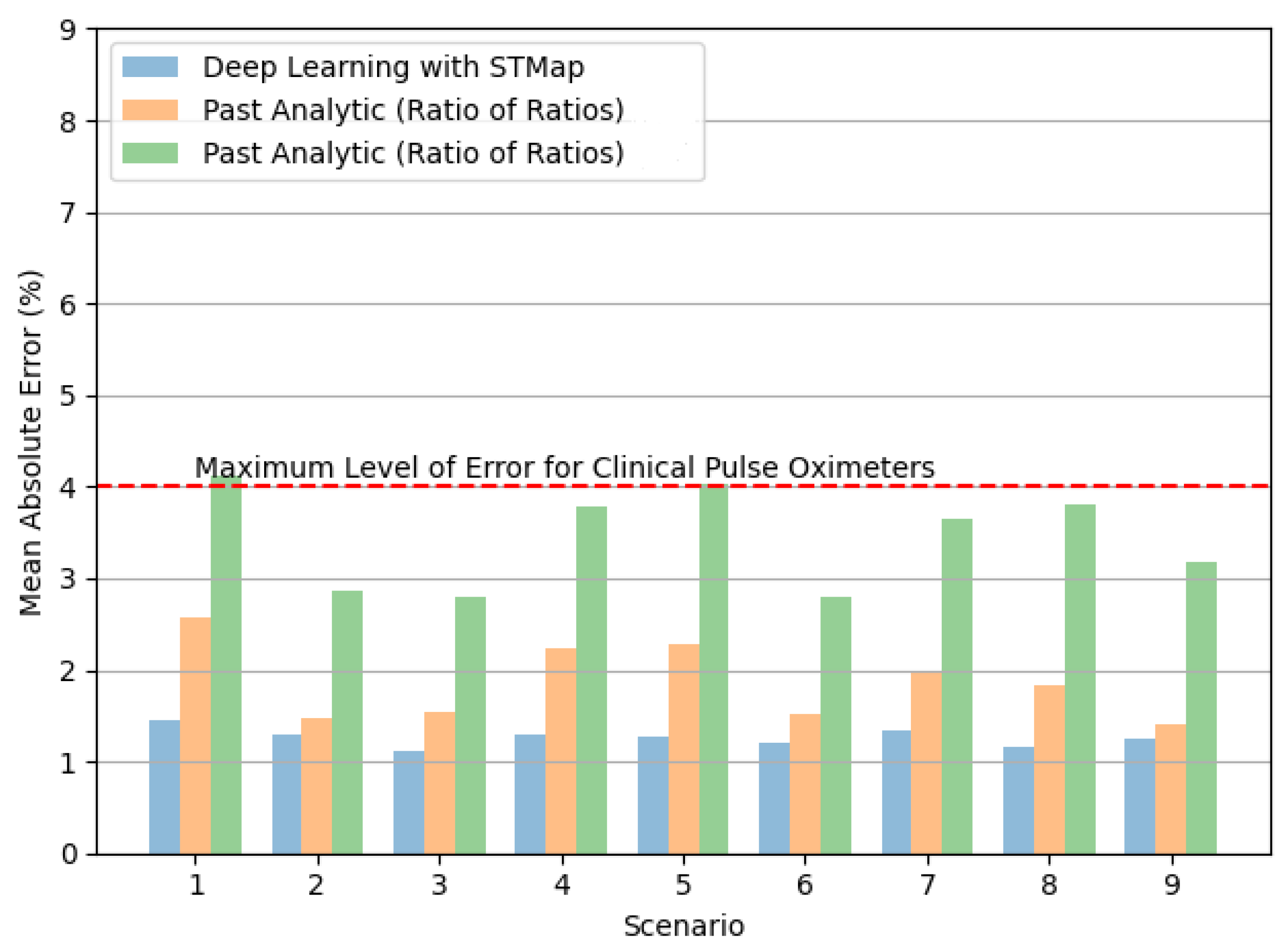

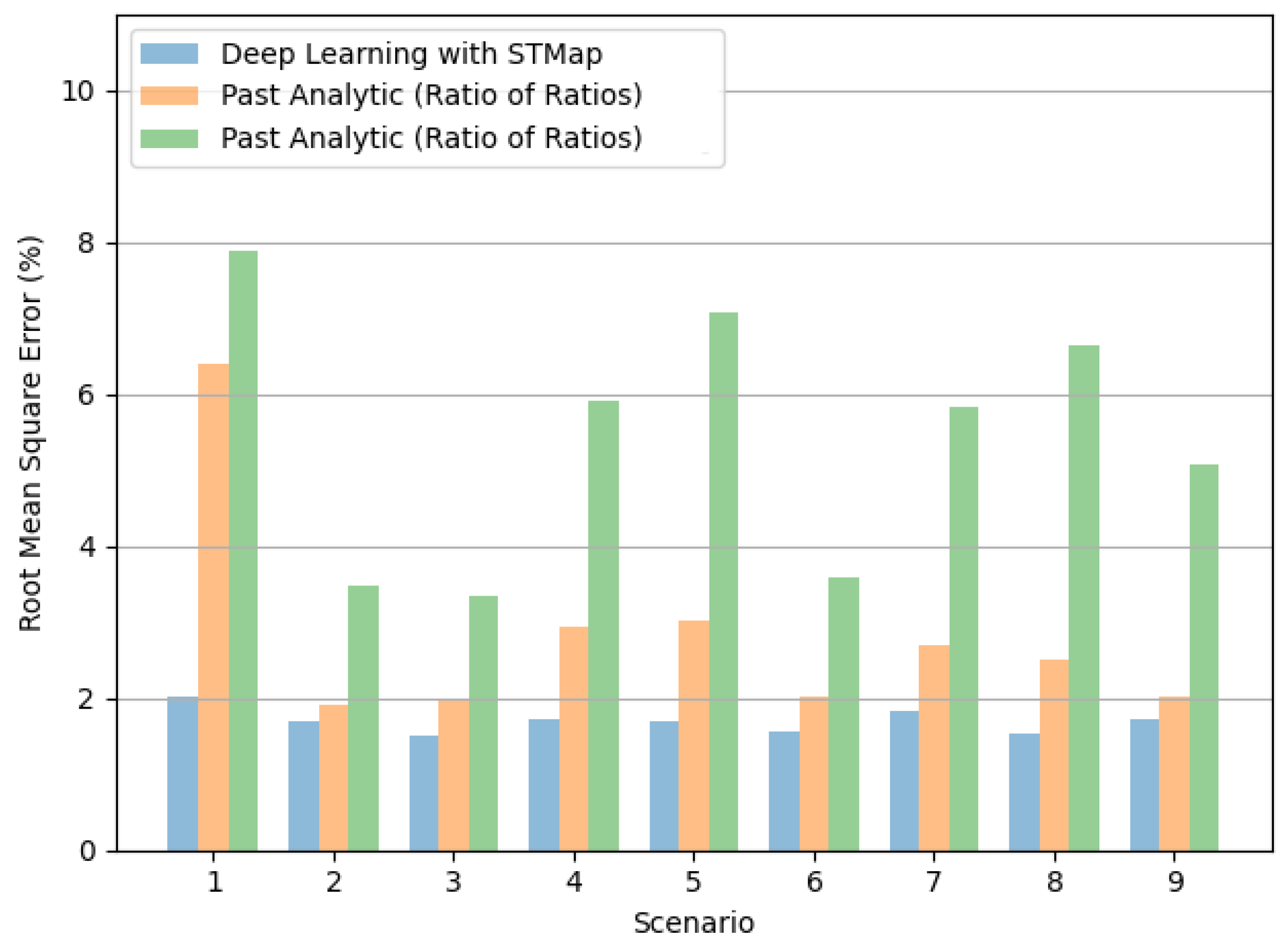

4.2. Performance on Different Subject Scenarios and Acquisition Devices

4.3. Performance over Different SpO2 Ranges

4.4. Feature Maps Learned by CNN Model

5. Conclusions and Future Research Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Castledine, G. The importance of measuring and recording vital signs correctly. Br. J. Nurs. 2006, 15, 285.

2. Molinaro, N.; Schena, E.; Silvestri, S.; Bonotti, F.; Aguzzi, D.; Viola, E.; Buccolini, F.; Massaroni, C. Contactless Vital Signs Monitoring from Videos Recorded with Digital Cameras: An Overview. Front. Physiol. 2022, 13, 160.

3. Adochiei, F.; Rotariu, C.; Ciobotariu, R.; Costin, H. A wireless low-power pulse oximetry system for patient telemonitoring. In Proceedings of the 2011 7th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 12 May 2011; pp. 1–4.

4. Dumitrache-Rujinski, S.; Calcaianu, G.; Zaharia, D.; Toma, C.L.; Bogdan, M. The role of overnight pulse-oximetry in recognition of obstructive sleep apnea syndrome in morbidly obese and non obese patients. Maedica 2013, 8, 237.

5. Ruangritnamchai, C.; Bunjapamai, W.; Pongpanich, B. Pulse oximetry screening for clinically unrecognized critical congenital heart disease in the newborns. Images Paediatr. Cardiol. 2007, 9, 10.

6. Mitra, B.; Luckhoff, C.; Mitchell, R.D.; O’Reilly, G.M.; Smit, D.V.; Cameron, P.A. Temperature screening has negligible value for control of COVID-19. Emerg. Med. Australas. 2020, 32, 867–869.

7. Vilke, G.M.; Brennan, J.J.; Cronin, A.O.; Castillo, E.M. Clinical features of patients with COVID-19: Is temperature screening useful? J. Emerg. Med. 2020, 59, 952–956.

8. Pimentel, M.A.; Redfern, O.C.; Hatch, R.; Young, J.D.; Tarassenko, L.; Watkinson, P.J. Trajectories of vital signs in patients with COVID-19. Resuscitation 2020, 156, 99–106.

9. Starr, N.; Rebollo, D.; Asemu, Y.M.; Akalu, L.; Mohammed, H.A.; Menchamo, M.W.; Melese, E.; Bitew, S.; Wilson, I.; Tadesse, M.; et al. Pulse oximetry in low-resource settings during the COVID-19 pandemic. Lancet Glob. Health 2020, 8, e1121–e1122.

10. Manta, C.; Jain, S.S.; Coravos, A.; Mendelsohn, D.; Izmailova, E.S. An Evaluation of Biometric Monitoring Technologies for Vital Signs in the Era of COVID-19. Clin. Transl. Sci. 2020, 13, 1034–1044.

11. Scully, C.G.; Lee, J.; Meyer, J.; Gorbach, A.M.; Granquist-Fraser, D.; Mendelson, Y.; Chon, K.H. Physiological parameter monitoring from optical recordings with a mobile phone. IEEE Trans. Biomed. Eng. 2011, 59, 303–306.

12. Ding, X.; Nassehi, D.; Larson, E.C. Measuring oxygen saturation with smartphone cameras using convolutional neural networks. IEEE J. Biomed. Health Inform. 2018, 23, 2603–2610.

13. Rouast, P.V.; Adam, M.T.; Chiong, R.; Cornforth, D.; Lux, E. Remote heart rate measurement using low-cost RGB face video: A technical literature review. Front. Comput. Sci. 2018, 12, 858–872.

14. Stogiannopoulos, T.; Cheimariotis, G.A.; Mitianoudis, N. A Study of Machine Learning Regression Techniques for Non-Contact SpO2 Estimation from Infrared Motion-Magnified Facial Video. Information 2023, 14, 301.

15. Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807.

16. Kong, L.; Zhao, Y.; Dong, L.; Jian, Y.; Jin, X.; Li, B.; Feng, Y.; Liu, M.; Liu, X.; Wu, H. Non-contact detection of oxygen saturation based on visible light imaging device using ambient light. Opt. Express 2013, 21, 17464–17471.

17. Shao, D.; Liu, C.; Tsow, F.; Yang, Y.; Du, Z.; Iriya, R.; Yu, H.; Tao, N. Noncontact monitoring of blood oxygen saturation using camera and dual-wavelength imaging system. IEEE Trans. Biomed. Eng. 2015, 63, 1091–1098.

18. Liao, W.; Zhang, C.; Sun, X.; Notni, G. Oxygen saturation estimation from near-infrared multispectral video data using 3D convolutional residual networks. In Proceedings of the Multimodal Sensing and Artificial Intelligence: Technologies and Applications III. SPIE, Munich, Germany, 9 August 2023; Volume 12621, pp. 177–191.

19. Freitas, U.S. Remote camera-based pulse oximetry. In Proceedings of the 6th International Conference on eHealth, Telemedicine, and Social Medicine, Barcelona, Spain, 23–27 March 2014; pp. 59–63.

20. Guazzi, A.R.; Villarroel, M.; Jorge, J.; Daly, J.; Frise, M.C.; Robbins, P.A.; Tarassenko, L. Non-contact measurement of oxygen saturation with an RGB camera. Biomed. Opt. Express 2015, 6, 3320–3338.

21. Bal, U. Non-contact estimation of heart rate and oxygen saturation using ambient light. Biomed. Opt. Express 2015, 6, 86–97.

22. Casalino, G.; Castellano, G.; Zaza, G. A mHealth solution for contact-less self-monitoring of blood oxygen saturation. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7 July 2020; pp. 1–7.

23. Cheng, J.C.; Pan, T.S.; Hsiao, W.C.; Lin, W.H.; Liu, Y.L.; Su, T.J.; Wang, S.M. Using Contactless Facial Image Recognition Technology to Detect Blood Oxygen Saturation. Bioengineering 2023, 10, 524.

24. Cheng, C.H.; Wong, K.L.; Chin, J.W.; Chan, T.T.; So, R.H. Deep Learning Methods for Remote Heart Rate Measurement: A Review and Future Research Agenda. Sensors 2021, 21, 6296.

25. Chen, W.; McDuff, D. Deepphys: Video-based physiological measurement using convolutional attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8 September 2018; pp. 349–365.

26. Liu, X.; Fromm, J.; Patel, S.; McDuff, D. Multi-task temporal shift attention networks for on-device contactless vitals measurement. Adv. Neural Inf. Process. Syst. 2020, 33, 19400–19411.

27. Yu, Z.; Peng, W.; Li, X.; Hong, X.; Zhao, G. Remote heart rate measurement from highly compressed facial videos: An end-to-end deep learning solution with video enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October 2019; pp. 151–160.

28. Perepelkina, O.; Artemyev, M.; Churikova, M.; Grinenko, M. HeartTrack: Convolutional neural network for remote video-based heart rate monitoring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14 June 2020; pp. 288–289.

29. Hu, M.; Qian, F.; Guo, D.; Wang, X.; He, L.; Ren, F. ETA-rPPGNet: Effective time-domain attention network for remote heart rate measurement. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

30. Birla, L.; Shukla, S.; Gupta, A.K.; Gupta, P. ALPINE: Improving remote heart rate estimation using contrastive learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2 January 2023; pp. 5029–5038.

31. Li, B.; Zhang, P.; Peng, J.; Fu, H. Non-contact PPG signal and heart rate estimation with multi-hierarchical convolutional network. Pattern Recognit. 2023, 139, 109421.

32. Sun, W.; Sun, Q.; Sun, H.M.; Sun, Q.; Jia, R.S. ViT-rPPG: A vision transformer-based network for remote heart rate estimation. J. Electron. Imaging 2023, 32, 023024.

33. Speth, J.; Vance, N.; Flynn, P.; Czajka, A. Non-contrastive unsupervised learning of physiological signals from video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17 June 2023; pp. 14464–14474.

34. Ouzar, Y.; Djeldjli, D.; Bousefsaf, F.; Maaoui, C. X-iPPGNet: A novel one stage deep learning architecture based on depthwise separable convolutions for video-based pulse rate estimation. Comput. Biol. Med. 2023, 154, 106592.

35. Wang, R.X.; Sun, H.M.; Hao, R.R.; Pan, A.; Jia, R.S. TransPhys: Transformer-based unsupervised contrastive learning for remote heart rate measurement. Biomed. Signal Process. Control 2023, 86, 105058.

36. Gupta, K.; Sinhal, R.; Badhiye, S.S. Remote photoplethysmography-based human vital sign prediction using cyclical algorithm. J. Biophotonics 2024, 17, e202300286.

37. Othman, W.; Kashevnik, A.; Ali, A.; Shilov, N.; Ryumin, D. Remote Heart Rate Estimation Based on Transformer with Multi-Skip Connection Decoder: Method and Evaluation in the Wild. Sensors 2024, 24, 775.

38. Wu, C.; Chen, J.; Chen, Y.; Chen, A.; Zhou, L.; Wang, X. Pulse rate estimation based on facial videos: An evaluation and optimization of the classical methods using both self-constructed and public datasets. Tradit. Med. Res. 2024, 9, 2.

39. Liu, X.; Zhang, Y.; Yu, Z.; Lu, H.; Yue, H.; Yang, J. rPPG-MAE: Self-supervised Pretraining with Masked Autoencoders for Remote Physiological Measurements. IEEE Trans. Multimed. 2024.

40. Bian, D.; Mehta, P.; Selvaraj, N. Respiratory rate estimation using PPG: A deep learning approach. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20 July 2020; pp. 5948–5952.

41. Ravichandran, V.; Murugesan, B.; Balakarthikeyan, V.; Ram, K.; Preejith, S.; Joseph, J.; Sivaprakasam, M. RespNet: A deep learning model for extraction of respiration from photoplethysmogram. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23 July 2019; pp. 5556–5559.

42. Liu, Z.; Huang, B.; Lin, C.L.; Wu, C.L.; Zhao, C.; Chao, W.C.; Wu, Y.C.; Zheng, Y.; Wang, Z. Contactless Respiratory Rate Monitoring for ICU Patients Based on Unsupervised Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17 June 2023; pp. 6004–6013.

43. Yue, Z.; Shi, M.; Ding, S. Facial Video-based Remote Physiological Measurement via Self-supervised Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13844–13859.

44. Brieva, J.; Ponce, H.; Moya-Albor, E. Non-Contact Breathing Rate Estimation Using Machine Learning with an Optimized Architecture. Mathematics 2023, 11, 645.

45. Lee, H.; Lee, J.; Kwon, Y.; Kwon, J.; Park, S.; Sohn, R.; Park, C. Multitask Siamese Network for Remote Photoplethysmography and Respiration Estimation. Sensors 2022, 22, 5101.

46. Vatanparvar, K.; Gwak, M.; Zhu, L.; Kuang, J.; Gao, A. Respiration Rate Estimation from Remote PPG via Camera in Presence of Non-Voluntary Artifacts. In Proceedings of the 2022 IEEE-EMBS International Conference on Wearable and Implantable Body Sensor Networks (BSN), Ioannina, Greece, 27 September 2022; pp. 1–4.

47. Ren, Y.; Syrnyk, B.; Avadhanam, N. Improving video-based heart rate and respiratory rate estimation via pulse-respiration quotient. In Proceedings of the Workshop on Healthcare AI and COVID-19, Baltimore, MD, USA, 22 July 2022; pp. 136–145.

48. Hu, M.; Wu, X.; Wang, X.; Xing, Y.; An, N.; Shi, P. Contactless blood oxygen estimation from face videos: A multi-model fusion method based on deep learning. Biomed. Signal Process. Control 2023, 81, 104487.

49. Hamoud, B.; Othman, W.; Shilov, N.; Kashevnik, A. Contactless Oxygen Saturation Detection Based on Face Analysis: An Approach and Case Study. In Proceedings of the 2023 33rd Conference of Open Innovations Association (FRUCT), Zilina, Slovakia, 24 May 2023; pp. 54–62.

50. Akamatsu, Y.; Onishi, Y.; Imaoka, H. Blood Oxygen Saturation Estimation from Facial Video Via DC and AC Components of Spatio-Temporal Map. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4 June 2023; pp. 1–5.

51. Gupta, A.; Ravelo-Garcia, A.G.; Dias, F.M. Availability and performance of face based non-contact methods for heart rate and oxygen saturation estimations: A systematic review. Comput. Methods Programs Biomed. 2022, 219, 106771.

52. Niu, X.; Shan, S.; Han, H.; Chen, X. Rhythmnet: End-to-end heart rate estimation from face via spatial-temporal representation. IEEE Trans. Image Process. 2019, 29, 2409–2423.

53. Niu, X.; Han, H.; Shan, S.; Chen, X. VIPL-HR: A multi-modal database for pulse estimation from less-constrained face video. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 562–576.

54. Severinghaus, J.W. Takuo Aoyagi: Discovery of pulse oximetry. Anesth. Analg. 2007, 105, S1–S4.

55. Tian, X.; Wong, C.W.; Ranadive, S.M.; Wu, M. A Multi-Channel Ratio-of-Ratios Method for Noncontact Hand Video Based SpO2 Monitoring Using Smartphone Cameras. IEEE J. Sel. Top. Signal Process. 2022, 16, 197–207.

56. Lopez, S.; Americas, R. Pulse oximeter fundamentals and design. Freescale Semicond. 2012, 23.

57. Azhar, F.; Shahrukh, I.; Zeeshan-ul Haque, M.; Shams, S.; Azhar, A. An Hybrid Approach for Motion Artifact Elimination in Pulse Oximeter using MatLab. In Proceedings of the 4th European Conference of the International Federation for Medical and Biological Engineering, Antwerp, Belgium, 23 November 2008; Springer: Berlin/Heidelberg, Germany, 2009; Volume 22, pp. 1100–1103.

58. Nitzan, M.; Romem, A.; Koppel, R. Pulse oximetry: Fundamentals and technology update. Med. Devices 2014, 7, 231.

59. Mathew, J.; Tian, X.; Wu, M.; Wong, C.W. Remote Blood Oxygen Estimation From Videos Using Neural Networks. arXiv 2021, arXiv:2107.05087.

60. Schmitt, J. Optical Measurement of Blood Oxygenation by Implantable Telemetry; Technical Report G558–15; Stanford University: Stanford, CA, USA, 1986.

61. Takatani, S.; Graham, M.D. Theoretical analysis of diffuse reflectance from a two-layer tissue model. IEEE Trans. Biomed. Eng. 1979, 26, 656–664.

62. Sun, Y.; Thakor, N. Photoplethysmography revisited: From contact to noncontact, from point to imaging. IEEE Trans. Biomed. Eng. 2015, 63, 463–477.

63. Xiao, H.; Liu, T.; Sun, Y.; Li, Y.; Zhao, S.; Avolio, A. Remote photoplethysmography for heart rate measurement: A review. Biomed. Signal Process. Control 2024, 88, 105608.

64. Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13 August 2016; pp. 785–794.

65. Niu, X.; Yu, Z.; Han, H.; Li, X.; Shan, S.; Zhao, G. Video-based remote physiological measurement via cross-verified feature disentangling. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 295–310.

66. Yu, Z.; Li, X.; Wang, P.; Zhao, G. Transrppg: Remote photoplethysmography transformer for 3d mask face presentation attack detection. IEEE Signal Process. Lett. 2021, 28, 1290–1294.

67. Niu, X.; Han, H.; Shan, S.; Chen, X. Synrhythm: Learning a deep heart rate estimator from general to specific. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20 August 2018; pp. 3580–3585.

68. Niu, X.; Zhao, X.; Han, H.; Das, A.; Dantcheva, A.; Shan, S.; Chen, X. Robust remote heart rate estimation from face utilizing spatial-temporal attention. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14 May 2019; pp. 1–8.

69. Baltrusaitis, T.; Zadeh, A.; Lim, Y.C.; Morency, L.P. OpenFace 2.0: Facial Behavior Analysis Toolkit. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15 May 2018; pp. 59–66.

70. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June 2016; pp. 770–778.

71. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21 July 2017; pp. 4700–4708.

72. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9 June 2019; pp. 6105–6114.

73. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

74. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April 2018.

75. Yang, Y.; Liu, C.; Yu, H.; Shao, D.; Tsow, F.; Tao, N. Motion robust remote photoplethysmography in CIELab color space. J. Biomed. Opt. 2016, 21, 117001.

76. Stricker, R.; Müller, S.; Gross, H.M. Non-contact video-based pulse rate measurement on a mobile service robot. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25 August 2014; pp. 1056–1062.

77. International Organization for Standardization. Particular Requirements for Basic Safety and Essential Performance of Pulse Oximeter Equipment; International Organization for Standardization: Geneva, Switzerland, 2011.

78. Li, X.; Han, H.; Lu, H.; Niu, X.; Yu, Z.; Dantcheva, A.; Zhao, G.; Shan, S. The 1st challenge on remote physiological signal sensing (repss). In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13 June 2020; pp. 314–315.

79. Fitzpatrick, T.B. The validity and practicality of sun-reactive skin types I through VI. Arch. Dermatol. 1988, 124, 869–871.

80. Nowara, E.M.; McDuff, D.; Veeraraghavan, A. A meta-analysis of the impact of skin tone and gender on non-contact photoplethysmography measurements. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13 June 2020; pp. 284–285.

81. Shirbani, F.; Hui, N.; Tan, I.; Butlin, M.; Avolio, A.P. Effect of ambient lighting and skin tone on estimation of heart rate and pulse transit time from video plethysmography. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20 July 2020; pp. 2642–2645.

82. Dasari, A.; Prakash, S.K.A.; Jeni, L.A.; Tucker, C.S. Evaluation of biases in remote photoplethysmography methods. NPJ Digit. Med. 2021, 4, 91.

| Model | Params | FLOPs |
|---|---|---|
| EfficientNet-B3 [72] | 9.2 M | 1.0 B |
| ResNet-50 [70] | 26 M | 4.1 B |
| DenseNet-121 [71] | 8 M | 5.7 B |

| Model | RGB MAE (%) | RGB RMSE (%) | YUV MAE (%) | YUV RMSE (%) | RGB + YUV MAE (%) | RGB + YUV RMSE (%) | YCrCb MAE (%) | YCrCb RMSE (%) |
|---|---|---|---|---|---|---|---|---|
| EfficientNet-B3 [72] | 1.274 | 1.710 | 1.304 | 1.756 | 1.279 | 1.707 | 1.273 | 1.680 |
| ResNet-50 [70] | 1.309 | 1.741 | 1.307 | 1.750 | 1.321 | 1.781 | 1.423 | 1.939 |
| DenseNet-121 [71] | 1.284 | 1.722 | 1.357 | 1.783 | 1.296 | 1.713 | 1.421 | 1.860 |

| Method | MAE (%) | RMSE (%) |
|---|---|---|
| Deep Learning with STMap (EfficientNet-B3 + RGB) | 1.274 | 1.710 |
| Deep Learning [48] | 1.000 | 1.430 |
| Deep Learning [49] | 1.170 | - |
| Past Analytic (Ratio of Ratios) [22] | 3.334 | 5.137 |
| Past Analytic (Ratio of Ratios) [21] | 1.838 | 2.489 |

| Method | Normal MAE (%) | Normal RMSE (%) | Abnormal MAE (%) | Abnormal RMSE (%) |
|---|---|---|---|---|
| Deep Learning with STMap (EfficientNet-B3 + RGB) | 0.978 | 1.288 | 3.077 | 3.563 |
| Past Analytic (Ratio of Ratios) [22] | 3.140 | 4.972 | 6.798 | 7.496 |
| Past Analytic (Ratio of Ratios) [21] | 1.690 | 2.264 | 4.482 | 5.034 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cheng, C.-H.; Yuen, Z.; Chen, S.; Wong, K.-L.; Chin, J.-W.; Chan, T.-T.; So, R.H.Y. Contactless Blood Oxygen Saturation Estimation from Facial Videos Using Deep Learning. Bioengineering 2024, 11, 251. https://doi.org/10.3390/bioengineering11030251
