DSP-KD: Dual-Stage Progressive Knowledge Distillation for Skin Disease Classification

Abstract

1. Introduction

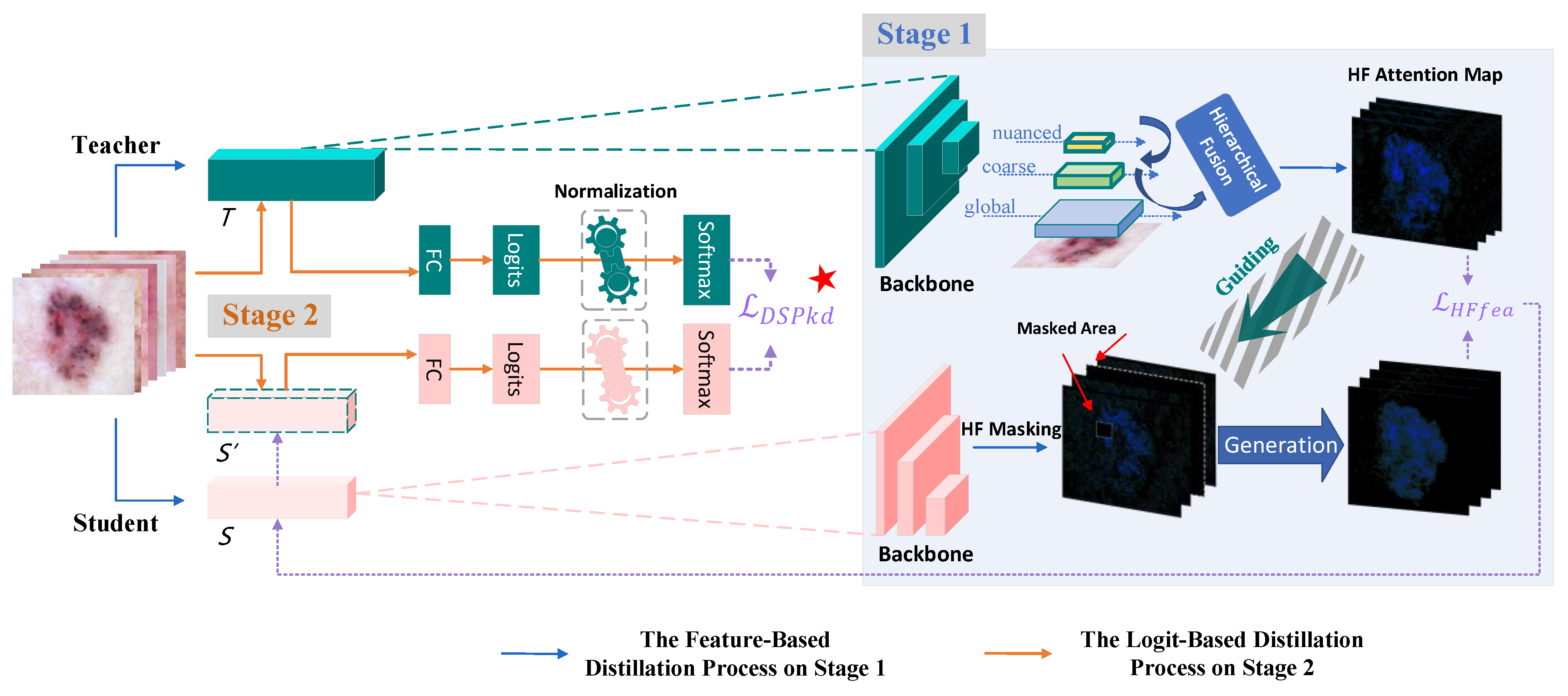

- We propose a progressive fusion distillation framework that draws on diverse knowledge from dermatoscopic images. Unlike approaches that distill from multiple teachers simultaneously, our framework adopts a dual-stage distillation paradigm with a single teacher. This strategy gradually reduces the adverse effect of teacher–student differences on performance and lets the student learn “from the inside out”, that is, from the feature level to the logit level.

- In Stage 1, we design a hierarchical fusion masking strategy that serves as a plug-and-play enhancement module. By horizontally fusing shallow texture features and deep semantic features of dermatoscopic images, it guides the student to reconstruct the feature maps used for prediction.

- In Stage 2, the student model, with its network parameters already updated in Stage 1, undergoes a second distillation with the same teacher model. A normalization operation applied to the logits during this stage enriches the information available for label prediction and improves fitting.

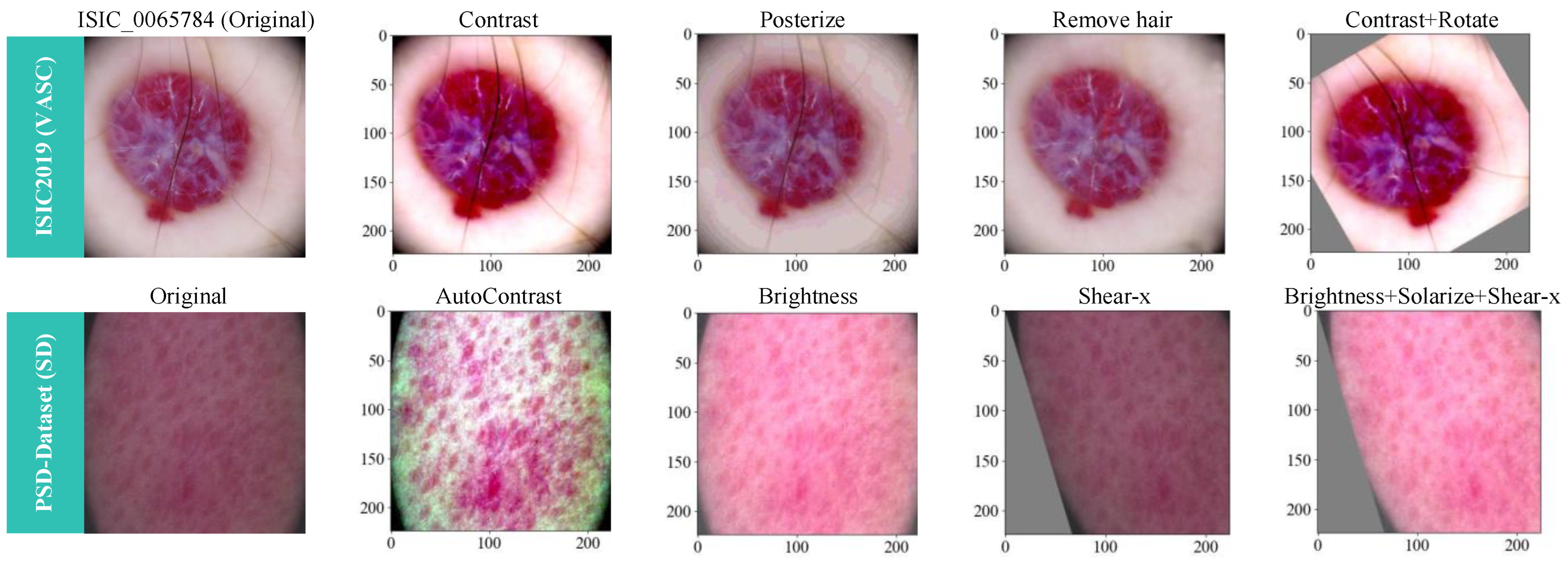

- Extensive experiments are conducted on the publicly available ISIC2019 dataset, which focuses on high-risk malignant skin cancer, and on our private dataset, which covers common skin diseases such as acne. To further improve model performance, we apply the RandAugment data augmentation method and a pseudo-shadow removal technique during dataset preprocessing.

- The effectiveness of our KD framework and the contribution of each of its key components are validated through comparisons with baseline models and other state-of-the-art KD methods.
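As a rough illustration of the two stages listed in the contributions above, the sketch below pairs a masked feature-matching loss (feature level) with a temperature-softened logit loss on normalized logits (logit level). It is not the authors' implementation. The function names, the purely random mask (standing in for the hierarchical fusion mask), the omission of any generation network, and the zero-mean, unit-variance normalization are all assumptions made for illustration.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of a list of logits at a given temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def masked_feature_loss(student_feat, teacher_feat, mask_ratio=0.5, rng=None):
    """Stage 1 (simplified): zero out a random subset of student features and
    take the mean squared error against the unmasked teacher features.
    An MGD-style method would first pass the masked features through a small
    generation network; that step is omitted in this sketch."""
    rng = rng or random.Random(0)
    masked = [0.0 if rng.random() < mask_ratio else s for s in student_feat]
    return sum((m - t) ** 2 for m, t in zip(masked, teacher_feat)) / len(teacher_feat)


def normalize(logits):
    """Assumed normalization: shift to zero mean and scale to unit standard deviation."""
    mean = sum(logits) / len(logits)
    std = math.sqrt(sum((z - mean) ** 2 for z in logits) / len(logits))
    return [(z - mean) / (std + 1e-8) for z in logits]


def logit_distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Stage 2: KL(teacher || student) on normalized, temperature-softened logits."""
    p_teacher = softmax(normalize(teacher_logits), temperature)
    p_student = softmax(normalize(student_logits), temperature)
    return sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))


# Toy values, invented for illustration only.
stage1 = masked_feature_loss([0.2, 0.8, 0.5, 0.1], [0.3, 0.7, 0.6, 0.0])
stage2 = logit_distillation_loss([2.0, 1.5, 0.2], [4.0, 1.0, 0.5])
print(f"stage 1 feature loss: {stage1:.4f}, stage 2 logit loss: {stage2:.4f}")
```

In a progressive scheme like the one described, the Stage 1 loss would be minimized first, and the Stage 2 loss would then be applied to the student whose parameters Stage 1 has already updated. With the normalization assumed here, teacher and student logits that differ only in scale or offset give a loss of approximately zero.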

2. Related Work

2.1. Skin Disease Classification with CNN

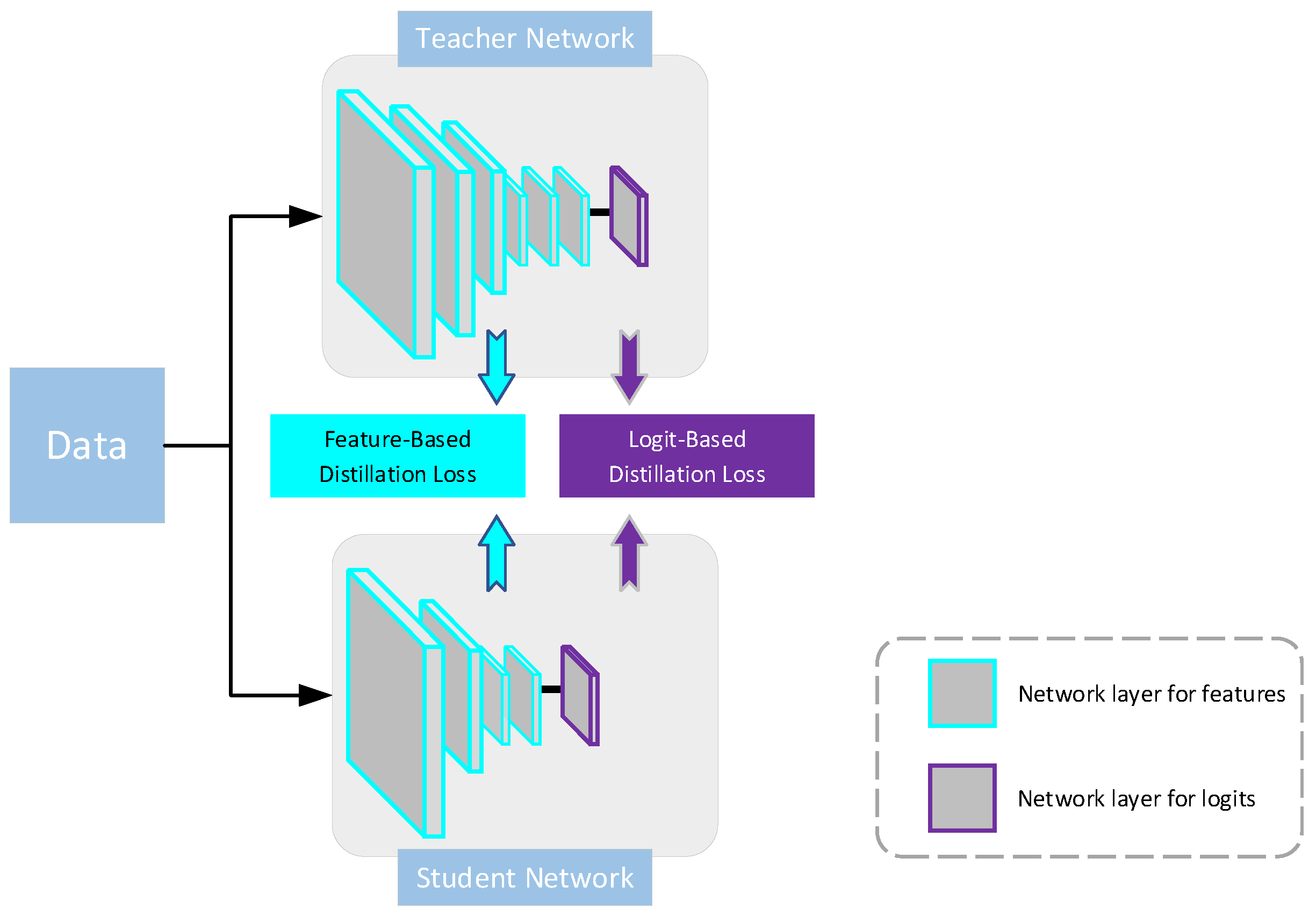

2.2. Knowledge Distillation

3. Proposed Framework: DSP-KD

3.1. Overall Structure

3.2. Methodology on Stage 1

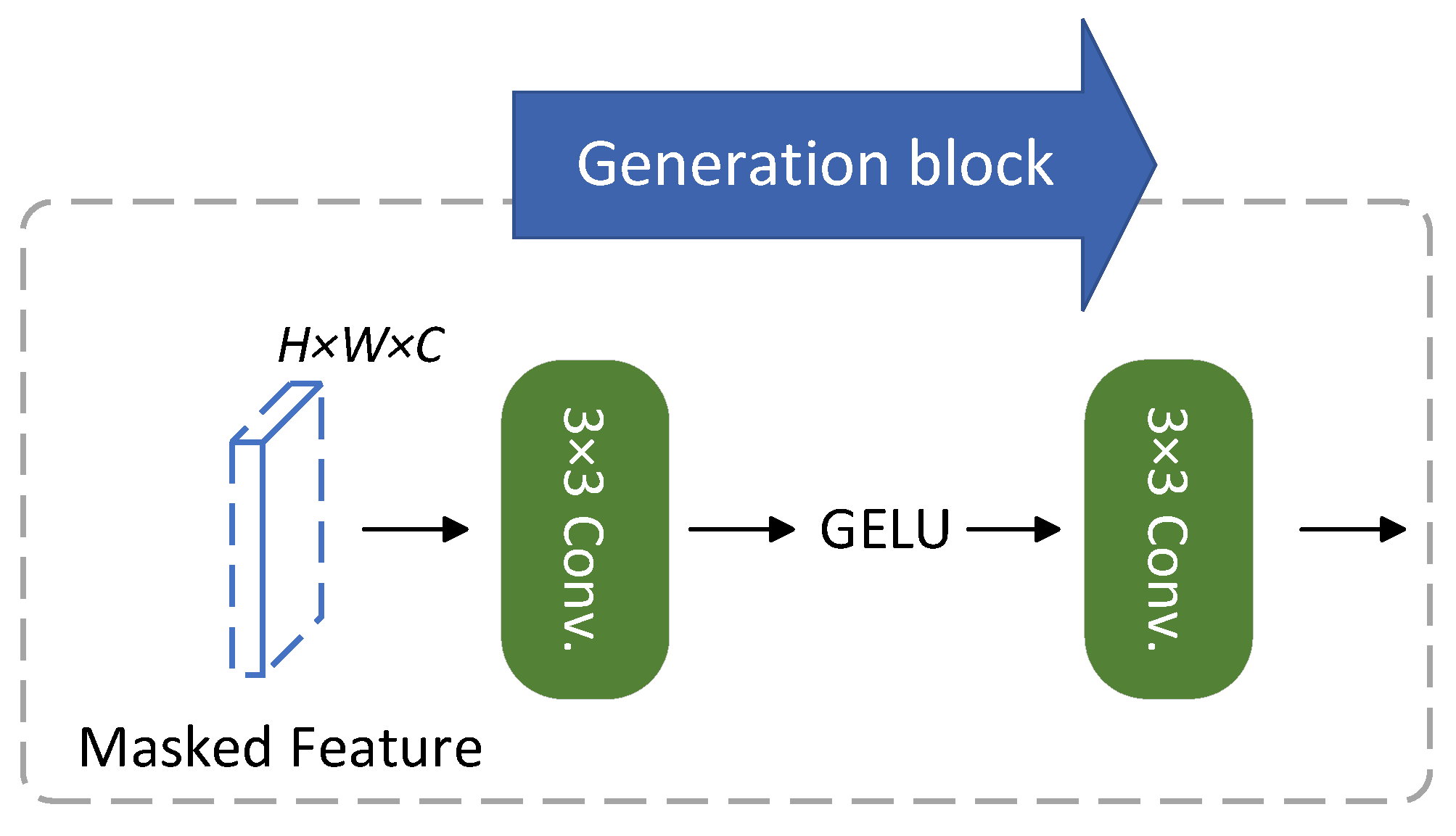

3.2.1. Masked Generative Distillation (MGD)

3.2.2. Hierarchical Fusion Masking Strategy

3.3. Methodology on Stage 2

Normalized Logits

4. Experiments and Results

4.1. Datasets and Data Preprocessing

4.2. Experimental Setup

4.3. Evaluation Metrics

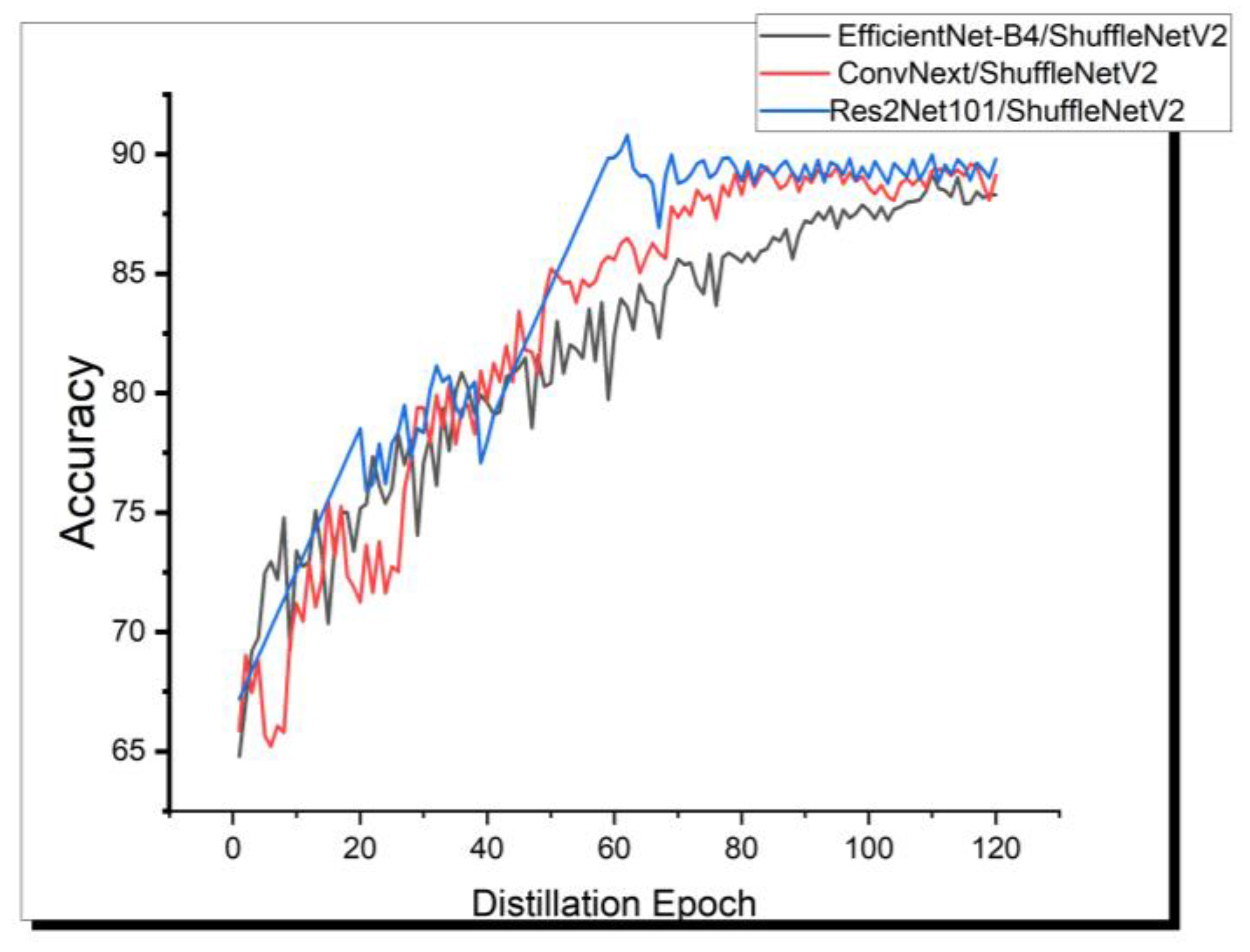

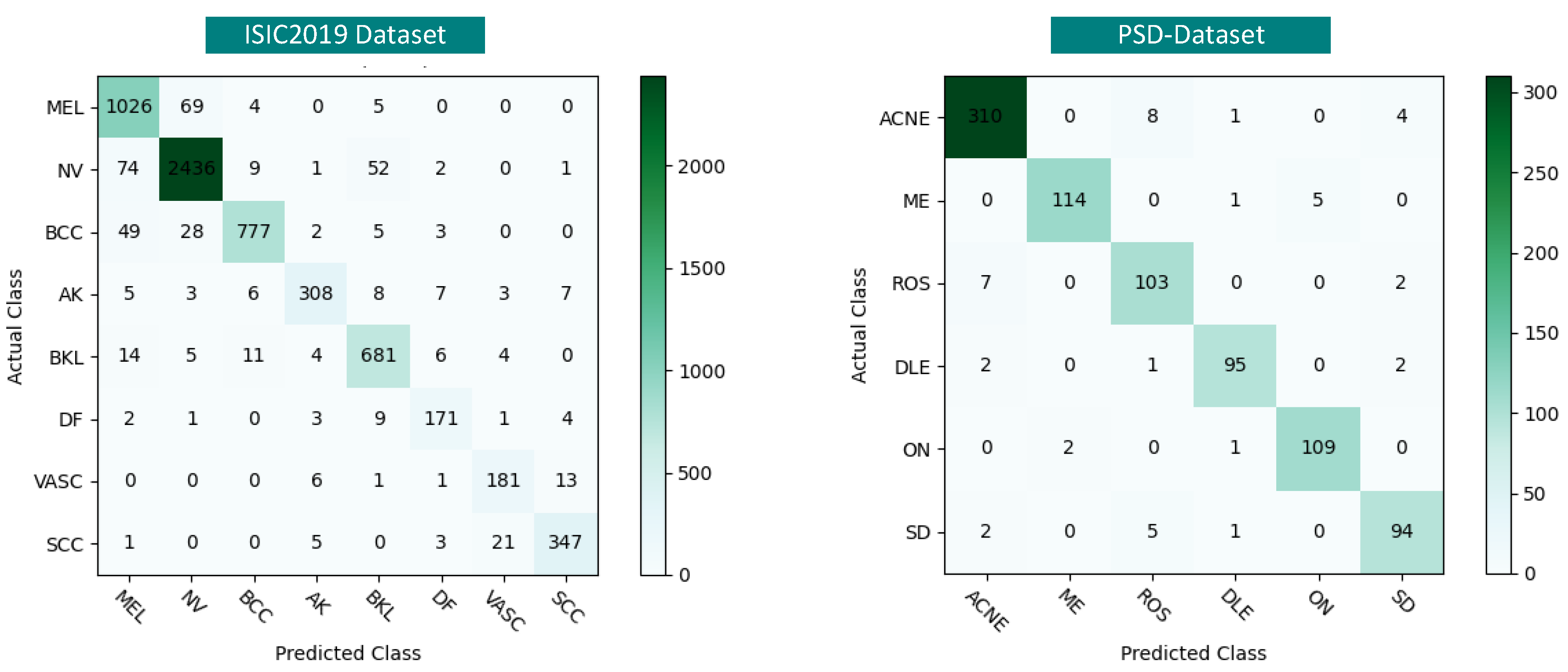

4.4. Results

4.4.1. Comparison of the Potential Teacher and Student Models

4.4.2. Overall Comparison of KD Methods with Different Knowledge Sources

4.4.3. Per-Class Performance Comparison of KD Methods

4.4.4. Time Inference

4.5. Ablation Study of DSP-KD

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Karimkhani, C.; Dellavalle, R.P.; Coffeng, L.E.; Flohr, C.; Hay, R.J.; Langan, S.M.; Nsoesie, E.O.; Ferrari, A.J.; Erskine, H.E.; Silverberg, J.I. Global skin disease morbidity and mortality: An update from the global burden of disease study 2013. JAMA Dermatol. 2017, 153, 406–412.

- Gordon, R. Skin cancer: An overview of epidemiology and risk factors. Semin. Oncol. Nurs. 2013, 29, 160–169.

- Abbas, Q.; Garcia, I.; Rashid, M. Automatic skin tumour border detection for digital dermoscopy using a new digital image analysis scheme. Br. J. Biomed. Sci. 2010, 67, 177–183.

- Walter, F.M.; Prevost, A.T.; Vasconcelos, J.; Hall, P.N.; Burrows, N.P.; Morris, H.C.; Kinmonth, A.L.; Emery, J.D. Using the 7-point checklist as a diagnostic aid for pigmented skin lesions in general practice: A diagnostic validation study. Br. J. Gen. Pract. 2013, 63, e345–e353.

- Jensen, J.D.; Elewski, B.E. The ABCDEF rule: Combining the “ABCDE rule” and the “ugly duckling sign” in an effort to improve patient self-screening examinations. J. Clin. Aesthetic Dermatol. 2015, 8, 15.

- Benyahia, S.; Meftah, B.; Lézoray, O. Multi-features extraction based on deep learning for skin lesion classification. Tissue Cell 2022, 74, 101701.

- Singh, P.; Sizikova, E.; Cirrone, J. CASS: Cross architectural self-supervision for medical image analysis. arXiv 2022, arXiv:2206.04170.

- Wang, Y.; Wang, Y.; Cai, J.; Lee, T.K.; Miao, C.; Wang, Z.J. Ssd-kd: A self-supervised diverse knowledge distillation method for lightweight skin lesion classification using dermoscopic images. Med. Image Anal. 2023, 84, 102693.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. Stat 2015, 1050, 9.

- Wang, L.; Yoon, K.-J. Knowledge distillation and student-teacher learning for visual intelligence: A review and new outlooks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3048–3068.

- Chi, Z.; Zheng, T.; Li, H.; Yang, Z.; Wu, B.; Lin, B.; Cai, D. NormKD: Normalized Logits for Knowledge Distillation. arXiv 2023, arXiv:2308.00520.

- Chen, W.-C.; Chang, C.-C.; Lee, C.-R. Knowledge distillation with feature maps for image classification. In Proceedings of the Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Revised Selected Papers, Part III 14. pp. 200–215.

- Hsu, Y.-C.; Smith, J.; Shen, Y.; Kira, Z.; Jin, H. A closer look at knowledge distillation with features, logits, and gradients. arXiv 2022, arXiv:2203.10163.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Tang, H. Image Classification based on CNN: Models and Modules. In Proceedings of the 2022 International Conference on Big Data, Information and Computer Network (BDICN), Sanya, China, 20–22 January 2022; pp. 693–696.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 60, 84–90.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 1–9.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. Shufflenet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Hoang, L.; Lee, S.-H.; Lee, E.-J.; Kwon, K.-R. Multiclass skin lesion classification using a novel lightweight deep learning framework for smart healthcare. Appl. Sci. 2022, 12, 2677.

- Kumar, T.S.; Annappa, B.; Dodia, S. Classification of Skin Cancer Images using Lightweight Convolutional Neural Network. In Proceedings of the 2023 4th International Conference for Emerging Technology (INCET), Belgaum, India, 26–28 May 2023; pp. 1–6.

- Liu, X.; Yang, L.; Ma, X.; Kuang, H. Skin Disease Classification Based on Multi-level Feature Fusion and Attention Mechanism. In Proceedings of the 2023 IEEE 3rd International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 26–28 May 2023; pp. 1500–1504.

- Maqsood, S.; Damaševičius, R. Multiclass skin lesion localization and classification using deep learning based features fusion and selection framework for smart healthcare. Neural Netw. 2023, 160, 238–258.

- Hao, Z.; Guo, J.; Han, K.; Hu, H.; Xu, C.; Wang, Y. VanillaKD: Revisit the Power of Vanilla Knowledge Distillation from Small Scale to Large Scale. arXiv 2023, arXiv:2305.15781.

- Zhou, H.; Song, L.; Chen, J.; Zhou, Y.; Wang, G.; Yuan, J.; Zhang, Q. Rethinking Soft Labels for Knowledge Distillation: A Bias–Variance Tradeoff Perspective. In Proceedings of the International Conference on Learning Representations, Virtual Event, Austria, 3–7 May 2021.

- Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. Fitnets: Hints for thin deep nets. arXiv 2014, arXiv:1412.6550.

- Kim, J.; Park, S.; Kwak, N. Paraphrasing complex network: Network compression via factor transfer. Adv. Neural Inf. Process. Syst. 2018, 31, 2760–2769.

- Yang, Z.; Li, Z.; Shao, M.; Shi, D.; Yuan, Z.; Yuan, C. Masked generative distillation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland; pp. 53–69.

- Liu, Y.; Zhang, W.; Wang, J. Adaptive multi-teacher multi-level knowledge distillation. Neurocomputing 2020, 415, 106–113.

- Pham, C.; Hoang, T.; Do, T.-T. Collaborative Multi-Teacher Knowledge Distillation for Learning Low Bit-width Deep Neural Networks. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 6435–6443.

- Li, L.; Su, W.; Liu, F.; He, M.; Liang, X. Knowledge Fusion Distillation: Improving Distillation with Multi-scale Attention Mechanisms. Neural Process. Lett. 2023, 55, 6165–6180.

- Li, C.; Li, G.; Zhang, H.; Ji, D. Embedded mutual learning: A novel online distillation method integrating diverse knowledge sources. Appl. Intell. 2023, 53, 11524–11537.

- Khan, M.S.; Alam, K.N.; Dhruba, A.R.; Zunair, H.; Mohammed, N. Knowledge distillation approach towards melanoma detection. Comput. Biol. Med. 2022, 146, 105581.

- Back, S.; Lee, S.; Shin, S.; Yu, Y.; Yuk, T.; Jong, S.; Ryu, S.; Lee, K. Robust skin disease classification by distilling deep neural network ensemble for the mobile diagnosis of herpes zoster. IEEE Access 2021, 9, 20156–20169.

- Combalia, M.; Codella, N.C.; Rotemberg, V.; Helba, B.; Vilaplana, V.; Reiter, O.; Carrera, C.; Barreiro, A.; Halpern, A.C.; Puig, S. Bcn20000: Dermoscopic lesions in the wild. arXiv 2019, arXiv:1908.02288.

- Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 702–703.

- Contributors, M. OpenMMLab’s Pre-Training Toolbox and Benchmark. 2023. Available online: https://github.com/open-mmlab/mmpretrain (accessed on 31 August 2023).

- Contributors, M. OpenMMLab Model Compression Toolbox and Benchmark. 2021. Available online: https://github.com/open-mmlab/mmrazor (accessed on 15 July 2023).

- Contributors, M. MMEngine: OpenMMLab Foundational Library for Training Deep Learning Models. 2022. Available online: https://github.com/open-mmlab/mmengine (accessed on 15 July 2023).

- Jin, Y.; Wang, J.; Lin, D. Multi-Level Logit Distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 24276–24285.

| Model | Parameters/Million | FLOPs/Giga | Accuracy/% (ISIC2019) | Accuracy/% (PSD-Dataset) | Precision/% (ISIC2019) | Precision/% (PSD-Dataset) | Specificity/% (ISIC2019) | Specificity/% (PSD-Dataset) | F1-Score/% (ISIC2019) | F1-Score/% (PSD-Dataset) |
|---|---|---|---|---|---|---|---|---|---|---|
| Res2Net101 | 43.174 | 8.102 | 92.59 | 94.66 | 91.96 | 94.26 | 98.08 | 99.35 | 88.67 | 85.24 |
| ConvNeXt | 87.575 | 15.383 | 90.21 | 92.18 | 90.64 | 91.98 | 97.25 | 98.17 | 87.45 | 81.15 |
| EfficientNet-B4 | 19.342 | 1.543 | 89.54 | 91.23 | 88.72 | 92.21 | 97.93 | 98.24 | 87.22 | 82.66 |
| ShuffleNetV1 | 0.912 | 0.143 | 85.37 | 86.14 | 85.11 | 86.97 | 90.63 | 91.45 | 79.63 | 77.32 |
| ShuffleNetV2 | 1.262 | 0.148 | 86.21 | 89.48 | 86.56 | 90.30 | 92.40 | 95.24 | 82.49 | 80.71 |
| EfficientNet-B0 | 5.289 | 0.4 | 82.71 | 83.26 | 82.19 | 82.88 | 88.35 | 88.93 | 76.85 | 75.95 |
| MobileNetV2 | 3.505 | 0.313 | 86.34 | 88.94 | 86.32 | 88.64 | 91.26 | 96.34 | 81.30 | 80.22 |
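The metric columns in the table above (accuracy, precision, specificity, F1-score) can all be derived from a multi-class confusion matrix. The following is a minimal sketch, assuming one-vs-rest counts per class and macro-averaging across classes. The source does not state which averaging it uses, and the 3-class matrix is made-up toy data, not taken from either dataset.

```python
def per_class_counts(confusion, k):
    """Return (TP, FP, FN, TN) for class k. Rows are true labels, columns are predictions."""
    n = len(confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(confusion[r][k] for r in range(n)) - tp
    tn = sum(map(sum, confusion)) - tp - fn - fp
    return tp, fp, fn, tn


def macro_metrics(confusion):
    """Overall accuracy plus macro-averaged precision, specificity and F1."""
    n = len(confusion)
    total = sum(map(sum, confusion))
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    precisions, specificities, f1_scores = [], [], []
    for k in range(n):
        tp, fp, fn, tn = per_class_counts(confusion, k)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        specificities.append(specificity)
        f1_scores.append(f1)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "specificity": sum(specificities) / n,
        "f1": sum(f1_scores) / n,
    }


# Toy 3-class confusion matrix (invented for illustration).
toy_confusion = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 1, 9],
]
for name, value in macro_metrics(toy_confusion).items():
    print(f"{name}: {100 * value:.2f}%")
```

Specificity counts true negatives per class, so on imbalanced multi-class data it tends to sit well above precision and F1, a pattern that is also visible in the table.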

| Teacher model | | Res2Net101 | ConvNeXt | EfficientNet-B4 |
|---|---|---|---|---|
| Student model | | ShuffleNetV2 | ShuffleNetV2 | ShuffleNetV2 |
| Teacher accuracy/% | | 92.59 | 90.21 | 89.54 |
| Student accuracy/% | | 86.21 | 86.21 | 86.21 |
| Knowledge source | Distillation method | Accuracy/% (ISIC2019) | Accuracy/% (ISIC2019) | Accuracy/% (ISIC2019) |
| Logits | Vanilla-KD (Hinton et al.) [9] | 87.39 | 86.98 | 87.20 |
| Logits | WSLD (Zhou et al.) [31] | 87.77 | 86.83 | 87.38 |
| Features | Fitnets (Romero et al.) [32] | 88.13 | 87.55 | 86.85 |
| Features | MGD (Yang et al.) [34] | 88.54 | 88.01 | 87.92 |
| Diverse | EML (Li et al.) [38] | 87.92 | 88.95 | 88.34 |
| Diverse | SSD-KD (Wang et al.) [8] | 89.95 | 88.23 | 88.12 |
| Diverse | DSP-KD (Ours) | 92.83 | 89.39 | 89.01 |

| Teacher model | | Res2Net101 | ConvNeXt | EfficientNet-B4 |
|---|---|---|---|---|
| Student model | | ShuffleNetV2 | ShuffleNetV2 | ShuffleNetV2 |
| Teacher accuracy/% | | 94.66 | 92.18 | 91.23 |
| Student accuracy/% | | 89.48 | 89.48 | 89.48 |
| Knowledge source | Distillation method | Accuracy/% (PSD-Dataset) | Accuracy/% (PSD-Dataset) | Accuracy/% (PSD-Dataset) |
| Logits | Vanilla-KD (Hinton et al.) [9] | 90.11 | 89.93 | 89.03 |
| Logits | WSLD (Zhou et al.) [31] | 90.57 | 90.19 | 89.46 |
| Features | Fitnets (Romero et al.) [32] | 92.36 | 90.64 | 89.96 |
| Features | MGD (Yang et al.) [34] | 91.29 | 91.08 | 90.23 |
| Diverse | EML (Li et al.) [38] | 92.03 | 91.37 | 90.74 |
| Diverse | SSD-KD (Wang et al.) [8] | 93.81 | 92.70 | 91.39 |
| Diverse | DSP-KD (Ours) | 94.93 | 92.89 | 92.01 |

| KD Method | MEL | NV | BCC | AK | BKL | DF | VASC | SCC | Macro-Average (F1) |
|---|---|---|---|---|---|---|---|---|---|
| Vanilla-KD (Hinton et al.) [9] | 86.31 | 86.99 | 79.13 | 74.04 | 78.56 | 71.24 | 75.91 | 73.40 | 78.19 |
| Fitnets (Romero et al.) [32] | 86.06 | 87.58 | 86.96 | 78.37 | 81.67 | 75.21 | 74.30 | 82.44 | 81.57 |
| SSD-KD (Wang et al.) [8] | 87.69 | 90.33 | 88.21 | 86.14 | 85.87 | 78.55 | 83.07 | 88.32 | 86.02 |
| DSP-KD (Ours) | 90.20 | 95.21 | 93.00 | 91.12 | 91.66 | 89.06 | 87.86 | 92.66 | 91.35 |
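The last column of the table above is the unweighted mean of the eight per-class F1 scores. The short check below recomputes it from the values in the table. The dictionary keys and the helper name `macro_average` are chosen here for illustration only.

```python
# Per-class F1 scores (MEL, NV, BCC, AK, BKL, DF, VASC, SCC) copied from the table above.
per_class_f1 = {
    "Vanilla-KD": [86.31, 86.99, 79.13, 74.04, 78.56, 71.24, 75.91, 73.40],
    "Fitnets": [86.06, 87.58, 86.96, 78.37, 81.67, 75.21, 74.30, 82.44],
    "SSD-KD": [87.69, 90.33, 88.21, 86.14, 85.87, 78.55, 83.07, 88.32],
    "DSP-KD": [90.20, 95.21, 93.00, 91.12, 91.66, 89.06, 87.86, 92.66],
}

# Macro-average column as reported in the table.
reported = {"Vanilla-KD": 78.19, "Fitnets": 81.57, "SSD-KD": 86.02, "DSP-KD": 91.35}


def macro_average(scores):
    """Unweighted mean: every class counts equally, regardless of its sample count."""
    return sum(scores) / len(scores)


for method, scores in per_class_f1.items():
    computed = macro_average(scores)
    print(f"{method}: computed {computed:.4f}, reported {reported[method]:.2f}")
```

Because each class contributes equally, rare classes such as DF and VASC affect the macro average as much as the frequent NV class does, which is why gains on those classes move this column noticeably.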

| KD Method | ACNE | ME | ROS | DLE | ON | SD | Macro-Average (F1) |
|---|---|---|---|---|---|---|---|
| Vanilla-KD (Hinton et al.) [9] | 93.35 | 87.46 | 79.31 | 81.22 | 84.97 | 80.07 | 84.40 |
| Fitnets (Romero et al.) [32] | 93.99 | 89.06 | 80.32 | 84.97 | 85.84 | 86.14 | 86.72 |
| SSD-KD (Wang et al.) [8] | 94.86 | 95.10 | 85.39 | 89.62 | 92.27 | 86.44 | 90.61 |
| DSP-KD (Ours) | 96.27 | 96.61 | 89.96 | 95.48 | 96.46 | 92.16 | 94.49 |

| Method | Top-1 | Parameters/Million | Inference Time/s |
|---|---|---|---|
| Res2Net101(T) | 92.59 | 43.174 | 0.297 |
| ShuffleNetV2(S) | 86.21 | 1.262 | 0.046 |
| wide-ShuffleNet [26] | 85.48 | 1.8 | 0.101 |
| T/S+SSD-KD | 89.95 | 1.13 | 0.072 |
| T/S+DSP-KD | 92.83 | 1.09 | 0.023 |

| Method | Top-1 | Δ |
|---|---|---|
| ShuffleNetV2(S) | 86.21 | - |
| S + MGD (a) | 88.54 | S + 2.33 |
| S + MGD + HF (Stage 1) | 90.89 | a + 2.35 |
| S + VKD (b) | 87.39 | S + 1.18 |
| S + NKD (Stage 2) | 88.28 | b + 0.89 |
| S + MGD + HF + VKD | 91.47 | Stage 1 + 0.58 |
| S + MGD + HF + NKD (DSP-KD, Ours) | 92.83 | Stage 1 + 1.94 |
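Each Δ entry in the ablation table above is the Top-1 difference between a variant and the configuration it builds on. The sketch below recomputes those gains from the Top-1 column. The shortened variant labels and the `builds_on` mapping are written here for illustration, following the "S", "a", "b" and "Stage 1" references in the Δ column.

```python
# Top-1 accuracy (%) copied from the ablation table above.
top1 = {
    "S": 86.21,
    "S+MGD": 88.54,
    "S+MGD+HF": 90.89,
    "S+VKD": 87.39,
    "S+NKD": 88.28,
    "S+MGD+HF+VKD": 91.47,
    "S+MGD+HF+NKD": 92.83,
}

# Which configuration each variant is compared against in the Δ column.
builds_on = {
    "S+MGD": "S",
    "S+MGD+HF": "S+MGD",
    "S+VKD": "S",
    "S+NKD": "S+VKD",
    "S+MGD+HF+VKD": "S+MGD+HF",
    "S+MGD+HF+NKD": "S+MGD+HF",
}


def gain(variant):
    """Top-1 improvement of a variant over the configuration it builds on."""
    return round(top1[variant] - top1[builds_on[variant]], 2)


for variant in builds_on:
    print(f"{variant}: +{gain(variant):.2f} over {builds_on[variant]}")

# Overall gain of the full pipeline over the undistilled student.
total_gain = round(top1["S+MGD+HF+NKD"] - top1["S"], 2)
print(f"full pipeline: +{total_gain:.2f} over S")
```

Read this way, the table separates the contribution of the fusion mask (HF over plain MGD) from the contribution of the normalized logit loss (NKD over VKD), both in isolation and on top of Stage 1.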


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, X.; Ji, Z.; Zhang, H.; Chen, R.; Liao, Q.; Wang, J.; Lyu, T.; Zhao, L. DSP-KD: Dual-Stage Progressive Knowledge Distillation for Skin Disease Classification. Bioengineering 2024, 11, 70. https://doi.org/10.3390/bioengineering11010070
