Exploiting Information in Event-Related Brain Potentials from Average Temporal Waveform, Time–Frequency Representation, and Phase Dynamics

Abstract

1. Introduction

2. Method and Results

2.1. EEG Dataset Used

2.2. Calculation and Presentation of the ERP, TF Power, and Phase Dynamics

- R1: Transient dynamic responses that are additive to the ongoing activity.

- R2: Suppression of ongoing oscillatory activity.

- R3: Enhancement of ongoing oscillatory activity.

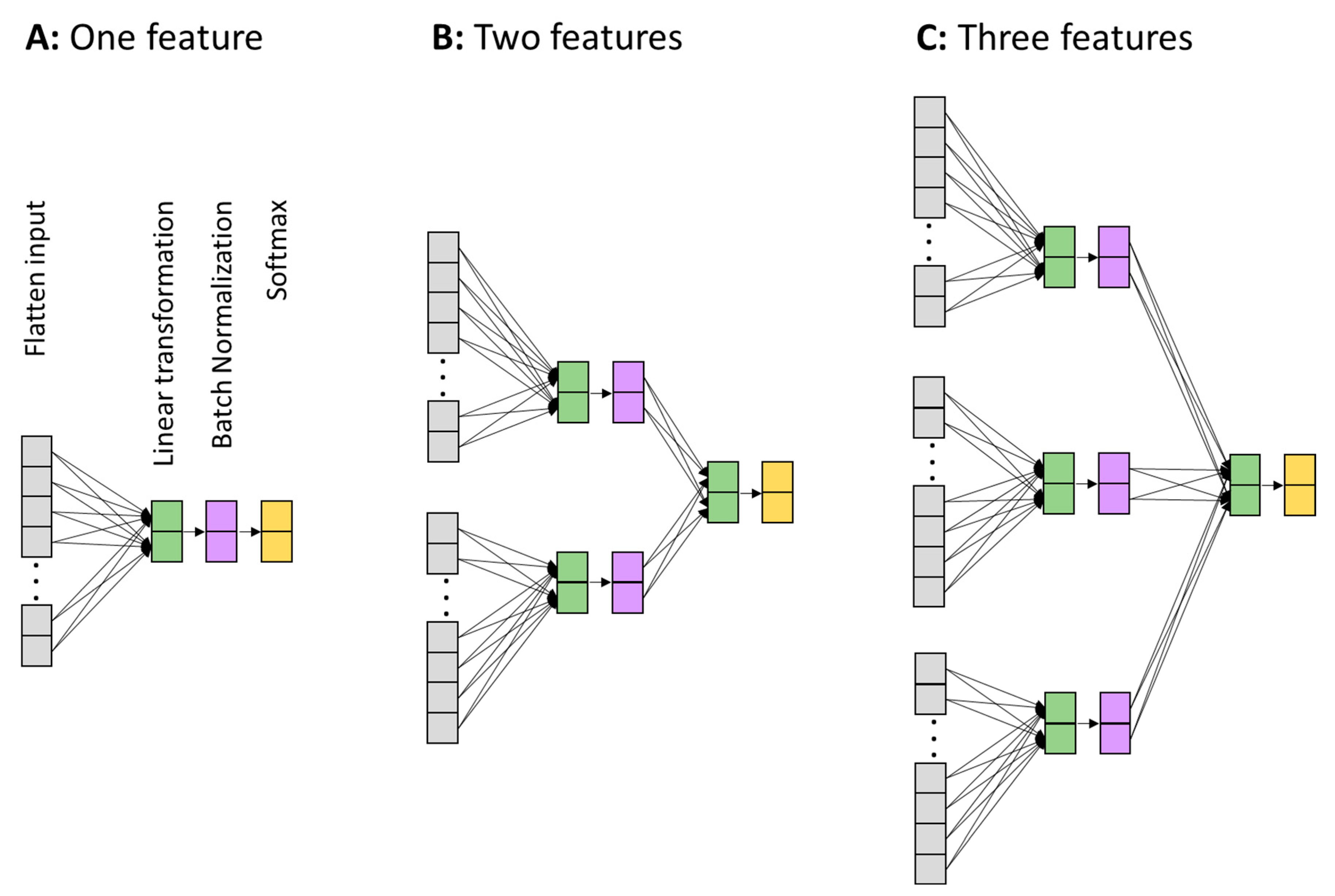
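As a rough illustration of how the three feature types named in this section's heading (ERP, TF power, and phase dynamics) relate to the response types R1 to R3 listed above, the following minimal sketch simulates single trials and computes each feature with NumPy. It is not the authors' pipeline. The simulated signal, the sampling rate, the Morlet wavelet settings, and every name in the code (`morlet_tf`, `erp`, `power`, `itpc`) are assumptions made for illustration only. Phase dynamics are summarized here as inter-trial phase coherence, which is one common choice and may differ from the measure used in the paper.

```python
import numpy as np


def morlet_tf(trials, sfreq, freqs, n_cycles=4.0):
    """Complex Morlet coefficients, shape (n_trials, n_freqs, n_times).

    Hypothetical helper written for this sketch.
    """
    n_trials, n_times = trials.shape
    out = np.empty((n_trials, len(freqs), n_times), dtype=complex)
    for fi, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)       # temporal width of the wavelet
        half = int(4 * sigma_t * sfreq)            # support of +/- 4 sigma
        t = np.arange(-half, half + 1) / sfreq     # symmetric, odd length
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        wavelet /= np.abs(wavelet).sum()           # comparable gain across frequencies
        for tr in range(n_trials):
            out[tr, fi] = np.convolve(trials[tr], wavelet, mode="same")
    return out


# Simulated data (all values are assumptions)
rng = np.random.default_rng(0)
sfreq = 250.0
times = np.arange(-1.0, 1.5, 1 / sfreq)            # stimulus onset at t = 0
n_trials = 100
post = times > 0

# Ongoing 10 Hz activity with a random phase on every trial.
phase0 = rng.uniform(0, 2 * np.pi, size=(n_trials, 1))
# R2: amplitude is halved after stimulus onset (use a factor > 1 to mimic R3).
amp = np.where(post, 0.5, 1.0)
ongoing = amp * np.sin(2 * np.pi * 10 * times + phase0)
# R1: a transient response, identical on every trial, added to the ongoing activity.
transient = 2.0 * np.exp(-(times - 0.3) ** 2 / (2 * 0.05**2))
noise = rng.normal(0, 0.5, size=(n_trials, times.size))
trials = ongoing + transient + noise

# Three features computed from the same trials
freqs = np.arange(4, 31, 2)
coef = morlet_tf(trials, sfreq, freqs)

erp = trials.mean(axis=0)                          # average temporal waveform
power = (np.abs(coef) ** 2).mean(axis=0)           # trial-averaged TF power
itpc = np.abs((coef / np.abs(coef)).mean(axis=0))  # inter-trial phase coherence

# Summary values used to inspect the simulated effects
alpha = np.argmin(np.abs(freqs - 10))
pre_alpha = power[alpha, (times > -0.6) & (times < -0.2)].mean()
post_alpha = power[alpha, (times > 0.6) & (times < 1.0)].mean()
erp_peak_time = times[np.argmax(erp)]
itpc_low_at_peak = itpc[0, np.argmin(np.abs(times - 0.3))]
```

In this simulation the additive transient (R1) survives trial averaging and appears in `erp`, while the randomly phased ongoing oscillation largely cancels. The alpha suppression (R2) is nearly invisible in `erp` but shows up as a drop from `pre_alpha` to `post_alpha` in the TF power. The `itpc` map rises where phases align across trials, here around the transient at low frequencies.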

2.3. Demonstration of the Non-Redundancy of Cognitive Information Encoded in Different Neural Features Based on Machine Learning (on Trial-Average Features)

2.4. Demonstration of the Non-Redundancy of Cognitive Information Encoded in Different Neural Features Based on Machine Learning (on Single-Trial Features)

3. Discussion

3.1. Summary

3.2. Implications for Basic Neural Cognitive Research

3.3. Implications for Applied Neuroscience Research

3.4. The Accuracies at Different Levels of Machine Learning Applications: Single Trial and Average Data

3.5. Comparison with Conventional Approaches to Analyzing Neural Activity Data

3.6. Limitations

3.7. Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ouyang, G.; Zhou, C. Exploiting Information in Event-Related Brain Potentials from Average Temporal Waveform, Time–Frequency Representation, and Phase Dynamics. Bioengineering 2023, 10, 1054. https://doi.org/10.3390/bioengineering10091054
