Abstract

Accurate segmentation of interstitial lung disease (ILD) patterns from computed tomography (CT) images is an essential prerequisite for treatment and follow-up. However, segmenting ILD patterns pixel by pixel in CT scans with hundreds of slices is highly time-consuming for radiologists. Consequently, large amounts of well-annotated data are hard to obtain, which poses a major challenge for data-driven deep learning methods. To alleviate this problem, we propose an end-to-end semi-supervised learning framework for the segmentation of ILD patterns (ESSegILD) from CT images via self-training with selective re-training. The proposed ESSegILD model is trained on a large CT dataset with slice-wise sparse annotations, i.e., only a few slices in each CT volume are labeled with ILD patterns. Specifically, we adopt a popular semi-supervised framework, Mean-Teacher, which consists of a teacher model and a student model and uses consistency regularization to encourage consistent outputs from the two models under different perturbations. Furthermore, we introduce a recent self-training technique with a selective re-training strategy to select reliable pseudo-labels generated by the teacher model; these pseudo-labels expand the training samples and improve the student model during iterative training. By leveraging consistency regularization and self-training with selective re-training, ESSegILD can effectively exploit the unlabeled data in a partially annotated dataset to progressively improve segmentation performance. Experiments on a dataset of 67 pneumonia patients with incomplete annotations, containing over 11,000 CT images with eight different ILD lung patterns, indicate that our proposed method outperforms the compared state-of-the-art methods.

1. Introduction

Interstitial lung diseases (ILDs) refer to a heterogeneous group of over 200 chronic parenchymal lung disorders that account for of all cases seen by pulmonologists. They are associated with substantial morbidity and mortality. In 2019, approximately 655,000 patients were affected by ILDs and 22,000 died from ILDs in the USA [1]. Given the wide variety of ILDs, differential diagnosis is necessary to develop appropriate therapy plans and to protect patients from life-threatening complications resulting from misdiagnosis. However, differential diagnosis is difficult even for experienced radiologists, owing to the fatigue caused by repetitive work and the similar clinical manifestations of different ILDs. It is also challenging for existing computer-aided diagnosis (CAD) systems [2] to distinguish the varied appearances of ILDs in lung CT scans, the preferred imaging evidence for ILD diagnosis. An automatic and accurate tool that segments ILD patterns from CT images, i.e., performs fine-grained classification of every pixel, could greatly help radiologists and CAD systems to identify ILD types and to assess disease progression quantitatively, thereby supporting treatment and follow-up.

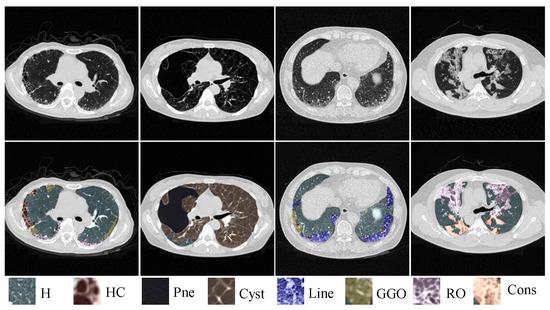

Over the past two decades, deep learning methods, especially deep convolutional neural networks (CNNs), have achieved remarkable success in natural and medical image recognition [3,4,5,6]. Owing to their strong performance across tasks, CNNs have gradually been adopted for ILD pattern recognition [7,8,9,10,11,12,13,14]. Most previous work focused on image-level ILD identification [11,12] or patch-level ILD pattern classification [7,8,9,10,14]. Image-level methods assign one or more labels to a whole CT slice with ILDs, but they cannot localize ILD lesions in CT images for quantitative analysis. Patch-level methods usually classify small regular image patches or regions of interest (ROIs) (i.e., ) into a specific ILD pattern. However, because the patches are relatively small, some visual details and spatial context may not be captured. Moreover, pre-defined regular patches or ROIs are not fine-grained enough, which tends to cause misclassification of pixels of different classes near pattern boundaries: the disease spreads irregularly rather than symmetrically, and multiple ILD patterns may coexist within one CT patch. Pixel-level segmentation of ILD patterns from CT images is therefore more desirable, but it is challenging for the following reasons. First, ILD patterns are diverse and hard to distinguish in CT images owing to inter- and intra-class variability; Figure 1 shows eight typical ILD patterns with distinct textures. Second, obtaining a large amount of well-labeled data is extremely expensive, because pixel-level ILD annotation of CT scans requires experts and is time-consuming. To the best of our knowledge, only a few studies have segmented ILD patterns pixel by pixel in CT images [13]. Anthimopoulos et al. [13] proposed a dilated fully convolutional network for segmenting six typical ILD patterns in the publicly available multimedia database of interstitial lung diseases maintained by the Geneva University Hospital (HUG) [15]. However, this database is sparsely annotated, i.e., only one prominent disease region is labeled rather than the entire CT slice. This limits the segmentation models, which must discard unlabeled slices during training and exclude unannotated lung regions during evaluation.

Figure 1.

Illustration of similar manifestations with different ILD patterns coexisting on a single CT slice. ‘H’, ‘HC’, ‘Pne’, ‘Cyst’, ‘Line’, ‘GGO’, ‘RO’, and ‘Cons’ represent Healthy, Honeycombing, Pneumothorax, Cyst, Linear, Ground-glass opacity, Reticular opacity, and Consolidation, respectively.

To leverage the unlabeled CT slices in our collected dataset and boost segmentation performance, we adopt a popular semi-supervised learning (SSL) framework, Mean-Teacher, which was initially proposed for image classification [16]. A typical Mean-Teacher framework consists of a teacher model and a student model, and it uses the teacher's predictions as a guide to improve the student's predictions. This is accomplished by applying a consistency loss between the predictions of the two models to encourage consistent outputs. To better capture the discriminative features of ILD patterns in CT images, we use a high-resolution network (HRNet) with parallel multi-resolution representations as the backbone of both models. Self-training approaches have also been widely applied in SSL to improve segmentation performance; for example, the student model can be retrained on pseudo-labeled data that the teacher model generates from unlabeled data. However, since the teacher model cannot predict all unlabeled data accurately, iteratively optimizing the model with poor pseudo-labels may degrade its performance. To mitigate this issue, a selection procedure is needed to choose reliable pseudo-labels for retraining the student model. In our work, we adopt the recent selection strategy of ST++ [17], which selects and prioritizes more reliable and easier images in the re-training phase.

To sum up, we propose an end-to-end semi-supervised learning framework for the segmentation of ILD patterns (ESSegILD) from CT images via self-training with selective re-training. The main contributions of our work can be summarized as follows:

- (1) We propose a novel method, termed ESSegILD, for the segmentation of ILD patterns from CT images. To the best of our knowledge, this is one of the few studies on pixel-level ILD pattern recognition in CT images.

- (2) In the proposed ESSegILD framework, we use consistency regularization and self-training with selective re-training to exploit unlabeled images effectively for improving segmentation performance. In addition, a high-resolution network (HRNet) with parallel multi-resolution representations is adopted as the backbone of our model to better capture the discriminative features of ILD patterns.

- (3) Extensive experiments on a large-scale partially annotated CT dataset with eight different ILD patterns suggest the effectiveness of our method and its superiority over the compared methods. To the best of our knowledge, the set of ILD patterns identified in our work is the most diverse reported to date.

2. Related Work

2.1. ILD Pattern Recognition

ILDs are characterized by textural changes in the lung parenchyma, which are often assessed using texture classification schemes on local regions or volumes of interest [18,19]. In recent years, CNN-based solutions have been proposed for lung pattern recognition in ILDs. However, most of them focused on patch-level or image-level classification of ILDs from CT images [10,11,20,21,22,23,24]. Patch- or image-level recognition usually assigns regular image patches, extracted from manually annotated polygonal regions of interest (ROIs), or whole 2D axial slices to one of the ILD pattern classes. Because a single patch or slice may contain pixels of several different classes, such classification tends to misclassify many pixels in a CT slice. Although recent approaches have applied deep learning to the automatic detection or identification of ILDs [25,26,27,28], the development of pixel-level segmentation models is hindered by the limited availability of labeled data and high labor costs. Consequently, only a few studies have focused on pixel-level recognition of ILD patterns from CT images. The work most closely related to ours is [13], which introduced a deep dilated CNN for the semantic segmentation of ILD patterns. However, the training data used in that work were sparsely annotated, i.e., the annotations covered only the most typical disease regions rather than entire CT slices or all lesion regions. In this paper, we collect a relatively large dataset of 67 pneumonia patients comprising more than 11,000 CT images with slice-wise sparse annotations, i.e., only a few slices in each CT volume are labeled with ILD patterns pixel by pixel, to minimize the annotation burden on radiologists. We then present a semi-supervised method for the pixel-level segmentation of multiple ILD patterns from these partially annotated CT scans.

2.2. Semi-Supervised Learning

Semi-supervised learning (SSL) has shown promising results in image classification and segmentation by using unlabeled data to improve model training. Two common strategies in previous SSL approaches are consistency regularization [16,29,30,31,32,33,34,35,36,37,38,39] and self-training [17,40,41,42,43,44,45,46].

Consistency regularization perturbs the input data or the model and enforces consistency among the resulting predictions. This idea was used in [29], where a temporal ensembling method formed a consensus prediction of the unknown labels from the network outputs at different training epochs, under different regularization and input augmentation conditions. Later, Mean-Teacher was proposed, which averages model weights instead of label predictions [16] and performs better than temporal ensembling. In a Mean-Teacher framework, the teacher network has the same architecture as the student network, but its parameters are updated as an exponential moving average (EMA) of the student network weights, and a consistency loss compares the outputs of the student and the teacher. Inspired by the strong performance of Mean-Teacher, many studies have extended it to improve model performance [30,31]. For instance, Miyato et al. [30] presented virtual adversarial training (VAT), which adds adversarial perturbations to the input and imposes consistency regularization on the predictions. Yu et al. [33] applied the Mean-Teacher paradigm to semi-supervised 3D left atrium segmentation and introduced an uncertainty estimation method to guide the calculation of the consistency loss.

Self-training generates pseudo-labels for unlabeled data and uses them to expand the labeled training data and train more powerful models. This idea was used in [47], where the class with the maximum predicted probability was selected and used as if it were the true label to train a semi-supervised classification model. Various strategies have been developed to reduce the adverse effect of unreliable pseudo-labels caused by limited labeled data [40,41,43]. More recently, Yang et al. [17] proposed an advanced self-training framework, ST++, that selects reliable pseudo-labeled images based on holistic prediction-level stability. Its improved performance indicates the efficacy of exploiting pseudo-labeled images in a reliable-to-unreliable, easy-to-hard curriculum.
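As a minimal illustration of the hard pseudo-labeling described in [47], the sketch below assigns every pixel the class with the highest predicted probability. The function name `hard_pseudo_labels` and the input layout (one probability vector per pixel) are hypothetical choices for this example, not part of any cited implementation.

```python
from typing import List, Sequence


def hard_pseudo_labels(prob_maps: Sequence[Sequence[float]]) -> List[int]:
    """Return, for each pixel, the index of the class with maximum predicted probability.

    prob_maps: one probability vector (length = number of classes) per pixel.
    Ties are broken in favor of the lowest class index, as max() returns the first maximum.
    """
    return [max(range(len(p)), key=lambda t: p[t]) for p in prob_maps]


# Usage: two pixels, three classes
print(hard_pseudo_labels([[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]]))  # [1, 0]
```

These argmax labels are then treated as if they were ground truth; the selection strategies discussed above decide which of them to trust.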

Inspired by previous work, our ESSegILD method builds on the Mean-Teacher model and incorporates the recent ST++ technique [17] for the semi-supervised segmentation of ILD patterns from CT images. We aim to make maximum use of the unlabeled data from two aspects: consistency regularization and self-training.

3. Dataset and Methods

In this work, we collected a dataset of 67 CT scans comprising over 11,000 CT images with eight different kinds of ILD patterns. We also obtained pixel-level annotations for a small fraction of the images, which provides a valuable set of labeled examples for training and evaluating our algorithm. Using this partially annotated ILD dataset, we developed ESSegILD, an end-to-end semi-supervised learning method for segmenting ILD patterns from CT images, which exploits the unlabeled images via self-training with selective re-training to reduce the dependence on expensive and time-consuming manual annotations. In the following, we describe the dataset and the proposed method.

3.1. Dataset

Clinical high-resolution CT scans from 67 pneumonia patients were collected at the First Affiliated Hospital of Guangzhou Medical University from 2015 to the present. All cases were clinically diagnosed as pulmonary alveolar proteinosis (PAP), usual interstitial pneumonia (UIP), or lymphangioleiomyomatosis (LAM) with ILD lesions. The number of slices per CT scan ranged from 127 to 339, with a resolution of and a pixel size ranging from to mm. To ensure the quality and relevance of the dataset, we selected cases that met three inclusion criteria: (a) the ILD diagnosis was confirmed through multidisciplinary assessment; (b) the CT scans were acquired by spiral CT and reconstructed with a high-resolution algorithm, with slice thicknesses of 1–2 mm; (c) there were no other concurrent lung diseases, such as infection, pneumoconiosis, or tumor. To generate ground-truth segmentation masks, two radiologists with 7 and 19 years of experience annotated the ILD patterns. Notably, we adopted a slice-wise sparse, pixel-level annotation scheme: only a few slices in each CT scan were labeled, and for each slice selected by the two radiologists, the annotation covers the entire lung region. This kind of annotation alleviates the labeling burden on radiologists and increases the diversity of the labeled dataset. Because the features of adjacent CT slices are highly correlated and lesions typically appear consecutively across adjacent slices, we took unlabeled slices from within three slices above and below each labeled slice as unlabeled training data. In this way, a total of 11,493 CT images were included in our work, of which 2132 are fully annotated. All compared methods use the same patient-level data partition: 1732 labeled images and 7360 corresponding unlabeled images for training, 200 labeled images for validation, and 200 labeled images for testing.
All annotated areas contain 8 types of lung patterns with ILDs, including Healthy (H), Honeycombing (HC), Pneumothorax (Pne), Cyst, Linear (Line), Ground-glass opacity (GGO), Reticular opacity (RO), and Consolidation (Cons).
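The unlabeled-slice selection described above can be sketched as follows. This is a minimal illustration under an explicit assumption: "three upper and lower" is read as every slice within three positions above or below a labeled slice, and a slice that is itself labeled, or that falls in two overlapping windows, is counted only once. The function name `select_unlabeled_neighbors` and its `radius` parameter are hypothetical.

```python
from typing import Iterable, List


def select_unlabeled_neighbors(labeled_idx: Iterable[int], num_slices: int,
                               radius: int = 3) -> List[int]:
    """Return sorted indices of unlabeled slices within `radius` of any labeled slice.

    Assumption: slices are indexed 0..num_slices-1 along the axial direction.
    """
    labeled = set(labeled_idx)
    neighbors = set()
    for s in labeled:
        for offset in range(-radius, radius + 1):
            j = s + offset
            # keep slices that exist in the volume and are not themselves labeled
            if 0 <= j < num_slices and j not in labeled:
                neighbors.add(j)
    return sorted(neighbors)


# Usage: a 127-slice volume with three labeled slices
print(select_unlabeled_neighbors([10, 12, 50], 127))
# [7, 8, 9, 11, 13, 14, 15, 47, 48, 49, 51, 52, 53]
```

Using a set means that closely spaced labeled slices (here 10 and 12) share neighbors instead of contributing duplicates.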

3.2. Methods

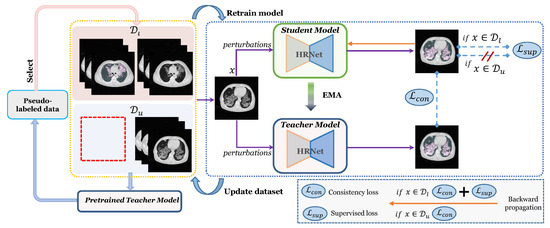

In this work, we propose an end-to-end semi-supervised learning method for segmenting ILD patterns from CT images, denoted as ESSegILD. As shown in Figure 2, we adopt a popular semi-supervised model, Mean-Teacher, which includes a teacher model and a student model. Inspired by [17], a selective re-training strategy is introduced to exploit the rich information in unlabeled images when training ESSegILD. This strategy ranks the pseudo-labels generated by the teacher model by reliability and prioritizes the more reliable pseudo-labeled data to expand the labeled dataset for re-training the model. This training process is repeated to progressively improve model performance until it stabilizes.

Figure 2.

Illustration of our proposed ESSegILD framework for segmentation of ILD patterns from CT images. The framework consists of three components: training of the Mean-Teacher model with labeled images $\mathcal{D}^l$ and unlabeled images $\mathcal{D}^u$; pseudo-label selection, where a stability score is calculated to select reliable pseudo-labels; and iterative re-training of the Mean-Teacher model using the updated dataset.

3.2.1. The Proposed ESSegILD Framework

To densely segment ILD patterns from CT images in a semi-supervised setting, we collected a relatively large CT dataset with slice-wise sparse annotations for each CT volume, forming a set of manually labeled images $\mathcal{D}^l=\{(x_i, y_i)\}_{i=1}^{M}$ and a set of unlabeled images $\mathcal{D}^u=\{u_i\}_{i=1}^{N}$, where $M$ and $N$ denote the numbers of labeled and unlabeled images, respectively. Via pseudo-labeling, high-confidence pseudo-labeled images are selected to expand the labeled set $\mathcal{D}^l$. As presented in Figure 2, the proposed method uses an iterative re-training strategy, in which the model is retrained in each new training round on an updated dataset consisting of expanded labeled data and reduced unlabeled data. The training process of ESSegILD includes five steps:

- Step 1: Train the segmentation model $f$ using the labeled set $\mathcal{D}^l$ and the unlabeled set $\mathcal{D}^u$.

- Step 2: Generate pseudo-labels with the trained teacher model and select high-confidence pseudo-labeled data from $\mathcal{D}^u$ to obtain the pseudo-labeled set $\hat{\mathcal{D}}^u=\{(u_i, \hat{y}_i)\}$, where $\hat{y}_i = f(u_i)$ denotes the model's prediction.

- Step 3: Update the labeled and unlabeled sets by $\mathcal{D}^l \leftarrow \mathcal{D}^l \cup \hat{\mathcal{D}}^u$ and $\mathcal{D}^u \leftarrow \mathcal{D}^u \setminus \{u_i : (u_i, \hat{y}_i) \in \hat{\mathcal{D}}^u\}$.

- Step 4: Retrain the model $f$ using the updated dataset.

- Step 5: Repeat Steps 2–4 until the maximum number of training rounds is reached.
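The five steps can be summarized as a generic loop. This is a sketch, not the authors' implementation: `train`, `pseudo_label`, and `stability` are hypothetical callables standing in for Mean-Teacher training, teacher prediction, and the stability score; `keep_ratio` is a placeholder because the selection ratio is not stated here; and `rounds` is assumed to count the initial training round as well.

```python
from typing import Any, Callable, List, Tuple


def selective_self_training(
    labeled: List[Tuple[Any, Any]],
    unlabeled: List[Any],
    train: Callable[[List[Tuple[Any, Any]], List[Any]], Any],
    pseudo_label: Callable[[Any, Any], Any],
    stability: Callable[[Any, Any], float],
    rounds: int = 3,
    keep_ratio: float = 0.5,
):
    """Steps 1-5: train, pseudo-label, move the most stable images into D^l, retrain."""
    model = train(labeled, unlabeled)  # Step 1
    for _ in range(rounds - 1):  # Step 5: repeat Steps 2-4
        # Step 2: rank unlabeled images by stability (highest first), keep the top fraction
        order = sorted(range(len(unlabeled)),
                       key=lambda k: stability(model, unlabeled[k]), reverse=True)
        keep = set(order[: int(len(unlabeled) * keep_ratio)])
        # Step 3: D^l <- D^l plus pseudo-labeled selection; D^u <- D^u minus the selection
        labeled = labeled + [(unlabeled[k], pseudo_label(model, unlabeled[k]))
                             for k in sorted(keep)]
        unlabeled = [u for k, u in enumerate(unlabeled) if k not in keep]
        model = train(labeled, unlabeled)  # Step 4: retrain on the updated dataset
    return model, labeled, unlabeled
```

Working with indices rather than the images themselves keeps the removal in Step 3 correct even when two unlabeled images compare equal.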

Here, we describe how to train the segmentation model and select high-confidence pseudo-labeled samples in the training process.

Model training. In ESSegILD, we adopt the popular semi-supervised Mean-Teacher model as our segmentation model; as in typical Mean-Teacher models, it consists of a teacher model and a student model. Note that only the student model is updated by backpropagation, while the weights of the teacher model are updated as an exponential moving average (EMA) of the student weights according to

$$\theta'_z = \alpha\,\theta'_{z-1} + (1-\alpha)\,\theta_z, \tag{1}$$

where $\theta'$ and $\theta$ are the parameters of the teacher and student models, respectively, $z$ is the training step, and $\alpha$ is a smoothing coefficient. The student model is optimized with a supervised loss term on labeled data and a consistency loss term on labeled and unlabeled data. ILD lesions tend to be diffuse and irregular in shape, and different ILD patterns occupy regions of very different sizes in lung CT slices, which makes the training data imbalanced. To mitigate this imbalance, a combination of dice loss [48] and focal loss [49] is used as the supervised loss term for labeled data:

$$\mathcal{L}_{sup} = \mathcal{L}_{dice} + \beta\,\mathcal{L}_{focal}, \tag{2}$$

where $\beta$ balances the relative importance of the dice loss and the focal loss. The two terms can be formulated as

$$\mathcal{L}_{dice} = 1 - \frac{1}{T}\sum_{t=1}^{T}\frac{2\sum_{i\in\Omega} p_{i,t}\,y_{i,t}}{\sum_{i\in\Omega} p_{i,t} + \sum_{i\in\Omega} y_{i,t}}, \tag{3}$$

$$\mathcal{L}_{focal} = -\frac{1}{|\Omega|}\sum_{i\in\Omega}\sum_{t=1}^{T}\alpha_t\,(1-\hat{p}_{i,t})^{\gamma}\log\hat{p}_{i,t}, \tag{4}$$

$$\hat{p}_{i,t} = \begin{cases} p_{i,t}, & \text{if } y_{i,t} = 1,\\ 1 - p_{i,t}, & \text{otherwise.}\end{cases} \tag{5}$$

In Equation (3), $p_{i,t}$ denotes the predicted probability that pixel $i$ belongs to class $t$, $y_{i,t}$ is the corresponding ground-truth binary label of class $t$, $\Omega$ is the set of pixels, and $T$ is the number of categories to be identified. In Equation (4), $\alpha_t$ balances the importance of samples across categories and depends on the amount of data available for each category. The variable $\hat{p}_{i,t}$, defined in Equation (5), is the probability that the model predicts the sample correctly, and $(1-\hat{p}_{i,t})^{\gamma}$ is a modulating factor that assigns higher weights to challenging samples so that the model prioritizes them over easier ones; adjusting $\gamma$ controls how much easy samples contribute to the loss.

A consistency loss forces the teacher and the student to make stable and consistent predictions on labeled and unlabeled data under various perturbations. We adopt the mean squared error (MSE) as the consistency loss:

$$\mathcal{L}_{con} = \frac{1}{|\Omega|\,T}\sum_{i\in\Omega}\sum_{t=1}^{T}\left(\tilde{p}_{i,t} - p_{i,t}\right)^2, \tag{6}$$

where $\tilde{p}_{i,t}$ and $p_{i,t}$ are the predictions of the teacher and student models, respectively. The total loss function of the segmentation model is then

$$\mathcal{L} = \mathcal{L}_{sup} + \lambda\,\mathcal{L}_{con}, \tag{7}$$

where $\lambda$ is a hyper-parameter that balances the two loss terms $\mathcal{L}_{sup}$ and $\mathcal{L}_{con}$.
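For concreteness, the loss terms in Equations (2)–(7) can be written in plain Python on a flattened image, where each pixel carries a vector of class probabilities. This is a hedged sketch: the smoothing constant `eps` in the dice term, the clamping inside the logarithm, and the default `gamma=2.0` (the value suggested in the original focal loss paper) are assumptions added for illustration and numerical safety, not values reported in this work.

```python
import math
from typing import Sequence

Probs = Sequence[Sequence[float]]  # one probability vector per pixel


def dice_loss(probs: Probs, labels: Sequence[int], num_classes: int, eps: float = 1e-6) -> float:
    """Eq. (3): 1 minus the soft dice averaged over classes (one-hot targets from labels)."""
    total = 0.0
    for t in range(num_classes):
        inter = sum(p[t] for p, y in zip(probs, labels) if y == t)
        p_sum = sum(p[t] for p in probs)
        y_sum = sum(1 for y in labels if y == t)
        total += (2.0 * inter + eps) / (p_sum + y_sum + eps)
    return 1.0 - total / num_classes


def focal_loss(probs: Probs, labels: Sequence[int], alphas: Sequence[float],
               gamma: float = 2.0, eps: float = 1e-7) -> float:
    """Eqs. (4)-(5): one-vs-rest focal loss averaged over pixels."""
    loss = 0.0
    for p, y in zip(probs, labels):
        for t, (p_t, a_t) in enumerate(zip(p, alphas)):
            p_hat = p_t if y == t else 1.0 - p_t      # Eq. (5)
            p_hat = min(max(p_hat, eps), 1.0 - eps)   # keep log() finite
            loss -= a_t * (1.0 - p_hat) ** gamma * math.log(p_hat)
    return loss / len(probs)


def mse_consistency(teacher: Probs, student: Probs) -> float:
    """Eq. (6): mean squared difference over all pixels and classes."""
    diffs = [(a - b) ** 2 for pt, ps in zip(teacher, student) for a, b in zip(pt, ps)]
    return sum(diffs) / len(diffs)


def total_loss(student_l: Probs, labels_l: Sequence[int], teacher_all: Probs,
               student_all: Probs, alphas: Sequence[float], beta: float, lam: float,
               gamma: float = 2.0) -> float:
    """Eqs. (2) and (7): supervised loss on labeled pixels plus weighted consistency loss."""
    num_classes = len(alphas)
    sup = dice_loss(student_l, labels_l, num_classes) + beta * focal_loss(
        student_l, labels_l, alphas, gamma)
    return sup + lam * mse_consistency(teacher_all, student_all)
```

In a real training pipeline these would be tensor operations with automatic differentiation; the plain-Python form is only meant to make each summation in the equations explicit.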

High-confidence pseudo-labeled sample selection. To reduce the bias introduced by unreliable pseudo-labels, an effective step is needed to select high-confidence pseudo-labeled samples from the unlabeled set. Yang et al. [17] found a positive correlation between segmentation performance and the evolving stability of the pseudo masks produced during training. Inspired by ST++ [17], we filter out unreliable pseudo-labeled images from the unlabeled set at the image level based on their evolving stability during training. Specifically, for an unlabeled image $u_i$, we predict its pseudo masks with $Q$ checkpoints of the teacher model saved during training to obtain $\{M_{i,j}\}_{j=1}^{Q}$. The stability score of $u_i$ is then calculated as

$$s_i = \sum_{j=1}^{Q-1}\mathrm{mIoU}\left(M_{i,j}, M_{i,Q}\right), \tag{8}$$

where mIoU denotes the mean intersection-over-union between two masks, and the mask $M_{i,Q}$ predicted by the last checkpoint serves as the reference.
In this work, we sort the stability scores in descending order, prioritize the unlabeled samples with higher scores, i.e., more reliable pseudo-labels, and select them to expand the labeled set for retraining the segmentation model in the next training round.
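The stability-based selection of Equation (8) can be sketched as below. We assume masks are flattened lists of class indices, that mIoU averages IoU over the classes present in either mask, and that `ratio` (a hypothetical parameter, since the selection fraction is not stated here) sets the fraction of top-ranked images to keep; the function names are illustrative only.

```python
from typing import List, Sequence


def mean_iou(mask_a: Sequence[int], mask_b: Sequence[int], num_classes: int) -> float:
    """Mean IoU over the classes that appear in at least one of the two masks."""
    ious = []
    for t in range(num_classes):
        inter = sum(1 for a, b in zip(mask_a, mask_b) if a == t and b == t)
        union = sum(1 for a, b in zip(mask_a, mask_b) if a == t or b == t)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 1.0


def stability_score(checkpoint_masks: Sequence[Sequence[int]], num_classes: int) -> float:
    """Eq. (8): sum of mIoU between each earlier checkpoint's mask and the last one's."""
    reference = checkpoint_masks[-1]
    return sum(mean_iou(m, reference, num_classes) for m in checkpoint_masks[:-1])


def select_reliable(scores: Sequence[float], ratio: float) -> List[int]:
    """Indices of the top `ratio` fraction of images, highest stability first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[: int(len(scores) * ratio)]
```

An image whose predicted mask barely changes across checkpoints accumulates a score close to $Q-1$, while one whose mask keeps flipping between classes scores lower and is deferred to a later round.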

For quantitative evaluation, we used the dice coefficient as the principal performance metric:

$$\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|}, \tag{9}$$

where $X$ and $Y$ represent the region segmented by the trained teacher model and the ground truth, respectively.
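A minimal per-class implementation of Equation (9) on flattened label maps is shown below, together with an unweighted average over classes, similar in spirit to the average dice reported for the eight patterns. Treating a class that is absent from both masks as a perfect match (dice = 1) is our assumption; the text does not specify this case. The function names are hypothetical.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int], cls: int) -> float:
    """Eq. (9) for one class: 2|X ∩ Y| / (|X| + |Y|) over pixels labeled `cls`."""
    x = {i for i, v in enumerate(pred) if v == cls}
    y = {i for i, v in enumerate(truth) if v == cls}
    if not x and not y:
        return 1.0  # assumption: a class absent from both masks counts as a perfect match
    return 2.0 * len(x & y) / (len(x) + len(y))


def average_dice(pred: Sequence[int], truth: Sequence[int], classes: Sequence[int]) -> float:
    """Unweighted mean of the per-class dice coefficients."""
    return sum(dice_coefficient(pred, truth, c) for c in classes) / len(classes)
```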

3.2.2. High-Resolution Network

Most existing medical image segmentation networks, such as U-Net [50] and SegNet [51], consist of a contracting path and an expanding path: the former encodes the input image into low-resolution feature maps to capture semantic information, and the latter recovers the feature maps to the original resolution for precise localization. However, the unidirectional down- and up-sampling processes lose information, especially for fine structures. Because ILD patterns look very similar in HRCT images, detailed information in the feature maps is essential to distinguish them. To better capture the discriminative features of ILD patterns, we adopt a high-resolution network (HRNet) with parallel multi-resolution representations [52] as the basic network for both the teacher and student networks in ESSegILD. HRNet (1) connects high-to-low-resolution convolution streams in parallel and (2) repeatedly exchanges information across resolutions. By maintaining high-resolution representations throughout the network, it produces representations that are semantically richer and spatially more precise. More details on the HRNet architecture can be found in [52].

3.3. Implementation Details

Image pre-processing. Lung segmentation and intensity normalization were first performed on the CT images. Following the pre-processing method in [10], the image intensity within the extracted lung region was clipped to a Hounsfield unit (HU) window of [−1000, 250] and mapped to [0, 1]. Before the CT images were fed into the segmentation model, they were augmented by adding noise, flipping, rotation, and shifting.
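The intensity windowing step can be sketched as follows, assuming a flattened image of HU values and a binary lung mask from the preceding lung segmentation. Setting pixels outside the lung to 0 is an assumption made for this example, and the function name `window_and_normalize` is hypothetical.

```python
from typing import List, Sequence


def window_and_normalize(hu: Sequence[float], lung_mask: Sequence[int],
                         hu_min: float = -1000.0, hu_max: float = 250.0) -> List[float]:
    """Clip HU values to [hu_min, hu_max] and map them linearly to [0, 1] inside the lung."""
    out = []
    for v, inside in zip(hu, lung_mask):
        if not inside:
            out.append(0.0)  # assumption: pixels outside the lung mask are set to 0
            continue
        v = min(max(v, hu_min), hu_max)
        out.append((v - hu_min) / (hu_max - hu_min))
    return out


print(window_and_normalize([-2000, -1000, -375, 250, 400, 0], [1, 1, 1, 1, 1, 0]))
# [0.0, 0.0, 0.5, 1.0, 1.0, 0.0]
```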

Network training. To reduce the effect of the student on the teacher when the student performs poorly, we set the value of α in Equation (1) during the ramp-up phase and for the rest of the training to obtain a more robust teacher network. To minimize confirmation bias and improve the quality of pseudo-labels, we used a batch size of 4, consisting of 1 labeled slice and 3 unlabeled slices, and added different noise perturbations to the input data in each forward pass of both the teacher and student networks. We also applied dropout with a ratio of 0.5 at the end of the teacher and student networks as a model perturbation for better performance. We set the value of α_t in Equation (4) to alleviate the effect of unbalanced data and β in Equation (2) to make the model focus on hard-to-classify samples. At the beginning of training, we wanted the network to focus on labeled data to accelerate convergence, so λ in Equation (7) was increased gradually from 0 to 1 as training progressed in each round of model re-training. At the end of every training round, the stability score of each unlabeled image in Equation (8) was computed. We set and simply treated the top highest-scored images as the reliable ones to expand the labeled set for the next training round. In each training round, the segmentation model was trained for 200 epochs, which took about 8 h on a machine equipped with an NVIDIA GeForce RTX 2080 Ti GPU.
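The EMA update of Equation (1) and the gradual increase of λ in Equation (7) can be sketched as below. The sigmoid-shaped ramp-up, exp(−5(1−x)²), is borrowed from common Mean-Teacher practice and is an assumption, since the text only states that λ grows from 0 to 1; note that it starts at exp(−5) ≈ 0.0067 rather than exactly 0. Parameters are flattened lists of floats, and the hypothetical `ema_coefficient` takes the ramp-up and post-ramp-up values as arguments because the specific values are not given here; the numbers in the usage line are toy values.

```python
import math
from typing import List, Sequence


def ema_update(teacher: Sequence[float], student: Sequence[float], alpha: float) -> List[float]:
    """Eq. (1): theta'_z = alpha * theta'_{z-1} + (1 - alpha) * theta_z, element-wise."""
    return [alpha * t + (1.0 - alpha) * s for t, s in zip(teacher, student)]


def ema_coefficient(step: int, rampup_steps: int, alpha_rampup: float, alpha_after: float) -> float:
    """Use one smoothing coefficient during ramp-up and another afterwards."""
    return alpha_rampup if step < rampup_steps else alpha_after


def consistency_weight(step: int, rampup_steps: int) -> float:
    """Sigmoid-shaped ramp-up of lambda, from exp(-5) at step 0 to 1.0 at rampup_steps."""
    if rampup_steps <= 0:
        return 1.0
    x = min(max(step / rampup_steps, 0.0), 1.0)
    return math.exp(-5.0 * (1.0 - x) ** 2)


# Usage: one EMA step on a toy two-parameter "model" (toy values, not the paper's)
teacher = [1.0, 0.0]
student = [0.0, 1.0]
alpha = ema_coefficient(step=10, rampup_steps=100, alpha_rampup=0.75, alpha_after=0.99)
print(ema_update(teacher, student, alpha))  # [0.75, 0.25]
```

A smaller α during ramp-up lets the teacher track the rapidly changing student early on, while a larger α afterwards makes the teacher a smoother average.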

4. Experiments and Results

4.1. Experimental Setup

In this section, we conduct an ablation study to validate the efficacy of different key factors in our proposed ESSegILD, and a group of experiments to compare our method with several recent semi-supervised segmentation methods, including MT [16], UA-MT [33], CPS-Seg [53], ST [47], and ST++ [17]. Here, we briefly introduce these comparison methods.

MT. Here, MT refers to the classical Mean-Teacher method. It applies a consistency regularization loss to both labeled and unlabeled data to constrain the teacher and student models to output similar results for the same input under different perturbations. Unlike ESSegILD, MT is trained only once, without iterative re-training.

UA-MT. The UA-MT method was proposed by Yu et al. [33] for semi-supervised 3D left atrium segmentation. It also adopts the Mean-Teacher framework and introduces an uncertainty-aware consistency loss to mitigate the adverse effects of unreliable predictions by the teacher model.

CPS-Seg. The CPS-Seg method was originally proposed for semi-supervised natural image segmentation; it introduces a consistency regularization approach, called cross pseudo supervision (CPS), that imposes consistency between two segmentation networks.

ST. Self-training (ST) via pseudo-labeling is a simple and efficient semi-supervised learning method, in which the network is trained in a supervised manner on labeled data and pseudo-labeled data simultaneously.

ST++. More recently, Yang et al. [17] proposed ST++, an advanced framework based on ST in which more reliable images are automatically selected and prioritized in the re-training phase. Inspired by ST++, our method also progressively leverages unlabeled data via selective re-training.

4.2. Ablation Study

Three key factors mainly affect the segmentation performance of ESSegILD: network architecture, training data, and iterative re-training. To investigate the impact of each factor, we conducted an ablation study with alternative configurations.

Impact of Network Architectures. To validate HRNet as the basic network of our method, we trained segmentation models with different networks, namely U-Net [50], Dilated CNN [13], and HRNet, and compared their performance in segmenting ILD patterns in CT images. The Dilated CNN is the same as in [13], where it was used for the semantic segmentation of ILD patterns. Notably, for rapid validation, we trained these models in a supervised manner using only the labeled data from our collected dataset. Table 1 reports the segmentation results of the different networks for the 8 ILD patterns. As shown in Table 1, HRNet outperforms U-Net and Dilated CNN. These results suggest the advantage of the high-resolution representations in HRNet for capturing the discriminative features of ILDs in CT images. We therefore adopted HRNet as the basic network of the teacher and student models in ESSegILD.

Table 1.

Segmentation performance of different network architectures, measured by dice coefficient (mean ± s.d.%). The dice coefficient for each pattern is calculated separately using Equation (9). The term in the table denotes the average dice score over all patterns. The networks are trained in a supervised manner with labeled data only. Bold indicates the best result.

Impact of Using Different Training Data. As mentioned before, our dataset contains 11,493 CT images from 67 subjects with eight ILD patterns, of which only a small fraction were fully annotated. To train ESSegILD, we leveraged both the labeled data and the remaining unlabeled data in a semi-supervised manner to boost segmentation performance. To explore how much the unlabeled data contribute, we trained our model using the labeled data together with different proportions of the remaining unlabeled data, such as , , , and . As a baseline, a supervised-only (SupOnly) model was trained using only the 1732 fully annotated images, with the same supervised loss functions (dice loss and focal loss) and network architecture (HRNet) as ESSegILD. Table 2 compares the SupOnly model with semi-supervised ESSegILD models trained with different amounts of unlabeled data; the number of training rounds for all semi-supervised ESSegILD models in Table 2 was set to three. Table 2 supports two observations. First, segmentation performance improves as more unlabeled data are included in training. Second, ESSegILD trained with labeled and unlabeled data substantially improves on the SupOnly model, by percentage points in terms of the average dice coefficient over the eight ILD patterns. These results suggest that our semi-supervised learning framework can boost segmentation performance by effectively leveraging unlabeled data.

Table 2.

Comparison of our segmentation models when different proportions of additional unlabeled data are included in training. The SupOnly model is trained in a supervised manner using only labeled data, while the other variants are trained in a semi-supervised manner using labeled data and different proportions of unlabeled data. Bold indicates the best results achieved by our model across the different proportions of unlabeled data. All results are measured by dice coefficient (mean ± s.d.%).

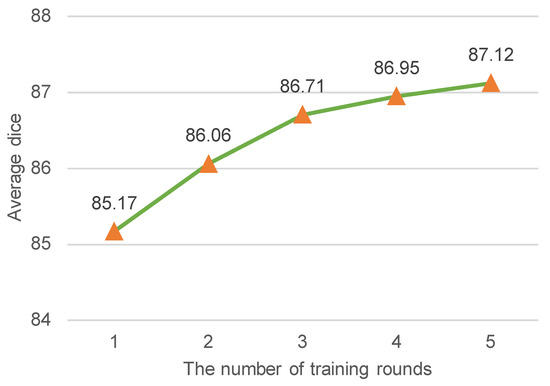
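Table 2 reports Dice coefficients as mean ± s.d. (%). The following is a hedged illustration of how such figures can be computed: per-class hard Dice, 2|P∩T| / (|P| + |T|), on flattened label maps, aggregated as mean and sample standard deviation over evaluated images, plus the average over classes used as the summary metric. Whether the authors aggregated over slices, volumes, or runs is not stated here, so the per-image aggregation, skipping classes absent from both maps, and the function names are assumptions.

```python
import statistics
from typing import Dict, List, Sequence, Tuple

Mask = Sequence[int]  # flattened class-index map of one image


def dice_per_class(pred: Mask, target: Mask, num_classes: int) -> Dict[int, float]:
    """Hard Dice for every class present in the prediction or the target."""
    scores = {}
    for c in range(num_classes):
        p = sum(1 for a in pred if a == c)
        t = sum(1 for b in target if b == c)
        if p + t == 0:
            continue  # class absent from both maps: skipped rather than scored
        inter = sum(1 for a, b in zip(pred, target) if a == c and b == c)
        scores[c] = 2.0 * inter / (p + t)
    return scores


def summarize(pairs: Sequence[Tuple[Mask, Mask]],
              num_classes: int) -> Dict[int, Tuple[float, float]]:
    """Per-class Dice as (mean, sample s.d.) in percent over the evaluated images."""
    per_class: Dict[int, List[float]] = {c: [] for c in range(num_classes)}
    for pred, target in pairs:
        for c, d in dice_per_class(pred, target, num_classes).items():
            per_class[c].append(100.0 * d)
    out = {}
    for c, vals in per_class.items():
        if vals:
            sd = statistics.stdev(vals) if len(vals) > 1 else 0.0
            out[c] = (statistics.mean(vals), sd)
    return out


def average_dice(summary: Dict[int, Tuple[float, float]]) -> float:
    """Average of the per-class mean Dice values (e.g., over eight ILD patterns)."""
    return statistics.mean([m for m, _ in summary.values()])


# Toy example: two 2-pixel images, two classes.
s = summarize([([0, 1], [0, 1]), ([0, 0], [0, 1])], num_classes=2)
for c, (mean, sd) in sorted(s.items()):
    print(f"class {c}: {mean:.2f} ± {sd:.2f}%")
print(f"average Dice: {average_dice(s):.2f}%")
```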

Impact of Iterative Re-training. In our work, we adopted the recently proposed selective re-training strategy [17], which selects reliable pseudo-labeled samples and treats them as true labeled data for re-training our ESSegILD model. To further boost performance, we repeated the following procedure several times: assigning pseudo-labels to unlabeled images using the current teacher model, selecting reliable pseudo-labeled data to expand the labeled dataset, and re-training the model on the updated dataset. Intuitively, model performance improves as the number of training rounds increases, but so does the training time. Therefore, an appropriate number of training rounds is needed to balance training time and segmentation performance. Figure 3 shows the relationship between the segmentation metric (i.e., the average Dice coefficient over the eight lung patterns) and the number of training rounds. As Figure 3 shows, segmentation performance improves as the number of training rounds increases, but the improvement slows noticeably beyond three rounds. To balance efficacy and efficiency, we set the number of training rounds to three in this work.
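The selection step can be sketched as follows, assuming the image-level stability criterion described for ST++ [17]: each unlabeled image is scored by the mean IoU between the masks predicted by earlier saved checkpoints and the mask of the final checkpoint, and the most stable fraction is kept as reliable pseudo-labeled data. This is a simplified, hypothetical sketch: the selection ratio, whether ESSegILD uses exactly this criterion, and the `train_fn`/`predict_fn` callables in the outer loop are our assumptions, not the authors' code.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Mask = List[int]  # flattened class-index map of one image


def mean_iou(a: Sequence[int], b: Sequence[int], num_classes: int) -> float:
    """Mean IoU over the classes that appear in either mask."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for x, y in zip(a, b) if x == c and y == c)
        union = sum(1 for x, y in zip(a, b) if x == c or y == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 1.0


def stability_score(checkpoint_masks: Sequence[Mask], num_classes: int) -> float:
    """Agreement of earlier checkpoints' masks with the final checkpoint's mask."""
    if len(checkpoint_masks) < 2:
        raise ValueError("need masks from at least two checkpoints")
    final = checkpoint_masks[-1]
    earlier = checkpoint_masks[:-1]
    return sum(mean_iou(m, final, num_classes) for m in earlier) / len(earlier)


def select_reliable(masks: Dict[str, Sequence[Mask]], num_classes: int,
                    ratio: float = 0.5) -> List[str]:
    """Rank unlabeled images by stability and keep the top `ratio` fraction."""
    ranked = sorted(masks, key=lambda k: stability_score(masks[k], num_classes),
                    reverse=True)
    return ranked[:int(ratio * len(ranked))]


def self_train(labeled: List[Tuple[str, Mask]], unlabeled: List[str],
               train_fn: Callable[[List[Tuple[str, Mask]]], object],
               predict_fn: Callable[[object, str], Sequence[Mask]],
               num_classes: int, rounds: int = 3, ratio: float = 0.5) -> object:
    """Hypothetical outer loop: pseudo-label, select reliable images, re-train."""
    model = train_fn(list(labeled))  # initial model from labeled data only
    for _ in range(rounds):
        # predict_fn returns one mask per saved checkpoint, final checkpoint last.
        masks = {img: predict_fn(model, img) for img in unlabeled}
        chosen = select_reliable(masks, num_classes, ratio)
        # Reliable pseudo-labels (final-checkpoint masks) are treated as true labels.
        train_set = list(labeled) + [(img, list(masks[img][-1])) for img in chosen]
        model = train_fn(train_set)
    return model
```

Ranking by stability rather than by per-pixel confidence favors images whose predictions stop changing during training, which matches the text's emphasis on prioritizing "more reliable and easier" images in early re-training rounds.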

Figure 3.

The average Dice coefficient (%) vs. the number of training rounds. The average Dice is the mean segmentation performance of the model over the eight ILD patterns. The improvement in the Dice coefficient becomes less pronounced after a certain number of training rounds. To balance segmentation performance and training time, the number of training rounds was limited to three in this study.

4.3. Comparison with the State-of-the-Art Semi-Supervised Segmentation Methods

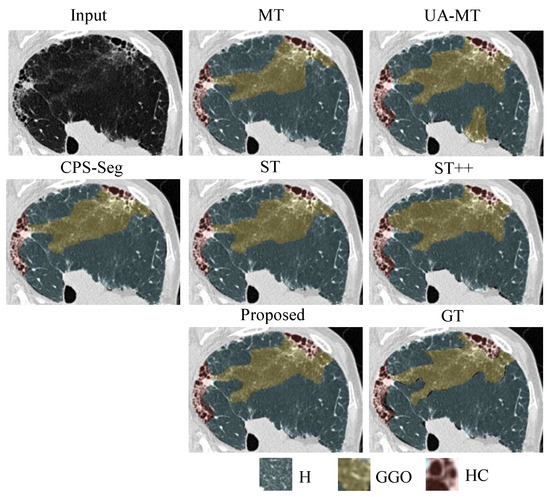

We implemented several state-of-the-art semi-supervised segmentation methods for comparison. For a fair comparison, all methods used the same network (HRNet). Table 3 shows the quantitative results of the compared methods, including MT, UA-MT, CPS-Seg, ST, ST++, and our proposed ESSegILD, for segmenting eight ILD patterns from high-resolution CT images. As shown in Table 3, of the two self-training methods (ST and ST++), ST++ performs better than ST because it selects trustworthy pseudo-labels, treats them as true labels to augment the labeled dataset, and then trains the model progressively. This demonstrates the effectiveness of the selective re-training strategy in [17] for improving semi-supervised learning. Motivated by the improvement achieved by ST++, our proposed ESSegILD combines the selective re-training strategy with consistency regularization to better leverage abundant unlabeled data. As shown in Table 3, ESSegILD achieves the best Dice coefficient for all lung patterns. Specifically, ESSegILD surpasses methods based on improved consistency regularization, such as UA-MT and CPS-Seg, as well as the self-training methods without consistency regularization (i.e., ST and ST++), which suggests the efficacy of combining consistency regularization and selective re-training in semi-supervised learning. We also show local and global visual results of all compared methods in Figure 4 and Figure 5, which are consistent with the quantitative results: the predicted masks from ESSegILD are the closest to the manually outlined masks. Several factors may contribute to the better segmentation results of our method. Previous semi-supervised segmentation approaches were typically developed and evaluated on large-scale natural-image datasets and may therefore not perform optimally on CT images with ILDs. 
Additionally, factors such as the choice of loss functions, the criteria for selecting pseudo-labels, and the ratio of pseudo-labeled data selected for re-training can significantly affect the final segmentation results. In ESSegILD, a better-pretrained teacher model can generate higher-quality pseudo-labels at the beginning of the iterative re-training process, which may also be a key factor behind the better experimental results.
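The consistency-regularization side of ESSegILD builds on Mean Teacher [16], in which the teacher's weights are an exponential moving average (EMA) of the student's, and a consistency term penalizes disagreement between the two models' predictions under different perturbations. A minimal stdlib sketch of these ingredients is given below, operating on flat weight lists and per-pixel logits. The EMA decay α = 0.99, the mean-squared-error form of the consistency loss, and the exp(-5(1 - t)²) ramp-up are common choices in the Mean Teacher literature and are assumptions here, not settings confirmed for ESSegILD.

```python
import math
from typing import List, Sequence


def ema_update(teacher: Sequence[float], student: Sequence[float],
               alpha: float = 0.99) -> List[float]:
    """Mean Teacher weight update: theta_t <- alpha * theta_t + (1 - alpha) * theta_s."""
    return [alpha * t + (1.0 - alpha) * s for t, s in zip(teacher, student)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def consistency_loss(student_logits: Sequence[Sequence[float]],
                     teacher_logits: Sequence[Sequence[float]]) -> float:
    """Mean squared difference between per-pixel class probabilities.

    The teacher output acts as a fixed target (no gradient flows into it).
    """
    total, count = 0.0, 0
    for s_px, t_px in zip(student_logits, teacher_logits):
        p_s, p_t = softmax(s_px), softmax(t_px)
        total += sum((a - b) ** 2 for a, b in zip(p_s, p_t))
        count += len(p_s)
    return total / count


def consistency_weight(step: int, rampup_steps: int,
                       max_weight: float = 1.0) -> float:
    """Sigmoid-shaped ramp-up exp(-5 (1 - t)^2), a common Mean Teacher schedule."""
    if rampup_steps <= 0:
        return max_weight
    t = min(step, rampup_steps) / rampup_steps
    return max_weight * math.exp(-5.0 * (1.0 - t) ** 2)
```

In a training step under these assumptions, the student would minimize the supervised loss on labeled pixels plus `consistency_weight(step, rampup_steps)` times `consistency_loss` on differently perturbed views of unlabeled slices, after which `ema_update` refreshes the teacher that also produces the pseudo-labels for selective re-training. All names here are hypothetical.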

Table 3.

Quantitative comparison with other semi-supervised methods, measured by the Dice coefficient (mean ± s.d., %). Bold font indicates the results of our model, which achieves the best performance for every class.

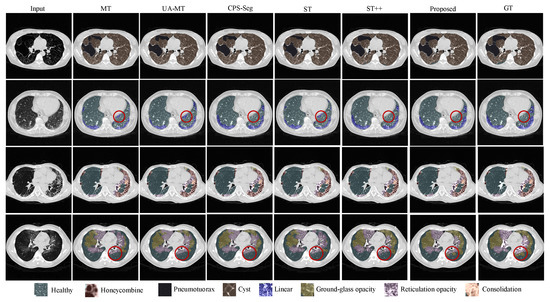

Figure 4.

Local visual results of different methods for segmenting ILD patterns from CT images. Three lung patterns (healthy, ground-glass opacity, and honeycombing) coexist in the displayed region of the CT slice. The predicted masks from our proposed ESSegILD are the closest to the ground-truth labels.

Figure 5.

Global visual results of different methods for segmenting eight ILD patterns from CT images. For easier comparison, some regions in each method's segmentation result are marked with red circles. In these regions, the predictions of the proposed method agree more closely with the ground truth than those of the other methods.

5. Discussion

For dense medical image segmentation, costly pixel-wise labeling and the required expert domain knowledge make it extremely difficult to collect large amounts of labeled data, hindering the development of data-driven deep learning models for automatic segmentation. Semi-supervised learning (SSL) can reduce the need for labeled data by incorporating unlabeled data into model training. In this work, we collected a dataset of 67 pneumonia patients containing over 11,000 CT images with eight typical ILD patterns and proposed ESSegILD, an effective semi-supervised framework that integrates consistency regularization and self-training (or pseudo-labeling) for recognizing multiple ILD patterns in CT images. The proposed model can automatically segment ILD lesions in CT images and may thereby help radiologists improve their diagnostic performance. We believe it can substantially reduce the burden on physicians and support patients’ treatment and follow-up.

In our work, we performed an extensive ablation study and a set of comparison experiments to validate the efficacy of the proposed ESSegILD. Several strategies contributed to the better segmentation results of our method. First, the backbone network adopted in our work, i.e., HRNet with parallel multi-resolution representations, is powerful enough to effectively learn features from entire CT images. Second, we propose an effective way to utilize unlabeled data at scale. Other choices, such as appropriate loss functions and the selection ratio of pseudo-labels in the selective re-training data, may also contribute to the improved segmentation performance. However, our main contribution lies in progressively leveraging unlabeled images by selecting and prioritizing more reliable and easier images in the re-training phase and by encouraging consistent predictions from different networks for the same input.

Although our proposed method can take advantage of unlabeled images, it does not take into account the inter-slice consistency of a CT volume; that is, the features of adjacent slices in a CT volume are highly correlated, and lesions usually appear consecutively across adjacent slices. Moreover, the pseudo-labels selected by our method may inevitably include some of poor quality, limiting the model’s potential to achieve better results. Addressing these limitations requires effectively exploiting inter-slice consistency information and the potentially poor-quality pseudo-labeled samples when segmenting multiple ILD patterns. In the future, we will explore methods to utilize inter-slice consistency information and to make full use of potentially poor-quality pseudo-labels. We also aim to integrate the model into a CAD system to assist radiologists in the differential diagnosis of ILDs.

6. Conclusions

In this paper, we developed an end-to-end semi-supervised segmentation method for ILD pattern recognition, called ESSegILD. Extensive ablation studies and comparison experiments demonstrate the effectiveness of our approach. Specifically, the adopted HRNet, which maintains high-resolution representations throughout the network, enhances the ability of the model to capture the discriminative features of ILD patterns. The iterative re-training strategy selects more reliable pseudo-labels to boost segmentation performance, progressively expanding the labeled set for subsequent training rounds. Finally, ESSegILD, which combines consistency regularization and self-training, achieves substantial performance improvements over state-of-the-art semi-supervised segmentation methods. In future research, we will address the limitations of the current method by utilizing inter-slice consistency information and leveraging poor-quality pseudo-labeled samples to further boost segmentation performance.

Author Contributions

Conceptualization, G.-W.C. and W.Y.; methodology, G.-W.C. and Y.-B.L.; data curation, Y.D., R.-H.L. and Q.-S.Z.; writing—original draft preparation, G.-W.C.; writing—review and editing, W.Y. and Y.-B.L.; funding acquisition, W.Y., Q.-J.F. and Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by grants from the National Natural Science Foundation of China (No. 82172020), the National Key R&D Program of China (No. 2021YFC2500700), and the Guangdong Provincial Key Laboratory of Medical Image Processing (No. 2020B1212060039).

Institutional Review Board Statement

This study was approved by the Ethics Committee of the First Affiliated Hospital of Guangzhou Medical University.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The interstitial lung disease (ILD) dataset used in this study is available from the corresponding author upon request, owing to privacy and ethical restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jeganathan, N.; Sathananthan, M. The prevalence and burden of interstitial lung diseases in the USA. Eur. Respir. Soc. 2022, 8, 00630-2021.

- Trusculescu, A.A.; Manolescu, D.; Tudorache, E.; Oancea, C. Deep learning in interstitial lung disease—How long until daily practice. Eur. Radiol. 2020, 30, 6285–6292.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Zhang, Y.; Deng, L.; Zhu, H.; Wang, W.; Ren, Z.; Zhou, Q.; Lu, S.; Sun, S.; Zhu, Z.; Gorriz, J.M.; et al. Deep Learning in Food Category Recognition. Inf. Fusion 2023, 98, 101859.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Lu, S.; Wang, S.H.; Zhang, Y.D. Detection of abnormal brain in MRI via improved AlexNet and ELM optimized by chaotic bat algorithm. Neural Comput. Appl. 2021, 33, 10799–10811.

- Zhao, W.; Xu, R.; Hirano, Y.; Tachibana, R.; Kido, S. Classification of diffuse lung diseases patterns by a sparse representation based method on HRCT images. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5457–5460.

- Anthimopoulos, M.; Christodoulidis, S.; Christe, A.; Mougiakakou, S. Classification of interstitial lung disease patterns using local DCT features and random forest. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 6040–6043.

- Song, Y.; Cai, W.; Huang, H.; Zhou, Y.; Feng, D.D.; Wang, Y.; Fulham, M.J.; Chen, M. Large margin local estimate with applications to medical image classification. IEEE Trans. Med. Imaging 2015, 34, 1362–1377.

- Anthimopoulos, M.; Christodoulidis, S.; Ebner, L.; Christe, A.; Mougiakakou, S. Lung pattern classification for interstitial lung diseases using a deep convolutional neural network. IEEE Trans. Med. Imaging 2016, 35, 1207–1216.

- Gao, M.; Xu, Z.; Lu, L.; Harrison, A.P.; Summers, R.M.; Mollura, D.J. Holistic interstitial lung disease detection using deep convolutional neural networks: Multi-label learning and unordered pooling. arXiv 2017, arXiv:1701.05616.

- Gao, M.; Bagci, U.; Lu, L.; Wu, A.; Buty, M.; Shin, H.C.; Roth, H.; Papadakis, G.Z.; Depeursinge, A.; Summers, R.M.; et al. Holistic classification of CT attenuation patterns for interstitial lung diseases via deep convolutional neural networks. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 6, 1–6.

- Anthimopoulos, M.; Christodoulidis, S.; Ebner, L.; Geiser, T.; Christe, A.; Mougiakakou, S. Semantic segmentation of pathological lung tissue with dilated fully convolutional networks. IEEE J. Biomed. Health Inform. 2018, 23, 714–722.

- Bermejo-Peláez, D.; Ash, S.Y.; Washko, G.R.; San José Estépar, R.; Ledesma-Carbayo, M.J. Classification of interstitial lung abnormality patterns with an ensemble of deep convolutional neural networks. Sci. Rep. 2020, 10, 338.

- Depeursinge, A.; Vargas, A.; Platon, A.; Geissbuhler, A.; Poletti, P.A.; Müller, H. Building a reference multimedia database for interstitial lung diseases. Comput. Med. Imaging Graph. 2012, 36, 227–238.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. ST++: Make self-training work better for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4268–4277.

- Korfiatis, P.D.; Karahaliou, A.N.; Kazantzi, A.D.; Kalogeropoulou, C.; Costaridou, L.I. Texture-based identification and characterization of interstitial pneumonia patterns in lung multidetector CT. IEEE Trans. Inf. Technol. Biomed. 2009, 14, 675–680.

- van Tulder, G.; de Bruijne, M. Learning features for tissue classification with the classification restricted Boltzmann machine. In Proceedings of the Medical Computer Vision: Algorithms for Big Data: International Workshop (MCV 2014)—Held in Conjunction with MICCAI 2014, Cambridge, MA, USA, 18–20 September 2014; Revised Selected Papers 4. Springer: Cham, Switzerland, 2014; pp. 47–58.

- Christodoulidis, S.; Anthimopoulos, M.; Ebner, L.; Christe, A.; Mougiakakou, S. Multisource transfer learning with convolutional neural networks for lung pattern analysis. IEEE J. Biomed. Health Inform. 2016, 21, 76–84.

- Wang, Q.; Zheng, Y.; Yang, G.; Jin, W.; Chen, X.; Yin, Y. Multiscale rotation-invariant convolutional neural networks for lung texture classification. IEEE J. Biomed. Health Inform. 2017, 22, 184–195.

- Guo, W.; Xu, Z.; Zhang, H. Interstitial lung disease classification using improved DenseNet. Multimed. Tools Appl. 2019, 78, 30615–30626.

- Pawar, S.P.; Talbar, S.N. Two-stage hybrid approach of deep learning networks for interstitial lung disease classification. BioMed Res. Int. 2022, 2022, 7340902.

- Gupta, A.U.; Singh Bhadauria, S. Multi Level Approach for Segmentation of Interstitial Lung Disease (ILD) Patterns Classification Based on Superpixel Processing and Fusion of K-Means Clusters: SPFKMC. Comput. Intell. Neurosci. 2022, 2022, 4431817.

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Aliboni, L.; Pennati, F.; Dias, O.; Baldi, B.; Sawamura, M.; Chate, R.; De Carvalho, C.R.; Aliverti, A. Convolutional neural network (CNN) for interstitial lung disease (ILD) patterns recognition. Eur. Respir. J. 2019, 54, PA3926.

- Hwang, H.J.; Seo, J.B.; Lee, S.M.; Kim, E.Y.; Park, B.; Bae, H.J.; Kim, N. Content-based image retrieval of chest CT with convolutional neural network for diffuse interstitial lung disease: Performance assessment in three major idiopathic interstitial pneumonias. Korean J. Radiol. 2021, 22, 281.

- Aoki, R.; Iwasawa, T.; Saka, T.; Yamashiro, T.; Utsunomiya, D.; Misumi, T.; Baba, T.; Ogura, T. Effects of Automatic Deep-Learning-Based Lung Analysis on Quantification of Interstitial Lung Disease: Correlation with Pulmonary Function Test Results and Prognosis. Diagnostics 2022, 12, 3038.

- Laine, S.; Aila, T. Temporal ensembling for semi-supervised learning. arXiv 2016, arXiv:1610.02242.

- Miyato, T.; Maeda, S.i.; Koyama, M.; Ishii, S. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1979–1993.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. MixMatch: A holistic approach to semi-supervised learning. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Berthelot, D.; Carlini, N.; Cubuk, E.D.; Kurakin, A.; Sohn, K.; Zhang, H.; Raffel, C. ReMixMatch: Semi-supervised learning with distribution alignment and augmentation anchoring. arXiv 2019, arXiv:1911.09785.

- Yu, L.; Wang, S.; Li, X.; Fu, C.W.; Heng, P.A. Uncertainty-aware self-ensembling model for semi-supervised 3D left atrium segmentation. In Proceedings of the 22nd International Conference of the Medical Image Computing and Computer Assisted Intervention (MICCAI 2019), Shenzhen, China, 13–17 October 2019; Proceedings—Part II 22. Springer: Cham, Switzerland, 2019; pp. 605–613.

- Cao, X.; Chen, H.; Li, Y.; Peng, Y.; Wang, S.; Cheng, L. Uncertainty aware temporal-ensembling model for semi-supervised ABUS mass segmentation. IEEE Trans. Med. Imaging 2020, 40, 431–443.

- Wang, G.; Liu, X.; Li, C.; Xu, Z.; Ruan, J.; Zhu, H.; Meng, T.; Li, K.; Huang, N.; Zhang, S. A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Trans. Med. Imaging 2020, 39, 2653–2663.

- Xie, Q.; Dai, Z.; Hovy, E.; Luong, T.; Le, Q. Unsupervised data augmentation for consistency training. Adv. Neural Inf. Process. Syst. 2020, 33, 6256–6268.

- Wang, Y.; Peng, J.; Zhang, Z. Uncertainty-aware pseudo label refinery for domain adaptive semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 9092–9101.

- Liu, Y.; Tian, Y.; Chen, Y.; Liu, F.; Belagiannis, V.; Carneiro, G. Perturbed and strict mean teachers for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4258–4267.

- Chen, J.; Fu, C.; Xie, H.; Zheng, X.; Geng, R.; Sham, C.W. Uncertainty teacher with dense focal loss for semi-supervised medical image segmentation. Comput. Biol. Med. 2022, 149, 106034.

- Xie, Q.; Luong, M.T.; Hovy, E.; Le, Q.V. Self-training with noisy student improves ImageNet classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10687–10698.

- Arazo, E.; Ortego, D.; Albert, P.; O’Connor, N.E.; McGuinness, K. Pseudo-labeling and confirmation bias in deep semi-supervised learning. In Proceedings of the IEEE 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Pham, H.; Dai, Z.; Xie, Q.; Le, Q.V. Meta pseudo labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11557–11568.

- Shi, Y.; Zhang, J.; Ling, T.; Lu, J.; Zheng, Y.; Yu, Q.; Qi, L.; Gao, Y. Inconsistency-aware uncertainty estimation for semi-supervised medical image segmentation. IEEE Trans. Med. Imaging 2021, 41, 608–620.

- Zhao, Z.; Zhou, L.; Wang, L.; Shi, Y.; Gao, Y. LaSSL: Label-guided self-training for semi-supervised learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 9208–9216.

- Chen, B.; Jiang, J.; Wang, X.; Wan, P.; Wang, J.; Long, M. Debiased Self-Training for Semi-Supervised Learning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

- Kim, M.; Kim, J.; Bento, J.; Song, G. Revisiting Self-Training with Regularized Pseudo-Labeling for Tabular Data. arXiv 2023, arXiv:2302.14013.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning (ICML), Atlanta, GA, USA, 16–21 June 2013; Volume 3, p. 896.

- Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice loss for data-imbalanced NLP tasks. arXiv 2019, arXiv:1911.02855.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

- Chen, X.; Yuan, Y.; Zeng, G.; Wang, J. Semi-supervised semantic segmentation with cross pseudo supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2613–2622.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).