An Isolated CNN Architecture for Classification of Finger-Tapping Tasks Using Initial Dip Images: A Functional Near-Infrared Spectroscopy Study

Abstract

1. Introduction

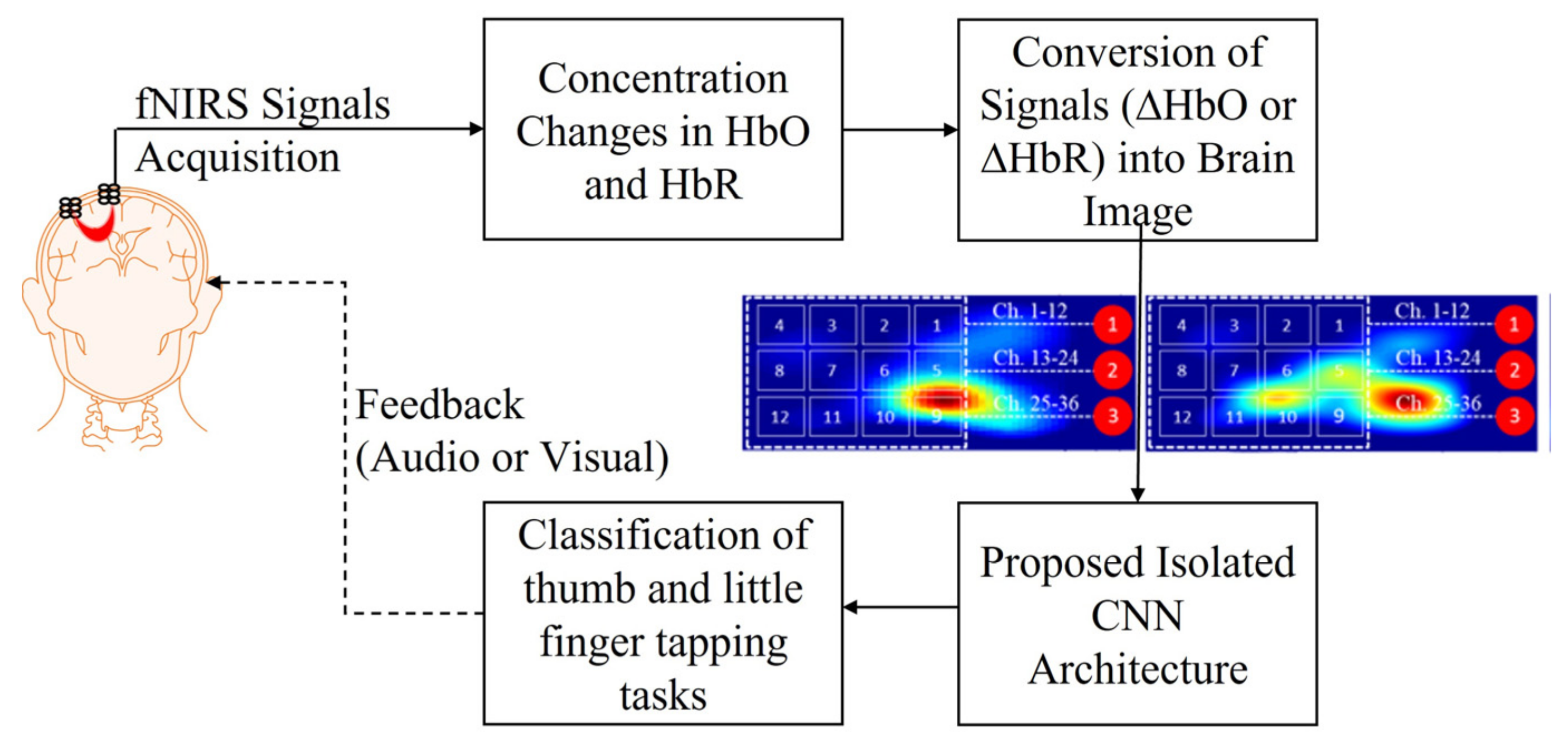

2. Materials and Methods

2.1. Experimental Data and Pre-Processing

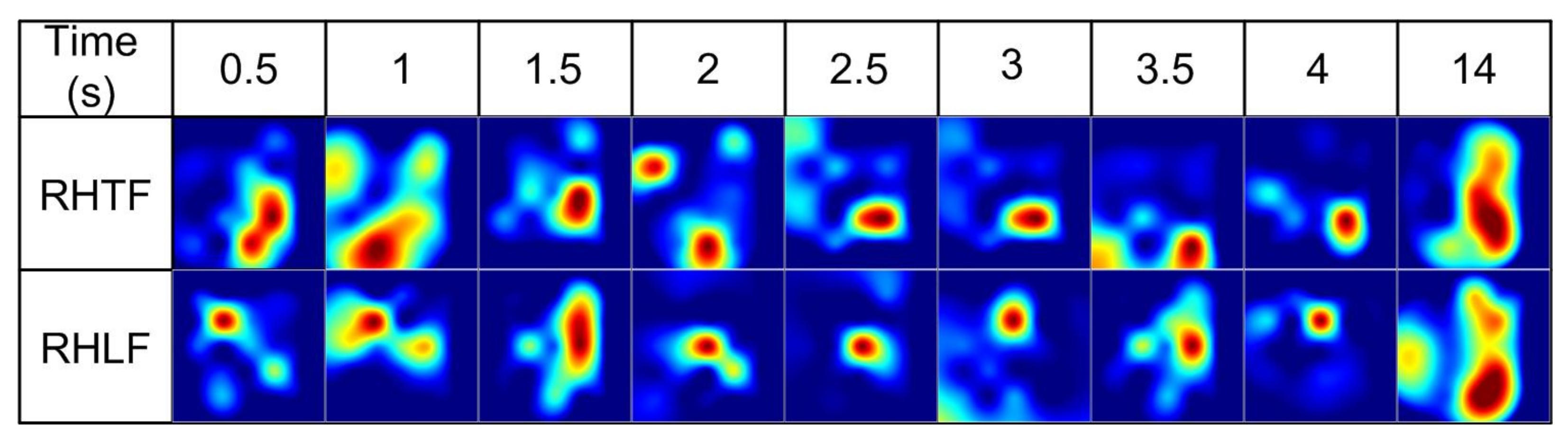

2.2. Image Construction

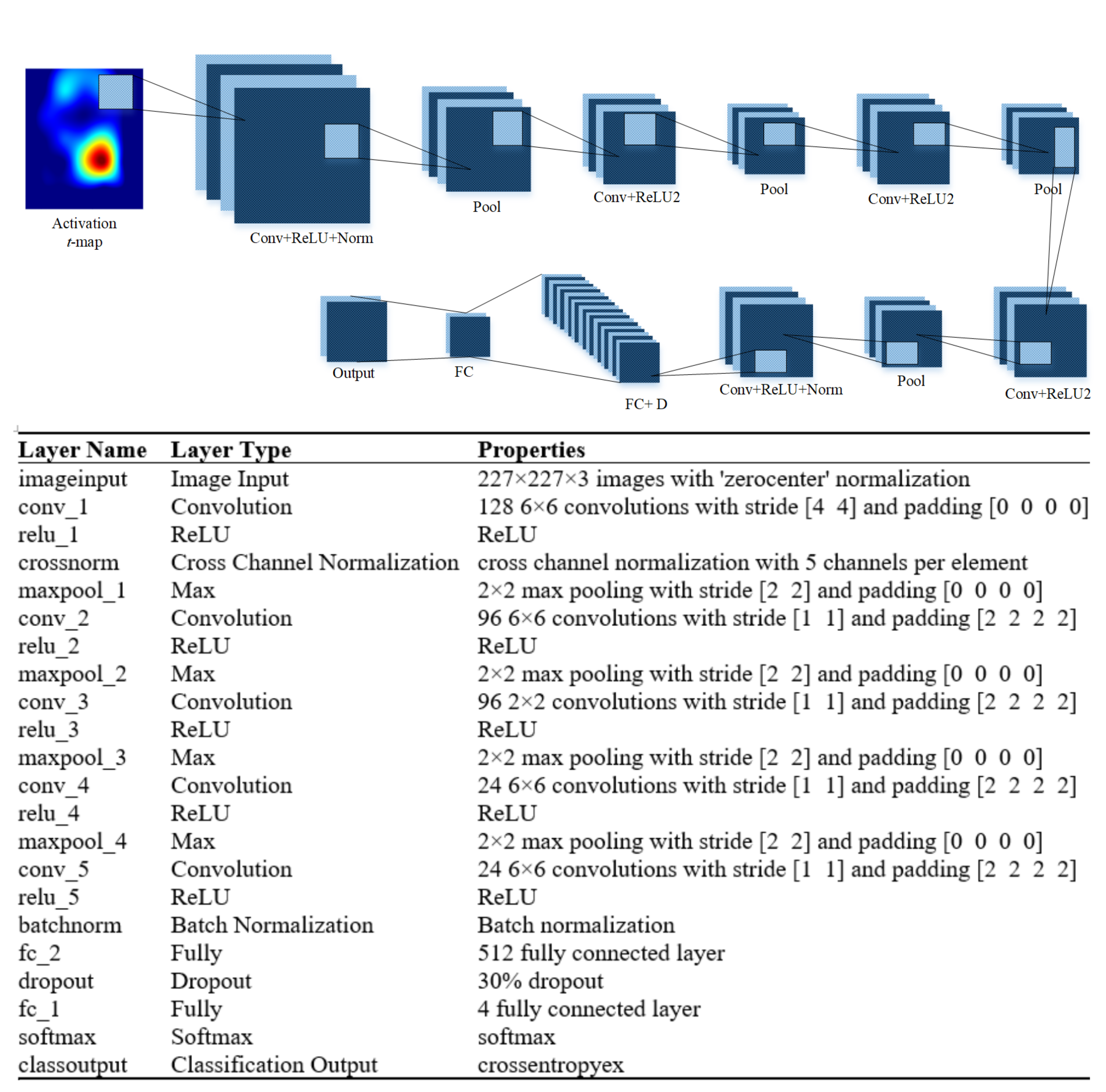

2.3. Isolated Convolutional Neural Network

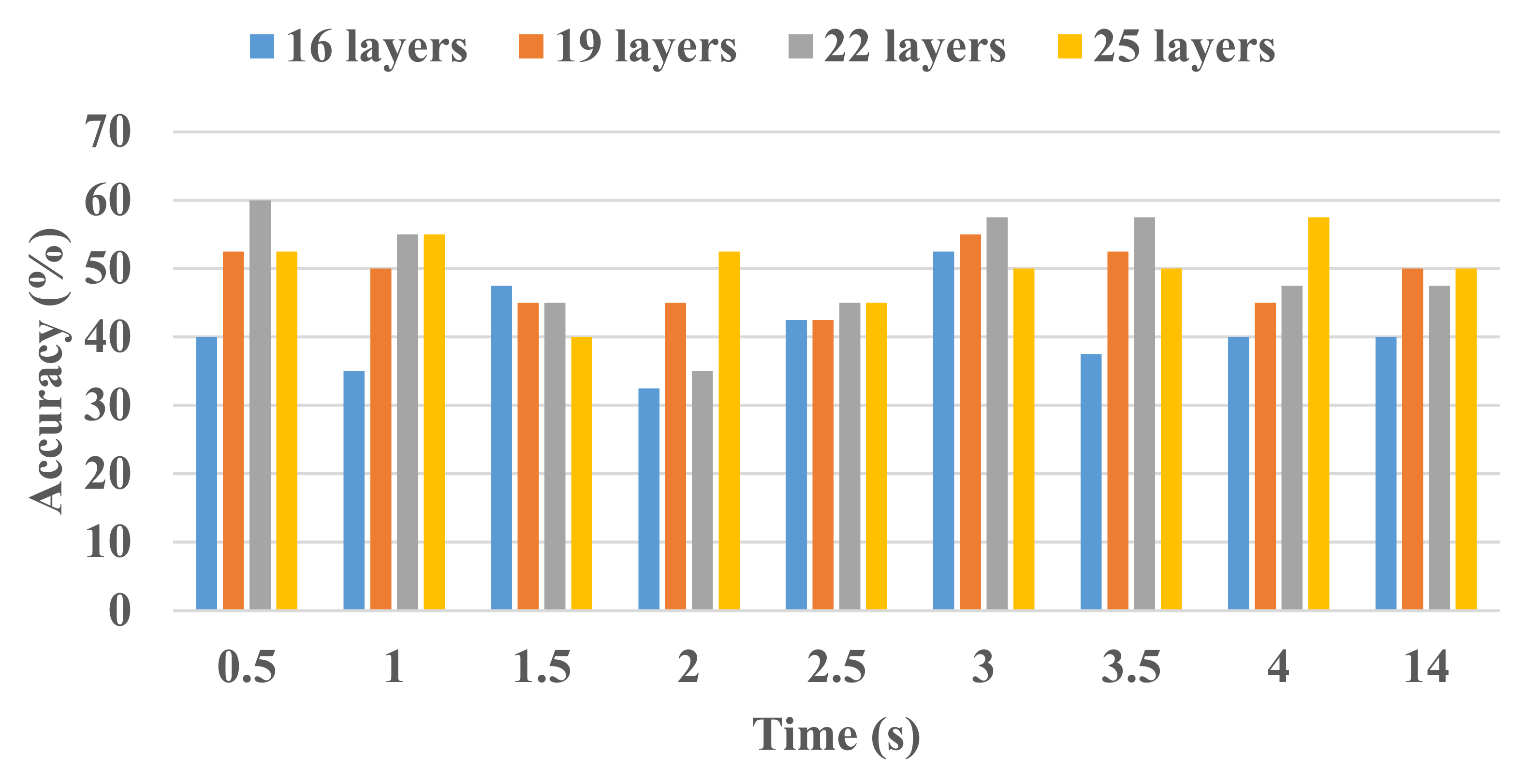

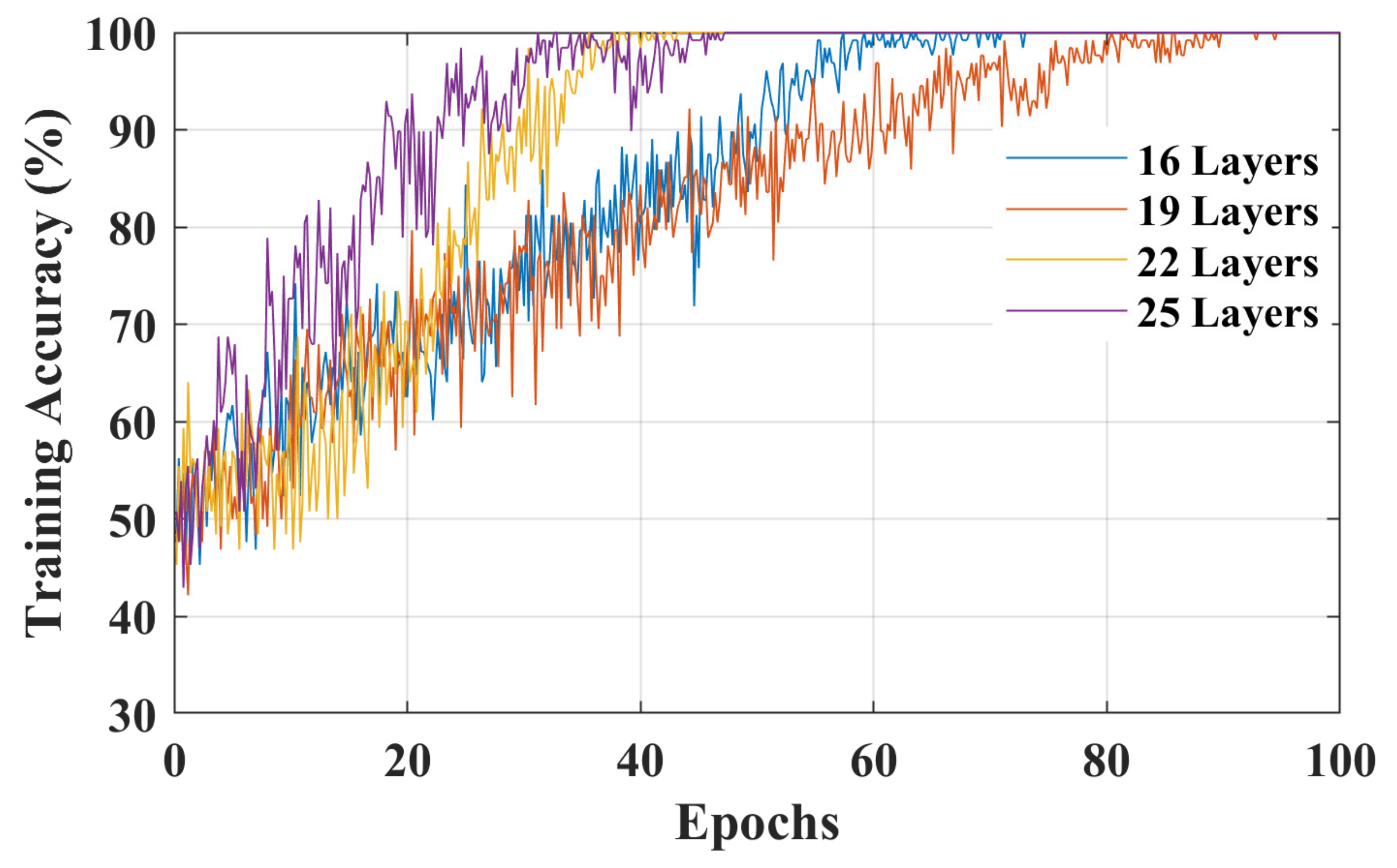

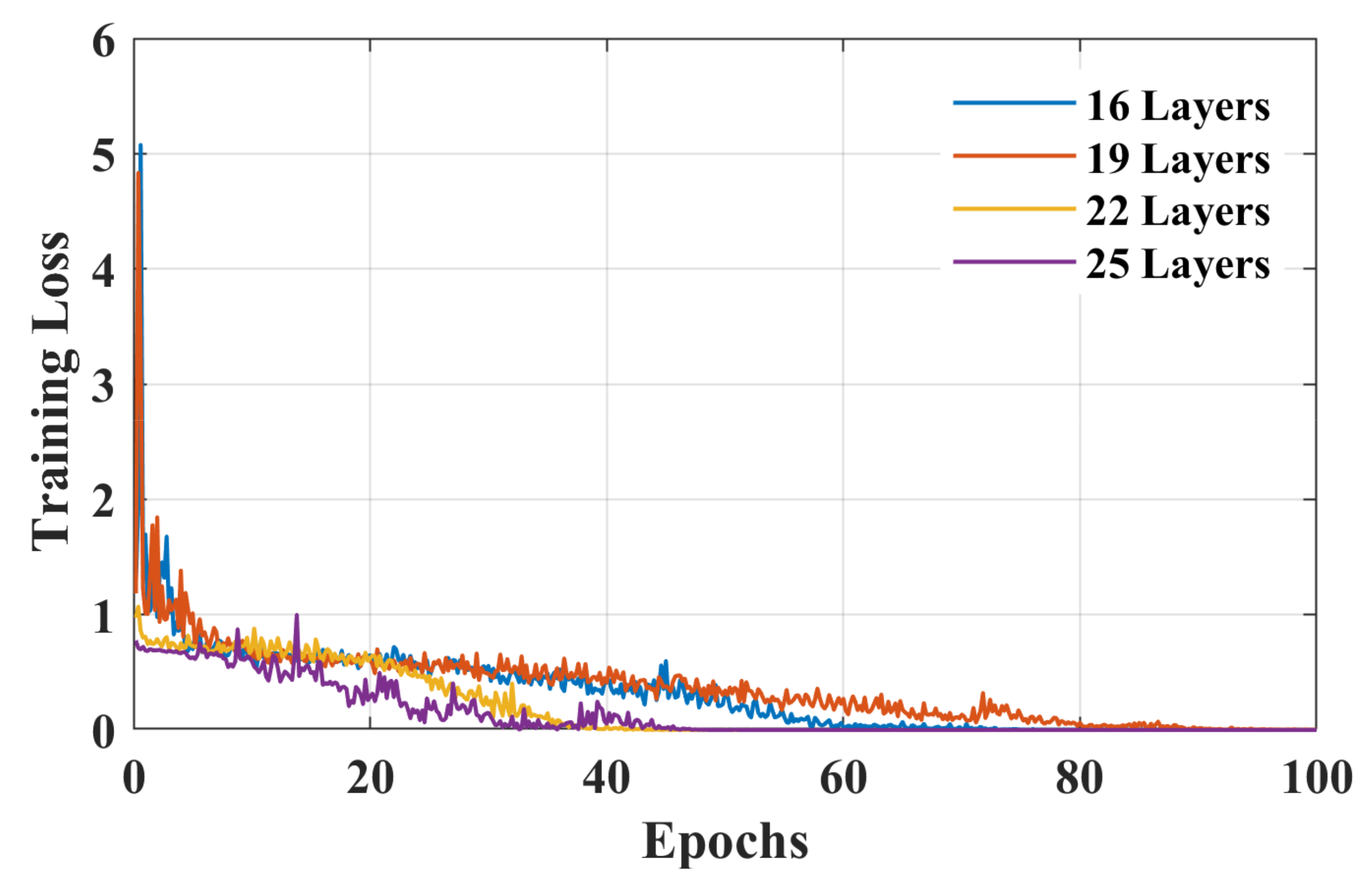

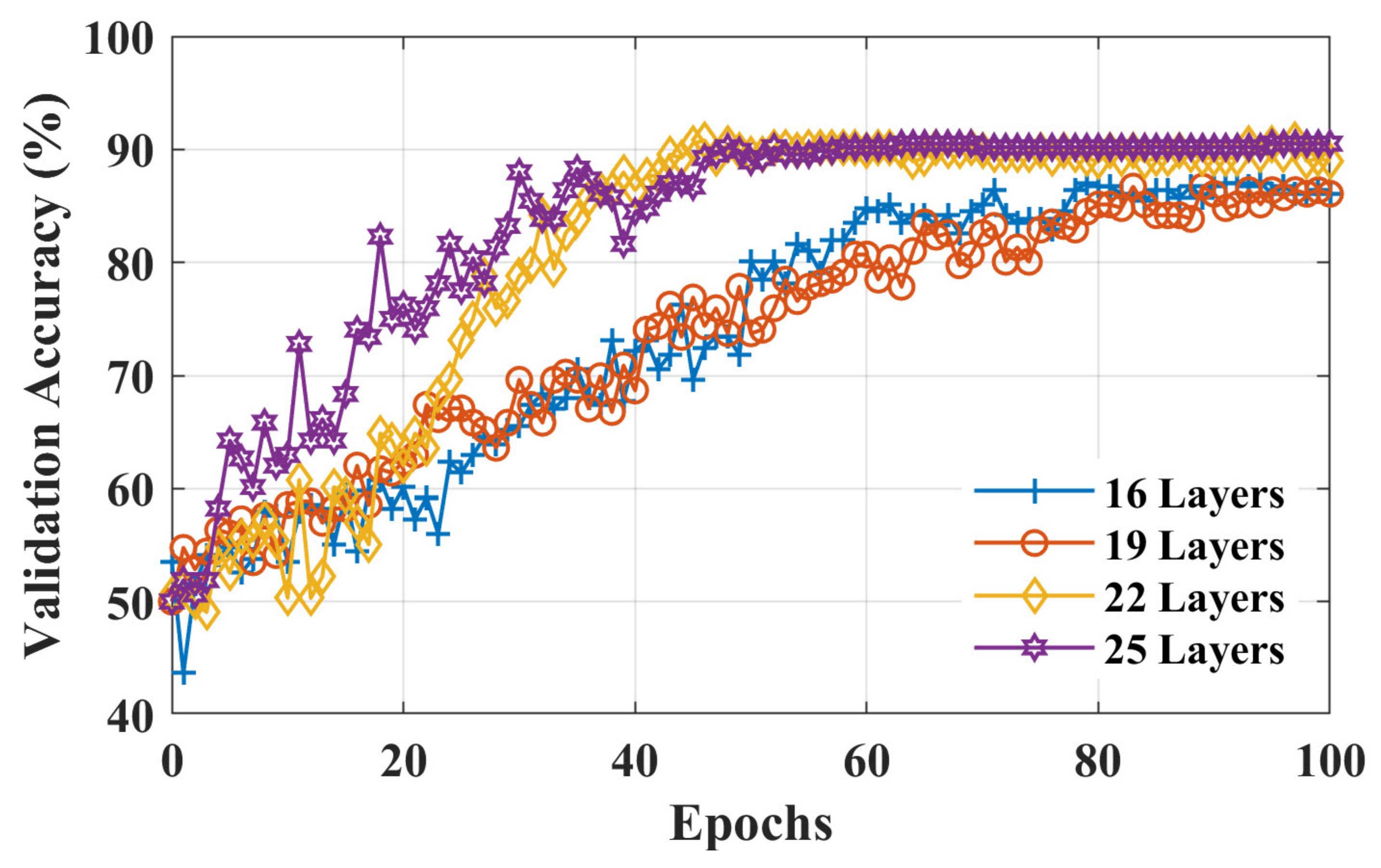

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Network Layers | Class | Classified as RHLF | Classified as RHTF | TPR (%) | FNR (%) | PPV (%) | FDR (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| 16 | RHLF | 136 | 22 | 86.1 | 13.9 | 85.5 | 14.5 | 85.8 |
| | RHTF | 23 | 135 | 85.4 | 14.6 | 86.0 | 14.0 | |
| 19 | RHLF | 134 | 24 | 84.8 | 15.2 | 87.0 | 13.0 | 86.1 |
| | RHTF | 20 | 138 | 87.3 | 12.7 | 85.2 | 14.8 | |
| 22 | RHLF | 140 | 18 | 88.6 | 11.4 | 89.7 | 10.3 | 89.2 |
| | RHTF | 16 | 142 | 89.9 | 10.1 | 88.8 | 11.3 | |
| 25 | RHLF | 144 | 14 | 91.1 | 8.9 | 88.9 | 11.1 | 89.9 |
| | RHTF | 18 | 140 | 88.6 | 11.4 | 90.9 | 9.1 | |
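The rates in the table follow from the confusion counts by the standard definitions: TPR is the share of a true class that is classified correctly, PPV is the share of a predicted class that is correct, and FNR and FDR are their complements. The sketch below recomputes them for the 25-layer network. It assumes that each table row is a true class and each "Classified as" column is a predicted class, which is consistent with the reported TPR values; the function name `confusion_metrics` is hypothetical and is not taken from the study.

```python
def confusion_metrics(cm, labels):
    """Per-class rates and overall accuracy, all in percent.

    Assumption: cm[i][j] is the number of samples whose true class is
    labels[i] and whose predicted class is labels[j].
    """
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(labels)))
    per_class = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        n_actual = sum(cm[i])                    # row sum: samples truly in this class
        n_predicted = sum(row[i] for row in cm)  # column sum: samples assigned to this class
        tpr = 100.0 * tp / n_actual
        ppv = 100.0 * tp / n_predicted
        per_class[label] = {
            "TPR": tpr,
            "FNR": 100.0 - tpr,
            "PPV": ppv,
            "FDR": 100.0 - ppv,
        }
    accuracy = 100.0 * correct / total
    return per_class, accuracy


# Confusion counts for the 25-layer network, copied from the table.
cm_25 = [[144, 14],
         [18, 140]]
per_class, accuracy = confusion_metrics(cm_25, ["RHLF", "RHTF"])

for label, rates in per_class.items():
    print(label, {name: round(value, 1) for name, value in rates.items()})
print("Accuracy:", round(accuracy, 1))
```

Rounded to one decimal place, these values match the 25-layer rows of the table (for example, a TPR of 91.1% for RHLF and an overall accuracy of 89.9%). Passing the counts of the other networks checks their rows in the same way.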

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ali, M.U.; Zafar, A.; Kallu, K.D.; Yaqub, M.A.; Masood, H.; Hong, K.-S.; Bhutta, M.R. An Isolated CNN Architecture for Classification of Finger-Tapping Tasks Using Initial Dip Images: A Functional Near-Infrared Spectroscopy Study. Bioengineering 2023, 10, 810. https://doi.org/10.3390/bioengineering10070810
