Abstract

Craniotomy is a fundamental component of neurosurgery that involves the removal of the skull bone flap. Simulation-based training of craniotomy is an efficient method to develop competent skills outside the operating room. Traditionally, an expert surgeon evaluates the surgical skills using rating scales, but this method is subjective, time-consuming, and tedious. Accordingly, the objective of the present study was to develop an anatomically accurate craniotomy simulator with realistic haptic feedback and objective evaluation of surgical skills. A CT scan segmentation-based craniotomy simulator with two bone flaps for the drilling task was developed using 3D printed bone matrix material. Force myography (FMG) and machine learning were used to automatically evaluate the surgical skills. Twenty-two neurosurgeons participated in this study, including novices (n = 8), intermediates (n = 8), and experts (n = 6), and they performed the defined drilling experiments. They provided feedback on the effectiveness of the simulator using a Likert scale questionnaire ranging from 1 to 10. The data acquired from the FMG band were used to classify the surgical expertise into novice, intermediate, and expert categories. The study employed naïve Bayes (NB), linear discriminant analysis (LDA), support vector machine (SVM), and decision tree (DT) classifiers with leave-one-subject-out cross-validation. The neurosurgeons’ feedback indicated that the developed simulator was an effective tool to hone drilling skills. In addition, the bone matrix material provided good value in terms of haptic feedback (average score 7.1). For FMG-data-based skills evaluation, the maximum accuracy was achieved using the naïve Bayes classifier (90.0 ± 14.8%). DT had a classification accuracy of 86.2 ± 20.8%, LDA had an accuracy of 81.9 ± 23.6%, and SVM had an accuracy of 76.7 ± 32.9%.
The findings of this study indicate that materials with comparable biomechanical properties to those of real tissues are more effective for surgical simulation. In addition, force myography and machine learning provide objective and automated assessment of surgical drilling skills.

1. Introduction

Craniotomy is a surgical procedure in which a flap of the skull bone is removed in order to expose the dura and access the brain [1]. It is an integral part of all neurosurgical procedures and involves the usage of high-speed drills and other specialized instruments [2]. During a craniotomy procedure, dural tear or rupture can have several adverse consequences, such as cerebrospinal fluid (CSF) leak, infection, hematoma, or brain herniation [3,4]. Furthermore, patients suffering from traumatic head injuries in rural areas require immediate surgical intervention even in the absence of neurosurgeons [5]. Therefore, competent training of craniotomy procedures in a safe and repeatable environment is essential for neurosurgeons as well as community general surgeons [6,7]. However, the typical time-based apprenticeship paradigm for craniotomy training lacks hands-on experience in the early stages and may compromise patient safety later on. Therefore, the concept of competency-based training is being adopted widely [8]. This method includes use of simulation-based training models to hone skills outside the operating room in a safe and repeatable manner [9].

Physical as well as virtual reality simulators can be used to provide simulation-based training [10,11]. Among these, physical simulators provide real-time haptic feedback and are more effective for psychomotor skills training [10]. Patient-specific physical simulators constructed using computed tomography (CT) and magnetic resonance imaging (MRI) data of patients provide a more realistic training environment [12]. Typically, 3D printing is employed to fabricate the intricate structures of a physical simulator due to their complex shape [13]. Various 3D printed materials, such as acrylonitrile butadiene styrene (ABS) plastic, polyamide, gypsum, and polymers are used to fabricate cranial bones for surgical simulators [14]. However, the majority of these lack biomechanical properties and do not accurately replicate natural bone drilling [15]. Realistic tissue fidelity is essential to provide a tool–tissue interaction feel similar to surgery. Therefore, physical neurosurgical simulators require materials that mimic the biomechanical properties of the skull [16].

Evaluation of surgical skills is essential for competency-based training, since it provides trainees with feedback on their performance [17]. Traditionally, the evaluation of surgical skills relied on an expert surgeon assessing the performance of trainees; however, this method suffers from inter-observer bias and the limited availability of experts [18]. The virtual reality simulators have a defined workspace, which makes skill validation considerably more straightforward [19]. However, with physical simulators, evaluating surgical skills is more challenging. The objective evaluation on physical simulators can be carried out using computer vision techniques or by using electronic sensors. Video-based analysis includes tracking and analysis of the surgical instrument movements [20]. However, video cannot capture the data related to tool–tissue interaction, such as applied force, muscular workload, cognitive workload, and gaze pattern. Therefore, various sensors, including electroencephalography (EEG) [21], electromyography (EMG) [22], inertial measurement units (IMU) [23], force sensors [24], and eye-tracking [25], have been employed in previous studies to evaluate surgical skills. However, the sensor-based data collection system must be minimal and should not impede the natural movements of the surgeons [26].

The aim of the present study was to develop a craniotomy simulator and a system for the objective evaluation of surgical skills. The specific contributions of the present work are as follows: (i) a realistic neurosurgical craniotomy simulator for microscopic drilling activity was developed and validated; (ii) 3D printed materials that mimic biomechanical properties were used to fabricate the skull and dura; (iii) a force myography band was developed to measure the variation in forearm muscle radial force patterns during surgical drilling activity; (iv) various machine learning algorithms were used to classify the level of surgical expertise.

2. Methodology

2.1. Simulator Design and Fabrication

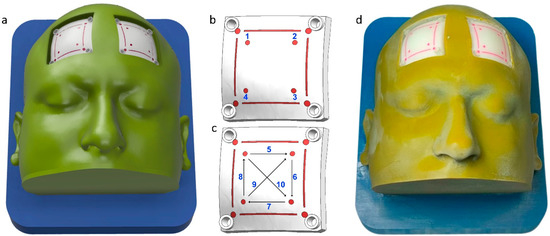

A patient’s CT scan data were obtained after written consent. The data were used to segment the scalp, skull, and dura using Simpleware ScanIP software R-2021.03 (Synopsys Inc, Mountain View, CA, United States). The editable 3D models of these anatomical structures were exported in the STEP format using the ScanIP NURBS module. The STEP files were imported into the NX 2007 (Siemens, Munich, Germany) computer-aided design software. Two square-shaped cutouts were created in the frontal region of the scalp to expose the skull. Inside these cutouts, two flaps for the craniotomy training were created. Support structures were modeled around these two flaps, and holes were made to fix the flaps to the scalp. An angulated base plate was designed to hold the simulator in the surgical position for the craniotomy procedure. Burr holes and lines were also marked on the drilling patches to guide the defined drilling activity [27]. The drilling activity included making four burr holes, four straight lines, and two diagonal lines. A digital anatomy 3D printer (J750, Stratasys, Rehovot, Israel) was utilized to fabricate the components of the simulator. The outer body and base plate were fabricated using a combination of Vero cyan, magenta, and yellow materials. The drilling patches and the dura were fabricated using bone matrix and Agilus materials, respectively [16]. The CAD model, drilling activity, and 3D printed prototype of the craniotomy simulator are shown in Figure 1a–d.

Figure 1.

Craniotomy simulator with removable flaps for drilling activity. (a) CAD model, (b) 1–4 burr hole drilling activity, (c) 5–8 straight lines, and 9–10 diagonal line drilling activity, and (d) 3D printed prototype.

2.2. Force Myography Band

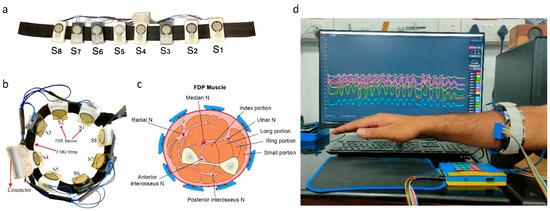

For neurosurgical drilling, small forces are applied on the bone using brush-like strokes of the drill bit. Evaluation of tool–tissue contact forces can, therefore, provide insights into surgical competency. As it was difficult and non-ergonomic to place force sensors between a tool and a surgeon’s hand, force myography (FMG) was used to evaluate the applied forces. FMG measures the volumetric changes in the arm muscles via radial force and has been widely used for hand gesture prediction [28]. Accordingly, an FMG band with eight flexible force sensitive resistors (FSRs) was designed as a wearable force-sensing device (FSR 400, force sensitivity range 0.2–20 N, Interlink Electronics Inc., Camarillo, CA, United States). The FSR sensors were calibrated using a universal testing machine (UTM) (H5KS, SDL Atlas, Rock Hill, SC, USA). The sensors were fixed on custom-made 3D printed mounts (40 × 22 mm) made of hard ABS plastic to support the back filament of the FSR. The design of the FSR mount allowed for the passage of wiring and an elastic strap. The circumference of the band was 22 cm when not stretched and could be extended to 28 cm when stretched. The data collection setup is depicted in Figure 2.

Figure 2.

Data collection setup. (a) Force myography band using an array of eight FSR sensors, 3D printed mounts, and elastic strap, (b) FMG band sensor placement sites on the forearm, (c) forearm cut section depicting sensor placement sites with respect to muscles, and (d) FMG band donned on the forearm and data recording.

A custom-made printed circuit board (PCB) was used to capture the signals of the eight FSR sensors of the FMG band. The PCB consisted of an Arduino Mega 2560 microcontroller (Arduino, Budapest, Hungary), a Bluetooth module (HC-05), and a battery monitoring system (3S 20A Li-ion Lithium Battery, 18650 Charger PCB BMS Protection Board 12.6 V Cell) to power the system and send the data to a remote desktop. A voltage divider circuit was used to convert the change in FSR resistance into a measurable voltage and to tune the sensors to operate in the desired region of their response curve. A constant voltage of 5 V was supplied to one terminal of each FSR, and the other terminal was connected to an analog input pin of the microcontroller. The same analog pin was also connected to a 10 kΩ resistor. To receive and visualize the signal, a freeware serial terminal application (Processing IDE, version 3.5.3) was used. Processing is an open-source platform that can receive the signal serially and store it in the .txt or .csv formats.
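The voltage divider described above can be illustrated numerically. The following is a minimal sketch, assuming that the 10 kΩ resistor ties the analog pin to ground and that readings come from the 10-bit ADC of the Arduino Mega 2560 (counts 0 to 1023). The function names are hypothetical and are not taken from the authors' firmware.

```python
V_SUPPLY = 5.0       # volts applied to one FSR terminal (from the text)
R_FIXED = 10_000.0   # ohms; assumed to connect the analog pin to ground
ADC_MAX = 1023       # full-scale count of a 10-bit ADC


def adc_to_voltage(count):
    """Convert a raw ADC count into the voltage seen at the analog pin."""
    return V_SUPPLY * count / ADC_MAX


def fsr_resistance(count):
    """Estimate the FSR resistance in ohms from a raw ADC count.

    Divider relation: V_out = V_SUPPLY * R_FIXED / (R_FSR + R_FIXED).
    A count of 0 means no measurable current, i.e. an unloaded sensor.
    """
    if count <= 0:
        return float("inf")
    v_out = adc_to_voltage(count)
    return R_FIXED * (V_SUPPLY - v_out) / v_out
```

Under these assumptions, a larger squeezing force lowers the FSR resistance and raises the ADC count, so a mid-scale reading corresponds to an FSR resistance close to the fixed 10 kΩ.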

2.3. Data Collection and Experimental Protocol

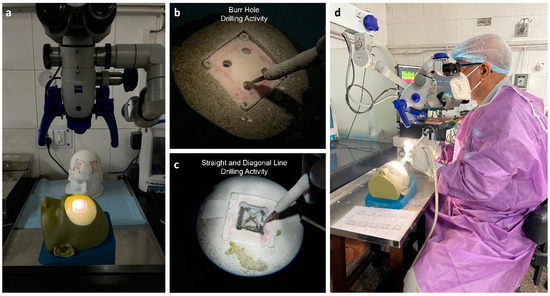

Twenty-two subjects were recruited for this study after giving written consent. All subjects were male and right-handed. Among these, 6 were experienced neurosurgeons (age: 49 ± 3.5 years) with more than 10 years of experience, 8 were senior residents (age: 29 ± 1.4 years) with 3 years of experience, and the other 8 were junior residents (age: 26 ± 1 years) with no experience of craniotomy procedures. The study was approved by the institute’s ethics committee (IEC-206/9 April 2021). The FMG strap was donned on the forearm of each participant’s dominant hand. The seventh sensor was positioned on the line between the styloid process of the ulna and the medial epicondyle of the humerus. The remaining sensors were evenly distributed around the forearm’s circumference. This configuration of sensors enabled comparable forearm muscle radial force measurements among different participants. The participants were instructed to perform three different activities, i.e., (1) drilling four burr holes, (2) drilling four straight lines, and (3) drilling two diagonal lines. All participants performed the defined drilling task on the patches placed on the right side of the simulator. An encoder (Grove rotary angle sensor) was attached to the foot pedal of the drilling machine to identify the drill on and off positions based on threshold values. During the drilling trials, eight-channel FMG data were acquired using the experimental setup depicted in Figure 3.

Figure 3.

Simulation-based training using a craniotomy simulator. (a) Operating microscope and craniotomy simulator, (b) burr hole drilling activity, (c) straight- and diagonal-line drilling activity, and (d) experimental setup.

2.4. Simulator Validation

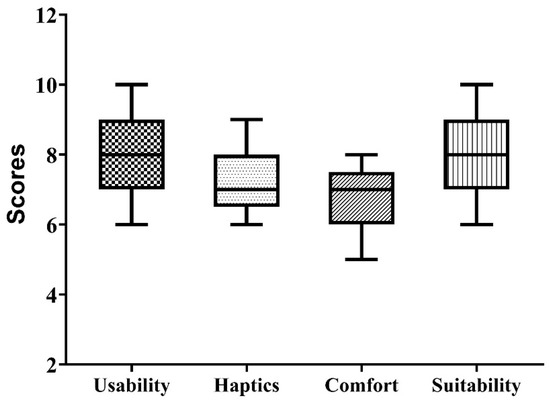

The effectiveness of the craniotomy simulator was evaluated by conducting a survey among all participants, including expert neurosurgeons, senior residents, and junior residents. After performing the training experiment with the simulator, the participants provided feedback on four parameters: usability, haptics, comfort, and suitability. Usability refers to the simulator’s usefulness for effective skill development outside the operating room. Haptics refers to the degree of resemblance between drilling the 3D printed bone surrogate and drilling real bone. Comfort relates to the ease of performing the drilling activity while wearing the FMG band on the forearm. Suitability refers to the neurosurgeons’ recommendation to include the developed simulator in the residency program. All participants rated their experience on a scale ranging from 1 to 10, with 1–3 indicating some value, 4–6 indicating good value, and 7–10 indicating excellent value. The neurosurgeons’ scores were tabulated in a Microsoft Excel spreadsheet.

2.5. FMG Data Preprocessing

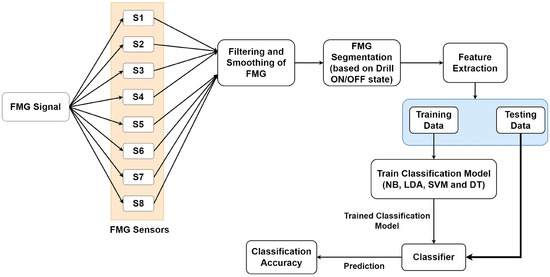

Participants’ eight-channel FMG data were imported into MATLAB R2020b for further analysis. As reported in the literature, a typical human hand movement frequency is less than 4.5 Hz [29]. Therefore, the collected FMG data of each participant were filtered using a fourth-order low-pass Butterworth filter with a cut-off frequency of 10 Hz. In addition, a 10-point moving average filter was used to further smooth the FMG data. The complete FMG data of each participant were segmented into drilling and resting states based on the threshold value of the foot pedal marker signal. The segmented data were then forwarded to the feature extraction step. The complete methodology for FMG data analysis is shown in Figure 4.

Figure 4.

Methodology for the analysis of force myography (FMG) data for skills evaluation.
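The preprocessing chain of Section 2.5 can be sketched in a few lines. The study used MATLAB, so the Python code below is only an illustration under stated assumptions: the filter is applied in a single forward pass (the text does not say whether zero-phase filtering was used), the sampling rate is a hypothetical parameter because it is not reported, and the pedal threshold is likewise a placeholder. The fourth-order Butterworth filter is built as two cascaded second-order sections obtained with the bilinear transform.

```python
import math


def butter4_lowpass_sections(cutoff_hz, fs_hz):
    """Return two biquad sections (b, a) forming a 4th-order Butterworth low-pass."""
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    cos_w0, sin_w0 = math.cos(w0), math.sin(w0)
    sections = []
    for k in (1, 3):  # Butterworth pole-pair angles pi/8 and 3*pi/8
        q = 1.0 / (2.0 * math.cos(k * math.pi / 8.0))
        alpha = sin_w0 / (2.0 * q)
        a0 = 1.0 + alpha
        b = [(1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0]
        a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
        sections.append((b, a))
    return sections


def apply_sections(signal, sections):
    """Filter one channel through each biquad in turn (forward pass only)."""
    out = list(signal)
    for b, a in sections:
        x1 = x2 = y1 = y2 = 0.0
        filtered = []
        for x in out:
            y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
            x2, x1 = x1, x
            y2, y1 = y1, y
            filtered.append(y)
        out = filtered
    return out


def moving_average(signal, window=10):
    """Causal moving average; the first samples average over fewer points."""
    out, running = [], 0.0
    for i, x in enumerate(signal):
        running += x
        if i >= window:
            running -= signal[i - window]
        out.append(running / min(i + 1, window))
    return out


def drilling_segments(pedal, threshold):
    """Return (start, end) index pairs, end exclusive, where pedal > threshold."""
    segments, start = [], None
    for i, value in enumerate(pedal):
        if value > threshold and start is None:
            start = i
        elif value <= threshold and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(pedal)))
    return segments
```

For example, with an assumed sampling rate of 100 Hz, `apply_sections(channel, butter4_lowpass_sections(10.0, 100.0))` followed by `moving_average(...)` would smooth one FMG channel, and `drilling_segments(pedal, threshold)` would give the index ranges to keep as drilling data.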

2.6. Feature Extraction

Feature extraction of the FMG signals was based on the earlier literature [30,31]. The simplified and computationally less complex features were used in the present study. Based on the preliminary analysis, five features were selected, as depicted in Table 1.

Table 1.

Features extracted from the eight-channel FMG data.

2.7. Classification

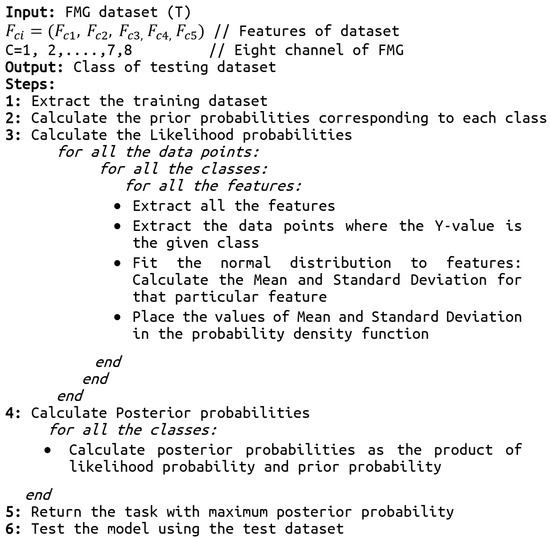

In this study, we evaluated the performance of naïve Bayes, linear discriminant analysis (LDA), support vector machine (SVM), and decision tree classifiers for classification of surgical skills into expert, intermediate, and novice categories. The naïve Bayes (NB) classifier is a subcategory of the Bayes classifier [32]. It is a supervised machine learning algorithm that is based on a probabilistic classification approach. It is well suited to high-dimensional datasets under the assumption that the predictors are independent of each other. The pseudo-code for skills assessment using FMG data with the naïve Bayes algorithm is given in Figure 5. LDA is a flexible and powerful classifier with a low computational cost [33]. It separates the assigned classes by examining linear combinations of the input variables. SVM classifiers are capable of learning from smaller amounts of data [34]. The implementation of SVM involves creating a hyperplane as a decision surface that maximizes the margin between the classes. It operates in a higher-dimensional feature space, which is created by nonlinearly transforming the n-dimensional input vector into a K-dimensional feature space. The decision tree is a classifier with a tree structure and is a powerful tool for classification [35]. It is composed of inner and leaf nodes, which represent decision thresholds and predictions, respectively. Classification typically proceeds by comparing the extracted features with each inner node of the decision tree, from the root node to a leaf node.

Figure 5.

The pseudo-code of the skills assessment using the naïve Bayes algorithm.
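As a rough companion to the naïve Bayes description in Section 2.7, the sketch below shows one common variant, Gaussian naïve Bayes, written from scratch. It is not a transcription of the pseudo-code in Figure 5. The Gaussian likelihood, the variance floor, and the function names are assumptions made for illustration, since the text does not state which likelihood model was used.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y, var_floor=1e-9):
    """Estimate a log-prior plus per-feature mean and variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # var_floor avoids division by zero for constant features
        variances = [sum((v - m) ** 2 for v in col) / n + var_floor
                     for col, m in zip(columns, means)]
        model[label] = (math.log(n / len(X)), means, variances)
    return model


def predict_gaussian_nb(model, row):
    """Return the class with the highest log-posterior for one feature vector."""
    best_label, best_score = None, -math.inf
    for label, (log_prior, means, variances) in model.items():
        score = log_prior
        # Independence assumption: per-feature log-likelihoods simply add up
        for x, m, v in zip(row, means, variances):
            score += -0.5 * math.log(2.0 * math.pi * v) - (x - m) ** 2 / (2.0 * v)
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

In use, each row of `X` would hold the features extracted from one drilling segment and `y` the corresponding expertise label (novice, intermediate, or expert).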

2.8. Evaluation

As the present study contains a limited dataset of 22 participants, we employed leave-one-subject-out cross-validation for the training and testing of the classifiers. In this procedure, data from one of the subjects were used as the testing dataset, while the data of the rest of the subjects were used as the training dataset. This process was repeated until every subject’s data had been used once as the testing dataset. The performance parameters, including accuracy, precision, recall, and F1 score, were calculated using the following equations:
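In their standard one-vs-rest form, which is assumed here, these metrics are defined for a given class in terms of its true positive (TP), true negative (TN), false positive (FP), and false negative (FN) counts:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP},
$$

$$
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$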

For a better understanding of the classification performance, we have calculated the confusion matrix. The diagonal values of the confusion matrix show the correctly classified subjects between the actual class and estimated class.
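The evaluation procedure of this section can be sketched as follows. This is a minimal illustration, assuming one-vs-rest metrics per class; the function names and the subject identifiers in the usage note are hypothetical.

```python
from collections import Counter


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for every subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def per_class_metrics(matrix, index):
    """One-vs-rest accuracy, precision, recall and F1 for the class at `index`."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[index][index]
    fn = sum(matrix[index]) - tp
    fp = sum(row[index] for row in matrix) - tp
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

Each sample carries the identifier of the subject it came from, so iterating over `leave_one_subject_out(subject_ids)` guarantees that no data from the held-out subject leaks into training. Repeating training and testing for each split gives one accuracy value per subject, from which a mean and standard deviation can be computed.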

2.9. Statistical Analysis

Due to the non-parametric nature of the data, we utilized the Kruskal–Wallis test with Dunn’s multiple comparison test to compare the classification accuracy of various classifiers. The significance level was considered to be 0.05.
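For illustration, the Kruskal–Wallis H statistic can be computed directly from ranks, as in the sketch below. It covers only the omnibus test, not Dunn's post hoc comparison, and instead of a p-value it compares H against the chi-square critical value for 3 degrees of freedom (four classifiers) at the 0.05 level. The function names are hypothetical, and the study's own software for this analysis is not stated.

```python
from collections import Counter

CHI2_CRITICAL_DF3_ALPHA05 = 7.815  # chi-square critical value, df = 3, alpha = 0.05


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic for a list of samples."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1.0 - ties / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


def differs_at_5_percent(groups_of_four):
    """True if four groups differ at alpha = 0.05 (chi-square approximation)."""
    return kruskal_wallis_h(groups_of_four) > CHI2_CRITICAL_DF3_ALPHA05
```

Here each group would contain the per-subject accuracies of one classifier obtained from the cross-validation folds.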

3. Results

3.1. Simulator Validation by Neurosurgeons

The results of the validation survey by neurosurgeons are summarized as boxplots in Figure 6. The results show that the vast majority of participants strongly felt that the craniotomy simulator was easy to use and had excellent value (average score = 8.0) for skill building outside the operating room. Regarding the haptic properties of the 3D printed bone matrix material, the material was found to have a good value (average score = 7.1) for use in surgical drilling simulation. The comfort of performing the activity while wearing the FMG band was also found to have a good value (average score = 6.7), and the participants reported no ergonomic discomfort while wearing the band. The developed simulator was found to have excellent value (average score = 7.9) for inclusion in the neurosurgery residency program.

Figure 6.

Results of the neurosurgeons’ survey-based evaluation of the developed craniotomy simulator.

3.2. Classification

The performance of the four classifiers used in the present study was analyzed using accuracy, precision, recall, and F1 score. The classification accuracy was highest for the naïve Bayes classifier (90.0 ± 14.8%), followed by the decision tree classifier (86.2 ± 20.8%) and the linear discriminant classifier (81.9 ± 23.6%). The lowest-performing classifier was the support vector machine classifier (76.7 ± 32.9%). The precision, recall, and F1 score followed the same pattern as the classification accuracy, as depicted in Table 2.

Table 2.

Performance metrics for the four different classifiers [Mean (SD)].

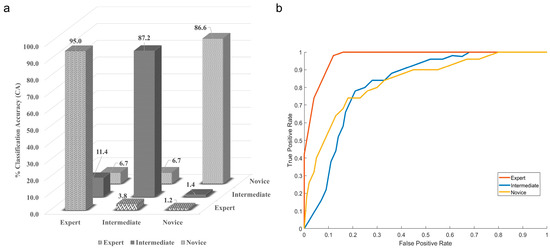

The results of the Kruskal–Wallis test with Dunn’s multiple comparison test showed that there was no statistically significant difference (p = 0.6) between the accuracies of the different classifiers. However, the highest mean classification accuracy, precision, and recall, together with the lowest standard deviation, were achieved using the naïve Bayes (NB) classifier. This suggests that, in this dataset, the naïve Bayes classifier performed best at categorizing neurosurgeons into expert, intermediate, and novice categories, although its advantage over the other classifiers was not statistically significant. Figure 7a shows the confusion matrix for the naïve Bayes classifier.

Figure 7.

(a) Confusion matrix, (b) ROC curve for the highest performing naïve Bayes classifier.

Here, the diagonal elements show the correctly classified classes, while the off-diagonal elements show the misclassifications. Experts were correctly classified 95% of the time; the NB classifier misclassified experts as intermediates 3.8% of the time and as novices 1.2% of the time. Intermediates were correctly classified 87.2% of the time, while the NB classifier misclassified intermediates as experts 11.4% of the time and as novices 1.4% of the time. Novices were correctly classified 86.6% of the time, and the misclassifications were divided equally between the expert and intermediate classes at 6.7% each. The performance of the naïve Bayes classifier is also shown with the help of the ROC curve in Figure 7b. The confusion matrix and ROC curve indicate that the classifier is mainly confused between the expert and the intermediate classes.

4. Discussion

Adequate resident training requires hands-on experience, but operative neurosurgery affords few such chances [36]. Moreover, the pressure of performing well, time constraints, and the fear of mistakes hinder adequate learning [37]. It may also lead to an erroneous evaluation of the residents’ surgical aptitude on the part of the supervisor [38]. Simulation systems offer a unique solution for resident training in a safe environment as well as their unbiased evaluation [39]. In the present study, a craniotomy simulator was developed for teaching high-speed drilling skills. In addition, an FMG band was developed for objective and automated evaluation of surgical skills using machine learning.

The cranial bone is a complex structure consisting of outer layers of compact, high-density cortical bone and an inner layer with a low-density, irregular porous structure [40]. Surgeons experience a unique haptic sensation when bone dust is produced by surgical drilling [41]. In the past decade, a variety of materials have been employed to fabricate cranial bones for surgical simulators, as detailed in Table 3. However, these materials lack haptic feedback comparable to that of cranial bones. Therefore, we used the recently introduced bone matrix material of the Digital Anatomy printer (J750, Stratasys, Rehovot, Israel) to fabricate the skull bone. The results of the neurosurgeon evaluation indicate that this material provided a comparable surgical drilling feel. Since high-speed surgical drilling generates heat, continuous irrigation is normally necessary to dissipate it [42]. However, with the bone matrix material, irrigation reduced the drilling efficiency, so the drilling was performed without it. Consequently, continuous drilling during the experiment caused the drill bit to become extremely hot, and a piece of moistened gauze was used to cool it between drilling runs. In addition, the lack of irrigation caused bone dust to spread across the drilling site, which was cleared using suction. Therefore, further improvements in the bone material are required for effective drilling simulation.

Table 3.

Materials used for fabrication of cranial bones for surgical simulation.

The evaluation of surgical skills and competence is a challenging task even in simulation models. Validated assessment scales, such as the Objective Structured Assessment of Technical Skills (OSATS), are widely used. Using these scales, an expert surgeon evaluates and scores the performance of trainee surgeons. However, such an evaluation unavoidably introduces human bias and places an excessive load on experts, making adaptation and generalization difficult on a larger scale. Therefore, automatic evaluation of surgical skills is important to provide feedback to trainees and to reduce the burden on expert evaluators. Different wearable sensors have been used in various studies for automatic objective evaluation of surgical skills, as depicted in Table 4. However, the sensor-based data collection system must be compact and cause minimal disruption to the natural movements of the surgeons. Therefore, we collected forearm muscle radial force data using an FMG band for skills evaluation. The neurosurgeons reported that the band, which was worn on the forearm, did not interfere with the surgical training experiments. The accuracy achieved using the present system was on par with that of the existing sensors used for the assessment of surgical skills.

Table 4.

Wearable sensors used for objective evaluation of surgical skills.

The data collected from the FMG band were used to classify the surgical expertise using machine learning algorithms. The naïve Bayes classifier achieved the highest classification accuracy. During the study, we found that the classifier achieved acceptable classification accuracy for 19 of the 22 subjects; for the remaining 3 subjects, its accuracy dropped to as low as 60%, and the accuracy was lower still with the other 3 classifiers. The classification accuracy, therefore, displays a considerable standard deviation. If these three participants are excluded from the study, the mean classification accuracy increases from 90 to 95%, and the standard deviation decreases from 14.8 to 9%. These individuals might therefore be non-conventional neurosurgeons with a unique approach to performing the high-speed drilling-based procedure. The present study can be extended by including data from a larger number of participants. Moreover, simulators for other surgical tasks, such as suturing, cutting, incising, and tumor resection, can be developed using similar methods, and the potential of FMG bands or other electronic sensors for surgical skills evaluation can be analyzed.
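Regarding the three participants discussed above, the reported rise in the mean accuracy is consistent with a simple check. Assuming, purely for illustration, that each of the three scored close to 60% with the naïve Bayes classifier (the text gives 60% only as the minimum), the mean over the remaining 19 subjects would be approximately:

$$
\frac{22 \times 90 - 3 \times 60}{19} = \frac{1800}{19} \approx 94.7\%
$$

which agrees with the reported value of about 95%.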

5. Conclusions

It is concluded that the anatomically accurate surgical simulator with materials having comparable haptic properties to real tissue has great potential for use in surgical training. The study participants opined that the bone matrix material used to fabricate cranial bones provided comparable haptic feedback for surgical drilling. Objective assessment of surgical skills provides trainees with immediate feedback on their performance and reduces the burden on expert evaluators. The FMG band developed in the present study was found to be a simple and cost-effective solution for machine learning-based automated evaluation of surgical drilling skills.

Author Contributions

Conceptualization, R.S. and A.S.; methodology, R.S.; software, R.S.; validation, R.S. and P.C.; formal analysis, R.S. and A.K.G.; investigation, R.S. and A.K.G.; resources, A.S.; data curation, R.S. and P.C.; writing—original draft preparation, R.S. and A.K.G.; writing—review and editing, R.S. and A.S.; visualization, R.S.; supervision, A.S.; project administration, R.S.; funding acquisition, A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This manuscript is the result of Research Projects funded by extramural grants from: (i) Department of Health Research-DHR, Ministry of Health and Family Welfare, Govt. of India. DHR-ICMR/GIA/18/18/2020. (ii) Department of Biotechnology-DBT, Ministry of Science and Technology, Govt. of India. BT/PR13455/CoE/34/24/2015.

Institutional Review Board Statement

The study was approved by Institute Ethics Committee (Approval No. IEC-206/9 April 2021).

Informed Consent Statement

Written informed consent for publication was obtained from participating neurosurgeons.

Data Availability Statement

The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Umana, G.E.; Scalia, G.; Fricia, M.; Nicoletti, G.F.; Iacopino, D.G.; Maugeri, R.; Tomasi, S.O.; Cicero, S.; Alberio, N. Diamond-shaped mini-craniotomy: A new concept in neurosurgery. J. Neurol. Surg. Part A Cent. Eur. Neurosurg. 2022, 83, 236–241. [Google Scholar] [CrossRef]

- Welcome, B.M.; Gilmer, B.B.; Lang, S.D.; Levitt, M.; Karch, M.M. Comparison of manual hand drill versus an electric dual-motor drill for bedside craniotomy. Interdiscip. Neurosurg. 2021, 23, 100928. [Google Scholar] [CrossRef]

- Kinaci, A.; Algra, A.; Heuts, S.; O’Donnell, D.; van der Zwan, A.; van Doormaal, T. Effectiveness of dural sealants in prevention of cerebrospinal fluid leakage after craniotomy: A systematic review. World Neurosurg. 2018, 118, 368–376. [Google Scholar]

- Chughtai, K.A.; Nemer, O.P.; Kessler, A.T.; Bhatt, A.A. Post-operative complications of craniotomy and craniectomy. Emerg. Radiol. 2019, 26, 99–107. [Google Scholar] [CrossRef]

- Raman, V.; Jiwrajka, M.; Pollard, C.; Grieve, D.A.; Alexander, H.; Redmond, M. Emergent craniotomy in rural and regional settings: Recommendations from a tertiary neurosurgery unit: Diagnosis and surgical decision-making. ANZ J. Surg. 2022, 92, 1609–1613. [Google Scholar] [CrossRef]

- Hewitt, J.N.; Ovenden, C.D.; Glynatsis, J.M.; Sabab, A.; Gupta, A.K.; Kovoor, J.G.; Wells, A.J.; Maddern, G.J. Emergency Neurosurgery Performed by General Surgeons: A Systematic Review. World J. Surg. 2021, 46, 347–355. [Google Scholar] [CrossRef]

- Patel, E.A.; Aydin, A.; Cearns, M.; Dasgupta, P.; Ahmed, K. A Systematic Review of Simulation-Based Training in Neurosurgery, Part 1: Cranial Neurosurgery. World Neurosurg. 2020, 133, e850–e873. [Google Scholar] [CrossRef]

- Nguyen, V.T.; Losee, J.E. Time-versus competency-based residency training. Plast. Reconstr. Surg. 2016, 138, 527–531. [Google Scholar] [CrossRef]

- Marcus, H.; Vakharia, V.; Kirkman, M.A.; Murphy, M.; Nandi, D. Practice makes perfect? The role of simulation-based deliberate practice and script-based mental rehearsal in the acquisition and maintenance of operative neurosurgical skills. Neurosurgery 2013, 72 (Suppl. S1), 124–130. [Google Scholar] [CrossRef]

- Baby, B.; Singh, R.; Singh, R.; Suri, A.; Arora, C.; Kumar, S.; Kalra, P.K.; Banerjee, S. A review of physical simulators for neuroendoscopy skills training. World Neurosurg. 2020, 137, 398–407. [Google Scholar] [CrossRef]

- Baby, B.; Singh, R.; Suri, A.; Dhanakshirur, R.R.; Chakraborty, A.; Kumar, S.; Kalra, P.K.; Banerjee, S. A review of virtual reality simulators for neuroendoscopy. Neurosurg. Rev. 2020, 43, 1255–1272. [Google Scholar] [CrossRef]

- Panesar, S.S.; Magnetta, M.; Mukherjee, D.; Abhinav, K.; Branstetter, B.F.; Gardner, P.A.; Iv, M.; Fernandez-Miranda, J.C. Patient-specific 3-dimensionally printed models for neurosurgical planning and education. Neurosurg. Focus 2019, 47, E12. [Google Scholar] [CrossRef]

- Waran, V.; Narayanan, V.; Karuppiah, R.; Owen, S.L.F.; Aziz, T. Utility of multimaterial 3D printers in creating models with pathological entities to enhance the training experience of neurosurgeons. J. Neurosurg. 2014, 120, 489–492. [Google Scholar] [CrossRef]

- Garcia, J.; Yang, Z.; Mongrain, R.; Leask, R.L.; Lachapelle, K. 3D printing materials and their use in medical education: A review of current technology and trends for the future. BMJ Simul. Technol. Enhanc. Learn. 2018, 4, 27. [Google Scholar] [CrossRef] [PubMed]

- Shujaat, S.; da Costa Senior, O.; Shaheen, E.; Politis, C.; Jacobs, R. Visual and haptic perceptibility of 3D printed skeletal models in orthognathic surgery. J. Dent. 2021, 109, 103660. [Google Scholar] [CrossRef]

- Heo, H.; Jin, Y.; Yang, D.; Wier, C.; Minard, A.; Dahotre, N.B.; Neogi, A. Manufacturing and characterization of hybrid bulk voxelated biomaterials printed by digital anatomy 3d printing. Polymers 2021, 13, 1–10. [Google Scholar] [CrossRef]

- Levin, M.; McKechnie, T.; Khalid, S.; Grantcharov, T.P.; Goldenberg, M. Automated methods of technical skill assessment in surgery: A systematic review. J. Surg. Educ. 2019, 76, 1629–1639. [Google Scholar] [CrossRef]

- Raison, N.; Dasgupta, P. Role of a Surgeon as an Educator. In Practical Simulation in Urology; Springer: Berlin/Heidelberg, Germany, 2022; pp. 27–39. [Google Scholar]

- Nor, S.; Ahmmad, Z.; Su, E.; Ming, L.; Fai, Y.C.; Khairi, F. Assessment Methods for Surgical Skill. World Acad. Sci. Eng. Technol. 2011, 5, 752–758. [Google Scholar]

- Zhang, Q.; Chen, L.; Tian, Q.; Li, B. Video-based analysis of motion skills in simulation-based surgical training. Multimed. Content Mob. Devices 2013, 8667, 80–89. [Google Scholar] [CrossRef]

- Manabe, T.; Walia, P.; Fu, Y.; Intes, X.; De, S.; Schwaitzberg, S.; Cavuoto, L.; Dutta, A. EEG Topographic Features for Assessing Skill Levels during Laparoscopic Surgical Training; Research Square: Durham, NC, USA, 2022.

- Zulbaran-Rojas, A.; Najafi, B.; Arita, N.; Rahemi, H.; Razjouyan, J.; Gilani, R. Utilization of flexible-wearable sensors to describe the kinematics of surgical proficiency. J. Surg. Res. 2021, 262, 149–158.

- Lin, Z.; Zecca, M.; Sessa, S.; Sasaki, T.; Suzuki, T.; Itoh, K.; Iseki, H.; Takanishi, A. Objective skill analysis and assessment in neurosurgery by using an ultra-miniaturized inertial measurement unit WB-3—Pilot tests. In Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 2320–2323.

- Chmarra, M.K.; Dankelman, J.; van den Dobbelsteen, J.J.; Jansen, F.-W. Force feedback and basic laparoscopic skills. Surg. Endosc. 2008, 22, 2140–2148.

- Berges, A.J.; Vedula, S.S.; Chara, A.; Hager, G.D.; Ishii, M.; Malpani, A. Eye Tracking and Motion Data Predict Endoscopic Sinus Surgery Skill. Laryngoscope 2022, 133, 500–505.

- Reiley, C.E.; Lin, H.C.; Yuh, D.D.; Hager, G.D. Review of methods for objective surgical skill evaluation. Surg. Endosc. 2011, 25, 356–366.

- Tubbs, R.S.; Loukas, M.; Shoja, M.M.; Bellew, M.P.; Cohen-Gadol, A.A. Surface landmarks for the junction between the transverse and sigmoid sinuses: Application of the “strategic” burr hole for suboccipital craniotomy. Oper. Neurosurg. 2009, 65, ons37–ons41.

- Xiao, Z.G.; Menon, C. A review of force myography research and development. Sensors 2019, 19, 4557.

- Xiong, Y.; Quek, F. Hand motion gesture frequency properties and multimodal discourse analysis. Int. J. Comput. Vis. 2006, 69, 353–371.

- Xiao, Z.G.; Menon, C. Performance of forearm FMG and sEMG for estimating elbow, forearm and wrist positions. J. Bionic Eng. 2017, 14, 284–295.

- Ha, N.; Withanachchi, G.P.; Yihun, Y. Performance of forearm FMG for estimating hand gestures and prosthetic hand control. J. Bionic Eng. 2019, 16, 88–98.

- Berrar, D. Bayes’ theorem and naive Bayes classifier. Encycl. Bioinforma. Comput. Biol. ABC Bioinforma. 2018, 403, 412.

- Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear discriminant analysis. In Robust Data Mining; Springer: Berlin/Heidelberg, Germany, 2013; pp. 27–33.

- Pisner, D.A.; Schnyer, D.M. Support vector machine. In Machine Learning; Elsevier: Amsterdam, The Netherlands, 2020; pp. 101–121.

- Song, Y.-Y.; Ying, L.U. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

- Suri, A.; Patra, D.; Meena, R. Simulation in neurosurgery: Past, present, and future. Neurol. India 2016, 64, 387.

- Haji, F.A.; Dubrowski, A.; Drake, J.; de Ribaupierre, S. Needs assessment for simulation training in neuroendoscopy: A Canadian national survey. J. Neurosurg. 2013, 118, 250–257.

- Deora, H.; Garg, K.; Tripathi, M.; Mishra, S.; Chaurasia, B. Residency perception survey among neurosurgery residents in lower-middle-income countries: Grassroots evaluation of neurosurgery education. Neurosurg. Focus 2020, 48, E11.

- Singh, R.; Baby, B.; Damodaran, N.; Srivastav, V.; Suri, A.; Banerjee, S.; Kumar, S.; Kalra, P.; Prasad, S.; Paul, K.; et al. Design and Validation of an Open-Source, Partial Task Trainer for Endonasal Neuro-Endoscopic Skills Development: Indian Experience. World Neurosurg. 2016, 86, 259–269.

- Govsa, F.; Celik, S.; Turhan, T.; Sahin, V.; Celik, M.; Sahin, K.; Ozer, M.A.; Kazak, Z. The first step of patient-specific design calvarial implant: A quantitative analysis of fresh parietal bones. Eur. J. Plast. Surg. 2018, 41, 511–520.

- McMillan, A.; Kocharyan, A.; Dekker, S.E.; Kikano, E.G.; Garg, A.; Huang, V.W.; Moon, N.; Cooke, M.; Mowry, S.E. Comparison of materials used for 3D-printing temporal bone models to simulate surgical dissection. Ann. Otol. Rhinol. Laryngol. 2020, 129, 1168–1173.

- Shakouri, E.; Ghorbani, P. Infrared Thermography of Neurosurgical Bone Grinding: Determination of High-Speed Cutting Range in Order to Obtain the Minimum Thermal Damage; Research Square: Durham, NC, USA, 2021.

- Lai, M.; Skyrman, S.; Kor, F.; Homan, R.; Babic, D.; Edström, E.; Persson, O.; Burström, G.; Elmi-Terander, A.; Hendriks, B.H.W.; et al. Development of a CT-compatible anthropomorphic skull phantom for surgical planning, training, and simulation. In Proceedings of the Medical Imaging 2021: Imaging Informatics for Healthcare, Research, and Applications, Online, 15–19 February 2021; Volume 11601, pp. 43–52.

- Nagassa, R.G.; McMenamin, P.G.; Adams, J.W.; Quayle, M.R.; Rosenfeld, J.V. Advanced 3D printed model of middle cerebral artery aneurysms for neurosurgery simulation. 3D Print. Med. 2019, 5, 1–12.

- Ryan, J.R.; Almefty, K.K.; Nakaji, P.; Frakes, D.H. Cerebral aneurysm clipping surgery simulation using patient-specific 3D printing and silicone casting. World Neurosurg. 2016, 88, 175–181.

- Lan, Q.; Zhu, Q.; Xu, L.; Xu, T. Application of 3D-printed craniocerebral model in simulated surgery for complex intracranial lesions. World Neurosurg. 2020, 134, e761–e770.

- Licci, M.; Thieringer, F.M.; Guzman, R.; Soleman, J. Development and validation of a synthetic 3D-printed simulator for training in neuroendoscopic ventricular lesion removal. Neurosurg. Focus 2020, 48, E18.

- Craven, C.L.; Cooke, M.; Rangeley, C.; Alberti, S.J.M.M.; Murphy, M. Developing a pediatric neurosurgical training model. J. Neurosurg. Pediatr. 2018, 21, 329–335.

- Eastwood, K.W.; Bodani, V.P.; Haji, F.A.; Looi, T.; Naguib, H.E.; Drake, J.M. Development of synthetic simulators for endoscope-assisted repair of metopic and sagittal craniosynostosis. J. Neurosurg. Pediatr. 2018, 22, 128–136.

- Mashiko, T.; Kaneko, N.; Konno, T.; Otani, K.; Nagayama, R.; Watanabe, E. Training in cerebral aneurysm clipping using self-made 3-dimensional models. J. Surg. Educ. 2017, 74, 681–689.

- Muto, J.; Carrau, R.L.; Oyama, K.; Otto, B.A.; Prevedello, D.M. Training model for control of an internal carotid artery injury during transsphenoidal surgery. Laryngoscope 2017, 127, 38–43.

- Cleary, D.R.; Siler, D.A.; Whitney, N.; Selden, N.R. A microcontroller-based simulation of dural venous sinus injury for neurosurgical training. J. Neurosurg. 2017, 128, 1553–1559.

- Kondo, K.; Nemoto, M.; Masuda, H.; Okonogi, S.; Nomoto, J.; Harada, N.; Sugo, N.; Miyazaki, C. Anatomical reproducibility of a head model molded by a three-dimensional printer. Neurol. Med. Chir. 2015, 55, 592–598.

- Coelho, G.; Zymberg, S.; Lyra, M.; Zanon, N.; Warf, B. New anatomical simulator for pediatric neuroendoscopic practice. Child’s Nerv. Syst. 2015, 31, 213–219.

- Inoue, D.; Yoshimoto, K.; Uemura, M.; Yoshida, M.; Ohuchida, K.; Kenmotsu, H.; Tomikawa, M.; Sasaki, T.; Hashizume, M. Three-dimensional high-definition neuroendoscopic surgery: A controlled comparative laboratory study with two-dimensional endoscopy and clinical application. J. Neurol. Surg. Part A Cent. Eur. Neurosurg. 2013, 74, 357–365.

- Evans-Harvey, K.; Erridge, S.; Karamchandani, U.; Abdalla, S.; Beatty, J.W.; Darzi, A.; Purkayastha, S.; Sodergren, M.H. Comparison of surgeon gaze behaviour against objective skill assessment in laparoscopic cholecystectomy-a prospective cohort study. Int. J. Surg. 2020, 82, 149–155.

- Kowalewski, K.-F.; Garrow, C.R.; Schmidt, M.W.; Benner, L.; Müller-Stich, B.P.; Nickel, F. Sensor-based machine learning for workflow detection and as key to detect expert level in laparoscopic suturing and knot-tying. Surg. Endosc. 2019, 33, 3732–3740.

- Sbernini, L.; Quitadamo, L.R.; Riillo, F.; Di Lorenzo, N.; Gaspari, A.L.; Saggio, G. Sensory-glove-based open surgery skill evaluation. IEEE Trans. Hum. Mach. Syst. 2018, 48, 213–218.

- Uemura, M.; Tomikawa, M.; Miao, T.; Souzaki, R.; Ieiri, S.; Akahoshi, T.; Lefor, A.K.; Hashizume, M. Feasibility of an AI-based measure of the hand motions of expert and novice surgeons. Comput. Math. Methods Med. 2018, 2018, 1–6.

- Rafii-Tari, H.; Payne, C.J.; Liu, J.; Riga, C.; Bicknell, C.; Yang, G.-Z. Towards automated surgical skill evaluation of endovascular catheterization tasks based on force and motion signatures. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 1789–1794.

- Harada, K.; Morita, A.; Minakawa, Y.; Baek, Y.M.; Sora, S.; Sugita, N.; Kimura, T.; Tanikawa, R.; Ishikawa, T.; Mitsuishi, M. Assessing microneurosurgical skill with medico-engineering technology. World Neurosurg. 2015, 84, 964–971.

- Suh, I.H.; LaGrange, C.A.; Oleynikov, D.; Siu, K.-C. Evaluating robotic surgical skills performance under distractive environment using objective and subjective measures. Surg. Innov. 2016, 23, 78–89.

- Oropesa, I.; Sánchez-González, P.; Chmarra, M.K.; Lamata, P.; Pérez-Rodríguez, R.; Jansen, F.W.; Dankelman, J.; Gómez, E.J. Supervised classification of psychomotor competence in minimally invasive surgery based on instruments motion analysis. Surg. Endosc. Other Interv. Tech. 2014, 28, 657–670.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).