1. Introduction

In the transportation and logistics sector, quality management extends beyond regulatory compliance; it serves as a strategic driver of operational efficiency, service reliability, and customer satisfaction. Lean Six Sigma (LSS), a structured methodology for process improvement, has proven effective in streamlining service flows, reducing variability, and eliminating inefficiencies. Its application is particularly critical in urban public transport systems, where subscription processes and service quality directly influence passenger flow management, commuter experience, and overall mobility performance.

Governmental transportation companies face unique challenges as they transition from traditional public service models to more competitive environments. Unlike private firms, these organizations must balance financial sustainability with strict regulatory and social obligations. They operate under complex legal and administrative constraints that limit flexibility, while unionized workforces often influence decision-making, affecting operational efficiency and scalability.

One of the most persistent challenges for public transport agencies is service inefficiency, especially in managing customer interactions and administrative workflows. As urban populations grow, the demand for reliable and responsive transportation increases, necessitating data-driven strategies to optimize operations. Structured improvement methodologies such as Lean Six Sigma therefore become essential for addressing recurring issues like delays, resource misallocation, and customer dissatisfaction.

This research focuses on a public transport company that manages an extensive intercity and urban bus network supported by a large fleet capacity. Despite sustained government funding and revenue generation through transportation services—including school and university subscriptions—the organization continues to experience operational inefficiencies that affect service quality and public perception. Preliminary analysis indicated the presence of several systemic inefficiencies affecting administrative workflows, information management, and workforce coordination. The absence of integrated performance monitoring mechanisms limited the organization’s ability to evaluate operational effectiveness and service profitability. Within this context, the subscription management process for school and university passengers emerged as one of the most critical challenges, characterized by recurrent delays and inconsistencies in transaction handling. These issues were reflected in extended processing times, reduced customer satisfaction, and measurable impacts on overall service reliability. In response, this research applies the LSS methodology to systematically address these inefficiencies. Through a data-driven approach, the project aims to transform the company into a more efficient and customer-oriented service provider within the increasingly competitive transportation sector.

Addressing such multifaceted inefficiencies requires an empirically grounded framework, and Lean Six Sigma has consistently demonstrated value through its emphasis on measurable outcomes and process standardization.

Accordingly, the primary objective of this study is to design and implement a Lean Six Sigma DMAIC framework tailored to the operational reality of a public transportation organization. The framework aims to enhance administrative efficiency, reduce process delays, and improve service quality through data-driven analysis and continuous improvement practices. Beyond its practical objectives, this study contributes to the broader LSS literature by adapting a manufacturing-derived analytical framework to a public service environment, thereby reinforcing the methodological transferability of DMAIC.

Having established the study’s practical motivation and objectives, we now situate it within the broader context of LSS applications. While the present study focuses on public transportation, LSS has also been extensively applied in the broader logistics and supply chain domain to enhance efficiency, reliability, and cost-effectiveness. In global markets, transportation modes and distribution networks exert a decisive influence on financial performance, prompting organizations to adopt structured, data-driven improvement methodologies. Initially conceived for manufacturing environments [1,2], LSS has progressively evolved into a cornerstone framework for continuous improvement across both industrial and service sectors. Recent studies have reinforced this evolution: Parwani and Hu [3] demonstrated how integrating Single Minute Exchange of Die (SMED) with production scheduling improves manufacturing supply chain performance through reduced downtime and better task allocation, while Rahman and Kirby [4] illustrated how Lean Six Sigma, incorporating Value Stream Mapping (VSM), can transform e-commerce warehousing.

Lean Six Sigma combines Lean’s focus on waste elimination with the analytical rigor of Six Sigma, providing a structured framework to reduce variation, optimize process flow, and improve performance. Its methodologies have shown strong adaptability across manufacturing, healthcare, logistics, and service sectors, consistently enhancing quality, efficiency, and resource utilization, and improving process stability in both production and service delivery contexts [5,6,7]. Beyond traditional industries, LSS principles have also been applied in smart city management and construction, contributing to process optimization, water resource management, and sustainable development initiatives [8]. Non-profit organizations have likewise leveraged LSS to improve resource utilization and operational effectiveness, including production activities in workshops for people with disabilities [6].

In healthcare, LSS has reduced waste, stabilized key processes, and addressed variability in patient care, improving both cost efficiency and resource allocation [9]. In clinical environments, its structured tools have optimized inventory management, improved tray accuracy, and strengthened overall workflow efficiency [10]. In logistics, LSS has enhanced the quality and timeliness of technological deployments in field operations [5]. Together, these examples highlight LSS’s adaptability and measurable impact across sectors, supporting its value as a broadly applicable framework for operational excellence.

At the practical level, LSS relies on a complementary set of qualitative and quantitative tools that enable both process visualization and statistical validation. The Lean dimension provides rapid diagnostic instruments such as Value Stream Mapping (VSM), 5S workplace organization (Sort, Set in Order, Shine, Standardize, and Sustain), Kaizen (continuous improvement) events, and Kanban (visual signal) systems, which help reveal non-value-adding activities, bottlenecks, and workflow imbalances [1,2]. Poka-Yoke (error-proofing) and Standard Work procedures reinforce consistency and prevent the recurrence of administrative or operational mistakes [11,12]. These techniques lay the foundation for the early DMAIC phases, allowing practitioners to define project boundaries and measure process performance in a structured way.

On the Six Sigma side, a diverse range of statistical and quality tools ensures analytical rigor and data-driven decision-making. Descriptive statistics, Pareto charts, and cause-and-effect diagrams support the identification of critical process variables, while Measurement System Analysis (MSA) and control charts validate data accuracy and process stability [13,14]. Advanced techniques such as Analysis of Variance (ANOVA), regression modeling, and hypothesis testing strengthen the Analyze and Improve phases by quantifying the impact of key factors and verifying improvement effectiveness [14,15]. Tools like Suppliers, Inputs, Processes, Outputs, and Customers (SIPOC) diagrams and Critical-to-Quality (CTQ) trees translate customer requirements into measurable performance indicators, bridging operational analysis with strategic quality objectives [1]. Together, these Lean and statistical instruments provide a robust foundation for continuous improvement, balancing practical simplicity with analytical precision.
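To make the Pareto logic concrete, the following Python sketch ranks defect categories by frequency, accumulates their percentage share, and returns the "vital few" categories that together reach an 80% cutoff. The function name `pareto_analysis`, the 80% threshold, and the category tallies in the usage example are hypothetical illustrations, not data from this study.

```python
def pareto_analysis(counts, cutoff=80.0):
    """Rank categories by frequency, add cumulative %, and pick the 'vital few'."""
    total = sum(counts.values())
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    table, vital_few, cumulative = [], [], 0
    for category, count in ranked:
        # A category joins the vital few while the cumulative share *before* it
        # is under the cutoff, so the category that crosses the cutoff is included.
        if 100.0 * cumulative / total < cutoff:
            vital_few.append(category)
        cumulative += count
        table.append((category, count, round(100.0 * cumulative / total, 1)))
    return table, vital_few


# Hypothetical delay-cause tallies (illustrative numbers, not study data)
delay_causes = {
    "incomplete documents": 42,
    "data-entry errors": 31,
    "equipment shortage": 12,
    "lost or delayed cards": 9,
    "other": 6,
}
table, vital_few = pareto_analysis(delay_causes)
for row in table:
    print(row)
print("vital few:", vital_few)
```

With these illustrative counts, the first three categories account for 85% of occurrences and would be prioritized for root-cause analysis.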

In the logistics and supply chain domain, Sisman [16] demonstrated this potential in a Turkish plastics manufacturing company, where applying the DMAIC cycle reduced costly road transport from 13% to 5%, achieving significant cost savings. Similarly, Chopra et al. [17] illustrated how LSS principles in a major Fast-Moving Consumer Goods (FMCG) supply chain in the UAE enhanced transportation planning, predictive maintenance, and container handling, leading to shorter lead times and improved shipment accuracy. The combined use of Lean and Six Sigma allows organizations to mitigate lead-time variation, demand uncertainty, and logistical inefficiencies through structured, cross-functional problem solving. However, successful implementation requires sustained leadership commitment, data integrity, and coordination between departments [18].

Beyond corporate logistics, LSS methodologies have also been applied to city logistics, emphasizing their role in supporting sustainable urban mobility. Lemke et al. [19] explored how LSS tools such as SIPOC diagrams and Critical-to-Quality (CTQ) trees could assess the impact of transport infrastructure on fuel consumption in Szczecin, Poland. Their findings revealed that systematic process mapping and performance measurement can optimize routing, reduce fuel waste, and improve environmental outcomes, demonstrating how data-driven methodologies can directly support green logistics strategies. These applications reveal how LSS principles transcend industry boundaries, aligning economic efficiency with sustainability and service reliability.

Recent literature has also underscored the importance of integrating multi-criteria and multivariate analytical approaches to strengthen data-driven decision-making in complex supply chains. Kumar [20] conducted an extensive review of decision-making and statistical methods applied to the olive oil sector, highlighting how the combination of Multi-Criteria Decision Analysis (MCDA) and multivariate techniques can effectively balance quality, efficiency, and sustainability across interconnected production and logistics stages. This analytical perspective parallels the present study’s use of advanced statistical and Lean Six Sigma tools to achieve evidence-based process optimization, enhance transparency, and support strategic decision-making within public transportation systems.

The transport sector has increasingly adopted similar quality improvement methodologies, recognizing their relevance to managing large-scale, resource-intensive systems. The Lean Six Sigma DMAIC framework (Define, Measure, Analyze, Improve, and Control) has been applied in road and public transport to reduce waste, improve scheduling accuracy, and enhance service quality [21,22]. For example, Yerubandi et al. [21] demonstrated that systematic waste identification and process redesign in public road transportation reduced operational delays and improved reliability. Adhyapak et al. [22] reported comparable results in a transportation service company, where DMAIC-driven analysis reduced call handling time and improved overall responsiveness. Collectively, these findings underline DMAIC’s adaptability as a structured and measurable framework capable of driving sustained improvement in complex, service-oriented environments.

More recently, LSS applications in urban public transportation have expanded from operational optimization to broader safety and sustainability goals. Kuvvetli and Firuzan [23] conducted one of the earliest empirical studies using LSS in a municipal bus network, achieving a 20% reduction in accidents through a data-driven examination of route design and driver performance. Similarly, Papageorgiou [24] and Nedeliaková et al. [25] applied LSS and Total Quality Management frameworks to Intelligent Transportation Systems (ITS) and railway operations, improving on-time performance and system reliability. These initiatives demonstrate a shift from purely operational metrics toward holistic service quality, sustainability, and passenger safety in transportation management.

In summary, the literature demonstrates the remarkable adaptability of LSS across diverse domains. From logistics optimization and hospital process improvement to urban transit modernization, LSS has proven to be a versatile and evidence-based framework for diagnosing inefficiencies and driving sustainable change.

Despite its broad success, the implementation of LSS within public transportation remains limited. Most existing studies have concentrated on healthcare processes or supply chain performance, while comparatively little attention has been given to urban mobility and public transport services. Within public organizations, the adoption of LSS, particularly through the DMAIC framework, has been sporadic, and few investigations have systematically explored its potential to address chronic inefficiencies and data integration challenges in transportation systems [26]. The present research helps narrow this gap by contextualizing the LSS DMAIC methodology within a public transportation organization to enhance administrative efficiency, reduce process delays, and improve customer satisfaction. A notable early contribution in this underexplored field is the study by Kuvvetli and Firuzan [23], who implemented Lean Six Sigma in an urban public transportation setting to reduce accidents involving municipal buses. Their findings highlighted the method’s capacity to enhance safety performance through systematic process analysis and data-driven improvement. Their adaptation of the DMAIC phases to the operational conditions of a municipal bus network demonstrated that, even in bureaucratic environments with limited statistical expertise, structured quality initiatives can achieve measurable reductions in accident rates and operational variability.

In their study, Kuvvetli and Firuzan [23] applied the DMAIC methodology to the safety management system of a municipal bus company. During the Define phase, they identified frequent traffic accidents as the critical problem affecting both passenger safety and service quality. The Measure phase involved collecting data from accident records over multiple years, followed by descriptive and Pareto analyses to categorize the most recurrent causes. In the Analyze phase, fishbone diagrams and brainstorming sessions were used to identify contributing factors related to driver behavior, vehicle maintenance, and environmental conditions. Subsequently, targeted training programs, preventive maintenance plans, and process standardization measures were implemented during the Improve phase. Finally, the Control phase established a monitoring system to sustain the achieved improvements. The results showed a notable decline in accident frequency and severity after the LSS intervention, confirming the approach’s effectiveness in enhancing safety and operational reliability within a public transportation context.

The principal advantage of their work lies in the demonstration that LSS can be successfully transferred from industrial to public service environments, bridging a critical gap in the literature. It also provided a practical framework for implementing improvement initiatives in data-constrained, non-manufacturing settings. However, despite these strengths, the study exhibits certain limitations that the present research seeks to overcome. Most notably, the Analyze phase of the DMAIC cycle was addressed using relatively simple tools such as Pareto charts and cause-and-effect diagrams, without deeper statistical or multivariate analysis to rigorously identify the root causes of accidents. This simplification, while suitable for preliminary awareness and engagement, limits the precision and robustness of the diagnostic phase.

In contrast, the current paper aims to strengthen this analytical step through the integration of advanced statistical and LSS tools, enabling a more accurate and data-supported understanding of process inefficiencies within governmental transportation systems. Furthermore, the study incorporates rigorous analytical methods grounded in a strong theoretical framework, including a detailed examination of production line dynamics, bottleneck identification, machine capacity assessment and availability analysis. This comprehensive approach ensures that improvement strategies are both empirically validated and operationally feasible within the context of public service operations.

The remainder of this paper is structured as follows:

Section 2 outlines the LSS DMAIC framework and the analytical methods employed in this study. Section 3 presents the data analysis methodology. Section 4 reports the results of each DMAIC phase: the Define phase (Section 4.1); the Measure phase (Section 4.2), which details data collection and baseline performance assessment; the Analyze phase (Section 4.3), which focuses on root-cause identification and statistical validation through production line analysis; the Improve phase (Section 4.4), which introduces the implemented corrective actions and process redesigns; and the Control phase (Section 4.5), which presents the monitoring mechanisms established to sustain the achieved improvements. Section 4 also summarizes the main findings and the process performance improvements obtained through the proposed framework, followed by a discussion of practical implications and managerial insights. Finally, Section 5 concludes the paper and outlines perspectives for future research.

3. Data Analysis Methodology

The data analysis methodology was designed to systematically examine the subscription production process. The process was divided into several interrelated steps aimed at generating accurate, reliable, and relevant findings to support process improvement initiatives.

The first step involved conducting a Measurement System Analysis (MSA) to verify the reliability and accuracy of the collected data. The MSA evaluated repeatability and reproducibility, assessing the consistency of measurements taken by the same operator and by different operators. This step was essential to minimize measurement variability and to ensure that the data accurately represented the process, preventing erroneous conclusions caused by measurement errors.
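The source reports Gage R&R results (Table 3) but does not show how the variance components are obtained. Assuming a balanced crossed study in which every operator measures every part the same number of times, the ANOVA method can be sketched in Python with NumPy as below. The function name `gage_rr_anova` and the array layout (parts × operators × replicates) are illustrative assumptions; the sketch always keeps the part × operator interaction in the model, whereas some software refits without it when the interaction is not significant.

```python
import numpy as np


def gage_rr_anova(y: np.ndarray) -> dict:
    """Variance components for a balanced crossed Gage R&R study.

    y has shape (parts, operators, replicates).
    """
    p, o, r = y.shape
    grand = y.mean()
    part_means = y.mean(axis=(1, 2))
    oper_means = y.mean(axis=(0, 2))
    cell_means = y.mean(axis=2)

    # Sums of squares for the two-way crossed model with interaction
    ss_part = o * r * np.sum((part_means - grand) ** 2)
    ss_oper = p * r * np.sum((oper_means - grand) ** 2)
    ss_inter = r * np.sum(
        (cell_means - part_means[:, None] - oper_means[None, :] + grand) ** 2
    )
    ss_error = np.sum((y - cell_means[:, :, None]) ** 2)

    ms_part = ss_part / (p - 1)
    ms_oper = ss_oper / (o - 1)
    ms_inter = ss_inter / ((p - 1) * (o - 1))
    ms_error = ss_error / (p * o * (r - 1))

    # Expected-mean-square solutions; negative estimates are truncated to zero
    var_repeat = float(ms_error)
    var_inter = max(0.0, float(ms_inter - ms_error) / r)
    var_oper = max(0.0, float(ms_oper - ms_inter) / (p * r))
    var_part = max(0.0, float(ms_part - ms_inter) / (o * r))

    var_reprod = var_oper + var_inter
    var_grr = var_repeat + var_reprod
    var_total = var_grr + var_part
    return {
        "repeatability": var_repeat,
        "reproducibility": var_reprod,
        "total_grr": var_grr,
        "part_to_part": var_part,
        "pct_contribution_grr": 100.0 * var_grr / var_total,
    }
```

In this formulation, %Contribution is a ratio of variance components, which is the basis for the percentages reported in Table 3.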

After the MSA, a normality test was applied to determine whether the process data followed a normal distribution, a necessary condition for using statistical tools such as control charts and capability analysis. The data distribution was examined both visually and statistically using a probability plot, confirming the suitability of the selected analytical methods for this dataset.
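The text does not name the statistical test behind the probability plot. As one possible illustration, the sketch below computes the probability-plot correlation coefficient (PPCC): the correlation between the ordered data and the corresponding standard normal quantiles, using Blom plotting positions. Values close to 1 indicate a nearly straight normal probability plot; a formal accept/reject decision would require critical values from published PPCC tables or a standard test such as Anderson-Darling or Shapiro-Wilk, which this sketch does not implement. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean


def probability_plot_r(data: list[float]) -> float:
    """Correlation between ordered data and normal quantiles (normal probability plot).

    Raises ZeroDivisionError if all values are identical.
    """
    n = len(data)
    ordered = sorted(data)
    # Blom plotting positions: (i - 3/8) / (n + 1/4)
    quantiles = [NormalDist().inv_cdf((i - 0.375) / (n + 0.25)) for i in range(1, n + 1)]
    mx, mq = fmean(ordered), fmean(quantiles)
    sxy = sum((x - mx) * (q - mq) for x, q in zip(ordered, quantiles))
    sxx = sum((x - mx) ** 2 for x in ordered)
    sqq = sum((q - mq) ** 2 for q in quantiles)
    return sxy / sqrt(sxx * sqq)


# A strongly right-skewed sample bends the probability plot and lowers r
print(round(probability_plot_r([1.0] * 9 + [100.0]), 2))
```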

The verified data were then analyzed using control charts to assess process stability. Specifically, $\bar{X}$ and R charts were used to monitor the process mean and variability across subgroups. The R chart was examined first to evaluate short-term (within-subgroup) variation and confirm its stability, since the $\bar{X}$ chart limits are computed from the average range; the $\bar{X}$ chart was then examined for variation in the process mean. These charts were fundamental in determining whether the process was statistically in control, an essential condition for subsequent capability analysis.
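As a minimal sketch of the $\bar{X}$ and R chart logic described above, the following Python function computes Phase I limits from the subgroups themselves and flags only points outside the control limits (no run rules). It uses the standard tabulated constants $A_2$, $D_3$, and $D_4$ for subgroup sizes 2 to 5; the function name and the restriction to $n \le 5$ are simplifying assumptions, not the study's software.

```python
from statistics import fmean

# Standard control-chart constants for subgroup sizes 2 to 5
CONSTANTS = {
    2: {"A2": 1.880, "D3": 0.0, "D4": 3.267},
    3: {"A2": 1.023, "D3": 0.0, "D4": 2.574},
    4: {"A2": 0.729, "D3": 0.0, "D4": 2.282},
    5: {"A2": 0.577, "D3": 0.0, "D4": 2.114},
}


def xbar_r_limits(subgroups):
    n = len(subgroups[0])
    if any(len(s) != n for s in subgroups):
        raise ValueError("all subgroups must have the same size")
    c = CONSTANTS[n]
    means = [fmean(s) for s in subgroups]
    ranges = [max(s) - min(s) for s in subgroups]
    xbarbar, rbar = fmean(means), fmean(ranges)
    # (LCL, center line, UCL) for each chart
    r_chart = (c["D3"] * rbar, rbar, c["D4"] * rbar)
    x_chart = (xbarbar - c["A2"] * rbar, xbarbar, xbarbar + c["A2"] * rbar)
    # The R chart is checked first: X-bar limits depend on R-bar being stable
    r_out = [i for i, r in enumerate(ranges) if not (r_chart[0] <= r <= r_chart[2])]
    x_out = [i for i, m in enumerate(means) if not (x_chart[0] <= m <= x_chart[2])]
    return {"r_chart": r_chart, "xbar_chart": x_chart, "r_out": r_out, "xbar_out": x_out}


# Usage: nine stable subgroups followed by one shifted subgroup
data = [[10, 11, 12, 11, 10]] * 9 + [[20, 21, 22, 21, 20]]
print(xbar_r_limits(data)["xbar_out"])  # [9]
```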

Progressing to the next stage, process capability analysis was conducted to evaluate how well the process aligned with its specification limits. Capability indices such as $C_p$, $C_{pk}$, $P_p$, and $P_{pk}$ were computed to measure the process’s ability to meet customer expectations. This stage focused on critical-to-quality (CTQ) parameters, particularly cycle time, which directly influences customer satisfaction and the achievement of operational goals.
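The standard definitions of these indices can be sketched in Python as follows. Within-subgroup sigma is estimated as $\bar{R}/d_2$ (feeding $C_p$ and $C_{pk}$) and overall sigma as the sample standard deviation of all observations (feeding $P_p$ and $P_{pk}$). The paper does not state its specification limits, so the lower limit is optional here, on the assumption that cycle time is often specified with an upper limit only; the function name is hypothetical.

```python
from statistics import fmean, stdev

# d2 constants for estimating within-subgroup sigma from the average range
D2 = {2: 1.128, 3: 1.693, 4: 2.059, 5: 2.326}


def capability(subgroups, usl, lsl=None):
    n = len(subgroups[0])
    values = [x for s in subgroups for x in s]
    mu = fmean(values)
    sigma_within = fmean([max(s) - min(s) for s in subgroups]) / D2[n]  # R-bar / d2
    sigma_overall = stdev(values)  # sample standard deviation of all observations

    def indices(sigma):
        cpu = (usl - mu) / (3 * sigma)
        if lsl is None:
            # One-sided (upper) specification: no two-sided index, Cpk reduces to CPU
            return None, cpu
        cpl = (mu - lsl) / (3 * sigma)
        return (usl - lsl) / (6 * sigma), min(cpu, cpl)

    cp, cpk = indices(sigma_within)
    pp, ppk = indices(sigma_overall)
    return {"Cp": cp, "Cpk": cpk, "Pp": pp, "Ppk": ppk}


print(capability([[9, 11], [9, 11]], usl=16, lsl=4))
```

A large gap between $C_{pk}$ and $P_{pk}$ would suggest that between-subgroup shifts, rather than short-term noise, dominate the variation.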

Subsequently, a comprehensive analysis of the production line’s operational efficiency was carried out. Performance was evaluated using key metrics such as capacity, throughput, and cycle time. A value-added analysis identified non–value-added activities, including batching delays and waiting times, which revealed process inefficiencies and guided targeted improvement actions.
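The capacity and bottleneck logic described above can be illustrated with a small Python sketch. It assumes a serial line of stations, each with a per-unit processing time and a number of parallel agents. The station names follow the process steps described in Section 4.1, but the times, staffing levels, value-added minutes, and lead time in the usage example are hypothetical. Process cycle efficiency is taken here as value-added time divided by total lead time, a common Lean convention.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    minutes_per_unit: float  # processing time for one subscription at this station
    servers: int = 1  # agents working in parallel at this station

    @property
    def capacity_per_hour(self) -> float:
        return self.servers * 60.0 / self.minutes_per_unit


def line_metrics(stations, value_added_min, lead_time_min):
    capacities = {s.name: s.capacity_per_hour for s in stations}
    # In a serial line, the station with the lowest capacity limits the whole line
    bottleneck = min(capacities, key=capacities.get)
    throughput = capacities[bottleneck]
    return {
        "capacities": capacities,
        "bottleneck": bottleneck,
        "throughput_per_hour": throughput,
        "line_cycle_time_min": 60.0 / throughput,  # time between completed subscriptions
        "process_cycle_efficiency": value_added_min / lead_time_min,
    }


# Hypothetical station data (illustrative only, not measured values from the study)
line = [
    Station("verification", 4.0, 1),
    Station("data_entry", 6.0, 2),
    Station("processing", 3.0, 1),
    Station("plastification", 5.0, 1),
]
metrics = line_metrics(line, value_added_min=15.0, lead_time_min=120.0)
print(metrics["bottleneck"], metrics["throughput_per_hour"])  # plastification 12.0
```

Adding agents anywhere other than the bottleneck station would not raise line throughput in this model, which is why bottleneck identification precedes resource reallocation.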

In summary, this structured methodology combined statistical tools with an in-depth assessment of production line performance. It ensured systematic evaluation of data reliability, statistical stability, and operational efficiency, leading to actionable insights that strengthened process improvement efforts and aligned operations with customer and organizational objectives.

Potential risks were identified and managed from the outset to ensure robust project execution in line with ISO 31000:2018 principles [27]. The main risks included resistance to change, limited resources, supplier underpayment, technical difficulties, operational bottlenecks, and communication gaps. Remote employees and auditors were expected to resist workflow changes, so proactive communication, stakeholder involvement, and routine training were implemented to mitigate this risk.

Resource limitations in staffing, funding, or technology were addressed through continuous monitoring of resource use, early detection of shortages, and temporary expert support when needed. Technical risks related to new system implementation were minimized through thorough testing, IT assistance, and contingency planning.

Regular risk assessments and adaptive strategies ensured that emerging risks were anticipated and effectively managed. Through this active risk management approach, the project maintained stability and strengthened its strategic position.

4. Results and Discussion

This section presents the results of applying the DMAIC (Define, Measure, Analyze, Improve, Control) methodology to the subscription production process, discussing the key outcomes of each phase and their implications for process performance. In the Define phase (Section 4.1), we established the problem statement, objectives, and project scope. During the Measure phase (Section 4.2), we collected data on current process performance, focusing on key metrics such as cycle time. The Analyze phase (Section 4.3) combined statistical analyses with an in-depth analysis of the production line to identify the primary causes of process inefficiency. In the Improve phase (Section 4.4), we implemented targeted interventions aimed at reducing variation and optimizing resource utilization. Finally, the Control phase (Section 4.5) addresses the sustainability of these improvements, describing the monitoring measures put in place to ensure continued process stability.

4.1. Define Phase

The Define phase establishes a clear understanding of the improvement opportunity and forms the foundation of the project. It involves specifying the problem, assessing its impact on customers and operations, and aligning objectives through a structured project charter. Core components include the problem statement, project scope, objectives, and customer requirements.

In this study, the focus is on inefficiencies within the subscription production process that cause delays, errors, and decreased customer satisfaction. Streamlining the process is expected to shorten cycle time, optimize resource utilization, and enhance service quality.

This phase aligns stakeholders, sets project boundaries, and provides a shared understanding of the problem and expected results, ensuring a focused direction for the following phases.

4.1.1. Project Overview and Rationale

The current subscription production process, managing over 20,000 school subscriptions per semester, suffers from significant inefficiencies. Major problems include inadequate resource planning, insufficiently trained staff, and delays in service delivery. The participation of unqualified personnel, such as bus drivers, in administrative tasks has resulted in inconsistent output and prolonged waiting times. Customers often make multiple visits due to incomplete or delayed subscriptions, and the use of temporary receipts without proper identification increases the risk of fraud. These issues generate customer dissatisfaction, weaken internal controls, and harm the company’s reputation and operational efficiency.

The process inefficiencies also elevate costs and reduce service quality. Optimizing workflows and improving resource utilization are projected to decrease cycle time by 30% and annual costs by 20%. These improvements are expected to enhance customer satisfaction, increase retention, and reinforce the company’s competitive standing. Overcoming these challenges is vital to achieving long-term sustainability and operational excellence.

The project covers the complete subscription production process, from application receipt to final delivery. It includes all internal activities, such as document verification, data entry, processing, and plastification, while excluding external domains such as marketing or pricing. The objective is to enhance internal coordination and operational efficiency to ensure timely and reliable service delivery.

The specific goals are to reduce production cycle time by 30% and improve resource utilization by 25% within one year. Additionally, the project seeks a 20% increase in customer satisfaction with subscription services. These targets are linked to critical-to-quality (CTQ) metrics and will be achieved through workflow redesign and improved resource planning, leading to measurable and sustainable performance gains.

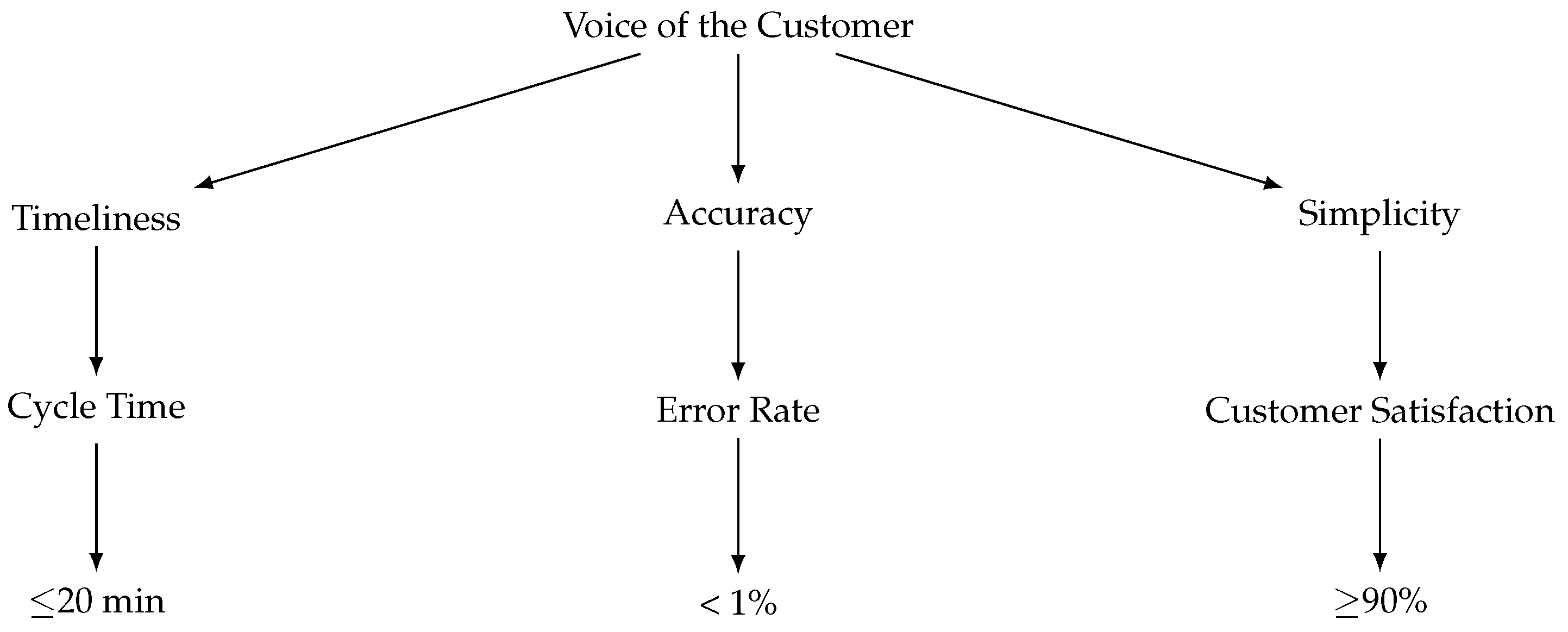

Voice of the Customer (VOC) and Critical-to-Quality (CTQ) Development

The subscription process involves two main customer groups: external users (students and parents) and internal stakeholders (operations and management). External customers prioritize accuracy, timeliness, and convenience, while internal stakeholders focus on efficiency, cost control, and quality consistency. Despite sporadic feedback initiatives, a structured framework for capturing and integrating the Voice of the Customer (VOC) had not been established.

To address this gap, the project implemented a systematic VOC framework combining surveys, interviews, and stakeholder consultations. The resulting insights guided process redesign to enhance customer satisfaction and align performance with organizational objectives.

Critical-to-Quality (CTQ) Trees were employed to translate customer expectations into measurable performance indicators. These trees define quantifiable metrics that reflect customer priorities and support data-driven improvement. Reducing variation in meeting CTQ standards is a central driver of improvement in Six Sigma methodology [1], and CTQ Trees provide a structured link between customer expectations and process outcomes [2].

VOC data were collected from internal stakeholders through interviews and from external customers via surveys at service counters, bus stations, and phone interviews with parents. The combined feedback supported the construction of a comprehensive CTQ Tree connecting customer expectations with quality drivers and measurable CTQ metrics.

Feedback from internal stakeholders highlighted recurring operational challenges during the subscription production process. Staff members reported frequent shortages of resources and incomplete documentation, which slowed down workflow execution. They emphasized the lack of adequate equipment and personnel during critical process stages, leading to delays that cascaded through subsequent operations and disrupted coordination between departments. Additionally, internal participants noted that clearer communication and timely updates regarding procedural changes would substantially improve task performance and interdepartmental alignment.

External customers, represented by students and parents, expressed similar concerns regarding efficiency and accuracy. Many reported extended waiting times for receiving their subscription cards, with occasional instances of lost or delayed deliveries. Errors in subscription details were also cited as a recurring issue, often requiring customers to return for corrections. While most respondents considered the process acceptable overall, they described it as unnecessarily complex and time-consuming, suggesting the need for simplification and shorter processing times.

Table 2 provides an example of how customer needs were translated into quality drivers and measurable CTQs.

Figure 1 presents the Critical-to-Quality (CTQ) Tree, mapping customer expectations into measurable metrics, focusing on timeliness, accuracy, and simplicity. The project will prioritize these CTQs but remain adaptable, incorporating other significant variables identified during later stages of the project.

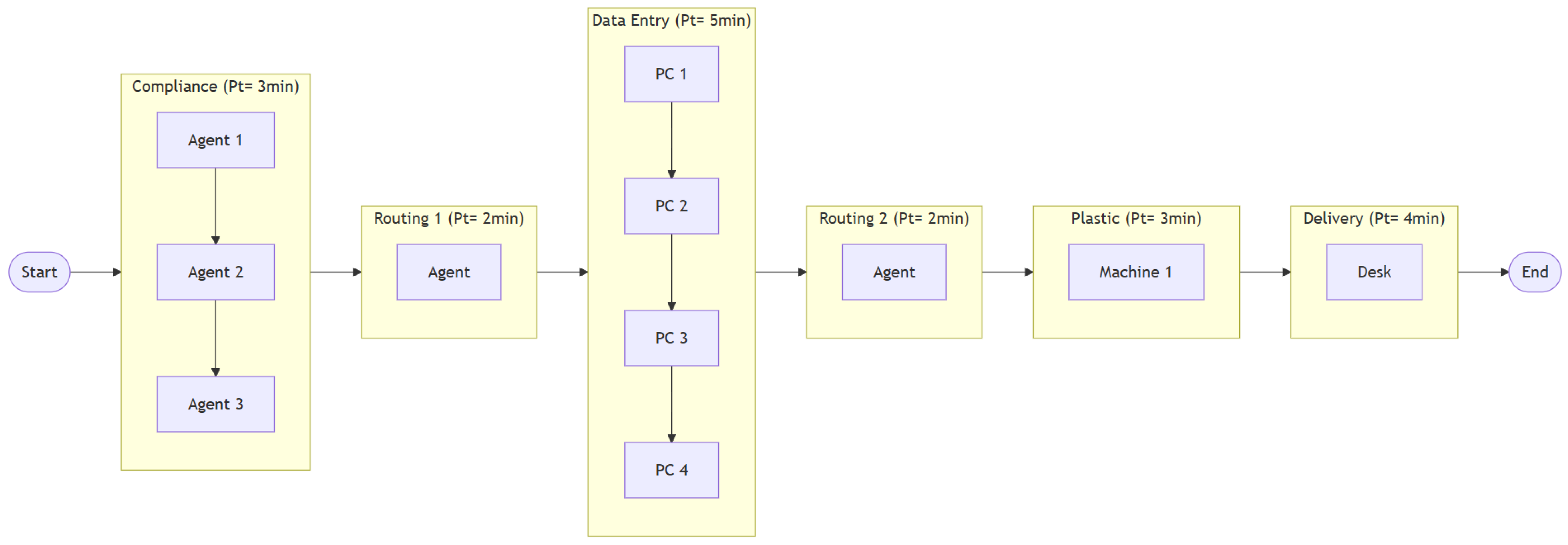

Process Description and SIPOC

The process of issuing school subscriptions begins when a client submits an application containing key information such as the student’s age, educational institution, residential address, and payment details. The initial review is performed by a generalist agent who verifies the application for completeness and compliance with required standards. Due to limited specialization, this stage is highly prone to errors and inconsistencies, often resulting in application rejections and processing delays.

The overall flow of the subscription production process, including suppliers, inputs, process steps, outputs, and customers, is represented in the SIPOC diagram shown in Figure 2. This diagram provides a high-level overview of the process boundaries and the main elements influencing performance and quality outcomes.

Once the application is approved, it moves to the data entry stage, where additional challenges arise. The same agent, often handling multiple responsibilities, must accurately record all details in the system. The manual nature of this task and the absence of specialized data entry expertise increase the likelihood of errors and reduce overall efficiency.

After data entry, the application and related information are transferred to another agent for the final processing steps, which include preparing the subscription for plastification and archiving. These activities frequently become bottlenecks due to overlapping duties and limited task specialization.

A detailed representation of the production line, showing the sequence of operations and workflow dependencies, is provided in Figure 3.

The concluding stage is managed by a designated processing agent responsible for plastification, during which the subscription card is sealed in plastic to ensure durability and prevent misuse or tampering. The process ends with document archiving, an essential record-keeping activity that is often compromised due to excessive workload and insufficient task allocation.

Once plastified, the completed subscription is delivered to the client. The process, largely dependent on agents performing multiple roles without specialized expertise, highlights significant operational challenges. These inefficiencies emphasize the need for process redesign to improve efficiency, minimize errors, and enhance the overall quality of service within this critical public sector operation.

4.2. Measure

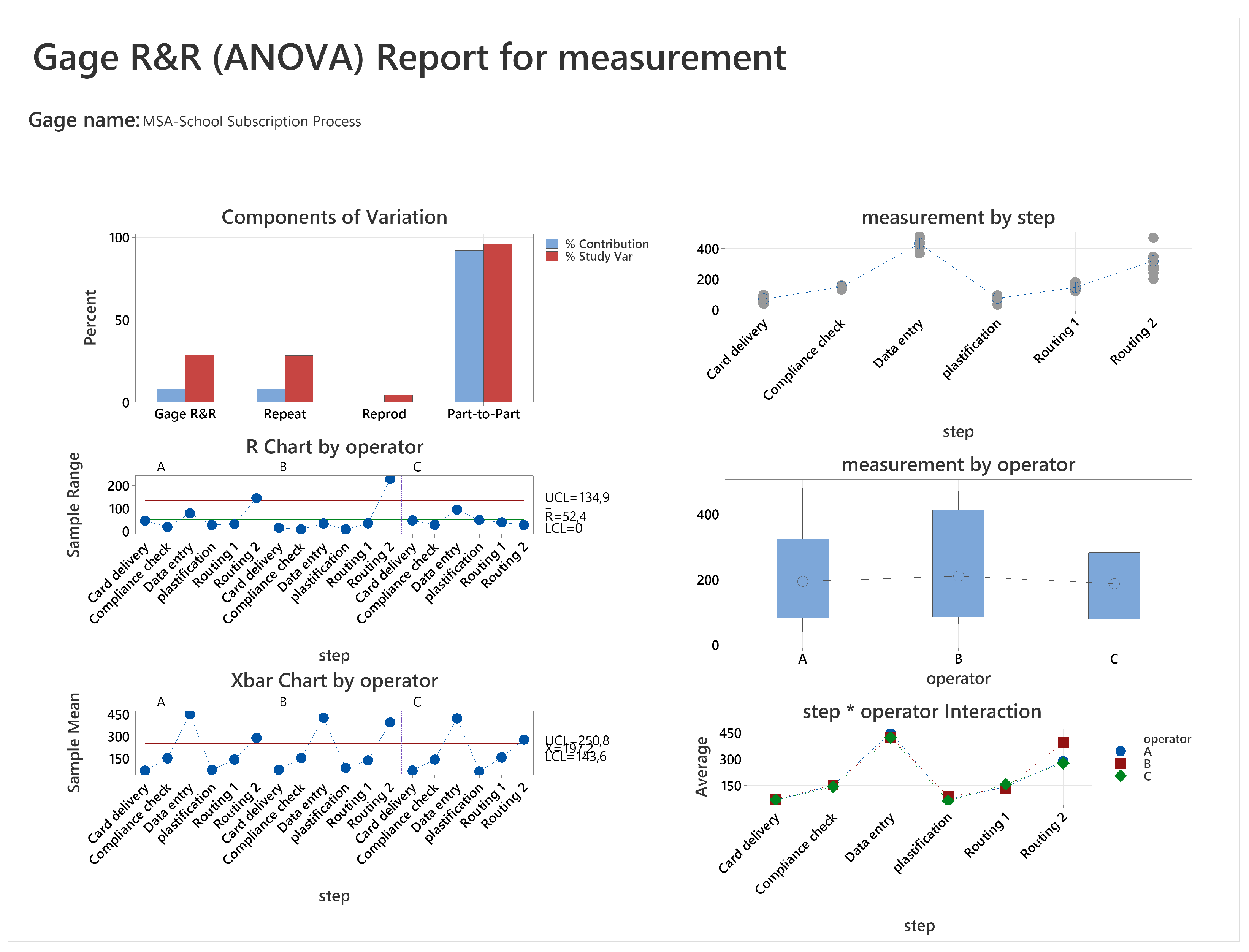

4.2.1. Measurement System Analysis (MSA)

The findings from the Measurement System Analysis (MSA) and ANOVA provide essential insights into the performance of the measurement system used in the subscription production process. Both analyses confirm that the system is suitable for process monitoring, with minimal contribution to total variation and acceptable levels of repeatability and reproducibility, as illustrated in Table 3.

Table 3 indicates that total R&R (Repeatability and Reproducibility) accounts for 8.22% of the overall variation, with repeatability contributing 8.04% and reproducibility only 0.19%. This demonstrates that the majority of variation (91.78%) arises from actual piece-to-piece differences within the process.

Additional validation of the measurement system’s adequacy is presented in Figure 4, which provides a graphical representation of Gage R&R performance.

As seen in Figure 4, the system captures process variation effectively, with four distinct categories detected. The 28.67% study variation lies within the 10–30% range that the AIAG guidelines [13] regard as acceptable depending on the application, which supports the use of the system in this context.
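The percentages quoted above are linked arithmetically: %Contribution is a share of variance, whereas %Study Variation is a share of standard deviation. The Python sketch below, which assumes the total variation consists only of the gage and unit-to-unit components (the Step*Operator interaction being non-significant), converts the 8.22% total Gage R&R contribution into %Study Variation and the number of distinct categories (ndc) using the AIAG-style formula ndc = 1.41 × σ_part/σ_gage. The function name is illustrative.

```python
import math

def gage_rr_summary(pct_contribution_grr):
    """Return (%Study Var, ndc) from the total Gage R&R %Contribution.

    %Contribution is a share of variance and %Study Var a share of standard
    deviation, so %StudyVar = 100 * sqrt(%Contribution / 100). Assumes total
    variance = gage variance + unit-to-unit variance (no interaction term).
    """
    grr_share = pct_contribution_grr / 100.0   # variance share of the measurement system
    part_share = 1.0 - grr_share                # variance share of unit-to-unit differences
    pct_study_var = 100.0 * math.sqrt(grr_share)
    # Number of distinct categories: 1.41 * sigma_part / sigma_gage, truncated to an integer
    ndc = int(1.41 * math.sqrt(part_share / grr_share))
    return pct_study_var, ndc

study_var, ndc = gage_rr_summary(8.22)   # total Gage R&R contribution from Table 3
print(f"%Study Var = {study_var:.2f}%, ndc = {ndc}")
```

With 8.22% as input, the sketch returns about 28.67% and ndc = 4, matching the values reported for Figure 4.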

To examine whether the operator effect interacts with the process step, a two-way ANOVA with interaction was performed. The analysis shows that the interaction term (Step*Operator) has a p-value of , indicating no significant interaction. As a result, a simplified ANOVA model without the interaction term was automatically generated.

As illustrated in Table 4, the process step remains statistically significant (p-value < 0.001), while the operator effect remains non-significant ( ), consistent with the reduced model that excludes the interaction term.

In summary, the MSA and ANOVA analyses confirm that the measurement system demonstrates adequate precision. Most of the observed variation is process-related, with minimal contribution from measurement error, ensuring the system’s reliability for ongoing process improvement initiatives.

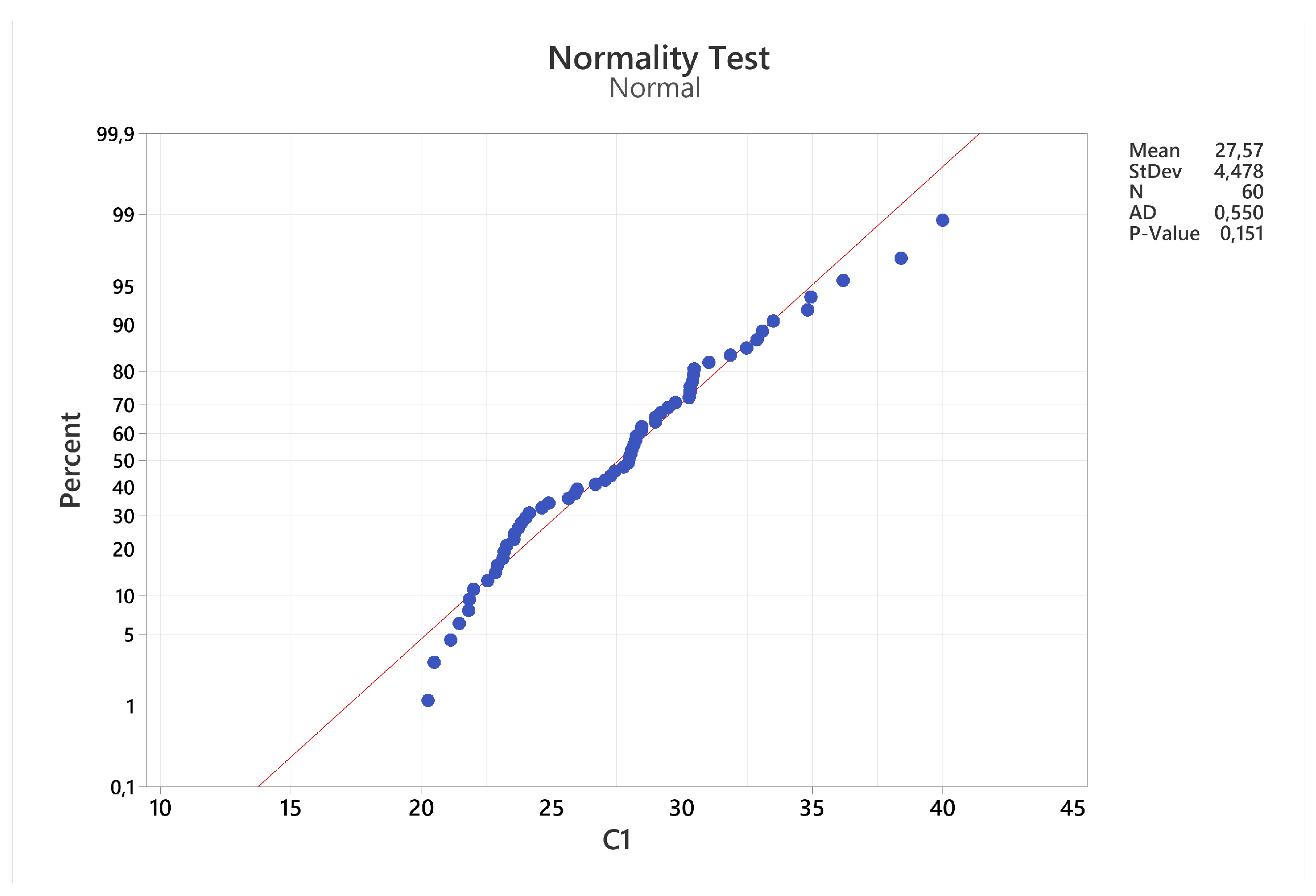

Cycle Time Measurement and Normality Verification

Following the Measurement System Analysis (MSA), cycle times were recorded six times per day over a ten-day period, yielding 60 observations of process performance. The first step in analyzing these data was to check whether they follow a normal distribution, an assumption underlying the normal-based capability analysis and the usual interpretation of control-chart limits.

Figure 5 presents the outcome of the normality assessment performed on the cycle time data. The probability plot, generated using Minitab Statistical Software, V22.3.0, illustrates the alignment between the observed data and the theoretical normal distribution.

Analysis of Figure 5 shows that the mean cycle time is with a standard deviation of , based on 60 observations. The Anderson–Darling (AD) statistic equals , with a corresponding p-value of . Since the p-value ( ) exceeds the significance threshold ( ), the null hypothesis of normality cannot be rejected, so a normal distribution is a reasonable model for the cycle time data.
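The Anderson–Darling test above was run in Minitab. As an illustration only, the Python sketch below reproduces the test with the standard library: it estimates the mean and standard deviation from the sample, computes A², applies the small-sample adjustment A²* = A²(1 + 0.75/n + 2.25/n²), and converts the result into a p-value with the widely used D'Agostino–Stephens approximation. The measured cycle times are not reproduced in this section, so the example uses two hypothetical 60-point samples: one placed at normal quantiles and one with a right-skewed (exponential) shape.

```python
import math
from statistics import NormalDist, fmean, stdev

def anderson_darling_normal(data):
    """Anderson-Darling normality test with mean and sigma estimated from the sample.

    Returns (A2, p_value); the p-value uses the adjusted statistic
    A2* = A2 * (1 + 0.75/n + 2.25/n**2) and the D'Agostino-Stephens approximation.
    """
    x = sorted(data)
    n = len(x)
    mu, sigma = fmean(x), stdev(x)          # stdev uses the n - 1 denominator
    std_normal = NormalDist()
    cdf_vals = [std_normal.cdf((xi - mu) / sigma) for xi in x]
    # A2 = -n - (1/n) * sum_{i=1..n} (2i - 1) * [ln F_(i) + ln(1 - F_(n+1-i))]
    s = sum((2 * i - 1) * (math.log(cdf_vals[i - 1]) + math.log(1.0 - cdf_vals[n - i]))
            for i in range(1, n + 1))
    a2 = -n - s / n
    a = a2 * (1 + 0.75 / n + 2.25 / n ** 2)
    if a >= 0.6:
        p = math.exp(1.2937 - 5.709 * a + 0.0186 * a ** 2)
    elif a >= 0.34:
        p = math.exp(0.9177 - 4.279 * a - 1.38 * a ** 2)
    elif a >= 0.2:
        p = 1 - math.exp(-8.318 + 42.796 * a - 59.938 * a ** 2)
    else:
        p = 1 - math.exp(-13.436 + 101.14 * a - 223.73 * a ** 2)
    return a2, p

# Hypothetical samples (n = 60): normal quantiles vs. a right-skewed, exponential-shaped set
n = 60
normal_like = [NormalDist(28, 4).inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
skewed = [19 - 4 * math.log(1 - (i - 0.5) / n) for i in range(1, n + 1)]
for label, sample in (("normal-like", normal_like), ("skewed", skewed)):
    a2, p = anderson_darling_normal(sample)
    print(f"{label}: A2 = {a2:.3f}, p = {p:.4f}")
```

The normal-like sample should not reject normality at the 5% level, whereas the skewed sample should.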

Given the confirmation of normality, the dataset is suitable for further statistical analysis using control charts and process capability assessment to evaluate process stability and performance.

Control Chart Analysis Using X̄-R Charts

Control charts are key instruments in statistical process control, allowing continuous monitoring of process stability and variation over time. The X̄-R chart is particularly suited for variable data with small to moderate subgroup sizes (typically 2 to 10). It enables simultaneous tracking of the process mean (X̄ chart) and within-subgroup variability (R chart). The R chart must always be reviewed first, as it represents short-term variation; if variability is unstable, corrective actions are required before interpreting the process mean.

In this study, six cycle times were recorded per day across ten consecutive days, forming ten subgroups of six observations (one subgroup per day), which provides sufficient data to assess process stability.

Figure 6 presents the X̄-R chart constructed from the measured cycle time data, illustrating both mean and range behavior across subgroups.

The R chart in Figure 6 presents the sample ranges (R) for each subgroup, providing a measure of short-term variation. The control limits are defined as:

$$UCL_R = D_4\,\bar{R}, \qquad LCL_R = D_3\,\bar{R}$$

with a central line (R̄) at . All plotted points fall within the control limits, and no unusual patterns or trends are observed. This confirms that short-term process variation is stable, with no evidence of special causes. Consequently, the variability component is under statistical control, allowing interpretation of the X̄ chart.

The X̄ chart in Figure 6 displays the subgroup means (X̄) across the sampling period. The calculated control limits are:

$$UCL_{\bar{X}} = \overline{\overline{X}} + A_2\,\bar{R}, \qquad LCL_{\bar{X}} = \overline{\overline{X}} - A_2\,\bar{R}$$

with a central line (grand mean) at . All subgroup means lie within the control limits, and no patterns or shifts are detected. This indicates a stable process mean, free from special-cause variation.

In summary, both the R chart and the X̄ chart demonstrate that the process is statistically stable and predictable. The consistency of variability and the steady mean confirm that the system operates under control. With stability verified, the next step involves conducting a capability analysis to evaluate the process’s ability to meet its specification limits.
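The control limits above follow the standard Shewhart formulas. The Python sketch below computes them assuming subgroups of six observations (one subgroup per day); the tabulated constants A2 = 0.483, D3 = 0, D4 = 2.004 and d2 = 2.534 apply only to that subgroup size, and the two subgroups in the example are hypothetical (the study used ten). It also returns R̄/d2, one common estimator of the within-subgroup standard deviation used in capability indices.

```python
from statistics import fmean

# Shewhart constants for subgroups of size n = 6 (standard control-chart tables)
A2, D3, D4, d2 = 0.483, 0.0, 2.004, 2.534

def xbar_r_limits(subgroups):
    """Return (LCL, CL, UCL) for the R and X-bar charts plus the R-bar/d2 sigma estimate."""
    if any(len(g) != 6 for g in subgroups):
        raise ValueError("the constants above are valid only for subgroups of size 6")
    r_bar = fmean(max(g) - min(g) for g in subgroups)   # average subgroup range
    x_dbar = fmean(fmean(g) for g in subgroups)          # grand mean of subgroup means
    return {
        "R": (D3 * r_bar, r_bar, D4 * r_bar),
        "Xbar": (x_dbar - A2 * r_bar, x_dbar, x_dbar + A2 * r_bar),
        "sigma_within": r_bar / d2,
    }

# Two hypothetical daily subgroups of six cycle times (min)
limits = xbar_r_limits([[26, 28, 30, 27, 29, 31], [25, 27, 29, 28, 30, 26]])
print(limits)
```

Because D3 = 0 for subgroups of six, the lower R-chart limit is always zero at this subgroup size.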

4.2.2. Process Capability Analysis

Process capability analysis assesses how effectively a process can produce outcomes within defined specification limits. It determines whether the process consistently operates within the acceptable tolerance range. The lower specification limit (LSL) and upper specification limit (USL) define the performance boundaries used to evaluate process capability.

For this study:

The LSL was established by considering only the essential processing activities—compliance verification, data entry, plastification, and delivery—excluding non-value-added times such as batching delays or routing intervals. This ensures that the LSL represents the minimum achievable processing time under optimal efficiency.

The USL was determined according to customer expectations, reflecting the maximum acceptable cycle time from the end user’s perspective.

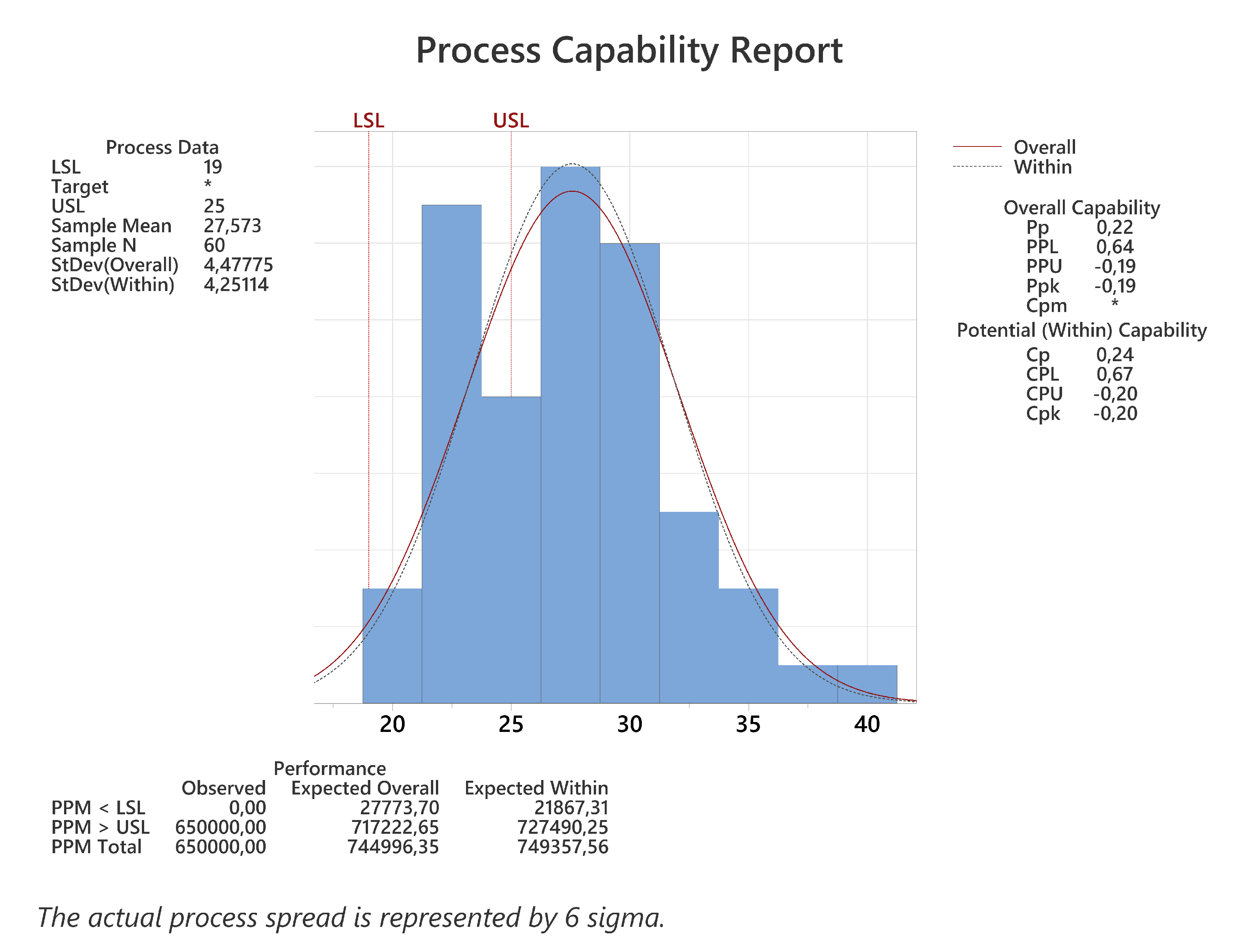

The process capability report, presented in Figure 7, summarizes the calculated capability indices and illustrates the process distribution relative to the established specification limits.

The process capability analysis highlights several important findings:

The lower specification limit (LSL) is 19 min, representing the theoretical minimum processing time achievable under ideal operating conditions without delays or inefficiencies.

The upper specification limit (USL) is 25 min, corresponding to the maximum acceptable cycle time defined by customer expectations.

The process mean is , which exceeds the USL, indicating that the process is not capable of consistently meeting customer requirements.

The process exhibits notable variation, with an overall standard deviation of and a within-subgroup standard deviation of .

These results confirm substantial variability at both the overall and subgroup levels, reflecting inconsistencies that hinder process capability and customer satisfaction.

Capability Indices

The process capability indices quantify the alignment between process performance and specification limits.

Cp and Pp: These values indicate that process variability is excessive relative to the tolerance range. A capable process typically requires Cp ≥ 1.33.

Cpk and Ppk: The negative values confirm that the process mean exceeds the USL, leading to inadequate performance.

The one-sided indices CPL and CPU: CPL is positive because the mean lies above the LSL, whereas CPU is negative because the mean exceeds the USL; the process therefore fails with respect to the upper limit.

Defect Rates

Defect rates, expressed in parts per million (PPM), are as follows:

PPM below LSL: 0, indicating no output below the minimum acceptable performance level.

PPM above USL: 650,000, showing that a large proportion of results exceed the upper specification limit.

These results confirm that the process is neither capable nor centered within the defined limits. The elevated mean and high variability indicate the need for immediate corrective action. Improvement efforts should focus on reducing variation by eliminating non–value-added activities such as batching and routing delays, and on re-centering the process mean within the specification limits to meet customer expectations.
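The indices and defect rates above follow standard definitions. The Python sketch below computes them for the two-sided specification LSL = 19 min and USL = 25 min given in the text; the mean and standard deviations in the usage example are hypothetical placeholders, not the study's values. Observed PPM is a direct count, so, for instance, 39 of 60 observations above the USL would correspond to 650,000 PPM.

```python
from statistics import NormalDist

LSL, USL = 19.0, 25.0   # specification limits from the text (min)

def capability_indices(mean, sigma_within, sigma_overall, lsl=LSL, usl=USL):
    """Cp/CPL/CPU/Cpk from the within-subgroup sigma; Pp/Ppk from the overall sigma."""
    cpl, cpu = (mean - lsl) / (3 * sigma_within), (usl - mean) / (3 * sigma_within)
    ppl, ppu = (mean - lsl) / (3 * sigma_overall), (usl - mean) / (3 * sigma_overall)
    return {
        "Cp": (usl - lsl) / (6 * sigma_within), "CPL": cpl, "CPU": cpu, "Cpk": min(cpl, cpu),
        "Pp": (usl - lsl) / (6 * sigma_overall), "Ppk": min(ppl, ppu),
    }

def expected_ppm_above(mean, sigma, usl=USL):
    """Expected PPM above the USL if cycle times are normal with this mean and sigma."""
    return 1e6 * (1.0 - NormalDist(mean, sigma).cdf(usl))

def observed_ppm(data, lsl=LSL, usl=USL):
    """Observed PPM below the LSL and above the USL, counted directly from the data."""
    n = len(data)
    return (sum(x < lsl for x in data) * 1_000_000 / n,
            sum(x > usl for x in data) * 1_000_000 / n)

# Hypothetical inputs: mean 28 min, within and overall sigma both 4 min
idx = capability_indices(28.0, 4.0, 4.0)
print(idx, round(expected_ppm_above(28.0, 4.0)))
print(observed_ppm([26.0] * 39 + [22.0] * 21))   # 39 of 60 above USL -> (0.0, 650000.0)
```

A mean above the USL always drives CPU and Cpk negative, regardless of how small the standard deviation is.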

4.2.3. Descriptive Statistics and Variability Analysis

To further assess variability in the process, all recorded cycle time data were consolidated and analyzed. This evaluation provides an overview of the main descriptive statistics, including the mean, standard deviation, and percentile values.

Figure 8 displays the descriptive statistics for the measured cycle time (C1), based on 60 observations.

The analysis yields the following findings:

The mean cycle time is with a standard deviation of , indicating a moderate spread around the mean.

The minimum observed value is , while the maximum is , revealing a wide range of observed cycle times.

The interquartile range (Q1–Q3) extends from to , showing that the central 50% of values fall within this interval.

The wide spread between the minimum and maximum values indicates substantial process variability. This does not contradict the control chart results: statistical stability means that the variation is predictable and arises from common causes, not that it is small. Because this common-cause dispersion can still affect output consistency, further investigation is recommended to sustain quality and to mitigate potential deviations in future operations.

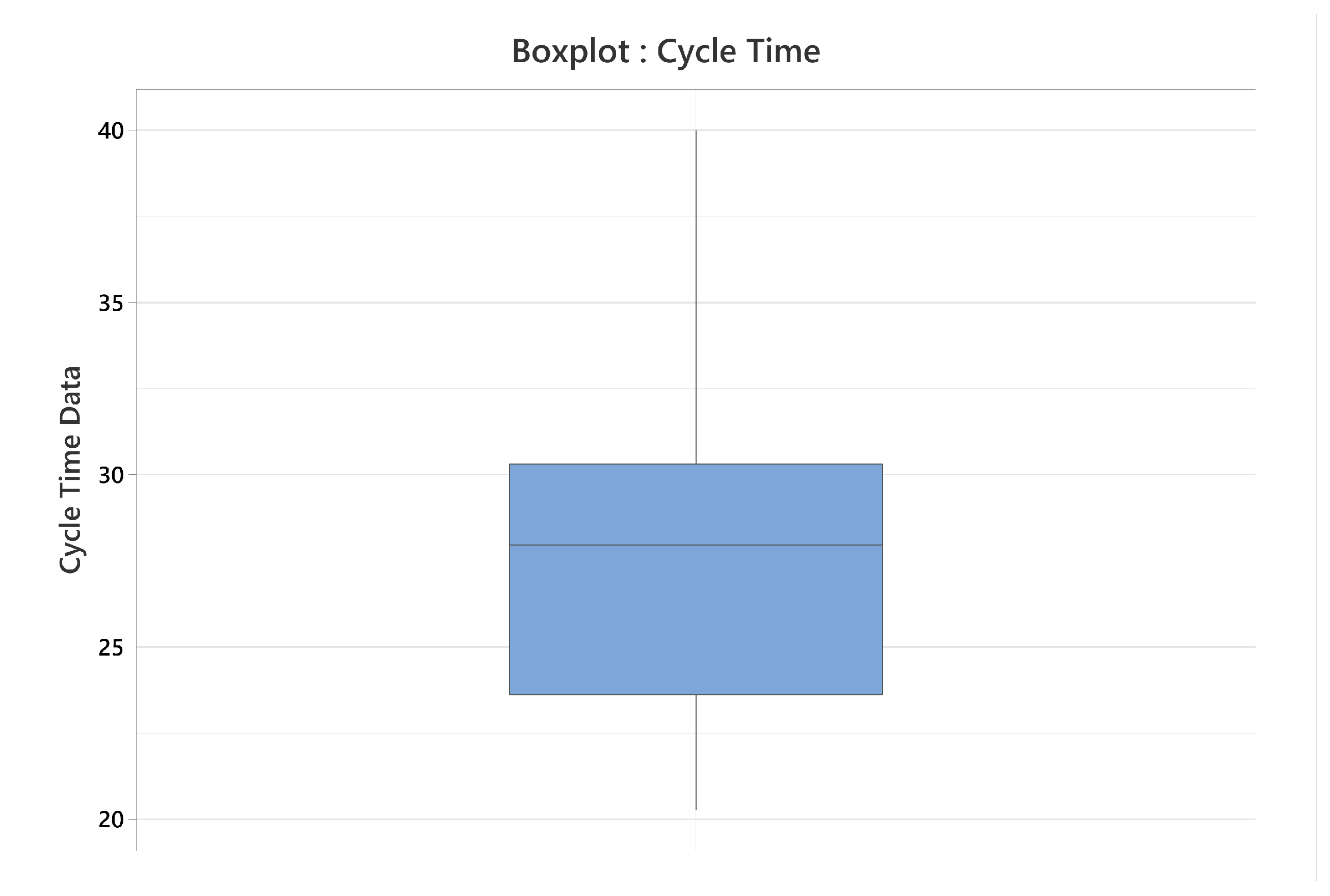

The boxplot in Figure 8 illustrates the distribution of the cycle time data. The median is approximately 28 min, so half of the observed cycle times lie at or below this value. The interquartile range (IQR), spanning from to , captures the middle 50% of observations. Several outliers appear above the upper whisker, with values exceeding 40, indicating that certain cycle times are considerably longer than the rest. This pattern highlights additional variability in the process.

Although the process is statistically stable, the spread and presence of outliers suggest inconsistency that could affect performance predictability. Such variability may arise from differences in human resources, process execution, or equipment reliability. Further investigation is therefore necessary to identify the underlying causes and to introduce corrective actions aimed at reducing variation and enhancing process consistency.
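The boxplot quantities can be reproduced from raw cycle times. The Python sketch below computes the quartiles, the IQR, the 1.5 × IQR fences that define the whiskers, and the points flagged as outliers; `statistics.quantiles` uses its default "exclusive" method, which interpolates at position (n + 1)p in the sorted data. The twelve values in the example are hypothetical, not the study's 60 observations.

```python
from statistics import quantiles

def boxplot_summary(data):
    """Quartiles, IQR, whisker ends and outliers using the 1.5 * IQR rule."""
    q1, median, q3 = quantiles(data, n=4)             # default method="exclusive"
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr       # inner fences
    inside = [x for x in data if low <= x <= high]   # whiskers end at the extreme inliers
    return {
        "Q1": q1, "median": median, "Q3": q3, "IQR": iqr,
        "whiskers": (min(inside), max(inside)),
        "outliers": sorted(x for x in data if x < low or x > high),
    }

# Hypothetical cycle times (min)
summary = boxplot_summary([20, 22, 24, 25, 26, 27, 28, 28, 29, 30, 31, 45])
print(summary)
```

In this example the upper fence is 38 min, so the 45-min value is plotted as an outlier while the whisker stops at 31 min.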

4.2.4. Overall Statistical Performance

The control chart analysis confirms that the process operates under statistical stability, with no evidence of special-cause variation affecting either the mean or variability. Although some internal variation exists, the process is stable but not capable of meeting the established specification limits. The capability analysis reveals a clear gap between current performance and the expected standards, indicating a misalignment between process outcomes and customer expectations.

This issue is particularly critical because cycle time represents a key critical-to-quality (CTQ) parameter directly influencing customer satisfaction. While the process demonstrates consistency, its lack of capability highlights weaknesses in process design and execution that require immediate attention. Targeted improvements are necessary to realign performance with customer and operational requirements.

The next phase will involve a detailed assessment of production line capacity, cycle time behavior, and related performance drivers to identify the root causes of inefficiency and misalignment. The insights from this analysis will support process optimization and establish a foundation for sustained improvement.

4.3. Analyze

The Analyze phase focuses on identifying the root causes of inefficiencies affecting process performance. Using statistical tools and process data, this phase examines variations, bottlenecks, and critical factors contributing to delays and errors in the subscription production process.

4.3.1. Production Line Analysis

The production line analysis evaluates capacity, bottlenecks, cycle time, and throughput to assess overall process efficiency. The model accounts for batching delays, network interruptions, and setup time on the plastification machine. Key operational parameters summarized in Table 5 include stage-specific processing times and batching rules that shape system performance. The analysis also incorporates average network downtime of two hours per day and a plastification setup time of two minutes per 15 units. As shown in Table 5, multi-stage batching generates waiting periods between operations, contributing to production delays and reduced throughput efficiency.

In addition, the effective time per unit for each stage, accounting for batching delays and setups, is summarized in Table 6. These values are then used to compute the total cycle time. For Routing 1, the batch size is 3 units. Suppose the first unit exits Compliance and enters Routing 1. The system must wait for the remaining two units to complete processing in Compliance before the batch can start in Routing 1. The waiting time for the first unit is min. The effective time for the first unit in Routing 1 is therefore min. For the last unit, there is no waiting time, so its effective time equals the Routing 1 processing time, min. The total waiting time for the three units is min. Hence, the average effective time per unit for Routing 1 is min/unit.

For Routing 2, with a batch size of 3 units, the waiting time for the first unit to start processing is min. The effective time for the first unit is min. For the last unit in the batch, the effective time becomes min. The total waiting time for the batch is min. Thus, the average effective time per unit for Routing 2 is min/unit.
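The batching logic described for Routing 1 and Routing 2 can be expressed compactly. In the Python sketch below, the k-th unit of a batch of size b waits for the remaining b − k units to arrive from the upstream station, so the average effective time equals the processing time plus (b − 1)/2 upstream processing times. The example uses the 2-min Routing 1 processing time stated in the availability calculation later in this section; the 9-min upstream (Compliance) time is a hypothetical value, chosen because two such intervals reproduce the 18-min batch wait quoted there. Like the text, the sketch ignores queueing among units once the batch has started.

```python
def batch_effective_times(upstream_time, process_time, batch_size):
    """Per-unit waits and effective times when a station starts only on a full batch.

    Units arrive every `upstream_time` minutes; the k-th arrival (k = 1..batch_size)
    waits for the remaining batch_size - k units. Queueing inside the batch is ignored.
    """
    waits = [(batch_size - k) * upstream_time for k in range(1, batch_size + 1)]
    effective = [w + process_time for w in waits]
    return waits, effective, sum(effective) / batch_size

# Routing 1: 2 min processing, batch of 3; the 9-min upstream time is hypothetical
waits, effective, average = batch_effective_times(9, 2, 3)
print(waits, effective, average)   # [18, 9, 0] [20, 11, 2] 11.0
```

Replacing batching with single-piece flow corresponds to batch_size = 1, for which the wait disappears and the effective time reduces to the processing time.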

Since the data entry station operates with four machines, the effective time per unit is calculated based on the combined processing capacity. The computation includes both machine processing time and idle time resulting from system delays, such as network interruptions. The following steps outline the procedure used to determine the total effective working time and the corresponding effective time per unit, providing insight into the overall efficiency of the process.

- Step 1: Calculate Total Idle Time for All Machines

First, we calculate the total idle time for all 4 machines. Since each machine experiences 2 h of idle time per day (120 min), the total idle time for all machines is:

$$T_{\text{idle}} = 4 \times 120 = 480\ \text{min/day}$$

This accounts for the non-productive time when the machines are waiting due to network issues.

- Step 2: Calculate Total Processing Capacity

Next, the total processing capacity for the four machines is calculated under the assumption of no idle time. Each machine operates for 480 min per day, with a process time of 5 min per unit. Therefore, the total daily capacity for all four machines is:

$$C_{\text{ideal}} = \frac{4 \times 480\ \text{min}}{5\ \text{min/unit}} = 384\ \text{units/day}$$

This value represents the maximum number of units that can be processed per day under ideal conditions, assuming continuous operation with no idle time or interruptions.

- Step 3: Adjust for Idle Time

The next step adjusts the total working time to account for idle periods caused by network interruptions and other delays. The effective working time for all four machines is therefore:

$$T_{\text{eff}} = 4 \times 480 - 480 = 1920 - 480 = 1440\ \text{min/day}$$

This value is the productive working time, that is, the time during which the machines actively process units.

- Step 4: Calculate Estimated Units per Day

To estimate the number of units produced per day, the effective working time is divided by the process time per unit:

$$Q = \frac{1440\ \text{min}}{5\ \text{min/unit}} = 288\ \text{units/day}$$

This represents the estimated daily output after accounting for the impact of idle time on overall productivity.

- Step 5: Calculate Effective Time per Unit

Finally, the effective time per unit is determined by incorporating the idle time, distributed evenly across all produced units, into the base processing time:

$$t_{\text{eff,DE}} = 5 + \frac{480}{288} \approx 6.667\ \text{min/unit}$$

This calculation provides a realistic measure of the actual time required to complete one unit under existing operating conditions.
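The five Data Entry steps reduce to a few lines of arithmetic. The Python sketch below uses the parameters given in the text (four machines, 480 min per day, 120 min of idle time per machine, 5 min per unit); the function name is illustrative. The resulting 288 units per day is the same figure reported as baseline throughput in Table 9.

```python
def data_entry_effective_time(machines=4, shift_min=480, idle_per_machine=120, unit_time=5):
    """Steps 1-5 of the Data Entry calculation (all times in minutes)."""
    total_idle = machines * idle_per_machine                  # Step 1: total idle time
    ideal_units = machines * shift_min / unit_time            # Step 2: capacity without idle time
    effective_work = machines * shift_min - total_idle        # Step 3: productive time
    units_per_day = effective_work / unit_time                # Step 4: estimated daily output
    time_per_unit = unit_time + total_idle / units_per_day    # Step 5: idle time spread over units
    return total_idle, ideal_units, effective_work, units_per_day, time_per_unit

total_idle, ideal_units, effective_work, units_per_day, t_unit = data_entry_effective_time()
print(total_idle, ideal_units, effective_work, units_per_day, round(t_unit, 3))
# 480 384.0 1440 288.0 6.667
```

Note that 5 + 480/288 equals 1920/288, so Step 5 is equivalent to dividing total machine time by daily output.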

Table 6 incorporates all relevant sources of delay, including batching effects in Routing 1 and Routing 2, network interruptions during Data Entry, and setup times in the Plastification stage. For plastification, each batch of five units is processed simultaneously on a single sheet. Consequently, each unit experiences a base processing time of 3 min plus a distributed setup delay of 2 min per 15 units, resulting in a total effective time of 3.133 min per unit.
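Written out with the values stated above, the plastification effective time is the base time plus the setup time spread over 15 units:

$$t_{\text{eff,Plast}} = 3 + \frac{2}{15} \approx 3.133\ \text{min/unit}$$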

The total cycle time, obtained by summing all effective times across stages, is calculated as: min per unit.

In this study, two complementary cycle time values are referenced. The first, a measured mean of min per unit, was recorded during the Measure phase from real-time data collected over 60 cycles. The second, a calculated total of min per unit, was derived during the Analyze phase by aggregating effective times across all process stages, including modeled delays due to batching, setup, and idle time. Although these results stem from distinct analytical approaches, the modeled value remains within the expected variation range. Together, they provide a coherent and reliable view of current process performance and potential areas for optimization.

Capacity and Bottleneck Identification

The bottleneck in a production system is defined as the stage with the highest long-term utilization, corresponding to the lowest effective capacity [28]. Accordingly, the base capacity of workstation $i$ is expressed as the ratio between the number of parallel machines and the base process time:

$$r_i = \frac{m_i}{t_{0,i}}$$

where $m_i$ represents the number of machines and $t_{0,i}$ the base processing time at workstation $i$; with $t_{0,i}$ expressed in minutes, $r_i$ is obtained in jobs per minute.

Following Hopp and Spearman [28], the bottleneck rate of the production line is determined as the minimum base capacity $r_i$ among all stages:

$$r_b = \min_{i = 1, \dots, N} r_i$$

where $N$ denotes the total number of workstations in the process. In the context of the subscription production line, each stage is considered an independent workstation, and the bottleneck corresponds to the stage exhibiting the lowest $r_i$, which restricts the overall system throughput. This formulation, standard in production system analysis, is used here to show how downtime and variability affect throughput in the studied service process.
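A minimal Python sketch of the bottleneck-rate definition follows. The Data Entry parameters (four machines, 5 min per unit) come from the text; treating Routing 1 as a single station (m = 1) with its 2-min processing time is an assumption for illustration, since Table 5 is not reproduced here.

```python
def bottleneck_rate(stations):
    """Return (station, r_b), where r_b = min_i m_i / t0_i in jobs per minute."""
    rates = {name: m / t0 for name, (m, t0) in stations.items()}   # base capacities r_i
    name = min(rates, key=rates.get)
    return name, rates[name]

# (machines m_i, base time t0_i in min); Routing 1 as a single station is an assumption
stations = {"Data Entry": (4, 5.0), "Routing 1": (1, 2.0)}
print(bottleneck_rate(stations))   # ('Routing 1', 0.5)
```

Because this rate ignores downtime and batching, it describes the ideal line; the availability adjustment that follows can change which station limits throughput.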

The bottleneck rate $r_b$ represents the system’s performance under ideal operating conditions, excluding disruptions such as machine breakdowns. In practice, however, production systems experience random failures that reduce effective capacity over time. To reflect this, the effective capacity is introduced, which adjusts the base rate $r_i$ by incorporating machine availability. As defined by Hopp and Spearman [28], the effective capacity of a workstation is expressed as:

$$r_{e,i} = \frac{A_i\, m_i}{t_{0,i}}$$

where $A_i$ denotes the availability of the machine or workstation, $m_i$ is the number of parallel machines, and $t_{0,i}$ is the base processing time.

Availability $A$ quantifies the proportion of time a machine is operational and is calculated from the mean time to failure $m_f$ and the mean time to repair $m_r$ using:

$$A = \frac{m_f}{m_f + m_r}$$

Because $A$ depends only on the ratio of uptime to total time, it captures the long-run fraction of time available for processing. Frequent short failures and rare long ones with the same $A$ therefore yield the same effective capacity, although long outages add more variability to the effective process time (Hopp and Spearman [28], Chapter 8). Incorporating availability into the capacity calculation thus provides a more accurate representation of actual system performance under stochastic operating conditions.

In this context, $m_f$ denotes the mean time to failure (MTTF), the average duration a machine operates before a failure occurs, while $m_r$ represents the mean time to repair (MTTR), the average duration required to restore the machine to working condition. These two quantities determine the proportion of uptime and downtime in a stochastic production environment. For a more detailed discussion of these parameters and their application in capacity modeling, readers are referred to Chapter 8 of Hopp and Spearman [28].

This availability-adjusted formulation is employed to estimate the base and effective capacity of each workstation in the subscription production line. Except for the Compliance stage, most stages experience delays due to batching (e.g., Routing 1 and Routing 2), connectivity interruptions (e.g., two hours of daily network downtime in Data Entry), or setup times (e.g., Plastification).

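To compute availability and effective capacity, productive process time is treated as the mean time to failure ($m_f$), while delays, regardless of cause, are treated as the mean time to repair ($m_r$). For instance, in Routing 1, the workstation operates for 2 min before waiting 18 min for a batch to form, repeating this cycle. Availability for this stage is therefore:

$$A_{R1} = \frac{2}{2 + 18} = 0.10$$

The corresponding effective capacity is calculated as:

$$r_{e,R1} = \frac{A_{R1}\, m_{R1}}{t_{0,R1}} = \frac{0.10\, m_{R1}}{2\ \text{min}}$$

where $m_{R1}$ is the number of parallel Routing 1 stations. By treating batching and connectivity waits as if they were outages, this approach captures structural and operational inefficiencies across process stages in a single availability measure.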
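The availability logic can be applied stage by stage in a short Python sketch. Routing 1 uses the 2-min work / 18-min wait cycle from the text and an assumed single station (m = 1). The Data Entry availability of 0.75 is derived here from the 120 min of daily downtime per 480-min shift given earlier; it is not quoted from Table 7.

```python
def availability(uptime, downtime):
    """A = m_f / (m_f + m_r), with productive time as m_f and delays as m_r."""
    return uptime / (uptime + downtime)

def effective_rate(machines, t0, avail):
    """Effective capacity r_e = A * m / t0, in jobs per minute when t0 is in minutes."""
    return avail * machines / t0

# Routing 1: 2 min of work per 18 min of batch waiting; m = 1 is assumed
a_r1 = availability(2, 18)
# Data Entry: 1440 productive min out of 1920 machine-min (4 machines x 480 min, 480 min idle)
a_de = availability(1440, 480)
r_e = {"Routing 1": effective_rate(1, 2, a_r1), "Data Entry": effective_rate(4, 5, a_de)}
print(a_r1, a_de, r_e, min(r_e, key=r_e.get))
```

Under these assumptions Routing 1 delivers about 0.05 jobs per minute (3 per hour) against 0.6 for Data Entry, which is consistent with Routing 1 being identified as the bottleneck.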

Table 7 summarizes the calculated base and effective capacities for all workstations in the subscription production line.

The bottleneck in the production process is identified as Routing 1, with an effective capacity of , representing a critical constraint that substantially limits overall throughput. This stage operates considerably slower than the others, creating downstream delays throughout the process. In comparison, Data Entry (), Routing 2 (), and Plastification () demonstrate higher effective capacities but remain restricted by the low performance of Routing 1.

Although these stages exhibit better nominal capacities, their output is further limited by reduced availability. Routing 1 operates with only availability, making it the primary constraint. Routing 2 achieves , while Plastification maintains availability. Data Entry, despite its relatively higher capacity, is affected by network-related downtime, resulting in only availability.

Overall system performance is therefore constrained by the low capacity and limited availability of Routing 1. Even stages with higher capacities are unable to perform optimally due to these interdependencies. Increasing the availability and operational efficiency of Routing 1, followed by improvements in Routing 2 and Data Entry, is essential to enhance throughput and align production performance with customer expectations.

Value-Added vs. Non-Value-Added Times

In the production process, non–value-added (NVA) time refers to activities and delays that do not increase the product or service value from the customer’s perspective. These include:

Batch delays: Waiting for batch completion, as seen in Routing 1 and Routing 2.

Idle time: Periods with no active work, such as Data Entry downtime due to network interruptions.

Setup time: Time required to prepare equipment or machines between production cycles, such as plastification setup.

Non-value-added time, primarily from Routing 1 and Routing 2, amounts to , representing approximately of total process time. Necessary non–value-added time (NNVA), such as the Compliance stage, accounts for , which is required but does not directly add customer value. Value-added (VA) time—comprising Data Entry, Plastification, and Delivery—totals , or roughly of the total cycle.

This breakdown reveals considerable potential for improvement by targeting batching delays and idle periods, particularly in Routing 1 and Routing 2. Reducing these inefficiencies would streamline the workflow, increase the proportion of value-added activities, and significantly enhance overall process efficiency.

4.3.2. Pareto Analysis of Non-Value-Added Time

To quantify the impact of delays at each stage, the total non–value-added (NVA) time was analyzed using a Pareto approach.

Table 8 presents the contribution of each process stage to overall inefficiency. The analysis identifies Routing 2 (batch delays) and Routing 1 (batch delays) as the main sources of NVA time, together accounting for of total inefficiencies ( from Routing 2 and from Routing 1). These results show that batching is the principal cause of process delay, as units must wait for others to complete before moving to the next step.

Data Entry (idle time) contributes of total inefficiency, mainly due to network downtime causing work interruptions. Although its impact is smaller than that of Routing 1 and Routing 2, improving system reliability would further enhance efficiency. Plastification (setup time) contributes of total NVA time and represents a secondary but still measurable delay source.

The cumulative contribution illustrated in Figure 9 shows that addressing Routing 2 and Routing 1 can eliminate over of total non–value-added time. Prioritizing improvements in these stages will yield the greatest impact on throughput and process performance. Remaining inefficiencies, such as network-related idle time and setup delays, can then be addressed in later optimization efforts. This Pareto analysis provides a clear basis for setting priorities in the improvement phase.
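The Pareto ranking is straightforward to reproduce. The Python sketch below sorts stage contributions in descending order and accumulates their percentage shares; the minute values are hypothetical placeholders for illustration, not the figures in Table 8.

```python
def pareto_table(contributions):
    """Rows of (stage, minutes, share %, cumulative %), largest contribution first."""
    total = sum(contributions.values())
    rows, cumulative = [], 0.0
    for stage, minutes in sorted(contributions.items(), key=lambda kv: kv[1], reverse=True):
        share = 100.0 * minutes / total
        cumulative += share
        rows.append((stage, minutes, share, cumulative))
    return rows

# Hypothetical NVA minutes per unit, for illustration only
nva = {"Routing 1 (batch)": 9.0, "Routing 2 (batch)": 12.0,
       "Data Entry (idle)": 3.0, "Plastification (setup)": 1.0}
rows = pareto_table(nva)
for stage, minutes, share, cum in rows:
    print(f"{stage:<24}{minutes:>6.1f}{share:>7.1f}%{cum:>7.1f}%")
```

The cumulative column is what the Pareto line in a chart such as Figure 9 plots; the stages to the left of the point where it flattens are the improvement priorities.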

4.4. Improve

The Improve phase implements targeted actions to resolve bottlenecks and inefficiencies in the subscription production process. The main constraint is Routing 1, where batching delays significantly extend cycle time. The proposed improvements aim to remove batch-related waiting, automate data entry, optimize resource use, and introduce real-time monitoring and feedback mechanisms.

4.4.1. Proposed Improvements

Eliminate Batch Processing in Routing 1 and Routing 2

The current system groups units in batches of three in Routing 1 and Routing 2, creating unnecessary waiting time before each transfer. The proposed solution replaces batching with a single-piece flow, allowing each unit to move immediately to the next step once processed. This will reduce waiting time and increase throughput. Implementation will require workflow redesign, operator training for continuous flow, and real-time tracking of unit progress.

Automate Data Entry

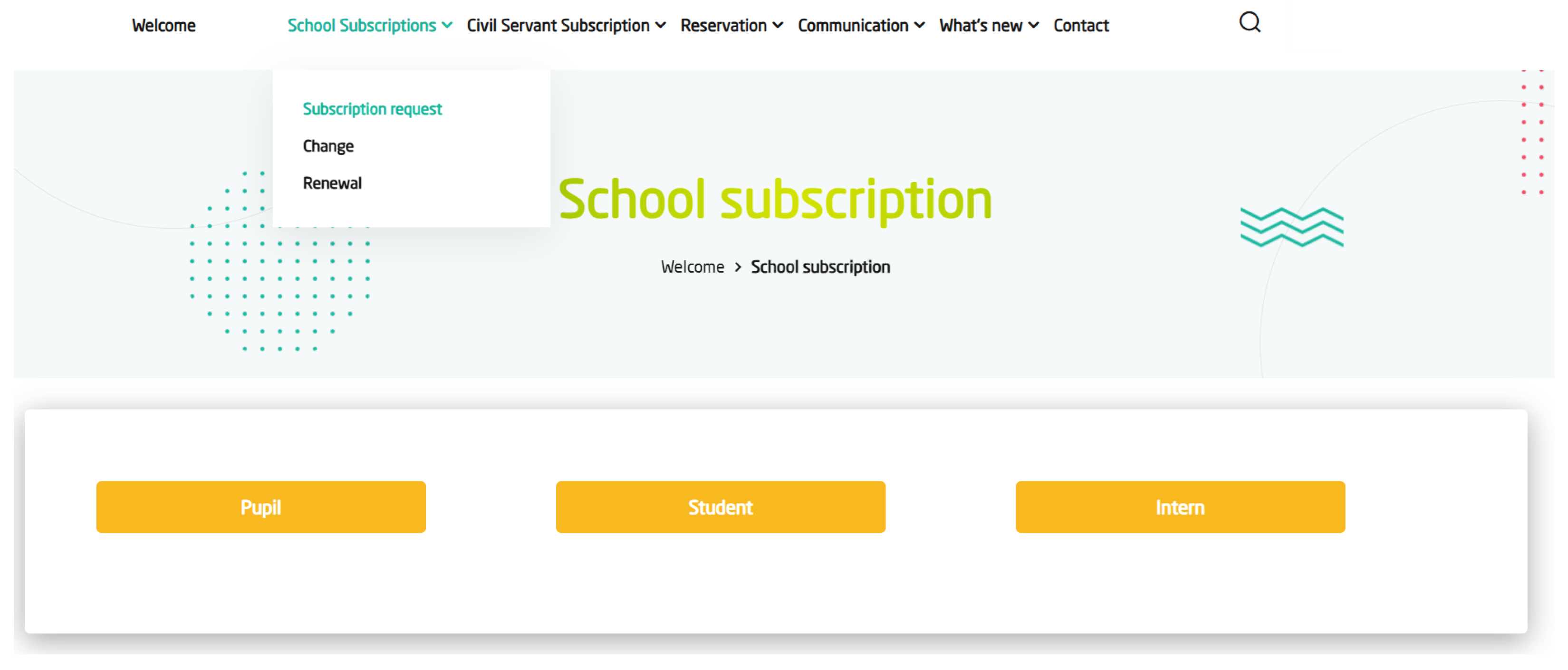

The manual data entry stage is error-prone and delayed by network interruptions. A proposed customer web portal will enable direct data entry with built-in validation to ensure accuracy and completeness (see Figure 10 and Figure 11). Automation will shorten processing time, improve data reliability, and reduce staff workload. The solution requires web development, system integration, and user training for both staff and customers. The new platform for school subscriptions has now been fully integrated into the company website.

Note that Figure 10 and Figure 11 show screenshots that were designed in Arabic and translated into English to facilitate readers’ understanding.

Enhance Resource Allocation

The current workforce structure includes unqualified personnel, such as bus drivers performing critical production tasks. To address this, the project recommends reallocating resources so that essential activities are handled only by appropriately skilled staff. This will require a skills assessment to identify competent agents, redesigning job roles to align with qualifications, and conducting targeted training on tasks such as data entry, compliance verification, and plastification. Improved role assignment and focused training will enhance process reliability, reduce errors, and strengthen overall performance.

Implement Real-Time Monitoring and Feedback

The process currently operates without adequate tracking of performance indicators, resulting in inefficiencies and delays. The proposed solution introduces a real-time monitoring system to track key metrics such as cycle time, error rate, and resource utilization. Collected data will support timely decision-making and continuous improvement through analytical feedback. Real-time visibility of operations will help maintain process stability and sustain performance gains.

4.4.2. Before-and-After Comparison of KPIs

The proposed improvements are anticipated to lead to measurable performance gains across key performance indicators (KPIs). As summarized in Table 9, notable enhancements are projected in several areas. Cycle time is projected to fall from 29 to 18 min per unit, indicating faster throughput and a more efficient process flow. The error rate is projected to drop from 5% to 0.9%, suggesting higher process reliability and improved quality consistency. Customer satisfaction is expected to rise from 65% to 90%, reflecting the positive influence of the proposed measures on perceived service quality. Throughput is expected to increase from 288 to 400 units per day, indicating greater productivity with the same resources. Collectively, these projections point to the strong potential of the proposed actions to enhance both efficiency and quality performance.
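The relative changes implied by Table 9 can be checked with a short calculation. The Python sketch below uses the before/after values reported in the table; the 38.9% throughput gain cited in the Conclusions follows directly from 288 to 400 units per day, and with the rounded Table 9 cycle times the reduction is about 37.9%.

```python
def pct_change(before, after):
    """Relative change in percent; negative values are reductions."""
    return 100 * (after - before) / before

kpis = {  # (before, after) as reported in Table 9
    "Cycle time (min/unit)": (29, 18),
    "Error rate (%)": (5, 0.9),
    "Customer satisfaction (%)": (65, 90),
    "Throughput (units/day)": (288, 400),
}
for name, (before, after) in kpis.items():
    print(f"{name}: {pct_change(before, after):+.1f}%")
```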

4.4.3. Addressing Resistance to Change and Resource Constraints

Successful implementation of these improvements depends on managing resistance to change and overcoming resource limitations. Transparent communication, focused training, and performance-based incentives will build acceptance and ensure staff readiness for new practices. To address resource constraints, the project will prioritize critical actions, allocate specific budgets, run pilot tests to validate feasibility, and encourage cross-departmental collaboration to maximize results.

4.5. Control Phase: Institutionalizing Improvements and Ensuring Sustainability

The Control phase ensures the long-term sustainability of the improvements achieved through the DMAIC process. Implementation will follow a structured, gradual approach—starting with the establishment of a robust control system, followed by continuous monitoring and refinement over a 6–12 month period.

4.5.1. Standardized Process Governance

A key focus of the Control phase is establishing standardized procedures across all stages of the subscription production process. This involves documenting each process step as a detailed Standard Operating Procedure (SOP) accessible to all operators through a digital knowledge base. Visual management tools, such as color-coded floor markings and defined quality checkpoints, will support process discipline. Abnormal conditions will be signaled through Andon lights, prompting immediate corrective action.

The data entry system will incorporate automated validation of critical process data to reduce human error. In addition, the manual tracking of subscriptions will be replaced by a barcode-based system, ensuring accurate routing and traceability.

4.5.2. Real-Time Process Monitoring

Real-time process monitoring will maintain stability and ensure that improvements are sustained. Key metrics, such as cycle time, will be continuously tracked using X̄-R control charts to identify variations beyond acceptable limits. Alerts will automatically notify supervisors when deviations occur, such as equipment parameters drifting from target values.
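The automatic alerts described above could be driven by standard control-chart tests. The Python sketch below flags two of them: a point beyond the control limits, and a run of nine consecutive points on the same side of the centre line (the default run length of Minitab's Test 2 and Nelson's second rule). The function name and example values are hypothetical, and a point lying exactly on the centre line resets the run, which is a simplifying choice. In practice the function would be fed live subgroup means and the limits from the baseline X̄ chart.

```python
def control_alerts(points, lcl, cl, ucl, run_length=9):
    """Return (index, reason) pairs for two common control-chart tests.

    Test 1: a point outside [lcl, ucl].
    Test 2: run_length or more consecutive points on the same side of cl.
    """
    alerts, run, last_side = [], 0, 0
    for i, x in enumerate(points):
        if x < lcl or x > ucl:
            alerts.append((i, "beyond control limits"))
        side = (x > cl) - (x < cl)          # +1 above, -1 below, 0 on the centre line
        run = run + 1 if side != 0 and side == last_side else (1 if side != 0 else 0)
        last_side = side
        if run >= run_length:
            alerts.append((i, "run on one side of centre line"))
    return alerts

# Hypothetical subgroup means (min) against hypothetical limits
alerts = control_alerts([27, 36, 29, 29, 29, 29, 29, 29, 29, 29, 29], lcl=21, cl=28, ucl=35)
print(alerts)
```

The run test catches a sustained shift in the mean, such as a drift after a staffing change, before any single point crosses a control limit.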

Monthly capability studies will confirm that the process maintains a long-term for critical parameters like cycle time and error rate. This continuous evaluation will ensure the process remains stable, capable, and aligned with performance goals.

4.5.3. Sustained Cultural Adoption

Long-term success depends on embedding continuous improvement into the organization’s culture. Daily ownership of process performance will be promoted at all levels. Frontline operators will conduct daily 5S audits to maintain workplace organization, while leadership will perform weekly Gemba walks to verify adherence to SOPs and identify improvement opportunities.

Quarterly executive reviews will evaluate performance using balanced scorecards that track financial, operational, and customer indicators. A continuous improvement (Kaizen) pipeline will also be established to encourage employees to submit improvement ideas, which will be reviewed and prioritized by a cross-functional team.

4.5.4. Risk-Mitigated Transition

During implementation, potential disruptions such as IT downtime or equipment failure will be managed through a risk-mitigated transition plan. Backup systems and cross-trained staff will be in place to maintain process continuity if unforeseen disruptions occur.

4.5.5. Audits and Monitoring

Periodic audits will be conducted according to ISO 9001:2015 [29] and ISO 19011:2018 [30] to maintain compliance and ensure process integrity. In line with Clauses 9.2 (internal audit) and 9.3 (management review) of ISO 9001:2015, internal audits and management reviews will evaluate implemented changes to verify their effectiveness and conformity to planned arrangements.

The audit framework will help institutionalize improvements across departments. Key indicators—cycle time, error rate, and customer satisfaction—will be continuously monitored during audits to ensure performance remains within acceptable limits. Any deviation will trigger prompt corrective action to eliminate root causes and maintain process control.
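For the error-rate indicator, which is a proportion rather than a continuous measurement, one common option (not specified in the text) is a p-chart, whose limits are p̄ ± 3√(p̄(1 − p̄)/n). The sketch below, with hypothetical counts and function names, derives p̄ from baseline audit samples and reports whether a new sample falls outside the limits and should therefore trigger corrective action.

```python
from math import sqrt


def baseline_pbar(errors, sample_sizes):
    """Average proportion of erroneous transactions across baseline samples."""
    return sum(errors) / sum(sample_sizes)


def p_limits(pbar, n):
    """3-sigma p-chart limits for a sample of n transactions, clipped to [0, 1]."""
    half_width = 3 * sqrt(pbar * (1 - pbar) / n)
    return max(0.0, pbar - half_width), min(1.0, pbar + half_width)


def needs_corrective_action(pbar, errors, n):
    """True when the sample's error proportion lies outside the control limits."""
    lcl, ucl = p_limits(pbar, n)
    return not (lcl <= errors / n <= ucl)


# Hypothetical audit data: errors found in four baseline samples of 100 subscriptions each.
pbar = baseline_pbar([4, 3, 5, 4], [100, 100, 100, 100])  # 0.04
print(needs_corrective_action(pbar, 12, 100))  # True: 12% exceeds the upper limit
print(needs_corrective_action(pbar, 5, 100))   # False
```

A sample below the lower limit would also be flagged; in that case the signal points to an improvement worth investigating and standardizing rather than a fault.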

5. Conclusions

This study applied the Lean Six Sigma (LSS) methodology to optimize the subscription production process in a governmental transportation company, showing that structured quality frameworks can be effectively adapted to public-sector administration. Using the DMAIC (Define, Measure, Analyze, Improve, Control) approach, the research identified major inefficiencies, validated them statistically, and implemented sustainable solutions that enhanced efficiency, accuracy, and customer satisfaction.

The Define and Measure phases exposed the core issues of batching delays, redundant data handling, and manual workflows, which caused instability and waste. Measurement system analysis (MSA) confirmed data reliability, while ANOVA and capability analysis quantified the performance gaps, showing that non-value-added activities represented 38.7% of total cycle time. In the Improve phase, introducing one-piece flow, automating data entry, and reallocating resources reduced cycle time by 37.5% and process errors by 80%, and increased customer satisfaction to 90%, with throughput gains of 38.9% and annual cost savings of nearly 20%. The Control phase institutionalized these improvements through standard operating procedures (SOPs), periodic capability reviews, and real-time monitoring aligned with ISO 9001:2015 and ISO 19011:2018. Clarifying the relationships among availability, effective capacity, and process variability reinforced the theoretical interpretation of these results, while evidence-based governance, transparency, and continuous improvement underpin their lasting impact.

A comparative reflection with previous research, notably the work of [23], underscores the methodological advancement achieved in this study. Whereas earlier studies primarily emphasized safety performance and relied on descriptive tools, the present research integrated advanced statistical validation, real-time measurement, and digital process automation. This broader analytical scope strengthened the reliability of the findings and positioned the study at the intersection of operational excellence, digital transformation, and sustainable governance. The inclusion of ISO-based control standards is a further enhancement, ensuring that improvement outcomes are both auditable and institutionally anchored.

From a practical perspective, this research provides a replicable and scalable framework for governmental organizations seeking to modernize administrative workflows and achieve higher service quality. It demonstrates that public institutions, often constrained by bureaucratic structures, can attain private-sector levels of efficiency when they adopt structured, data-driven improvement frameworks. The LSS-based approach introduced here helps managers align process performance with strategic objectives while promoting a culture of continuous improvement and accountability across departments.

From a societal perspective, the achieved improvements create measurable public value. Faster and more accurate subscription processing enhances accessibility for citizens—especially students and families—while improved transparency reinforces institutional credibility. These outcomes promote sustainable governance through efficient resource utilization, digital transformation, and inclusive service delivery.

The study highlights Lean Six Sigma as a catalyst for governance modernization and social sustainability in public services. It bridges operational excellence with citizen trust, demonstrating how data-driven continuous improvement strengthens accountability, transparency, and resilience in public institutions.

Future research could expand upon this foundation by integrating predictive analytics, artificial intelligence, and machine learning to anticipate demand fluctuations and optimize service allocation in real time. Extending this methodology to other areas such as fleet maintenance, human resource management, or customer service could further enhance organizational performance and citizen satisfaction. Additionally, longitudinal studies could assess the long-term impact of LSS-driven reforms on institutional agility, environmental sustainability, and public trust.