NARX Neural Network for Safe Human–Robot Collaboration Using Only Joint Position Sensor

Abstract

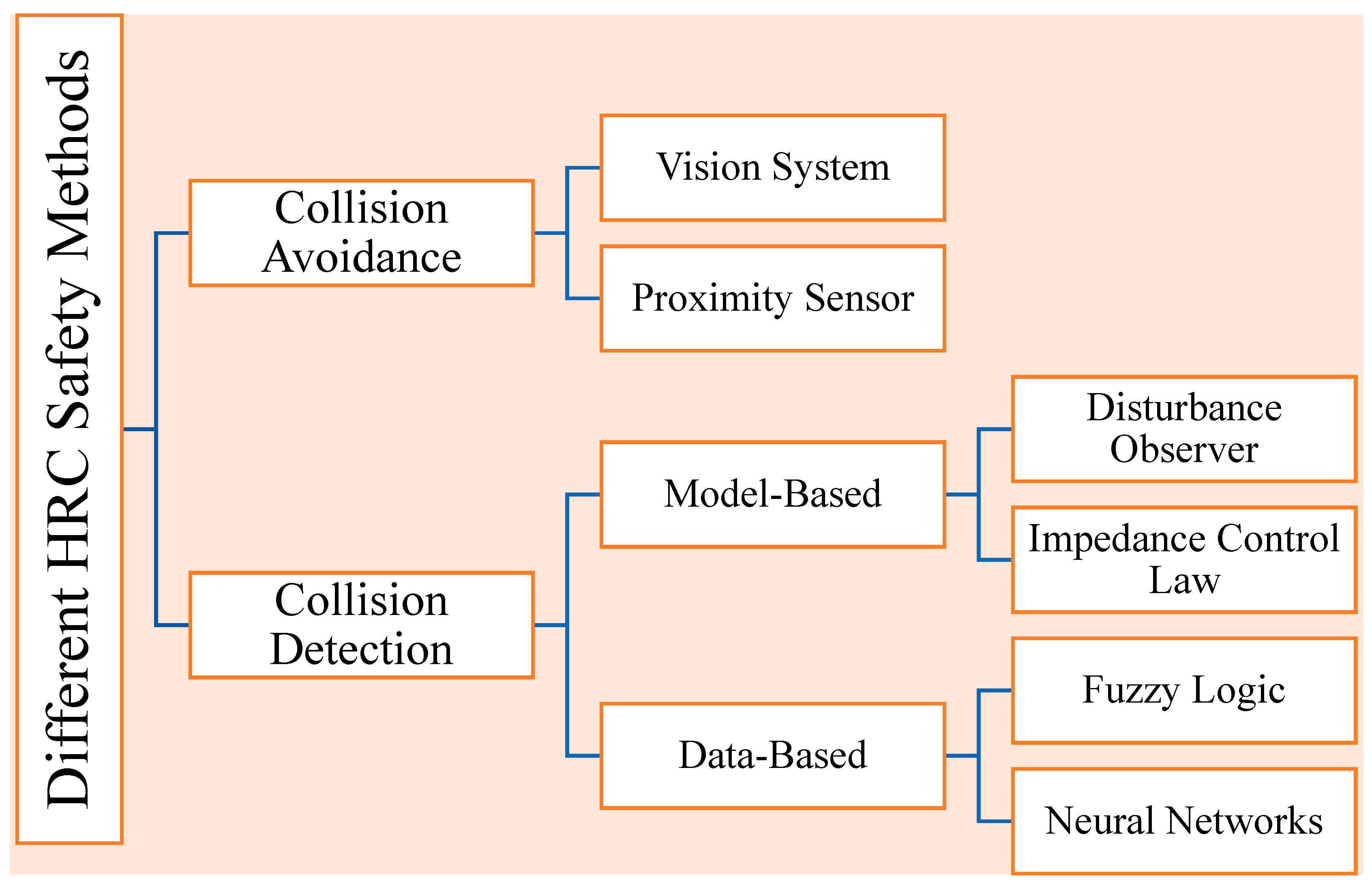

1. Introduction

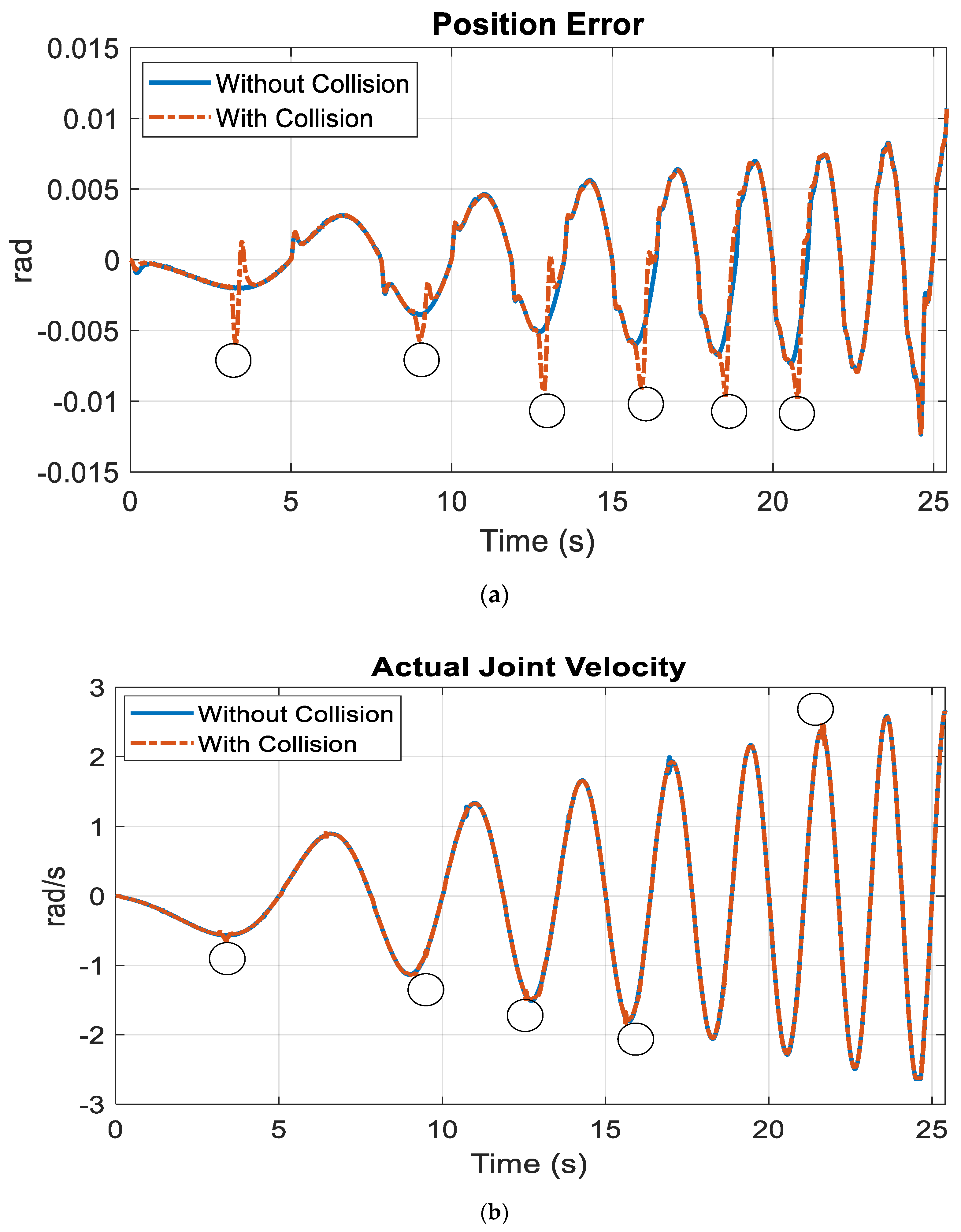

2. Dynamics of Manipulator Joints

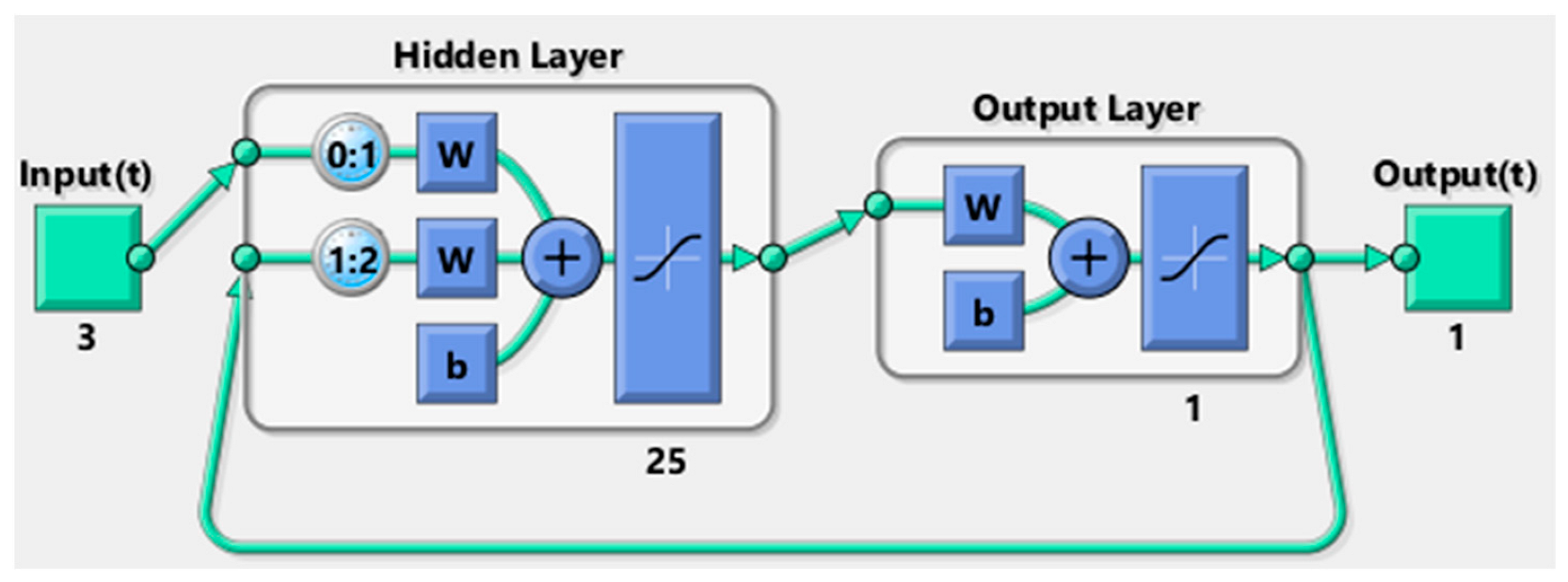

3. NARXNN Design

- (1) The current position error of the joint, representing the difference between the desired and the actual joint position;

- (2) The previous position error of the joint;

- (3) The actual velocity of the joint.
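The three inputs listed above can be assembled from sampled joint data, as in the following minimal sketch for a single joint. The names `NarxInputs` and `build_inputs`, the sampling period `dt`, the sign convention (desired minus actual), and the backward-difference velocity are all assumptions made for illustration; they are not taken from the paper, and a velocity signal supplied by the robot controller could be used instead.

```python
from dataclasses import dataclass


@dataclass
class NarxInputs:
    """The three network inputs for one joint at one time step (hypothetical container)."""
    current_error: float   # desired minus actual position at step k (assumed sign)
    previous_error: float  # desired minus actual position at step k-1
    velocity: float        # actual joint velocity at step k


def build_inputs(desired, actual, dt):
    """Build one NarxInputs per time step k >= 1 for a single joint.

    Assumption: velocity is approximated by a backward difference of the
    measured position over the sampling period dt.
    """
    if len(desired) != len(actual):
        raise ValueError("desired and actual must have the same length")
    if dt <= 0:
        raise ValueError("dt must be positive")
    samples = []
    for k in range(1, len(actual)):
        samples.append(
            NarxInputs(
                current_error=desired[k] - actual[k],
                previous_error=desired[k - 1] - actual[k - 1],
                velocity=(actual[k] - actual[k - 1]) / dt,
            )
        )
    return samples
```

Because the previous error needs one step of history, a trajectory of `n` samples yields `n - 1` input vectors.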

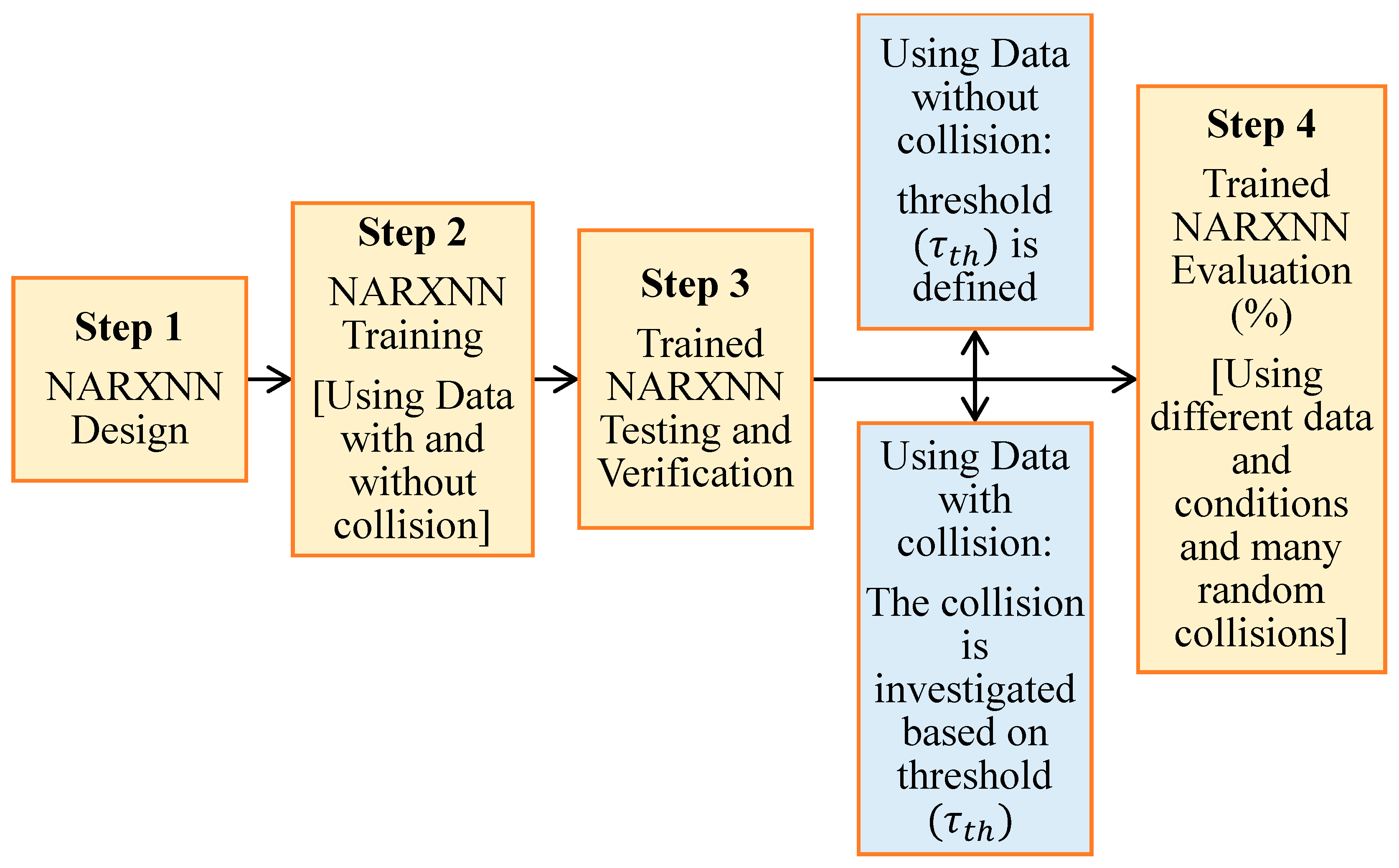

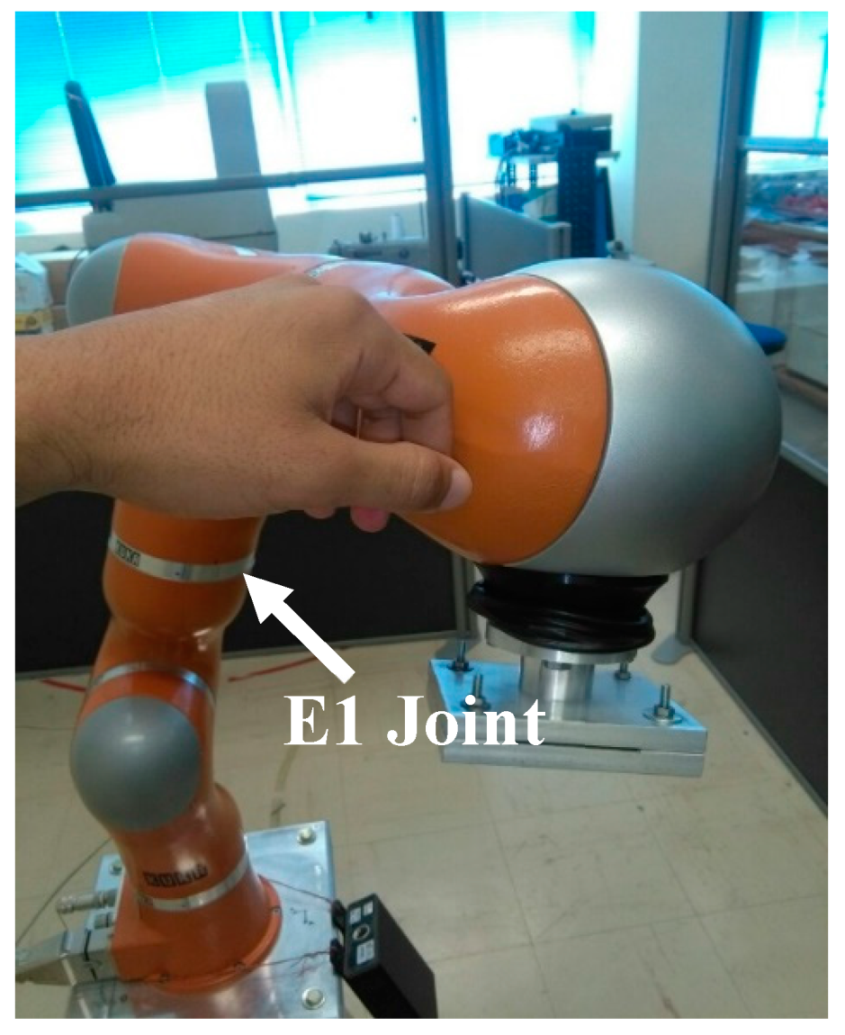

4. NARXNN Training Then Testing

- (1)

- Collect the data with and without collisions from the experiments with the KUKA LWR robot.

- (2)

- Initialize the parameters of the NARXNN and select the suitable number of hidden neurons.

- (3)

- Train the designed NARXNN, using the collected data with and without collisions.

- (4)

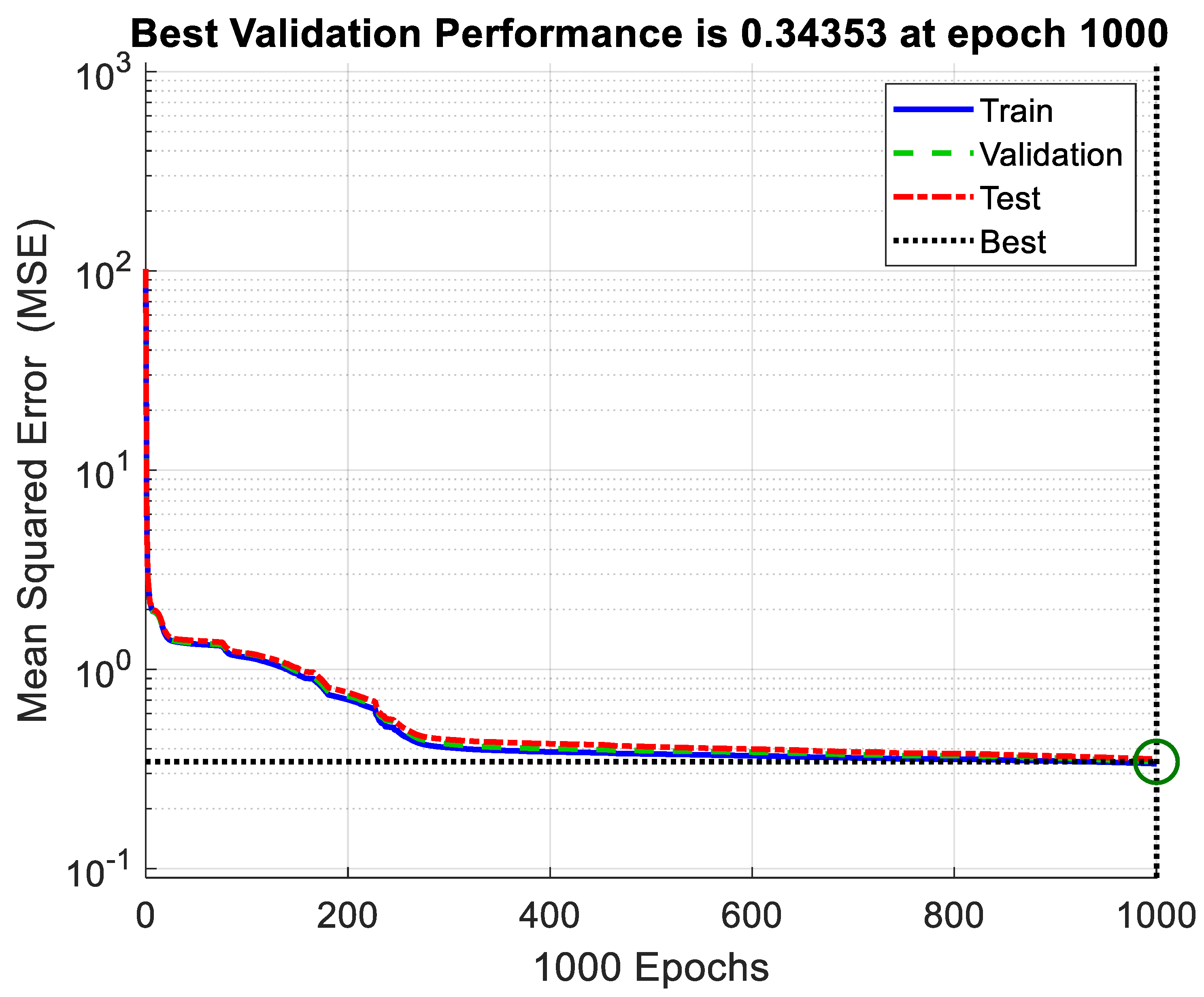

- After the training is completed, check the performance of the NARXNN by investigating the resulting MSE.

- (5)

- If the resulting MSE is a high value and is not satisfactory, go again to step 2.

- (6)

- If the resulting MSE is very small and close to zero (satisfactory), perform the following:

- ➢

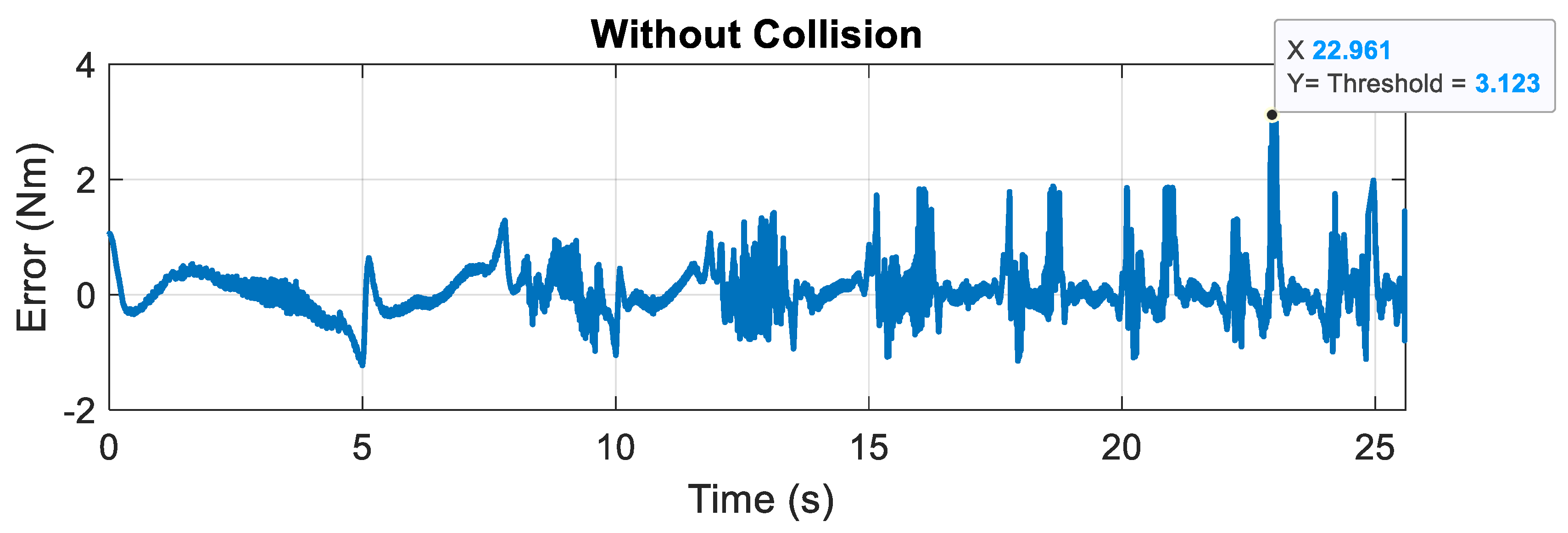

- 6.1 Test the trained NARXNN by using the data without collisions that is used for training and check the training/approximation error.

- ➢

- 6.2 If this training/approximation error is a low value and is satisfactory, calculate the collision threshold and then go to step 7.

- ➢

- 6.3 If this training/approximation error is a high value and is not satisfactory, go again to step 2.

- (7)

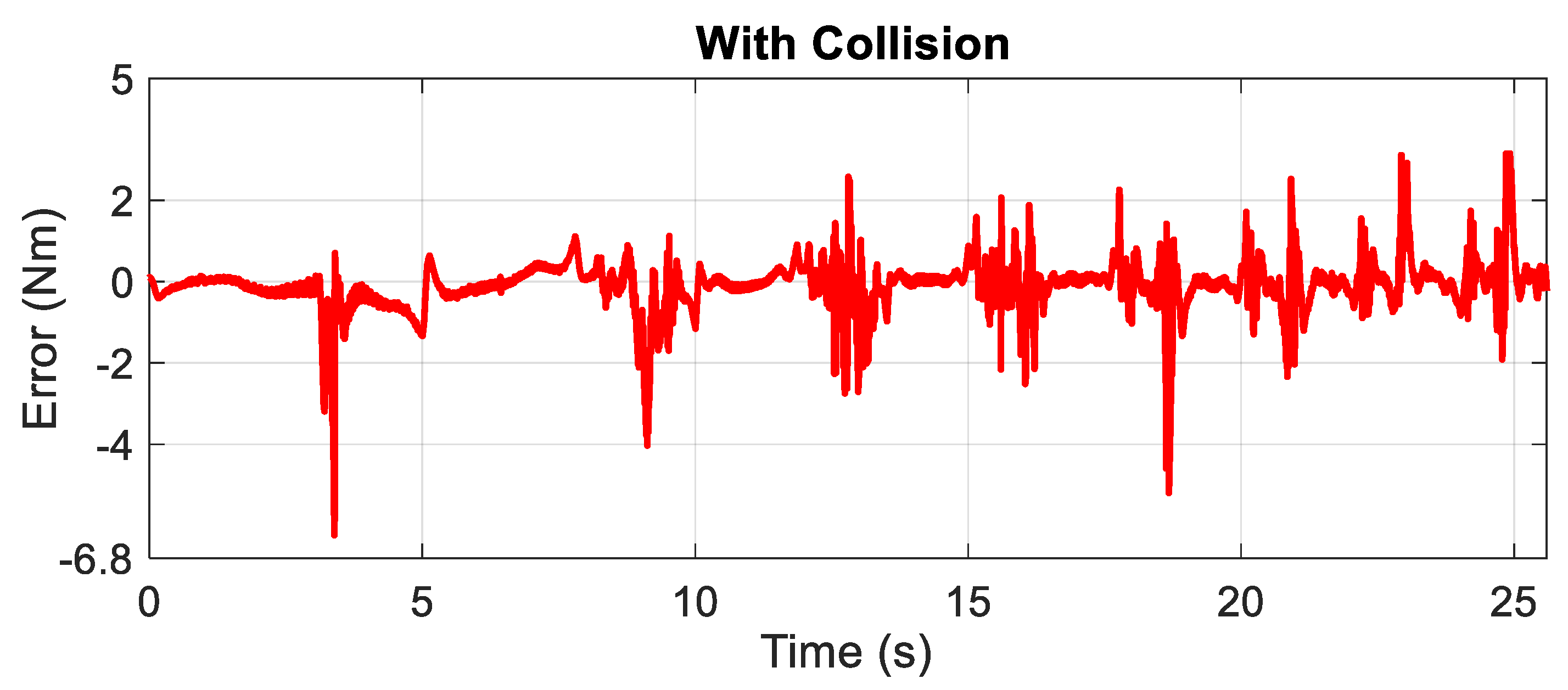

- Test the trained NARXNN using the data with collision that is used for the training process and check the collisions using the determined collision threshold.

- (8)

- Check the effectiveness (%) of the trained NARXNN by performing many random different collisions with the robot based on the determined collision threshold.
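The control flow of steps 2 to 5, 6.2, and 7 can be sketched as follows. This is only an illustration under stated assumptions: `train_fn` is a hypothetical callable standing in for the actual training routine, `mse_limit` is a hypothetical acceptance level, and the threshold rule (largest absolute approximation error observed in collision-free motion) is an assumed choice, since the procedure above does not state how the threshold is calculated.

```python
def train_until_satisfactory(train_fn, hidden_sizes, mse_limit):
    """Steps 2-5: try hidden-layer sizes until the training MSE is acceptable.

    train_fn is a hypothetical callable: hidden_neurons -> (model, mse).
    Returns (model, hidden_neurons, mse), or None if no size was acceptable.
    """
    for n in hidden_sizes:
        model, mse = train_fn(n)
        if mse <= mse_limit:
            return model, n, mse
    return None


def collision_threshold(free_motion_errors):
    """Step 6.2, assumed rule: largest absolute error in collision-free motion."""
    if not free_motion_errors:
        raise ValueError("need at least one error sample")
    return max(abs(e) for e in free_motion_errors)


def detect_collisions(estimates, threshold):
    """Step 7: flag every sample whose absolute network output exceeds the threshold."""
    return [abs(x) > threshold for x in estimates]
```

Keeping the threshold computation separate from training makes it easy to recompute the threshold on new collision-free recordings without retraining the network.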

- (1) The number of hidden neurons was 25.

- (2) The number of iterations/repetitions was 1000.

- (3) The lowest MSE was 0.34353. The equation used for calculating this MSE is given as follows:

- (4) The training time was 34 min and 28 s. This time is not critical, because the main aim is to obtain a well-trained NARXNN that can efficiently detect and identify the robot’s collisions with humans. In addition, the training is performed offline.
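Item (3) refers to the equation used to compute the MSE. In its standard form, written here with assumed symbols ($N$ training samples, $y_i$ the target output and $\hat{y}_i$ the network output for sample $i$), the mean squared error is:

```latex
\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^{2}
```

The symbols are placeholders and may differ from the authors' notation.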

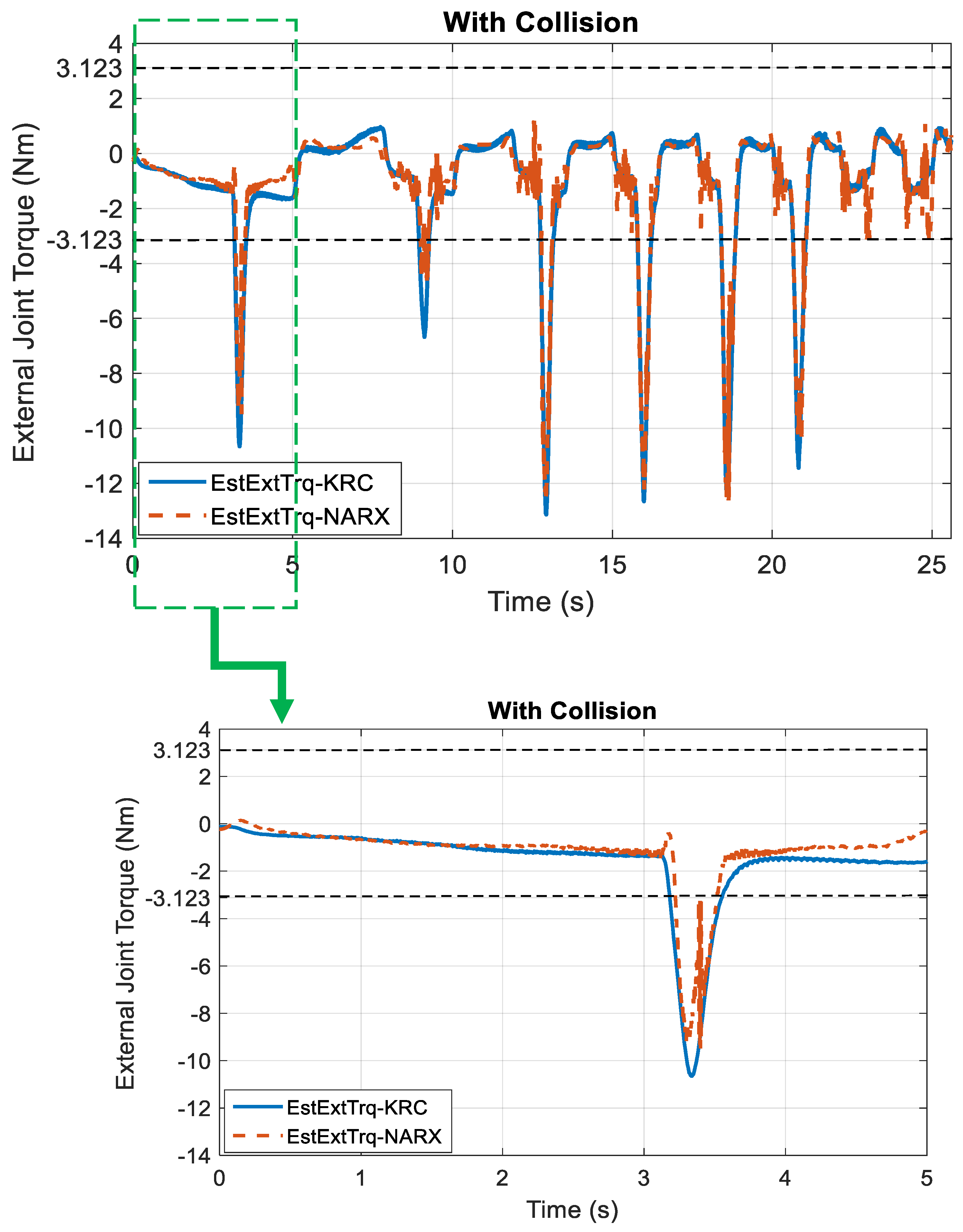

5. NARXNN Evaluation and Effectiveness

- (1) Correctly detected collisions are represented as true positives (TP).

- (2) Actual collisions not detected by the NARXNN are represented as false negatives (FN).

- (3) Alerts of collisions raised by the trained NARXNN when no actual collision occurs are represented as false positives (FP).
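The three outcome classes above can be counted from a log of trials, as in this minimal sketch. The function name `tally` and the representation of a trial as a `(collision_occurred, alarm_raised)` pair are assumptions for illustration. The fourth class, TN (no collision and no alert), is included because it appears in the effectiveness table.

```python
from collections import Counter


def tally(trials):
    """Count TP, FN, FP, and TN from (collision_occurred, alarm_raised) pairs."""
    counts = Counter({"TP": 0, "FN": 0, "FP": 0, "TN": 0})
    for collided, alarmed in trials:
        if collided and alarmed:
            counts["TP"] += 1   # collision happened and was detected
        elif collided:
            counts["FN"] += 1   # collision happened but was missed
        elif alarmed:
            counts["FP"] += 1   # alert raised without a collision
        else:
            counts["TN"] += 1   # no collision and no alert
    return dict(counts)
```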

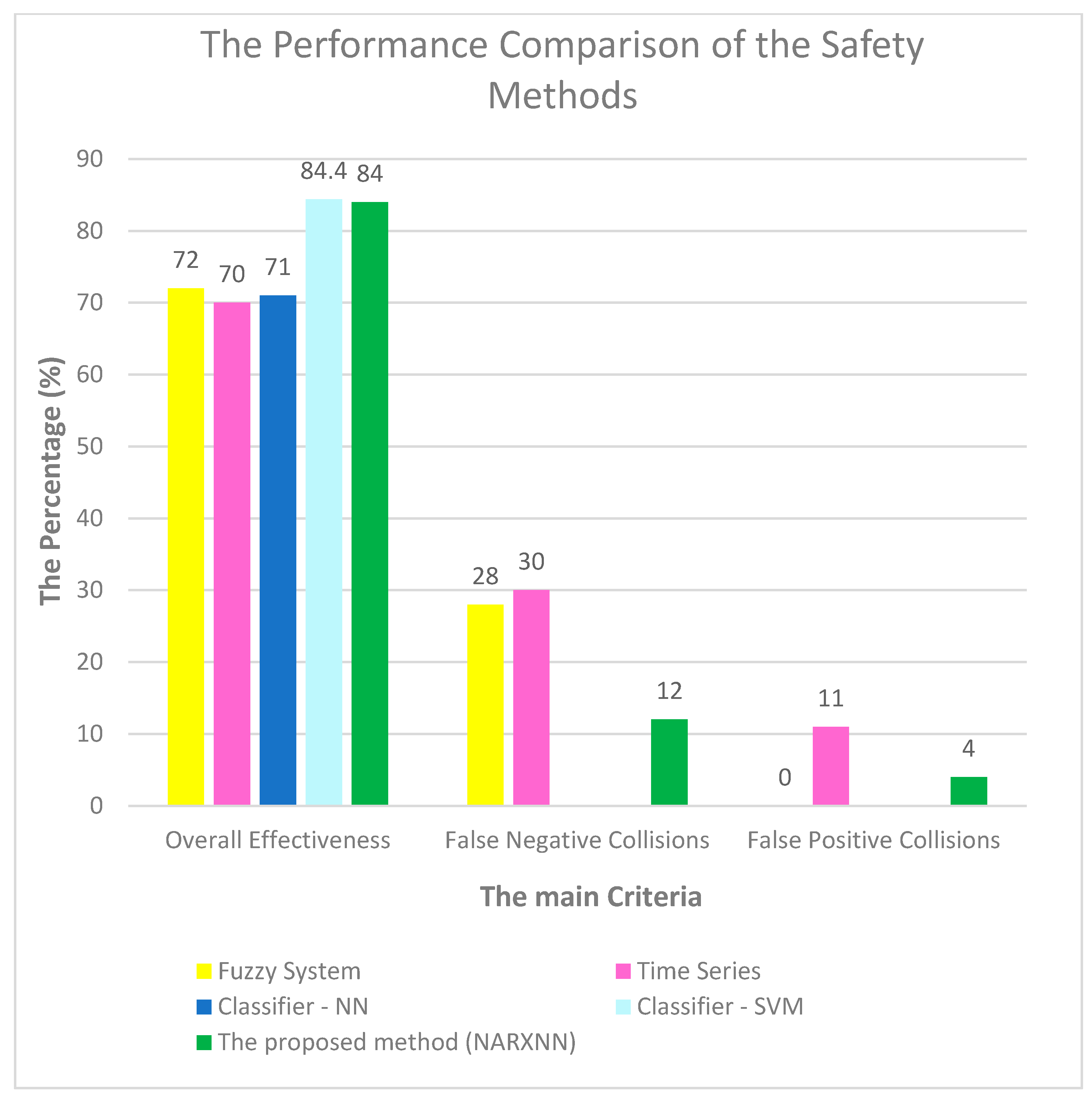

6. Quantitative and Qualitative Comparisons

- MLFFNN-1;

- MLFFNN-2;

- CFNN;

- RNN.

7. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Symbol | Meaning |
|---|---|
| | A vector representing the positions of the manipulator joints |
| | A vector representing the velocities of the manipulator joints |
| | A vector representing the accelerations of the manipulator joints |
| | The inertia matrix of the manipulator |
| | The Coriolis and centrifugal matrix of the manipulator |
| | The gravity vector of the manipulator |
| | The actuator torque of the manipulator joints |
| | The number of the links or the joints of the manipulator |

The Trained NARXNN Effectiveness

| Parameter | Number | Percentage (%) |
|---|---|---|
| TN | 25 | 100 |
| TP | 22 | 88 |
| FN | 3 | 12 |
| FP | 1 | 4 |
| Overall effectiveness | 84% | |

| Parameter | MLFFNN-1 | MLFFNN-2 | CFNN | RNN | NARXNN |
|---|---|---|---|---|---|
| Layers | 3 | 4 | 3 | 3 | 3 |
| Main inputs | , , , , | , | , | , | |
| Hidden neurons | 90 | 35 in the first hidden layer and 35 in the second hidden layer | 35 | 20 | 25 |
| Epochs/repetitions | 932 | 1000 | 952 | 906 | 1000 |
| Smallest MSE | 0.040644 | 0.21682 | 0.392 | 0.43078 | 0.34353 |
| Training time | 29 min and 47 s | 1 h, 53 min, and 18 s | 4 min and 24 s | 4 h, 41 min, and 53 s | 34 min and 28 s |
| Mean absolute approximation error (contact-free motion) | 0.0955 Nm | 0.2362 Nm | 0.2992 Nm | 0.3061 Nm | 0.2759 Nm |
| Mean absolute approximation error (collision) | 0.1398 Nm | 0.2779 Nm | 0.4365 Nm | 0.4456 Nm | 0.3965 Nm |
| Collision threshold | 1.6815 Nm | 2.7423 Nm | 3.4520 Nm | 3.7500 Nm | 3.123 Nm |
| FP collisions | 8% | 4% | 0% | 0% | 4% |
| FN collisions | 16% | 16% | 16% | 20% | 12% |
| Overall effectiveness | 76% | 80% | 84% | 80% | 84% |
| Application | Used in robots with torque sensors. | Used with any conventional robot. | Used with any conventional robot. | Used with any conventional robot. | Used with any conventional robot. |
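The overall effectiveness values reported in the comparison are consistent with subtracting the FP and FN percentages from 100%. This relation is inferred from the tabulated numbers rather than quoted from the text, so the sketch below should be read as a consistency check under that assumption; the names `REPORTED`, `overall_effectiveness`, and `check_table` are illustrative.

```python
# Reported FP %, FN %, and overall effectiveness % for each network (from the table).
REPORTED = {
    "MLFFNN-1": (8, 16, 76),
    "MLFFNN-2": (4, 16, 80),
    "CFNN": (0, 16, 84),
    "RNN": (0, 20, 80),
    "NARXNN": (4, 12, 84),
}


def overall_effectiveness(fp_pct, fn_pct):
    """Inferred relation: effectiveness = 100 % minus FP % minus FN %."""
    return 100 - fp_pct - fn_pct


def check_table(rows=REPORTED):
    """Return the networks whose reported value differs from the inferred one."""
    return [name for name, (fp, fn, eff) in rows.items()
            if overall_effectiveness(fp, fn) != eff]
```

Under this relation the NARXNN and the CFNN share the highest overall effectiveness (84%), with the NARXNN showing the lowest FN rate (12%).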

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sharkawy, A.-N.; Ali, M.M. NARX Neural Network for Safe Human–Robot Collaboration Using Only Joint Position Sensor. Logistics 2022, 6, 75. https://doi.org/10.3390/logistics6040075
