Computer Vision-Based Deep Learning Modeling for Salmon Part Segmentation and Defect Identification

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Preparation

2.2. Image Preprocessing

2.3. Data Set Construction

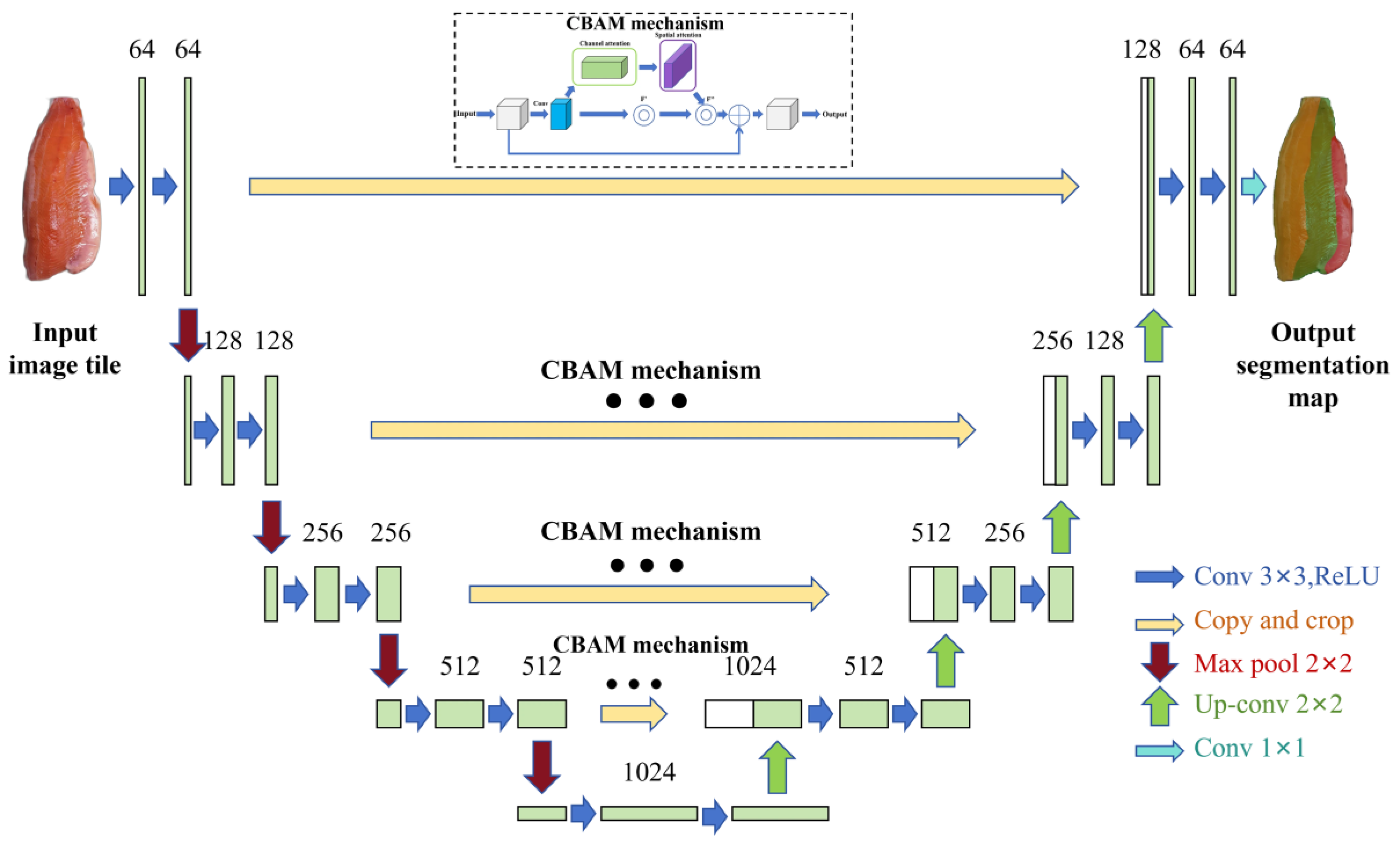

2.4. Part Identification Segmentation Model Based on Improved U-Net

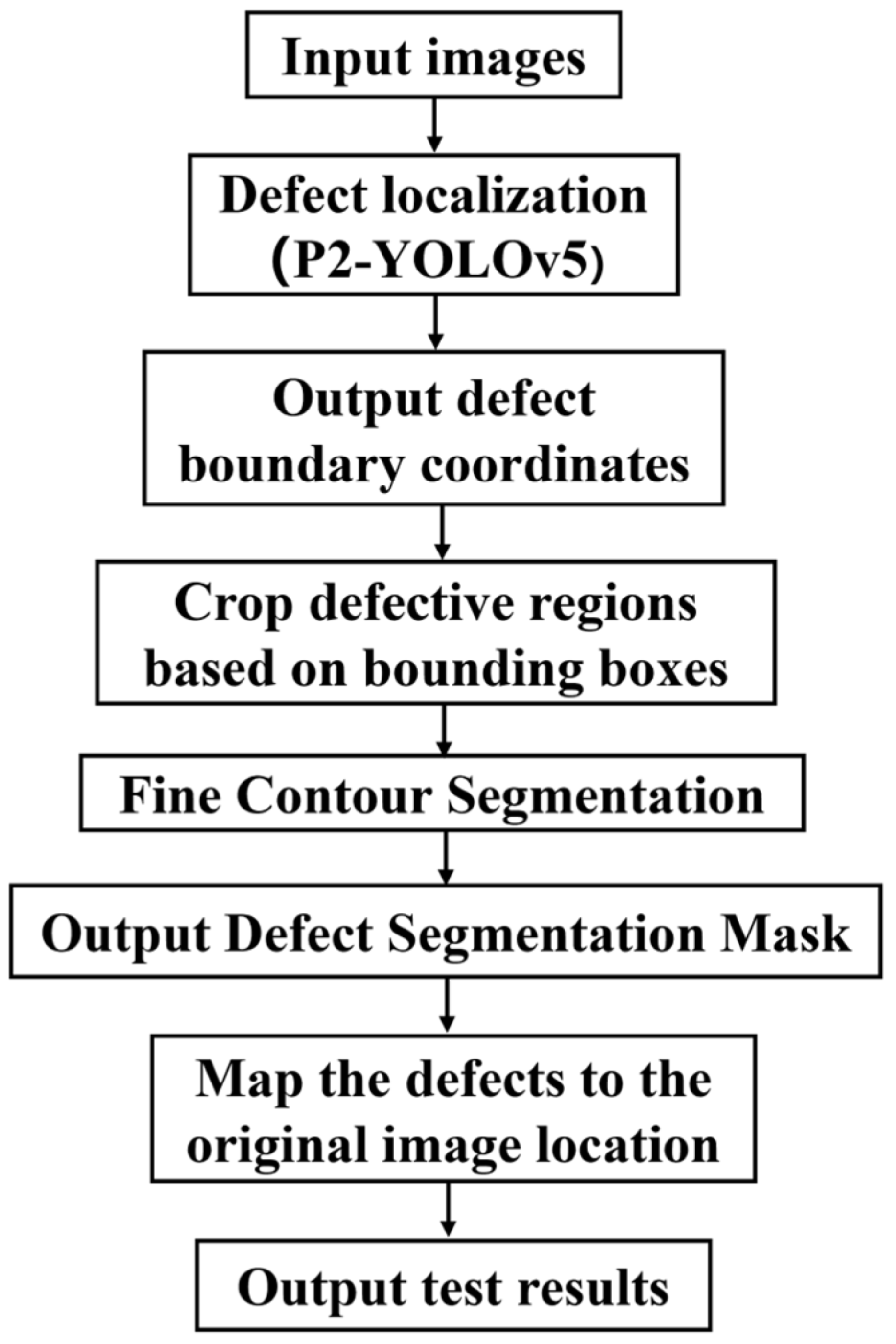

2.5. Two-Stage Defect Detection Model

2.5.1. Two-Stage Defect Detection Model Architecture

2.5.2. Original YOLOv5 Network Structure

2.5.3. Introduction of Small Target Detection Layer

2.6. Evaluation Indicators

3. Results

3.1. Image Preprocessing Effects

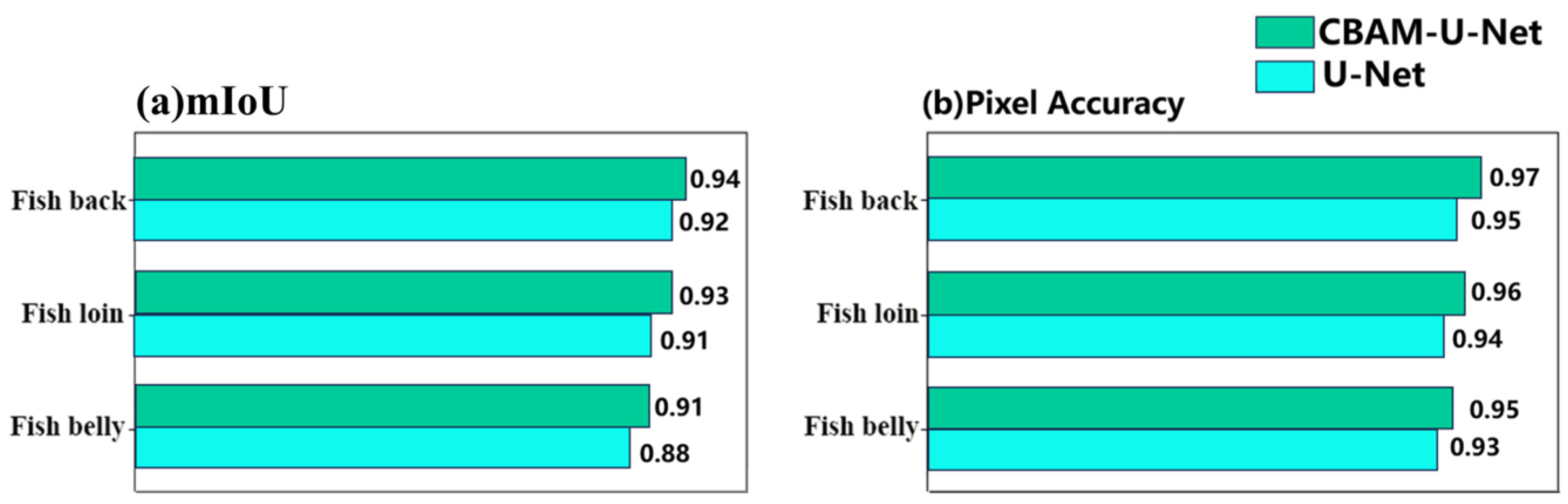

3.2. Part Identification Segmentation Model

3.3. Two-Stage Defect Detection Modeling

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset Purpose | Model(s) Used | Total Images | Training Set | Validation Set | Test Set |
|---|---|---|---|---|---|
| Background Removal | U-Net | 1529 | 1223 | 153 | 153 |
| Part Segmentation | CBAM-U-Net | 489 | 391 | 49 | 49 |
| Defect Detection | YOLOv5, P2-YOLOv5 | 424 | 339 | 43 | 42 |
| Defect Segmentation | Two-stage Model | 616 | 493 | 61 | 62 |
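
The subset sizes in the table above can be checked arithmetically. The following minimal sketch copies the counts from the table and computes each subset's share of the total; the names `DATASETS` and `split_fractions` are illustrative and do not come from the paper. The resulting shares are close to 8:1:1, which is an observation drawn from the numbers rather than a ratio stated in this extract.

```python
# Image counts copied from the dataset table: (total, train, validation, test).
DATASETS = {
    "Background Removal": (1529, 1223, 153, 153),
    "Part Segmentation": (489, 391, 49, 49),
    "Defect Detection": (424, 339, 43, 42),
    "Defect Segmentation": (616, 493, 61, 62),
}


def split_fractions(total, train, val, test):
    """Return the (train, val, test) shares of a dataset as fractions of total."""
    if train + val + test != total:
        raise ValueError("subset sizes do not add up to the total")
    return train / total, val / total, test / total


for name, counts in DATASETS.items():
    train_f, val_f, test_f = split_fractions(*counts)
    print(f"{name}: train={train_f:.3f} val={val_f:.3f} test={test_f:.3f}")
```

The `ValueError` guard confirms that every row sums to its stated total before any share is reported.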

| Model | mAP/% | mIoU/% | fps |
|---|---|---|---|
| U-Net | 94.43 | 93.27 | 2.97 |
| CBAM-U-Net | 96.87 | 94.33 | 3.13 |
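
For readers unfamiliar with the mIoU column, the sketch below shows the usual definition of mean intersection-over-union on flattened per-pixel class labels. It is an assumption that the paper uses this standard form, since its exact formula is not shown in this extract; the function name `mean_iou` and the toy labels are hypothetical.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over the classes that occur in the prediction or the ground truth.

    pred and target are equal-length sequences of integer class labels,
    one per pixel (an image mask flattened to 1-D).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:  # skip classes absent from both masks
            ious.append(inter / union)
    if not ious:
        raise ValueError("no class is present in either mask")
    return sum(ious) / len(ious)


# Toy example with three classes and six pixels.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
print(f"mIoU = {mean_iou(pred, target, 3):.3f}")
```

In the toy example the per-class IoU values are 1/3, 2/3, and 1/2, so the mean is 0.5.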

| Model | mAP/% | fps |
|---|---|---|
| U-Net | 62.50 | 2.15 |
| YOLOv5 | 80.25 | 57.50 |
| CBAM-U-Net | 66.26 | 2.17 |
| P2-YOLOv5 | 84.12 | 58.82 |
| Two-stage model | 94.28 | 7.30 |
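
The accuracy and speed trade-off in the table above is easier to read as per-image latency and mAP differences. The sketch below derives both from the tabulated values only; `RESULTS`, `latency_ms`, and `map_gain` are illustrative names, and the latency figure assumes that fps was measured as images processed per second.

```python
# (mAP in %, frames per second) copied from the results table above.
RESULTS = {
    "U-Net": (62.50, 2.15),
    "YOLOv5": (80.25, 57.50),
    "CBAM-U-Net": (66.26, 2.17),
    "P2-YOLOv5": (84.12, 58.82),
    "Two-stage model": (94.28, 7.30),
}


def latency_ms(fps):
    """Convert a throughput in frames per second to milliseconds per image."""
    return 1000.0 / fps


def map_gain(model, baseline):
    """mAP difference in percentage points between a model and a baseline."""
    return round(RESULTS[model][0] - RESULTS[baseline][0], 2)


for model, (m_ap, fps) in RESULTS.items():
    print(f"{model}: mAP={m_ap:.2f}% latency={latency_ms(fps):.1f} ms/image")

print("P2-YOLOv5 vs YOLOv5:", map_gain("P2-YOLOv5", "YOLOv5"), "points")
print("Two-stage vs P2-YOLOv5:", map_gain("Two-stage model", "P2-YOLOv5"), "points")
```

On these figures the two-stage model gains about 10 mAP points over P2-YOLOv5 while its per-image time rises from roughly 17 ms to roughly 137 ms.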

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Zhao, Y.; Yang, W.; Gao, L.; Zhang, W.; Liu, Y.; Zhang, X.; Wang, H. Computer Vision-Based Deep Learning Modeling for Salmon Part Segmentation and Defect Identification. Foods 2025, 14, 3529. https://doi.org/10.3390/foods14203529
