Identifying the “Dangshan” Physiological Disease of Pear Woolliness Response via Feature-Level Fusion of Near-Infrared Spectroscopy and Visual RGB Image

Abstract

1. Introduction

2. Materials and Methods

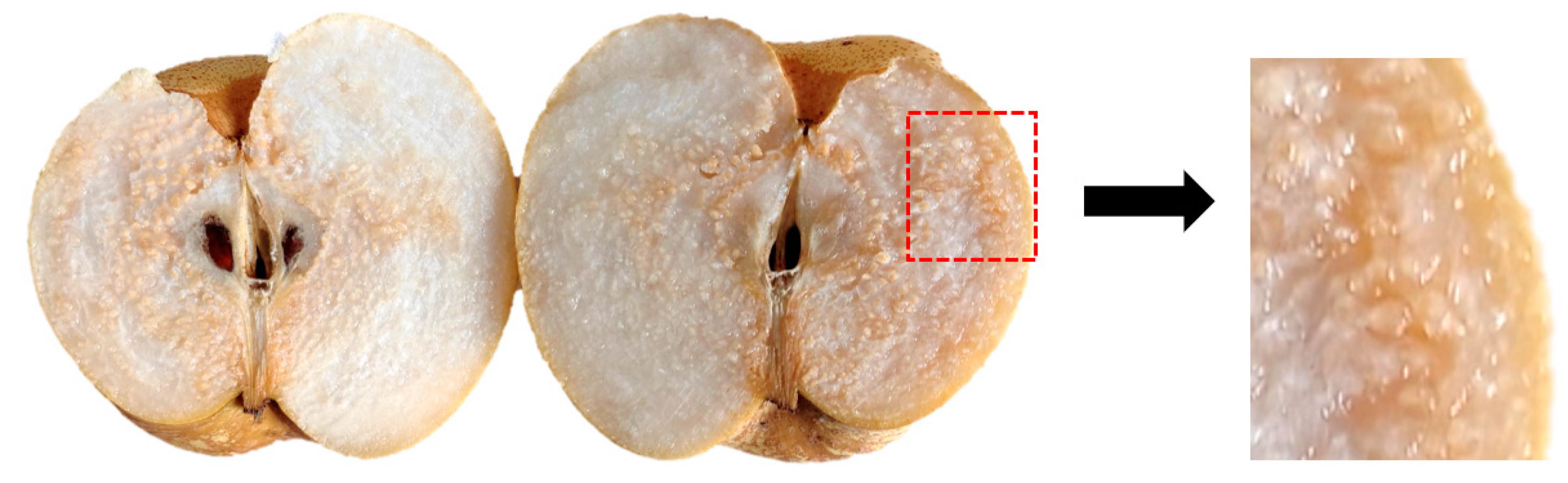

2.1. Samples

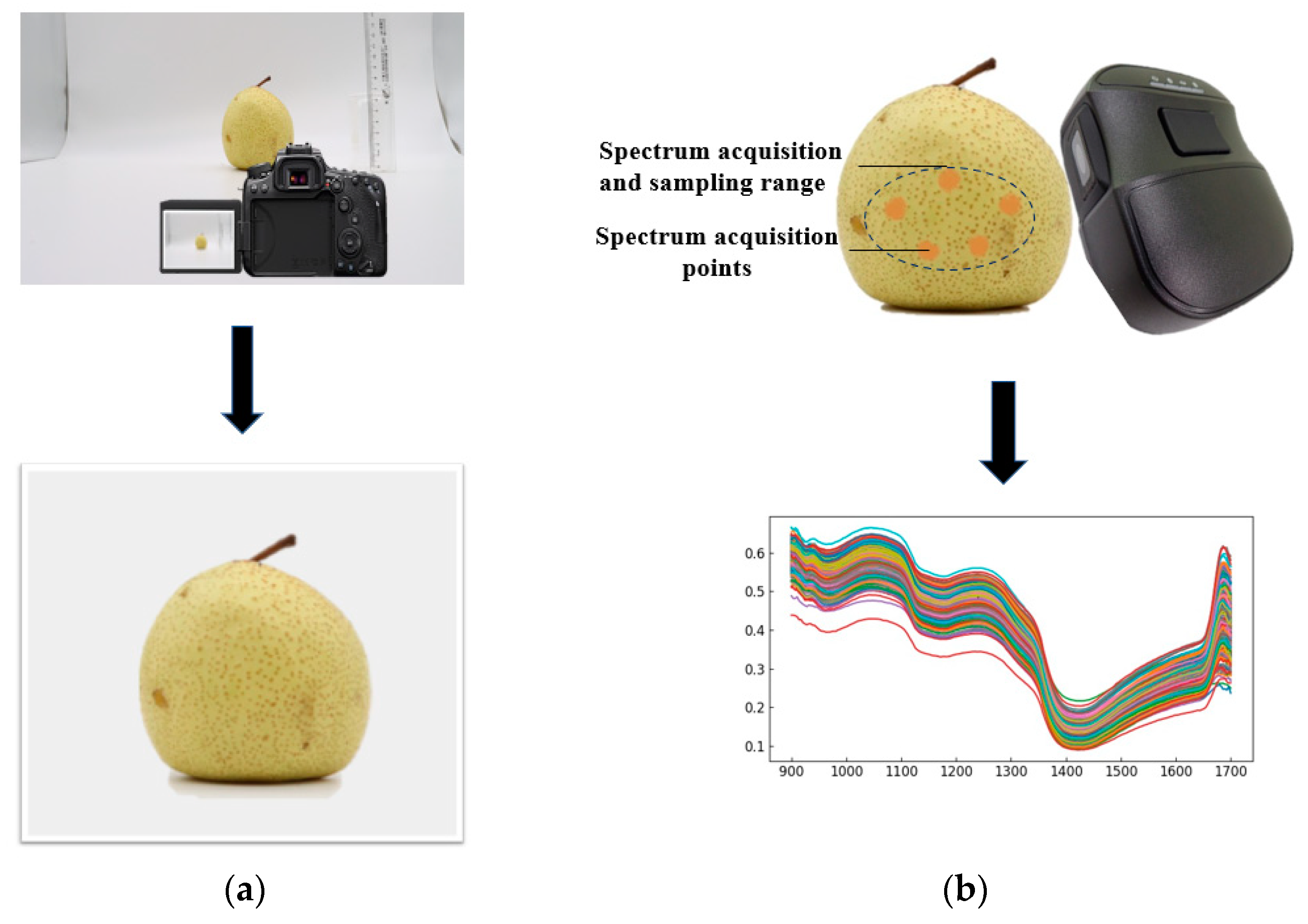

2.2. Data Acquisition Instruments

2.3. Machine-Learning Methods for Near-Infrared Spectroscopy

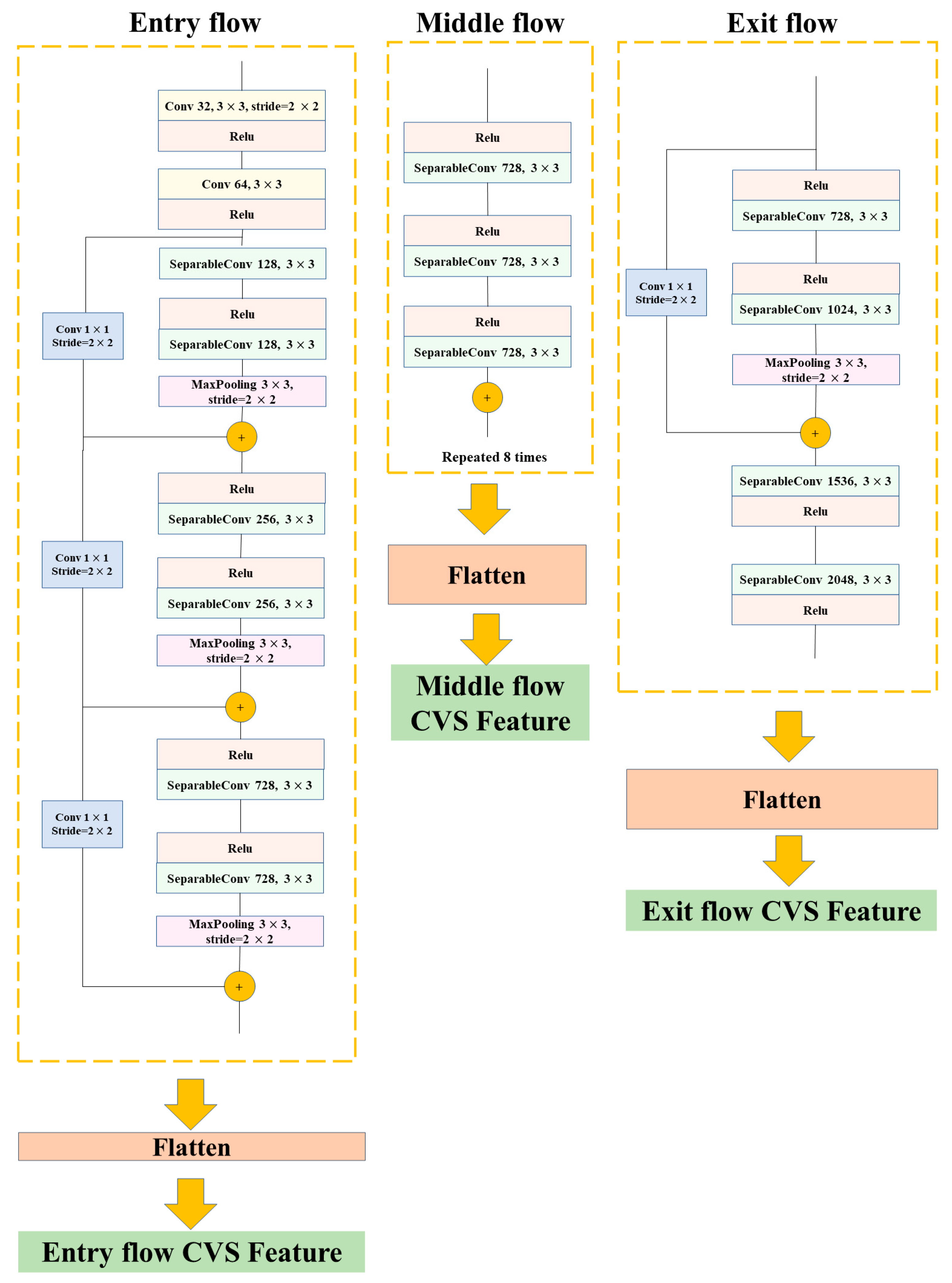

2.4. Deep Neural Network Methods

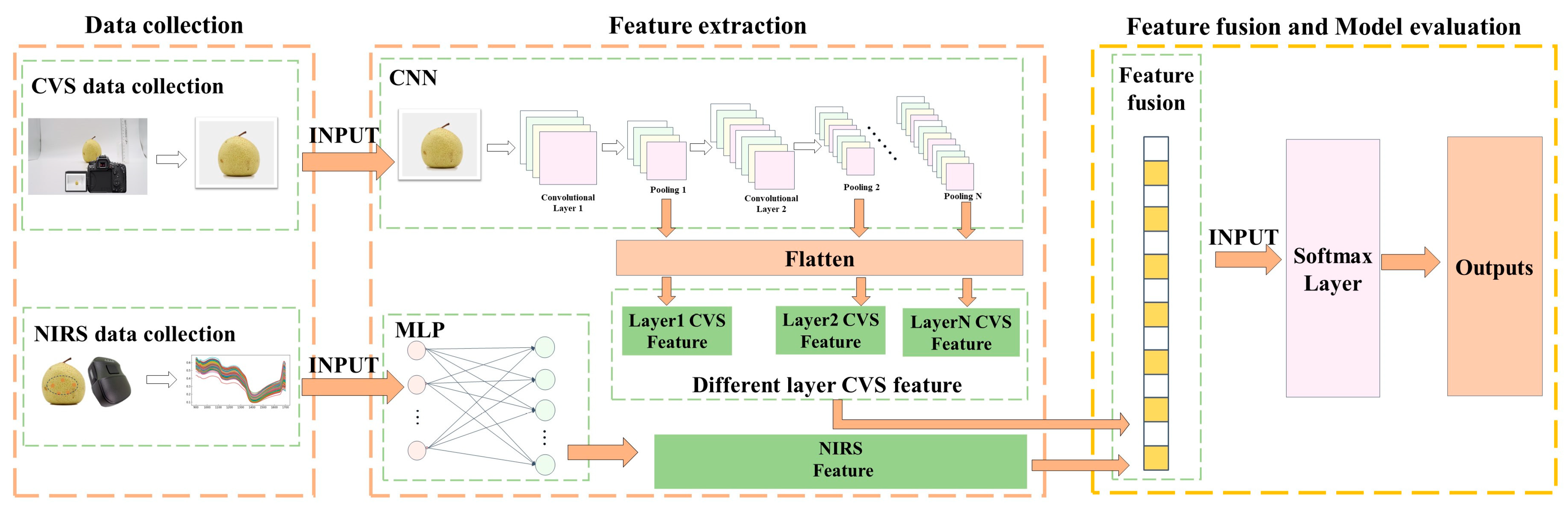

2.5. Near-Infrared Spectroscopy and Visual Image Feature Fusion Methods

2.6. Evaluation

3. Results and Discussion

3.1. Division of the Training and Validation Sets

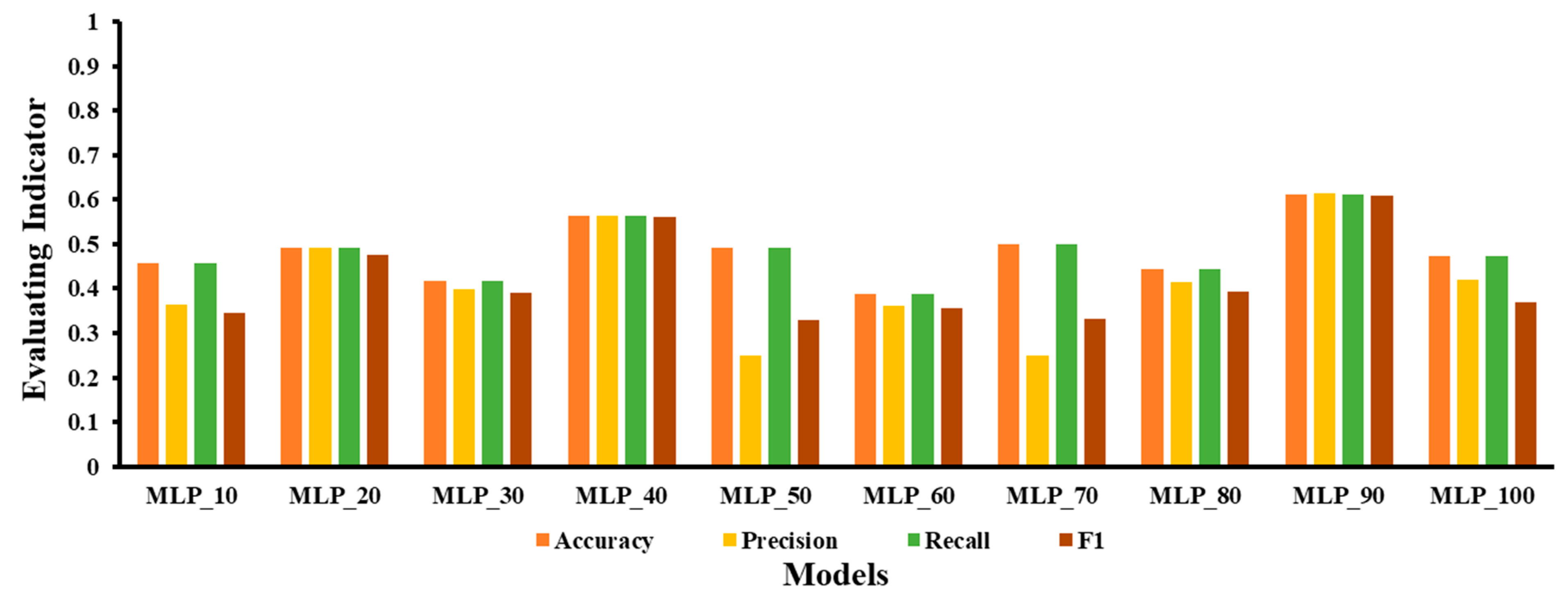

3.2. No-Fusion Separate Modeling Evaluation

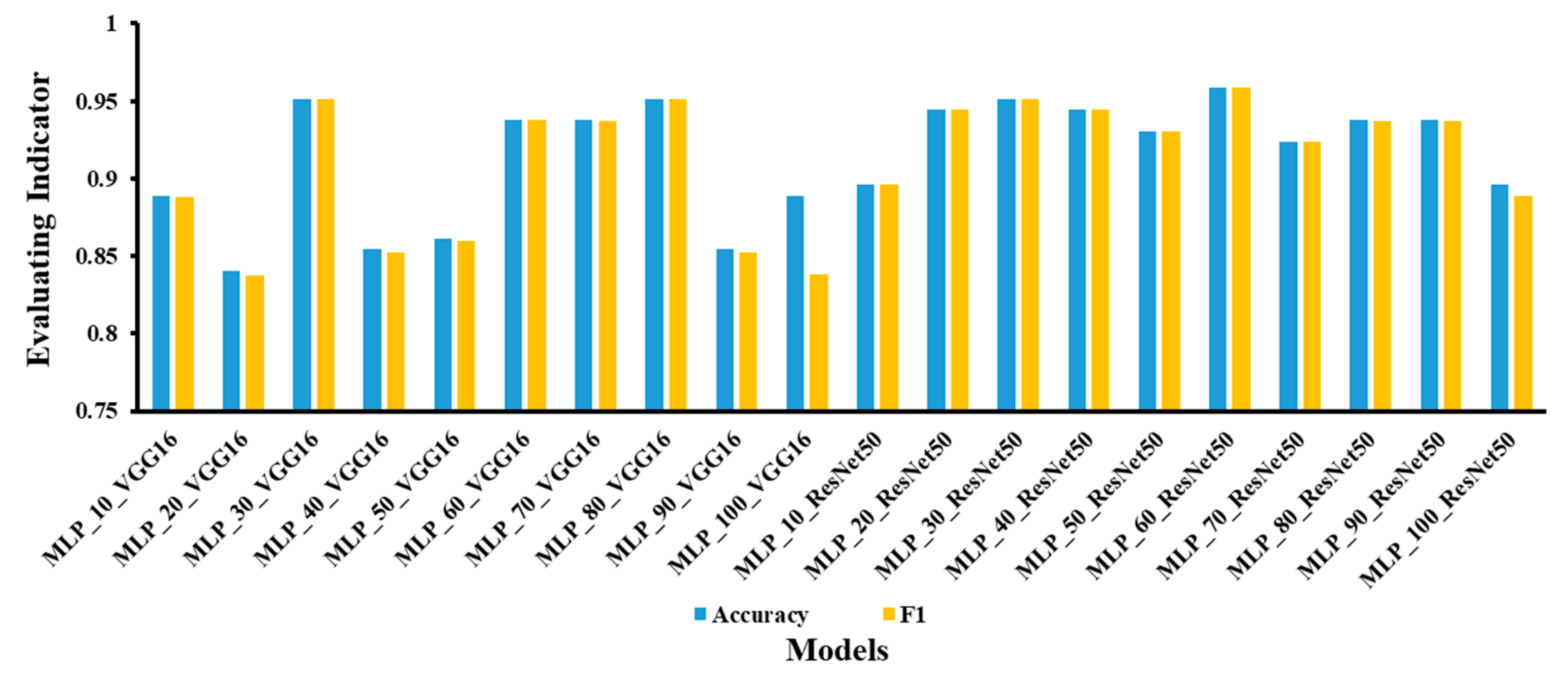

3.3. Modeling of Spectral and Image Fusion Features

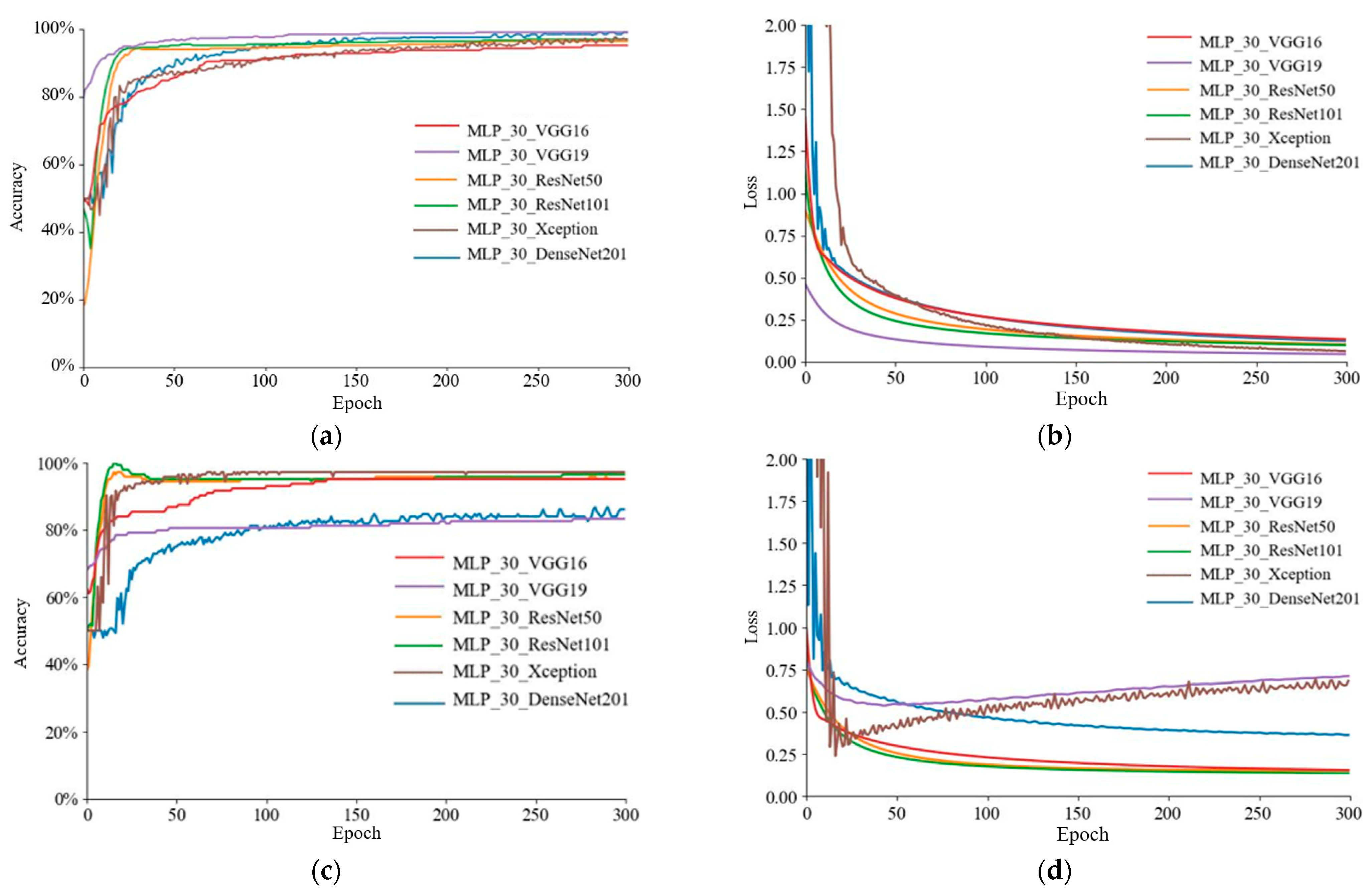

3.4. Optimization of Fusion Models for Different Depth Feature Layers of Visual Images

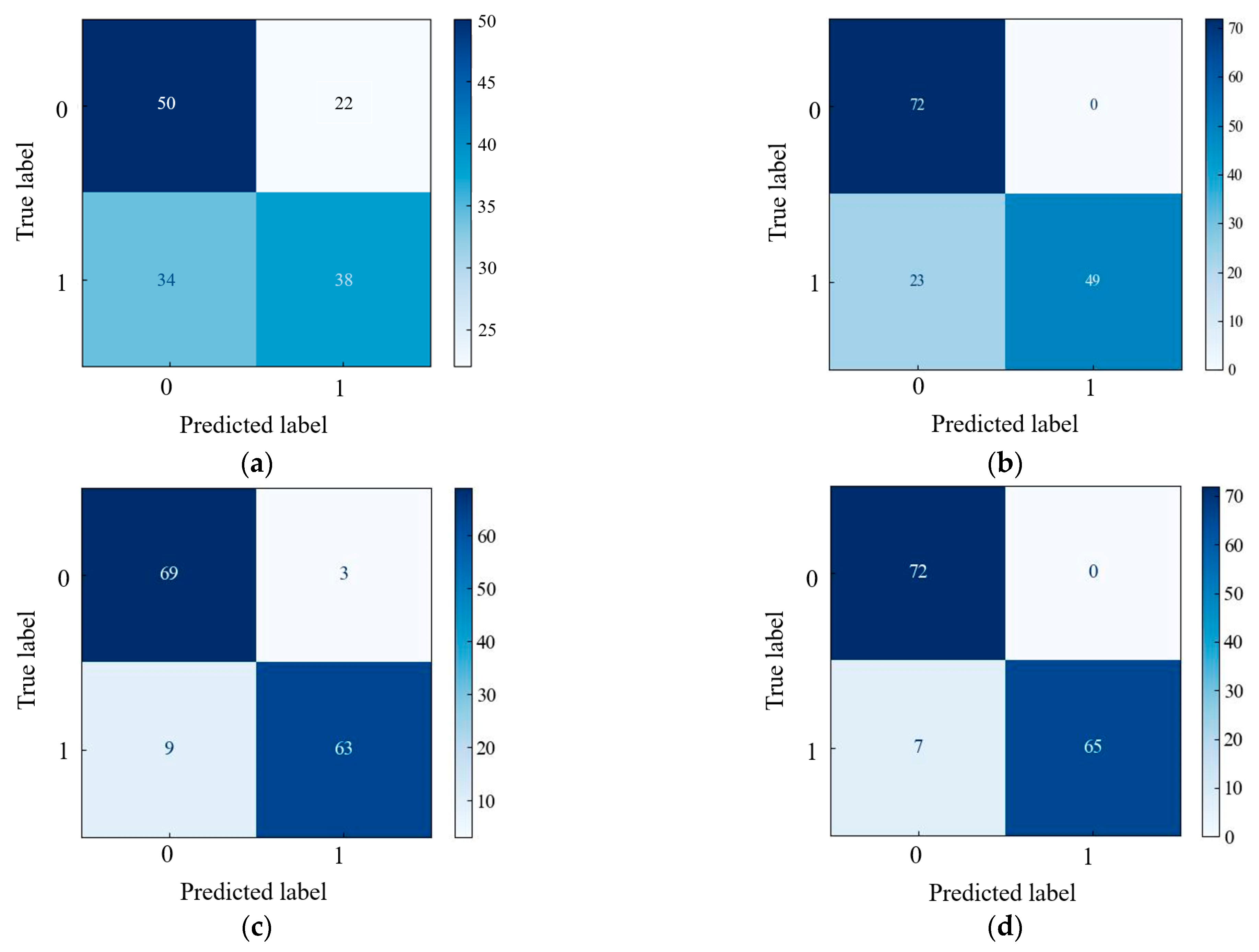

3.5. Optimal Model Analysis and Comparison

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Layer No. | Layer Name | Net | Output |
|---|---|---|---|
| 1 | Conv1 | 7 × 7, 64, stride 2 | 112 × 112 |
| 2 | Conv2_x | 3 × 3 max pool, stride 2 | 56 × 56 |
| 3 | Conv3_x | | 28 × 28 |
| 4 | Conv4_x | | 14 × 14 |
| 5 | Conv5_x | | 7 × 7 |
| | | Average pool, 1000-d fc, SoftMax | 1 × 1 |
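
The Output column of the layer table above can be checked with the standard convolution size formula, floor((n + 2p − k) / s) + 1. The following is a minimal sketch only: the 224 × 224 input size, the padding values, and the modelling of each later stage as a single stride-2 downsampling step are assumptions taken from the usual ResNet layout, not values stated in the table. The function names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial size after a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_spatial_sizes(input_size=224):
    """Trace the feature-map side length through the five stages.

    Each tuple is (name, kernel, stride, padding). The padding values and
    the 1 x 1 stride-2 downsampling step for Conv3_x to Conv5_x are
    assumptions, not values given in the table.
    """
    layers = [
        ("Conv1", 7, 2, 3),
        ("Conv2_x (max pool)", 3, 2, 1),
        ("Conv3_x", 1, 2, 0),
        ("Conv4_x", 1, 2, 0),
        ("Conv5_x", 1, 2, 0),
    ]
    sizes = []
    size = input_size
    for name, kernel, stride, padding in layers:
        size = conv_output_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


if __name__ == "__main__":
    for name, size in resnet_spatial_sizes():
        print(f"{name}: {size} x {size}")
```

Under these assumptions the trace gives 112, 56, 28, 14 and 7, matching the table; the final average pool then collapses the 7 × 7 map to 1 × 1.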

| Models | Parameters |
|---|---|
| PLS_DA | n_components = 8 |
| MLP | Neurons in hidden layers: 90; activation: rectified linear unit (ReLU); solver: Adam |
| SVM | C = 601; gamma = 0.15; kernel = “poly” |
| Random Forest | Maximum tree depth: 20; number of trees: 15; do not split subsets smaller than: 3 |
| AdaBoost | Number of estimators: 50; learning rate: 1.0; classification algorithm: SAMME.R; regression loss function: square |
| XGBoost | Number of estimators: 50; learning rate: 1.0; classification algorithm: SAMME.R; regression loss function: square |

| Models | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| PLS_DA | 0.597 | 0.601 | 0.597 | 0.593 |
| MLP | 0.611 | 0.614 | 0.611 | 0.608 |
| SVM | 0.507 | 0.510 | 0.507 | 0.468 |
| Random Forest | 0.403 | 0.393 | 0.403 | 0.389 |
| AdaBoost | 0.451 | 0.428 | 0.451 | 0.403 |
| XGBoost | 0.340 | 0.318 | 0.340 | 0.320 |
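
The Accuracy, Precision, Recall and F1 columns reported in the result tables can be computed from true and predicted labels. The sketch below assumes support-weighted averaging over classes, which this section does not state; it is suggested by Recall matching Accuracy in almost every row, since weighted recall is algebraically equal to accuracy. The function name `weighted_metrics` and the toy labels are hypothetical.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall, f1) with support-weighted averaging."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")

    n = len(y_true)
    support = Counter(y_true)      # samples per true class
    predicted = Counter(y_pred)    # samples per predicted class
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    accuracy = sum(true_pos.values()) / n
    precision = recall = f1 = 0.0
    for label in sorted(set(y_true) | set(y_pred)):
        # Per-class scores; a class with no predictions or no samples scores 0.
        p = true_pos[label] / predicted[label] if predicted[label] else 0.0
        r = true_pos[label] / support[label] if support[label] else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = support[label] / n  # weight each class by its share of samples
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return accuracy, precision, recall, f1


if __name__ == "__main__":
    # Toy three-class example (labels are made up for illustration).
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    acc, prec, rec, f1 = weighted_metrics(y_true, y_pred)
    print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```

If the authors used macro or micro averaging instead, only the `weight` line would change.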

| Models | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| VGG16 | 0.833 | 0.875 | 0.833 | 0.829 |
| VGG19 | 0.806 | 0.831 | 0.806 | 0.802 |
| ResNet50 | 0.833 | 0.875 | 0.833 | 0.829 |
| ResNet101 | 0.833 | 0.875 | 0.833 | 0.829 |
| Xception | 0.840 | 0.879 | 0.840 | 0.836 |
| DenseNet201 | 0.764 | 0.769 | 0.764 | 0.763 |

| Models | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| MLP_30_VGG16 | 0.951 | 0.956 | 0.951 | 0.951 |
| MLP_30_VGG19 | 0.833 | 0.875 | 0.833 | 0.829 |
| MLP_30_ResNet50 | 0.951 | 0.952 | 0.951 | 0.951 |
| MLP_30_ResNet101 | 0.965 | 0.966 | 0.965 | 0.965 |
| MLP_30_Xception | 0.972 | 0.974 | 0.972 | 0.972 |
| MLP_30_DenseNet201 | 0.861 | 0.862 | 0.861 | 0.861 |
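
The model names in the table above pair an NIRS branch (MLP_30) with an image backbone, which is the pattern of feature-level fusion: each sample's spectral feature vector and image feature vector are joined into one vector before classification. The sketch below illustrates that idea with plain concatenation. The fusion operator, the 30-dimensional spectral feature (read off the name MLP_30) and the 2048-dimensional image feature are assumptions for illustration, not details confirmed in this section; `fuse_features` is a hypothetical helper.

```python
import random


def fuse_features(nirs_features, image_features):
    """Concatenate per-sample NIRS and image feature vectors.

    Both arguments are sequences with one feature vector per sample, in the
    same sample order. Returns one fused list per sample.
    """
    if len(nirs_features) != len(image_features):
        raise ValueError("both modalities must describe the same samples")
    return [list(spec) + list(img) for spec, img in zip(nirs_features, image_features)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_samples, nirs_dim, image_dim = 4, 30, 2048  # assumed sizes, illustration only

    # Random stand-ins for features extracted by the two branches.
    nirs = [[rng.random() for _ in range(nirs_dim)] for _ in range(n_samples)]
    image = [[rng.random() for _ in range(image_dim)] for _ in range(n_samples)]

    fused = fuse_features(nirs, image)
    print(len(fused), "samples,", len(fused[0]), "fused features each")
```

The fused vectors would then be passed to a classifier head; in practice the two blocks are often scaled to comparable ranges first so that the much longer image vector does not dominate.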

| Models | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| MLP_30_ResNet101_layer1 | 0.819 | 0.852 | 0.819 | 0.815 |
| MLP_30_ResNet101_layer2 | 0.833 | 0.875 | 0.833 | 0.829 |
| MLP_30_ResNet101_layer3 | 0.826 | 0.864 | 0.826 | 0.822 |
| MLP_30_ResNet101_layer4 | 0.833 | 0.875 | 0.833 | 0.829 |
| MLP_30_ResNet101_layer5 | 0.917 | 0.920 | 0.951 | 0.917 |
| MLP_30_Xception_Entryflow | 0.833 | 0.875 | 0.833 | 0.829 |
| MLP_30_Xception_Middleflow | 0.792 | 0.853 | 0.792 | 0.782 |
| MLP_30_Xception_Exitflow | 0.951 | 0.956 | 0.951 | 0.951 |

| Characteristic Category | Models | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| NIRS | MLP_90 | 0.611 | 0.614 | 0.611 | 0.608 |
| CVS | Xception | 0.840 | 0.879 | 0.840 | 0.836 |
| Fusion Feature | MLP_30_ResNet101_layer5 | 0.917 | 0.920 | 0.951 | 0.917 |
| Fusion Feature | MLP_30_Xception_Exitflow | 0.951 | 0.956 | 0.951 | 0.951 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Y.; Liu, L.; Rao, Y.; Zhang, X.; Zhang, W.; Jin, X. Identifying the “Dangshan” Physiological Disease of Pear Woolliness Response via Feature-Level Fusion of Near-Infrared Spectroscopy and Visual RGB Image. Foods 2023, 12, 1178. https://doi.org/10.3390/foods12061178
