Abstract

The purpose of this study is to present a new scientometric model for measuring individual scientific performance in Scopus article publications in the field of Business, Management, and Accounting (BMA). With the help of this model, the study also compares the publication performance of the top 50 researchers according to SciVal in the field of BMA in each of the Central European V4 countries (Czech Republic; Hungary; Poland; Slovakia). To analyze the scientific excellence of the resulting 200 top researchers in the countries studied, we collected a total of 1844 partially redundant BMA publications and analyzed the 1492 publications that remained after cleansing. In the scope of the study, we determined the quality of the journals using SCImago, and the individual contributions to the journal articles and the number of citations using Scopus data. A comparison of individual performance, as shown by published journal articles, can be made based on the qualities of the journals, the determination of the aggregated co-authorship ratios, and the number of citations received. The performance of BMA researchers in Hungary lags behind the V4 average in terms of quantity, but in terms of quality it reaches this average. As for BMA journal articles, the average number of co-authors is between two and three; moving from Q4 to Q2 publications, this number typically increases. In the case of these Q2 to Q4 journals, multiple co-authorship results in higher citations, but this is not the case for Q1 journals.

1. Introduction

When it comes to evaluating researchers’ publication performance, the number of citations received for publications is still the primary criterion [1,2], especially in the STEM (Science, Technology, Engineering, and Mathematics) fields. In HASS (Humanities, Arts and Social Sciences) disciplines, characterized by more modest citation indicators, the number of references shows a larger variance, which calls into question performance evaluation based purely on citation data. In this study, we argue that in addition to citations, the ratios of co-authorships present in articles and the quality of the journal that publishes the article also influence the researchers’ publication performance. It is also true of the HASS disciplines that, in addition to journal articles, researchers extensively publish other types of works, e.g., conference papers, books, and book chapters. To date, reliable evaluation methods have not been developed for these types of publications [3]; therefore, we do not address them in this study and examine only journal articles in assessing researchers’ excellence.

Most scientometrics research that examines the relationship between, and compares, co-authorship and scientific performance primarily raises the question whether international collaborations, as an indicator of effectiveness, have a positive effect on citations of publications (see, e.g., [4,5,6,7]). In the scope of co-authorship-based publishing strategy, or in those disciplines where joint scientific works by larger teams are more common, the proportion of individual authorship is lower, but a higher number of journal articles also contributes to a higher number of citations within shorter periods of time. This is because more publications have higher visibility, appear on more forums, and have a higher total number of readers, thereby the number of citations also increases rapidly [8]. In this strategy, the fact whether the co-authors are foreign or domestic is less dominant in terms of individual publications and citation indicators. Although various databases (e.g., Web of Science Core Collection [WoS]; Scopus; SciVal) are able to display the co-authorships and the qualities of journals in each publication (WoS: JCR Quartile; Scopus: CiteScore, SJR), the aggregation of co-authorship and journals quality data requires the construction of a database if we consider them as a dimension defining research performance.

To measure the quality of journal articles, the number of citations received may seem appropriate at first, as exemplified by the Journal Impact Factor [IF] [9,10], the number of Scopus citations [11,12], the Article Influence Score [13], etc. Many researchers believe that a single indicator, such as IF, is not enough to evaluate the quality of journals [14,15,16]. Indeed, a citation index of a journal cannot provide reliable information about a particular publication of a researcher because citations are not evenly distributed across studies in any discipline. For example, [17], analyzing the publication characteristics of the field of immunology, have found that one-sixth of the articles receive half of all citations to journals and that nearly a quarter of journal articles do not receive any citations at all. However, the rank of journals depends on the number of citations to the articles and possibly the quality of the citing journal itself [18]; see, e.g., SCImago Journal Rankings [SCImago]. Each publication of a researcher may be better or worse than the quality of the journal, but if we measure the publication performance of a sufficiently large group of researchers in the journal article category and over a long enough period, the average number of a researcher’s citations will approach the average of the journal’s citation rate over the same period. Therefore, as one measure of quality, the SCImago Journal Rankings Index [SJR] is appropriate; it classifies scientific journals in an online, publicly available database by disciplines and by quartiles on an annual basis, based on Scopus data [19].

In performance evaluation, the assessment of quantity is even less clear-cut than that of quality. Science ethics deals, to a relatively large extent, with the indication of undeserved co-authorships and the non-indication of deserved co-authorships [20], as well as with the impact of these phenomena on research careers. Indeed, national and international research collaborations are becoming increasingly common in almost all disciplines today [21]. Nonetheless, each of the authors acknowledges that they have played an active role in research when publishing studies with multiple authorships. If we want to realistically compare researchers’ publication performance, we must also recognize that joint performance constitutes a cake that, even after its division among the actual number of authors, cannot be bigger than it was before the division. In many parts of the world, the co-authorship ratio is not distributed, or at least not evenly distributed, in the evaluation of research achievements. This raises the question of fairness, as the sum of the individual research achievements credited to co-authors may represent more than the performance of someone who has carried out a research project alone and published it alone. In other words, we do not claim that journal articles with multiple authorships are better than single-authored articles in all respects. The strategy of publishing multi-authored articles also entails an increase in the number of citations, but to disregard the real proportions of authorship in articles is unfair to authors in smaller groups or to authors who work alone. In this way, a significantly higher publication performance can be established due to a distorted assessment of authorship ratios (if the co-authors of the publication get more recognition overall than the sum of the co-authorships indicated in the publication), if such ratios are considered at all.

The use of science metrics for highlighting performance is much less common in the HASS discipline than in the fields of STEM, and what is used is not in line with publishing practices and characteristics in the discipline [22]. The performance of scientists working at universities is determined by the combined performance of their teaching and research work. Out of these, research performance is more important. This is so as, on the one hand, it constitutes the basis of one’s scientific career and, on the other hand, it has a positive effect on educational performance, while educational performance does not affect research effectiveness [23]. Currently applied methods of researcher performance measurement vary from institution to institution, and there is no consensus either on which aspects should be taken into account or who the evaluators should be. This study proposes a model to measure a part of this complex issue: publication performance. Our article attempts to propose a new performance assessment model for comparing individual researcher performance in international journal articles, which we propose to be used for comparing the performance of researchers working in the BMA discipline primarily. To illustrate the use of this model, we compare the top performances of scholars in the BMA field in the V4 countries of Central Europe between 2015 and 2020, with such comparison including qualitative, quantitative and citation aspects.

On the other hand, publication performance does not show significant correlation with the GDP of a given country [24], i.e., scientific performance is not related to wealth or money. Publication performance, however, is determined by the existence of a conscious publication strategy and research site performance evaluation methods. In this context, the study of the scientific effectiveness of the Central European region is desirable because a common problem in the Central European region is that a significant proportion of Central European authors publish in less prestigious journals, thus impairing the visibility of the region’s scientific results [25]. On the other hand, studies conducted in the Central European region are less markedly characterized by international cooperation, even at the regional level [26]. This suggests that these countries are slow to catch up with international scientific achievements. Given this situation, the aim of this study is to compare the publication performance of top researchers in selected Central European countries over the past few years and to highlight those fields of individual and national excellence where performance can further be enhanced through exploiting potentials of research collaboration. In addition, the goal of this paper is to establish such a model for the BMA field that is capable of realistically integrating both quantitative and qualitative aspects of publication performance.

In the following literature analysis, the advantages and disadvantages of purely quantity- and quality-based publication performance evaluations are discussed, taking into account that a reliable bibliometric performance evaluation must reflect both quantitative and qualitative aspects [27]. Based on these findings, a self-developed model, the RPSA model, is used in the methodological section for calculating and ranking individual publication performance. The Results section also makes national performances comparable based on the performances of top researchers, which allows science policy makers in countries lagging behind in relative performance to draw important conclusions.

2. The Qualities and Quantities of Scopus Journals

Citation indicators, such as citedness rate; CiteScore [CS]; Source Normalized Impact per Paper [SNIP]; and SCImago Journal Rank [SJR] [28], can be used to assess the quality of journals indexed in Scopus. Scopus indicators show the quality of the journals indexed in Scopus in the following way: if relevant quality criteria are not met, the indexation of the journal may be removed from a given year. The fact that the indexation of a journal in Scopus has been terminated is often not communicated to the public on the websites of the journals concerned [29]. Tracking the qualities of journals is even more difficult in SCImago, which ranks journals by disciplines using Scopus data, with its own scientific metrics, updated once a year. This is because the SCImago database registers at the beginning of June the journals that were already indexed in Scopus in the previous year, and after a potential deterioration in the quality of a journal, the given journal can only be removed from the SCImago database in June after the termination of Scopus indexing. When examining the quality of a journal or the performance of a researcher over a broader time horizon, the fact that the SCImago database is only updated annually plays a lesser role. Compared to simple citation indicators and IF, SCImago gives a more reliable picture of the quality of a journal, as it also considers the prestige and quality of the citing journal and, in addition, is accessible to all as it is available free of charge [30,31]. SCImago’s journal ranking is also a good means of judging quality, as it is able to calculate the journal’s rank taking into consideration the amount of self-citations and the lack of international cooperation, which is a shortcoming of both IF and CS [32]. At the same time, large Open Access [OA] journal publishers have the means to reduce self-citations by citing each other’s articles in sister journals [33], yet their average quality lags behind non-OA journals [28].
An unresolved problem is that some predatory OA journals are also indexed in larger journal databases like Scopus [34], but these typically show low Q-ratings in SCImago and are present in small proportions. This is a problem because OA journal articles have a greater research impact [35], and trust in science may be shaken if lower quality and less reliable studies reach a wider research audience.

SCImago is therefore suitable for assessing the quality of journals, but this in itself does not yet provide direct information on the quality of the article published in a given journal. To some extent it does provide indirect information, however, as higher quality journals use more rigorous peer review processes, and their rejection rates are also higher. It is also necessary to examine the citation indicators of specific articles either in relation to the citation index of the journal (whether or not the researcher’s publication reaches the average quality of the journal) or in relation to other researchers’ own citation indices (whether or not the researcher’s citation data reach the average of the other researchers concerned). The advantage of the CS introduced by Scopus in 2016 is that it considers most types of publications, while the IF does not; moreover, the IF, which is available for a smaller group of journals, only considers citations for two years, while the CS currently considers four years [36]. All in all, none of the indicators is suitable for judging the quality of a particular publication, even if we have data on the average citation of the journal and the number of citations of the article. This is because these indicators consider the number of citations and publications for a number of years at a time, from which a citation/publication ratio for a single year cannot be calculated, given that publications of later years are less likely to have received similar numbers of citations than older articles. For all these reasons, it is necessary to judge the researcher’s quality rather than the quality of the articles when evaluating performance over the time horizon examined.

As far as the quantitative dimension is concerned, the lower the willingness of researchers to collaborate in certain disciplines, the greater the significance of the number of co-authors. The social and business sciences are typical research areas characterized by lower researcher willingness to collaborate, which, like computer science and engineering, show high R values [37]. Research collaboration in all areas of science should be encouraged and welcomed as long as it is not abused by researchers. For example, [38] have shown that the subsequent success of early-career researchers is crucially influenced by co-publication with top scientists. If co-authorship ratios are also considered when evaluating publication performance, unethical publishing practices can also be reduced. This decreases research collaboration, but only to the extent that collaboration aims exclusively to achieve apparent performance gains.

Some researchers, nonetheless, considered it important to analyze the co-authoring characteristics of publications as early as in the last decade [27]. The results of such analyses are hardly taken into account in the evaluation of performance; rather, they are used for the analysis of collaboration and dynamics between researchers [39,40,41]. In terms of performance, the relevant literature describes the development of institutional, professional, national, or journal indicators, while the evaluation of researchers’ individual performance, for the time being, is left to university leaders and/or HR practices. This situation is also interesting as aggregate performance can be traced back to individuals’ publication performance, which is driven by different motivations important to each individual [42]. The coordinated nature of individuals’ performance motivations increases the reliability and purity of aggregate performances on condition that such motivations are free from counter-interests. This can be based on a commonly used performance evaluation model that captures the two main aspects of performance (quantity and quality) in a simple and realistic manner.

3. Evaluating Researchers’ Publication Performance: The Need for a Reliable Model

Researchers’ publication performance is often evaluated solely on the basis of the h-index [43,44]. The disadvantage of this approach is that it does not relate to the performance of a particular moment or stage of a research career, but to the success achieved during the whole career. This is because the h-index can only increase over time, so it does not necessarily encourage further research and publications. For example, if the publication performance of the previous year is to be evaluated, citation indicators cannot be used, as in this case, due to the shortness of time, it also has a significant effect whether the publication was published in January or December of the previous year. However, there is a relationship between the length of the period over which we examine research performance and the h-index, as well as similar citation indicators such as the hI-index [45], hs-index [46], and e-index [47].

In addition to citations, the number of publications, more precisely the aggregated co-authorship ratios in publications, also reflect research performance (see, e.g., [48,49,50]). In these approaches, however, co-authorship is the key to qualitative growth in group output, and such approaches do not deal with the performance evaluation of individual contributions. Co-authorships themselves have a performance-enhancing effect [42,51], but not necessarily because better research can be done in a research team, but rather because the proportion of self-citations to publications increases with the average co-authorship rate [52]. This is also a concern if we want to capture research performance solely on the basis of citations or the number of publications, regardless of co-authorship ratios.

The number of publications and citations are also taken into account when comparing relative institutional or departmental publication performance [53]. The importance of both factors is supported by the observation that the entry of lower quality journals into OA gives an even weaker rating, while the entry of top journals into OA gives an even more favorable rating [54]. That is, when a journal that is not in the top tier becomes OA, it may appear that the quality of its publications increases (as they receive more citations), while the journal’s overall rating deteriorates. Therefore, in the case of Scopus journal articles, we get a more accurate picture of publication performance by concurrently considering the added value created by the journal articles, the quality of the journal, and the citation index. [55], examining institutional excellence, also found that both quantitative and qualitative aspects are important in evaluation, and that results obtained in international collaborations favor different groups of countries to varying degrees. In their study, the quantitative aspect was interpreted based on the number of publications, while the qualitative aspect was interpreted based on the field-weighted citation impact and the share of publications.

In this present study, the quantitative aspect is taken into account based on the co-authorship ratios obtained in the publications (assuming that more authors share the same performance). The qualitative aspect, on the other hand, is partly based on the journal’s current SCImago rating and partly on the researcher’s CS, which is calculated as a quotient of the number of citations and number of publications for the period 2015–2020. Based on the above, publication performance is determined by the number of publications, its qualities, and the number of citations; therefore, a performance indicator should be developed in such a way that both the citations of the different quality articles and aggregated co-authorship ratios in journals must have a significant impact on assessing researcher excellence.

It should be noted, however, that publication performance may also be affected by expectations independent of researchers, which may adversely affect the performance such researchers could have achieved through their original and non-influenced abilities or motivations. The above-mentioned expectations include educational policy goals set at an institutional or national level, which all researchers have to observe. Writing textbooks and university lecture notes, holding additional university lectures, organizing conferences, proofreading and editing tasks, training young researchers, etc., are just a few examples that require attention and time, and thus hinder research performance.

On the other hand, even if we examine publication performance only concerning a specific period in which all researchers in the sample actively publish, a performance index-based comparison will not necessarily be fair. Previous publication expectations set to older generations were fundamentally different from today’s requirements, which can be seen in the diminishing prestige of books and the rising importance of journal articles. Therefore, a purely quantitative assessment of differences in performance can lead to potential misinterpretations with no clue to analyzing underlying causes.

4. Method

Using the SciVal database, a system that is able to produce ranking lists based on individual or institutional performance, we have compiled the list of researchers with affiliation in the V4 countries of Central Europe (Czech Republic; Hungary; Poland; Slovakia). The researchers included in this database are among the top 50 researchers of these countries in the subject area of BMA in terms of simple quantitative outputs, namely based on the number of their Scopus publications in the period 2015–2020 (according to SciVal). We have excluded those authors from the lists of the top 50 of each country who are rated by SciVal based on their secondary affiliation, but whose primary affiliation is not in that country. We have replaced the data of such scholars with the data of the next researchers in the top list in each case. In line with the list compiled this way, we have collected the data of the authors’ Scopus publications using the publications’ ID numbers, ignoring the following types of publications: book; book review; book chapter; conference paper; and editorial. For terminological simplicity, we refer to publications considered in the scope of this research as publications or journal articles throughout the study, but this term in fact covers a wider range than Scopus journal articles and also contains, e.g., review; note; data. For each of these publications, we have recorded:

- The researcher’s real co-authorship ratio in the given publication (1/[total number of authors]);

- The SCImago quartile classification of the given journal according to the year of publication indicated in Scopus, with the addition that we have applied the SCImago rankings of 2019 to the articles of 2020;

- The number of Scopus citations of the publication, regardless of the type and quality of citations.

For this analysis, the SciVal data were filtered on 21 January 2021, and the Scopus data were filtered between 20 January and 1 February 2021. Furthermore, we have also considered that the analyzed publications should belong exclusively to the BMA discipline (based on the Scopus classification), and thus excluded those who are not researchers primarily of this discipline but are still included in the SciVal toplist, as some of their journal publications are also indexed in this field. The compiled database does not include publications that do not have a SCImago rating in BMA in the year of their publication. Some researchers have 0 publications or are with 0 total scores in this database, which can be due to four reasons:

- The researcher has only those types of Scopus publications in the period that are not taken into account in this analysis (e.g., book chapter; editorial);

- None of the researcher’s publications included in the database has at least one citation;

- The researcher works and publishes in a field different from the BMA discipline, and at least one of the journals of his/her publications not considered in the scope of this study has also been ranked in the BMA discipline in SCImago;

- Although the journal of the publication is included in SCImago, the journal only has a SCImago rating for a year different from the year of publication (except 2020).

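For each researcher, we have performed an index calculation to assess the publication performance of the period 2015–2020 according to the following formula:

$$\mathrm{RPSA} = \left(4\sum(\mathrm{C\text{-}A})_{Q1} + 3\sum(\mathrm{C\text{-}A})_{Q2} + 2\sum(\mathrm{C\text{-}A})_{Q3} + 1\sum(\mathrm{C\text{-}A})_{Q4}\right) \times \frac{\mathrm{CinS}^{*}}{\mathrm{PinS}^{*}}$$

where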

- RPSA: Researcher Performance in Scopus Articles, an indicator developed for the relative evaluation of researchers’ publication performance, which also takes into account individual performance (quantity), journal quality and citation in existing Scopus journal publications in the period 2015–2020;

- Q1, Q2, Q3, Q4: SCImago quartile classification of the journal for the given year, based on the 2019 SCImago rankings for publications of 2020;

- ∑(C–A): aggregated Co-Authorship ratios, the sum of individual authorship ratios in the publications per qualitative quartile, taking the co-authorship ratio of a publication calculated as (1/[total number of authors]);

- CinS*: Citations in Scopus*, the number of citations obtained by the author in the publications considered in the scope of this research and indexed in Scopus;

- PinS*: Publications in Scopus*, the number of publications of the author excluding book, book review, book chapter, conference paper and editorial indexed in Scopus for the period under scrutiny.

The first component of the RPSA indicator (the quartile-weighted sum 4·∑(C–A) in Q1 + 3·∑(C–A) in Q2 + 2·∑(C–A) in Q3 + 1·∑(C–A) in Q4) shows the aggregate individual contribution of a given researcher according to the quality of the journals in which the given researcher published the studies in question. Based on the SCImago quartiles, we have weighted the journals and accordingly the journal article publications as follows: RPSA regards the scores of co-authorship ratios with a 4-fold value in the case of Q1 articles, with a 3-fold value in the case of Q2 articles, with a 2-fold value in the case of Q3 articles, and with a 1-fold value in the case of Q4 articles. These weights represent the citation-based quality differences between journals. The second component of the RPSA indicator (CinS*/PinS*) is a reference index for the researchers themselves. This is similar to CS (but for six years instead of four) and is the quotient of the number of Scopus citations received by the publications considered and the number of publications considered during the period.

Based on SCImago’s annual ranking and in order to avoid bias, we classified the qualities of journals into the appropriate quartiles by considering only journal articles in the BMA subject area, and, if a journal was evaluated in more than one subcategory within the BMA in the same year by SCImago, we calculated the highest quartile. Finally, it is to be noted that we have considered also those publications whose journal was since removed from Scopus, but SCImago is still calculating the journal’s quartile rating for that year. Publications whose journal already has Scopus indexing but does not yet have a SCImago quartile have not been included in this analysis.
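The quartile-selection rules described above (use the rating of the publication year, apply the 2019 ranking to 2020 articles, take the highest quartile when several BMA subcategories are rated, and drop publications without a rating) can be sketched as follows. This is only an illustrative sketch: the function names and the `ratings` dictionary layout are assumptions made here, not part of the study's actual data pipeline.

```python
from typing import Optional

VALID_QUARTILES = ("Q1", "Q2", "Q3", "Q4")


def rating_year(publication_year: int) -> int:
    """Year whose SCImago ranking is used; 2019 rankings stand in for 2020."""
    return 2019 if publication_year == 2020 else publication_year


def best_quartile(quartiles: list) -> Optional[str]:
    """Return the highest (best) quartile among BMA subcategory ratings."""
    valid = [q for q in quartiles if q in VALID_QUARTILES]
    if not valid:
        return None
    # "Q1" is the best quartile, so the smallest number wins.
    return min(valid, key=lambda q: int(q[1]))


def quartile_for(ratings: dict, publication_year: int) -> Optional[str]:
    """Look up the quartile of a journal for an article.

    `ratings` is a hypothetical mapping: year -> list of BMA subcategory
    quartiles for that journal. Returns None when the journal has no BMA
    rating for the relevant year, in which case the publication is excluded.
    """
    return best_quartile(ratings.get(rating_year(publication_year), []))


# Hypothetical journal rated in two BMA subcategories in 2019.
example_ratings = {2018: ["Q3"], 2019: ["Q3", "Q2"]}
print(quartile_for(example_ratings, 2020))  # uses the 2019 ranking
print(quartile_for(example_ratings, 2016))  # no rating for 2016
```

Returning `None` rather than a default quartile keeps unrated publications out of the weighted sum, which mirrors the exclusion rule stated in the text.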

The following provide two examples of the calculation of the RPSA index:

- Researcher A’s performance (between 2015 and 2020, in BMA journals): 1 Q1 article with 0.5 co-authorship ratio and 6 citations, 1 Q2 article with 1.0 co-authorship ratio and 4 citations, and 1 Q4 article with 0.25 co-authorship ratio and 2 citations: RPSA = (4 × 0.5 + 3 × 1.0 + 1 × 0.25) × (6 + 4 + 2)/3 = 5.25 × 4 = 21;

- Researcher B’s performance (between 2015 and 2020, in BMA journals): 20 Q1 articles, with 0.1 co-authorship ratios each in 14 articles and 0.2 co-authorship ratios each in 6 articles, and 1 Q4 article with a 0.1 co-authorship ratio. These articles altogether received a total of 42 citations in Scopus: RPSA = (4 × (14 × 0.1 + 6 × 0.2) + 1 × 0.1) × 42/21 = 10.5 × 2 = 21.

The performance of Researchers A and B is equal based on the RPSA model. Although Researcher B has seven times as many articles (21) as Researcher A (3), their publication performances are the same, mainly due to the co-authorship ratios, as articles with 5–10 co-authors are uncommon in the field of BMA. Researcher B’s higher total number of citations would somewhat alter this picture; these citations, however, were obtained from many more publications, which RPSA reflects more realistically through its citation-per-publication ratio. The result of quality weighting of articles (journals) can be seen in the numerical expression of both performances. Once again, it is important to emphasize that the RPSA has not been developed to judge the value of individual research, but it is appropriate for assessing the performance of individual contributions to individual publications based on the quality of the journal and the citations of the studies.
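The two examples can be checked with a short script. The sketch below is illustrative only: the names `Article`, `QUARTILE_WEIGHTS` and `rpsa` are hypothetical, and because the text gives only Researcher B's total of 42 citations, the even split of 2 citations per article is an assumption (only the total affects the result).

```python
from dataclasses import dataclass

# Quartile weights as stated in the text: Q1 = 4, Q2 = 3, Q3 = 2, Q4 = 1.
QUARTILE_WEIGHTS = {"Q1": 4, "Q2": 3, "Q3": 2, "Q4": 1}


@dataclass
class Article:
    quartile: str   # SCImago quartile of the journal for the relevant year
    n_authors: int  # total number of authors of the article
    citations: int  # Scopus citations received by the article


def rpsa(articles: list) -> float:
    """Quartile-weighted sum of co-authorship ratios times citations per publication."""
    if not articles:
        return 0.0
    weighted_ratios = sum(QUARTILE_WEIGHTS[a.quartile] / a.n_authors for a in articles)
    citations_per_publication = sum(a.citations for a in articles) / len(articles)
    return weighted_ratios * citations_per_publication


# Researcher A: co-authorship ratios 0.5, 1.0 and 0.25 mean 2, 1 and 4 authors.
researcher_a = [Article("Q1", 2, 6), Article("Q2", 1, 4), Article("Q4", 4, 2)]

# Researcher B: ratios 0.1 and 0.2 mean 10 and 5 authors.
# Assumed split: 2 citations per article, 42 in total.
researcher_b = (
    [Article("Q1", 10, 2) for _ in range(14)]
    + [Article("Q1", 5, 2) for _ in range(6)]
    + [Article("Q4", 10, 2)]
)

print(round(rpsa(researcher_a), 6))  # 21.0
print(round(rpsa(researcher_b), 6))  # 21.0
```

Because the second component is a ratio, a researcher whose considered publications have no citations at all receives an RPSA of zero regardless of the first component.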

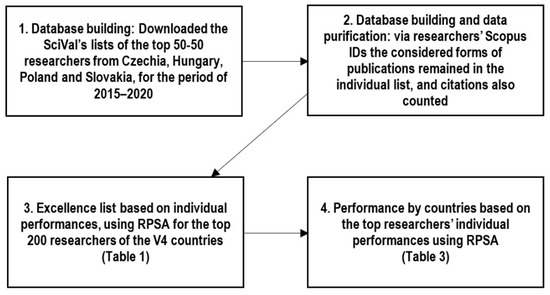

Figure 1 shows the model of the research methodology used in this project.

Figure 1. The research methodology.

5. Results

Relative publication performance is shown in Table 1 for the top 200 researchers (based on SciVal) from the four countries. The red and blue scores in Table 1 (columns No.; G; H; I; J; K) have been calculated by the author, and the rest of the data are from SciVal exports. In terms of each country, scores A indicate researchers’ rankings based on SciVal’s publication performance between 2015 and 2020, given that SciVal indicator does not take into account citation data, co-authorship weights, or journal qualities. The rows of the table show individual publication performance. Researchers are listed in descending order in column K (RPSA). Column J shows the number of Scopus publications considered, regardless of co-authorship weights and other factors. The score of column I modifies this by taking into account the performance presented in the articles only in proportion to the co-authorship contribution, which consists in the aggregated authorship ratios published by the researcher during the period. In column G, in addition to the quantitative aspect, there surfaces a qualitative aspect: when calculating the data in column G, we have weighted the co-authorship ratios of each article by the quality of the journal according to SCImago and these have been totaled for each researcher. Column H is the citation-based component of the quality aspect. When calculating these data, we have divided the number of Scopus citations received for the Scopus articles by the number of Scopus articles considered. The product of G and H gives the RPSA index, which is shown in column K. This score thus also takes into account the extent of real co-authorship contribution to the publication, the researcher quality by citation ratio calculation and the publication quality by the journal’s quartile classification. RPSA is suitable for comparing researcher performance as any change in citations, co-authorship ratio, and journal quality all trigger a proportional change in performance. 
However, it follows from the RPSA formula that publications without citations are considered worthless.
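The way columns J, I, G, H and K build on one another can be illustrated with a short sketch. This is not the author's implementation: the quartile weights in `QUARTILE_WEIGHT` are placeholder values assumed purely for illustration, each co-author is assumed to receive an equal share of 1/n of an article, and the `Article` type and the `rpsa_columns` function are hypothetical names.

```python
from dataclasses import dataclass

# Placeholder quartile weights, ASSUMED for illustration only;
# they are not taken from the study.
QUARTILE_WEIGHT = {"Q1": 1.0, "Q2": 0.75, "Q3": 0.5, "Q4": 0.25}


@dataclass
class Article:
    n_authors: int  # number of authors, including the researcher
    quartile: str   # SCImago quartile of the journal: "Q1" to "Q4"
    citations: int  # Scopus citations received by the article


def rpsa_columns(articles):
    """Return columns J, I, G, H and K for one researcher."""
    j = len(articles)                           # J: articles considered
    i = sum(1 / a.n_authors for a in articles)  # I: aggregated co-authorship ratio
    # G: co-authorship ratios weighted by journal quality (assumed weights)
    g = sum(QUARTILE_WEIGHT[a.quartile] / a.n_authors for a in articles)
    # H: average citations per article considered
    h = sum(a.citations for a in articles) / j if j else 0.0
    return {"J": j, "I": i, "G": g, "H": h, "K": g * h}  # K = G * H (RPSA)


# Invented example data for one researcher.
example = [Article(2, "Q1", 10), Article(4, "Q2", 2), Article(1, "Q4", 0)]
cols = rpsa_columns(example)
uncited = rpsa_columns([Article(1, "Q1", 0)])
```

In the invented example, the researcher has J = 3 articles but an aggregated co-authorship ratio of only I = 1.75. For `uncited`, K is zero regardless of journal quality, because H is zero when none of the researcher's articles has been cited.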

Table 1.

Scientific metrics data for determining the individual publication performance of top 50 researchers in each Central European V4 country (in the period 2015–2020).

Based on the data shown in Table 1, significant differences can be established in the publication performance of top researchers in the countries involved. In the ranking of the 200 researchers by RPSA, 6 researchers from Poland are in the top 10, while only 1 is from Hungary and 1 from Slovakia. For this reason, and in order to find out what research excellence means in each country, we have also compared the overall performance of the top researchers in the V4 countries.

SciVal ranks top researchers based on their scholarly output (Column C in Table 1), which differs from the order that could be generated using the data in Column J, because the latter column only counts the journal publications considered. There are statistically significant correlations of varying strength between the C and K values, both of which are considered indicators of performance, and between the J and K values (Table 2); i.e., the number of publications and the number of BMA articles are not the only factors that affect publication performance.

Table 2.

Correlations between scholarly output based on SciVal (C) and RPSA (K), and between the number of Scopus publications considered (J) and RPSA (K).

Scopus h-indexes scatter widely as a function of the calculated rank (No.): the coefficient of determination of the trend line fitted to the h-indexes against No. is R² = 0.0006, which confirms that there is practically no correlation. Of the top 10 researchers ranked according to the Scopus h-index (their h-indexes range from 58 down to 21), five are in the first quartile and four are in the last quartile of the ranking based on the calculated publication performance. The results suggest that the h-index is exceptionally high in two cases: for junior researchers with intensive performance and rapid development, and for senior researchers whose h-index has grown high over the longer term but whose publication performance in the last six years is no longer outstanding. More accurate information about individual performance is provided by the values of column H, where the 40 highest values all belong to researchers whose No. is below 100. Here, the coefficient of determination is R² = 0.562, which indicates a much stronger trend than the previous one (No. vs. h-index): researchers ranked higher have higher CinS*/PinS* values (p < 0.001).
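As a reminder of what the coefficient of determination of a trend line expresses, the following minimal sketch fits an ordinary least-squares line and computes R². It assumes a simple linear trend line (the type of trend line used in the study is not stated here), the function name `linear_fit_r2` is hypothetical, and the data are invented.

```python
def linear_fit_r2(x, y):
    """Fit y = intercept + slope * x by least squares; return slope, intercept, R^2."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual and total sums of squares
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return slope, intercept, 1 - ss_res / ss_tot


# Invented data: ranks 1..4 against two hypothetical indicators.
ranks = [1, 2, 3, 4]
_, _, r2_trend = linear_fit_r2(ranks, [8, 6, 4, 2])     # falls steadily with rank
_, _, r2_no_trend = linear_fit_r2(ranks, [5, 1, 1, 5])  # no linear trend at all
```

A value close to 1 (as for `r2_trend`) means that rank explains almost all of the variation in the indicator; a value close to 0 (as for `r2_no_trend`) means that the trend line explains practically none of it.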

Quality-independent aggregated co-authorship ratios (column I in Table 1) show that the largest differences in performance across the countries are in the first decile of the top 200 researchers. For example, while the researcher from Poland with the highest number of publications published 12.25 BMA journal articles, the top researcher from Slovakia published 12.17, the top researcher from the Czech Republic 11.64, and the top researcher from Hungary 7.00 articles. In the ranking based on the values of column I containing the aggregated co-authorship ratios, the first researcher from Hungary appears only in 23rd place. If we examine the publication performance of the top 50 researchers in each country in aggregate, we get an idea of the overall performance of these top researchers, i.e., the quality of the publications produced by the best researchers in each country. If we rank the researchers based on the data in column I and compare this ranking with No., we can see that there is a strong, direct relationship between the two rankings: the rank correlation coefficient is ρ = 0.738. This suggests that the two rankings largely move in parallel.
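A rank correlation of this kind can be computed as follows. This is a minimal sketch that assumes the coefficient is Spearman's ρ, computed as the Pearson correlation of the two rank vectors with tied values given their average rank; the function names and the data are invented for illustration.

```python
def average_ranks(values):
    """Rank values in descending order (1 = largest); ties share their mean rank."""
    order = sorted(range(len(values)), key=lambda k: values[k], reverse=True)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            ranks[k] = mean_rank
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented values for five hypothetical researchers.
column_i = [9.0, 4.0, 7.5, 1.5, 3.0]      # aggregated co-authorship ratios
column_k = [40.0, 30.0, 10.0, 5.0, 20.0]  # RPSA scores
rho = spearman_rho(column_i, column_k)
```

For the invented data, the two rankings agree on the first and last place and differ by at most two places elsewhere, which gives ρ = 0.7.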

Based on the evaluation of the national top 50 researchers’ performance, it is also possible to ascertain the publication success of each country in the field of BMA. Table 3 shows the aggregated performance of the top 50 researchers in each of the V4 countries, in total and grouped according to the SCImago classification of the journals. Each examined country (PL, CZ, SK, HU) has two groups of columns. The values in the first group of columns (*) are derived from the data of all the publications of the top 50 researchers by country, following the logic of SciVal’s statistics. As a result, there may be redundancies among the publications: if an article is co-authored by several researchers who are all in the top 50 according to SciVal, the publication is counted more than once. The values in the second group of columns (**) were calculated after data cleansing, so that redundant publications were taken into account only once in compiling the national performance figures.

Table 3.

Scientific metrics data determining the quality of publication performance of top 50 researchers in the Central European V4 countries (in the period of 2015–2020).

Based on the performance of the top 50 researchers in each country, most BMA publications were published by authors from Poland (477), followed by authors from Czechia (431), Slovakia (356), and finally Hungary (228) (Table 3 SUMMARY**). This order does not change if we examine the total co-authorship ratios of the top 50s instead of the number of publications: 210.13, 149.74, 114.26, and 96.86, respectively. This is related to the fact that the average number of authors of the considered publications does not vary greatly; it is around two to three (the lowest value is 2.27 in the top 50 of Poland, and the highest is 3.12 in the top 50 of Slovakia). Overall, the Czech top 50 received the most citations for their publications (8.06 citations per publication on average), and the Slovak top 50 received the fewest (5.09 citations per publication on average). If we examine the data aggregated by country (without considering the quality of the journals) within the top 50s, the extent of cooperation in the production of publications varies. Judging from the differences between the corresponding values of the SUMMARY* and SUMMARY** parts of Table 3, i.e., from the overlap between publications, the degree of cooperation within the top 50 is the lowest among researchers from Poland (12.6%) and the highest among researchers from Slovakia (30.1%). Finally, it is important to note that journal diversity varies greatly from country to country. The journal diversity index calculated from the data of Table 3 SUMMARY*-11 and SUMMARY*-10 shows that, relative to the number of published articles, the top 50 of Slovakia chose the least diverse set of journals (17.4%) and the top 50 of Hungary the most diverse (53.1%).
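The data cleansing step and the two country-level ratios discussed above can be sketched as follows. The definitions used here are assumptions made for illustration, not the study's exact formulas: the overlap share is taken to be the fraction of records removed by cleansing, and the journal diversity index is taken to be the number of distinct journals divided by the number of cleansed publications. The function names and the records are invented.

```python
def cleanse(records):
    """Keep one (publication_id, journal) record per publication ID."""
    seen = {}
    for pub_id, journal in records:
        seen.setdefault(pub_id, journal)  # the first occurrence wins
    return list(seen.items())


def overlap_share(records):
    """ASSUMED definition: fraction of records that are redundant copies."""
    if not records:
        return 0.0
    return (len(records) - len(cleanse(records))) / len(records)


def journal_diversity(records):
    """ASSUMED definition: distinct journals per cleansed publication."""
    cleansed = cleanse(records)
    if not cleansed:
        return 0.0
    journals = {journal for _, journal in cleansed}
    return len(journals) / len(cleansed)


# Invented records: p1 and p3 appear twice because two top-50 researchers
# of the same country co-authored them.
records = [
    ("p1", "Journal A"), ("p2", "Journal A"), ("p1", "Journal A"),
    ("p3", "Journal B"), ("p4", "Journal C"), ("p3", "Journal B"),
]
n_cleansed = len(cleanse(records))
share = overlap_share(records)
diversity = journal_diversity(records)
```

In the invented example, six redundant records reduce to four distinct publications, so one third of the records are overlaps, and the four publications appeared in three different journals.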

Examining the aggregated data and the indicators of the top 50s by country and quartile shows that the distribution of Q1/Q2/Q3/Q4 publications differs significantly from an even distribution, and that these indicators also differ between the countries. On the one hand, whether we look at the number of different publications (1** values in Table 3) or the aggregated co-authorship ratios (8** values in Table 3), we see that in the lowest quality Q4 category, the top 50s publish significantly less than the 25% expected under an even distribution. For example, in the period of 2015–2020, based on the aggregated co-authorship ratios of publications by the top 50 considered, the ratio of Q4 publications of Hungary (the lowest value in the comparison of the four countries) is only 8.8%, compared to all non-Q-rated publications produced by Hungarian researchers, while the same indicator is 19.0% in Poland (the highest value in the comparison of the countries). At the same time, among the V4s, only for the top 50 in Hungary is it true that, albeit to a small extent, both the number of publications and the aggregated co-authorship ratios in the Q1 category, considered the best quality, represent a higher proportion than average (26.3% and 26.0%, respectively). Q2 and Q3 together represent a significantly higher proportion in the publication pattern of all four countries: the lowest for the top 50 of Poland (60.2%) and the highest for the top 50 of Slovakia (71.1%), relative to the total number of publications published by the top 50 researchers during the period considered in this study.

On the other hand, if we examine the number of the top 50s’ publications by country, by quartile, and by year of publication, we can see that in only one quartile, Q1, does the number of publications increase year by year in all V4 countries (Table 3 2**–7**). In the other quartiles, the direction and pace of the annual change in the number of publications are varied and unclear.

Based on the top performance of the V4 countries, the top 50 of the Czech Republic would have the opportunity to target higher quality journals with their publications in general, as they have significantly higher average citation ratios in both Q4 and Q1 (3.04 and 20.95 respectively), compared to the same indicator for the other three countries (Table 3 12**). Finally, it is interesting to note that the average number of co-authors per publication varies greatly according to the quartiles. Moving forward in quality from Q4 to Q2, the number of co-authors generally increases. An exception to this is the top 50 of Slovakia, where the average number of co-authors is slightly lower in Q3 compared to Q4. Then again, in the case of three of the four countries, the average number of co-authors in the Q1 category drops to a value typical of average Q3 and Q2 quality publications. An exception to this is the top 50 in the Czech Republic, where the average number of co-authors is the highest in Q1 (3.61).

6. Discussion

Table 1 summarizes the science metrics indicators that show the publication performance of the total top 200 researchers broken down by country. The results show that the distribution of researchers by country in the list of the top 200 is not balanced: the best researchers in Poland’s top 50 perform significantly above the researchers from the other countries, while, for example, there is only one researcher from Hungary in the overall top 10. One reason for this may be that researchers from Poland strive more consciously for better publication quality and higher performance, but it is also possible that the practice of measuring excellence in Poland is closer to the logic of RPSA and that researchers’ publication activity responds to it. The development of conscious publishing strategies among Hungarian researchers is less effectively facilitated by current standards of excellence, and researchers still overestimate the importance and the weight of national journal articles [56]. In Hungary, one of the research funds of national importance for senior, junior and postdoctoral researchers is the so-called “OTKA”, for which 800–1100 applications were received annually between 2016 and 2020 across all disciplines (see, e.g., National Research, Development and Innovation Office [NKFIH], [57,58]). The NKFIH, which is responsible for Hungarian research, decides on the applications, attaching great importance to the publication performance of applicants, which is currently evaluated by https://tudomanymetria.com (accessed on 27 April 2021), based on different aspects depending on the scientific field concerned [59,60,61].
In the BMA field, until the beginning of 2021, this system basically took into account three aspects when evaluating scientific performance assigned to certain age groups: (1) h-index (not based on Scopus; all of the researcher’s scientific publications are considered); (2) independent citations per year; (3) number of publications in the past five years (including all types of scientific publications). These criteria did not prioritize international publications, and therefore at the beginning of 2021 a discussion was initiated in the Hungarian scientific community in order to renew the criteria system in the field. If this is completed, the importance of producing international and high-quality publications will be clear to Hungarian researchers, so it will be possible for Hungary to approach the average publication performance of the V4s in the BMA field.

The RPSA indicator, which considers all citation data, journal quality and aggregated co-authorship ratios, shows only a partly significant correlation with SciVal’s scholarly output. This is because SciVal only includes the number of Scopus publications in its researcher ranking. This suggests that the data in such databases should be treated with reservations in the scope of performance evaluation, although the raw data of such databases can be an indispensable basis for developing performance evaluation models. The results shown in Table 1 also confirmed that the predominantly h-index-based performance evaluation does not give a reliable result. This finding is supported by other previous research [43,62,63].

It is important to note that RPSA alone is not suitable for assessing the full performance of researchers. As its name suggests, it considers only one factor, albeit the most significant one, in comparing individual, institutional or national performance in an international environment: the co-authorship ratios and the impact of articles published in classified journals. The co-authorship ratio reflects the researchers’ efforts in a quantitative approach, while the average number of citations to these publications helps quantify their effect, which reflects the average quality of the researchers’ publications. It should be borne in mind that RPSA does not take into account contributions to other forms of publication, or activities related to organizing research, educating doctoral students, etc. The top 50 researchers from Hungary produce fewer publications than the top 50 from other countries, but this only partially determines their performance. When the quality of the journals and the citations of the publications are also considered, the difference in average publication performance between researchers from Hungary and those from the other countries is smaller. A further development of the scientific metrics database would be needed to determine more accurately the relative publication performance of countries based on data on all researchers in the countries. Implicitly, the analysis of the performance of the top 50 is only an illustration of their scientific efforts and the quality of their publishing activities. This publication quality is related to the degree of collaboration between researchers, but it cannot be analyzed with regard to top researchers alone. As we have seen when comparing the performance of the top 200, cooperation is the lowest among researchers from Poland and the highest among researchers from Slovakia.
It is possible, however, that the vertical composition of research teams differs significantly from country to country: in some countries, collaborations between researchers of the same rank (with similar career successes) may be more common, while in others senior researchers may give more support to junior researchers; the latter scenario is coupled with a lower cooperation ratio within the country’s top 50. In any case, it is clear from the data that, among the V4s, researchers from Poland produce Q1, Q2, and Q3 quality publications with the lowest average number of co-authors.

Publication performance cannot be established solely based on citations, the number of publications, and journal quartiles, as no performance evaluation system can do without non-quantifiable aspects [64]. In publishing practice, there may be unethical cases in which some authors use their influence to assert their interests in the publishing process. Certainly, the journal diversity index does not necessarily point to unethical procedures. However, if the quotient of the number of publications and the number of different journals publishing such studies (the degree of diversity of the journals) shows markedly and significantly distorted results, this may indicate the existence of such a risk. The lower the journal diversity index, the more the professional and personal relationship between the journal and the author may play a role in the successful publication process. It should be noted that the more intensive spread of OA journals also plays a role in the expected future decline in the journal diversity index. In addition, the number of direct citations does not necessarily provide reliable information about the scientific value of studies [65], and this figure is further distorted by the algorithms used by various online search engines such as Google Scholar.

The results also show that the importance of publishing articles of the highest quality (Q1) was recognized in all V4 countries, as the number of such studies is steadily increasing year by year. The aggregated co-authorship ratios achieved for such publications are still slightly below 25%, and publishing medium-quality articles (Q2 and Q3) is also a common practice. Thanks to their research experience, some of the top researchers are moving towards Q1 of their own accord, as their writing skills develop continuously in the course of their publishing activities. At the same time, further research is needed on how conscious researchers are when selecting journals (and journal quality), whether this awareness stems from their own goal setting, and to what extent researchers are guided by the criteria of performance evaluation systems. Science policy makers in each country bear great responsibility for applying criteria that contribute to a high level of international visibility of research results when defining the criteria of scholarships and funding schemes, as well as qualification requirements.

The advantages of the RPSA model are considered to be (1) simplicity, (2) comprehensiveness, and (3) fairness. The model is simple because it considers only the absolutely necessary quantitative and qualitative factors that have the greatest impact on performance (number of co-authors, quality of the journal, number of articles, number of citations). It is comprehensive because, although the logic of RPSA could also be applied to WoS, Scopus indexes a higher number of journals, and SCImago’s journal ranking system based on the Scopus database is an accepted and popular source of information worldwide. RPSA is considered fair in the sense that it ranks researchers’ publication performance based on public data, so that the performance evaluated through the above-mentioned dimensions becomes comparable and transparent. We also deem RPSA fair because it can reveal researcher behavior that is only meant to increase publication performance through academically questionable means.

RPSA also represents a new approach compared to other performance measurement models. For example, in the ERC application system, the output of publication performance is determined only by the number of articles, with no regard to the number of co-authors [66]. In the ERC evaluation, the field-normalized citation rate (FNCR) is of paramount importance. In any case, like RPSA, the ERC’s evaluation model favors international journal articles, in contrast to the approach of [67], who argued that publications, book chapters and conference articles have the same weight in publication performance. However, as the Australian example shows [68], such an approach clearly undermines scholars’ intention to be present in high-quality publication forums. In contrast, [69] only consider ISI articles in their model when calculating individual performance, regardless of citations, journal quality, or individual contribution to the article. The other extreme of evaluation systems is represented by the Norwegian Model, which strives for perfection by using weights for performance evaluation across different forms of publication [70]. This approach, however, raises the question of the reliability of the weights used. In contrast, a database can be built on the basis of RPSA that provides up-to-date information on researchers’ publication performance based on the qualitative and quantitative characteristics of Scopus journal articles. In other disciplines, the status of an article’s authors (e.g., single, first, last, corresponding) is more dominant [71]; this, however, does not significantly affect performance judgment in the field of BMA.

7. Conclusions

Among the top 200 scholars analyzed in the study there are some researchers from the V4 countries who are not economists or are not working primarily in the BMA field, but have been included in the list as their high-quality publishing performance can also be measured in the BMA in addition to their main research areas. This, however, distorts the results because the average citation indicators of the disciplines may differ significantly [22,72]. Therefore, there is a need for a system in which the research field can be recorded and filtered in a uniform way.

The RPSA indicator presented here was used to determine the relative publication performance of researchers in the BMA area, but it might also be applied to any discipline, since RPSA does not measure the effectiveness of research, or the importance or relevance of the published discovery, but only relative publication performance. At the same time, as this approach cannot handle differences in the specifics of fields, it is not suitable for comparing the publication performance of researchers in different fields. There are also limitations to RPSA’s use, as has been shown in the Discussion. No indicator is able to measure all kinds of research or publishing performance reliably, and neither is RPSA. However, this model may be a solution for judging performance on articles on a quantitative and qualitative basis. Certainly, it cannot replace expert performance evaluation, but it can serve as a basis for it.

Another unresolved problem is that, in addition to journal articles, researcher performance concerning other types of publications needs to be reliably measured, even though books, book chapters, and similar publication types are currently assessed on the basis of citations only (e.g., [73]) or the number of publications only (e.g., [74]). This is a particularly important issue in HASS disciplines, where book chapters and conference articles dominate individual publication lists.

Finally, we recommend that decision-makers of Central European science policy take an unambiguous position on the expectations about the different forms of publication in order to support predictable and plannable research progress and scientific development. They are also advised to communicate their position clearly so that such considerations can be integrated into researchers’ publication strategies. This requires horizontal and vertical coordination of performance appraisal systems, and conscious planning as far as the hierarchical nature and interrelatedness of such systems are concerned. We recommend that, in the scope of performance appraisal systems, publication-related requirements concerning habilitation (where applicable) and Doctor of Science (where applicable) qualifications should reflect, at least at a national level, the differences in prestige between such qualifications. In the absence of performance appraisal systems of this kind, the publication performance of researchers belonging to different generations will not be comparable either, which is difficult in itself anyway due to the differences in tasks and expectations associated with the various stages of a scholar’s life.

Funding

This article was supported by the János Bolyai Research Scholarship of the Hungarian Academy of Sciences.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Abbasi, A.; Chung, K.S.K.; Hossain, L. Egocentric analysis of co-authorship network structure, position and performance. Inf. Process. Manag. 2012, 48, 671–679. [Google Scholar] [CrossRef]

- Kato, M.; Ando, A. The relationship between research performance and international collaboration in chemistry. Scientometrics 2013, 97, 535–553. [Google Scholar] [CrossRef]

- Wouters, P.; Thelwall, M.; Kousha, K.; Waltman, L.; De Rijcke, S.; Rushforth, A.; Franssen, T. The Metric Tide: Literature Review (Supplementary Report I to the Independent Review of the Role of Metrics in Research Assessment and Management); HEFCE: Bristol, UK, 2015.

- Endenich, C.; Trapp, R. Cooperation for Publication? An Analysis of Co-authorship Patterns in Leading Accounting Journals. Eur. Account. Rev. 2016, 25, 613–633. [Google Scholar] [CrossRef]

- De Stefano, D.; Fuccella, V.; Vitale, M.P.; Zaccarin, S. The use of different data sources in the analysis of co-authorship networks and scientific performance. Soc. Netw. 2013, 35, 370–381. [Google Scholar] [CrossRef]

- Khor, K.A.; Yu, L.-G. Influence of international co-authorship on the research citation impact of young universities. Scientometrics 2016, 107, 1095–1110. [Google Scholar] [CrossRef] [Green Version]

- Ordonez-Matamoros, G.; Cozzens, S.E.; Garcia, M. International Co-Authorship and Research Team Performance in Colombia. Rev. Policy Res. 2010, 27, 415–431. [Google Scholar] [CrossRef] [Green Version]

- Abramo, G.; D’Angelo, C.A. The relationship between the number of authors of a publication, its citations and the impact factor of the publishing journal: Evidence from Italy. J. Informetr. 2015, 9, 746–761. [Google Scholar] [CrossRef] [Green Version]

- Smith, M.J.; Weinberger, C.; Bruna, E.M.; Allesina, S. The Scientific Impact of Nations: Journal Placement and Citation Performance. PLoS ONE 2014, 9, e109195. [Google Scholar] [CrossRef]

- Garfield, E. The History and Meaning of the Journal Impact Factor. J. Am. Med Assoc. 2006, 295, 90–93. [Google Scholar] [CrossRef] [PubMed]

- Martín-Martín, A.; Orduna-Malea, E.; Thelwall, M.; López-Cózar, E.D. Google Scholar, Web of Science, and Scopus: A systematic comparison of citations in 252 subject categories. J. Informet. 2018, 12, 1160–1177. [Google Scholar] [CrossRef] [Green Version]

- Thelwall, M. Do females create higher impact research? Scopus citations and Mendeley readers for articles from five countries. J. Informet. 2018, 12, 1031–1041. [Google Scholar] [CrossRef] [Green Version]

- Jurajda, S.; Kozubek, S.; Münich, D.; Skoda, S. Scientific publication performance in post-communist countries: Still lagging far behind. Scientometrics 2017, 112, 315–328. [Google Scholar] [CrossRef]

- Baum, J.A.C. Free-Riding on Power Laws: Questioning the validity of the Impact Factor as a measure of research quality in organization studies. Organization 2011, 18, 449–466. [Google Scholar] [CrossRef]

- Jarwal, S.D.; Brion, A.M.; King, M.L. Measuring research quality using the journal impact factor, citations and ‘Ranked Journals’: Blunt instruments or inspired metrics? J. High. Educ. Policy Manag. 2009, 31, 289–300. [Google Scholar] [CrossRef]

- Pranckutė, R. Web of Science (WoS) and Scopus: The Titans of Bibliographic Information in Today’s Academic World. Publications 2021, 9, 12. [Google Scholar] [CrossRef]

- Weale, A.R.; Bailey, M.; Lear, P.A. The level of non-citation of articles within a journal as a measure of quality: A comparison to the impact factor. BMC Med Res. Methodol. 2004, 4, 14. [Google Scholar] [CrossRef] [Green Version]

- Guerrero-Bote, V.P.; Anegón, F.D.M. A further step forward in measuring journals’ scientific prestige: The SJR2 indicator. J. Informet. 2012, 6, 674–688. [Google Scholar] [CrossRef] [Green Version]

- González-Pereira, B.; Guerrero-Bote, V.P.; Anegón, F.D.M. A new approach to the metric of journals’ scientific prestige: The SJR indicator. J. Informet. 2010, 4, 379–391. [Google Scholar] [CrossRef]

- Sheikh, A. Publication ethics and the research assessment exercise: Reflections on the troubled question of authorship. J. Med Ethics 2000, 26, 422–426. [Google Scholar] [CrossRef] [Green Version]

- Kyvik, S. The academic researcher role: Enhancing expectations and improved performance. High. Educ. 2013, 65, 525–538. [Google Scholar] [CrossRef]

- Hammarfelt, B.; Haddow, G. Conflicting measures and values: How humanities scholars in Australia and Sweden use and react to bibliometric indicators. J. Assoc. Inf. Sci. Technol. 2018, 69, 924–935. [Google Scholar] [CrossRef]

- Loyarte-López, E.; García-Olaizola, I.; Posada, J.; Azúa, I.; Flórez-Esnal, J. Enhancing Researchers’ Performance by Building Commitment to Organizational Results. Res. Manag. 2020, 63, 46–54. [Google Scholar] [CrossRef]

- Vinkler, P. Correlation between the structure of scientific research, scientometric indicators and GDP in EU and non-EU countries. Scientometrics 2008, 74, 237–254. [Google Scholar] [CrossRef]

- Jokić, M. Productivity, visibility, authorship, and collaboration in library and information science journals: Central and Eastern European authors. Scientometrics 2019, 122, 1189–1219. [Google Scholar] [CrossRef]

- Kozak, M.; Bornmann, L.; Leydesdorff, L. How have the Eastern European countries of the former Warsaw Pact developed since 1990? A bibliometric study. Scientometrics 2015, 102, 1101–1117. [Google Scholar] [CrossRef] [Green Version]

- Herrera-Franco, G.; Montalván-Burbano, N.; Carrión-Mero, P.; Apolo-Masache, B.; Jaya-Montalvo, M. Research Trends in Geotourism: A Bibliometric Analysis Using the Scopus Database. Geosciences 2020, 10, 379. [Google Scholar] [CrossRef]

- Erfanmanesh, M. Status and quality of open access journals in Scopus. Collect. Build. 2017, 36, 155–162. [Google Scholar] [CrossRef]

- Krauskopf, E. An analysis of discontinued journals by Scopus. Scientometrics 2018, 116, 1805–1815. [Google Scholar] [CrossRef]

- Falagas, M.E.; Kouranos, V.D.; Arencibia-Jorge, R.; Karageorgopoulos, D. Comparison of SCImago journal rank indicator with journal impact factor. FASEB J. 2008, 22, 2623–2628. [Google Scholar] [CrossRef]

- Jacsó, P. Comparison of journal impact rankings in the SCImago Journal & Country Rank and the Journal Citation Reports databases. Online Inf. Rev. 2010, 34, 642–657. [Google Scholar]

- Villaseñor-Almaraz, M.; Islas-Serrano, J.; Murata, C.; Roldan-Valadez, E. Impact factor correlations with Scimago Journal Rank, Source Normalized Impact per Paper, Eigenfactor Score, and the CiteScore in Radiology, Nuclear Medicine & Medical Imaging journals. La Radiol. Med. 2019, 124, 495–504. [Google Scholar]

- Copiello, S. On the skewness of journal self-citations and publisher self-citations: Cues for discussion from a case study. Learn. Publ. 2019, 32, 249–258. [Google Scholar] [CrossRef]

- Duc, N.; Hiep, D.; Thong, P.; Zunic, L.; Zildzic, M.; Donev, D.; Jankovic, S.; Hozo, I.; Masic, I. Predatory Open Access Journals are Indexed in Reputable Databases: A Revisiting Issue or an Unsolved Problem. Med. Arch. 2020, 74, 318–322. [Google Scholar] [CrossRef]

- Antelman, K. Do Open-Access Articles Have a Greater Research Impact? Coll. Res. Libr. 2004, 65, 372–382. [Google Scholar] [CrossRef]

- Da Silva, J.A.T.; Memon, A.R. CiteScore: A cite for sore eyes, or a valuable, transparent metric? Scientometrics 2017, 111, 553–556. [Google Scholar] [CrossRef]

- Parish, A.J.; Boyack, K.; Ioannidis, J.P.A. Dynamics of co-authorship and productivity across different fields of scientific research. PLoS ONE 2018, 13, e0189742.

- Li, W.; Aste, T.; Caccioli, F.; Livan, G. Early coauthorship with top scientists predicts success in academic careers. Nat. Commun. 2019, 10, 5170.

- Kim, K.-W. Measuring international research collaboration of peripheral countries: Taking the context into consideration. Scientometrics 2006, 66, 231–240.

- Abbasi, A.; Altmann, J.; Hossain, L. Identifying the effects of co-authorship networks on the performance of scholars: A correlation and regression analysis of performance measures and social network analysis measures. J. Informet. 2011, 5, 594–607.

- Mena-Chalco, J.P.; Digiampietri, L.A.; Lopes, F.M.; Cesar, R.M., Jr. Brazilian bibliometric coauthorship networks. J. Assoc. Inf. Sci. Technol. 2014, 65, 1424–1445.

- Ductor, L. Does Co-authorship Lead to Higher Academic Productivity? Oxf. Bull. Econ. Stat. 2015, 77, 385–407.

- Bornmann, L.; Marx, W. The h index as a research performance indicator. Eur. Sci. Ed. 2011, 37, 77–80.

- Glänzel, W. On the h-index–A mathematical approach to a new measure of publication activity and citation impact. Scientometrics 2006, 67, 315–321.

- Batista, P.D.; Campiteli, M.G.; Kinouchi, O. Is it possible to compare researchers with different scientific interests? Scientometrics 2006, 68, 179–189.

- Schreiber, M. Self-citation corrections for the Hirsch index. Europhys. Lett. 2007, 78, 30002.

- Zhang, C.-T. The e-Index, Complementing the h-Index for Excess Citations. PLoS ONE 2009, 4, e5429.

- Hollis, A. Co-authorship and the output of academic economists. Labour Econ. 2001, 8, 503–530.

- Li, E.Y.; Liao, C.H.; Yen, H.R. Co-authorship networks and research impact: A social capital perspective. Res. Policy 2013, 42, 1515–1530.

- Koseoglu, M.A. Growth and structure of authorship and co-authorship network in the strategic management realm: Evidence from the Strategic Management Journal. Bus. Res. Q. 2016, 19, 153–170.

- Ronda-Pupo, G.A. The effect of document types and sizes on the scaling relationship between citations and co-authorship patterns in management journals. Scientometrics 2017, 110, 1191–1207.

- Lin, W.-Y.C.; Huang, M.-H. The relationship between co-authorship, currency of references and author self-citations. Scientometrics 2011, 90, 343–360.

- Chatzimichael, K.; Kalaitzidakis, P.; Tzouvelekas, V. Measuring the publishing productivity of economics departments in Europe. Scientometrics 2017, 113, 889–908.

- McCabe, M.J.; Snyder, C.M. Identifying the Effect of Open Access on Citations Using a Panel of Science Journals. Econ. Inq. 2014, 52, 1284–1300.

- Aldieri, L.; Kotsemir, M.; Vinci, C.P. The impact of research collaboration on academic performance: An empirical analysis for some European countries. Socio-Econ. Plan. Sci. 2018, 62, 13–30.

- Sasvári, P.; Nemeslaki, A.; Duma, L. Exploring the influence of scientific journal ranking on publication performance in the Hungarian social sciences: The case of law and economics. Scientometrics 2019, 119, 595–616.

- NKFIH (2020). “Az “OTKA” Kutatási Témapályázatok 2020. Évi Nyertesei (K_20)”. “Winners of the “OTKA” Research Topic Competitions, 2020 (K_20)”. Available online: https://nkfih.gov.hu/palyazoknak/nkfi-alap/tamogatott-projektek-k20 (accessed on 27 April 2021).

- NKFIH (2020). “Az “OTKA” Fiatal Kutatói Kiválósági Program 2020. Évi Nyertesei (FK_20)”. “Winners of the “OTKA” Young Researcher Excellence Program, 2020 (FK_20)”. Available online: https://nkfih.gov.hu/palyazoknak/nkfi-alap/tamogatott-projektek-fk20 (accessed on 27 April 2021).

- NKFIH (2021). “Felhívás “OTKA” Kutatási Témapályázathoz”. “Call for “OTKA” research topic competition”. Available online: https://nkfih.gov.hu/palyazoknak/nkfi-alap/kutatasi-temapalyazat-k21/palyazati-felhivas (accessed on 27 April 2021).

- Győrffy, B.; Herman, P.; Szabó, I. Research funding: Past performance is a stronger predictor of future scientific output than reviewer scores. J. Informet. 2020, 14, 101050.

- Győrffy, B.; Csuka, G.; Herman, P.; Török, Á. Is there a golden age in publication activity? An analysis of age-related scholarly performance across all scientific disciplines. Scientometrics 2020, 124, 1081–1097.

- Ding, J.; Liu, C.; Kandonga, G.A. Exploring the limitations of the h-index and h-type indexes in measuring the research performance of authors. Scientometrics 2020, 122, 1303–1322.

- Waltman, L.; Van Eck, N.J. The inconsistency of the h-index. J. Am. Soc. Inf. Sci. Technol. 2012, 63, 406–415.

- Crespo, N.; Simoes, N. Publication Performance through the Lens of the h-index: How Can We Solve the Problem of the Ties? Soc. Sci. Q. 2019, 100, 2495–2506.

- Klosik, D.F.; Bornholdt, S. The Citation Wake of Publications Detects Nobel Laureates’ Papers. PLoS ONE 2014, 9, e113184.

- Neufeld, J.; Huber, N.; Wegner, A. Peer review-based selection decisions in individual research funding, applicants’ publication strategies and performance: The case of the ERC Starting Grants. Res. Eval. 2013, 22, 237–247.

- Diamantopoulos, A. A model of the publication performance of marketing academics. Int. J. Res. Mark. 1996, 13, 163–180.

- Butler, L. What Happens When Funding is Linked to Publication Counts. In Handbook of Quantitative Science and Technology Research; Moed, H.F., Glänzel, W., Schmoch, U., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2004; pp. 340–389.

- Jacobsen, C.B.; Andersen, L.B. Performance Management for Academic Researchers: How Publication Command Systems Affect Individual Behavior. Rev. Public Pers. Adm. 2014, 34, 84–107.

- Aagaard, K.; Bloch, C.W.; Schneider, J.W. Impacts of performance-based research funding systems: The case of the Norwegian Publication Indicator. Res. Eval. 2015, 24, 106–117.

- Carpenter, C.; Cone, D.C.; Sarli, C.C. Using Publication Metrics to Highlight Academic Productivity and Research Impact. Acad. Emerg. Med. 2014, 21, 1160–1172.

- Nederhof, A.J. Bibliometric monitoring of research performance in the Social Sciences and the Humanities: A Review. Scientometrics 2006, 66, 81–100.

- Butler, L.; Visser, M. Extending citation analysis to non-source items. Scientometrics 2006, 66, 327–343.

- Mayer, S.J.; Rathmann, J.M.K. How does research productivity relate to gender? Analyzing gender differences for multiple publication dimensions. Scientometrics 2018, 117, 1663–1693.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).