The Economic Impacts of Open Science: A Rapid Evidence Assessment

Abstract

1. Introduction

- To identify the types of direct and indirect economic impact that open science has been empirically demonstrated to have.

- To identify the mechanisms by which economic impacts come about.

- To identify the contextual factors that affect whether or not (and the extent to which) economic impacts occur, and the extent to which the open science approach was a necessary condition of their occurring.

- To assess the magnitude and relative importance of different types of impact (positive and negative).

- To identify methods by which economic impacts have been (or have been suggested to be) recorded and/or quantified, and how counterfactuals (i.e., non-open science approaches) have been estimated.

- To identify policies (or other interventions including [but not limited to] clearer/better communications about knowledge exchange and open science) which can help maximize the economic benefits and reduce the costs associated with open science.

- To identify trade-offs between economic and other (e.g., academic, societal) impacts.

2. Materials and Methods

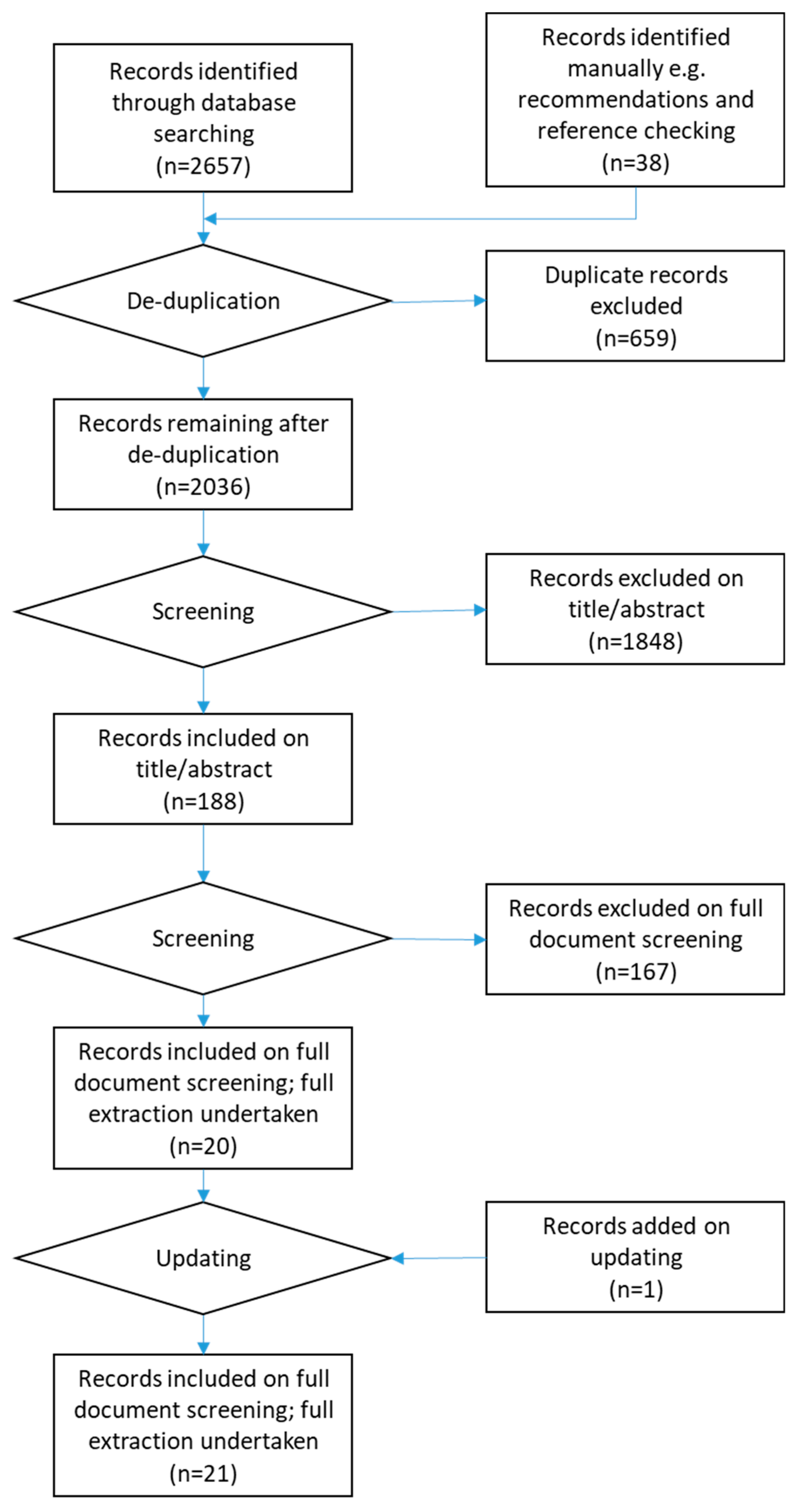

2.1. Search, Screening and Quality Assessment

- Scopus

- Web of Science (all databases)

- ScienceDirect

- JISC

- UK Government website (gov.uk)

- Innovate UK

- UK Research Council and HEFCE websites

- Google Scholar (searches of title only, limited to review of first 300 results [14])

- Open Data Institute

- Digital Curation Centre (DCC)

- Nesta

- Centre for Open Science

- Open Research Funders Group

- Open Scholarship Initiative

- Open Access Bibliography

- Open Access Directory

- Universities UK

- OECD Library

- Europa.eu

- European Universities Association

2.2. Extraction

- Organizational affiliation of authors

  - Academia

  - Industry

  - Governmental

  - Other non-governmental

- Study country

- Funding source (if applicable)

- Coverage

  - Open access publishing

  - Open research data

  - Other

- Key aims

- Data collection and analysis methods

  - Interviews

  - Social surveys

  - Macroeconomic modelling

  - Cost–benefit analysis

  - Case study

  - Other

- Key findings (on economic impact)

- Relevant policies cited

- Recommendations

- Quality assessment (scored 0–2)

  - Transparency

  - Accuracy

  - Purposivity

  - Utility

  - Propriety

  - Accessibility

  - Specificity
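The extraction fields listed above could be held in a simple structured record. The following is a minimal sketch only: the class name `ExtractionRecord`, its field names, and the summed total are hypothetical and are not taken from the review, which states only that each quality criterion was scored 0–2.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Criterion names follow the extraction list; the 0-2 scale is from the source.
QUALITY_CRITERIA = (
    "transparency", "accuracy", "purposivity", "utility",
    "propriety", "accessibility", "specificity",
)


@dataclass
class ExtractionRecord:
    """Hypothetical container for the data extracted from one source."""
    reference: str
    affiliation: str            # e.g. "Academia", "Industry"
    study_country: str
    coverage: List[str]         # e.g. ["Open research data"]
    methods: List[str]          # e.g. ["Interviews", "Case study"]
    quality: Dict[str, int] = field(default_factory=dict)

    def validate(self) -> None:
        """Check that every criterion is present and scored 0, 1 or 2."""
        missing = [c for c in QUALITY_CRITERIA if c not in self.quality]
        if missing:
            raise ValueError(f"missing quality scores: {missing}")
        for name, score in self.quality.items():
            if name not in QUALITY_CRITERIA:
                raise ValueError(f"unknown criterion: {name}")
            if score not in (0, 1, 2):
                raise ValueError(f"{name} must be 0, 1 or 2, got {score}")

    def quality_total(self) -> int:
        # Summing the scores is an illustrative assumption; the source
        # does not say whether scores were aggregated.
        self.validate()
        return sum(self.quality[c] for c in QUALITY_CRITERIA)
```

Validating the scores on entry keeps an out-of-range value from silently distorting any later comparison between sources.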

2.3. Synthesis

2.4. Updating

2.5. Description of Results

3. General Review Summary

4. Methods for Assessing Economic Impacts

4.1. Question-Based Approaches

4.2. Cost–Benefit Analysis (and Similar) Approaches

5. Economic Impacts

- Efficiency means getting the same output from research or innovation for less input (principally public research funding). For example, if open access publishing can be shown to allow access to research findings for the same number of researchers for a lower overall cost, this would represent an efficiency saving. While this review is not principally concerned with economic impacts within the university/publishing sector, such efficiencies are also relevant to wider economic impacts through improved returns to R&D.

- Enablement signifies ways in which open science approaches have led to economically impactful activities which would have been less likely to occur in a closed environment.
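The efficiency definition above can be illustrated with a small calculation. This is a sketch under stated assumptions: the function names and all figures are hypothetical and are not drawn from any study in the review.

```python
def cost_per_researcher(total_cost: float, researchers: int) -> float:
    """Access cost divided by the number of researchers served."""
    if researchers <= 0:
        raise ValueError("researchers must be positive")
    return total_cost / researchers


def relative_saving(baseline_cost: float, open_cost: float) -> float:
    """Fraction of the baseline cost saved when output is unchanged."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return (baseline_cost - open_cost) / baseline_cost


# Hypothetical figures: the same 5000 researchers keep access,
# but the overall cost falls from 1,000,000 to 800,000.
baseline = cost_per_researcher(1_000_000, 5000)
with_open_access = cost_per_researcher(800_000, 5000)
saving = relative_saving(1_000_000, 800_000)
```

Holding the number of researchers fixed is what makes the cost difference an efficiency saving in the sense defined above, rather than a change in output.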

5.1. Efficiency

5.1.1. Access Cost Savings

5.1.2. Labour Cost Savings or Productivity Improvements

5.1.3. Other Efficiency Benefits

5.2. Enablement

5.2.1. New Products/Services/Companies

5.2.2. Collaborations

5.2.3. Permitting Work

- Accessibility, or the extent to which research findings can be accessed by users.

- Efficiency, or the extent to which R&D generates knowledge that is useful socially or economically.

5.3. Costs and Challenges

5.4. Contextual Issues

5.5. Recommendations Captured in the Review

5.6. Study Limitations

6. Conclusions and Recommendations

6.1. Conclusions

- Accessibility, or the extent to which research findings can be accessed by users.

- Efficiency, or the extent to which R&D generates knowledge that is useful socially or economically.

6.2. Recommendations

Supplementary Materials

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Ref | Source | Study Aims and Approach |
|---|---|---|
| [15] | Beagrie, N. & Houghton, J. The Value and Impact of Data Sharing and Curation: A synthesis of three recent studies of UK research data centres. (Jisc, 2014). | A synthesis of three similar studies, the full report for each of which was also drawn on in this review [27,35,36]. They are treated together here as they formed part of a programme of work with very similar aims and methods. Aims: To identify the value for, and impacts on, users and depositors of three research data repositories: The Economic and Social Data Service, the Archaeology Data Service, and the British Atmospheric Data Centre. Methods: |
| [16] | Beagrie, N. & Houghton, J. The value and impact of the European Bioinformatics Institute. (EMBL-EBI, 2016). | Aims: To explore the costs and cost savings of the EMBL-EBI, its value to users, and wider impacts. Methods: |
| [24] | CEPA LLP & Mark Ware Consulting Ltd. Heading for the open road: costs and benefits of transitions in scholarly communications. (Research Information Network (RIN), JISC, Research Libraries UK (RLUK), the Publishing Research Consortium (PRC) and the Wellcome Trust, 2011). | This work is primarily focused on informing transitions to open access. Aims: Provide evidence to inform scholarly communications stakeholders around dynamics of transition towards improved access to research outputs. Methods: Regarding impacts beyond the scholarly communications system, the Solow–Swan model approach is used to quantify potential UK economy-wide impacts under different scenarios. |
| [62] | Chataway, J., Parks, S. & Smith, E. How Will Open Science Impact on University-Industry Collaborations? Foresight and STI Governance 11, 44–53 (2017). | Aims: To consider the possible impacts of open science approaches on university-industry collaboration. Methods: This was not an empirical study, but combines findings from previous research to develop and support arguments. |
| [78] | Giovani, B. Open Data for Research and Strategic Monitoring in the Pharmaceutical and Biotech Industry. Data Science Journal 16, (2017). | Aims: To explore how to extract value from data in the biotech sector, and how companies manage intellectual property so as to benefit from open data while also protecting their business. Methods: Interviews and an online survey. Response rates were very small and limited methodological detail is supplied. |
| [79] | Houghton, J. Open Access: What are the Economic Benefits? A Comparison of the United Kingdom, Netherlands and Denmark. (Social Science Research Network, 2009). | Applied the same approach as [23], but also encompassing the Netherlands and Denmark. |
| [45] | Houghton, J. & Sheehan, P. The economic impact of enhanced access to research findings. (Centre for Strategic Economic Studies, Victoria University, 2006). | Aims: To assess the value of increasing open access to research findings in OECD countries. Methods: |
| [18] | Houghton, J., Swan, A. & Brown, S. Access to research and technical information in Denmark. (2011). | Aims: To examine access to, and use of, technical information by knowledge-based SMEs in Denmark, as well as identifying barriers, costs and benefits. Approach: |
| [23] | Houghton, J. et al. Economic implications of alternative scholarly publishing models: Exploring the costs and benefits. (Jisc, 2009). | Aims: To identify costs and benefits of open access within scholarly publishing, as well as to the UK economy more broadly. Methods (relating to broader economic impacts outside the scholarly communications ecosystem, which are the subject of this review): |
| [59] | Johnson, P. A., Sieber, R., Scassa, T., Stephens, M. & Robinson, P. The Cost(s) of Geospatial Open Data. Transactions in GIS 21, 434–445 (2017). | This source does not focus on research data specifically, but was included as it provides a useful perspective on costs associated with open data. Aims: To identify externalized and unintentional impacts of open data. Methods: This was not an empirical study, but combines findings from previous research to develop and support arguments. |
| [25] | Jones, M. M. et al. The Structural Genomics Consortium: A Knowledge Platform for Drug Discovery. 19 (RAND Corporation, 2014). | Aims: To understand the nature and diversity of benefits gained through the Structural Genomics Consortium both for partners and the wider research community, including consideration of relative merits of the open vs more closed models of operation. Methods: |
| [30] | Lateral Economics. Open for Business: How Open Data Can Help Achieve the G20 Growth Target. (Omidyar Network, 2014). | Aims: Quantify and illustrate potential of open data (in general, but including research data) to meet G20’s growth target (with focus on Australia), and to estimate what proportion of the target open data policy could deliver. Methods: |
| [17] | McDonald, D. & Kelly, U. Value and benefits of text mining. (Jisc, 2017). | Aims: To explore value and benefits of text mining under existing and alternative licensing conditions, including consideration of costs, risks, and barriers. Methods: |
| [64] | Mueller-Langer, F. & Andreoli-Versbach, P. Open access to research data: Strategic delay and the ambiguous welfare effects of mandatory data disclosure. Information Economics and Policy 42, 20–34 (2018). | Aims: To investigate the effects of mandatory data disclosure policies on researcher decisions (e.g., around publication and data disclosure). Methods: Mathematical modelling of researcher decisions. |
| [21] | ODI. Open data means business. (Open Data Institute, 2015). | Aims: To identify use of open data by UK companies (without specific focus on research data, but use of scientific and research data is considered). Methods: |
| [19] | Parsons, D., Willis, D. & Holland, J. Benefits to the private sector of open access to higher education and scholarly research. (Jisc, 2011). | Aims: To identify and quantify benefits to the private sector of open access to university research outputs, along with the enablers of these benefits, mechanisms and contextual factors. Methods: |
| [49] | RAND Europe. Open Science Monitoring Impact Case Study—Structural Genomics Consortium. (European Commission Directorate-General for Research and Innovation, 2017). | Aims: To give an overview of the impact of the Structural Genomics Consortium. Methods: This was not an empirical study, but combines findings from previous research to describe impacts (including economic/financial). |
| [26] | Sullivan, K. P., Brennan-Tonetta, P. & Marxen, L. J. Economic Impacts of the Research Collaboratory for Structural Bioinformatics (RCSB) Protein Data Bank. RCSB Protein Data Bank (2017). doi:10.2210/rcsb_pdb/pdb-econ-imp-2017 | Aims: To examine the value and economic impacts of the RCSB. Methods: This study largely followed the research approach set out in [26]. Information on (for example) willingness to pay and salary costs was transferred directly from that study, while usage data were based on usage of the RCSB website. |
| [8] | Tennant, J. P. et al. The academic, economic and societal impacts of Open Access: an evidence-based review. F1000Research 5, 632 (2016). | Aims: To review evidence on academic, economic and social impacts of open access publishing, including brief consideration of open research data. Methods: This was not an empirical study, but gives examples of impacts based on a non-systematic literature review. A short section is included considering evidence on economic impacts on non-publishers. |
| [22] | Tripp, S. & Grueber, M. Economic Impact of the Human Genome Project. (Battelle Memorial Institute, 2011). | Aims: To assess the economic (and other) impacts of genome sequencing. Methods: Input-output modelling based around direct investment in the HGP, investments in follow-on research connected with the HGP, and the wider genomics industry developed and fostered through the HGP. |
| [20] | Tuomi, L. Impact of the Finnish Open Science and Research Initiative (ATT). (Profitmakers Ltd., 2016). | Aims: To analyse the impacts of the Open Science and Research Initiative both nationally and internationally, and to offer recommendations for the remainder of the programme. Methods: |

Appendix B

| Database (+#) | Date | String | Hits | Comments |

|---|---|---|---|---|

| Scopus 1 | 20/09/17 | TITLE-ABS (“open scien*” OR “open data” OR “open research data” OR (“open access” W/1 publ* OR paper* OR journal* OR book*) OR “open metric*”) OR TITLE (“open access”) AND TITLE-ABS-KEY (econom* OR financ* OR cost* OR mone*) | 1926 | Removed keywords from first part as some papers with open data tag that fact there. |

| Web of Science 1 | 20/09/17 | (TS = (“open scien*” OR “open data” OR “open research data” OR “open access publ*” OR “open access paper*” OR “open access journal*” OR “open access book*” OR “open metric*”) OR TI = (“open access”)) AND TS = (econom* OR financ* OR cost* OR mone*) Timespan: All years. Indexes: SCI-EXPANDED, SSCI, A&HCI, CPCI-S, CPCI-SSH, BKCI-S, BKCI-SSH, ESCI, CCR-EXPANDED, IC. | 982 | |

| Science Direct 1 | 20/09/17 | (tak (“open scien*” OR “open data” OR “open research data” OR “open access publ*” OR “open access paper*” OR “open access journal*” OR “open access book*” OR “open metric*”) OR ttl(“open access”)) AND tak (econom* OR financ* OR cost* OR mone*) | 197 | Unclear why this is so limited compared to Scopus. |

| JISC 1 (via Google) | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:jisc.ac.uk filetype:pdf | 60 (2 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| Gov.uk (via Google) | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” “research” -”open-access-land” site:gov.uk filetype:pdf | 33,100 (15 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. Excluding departmental OD strategies except BIS. Reviewed until 5 pages passed with no relevant material. |

| Innovate UK | 20/09/17 | N/A—hosted at gov.uk so would be picked up by above. | ||

| AHRC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:ahrc.ac.uk filetype:pdf | 51 (7 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| BBSRC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:bbsrc.ac.uk filetype:pdf | 60 (3 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| ESRC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:esrc.ac.uk filetype:pdf | 78 (1 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| EPSRC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:epsrc.ac.uk filetype:pdf | 74 (5 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| MRC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:mrc.ac.uk filetype:pdf | 107 (1 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| NERC 1 | 20/09/17 | “open science” OR “open data” OR “open research data” site:nerc.ac.uk filetype:pdf | 124 (8 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. NOTE “open access” removed for this search as many open access papers included and over 2000 hits. |

| STFC 1 | 20/09/17 | “open science” OR “open access” OR “open data” OR “open research data” site:stfc.ac.uk filetype:pdf | 65 (2 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| HEFCE 1 | 21/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:hefce.ac.uk filetype:pdf | 10 (0 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| Google Scholar 1 | 21/9/17 | allintitle: economic “open science” OR “open access” OR “open data” OR “open research data” | 199 (7 saved) | Downloaded sources judged to have a chance of passing screening. |

| Google Scholar 2 | 21/9/17 | allintitle: impact “open science” OR “open data” OR “open research data” | 90 (5 saved) | Downloaded sources judged to have a chance of passing screening. Removed ‘open access’ as many articles on citation impact. |

| ODI 1 | 21/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:theodi.org filetype:pdf | 44 (0 saved) | Downloaded sources judged to have a chance of passing screening. |

| ODI 2 | 21/9/17 | “open research data” site:theodi.org | 4 (0 saved) | |

| DCC 1 | 21/9/17 | impact “open science” OR “open access” OR “open data” OR “open research data” site:dcc.ac.uk | 2480 (0 saved) | First 100 hits reviewed. |

| Nesta 1 | 21/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:nesta.org.uk filetype:pdf | 143 (2 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| Nesta 2 | 21/9/17 | impacts “open science” OR “open access” OR “open data” OR “open research data” site:nesta.org.uk | 85 (1 saved) | Downloaded sources judged to have a chance of passing screening. |

| Centre for Open Science 1 | 21/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:cos.io | 120 (0 saved) | |

| Open Research Funders Group 1 | 21/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:orfg.org | 5 (0 saved) | |

| Open Access Bibliography 1 | 21/9/17 | Scanned through bibliography, more on impacts on publishers/institutions. | ||

| Universities UK 1 | 27/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:universitiesuk.ac.uk filetype:pdf | 38 (1 saved) | Limited to PDF, downloaded sources judged to have a chance of passing screening. |

| Universities UK 2 | 27/9/17 | impacts “open science” OR “open access” OR “open data” OR “open research data” site:universitiesuk.ac.uk | 29 (0 saved) | |

| OECD Library 1 | 27/9/17 | impacts “open science” OR “open access” OR “open data” OR “open research data” site:oecd-ilibrary.org | 46 (4 saved) | Downloaded sources judged to have a chance of passing screening. |

| OECD Library 2 | 27/9/17 | “open research data” OR “open data” OR “open access” in title/abstract. | 24 (2 saved) | Search on OECD Library advanced search. |

| Europa.eu 1 | 27/9/17 | “economic impacts” “open science” OR “open access” OR “open data” OR “open research data” site:europa.eu | 2670 (7 saved) | First 50 results reviewed, progressed to next search. |

| Europa.eu 2 | 27/9/17 | impacts “open science” OR “open access” OR “open data” OR “open research data” site:europa.eu | 49,000 (12 saved) | First 100 results reviewed. |

| Europa.eu 3 | 27/9/17 | “open research data” site:europa.eu | 2800 (1 saved) | Link saved is to EU Open Research Data Pilot—to look at in general. |

| EUA 1 | 27/9/17 | “open science” OR “open access” OR “open data” OR “open research data” site:eua.be | 514 (0 saved) | More on implications for universities. |

| Google 1 | 27/9/17 | “economic impacts” “open science” OR “open access” OR “open research data” filetype:pdf | 81300 (6 saved) | First 80 results reviewed (until no relevant links for several pages). |

| Google 2 | 27/9/17 | “economic impacts” “open science” OR “open access” OR “open research data” | 184k (7 saved) | First 80 results reviewed (until no relevant links for several pages). Did not download some docs which were also identified in other searches. |

| Google 3 | 27/9/17 | economic impacts “open science” OR “open access” OR “open research data” | 59m | No additional useful docs identified in first 50 results. |

| Scopus 2 | 27/9/17 | TITLE-ABS (“open scien*” OR “open data” OR “open research data” OR (“open access” W/1 publ* OR paper* OR journal* OR book*) OR “open metric*”) OR TITLE (“open access”) AND TITLE-ABS-KEY (cba OR bca OR “input-output” OR “general equilibrium” OR “return on investment” OR “growth accounting”) | 21 | Terms suggested by reviewer. |

| Scopus 3 | 5/12/17 | ALL (“open access” W/2 “research data”) | 90 | Added to include this term. |

| Scopus 4 | 13/4/19 | TITLE-ABS-KEY (“open scien*” OR “open data” OR “open research data” OR (“open access” W/1 publ* OR paper* OR journal* OR book*) OR “open metric*”) OR TITLE (“open access”) AND TITLE-ABS-KEY (econom* OR financ* OR cost* OR mone* OR cba OR bca OR “input-output” OR “general equilibrium” OR “return on investment” OR “growth accounting”) AND (LIMIT-TO (PUBYEAR, 2019) OR LIMIT-TO (PUBYEAR, 2018)) | 628 | Update search. Items were screened by title and abstract in Scopus, and 11 which met initial criteria were downloaded. |

| Google 4 | 13/4/19 | “economic impacts” “open science” OR “open research data” filetype:pdf | No value provided (3 saved) | Update search. Limited to 2018 onwards. First 80 results reviewed (until no relevant links for several pages). |

| Google 5 | 13/4/19 | “economic impacts” “open science” OR “open research data” | No value provided (0 saved) | Update search. Limited to 2018 onwards. First 80 results reviewed. |
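Many of the grey-literature searches in the table above share one pattern: a set of quoted terms joined by OR, optionally preceded by a keyword and followed by `site:` and `filetype:` restrictions. The sketch below shows one way such strings could be assembled consistently. The function `build_site_query` and the constant `OPEN_TERMS` are hypothetical names introduced for illustration; the review does not describe any tooling for this step, and straight quotes are used in place of the typographic quotes shown in the table.

```python
from typing import Optional, Sequence

# The four phrases used in most of the site-restricted searches above.
OPEN_TERMS = ("open science", "open access", "open data", "open research data")


def build_site_query(
    terms: Sequence[str],
    site: Optional[str] = None,
    filetype: Optional[str] = None,
    prefix: str = "",
) -> str:
    """Join quoted phrases with OR and append optional search restrictions."""
    parts = []
    if prefix:
        parts.append(prefix)  # e.g. "impacts", placed before the phrases
    parts.append(" OR ".join(f'"{term}"' for term in terms))
    if site:
        parts.append(f"site:{site}")
    if filetype:
        parts.append(f"filetype:{filetype}")
    return " ".join(parts)


# Corresponds to the pattern of the "JISC 1" row.
jisc_query = build_site_query(OPEN_TERMS, site="jisc.ac.uk", filetype="pdf")

# Corresponds to the pattern of the "Nesta 2" row.
nesta_query = build_site_query(OPEN_TERMS, site="nesta.org.uk", prefix="impacts")
```

Generating the strings from one term list makes deliberate deviations, such as dropping "open access" for the NERC search, explicit rather than easy to miss.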

References

- Research Councils UK. RCUK Policy on Open Access and Supporting Guidance. 2013. Available online: https://www.ukri.org/files/legacy/documents/rcukopenaccesspolicy-pdf/ (accessed on 27 June 2019).

- Higher Education Funding Council for England; Research Councils UK; Universities UK. Wellcome Concordat on Open Research Data. 2016. Available online: https://www.ukri.org/files/legacy/documents/concordatonopenresearchdata-pdf/ (accessed on 27 June 2019).

- European Open Science Cloud EOSC Declaration. 2017. Available online: https://ec.europa.eu/ (accessed on 27 June 2019).

- European Commission Open Science Monitor. Available online: https://ec.europa.eu/info/research-and-innovation/strategy/goals-research-and-innovation-policy/open-science/open-science-monitor_en (accessed on 12 April 2019).

- Doyle, M.F. H.R.3427—115th Congress (2017–2018): Fair Access to Science and Technology Research Act of 2017. Available online: https://www.congress.gov/bill/115th-congress/house-bill/3427 (accessed on 14 April 2019).

- Elsabry, E. Claims about benefits of open access to society (Beyond Academia). Expand. Perspect. Open Sci. Commun. C. Diver. Concepts Pract. 2017, 34–43. [Google Scholar] [CrossRef]

- ElSabry, E. Who needs access to research? Exploring the societal impact of open access. Rev. Fr. Sci. l’inform. Commun. 2017, 11. [Google Scholar] [CrossRef]

- Tennant, J.P.; Waldner, F.; Jacques, D.C.; Masuzzo, P.; Collister, L.B.; Hartgerink, C.H. The academic, economic and societal impacts of Open Access: an evidence-based review. F1000Research 2016, 5, 632. [Google Scholar] [CrossRef] [PubMed]

- OECD. Making Open Science a Reality; Organisation for Economic Co-Operation and Development: Paris, France, 2015. [Google Scholar]

- Vicente-Saez, R.; Martinez-Fuentes, C. Open Science now: A systematic literature review for an integrated definition. J. Bus. Res. 2018, 88, 428–436. [Google Scholar] [CrossRef]

- Wilson, S.; Sonderegger, S. Understanding the Behavioural Drivers of Organisational Decision-Making: Rapid Evidence Assessment; HM Government: London, UK, 2016.

- RAND Europe. What Works in Changing Energy-Using Behaviours in the Home? A Rapid Evidence Assessment; Department of Energy and Climate Change: London, UK, 2012.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ 2009, 339, b2700. [Google Scholar] [CrossRef] [PubMed]

- Haddaway, N.R.; Collins, A.M.; Coughlin, D.; Kirk, S. The Role of Google Scholar in Evidence Reviews and Its Applicability to Grey Literature Searching. PLoS ONE 2015, 10, e0138237. [Google Scholar] [CrossRef] [PubMed]

- Beagrie, N.; Houghton, J. The Value and Impact of Data Sharing and Curation: A Synthesis of Three Recent Studies of UK Research Data Centres; JISC: London, UK, 2014. [Google Scholar]

- Beagrie, N.; Houghton, J. The Value and Impact of the European Bioinformatics Institute; EMBL-EBI: Cambridge, UK, 2016. [Google Scholar]

- McDonald, D.; Kelly, U. Value and Benefits of Text Mining; JISC: London, UK, 2017. [Google Scholar]

- Houghton, J.; Swan, A.; Brown, S. Access to Research and Technical Information in Denmark; University of Southampton Institutional Research Repository: Southampton, UK, 2011. [Google Scholar]

- Parsons, D.; Willis, D.; Holland, J. Benefits to the Private Sector of Open Access to Higher Education and Scholarly Research; JISC: London, UK, 2011. [Google Scholar]

- Tuomi, L. Impact of the Finnish Open Science and Research Initiative (ATT). 2016. Available online: http://www.doria.fi/handle/10024/127285 (accessed on 27 June 2019).

- ODI. Open Data Means Business; Open Data Institute: London, UK, 2015. [Google Scholar]

- Tripp, S.; Grueber, M. Economic Impact of the Human Genome Project; Battelle Memorial Institute: Columbus, OH, USA, 2011. [Google Scholar]

- Houghton, J.; Rasmussen, B.; Sheehan, P.; Oppenheim, C.; Morris, A.; Creaser, C.; Greenwood, H.; Summers, M.; Gourlay, A. Economic Implications of Alternative Scholarly Publishing Models: Exploring the Costs and Benefits; JISC: London, UK, 2009. [Google Scholar]

- CEPA LLP; Mark Ware Consulting Ltd. Heading for the Open Road: Costs and Benefits of Transitions in Scholarly Communications; Research Information Network (RIN), JISC, Research Libraries UK (RLUK), the Publishing Research Consortium (PRC) and the Wellcome Trust: London, UK, 2011. [Google Scholar]

- Jones, M.M.; Castle-Clarke, S.; Brooker, D.; Nason, E.; Huzair, F.; Chataway, J. The Structural Genomics Consortium: A Knowledge Platform for Drug Discovery; RAND Corporation: Santa Monica, CA, USA, 2014; p. 19. [Google Scholar]

- Sullivan, K.P.; Brennan-Tonetta, P.; Marxen, L.J. Economic Impacts of the Research Collaboratory for Structural Bioinformatics (RCSB) Protein Data Bank. RCSB Protein Data Bank 2017. [Google Scholar] [CrossRef]

- Charlies Beagrie Ltd.; Centre for Strategic Economic Studies. Economic Impact Evaluation of the Economic and Social Data Service; Economic and Social Research Council: Swindon, UK, 2012. [Google Scholar]

- Stuermer, M.; Dapp, M.M. Measuring the promise of open data: Development of the impact monitoring framework. In Proceedings of the 2016 Conference for E-Democracy and Open Government (CeDEM) 2016, Krems, Austria, 18–20 May 2016; pp. 197–203. [Google Scholar]

- Manyika, J.; Chui, M.; Groves, P.; Farrell, D.; van Kuiken, S.; Almasi Doshi, E. Open Data: Unlocking Innovation and Performance with Liquid Information; McKinsey: New York, NY, USA, 2013. [Google Scholar]

- Lateral Economics. Open for Business: How Open Data Can Help Achieve the G20 Growth Target; Omidyar Network: Redwood City, CA, USA, 2014. [Google Scholar]

- Research Information Network; Publishing Research Consortium; JISC. Access to Scholarly Content: Gaps and Barriers; JISC: London, UK, 2011. [Google Scholar]

- Davis, P. Challenging Assumptions on Open Access Cost Savings. Available online: https://scholarlykitchen.sspnet.org/2009/07/16/challenging-assumptions-on-open-access-cost-savings/ (accessed on 12 April 2019).

- McCulloch, A. Discussion on JISC Report on Economic Implications of Alternative Business Models. 2009. Available online: https://blog.alpsp.org/2009/02/discussion-on-jisc-report-on-economic.html (accessed on 27 June 2019).

- Jubb, M.; Plume, A.; Oeben, S.; Brammer, L.; Johnson, R.; Butun, C.; Pinfield, S. Monitoring the Transition to Open Access; Universities UK: London, UK, 2017. [Google Scholar]

- Beagrie, N.; Houghton, J. The Value and Impact of the Archaeology Data Service: A Study and Methods for Enhancing Sustainability; Charles Beagrie Ltd.: Salisbury, UK, 2013. [Google Scholar]

- Beagrie, N.; Houghton, J. The Value and Impact of the British Atmospheric Data Centre; JISC: London, UK, 2013. [Google Scholar]

- Breidert, C.; Hahsler, M.; Reutterer, T. A Review of Methods for Measuring Willingness-to-Pay. Innov. Mark. 2006, 2, 8–32. [Google Scholar]

- Lee, W.H. Open access target validation is a more efficient way to accelerate drug discovery. PLoS Biol. 2015, 13, e1002164. [Google Scholar] [CrossRef]

- JISC The Text and Data Mining Copyright Exception: Benefits and Implications for UK Higher Education. Available online: https://www.jisc.ac.uk/guides/text-and-data-mining-copyright-exception (accessed on 12 April 2019).

- LIBER Copyright Reform: Help us Ensure an Effective TDM Exception! LIBER: The Hague, The Netherlands, 2017.

- Leeson, P.D.; St-Gallay, S.A. The influence of the “organizational factor” on compound quality in drug discovery. Nat. Rev. Drug Discov. 2011, 10, 749–765. [Google Scholar] [CrossRef]

- Savage, N. Competition: Unlikely partnerships. Nature 2016, 533, S56–S58. [Google Scholar] [CrossRef] [PubMed]

- Chalmers, I.; Glasziou, P. Avoidable waste in the production and reporting of research evidence. Lancet 2009, 374, 86–89. [Google Scholar] [CrossRef]

- Bloom, N.; Jones, C.I.; Van Reenen, J.; Webb, M. Are Ideas Getting Harder to Find? National Bureau of Economic Research: Cambridge, MA, USA, 2017. [Google Scholar]

- Houghton, J.; Sheehan, P. The Economic Impact of Enhanced Access to Research Findings; Centre for Strategic Economic Studies, Victoria University: Melbourne, Australia, 2006. [Google Scholar]

- SPARC From Ideas to Industries: Human Genome Project. Available online: https://sparcopen.org/impact-story/human-genome-project/ (accessed on 12 April 2019).

- Williams, H.L. Intellectual Property Rights and Innovation: Evidence from the Human Genome. J. Polit. Econ. 2013, 121, 27. [Google Scholar] [CrossRef]

- Structural Genomics Consortium Mission and Philosophy. Available online: https://www.thesgc.org/about/what_is_the_sgc (accessed on 12 April 2019).

- RAND Europe. Open Science Monitoring Impact Case Study—Structural Genomics Consortium; European Commission Directorate-General for Research and Innovation: Brussel, Belgium, 2017. [Google Scholar]

- Arshad, Z.; Smith, J.; Roberts, M.; Lee, W.H.; Davies, B.; Bure, K.; Hollander, G.A.; Dopson, S.; Bountra, C.; Brindley, D. Open access could transform drug discovery: A case study of JQ1. Expert Opin. Drug Discov. 2016, 11, 321–332. [Google Scholar] [CrossRef] [PubMed]

- Tensha Therapeutics Tensha Therapeutics to Be Acquired by Roche. Available online: https://www.businesswire.com/news/home/20160111005488/en/Tensha-Therapeutics-Acquired-Roche (accessed on 12 April 2019).

- Perkmann, M.; Schildt, H. Open data partnerships between firms and universities: The role of boundary organizations. Res. Policy 2015, 44, 1133–1143. [Google Scholar] [CrossRef]

- Structural Genomics Consortium International Structural Genomics Consortium Announces $48.9 MILLION in Additional Funding to Continue the Search for New Medicines 2011. Available online: https://www.pfizer.com/sites/default/files/partnering/092811_international_structural_genomics_consortium.pdf (accessed on 12 April 2019).

- Montreal Neurological Institute and Hospital Open Science. Available online: https://www.mcgill.ca/neuro/open-science-0 (accessed on 12 April 2019).

- Montreal Neurological Institute and Hospital. Measuring the Impact of Open Science. Available online: https://www.mcgill.ca/neuro/open-science-0/measuring-impact-open-science (accessed on 12 April 2019).

- SPOMAN Open Science. About OS | Spoman OS. Available online: https://spoman-os.org/about-os/ (accessed on 12 April 2019).

- Weeber, M.; Vos, R.; Klein, H.; de Jong-van den Berg, L.T.W.; Aronson, A.R.; Molema, G. Generating Hypotheses by Discovering Implicit Associations in the Literature: A Case Report of a Search for New Potential Therapeutic Uses for Thalidomide. J. Am. Med. Inform. Assoc. 2003, 10, 252–259.

- Houghton, J.; Sheehan, P. Estimating the Potential Impacts of Open Access to Research Findings. Econ. Anal. Policy 2009, 39, 127–142.

- Johnson, P.A.; Sieber, R.; Scassa, T.; Stephens, M.; Robinson, P. The Cost(s) of Geospatial Open Data. Trans. GIS 2017, 21, 434–445.

- Huber, F.; Wainwright, T.; Rentocchini, F. Open data for open innovation: Managing absorptive capacity in SMEs. R&D Manag. 2018.

- Morgan, M.R.; Roberts, O.G.; Edwards, A.M. Ideation and implementation of an open science drug discovery business model: M4K Pharma. Wellcome Open Res. 2018, 3, 154.

- Chataway, J.; Parks, S.; Smith, E. How Will Open Science Impact on University-Industry Collaboration? Foresight STI Gov. 2017, 11, 44–53.

- European Commission. Validation of the Results of the Public Consultation on Science 2.0: Science in Transition; European Commission: Brussels, Belgium, 2015.

- Mueller-Langer, F.; Andreoli-Versbach, P. Open access to research data: Strategic delay and the ambiguous welfare effects of mandatory data disclosure. Inf. Econ. Policy 2017, 42, 20–34.

- Caulfield, T.; Harmon, S.H.; Joly, Y. Open science versus commercialization: A modern research conflict? Genome Med. 2012, 4, 17.

- De Vrueh, R.L.A.; Crommelin, D.J.A. Reflections on the Future of Pharmaceutical Public-Private Partnerships: From Input to Impact. Pharm. Res. 2017, 34, 1985–1999.

- Mark Ware Consulting Ltd. Access by UK Small and Medium-Sized Enterprises to Professional and Academic Information; Publishing Research Consortium: Hamburg, Germany, 2009.

- Vines, T. Is There a Business Case for Open Data? Available online: https://scholarlykitchen.sspnet.org/2017/11/15/business-case-open-data/ (accessed on 12 April 2019).

- Bilder, G. Crossref’s DOI Event Tracker Pilot. Available online: https://www.crossref.org/blog/crossrefs-doi-event-tracker-pilot/ (accessed on 12 April 2019).

- Mowery, D.C.; Ziedonis, A.A. Markets versus spillovers in outflows of university research. Res. Policy 2015, 44, 50–66.

- Fukugawa, N. Knowledge spillover from university research before the national innovation system reform in Japan: Localisation, mechanisms, and intermediaries. Asian J. Technol. Innov. 2016, 24, 100–122.

- Keseru, J. A New Approach to Measuring the Impact of Open Data. Available online: https://sunlightfoundation.com/2015/05/05/a-new-approach-to-measuring-the-impact-of-open-data/ (accessed on 12 April 2019).

- Publication Bias in Meta-Analysis: Prevention, Assessment and Adjustments; Rothstein, H., Sutton, A.J., Borenstein, M., Eds.; Wiley: Chichester, UK; Hoboken, NJ, USA, 2005; ISBN 978-0-470-87014-3.

- Gold, E.R.; Ali-Khan, S.E.; Allen, L.; Ballell, L.; Barral-Netto, M.; Carr, D.; Chalaud, D.; Chaplin, S.; Clancy, M.S.; Clarke, P.; et al. An open toolkit for tracking open science partnership implementation and impact. Gates Open Res. 2019, 3, 1442.

- OpenMinted About. OpenMinTeD. 2015. Available online: http://openminted.eu/omtd-publications/ (accessed on 27 June 2019).

- Konfer. About Konfer. Available online: https://www.konfer.online/media (accessed on 12 April 2019).

- Bishop Grosseteste University About LORIC—BGU. Available online: https://www.bishopg.ac.uk/loric/about-loric/ (accessed on 27 June 2019).

- Giovani, B. Open data for research and strategic monitoring in the pharmaceutical and biotech industry. Data Sci. J. 2017, 16.

- Houghton, J. Open Access: What are the Economic Benefits? A Comparison of the United Kingdom, Netherlands and Denmark; Social Science Research Network: Rochester, NY, USA, 2009.

| Footnote | Text |
|---|---|
| 1 | These interviews will also be used to inform and refine the case study objectives and interview schedules. |
| 2 | This criterion was added after the protocol was finalised as many documents were identified in this category and, as set out in the introduction, such impacts were not the focus of this review. |
| 3 | |
| 4 | |
| 5 | Please note, however, that no financial estimates were discovered for the scale of such savings. |
| 6 | For comparison, UK GDP is approximately 8.5 times that of Denmark. |
| 7 | Please note that the authors emphasize that, because of some differences in the way these figures were calculated between studies, they are not directly comparable with each other. |
| 8 | |
| 9 | |

| | Open Science | Economic Impact |
|---|---|---|
| Concept | Open science; Open data; Open research data; Open access; Open metrics | Economic impact; Financial impact; Monetary impact; Cost/benefit analysis; Input-output; General equilibrium modelling; Return on investment; Growth accounting |
| Search term | “open scien*”; “open data”; “open research data”; “open access” W/1 publ* OR paper* OR journal* OR book*; “open metric*” | econom*; financ*; cost*; mone*; cba; bca; “input-output”; “general equilibrium”; “return on investment”; “growth accounting” |
| Scopus example | TITLE-ABS-KEY (“open scien*” OR “open data” OR “open research data” OR (“open access” W/1 publ* OR paper* OR journal* OR book*) OR “open metric*”) OR TITLE (“open access”) AND TITLE-ABS-KEY (econom* OR financ* OR cost* OR mone* OR cba OR bca OR “input-output” OR “general equilibrium” OR “return on investment” OR “growth accounting”) | |
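
As an illustration of how the two concept groups in the table combine into a single Boolean string, the following is a minimal Python sketch. The helper names (`or_group`, `build_query`) are hypothetical and are not part of the review protocol; the terms are copied from the table, with straight quotes substituted for the typographic quotes. The sketch reproduces the Scopus example string as printed and makes no claim about how the database resolves the mix of OR and AND.

```python
def or_group(terms):
    """Join search terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Terms copied from the search-term table (straight quotes are an assumption
# made for convenience; the table prints typographic quotes).
open_science_terms = [
    '"open scien*"',
    '"open data"',
    '"open research data"',
    '("open access" W/1 publ* OR paper* OR journal* OR book*)',
    '"open metric*"',
]
economic_terms = [
    "econom*", "financ*", "cost*", "mone*", "cba", "bca",
    '"input-output"', '"general equilibrium"',
    '"return on investment"', '"growth accounting"',
]


def build_query(concept_a, concept_b):
    """Assemble a string in the shape of the Scopus example row.

    Hypothetical helper: it only concatenates text and does not
    contact any database.
    """
    return (
        f"TITLE-ABS-KEY {or_group(concept_a)} "
        'OR TITLE ("open access") '
        f"AND TITLE-ABS-KEY {or_group(concept_b)}"
    )


query = build_query(open_science_terms, economic_terms)
print(query)
```

Keeping the terms in lists makes it easier to adapt the same concept groups to databases that use a different field syntax, since only `build_query` would need to change.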

| Include if Source | Exclude if Source |
|---|---|
| Is in English | Is not in English |
| Includes discussion or analysis of making research methods/findings freely available (open access publishing). AND/OR Includes discussion or analysis of making research data freely available (open research data). | Does not include discussion or analysis of the economic impacts of making research methods, findings or data openly available. |
| Includes explicit consideration (informed by empirical evidence) of direct or indirect economic impacts (with or without quantitative value estimates). | Does not explicitly consider economic impacts based on empirical evidence. |
| | Only considers open source software without discussion of open data or open access publishing. |
| | Reports work in an open access publication or based on open data, but is not explicitly about these concepts. |
| | Focuses only on impacts within the scholarly communications ecosystem of publishers, universities and research funders (see footnote 2). |
| | Only considers open data in general, as opposed to specifically research data. |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fell, M.J. The Economic Impacts of Open Science: A Rapid Evidence Assessment. Publications 2019, 7, 46. https://doi.org/10.3390/publications7030046
