Abstract

In the landscape of Open Science, Open Data (OD) plays a crucial role, as data are one of the most basic components of research, despite their diverse formats across scientific disciplines. Opening up data is a recent concern for policy makers and researchers, as the basis for good Open Science practices. The common factor underlying these new practices, namely the importance of promoting the circulation and reuse of Open Data, is largely a social form of knowledge sharing and construction. However, while data sharing is being strongly promoted by policy makers and is becoming a frequent practice in some disciplinary fields, Open Data sharing is much less developed in the Social Sciences and in educational research. In this study, practices of OD publication and sharing in the field of Educational Technology are explored. The aim is to investigate Open Data sharing in a selection of Open Data repositories, as well as on the academic social network site ResearchGate. The 23 Open Datasets selected across five OD platforms were analysed in terms of (a) the metrics offered by the platforms and the affordances for social activity; (b) the type of OD published; (c) compliance with the FAIR (Findability, Accessibility, Interoperability, and Reusability) data principles; and (d) the extent of their presence and related social activity on ResearchGate. The results show very low social activity on the platforms and very few matches on ResearchGate, which together point to a limited social life surrounding Open Datasets. Future research perspectives and the limitations of the study are addressed in the discussion.

1. Introduction

Open Science is the movement that advocates for more public and accessible science [1,2], and has progressively encompassed new researchers’ practices and identities that go beyond the idea of digital science towards open and social activities [3,4,5]. In the Educational Technology sector, Open Science is also regarded as a shorthand for the transformative intersection of digital content, networked distribution, and open practices [5]. Authors have conceptualized theoretical frameworks and epistemological approaches to analyse the relationship between scholarly practice and technology, and have explored new forms of scholarship fostered by social media and social network sites [6,7,8].

In this scenario, Open Data (OD), defined as “data that anyone can access, use and share”¹, plays a crucial role, as data are one of the most basic components of research, despite format differences across scientific disciplines [9]. Opening up data is a recent concern for policy makers and researchers, as the basis for good Open Science practices [10]. In fact, data-driven research involves a massive production of digitized data, which in turn can be communicated and shared, potentially leading to new discoveries and more balanced efforts across the research community. A common factor underlying these new practices is the importance of promoting Open Data circulation and reuse, which is often considered solely a social form of knowledge sharing and construction. However, whilst data sharing is strongly encouraged by policy making in disciplines such as Physics and Genomics, the practice is far less developed in the Social Sciences [9].

In this article, the authors explore practices of OD publication and sharing in the field of Educational Technology, a branch of educational research within the Social Sciences. Educational Technology is a relatively young academic discipline, with academic references starting to appear in the 1970s [11,12]. The idea for this study arose from both authors' extensive research experience in this field. The overarching aim of the study is to show how Open Data practices are emerging, and to what extent these align with the principles of Open Science. The focus on the social life of Open Data goes beyond the specific Open Data portals and their social features (that is, who has been reading, citing, recommending, sharing, or downloading for reuse), and considers academic social networks, such as ResearchGate, as channels for sharing Open Datasets with a wider public. The choice to investigate the social activity concerned with Open Datasets on ResearchGate derives from its increasing popularity among scholarly communities, as reported in recent studies [13], and from its role as a competitor to institutional repositories in some scholars' deposit habits [14]. Furthermore, as academic social network sites focus primarily on social interest and activity around science and on fostering networked practice in scholarly professional learning [15], another assumption is that identifying the social activity concerned with Open Data might reveal dynamics of professional learning and scholars' engagement with Open Science on these platforms.

Finally, this study also discusses the characteristics and quality of Open Datasets, as well as their “social life”, in terms of the visible activity recorded by the platform where the OD is stored and of the corresponding storage and activity on ResearchGate.

2. Background

2.1. From Open Science to Open Data: An Emergent Agenda

While the European Union initially adopted the term Science 2.0 [16] in an attempt to follow the participatory nature of Web 2.0, the concept of Open Science has since gained ground and come to cover a number of goals, namely, public accessibility and transparency of scientific communication, public availability and reusability of scientific data, transparency in experimental methodology, observation, and data collection, and the use of web-based tools and infrastructure to facilitate collaboration [17]. In this scenario, Open Data acquires crucial importance in Open Science as an object of socialization and exchange, thus shaping the ideals of openness in science [10]. Open Data allows all researchers to replicate scientific experiments, in line with the goal of transparency, and can be reused in further processing to generate new results as secondary data. As such, Open Data can not only be adopted by other researchers but also be mined by industry in faster cycles of research and development (R&D) [13]. Moreover, Open Data aligns with the goals of the open access movement, which is also embedded in the concept of Open Science. Although this movement has an earlier trajectory, it also addresses the idea that all public research should be made freely available and accessible. The concept of Open Data expands on this idea by making the units of research (data) open [18].

Although the centrality of Open Data became a reality for the European Commission through the Mallorca Declaration of 2016 [18], today several international organizations are increasingly dealing with data sharing through a number of funded projects. Among the most relevant initiatives are the OpenAire portal, which ensures the visibility of Open Data produced within the European research framework Horizon 2020² [19]; the Wellcome Trust [20]; the Netherlands Organization for Scientific Research (NWO) [21]; the policies of the European Organization for Nuclear Research (CERN) [22]; and the Bill and Melinda Gates Foundation [23].

Nevertheless, practices around Open Data are unevenly distributed across scientific fields, and in most areas the concepts, tools, and techniques for sharing data are little known [24]. For example, McKiernan et al. [24] have shown several benefits of data sharing in Applied Sciences, Life Sciences, Maths, Physical Science, and Social Sciences, areas where the advantages are often reflected in research visibility in terms of citation rates. However, they have also pointed out the need to deconstruct several “myths” surrounding the adoption of Open Science practices and data sharing. In this regard, the effort made by the research community to establish common principles for the quality of Open Data should be considered. The FAIR (Findability, Accessibility, Interoperability, and Reusability) data principles [25] are an expression of this endeavour, aiming to introduce clear parameters for Open Data that serve not only humans but also machine tasks carried out through algorithms and workflows. In the field of educational science, the issue is relatively new, and thus requires specific attention in order to overcome initial aversion as well as the fragmentation of incipient practices [26].

Moreover, an important dimension of new scholarly practices has been the bottom-up movement of networked scholarship, which has sought to share scientific knowledge through novel channels [27,28,29]. Although academic social network sites have played an important role in this sense, their use has been questioned because of their non-institutional nature, or because they challenge scholars' habits regarding where they deposit their publications [14]. However, as discussed in the next section, the social features of academic practice reveal pathways of engagement and professional learning that could help close significant gaps in scholars' knowledge and skills. To our knowledge, no research has investigated the relevance of social activity around Open Datasets as primary objects of scientific knowledge.

2.2. Social Media and Networked Scholarship: How Scholars Share and Build Professionalism in the Digital Era

Recent research has suggested that scholars are increasingly using social media to enhance scholarly communication by strengthening mutual relationships, facilitating peer collaboration, publishing and sharing research products, and discussing research topics in open and public formats [29,30,31]. Studies have stressed how scholars today are familiar with blogs, wikis, general and academic social network sites, and multimedia sharing—at all stages of the research lifecycle—from identifying research opportunities to disseminating final findings [32,33,34,35]. Among the reasons for using social media in academic practice, keeping up to date, maintaining and strengthening networks, and increasing visibility with positive implications for career progression have been identified as major factors for engaging in social media [7]. Other factors that influence scholars’ use of social media are concerned with making connections and developing networks, openness and sharing, self-promotion, and peer support [36].

Along with investigating different social media for scholarly communication, authors have also studied digital scholarship as an emergent scholarly system that intersects mainstream academia with its own techno-cultural system [7,15]. The four dimensions of scholarship (discovery, integration, application, and teaching), as redefined by Boyer in his seminal work [37], have been increasingly affected by the values and ideology of digital and Open Science, with the broad aim of promoting scholarly networking and the sharing of scientific knowledge with a wider public. In this light, digital scholarship is increasingly conceived as a more inclusive approach to the construction and sharing of knowledge and as a means of scholarly public engagement [5].

Authors have also pointed to the fragmentation of studies on digital scholarship, which span diverse disciplinary perspectives [38]. At least two theoretical approaches have recently emerged that aim to conceptualize the relationship between scholarly practice and technology, as well as new forms of scholarship fostered by social media. The first, networked participatory scholarship, has been conceived as the emergent practice of scholars' use of participatory technologies and social network sites to share, improve, and validate scholarship [27]. In this approach, platforms such as Facebook, Twitter, Academia.edu, and Mendeley are found to support the acquisition, testing, validation, and sharing of scholarly knowledge in university subcultures of “invisible colleges”. In the second approach, social scholarship, increasing social media use in scholarly practice is examined as a means of encompassing new ways for academia to accomplish scholarship, through values such as the promotion of users and decentralized, accessible knowledge [30].

However, other authors have pointed out that scholars’ experiences in social media are fragmented and not well understood, thus demanding more focused research on the day-to-day realities of social media for scholarship [31]. Others have highlighted how the three dimensions of scholarly practice—scholarship, openness, and digitality—seem to resemble an impossible triangle that creates tensions in practice between the traditional values of disciplinary scholarship (e.g., record of publication integrity) and open teaching/public engagement (e.g., communication of research results with the general public) [39]. Other challenges were reported concerning institutional policies that tend to discourage scholars from unconventional publishing practices [15,40]. The result is that network engagement today is progressively involving individuals rather than roles or institutions, and is creating an emergent scholarly system of its own. In this respect, scientists are progressively shaping an increasingly complex academic system, with its own values and demands of new responses to both internal and external stimuli [27].

When analysing the social media that are influential for academic practice, most studies focus on Twitter [4,8,15] or on academic social network sites such as ResearchGate and Academia.edu [13]. Indeed, ResearchGate and Academia.edu have become the most popular social networking services developed specifically to support academic and research practices [41]. However, while most research on these platforms has been conducted in the library and information sciences, treating them as tools for reputation building and alternative ranking systems [42,43,44,45], very few studies have investigated the use of ResearchGate and Academia.edu in light of the diverse theoretical frameworks with the aim of analysing social digital scholarship practice [46]. The reasons for the limited adoption of these platforms might be related to criticism raised in the scholarly community, which has questioned the reliability and impact of ResearchGate metrics and noted that they are hard to compare with other popular standard scores [47,48,49,50].

In one of these studies, scientists were found to be apparently more willing to share copies of their publications on academic social networking sites than in institutional repositories [14]. In the 13 top Spanish universities investigated, the majority of the articles that were not available in the institutional repositories were available in full text on ResearchGate. However, to our knowledge, no specific investigation of Open Dataset sharing on ResearchGate has been conducted.

This study aims to fill this gap by analysing the extent of the presence and related social activity of a number of Open Datasets in the Educational Technology field. They were identified in Open Data repositories and sought in ResearchGate, so as to provide preliminary evidence of scholarly practice concerned with data sharing as open scholarly resources.

3. Method

3.1. Rationale of the Study and Research Questions

The aim of the study is to show how Open Data related practices are emerging, and to what extent these align with the principles of Open Science. To achieve this aim, the research design was based on an exploratory study that analysed Open Datasets in the field of Educational Technology and explored academics' social practices relating to these objects. The construct Open Data is operationalized here as open research data. According to the recent European Union guidelines, “In a research context, examples of data include statistics, results of experiments, measurements, observations resulting from fieldwork, survey results, interview recordings and images. The focus is on research data that is available in digital form. Users can normally access, mine, exploit, reproduce and disseminate openly accessible research data free of charge” [18]. We also adopt the key term “Open Datasets”, which refers to the packages in which Open Data are presented. The term comes from computer science, but has more recently been adopted for all types of data, particularly because most data are nowadays presented in digital form (https://en.wikipedia.org/wiki/Data_set). We further defined the quality of a dataset using the FAIR data principles [19], a set of guiding principles intended to make data findable, accessible, interoperable, and reusable [51]. These principles provide guidance for scientific data management and stewardship, and are relevant to all stakeholders in the digital ecosystem.
The presence of these principles was assessed on the basis of the following conceptualizations: (1) the principle of findability encompasses globally unique digital identifiers, rich metadata, and indexing as searchable resources; (2) the principle of accessibility requires metadata that can be retrieved through a standardized communication protocol that is open, free, and universally implementable; (3) the principle of interoperability encompasses a formal, accessible, shared, and broadly applicable language for knowledge representation, with metadata that use vocabularies compliant with the FAIR principles and include qualified references; and (4) the principle of reusability implies that metadata provide a plurality of accurate and relevant attributes. It requires that usage licenses are clear, that metadata are associated with their provenance, and that they accord with the standards of the disciplinary field. It is worth mentioning that all of the FAIR principles support semantic interoperability, but the “I” refers more to syntax, and hence to the formal interoperability of the programs used to process and present data.
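To make the four conceptualizations above concrete, the following sketch represents a per-dataset coding sheet as a Python dataclass. The field names, and the rule that a principle counts as met only when all of its criteria are met, are assumptions for illustration; they are not the authors' codebook (Table 1).

```python
from dataclasses import dataclass


@dataclass
class FairCoding:
    """Hypothetical coding sheet for one dataset; field names are illustrative."""

    # Findability: unique identifier, rich metadata, searchable index
    has_unique_identifier: bool
    has_rich_metadata: bool
    is_indexed: bool
    # Accessibility: metadata retrievable through an open, free, standard protocol
    uses_open_protocol: bool
    # Interoperability: formal shared language, FAIR vocabularies, qualified references
    uses_formal_language: bool
    uses_fair_vocabularies: bool
    has_qualified_references: bool
    # Reusability: clear license, provenance, disciplinary standards
    has_clear_license: bool
    has_provenance: bool
    meets_field_standards: bool

    def principles(self) -> dict:
        """Assumed rule: a principle is met only if all of its criteria are met."""
        return {
            "F": self.has_unique_identifier and self.has_rich_metadata and self.is_indexed,
            "A": self.uses_open_protocol,
            "I": (self.uses_formal_language and self.uses_fair_vocabularies
                  and self.has_qualified_references),
            "R": self.has_clear_license and self.has_provenance and self.meets_field_standards,
        }


# Hypothetical dataset: findable and accessible, but no FAIR vocabulary and no license
example = FairCoding(
    has_unique_identifier=True, has_rich_metadata=True, is_indexed=True,
    uses_open_protocol=True,
    uses_formal_language=True, uses_fair_vocabularies=False, has_qualified_references=False,
    has_clear_license=False, has_provenance=True, meets_field_standards=True,
)
print(example.principles())  # → {'F': True, 'A': True, 'I': False, 'R': False}
```

Keeping each criterion as a separate field, rather than a single score per principle, makes it easy to see which specific criterion caused a principle to fail.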

Finally, the operationalization of the construct “academics’ social practices” in this study is based on the metrics made available by the digital platforms where the OD are placed. These metrics generally consist of information on the number of downloads, citations, comments, and sharing.

In light of these aims, the research questions addressed by this study are as follows:

- Q1. Do researchers in the field of Educational Technology publish Open Datasets (ODs)?

- Q2. To what extent are ODs compliant with the FAIR data principles?

- Q3. What is the social life relating to the ODs in terms of the metrics provided by the OD portals?

- Q3a. As a subsidiary question, to what extent do Open Data portals allow researchers to cultivate social practices around ODs?

In order to investigate OD presence in ResearchGate, the following research aim guided the second part of the study:

- Q4. Analysis of the presence of the selected ODs on ResearchGate and of the type of social activity they exhibit according to ResearchGate metrics.

3.2. Sampling

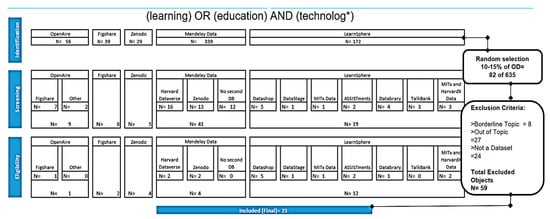

The systematic search of ODs in the area of Educational Technology was deployed as follows (Figure 1):

Figure 1.

Workflow: Selection of Open Datasets in five Open Data (OD) repositories.

- Five OD repositories were used, namely: OpenAire (https://www.openaire.eu/), Figshare (https://figshare.com/), Zenodo (https://about.zenodo.org/), MendeleyData (https://data.mendeley.com/), and LearnSphere (http://learnsphere.org/). They were selected taking into consideration their geographical and political importance, as in the case of OpenAire; the number of objects archived and the number of years operating with OD, as in the case of Figshare, Zenodo, and Mendeley Data (repositories that have operated for more than five years, with more than one million objects and several million visits); and the relevance of the thematic sector, as in the case of LearnSphere, which aggregates educational research data from seven other Open Data repositories.

- A general search was conducted with the search engines of the OD repositories, using key terms such as “learning” or “education” combined with “technolog*”. This search yielded 56 objects for OpenAire, 39 for Figshare, 29 for Zenodo, 339 for MendeleyData, and 172 for LearnSphere, giving an initial total of 635 Open Datasets. Data extraction was conducted on 5 May 2018.

- A sequential number was assigned to each of the 635 objects. A sample of 15% of Open Datasets was then randomly selected by generating a random sequence from 1 to 635 and extracting the 82 objects whose numbers were randomly drawn. The random list was created with the “Sequence Generator” tool from Random.org (https://www.random.org/). Random extraction was adopted because the analysis could not be performed manually on each of the 635 objects (ODs) within the time assigned to the research project. This limitation was addressed by simple random sampling of the minimum number of objects needed for a 95% confidence level with a margin of error (confidence interval) of 10%. While these are not optimal values, they are acceptable for exploratory study purposes [45].

- The files and metadata of the 82 objects were analysed and the following exclusion criteria were applied: the alignment of the object with the concept of dataset (a file or number of files containing raw data that can be analysed by other researchers as they are), and its pertinence to the topic of Educational Technology (e.g., technology-enhanced learning, online learning, and the adoption of digital tools in education). However, we still defined OD as a broad concept, given the diversity of the objects initially observed in the OD repositories. In this regard, we selected all of the ODs that were at least human readable with no access limitations (paywalls, registration to see the full files, or requests to authors). After this step, 24 objects were eliminated because they could not be considered datasets (these were PDF files with presentations or full articles); eight objects were eliminated for being “borderline” (studies on machine learning code applied to education); and 27 objects were considered completely off topic (most of them relating to machine learning studies). Overall, 59 objects were excluded.

- Following this, the metadata of each of the remaining 23 objects were verified. Their characteristics were recorded in a database in which the objects were classified according to the analytic dimensions generated by the authors (see Section 3.3, “Instruments and Data Collection”).

- Moreover, the 23 objects were also sought on the commercial academic social network site ResearchGate in order to analyse social activity in this platform.
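The sampling and screening steps above can be checked with a short sketch. It uses Python's pseudo-random generator as a stand-in for the Random.org Sequence Generator (an assumption; the seed is arbitrary, so it will not reproduce the authors' actual draw). Drawing 82 numbers without replacement is equivalent to taking the first 82 entries of a random permutation of 1 to 635. The counts are those reported in the text.

```python
import random
from typing import List, Optional

# Objects returned by the general search in each repository (5 May 2018)
hits = {"OpenAire": 56, "Figshare": 39, "Zenodo": 29, "MendeleyData": 339, "LearnSphere": 172}
population = sum(hits.values())  # 635 objects, numbered 1..635


def draw_sample(n_population: int, n_sample: int, seed: Optional[int] = None) -> List[int]:
    """Simple random sample, without replacement, of object numbers 1..n_population."""
    rng = random.Random(seed)  # stand-in for the Random.org Sequence Generator
    return sorted(rng.sample(range(1, n_population + 1), n_sample))


sample_ids = draw_sample(population, 82, seed=2018)  # seed chosen arbitrarily

# Exclusions reported after screening the 82 sampled objects
excluded = {"not a dataset": 24, "borderline": 8, "off topic": 27}
retained = len(sample_ids) - sum(excluded.values())
print(population, sum(excluded.values()), retained)  # → 635 59 23
```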

Appendix A shows main information on the 23 Open Datasets.

3.3. Instruments and Data Collection

The analysis was divided into two steps, as follows: (1) exploration of Open Datasets in OD repositories and (2) complementary analysis on ResearchGate.

Regarding the first step, the 23 objects were analysed according to a number of categories in order to explore and characterize each object. The categories were elaborated and discussed by the two authors on the basis of the research questions. Table 1 shows the complete set of categories and codes. The categories are defined in the second column and draw on diverse conceptual bases. The research topics were established through an inductive process based on the analysis of the keywords and abstracts of the Open Datasets, and agreement between the authors was almost complete (n = 20; 87%). The data type and the numbers of downloads and views were extracted directly from the datasets. As for the FAIR principles, each Open Dataset was analysed on the basis of the existing principles, as reported in Table 1.
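The agreement figure reported above (20 of 23 datasets, 87%) is a simple percent agreement. A minimal sketch follows; the topic labels are hypothetical placeholders, not the authors' codes.

```python
from typing import Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Proportion of items to which both coders assigned the same topic code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# Hypothetical codes: the two coders agree on 20 of 23 datasets
coder_a = ["MOOC"] * 20 + ["ITS", "ITS", "prediction"]
coder_b = ["MOOC"] * 20 + ["prediction", "MOOC", "ITS"]
print(f"{percent_agreement(coder_a, coder_b):.0%}")  # → 87%
```

Percent agreement does not correct for agreement expected by chance; a chance-corrected index such as Cohen's kappa would be the usual complement if one were needed.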

Table 1.

Codebook with the labels and values used in the codification of datasets.

After the analysis, descriptive statistics (frequencies of cases in each category) were calculated. For social activity, the metrics available in the OD repositories, consisting of the number of downloads, were collected.
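The frequency calculation described above can be sketched as a small helper that turns one coded category into (code, count, percentage) rows. The example labels are illustrative; only the figure of 6 datasets without a clear usage license out of 23 comes from the results.

```python
from collections import Counter
from typing import List, Tuple


def frequency_table(codes: List[str]) -> List[Tuple[str, int, float]]:
    """Return (code, count, percentage) rows, most frequent code first."""
    total = len(codes)
    return [(code, n, round(100 * n / total, 1))
            for code, n in Counter(codes).most_common()]


# Illustrative coding of one category for 23 datasets: 6 lack a clear usage license
license_codes = ["no clear license"] * 6 + ["other"] * 17
for row in frequency_table(license_codes):
    print(row)
# → ('other', 17, 73.9)
# → ('no clear license', 6, 26.1)
```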

In terms of step two, the 23 Open Datasets were sought in ResearchGate using the same title adopted in the OD repository. In this case, the metrics of social activity were retrieved for both the Open Dataset and, if existing, for the publication to which the Open Dataset could be associated. Following this, the metrics of ResearchGate—citations, recommendations, reads, followers, and comments—were calculated.
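Because the datasets were sought on ResearchGate by their repository titles, small formatting differences (case, punctuation, accents) can hide a match. The helper below is a hypothetical sketch of a tolerant title comparison; it does not query ResearchGate and assumes no ResearchGate API.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lower-case, drop accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", title)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    no_punct = re.sub(r"[^\w\s]", " ", no_accents.lower())
    return " ".join(no_punct.split())


def same_title(repository_title: str, candidate_title: str) -> bool:
    """True if two titles match once formatting differences are ignored."""
    return normalize_title(repository_title) == normalize_title(candidate_title)


print(same_title("MOOC Logs: A Dataset", "mooc logs - a dataset"))  # → True
```

Exact matching after normalization is deliberately conservative: it avoids false positives between datasets with similar titles, at the cost of missing items whose titles were reworded.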

All of the data collected and analysed in this study have been published at the OD repository Zenodo as Open Data [52].

4. Results

The following section presents the research results in response to the four research aims.

Q1. Do researchers in the field of Educational Technology publish Open Datasets?

Prior to conducting specific searches and selecting the 23 datasets, all of the datasets within two relevant data repositories—Mendeley Data (international) and Zenodo (European)—were explored. Table 2 shows the numbers for Social Sciences and Educational Technology as research areas.

Table 2.

Datasets concerned with Social Sciences and Educational Technology areas.

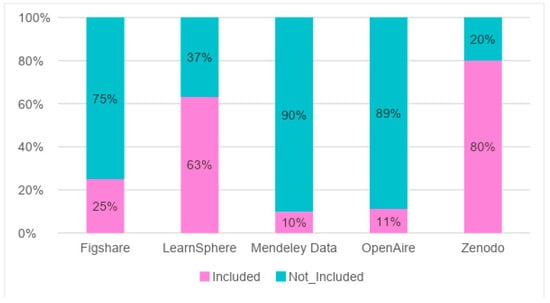

Initially, we observed that the number of Social Sciences datasets was stable across the data repositories, while this was not the case for the Educational Technology sector. While the number of objects supports the assumption that there are Open Data practices in the field of Educational Technology, the difference between the two portals is puzzling. One possible explanation is that Zenodo's search tools provide a more accurate classification of datasets. This is also confirmed by the data shown in Figure 2, which reports the distribution of the 82 sampled datasets that were included and excluded across the five portals. However, while some data repositories specifically curate the topic of Educational Technology (LearnSphere), generalist repositories (e.g., OpenAire) collect objects whose identification through the categories “educational” and “technolog*” is less precise. An exception was Zenodo, which mostly collects projects related to the Horizon 2020 programme and provides more elaborate instruments for storing and retrieving research data.

Figure 2.

Datasets included and excluded in the five data repositories.

As for the research topics covered in the datasets, a substantial part (n = 11) relates to data mining and analytics of students' logs tracked in online platforms: three concern massive open online courses (MOOCs), four intelligent tutoring systems, and four prediction in learning processes. Another group of topics relates to innovations in teaching and learning and to analyses and models of learning processes (n = 6); the topic of teachers' and trainers' professional development is represented in three datasets. Only one dataset relates to a literature review that analyses theoretical issues in Educational Technology, and two refer to the topic of open education science.

Q2. To which extent are ODs compliant with the FAIR data principles?

As for the analysis of the 23 datasets according to the four FAIR categories, the findability principle was fully met by all 23 datasets. All of the datasets are placed in data repositories that assign digital, permanent identifiers, index the data so that they are searchable through database queries, and require a minimum of metadata to complete the upload process. Conversely, interoperability and accessibility presented some problems. In a number of cases, the files required proprietary software to be opened and were therefore not interoperable. One of the weakest points was the lack of references to other qualified metadata systems: we found no case in which the metadata followed a standardized protocol or referenced other well-known ontologies. Finally, regarding reusability, 26% (n = 6) of the datasets could not be classified as reusable because they did not have a clear usage license.

The categorization of the file types containing raw data revealed that 12 datasets contained a main file in .CSV or .TXT format, which is an interoperable format (data use a formal, accessible, shared, and broadly applicable language for knowledge representation). While other formats are also interoperable (PDF, SAV, XLSX, and ZIP), they might not be machine readable³ and would require data extraction and transformation before further analysis, despite being “human readable” and open under the latter criteria.
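The file-type distinction drawn above can be expressed as a lookup on the main file's extension. This is a sketch that mirrors the text's grouping (.CSV/.TXT versus PDF, SAV, XLSX, and ZIP); the category labels and the "unclassified" fallback are assumptions.

```python
from pathlib import PurePath

# Plain-text formats treated in the text as directly machine readable
PLAIN_TEXT = {".csv", ".txt"}
# Other formats observed in the sample: open and human readable, but likely
# to need extraction or transformation before further analysis
NEEDS_EXTRACTION = {".pdf", ".sav", ".xlsx", ".zip"}


def classify_main_file(filename: str) -> str:
    """Classify a dataset's main file by its (case-insensitive) extension."""
    suffix = PurePath(filename).suffix.lower()
    if suffix in PLAIN_TEXT:
        return "machine-readable plain text"
    if suffix in NEEDS_EXTRACTION:
        return "requires extraction/transformation"
    return "unclassified"


print(classify_main_file("student_logs.CSV"))  # → machine-readable plain text
```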

Q3. What is the social life relating to the ODs in terms of the metrics provided by the OD portals? Q3a. To what extent do Open Data portals allow researchers to cultivate social practices around OD?

While the three Open Data repositories (Figshare, Zenodo, and Mendeley Data) and the two Open Data portals (OpenAire and LearnSphere) present diversified features, one common characteristic is that their main goals are content searchability and retrieval.

In the case of the two data portals, one common feature is that they aggregate several data repositories. Moreover, both portals provide researchers with contextual information on the affordances offered. OpenAire, whose main target is European researchers and research institutions, provides information on the policy context. LearnSphere, by contrast, provides more educational and pedagogical information for consultation, as well as tools for its specific target of international educational researchers (most of the contributors are affiliated with institutions in the United States). Both portals offer an engine for general and advanced searches; however, while OpenAire gathers all sorts of objects concerned with European Union research, LearnSphere is specific to data. In OpenAire, for example, even when the data option is selected, many of the retrievable objects are not proper datasets. Both portals provide tools for community collaboration and dialogue outside the object search. Once a dataset is retrieved, however, there is no way to see whether the object has been downloaded, consulted, or used by others. Some social indicators can nonetheless be found in the underlying repositories. For instance, in the case of OpenAire, a substantial share of the datasets is actually located in the data repository Zenodo, so Zenodo's metrics can be considered. In the case of LearnSphere, some datasets link to data repositories that show some social metrics (e.g., downloads, in the case of Harvard Dataverse), while others do not report social metrics at all (e.g., DataShop).

As for the three data repositories, their target audiences appear to be international and undifferentiated. All three allow researchers to upload all types of objects (from final publications to reports, working documents, pre-registration documents, etc.), whilst Mendeley can embed records from other data repositories and can therefore be considered a “meta” repository. The trade-off in this case, as reported in response to Q1, is lower accuracy at retrieval time (Mendeley and OpenAire showed the lowest numbers of selected datasets). It is worth noting, however, that there was strong imprecision in the type of objects retrieved, despite the search being restricted to datasets. All of the repositories offer categories to restrict searches, as well as an internal engine based on Boolean operators.

Whilst Mendeley Data⁴ does not provide any metrics of social activity in terms of downloads, citations, recommendations, or comments, Figshare⁵ and Zenodo include minimal indicators. Moreover, social activity is also shown in terms of an “Altmetric Attention Score”. Altmetric is a commercial tool embedded in other platforms; its score is derived from an automated algorithm and represents a weighted count of the shares an object receives on generic social networks, such as Twitter or Facebook, and on specific professional and academic social networks, such as Mendeley or ResearchGate⁶. In the case of Zenodo, only views and downloads are computed. In the case of the portals, because of their connection with repositories, the number of downloads can be retrieved, but information on views is not always available. Table 3 shows the social activity in terms of downloads, views, and Altmetric scores for the 23 datasets.
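To illustrate what a "weighted count" of shares means, the sketch below multiplies mention counts by per-source weights and sums them. The weights are hypothetical placeholders chosen for arithmetic clarity; Altmetric's actual weights and adjustments are defined by the provider and are not reproduced here.

```python
from typing import Dict

# HYPOTHETICAL weights for illustration only; not Altmetric's real values.
EXAMPLE_WEIGHTS: Dict[str, float] = {"news": 3.0, "blog": 2.0, "twitter": 1.0, "facebook": 0.5}


def weighted_attention(mentions: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted count of mentions; sources without a weight contribute nothing."""
    return sum(count * weights.get(source, 0.0) for source, count in mentions.items())


print(weighted_attention({"twitter": 4, "facebook": 2, "blog": 1}, EXAMPLE_WEIGHTS))  # → 7.0
```

A consequence of weighting is that two datasets with the same total number of mentions can receive quite different scores, depending on where the mentions occur.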

Table 3.

Metrics of social activity.

Nonetheless, all three repositories show some evidence of partial coherence between the number of views (initial contact), downloads (potential interest in use), and shares on social media. However, this trend cannot be generalized to the whole sample, as 20 cases do not display this information. Another significant trend is the uneven distribution of downloads. One "champion" dataset has 11,417 downloads (topic: MOOCs; retrieved via LearnSphere), and three others have more than 100 downloads. The remaining 10 datasets have received limited attention. It must be acknowledged that 9 of the 23 datasets offered no tool for observing users' activity. This is because the platforms where these data were stored rely on older technologies than most recently launched data repositories. This was the case for DataShop and other datasets accessible via LearnSphere, which belong to pioneering projects relating to OD in education.
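The skew described above can be summarized with three descriptive figures:

- how many datasets expose no usage metric;
- how many exceed a download threshold;
- what share of all recorded downloads goes to the most downloaded dataset.

The following minimal Python sketch assumes per-dataset download counts, with `None` marking a missing metric. The function name and the sample values are hypothetical and are not the Table 3 data. The default threshold of 100 simply mirrors the text.

```python
from typing import Optional


def summarize_downloads(
    downloads: list[Optional[int]], threshold: int = 100
) -> dict[str, float]:
    """Describe a skewed download distribution across datasets.

    `None` marks a dataset whose hosting platform exposes no usage metric.
    """
    observed = [d for d in downloads if d is not None]
    total = sum(observed)
    top = max(observed, default=0)
    return {
        # datasets that cannot be assessed at all
        "no_metric": len(downloads) - len(observed),
        # datasets strictly above the threshold (includes the top one)
        "above_threshold": sum(1 for d in observed if d > threshold),
        # share of all recorded downloads held by the most downloaded dataset
        "top_share": top / total if total else 0.0,
    }


# Hypothetical counts for six datasets, not the values reported in Table 3.
print(summarize_downloads([900, 50, 30, None, 20, None]))
# prints {'no_metric': 2, 'above_threshold': 1, 'top_share': 0.9}
```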

Q4. Analysis of the presence of the selected OD in ResearchGate and of the type of social activity they exhibit according to ResearchGate metrics.

To investigate the social activity around Open Datasets in academic social network sites, we searched for the 23 datasets in ResearchGate. ResearchGate has specific affordances designed to help researchers become "more social". Researchers can view, download, or cite articles, as well as comment on and recommend datasets. However, the platform's search engine does not allow users to search specifically for datasets. Datasets can be found by browsing the publication types listed on researchers' pages. Retrieving datasets therefore depends on good knowledge of a researcher's trajectory and work, which is itself a social activity.

Only two of the 23 datasets were found on ResearchGate. Table 4 shows the social metrics retrieved in ResearchGate and in the data portals. Only datasets with associated metrics are reported.

Table 4.

ResearchGate metrics on social activity.

5. Discussion and Conclusions

This study investigated Open Data practices in the field of Educational Technology, with the aim of studying the social life connected with Open Datasets. To do so, it analysed a number of data portals and repositories, as well as ResearchGate. The results show that Open Data publishing is becoming a trend in the field of Educational Technology, which becomes clear when compared with overall Open Data publication in the Social Sciences. However, if we consider the landscape of topics recently identified in the sector [11], only some subfields of Educational Technology are represented in the sample. The significant presence of datasets concerned with educational data mining and learning analytics may be explained by the increasing popularity of these research topics in recent years [11,46,53,54].

As for compliance with the FAIR principles, the results show that these are only partially implemented. The wide variation in the features provided by the different portals and repositories allows for only partial compliance with the recommended principles. This might also be explained by the diversity of research objects made available on these platforms.
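One way to make partial compliance visible is to record which FAIR-related features each dataset has and to report the share of criteria met per principle. The following minimal Python sketch is illustrative only. The criterion names are hypothetical placeholders loosely inspired by the FAIR principles [51], not the codebook used in this study.

```python
# Hypothetical codebook: criterion names are illustrative placeholders,
# not the categories actually used in this study.
FAIR_CRITERIA: dict[str, list[str]] = {
    "findable": ["persistent_identifier", "rich_metadata"],
    "accessible": ["open_access_protocol"],
    "interoperable": ["open_file_format"],
    "reusable": ["explicit_license", "provenance"],
}


def fair_profile(observed: set[str]) -> dict[str, float]:
    """Return, per FAIR principle, the fraction of its criteria a dataset meets."""
    return {
        principle: sum(c in observed for c in criteria) / len(criteria)
        for principle, criteria in FAIR_CRITERIA.items()
    }


def is_fully_compliant(profile: dict[str, float]) -> bool:
    """True only if every criterion of every principle is met."""
    return all(share == 1.0 for share in profile.values())


# A hypothetical dataset with a persistent identifier and a license only.
profile = fair_profile({"persistent_identifier", "explicit_license"})
print(profile)
# prints {'findable': 0.5, 'accessible': 0.0, 'interoperable': 0.0, 'reusable': 0.5}
print(is_fully_compliant(profile))  # prints False
```

A profile per principle, rather than a single total, keeps visible which dimension (for example, interoperability) a given platform fails to support.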

Overall social activity around the datasets was low, which is closely connected with the poor range of indicators provided by the data portals and repositories. Nevertheless, some initial activity in terms of views and downloads was recorded. Downloads were mostly found for datasets related to topics considered popular in the research field, such as the MOOCs produced by MIT. Data from the only platform that provides Altmetric information show that, in a few cases, researchers used social network sites to share their Open Datasets. As for ResearchGate, the platform provides a better range of metrics, but the limited number of datasets retrieved restricts the generalization of results. One possible reason for the low numbers on this platform is the questionable reliability of academic social network site metrics, which might have discouraged research groups from also making their datasets available on ResearchGate. However, the absence of Open Data on general social media as well as on ResearchGate is itself informative about the level of progress of social activity connected to Open Data.

A number of factors may explain the results of the study. The first relates to the culture of career advancement in the field of research studied. In the EU, where the majority of the observed practices occur, the visibility of Open Data sharing is rapidly increasing, especially with the introduction of specific policies. However, the recognition of activities relating to Open Data is still in its infancy. In fact, research institutions and national research assessment systems still tend to favor the publication of accomplished research products (i.e., articles). They also tend to support publication in prestigious, indexed journals, which mostly have restricted access policies [15,40]. In the disciplinary field we studied, this tendency therefore seems to conflict with Open Science in general and with Open Data in particular [55]. Furthermore, Open Data engagement in Educational Technology increasingly involves small groups or individual researchers rather than roles or institutions. This shapes an increasingly complex academic system with its own values [4,56,57].

The second factor is an apparent general lack of professional competency in the area of Open Data. Some authors have highlighted the barriers preventing a full uptake of Open Science practices. These barriers go hand in hand with the need to acquire appropriate skills to navigate the digital abundance (of data) continuously produced in the digital and open world [58]. Some have compared the problem of the appropriation of Open Data to the phenomenon of the digital divide [58]. Indeed, the new approaches to data require complex skills, such as:

- producing appropriate metadata that explain complex data structures;
- applying appropriate licenses for access;
- using proper software to ensure the machine readability of data and thus support interoperability.

Moreover, the way data are packaged and presented influences their reuse and sharing in expanded networks [59,60,61]. The potential embedded in Open Data cannot be directly transformed into effective Open Science practices unless stakeholders' skills are developed and purposive professional development programs are guaranteed [60,62].

While this study has provided a preliminary analysis of Open Data practices in the field of Educational Technology, a number of limitations also need to be highlighted.

Firstly, the sample used for this study was selected as a small percentage of the global results retrieved in the five portals/repositories, and it cannot be considered representative of the total number of datasets. Although the sample was selected through a robust method (randomization), we cannot exclude that significant results were omitted. As this is a preliminary study on the topic, further research should enlarge the sample size in order to draw more robust conclusions.

A second limitation concerns the use of certain keywords combined through Boolean operators. All of the repositories/portals use metadata for indexing and retrieving datasets, but they mediate access to datasets differently, so the same query may not retrieve comparable results across platforms.

A third limitation concerns the method used to build the codebook. Its qualitative categories were either derived inductively or, when pre-defined (the FAIR principles), applied according to the researchers' judgment. The inductive derivation of categories is, however, partly justified by the lack of shared ontologies to classify subareas in the sector of Educational Technology. Interrater agreement served to control the reliance on the researchers' judgment.

Another important limitation relates to the type of data collected and to the method connected to the construct of "academics' social practices". We extracted the data manually from the ODs and from a single academic social network site (ResearchGate). As a quantitative method, this approach is limited to anonymous user parameters. The user experience could instead be explored further through qualitative and ethnographic approaches, to gain insights into users' activities, habits, and motivations.
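The text does not state which agreement statistic was used. Purely as an illustration, Cohen's kappa is one common choice for two coders assigning nominal categories: kappa = (p_o - p_e) / (1 - p_e). Here p_o is the observed proportion of agreement, and p_e is the agreement expected by chance from each coder's category frequencies. The following minimal Python sketch uses hypothetical category labels and codings, not data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two coders assigning nominal categories to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both coders must code the same non-empty list of items")
    n = len(rater_a)
    # p_o: proportion of items on which the two coders agree
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: chance agreement from each coder's marginal category frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = freq_a.keys() | freq_b.keys()
    p_e = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if p_e == 1.0:
        # Both coders used one and the same category for every item;
        # kappa is undefined here, so 1.0 is returned by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes (A, B, C) for six items, not data from this study.
coder_1 = ["A", "A", "B", "B", "C", "A"]
coder_2 = ["A", "B", "B", "B", "C", "A"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # prints 0.739
```

In this example, the coders agree on five of six items (p_o = 5/6). Chance agreement is p_e = 13/36, so kappa is 17/23. This is lower than raw agreement because part of the agreement would be expected by chance alone.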

Future studies might address these limitations by considering larger samples along with diverse methods of investigation. Moreover, this study was conducted in the sector of Educational Technology. Further work on the scholarly appropriation of Open Data practices would therefore be particularly valuable to investigate scholars' habits and attitudes in different disciplinary areas and on diverse social media platforms. Along with observational approaches, mixed methods and the triangulation of methodological approaches could provide an extensive overview of the changes currently affecting Open Data sharing in social media, a practice that reinforces the principles of Open Science.

Author Contributions

Conceptualization: J.E.R. and S.M.; Investigation: J.E.R. and S.M.; Methodology: J.E.R.; Writing—original draft: J.E.R.; Writing—review & editing: S.M.

Funding

Ministerio de Ciencia e Innovación: RYC-2016-19589.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

| No. | Title | Authors | URL |
|---|---|---|---|
| 1 | Community health workers and mobile technology: a systematic review of the literature | Braun, Rebecca; Catalani, Caricia; Wimbush, Julian; and Israelski, Dennis | https://explore.openaire.eu/search/dataset?datasetId=r37980778c78::f9238306682bb3e6f158e0654a120d42 |
| 2 | 1.2 Million kids and counting—mobile science laboratories drive student interest in STEM | Amanda L. Jones and Mary K. Stapleton | https://figshare.com/collections/1_2_million_kids_and_counting_Mobile_science_laboratories_drive_student_interest_in_STEM/3780572 |
| 3 | Technology, attributions, and emotions in post-secondary education: an application of Weiner's attribution theory to academic computing problems | Rebecca Maymon, Nathan C. Hall, Thomas Goetz, Andrew Chiarella, and Sonia Rahim | https://figshare.com/collections/Technology_attributions_and_emotions_in_post-secondary_education_An_application_of_Weiner_s_attribution_theory_to_academic_computing_problems/4029124 |
| 4 | PyramidApp configurations and participants behavior data set | Kalpani Manathunga and Davinia Hernández-Leo | https://zenodo.org/record/375555#.W7yH9WgzY2w |
| 5 | Classification of word levels with usage frequency, expert opinions, and machine learning | Guzey, Onur; Sohsah, Gihad; and Unal, Muhammed | https://zenodo.org/record/12501#.W7yIBWgzY2w |
| 6 | Human-centered design methods to empower "teachers as designers" | Garreta Domingo, Muriel; Sloep, Peter; and Hernández-Leo, Davinia | https://zenodo.org/record/1181955#.W7yIFWgzY2w |
| 7 | Supporting awareness in communities of learning design practice | Konstantinos Michos and Davinia Hernández-Leo | https://zenodo.org/record/1209079#.W7yIJ2gzY2w |
| 8 | Massively open online course for educators (MOOC-Ed) network data set | Kellogg, Shaun and Edelmann, Achim | http://dx.doi.org/10.7910/DVN/ZZH3UB |
| 9 | On technological determinism: a typology, scope conditions, and a mechanism | Dafoe, Allan | http://dx.doi.org/10.7910/DVN/28473 |
| 10 | Towards vocational translation in German studies in Nigeria and beyond: lessons from translation teaching and practice in Germany | Oyetoyan, Oludamilola Iyadunni | https://zenodo.org/record/57199 |
| 11 | Results of a research software programming and development survey at the University of Reading | Darby, Robert | https://zenodo.org/record/1166019 |
| 12 | Mathan—fostering the intelligent novice: learning from errors with metacognitive tutoring | Ken Koedinger | https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=1007 |
| 13 | Geometry angles—North Hills Spring 2003 | John Stamper and Steve Ritter | https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=886 |
| 14 | Dataset: Assistments Math 2004–2005 | Neil Heffernan | https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=92 |
| 15 | Middle school gaming the system (two schools and four lessons) 2002–2005 v1 | Ryan Baker | https://pslcdatashop.web.cmu.edu/DatasetInfo?datasetId=379 |
| 16 | Instructional factors analysis | Min Chi | https://pslcdatashop.web.cmu.edu/Project?id=158 |
| 17 | The Stanford MOOCPosts data set | Akshay Agrawal and Andreas Paepcke | https://datastage.stanford.edu/StanfordMoocPosts/ |
| 18 | 2009-2010 ASSISTment data | Neil Heffernan | https://sites.google.com/site/assistmentsdata/home/assistment-2009-2010-data |
| 19 | 2015 ASSISTments skill builder data | Neil Heffernan | https://sites.google.com/site/assistmentsdata/home/2015-assistments-skill-builder-data |
| 20 | Head-mounted eye tracking: a new method to describe infant looking | Franchak, J. M., Kretch, K. S., Soska, K. C., and Adolph, K. E. | https://nyu.databrary.org/volume/124 |
| 21 | Socioeconomic status indicators of HarvardX and MITx participants 2012–2014 | Hansen, John and Reich, Justin | https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/29779 |
| 22 | CAMEO Dataset: detection and prevention of "multiple account" cheating in massively open online courses | Northcutt, Curtis; Ho, Andrew; and Chuang, Isaac | https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/3UKVOR |
| 23 | HarvardX-MITx person-course academic year 2013 de-identified dataset, version 2.0 | MITx and HarvardX | https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/26147 |

References

1. DG CONNECT European Commission. Digital Science in Horizon 2020; DG CONNECT European Commission: Brussels, Belgium, 2013.

2. Fecher, B.; Friesike, S. Open Science: One Term, Five Schools of Thought. In Opening Science; Bartling, S., Friesike, S., Eds.; Springer: Cham, Switzerland, 2014; pp. 17–47.

3. Nielsen, M.A. Reinventing Discovery: The New Era of Networked Science; Princeton University Press: Princeton, NJ, USA, 2012; ISBN 9780691148908.

4. Veletsianos, G.; Kimmons, R. Scholars in an increasingly open and digital world: How do education professors and students use Twitter? Internet High. Educ. 2016, 30, 1–10.

5. Weller, M. The Digital Scholar: How Technology Is Transforming Scholarly Practice; Bloomsbury Academic: London, UK, 2011; ISBN 1849666253.

6. Veletsianos, G.; Shepherdson, P. Who studies MOOCs? Interdisciplinarity in MOOC research and its changes over time. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 1–17.

7. Manca, S.; Ranieri, M. Exploring Digital Scholarship. A Study on Use of Social Media for Scholarly Communication among Italian Academics. In Research 2.0 and the Impact of Digital Technologies on Scholarly Inquiry; Esposito, A., Ed.; IGI Global: Hershey, PA, USA, 2017; pp. 117–142. ISBN 9781522508311.

8. Li, J.; Greenhow, C. Scholars and social media: tweeting in the conference backchannel for professional learning. EMI. Educ. Media Int. 2015, 52, 1–14.

9. Borgman, C.L. Big Data, Little Data, No data: Scholarship in the Networked World; MIT Press: Cambridge, MA, USA, 2015; ISBN 978-0-262-02856-1.

10. Molloy, J.C. The open knowledge foundation: Open data means better science. PLoS Biol. 2011, 9, e1001195.

11. Zawacki-Richter, O.; Latchem, C. Exploring four decades of research in Computers & Education. Comput. Educ. 2018, 122, 136–152.

12. Bond, M.; Zawacki-Richter, O.; Nichols, M. Revisiting five decades of educational technology research: A content and authorship analysis of the British Journal of Educational Technology. Br. J. Educ. Technol. 2019, 50, 12–63.

13. Manca, S. ResearchGate and Academia.edu as networked socio-technical systems for scholarly communication: A literature review. Res. Learn. Technol. 2018, 26, 1–16.

14. Borrego, Á. Institutional repositories versus ResearchGate: The depositing habits of Spanish researchers. Learn. Publ. 2017, 30, 185–192.

15. Stewart, B.E. In abundance: Networked participatory practices as scholarship. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 318–340.

16. Burgelman, J.-C.; Osimo, D.; Bogdanowicz, M. Science 2.0 (change will happen….). First Monday 2010, 15.

17. Baack, S. Datafication and empowerment: How the open data movement re-articulates notions of democracy, participation, and journalism. Big Data Soc. 2015, 2.

18. European Commission—RISE—Research Innovation and Science Policy Experts. Mallorca Declaration on Open Science: Achieving Open Science; European Commission: Mallorca, Spain, 2016.

19. H2020 Programme Guidelines on FAIR Data Management (V3.0); European Commission: Brussels, Belgium, 2016.

20. Wellcome Trust. Wellcome signs open data concordat. Wellcome Trust Blog, 28 July 2016.

21. NWO. Open Science. Available online: https://www.nwo.nl/en/policies/open+science (accessed on 2 November 2018).

22. CERN. CMS Data Preservation, Re-Use and Open Access Policy; CERN Open Data Portal; CERN: Geneva, Switzerland, 2018.

23. Bill & Melinda Gates Foundation. Gates Open Research. 2017. Available online: https://gatesopenresearch.org/about/policies#dataavail (accessed on 2 November 2018).

24. McKiernan, E.C.; Bourne, P.E.; Brown, C.T.; Buck, S.; Kenall, A.; Lin, J.; McDougall, D.; Nosek, B.A.; Ram, K.; Soderberg, C.K.; et al. How open science helps researchers succeed. eLife 2016, 5, 1–19.

25. Bourne, P.E.; Clark, T.; Dale, R.; De Waard, A.; Herman, I.; Hovy, E.; Shotton, D. Improving future research communication and e-scholarship: A summary of findings. Informatik-Spektrum 2012, 35, 56–58.

26. van der Zee, T.; Reich, J. Open Education Science. AERA Open 2018, 4, 1–15.

27. Veletsianos, G.; Kimmons, R. Networked Participatory Scholarship: Emergent techno-cultural pressures toward open and digital scholarship in online networks. Comput. Educ. 2012, 58, 766–774.

28. Scanlon, E. Digital futures: Changes in scholarship, open educational resources and the inevitability of interdisciplinarity. Arts Humanit. High. Educ. 2011, 11, 177–184.

29. Pearce, N.; Weller, M.; Scanlon, E.; Kinsley, S. Digital Scholarship Considered: How New Technologies Could Transform Academic Work. in Education 2010, 16, 33–44.

30. Greenhow, C.; Gleason, B. Social scholarship: Reconsidering scholarly practices in the age of social media. Br. J. Educ. Technol. 2014, 45, 392–402.

31. Veletsianos, G. Social Media in Academia: Networked Scholars; Routledge: Abingdon, UK, 2016; ISBN 9781138822757.

32. Manca, S.; Ranieri, M. "Yes for sharing, no for teaching!": Social Media in academic practices. Internet High. Educ. 2016, 29, 63–74.

33. Donelan, H. Social media for professional development and networking opportunities in academia. J. Furth. High. Educ. 2016, 40, 706–729.

34. Gu, F.; Widén-Wulff, G. Scholarly communication and possible changes in the context of social media. Electron. Libr. 2011, 29, 762–776.

35. Rowlands, I.; Nicholas, D.; Russell, B.; Canty, N.; Watkinson, A. Social media use in the research workflow. Learn. Publ. 2011, 24, 183–195.

36. Lupton, D. "Feeling Better Connected": Academics' Use of Social Media; News and Media Research Centre (UC): Canberra, Australia, 2014.

37. Boyer, E.L. Scholarship Reconsidered: Priorities of the Professoriate; Carnegie Foundation for the Advancement of Teaching: San Francisco, CA, USA, 1990; Volume 1997, ISBN 0787940690.

38. Raffaghelli, J.E.; Cucchiara, S.; Manganello, F.; Persico, D. Different views on digital scholarship: Separate worlds or cohesive research field? Res. Learn. Technol. 2016, 24, 1–17.

39. Goodfellow, R. Scholarly, digital, open: an impossible triangle? Res. Learn. Technol. 2014, 21, 1–15.

40. Scanlon, E. Scholarship in the digital age: Open educational resources, publication and public engagement. Br. J. Educ. Technol. 2014, 45, 12–23.

41. Nicholas, D.; Herman, E.; Jamali, H.R. Emerging Reputation Mechanisms for Scholars; European Commission: Seville, Spain, 2015.

42. Hoffmann, C.P.; Lutz, C.; Meckel, M. A relational altmetric? Network centrality on ResearchGate as an indicator of scientific impact. J. Assoc. Inf. Sci. Technol. 2016, 67, 765–775.

43. Kuo, T.; Tsai, G.Y.; Jim Wu, Y.-C.; Alhalabi, W. From sociability to creditability for academics. Comput. Hum. Behav. 2017, 75, 975–984.

44. Niyazov, Y.; Vogel, C.; Price, R.; Lund, B.; Judd, D.; Akil, A.; Mortonson, M.; Schwartzman, J.; Shron, M. Open Access Meets Discoverability: Citations to Articles Posted to Academia.edu. PLoS ONE 2016, 11, e0148257.

45. Thelwall, M.; Kousha, K. ResearchGate: Disseminating, communicating, and measuring Scholarship? J. Assoc. Inf. Sci. Technol. 2015, 66, 876–889.

46. Viberg, O.; Hatakka, M.; Bälter, O.; Mavroudi, A. The current landscape of learning analytics in higher education. Comput. Hum. Behav. 2018, 89, 98–110.

47. Kraker, P.; Lex, E. A Critical Look at the ResearchGate Score as a Measure of Scientific Reputation. In Proceedings of the Quantifying and Analysing Scholarly Communication on the Web Workshop (ASCW'15), Oxford, UK, 28 June–1 July 2015.

48. Nicholas, D.; Clark, D.; Herman, E. ResearchGate: Reputation uncovered. Learn. Publ. 2016, 29, 173–182.

49. Orduna-Malea, E.; Martín-Martín, A.; Thelwall, M.; Delgado López-Cózar, E. Do ResearchGate Scores create ghost academic reputations? Scientometrics 2017, 112, 443–460.

50. Ortega, J.L. Relationship between altmetric and bibliometric indicators across academic social sites: The case of CSIC's members. J. Informetr. 2015, 9, 39–49.

51. Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.-W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018.

52. Raffaghelli, J.E.; Manca, S. Is there a social life in Open Data? Open datasets exploring practices in Educational Technology Research. Zenodo 2019.

53. FitzGerald, E.; Jones, A.; Kucirkova, N.; Scanlon, E. A literature synthesis of personalised technology-enhanced learning: what works and why. Res. Learn. Technol. 2018, 26, 1–16.

54. Bodily, R.; Leary, H.; West, R.E. Research trends in instructional design and technology journals. Br. J. Educ. Technol. 2019, 50, 64–79.

55. Salmi, J. Study on Open Science: Impact, Implications and Policy Options; European Commission: Brussels, Belgium, 2015; ISBN 9789279501814.

56. Verhaar, P.; Schoots, F.; Sesink, L.; Frederiks, F. Fostering Effective Data Management Practices at Leiden University. Lib. Q. 2017, 27, 1–22.

57. Veletsianos, G. A Case Study of Scholars' Open and Sharing Practices. Open Prax. 2015, 7, 199–209.

58. Gurstein, M.B. Open data: Empowering the empowered or effective data use for everyone? First Monday 2011, 16, 1–8.

59. Zuiderwijk, A.; Janssen, M.; Choenni, S.; Meijer, R.; Alibaks, R.S. Socio-technical Impediments of Open Data. Electron. J. e-Gov. 2012, 10, 156–172.

60. Janssen, M.; Charalabidis, Y.; Zuiderwijk, A. Benefits, Adoption Barriers and Myths of Open Data and Open Government. Inf. Syst. Manag. 2012, 29, 258–268.

61. Hey, A.J.G. The Fourth Paradigm: Data-Intensive Scientific Discovery; Microsoft Research: Redmond, WA, USA, 2009; ISBN 0982544200.

62. Sieber, R.E.; Johnson, P.A. Civic open data at a crossroads: Dominant models and current challenges. Gov. Inf. Q. 2015, 32, 308–315.

| 1 | European Data Portal - https://www.europeandataportal.eu/elearning/en/module1/#/id/co-01. |

| 2 | The framework programme for research in Europe, https://ec.europa.eu/programmes/horizon2020/en/. |

| 3 | |

| 4 | See for example: https://data.mendeley.com/datasets/7yj5w435hh/2. |

| 5 | |

| 6 | For the former case in Figshare, see the Altmetrics: https://figshare.altmetric.com/details/20198125. |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).