Who Is (Likely) Peer-Reviewing Your Papers? A Partial Insight into the World’s Top Reviewers

Abstract

Commentary

- Is it healthy for the advancement of science that academics review three or more papers per week?

- Is it worth rewarding this frenzy of peer-reviewing more and more papers, and trying to excel in yet another metric imposed on us?

- Is there a need to scrutinize the profiles of the world’s top reviewers on platforms like Publons, and the journals for which they review, to prevent the top places in the list from being populated, as they often are, by inexperienced academics reviewing for predatory journals?

- Can one still be considered an academic if their full-time job is reviewing other people’s papers?

- Is the current peer-review system best suited to meet the future challenges of academic publishing, given the impressive annual growth in the number of papers produced?

Funding

Conflicts of Interest

References

1. Azoulay, P.; Graff-Zivin, J.; Uzzi, B.; Wang, D.; Williams, H.; Evans, J.A.; Jin, G.Z.; Lu, S.F.; Jones, B.F.; Börner, K. Toward a more scientific science. Science 2018, 361, 1194–1197.

2. Smith, R. Peer review: A flawed process at the heart of science and journals. J. R. Soc. Med. 2006, 99, 178–182.

3. Cole, S.; Simon, G.A. Chance and consensus in peer review. Science 1981, 214, 881–886.

4. Li, D.; Agha, L. Big names or big ideas: Do peer-review panels select the best science proposals? Science 2015, 348, 434–438.

5. Bornmann, L. Scientific peer review. Annu. Rev. Inf. Sci. Technol. 2011, 45, 197–245.

6. Nielsen, M. Three Myths about Scientific Peer Review. Michael Nielsen’s Blog, 8 January 2009. Available online: http://michaelnielsen.org/blog/three-myths-about-scientific-peer-review/ (accessed on 18 November 2018).

7. Bohannon, J. Who’s Afraid of Peer Review? Science 2013, 342, 60–65.

8. Ioannidis, J.P. Why most published research findings are false. PLoS Med. 2005, 2, e124.

9. Bunner, C.; Larson, E.L. Assessing the quality of the peer review process: Author and editorial board member perspectives. Am. J. Infect. Control 2012, 40, 701–704.

10. Evans, A.T.; McNutt, R.A.; Fletcher, S.W.; Fletcher, R.H. The characteristics of peer reviewers who produce good-quality reviews. J. Gen. Intern. Med. 1993, 8, 422–428.

11. Cabezas De Fierro, P.; Meruane, O.S.; Espinoza, G.V.; Herrera, V.G. Peering into peer review: Good quality reviews of research articles require neither writing too much nor taking too long. Transinformação 2018, 30, 209–218.

12. Publons | Clarivate Analytics. Global State of Peer Review. 2018. Available online: https://publons.com/community/gspr/ (accessed on 18 November 2018).

13. Clarivate Analytics | Web of Science. Look Up to the Brightest Stars: Introducing 2017’s Highly Cited Researchers. 2018. Available online: https://hcr.clarivate.com/wp-content/uploads/2017/11/2017-Highly-Cited-Researchers-Report-1.pdf (accessed on 18 November 2018).

¹ For a definition of the different levels of blinding, the reader is referred to “Global State of Peer Review 2018” by Publons | Clarivate Analytics [12].

² This aspect of global peer review is dealt with comprehensively in [12].

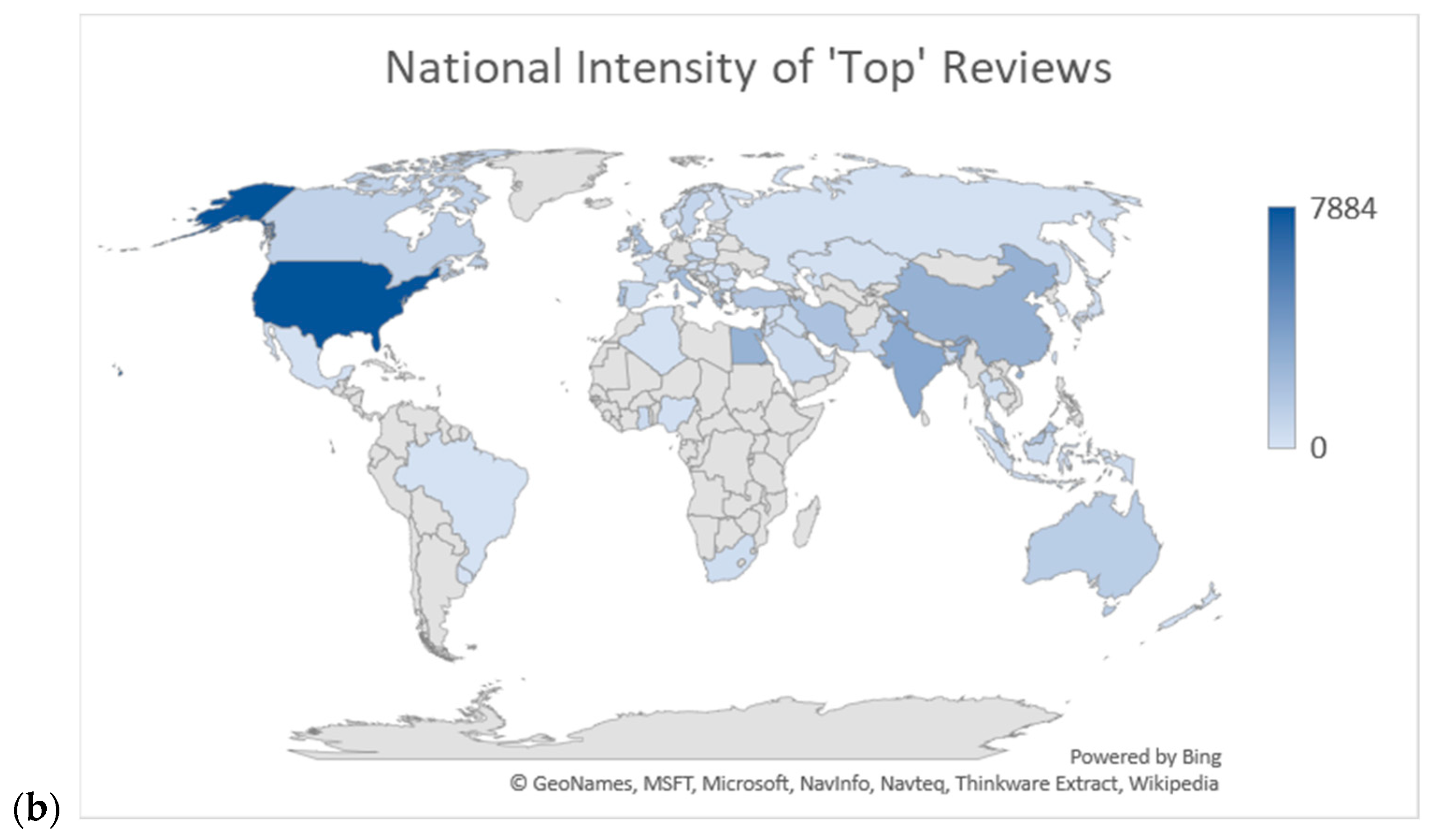

| Country | Outputs * | Reviews | Reviewers |
|---|---|---|---|
| USA | 1st | 1st | 1st |
| China | 2nd | 3rd | 3rd |
| India | 3rd | 2nd | 2nd |
| UK | 4th | 8th | 9th |
| Japan | 5th | 30th | 29th |
| Iran | 6th | 9th | 6th |
| Brazil | 7th | 52nd | 35th |
| Australia | 8th | 12th | 12th |
| Germany | 9th | N/A | N/A |
| Canada | 10th | 14th | 13th |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pomponi, F.; D’Amico, B.; Rye, T. Who Is (Likely) Peer-Reviewing Your Papers? A Partial Insight into the World’s Top Reviewers. Publications 2019, 7, 15. https://doi.org/10.3390/publications7010015


