Abstract

Scholarly research faces threats to its sustainability on multiple domains (access, incentives, reproducibility, inclusivity). We argue that “after-the-fact” research papers do not help and actually cause some of these threats because the chronology of the research cycle is lost in a research paper. We propose to give up the academic paper and propose a digitally native “as-you-go” alternative. In this design, modules of research outputs are communicated along the way and are directly linked to each other to form a network of outputs that can facilitate research evaluation. This embeds chronology in the design of scholarly communication and facilitates the recognition of more diverse outputs that go beyond the paper (e.g., code, materials). Moreover, using network analysis to investigate the relations between linked outputs could help align evaluation tools with evaluation questions. We illustrate how such a modular “as-you-go” design of scholarly communication could be structured and how network indicators could be computed to assist in the evaluation process, with specific use cases for funders, universities, and individual researchers.

Keywords:

evaluation; network; communication; paper; metaresearch; decentralization; decentralisation; publishing

1. Introduction

Scholarly research faces threats to its sustainability and has been said to face a reproducibility crisis [1], amongst other pernicious problems such as access and exclusivity. The underlying cause might be the way we have collectively designed the reporting and rewarding of research (implicitly or explicitly). The current scholarly communication system is primarily organized around researchers who publish static (digital) research papers in scholarly journals. Many of these journals have artificial page limits (in the digital age), which leads to artificial scarcity and subsequently increases the perceived prestige of such a journal due to high rejection rates (71% on average for journals of the American Psychological Association in 2016; https://perma.cc/Q7AT-RN5C). Furthermore, scholarly communication has become highly centralized, with over 50% of all papers published by as few as five publishers (over 70% for social sciences) [2]. Centralization has introduced knowledge discrimination, as publishers are able to influence who can access scholarly knowledge and what gets published; it also allows other single points of failure to arise, each with its own consequences (e.g., censorship; https://perma.cc/HDX8-DJ8F). In order to have a sustainable scholarly research system, we consider it necessary to implement changes that provide progress on many of these threats at once instead of addressing them individually.

Systems design directly affects what the system and the people who use it can do; scholarly communication still retains an analog-based design, affecting the effectiveness of the dissemination and production of knowledge (see also [3]). Researchers and institutions are evaluated on where and how many papers they publish (as a form of prestige). For example, an oft-used measure of quality is the Journal Impact Factor (JIF) [4]. The JIF is also frequently used to evaluate the ‘quality’ of individual papers under the assumption that a high impact factor predicts the success of individual papers (this assumption has been debunked many times) [5,6,7]. Many other performance indicators in the current system (e.g., citation counts and h-indices) resort to generic bean counting. Inadequate evaluation measures leave universities, individual researchers, and funders (amongst others) in the dark with respect to the substantive questions they might have about the produced scholarly knowledge. Additionally, work that is not aptly captured by the authorship of papers is likely to receive less recognition (e.g., writing software code) due to reward systems counting publications instead of contributions (see also https://perma.cc/MUH7-VCA9). It is implausible that a paper-based approach to scholarly communication can escape the consequences of the paper’s limitations.

A scholarly communication system is supposed to serve five functions; it can do so in a narrow sense, as it currently does, or in a wider sense. These functions of the scholarly communication system are: (1) registration; (2) certification; (3) awareness; (4) archival [8]; and (5) incentives [9]. A narrow fulfillment of, for example, the registration function would mean that findings that are published are registered; however, not all findings are registered (e.g., due to selective publication; [10]). Similarly, certification is supposed to occur through peer review, but peer review can exacerbate human biases in the assessment of quality (e.g., statistical significance increasing the perceived quality of methods; [11]).

We propose an alternative design for scholarly communication based on modular research outputs with direct links between subsequent modules, forming a network. In contrast, a paper-based approach communicates after a whole research cycle is completed. This concept of modular communication was proposed two decades ago [9,12,13,14,15,16]. These modules could be similar to sections of a research paper, but extend to modular research outputs such as software or materials. We propose to implement this modular communication on an “as-you-go” basis and include direct links to indicate provenance. This respects the chronological nature of research cycles and decreases the possibility for pernicious problems such as selective publication and altering hypotheses after results are known (HARKing) [17].

With a network structure between modules of knowledge, we can go beyond citations and facilitate different questions about single items or collectives of knowledge. For example, how central is a single module in the larger network? Or, how densely interconnected is this collective of knowledge modules? A network could facilitate question-driven evaluation where an indicator needs to be operationalized per question, instead of indicators that have become a goal in themselves and become invalidated by clear cheating behaviors [18,19]. As such, we propose to undertake an evaluation of the research process itself with question formulation, operationalizations, and data collection (i.e., constructing the network of interest).

2. Network Structure

Research outputs are typically research papers, which report on at least one research cycle after it has occurred. The communicative design of papers embeds hindsight and its biases in the reporting of results by being inherently reconstructive. Moreover, this design eliminates the verification of the chronology within a paper. On the other hand, the paper encompasses so much that citations to other papers can indicate a tangent or a crucial link. Additionally, the paper is a bottleneck for what is communicated; it cannot properly deal with code, data, materials, etc.

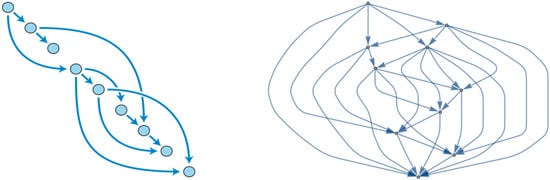

When stages of research are communicated separately and as they occur, it changes the communicative design to eliminate hindsight and allows more types of outputs to be communicated as separate modules. For example, a theory can be communicated first and hypotheses communicated second, as a direct descendant of the theory. Subsequently, a study design can be linked as a direct descendant of the hypotheses, materials as a direct descendant of the design, and so on. This would allow for the incorporation of materials, data, and analysis code (amongst others). In this structure, many modules could link to a single module (e.g., replication causes many data modules to connect to the same materials module) but one module can also link to many other modules (e.g., when hypotheses follow from multiple theories or when a meta-analytic module is linked to many results modules).
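As a minimal sketch of this structure, each module can record its direct parents, and a check can confirm that every parent was communicated before its child. The `Module` class, its field names, and the integer timestamps are illustrative assumptions; the proposal itself does not prescribe a data format.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """One research output; all field names here are illustrative assumptions."""
    name: str
    timestamp: int                                # order of communication (hypothetical unit)
    parents: list = field(default_factory=list)   # names of direct parent modules


def chronology_violations(modules):
    """Return (child, parent) pairs where the parent is missing or not older than the child."""
    by_name = {m.name: m for m in modules}
    bad = []
    for m in modules:
        for p in m.parents:
            if p not in by_name or by_name[p].timestamp >= m.timestamp:
                bad.append((m.name, p))
    return bad


# One research cycle, plus a replication that adds a second data module
# linked to the same materials module.
cycle = [
    Module("theory", 1),
    Module("hypotheses", 2, ["theory"]),
    Module("design", 3, ["hypotheses"]),
    Module("materials", 4, ["design"]),
    Module("data", 5, ["materials"]),
    Module("replication_data", 6, ["materials"]),
]

violations = chronology_violations(cycle)
```

In this example `violations` is empty because every parent precedes its child; a module that claimed a later module as its parent would be reported.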

Figure 1 shows two simple examples of how these different modular research outputs (i.e., modules) would directly connect to each other. The connection between these modules only shows the direct descendance and could still include citations to other pieces of information. For example, a discussion module could be a direct descendant of a results module and could still include citations to other relevant findings (which can themselves be modules or research papers). When one research cycle ends, a new one can link to the last module, continuing the chain of descendance. Incorporating the direct descendance of these knowledge modules builds a different kind of network than that formed by citation and authorship. As such, this network would be an addition to these already existing citation and authorship networks; it does not seek to replace them.

Figure 1.

Two Directed Acyclic Graphs (DAGs) of connected research stages. The ordering is chronological (top-bottom) and therefore modules that are situated below one another cannot refer upwards. The left panel shows a less complex network of modules; the right panel shows a more extensive network of modules.

Given that these modular outputs would be communicated as they occur, chronology is directly embedded in the communication process with many added benefits. For example, preregistration of hypotheses is embedded with modular communication where predictions are communicated when they are made by default [20]. Moreover, if modular outputs are communicated as they are produced, selective reporting (i.e., publication bias) would be reduced because the data would already have been communicated before results are generated and could affect the decision to report.

With immutable append-only registers, the chronology and content integrity of these outputs can be ensured and preserved over time. This can occur efficiently and elegantly with the Dat protocol (without a blockchain; https://perma.cc/GC8X-VQ4K). In short, the Dat protocol is a peer-to-peer protocol (i.e., decentralized and openly accessible by design) that provides immutable logs of each change that occurs within a folder, which is given a permanent unique address on the peer-to-peer Web (36^64 addresses possible) [21]. The full details, implications, and potential implementations of this protocol for scholarly communication fall outside of the scope of this paper (an extended technical explanation of the application of the Dat protocol can be found here: https://dat-com-chris.hashbase.io).
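The general idea of an append-only register can be illustrated with a generic hash chain. This sketch is not the Dat protocol and does not use its data structures or API; the `AppendOnlyLog` class and its methods are hypothetical names chosen for illustration. Each entry stores the hash of the previous entry, so altering or reordering earlier content becomes detectable.

```python
import hashlib


class AppendOnlyLog:
    """Hypothetical hash-chained log; illustrates tamper evidence only."""

    def __init__(self):
        self.entries = []  # list of (content, previous_hash, entry_hash)

    @staticmethod
    def _digest(content, previous_hash):
        return hashlib.sha256((previous_hash + content).encode("utf-8")).hexdigest()

    def append(self, content):
        previous_hash = self.entries[-1][2] if self.entries else ""
        entry_hash = self._digest(content, previous_hash)
        self.entries.append((content, previous_hash, entry_hash))
        return entry_hash

    def verify(self):
        """Recompute every hash; False if any entry was changed or reordered."""
        previous_hash = ""
        for content, stored_previous, stored_hash in self.entries:
            if stored_previous != previous_hash:
                return False
            if self._digest(content, previous_hash) != stored_hash:
                return False
            previous_hash = stored_hash
        return True


log = AppendOnlyLog()
log.append("hypotheses: effect A > effect B")
log.append("data: 120 observations collected")
intact = log.verify()

# Rewriting the hypotheses after the data exist breaks the chain.
log.entries[0] = ("hypotheses: effect A < effect B",) + log.entries[0][1:]
tampered_ok = log.verify()
```

Here `intact` is true for the untouched log, while `tampered_ok` is false once the first entry has been rewritten, which is the property that lets a reader trust that predictions preceded the data.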

A continuous and network-based communication system could take a wider interpretation of the scholarly functions it is supposed to serve [8,9]. Registration would become more complete, because selective publication based on results is preempted by embedding communication before any results are known. Certification would be improved by embedding the chronology of a research cycle into the communication of research, ensuring that predictions precede results [20]. Awareness would be improved by using open by design principles, whereas awareness is now limited by financial means to access scholarly papers [22]. The archival function would not only be simplified with peer-to-peer protocols, but would also allow anyone to create a copy and could result in excessive redundancy under the Lots Of Copies Keeps Stuff Safe principle [23]. In the next sections, we expand on how incentives could be adjusted in such a network structure to facilitate both the evaluation of research(ers) and the planning of research.

3. Indicators

With a chronological ordering of various modular research outputs and their parent relations, a directional adjacency matrix can be extracted for network analysis. Table 1 shows the directional adjacency matrix for Figure 1. Parent modules must precede their child modules in time; therefore, only the J(J − 1)/2 cells on one side of the diagonal of the adjacency matrix can be filled in, where J is the number of research modules.
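To make the extraction concrete, the following sketch builds a directional adjacency matrix from parent relations. The module names and links are hypothetical and are not the ones in Table 1; the convention that rows are parents and columns are children is likewise an assumption made for this example.

```python
# Modules in chronological order; the names and links are hypothetical.
modules = ["theory", "hypotheses", "design", "materials", "data"]
parents = {
    "hypotheses": ["theory"],
    "design": ["hypotheses"],
    "materials": ["design"],
    "data": ["materials", "design"],
}

index = {name: i for i, name in enumerate(modules)}
J = len(modules)

# adjacency[p][c] == 1 means module c is a direct child of module p.
adjacency = [[0] * J for _ in range(J)]
for child, parent_list in parents.items():
    for parent in parent_list:
        p, c = index[parent], index[child]
        assert p < c, "a parent must precede its child in time"
        adjacency[p][c] = 1

possible_links = J * (J - 1) // 2          # cells above the diagonal
filled_links = sum(map(sum, adjacency))    # links actually present
```

With five modules, `possible_links` is 10 and `filled_links` is 5; every cell on or below the diagonal stays zero because no module can be the parent of an earlier one.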

With a directional adjacency matrix, countless network indicators can be calculated that could be useful in research evaluation depending on the questions asked. However, not all network indicators are directly applicable because a time-based component is included in the network (i.e., new outputs cannot refer to even newer outputs). Below, we propose some basic network indicators for evaluating past and future research outputs.

Network indicators could be used to evaluate the network as it exists now or how it developed in the past (i.e., backward-looking evaluation). For example, in-degree centrality could be used to identify highly interconnected modules of information. This measure indicates how many child modules are spawned by a parent module and indicates how much new work a researcher’s output stimulates (e.g., module04 in Table 1 would have an in-degree centrality of 3). To contextualize this, a data module could spawn four results modules and hence have an in-degree centrality of 4. This measure would look only at one generation of child modules, but other measures extend this to incorporate multiple generations of child modules. Katz centrality extends this and computes the centrality over N generations of child modules [24], whereas traditional in-degree centrality calculates centrality for N = 1 generation. For example, two data modules that each spawn five results modules would have the same in-degree centrality, but could have different Katz centralities if only one of those two networks has a third generation of modules included. If multi-generation indicators are relevant, Katz centrality measures could provide operationalizations of such measures.
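The example of two data modules can be sketched in a few lines. This is a simplified Katz-style score, not a full implementation of the measure in [24]: each descendant path of length g contributes alpha^g, and the attenuation factor `alpha = 0.5`, the function names, and the module names are all assumptions made for illustration.

```python
def in_degree(children, module):
    """Number of direct child modules (one generation)."""
    return len(children.get(module, []))


def katz(children, module, alpha=0.5, generations=None):
    """Attenuated count of descendant paths.

    Each path of length g contributes alpha**g. `generations` caps the depth
    (None means no cap, which terminates because the network is acyclic).
    """
    if generations == 0:
        return 0.0
    remaining = None if generations is None else generations - 1
    return sum(
        alpha * (1.0 + katz(children, child, alpha, remaining))
        for child in children.get(module, [])
    )


# Two hypothetical data modules, each with five results modules;
# only network B has a third generation (a discussion module).
children = {
    "data_a": ["results_a%d" % i for i in range(1, 6)],
    "data_b": ["results_b%d" % i for i in range(1, 6)],
    "results_b1": ["discussion_b1"],
}

degree_a = in_degree(children, "data_a")
degree_b = in_degree(children, "data_b")
katz_a = katz(children, "data_a")
katz_b = katz(children, "data_b")
```

Both modules have an in-degree of 5, but under these assumptions `katz_a` is 2.5 and `katz_b` is 2.75, because the extra generation below `data_b` adds one attenuated path. Setting `alpha=1.0` and `generations=1` reduces the score to the in-degree.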

Another set of network indicators could be used to evaluate how the network would change when new modules are added in the future (i.e., forward-looking evaluation). For example, a researcher who is looking for ways to increase the density in their own network could ask the question, “If I add one module that has k parents, which addition would increase the density the most?” Subsequently, the researcher could inspect the identified connections for inspiration and feasibility. The complexity of the new module could be increased by increasing the number of parent modules to connect (k in the question; e.g., five instead of two). Potentially, this could facilitate creative thinking, where k is gradually increased over time to increase the complexity of the issue from a network perspective.

The indicators we highlighted here are simple proposals. Other indicators from network analysis and graph theory could be applied to the study of knowledge development when a network structure is available, and we hope to see suggestions to answer questions about the network. These kinds of analyses are already done within citation networks (e.g., [25]) and authorship networks (e.g., [26]), but we cannot do so with the provenance or planning of knowledge generation in the current scholarly communication system.

4. Use Cases

We describe three use cases of network-based evaluation to contextualize the ideas proposed above. For each use case, we first provide a general and non-exhaustive overview of the possibilities with network-based evaluation. Subsequently, we specify a scenario for that use case, how an evaluation question flows from that scenario, how an indicator to answer that question could be operationalized, and how that indicator could inform the evaluation process. With these use cases we hope to illustrate that network-based evaluation could align better with the implicit evaluation criteria already present in common research evaluation scenarios.

4.1. Funders

Funders of scholarly research often have specific aims when distributing their financial resources amongst researchers. Funders often use generic “one size fits all” indicators to evaluate the quality of researchers and research (e.g., JIF, h-index, citation counts). Given that funding calls often have specific aims, these funding calls could be used as the basis of research evaluation if we move beyond these generic measures.

A scenario could exist where a funding agency wants to fund researchers to extend an existing and interconnected research line. This is not an implausible scenario, where funding agencies aim to provide several million dollars (or a similar amount in other currencies) in order to increase follow-through in research lines. A specific example might be the Dutch national funding agency’s “Vici” funding scheme, which aims to fund “senior researchers who have successfully demonstrated the ability to develop their own innovative lines of research” (https://perma.cc/GB83-RE4J).

Whether researchers who submitted proposals have actually built a connected research line could be evaluated by looking at how interconnected each researcher’s personal network of modules is. Let us assume that a research line here would mean that new research efforts interconnect with previous efforts by that same researcher (i.e., building on previous work). Additionally, we could assume that building a research line means that the research line becomes more present in the network over the years. Building a research line thus could be reformulated into questions about the network of directly linked output and its development over time.

Operationalizing the concept ‘research line’ as increased interconnectedness of modules over time, we could compute the network density per year. One way of computing density would be to tally the number of links and divide them by the number of possible links. By taking snapshots of the network of outputs of that researcher over, for example, the last five years on 1 January, we could compute an indicator to inform us about the development of the researcher’s network of outputs.
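A minimal sketch of this yearly density computation follows. It assumes that density is the number of links among a researcher's modules divided by the J(J − 1)/2 links that chronology allows; the module names, dates, and links are hypothetical.

```python
from datetime import date


def density(module_names, links):
    """Links among the given modules divided by the J(J-1)/2 possible links."""
    j = len(module_names)
    possible = j * (j - 1) // 2
    if possible == 0:
        return 0.0
    present = sum(1 for child, parent in links
                  if child in module_names and parent in module_names)
    return present / possible


def yearly_density(published, links, years):
    """Density of the network as it stood on 1 January of each year."""
    result = {}
    for year in years:
        snapshot = {name for name, day in published.items() if day < date(year, 1, 1)}
        result[year] = density(snapshot, links)
    return result


# Hypothetical outputs of one researcher: module name -> date communicated.
published = {
    "theory": date(2015, 3, 1),
    "hypotheses": date(2015, 9, 1),
    "design": date(2016, 2, 1),
    "data": date(2016, 11, 1),
    "results": date(2017, 5, 1),
}
# (child, parent) pairs.
links = [
    ("hypotheses", "theory"),
    ("design", "hypotheses"),
    ("design", "theory"),
    ("data", "design"),
    ("results", "data"),
    ("results", "hypotheses"),
]

trend = yearly_density(published, links, [2016, 2017, 2018])
```

In this made-up example the density falls from 1.0 to roughly 0.67 and then to 0.6, since the number of possible links grows faster than the number of links added. A committee would read such a trend alongside the other criteria rather than on its own.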

The development of network density over time could help inform the evaluation, but one measure could hardly be deemed the only decision criterion. As such, it only provides an indication as to whether an applicant aligns with the aim of the funding agency. Other questions would still need to be answered by the evaluation committee. For example, is the project feasible or does the proposal extend the previous research line? Some of these other questions could also be seen as questions about the future development of the network and serve as further points of investigation concerning the applicant.

4.2. Universities

Universities can use research evaluation for the internal allocation of resources and to hire new scientists. As such, a research group within a university could apply network analysis to assess how (dis)connected a group’s modules are or how their group compares to similar groups at other institutions. Using network indicators, it could become possible to assess whether a job applicant fulfills certain criteria, such as whether their modules connect to existing modules of a group. If a university wants to stimulate more diversity in research background, network analysis could also be used to identify those who are further removed from the current researchers at the institution. Considering that universities are often evaluated on the same generic indicators as individual researchers (e.g., JIF) in the rankings, such new and more precise evaluation tools might also help specify university goals.

Extending the scenario above, imagine a research group that is looking to hire an assistant professor with the aim of increasing connectivity between the group’s members. The head of the research group made this her personal goal in order to facilitate more information exchange and collaborative potential within the group. By making increased connectivity within the group an explicit aim of the hiring process, it can be incorporated into the evaluation process.

In order to achieve the increased connectivity within the research group, the head of the research group wants to evaluate applicants relatively but also with an absolute standard. Relative evaluation could facilitate applicant selection, but absolute evaluation could facilitate insight into whether any applicant is sufficient to begin with. In other words, relative evaluation here asks who the best applicant is, whereas absolute evaluation asks whether the best applicant is good enough. These decision criteria could be preregistered in order to ensure a fair selection process.

Increased connectivity could be computed as a difference measure of the research group’s network density with and without the applicant. In order to take into account the number of produced modules, the computed density could consider the number of modules of an applicant. Moreover, the head stipulates that the minimum increase in network density needs to be 5 percentage points. To evaluate applicants, each receives a score that is made up of the difference between the current network density and the network density in the event that they were hired. For example, baseline connectivity within a group might be 60%, so the network density would have to be at least 65% for one of the applicants to pass the evaluation criterion.
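The scoring rule can be sketched as follows, again assuming that density is the number of links divided by the J(J − 1)/2 possible links. The group, the two applicants, and their links are hypothetical, and the baseline here is 50% rather than the 60% used in the text.

```python
def density(module_names, links):
    """Links among the given modules divided by the J(J-1)/2 possible links."""
    j = len(module_names)
    possible = j * (j - 1) // 2
    if possible == 0:
        return 0.0
    present = sum(1 for child, parent in links
                  if child in module_names and parent in module_names)
    return present / possible


def density_gain(group_modules, applicant_modules, links):
    """Return (density before, density after, difference) for a prospective hire."""
    before = density(group_modules, links)
    after = density(group_modules | applicant_modules, links)
    return before, after, after - before


group = {"g1", "g2", "g3", "g4"}
applicants = {
    "applicant_a": {"a1"},
    "applicant_b": {"b1", "b2"},
}
# (child, parent) pairs across the group and both applicants.
links = [
    ("g2", "g1"), ("g3", "g1"), ("g4", "g2"),                # within the group
    ("a1", "g1"), ("a1", "g2"), ("a1", "g3"), ("a1", "g4"),  # applicant A builds on the group
    ("b1", "g1"), ("b2", "b1"),                              # applicant B mostly builds on own work
]

threshold = 0.05  # minimum increase of 5 percentage points
scores = {name: density_gain(group, mods, links) for name, mods in applicants.items()}
passes = {name: gain >= threshold for name, (_, _, gain) in scores.items()}
```

Under these assumptions the group density rises from 0.5 to 0.7 with applicant A, who passes the absolute criterion, and falls to about 0.33 with applicant B, who does not. Dividing by the possible links is one way to take the number of an applicant's modules into account.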

If the head of the research group relied purely on the increase in network density as an indicator without further evaluation, a hire that decreases morale in the research group could easily be made. For example, it is reasonable to assume that critics of a research group often link research outputs in a criticism of their work. If such a person applied for a job within that group, the density within the network might be increased but subsequently result in a more hostile work climate. Without evaluating the content of the applicant’s modules that increase the network density, it would be difficult to assess whether they would actually increase information exchange and collaborative potential instead of stifling it.

4.3. Individuals

Individual researchers could use networks to better understand their research outputs and plan new research efforts. For example, simply visualizing a network of outputs could prove a useful tool for researchers to view relationships between their outputs from a different perspective. Researchers looking for new research opportunities could also use network analysis to identify their strengths, by comparing whether specific sets of outputs are more central than others in a larger network. For example, a researcher who writes software for their research might find that their software is more central in a larger network than their theoretical work, which could indicate a fruitful specialization.

One scenario where network evaluation tools could be valuable for individual researchers is the optimization of resource allocation. A researcher might want to revisit previous work and conduct a replication, but only has funds for one such replication. Imagine a researcher wants to identify an effect that they previously studied and that has been central to their new research efforts. The identification of which effect to replicate is intended by this researcher as a safeguard mechanism to prevent further investment in new studies, should a fundamental finding prove to be not replicable.

In this resource allocation scenario, the researcher aims to identify the most central finding in a network. The researcher has conducted many studies throughout their career and does not want to identify the most central finding in the entire network of outputs over the years, but only of the most recent domain in which they have been working. As such, the researcher takes the latest output and traces all preceding outputs automatically to five generations, to create a subset of the full network and to incorporate potential work not done by themselves.

Subsequently, by computing the Katz centrality of the resulting subnetwork, the researcher can compute the number of outputs generated by a finding and how many outputs those outputs generated in return. By assigning this value to each module in the network, the researcher can identify the most central modules. However, these modules need to be investigated subsequently in order to see whether they are findings or something else (e.g., theory; we assume an agnostic infrastructure that does not classify modules).
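The trace and the subsequent scoring can be sketched together. The score below is a simplified Katz-style measure in which each descendant path of length g contributes alpha^g; the function names, `alpha = 0.5`, and the module names are assumptions made for illustration, not part of the proposed infrastructure.

```python
def trace_ancestors(parents, start, max_generations=5):
    """Collect `start` and all ancestors reachable within max_generations links."""
    found = {start}
    frontier = {start}
    for _ in range(max_generations):
        frontier = {p for m in frontier for p in parents.get(m, [])} - found
        if not frontier:
            break
        found |= frontier
    return found


def descendant_score(parents, subset, alpha=0.5):
    """Katz-style score within `subset`: each descendant path of length g adds alpha**g."""
    children = {m: [] for m in subset}
    for child in subset:
        for parent in parents.get(child, []):
            if parent in subset:
                children[parent].append(child)

    cache = {}

    def score(m):
        if m not in cache:
            cache[m] = sum(alpha * (1.0 + score(c)) for c in children[m])
        return cache[m]

    return {m: score(m) for m in subset}


# Hypothetical network: module name -> direct parents.
parents = {
    "finding": ["old_theory"],
    "hyp_b": ["finding"],
    "hyp_c": ["finding"],
    "data_b": ["hyp_b"],
    "data_c": ["hyp_c"],
    "latest": ["data_b", "data_c"],
    "unrelated": ["other"],
}

subset = trace_ancestors(parents, "latest", max_generations=5)
scores = descendant_score(parents, subset)
most_central = max(scores, key=scores.get)
```

In this example the trace excludes `unrelated`, and `finding` receives the highest score (1.75) because two lines of later work descend from it. As the text notes, the researcher would still need to inspect that module to confirm it is a finding rather than, say, a theory.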

Katz centrality can be a useful measure to select which finding to replicate in a multi-generational network, but fails to take into account what replications have already been conducted. When taking the most recent output and looking at its parent(s), grandparent(s), etc., this only looks at the lineage of the finding. However, the children of all these parents are not taken into account in such a trace. As such, the researcher in our scenario might identify an important piece of research to replicate, but fail to recognize that it has already been replicated. Without further inspection of the network for already available replications, resource allocation might be suboptimal after all.

5. Discussion

We propose to communicate research in modular “as-you-go” outputs (e.g., theory followed by hypotheses, etc.) instead of large “after-the-fact” papers. Modular communication opens up the possibility of a network of knowledge to come into existence when these pieces are linked (e.g., results descend from data). This network of knowledge would be supplementary to traditional citation networks and could facilitate new evaluation tools that are based on the question of interest rather than generic “one size fits all” indicators (e.g., Journal Impact Factor, citation counts, number of publications). Given the countless questions and operationalizations possible to evaluate research in a network of knowledge, we hope that this method would increase the focus on indicators as a tool in the evaluation process instead of contributing to the view of indicators being the evaluation process itself [27,28].

We highlighted a few use cases and potential indicators for funders, research collectives, and individuals, but recognize that we are merely scratching the surface of possible use cases and implementations of network analysis in research evaluation. The use cases presented for the various target groups (e.g., universities) can readily be transferred to suit other target groups (e.g., individuals). Award committees might use critical path analysis or network stability analysis to identify key hubs in a network to recognize. Moreover, services could be built to harness the information available in a network to identify people who could be approached for collaborations or to facilitate the ease with which such network analyses can be conducted. Future work could investigate more use cases, qualitatively identify what researchers (or others) would like to know from such networks, and determine how existing network analysis methods could be harnessed to evaluate research and better understand its development over time. Despite our enthusiasm for network-based evaluation, we also recognize the need for exploring the potential negative sides of this approach. Proximity effects might increase bias towards people already embedded in a network and might exacerbate inequalities already present. Researchers might also find ways to game these indicators, which warrants further investigation.

Communicating scholarly research in modular “as-you-go” outputs might also address other threats to research sustainability. In modular “as-you-go” communication, selective publication based on results would be reduced because data would be communicated before results are known. Similarly, the practice of adjusting predictions after results are known would be reduced because predictions would be communicated before data are available (i.e., preregistration by design). Replications (or reanalyses) would be encouraged both for the replicated (the replicated module would lead to more child modules, increasing its centrality) and the replicator (the time investment would be lower; it merely requires adding a data module linked to the materials module of the replicated). Self-plagiarism could be reduced by not forcing researchers to rehash the same theory across papers that spawn various predictions and studies. These various issues (amongst other out of scope issues) could be addressed jointly, rather than dealing with individual issues vying for importance among researchers, funders, and policymakers (amongst others).

To encourage cultural and behavioral change, “after-the-fact” papers and modular “as-you-go” outputs could co-exist (initially) and would not require researchers to make a zero-sum decision. Copyright is often transferred to publishers upon publication (resulting in pay-to-access), but only after a legal contract is signed. Hence, preprints cannot legally be restricted by publishers when they precede a copyright transfer agreement. However, preprints face institutional and social opposition [29], where preprinting could exclude a manuscript from publication depending on editorial policies or due to fears of non-publication or scooping (itself a result of hyper-competition). In recent years, preprints have become more widely accepted and less likely to preclude manuscript publication (e.g., Science accepts preprinted manuscripts) [30]. Similarly, sharing modular “as-you-go” outputs cannot legally be restricted by publishers and can ride the wave of preprint acceptance, but might also face institutional or social counterchange similar to preprints. Researchers could communicate “as-they-go” and compile “after-the-fact” papers, facilitating co-existence and minimizing negative effects on career opportunities. Additionally, “as-you-go” modules could be used in any scholarly field where the provenance of information is important to findings and is not restricted to empirical and hypothesis-driven research per se.

As far as we know, a linked modular “as-you-go” scholarly communication infrastructure has not yet been made available to researchers in a sustainable way. One of the few thought styles that has facilitated “as-you-go” reporting in the past decade is that of Open Notebook Science (ONS) [31], where researchers share their day-to-day notes and thoughts. However, ONS has remained on the fringes of the Open Science thought style and has not matured, limiting its usefulness and uptake. For example, ONS increases user control because communication occurs on personal domains, but does not have a mechanism of preserving the content. Considering that reference rot occurs in seven out of 10 scholarly papers containing weblinks [32], concern for sustainable ONS is warranted without further development of content integrity and persistent links. Moreover, ONS increases information output without providing more possibilities of discovering that content.

Digital infrastructure that facilitates “as-you-go” scholarly communication is now feasible and potentially sustainable. It is feasible because the peer-to-peer protocol Dat provides persistent addresses for versioned content and ensures content integrity across those versions. It is sustainable because preservation in a peer-to-peer network is relatively trivial (inherent redundancy, anyone can rehost information and libraries could be persistent hosts) and removes (or at least reduces) the need for centralized services in scholarly communication. Consequently, this could decrease the need for inefficient server farms of centralized services [33]. However, preservation is a social process that requires commitment. Hence, a peer-to-peer infrastructure would require committed and persistent peers (e.g., libraries) to make sure content is preserved. Another form of sustainability is knowledge inclusion, which is facilitated by a decentralized network protocol that is openly accessible without restrictions based on geographic origin, cultural background, or financial means (amongst others).

Finally, we would like to note that communication was not instantly revolutionized by the printing press, but changed society over the centuries that followed. The Web has only been around since 1991 and its effect on society is already pervasive, but far from over. We hope that individuals who want change do not despair at the feeling of inertia in scholarly communication throughout recent years or the further entrenching of positions and interests. We remain optimistic that substantial change will occur within scholarly communication that improves the way we communicate research, and we hope that the ideas put forth in the present paper contribute to working towards this outcome.

6. Conclusions

The current scholarly communication system based on research papers is “after-the-fact” and can be supplemented by a modular “as-you-go” communication system. By doing so, the functions of a scholarly communication system can be interpreted more widely, making registration complete, rendering certification part of the process instead of just the judgment of peers, allowing access to everything for everyone based on peer-to-peer protocols, simplifying the archival process, and facilitating incentive structures that could align researchers’ interests with those of scholarly research.

Author Contributions

Conceptualization: C.H.J.H. and M.v.Z. Methodology: C.H.J.H. and M.v.Z. Writing-Original Draft Preparation: C.H.J.H. and M.v.Z. Writing-Review and Editing: C.H.J.H. and M.v.Z. Visualization: C.H.J.H.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Baker, M. 1500 scientists lift the lid on reproducibility. Nature 2016, 533, 452–454.

2. Larivière, V.; Haustein, S.; Mongeon, P. The Oligopoly of Academic Publishers in the Digital Era. PLoS ONE 2015, 10, e0127502.

3. Kling, R.; Callahan, E. Electronic journals, the Internet, and scholarly communication. Annu. Rev. Inf. Sci. Technol. 2005, 37, 127–177.

4. Garfield, E. The History and Meaning of the Journal Impact Factor. JAMA 2006, 295, 90.

5. Prathap, G.; Mini, S.; Nishy, P. Does high impact factor successfully predict future citations? An analysis using Peirce’s measure. Scientometrics 2016, 108, 1043–1047.

6. Seglen, P.O. The skewness of science. J. Am. Soc. Inf. Sci. 1992, 43, 628–638.

7. Seglen, P.O. Causal relationship between article citedness and journal impact. J. Am. Soc. Inf. Sci. 1994, 45, 1–11.

8. Roosendaal, H.E.; Geurts, P.A.T.M. Forces and Functions in Scientific Communication: An Analysis of Their Interplay; University of Twente: Enschede, The Netherlands, 1997; pp. 1–32.

9. Van de Sompel, H.; Payette, S.; Erickson, J.; Lagoze, C.; Warner, S. Rethinking Scholarly Communication. D-Lib Mag. 2004, 10.

10. Franco, A.; Malhotra, N.; Simonovits, G. Publication bias in the social sciences: Unlocking the file drawer. Science 2014, 345, 1502–1505.

11. Mahoney, M.J. Publication prejudices: An experimental study of confirmatory bias in the peer review system. Cognit. Ther. Res. 1977, 1, 161–175.

12. Kircz, J.G. Modularity: The next form of scientific information presentation? J. Doc. 1998, 54, 210–235.

13. Kuhn, T.; Chichester, C.; Krauthammer, M.; Queralt-Rosinach, N.; Verborgh, R.; Giannakopoulos, G.; Ngonga Ngomo, A.-C.; Viglianti, R.; Dumontier, M. Decentralized provenance-aware publishing with nanopublications. PeerJ Comput. Sci. 2016, 2, e78.

14. Groth, P.; Gibson, A.; Velterop, J. The anatomy of a nanopublication. Inf. Serv. Use 2010, 30, 51–56.

15. Velterop, J. Nanopublications the Future of Coping with Information Overload. Logos 2010, 21, 119–122.

16. Nielsen, M. Reinventing Discovery: The New Era of Networked Science; Princeton University Press: Princeton, NJ, USA, 2012.

17. Kerr, N.L. HARKing: Hypothesizing after the Results are Known. Personal. Soc. Psychol. Rev. 1998, 2, 196–217.

18. Seeber, M.; Cattaneo, M.; Meoli, M.; Malighetti, P. Self-citations as strategic response to the use of metrics for career decisions. Res. Policy 2017.

19. PLoS Medicine Editors. The Impact Factor Game. PLoS Med. 2006, 3, e291.

20. Nosek, B.A.; Ebersole, C.R.; DeHaven, A.C.; Mellor, D.T. The preregistration revolution. Proc. Natl. Acad. Sci. USA 2018, 115, 2600–2606.

21. Ogden, M. Dat - Distributed Dataset Synchronization and Versioning. OSF Preprints 2017.

22. Tennant, J.P.; Dugan, J.M.; Graziotin, D.; Jacques, D.C.; Waldner, F.; Mietchen, D.; Elkhatib, Y.; Collister, L.B.; Pikas, C.K.; Crick, T.; et al. A multi-disciplinary perspective on emergent and future innovations in peer review. F1000Research 2017, 6, 1151.

23. Reich, V.; Rosenthal, D.S.H. LOCKSS. D-Lib Mag. 2001, 7.

24. Wasserman, S.; Faust, K. Social Network Analysis: Methods and Applications; Cambridge University Press: Cambridge, UK, 1994.

25. Fortunato, S.; Bergstrom, C.T.; Börner, K.; Evans, J.A.; Helbing, D.; Milojević, S.; Petersen, A.M.; Radicchi, F.; Sinatra, R.; Uzzi, B.; et al. Science of science. Science 2018, 359, eaao0185.

26. Morel, C.M.; Serruya, S.J.; Penna, G.O.; Guimarães, R. Co-authorship Network Analysis: A Powerful Tool for Strategic Planning of Research, Development and Capacity Building Programs on Neglected Diseases. PLoS Negl. Trop. Dis. 2009, 3, e501.

27. Hicks, D.; Wouters, P.; Waltman, L.; de Rijcke, S.; Rafols, I. Bibliometrics: The Leiden Manifesto for research metrics. Nature 2015, 520, 429–431.

28. Wilsdon, J.; Allen, L.; Belfiore, E.; Campbell, P.; Curry, S.; Hill, S.; Jones, R.; Kain, R.; Kerridge, S.; Thelwall, M.; et al. The Metric Tide: Report of the Independent Review of the Role of Metrics in Research Assessment and Management; HEFCE: Stoke Gifford, UK, 2015.

29. Kaiser, J. Are preprints the future of biology? A survival guide for scientists. Science 2017.

30. Berg, J. Preprint ecosystems. Science 2017, 357, 1331.

31. Bradley, J.-C. Open Notebook Science Using Blogs and Wikis. Nat. Preced. 2007.

32. Klein, M.; Van de Sompel, H.; Sanderson, R.; Shankar, H.; Balakireva, L.; Zhou, K.; Tobin, R. Scholarly Context Not Found: One in Five Articles Suffers from Reference Rot. PLoS ONE 2014, 9, e115253.

33. Cavdar, D.; Alagoz, F. A survey of research on greening data centers. In Proceedings of the 2012 IEEE Global Communications Conference (Globecom), Anaheim, CA, USA, 3–7 December 2012; pp. 3237–3242.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Public Domain Dedication (CC 0) license (https://creativecommons.org/publicdomain/zero/1.0/).