1. Introduction

There is something wrong with science. On the one hand, science somehow failed to supply the miracles it promised (or what was believed to have been promised) [1]. Instead of flying cars, colonies on other planets and endless, clean energy sources, we got climate change, emerging diseases, and increasing poverty. Instead of a cure for cancer, we got Ebola, Zika and the threat of malaria spreading to the temperate zone. On the other hand, there is an increased concern that recent results are not reliable enough. There is a reproducibility crisis in science, as some experiments were found not to be repeatable (e.g., in drug discovery and medical trials [2,3,4,5], medical genetics [6,7], and psychology [8,9,10]). Quite a number of the published findings might be false [11] because of (1) a widespread bias to report positive results (e.g., there are statistics and a p-value which say that the null hypothesis can be rejected); and (2) the manipulation or outright fabrication of data. We do not know just how much of the scientific literature is not true. A rise in retractions, which is only the tip of the iceberg, is the consequence of scientific misconduct [12,13]. All the above points suggest that there is something wrong with how novel scientific results are generated and presented.

A result from one experiment is not enough. This insight was deeply instilled into us during labs when I trained to be a chemist. Thus, we (at least in theory) publish results coming from repetitions of the same experiment [14]. We should therefore be able to reproduce our own experiments, at least. The published papers tell us that there were enough experiments performed to arrive at the reported results. Repetition of the same experiment by others should be possible, and ideally should lead to the same results. However, as referenced earlier, quite a few of the results cannot be reproduced. A repeated study might not arrive at the same conclusion for valid reasons, such as actual differences in the test subject (in which case it is a novel result), or some factors in the methodology which are not clear to others who want to repeat the experiment based on the methods section of the original paper [15]. However, then there are papers with manipulated or fabricated data. To put it bluntly, there are lies in those papers. Lying is unethical, so why would a scientist risk the scorn of others, ostracism from the community, and the possibility of losing his or her job?

In this paper, I argue that it is the pressure to produce eye-catching results, which are publishable in prestigious journals, that undermines the integrity of science. Scientists live in a competitive but underpaid environment. Advancement offers the opportunity to stay in science and get a decent salary. Those who can land more papers in high-profile journals will rise faster and higher. There are considerable individual benefits to be gained, and the individual costs of misconduct are small. In the end, science suffers, but when facing the dilemma of choosing between one’s life and good science, the rational actor (sensu Homo economicus) will choose the former.

This paper is subjective. It contains my thoughts on issues that threaten the integrity of science and the well-being of scientists. When asked, scientists say roughly the same things about the hurdles, problems and unnecessary challenges we face [16]. This paper is also the result of discussions during conference dinners about the evils of academia. For most of us, the literature on science ethics, problems of the peer-review system, granting efficiency, etc. is not known. We focus on our narrow field of science, but encounter the drudgery of writing applications, the stress associated with papers rejected based on interest and not on scientific soundness, and the pressure to hoard bibliometric numbers. Consequently, this paper is my grumbling on the above issues and my first appreciation of the literature on publishing, science ethics, and grant giving.

2. Working in a Competitive Environment for Little Money

Science is pursued in a rather unusual environment. People who spend an extraordinary amount of time in school to barely qualify for a job in science get a low starting salary and a fixed-term contract. From the employee’s point of view, this sector is a nightmare.

An M.Sc. training in biology or chemistry (the degrees I hold) prepares students to be researchers. When I attended university, it was openly admitted that we were being trained to be scientists as opposed to teachers, the two most common career options for those who study the natural sciences. There might now be a few more jobs in industry requiring a trained biologist, but most students still dream of being a scientist. To be a scientist, one needs a Ph.D.

A new graduate outside of academia can expect to get a work contract with the benefits that come with such employment, such as social security, pension contributions, and some kind of job security offered by the state. In academia, young scientists first become Ph.D. students. Thus, legally, they are still students, just as they have been since starting elementary school. This is the case worldwide, albeit in some countries young scientists working on their doctoral thesis are employed by their universities (for example, in the Netherlands, there are a considerable number of Ph.D. students on fellowships [17]). In Hungary, in the natural sciences, 41% of the Ph.D. students were on stipends, and only 32% were employed as teacher’s assistants or junior researchers in 2010 [18]. There can be advantages to being a student (e.g., cheaper public transportation in Hungary), but there are disadvantages to having a tax-free stipend instead of a normal salary with a work contract. For example, the time spent on studies does not count towards one’s pension (this was different before 1998, when tertiary education and above were considered to be working years). Consequently, Ph.D. students sacrifice working years, even though actual studies are only a minor part of their day-to-day routines, which are filled with research and teaching. For the very same activity, someone with an employment contract would be considered to work; a Ph.D. student is only considered to be studying, not working.

Getting a Ph.D. can take 6–8 years (7.5 years on average in Hungary [

18]) after which one will wish to stay in academia [

19]. However, very few can get into this sector. My senior colleagues report that before the 1990s they could choose between the university and a research institute to work in. Everyone could pursue a scientific career who had the right university education. There were more faculty positions and there were considerably fewer students [

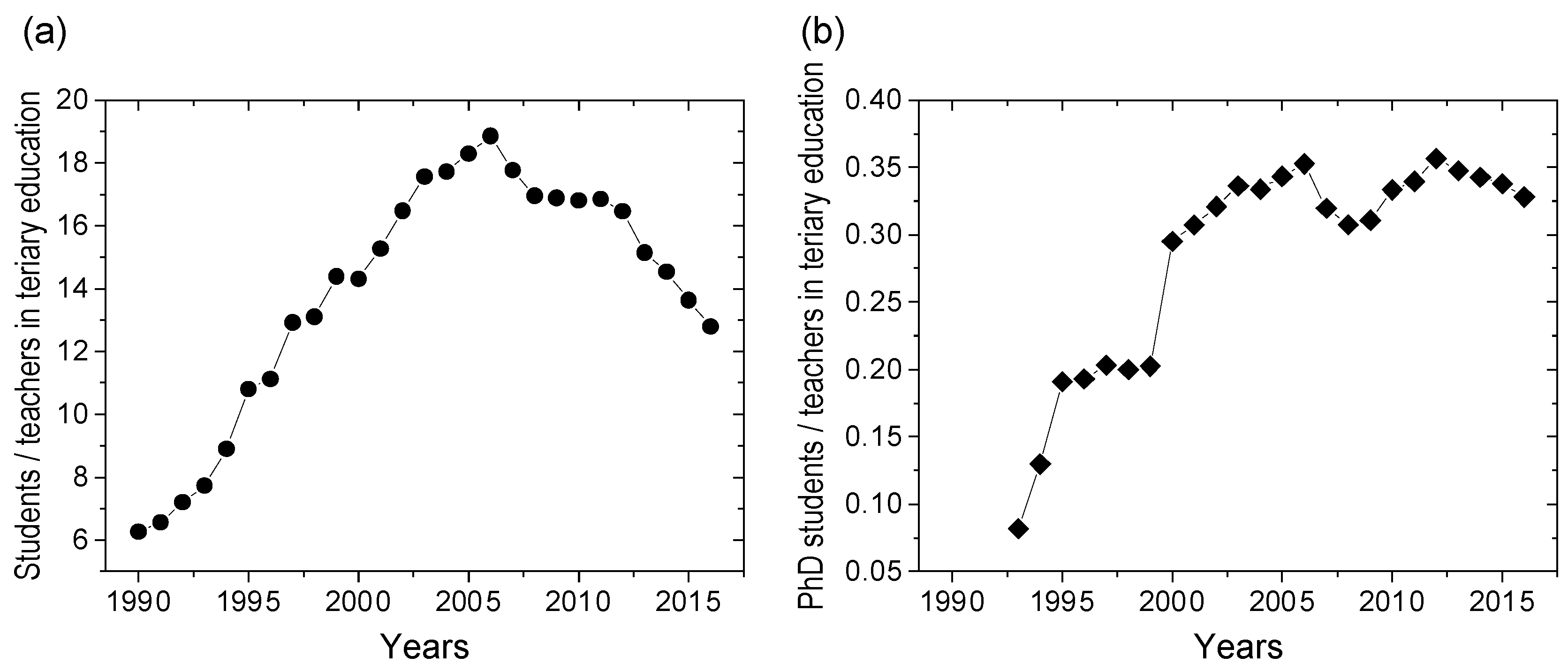

20] (

Figure 1). From the mid-1990s (roughly from the time I got in contact with academia), it became more and more difficult to get a permanent job at my university. The main reasons of this decline in opportunities are failing R&D expenditures and an increasing supply of Ph.D. graduates. In 1989, 2% of the GDP was devoted to R&D, which quickly fell to 1% or less afterwards (the all-time low was 0.67% in 1996) [

20]. Hungary now spends less, in relative terms, on tertiary education than ten or twenty years ago [

21]. At the same time, the number of Ph.D. students increased five-fold.

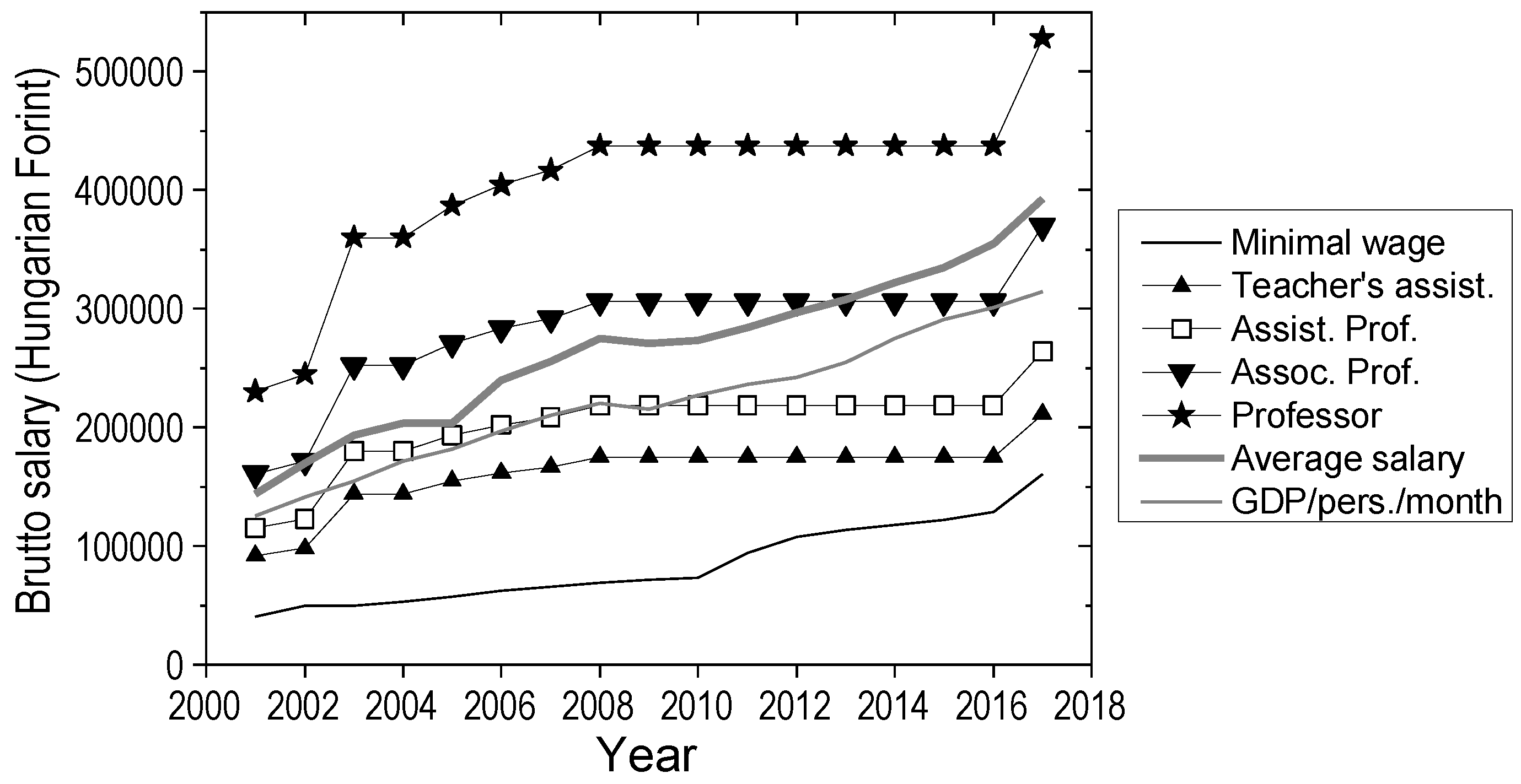

Even if one can get a postdoc position (which might be his/her first real employment contract), the hurdles are not over. Salaries in academia lag behind those of industry [18,22,23]. At the teacher’s assistant/junior researcher/Ph.D. student level, as well as at the assistant professor/postdoc level, the salaries are well below the average salaries for white-collar jobs (Figure 2). Lately, even associate professors earn less than the national average for those having a university degree. At the full professor level, scientists begin to get a somewhat decent salary. This is the position coveted by everyone, the level at which the years spent studying and working begin to pay off.

Academia is not only very competitive to get into and pays little; it also has an overabundance of fixed-term employment. A fixed-term contract is for a fixed time period, after which it simply terminates. The time period can be anything from a month to five years, the longest I have had (in Hungary it cannot be more than five years). A “normal” indeterminate employment offers some kind of security, as it is an indication that the employer would like to hire the employee for a long time (or at least for an indeterminate time), and the employer must at least give a reason for firing someone. Thus, indeterminate employment is not permanent in the sense of guaranteed employment till the employee’s retirement, but it feels more secure than the fixed-term one that needs to be renewed after each period (no reason needs to be given for not extending a contract, but it is difficult to end a fixed-term contract mid-term).

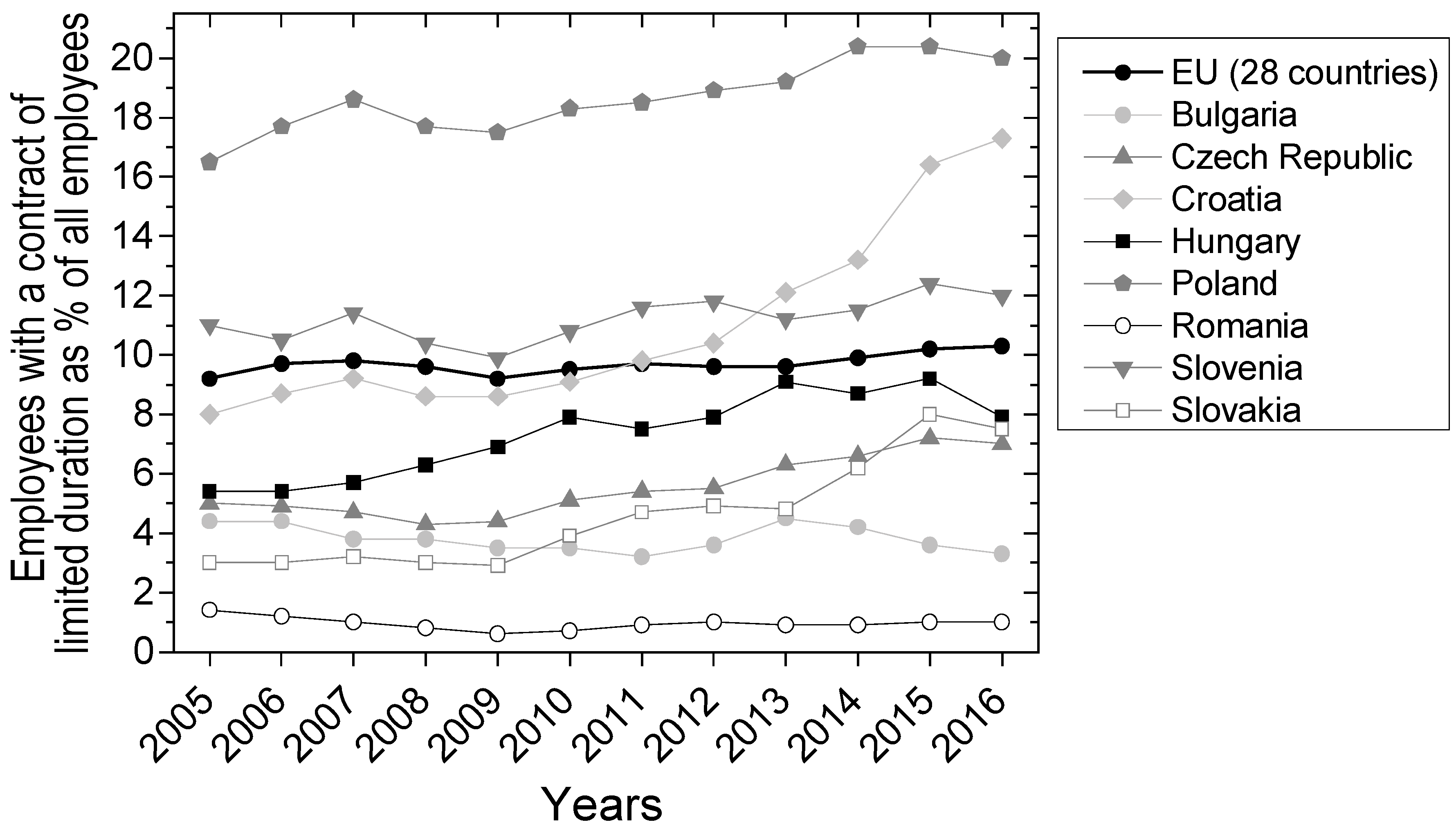

Fixed-term contracts are quite uncommon in the EU, especially in Central-Eastern Europe (in the former communist bloc) (Figure 3). In Hungary, between 6% and 10% of employees were on a fixed-term contract in the last decade (8.7% in 2016 for employees aged 15–65) [25]. The actual percentage is higher for those aged between 15 and 20, and lower for those aged above 25 (postdocs fall into this category). Thus, people in academia are in the minority with their employment contracts. People in countries where indeterminate employment is the norm (“normal” employment) are puzzled by the short-term employment contracts so common in academia. One needs to explain to bank representatives, for example, when applying for a loan, that one is not a day-laborer or someone living from odd jobs, but that these contracts are just normal in the sector (academia) he/she works in. I have moved offices twice since graduating from university: first in 2001 when the department moved to a new building, and then around 2008 when I moved to another office a couple of meters from the former one. During the same period, I had to sign so many new contracts and renewals that I lost count of them (my employer also changed several times, without my desk moving an inch).

An indeterminate work contract is called tenure in academia (albeit there could be differences on whether one can be fired, and under what conditions). Such a contract, which is available to 90% of employees right from their entrance to the workforce, becomes available to scientists quite late. There is considerable country-to-country variation. For example, tenure in France is obtained at an average age of 33 [26], whereas in Germany, a scientist can become tenured around the age of 42 [26].

In Hungary, the situation is still a strange mix of the old system, where everyone got a secure job after finishing university, and the new one, with fixed-term Ph.D. studentship, junior researcher, and postdoc status. In principle, one can get an indeterminate contract at a university or a research institute right after graduation. On the other hand, one can be a full professor and still be on a fixed-term contract. The latter is the case for a scientist working in one of the research groups funded by the Hungarian Academy of Sciences and located at one of the universities [27].

In summary, academia is a competitive sector in which one earns a lot less compared to what one can earn outside of academia with the same qualification. The only way of enjoying the positive side of being a scientist and also having a decent standard of living is to rise to the top quickly and/or obtain grants that allow for a higher salary.

3. Publish or Perish

Rising to the top and winning grants require that one stand out from the crowd. Because of dwindling resources, competition for positions and funding has increased. Increased competition also implies that there is a stronger selection mechanism in place. A solid scientific track record is no longer a guarantee of success. The core of the problem is what Merton calls the 41st chair [28]: if there is a fixed number of honors, such as the Nobel Prize, or the 40 chairs in the French Academy, then people will be left out who nonetheless contributed significantly to the advancement of human knowledge. There is only a finite number of papers accepted for publication in Nature, Science, or PNAS. There is only a finite amount of grant money that can be distributed. There is only a fixed number of positions at a university. Therefore, not everyone can get their research funded, their paper published in their target journal, or gain a professorship.

Selection among scientists should, in principle, be based on merit; however, it is mostly based on some quantifiable achievement, such as number of papers, number of independent citations, cumulative impact factor, h-index, etc. This was the case in the West for decades, and now there is also increased pressure to publish internationally in Central and Eastern Europe [29,30]. These numbers can hardly reflect the scientific potential of the scientist in question, as they measure the ability to generate these numbers. Sydney Brenner wrote in his obituary of Frederick Sanger [31]: “A Fred Sanger would not survive today’s world of science. With continuous reporting and appraisals, some committee would note that he published little of import between insulin in 1952 and his first paper on RNA sequencing in 1967 with another long gap until DNA sequencing in 1977. He would be labeled as unproductive, and his modest personal support would be denied. We no longer have a culture that allows individuals to embark on long-term – and what would be considered today extremely risky – projects.” Thus, Professor Sanger’s pioneering work on sequencing, for which he got two Nobel Prizes in 1958 and 1980, would not have been possible today. Nobel Laureate Richard Heck’s story is even more alarming. His breakthrough achievements, published at the end of the 1960s, are now the basis of an array of organic chemical syntheses, including ones used in automatic sequencing. He had to retire in 1989 after his funding was discontinued [32], well before he was awarded the Nobel Prize in 2010. Where would biology be without these methods? How much does today’s system hold back the advancement of science?

So why the obsession with bibliometric numbers? Supposedly, granting agencies and university committees love bibliometric/scientometric numbers, as they are considered an objective measure of a scientist’s quality. However, we all know that they are not. I think we (as scientists who sit on these boards and committees) prefer them to judging individual merits, as they allow us to not pass judgement. We do not like to pass judgement on others and say it aloud. It is easier to devise rules and numbers that take this burden from our shoulders. This is self-deception. I have seen it first hand, when there was some problem with a faculty member’s performance. Nobody wanted to go up to him/her and say: There is this problem, can you do something about it? Instead, they drafted a rule that the faculty member would clearly fail, and then debated what the penalty should be for failing to comply with the rule. In that way, it is not individual colleagues, department heads or institute directors who have to pass judgement, but cold paragraphs in some regulatory text. However, the regulations are not written by God, but by the scientists themselves. It is just an extra layer of detachment from what they do not want to do face-to-face.

Even if we would all agree to judge each other’s work based on individual merits (for example, on the content of a few papers) and not on some numbers, governments and funding agencies still love numbers. They feel obliged to show taxpayers that public money was well invested. When it is a new highway, renovation of hospitals or less crowded schools, it can be shown directly. However, what about the results of basic research? The significance can only be understood by a few professionals, and the potential for commercialization, if it exists, is still years away. Governments have a biased view of what the public wants from science. It might differ from what the public demands from their government. The public is not only interested in questions related to health, sustainability, security and energy (the broad categories many national research agendas emphasize [33]). A public consultation by the Dutch Government [34] has led to 140 questions which will be a priority in the upcoming years. Origin of life, my research field, is among them. This is purely basic research, but one that is deemed fundamental by the public. Curiosity is something humans, unlike other mammals, retain into their adulthood [35,36]. This is the basis of all scientific enterprise: we want to uncover something new. We should go wherever our curiosity leads us (cf. the Mertonian value of disinterestedness [37]). There will be dead-ends, failed experiments and research projects that do not lead anywhere. Basic research is risky. However, funding agencies do not want risky projects: they want to report on a steady production of science (the economic term here is not a mistake). In addition, this has slowly undermined the value of disinterestedness, which is no longer supported by the majority of scientists [38].

The products of science are publications and their reception (e.g., citations). However, “When science becomes a business, what counts is not whether the product is of high quality, but whether it sells.” [39] There is an emphasis on quantity, without much regard to quality. For example, publishing in top-tier journals not only gives fame and a boost to one’s career [40], but it can also boost one’s bank account. There is a trend of having a monetary incentive to achieve publication in certain prestigious journals (mainly in Nature or Science) [41,42]. Hungary’s primary granting agency (National Research, Development and Innovation Office) just launched a new grant that awards a considerable sum to highly cited individuals and automatically awards 20 million Hungarian Forints (ca. 64,000 Euro or 76,000 USD) to those who can get their paper into the top 5% in their discipline within 2 years [43].

“There is an excessive focus on the publication of groundbreaking results in prestigious journals. But science cannot only be groundbreaking, as there is a lot of important digging to do after new discoveries – but there is not enough credit in the system for this work, and it may remain unpublished because researchers prioritise their time on the eye-catching papers, hurriedly put together” [44]. Those who cannot produce the required number of papers, get their papers in prestigious journals, or gather citations will be out of business. This is the “publish or perish” principle in academia. It is no wonder, then, that unethical and questionable practices, such as authorships bought for money [45,46] and tailoring data and their analysis to support desired outcomes [47], are on the rise.

4. The Matthew Effect and the Luck Factor

We have seen that there is little money to be earned in academia unless one is at the top (full professor level) and/or can obtain a grant allowing a higher salary. There is a considerable monetary incentive to get to the top as quickly as possible. In this respect, academia resembles the pay structure of drug gangs [48]: a cadre of low-paid people aspire to reach the secure and well-paid top levels. The way to the top in academia is through the hoarding of bibliometric numbers. This already tempts practitioners of science to sacrifice quality on the altar of achievements. However, there is yet another factor worsening the situation: any early difference in achievement is enhanced by a positive feedback loop.

As Sydney Brenner said in an interview, “The way to succeed is to get born at the right time and in the right place. If you can do that, then you are bound to succeed. You have to be receptive and have some talent as well.” [49] There is a lot of luck involved, and there are considerably more talented individuals than the ones that succeed. Any initial difference or advantage gained at an early stage will be magnified [50]. Those who have more publications, have accumulated more impact factors, or were trained in more prestigious institutions have a higher chance of being given a position, getting tenure, securing grants, and continuing to be productive [51]. Having a stable position and grants helps in producing even more publications, getting more grants, and accumulating more impact factors. On the other hand, those who have fewer publications, publish in less prestigious journals, and whose grant proposals have been rejected have less potential to get a new publication, land a paper in a prestigious journal and win the next proposal. This is the Matthew effect, named after a quote from the King James Version of the Bible: “For unto every one that hath shall be given, and he shall have abundance: but from him that hath not shall be taken away even that which he hath.” (Matthew 25:29)

Robert Merton, who coined the term [28], argues, based on interviews with Nobel laureates [52], that the prestige associated with the Prize also gave the laureates extra credit for their current work. “Nobel laureates provide presumptive evidence of the effect, since they testify to its occurrence, not as victims – which might make their testimony suspect – but as unwitting beneficiaries” [28]. The paper mostly deals with the extra prestige and credit bestowed upon the laureates and how it affects their scientific life. There are some stories from these eminent scientists in which they testify that, well before they became famous, they also encountered the other side of the coin: extraordinary claims by less-known scientists are suspect and, given the same quality of papers/results/application, the more famous scientist will be rewarded. Some of the papers describing the work for which a Nobel Prize was later awarded were rejected at first [53].

Merton focused on prestige awarded or not awarded to people and on the visibility or invisibility of research done by scientists. Both the prestige and the visibility of one’s research can be disproportionately elevated once one gets recognition, or diminished when recognition does not come. The Matthew effect does not only affect prestige and visibility, as these are connected to positions, salaries, and grants (i.e., the livelihood of scientists). These latter are greater problems. Because there are considerable differences in salaries between institutions [54], and even the top 10% have a hard time securing funds for their research [55], there is a greater pressure to get into the small cadre of successful scientists. In addition, as differences magnify, the sooner one secures a seat among the selected few, the better. The Matthew effect affects young researchers disproportionately [55,56]. Established scientists could have bad years and go without funding (or without enough funding), but young and early-stage researchers do not have that luxury. Without a permanent position, failing to secure the next postdoctoral position or the grant that leads to the tenure track can mean that one is out of academia.

5. You Get What You Select for

Coming from evolutionary biology, the first law of directed evolution comes to my mind: “you get what you screen for” [57]. When asked, both scientists and granting bodies would say that they want the brightest minds to do great science. This is what they want to select for. However, the selection criteria are often quantitative measures, such as number of papers, cumulative impact factor, number of independent citations, h-index, etc. It is then no wonder that scientists will pursue goals that increase these measures. These goals can be at odds with the quality of science, our ultimate goal.

Scientists have many tasks as part of their jobs, and generating knowledge (research) is only one of them. Those employed at universities might be obliged to teach. We all should participate in peer review. If positions and promotions are solely based on publications and/or their metrics, then one should pursue these at the expense of the others. Thus, those who can somehow escape teaching and turn down peer-review requests are at an advantage. The system actively selects against any activity which does not increase the metrics that are the basis of one’s assessment. As successful scientists are also role models for future generations, and as they have the resources to hire Ph.D. students and postdocs, we are training a new generation of scientists to pursue bibliometric numbers instead of novel knowledge. I am not saying that every successful lab is based on bad ethics and avoiding tasks which should be an integral part of a scientist’s life (for example, teaching), but if there are such successful labs, they pass on their “culture”. This is evolution, which requires reproduction, inheritance, and variation. New scientists are “produced” in labs around the world, and the working atmosphere affects the people trained there. Scientific practices (mostly methodological) are inherited from one’s supervisors. In addition, there is variation. A new variant, which emphasizes the ruthless pursuit of bibliometric numbers over honest science, will increase in frequency (and it does; this is what we observe).

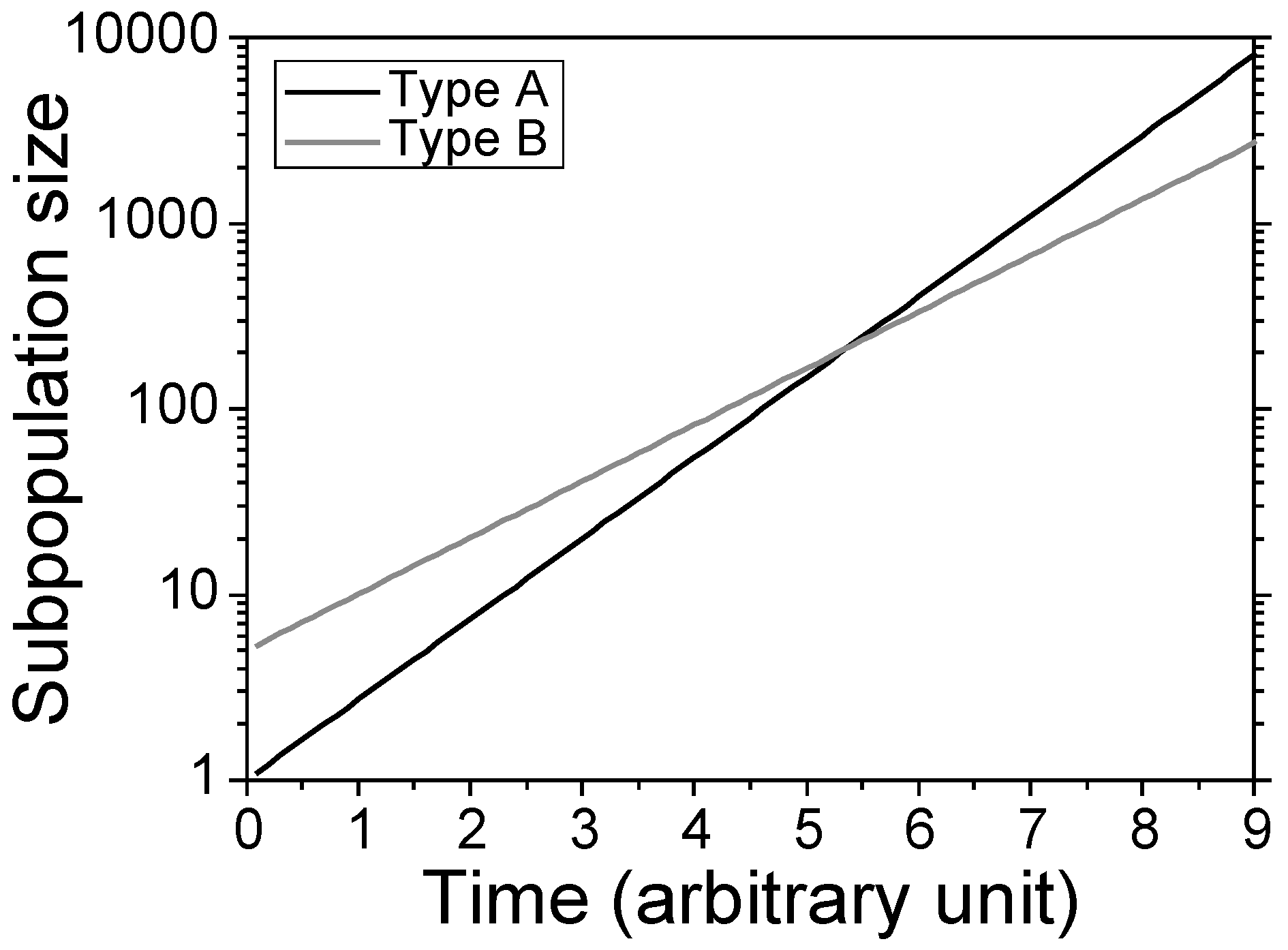

Evolutionary biology can come to our help in two other ways too. First, subpopulations with phenotypes having higher fitness will increase in frequency over subpopulations with lower fitness. The simplest mathematical way to show evolution is to follow the frequency of phenotypes (A and B) within two exponentially growing subpopulations. Evolution requires (1) reproduction (the capacity of exponential growth), (2) heredity, and (3) variation [58]. We assume exponential growth, heredity (A begets A and B begets B), and variation (as there are two phenotypes present). The exponential growth of the two subpopulations can be written as

$$N_A(t) = N_A(0)\,e^{r_A t}, \qquad N_B(t) = N_B(0)\,e^{r_B t},$$

where $N_i(t)$ is the population size; $N_i(0)$ is the initial population size at time $t = 0$; and $r_i$ is the per capita instantaneous rate of population increase [59]. If $r_A > r_B$, then the frequency of A will reach 1 given enough time, that is

$$\lim_{t \to \infty} \frac{N_A(t)}{N_A(t) + N_B(t)} = 1.$$

Here the per-capita instantaneous rates of population increase ($r_A$ and $r_B$) are the fitnesses of the phenotypes. The phenotype having higher fitness will, in time, dominate the population (Figure 4). Therefore, any initial difference between initial population sizes will not affect the outcome. I emphasize the “in time” part. The initial population size ($N_A(0)$ and $N_B(0)$) influences the frequency of the types initially (Figure 4).
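The exponential case can also be checked numerically. The following is a minimal sketch, not the calculation behind Figure 4: the function name `frequency_of_a` and the parameter values (phenotype A starting at 1% of the population with a 10% higher growth rate) are assumptions chosen for illustration. It evaluates the frequency of A when each subpopulation grows as N(t) = N(0)·exp(r·t).

```python
import math


def frequency_of_a(t, n_a0, n_b0, r_a, r_b):
    """Frequency of phenotype A at time t under exponential growth.

    Assumes N_A(t) = n_a0 * exp(r_a * t) and N_B(t) = n_b0 * exp(r_b * t).
    """
    # Log of N_B(t) / N_A(t); working in log space avoids huge intermediates.
    log_ratio = math.log(n_b0 / n_a0) + (r_b - r_a) * t
    if log_ratio > 700.0:  # math.exp would overflow; A is effectively absent
        return 0.0
    return 1.0 / (1.0 + math.exp(log_ratio))


# Hypothetical values: A is rare (1%) but has the higher fitness.
N_A0, N_B0 = 1.0, 99.0
R_A, R_B = 1.1, 1.0

for t in (0, 25, 50, 100, 200):
    freq = frequency_of_a(t, N_A0, N_B0, R_A, R_B)
    print(f"t = {t:3d}  frequency of A = {freq:.4f}")
```

With these assumed values A starts at a frequency of 0.01 and still approaches 1 given enough time; the initial sizes only determine how long that takes (the two types are equally frequent at t = ln(99)/0.1, roughly 46 time units).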

Now I do not claim that an exponential growth or even a bounded logistic growth describes the increase of some bibliometric number, but I evoke it as an analogy. Ultimately, what matters in evolution is fitness, but it is a dynamic and rather elusive characteristic [60,61,62,63], not unlike scientific excellence. Evolutionary biologists want to measure some demographic or morphological characteristic and use it as a proxy for fitness. Fitness (growth rate) can be measured, but it takes time, and it does not solve the problem of somehow predicting it from data that can be obtained here and now. Similarly, we would like to predict which scientist is able to have a long and productive scientific career. Either we let people have a lifetime in science and measure their worth in retrospect, or, as we do nowadays, we base our assessment on something we can measure here and now. In evolutionary biology, we know that population size is a poor proxy for fitness. A promising new scientist will have a lower publication count, fewer citations, a lower h-index, etc. than a more established scientist. This does not mean that she or he is worth less; it is just the effect of her or his time spent in science.

The most severe selection is on the young generation of scientists. Getting into graduate school, obtaining a Ph.D. and then a postdoc position are all bottlenecks that weed out those whom the system deems unworthy. The selection criteria are publication count, cumulative impact factor, the prestige of the institution the candidate comes from, and so forth. These characteristics are poor indicators of the young scientist’s merits, as they may correlate more with the prestige of her/his supervisor/lab head/institute. Characteristics that are determined by the genotype of the parents and not by the genotype of the individual are considered parental effects in biology. That is basically the initial population size mentioned above, the baggage one carries from one’s alma mater. However, we do not want to select young scientists based on the merits of their supervisors/lab heads.

There is a second lesson from evolutionary biology worth taking. If populations grow exponentially, then the frequency of the fitter will increase. This outcome changes if population growth is hyperbolic [64], i.e., faster than exponential. In this regime, the outcome of selection also depends on the initial population sizes [65]. Given two subpopulations having equal growth rates, the one having the higher initial population size will win. Moreover, a subpopulation with a lower growth rate (fitness) can still win if its initial population size is considerably higher than the other’s. The theory was applied to monetary growth [66]: if the interest rate increases with the capital, then we are in the supraexponential growth regime, and differences in the intrinsic interest rates are masked by the amount of capital the actors have. Interest rates actually grow with the capital invested [67,68]. Thus, inequality will rise. This finding of Thomas Piketty is basically the Matthew effect at work in the economy. Taking the above together, we can see that the Matthew effect leads to a selection regime in which the winner is the one with more initial prestige/merit, irrespective of the potential for further gain of prestige. Therefore, scientists who can amass prestige/merit early in their careers will rise higher compared to ones with less initial prestige. Consequently, selection based on initial differences is greatly amplified. We are selecting for precocity [56], i.e., selecting those “who are uncommonly bright for their age” [69]. Very few late bloomers can enter the top, as a strong publication record at the beginning is a must [51].
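The dependence on initial size under hyperbolic growth can be illustrated with a small sketch. It assumes the simplest hyperbolic law, dN/dt = r·N², which is an assumption made here for illustration and not necessarily the form used in [64,65]. Its solution, N(t) = N(0) / (1 - r·N(0)·t), diverges at t = 1 / (r·N(0)), so the subpopulation with the larger product r·N(0) takes over. The function names and numbers are hypothetical.

```python
def hyperbolic_size(t, n0, r):
    """Size at time t for dN/dt = r * N**2, i.e. N(t) = n0 / (1 - r*n0*t)."""
    denom = 1.0 - r * n0 * t
    if denom <= 0.0:
        raise ValueError("t is at or beyond the blow-up time 1 / (r * n0)")
    return n0 / denom


def hyperbolic_winner(n_a0, n_b0, r_a, r_b):
    """Return the subpopulation that diverges first (larger r * n0)."""
    score_a, score_b = r_a * n_a0, r_b * n_b0
    if score_a == score_b:
        return "tie"
    return "A" if score_a > score_b else "B"


# Hypothetical values: B is twice as fit, but A starts ten times larger.
N_A0, N_B0 = 10.0, 1.0
R_A, R_B = 1.0, 2.0

print("winner:", hyperbolic_winner(N_A0, N_B0, R_A, R_B))
for t in (0.0, 0.05, 0.09, 0.099):
    n_a = hyperbolic_size(t, N_A0, R_A)
    n_b = hyperbolic_size(t, N_B0, R_B)
    print(f"t = {t:.3f}  frequency of A = {n_a / (n_a + n_b):.4f}")
```

In this sketch A wins despite its lower growth rate, because 1.0 × 10 exceeds 2.0 × 1; under exponential growth with the same numbers, B would eventually dominate.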

Summarizing the above, we can see that a competitive environment in which achievement is measured by bibliometric numbers, exacerbated by the Matthew effect, leads to a selection regime in which there is pressure to quickly amass as many bibliometric numbers and as much prestige as possible. It is in the self-interest of individuals to pursue quick ascension even if the way is paved with scientific fraud, misconduct, and unrepeatable results.

6. Solution Cannot Come from Individuals

The usual answer to an uncovered fraud or misconduct is to penalize the perpetrator. This is right. Then there are press conferences and reports in which the host institute promises to be more careful and that its scientists will have more integrity (e.g., [70]). Thus, it seems that there is a belief (hope or wishful thinking) that this behavior is just the result of a character flaw in some “bad people”, and proper education can prevent this. Unfortunately, this is not the case. When there is a strong selection toward a certain goal (more papers, higher citations, higher cumulative impact factor, and so on), there will be strategies to achieve these goals.

One can do a quick cost/benefit analysis. Honest science has its cost: it might require more work and, in the end, comes with no guarantee of publication in a good journal. Society at large definitely benefits from valid scientific results: our accumulated knowledge increases. However, is there a benefit for the one doing the research? Having a reputation for doing solid, reliable experiments can have its benefits, but developing such a reputation takes time, and if one cannot get to the next stage in academia (from Ph.D. student to postdoc, from postdoc to tenured researcher), then this long-term benefit will not be realized. On the other hand, the benefits from manipulating/faking data can be considerable (publication in a better journal, saved time and resources for more research), while the cost is small. Outright faking of data might result in losing one’s job, but for that one needs to be very careless, for example by using the same faked figures in many publications [71], using the same figure to show replicates [72,73], referring to a section of a third-party medical dataset that does not exist [74], or boasting about questionable practices in a blog [75,76,77].

Sometimes insiders warn the journals and/or the institutes that something was amiss during the investigation [78]. Unfortunately, one can publish based on made-up data for decades [79]. In many fields, there is little risk that one’s study will be repeated, because repetition is expensive, time consuming (for example, for studies requiring considerable computer power [80]), or not possible (for example, estimates of heritability in different populations are expected to differ; in a similar vein, genetic association studies are expected to give different results in different populations [81]). The huge benefit of misconduct is evident from the fact that the papers affected are mostly in high-profile journals. The above stories are just the tip of the iceberg. High-profile publications are under more scrutiny, and repeated manipulation and faking have a higher chance of detection. However, many of these cases took years to uncover and then investigate. How many small manipulations and fabrications pass undetected? When 2% of researchers admit that they have fabricated or manipulated data [82], then the problem is systemic rather than the act of some isolated “bad people”.

If the advancement of individuals (high stakes) depends on measures that can be increased by low-cost misconduct, rational people will be tempted to engage in it. Is misconduct the only way to advancement? Master Yoda’s answer to Luke’s question whether the Dark Side is more powerful is also apt here: “No, no. Easier and more seductive; not more powerful.” Individuals will be tempted to walk the easier path if this path leads to a higher salary, a permanent position or more grant money. We cannot expect individuals to act against their best interests. Therefore, we need to change the selection regime so that it selects for scientific excellence and not for a proxy that can be accumulated by misconduct. Such change requires that granting agencies and institutional committees change how they assess applications.

7. Proposals for a Better System

This paper would not be complete without some proposals for a better system. We all know that more resources would lead to less competition, and that, by itself, would solve some of the problems discussed here. Unfortunately, I do not think that governments will increase their R&D spending just to uplift the ethics in science. Only policy makers can change the system so that the benefits of scientific misconduct will lessen.

(a) Do not base selection on bibliometric numbers

A joint statement by three scientific academies requests that bibliometric numbers be avoided in evaluations, especially for early-stage researchers [83]. A similar statement was already made in the San Francisco Declaration on Research Assessment [84]. We have seen the damage the “publish or perish” mentality has caused and the foul atmosphere it fosters. We need to let go of our fond numbers and “let’s read people’s work and evaluate each study or proposal on its merits” [85]. We need to judge people [86], even if it brings us out of our comfort zone. Many of the numbers we employ today reflect on the journal and not on the individual scientist. Individuals, on the other hand, can also be judged based on their other skills and characteristics (for example, teaching activity). Therefore, there are ways to assess people without falling back on bibliometric numbers.

It is especially important to judge a young scientist on something other than impact factors and citations. For example, the work they have actually performed, or the experiment they have set up, might be a better indication of their merits than the journal they have published in. If people feel that their advancement depends on the rigorous and unbiased science they do, then there is less incentive to fake or manipulate data; on the contrary, rigor itself is rewarded.

(b) Select out only those applications that should not be funded

The application procedures are generally such that they can weed out unworthy individuals (not always, as a project aiming at constructing a perpetuum mobile was funded by the Hungarian government a few years back [87]). When selecting out those that are less fit, an evolving population can stay at a fitness peak. However, when the selection is among individuals who are equally fit (i.e., all should get the honor, but there is only a finite number to be handed out), then it is not selection, it is drift. Drift changes the frequency of a heritable trait and can lead to the fixation of one trait over another, but it does not lead to adaptation. Sometimes it is not easy to distinguish the result of drift from selection, but when, for example, the success rate of a certain grant proposal is very low, there is a suspicion that there is considerable randomness in who gets the grant. The success rate of the European Research Council’s (ERC) Consolidator Grant, the flagship basic research grant in Europe, was 13% in 2017 [88]; similarly, the success rate of NIH (USA) applications is well below 20% [89]. I am sure all recipients are worthy individuals, but at the same time, there are a lot of worthy projects in the unfunded 80+%.
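The difference between selection and drift at low success rates can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not a model of any real funding agency: each applicant has a hypothetical "true merit", each review panel sees that merit plus independent random noise, and the top 13% of scores are funded. The function name `funded_overlap` and all parameters are illustrative inventions. The quantity of interest is how many of the same applicants two independent panels would fund.

```python
import random

def funded_overlap(n_applicants=1000, success_rate=0.13,
                   merit_sd=0.0, noise_sd=1.0, seed=0):
    """Fraction of funded applicants shared by two independent panels.

    merit_sd = 0 means all applicants are equally fit (pure drift);
    a large merit_sd relative to noise_sd means real differences dominate.
    All parameters are assumptions made for illustration.
    """
    rng = random.Random(seed)
    merit = [rng.gauss(0.0, merit_sd) for _ in range(n_applicants)]
    n_funded = round(n_applicants * success_rate)

    def panel_pick():
        # Each panel scores every applicant as true merit plus its own noise.
        scores = [m + rng.gauss(0.0, noise_sd) for m in merit]
        ranked = sorted(range(n_applicants),
                        key=lambda i: scores[i], reverse=True)
        return set(ranked[:n_funded])

    first, second = panel_pick(), panel_pick()
    return len(first & second) / n_funded

if __name__ == "__main__":
    print("equally fit applicants:", funded_overlap(merit_sd=0.0))
    print("large merit differences:", funded_overlap(merit_sd=10.0))
```

When applicants are equally fit, the two panels agree on little more than chance would predict, which is what drift looks like. Agreement is high only when merit differences are large relative to reviewer noise.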

(c) Baseline funding

If grants are distributed only to a fraction of the qualified and worthy projects, then we need to admit that the process is basically a lottery. The entrance fee to this lottery is huge: the costs of writing applications and assessing them are considerable [90]. Just by freeing up this money, there would be more to distribute. Funding then could be an honest lottery, in which case the winner of a grant is chosen randomly from the pool of qualified individuals. Alternatively, baseline funding could be introduced. In this case, grant money is distributed equally among all qualified personnel, and thus each would be able to maintain their research [91].

A lottery style of resource distribution would be the easier of the two to implement. Many funding schemes have a rating system in which applications are either approved for funding or rejected. However, because of a lack of resources, only some of the approved applications are actually funded. All approved applications should enter the pool for random picks. However, this system can be rigged by admitting to the pool only as many applications as can be funded, and disqualifying applications that, given enough funding, would be funded. Furthermore, this system does not solve the waste of resources associated with writing and evaluating applications.
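The lottery described above can be sketched in a few lines. This is a minimal illustration, not a description of any existing funding scheme: the record fields `id`, `approved` and `cost`, and the function name `funding_lottery`, are hypothetical. Every approved application enters the pool, the pool is shuffled, and applications are funded in that random order as long as the remaining budget covers them.

```python
import random

def funding_lottery(applications, budget, seed=None):
    """Draw approved applications in random order and fund each one
    that the remaining budget can still cover.

    `applications` is a list of dicts with hypothetical keys
    'id', 'approved' (bool) and 'cost' (number).
    Returns the list of funded ids and the unspent budget.
    """
    rng = random.Random(seed)
    # Every approved application enters the pool, with no further ranking.
    pool = [app for app in applications if app["approved"]]
    rng.shuffle(pool)

    funded, remaining = [], budget
    for app in pool:
        if app["cost"] <= remaining:
            funded.append(app["id"])
            remaining -= app["cost"]
    return funded, remaining

if __name__ == "__main__":
    apps = [{"id": i, "approved": i % 2 == 0, "cost": 1} for i in range(10)]
    print(funding_lottery(apps, budget=3, seed=1))
```

Note that the sketch takes the approval flag as given. The rigging described above happens one step earlier, when the pool itself is trimmed to match the budget.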

Baseline funding solves the problem of wasting time and resources on writing and evaluating grant applications. Every qualified scientist gets a share of the available resources. Shares might not be equal as some fields require more resources than others; for example, research in the humanities costs less than research in the life sciences. However, shares should be as similar as possible. Please note that salaries, conference fees, travel and accommodation, and overheads are the same, irrespective of the field. Differences come from the costs of equipment and consumables.

(d) Peer-review should ensure scientific soundness and good presentation, and not identify eye-catching claims

I do not think that there is a fundamental problem with the peer-review system. Qualified scientists can catch problematic methodology, conclusions that are too far removed from the results presented, lack of in-depth knowledge of the field, etc. This is one of the tasks of the peer-review system. Furthermore, peers can offer advice on how to improve the presentation of the manuscript. This is the other task of the peer-review system. Unfortunately, there is a third aspect of the peer-review system: barring or opening possibilities for publication in the target journal. When journals/editors ask reviewers to comment on how interesting or ground-breaking the presented results are, then the game is no longer about catching scientifically unsound papers, but about allowing or disallowing the accumulation of merit by the authors. As a reviewer, I explicitly refuse to answer these questions.

Journals are mostly for-profit enterprises that need to sell. Subscriptions to high impact-factor journals sell better and it is easier to attract paid publications (open access) to them. Thus, editors also shape their product by selecting papers that will attract citations, and thus increase the perceived value of the journal. Again, it is in the self-interest of editors to behave this way. At the extreme, predatory journals accept nearly anything to generate revenue [92,93]. Either journals should mostly be non-profit outlets managed by societies, universities, academies, and governments (which would lessen the cost of publications and accessing publications), or we should stop assessing people and their results based on the journal their papers appear in (in alignment with the San Francisco Declaration on Research Assessment [84], see my point (a) above). The latter route is easier to take, as it requires a policy change and not the acquisition of journals and/or the launching and establishment of new ones.

(e) Try to eliminate status bias

When reviewers are aware of the authors or the authors’ institution, it can influence their decision about the papers. A recent study compared the acceptance rates of abstracts submitted to an IT conference by eminent researchers and by researchers from prestigious institutions in single-blind and double-blind settings [94]. In the traditional single-blind setting, an abstract from an eminent scientist or a prestigious institution had a better chance of being accepted than in a double-blind setting. Thus, under single-blind review, the Matthew effect operates without hindrance. A double-blind review system, on the other hand, can ameliorate the problem.

8. Conclusions

Low personal incomes and low chances of advancement create a competitive environment with high stakes. Add to this that personal advancement depends not on diligence, rigor, hard work, and the ability to do solid science, but on bibliometric numbers. These numbers can be gamed. An initial rise gives a momentum that can carry someone for the rest of her/his career via positive feedback (the Matthew effect). Therefore, eye-catching, glamorous results that can be placed in high-prestige journals become more important than rigorous results that can be replicated. Scientists, especially young scientists, are tempted to skip some repetitions, “enhance” some of the results, set aside data that do not support a good story, tailor statistical procedures to obtain a desired outcome, etc. Consequently, the quality of science deteriorates. As individuals’ livelihoods depend on their publication records, we cannot expect them to always adhere to high ethical standards despite the competitive disadvantage this imposes. The solution must come from the granting agencies/research institutes, which should abolish the “publish or perish” mentality.