A Decade of Deepfake Research in the Generative AI Era, 2014–2024: A Bibliometric Analysis

Abstract

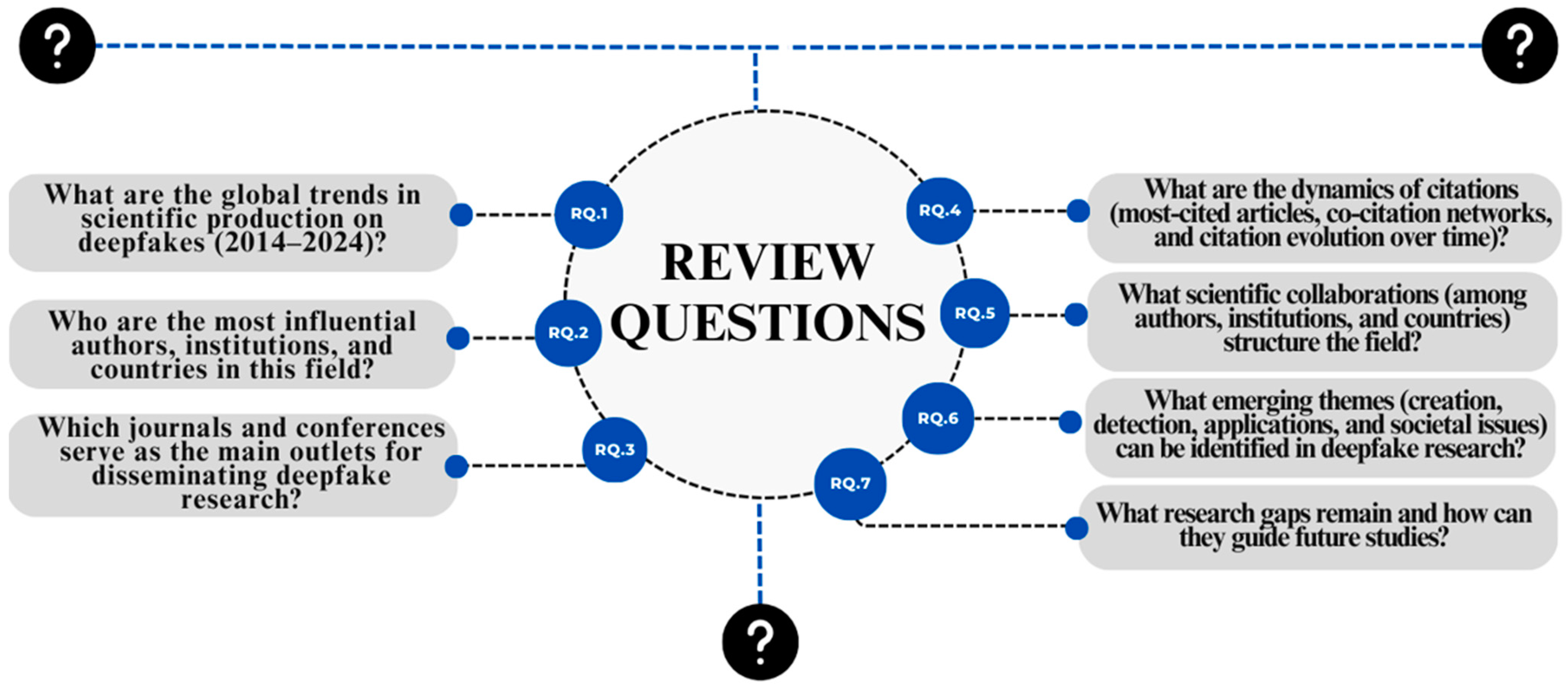

1. Introduction

- (i) Combining several bibliometric indicators for a more nuanced examination;

- (ii) Covering the full 2014–2024 period, which includes landmark moments in the history of deepfakes and related events, thus providing a pioneering longitudinal overview of deepfake research in the era of generative AI;

- (iii) Identifying underrepresented topics and collaboration hotspots to guide future research in generative AI.

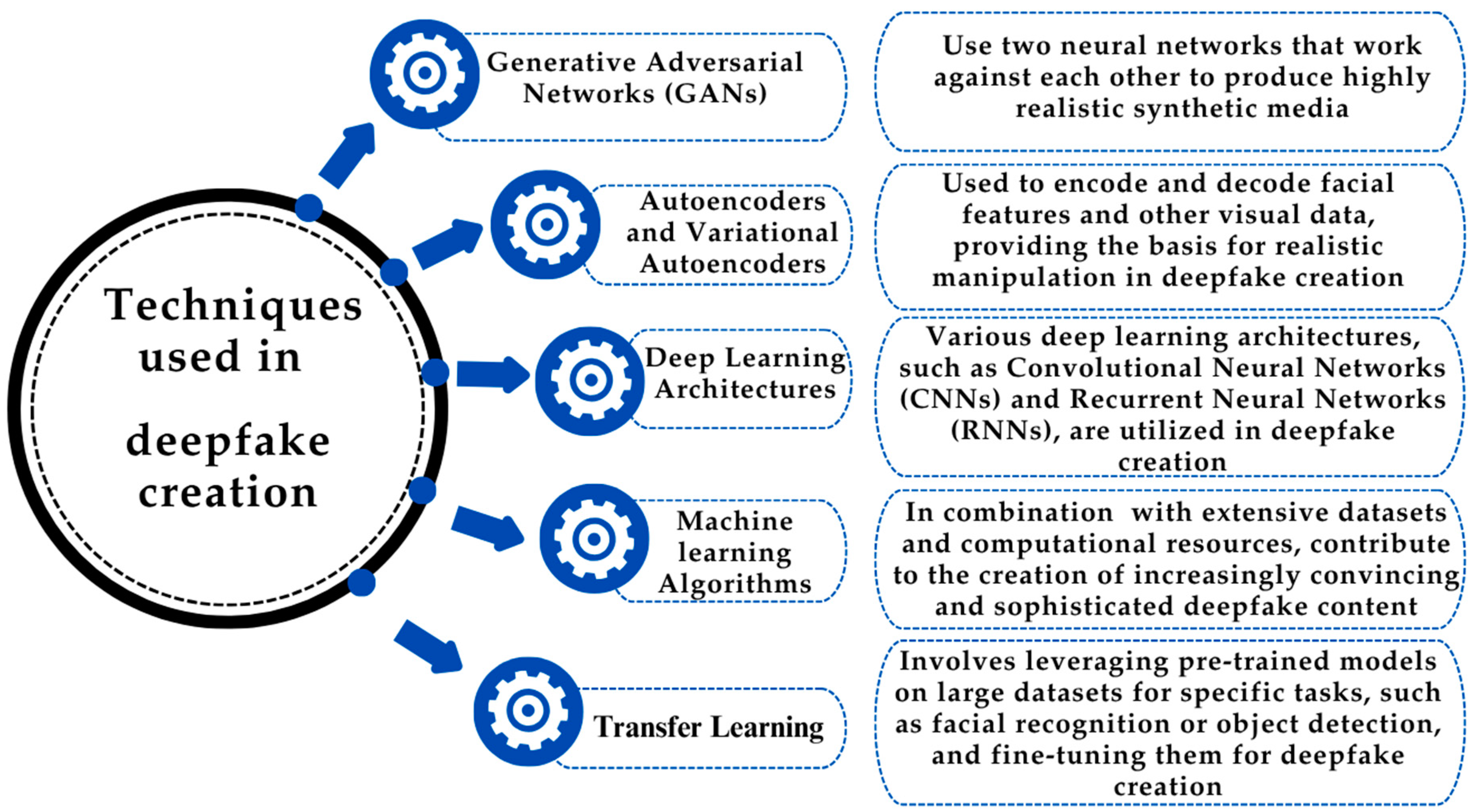

2. A Brief Literature Review on Deepfake Research

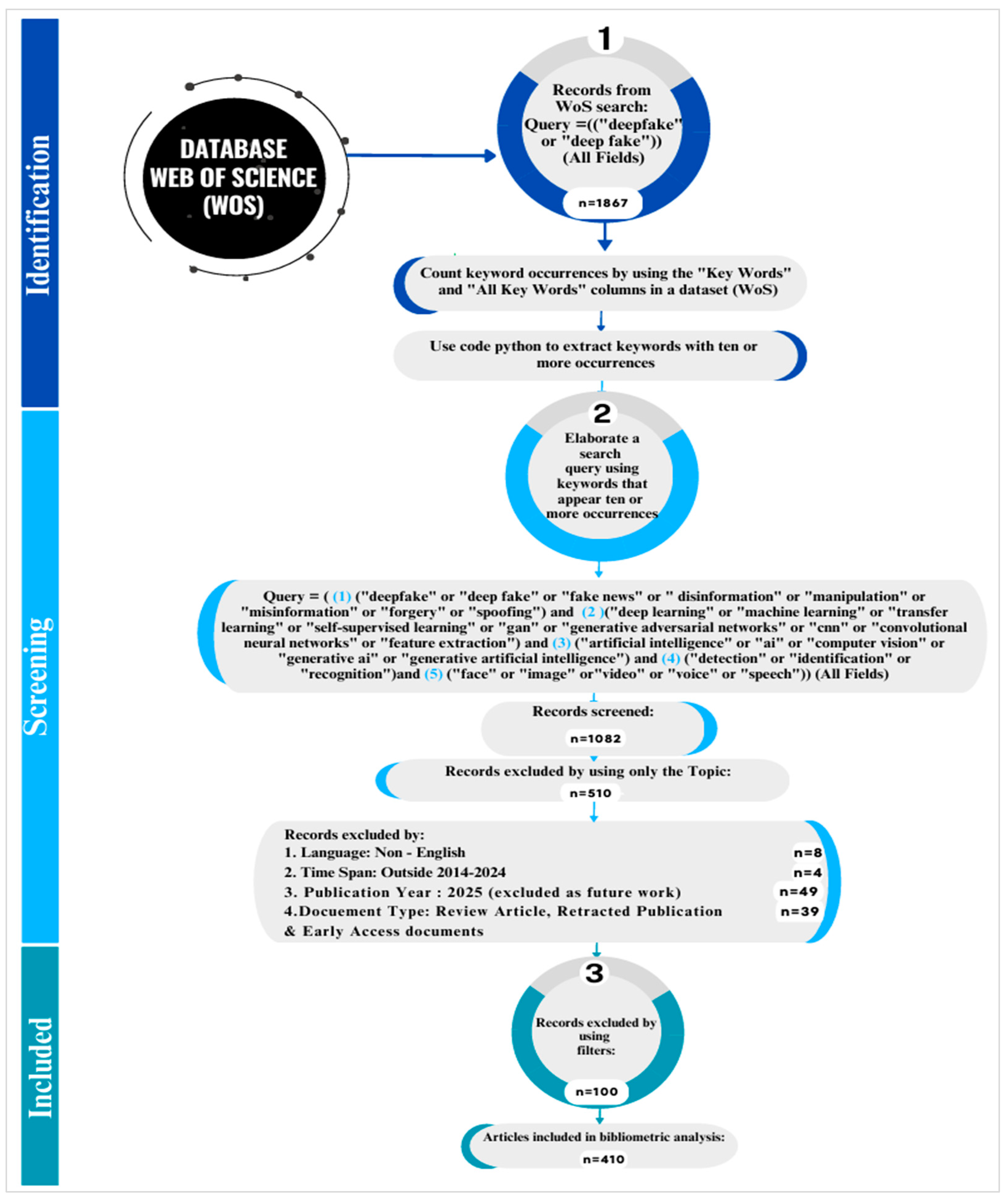

3. Materials and Methods

3.1. Data Collection and Filtering

TS = ((1) (“deepfake” or “deep fake” or “fake news” or “disinformation” or “manipulation” or “misinformation” or “forgery” or “spoofing”) and (2) (“deep learning” or “machine learning” or “transfer learning” or “self-supervised learning” or “gan” or “generative adversarial networks” or “cnn” or “convolutional neural networks” or “feature extraction”) and (3) (“artificial intelligence” or “ai” or “computer vision” or “generative ai” or “generative artificial intelligence”) and (4) (“detection” or “identification” or “recognition”) and (5) (“face” or “image” or “video” or “voice” or “speech”)).

- (1) Capture the broad spectrum of deceptive practices associated with deepfakes, ensuring the inclusion of diverse forms of media falsification.

- (2) Reflect the technological foundations used both to generate and to detect deepfakes.

- (3) Position the study within the broader context of intelligent systems and content synthesis.

- (4) Emphasize methods for identifying manipulated content, a core concern in deepfake research.

- (5) Specify the media formats most affected by deepfakes, enabling precise targeting of relevant studies.
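The five numbered blocks above are OR-groups joined by AND. The minimal Python sketch below assembles the topic-search string from those blocks and screens a record's text locally against the same Boolean logic. The helper names `build_ts_query` and `matches_all_blocks` are hypothetical, and the local matcher is an assumption: it uses exact whole-word matching, so it only approximates the database search, which applies its own term normalization.

```python
import re

# Search blocks copied from the TS query above (terms lowercased).
BLOCKS: list[list[str]] = [
    ["deepfake", "deep fake", "fake news", "disinformation", "manipulation",
     "misinformation", "forgery", "spoofing"],                       # (1) deception
    ["deep learning", "machine learning", "transfer learning",
     "self-supervised learning", "gan", "generative adversarial networks",
     "cnn", "convolutional neural networks", "feature extraction"],  # (2) techniques
    ["artificial intelligence", "ai", "computer vision", "generative ai",
     "generative artificial intelligence"],                          # (3) AI context
    ["detection", "identification", "recognition"],                  # (4) task
    ["face", "image", "video", "voice", "speech"],                   # (5) media
]


def build_ts_query(blocks: list[list[str]]) -> str:
    """Join terms with OR inside each block and join the blocks with AND."""
    groups = ["(" + " OR ".join(f'"{term}"' for term in block) + ")" for block in blocks]
    return "TS=(" + " AND ".join(groups) + ")"


def matches_all_blocks(text: str, blocks: list[list[str]]) -> bool:
    """Hypothetical local screen: True if every block has at least one whole-word hit."""
    lowered = text.lower()

    def hit(term: str) -> bool:
        # Word boundaries stop "ai" from matching inside words such as "said".
        return re.search(r"\b" + re.escape(term) + r"\b", lowered) is not None

    return all(any(hit(term) for term in block) for block in blocks)


if __name__ == "__main__":
    print(build_ts_query(BLOCKS)[:60])
    print(matches_all_blocks("A CNN-based deepfake detection method for face images using AI", BLOCKS))
```

Keeping the blocks as data makes it easy to see that a record is retained only if it hits every block, which is why broad terms such as “manipulation” are constrained by the four other blocks.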

3.2. Bibliometric Methodology

4. Results

4.1. Overview Analysis

4.1.1. Main Data

4.1.2. Annual Scientific Production and Average Citation per Year
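Annual production is often summarized by an annual growth rate. The sketch below assumes the compound-rate definition commonly reported by Bibliometrix (growth between the first and last year of the period). It is not necessarily the exact computation behind this section's figures, and the yearly counts in the example are hypothetical. The rate is undefined when the first year has no publications, so the function raises an error in that case.

```python
def annual_growth_rate(counts_by_year: dict[int, int]) -> float:
    """Compound annual growth rate (%) between the first and last year in the data."""
    years = sorted(counts_by_year)
    first, last = counts_by_year[years[0]], counts_by_year[years[-1]]
    span = years[-1] - years[0]
    if span <= 0 or first <= 0:
        raise ValueError("need at least two years and a non-zero first-year count")
    return ((last / first) ** (1 / span) - 1) * 100


# Hypothetical counts, for illustration only (not this study's data).
example = {2014: 2, 2015: 3, 2016: 8}
print(round(annual_growth_rate(example), 1))  # 100.0
```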

4.2. Sources Analysis

4.2.1. Top 10 Most-Cited and Productive Sources

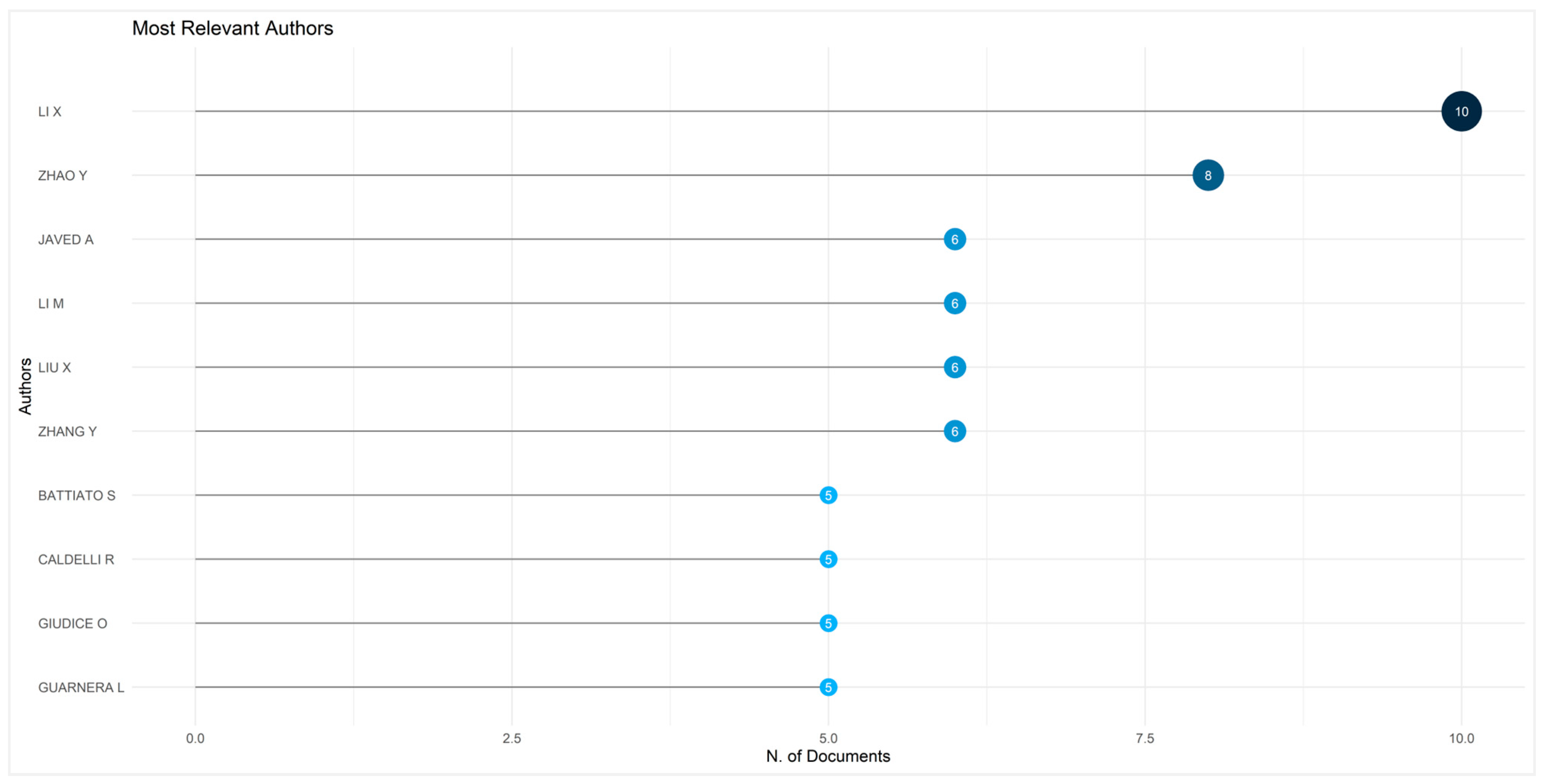

4.2.2. Top 10 Most Relevant Authors

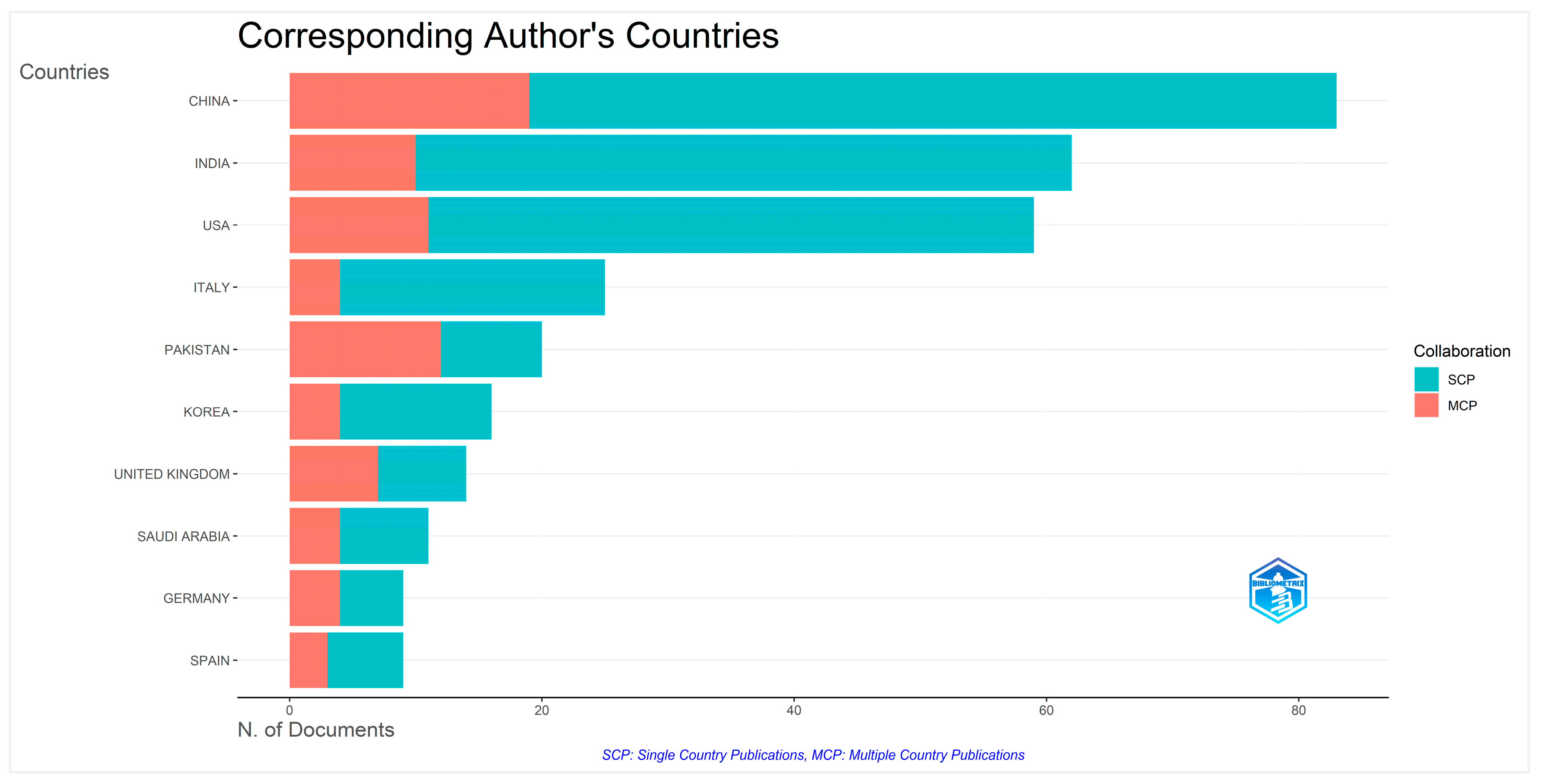

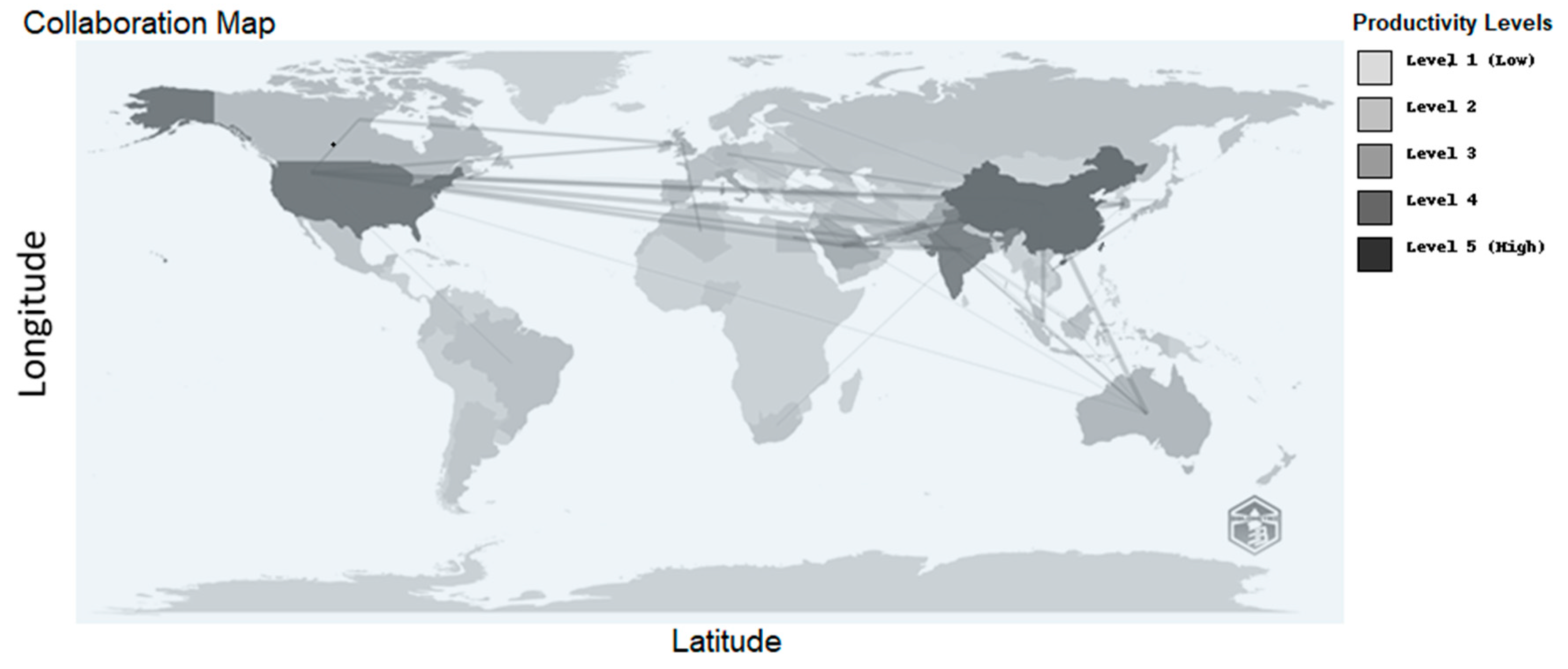

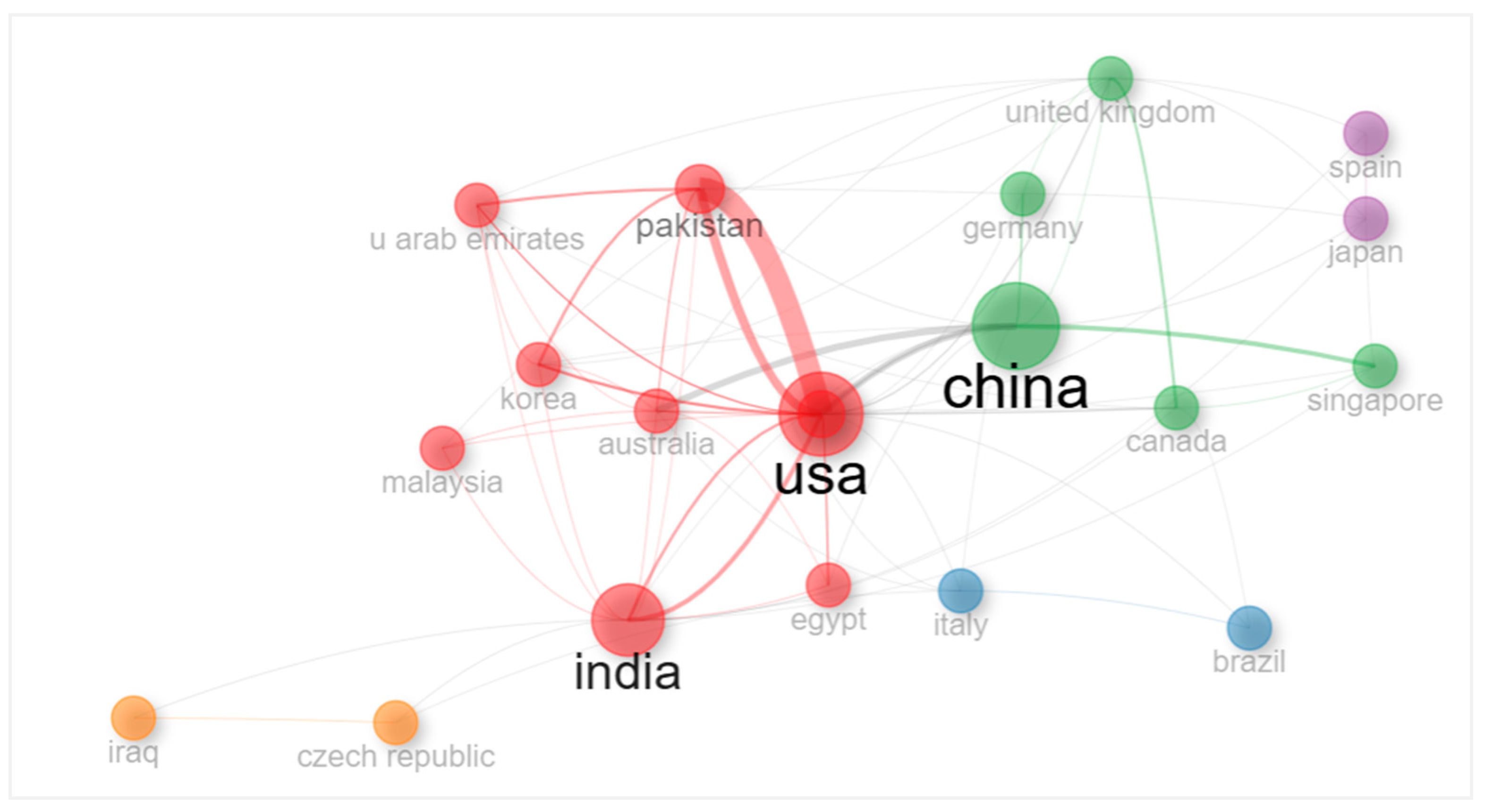
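Rankings of the most relevant authors count articles per author. Bibliometrix-style tables typically also report a fractionalized count, in which each article contributes 1/k to each of its k authors, so heavily co-authored papers weigh less per author. The following is a minimal sketch under that assumption, with hypothetical author labels.

```python
from collections import defaultdict


def author_productivity(docs: list[list[str]]) -> dict[str, tuple[int, float]]:
    """Return {author: (full count, fractional count)} from per-document author lists."""
    full: dict[str, int] = defaultdict(int)
    frac: dict[str, float] = defaultdict(float)
    for authors in docs:
        unique = list(dict.fromkeys(authors))  # drop duplicate names, keep order
        for author in unique:
            full[author] += 1
            frac[author] += 1 / len(unique)
    return {a: (full[a], frac[a]) for a in full}


# Hypothetical author lists for three documents.
docs = [["A", "B"], ["A"], ["A", "B", "C", "D"]]
ranking = sorted(author_productivity(docs).items(), key=lambda kv: kv[1], reverse=True)
print(ranking[0])  # ('A', (3, 1.75))
```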

4.2.3. Top 10 Corresponding Author’s Countries

4.2.4. Top 10 Most Cited Authors Globally

4.2.5. Leading Contributors and Influential Publications

4.2.6. Top 10 Most Cited Documents Locally

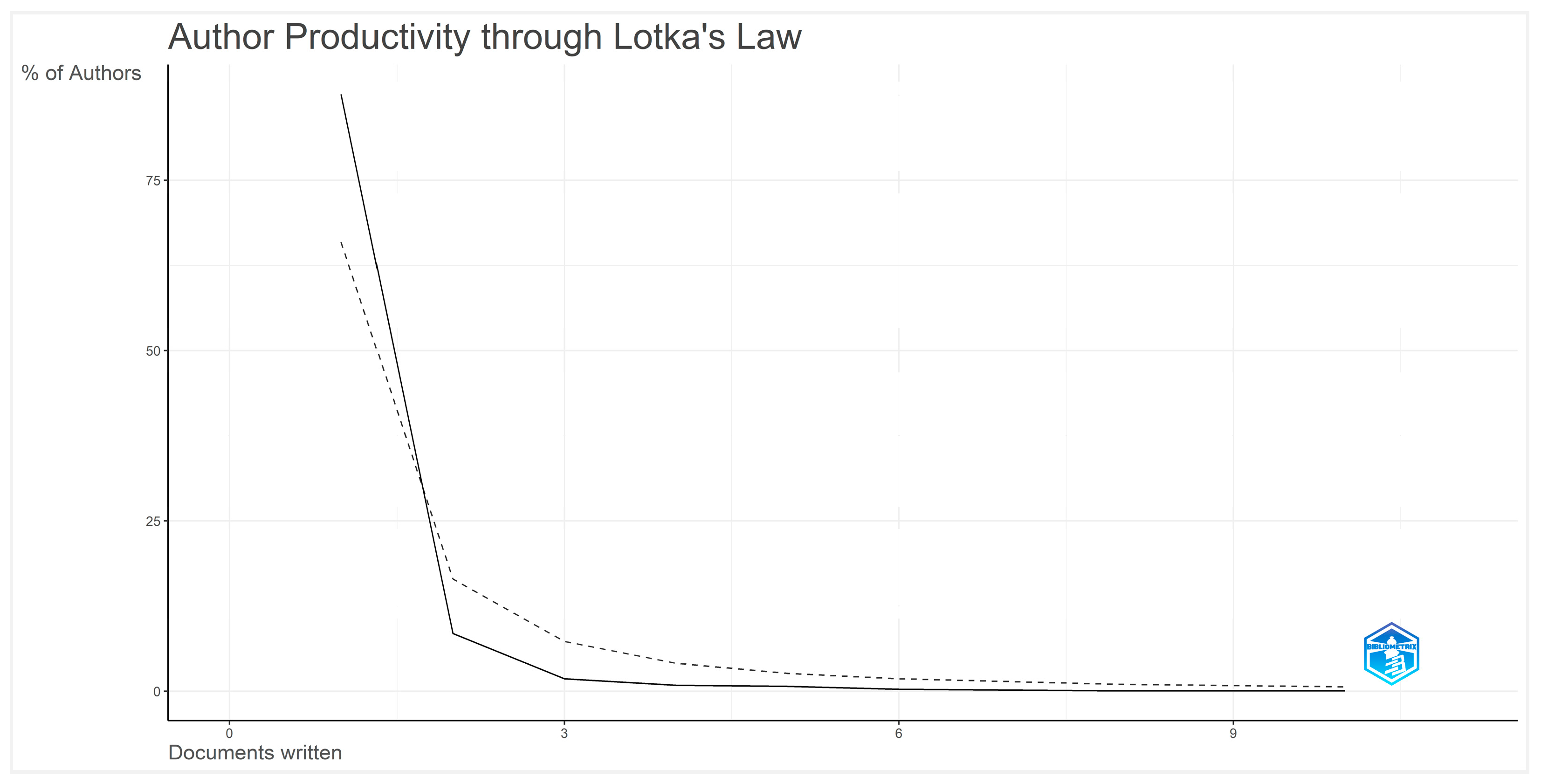

4.2.7. Lotka’s Frequency Distribution of Scientific Productivity

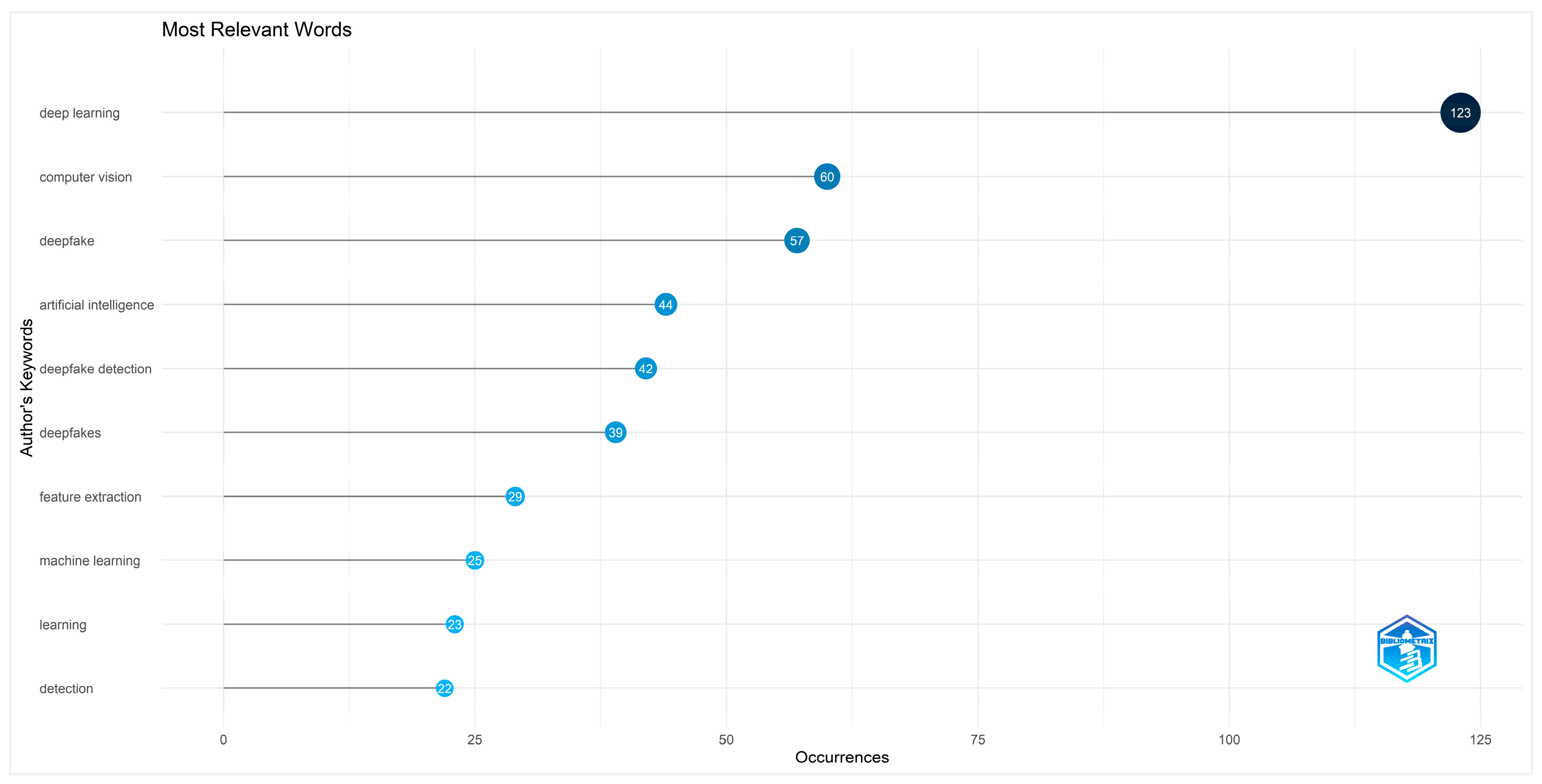
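Lotka's law states that the number of authors who publish n papers is approximately a_1 / n^c. Here a_1 is the number of single-paper authors, and c is close to 2 in Lotka's original inverse-square formulation. The sketch below computes the expected distribution and estimates c by ordinary least squares on the log-log values. That fitting method is one simple choice among several and is an assumption here. The counts in the example are hypothetical.

```python
import math


def lotka_expected(authors_with_one: int, max_papers: int, exponent: float = 2.0) -> dict[int, float]:
    """Expected number of authors with n papers under a_n = a_1 / n**exponent."""
    return {n: authors_with_one / n ** exponent for n in range(1, max_papers + 1)}


def fit_lotka_exponent(observed: dict[int, int]) -> float:
    """Least-squares estimate of c from log(a_n) = log(a_1) - c * log(n)."""
    pts = [(math.log(n), math.log(a)) for n, a in observed.items() if n > 0 and a > 0]
    if len(pts) < 2:
        raise ValueError("need at least two productivity levels with authors")
    mean_x = sum(x for x, _ in pts) / len(pts)
    mean_y = sum(y for _, y in pts) / len(pts)
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    return -sxy / sxx  # slope is -c, so negate it


observed = {1: 400, 2: 100, 4: 25}  # hypothetical counts of authors by papers published
print(lotka_expected(observed[1], 4))
print(round(fit_lotka_exponent(observed), 3))  # 2.0
```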

4.2.8. Top 10 Most Frequent Words

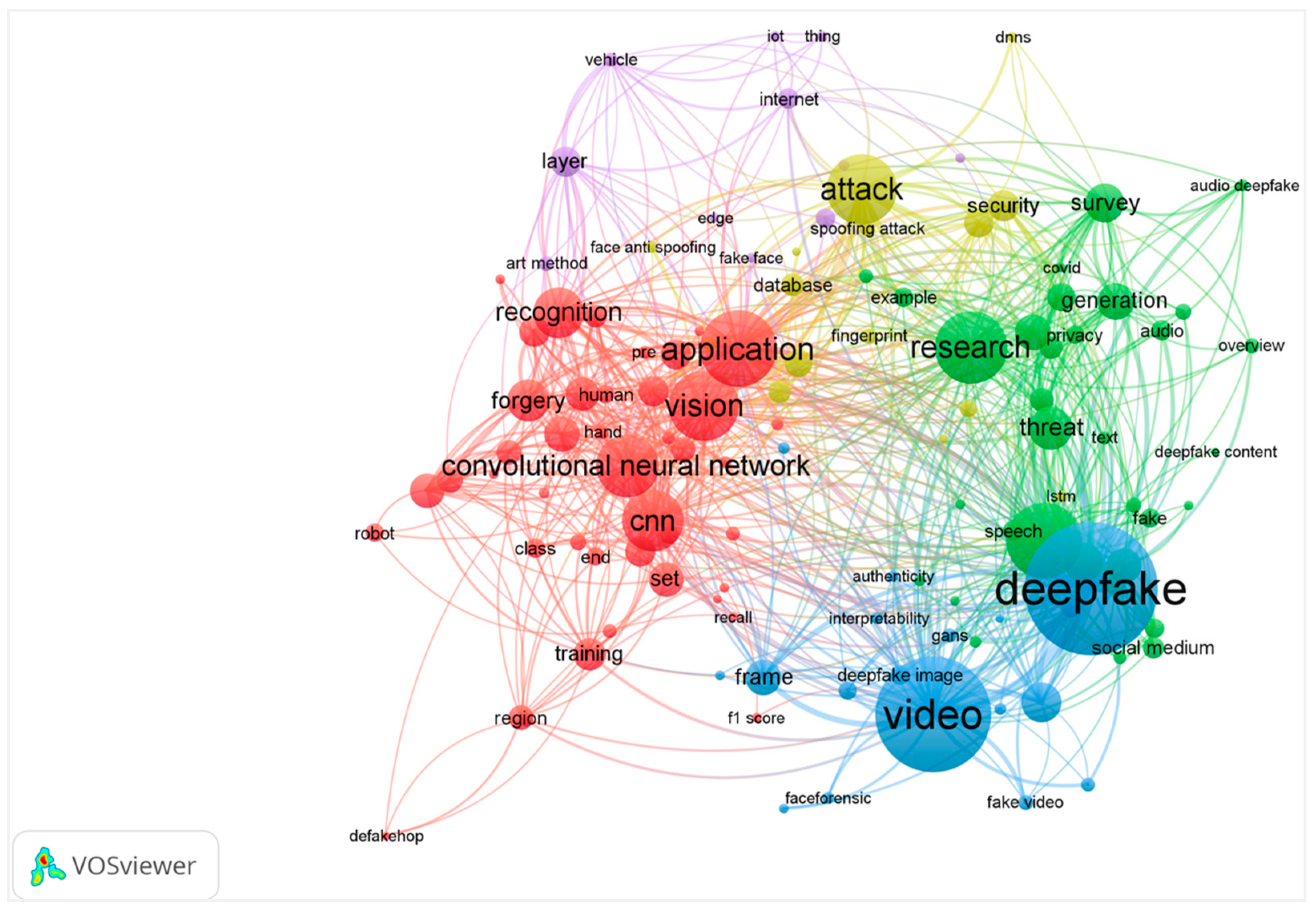

4.2.9. Keywords Co-Occurrence Network

4.2.10. Keywords Co-Occurrence Network

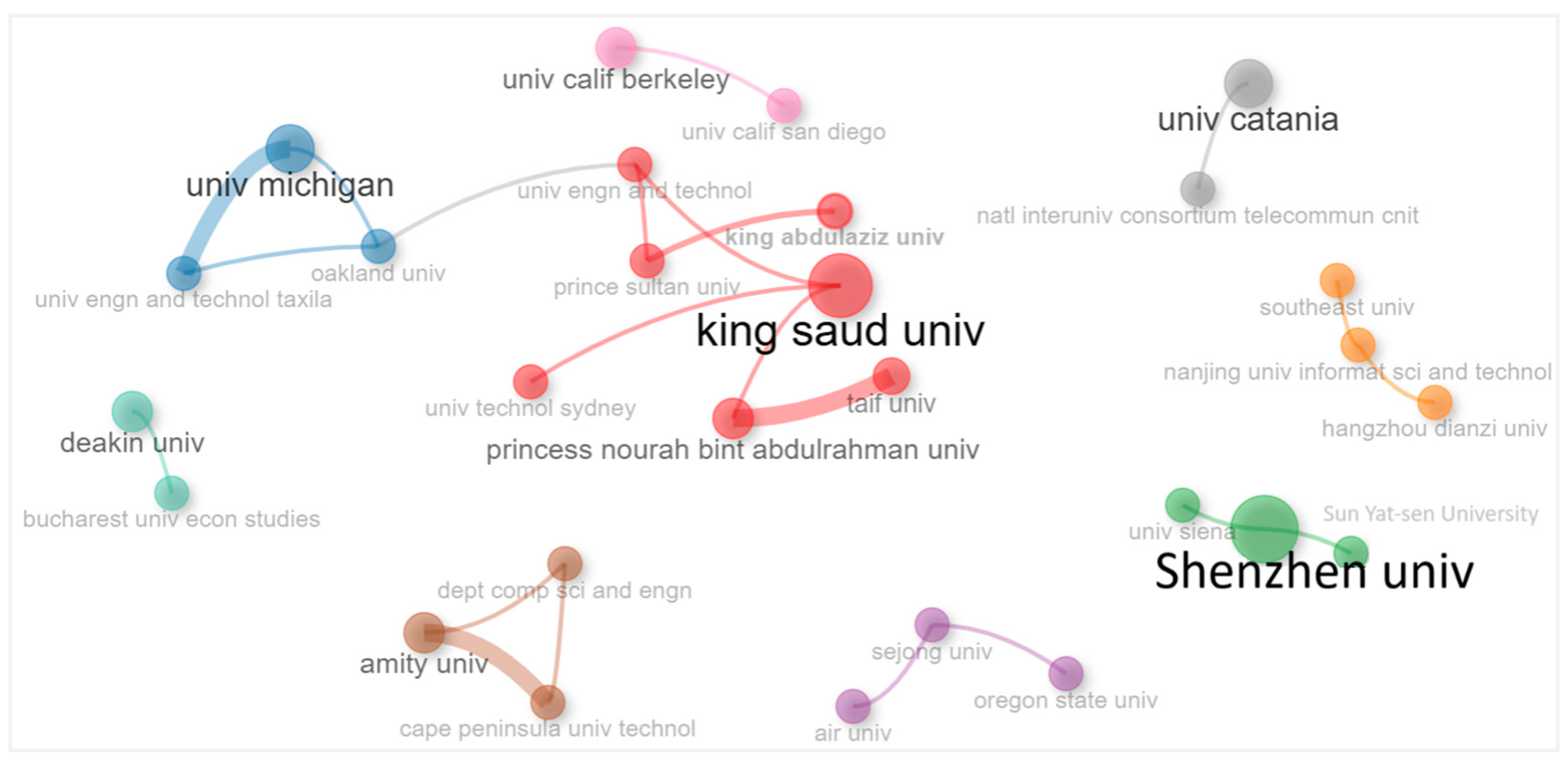
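A keyword co-occurrence network links two keywords whenever they appear in the same document, and weights the link by the number of such documents. The minimal sketch below assumes that keywords are compared after trimming and lowercasing. Tools such as VOSviewer can additionally merge synonyms through a thesaurus file, which is not shown. The keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(keyword_lists: list[list[str]]) -> Counter:
    """Count, for each keyword pair, the number of documents in which both appear."""
    pairs: Counter = Counter()
    for keywords in keyword_lists:
        # Normalize and de-duplicate within a document so each document counts once per pair.
        unique = sorted({k.strip().lower() for k in keywords if k.strip()})
        pairs.update(combinations(unique, 2))
    return pairs


# Hypothetical author-keyword lists for three documents.
docs = [["Deepfake", "CNN", "Detection"], ["deepfake", "detection"], ["GAN", "Deepfake"]]
links = keyword_cooccurrence(docs)
print(links.most_common(1))  # [(('deepfake', 'detection'), 2)]
```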

4.2.11. Organizations Co-Authorship

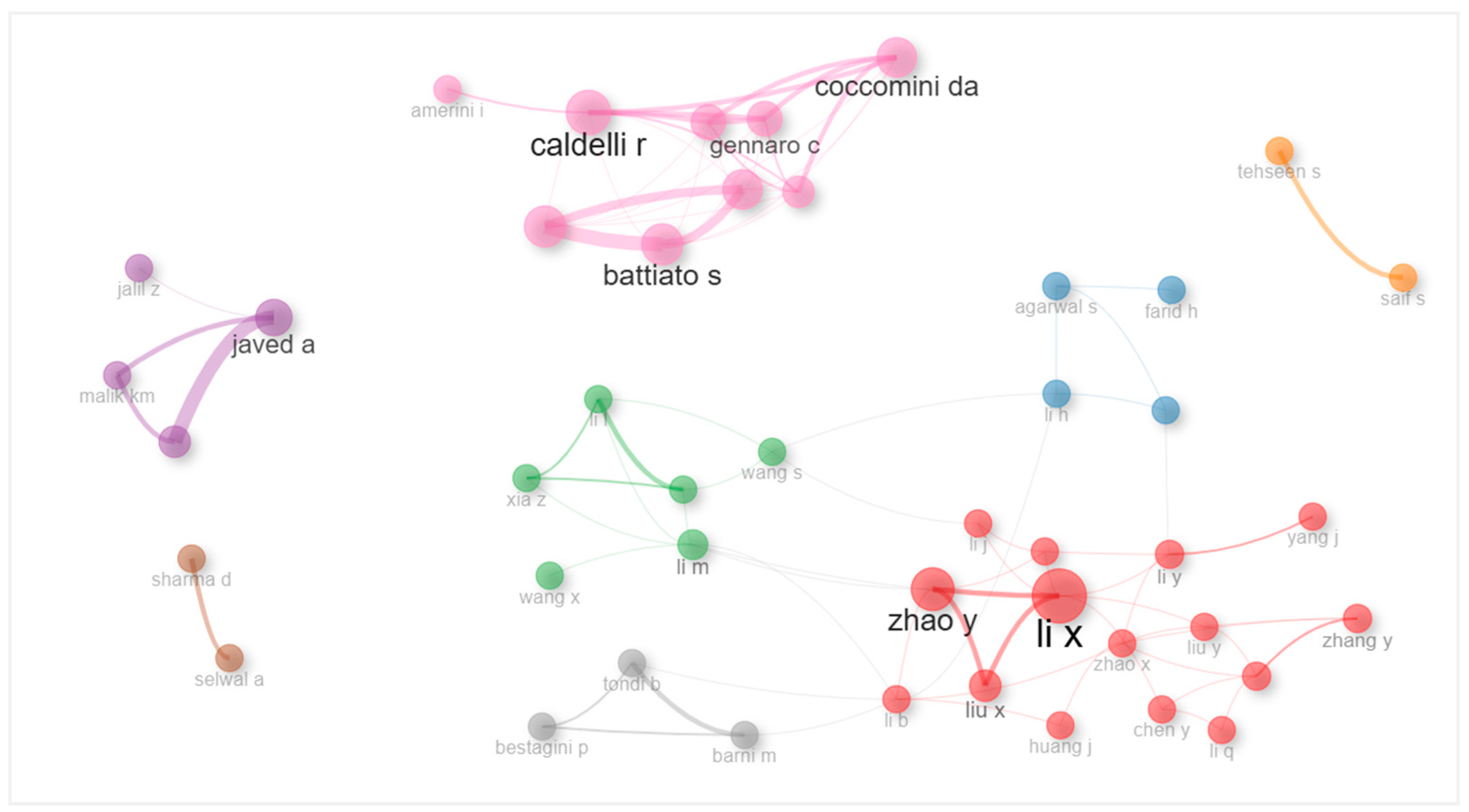
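In an organization co-authorship network, two organizations are linked when they appear on the same document. Under full counting, each shared document adds 1 to the link. One common fractional scheme instead gives each link a weight of 1/(n-1) for a document with n organizations, so every organization's total link strength from that document is 1 and large consortia do not dominate the map. The sketch below assumes that scheme, which may differ from the setting used for the map above. The organization labels are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def coauthorship_links(org_lists: list[list[str]], fractional: bool = False) -> dict[tuple[str, str], float]:
    """Weighted organization co-authorship links from per-document affiliation lists."""
    links: dict[tuple[str, str], float] = defaultdict(float)
    for orgs in org_lists:
        unique = sorted(set(orgs))
        n = len(unique)
        if n < 2:
            continue  # single-organization documents create no links
        # Full counting adds 1 per shared document; the fractional scheme adds 1/(n-1).
        weight = 1.0 / (n - 1) if fractional else 1.0
        for pair in combinations(unique, 2):
            links[pair] += weight
    return dict(links)


# Hypothetical affiliation lists for two documents.
docs = [["Univ X", "Univ Y"], ["Univ X", "Univ Y", "Lab Z"]]
print(coauthorship_links(docs))
print(coauthorship_links(docs, fractional=True))
```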

4.2.12. Author Co-Citation Network

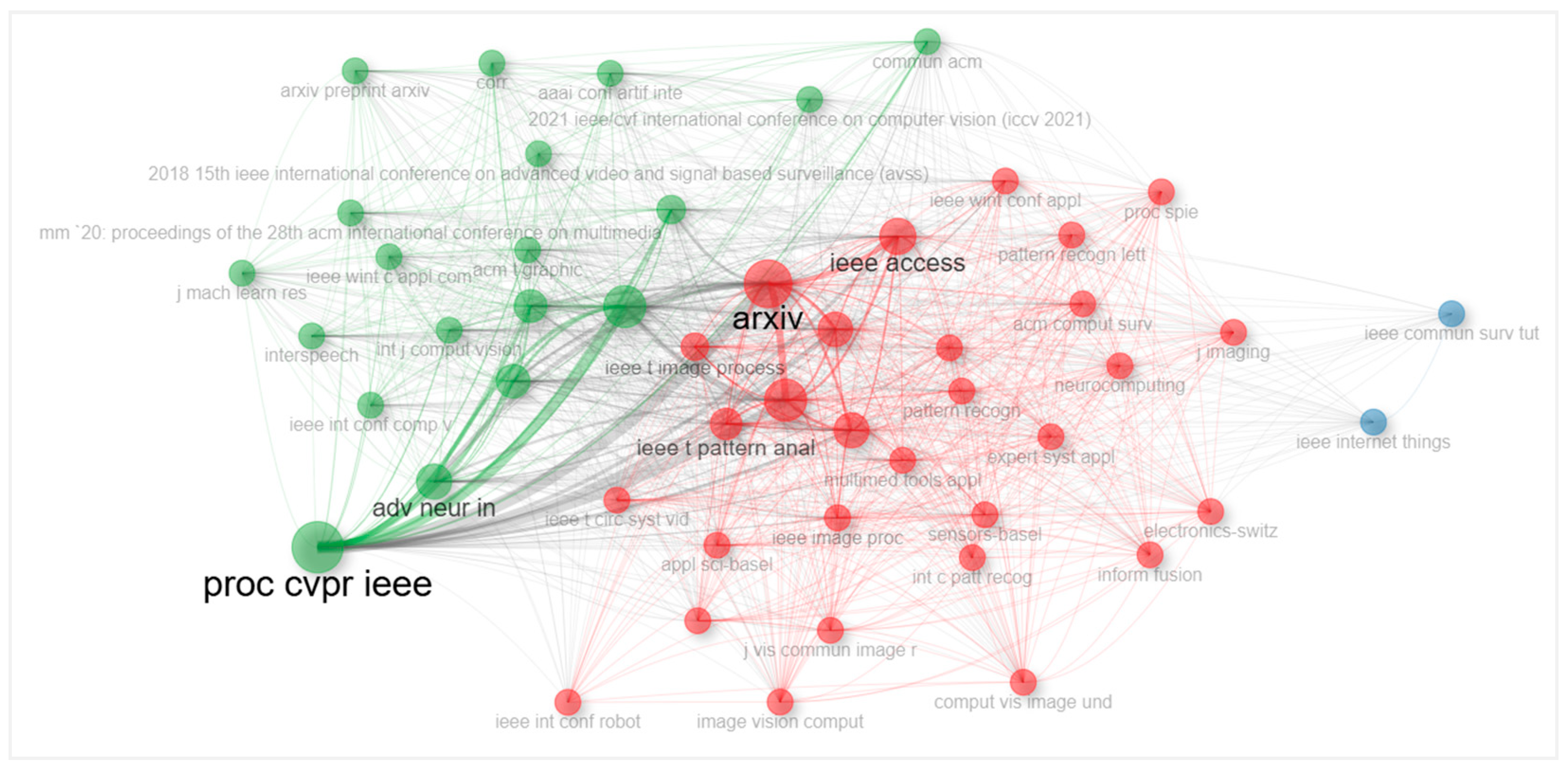
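Two authors are co-cited when a single citing document references both of them. The minimal sketch below counts co-citations from per-document lists of cited authors. As an optional normalization, it also divides each count by the geometric mean of the two authors' citation counts (Salton's cosine). Both the normalization choice and the author labels are illustrative assumptions, not the settings behind the map above.

```python
import math
from collections import Counter
from itertools import combinations


def author_cocitation(cited_lists: list[list[str]]) -> tuple[Counter, dict[tuple[str, str], float]]:
    """Return raw co-citation counts and their cosine-normalized strengths."""
    citations: Counter = Counter()
    cocitations: Counter = Counter()
    for cited in cited_lists:
        unique = sorted(set(cited))  # an author cited twice by one paper counts once
        citations.update(unique)
        cocitations.update(combinations(unique, 2))
    cosine = {
        (a, b): count / math.sqrt(citations[a] * citations[b])
        for (a, b), count in cocitations.items()
    }
    return cocitations, cosine


# Hypothetical cited-author lists from three citing documents.
refs = [["A", "B"], ["A", "B", "C"], ["A"]]
counts, strength = author_cocitation(refs)
print(counts[("A", "B")], round(strength[("A", "B")], 3))  # 2 0.816
```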

4.2.13. Network Visualization Map of Journal Co-Citations

4.3. Network Analysis

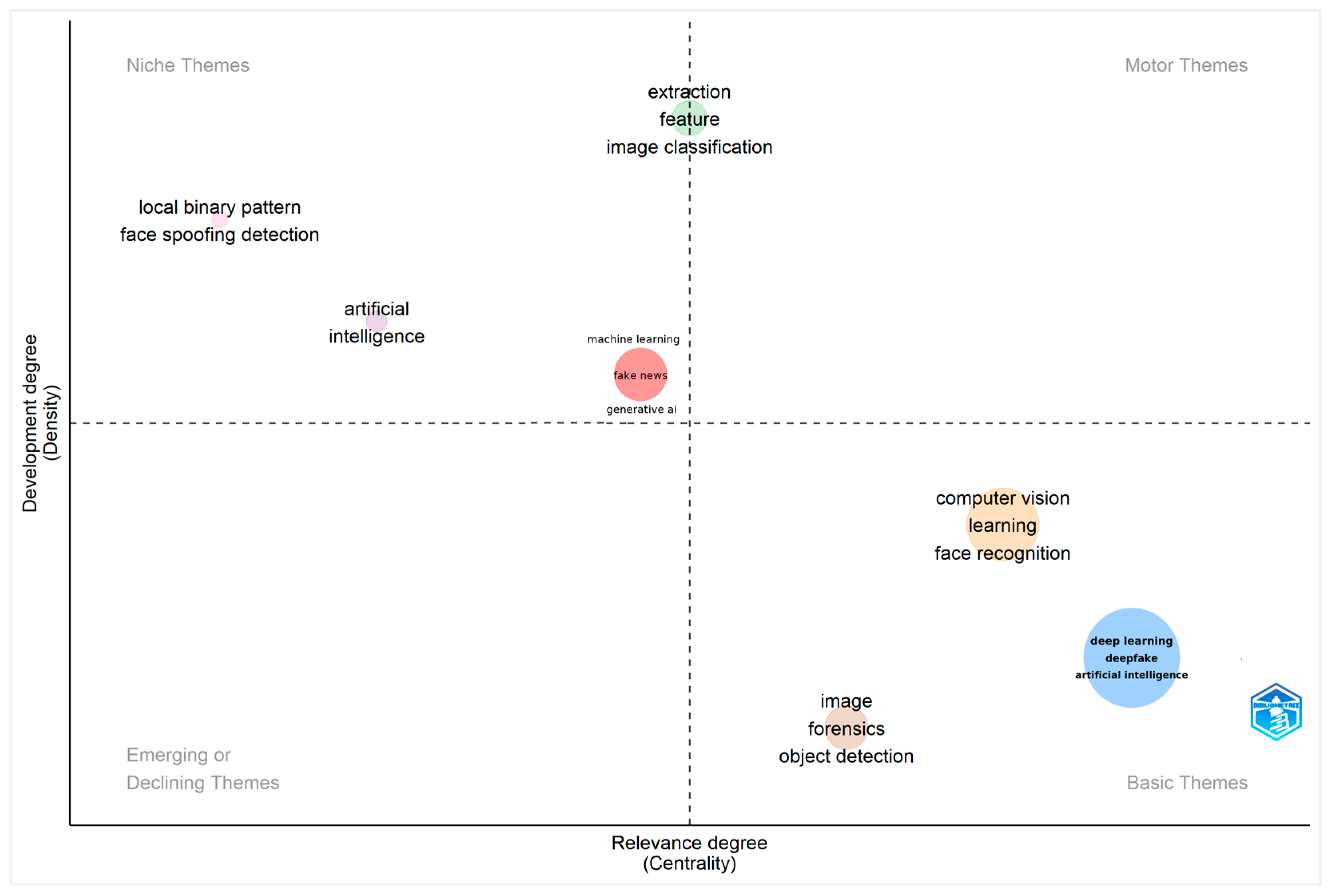

4.3.1. Thematic Mapping

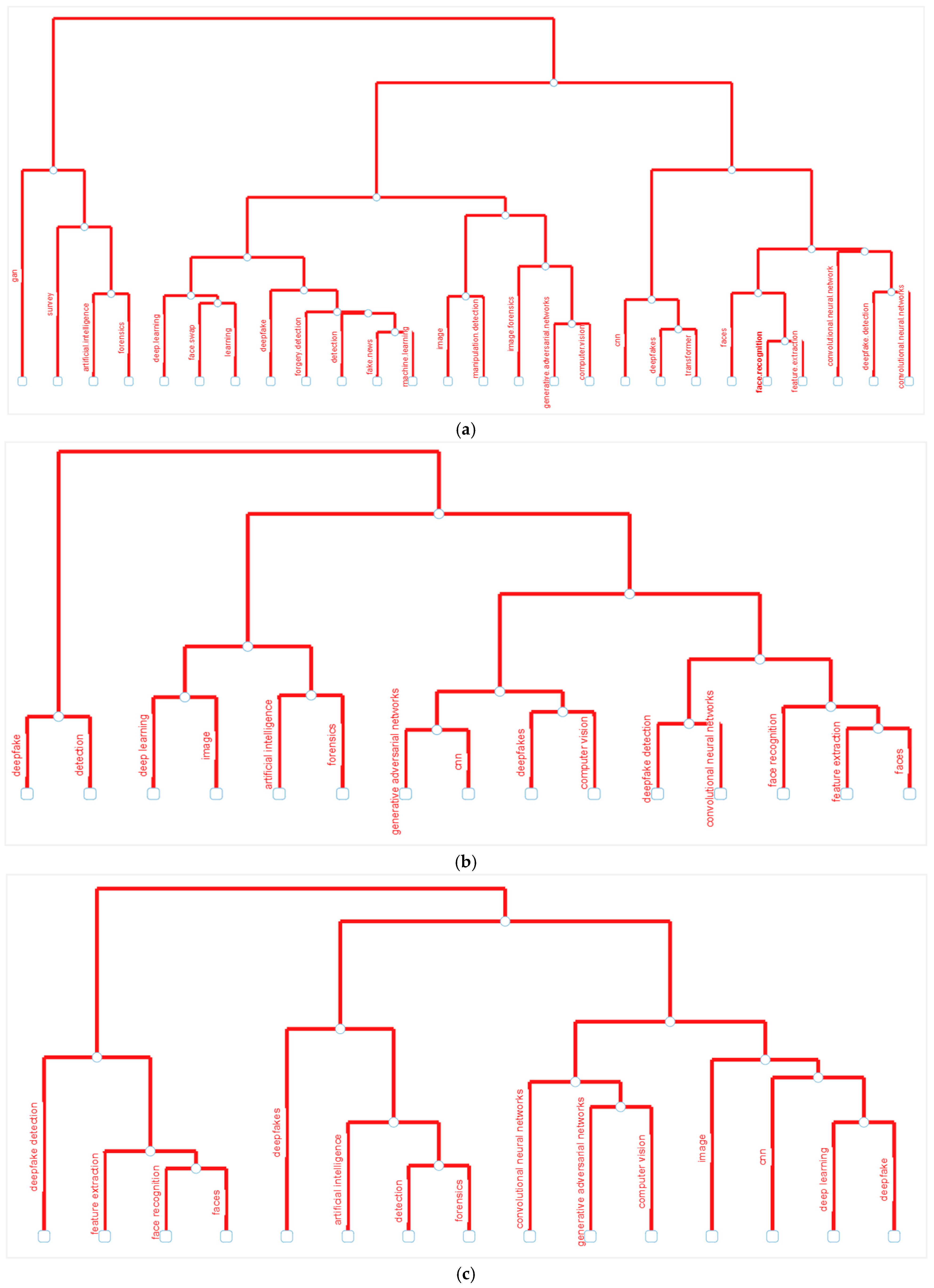
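Thematic maps of this kind place each keyword cluster by centrality (the strength of its links to other clusters) and density (the strength of its internal links). The minimal sketch below assigns the four conventional quadrant labels: motor, niche, emerging or declining, and basic themes. It assumes the plot is split at the median of each axis. That split rule and the cluster values are hypothetical.

```python
from statistics import median


def classify_themes(themes: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Map {cluster: (centrality, density)} to strategic-diagram quadrant labels."""
    c_split = median(c for c, _ in themes.values())
    d_split = median(d for _, d in themes.values())
    labels = {}
    for name, (centrality, density) in themes.items():
        if centrality >= c_split and density >= d_split:
            labels[name] = "motor theme"                  # central and well developed
        elif density >= d_split:
            labels[name] = "niche theme"                  # developed but peripheral
        elif centrality < c_split:
            labels[name] = "emerging or declining theme"  # weak on both axes
        else:
            labels[name] = "basic theme"                  # central but less developed
    return labels


# Hypothetical (centrality, density) values for four clusters.
clusters = {"cluster A": (0.9, 0.8), "cluster B": (0.2, 0.7),
            "cluster C": (0.1, 0.2), "cluster D": (0.8, 0.1)}
print(classify_themes(clusters))
```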

4.3.2. Conceptual Mapping of Keywords (MCA, CA, MDS)
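Of the three techniques named in the heading, classical (Torgerson) MDS is the simplest to illustrate. It double-centers the matrix of squared distances and uses the leading eigenvectors, scaled by the square roots of their eigenvalues, as coordinates. The pure-Python sketch below extracts those eigenvectors with power iteration and deflation. It assumes Euclidean-like dissimilarities, so that the leading eigenvalues are non-negative. It is not necessarily how the maps in this study were computed, and the example distances are hypothetical.

```python
import math
import random


def _mat_vec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def classical_mds(dist: list[list[float]], k: int = 2, iters: int = 500) -> list[list[float]]:
    """Classical (Torgerson) MDS: embed n items in k dimensions from a distance matrix."""
    n = len(dist)
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(row) / n for row in sq]
    grand_mean = sum(row_mean) / n
    # Double centering, B = -1/2 * J D^2 J; D^2 is symmetric, so column means equal row means.
    b = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean) for j in range(n)]
         for i in range(n)]

    rng = random.Random(0)  # fixed seed for a reproducible starting vector
    coords = [[0.0] * k for _ in range(n)]
    for axis in range(k):
        # Power iteration converges to the eigenvector of the largest-magnitude eigenvalue.
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        exhausted = False
        for _ in range(iters):
            w = _mat_vec(b, v)
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # remaining spectrum is numerically zero
                exhausted = True
                break
            v = [x / norm for x in w]
        lam = 0.0 if exhausted else sum(vi * wi for vi, wi in zip(v, _mat_vec(b, v)))
        scale = math.sqrt(max(lam, 0.0))  # negative eigenvalues carry no Euclidean coordinate
        for i in range(n):
            coords[i][axis] = scale * v[i]
        # Hotelling deflation removes this component before extracting the next axis.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords


# Hypothetical distances between three items (a 3-4-5 right triangle).
points = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)]
dist = [[math.dist(p, q) for q in points] for p in points]
xy = classical_mds(dist)
print([[round(c, 3) for c in row] for row in xy])
```

The recovered coordinates are unique only up to rotation, reflection and translation, so only the inter-point distances (and hence the relative positions of keywords) should be interpreted.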

5. Discussion

- The first group includes 13 keywords related to “deepfakes”, “fake news” scenarios, and their effects, highlighting their role in accelerating the spread of misinformation.

- The second group of 12 keywords is predominantly AI-related, particularly generative AI and GANs (generative adversarial networks), which are central to creating deepfakes.

- The third group includes 11 keywords pertaining to the detection of “deepfakes”, emphasizing methods for identifying and combating manipulated content.

5.1. Implications

5.2. Limitations

5.3. Future Research Directions

- Targeting emerging niches and generating novel insights in under-researched subfields.

- Examining interdisciplinary dimensions through co-citation analysis, thematic evolution, or the study of emerging research communities.

- Conducting mixed-method or qualitative studies to complement bibliometric findings.

- Addressing the ethical, legal, and societal implications of deepfakes.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abafe, E. A., Bahta, Y. T., & Jordaan, H. (2022). Exploring Biblioshiny for historical assessment of global research on sustainable use of water in agriculture. Sustainability, 14(17), 10651.

- Abbaoui, W., Retal, S., El Bhiri, B., Kharmoum, N., & Ziti, S. (2024). Towards revolutionizing precision healthcare: A systematic literature review of artificial intelligence methods in precision medicine. Informatics in Medicine Unlocked, 46, 101475.

- Acim, B., Kharmoum, N., Ezziyyani, M., & Ziti, S. (2025a). Mental health therapy: A comparative study of generative AI and deepfake technology. In M. Ezziyyani, J. Kacprzyk, & V. E. Balas (Eds.), International conference on advanced intelligent systems for sustainable development (AI2SD 2024) (Vol. 1403, pp. 351–357). Lecture Notes in Networks and Systems. Springer.

- Acim, B., Kharmoum, N., Lagmiri, S. N., & Ziti, S. (2025b). The role of generative AI in deepfake detection: A systematic literature review. In S. N. Lagmiri, M. Lazaar, & F. M. Amine (Eds.), Smart business and technologies. ICSBT 2024 (Vol. 1330, pp. 349–357). Lecture Notes in Networks and Systems. Springer.

- Agarwal, S., Farid, H., Fried, O., & Agrawala, M. (2020, June 14–19). Detecting deep-fake videos from phoneme-viseme mismatches. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW 2020) (pp. 2814–2822), Seattle, WA, USA.

- Aghaei Chadegani, A., Salehi, H., Md Yunus, M. M., Farhadi, H., Fooladi, M., Farhadi, M., & Ale Ebrahim, N. (2013). A comparison between two main academic literature collections: Web of Science and Scopus databases. Asian Social Science, 9(5), 18–26.

- Alkhammash, R. (2023). Bibliometric, network, and thematic mapping analyses of metaphor and discourse in COVID-19 publications from 2020 to 2022. Frontiers in Psychology, 13, 1062943.

- Alnaim, N. M., Almutairi, Z. M., Alsuwat, M. S., Alalawi, H. H., Alshobaili, A., & Alenezi, F. S. (2023). DFFMD: A deepfake face mask dataset for infectious disease era with deepfake detection algorithms. IEEE Access, 11, 16711–16722.

- Amerini, I., Galteri, L., Caldelli, R., & Del Bimbo, A. (2019, October 27–28). Deepfake video detection through optical flow based CNN. IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) (pp. 1205–1207), Seoul, Republic of Korea.

- Anker, M. S., Hadzibegovic, S., Lena, A., & Haverkamp, W. (2019). The difference in referencing in Web of Science, Scopus, and Google Scholar. ESC Heart Failure, 6(6), 1291–1312.

- Ansorge, L. (2024). Bibliometric studies as a publication strategy. Metrics, 1(1), 5.

- Apolo, Y., & Michael, K. (2024). Beyond a reasonable doubt? Audiovisual evidence, AI manipulation, deepfakes, and the law. IEEE Transactions on Technology and Society, 5(2), 156–168.

- Aria, M., & Cuccurullo, C. (2017). Bibliometrix: An R-tool for comprehensive science mapping analysis. Journal of Informetrics, 11(4), 959–975.

- Arruda, H., Silva, E. R., Lessa, M., Proença, D., & Bartholo, R. (2022). VOSviewer and Bibliometrix. Journal of the Medical Library Association, 110(4), 482–488.

- Birkle, C., Pendlebury, D. A., Schnell, J., & Adams, J. (2020). Web of Science as a data source for research on scientific and scholarly activity. Quantitative Science Studies, 1(1), 363–376.

- Bisht, V., & Taneja, S. (2024). A decade and a half of deepfake research: A bibliometric investigation into key themes. In G. Lakhera, S. Taneja, E. Ozen, M. Kukreti, & P. Kumar (Eds.), Navigating the world of deepfake technology (pp. 1–25). IGI Global.

- Boukhlif, M., Hanine, M., & Kharmoum, N. (2023). A decade of intelligent software testing research: A bibliometric analysis. Electronics, 12(9), 2109.

- Boukhlif, M., Hanine, M., Kharmoum, N., Ruigómez Noriega, A., García Obeso, D., & Ashraf, I. (2024a). Natural language processing-based software testing: A systematic literature review. IEEE Access, 12, 79383–79400.

- Boukhlif, M., Kharmoum, N., Hanine, M., Elasri, C., Rhalem, W., & Ezziyyani, M. (2024b). Exploring the application of classical and intelligent software testing in medicine: A literature review. In Lecture notes in networks and systems (Vol. 904, pp. 37–46). Springer.

- Bukar, U. A., Sayeed, M. S., Razak, S. F. A., Yogarayan, S., Amodu, O. A., & Mahmood, R. A. R. (2023). A method for analyzing text using VOSviewer. MethodsX, 11, 102339.

- Chintha, A., Thai, B., Sohrawardi, S. J., Bhatt, K., Hickerson, A., & Wright, M. (2020). Recurrent convolutional structures for audio spoof and video deepfake detection. IEEE Journal of Selected Topics in Signal Processing, 14(5), 1024–1037.

- Coile, R. C. (1977). Lotka’s frequency distribution of scientific productivity. Journal of the American Society for Information Science, 28(6), 366–370.

- Cover, R. (2022). Deepfake culture: The emergence of audio-video deception as an object of social anxiety and regulation. Continuum, 36(4), 609–621.

- Cozzolino, D., Poggi, G., & Verdoliva, L. (2017, June 20–22). Recasting residual-based local descriptors as convolutional neural networks: An application to image forgery detection. 5th ACM Workshop on Information Hiding and Multimedia Security (IH&MMSec ’17) (pp. 159–164), Philadelphia, PA, USA.

- Dervis, H. (2019). Bibliometric analysis using Bibliometrix: An R package. Journal of Scientific Research, 8(3), 156–160.

- Dhiman, B. (2023). Exploding AI-generated deepfakes and misinformation: A threat to global concern in the 21st century. Qeios.

- Di Franco, G. (2016). Multiple correspondence analysis: One only or several techniques? Quality & Quantity, 50, 1299–1315.

- Ding, F., Zhu, G., Li, Y., Zhang, X., Atrey, P. K., & Lyu, S. (2022). Anti-forensics for face swapping videos via adversarial training. IEEE Transactions on Multimedia, 24, 3429–3441.

- Domenteanu, A., Tătaru, G.-C., Crăciun, L., Molănescu, A.-G., Cotfas, L.-A., & Delcea, C. (2024). Living in the age of deepfakes: A bibliometric exploration of trends, challenges, and detection approaches. Information, 15(9), 525.

- Donthu, N., Kumar, S., Mukherjee, D., Pandey, N., & Lim, W. M. (2021). How to conduct a bibliometric analysis: An overview and guidelines. Journal of Business Research, 133, 285–296.

- El-Gayar, M. M., Abouhawwash, M., Askar, S. S., & Sweidan, S. (2024). A novel approach for detecting deep fake videos using graph neural network. Journal of Big Data, 11(1), 27.

- Ennejjai, I., Ariss, A., Kharmoum, N., Rhalem, W., Ziti, S., & Ezziyyani, M. (2023). Artificial intelligence for fake news. In J. Kacprzyk, M. Ezziyyani, & V. E. Balas (Eds.), International conference on advanced intelligent systems for sustainable development (AI2SD) (Vol. 637, pp. 65–74). Lecture Notes in Networks and Systems. Springer.

- Garg, D., & Gill, R. (2023, December 1–3). Deepfake generation and detection—An exploratory study. 2023 10th IEEE Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON) (pp. 888–893), Gautam Buddha Nagar, India.

- Garg, D. P., & Gill, R. (2024). A bibliometric analysis of deepfakes: Trends, applications and challenges. ICST Transactions on Scalable Information Systems, 11(6), e4883.

- Gil, R., Virgili-Gomà, J., López-Gil, J. M., & García, R. (2023). Deepfakes: Evolution and trends. Soft Computing, 27(14), 11295–11318.

- Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2020). Generative adversarial networks. Communications of the ACM, 63(11), 139–144.

- Guarnera, L., Giudice, O., & Battiato, S. (2020). Fighting deepfake by exposing the convolutional traces on images. IEEE Access, 8, 165085–165098.

- Guo, Z., Yang, G., Chen, J., & Sun, X. (2021). Fake face detection via adaptive manipulation traces extraction network. Computer Vision and Image Understanding, 204, 103170.

- Hagele, D., Krake, T., & Weiskopf, D. (2023). Uncertainty-aware multidimensional scaling. IEEE Transactions on Visualization and Computer Graphics, 29(1), 23–32.

- Hamza, A., Javed, A. R., Iqbal, F., Kryvinska, N., Almadhor, A. S., & Jalil, Z. (2022). Deepfake audio detection via MFCC features using machine learning. IEEE Access, 10, 134018–134028.

- Harbath, K., & Khizanishvili, A. (2023). Insights from data: What the numbers tell us about elections and future of democracy. Integrity Institute. Available online: https://integrityinstitute.org/blog/insights-from-data (accessed on 15 July 2025).

- Hu, J., Liao, X., Wang, W., & Qin, Z. (2022). Detecting compressed deepfake videos in social networks using frame-temporality two-stream convolutional network. IEEE Transactions on Circuits and Systems for Video Technology, 32(3), 1089–1102.

- Huang, L., Li, J., Hao, H., & Li, X. (2018). Micro-seismic event detection and location in underground mines by using Convolutional Neural Networks (CNN) and deep learning. Tunnelling and Underground Space Technology, 81, 265–276.

- Hussain, M., Bird, J. J., & Faria, D. R. (2019). A study on CNN transfer learning for image classification. In A. Lotfi, H. Bouchachia, A. Gegov, C. Langensiepen, & M. McGinnity (Eds.), Advances in computational intelligence systems. UKCI 2018; Advances in intelligent systems and computing (Vol. 840, pp. 191–202). Springer.

- Hydara, E., Kikuchi, M., & Ozono, T. (2024). Empirical assessment of deepfake detection: Advancing judicial evidence verification through artificial intelligence. IEEE Access, 12, 151188–151203.

- Kalaiarasu, S., Rahman, N. A. A., & Harun, K. S. (2024). Deepfake impact, security threats and potential preventions. In AIP conference proceedings (Vol. 2802, p. 050020). AIP Publishing.

- Kasita, I. D. (2022). Deepfake pornografi: Tren kekerasan gender berbasis online (KGBO) di era pandemi COVID-19 [Deepfake pornography: The trend of online gender-based violence (KGBO) in the COVID-19 pandemic era]. Jurnal Wanita dan Keluarga, 3(1), 16–26.

- Kietzmann, J., Lee, L. W., McCarthy, I. P., & Kietzmann, T. C. (2020). Deepfakes: Trick or treat? Business Horizons, 63, 135–146.

- Kılıç, B., & Kahraman, M. E. (2023). Current usage areas of deepfake applications with artificial intelligence technology. İletişim ve Toplum Araştırmaları Dergisi, 3(2), 301–332.

- Kohli, A., & Gupta, A. (2021). Detecting DeepFake, FaceSwap and Face2Face facial forgeries using frequency CNN. Multimedia Tools and Applications, 80, 18461–18478.

- Lakshmi, D., & Hemanth, D. J. (2024). An overview of deepfake methods in medical image processing for health care applications. In Frontiers in artificial intelligence and applications (Vol. 383, pp. 304–311). IOS Press.

- Lee, S., Tariq, S., Shin, Y., & Woo, S. S. (2021). Detecting handcrafted facial image manipulations and GAN-generated facial images using Shallow-FakeFaceNet. Applied Soft Computing, 105, 107256.

- Li, Y., Chang, M.-C., & Lyu, S. (2018, December 11–13). In ictu oculi: Exposing AI-created fake videos by detecting eye blinking. IEEE International Workshop on Information Forensics and Security (WIFS) (pp. 1–7), Hong Kong, China.

- Lim, S. Y., Chae, D. K., & Lee, S. C. (2022). Detecting deepfake voice using explainable deep learning techniques. Applied Sciences, 12(8), 3926.

- Lim, W. M., & Kumar, S. (2023). Guidelines for interpreting the results of bibliometric analysis: A sensemaking approach. Global Business and Organizational Excellence, 43(1), 17–26.

- Liu, K., Perov, I., Gao, D., Chervoniy, N., Zhou, W., & Zhang, W. (2023). DeepFaceLab: Integrated, flexible and extensible face-swapping framework. Pattern Recognition, 141, 109628.

- Lu, Y., & Ebrahimi, T. (2024). Assessment framework for deepfake detection in real-world situations. EURASIP Journal on Image and Video Processing, 2024(1), 16.

- Maddi, A., Maisonobe, M., & Boukacem-Zeghmouri, C. (2025). Geographical and disciplinary coverage of open access journals: OpenAlex, Scopus, and WoS. PLoS ONE, 20(4), e0320347.

- Maddocks, S. (2020). ‘A deepfake porn plot intended to silence me’: Exploring continuities between pornographic and ‘political’ deep fakes. Porn Studies, 7(4), 415–423.

- Malik, A., Kuribayashi, M., Abdullahi, S. M., & Khan, A. N. (2022). Deepfake detection for human face images and videos: A survey. IEEE Access, 10, 18757–18775.

- Mao, D., Zhao, S., & Hao, Z. (2022). A shared updatable method of content regulation for deepfake videos based on blockchain. Applied Intelligence, 52(14), 15557–15574.

- Martin-Rodriguez, F., Garcia-Mojon, R., & Fernandez-Barciela, M. (2023). Detection of AI-created images using pixel-wise feature extraction and convolutional neural networks. Sensors, 23(22), 9037.

- Masood, M., Nawaz, M., Malik, K. M., Javed, A., Irtaza, A., & Malik, H. (2023). Deepfakes generation and detection: State-of-the-art, open challenges, countermeasures, and way forward. Applied Intelligence, 53(4), 3974–4026.

- Mira, F. (2023, May 19–21). Deep learning technique for recognition of deep fake videos. 2023 IEEE IAS Global Conference on Emerging Technologies (GlobConET) (pp. 1–4), London, UK.

- Mirsky, Y., & Lee, W. (2021). The creation and detection of deepfakes: A survey. ACM Computing Surveys (CSUR), 54(1), 1–41.

- Mongeon, P., & Paul-Hus, A. (2016). The journal coverage of Web of Science and Scopus: A comparative analysis. Scientometrics, 106(1), 213–228.

- Mubarak, R., Alsboui, T., Alshaikh, O., Inuwa-Dutse, I., Khan, S., & Parkinson, S. (2023). A survey on the detection and impacts of deepfakes in visual, audio, and textual formats. IEEE Access, 11, 144497–144529.

- Nenadić, O., & Greenacre, M. (2007). Correspondence analysis in R, with two- and three-dimensional graphics: The ca package. Journal of Statistical Software, 20(3), 1–13.

- Nguyen, T. T., Nguyen, Q. V. H., Nguyen, D. T., Nguyen, D. T., Huynh-The, T., Nahavandi, S., & Nguyen, C. M. (2022). Deep learning for deepfakes creation and detection: A survey. Computer Vision and Image Understanding, 223, 103525.

- Nikkel, B., & Geradts, Z. (2022). Likelihood ratios, health apps, artificial intelligence and deepfakes. Forensic Science International: Digital Investigation, 41, 301394.

- Ouhnni, H., Acim, B., Belhiah, M., El Bouchti, K., Seghroucheni, Y. Z., Lagmiri, S. N., Benachir, R., & Ziti, S. (2025). The evolution of virtual identity: A systematic review of avatar customization technologies and their behavioral effects in VR environments. Frontiers in Virtual Reality, 6, 1496128.

- Öztürk, O., Kocaman, R., & Kanbach, D. K. (2024). How to design bibliometric research: An overview and a framework proposal. Review of Managerial Science, 18, 3333–3361.

- Park, J., Park, L. H., Ahn, H. E., & Kwon, T. (2024). Coexistence of deepfake defenses: Addressing the poisoning challenge. IEEE Access, 12, 11674–11687.

- Patel, Y., Goel, A., Mehra, S., Singh, R., Kumar, V., & Gupta, A. (2023a). An improved dense CNN architecture for deepfake image detection. IEEE Access, 11, 22081–22095.

- Patel, Y., Tanwar, S., Gupta, R., Bhattacharya, P., Davidson, I. E., & Nyameko, R. (2023b). Deepfake generation and detection: Case study and challenges. IEEE Access, 11, 143296–143323.

- Pranckutė, R. (2021). Web of Science (WoS) and Scopus: The titans of bibliographic information in today’s academic world. Publications, 9(1), 12.

- Qu, Z., Yin, Q., Sheng, Z., Wu, J., Zhang, B., Yu, S., & Lu, W. (2024). Overview of deepfake proactive defense techniques. Journal of Image and Graphics, 29(2), 318–342.

- Radha, L., & Arumugam, J. (2021). The research output of bibliometrics using Bibliometrix R package and VOSviewer. Shanlax International Journal of Arts, Science and Humanities, 9(2), 44–49.

- Ramluckan, T. (2024, March 26–27). Deepfakes: The legal implications. 19th International Conference on Cyber Warfare and Security (ICCWS) (pp. 120–130), Johannesburg, South Africa.

- Raza, A., Munir, K., & Almutairi, M. (2022). A novel deep learning approach for deepfake image detection. Applied Sciences, 12, 9820.

- Roe, J., Perkins, M., & Furze, L. (2024). Deepfakes and higher education: A research agenda and scoping review of synthetic media. Journal of University Teaching and Learning Practice, 21(3), 15.

- Saadouni, C., Jaouhari, S. E., Tamani, N., Ziti, S., Mroueh, L., & El Bouchti, K. (2025). Identification techniques in the Internet of Things: Survey, taxonomy and research frontier. IEEE Communications Surveys & Tutorials.

- Shorten, C., Khoshgoftaar, T. M., & Furht, B. (2021). Deep learning applications for COVID-19. Journal of Big Data, 8(1), 18.

- Siegel, D., Kraetzer, C., Seidlitz, S., & Dittmann, J. (2021). Media forensics considerations on deepfake detection with handcrafted features. Journal of Imaging, 7(7), 108.

- Singh, V. K., Singh, P., Karmakar, M., Leta, J., & Mayr, P. (2021). The journal coverage of Web of Science, Scopus and Dimensions: A comparative analysis. Scientometrics, 126, 5113–5142.

- Sun, X., Ge, S., Wang, X., Lu, H., & Herrera-Viedma, E. (2022). A bibliometric analysis of IEEE T-ITS literature between 2010 and 2019. IEEE Transactions on Intelligent Transportation Systems, 23(4), 17157–17166.

- Sun, Z., Ruan, N., & Li, J. (2025). DDL: Effective and comprehensible interpretation framework for diverse deepfake detectors. IEEE Transactions on Information Forensics and Security, 20, 3601–3615.

- Suratkar, S., Kazi, F., Sakhalkar, M., Abhyankar, N., & Kshirsagar, M. (2020, December 10–13). Exposing deepfakes using convolutional neural networks and transfer learning approaches. 2020 IEEE 17th India Council International Conference (INDICON) (pp. 1–8), New Delhi, India.

- Thelwall, M. (2018). Dimensions: A competitor to Scopus and the Web of Science? Journal of Informetrics, 12(2), 430–435.

- Tolosana, R., Vera-Rodriguez, R., Fierrez, J., Morales, A., & Ortega-Garcia, J. (2020). Deepfakes and beyond: A survey of face manipulation and fake detection. Information Fusion, 64, 131–148.

- Twomey, J., Ching, D., Aylett, M. P., Quayle, M., Linehan, C., & Murphy, G. (2025). What is so deep about deepfakes? A multidisciplinary thematic analysis of academic narratives about deepfake technology. IEEE Transactions on Technology and Society, 6, 64–79.

- Ur Rehman Ahmed, N., Badshah, A., Adeel, H., Tajammul, A., Daud, A., & Alsahfi, T. (2025). Visual deepfake detection: Review of techniques, tools, limitations, and future prospects. IEEE Access, 13, 1923–1961.

- Van Eck, N. J., & Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics, 84, 523–538.

- Wang, Y., Zhang, F., Wang, J., Liu, L., & Wang, B. (2021). A bibliometric analysis of edge computing for internet of things. Security and Communication Networks, 2021, 5563868.

- Waseem, S., Abu Bakar, S. A. R. S., Ahmed, B. A., Omar, Z., Eisa, T. A. E., & Dalam, M. E. E. (2023). Deepfake on face and expression swap: A review. IEEE Access, 11, 117865–117906.

- Whittaker, L., Mulcahy, R., Letheren, K., Kietzmann, J., & Russell-Bennett, R. (2023). Mapping the deepfake landscape for innovation: A multidisciplinary systematic review and future research agenda. Technovation, 125, 102780.

- Xu, Z., Liu, J., Lu, W., Xu, B., Zhao, X., Li, B., & Huang, J. (2021). Detecting facial manipulated videos based on set convolutional neural networks. Journal of Visual Communication and Image Representation, 77, 103119.

- Yasrab, R., Jiang, W., & Riaz, A. (2021). Fighting deepfakes using body language analysis. Forecasting, 3(2), 303–321.

| Most Relevant Sources | N. of Documents | Most Local Cited Sources | N. of Local Citations |
|---|---|---|---|
| IEEE Access | 30 | Proceedings CVPR IEEE | 1353 |
| Multimedia Tools and Applications | 7 | arXiv | 1250 |
| Sensors | 7 | IEEE Transactions on Information Forensics and Security | 350 |
| IEEE Transactions on Information Forensics and Security | 6 | Lecture Notes in Computer Science | 148 |
| ACM Transactions on Multimedia Computing, Communications, and Applications | 6 | IEEE Conference on Computer Vision | 101 |
| Expert Systems with Applications | 5 | IEEE Access | 80 |
| Journal of Imaging | 5 | Advances in Neural Information Processing Systems | 72 |
| PeerJ Computer Science | 5 | IEEE International Workshop on Information Forensics and Security | 72 |
| Applied Sciences-Basel | 4 | IEEE Computer Society Conference | 51 |
| Electronics | 4 | International Conference on Acoustics, Speech, and Signal Processing | 50 |

| Country | Articles | Single-Country Publication (SCP) | Multiple-Country Publication (MCP) | Frequency (Share of All Articles) | Multiple-Country Publication Ratio |
|---|---|---|---|---|---|
| CHINA | 83 | 64 | 19 | 0.202 | 0.229 |
| INDIA | 62 | 52 | 10 | 0.151 | 0.161 |
| USA | 59 | 48 | 11 | 0.144 | 0.186 |
| ITALY | 25 | 21 | 4 | 0.061 | 0.160 |
| PAKISTAN | 20 | 8 | 12 | 0.049 | 0.600 |
| KOREA | 16 | 12 | 4 | 0.039 | 0.250 |
| UNITED KINGDOM | 14 | 7 | 7 | 0.034 | 0.500 |
| SAUDI ARABIA | 11 | 7 | 4 | 0.027 | 0.364 |
| GERMANY | 9 | 5 | 4 | 0.022 | 0.444 |
| SPAIN | 9 | 6 | 3 | 0.022 | 0.333 |
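In every row of the country table, SCP plus MCP equals the article count, and the ratio column equals MCP divided by articles. The short sketch below recomputes the ratio from the table's own values. The Frequency column is taken to be each country's share of all articles. That total is not restated in this section, so the sketch leaves it as a parameter rather than guessing it.

```python
# Rows copied from the table above: (articles, SCP, MCP).
COUNTRY_ROWS: dict[str, tuple[int, int, int]] = {
    "CHINA": (83, 64, 19), "INDIA": (62, 52, 10), "USA": (59, 48, 11),
    "ITALY": (25, 21, 4), "PAKISTAN": (20, 8, 12), "KOREA": (16, 12, 4),
    "UNITED KINGDOM": (14, 7, 7), "SAUDI ARABIA": (11, 7, 4),
    "GERMANY": (9, 5, 4), "SPAIN": (9, 6, 3),
}


def mcp_ratio(articles: int, scp: int, mcp: int) -> float:
    """Share of a country's articles that have co-authors from other countries."""
    if scp + mcp != articles:
        raise ValueError("SCP + MCP must equal the article count")
    return mcp / articles


def frequency(articles: int, total_articles: int) -> float:
    """A country's share of all articles (total_articles must come from the dataset)."""
    return articles / total_articles


for country, (art, scp, mcp) in COUNTRY_ROWS.items():
    print(f"{country:15s} {mcp_ratio(art, scp, mcp):.3f}")
```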

| Author | Year | Title | Journal | Global Citations | Global Citations per Year |
|---|---|---|---|---|---|
| Caldelli R. | 2019 | Deepfake video detection through optical flow based CNN | 2019 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) | 188 | 27.0 |
| Javed A. | 2023 | Deepfakes generation and detection: state-of-the-art, open challenges, countermeasures, and way forward | Applied Intelligence | 129 | 43.0 |
| Li X. | 2018 | Micro-seismic event detection and location in underground mines by using convolutional neural networks (CNN) and deep learning | Tunnelling and Underground Space Technology | 120 | 15.0 |
| Li M. | 2016 | An original face anti-spoofing approach using partial convolutional neural network | 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA) | 65 | 6.5 |
| Caldelli R. | 2021 | Optical flow based CNN for detection of unlearnt deepfake manipulations | Pattern Recognition Letters | 57 | 11.4 |
| Guarnera L. | 2020 | Fighting deepfake by exposing the convolutional traces on images | IEEE Access | 39 | 6.5 |
| Giudice O. | 2020 | Fighting deepfake by exposing the convolutional traces on images | IEEE Access | 39 | 6.5 |
| Battiato S. | 2020 | Fighting deepfake by exposing the convolutional traces on images | IEEE Access | 39 | 6.5 |
| Zhao Y. | 2021 | Practical attacks on deep neural networks by memory trojaning | IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems | 19 | 3.8 |
| Guarnera L. | 2022 | The face deepfake detection challenge | Journal of Imaging | 17 | 4.25 |
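The last column of the author/document table is consistent with global citations divided by the number of years from publication through 2025 inclusive. For example, 129 / 3 = 43.0 for the 2023 paper and 65 / 10 = 6.5 for the 2016 paper. The short sketch below reproduces that calculation. The 2025 reference year is an inference from the table rather than a parameter stated in the text.

```python
def citations_per_year(global_citations: int, pub_year: int, reference_year: int = 2025) -> float:
    """Citations divided by the number of years the document has been citable (inclusive)."""
    years = reference_year - pub_year + 1
    if years <= 0:
        raise ValueError("reference year precedes publication year")
    return global_citations / years


# (global citations, publication year) pairs taken from the table above.
for tc, year in [(129, 2023), (120, 2018), (65, 2016), (57, 2021), (17, 2022)]:
    print(year, round(citations_per_year(tc, year), 2))
```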

| Top Authors | Top Countries | Top Keywords | Most Globally Cited Documents | Global Citations |
|---|---|---|---|---|
| Li JX | China | Deep Learning | Mirsky Y, 2021, ACM COMPUT SURV (Mirsky & Lee, 2021) | 326 |
| Zhao Y | USA | Deepfake Detection | Hussain M, 2019, ADV COMPUT INTELL SYST (Hussain et al., 2019) | 310 |
| Liu M | India | Deepfake | Cozzolino D, 2017, ACM Workshop on Info. Hiding & Multimedia Security (Cozzolino et al., 2017) | 200 |
| Liu X | Pakistan | Computer Vision | Shorten C, 2021, J BIG DATA (Shorten et al., 2021) | 194 |
| Zhang Y | Italy | Feature Extraction | Amerini I, 2019, IEEE/CVF ICCVW (Amerini et al., 2019) | 158 |
| Caldelli R | Saudi Arabia | Artificial Intelligence | Masood M, 2023, APPL INTELL (Masood et al., 2023) | 92 |
| Battiato S | Korea | Deepfakes | Nguyen TT, 2022, COMPUT VIS IMAGE UNDERST (Nguyen et al., 2022) | 82 |
| Giudice O | Australia | Machine Learning | Huang L, 2018, TUNN UNDERGR SPACE TECHNOL (Huang et al., 2018) | 62 |
| Guarnera L | Spain | Learning | Xu Z, 2021, J VIS COMMUN IMAGE REPRESENT (Xu et al., 2021) | 51 |
| Javed A | United Kingdom | Detection | Agarwal S, 2020, IEEE/CVF CVPRW (Agarwal et al., 2020) | 48 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Acim, B.; Boukhlif, M.; Ouhnni, H.; Kharmoum, N.; Ziti, S. A Decade of Deepfake Research in the Generative AI Era, 2014–2024: A Bibliometric Analysis. Publications 2025, 13, 50. https://doi.org/10.3390/publications13040050
