Abstract

This study analyzes the research quality assurance processes in Chilean universities. Data from 29 universities accredited by the National Accreditation Commission were collected. The relationship between institutional accreditation and research performance was analyzed using length in years of institutional accreditation and eight research metrics used as the indicators of quantity, quality, and impact of a university’s outputs at an international level. The results showed that quality assurance in research of Chilean universities is mainly associated with quantity and not with the quality and impact of academic publications. There was also no relationship between the number of publications and their quality, even finding cases with negative correlations. In addition to the above, the relationship between international metrics to evaluate research performance (i.e., international collaboration, field-weighted citation impact, and output in the top 10% citation percentiles) showed the existence of three clusters of heterogeneous composition regarding the distribution of universities with different years of institutional accreditation. These findings call for a new focus on improving regulatory processes to evaluate research performance and adequately promote institutions’ development and the effectiveness of their mission.

1. Introduction

Research performance is evaluated mainly by the quantity and quality of publications [1]. Quantity is measured by research productivity limited to a certain type of publications and a certain period of time, which may vary according to the objective of the evaluation, while quality is measured through a set of metrics such as publications in international collaboration, publications in Q1 (top 25%) journal quartile, publications in top 10% journal percentile, publications in top 10% citation percentile, field-weighted citation impact, h-index, and g-index, among others [2,3].

In Chile, scientific and technological research was institutionalized and developed in the second half of the last century [4,5] and has been carried out mainly by universities with public funding [6]. In turn, national research productivity, measured by publications in the core collection of Web of Science per number of researchers or per economically active population, is the highest in Latin America, above Brazil, Mexico, and Argentina [7]. This occurs despite strong differences between disciplines, geographical locations within the country, and genders, and despite a high institutional concentration, with two universities generating half of the country’s publications [8]. Only in recent years have mainly teaching universities become a minority, given that many have gradually overcome the economic restrictions and the adherence to historical models that limited the development of research [9,10]. In addition, the positive impacts of financial resources and advanced human capital on scientific productivity have been increasing, but with differences in efficiency between universities [11]. Financial resources are quite limited, reaching barely 0.35% of the Gross Domestic Product, the lowest among all the countries of the Organisation for Economic Co-operation and Development (OECD) [12], and the working conditions of many researchers have significant deficiencies [13]. The number of highly qualified researchers in Chile has increased exponentially thanks to government scholarships for study in Chile and abroad; however, the national university system lacks the capacity to absorb these graduates so that they can fully deploy their research skills [14].
On the other hand, despite the fact that the use of demanding scientometric indicators was considered very early on as a reference to support researchers and universities [15] and that productivity was mainly oriented toward mainstream journals [16,17], financial support for research has had a proven impact on the number of articles, but not on their quality measured by metrics that include the number of citations [18]. In the last twenty years, the number of citations in Web of Science journals for Chile has increased more than five times, in a similar proportion to the total number of publications [19].

In the case of higher education institutions, the evaluation of research performance is considered just one step in the quality assurance process of universities and programs, along with other academic and management activities. In the case of Chile, institutional accreditation is the main quality assurance mechanism of its universities [20,21]. Historically, research has been considered a voluntary accreditation area, even though it is one of the main attributes that differentiate universities from other higher education institutions [22]. Consequently, only about half of the Chilean universities are accredited in research. However, accreditation in research and graduate teaching explains most of the variance in institutional accreditation length in years [23].

Given the context described above, this study attempts to analyze quantitatively and qualitatively the effect of research quality assurance processes in Chilean universities on research performance, establishing the relationship between research accreditation and productivity.

The accreditation regulatory processes of Chilean universities started in response to the massive increase in institutions, programs, and students that occurred after the 1981 Reform, and the need to contribute to the public trust related to the quality and pertinence of their activities. Initially, it was an experimental and voluntary initiative, not established by law, which included the participation of public and private universities, that exceeded expectations [20,21,24,25,26]. Institutional accreditation was implemented separately from the accreditation of undergraduate and graduate teaching with a mixed evaluation model that considered self-assessment and hetero-evaluation by external peers that are common in other countries [27,28,29]. The concept of quality was that of double internal and external consistency, i.e., the degree of adjustment between institutional purposes and actions with their mission statements, and with academic and disciplinary requirements. In this accreditation scenario, research was considered a voluntary academic area at the start of the quality assurance processes alongside other voluntary areas, such as graduate teaching and community engagement, as well as the mandatory areas of undergraduate teaching and institutional management [26].

Starting in 2006, this experimental higher education quality assurance system was institutionalized with no substantive changes in conceptual aspects or initial procedures, maintaining the institutional accreditation and research as voluntary areas [30]. A new law on higher education, promulgated in 2018, introduced relevant changes in the process, with the mandatory accreditation of all universities and the introduction of standards for evaluating quality criteria. However, research remained a voluntary area, the only one among the five dimensions subject to evaluation, including the creative arts and innovation [31].

The accreditation processes of Chilean universities have shown positive effects on institutional management. The provision and use of information for decision-making, the improvement of indicators of pedagogical effectiveness and compliance with graduation profiles in teaching, the offering of graduate programs, and the coverage and involvement in community engagement activities are examples of these effects [32,33,34,35,36], in agreement with some international trends [37]. However, they have also raised criticisms regarding their procedures, limited social impact, conflicts of interest, and corruption [38,39,40,41].

The regulation applied to evaluating research in the accreditation of Chilean universities has mainly considered variables such as the existence of institutional policies and the effectiveness of their implementation, the productivity of publications in scientific journals and their indexing, research projects, and the availability of human, physical, and information resources [42]. Currently, the evaluation of research in institutional accreditation considers two criteria: policies and management, and results. The highest accreditation standard demands the systematic application of policies and management based on results that respond to the state of the art in disciplinary, productive, and social contexts. In relation to results, the focus is on conducting research in all areas of institutional work, the international quality of research outputs, and maintaining doctoral programs of high quality and international networks [43]. In the case of scientific and technological research, although there is no causal evidence available, institutional accreditation could partially explain the exponential increase in publications at the national level and Chile’s leadership in Latin America when scientific production is standardized by the number of inhabitants [7]. However, there are no data on the improvement of research quality. Examining the association between the accreditation timelines and productivity and quality indicators can help define the effect of the relationship between quality assurance and the improvement of research quality in Chilean universities. This relationship is not trivial since it is not always positive [44].

On the other hand, it is important to mention that in the accreditation of undergraduate programs, the creation and formative research of professors is considered as one of the twelve criteria used in the evaluation, but no reference is made to publications in journals. However, in the accreditation of graduate programs, the requirements for the formation of the professoriate (i.e., faculty member affiliated to a graduate program) vary according to the disciplinary area. These requirements consider research performance (e.g., the minimum number of publications in the last 5 years and the type of indexing databases) and, in some cases, journal impact levels (e.g., quartile as in the areas of biological sciences and economic and administrative sciences, or the impact factor in the field of chemistry) [43]. In international agencies, research has minimal incidence in undergraduate training programs, being limited to the learning of scientific protocols and the dedication of professors to research practices, but without considering the differences in impact or the diffusion of scientific journals.

As mentioned before, scientific and technological research in Chile was institutionalized in the second half of the last century [5] and carried out mainly in universities. Historically, it has been highly concentrated in a few institutions, even though it is part of the mission of most of them and differentiates them from other higher education institutions, such as professional institutes and technical training centers [6,45]. Therefore, there are also strong differences in the scientific capacities installed in the country, as well as among disciplines and in gender authorship trends [8]. On the other hand, institutional performance is associated with ex-ante classifications and the different trajectories of Chilean universities. In the Chilean higher education system, public and private universities coexist. Prior to the 1981 Reform, there were eight “traditional” universities, two of which were state-owned, and the private ones included confessional and regional universities [46,47]. Currently, there are 64 universities in the system, comprising public universities, traditional institutions (and the universities derived from them), and new private institutions. There is evidence of differences in accreditation periods and in the number of accredited voluntary areas according to the type of university [48]. Universities that make greater contributions to national research are both public and private [49]. As groups, however, public universities and traditional private universities, together with the institutions derived from them, perform better in accrediting voluntary areas, including research, than the new private universities [50].
Changes in the interpretation of the regulations that modified the procedure of accrediting each area separately have influenced the increase in universities accredited in research; here, the period of joint accreditation for all areas, whether mandatory or voluntary, was considered. The accreditation results have generated controversy in a significant number of universities when their research performance is considered [51]. This highlights the importance of establishing whether accreditation, as a mechanism for ensuring quality, has an impact on the quality of national research in the entire university system, regardless of the legal status and trajectory.

It should also be considered that the demands for academic complexity, the access to public funding, and the contribution to corporate image have limited the development of universities with less academic trajectory. In addition to factors that have stimulated internal changes in the universities—expressed in greater commitments to research—there are other factors such as adherence to the Napoleonic model, the high costs of advanced human capital and equipment, and the growing competition for competitive funds, which limit the development of universities with less academic trajectory [10,17,52]. Although several universities allocate economic incentives to researchers, they are heterogeneous and generally not oriented to the quality of publications [17].

In this study, we evaluated the effect of research quality assurance processes in Chilean universities on research performance, establishing the relationship between research accreditation and productivity in quantitative and qualitative terms. Therefore, and supported by the empirical data, we hope to contribute to the development of new strategies for making changes in accreditation criteria and policies and establish a minimum for internationally recognized and high-level quality metrics to evaluate research performance. In addition, we hope to deepen the understanding of the concept of quality oriented to academic improvement with international standards and oriented to excellence, the use of three levels of quality standards, and the commitment of research quality assurance with other areas. All these are relevant issues that must be correctly understood to adequately promote institutions’ development and their mission’s effectiveness.

2. Methods

2.1. Data Collection

The data used in this study comprise a total of 29 Chilean universities accredited by the National Accreditation Commission (Comisión Nacional de Acreditación, CNA, Chile) in the voluntary area of research (Table 1), for which nine feature variables were chosen: years of accreditation, scholarly output, international collaboration, field-weighted citation impact, publications in Q1 journal quartile by CiteScore, publications in Q1 to Q2 journal quartiles by CiteScore, output in top 10%-citation percentiles, citations per publication, and views per publication. For each institution, the length in years of accreditation was obtained from official data at the CNA (https://www.cnachile.cl/Paginas/Inicio.aspx, accessed on 1 October 2021). In the time period considered in this study, the institutional accreditation length of these universities ranged from 4 to 7 years, considering the following five areas: undergraduate teaching, institutional management, graduate teaching, research, and community engagement. The last three areas are voluntary.

Table 1.

Chilean universities considered in the study (n = 29), alphabetically grouped into four sets according to the increasing years of institutional accreditation.

To provide a perspective on the quality and impact of the research output of the 29 Chilean universities considered in this study, the SciVal web-based data analytics platform (Elsevier, https://www.elsevier.com/solutions/scival) was used to analyze research performance (date exported: 13 December 2021). This analysis considered all types of publications and the Scopus All Science Journal Classification (ASJC) [53]. The time span considered for the scholarly output and metrics was 2016 to 2020. The eight metrics selected for the research performance analyses are as follows [54]:

- Scholarly output (ScholOutput): The number of publications (output) of a selected entity. It indicates its prolificacy.

- International collaboration, % (IntCollab%): The extent to which an entity’s publications have international co-authorship.

- Field-weighted citation impact (FWCI): The ratio of citations received relative to the expected world average for the subject field, publication type, and publication year (also known as normalized citation impact). An FWCI of 1.00 indicates that the entity’s publications have been cited exactly as would be expected based on the global average for similar publications; the FWCI of the entity “World”, or the entire Scopus database, is 1.00. An FWCI > 1.00 indicates that the entity’s publications have been cited more than would be expected based on the global average for similar publications.

- Publications in Q1 journal quartile by CiteScore, % (PubQ1%): The number of high-quality publications of a selected entity that have been published in the Q1 journal quartile by CiteScore (%). It indicates the extent to which an entity’s publications are in the top 25%-journal percentile.

- Publications in Q1 to Q2 journal quartile by CiteScore, % (PubQ1Q2%): The number of quality publications of a selected entity that have been published in Q1 + Q2 journal quartiles by CiteScore (%). It indicates the extent to which an entity’s publications are in the top 50%-journal percentile.

- Output in top 10%-citation percentiles, field-weighted % (Top10%CitPerc%): The number of publications of a selected entity that are highly cited, having reached the top 10% of citations received (excellence). It indicates the extent to which an entity’s publications are present in the top 10%-citation percentile. For this metric, field weighting is used.

- Citations per publication (CitPub): The average number of citations received per publication. It indicates a measure of the impact of an entity’s publications.

- Views per publication (ViewsPub): The average number of views received per publication. It indicates a measure of usage of an entity’s publications.

These metrics were chosen as the indicators of quantity, quality, and impact of a university’s outputs.
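As an illustration of how two of the metrics above are formed, the following minimal sketch computes an FWCI and an international collaboration share for a hypothetical entity. The publication records and the function names are invented for illustration, and the sketch assumes that the entity-level FWCI is the mean of the per-publication ratios of actual to expected citations; it is not the SciVal implementation.

```python
from statistics import mean

# Hypothetical publication records for one entity (not data from the study).
# "expected" stands for the world-average citation count of publications with
# the same subject field, publication type, and publication year.
publications = [
    {"citations": 12, "expected": 8.0, "international": True},
    {"citations": 3, "expected": 6.0, "international": False},
    {"citations": 10, "expected": 10.0, "international": True},
    {"citations": 0, "expected": 4.0, "international": False},
]


def field_weighted_citation_impact(pubs):
    """Mean of the per-publication ratios of actual to expected citations."""
    return mean(p["citations"] / p["expected"] for p in pubs)


def international_collaboration_share(pubs):
    """Percentage of publications with international co-authorship."""
    return 100.0 * sum(p["international"] for p in pubs) / len(pubs)


fwci = field_weighted_citation_impact(publications)
intcollab = international_collaboration_share(publications)
print(f"FWCI = {fwci:.2f}, IntCollab% = {intcollab:.1f}")
# prints "FWCI = 0.75, IntCollab% = 50.0"
```

In this toy case the entity is cited at 75% of the level expected for similar publications, i.e., below the world reference value of 1.00.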

2.2. Descriptive Analysis and Data Pre-Processing

Descriptive analyses, comprising the mean, median, and standard deviation, were performed on the raw data. Shapiro–Wilk (S-W) and Kolmogorov–Smirnov (KS) tests were used to evaluate whether the data came from a normal distribution at the 95% confidence level. The data were pre-processed because the studied variables have different scales, which can influence clustering: most distance measures used by clustering algorithms are highly biased toward large-scale attributes. The purpose of normalization is to give all variables the same scale, which is particularly useful for distance-based measures [55]. Standardization was carried out by subtracting the mean of each feature variable and then dividing the centered values by the standard deviation of that variable.
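The descriptive step and the standardization described above can be sketched as follows. This is a minimal illustration on hypothetical values, not the Statgraphics procedure used in the study; in particular, the use of the sample standard deviation (n - 1 denominator) is an assumption, since the text does not state which denominator was applied.

```python
from statistics import mean, median, stdev


def describe(values):
    """Mean, median, and sample standard deviation of one feature variable."""
    return {"mean": mean(values), "median": median(values), "sd": stdev(values)}


def standardize(values):
    """Z-scores: subtract the mean, then divide by the standard deviation.

    Assumption: the sample standard deviation (n - 1 denominator) is used.
    """
    m, s = mean(values), stdev(values)
    if s == 0:
        raise ValueError("cannot standardize a constant variable")
    return [(v - m) / s for v in values]


# Hypothetical values for five universities (not the study's data).
scholarly_output = [120.0, 450.0, 980.0, 3100.0, 15400.0]
fwci_values = [0.8, 0.9, 1.0, 1.1, 1.2]

z_output = standardize(scholarly_output)
z_fwci = standardize(fwci_values)

print(describe(scholarly_output))
print([round(z, 2) for z in z_output])
print([round(z, 2) for z in z_fwci])
```

After standardization, both variables have a mean of zero and a standard deviation of one, so a variable measured in thousands of publications no longer dominates a distance computed together with a ratio close to 1.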

2.3. Correlation of Feature Variables

The correlation between the feature variables was performed using the Pearson Product Moment Correlation analysis. Even though Pearson’s product-moment correlation coefficient is very sensitive to the presence of outliers [56], it will not cause a problem in this analysis because of the absence of outliers. This analysis allows calculating the strength of the relationship between two quantitative variables X and Y, each containing n values:

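$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

where $x_i$ and $y_i$ represent the $i$th value of X and Y, whereas $\bar{x}$ and $\bar{y}$ are the means of X and Y, respectively.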
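The coefficient can be computed directly from this definition. The sketch below is a plain-Python illustration; the function name and the example values are hypothetical and are not the study's data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of the centered values.
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return s_xy / sqrt(s_xx * s_yy)


# Hypothetical example: accreditation years and scholarly output.
years = [4, 4, 5, 5, 6, 6, 7, 7]
output = [150, 300, 420, 380, 900, 1500, 5200, 9800]
r = pearson_r(years, output)
print(round(r, 2))
```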

2.4. Cluster Analysis

It is known that for the same data set, different partition hierarchies can be constructed using alternative methods [57], such as different linkage criteria, i.e., single (nearest neighbor), complete (furthest neighbor), and average linkage (mean distance over all pairs of members), and Ward’s minimum variance method. Here, Ward’s method [58] was selected since it produced the most meaningful results. Additionally, the selected interval measure was the squared Euclidean distance, which can be obtained according to the following equation:

$$d(\mathbf{x}, \mathbf{y}) = \sum_{j=1}^{p} (x_j - y_j)^2$$

where $\mathbf{x}$ and $\mathbf{y}$ are the vectors of standardized feature values of two universities and $p$ is the number of feature variables.

The data used in the cluster analysis included three feature variables related to metrics that are commonly used internationally to evaluate research performance: international collaboration (%), field-weighted citation (or normalized) impact, and % output in the top 10% citation percentiles (excellence) [59,60,61,62]. Journal impact factor metrics were excluded because of the limitations raised by the San Francisco Declaration on Research Assessment (DORA) [63] and the Leiden Manifesto [64]. The average citations and views per publication were also not selected, as they are not field-normalized, which is necessary in this study of universities with different degrees of subject complexity.
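To make the clustering procedure concrete, the following is a minimal sketch of agglomerative clustering with Ward's criterion on squared Euclidean distances. It is a naive illustrative implementation, not the Statgraphics routine used in the study; the function names and the seven three-dimensional points (standing in for standardized IntCollab%, FWCI, and Top10%CitPerc% values) are hypothetical.

```python
def squared_euclidean(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def centroid(points):
    """Component-wise mean of a list of feature vectors."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def ward_clusters(points, k):
    """Merge clusters until k remain, always choosing the pair whose merge
    gives the smallest increase in within-cluster sum of squares."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            ca = centroid([points[i] for i in clusters[a]])
            for b in range(a + 1, len(clusters)):
                cb = centroid([points[i] for i in clusters[b]])
                na, nb = len(clusters[a]), len(clusters[b])
                # Ward's merge cost for clusters of sizes na and nb.
                cost = na * nb / (na + nb) * squared_euclidean(ca, cb)
                if best is None or cost < best[0]:
                    best = (cost, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return [sorted(c) for c in clusters]


# Hypothetical standardized values for seven institutions.
points = [
    [-1.0, -1.1, -0.9], [-1.2, -0.9, -1.0], [-0.9, -1.0, -1.1],
    [0.0, 0.1, 0.0], [0.1, -0.1, 0.1],
    [1.2, 1.0, 1.1], [1.0, 1.2, 0.9],
]
groups = ward_clusters(points, 3)
print(groups)  # prints [[0, 1, 2], [3, 4], [5, 6]]
```

Recording the merge cost at every step, instead of stopping at k clusters, would give the heights needed to draw a dendrogram.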

All computations were performed using Statgraphics Centurion 19.2.01 (Statgraphics Technologies, Inc., The Plains, VA, USA).

3. Results

3.1. Descriptive Analysis

Table 2 shows the descriptive analysis of the nine feature variables for the 29 Chilean universities. The variables associated with quality and impact showed less variability than scholarly output. International collaboration (%), field-weighted citation impact, publications in Q1 and in Q1 to Q2 journal quartiles by CiteScore (%), publications in top 10% citation percentiles (field-weighted, %), and citations and views per publication showed lower standard deviations. In contrast, scholarly output showed the highest standard deviation among the universities analyzed (Table 2). In addition, this variable was the only one that did not show a normal distribution (p < 0.05 for S-W and KS tests).

Table 2.

Summary of descriptive analysis for the universities’ feature variables.

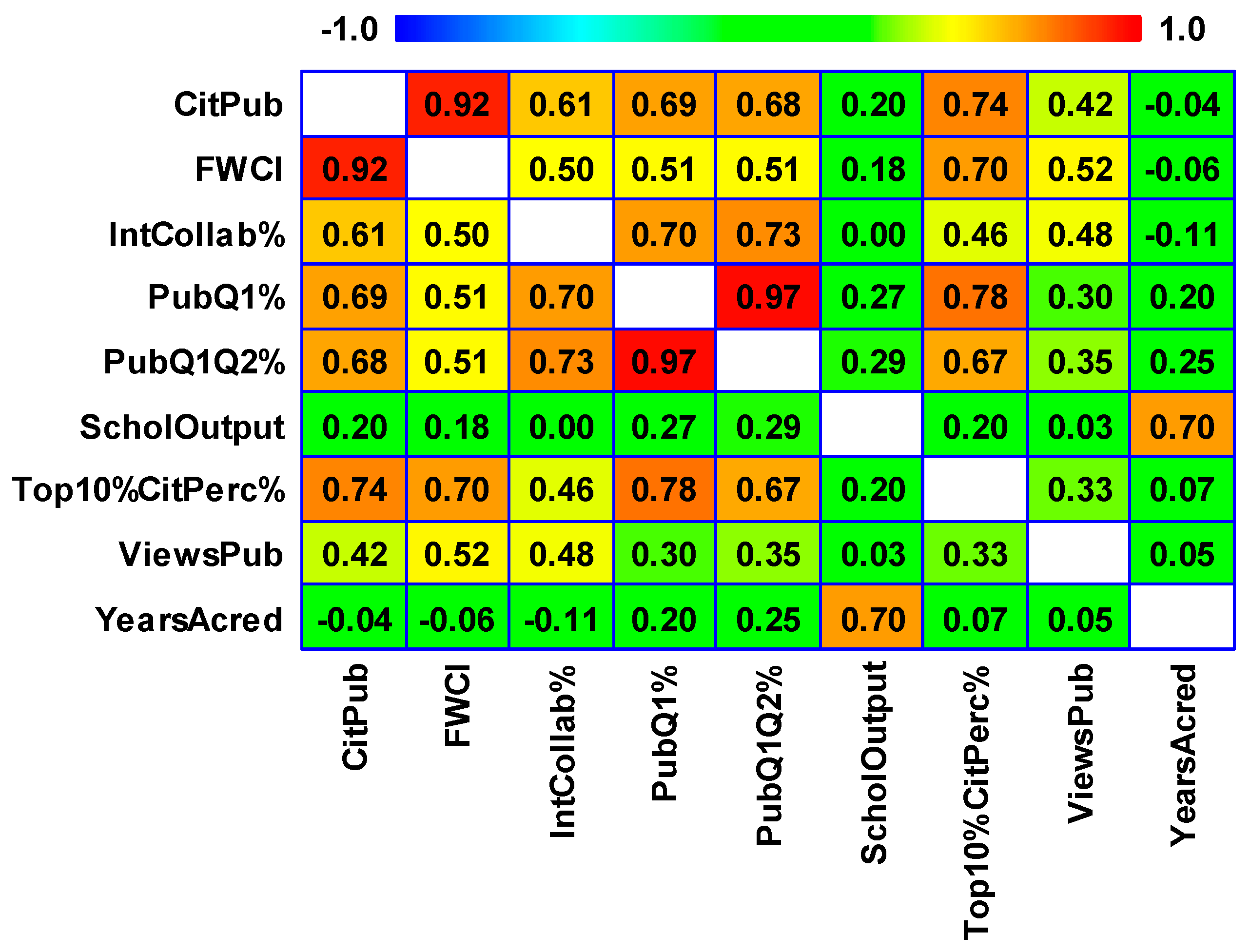

3.2. Correlation of Feature Variables

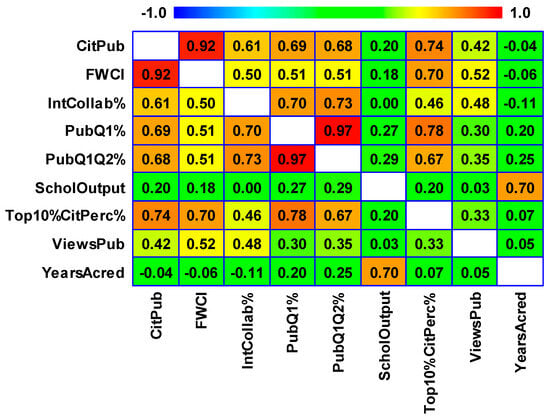

The Pearson product-moment correlations for the nine feature variables considered in this study (Figure 1) showed, in general, low correlations between scholarly output and the other chosen metrics. Notably, the length (in years) of institutional accreditation, a Chilean parameter used to quantify university quality assurance, is correlated only with scholarly output (r = 0.70), without showing any significant correlation with the quality and impact of publications. Figure 1 also shows that the years of institutional accreditation had not only correlations near zero with the features related to quality, but in some cases negative values, as for citations per publication, field-weighted citation impact, and international collaboration.
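As a rough check of what r = 0.70 means with n = 29 universities, the correlation can be converted into a t statistic with n - 2 degrees of freedom. This is only a sketch: the text does not state which significance test was applied, and the critical value used below is the standard tabulated two-sided 5% value for 27 degrees of freedom, introduced here as an assumption.

```python
from math import sqrt


def correlation_t_statistic(r, n):
    """t statistic for testing a zero Pearson correlation (n - 2 df)."""
    if not -1.0 < r < 1.0 or n < 3:
        raise ValueError("need -1 < r < 1 and n >= 3")
    return r * sqrt(n - 2) / sqrt(1.0 - r * r)


# Values reported in the text: r = 0.70 for 29 universities.
t_value = correlation_t_statistic(0.70, 29)

# Assumption: tabulated two-sided 5% critical value of Student's t, 27 df.
T_CRITICAL_DF27 = 2.052

print(round(t_value, 2), t_value > T_CRITICAL_DF27)  # prints "5.09 True"
```

Under these assumptions, the association between accreditation length and scholarly output is well beyond the conventional 5% threshold, whereas correlations near zero would not be.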

Figure 1.

Pearson product-moment correlation for the universities’ nine feature variables.

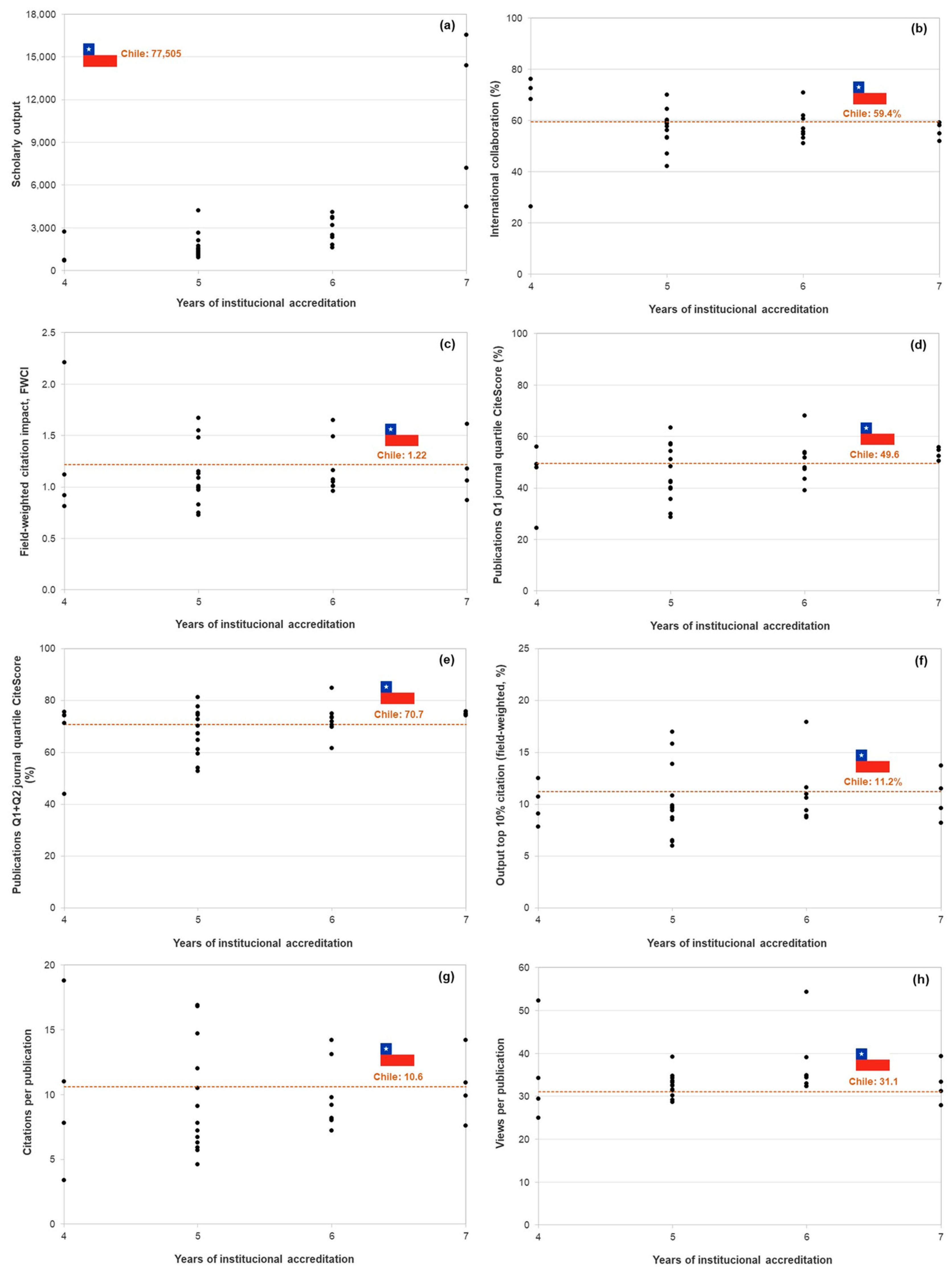

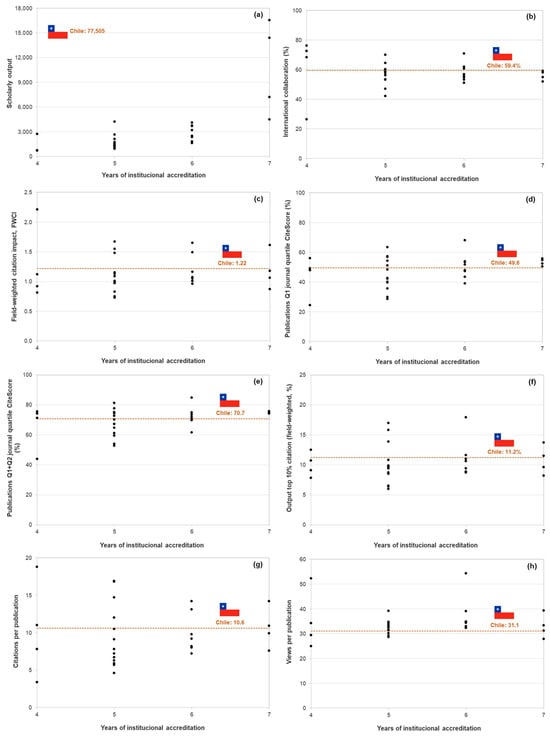

3.3. Comparative Analysis of the Research Metrics and Years of Institutional Accreditation

The 29 Chilean universities under study, with lengths of institutional accreditation ranging from 4 to 7 years, have different research performances across the metrics analyzed. As shown in Figure 2, there is a wide dispersion in research performance when the metric values of each university are compared with national levels. In addition, the only variable that correlates well, and positively, with the length of institutional accreditation is scholarly output, whereas the other seven metrics, which measure research quality and impact, do not show this behavior.

Figure 2.

Correlation between years of institutional accreditation and eight different research metrics for 29 Chilean universities accredited in the voluntary area of research. (a) ScholOutput, (b) IntCollab%, (c) FWCI, (d) PubQ1%, (e) PubQ1Q2%, (f) Top10%CitPerc%, (g) CitPub, and (h) ViewsPub.

The group of universities accredited for seven years shows only the metrics of publications in Q1 (mean: 53.3%) and Q1–Q2 (mean: 74.9%) journal quartiles with values higher than those corresponding to Chile (49.6% and 70.7%, respectively). The other metrics exhibit high variability when compared to national values and to institutions that have received lower accreditation lengths.

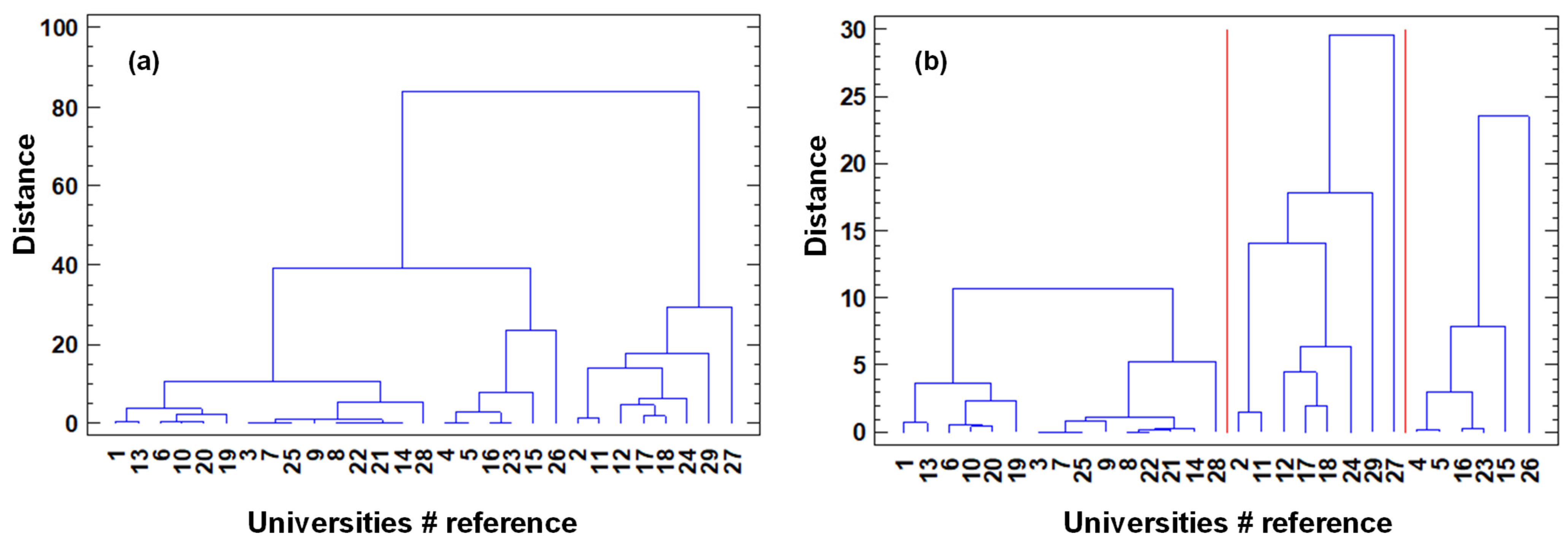

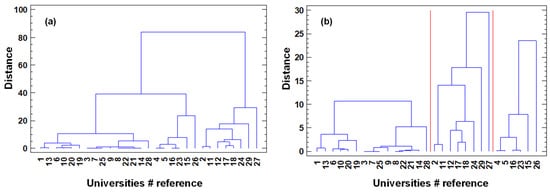

3.4. Cluster Analysis

Dendrograms representing the hierarchical clustering (using squared Euclidean distance and Ward’s criterion) of the selected feature variables, i.e., international collaboration (%), field-weighted citation impact, and publications in the top 10% citation percentile (field-weighted), are shown in Figure 3. The universities were first considered as a single cluster (Figure 3a) and were then grouped into three clusters with different numbers of associated institutions (Figure 3b). Cluster 1 includes 15 universities (1, 13, 6, 10, 20, 19, 3, 7, 25, 9, 8, 22, 21, 14, and 28), cluster 2 eight universities (2, 11, 12, 17, 18, 24, 29, and 27), and cluster 3 six universities (4, 5, 16, 23, 15, and 26). Figure 3 shows that the four universities with the longest institutional accreditation (7 years; institutions referenced as 26, 27, 28, and 29) are distributed among the three different clusters. The same behavior is observed for the universities with the shorter lengths of institutional accreditation (4 and 5 years).
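The distribution of the seven-year universities across clusters can be verified directly from the memberships listed above. The short sketch below uses only the institution numbers reported in the text; the variable names are illustrative.

```python
# Cluster memberships as reported in the text (institution reference numbers).
clusters = {
    1: [1, 13, 6, 10, 20, 19, 3, 7, 25, 9, 8, 22, 21, 14, 28],
    2: [2, 11, 12, 17, 18, 24, 29, 27],
    3: [4, 5, 16, 23, 15, 26],
}
# Institutions accredited for seven years, as reported in the text.
seven_year = [26, 27, 28, 29]

# Reverse lookup: institution number -> cluster label.
cluster_of = {u: c for c, members in clusters.items() for u in members}

# Every one of the 29 institutions should appear exactly once.
assert sum(len(m) for m in clusters.values()) == 29
assert sorted(cluster_of) == list(range(1, 30))

seven_year_by_cluster = {}
for u in seven_year:
    seven_year_by_cluster.setdefault(cluster_of[u], []).append(u)

print(seven_year_by_cluster)  # prints {3: [26], 2: [27, 29], 1: [28]}
```

The four institutions with the longest accreditation fall into all three clusters, which is the heterogeneity the paragraph describes.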

Figure 3.

Dendrogram representing the hierarchical clustering (using squared Euclidean distance and Ward’s criterion) for the three feature variables. (a) one cluster and (b) three defined clusters.

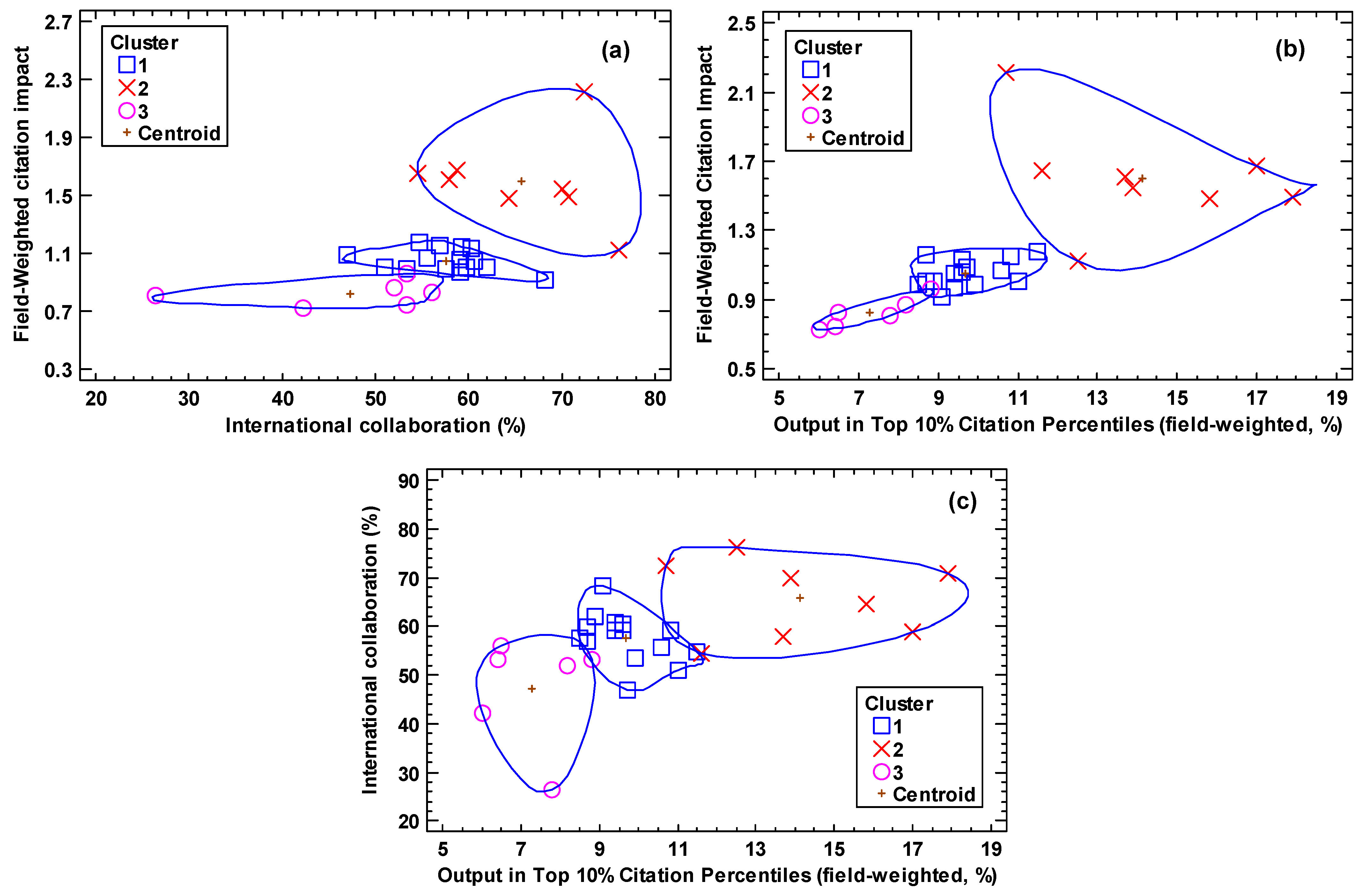

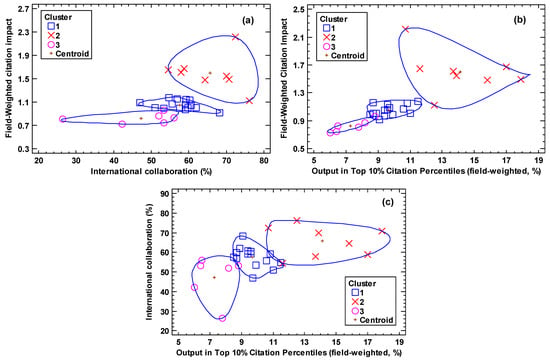

On the other hand, the clustering of the universities discussed above shows a positive correlation of the field-weighted citation impact with both international collaboration (%) and publications in the top 10% citation percentiles (%) (Figure 4), with the clusters ordered as follows: 2 > 1 > 3. The same pattern is observed between the two non-FWCI metrics.

Figure 4.

Clusters in scatter plots for the three selected metrics used in the evaluation of research performance. (a) international collaboration (%), (b) field-weighted citation impact and (c) output in top 10% citation percentile (field-weighted, %).

4. Discussion

The accreditation results of the research area analyzed in this study reveal that the length (in years) of institutional accreditation, a variable that defines institutional quality, is related to scholarly output (production) and not to the quality and impact of a university’s research performance. In fact, production correlates positively with the length of institutional accreditation. These results demonstrate the need to correct the institutional accreditation procedures of Chilean universities, given that they do not prioritize quality and impact metrics in the research evaluation criteria and are based on the consideration of several academic areas (i.e., undergraduate teaching, research, graduate teaching, community engagement, and management) that generate tension and compromise in the definition of the accreditation length of the institutions. On the other hand, these findings contrast with previous studies that have suggested the use of multiple metrics (or a basket of metrics) as a good practice for research assessment that can produce a more robust analysis [64,65]. Metrics focused on measuring research performance using the number of publications and citations are a valid tool to assess the quality of research activity in most research subjects [66,67,68]. Furthermore, a recent Australian study examined in greater depth the use of quantitative indicators in the research assessment process, proposing different metrics to broaden the view in the design of normative data to track the quantity, quality, and impact of research outputs [69], in agreement with the Australian Research Council’s Excellence in Research for Australia (ERA) exercise, which had its first full round in 2010 and whose results were published in 2011, 2012, 2015, and 2018.
The metrics proposed by the authors are the following: scholarly output, citation count, citations per publication, FWCI, publications in the top 10% percentile most cited in their field (%), publications in the top 10% ranked journals (CiteScore percentiles, %), publications with international collaboration (%), and publications with a corporate affiliation (%) [69]. In particular, the number of citations is an easy and effective way to measure influence in different research subjects and scientific career stages and also provides diverse variants (e.g., citations per publication, field-weighted citations, and citations in top% journals) according to the precision required in each scientometric study [70]. Moreover, and in relation to the collaboration metrics, it is recognized that international collaboration has positive effects on research productivity, quality, and FWCI [71], whereas academic–corporate collaboration can be seen as the relevant evidence for understanding the non-academic impacts of research [69,72,73].

On the other hand, the Chilean universities analyzed in this study showed different research performances based on the metrics of quantity, quality, and impact of a university’s outputs, even in comparison with national values. The metrics exhibited high variability when compared to national values and to institutions that have received low, medium, and high accreditation lengths (in years). In addition, universities with different lengths of institutional accreditation were distributed among three different clusters, when correlating metrics commonly used at the international level to evaluate research performance. In this analysis, it must be kept in mind that the metrics selected in this study agree with other robust scientometric studies that promote a more correct research performance evaluation for university decision-making and for quality assurance purposes at the system level [64,65,69].

In Chile, the instruments that have been used for the institutional accreditation of research at universities have focused on the following aspects: (i) the institutional policy of research development and its application according to the quality criteria accepted by the academic, scientific, technological, and innovation communities, (ii) the availability of economic resources for the development of systematic internal and external research, (iii) participation in open and competitive calls for funding at the national and international levels, (iv) the results of research projects (publication in indexed journals, books, patents, and others), (v) engagement with undergraduate and graduate teaching, and (vi) the impact of research at the national and international levels as a contribution to the scientific, technological, and disciplinary knowledge [74,75]. However, the new criteria for accreditation in the research area, applicable from October 2023, introduce the use of standards for the first time. The Research, Creation, and Innovation dimension disaggregates into the Policy and Administration and Results criteria, where both are categorized into three different performance levels [43]. These criteria are similar to the ones valid from 2006, where in the case of Results, the following must be provided: (i) an institutional plan for research, creation, and/or innovation, (ii) pertinent actions to regional or national demands, (iii) research evidence in some or all the disciplinary areas, according to the levels, (iv) the dissemination and transfer of creation and/or innovation activities at the national and international scales, according to the level, and (v) the results of the research activities that facilitate accredited graduate programs (PhDs in all the disciplinary areas of the university in the case of the highest level) [74,75].

The situation described above is the result of a new model of accreditation for universities in Chile. The key features of the institutional accreditation system for universities in Chile are outlined in Table 3. However, to date, the contents of the accreditation resolutions for those universities with the highest accreditation level allow us to infer that the concept of research quality applied by the National Accreditation Commission (CNA, in Spanish) emphasizes the existence and formalization of explicit policies. In addition, regarding the availability of external and internal resources, the resolutions refer to research centers, the number of researchers and its variation under different parameters, the increase in internal and external economic resources under different parameters, and facilities and their evolution. Regarding participation in open and competitive calls, funding sources are reported using different comparative parameters that consider national and international agencies, seeking to show the evolution of the number of projects and resources. In relation to research results, there is a systematic reference to the number of publications indexed in WoS, Scopus, ESCI, and SciELO and its variation under different parameters. There is also a reference to international cooperation, quality given by the journal quartile, patents, and comparisons with national figures. Regarding the engagement between research and undergraduate and graduate teaching, there is frequent reference to researchers who teach, students who participate in research projects, and the relationship between graduate research and publications.
In order to determine research impact, several indicators and descriptors are used, such as the national position in the awarding of projects from the National Fund for Scientific and Technological Development (FONDECYT, in Spanish) and the Fund for Fostering Scientific and Technological Development (FONDEF, in Spanish), which are the two main sources of public funding for national research provided by the National Agency for Research and Development (ANID), indexed publications, normalized impact, leader excellence (1 and 10), QS Ranking, Scimago Ranking, citations per publication, h-index, Q1 or Q2 publications, impact factor, and patents [76]. However, the impact measurement is based on varied comparison parameters, and in some instances, figures are omitted and only a descriptive reference is made. In any case, the information provided by the accreditation resolutions allows us to establish the following: (i) the accreditation of the research area is not exclusive to universities that reach the excellence threshold (seven years), (ii) the new criteria and standards, and those applied to date, have no relevant differences, and the added value of the former does not depend on the quality indicators of research results, (iii) the accreditation resolutions of the universities with the best performance are based on descriptive arguments that mainly highlight the number of publications, awarded projects, and resources for research, (iv) the indicators used in the evaluative judgments are expressed in such a way that it is not possible to compare them or measure their evolution, (v) the accreditation resolutions are justified by weakly consistent and discretionary arguments, and (vi) the CNA does not appear to provide suggestions, observations, or weaknesses to the universities in the research area.

Table 3. Key features of the institutional accreditation system of universities in Chile.

Although research quality indicators are considered in accreditation mechanisms and resolutions, they are a minor reference in performance evaluation processes. Additionally, given that institutional accreditation is carried out by evaluating several areas, the research results are influenced by the results of these areas, so the accreditation time is a compromise between them. In fact, during the period of this study, the institutional accreditation of Chilean universities considered two mandatory areas, i.e., institutional management and undergraduate teaching, and three voluntary ones, i.e., research, graduate teaching, and community engagement. In the CNA resolutions, there are different accreditation periods for mandatory areas and voluntary ones in the same university [76]. Therefore, the accrediting body apparently considered that the procedure was not indivisible but could be evaluated based on the performance of each area separately. Thus, the research accreditation time was independent of the other areas. However, in 2013 and 2015, two private universities appealed to the National Council of Education (CNED, in Spanish), the appellate instance, against the resolution of the National Accreditation Commission, which had granted them five years of accreditation, out of a maximum of seven, in the areas of institutional management, undergraduate teaching, and community engagement, without accreditation in research and graduate teaching. The Council resolved the matter by accepting the appeals and accrediting the universities in the five areas, including research (CNED Agreements No. 030/2014 and No. 035/2015) [77]. The Council argued that, since there is a single procedure for university accreditation, there must be a single, common accreditation period. Different periods would mean different, separate outputs, affecting the accreditation process.
Therefore, instead of not providing accreditation in some areas while others are accredited, it was decided to accredit them for a longer period than they were supposed to [77]. Consequently, the National Accreditation Commission has continued working with these criteria in the institutional accreditation processes, so that the research quality assurance responds not only to the performance of this area but also to the results of other areas.

On the other hand, it has been demonstrated that in Chile, the different accreditation times of universities are mainly explained by the accreditation of the voluntary areas of research and graduate teaching [23]. This may be caused by national and institutional policies, expressed as requirements for academic rank and careers, access to resources for research projects, the selection of faculty members for doctoral programs, and economic benefits to researchers when generating research results (e.g., scientific publications). These and other aspects of promoting scientific development encourage the number of publications rather than metrics of quality and impact, regardless of the existence of strong differences among institutions, disciplines, and government grant funds [17]. In any case, the relationship between the quantity and the quality of scientific publications, measured as output and as quality and impact, respectively, has been a subject of analysis for over 40 years [17,78]. The quality of publications could potentially be negatively influenced by the pressure to publish that emanates from academic policies related to the performance assessment of researchers, institutions, and countries. The popular aphorism “publish or perish” captures this situation through two opposing effects: a significant increase in human knowledge and in the flow of scientific information on the one hand, and on the other hand, an increase in unethical publishing practices (i.e., predatory journals, ghost authors, multiple authorship without participation in the research, self-plagiarism, bioethical conflicts, and interference with peer-review processes) [79,80,81,82,83,84]. Privileging the quantity over the quality of publications could also be considered a negative aspect. Time and resources could be used more effectively when prioritizing the quality over the quantity of publications [85].
Moreover, the contribution to the subject fields and disciplinary knowledge, and the social role of research, might be limited when quantity is prioritized over the quality of publications. The exponential growth in the number of scientific journals has influenced the increase in the number of publications, particularly in journals with lower requirements [86]. Quality is frequently associated with a journal’s reputation and the requirements of mainstream journals, which has different consequences for research development [87], given that access to these journals is restricted by research competencies, the use of the English language, and other gaps that lead researchers to choose more flexible options [88]. Similarly, the relationship between the quantity and the quality of publications is associated with different factors, such as scholarly output, subject fields and disciplinary areas, and the burden of international quality standards in these journals [89,90,91,92].

It should be noted that the quality assurance and accreditation processes of universities in Chile and the rest of the Latin American countries were initially influenced by the models used in Canada and the USA and later by the Bologna Process, particularly regarding policies and mechanisms. Chile and Mexico were the first countries to create agencies to evaluate the quality of their universities, adopting these international experiences [93]. However, there are differences in the public, governmental, or private nature of these agencies among the different countries [38].

The adoption of mixed processes of self-evaluation and external evaluation, institutional and formative program evaluation, the use of standards, the consequences of accreditation, and other aspects derive from international experiences. They are explained by the results of the reference models of quality evaluation in higher education used in Chile [94,95,96,97].

Chile’s accreditation system has been compared with those of other Latin American countries, for example, with Colombia in terms of the quality approach [98], with Uruguay and Argentina for its effects on private universities [99], and with Peru for the effect of policies on the student body [100].

Although scientific and technological research is included in the evaluation of universities, it has not been analyzed as a separate component from teaching in the results of institutional accreditation. It should be considered that in Latin America over 50% of universities do not conduct scientific and technological research [101]. In the Chilean case, the results of accreditation have generated an increase in the number of universities accredited in research, even though it is a voluntary area. The research performance of universities in Latin America and Chile has been associated mainly with high-impact publications, and recently with the consideration of innovation and technological development [102]. In addition, the use of different university academic quality rankings that consider international research indicators has increased, but the interpretation of their results has generated controversy [103].

All the above shows that the results obtained in the accreditation of the research area of Chilean universities are contradictory to the purposes of the quality assurance processes. According to this study, they are related not to the quality but to the quantity of publications, i.e., the total number of documents. Similarly, the results suggest that the pressure to achieve a certain number of publications, derived from national and institutional policies, affects their quality. In fact, there is no evidence of a relationship between the quantity and quality of publications, and even negative correlations were found. This situation has been reported in general analyses of the results of scientific research [104,105]. Therefore, the procedures of research accreditation should be analyzed to achieve effectiveness and avoid possible adverse effects at the scientific and social levels.
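The kind of comparison summarized above, i.e., whether accreditation length tracks a quantity metric more closely than a quality metric, can be sketched with a rank correlation. The example below uses Spearman's coefficient as a stand-in (the coefficient used in the study's own analysis may differ), and every number in it is hypothetical rather than taken from the study. The function names are introduced here only for illustration.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # mean of the 1-based positions in the run
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical illustrative values for six universities, NOT data from the study.
accreditation_years = [3, 4, 4, 5, 6, 7]
scholarly_output = [120, 300, 280, 900, 2500, 9000]  # quantity metric
fwci = [1.10, 0.80, 1.30, 0.90, 1.20, 1.00]          # quality/impact metric

rho_quantity = spearman(accreditation_years, scholarly_output)
rho_quality = spearman(accreditation_years, fwci)
print(f"years vs. output: {rho_quantity:.2f}")
print(f"years vs. FWCI:   {rho_quality:.2f}")
```

In this made-up example the correlation with scholarly output is strongly positive while the correlation with field-weighted citation impact is close to zero, which mirrors the pattern described in the text without reproducing any of its figures.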

5. Conclusions

The results of this study show that quality assurance in research of Chilean universities, established by the time (years) of institutional accreditation, is mainly associated with the quantity and not with the quality and the impact of the academic publications. It was also found that there is no relationship between the number of publications and their quality, even finding cases where scientific productivity has a negative association with some quality indicators. Moreover, quality indicators of these publications for the universities analyzed in this study showed less variability than the quantity of publications since the latter is the only variable with normal distribution. Finally, the relationship between international metrics to evaluate research performance, i.e., international collaboration (%), field-weighted citation (or normalized) impact, and % output in the top 10% citation percentiles (excellence), showed the existence of three clusters of heterogeneous composition concerning the distribution of universities with different years of institutional accreditation.

In summary, the results from this research highlight the need to make changes in regulatory processes and establish a minimum set of internationally recognized, high-level quality metrics to evaluate research performance. Quality assurance in research in Chilean universities ought to be adjusted if accreditation is expected to have positive effects on institutional performance. In particular, the way recent regulatory changes are implemented, the understanding of the concept of quality as oriented to academic improvement, international standards, and excellence, the use of three levels of quality standards, and the linkage of research quality assurance to other areas are relevant issues that must be correctly understood to adequately promote the development of institutions and the effectiveness of their mission.

Author Contributions

Conceptualization: E.T.; Data curation: E.T., D.A.L. and R.R.-F.; Formal analysis: E.T., D.A.L., R.R.-F., D.K. and R.R.; Investigation: E.T. and D.A.L.; Methodology: E.T., D.A.L. and R.R.-F.; Software: R.R.-F.; Writing: E.T., D.A.L., R.R.-F. and R.R.; Reviewing: E.T., D.A.L., D.K. and R.R.; Editing: E.T., D.A.L. and R.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors appreciate the support provided by the Instituto Interuniversitario de Investigación Educativa IESED-Chile (Interuniversity Institute for Educational Research), and the Red 21995 en Educación de Universidades Estatales de Chile (Network 21995 of Education of State Universities of Chile).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| ANID | National Agency for Research and Development |
| CNA | National Accreditation Commission |
| CNED | National Council of Education |
| FONDECYT | National Fund for Scientific and Technological Development |
| FONDEF | Fund for Fostering Scientific and Technological Development |

References

- Altbach, P. The past, present and future of the research universities. In The Road to Academic Excellence: The Making of World-Class Research Universities; Altbach, P., Salmi, J., Eds.; World Bank: Washington, DC, USA, 2011; pp. 36–60.

- Vinkler, P. The Evaluation of Research by Scientometric Indicators; Chandos Publishing: Witney, UK, 2010.

- Kosten, J. A classification of the use of research indicators. Scientometrics 2016, 108, 457–464.

- Bernasconi, A. Are there Research universities in Chile? In World Class Worldwide: Transforming Research Universities in Asia and Latin America; Altbach, P., Balán, J., Eds.; The Johns Hopkins University Press: Baltimore, MD, USA, 2007; pp. 234–259.

- Bernasconi, A. Private and public pathways to world-class research universities: The case of Chile. In The Road to Academic Excellence: The Making of World-Class Research Universities; Altbach, P., Salmi, J., Eds.; World Bank: Washington, DC, USA, 2011; pp. 229–260.

- Santelices, B. Investigación Científica Universitaria en Chile [University Scientific Research in Chile]. In La Educación Superior de Chile: Transformación, Desarrollo y Crisis [Chile’s Higher Education: Transformation, Development and Crisis]; Bernasconi, A., Ed.; Ediciones Universidad Católica de Chile. Colección Estudios en Educación: Santiago, Chile, 2015; pp. 409–446.

- RICYT. Indicators. Comparatives. Red de Indicadores de Ciencia y Tecnología Interamericana e Iberoamericana [Inter-American and Ibero-American Network of Science and Technology Indicators]. Available online: http://www.ricyt.org/en/category/indicators/ (accessed on 29 November 2023).

- López, D.; Sánchez, X. Desarrollo en ciencias y tecnologías en Chile. Avances, desigualdades y principales desafíos [Science and technology development in Chile. Advances, inequalities and main challenges]. In Desafíos y Dilemas de la Universidad y la Ciencia en América Latina y el Caribe en el Siglo XXI [Challenges and Dilemmas of the University and Science in Latin America and the Caribbean in the 21st Century]; Lago Martínez, S., Correa, N.H., Eds.; Teseo: Buenos Aires, Argentina, 2015; pp. 233–254.

- López, D. La investigación como desafío estratégico: Una experiencia de cambios en la gobernanza universitaria [Research as a strategic challenge: An experience of changes in university governance]. In Investigaciones Sobre Gobernanza Universitaria y Formación Ciudadana en Educación [Research on University Governance and Citizenship Training in Education]; Ganga, F., Leyva, O., Hernández, A., Tamez, G., Paz, L., Eds.; Universidad de Nuevo León & Editorial Fontamara: Nuevo León, Mexico, 2018; pp. 243–261.

- López, D.; Troncoso, E. La complejidad institucional como factor de cambio en la gobernanza universitaria. Análisis de un caso aplicado a la investigación científica y tecnológica [Institutional complexity as a factor of change in the university governance. Analysis of a case applied to scientific and technological research]. In Nuevas Experiencias en Gobernanza Universitaria [New Experiences in University Governance]; Ganga, F., González, E., Ostos, O., Hernández, M., Eds.; Ediciones USTA, Universidad Santo Tomás: Santiago, Chile, 2021; pp. 57–84.

- Colther, C.; Piffaut, P.; Montecinos, A. Analysis of the scientific productivity and technical efficiency of Chilean universities. Calid. Educ. 2021, 54, 245–270.

- Astudillo Besnier, P. Chile needs better science governance and support. Nature 2014, 511, 385.

- Rabesandratana, J. Chilean scientists protest poor working conditions. Science 2015, 367, 1260–1263.

- Morales, N.S.; Fernández, I.C. Chile unprepared for Ph.D. influx. Science 2017, 356, 1131–1132.

- Krauskopf, M. Scientometric indicators as a means to assess the performance of state supported universities in developing countries: The Chilean case. Scientometrics 1992, 23, 105–121.

- Ramos-Zincke, C. A well-behaved population: The Chilean scientific researchers of the XXI century and the international regulation. Sociologica 2021, 15, 153–178.

- Troncoso, E.; Ganga-Contreras, F.; Briceño, M. Incentive policies for scientific publications in the state universities of Chile. Publications 2022, 10, 20.

- Benavente, J.M.; Crespi, G.; Figal, L.; Maffioli, A. The impact of national research funds: A regression discontinuity approach to the Chilean FONDECYT. Res. Policy 2012, 41, 1461–1475.

- ANID. DataCiencia. Available online: https://dataciencia.anid.cl/ (accessed on 29 November 2023).

- Lemaitre, M. Aseguramiento de la calidad: Una política y sus circunstancias [Quality assurance: A policy and its circumstances]. In La Educación Superior de Chile: Transformación, Desarrollo y Crisis [Chile’s Higher Education: Transformation, Development and Crisis]; Bernasconi, A., Ed.; Ediciones Universidad Católica de Chile. Colección Estudios en Educación: Santiago, Chile, 2015; pp. 295–343.

- Espinoza, O.; González, L.E. Evolución del sistema de aseguramiento de la calidad y el régimen de acreditación en la educación superior chilena [Evolution of the quality assurance system and the accreditation regime in Chilean higher education]. In Calidad en la Universidad [Quality at the University]; Espinoza, O., López, D., González, L.E., Pulido, S., Eds.; Instituto Interuniversitario de Investigación Educativa [Interuniversity Institute for Educational Research]: Santiago, Chile, 2019; pp. 91–127.

- López, D.; Rojas, M.J.; Rivas, M. El aseguramiento de la calidad de las universidades chilenas: Diversidad institucional y áreas de acreditación [The quality assurance of Chilean universities: Institutional diversity and areas of accreditation]. In Calidad en la Universidad [Quality at the University]; Espinoza, O., López, D., González, L.E., Pulido, S., Eds.; Instituto Interuniversitario de Investigación Educativa [Interuniversity Institute for Educational Research]: Santiago, Chile, 2019; pp. 129–159.

- López, D.A.; Rojas, M.J.; López, B.; López, D.C. Chilean universities and institutional quality assurance processes. Qual. Assur. Educ. 2015, 23, 166–183.

- Letelier, M.F.; Carrasco, R. Higher education assessment and accreditation in Chile: State-of-the art and trends. Eur. J. Eng. Educ. 2004, 29, 119–124.

- Bernasconi, A. Chile: Accreditation versus proliferation. Int. High. Educ. 2007, 47, 18–19.

- CNAP. El Modelo Chileno de Acreditación de la Educación Superior (1997–2007) [The Chilean Higher Education Accreditation Model (1997–2007)]. 2007. Available online: https://www.cnachile.cl/Biblioteca%20Documentos%20de%20Interes/LIBRO_CNAP.pdf (accessed on 29 November 2023).

- Harvey, L.; Knight, P. Transforming Higher Education. Society for Research into Higher Education; Open University Press: Buckingham, UK, 1996.

- Vanhoof, J.; Van Petegem, P. Matching internal and external evaluation in an era of accountability and school development: Lessons from a Flemish perspective. Stud. Educ. Eval. 2007, 33, 101–109.

- Stensaker, B. External quality assurance in higher education. In Encyclopedia of International Higher Education System and Institutions; Teixeira, P., Shin, J., Eds.; Springer: Dordrecht, The Netherlands, 2018.

- National Congress of Chile. Ley N°20.129 Establece un Sistema Nacional de Aseguramiento de la Calidad de la Educación Superior [Law No. 20.129 Establishes a National System for Quality Assurance in Higher Education]. 23 October 2006. Library of the National Congress of Chile. Available online: https://www.bcn.cl/leychile/navegar?idNorma=255323 (accessed on 29 November 2023).

- National Congress of Chile. Ley N°21.091 Sobre Educación Superior [Law No. 21.091 on Higher Education]. 11 May 2018. Library of the National Congress of Chile. Available online: https://www.bcn.cl/leychile/navegar?idNorma=1118991 (accessed on 29 November 2023).

- Lemaitre, M. Aseguramiento de la Calidad en Chile: Impacto y Proyecciones [Quality Assurance in Chile: Impact and Projections]; Serie de Seminarios Internacionales (International Seminar Series); Consejo Superior de Educación (Higher Council of Education): Santiago, Chile, 2005; pp. 55–69.

- Navarro, G. Impacto del proceso de acreditación de carreras en el mejoramiento académico [Impact of the career accreditation process on academic improvement]. Calid. Educ. 2007, 26, 247–288.

- Zapata, G.; Tejeda, I. Impactos del aseguramiento de la calidad y acreditación de la educación superior: Consideraciones y proposiciones [Impacts of quality assurance and accreditation of higher education: Considerations and propositions]. Calid. Educ. 2009, 31, 192–209.

- Rojas, M.J.; López, D.A. La Acreditación de la Gestión Institucional en Universidades Chilenas [Accreditation of Institutional Management in Chilean Universities]. Rev. Electrónica Investig. Educ. 2016, 18, 180–190. Available online: https://www.scielo.org.mx/scielo.php?script=sci_arttext&pid=S1607-40412016000200014 (accessed on 4 February 2024).

- Fernández, E.; Ramos, C. Acreditación y desarrollo de capacidades organizacionales en las universidades chilenas [Accreditation and development of organizational capabilities in Chilean universities]. Calid. Educ. 2020, 53, 219–251.

- López, D.A.; Espinoza, O.; Rojas, M.J.; Crovetto, M. External evaluation of university quality in Chile: An overview. Qual. Assur. Educ. 2022, 30, 272–288.

- Espinoza, O.; González, L.E. Estado Actual del Sistema de Aseguramiento de la Calidad y del Régimen de Acreditación en la Educación Superior en Chile: Balance y Perspectivas [Current Status of the Quality Assurance System and the Accreditation regime in Higher Education in Chile: Balance and Perspectives]. Rev. Educ. Super. 2012, 41, 87–109. Available online: https://www.scielo.org.mx/pdf/resu/v41n162/v41n162a5.pdf (accessed on 4 February 2024).

- Salazar, J.; Leihy, P. La poética del mejoramiento: ¿hacia dónde nos han traído las políticas de calidad de la educación superior? [The poetics of improvement: Where have higher education quality policies brought us?]. Estud. Soc. 2014, 122, 125–191.

- Barroilhet, A. Problemas estructurales de la acreditación de la educación superior en Chile: 2006–2012 [Structural problems of higher education accreditation in Chile: 2006–2012]. Rev. Pedagog. Univ. Didáctica Derecho 2019, 6, 43–78.

- Barroilhet, A.; Ortiz, R.; Quiroga, B.F.; Silva, M. Exploring conflict of interest in university accreditation in Chile. High. Educ. Policy 2021, 35, 479–497.

- CNA. Reglamento Sobre Áreas de Acreditación [Regulation on Accreditation Areas]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/documentos%20de%20paginas/res-dj-01.pdf (accessed on 29 November 2023).

- CNA. Nuevos Criterios y Estándares de Calidad [New Quality Criteria and Standards]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/noticias/paginas/nuevos_cye.aspx (accessed on 29 November 2023).

- Williams, J. Quality assurance and quality enhancement: Is there a relationship? Qual. High. Educ. 2016, 22, 97–102.

- Muñoz, D.A. Assessing the research efficiency of higher education institutions in Chile: A data envelopment analysis approach. Int. J. Educ. Manag. 2016, 30, 809–825.

- Bernasconi, A.; Rojas, F. Informe Sobre la Educación Superior en Chile: 1980–2003 [Report on Higher Education in Chile: 1980–2003]; Editorial Universitaria: Santiago, Chile, 2004.

- OECD. La Educación Superior en Chile. Organización Para la Cooperación y el Desarrollo Económico. Revista de las Políticas Nacionales en Educación; OECD: Paris, France; World Bank: Washington, DC, USA; Ministerio de Educación de Chile: Santiago, Chile, 2009.

- López, D.A.; Rojas, M.J.; Rivas, M.C. ¿Existe aprendizaje institucional en la acreditación de universidades chilenas? [Is there organizational learning in the Chilean Universities accreditation?]. Avaliação Rev. Avaliação Educ. Super. (Camp.) 2018, 23, 391–404.

- Krauskopf, M. Las ciencias en las instituciones públicas y privadas de investigación [Science in public and private research institutions]. In El Conflicto de las Universidades Entre lo Público y Privado [The Conflict between Public and Private Universities]; Brunner, J.J., Peña, C., Eds.; Ediciones Diego Portales: Santiago, Chile, 2011; pp. 389–415.

- CNA. Cuenta Pública [Institutional Public Account]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/Paginas/documentos-de-interes.aspx (accessed on 29 November 2023).

- Cossani, G.; Codoceo, L.; Cáceres, H.; Tabilo, J. Technical efficiency in Chile’s higher education system: A comparison of rankings and accreditation. Eval. Program Plan. 2022, 92, 102058.

- Sthioul, A. Una Nueva Tipología Institucional de Investigación, Desarrollo, e Innovación (I+D+i) en las Universidades Chilenas [A New Institutional Typology of Research, Development and Innovation (R&D&I) in Chilean Universities]; Working Papers, No. 9. Centro de Estudios; División de Planificación y Presupuesto [Center of Studies. Planning and Budget Division], Ministry of Education of Chile: Santiago, Chile, 2017.

- Elsevier. What Is the Complete List of Scopus Subject Areas and All Science Journal Classification Codes (ASJC)? Available online: https://service.elsevier.com/app/answers/detail/a_id/15181/supporthub/scopus/ (accessed on 29 November 2023).

- Elsevier. Research Metrics Guidebook; Elsevier B.V.: Amsterdam, The Netherlands, 2019.

- Kirchner, K.; Zec, J.; Delibašić, B. Facilitating data preprocessing by a generic framework: A proposal for clustering. Artif. Intell. Rev. 2016, 45, 271–297.

- Abdullah, M.B. On a robust correlation coefficient. J. R. Stat. Soc. Ser. D (Stat.) 1990, 39, 455–460.

- Kotz, S.; Balakrishnan, N.; Read, C.B.; Vidakovic, B.; Johnson, N.L. Hierarchical cluster analysis. In Encyclopedia of Statistical Sciences, 2nd ed.; John Wiley & Sons Inc.: Hoboken, NJ, USA, 2006; pp. 3142–3148.

- Ward, J.H. Hierarchical grouping to optimize an objective function. J. Am. Stat. Assoc. 1963, 58, 236–244.

- Aldieri, L.; Kotsemir, M.; Vinci, C.P. The impact of research collaboration on academic performance: An empirical analysis for some European countries. Socio-Econ. Plan. Sci. 2018, 62, 13–30.

- Ranjbar-Pirmousa, Z.; Borji-Zemeidani, N.; Attarchi, M.; Nemati, S.; Aminpour, F. Comparative analysis of research performance of medical universities based on qualitative and quantitative scientometric indicators. Acta Medica Iran. 2019, 57, 448–455.

- Liu, X.; Sun, R.; Wang, S.; Wu, Y.J. The research landscape of big data: A bibliometric analysis. Libr. Hi Tech 2020, 38, 367–384.

- Pakkan, S.; Sudhakar, C.; Tripathi, S.; Rao, M. Quest for ranking excellence: Impact study of research metrics. DESIDOC J. Libr. Inf. Technol. 2021, 41, 61–69.

- American Society for Cell Biology. The San Francisco Declaration on Research Assessment (DORA). Available online: http://www.ascb.org/dora/ (accessed on 29 November 2023).

- Hicks, D.; Wouters, P.; Waltman, L.; de Rijcke, S.; Rafols, I. Bibliometrics: The Leiden Manifesto for research metrics. Nature 2015, 520, 429–431. [Google Scholar] [CrossRef]

- Wilsdon, J. We need a measured approach to metrics. Nature 2015, 523, 129. [Google Scholar] [CrossRef]

- Andras, P. Research: Metrics, quality, and management implications. Res. Eval. 2011, 20, 90–106. [Google Scholar] [CrossRef]

- Taylor, J. The assessment of research quality in UK universities: Peer review or metrics? Br. J. Manag. 2011, 22, 202–217. [Google Scholar] [CrossRef]

- Butler, J.S.; Sebastian, A.S.; Kaye, D.; Wagner, S.C.; Morrissey, P.B.; Schroeder, G.D.; Kepler, C.K.; Vaccaro, A.R. Understanding traditional research impact metrics. Clin. Spine Surg. 2017, 30, 164–166.

- Craig, B.M.; Cosh, S.M.; Luck, C.C. Research productivity, quality, and impact metrics of Australian psychology academics. Aust. J. Psychol. 2021, 73, 144–156.

- Van Noorden, R. Metrics: A profusion of measures. Nature 2010, 465, 864–866.

- Abramo, G.; D’Angelo, C.A.; Solazzi, M. The relationship between scientists’ research performance and the degree of internationalization of their research. Scientometrics 2011, 86, 629–643.

- Edwards, D.M.; Meagher, L.R. A framework to evaluate the impacts of research on policy and practice: A forestry pilot study. For. Policy Econ. 2020, 114, 101975.

- Perkmann, M.; Salandra, R.; Tartari, V.; McKelvey, M.; Hughes, A. Academic engagement: A review of the literature 2011–2019. Res. Policy 2021, 50, 104114.

- CNA. Guía Para la Autoevaluación Interna Acreditación Institucional. Universidades [Guide for Internal Self-Assessment Institutional Accreditation. Universities]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/SiteAssets/Lists/Acreditacion%20Institucional/AllItems/Gui%CC%81a%20para%20la%20autoevaluacio%CC%81n%20interna%20Universidades.pdf (accessed on 29 November 2023).

- CNA. Guía Para la Evaluación Externa. Universidades [Guide for External Evaluation. Universities]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/SiteAssets/Lists/Acreditacion%20Institucional/AllItems/Gui%CC%81a%20para%20la%20evaluacio%CC%81n%20externa%20Universidades.pdf (accessed on 29 November 2023).

- CNA. Búsqueda Avanzada de Acreditaciones [Advanced Accreditations Search]. Comisión Nacional de Acreditación [National Accreditation Commission]. Available online: https://www.cnachile.cl/Paginas/buscador-avanzado.aspx (accessed on 29 November 2023).

- CNED. Buscador de Resoluciones de Acuerdos [Agreements Resolutions Search]. Consejo Nacional de Educación [National Council of Education]. Available online: https://www.cned.cl/resoluciones-de-acuerdos (accessed on 29 November 2023).

- Feist, G.J. Quantity, quality, and depth of research as influences on scientific eminence: Is quantity most important? Creat. Res. J. 1997, 10, 325–335.

- Demir, S.B. Predatory journals: Who publishes in them and why? J. Informetr. 2018, 12, 1296–1311.

- Chen, X.-P. Author ethical dilemmas in the research publication process. Manag. Organ. Rev. 2011, 7, 423–432.

- Robinson, S.R. Self-plagiarism and unfortunate publications: An essay on academic values. Stud. High. Educ. 2014, 39, 265–277.

- Moffatt, B.; Elliott, C. Ghost marketing: Pharmaceutical companies and ghostwritten journal articles. Perspect. Biol. Med. 2007, 50, 18–31.

- Pfleegor, A.G.; Katz, M.; Bowers, M.T. Publish, perish, or salami slice? Authorship ethics in an emerging field. J. Bus. Ethics 2019, 156, 189–208.

- Tijdink, J.K. Publish & Perish; research on research and researchers. Tijdschr. Voor Psychiatr. 2017, 59, 406–413.

- Haslam, N.; Laham, S.M. Quality, quantity, and impact in academic publication. Eur. J. Soc. Psychol. 2010, 40, 216–220.

- Collazo-Reyes, F. Growth of the number of indexed journals of Latin America and the Caribbean: The effect on the impact of each country. Scientometrics 2014, 98, 197–209.

- Vessuri, H.; Guédon, J.-C.; Cetto, A.M. Excellence or quality? Impact of the current competition regime on science and scientific publishing in Latin America and its implications for development. Curr. Sociol. 2014, 62, 647–665.

- Di Bitetti, M.S.; Ferreras, J.A. Publish (in English) or perish: The effect on citation rate of using languages other than English in scientific publications. Ambio 2017, 46, 121–127.

- Moed, H.F. UK research assessment exercises: Informed judgments on research quality or quantity? Scientometrics 2008, 74, 153–161.

- Huang, D. Positive correlation between quality and quantity in academic journals. J. Informetr. 2016, 10, 329–335.

- Michalska-Smith, M.; Allesina, S. And, not or: Quality, quantity in scientific publishing. PLoS ONE 2017, 12, e0178074.

- Forthmann, B.; Leveling, M.; Dong, Y.; Dumas, D. Investigating the quantity–quality relationship in scientific creativity: An empirical examination of expected residual variance and the tilted funnel hypothesis. Scientometrics 2020, 124, 2497–2518.

- Fernández Lamarra, N. Higher education, quality evaluation and accreditation in Latin America and MERCOSUR. Eur. J. Educ. 2003, 38, 253–269. Available online: https://www.jstor.org/stable/1503502 (accessed on 18 November 2023).

- Van Vught, F.A.; Westerheijden, D.F. Quality Management and Quality Assurance in European Higher Education: Methods and Mechanics; Center for Higher Education Policy Studies, University of Twente: Enschede, The Netherlands, 1992.

- Brennan, J.; Shah, T. Quality assessment and institutional change: Experience from 14 countries. High. Educ. 2000, 40, 331–349.

- Hoecht, A. Quality assurance in UK higher education: Issues of trust, control, professional autonomy, and accountability. High. Educ. 2006, 51, 541–563.

- Stensaker, B.; Langfeldt, L.; Harvey, L.; Huisman, J.; Westerheijden, D. An in-depth study on the impact of external quality assurance. Assess. Eval. High. Educ. 2011, 36, 465–478.

- Duque, J.F. A comparative analysis of the Chilean and Colombian systems of quality assurance in higher education. High. Educ. 2021, 82, 669–683.

- Landoni, P.; Romero, C. Aseguramiento de la calidad y desarrollo de la educación superior privada: Comparaciones entre las experiencias de Argentina, Chile y Uruguay [Quality assurance and development of private higher education: Comparisons between the experiences of Argentina, Chile and Uruguay]. Calid. Educ. 2018, 25, 263–282.

- Ahumada Bastidas, V.R. Entre Escila y Caribdis: Reflexiones en torno a las políticas de aseguramiento de la calidad universitaria en Chile y Perú [Between Scylla and Charybdis: Reflections on university quality assurance policies in Chile and Peru]. Rev. Educ. Soc. 2021, 2, 4–27.

- Hernández-Díaz, P.M.; Polanco, J.-A.; Escobar-Sierra, M. Building a measurement system of higher education performance: Evidence from a Latin-American country. Int. J. Qual. Reliab. Manag. 2021, 38, 1278–1300.

- Lemaitre, M.J.; Aguilera, R.; Dibbern, A.; Hayt, C.; Muga, A.; Téllez, J. Organización de las Naciones Unidas para la Educación, la Ciencia y la Cultura (UNESCO). Centro Regional para la Educación Superior en América Latina y el Caribe (CRES); Instituto Internacional de la UNESCO para la Educación Superior en América Latina y el Caribe (IESALC): Córdoba, Argentina, 2018.

- Kayyali, M. The Relationship between Rankings and Academic Quality. Int. J. Manag. Sci. Innov. Technol. 2023, 4, 1–11. Available online: https://ssrn.com/abstract=4497493 (accessed on 4 February 2024).

- Benedictus, R.; Miedema, F.; Ferguson, M. Fewer numbers, better science. Nature 2016, 538, 453–455.

- Sarewitz, D. The pressure to publish pushes down quality. Nature 2016, 533, 147.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).