Abstract

The term “small journal” has been used for a journal published as a single title or as one of a few serials, mostly by an academic publisher. This case study shows the challenges that such a journal must overcome to be indexed in Scopus and WoS, especially if Q1/Q2 ranks are targeted. The number of submissions, and especially of published papers, is not the most critical variable for increasing journal citations. The most important factor is the further activity of the researchers included in the papers’ authorship, i.e., their future publication rate and the continuation of similar research, which implies citations of previous works by the same authors and/or research groups. The larger the number of papers per issue, the higher the probability of such an event, but the correlation is not linear. Moreover, the editorial work, especially the initial editorial screening of received submissions, makes the subsequent reviewers’ work easier, faster, and of higher quality, which certainly increases the quality of publications and their further citation life. The cited half-life vs. citing half-life ratio in small journals would need to be less than one (here 0.25), so that the published papers are cited quickly, with first citations coming early enough to fit in a three-year window and be countable in the calculation of indexing measures like Citescore or the Impact Factor.

1. Introduction

The presented case study considers some challenges of the contemporary journal’s ranking systems from the point of view of the publisher of scientific journals with a few or even just one title. Moreover, the scope of the analysed title is in the natural and technical sciences, so the further similarities with other titles would need to be searched only in those two fields, even with respect to the considered subject categories (mining, geology, engineering…). However, such normalization of impact factors and similar measures based on database subject categories is a statistically demanding process (e.g., [1]) and is out of the scope of this work.

Consequently, the study included the publication, ranking, and citation patterns of a specific journal. This is a descriptive work, the results of which could be carefully generalized, looking for the specific properties of other titles. Such a comparison must also include two properties of the compared journal: (1) the title must be a “diamond” open access (OA) where the authors are not asked to pay during the entire process, from submission to publication, (2) the journal has to have strict, reasonable, and publicly visible deadlines for all stages during any kind of reviewing rounds, from editorial screening to the post-acceptance corrections.

The Open Journal System (OJS) was not a necessary tool for this analysis, nor is it a tool that other journals need to use to be comparable with the observations and results presented here. However, the OJS is one of the most accessible free-of-cost platforms where scholarly journals can handle, publish, and archive their content, whatever differences they have regarding the review procedure, layout process, open access model, etc. (e.g., [2]). The establishment of the OJS offered small scholarly publishers a chance to compete with large corporate ones.

The presented statistics are very basic. However, the main value of this study lies in the editor’s views and attitudes, as well as thoughts based on a year-long experience, offered to those readers who are or were authors or reviewers in similar journals. As the main hypothesis, some specific properties of the analysed title are considered that are also mostly shared with similar journals. The analyses included several years of very dynamic title development and cannot be directly applied to titles that recorded stagnation or a decrease in their indexing variables.

The “Rudarsko-geološko-naftni zbornik” (abbreviated as “Rud.-geol.-naft. zb.”; in English “The Mining-Geological-Petroleum Engineering Bulletin”) is a scientific journal of the Faculty of Mining, Geology, and Petroleum Engineering at the University of Zagreb. The publication started in 1989 and has been active to the present day. The journal is bibliographically recognised with ISSN 1849-0409 (online), 0353-4529 (print), and DOI prefix 10.17794/rgn. Generally, the journal publishes papers from different natural and technical fields, like energetics, physics, geology, geological engineering, mathematics, petroleum engineering, mining, and space sciences. The journal started with the publication of papers from mining, petroleum and geological engineering and geology (Universal Decimal Classification, UDC 622:55). In 2016, the new section “Mathematics, Physics, Space Sciences” was introduced, followed in 2018 by “Other Sciences”, covering manuscripts from interdisciplinary parts of the basic sections. So, regarding UDC, it finally covers the field 62:66:51–56. Consequently, the journal’s content volume gradually grew, and in 2021, it eventually reached five issues per year with approximately 14 papers per issue (Table 1). The number of submitted manuscripts is increasing steadily, and there are always more than ten active submissions in the review process. Among the 60 published papers, the scientific ones prevail, as shown in Table 1, which lists 42 original scientific papers, five review scientific papers, and nine preliminary communications. The rest (Table 1) consists of three professional review papers and one professional case study. The dominance of original scientific papers, with a median of eight (Table 1), is constant, which is favourable for the expectation of future journal citations, which mostly come from original contributions later applied as a base for future research.

Table 1.

Number and categories of papers in five issues published during 2021. There were 60 papers organized into five categories, where 56 were selected as scientific papers.

Even though any growth of the journal looks very favourable for the editors at first sight, the picture is not one-sided. More submissions mostly lead to more accepted papers per issue. In the cumulative score, this means more citations; however, each citation variable has a denominator in which the number of published papers is counted. Ideally, more papers consequently lead to more citations per paper as a result of the higher interest of potential authors to publish and later to cite their work.

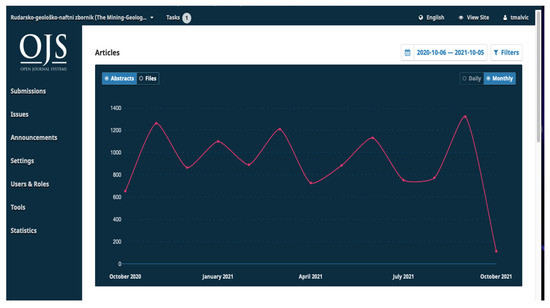

The number of citations can also be considered indirectly, in correlation with the number of accesses to the journal content. For example, in the monthly period 5 September–5 October 2021, the daily median of accesses was about 40 abstracts/day. However, statistics are more relevant if observed on an annual basis. That is why the period October 2020–October 2021 was taken as a sample: about 600–1350 abstracts were accessed monthly (Figure 1), with a median around 1000. If access to full papers is considered for the same period (Figure 2), the numbers correlate strongly with abstract accesses, i.e., the median could again be set around 1000 per month. As expected, in most months, the number of accesses to abstracts is slightly higher than to full papers, but with exceptions like January–February 2021 (Figure 1 and Figure 2), when the access to papers is larger than to abstracts. These deviations can be explained by the fact that full papers can also be accessed from direct links that lead to the journal’s Open Journal System (OJS) archive, as well as from the issue page where titles and abstracts are listed on the front page. It is also worth mentioning that the access rate was approximately the same for Croatian and non-Croatian users.

Figure 1.

The number of monthly accesses to the journal’s OJS from October 2020 to October 2021—abstracts.

Figure 2.

The number of monthly accesses to the journal’s OJS from October 2020 to October 2021—files.

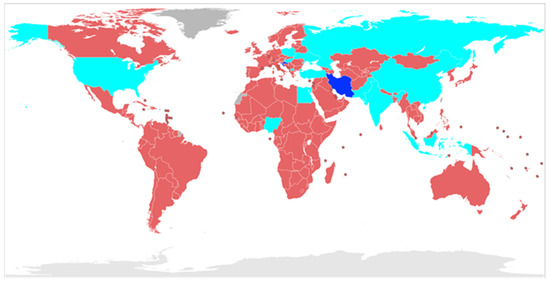

In summary, during the period October 2020 to October 2021, the journal published 60 reviewed papers (Table 1) and reached 14 papers per issue (from No. 55 onward), which came from 14 countries. Three contributions had international co-authorships. Looking at particular countries, 26 papers came from Iran, 14 from Croatia, six from Indonesia, three each from Ukraine and Pakistan, two from the USA, and one each from Poland, Egypt, Russia, North Macedonia, China, Nigeria, Turkey, and Hungary (Figure 3). In total, 232 authors shared co-authorship of those 60 papers. Two countries dominate the total number of published papers: Iran with 43% and Croatia with 23%. This was a slight change compared to the previous yearly period, when the same countries held the same positions, but with different percentages (37% vs. 32%). That shift indicated more “international” content in the journal, i.e., the portion of papers coming from the publisher’s country decreased to less than one quarter. In general, the countries of authorship origin are mainly located in Central and Eastern Europe and Western Asia, and no publications came from South America, Australia, or Oceania (Figure 3).

Figure 3.

The countries of origin of the papers published in the period October 2020–October 2021. The dark blue countries are those with more than 10 papers, the light blue countries are those with 10 or fewer papers (the red countries are not listed in the authors’ addresses for the analysed period).

2. Analysis of the Journal Indexing and Archiving and Highlighted Results about Citation Rates

The journal has been indexed in eight databases, namely Emerging Sources Citation Index (Clarivate Analytics), Scopus + Compendex + Geo Abstracts (Geobase) + Fluidex (Elsevier), GeoRef, Geotechnical Abstracts, Google Scholar, Petroleum Abstracts. Moreover, the journal is available in seven academic databases: DOAJ, CrossRef, Electronic Journals Library, Hrčak, JournalSeek, Publons, Scilit. Furthermore, two commercial search databases include the content: EBSCO Publishing Services and ProQuest (SciTech, Natural Science, Environmental Science, Earth Science, Technology Collection, Materials Science collections).

The methodology and data sources included values available in the two largest indexing aggregators, Clarivate Web of Science (WoS) and Scopus. All of these values are available publicly or with (institutional) subscriptions and cover time spans from 1999 onward, with an emphasis on the period 2015–2022. The statistics are simple, considering one journal, and are represented with basic line and bar charts. However, all necessary values are given so that conclusions and recommendations can be validated by readers.

2.1. Elsevier—Scopus Rankings

Scopus is one of the two world-leading citation-index bibliographic databases. From 2015 onward (https://www.scimagojr.com/journalsearch.php?q=101730&tip=sid&clean=0 (accessed on 19 July 2022)), the journal has continuously increased both its score in Scimago, expressed by the SCImago Journal Rank (SJR), which measures weighted citations received by the serial, where the citation weighting depends on the subject field and the prestige of the citing serial, and its Citescore. Both are cumulative values, but Citescore is not weighted. The analysed journal is assigned to five Scopus categories (Figure 4), namely (1) Earth and Planetary Sciences (miscellaneous) from 2019 onward, and from 1999 onward (2) Energy (miscellaneous), (3) Geology, (4) Geotechnical Engineering and Engineering Geology, (5) Water Science and Technology (Figure 4, from top to bottom).

Figure 4.

The journal quartiles in Scimago/Scopus based on SJR in five associated categories (colour legend indicates quartiles/Q as follows: Q2 yellow, Q3 orange, Q4 red). In the Scimago representations, the Q1 is marked green. The similar ranking in Scopus is done by Citescore value but it is not part of the Scimago team annual analysis.

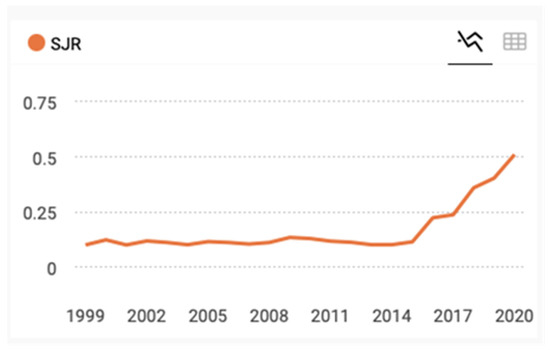

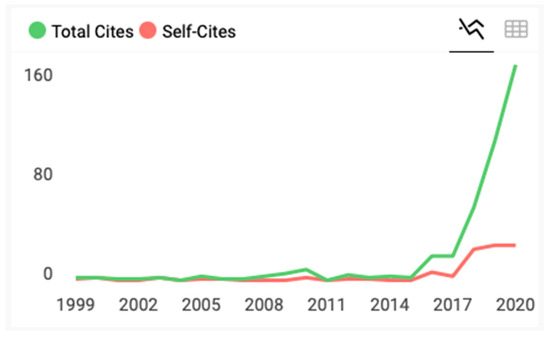

The assigned quartile is correlated with the weighted SJR value (Figure 5), the number of citations, including self-citations (Figure 6), and the number of citations vs. the number of published papers (Figure 7). It has to be noted that SJR is a weighted value that depends strongly on citations received from other Scopus journals, especially from those ranked higher than the analysed journal (Figure 5). Interestingly, Figure 6 shows that as the number of citations rises, the ratio of total citations to self-citations increases drastically, which is favourable in the calculation of measures like the SJR.

Figure 5.

The analysed journal SJR values 1999–2020, where the increasing trend also follows a larger number of published papers per year.

Figure 6.

The analysed journal total citations vs. self-citations ratio, where the increasing trend also follows a larger number of published papers per year.

Figure 7.

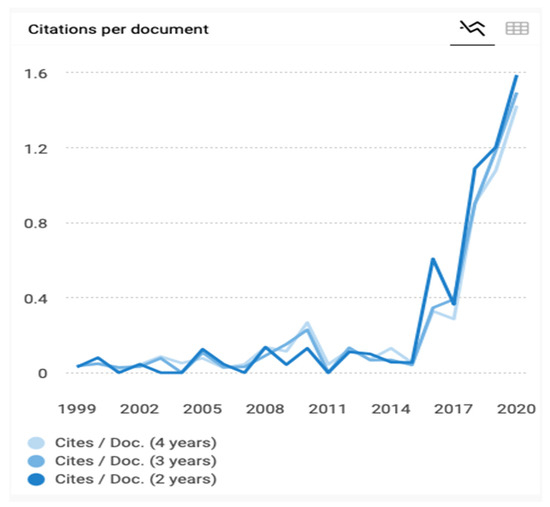

The journal citations vs. number of papers for two-, three-, and four-year periods. The two-year values correspond to the Clarivate Impact Factor (IF) measure. More about IF can be found in, e.g., [3].

More about the simultaneous increase in the number of publications and citations is revealed in Figure 7. In the stagnant period 1999–2015, the two-year citations/papers ratio was mostly lower than the three- and four-year ratios. This is expected for journals where the citation score is low and the number of papers is small, because most of the citations come from a small portion of the content and after a relatively long period. On the contrary, if the journal’s papers start to receive citations very fast, in the first or second year after publication, then most of them will receive the majority of their total citations in the two-year period, and that measure will exceed the three- and four-year values (Figure 7). Such a trend will last as long as the number of citations grows strongly, eventually stabilising around similar values for all three periods’ measures when the journal has reached (the next) limit of its citation/paper potential.
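The relation between the two-, three-, and four-year ratios can be illustrated with a minimal sketch. The function name and all numbers below are hypothetical and are not the journal’s data; the sketch assumes each ratio is the number of citations received in one census year by papers published in the preceding N years, divided by the number of those papers.

```python
def citations_per_paper(cites_by_pub_year, papers_by_pub_year, census_year, window):
    """Citations received in `census_year` by papers published in the
    preceding `window` years, divided by the number of those papers."""
    pub_years = range(census_year - window, census_year)
    cites = sum(cites_by_pub_year.get(y, 0) for y in pub_years)
    papers = sum(papers_by_pub_year.get(y, 0) for y in pub_years)
    return cites / papers if papers else 0.0


# Hypothetical data: 20 papers published in each year.
papers = {2017: 20, 2018: 20, 2019: 20, 2020: 20}

# Citations received in 2021, grouped by publication year of the cited paper.
slow_cites = {2017: 10, 2018: 8, 2019: 4, 2020: 2}     # older papers cited most
fast_cites = {2017: 10, 2018: 20, 2019: 40, 2020: 30}  # recent papers cited most

slow = {w: citations_per_paper(slow_cites, papers, 2021, w) for w in (2, 3, 4)}
fast = {w: citations_per_paper(fast_cites, papers, 2021, w) for w in (2, 3, 4)}

print("slow journal:", slow)
print("fast journal:", fast)
```

In the “slow” case the two-year ratio is the lowest of the three, while in the “fast” case it is the highest, which is the pattern described for the stagnant and the growing period, respectively.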

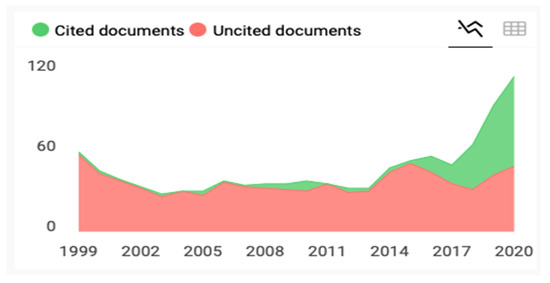

All the previous measures (Figure 5, Figure 6 and Figure 7) are also derived from another qualitative one, i.e., from the ratio of cited and uncited papers through the years (Figure 8). Indirectly, it influences the quantitative ranking measures in Scopus, namely SJR and especially Citescore (as a non-weighted value), because more sources have started to be cited. It could largely boost the weighted SJR if more of the cited sources are cited in higher-ranked journals, giving more “prestige” to the analysed journal. For Citescore, it is enough to receive more citations regardless of where they come from, and just a larger numerator with a constant denominator will launch the journal towards higher percentiles.

Figure 8.

The analysed journal cited vs. uncited documents ratio. Note the increasing trend reflected in fractions 48/39 = 1.23 in 2019, and 62/45 = 1.38 in 2020.

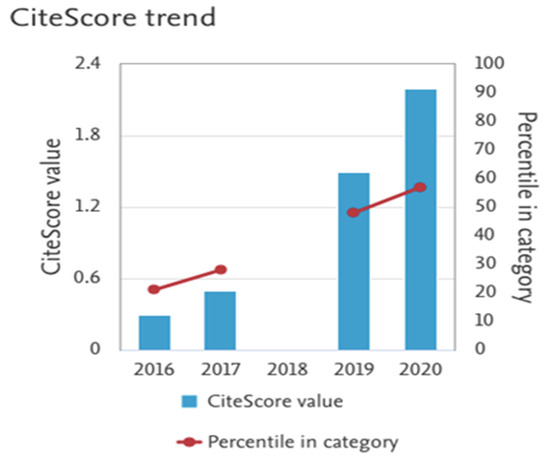

As mentioned, Citescore, as a uniform measure (e.g., [4,5]), is a simple calculation of citations in the last four years divided by the number of publications in the same period. As such, it favours journals that usually receive numerous citations, regardless of the ranks of the citing journals. It can slightly favour the journals located in the lower quartiles of some weighted measures, like the SJR. Both measures are expressed through percentiles and quartiles, which are usually somewhat lower for the SJR for journals that received citations from lower-ranked sources. This can be expected for the Q3–Q4 journals. Conversely, if such journals receive citations from Q1–Q2 sources, it strongly boosts their SJR, while Citescore is not sensitive to it. For example, previously low-ranked but proactive journals, like the analysed one, showed such a trend: in 2019, the journal was set in 4 × Q2 + 1 × Q3 based on SJR and 5 × Q3 based on Citescore. In 2020, it was set in 5 × Q2 based on SJR and 4 × Q2 + 1 × Q3 based on Citescore, and in 2021, it was 4 × Q2 + 1 × Q3 for both classifications. Obviously, as the journal increased in rank, the citations received from “higher-ranked” journals became worth less and “equalised” their contribution in the SJR and Citescore calculations. In this analysis, an increase in Citescore can be significant for several years (Figure 9), but after it reaches the limit of exponential growth, it will start to oscillate around some stagnant value (Figure 10).
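The different sensitivity of an unweighted and a weighted measure to the rank of the citing journals can be shown with a toy sketch. The quartile weights below are purely hypothetical and the weighted score is not the actual SJR algorithm, which is iterative and prestige-based; the unweighted score only mirrors the “citations divided by publications” idea of Citescore.

```python
# Hypothetical weights per quartile of the citing journal (illustration only).
QUARTILE_WEIGHT = {"Q1": 2.0, "Q2": 1.5, "Q3": 1.0, "Q4": 0.5}


def unweighted_score(citing_quartiles, n_documents):
    """Citescore-like ratio: every citation counts the same."""
    return len(citing_quartiles) / n_documents


def weighted_score(citing_quartiles, n_documents, weights=QUARTILE_WEIGHT):
    """Toy prestige-weighted ratio: a citation counts according to the
    (hypothetical) weight of the citing journal's quartile."""
    return sum(weights[q] for q in citing_quartiles) / n_documents


n_documents = 30
from_low_ranked = ["Q3"] * 30 + ["Q4"] * 30   # 60 citations from Q3-Q4 sources
from_high_ranked = ["Q1"] * 30 + ["Q2"] * 30  # 60 citations from Q1-Q2 sources

for label, cites in (("low-ranked", from_low_ranked), ("high-ranked", from_high_ranked)):
    print(label,
          "unweighted:", unweighted_score(cites, n_documents),
          "weighted:", weighted_score(cites, n_documents))
```

With the same number of citations, the unweighted score is identical in both cases, while the weighted one rises when the citations come from Q1–Q2 sources.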

Figure 9.

Citescore increasing trend for the analysed journal, which is reflected in higher percentiles.

Figure 10.

Citescore increasing trend for the analysed journal, values in 2011 (Citescore) and 2021 (Citescore tracker).

The correlation between Citescore value and assigned quartile is not straightforward, but the value of about 3.0 in most fields was, for the studied period, sufficient to maintain a Q2 ranking in many categories defined as being in the natural or technical sciences/fields. For example, in the field of pharmacology (2017), the values between 1.02 and 3.14 were enough for Q1 (e.g., [6]), with 24 journals selected in the calculation. The same category in 2020 for Q1 required Citescore values of 1.9–8.3, with 33 journals obtained by Citescore and a total of 47 included in the category. So, the trend on Citescore increased, as visible in Figure 9 and Figure 10 for the analysed journal in this study, which is characteristic for many journals that successfully maintain their Q1/Q2 ranks in Scopus.

Such a proactive increase of the Citescore value is especially characteristic of the journals ranked in Q1 (the 75th percentile and above). In this top league, the journal starts to compete with other journals that regularly and quickly receive a large number of citations compared with their annual number of papers. Obviously, it is much harder to keep a position in the higher than in the lower quartiles, and such a journal must not depend only on the readership of the local community but must be visible regionally, with a large number of active authors and readers willing to cite the journal’s content and publish such citations in their own papers in other Q1/Q2 journals.

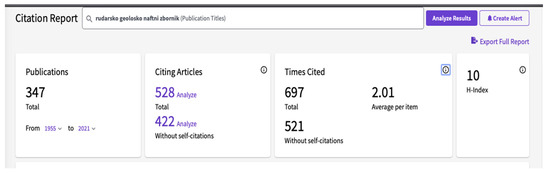

2.2. Clarivate Analytics—Web of Science Rankings

Web of Science (WoS) is the world-leading base of indexed scientific literature, including all its derivatives like the Journal Citation Report. The analysed journal is part of the lowest, so-called entry-level, WoS base, named the Emerging Sources Citation Index (ESCI). It is the only part of WoS where the Journal Impact Factor (JIF; e.g., [7]) is not calculated. A journal does not have to be included in the same number of categories in WoS and Scopus, and these two databases mostly do not use the same category names. The analysed title is part of two (vs. five in Scopus) categories, namely Mining Mineral Processing and Geosciences Multidisciplinary. Similarly to Scopus, WoS counts citations given in other WoS journals as well as in all other sources (WoS plus other sources), including self-citations. The number of citations and the h-index value are not the same in WoS and Scopus, but they are comparable (e.g., an h-index of 12 in Scopus corresponds to 10 in WoS, Figure 11). If ESCI is considered as “the waiting room” for numerous journals aspiring to be a part of the Science Citation Index Expanded, it is necessary to have a measure of how they improve their quality and increase world-wide readership. The number of citations per year, compared with publications in the same period, is one such measure, where an increasing trend of both values and of their ratio is a condition for being considered for promotion. Such graphs of citations vs. publications are shown for the analysed journal in Figure 12, clearly showing that increasing the number of papers per year leads to a larger number of citations, but not linearly and with some delay. This can be seen, e.g., in 2018, and especially in 2012 (Figure 12). The latter is a good example of the importance of reaching international visibility, readership, and authorship, because it was the year when one interesting and high-quality special issue was published but was read mostly within the local community and among its authors, without receiving any further significant citations.

Figure 11.

The number of papers and citations of the analysed journal in WoS, including h-index (October 2021).

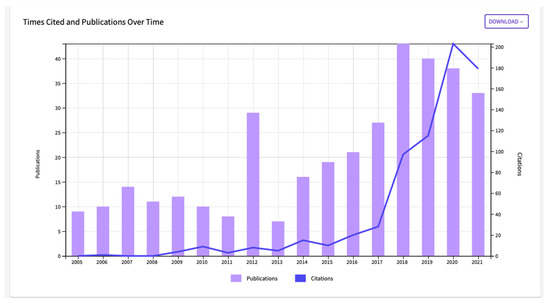

Figure 12.

Citations vs. number of publications per year of the analysed journal in the period 2005–2021.

The trend line in Figure 12 indicates that, at the current number of published papers and the current level of the authors’ further dissemination of their work, about 200 citations per year could be a limit (this analysis does not include November and December 2021).

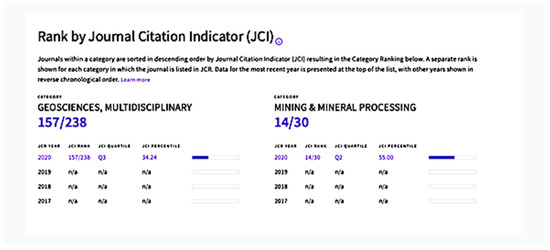

The Journal Citation Report (JCR) is the base that yields a lot of other information on a journal’s achievements through the years, which could greatly improve editorial knowledge and the quality of decision-making regarding the journal’s potential breakthroughs and achievements. Until 2021, the JCR was restricted only to journals in the Science Citation Index Expanded (SCIEx), Science Citation Index (SCI), and Current Contents (CC). They annually received the Journal Impact Factor (JIF) and were ranked in quartiles. However, 2021 was the first year when almost all ESCI journals were added to the JCR, still without a JIF, but with the calculation of a new measure, called the Journal Citation Indicator (JCI), and were divided into quartiles (Figure 13). The JCI is a normalised variable (e.g., [8]), like Scopus’ Source Normalized Impact per Paper (SNIP), which measures actual citations received relative to the citations expected for the serial’s subject field. The JCI value, according to Clarivate, can be easily interpreted and compared across disciplines, and is described as the WoS category normalised citation impact for articles published in a journal in the preceding three years. Although all normalised variables have some advantages, all of them are hidden behind complex equations, large data mining of commercial databases, and the selection of journal categories, and are not easily reproducible in independent research (e.g., [9]).

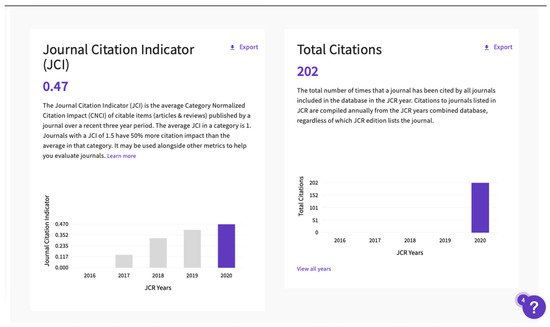

Figure 13.

The JCI value of the analysed journal (left) and the citations (right). In 2020, the JCI was first assigned to the journal, but there are also visible projections of that value in the past (2017–2019).

The average JCI value is set by default to 1, which means that a citation given in a journal with JCI = 1.5 has a 50% “stronger” influence than in a journal with JCI = 1. It is now easy to compare the number of citations in the analysed journal, not only the cumulative ones, but also by their influence on the other journals that received a citation in 2020 (Figure 13). Based on the JCI, the journals have been classified (Figure 14) into quartiles (like the ranking based on JIF, which is not a normalised measure, but total citations vs. documents in the last two years). As a result of this additional ranking system, almost all journals in WoS are now ranked based on the JCI or on both JCI + JIF, which is a great help for editors in following their improvements on an annual basis (Figure 13 and Figure 14), and for Clarivate teams in selecting new entries for premium databases like SCI or CC.

Figure 14.

Classification into WoS quartiles based on the JCI values for the analysed journal (take into consideration that some of these journals have IF and are also ranked into quartiles based on the JIF).

Finally, it is easy to observe the largest “weakness” of the JCI as a normalised measure. The so-called default value of 1 is mathematically neither the average nor the median that would place a journal at the Q2/Q3 boundary of its category, because even a journal with a significantly lower value (e.g., the analysed journal has 0.47, Figure 13) can reach Q2 and be ranked better than at least 50% of the other journals. This is the problem with all normalised measures in Scopus and WoS, which always favour some journal characteristics, neglecting the others or comparing journals assigned to multiple categories with those in just a single one.

3. Discussion about Citation Challenges and Reflection of Ranks

For any journal, it is favourable to be included in both world-leading indexing databases, namely Scopus and WoS, and to compare its scores and metrics in both (Table 2). The values in these databases will not be the same, but they are comparable and can indicate mutual trends. However, there are also mathematical differences in the calculations of the considered values such as SJR, SNIP, and JCI.

Table 2.

Comparison of scoring values of the analysed journal in Scopus and WoS.

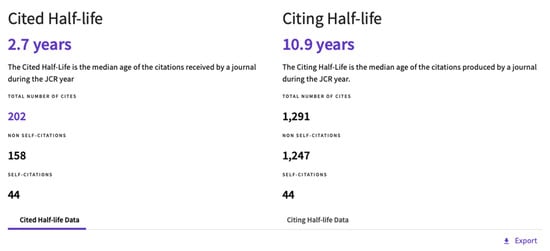

However, the JCR offers one very valuable piece of additional information, the so-called half-life of citations (e.g., [10]). The values for the analysed journal are given in Figure 15. The journal, which has grown fast in recent years, is characterised by relatively recently published papers being cited in other journals, with a cited half-life of only 2.7 years (two years and eight months), which is the average age of the first citation received in other journals after publication (Figure 15). On the contrary, the average age of papers cited in the analysed journal is significantly higher, at 10.9 years (10 years and 11 months), which means that the authors in general do not use the most recent sources (Figure 15).

Figure 15.

The (left) average age of the papers from the analysed journal cited in other journals and (right) the age of the papers cited in the analysed journal (2017–2019 period).
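The ratio of 0.25 quoted in the abstract follows directly from the two values in Figure 15. A minimal sketch of that arithmetic is given below; the variable and function names are illustrative, and the conversion to months is simple rounding, not a JCR procedure.

```python
def years_and_months(value_in_years):
    """Split a decimal number of years into whole years and rounded months."""
    whole_years = int(value_in_years)
    months = round((value_in_years - whole_years) * 12)
    return whole_years, months


cited_half_life = 2.7    # age of the journal's papers cited in other journals (Figure 15, left)
citing_half_life = 10.9  # age of the papers cited in the analysed journal (Figure 15, right)

ratio = cited_half_life / citing_half_life

print(years_and_months(cited_half_life))   # whole years and months for 2.7 years
print(years_and_months(citing_half_life))  # whole years and months for 10.9 years
print(round(ratio, 2))
```

A ratio below one means that the journal’s papers are cited at a younger age than the sources its authors cite themselves.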

The cited half-life is an important variable for journal citation measures (JIF, JCI, SJR, Citescore, SNIP), because all of them are based on a “recent” period, i.e., on papers published in the last two to four years that have received citations in the same period in other journals indexed in the same database; these are the citations credited to the journal. The secondary valuable information is the self-citation rate, because a journal with a high level of such citations could receive “lower” prestige in the calculation of weighted measures.

There are obviously many challenges and dilemmas for editors who aim to continuously increase the journal’s citations and rankings. Of course, the primary condition is honest editorial work, with integrity and respect for a fair and concise review procedure. The number of papers is directly connected only with such “variables” and attitudes, which gradually lead to increased visibility and popularity of the journal among a wider regional audience. Consequently, this will increase the number of submissions and probably the number of accepted papers. It does not necessarily lead to linearly more citations, i.e., maintaining the citations/papers ratio is not guaranteed. It largely depends on the editorial decision on which type of papers to favour and on the journal’s policy, which can favour younger researchers who could be considered the active authors of the coming years, or manuscripts from senior scientists who write less but could publish milestone papers, especially reviews, that tend to be highly cited. Such decisions and results are especially important for small journals, or so-called scholarly journals from the scientific periphery [11], where ranking is a crucial variable for receiving public funds or being excluded from such financial sources. The term “scientific periphery” can be defined with numerous variables, but [12] outlined three as follows: size, imbalance with scientific community, and communication barriers.

It is worth mentioning that publishing from or in the “scientific periphery” has its own challenges for authors and publishers alike. As [13] pointed out: “Academic publishing is one of the most unequal areas of the circulation of ideas”, describing some deviations using the example of tenure evaluations for research positions in Argentina. She also identified four publishing circuits in Latin America, dividing them into (1) mainstream international publishers, (2) transnational repositories and networks, (3) regional repositories and networks in the Southern Hemisphere, (4) national circuits. A much more pessimistic view was given by [14], expressing doubts that “small journals” can exist in the era of rankings, growing lists of publications, both printed and published online, as well as pressures to reach maximal benefits (scoring, rankings) for the authors’ parent institution. He concluded that such a journal can only serve the local community, filling the gap in some regional contributions, but cannot position itself as a “medium size/moderate profile” journal, as a step towards the large ones of commercial publishers.

The problem of a “small journal” is elaborated by [15], concluding that many elements can be taken into consideration when journals are put into classifications such as “financial, circulation, distribution, publication frequency and regularity, punctuality, language, quality of content, human resources, arbitration process, structure and content, visibility and presence in libraries, indexes and databases, Impact Factor, etc.”. He considered using such a term for publication in Latin America, particularly for biomedical journals.

Many journals, especially those in the earth sciences, are regionally oriented, which defines the origins of most of their citations, but also the editorial board composition. Interestingly, although those two parameters could be correlated, they often are not, as shown by the relation between Citescore value and the editorial board composition for 80 Hindawi journals, where rank correlation coefficients were not significant [5], and all editorial boards, considered according to continents, had largely positive skewness, i.e., could not be described by a normal distribution using a histogram or a formal chi-square test. Moreover, academic journals often have the majority of editorial board members from the publisher’s institution, especially editors, who often answer directly to the publisher’s representative for the journal’s activities. Of course, this does not, and cannot, compromise the editor’s independence but is rather related to the process of reporting the journal’s achievements to the supervisory body. For example, the analysed journal has a total of 12 members on the editorial board, five of whom come from the publisher’s institution. All five section editors also come from the same institution, where the editor-in-chief reports the journal’s activities once per year to the faculty council as the supervisory body. However, having an academic publisher yields one potential benefit: the journal is well-known to the institution’s members, who can cite its papers in other publications. Moreover, if some research groups continuously submit a part of their research results to the same journal, they will probably cite previously published papers but, on the other hand, this will probably increase the self-citation score of the journal.

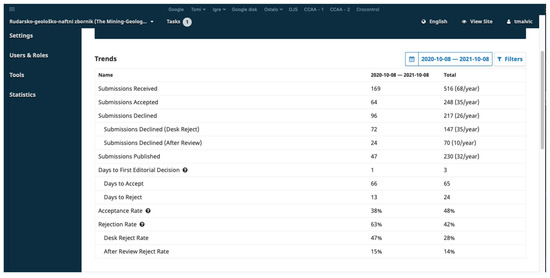

Furthermore, the review process can strongly, although indirectly, influence the number of published papers and their citation score. Generally, a review is composed of two independent stages: the editorial screening and the reviewers’ work (Figure 16). Each journal develops its own policy on how these processes act together, especially on the criteria for a rejection decision within the editorial screening (for instance, compliance with the authors’ instructions, plagiarism percentages and included content, respecting the deadlines of the submission process, and flawless communication with the corresponding author). An increased editorial rejection rate in the screening stage will certainly result in a more transparent and easier review process, enabling reviewers to concentrate on the content, not on the formatting of the manuscript (Figure 16). It could be concluded that the number of published papers is not the only critical variable for increasing citations; the content quality for the journal’s readers is critical as well. For authors who submit to the journal, there are two other important variables that can determine their willingness to submit another manuscript in the future or to recommend the journal as fair and fast: “days to first editorial decision” and “days to reject” (Figure 16). The first variable is important so that authors can be quickly informed if there is any problem and the manuscript will be rejected (e.g., due to the misapplication of instructions or a large percentage in the similarity check results). The second is crucial so that the authors receive clear and comprehensive rejection reviews, which they can (or need not) use to improve the research and submit it later elsewhere.

Figure 16. The rejection and acceptance rates (169 submissions, 96 rejected, 64 accepted) in the analysed journal (October 2020–October 2021); the totals of accepted and declined manuscripts and their rates do not add up to 100%, because the dataset also included active manuscripts in the OJS submitted before 8 October 2020.
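The arithmetic behind the Figure 16 caption can be checked with a short sketch. The three counts are taken from the caption; the helper name `rate` and the variable `gap` are illustrative assumptions, and the sketch makes no claim about what the remaining manuscripts are beyond the caption's own explanation.

```python
# Counts reported in the Figure 16 caption (October 2020-October 2021).
submissions = 169
rejected = 96
accepted = 64

def rate(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100.0 * count / total, 1)

rejection_rate = rate(rejected, submissions)
acceptance_rate = rate(accepted, submissions)

# Submissions covered by neither decision count (illustrative name, not from the source).
gap = submissions - rejected - accepted

print(f"rejection rate:  {rejection_rate}%")
print(f"acceptance rate: {acceptance_rate}%")
print(f"not covered by either count: {gap} ({rate(gap, submissions)}%)")
```

The two rates sum to less than 100%, which is consistent with the caption's remark that the totals do not close.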

The next challenge, virtually hidden but real, for all journals with a non-English primary name is how they are cited in the reference lists of published papers. The analysed journal clearly illustrates the case. The primary journal name is “Rudarsko-geološko-naftni zbornik”, which is used for registration in the ISSN centre (https://portal.issn.org/resource/ISSN/1849-0409 (accessed on 19 July 2022)) as well as in all the databases. Because the primary name is Croatian, some indexing databases slightly change it: in Scopus it is “Rudarsko Geolosko Naftni Zbornik”, and in WoS “RUDARSKO-GEOLOSKO-NAFTNI ZBORNIK”. In both cases, the databases’ searching/crawling algorithms will probably count citations with all those variants. Some journals use abbreviated publication titles in their reference lists. The ISSN centre registered the form “Rud.-geol.-naft. zb.” (ISO abbreviation), Scopus does not register abbreviations, and WoS uses both the ISO and the JCR version (“RUD-GEOL-NAFT ZB”). All such titles in the references are counted towards the journal citation score. However, authors often use the English translation of the journal’s title, which is “The Mining-Geological-Petroleum Engineering Bulletin”, with the simple abbreviation “MGPB”. Although the English translation is registered in the ISSN centre as a parallel title, it is not registered in the two largest citation databases, Scopus and WoS, because they use only the primary title for each source.

As a result, numerous citations of the journal are simply lost, i.e., not counted. The problem is even more pronounced when authors writing in English translate the journal’s name on their own, missing the parallel title recognised by the ISSN centre. Moreover, authors arbitrarily construct title abbreviations from both the Croatian and the English versions, resulting in many variants such as Rud. Zb., Min. Geol. Pet. Eng. Bull., and Min. Geol. Pet. Bull. Of course, none of these are counted as citations of the journal. One recent statistical analysis, performed by several members of the editorial staff, showed that the analysed journal lost (as a rough estimate) about 25% of its citations in 2021 due to the improper use of the journal’s title and abbreviations in the reference lists of papers published in secondary publications. In several instances, corrections were sent to Scopus and WoS through their “missing citations” channels. Such analyses certainly could not capture (even approximately) all missing or wrong citations, but they resolved at least dozens of them, including examples like:

- (a) One used in the Scopus missing citation process: a cited paper from “Rud.-geol.-naft. zb.” is “Mijić et al.: The influence of TiO2 and SiO2 Nanoparticles on filtration properties of drilling muds” (available at: https://hrcak.srce.hr/ojs/index.php/rgn/article/view/9522 (accessed on 19 July 2022)). It was cited in “Hassanzadeh et al.: Nano-alumina based (alpha and gamma) drilling fluid system to stabilize high reactive shales” (see ref. [8] in that paper), available at https://www.sciencedirect.com/science/article/pii/S2405656120300791 (accessed on 19 July 2022), as “Mining-Geology-Petroleum Engineering Bulletin”.

- (b) One used in the WoS missing citation process: a cited paper from “Rud.-geol.-naft. zb.” is “Vulin et al.: Slim-tube Simulation Model for Carbon Dioxide Enhanced Oil Recovery” (available at: https://hrcak.srce.hr/ojs/index.php/rgn/article/view/5651 (accessed on 19 July 2022)). It was cited in “Ginting et al.: CO2 MMP determination on L Reservoir by using CMG simulation and correlations” (see ref. 20 in that paper), available at https://iopscience.iop.org/article/10.1088/1742-6596/1402/5/055107/meta (accessed on 19 July 2022), as “The Mining-Geology-Petroleum Engineering Bulletin”.
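The title-variant problem can be illustrated with a small matching sketch. The registered forms are those quoted in the text for the ISSN centre, Scopus and WoS; the normalisation rule (strip diacritics, case and punctuation) and the names `normalise` and `is_registered` are assumptions made for illustration only. This is not the matching logic of Scopus or WoS, which is not described in this paper.

```python
import re
import unicodedata

# Title forms the text reports as registered in the ISSN centre, Scopus or WoS.
REGISTERED = [
    "Rudarsko-geološko-naftni zbornik",
    "Rudarsko Geolosko Naftni Zbornik",
    "RUDARSKO-GEOLOSKO-NAFTNI ZBORNIK",
    "Rud.-geol.-naft. zb.",
    "RUD-GEOL-NAFT ZB",
]

def normalise(title: str) -> str:
    """Strip diacritics, lower-case, and collapse punctuation runs to single spaces."""
    decomposed = unicodedata.normalize("NFKD", title)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return re.sub(r"[^a-z0-9]+", " ", without_marks.lower()).strip()

# Hypothetical lookup set built from the registered forms above.
REGISTERED_KEYS = {normalise(t) for t in REGISTERED}

def is_registered(cited_title: str) -> bool:
    """True if the cited title reduces to one of the registered forms."""
    return normalise(cited_title) in REGISTERED_KEYS

# Titles as they might appear in reference lists (variants quoted in the text).
for cited in [
    "Rud.-geol.-naft. zb.",
    "The Mining-Geology-Petroleum Engineering Bulletin",
    "Min. Geol. Pet. Eng. Bull.",
]:
    status = "registered form" if is_registered(cited) else "candidate lost citation"
    print(f"{cited!r}: {status}")
```

Under these assumptions, only spelling and punctuation variants of the primary title are recognised; translated titles and ad hoc abbreviations are flagged for manual checking, which mirrors the kind of cases sent through the “missing citations” channels.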

Many of the corrections sent to Scopus and WoS have since been applied to the citing publications as well; however, numerous others probably remain undetected. This problem is strongly connected with “communication barriers”, among which ref. [12] clearly pointed out the “language barrier” as the most important, emphasising that it is dangerous to replace the mother tongue in science with some other “commonly accepted” language because this can hinder the development of science in some ethnic communities. Consequently, although publication in English is the current standard for any journal that aims to be internationally visible, especially in the natural and technical sciences, the tradition of keeping the original journal name is very important for the local scientific community and its recognition worldwide. It is important to mention that this problem does not affect authors to the full extent, because a citation can still be attributed to them even if the journal name does not exist in the database as a primary title: the other data, such as year, article title, volume, issue, pages, and even the DOI, confirm that it is a published work. Such a citation will not be counted towards their h-index or total score in Scopus or WoS if the journal is not found, but it will be counted in, e.g., Google Scholar. However, the journal will not get its deserved recognition, and its score in Scopus and/or WoS will not increase. Unfortunately, this problem is much more common than the literature suggests and can affect very large geographical and political areas, i.e., it can be a problem anywhere English is not the official language. It can be especially visible in “old” non-English journals that existed before, or in the early days when, English became the lingua franca of science, and that have an established authorship, readership, and a recognisable title. Sometimes, editors may consider that changing the journal title into an English “form” would solve many of the visibility problems.
As described previously for the analysed case, the authors’ own, unofficial translation of the name “Rudarsko-geološko-naftni zbornik” caused the loss of citations, simply because the translation was not part of the databases’ title lists. However, even if such a “translation” from a non-English into an English form is made officially by the editor, through the channels of Elsevier or Clarivate, it will cause problems and a drop in the citation rate lasting at least three years, sometimes more, as ref. [16] showed on data taken from Thomson Scientific for the years 1994 and 1995.

A similar problem, but one much more important for authors’ citation scores, is the citation of papers written in languages other than English. Moreover, the problem of citing non-English titles is often closely connected with the citation of authors’ early papers, from the time when they did not publish (solely) in English. For example, ref. [17] described a sample of 23 journals in terms of availability and accessibility, based on citations of non-English literature, and raised the increasingly important questions of whether non-English materials can be cited in English-language journals and, if so, how they should be cited. Similarly, ref. [18] found that “journals written in other languages are a valuable repository for much locally relevant applied science” and objected to reviewers who insist on sources written in English as the only relevant ones, which risks misattributing credit for initial ideas and violating citation standards. Furthermore, ref. [19] examined both non-English and English journals and articles in physics and chemistry. In both cases, the average number of citations per paper was lower for the non-English journals, and for non-English papers compared with English ones within such journals.

4. Conclusions

“Small journal” can be used as a label for a journal published as a single title or as one of a few titles from its publisher. Such journals are most often academic journals, published by a single faculty or department. The “size” and the number of titles do not restrict their quality, but larger volumes always demand additional work from the editorial staff, especially the editors; at large publishers, this work is divided among many departments, boards, and editorial roles (often filled by professional librarians). Currently, journals depend heavily on their rankings in the world-leading indexing databases, Scopus and WoS. Unfortunately, this favours large editorial offices, which can more easily maintain a journal’s citation rate. Moreover, for a single journal, any correspondence with the databases puts the editors in a subordinate position, because they send requests with very limited information about the indexing process and face very long response times, especially when asking for missing citations to be checked or included. At large publishers, such inquiries are usually handled by entire departments, i.e., personnel in charge of bibliographic information, which eases the process of obtaining a response from the databases, especially if the portfolio includes numerous Q1/Q2 journals.

Furthermore, high citation rates and quartile rankings attract more authors, especially those with intensive publication activity, often in international research groups. Consequently, it is very hard for Q3/Q4 journals, or journals not yet ranked, to attract such papers and progress to higher quartiles. This is especially true if a journal is published in a relatively small local scientific community with a small number of scientists, who are the primary base for authorship and for future citations in secondary publications.

The primary goal of editors of a small journal (often a journal from the “scientific periphery”) is to attract more manuscripts with international authorship, preferably with authors from two or more countries. Their focus could often be on manuscripts derived from (post)doctoral research and supervised by mentors or senior scientists from the same research group. Such author groups can be expected to remain active in the future and to cite their work in forthcoming papers.

The quality of the manuscripts passed on to the review process can be greatly improved by high-quality editorial screening, which makes the review process more transparent and fairer to reviewers. It can also prevent high rates of author self-citation in the journal, which can lead to more favourable citation/self-citation ratios in journal measures like Citescore or the Impact Factor. Citing the journal under its proper, primary title as registered in the databases is crucial, and ensuring this is one of the milestones of authors’, editorial, and further post-publication work. All the mentioned procedures and recommendations can help small journals participate successfully in the ranking procedures with significant citation scores and reach or maintain a Q1/Q2 rank in the indexing databases.

Journals that share some of the trends and values shown in this analysis clearly strive to reach the upper quartiles in any indexing database. There are two foundations that they need to maintain. One is a stable and productive local research community, including numerous senior scientists who are active in scientific publishing and consider the journal one of their main publication channels. The second is to attract a sufficient number of high-quality international authors and collaboration groups who publish successfully in the journal, and to maintain the quality of the process that attracted them so that they submit new manuscripts. This enables the journal to compete in a world of scientific publishing that probably favours top journals, which attract the most submissions and whose papers are often cited “as the most relevant sources” only because they are published in such journals, and not because of their content.

Finally, authors’ own translation of the journal’s primary title (the one recognised in WoS and Scopus) into English will decrease its citation scores, simply because such references will not be counted as citations of the journal. This problem can affect any journal from a non-English-speaking country that decides to keep its original title; i.e., it will affect many journals from Central or Eastern Europe, Latin America, Africa, or Asia, probably because they started to publish scholarly articles before English became the lingua franca of science.

Author Contributions

Conceptualization, T.M., L.H., T.K. and K.P.; methodology, T.M., T.K., Z.K. and K.P.; validation, T.M., L.H. and B.P.; writing—original draft preparation, T.M. and U.B.; writing—review and editing, Ž.A., G.B. and J.I.; visualization, U.B., J.I. and Z.K.; supervision, T.M., Ž.A. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank the anonymous reviewers and editors for their generous and constructive comments that have improved this paper. This research was partially carried out in the projects “Mathematical researching in geology VII” (led by T. Malvić) as well as “Scientometric analysis of the faculty’s journal” (led by T. Malvić).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Dorta-González, P.; Dorta-González, M.I. Comparing journals from different fields of science and social science through a JCR subject categories normalized impact factor. Scientometrics 2013, 95, 645–672.

2. Edgar, B.D.; Willinsky, J. A Survey of the Scholarly Journals Using Open Journal Systems. Sch. Res. Commun. 2010, 1, 020105.

3. Archambault, É.; Larivière, V. History of the journal impact factor: Contingencies and consequences. Scientometrics 2009, 79, 635–649.

4. James, C.; Colledge, L.; Meester, W.; Azoulay, N.; Plume, A. CiteScore metrics: Creating journal metrics from the Scopus citation index. Learn. Publ. 2019, 32, 367–374.

5. Okagbue, H.I.; Atayero, A.A.; Adamu, M.O.; Bishop, S.A.; Oguntunde, P.E.; Opanuga, A.A. Exploration of editorial board composition, Citescore and percentiles of Hindawi journals indexed in Scopus. Data Brief 2018, 19, 743–752.

6. Fernandez-Llimos, F. Differences and similarities between Journal Impact Factor and CiteScore. Pharm. Pract. 2018, 16, 1282.

7. Larivière, V.; Sugimoto, C.R. The Journal Impact Factor: A Brief History, Critique, and Discussion of Adverse Effects. In Springer Handbook of Science and Technology Indicators; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–33. Available online: https://link.springer.com/chapter/10.1007/978-3-030-02511-3_1 (paid access) and https://arxiv.org/ftp/arxiv/papers/1801/1801.08992.pdf (arXiv version, free access) (accessed on 19 July 2022).

8. Huh, S. The Journal Citation Indicator has arrived for Emerging Sources Citation Index journals, including the Journal of Educational Evaluation for Health Professions, in June 2021. J. Educ. Eval. Health Prof. 2021, 18, 20.

9. Colledge, L.; de Moya Anegón, F.; Guerrero Bote, V.; López Illescas, C.; El Aisati, M.; Moed, H. SJR and SNIP: Two new journal metrics in Elsevier’s Scopus. Ser. J. Ser. Community 2010, 23, 215–221.

10. Davis, P.M.; Cochran, A. Cited Half-Life of the Journal Literature. arXiv 2015, arXiv:1504.07479.

11. Maričić, S. Časopisi znanstvene periferiji – Prema zajedničkoj metodi vrednovanja njihove znanstvene komunikabilnosti (Scholarly journals from the scientific periphery – towards a common methodology for evaluating their scientific communicability; in Croatian with English abstract). Vjesn. Bibl. Hrvat. 2007, 50, 62–78. Available online: https://hrcak.srce.hr/16941 (accessed on 19 July 2022).

12. Maričić, S. Evaluating periodicals at the scientific periphery. IASLIC Indian Assoc. Spec. Libr. Inf. Cent. Bull. 1993, 38, 1–16.

13. Beigel, F. Publishing from the periphery: Structural heterogeneity and segmented circuits. The evaluation of scientific publications for tenure in Argentina’s CONICET. Curr. Sociol. 2014, 62, 743–765.

14. Donovan, S.K. Death of a Small Journal? J. Sch. Publ. 2013, 44, 289–293.

15. Stegemann, H. “Small Journals”, indexes and ecancer: An Opportunity for Latin America. Ecancer 2013, 7, ed30.

16. Tempest, D. The effect of journal title changes on impact factors. Learn. Publ. 2005, 18, 57–62.

17. Fung, I.C. Citation of non-English peer review publications – Some Chinese examples. Emerg. Themes Epidemiol. 2008, 5, 12.

18. Lazarev, V.S.; Nazarovets, S.A. Don’t dismiss citations to journals not published in English. Nature 2018, 556, 174.

19. Liang, L.; Rousseau, R.; Zhong, Z. Non-English journals and papers in physics and chemistry: Bias in citations? Scientometrics 2013, 95, 333–350.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).