Artificial Intelligence in Dental Education: A Scoping Review of Applications, Challenges, and Gaps

Abstract

1. Introduction

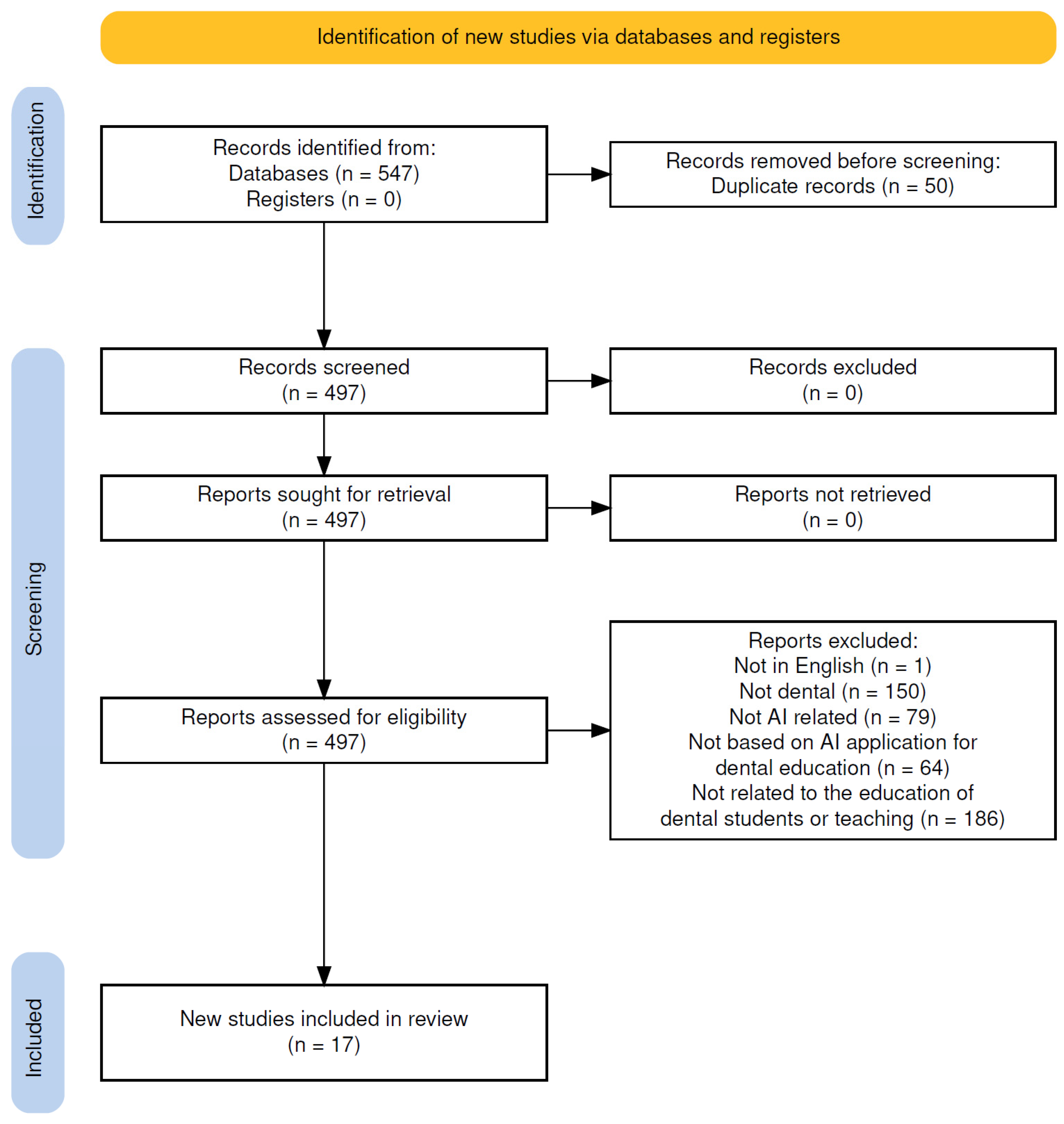

2. Materials and Methods

2.1. Research Question

2.2. Search Strategy

2.3. Eligibility Criteria

Inclusion criteria:

- English-language empirical studies

- Direct evaluation of AI in teaching, feedback, or dental student assessments

Exclusion criteria:

- Opinion pieces or perception-based surveys

- Studies unrelated to AI or dental education

- Reports on AI solving exams or general curriculum reform

- Studies not directly related to the education of dental students or their teaching

2.4. Data Charting and Synthesis

3. Results

3.1. AI in Preclinical Training

3.2. AI in Clinical, Diagnostic Training, and Radiographic Interpretation

3.3. AI as an Assessment Tool and Feedback System

3.4. AI in Content Generation

3.5. Key Findings

3.6. Identified Gaps

3.7. Challenges

| Category of AI Domain Classified by This Scoping Review | Author, Year, Location | Aim | Design | Strengths | Limitations | Findings | Scoping Review Conclusions |

|---|---|---|---|---|---|---|---|

| AI in Preclinical Training | Choi et al., 2023, Australia [18] | To develop and evaluate an interactive AI system for assessing student performance in endodontic access cavity preparation and provide immediate feedback. | Observational study; 79 fourth-year dental students participated, but only 44 completed the post-intervention evaluation survey. | Development and implementation of a novel AI assessment system. Real-time, individualized feedback for skill refinement. Use of a structured Likert-scale survey to gather student feedback. | Only 44 of 79 participants (55.7%) completed the post-survey. Subjective self-reported feedback; no objective skill performance measure. Single-center study limits generalizability. | Students found the AI feedback system helpful for identifying and correcting errors. High satisfaction was reported for system usability and self-directed learning support. Most students preferred combining AI feedback with instructor feedback. | The AI-based feedback system was positively received and promoted student engagement in access cavity preparation. However, further validation is required using objective outcome measures and control groups. Bias Risk: Moderate due to reliance on self-reported outcomes and incomplete survey responses. |

| AI in Preclinical Training | Mahrous et al., 2023, USA [19] | To compare student performance in removable partial denture (RPD) design using traditional methods versus the AiDental AI and game-based learning software, and to assess student perceptions of the AI tool. | Quasi-experimental study; two-group comparison (AI-game group vs. control group); n = 56 (28 students per group). | Blinded assessment of practical exam. Integration of AI and gamified learning. Direct evaluation of student perception. | Sample size: 73 students (n = 36 intervention, n = 37 control). Short intervention period (2 weeks before testing). Survey-based perception data without long-term follow-up. | The AiDental group outperformed the control group in RPD design accuracy and completeness (statistically significant improvement). Survey results indicated positive student perception, with high ratings for engagement and usefulness. | The integration of AI and game-based learning improved short-term performance in RPD design and was well-received by students. However, the short duration and reliance on a single institution limit generalizability. Bias Risk: Moderate due to the short intervention period, absence of long-term outcomes, and reliance on self-reported perceptions. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Or et al., 2024, Australia [20] | To evaluate the feasibility and effectiveness of an AI-powered chatbot for improving patient history-taking skills among dental students. | Pilot observational study using a single cohort of third-year dental students interacting with an AI chatbot for simulated history-taking. | High engagement: 100% student participation with the chatbot compared to 2/13 in traditional tutorials Realistic simulation using GPT-3.5-based patient responses Scalability and accessibility for repetitive practice | Small sample size (n = 13 students) No control group or pre-post performance measures Subjective outcome assessment via perceived usefulness | Students reported increased engagement and perceived improvement in competence after using the chatbot Staff supported the tool’s educational value and future use potential Highlighted potential for expanding to early-year students and other case types | The chatbot was positively received and showed promise as a supplementary tool for dental education. However, due to the small sample size and lack of control group, findings must be interpreted cautiously. Bias Risk: High, due to subjective measures, small sample, and lack of comparative analysis. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Aminoshariae et al., 2024, USA [21] | To explore how artificial intelligence (AI) can be integrated into endodontic education and identify both its potential benefits and limitations. | Scoping review of 35 relevant studies, conducted through electronic and grey literature search across databases including MEDLINE, Web of Science, and Cochrane Library up to December 2023. | Comprehensive literature search strategy including grey literature and ongoing trials. Categorized AI applications into 10 key educational areas, providing structured thematic synthesis. Inclusion of multi-institutional and international author expertise. | No quality appraisal of included studies, limiting the ability to weigh strength of evidence. Possible selection bias due to broad inclusion criteria and lack of systematic review methodology. | Identified 10 domains where AI may enhance endodontic education: radiographic interpretation, diagnosis, treatment planning, case difficulty assessment, preclinical training, advanced simulation, real-time guidance, autonomous robotics, progress tracking, and educational standardization. AI supports individualized feedback, structured simulation, and automated decision support. The review emphasizes that integrating AI will shift the traditional pedagogy of endodontics. | AI holds promise to revolutionize endodontic education through personalized learning, diagnostic assistance, and simulation-based training. However, educators must acknowledge its current limitations and ensure its responsible implementation. Bias Risk: Moderate due to lack of critical appraisal of included studies and variability in evidence quality across reviewed articles. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Ayan et al., 2024, Turkey [22] | To assess the diagnostic performance of dental students in identifying proximal caries lesions before and after training with an AI-based deep learning application. | Randomized experimental study involving pre- and post-testing of two student groups, one receiving AI-based training. | Use of a validated deep learning algorithm (YOLO—You Only Look Once) tailored for caries detection. Expert-labeled dataset (1200 radiographs) ensures strong ground truth reliability. Comparative pre- and post-intervention design increases internal validity. | Small participant sample size (n = 40 dental students). Only one institution involved, limiting generalizability. Increased post-test labeling time in AI-trained group may indicate increased complexity or cognitive load. | AI training led to statistically significant improvement in accuracy, sensitivity, specificity, and F1 scores (p < 0.05). No significant difference in precision score. Labeling time increased in the AI-trained group. | Training with AI significantly enhanced students’ ability to detect enamel and dentin caries on radiographs. Although longer labeling time was observed post-training, the educational benefit suggests that AI can serve as a valuable tool for radiographic interpretation training. Bias Risk: Moderate due to small sample size, single-site recruitment, and lack of long-term follow-up. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Chang et al., 2024, USA [23] | To evaluate (1) the efficiency and accuracy of dental students performing full-mouth radiograph mounting with and without AI assistance, and (2) student perceptions of AI’s usefulness in dental education. | Randomized controlled experimental study with two student groups: manual vs. AI-assisted radiograph mounting. Pre- and post-study surveys were also administered. | Real-world clinical simulation with third-year students. Inclusion of both objective (time and accuracy) and subjective (student perceptions) outcome measures. Random allocation of 40 participants enhances internal validity. | Small sample size (n = 40 dental students). Single institution study. AI assistance led to reduced accuracy, suggesting issues with over-reliance on automation. No long-term assessment of retention or skill transfer. | AI-assisted group completed radiograph mounting significantly faster (p < 0.05). However, the AI-assisted group demonstrated significantly lower accuracy than the manual group (p < 0.01). Student confidence and perceptions of AI did not differ significantly between groups, before or after the intervention. | While AI assistance improved efficiency, it negatively impacted accuracy, indicating that premature automation may hinder skill development in novice learners. Students maintained neutral perceptions toward AI, highlighting the need for careful integration of AI tools in early dental education. Bias Risk: Moderate due to small sample size, lack of blinding, and single-site scope. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Prakash et al., 2024, India [24] | To develop and evaluate DentQA, a GPT-3.5-based dental semantic search engine aimed at improving information retrieval for dental students, while addressing issues like hallucination, bias, and misinformation. | Tool development and validation study using both non-human (BLEU score) and human performance metrics, including accuracy, hallucination rate, and user satisfaction. | Combines objective (BLEU score) and subjective (human evaluation) performance assessments. Tailored specifically to dental education content. Compared directly to GPT-3.5 baseline for benchmarking. Evaluated on 200 questions across multiple categories. | Total of 4 human evaluators only and 200 questions evaluated. Lack of real-world classroom or clinical implementation. Evaluations limited to document-based Q&A rather than broader clinical decision-making tasks. | DentQA outperformed GPT-3.5 in accuracy (p = 0.00004) and significantly reduced hallucinations (p = 0.026). Demonstrated consistent performance across question types (χ² = 13.0378, p = 0.012). BLEU Unigram score of 0.85 confirmed linguistic reliability. High user satisfaction with an average response time of 3.5 s. | DentQA provides a promising AI-based solution for reliable and efficient information retrieval in dental education. Its reduced hallucination rate and consistent performance across question types support its potential as a domain-specific educational tool. Further testing in real academic settings is recommended. Bias Risk: Moderate due to a limited number of evaluators and absence of practical deployment data. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Qutieshat et al., 2024, Oman [25] | To compare the diagnostic accuracy of dental students (junior and senior cohorts) with that of a modified ChatGPT-4 model in endodontic assessments related to pulpal and apical conditions, and to explore the potential role of AI in supporting dental education. | Comparative observational study using seven standardised clinical scenarios. Diagnostic accuracy was measured for junior and senior dental students versus ChatGPT-4, with expert-derived gold standards as reference. | Included a large student sample (n = 109). Included junior (n = 54) and senior (n = 55) groups for subgroup analysis. Used gold-standard expert assessments for accuracy comparisons. Applied robust statistical analysis (Kruskal-Wallis and Dwass-Steel-Critchlow-Fligner tests). Clearly delineated performance metrics for AI vs. students. | Scenarios limited to seven predefined cases may not generalize across broader clinical complexity. No evaluation of long-term retention or educational impact post-AI exposure. Does not assess student reasoning or process—only final answer accuracy. | ChatGPT-4 achieved 99.0% accuracy, outperforming senior students (79.7%) and juniors (77.0%). Median diagnostic accuracy: ChatGPT = 100%, Seniors = 85.7%, Juniors = 82.1%. Statistically significant difference between ChatGPT and both student groups (p < 0.001). No statistically significant difference between senior and junior groups. | AI, specifically ChatGPT-4, demonstrated superior diagnostic performance compared to dental students in endodontic assessments. The findings highlight the potential of AI as an educational support tool, particularly for reinforcing diagnostic standards. However, caution is advised regarding overreliance, which could hinder the development of students’ critical thinking and clinical decision-making skills. Further studies are needed to explore ethical and regulatory implications for broader implementation in dental education. Bias Risk: Low due to robust sample size, expert-defined gold standard, and appropriate statistical testing. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Rampf et al., 2024, Germany [26] | To assess the impact of two feedback methods, elaborated feedback (eF) and knowledge of results (KOR), on radiographic diagnostic competencies in dental students, and to evaluate the diagnostic accuracy of an AI system (dentalXrai Pro 3.0) as a potential educational aid. | Randomized controlled trial (RCT). Fifty-five 4th-year dental students were randomly assigned to receive either eF or KOR while interpreting 16 virtual radiological cases over 8 weeks. Student diagnostic performance was assessed and compared, and the performance of the AI system was independently evaluated on the same tasks. | Randomized design with two intervention arms. Use of real radiographic cases and multiple diagnostic criteria (caries, apical periodontitis, image quality). Objective performance metrics (accuracy, sensitivity, specificity, ROC AUC). Inclusion of AI system benchmarking with near-perfect reported performance. Statistical comparisons conducted with appropriate tests (Welch’s t-test, ROC analysis). | Sample size: 55 students. Some outcomes showed no significant differences between groups. Short duration (8 weeks) may not reflect long-term learning retention. No detailed analysis of how students interacted with AI or feedback content quality. No explicit control group without feedback or AI to isolate effects. | Students receiving elaborated feedback (eF) performed significantly better than those receiving only knowledge of results (KOR) in detecting enamel caries (↑ sensitivity, p = 0.037; ↑ AUC, p = 0.020), detecting apical periodontitis (↑ accuracy, p = 0.011; ↑ sensitivity, p = 0.003; ↑ AUC, p = 0.001), and assessing periapical image quality (p = 0.031). No significant differences between groups were found for other diagnostic tasks. The AI system (dentalXrai Pro 3.0) showed near-perfect diagnostic performance. Enamel caries: Accuracy 96.4%, Sensitivity 85.7%, Specificity 7.4%. Dentin caries: Accuracy 98.8%, Sensitivity 94.1%, Specificity 100%. Overall: Accuracy 97.6%, Sensitivity 95.8%, Specificity 98.3%. | Elaborated feedback significantly enhances diagnostic performance in selected radiographic categories compared to basic feedback. The AI system demonstrated near-perfect diagnostic capabilities, indicating strong potential as an alternative to expert-generated feedback in educational settings. However, the limited specificity in enamel caries AI performance (0.074) warrants cautious interpretation. The randomized design and direct AI benchmarking support the study’s reliability. Bias Risk: Low due to randomized controlled design, clear metrics, and direct AI-human comparison with transparent reporting. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Schoenhof et al., 2024, Germany [27] | To investigate whether synthetic panoramic radiographs (syPRs), generated using GANs (StyleGAN2-ADA), can be reliably distinguished from real radiographs and evaluate their potential use in teaching, research, and clinical education | Experimental study with survey and test-retest reliability evaluation. | Included both medical professionals (n = 54) and dental students (n = 33). Used a controlled number of real (20), synthetic (20), and control (5) PRs. Assessed image interpretation accuracy, perceived image quality, and item agreement. Included test-retest reliability analysis. | Test-retest reliability was low (Cohen’s kappa = 0.23). Sample size modest (total n = 87), particularly within student subgroup (n = 33). Study used a limited set of images for evaluation (45 total). | Overall sensitivity for identifying synthetic images was 78.2%; specificity was 82.5%. Professionals: sensitivity 79.9%, specificity 82.3%. Students: sensitivity 75.5%, specificity 82.7%. Median image quality score: 6/10. Median rating for profession-related importance: 7/10. 11 out of 14 radiographic items showed agreement with expected interpretation. | The study demonstrates that GANs can generate highly realistic panoramic radiographs that are often indistinguishable from real ones by professionals and students. These synthetic images have educational and research value without privacy concerns. Bias Risk: Moderate due to small sample size, low retest reliability, and subjective evaluation metrics. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Schropp et al., 2023, Denmark [28] | To evaluate whether training with AI software (AssistDent®) improves dental students’ ability to detect enamel-only proximal caries in bitewing radiographs and to assess whether proximal tooth overlap interferes with caries detection. | Randomized controlled study with two assessment sessions and reference-standard comparison. | Random allocation of 74 dental students to control and test groups. Use of validated software (AssistDent®). Two-session longitudinal structure allowed for measuring learning progression. Consideration of radiographic overlap as a variable influencing accuracy. | Only enamel-only caries assessed; may not generalize to more advanced lesions. Somewhat limited by moderate sample size (n = 74). AI assistance was only used in the first session | Session 1: No significant difference in positive agreement between control (48%) and test (42%) groups (p = 0.08). Test group had higher negative agreement (86% vs. 80%, p = 0.02). Session 2: No significant difference between groups. Within-group improvement: Test group improved in positive agreement over time (p < 0.001); control group improved in negative agreement (p < 0.001). Tooth overlap occurred in 38% of surfaces and significantly reduced diagnostic agreement (p < 0.001) | AI software did not significantly improve students’ diagnostic performance in detecting enamel-only proximal caries. However, both groups showed improvement over time. Tooth overlap negatively affected diagnostic accuracy regardless of AI use. Bias Risk: Low due to randomized design, adequate student sample, objective outcome comparison to reference standard. |

| AI in Clinical, Diagnostic Training, and Radiographic Interpretation | Suárez et al., 2022, Spain [29] | To evaluate dental students’ satisfaction and perceived usefulness after interacting with an AI-powered chatbot simulating a virtual patient (VP) to support development of diagnostic skills. | Descriptive cross-sectional study using a satisfaction survey following several weeks of interaction with the AI virtual patient. | Large and representative sample (n = 193, surpassing minimum of 169). Inclusion of students in two clinical years (4th and 5th year), allowing comparisons across experience levels. Gender-balanced data reporting. Integration of real student interaction with an AI tool over several weeks, enhancing ecological validity. | No control group; findings based solely on self-reported satisfaction rather than performance improvement. Cross-sectional design limits inference on causality or longitudinal impact. Outcomes focused on perception rather than objective diagnostic skill gain. | Overall high student satisfaction with the AI chatbot (mean score 4.36/5). Fifth-year students rated the tool more positively than fourth-year students. Students who arrived at the correct diagnosis through the chatbot interaction gave higher satisfaction ratings. Positive student perception supports the tool’s potential value for repeated diagnostic practice in a safe environment. | The AI-based virtual patient chatbot was well-received by students and viewed as a useful supplement for diagnostic training. It provided a cost-effective, space-saving educational solution that promotes engagement through natural language processing. Bias Risk: Moderate due to self-reported, perception-only data and absence of a comparator group, though the sample size and survey structure were strong. |

| AI as an Assessment Tool and Feedback System | Kavadella et al., 2024, Cyprus [30] | To evaluate the educational outcomes and student perceptions resulting from the real-life implementation of ChatGPT in an undergraduate dental radiology module using a mixed-methods approach. | Mixed-methods study involving a comparative learning task between two groups: one using ChatGPT and the other using traditional internet-based research. Data collection included knowledge exam scores and thematic analysis of student feedback. | Balanced sample size (n = 77) with well-structured group assignments. Combination of quantitative and qualitative data enhances depth of analysis. Statistical comparison of examination scores provides objective outcome data. | Small sample size per group (39 vs. 38), reducing generalizability. Short-term evaluation; no long-term retention or application assessment. Potential novelty bias influencing students’ enthusiasm for AI use. Single-institution study, limiting external applicability. | ChatGPT group outperformed traditional research group in knowledge exams (p = 0.045). Students highlighted benefits: fast response, user-friendly interface, broad knowledge access. Limitations identified: need for refined prompts, generic or inaccurate information, inability to provide visuals. Students expressed readiness to adopt ChatGPT in education, clinical practice, and research with appropriate guidance. | ChatGPT enhanced students’ performance in knowledge assessments and was positively received for its utility and adaptability in dental education. Students demonstrated critical awareness of its limitations and used it creatively. Bias Risk: Moderate due to short study duration, institution-specific sample, and self-reported feedback, although objective performance data strengthen credibility. |

| AI as an Assessment Tool and Feedback System | Jayawardena et al., 2025, Sri Lanka [31] | To evaluate the effectiveness and perceived quality of feedback provided by an AI-based tool (ChatGPT-4) versus a human tutor on dental students’ histology assignments. | Comparative study analyzing 194 student responses to two histology questions. Feedback from ChatGPT-4 and human tutors was assessed using a standardized rubric. Students rated feedback on five dimensions, and an expert reviewed 40 randomly selected feedback samples. | Large sample size (n = 194) enhances reliability. Use of both student perception and expert evaluation provides multidimensional insight. Standardized rubric ensures consistency across assessments. Expert-blinded review of feedback enhances objectivity. | Focused only on histology questions; may not generalize to other areas of dentistry. Limited to one institution and subject area. Potential bias in student preferences due to familiarity with human feedback. | No significant score difference between AI and human feedback for one question; AI scored significantly higher for the second and overall scores. Students perceived no significant difference in understanding, critical thinking, relevance, or clarity, but preferred human feedback for comfort. Expert evaluation showed AI was superior in mistake identification, clarity (p < 0.001), and suggestions for improvement (p < 0.001). | ChatGPT-4 demonstrated effectiveness in providing clear and constructive feedback, performing comparably or better than human tutors based on expert analysis. Student preferences leaned toward human feedback due to emotional comfort, not quality. Bias Risk: Moderate; while expert validation strengthens findings, the emotional bias in student responses and single-discipline scope limit broader generalization. |

| AI as an Assessment Tool and Feedback System | Ali et al., 2024, Qatar [32] | To explore the accuracy of ChatGPT in responding to various healthcare education assessment formats and discuss its implications for undergraduate dental education. | Exploratory study using 50 independently constructed questions spanning 5 common assessment formats (MCQs, SAQs, SEQs, true/false, fill-in-the-blank) and several academic writing tasks. Each format included 10 items. ChatGPT was used to attempt all questions. | Broad assessment coverage with 50 custom-developed items across multiple formats. Realistic integration of AI use in student-assigned tasks. Addresses both formative (e.g., feedback reports) and summative (e.g., MCQs) assessment types. Explores qualitative and quantitative performance. | ChatGPT’s inability to process image-based questions limits generalizability to clinical scenarios. Only the free version of ChatGPT was tested. Lack of benchmarking against student or educator performance. Critical appraisal outputs from ChatGPT were found to be weaker. | ChatGPT responded accurately to most knowledge-based question types (MCQs, SAQs, SEQs, true/false, fill-in-the-blank). ChatGPT struggled with image-based questions and critical appraisal tasks. Generated reflective and research responses were mostly satisfactory. Word count limitations noted with the free version. | ChatGPT shows strong potential in supporting healthcare and dental education through accurate responses to diverse assessment types. However, its limitations in processing visual data and critical reasoning tasks highlight the need for educators to redesign assessments and learning approaches to integrate AI responsibly. Bias Risk: Moderate; custom-designed questions ensure targeted evaluation, but the lack of comparative analysis with human responses and reliance on only text-based questions limit generalizability. |

| AI in Content Generation for the Dental Field | Aldukhail et al., 2024, Saudi Arabia [33] | To evaluate and compare the performance of two large language models, ChatGPT 3.5 and Google Bard, in the context of dental education and research support. | Comparative evaluation using seven structured dental education-related queries assessed blindly by two reviewers. Scoring was based on pre-defined metrics and analysed using Wilcoxon tests for statistical significance. | Direct head-to-head comparison of two major LLMs in a dental education context. Multi-domain evaluation covering exercises, simulations, literature critique, and tool generation. Use of blind reviewers and statistical analysis to reduce subjective bias. Practical relevance for educators seeking to integrate LLMs in curriculum design. | Only ChatGPT 3.5 and Bard were tested, excluding newer or alternative models. Evaluation was based on a limited number of prompts (n = 7), which may not generalise across broader contexts. Reviewer subjectivity, despite blinding, could still influence scoring outcomes. The study does not evaluate student learning outcomes following LLM use. | ChatGPT 3.5 outperformed Google Bard in generating exercises, simulations, and assessment tools with higher clarity, accuracy, and specificity. Google Bard showed strength in retrieving real research articles and critiquing them effectively. Statistically significant differences (p ≤ 0.05) were found in scores for domains 1 (educational role) and 3 (simulations with treatment options). Both tools exhibited variability, highlighting the importance of user oversight in educational use. | The study demonstrates that generative language models like ChatGPT and Bard can support dental education through simulation, content creation, and literature analysis. However, each model has distinct strengths and weaknesses, and critical judgment is essential when incorporating them into educational practice. Bias Risk: Moderate; while the methodology includes blinding and structured scoring, the limited scope of prompts and model versions tested reduces the generalisability of findings. |

| AI in Content Generation for the Dental Field | Katebzad et al., 2024, USA [34] | To evaluate whether publicly available generative AI platforms can develop high-quality, standardized, and clinically relevant simulated pediatric dental cases for use in predoctoral education, including OSCE-style assessments. | Pilot comparative study using standardized prompts across three de-identified AI platforms to generate pediatric dental cases on three themes. AI-generated cases were compared to investigator-generated (control) cases by two masked, board-certified evaluators using the AI-SMART rubric. Statistical analysis included ANOVA and Bonferroni correction. | Use of standardized prompts ensures repeatability and consistency. Evaluation performed by calibrated, blinded, board-certified examiners. Introduces a novel rubric (AI-SMART) for systematic quality assessment. Proof-of-concept for prompt engineering in dental education. | Small sample size and pilot nature limit generalizability. Control cases scored significantly higher, indicating AI limitations in clinical accuracy and OSCE formulation. Some AI-generated answers conflicted with professional guidelines (e.g., AAPD). AI-SMART tool is not yet validated and requires further research. Study did not assess student learning outcomes or implementation feasibility. | No significant difference in clinical relevance (p = 0.44) and readability (p = 0.15) between AI and control cases. Investigator-generated cases scored significantly higher in OSCE quality (p < 0.001) and answer accuracy (p = 0.001). AI platforms were efficient in producing interdisciplinary cases, but required manual review and correction. Some AI-generated OSCE answers were incorrect or overly simplistic. Highlighted the importance of standardized prompt design for effective AI use in education. | AI platforms show promise for generating simulated pediatric dental cases efficiently, but human oversight and prompt engineering are essential to ensure clinical accuracy and alignment with professional guidelines. The study offers a valuable foundation for integrating AI in case-based learning, though further validation and broader testing are needed. Bias Risk: Moderate; the use of blinded evaluators and statistical rigor adds reliability, but the scoring tool (AI-SMART) is unvalidated and the results are based on a pilot sample. |
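
Several studies in the table report accuracy, sensitivity, specificity, precision, and F1 scores. As a reading aid, the following minimal sketch shows how these metrics are conventionally derived from a 2x2 confusion matrix against a reference standard. The names `ConfusionCounts` and `diagnostic_metrics` and the example counts are hypothetical, chosen only for illustration; they are not taken from any included study and do not reproduce any author's analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing ratings with a reference standard."""
    tp: int  # condition present, rated present
    fp: int  # condition absent, rated present
    tn: int  # condition absent, rated absent
    fn: int  # condition present, rated absent


def _ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or NaN when the ratio is undefined."""
    return numerator / denominator if denominator else float("nan")


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Compute the standard diagnostic metrics from a 2x2 table."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": _ratio(c.tp + c.tn, total),
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # true positive rate
        "specificity": _ratio(c.tn, c.tn + c.fp),  # true negative rate
        "precision": _ratio(c.tp, c.tp + c.fp),    # positive predictive value
        # F1 is the harmonic mean of precision and sensitivity,
        # which simplifies to 2TP / (2TP + FP + FN).
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


# Hypothetical counts for illustration only (not data from any study).
example = ConfusionCounts(tp=40, fp=10, tn=45, fn=5)
metrics = diagnostic_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because accuracy pools positive and negative cases, it always lies between sensitivity and specificity, so the three values are best read together rather than in isolation.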

4. Discussion

4.1. AI in Preclinical Training

4.2. AI in Clinical and Diagnostic Training and Radiographic Interpretation

4.3. AI as an Assessment Tool and Feedback System

4.4. AI in Content Generation for Dental Education

4.5. Limitations

4.6. Recommendations for the Future

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.-W.; et al. Artificial intelligence: A powerful paradigm for scientific research. Innov. 2021, 2, 100179.

- Claman, D.; Sezgin, E. Artificial Intelligence in Dental Education: Opportunities and Challenges of Large Language Models and Multimodal Foundation Models. JMIR Med Educ. 2024, 10, e52346.

- Uribe, S.E.; Maldupa, I.; Schwendicke, F. Integrating Generative AI in Dental Education: A Scoping Review of Current Practices and Recommendations. Eur. J. Dent. Educ. 2025, 29, 341–355.

- Thurzo, A.; Strunga, M.; Urban, R.; Surovková, J.; Afrashtehfar, K.I. Impact of Artificial Intelligence on Dental Education: A Review and Guide for Curriculum Update. Educ. Sci. 2023, 13, 150.

- Schwendicke, F.; Chaurasia, A.; Wiegand, T.; Uribe, S.E.; Fontana, M.; Akota, I.; Tryfonos, O.; Krois, J. Artificial intelligence for oral and dental healthcare: Core education curriculum. J. Dent. 2022, 128, 104363.

- Abdullah, S.; Hasan, S.R.; Asim, M.A.; Khurshid, A.; Qureshi, A.W. Exploring dental faculty awareness, knowledge, and attitudes toward AI integration in education and practice: A mixed-method study. BMC Med Educ. 2025, 25, 1–10.

- Harte, M.; Carey, B.; Feng, Q.J.; Alqarni, A.; Albuquerque, R. Transforming undergraduate dental education: The impact of artificial intelligence. Br. Dent. J. 2025, 238, 57–60.

- Islam, N.M.; Laughter, L.; Sadid-Zadeh, R.; Smith, C.; Dolan, T.A.; Crain, G.; Squarize, C.H. Adopting artificial intelligence in dental education: A model for academic leadership and innovation. J. Dent. Educ. 2022, 86, 1545–1551.

- Ghasemian, A.; Salehi, M.; Ghavami, V.; Yari, M.; Tabatabaee, S.S.; Moghri, J. Exploring dental students’ attitudes and perceptions toward artificial intelligence in dentistry in Iran. BMC Med Educ. 2025, 25, 1–12.

- Çakmakoğlu, E.E.; Günay, A. Dental Students’ Opinions on Use of Artificial Intelligence: A Survey Study. Med Sci. Monit. 2025, 31, e947658.

- Shaw, S.; Oswin, S.; Xi, Y.; Calandriello, F.; Fulmer, R. Artificial Intelligence, Virtual Reality, and Augmented Reality in Counseling: Distinctions, Evidence, and Research Considerations. J. Technol. Couns. Educ. Superv. 2023, 4, 4.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Peters, M.D.J.; Godfrey, C.M.; Khalil, H.; McInerney, P.; Parker, D.; Soares, C.B. Guidance for conducting systematic scoping reviews. JBI Evidence Implementation 2015, 13, 141–146.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Pollock, D.; Peters, M.D.; Khalil, H.; McInerney, P.; Alexander, L.; Tricco, A.C.; Evans, C.; de Moraes, É.B.; Godfrey, C.M.; Pieper, D.; et al. Recommendations for the extraction, analysis, and presentation of results in scoping reviews. JBI Evid. Synth. 2022, 21, 520–532.

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230.

- Choi, S.; Choi, J.; Peters, O.A.; Peters, C.I. Design of an interactive system for access cavity assessment: A novel feedback tool for preclinical endodontics. Eur. J. Dent. Educ. 2023, 27, 1031–1039.

- Mahrous, A.; Botsko, D.L.; Elgreatly, A.; Tsujimoto, A.; Qian, F.; Schneider, G.B. The use of artificial intelligence and game-based learning in removable partial denture design: A comparative study. J. Dent. Educ. 2023, 87, 1188–1199.

- Or, A.J.; Sukumar, S.; Ritchie, H.E.; Sarrafpour, B. Using artificial intelligence chatbots to improve patient history taking in dental education (Pilot study). J. Dent. Educ. 2024, 88, 1988–1990.

- Aminoshariae, A.; Nosrat, A.; Nagendrababu, V.; Dianat, O.; Mohammad-Rahimi, H.; O’Keefe, A.W.; Setzer, F.C. Artificial Intelligence in Endodontic Education. J. Endod. 2024, 50, 562–578.

- Ayan, E.; Bayraktar, Y.; Çelik, Ç.; Ayhan, B. Dental student application of artificial intelligence technology in detecting proximal caries lesions. J. Dent. Educ. 2024, 88, 490–500.

- Chang, J.; Bliss, L.; Angelov, N.; Glick, A. Artificial intelligence-assisted full-mouth radiograph mounting in dental education. J. Dent. Educ. 2024, 88, 933–939.

- Prakash, K.; Prakash, R. An artificial intelligence-based dental semantic search engine as a reliable tool for dental students and educators. J. Dent. Educ. 2024, 88, 1257–1266.

- Qutieshat, A.; Al Rusheidi, A.; Al Ghammari, S.; Alarabi, A.; Salem, A.; Zelihic, M. Comparative analysis of diagnostic accuracy in endodontic assessments: Dental students vs. artificial intelligence. Diagnosis 2024, 11, 259–265.

- Rampf, S.; Gehrig, H.; Möltner, A.; Fischer, M.R.; Schwendicke, F.; Huth, K.C. Radiographical diagnostic competences of dental students using various feedback methods and integrating an artificial intelligence application—A randomized clinical trial. Eur. J. Dent. Educ. 2024, 28, 925–937.

- Schoenhof, R.; Schoenhof, R.; Blumenstock, G.; Lethaus, B.; Hoefert, S. Synthetic, non-person related panoramic radiographs created by generative adversarial networks in research, clinical, and teaching applications. J. Dent. 2024, 146, 105042.

- Schropp, L.; Sørensen, A.P.S.; Devlin, H.; Matzen, L.H. Use of artificial intelligence software in dental education: A study on assisted proximal caries assessment in bitewing radiographs. Eur. J. Dent. Educ. 2023, 28, 490–496.

- Suárez, A.; Adanero, A.; García, V.D.-F.; Freire, Y.; Algar, J. Using a Virtual Patient via an Artificial Intelligence Chatbot to Develop Dental Students’ Diagnostic Skills. Int. J. Environ. Res. Public Health 2022, 19, 8735.

- Kavadella, A.; da Silva, M.A.D.; Kaklamanos, E.G.; Stamatopoulos, V.; Giannakopoulos, K. Evaluation of ChatGPT’s Real-Life Implementation in Undergraduate Dental Education: Mixed Methods Study. JMIR Med Educ. 2024, 10, e51344.

- Jayawardena, C.K.; Gunathilake, Y.; Ihalagedara, D. Dental Students’ Learning Experience: Artificial Intelligence vs Human Feedback on Assignments. Int. Dent. J. 2025, 75, 100–108.

- Ali, K.; Barhom, N.; Tamimi, F.; Duggal, M. ChatGPT—A double-edged sword for healthcare education? Implications for assessments of dental students. Eur. J. Dent. Educ. 2023, 28, 206–211.

- Aldukhail, S. Mapping the Landscape of Generative Language Models in Dental Education: A Comparison Between ChatGPT and Google Bard. Eur. J. Dent. Educ. 2024, 29, 136–148.

- Katebzadeh, S.; Nguyen, P.R.; Puranik, C.P. Can artificial intelligence develop high-quality simulated pediatric dental cases? J. Dent. Educ. 2024, 89, 1021–1023.

- World Health Organization. Ethics and governance of artificial intelligence for health: WHO guidance; World Health Organization: Geneva, Switzerland, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

El-Hakim, M.; Anthonappa, R.; Fawzy, A. Artificial Intelligence in Dental Education: A Scoping Review of Applications, Challenges, and Gaps. Dent. J. 2025, 13, 384. https://doi.org/10.3390/dj13090384