Segmentation of Pulp and Pulp Stones with Automatic Deep Learning in Panoramic Radiographs: An Artificial Intelligence Study

Abstract

1. Introduction

2. Materials and Methods

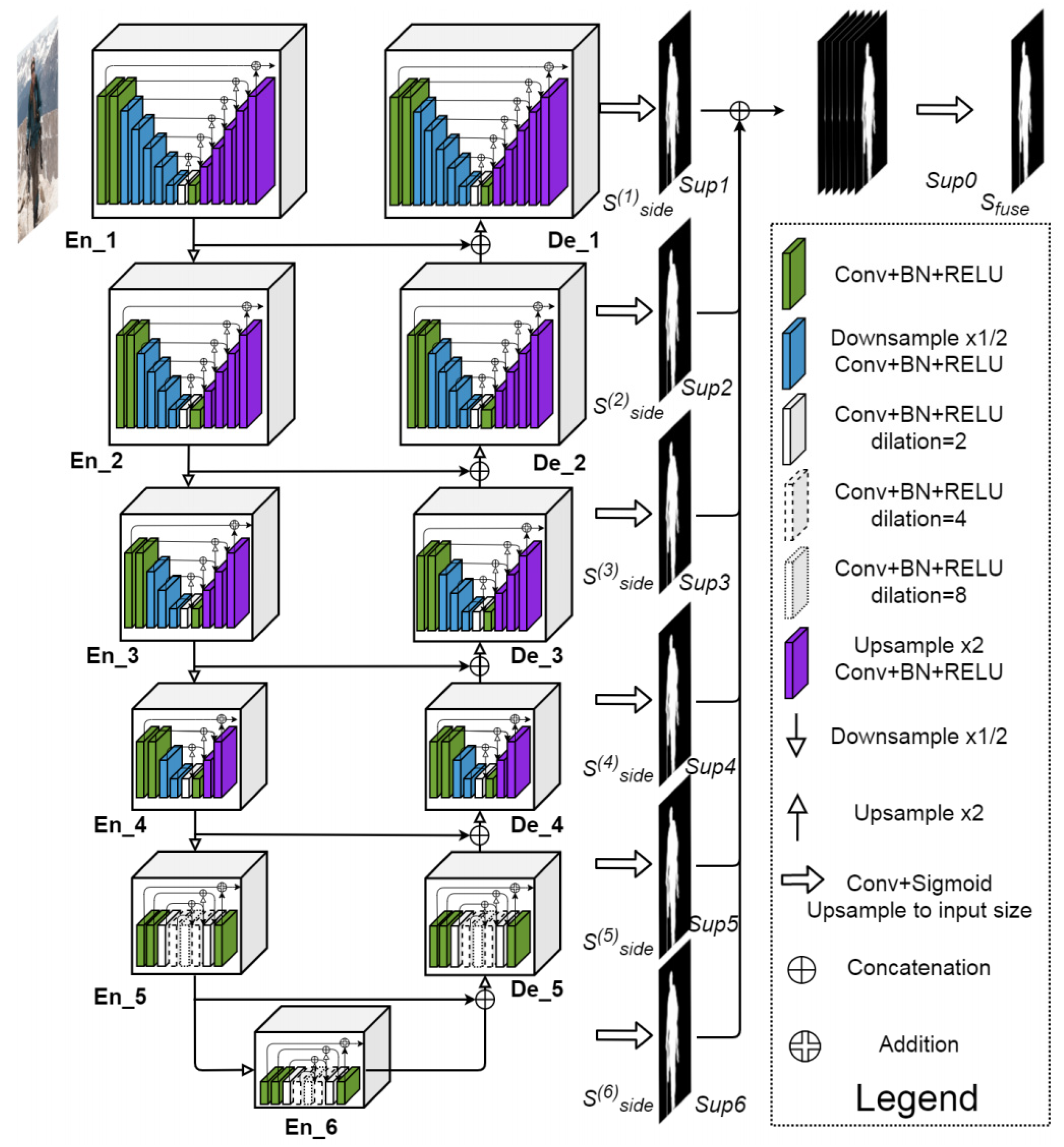

2.1. Model Pipeline

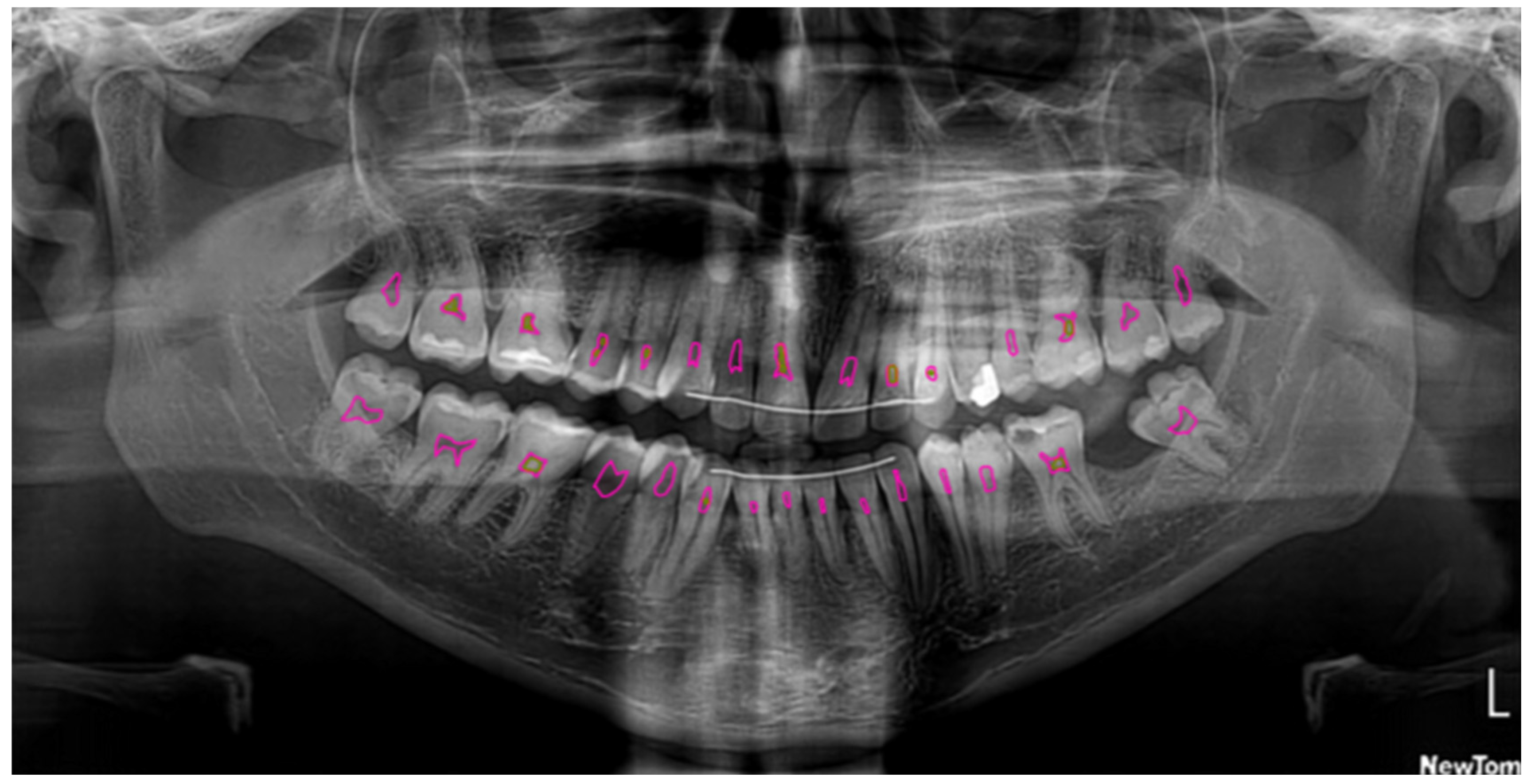

2.2. Dataset and Preprocessing

2.3. Semantic Segmentation

2.4. Model Configuration

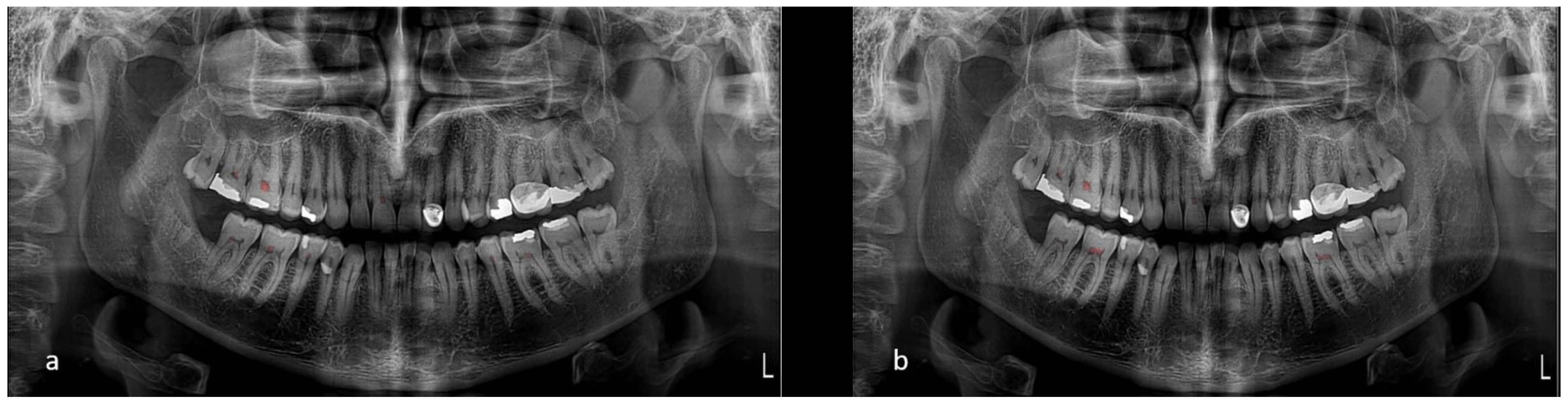

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| CNN | Convolutional neural network |
| CVAT | Computer Vision Annotation Tool |
| CBCT | Cone-beam computed tomography |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Firincioglulari, M.; Boztuna, M.; Mirzaei, O.; Karanfiller, T.; Akkaya, N.; Orhan, K. Segmentation of Pulp and Pulp Stones with Automatic Deep Learning in Panoramic Radiographs: An Artificial Intelligence Study. Dent. J. 2025, 13, 274. https://doi.org/10.3390/dj13060274