Accuracy of Navigation and Robot-Assisted Systems for Dental Implant Placement: A Systematic Review

Abstract

1. Introduction

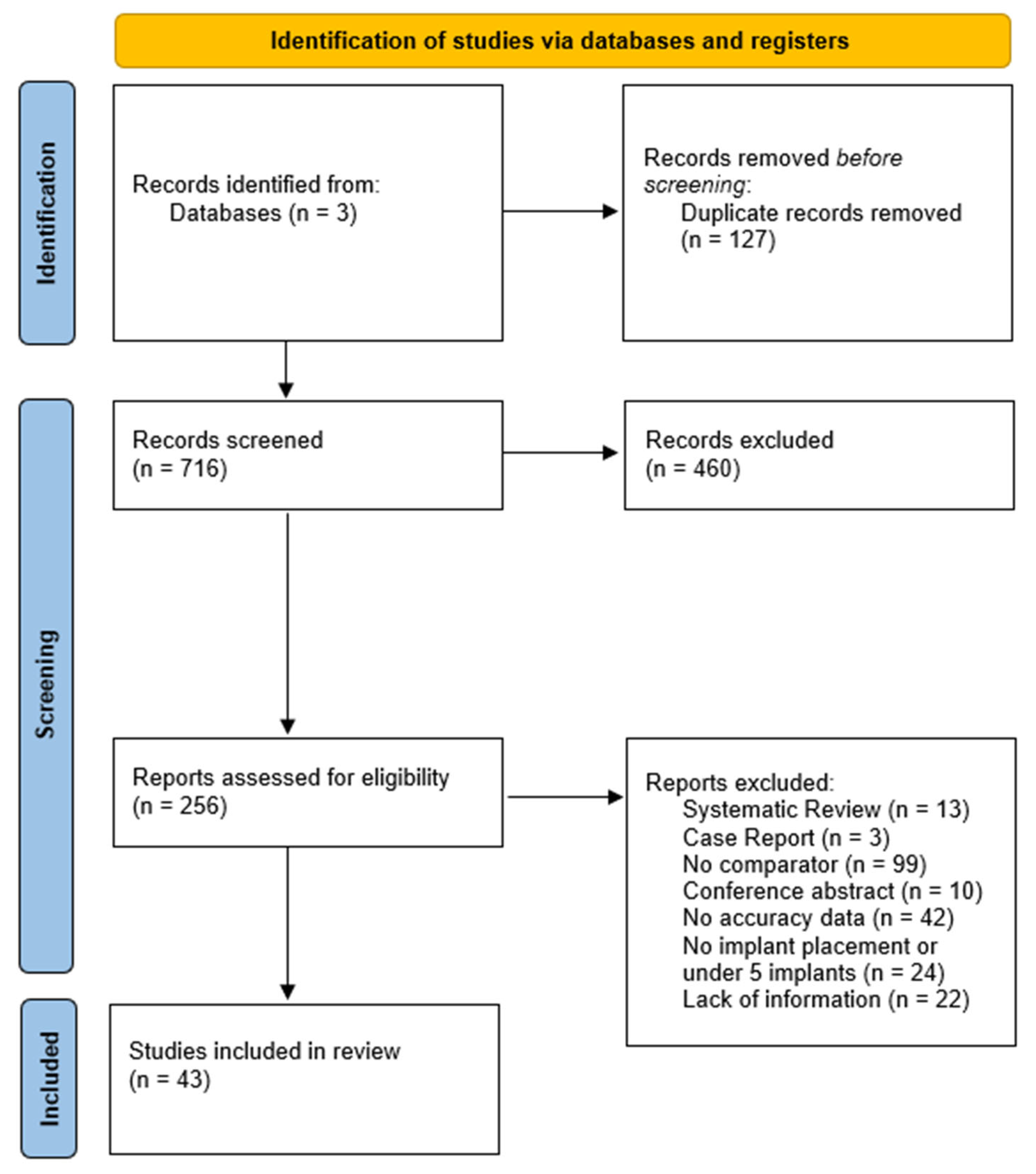

2. Methods

2.1. Protocol and Registration

2.2. Study Question and PICOS Strategy

2.3. Eligibility Criteria

2.4. Study-Selection and Data-Extraction Process

2.5. Search Strategy

2.6. Types of Outcome Measures

- Coronal (platform) deviation is defined as the three-dimensional Euclidean distance, in millimetres, between the centre of the implant shoulder in the virtual plan and in the registered post-operative scan.

- Apical deviation is defined as the three-dimensional Euclidean distance, in millimetres, between the centre of the implant apex in the virtual plan and in the registered post-operative model. It mirrors the platform definition so that both reference points are measured in the same way.

- Angular deviation is defined as the absolute angle, in degrees, between the planned and achieved implant axes, obtained from the arccosine of the dot product of the two normalized (unit-length) axis vectors.
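The three outcome measures above can be illustrated with a minimal sketch. It assumes that the planned and achieved implant positions are already expressed in the same registered coordinate system (in mm) and that each implant is described by two points, the platform centre and the apex. The function and parameter names are hypothetical and are not taken from any of the reviewed software packages.

```python
import math


def angle_between_deg(u, v):
    """Absolute angle in degrees between two 3-D axis vectors."""
    norm_u = math.hypot(*u)
    norm_v = math.hypot(*v)
    if norm_u == 0 or norm_v == 0:
        raise ValueError("axis vectors must have non-zero length")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def implant_deviations(planned_platform, planned_apex,
                       placed_platform, placed_apex):
    """Return coronal (mm), apical (mm) and angular (degrees) deviation.

    All points are (x, y, z) tuples in one registered coordinate system.
    """
    # Implant axis runs from the platform centre to the apex.
    planned_axis = tuple(a - p for a, p in zip(planned_apex, planned_platform))
    placed_axis = tuple(a - p for a, p in zip(placed_apex, placed_platform))
    return {
        "coronal_mm": math.dist(planned_platform, placed_platform),
        "apical_mm": math.dist(planned_apex, placed_apex),
        "angular_deg": angle_between_deg(planned_axis, placed_axis),
    }


# Hypothetical 10 mm implant shifted 1 mm sideways without tilting.
example = implant_deviations((0, 0, 0), (0, 0, 10), (1, 0, 0), (1, 0, 10))
```

Dividing by the vector norms is what makes the arccosine valid for axis vectors of arbitrary length. A pure translation, as in the example, changes the coronal and apical deviations but leaves the angular deviation at zero.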

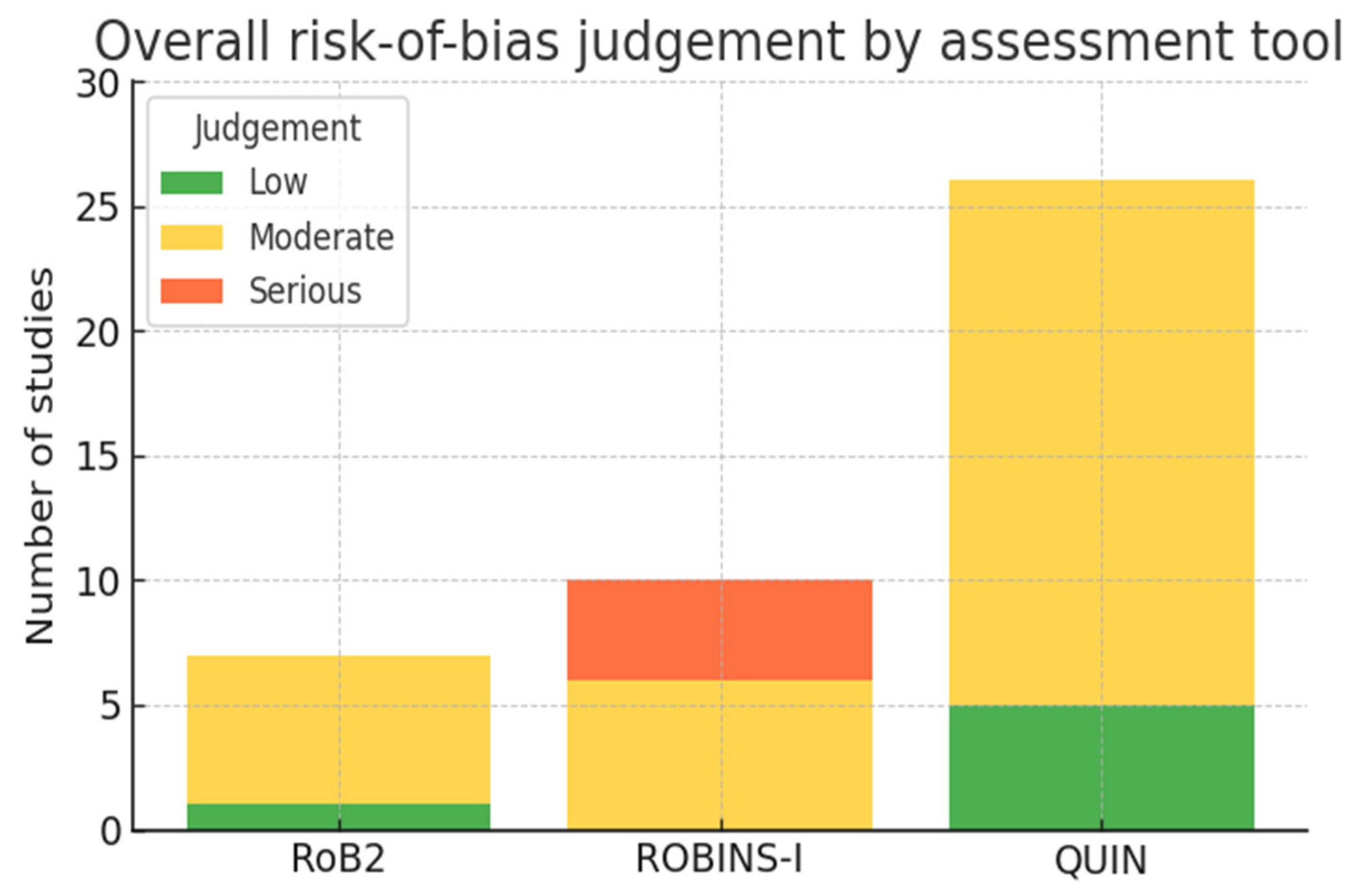

2.7. Risk-of-Bias Assessment

- Randomized controlled trials (RCTs, n = 7) were appraised with the Cochrane RoB 2 tool (five domains).

- Non-randomized clinical investigations, including prospective or retrospective cohorts and case-series (n = 10), were assessed with ROBINS-I (seven domains).

- In vitro accuracy studies (n = 26) were analyzed with the QUIN checklist (five methodological domains).

2.8. Synthesis Methods

3. Results

3.1. Identification and Screening

3.2. Study Range and Characteristics

3.3. Risk-of-Bias Findings

3.4. Surgical Approaches Represented

3.5. Distribution of Implant-Placement Deviations

3.6. Accuracy of the Included Studies

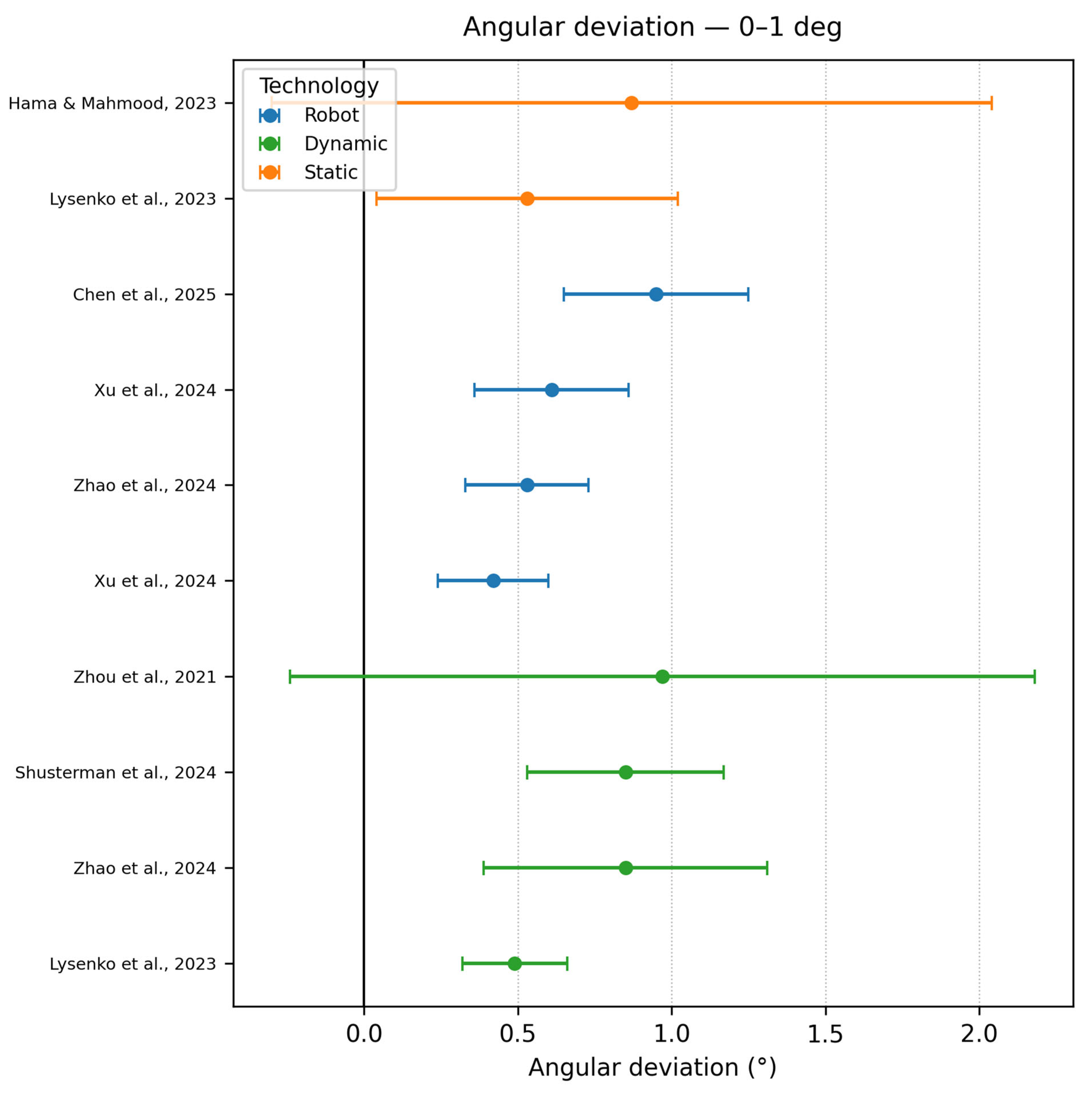

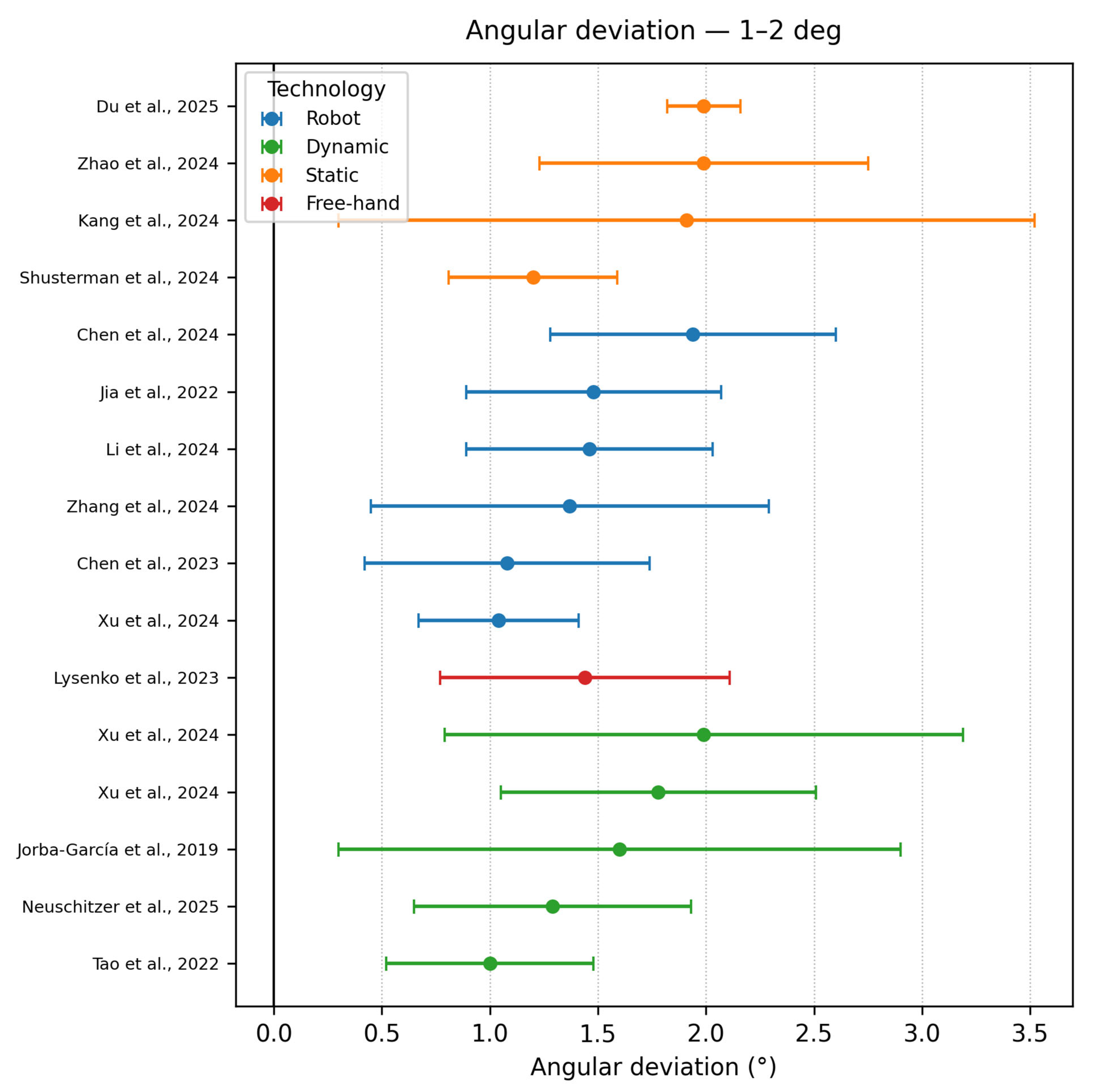

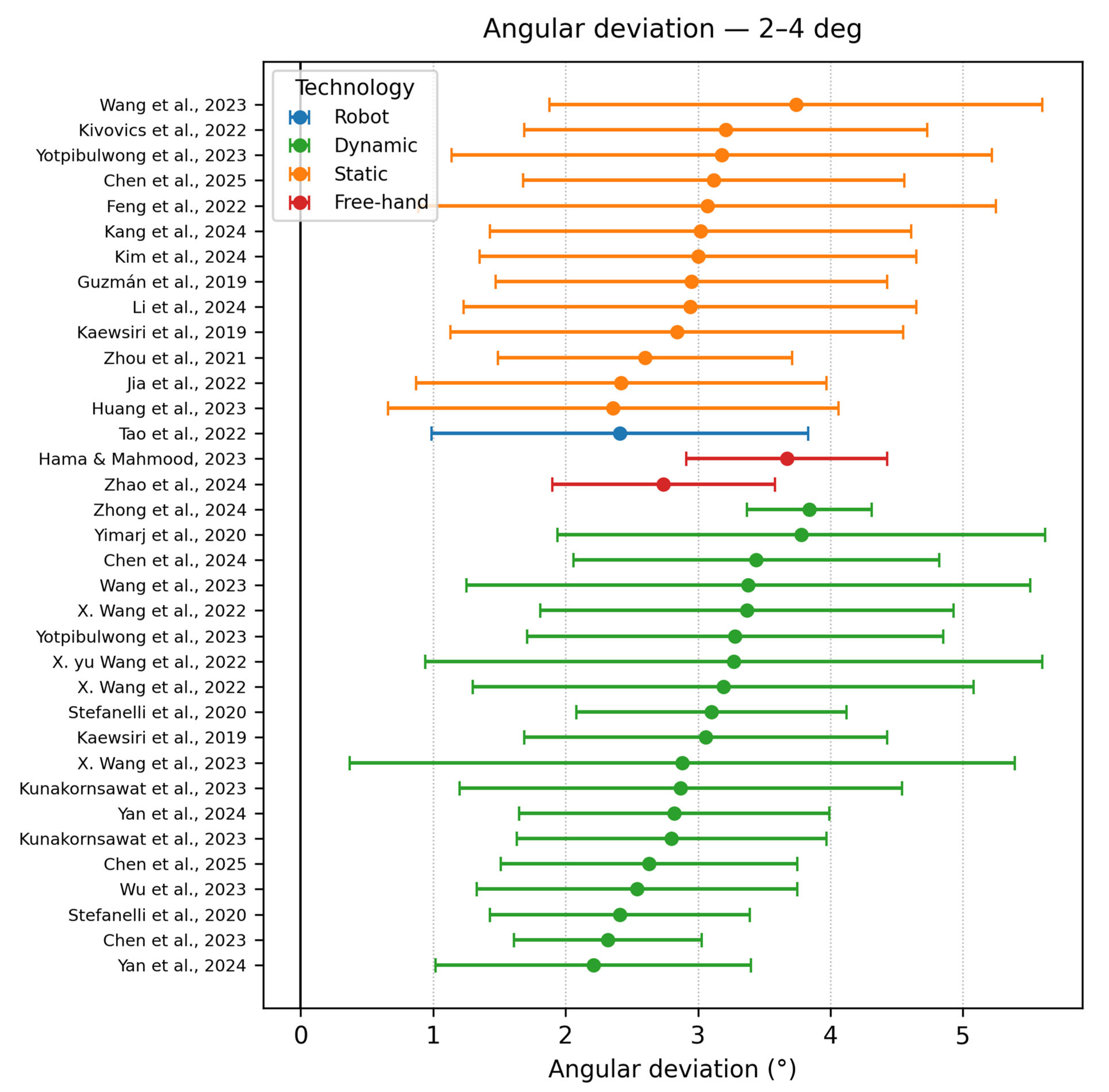

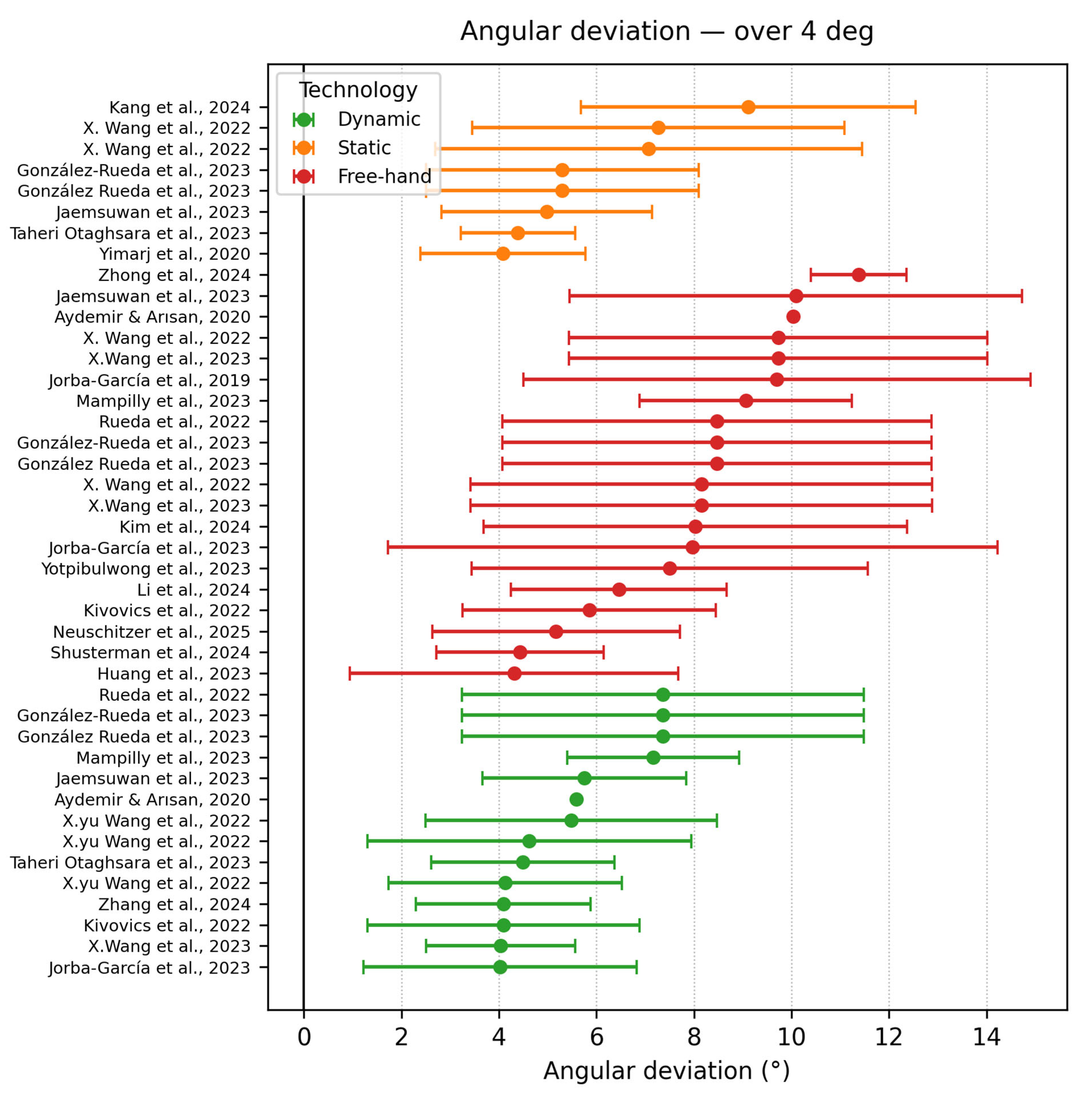

3.6.1. Angular Deviation

- Angular deviation 0–1°

- Angular deviation 1–2°

- Angular deviation 2–4°

- Angular deviation ≥ 4°
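The angular categories listed above can be expressed as a simple binning rule. The sketch below is illustrative only. The category edges (1°, 2°, 4°) come from the list, but the handling of boundary values is an assumption: a value that falls exactly on an edge is assigned to the higher category, because the source does not state which side is inclusive. The names are hypothetical.

```python
from bisect import bisect_right

# Upper edges of the first three categories, in degrees.
ANGULAR_EDGES = (1.0, 2.0, 4.0)
ANGULAR_LABELS = ("0–1°", "1–2°", "2–4°", "≥ 4°")


def angular_category(mean_deviation_deg):
    """Map a mean angular deviation (degrees) to one of the four categories.

    Assumption: edge values (exactly 1, 2 or 4 degrees) go to the higher bin.
    """
    if mean_deviation_deg < 0:
        raise ValueError("angular deviation is an absolute value and cannot be negative")
    return ANGULAR_LABELS[bisect_right(ANGULAR_EDGES, mean_deviation_deg)]


# Hypothetical mean deviations, in degrees, for four study groups.
categories = [angular_category(x) for x in (0.53, 1.37, 3.06, 8.47)]
```

The same pattern would apply to the apical and coronal categories by swapping in millimetre edges and labels.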

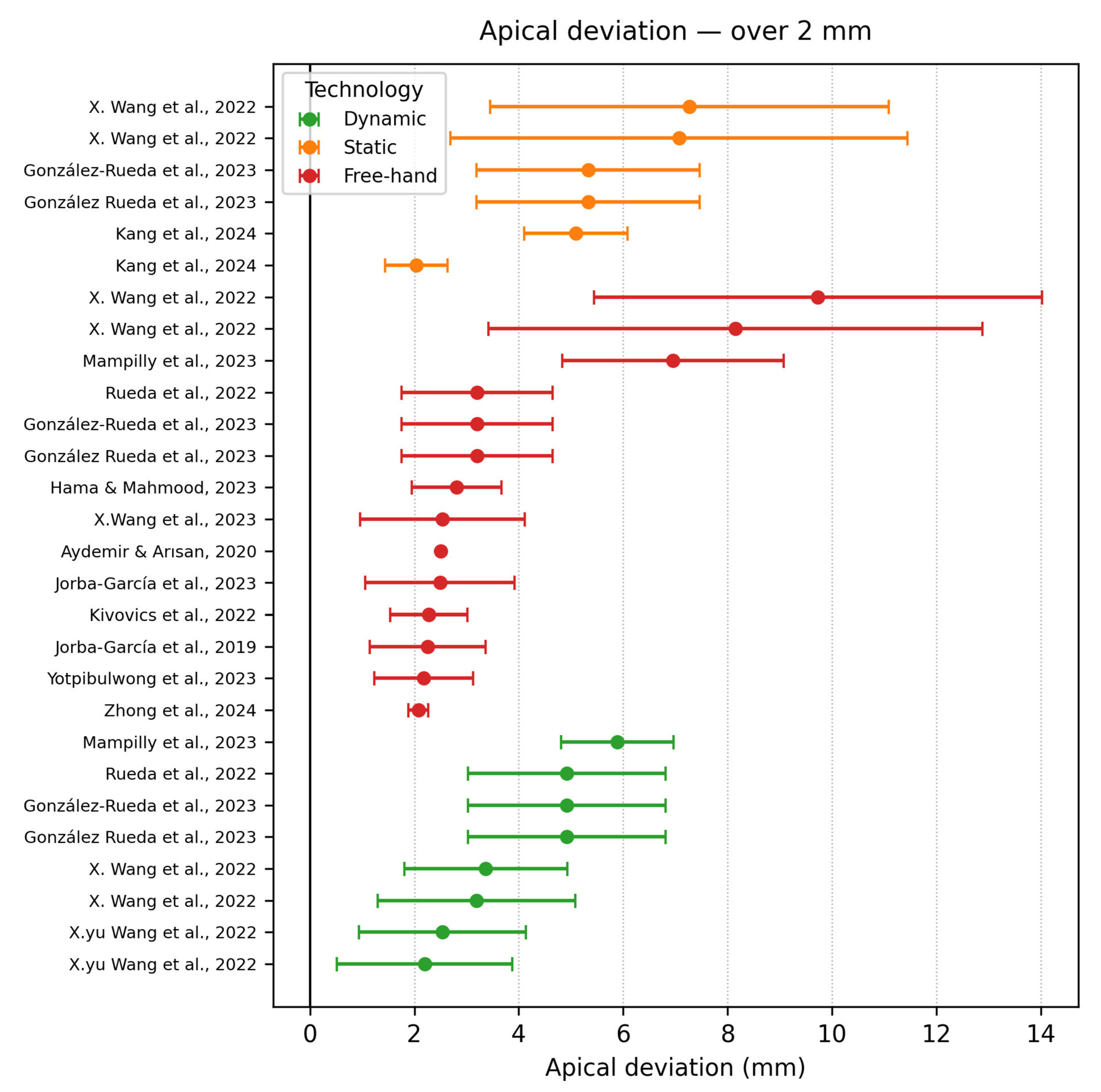

3.6.2. Apical Deviation

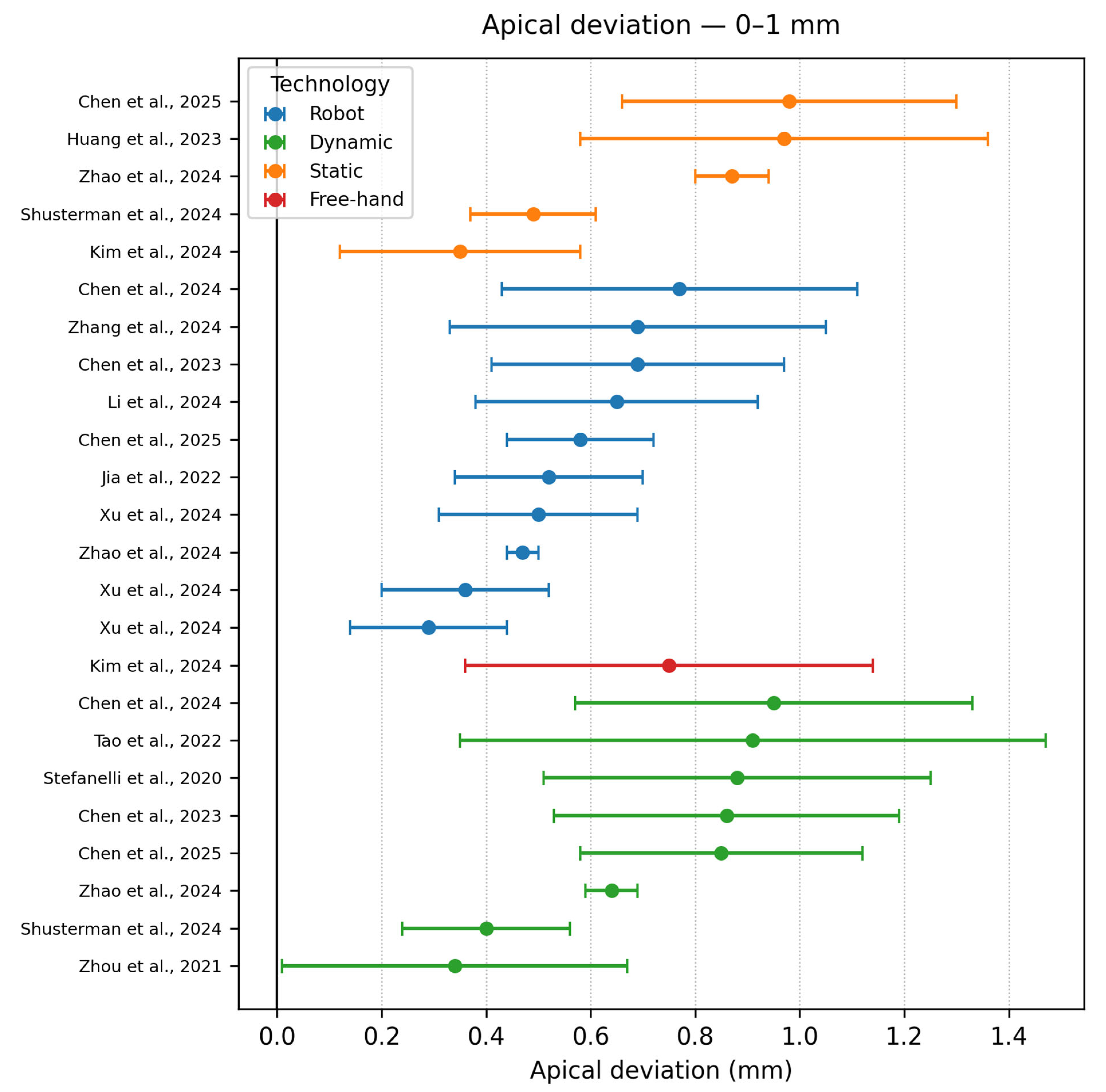

- Apical deviation 0–1 mm

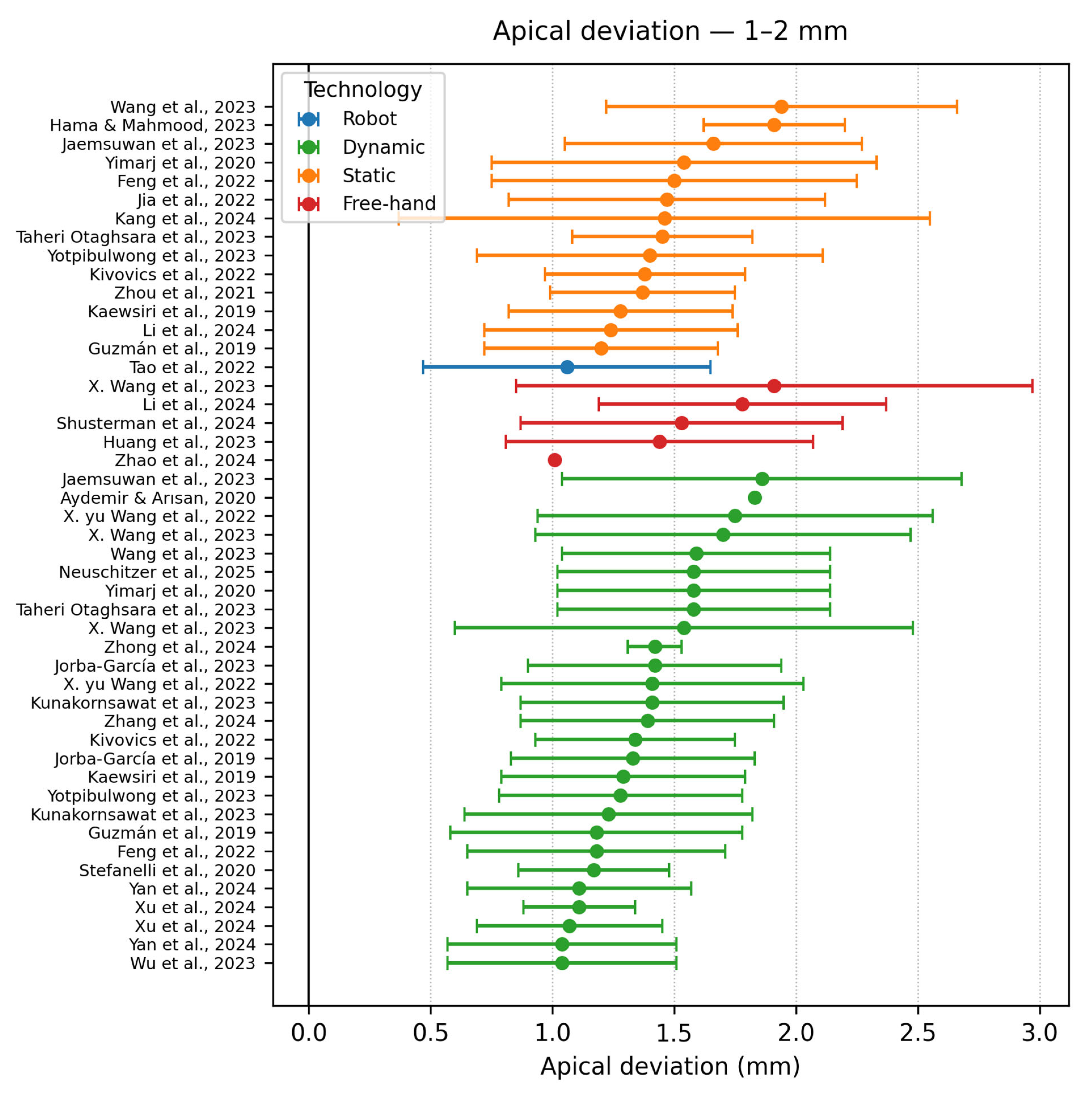

- Apical deviation 1–2 mm

- Apical deviation ≥ 2 mm

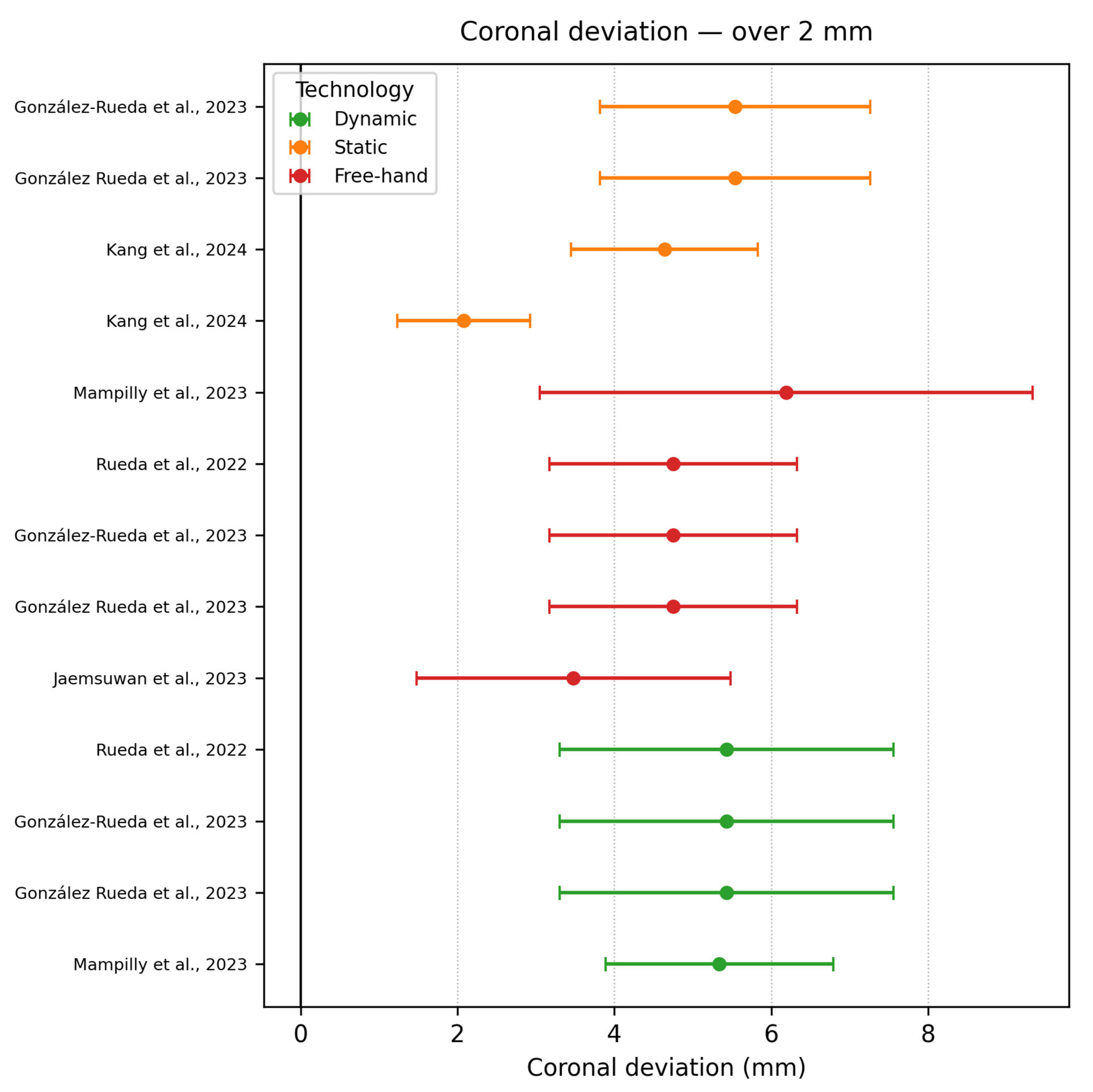

3.6.3. Coronal (Platform) Deviation

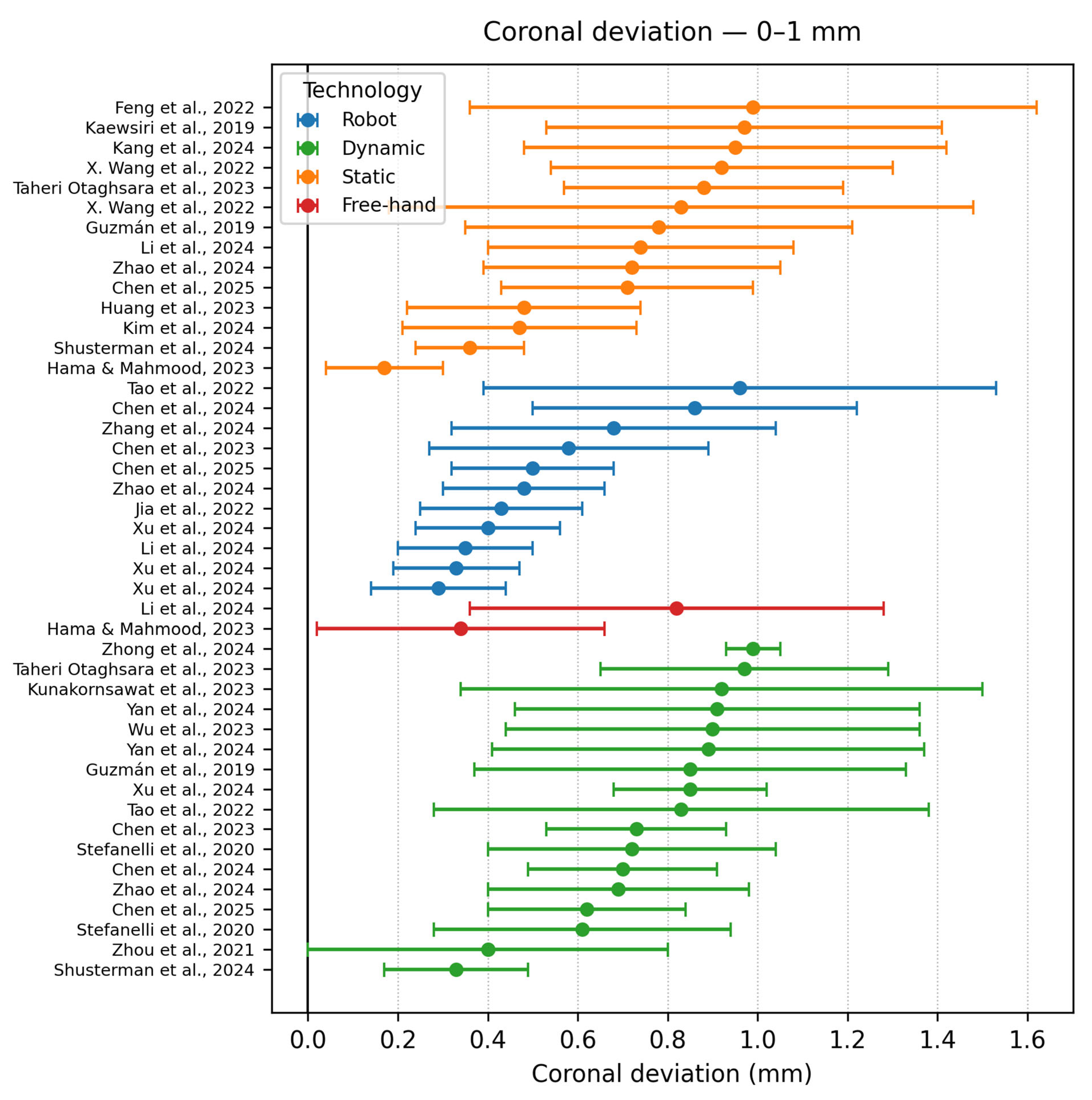

- Coronal deviation < 1 mm

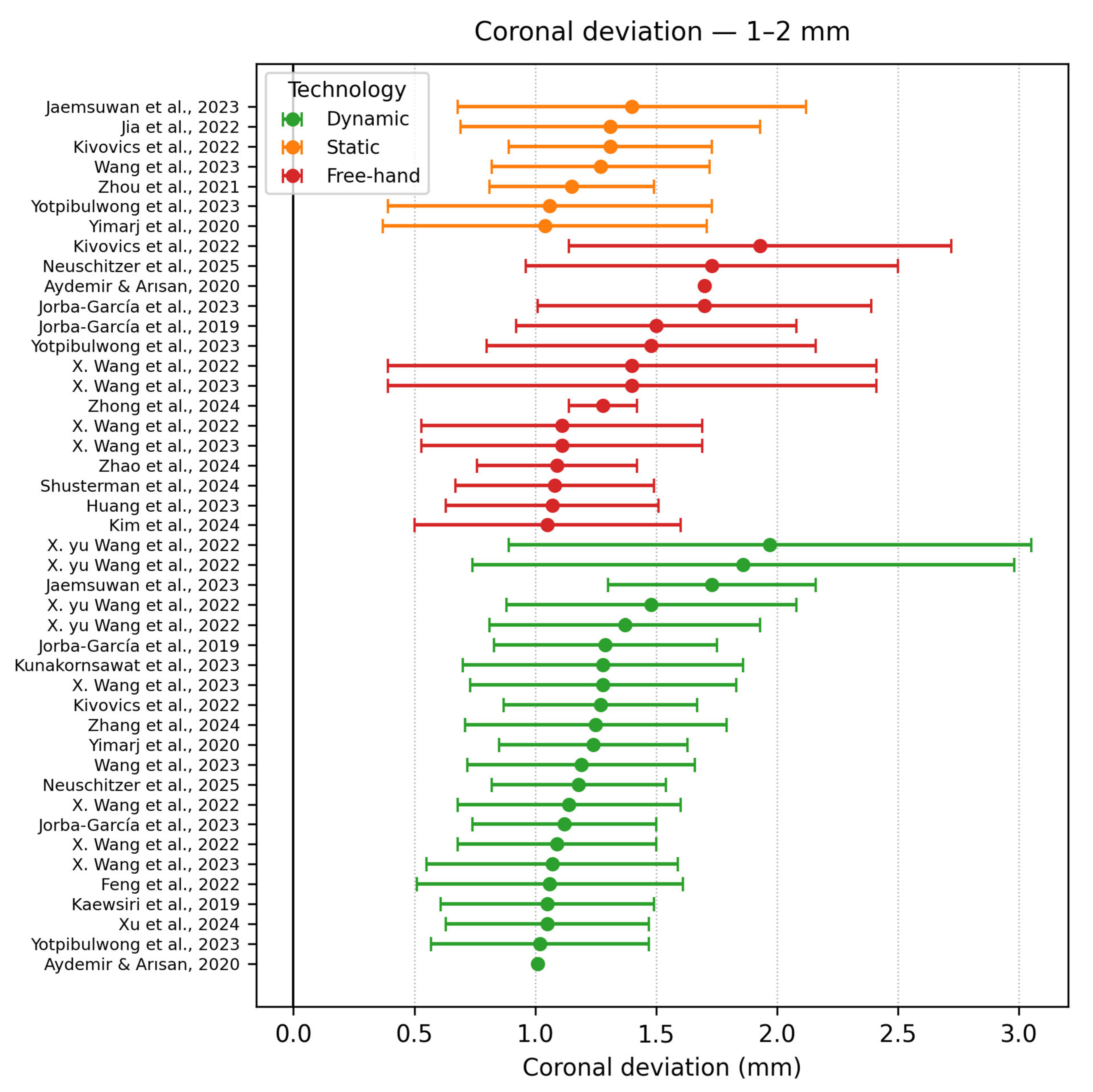

- Coronal deviation 1–2 mm

- Coronal deviation ≥ 2 mm

4. Discussion

4.1. Main Findings

4.2. Relation to Previous Work

4.3. Static vs. Dynamic Navigation

4.4. Dynamic vs. Robotic Guidance

4.5. Strengths and Limitations of the Evidence

4.6. Technology-Specific Considerations

4.7. Zygomatic Implants

4.8. Learning Curve and Training

4.9. Clinical Implications

4.10. Translational and Evidence Gaps

4.11. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Khaohoen, A.; Powcharoen, W.; Sornsuwan, T.; Chaijareenont, P.; Rungsiyakull, C.; Rungsiyakull, P. Accuracy of implant placement with computer-aided static, dynamic, and robot-assisted surgery: A systematic review and meta-analysis of clinical trials. BMC Oral Health 2024, 24, 359.
2. Younis, H.; Lv, C.; Xu, B.; Zhou, H.; Du, L.; Liao, L.; Zhao, N.; Long, W.; Elayah, S.A.; Chang, X.; et al. Accuracy of dynamic navigation compared to static surgical guides and the freehand approach in implant placement: A prospective clinical study. Head Face Med. 2024, 20, 30.
3. Xu, Z.; Zhou, L.; Han, B.; Wu, S.; Xiao, Y.; Zhang, S.; Chen, J.; Guo, J.; Wu, D. Accuracy of dental implant placement using different dynamic navigation and robotic systems: An in vitro study. npj Digit. Med. 2024, 7, 182.
4. Shi, Y.; Wang, J.; Ma, C.; Shen, J.; Dong, X.; Lin, D. A systematic review of the accuracy of digital surgical guides for dental implantation. Int. J. Implant. Dent. 2023, 9, 38.
5. Khan, M.; Javed, F.; Haji, Z.; Ghafoor, R. Comparison of the positional accuracy of robotic guided dental implant placement with static guided and dynamic navigation systems: A systematic review and meta-analysis. J. Prosthet. Dent. 2024, 132, 746.e1–746.e8.
6. Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210.
7. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.
8. Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions, 2nd ed.; John Wiley & Sons: Chichester, UK, 2019.
9. Tao, B.; Feng, Y.; Fan, X.; Zhuang, M.; Chen, X.; Wang, F.; Wu, Y. Accuracy of dental implant surgery using dynamic navigation and robotic systems: An in vitro study. J. Dent. 2022, 123, 104170.
10. Otaghsara, S.S.T.; Joda, T.; Thieringer, F.M. Accuracy of dental implant placement using static versus dynamic computer-assisted implant surgery: An in vitro study. J. Dent. 2023, 132, 104487.
11. Jorba-García, A.; Bara-Casaus, J.J.; Camps-Font, O.; Sánchez-Garcés, M.Á.; Figueiredo, R.; Valmaseda-Castellón, E. Accuracy of dental implant placement with or without the use of a dynamic navigation assisted system: A randomized clinical trial. Clin. Oral Implant. Res. 2023, 34, 438–449.
12. Kaewsiri, D.; Panmekiate, S.; Subbalekha, K.; Mattheos, N.; Pimkhaokham, A. The accuracy of static vs. dynamic computer-assisted implant surgery in single tooth space: A randomized controlled trial. Clin. Oral Implant. Res. 2019, 30, 505–514.
13. Chen, J.; Zhuang, M.; Tao, B.; Wu, Y.; Ye, L.; Wang, F. Accuracy of immediate dental implant placement with task-autonomous robotic system and navigation system: An in vitro study. Clin. Oral Implant. Res. 2024, 35, 973–983.
14. Zhang, S.; Cai, Q.; Chen, W.; Lin, Y.; Gao, Y.; Wu, D.; Chen, J. Accuracy of implant placement via dynamic navigation and autonomous robotic computer-assisted implant surgery methods: A retrospective study. Clin. Oral Implant. Res. 2024, 35, 220–229.
15. Li, J.; Dai, M.; Wang, S.; Zhang, X.; Fan, Q.; Chen, L. Accuracy of immediate anterior implantation using static and robotic computer-assisted implant surgery: A retrospective study. J. Dent. 2024, 148, 105218.
16. Zhao, W.; Teng, W.; Su, Y.; Zhou, L. Accuracy of dental implant surgery with freehand, static computer-aided, dynamic computer-aided, and robotic computer-aided implant systems: An in vitro study. J. Prosthet. Dent. 2024.
17. González-Rueda, J.; Galparsoro-Catalán, A.; de Paz-Hermoso, V.; Riad-Deglow, E.; Zubizarreta-Macho, Á.; Pato-Mourelo, J.; Hernández-Montero, S.; Montero-Martín, J. Accuracy of zygomatic dental implant placement using computer-aided static and dynamic navigation systems compared with a mixed reality appliance. An in vitro study. J. Clin. Exp. Dent. 2023, 15, e1035–e1044.
18. Mampilly, M.; Kuruvilla, L.; Niyazi, A.A.T.; Shyam, A.; Thomas, P.A.; Ali, A.S.; Pullishery, F. Accuracy and Self-Confidence Level of Freehand Drilling and Dynamic Navigation System of Dental Implants: An In Vitro Study. Cureus 2023, 15, e49618.
19. Zhou, M.; Zhou, H.; Li, S.-Y.; Zhu, Y.-B.; Geng, Y.-M. Comparison of the accuracy of dental implant placement using static and dynamic computer-assisted systems: An in vitro study. J. Stomatol. Oral Maxillofac. Surg. 2021, 122, 343–348.
20. Wu, B.; Xue, F.; Ma, Y.; Sun, F. Accuracy of automatic and manual dynamic navigation registration techniques for dental implant surgery in posterior sites missing a single tooth: A retrospective clinical analysis. Clin. Oral Implant. Res. 2023, 34, 221–232.
21. Pomares-Puig, C.; Sánchez-Garcés, M.A.; Jorba-García, A. Dynamic and static computer-assisted implant surgery for completely edentulous patients. A proof of a concept. J. Dent. 2023, 130, 104443.
22. Wang, W.; Zhuang, M.; Li, S.; Shen, Y.; Lan, R.; Wu, Y.; Wang, F. Exploring training dental implant placement using static or dynamic devices among dental students. Eur. J. Dent. Educ. 2023, 27, 438–448.
23. Du, C.; Peng, P.; Guo, X.; Wu, Y.; Zhang, Z.; Hao, L.; Zhang, Z.; Xiong, J. Combined static and dynamic computer-guided surgery for prosthetically driven zygomatic implant placement. J. Dent. 2025, 152, 105453.
24. Rueda, J.R.G.; Catalán, A.G.; Hermoso, V.M.d.P.; Deglow, E.R.; Zubizarreta-Macho, Á.; Mourelo, J.P.; Martín, J.M.; Montero, S.H. Accuracy of computer-aided static and dynamic navigation systems in the placement of zygomatic dental implants. BMC Oral Health 2023, 23, 150.
25. Wang, X.; Shujaat, S.; Meeus, J.; Shaheen, E.; Legrand, P.; Lahoud, P.; Gerhardt, M.D.N.; Jacobs, R. Performance of novice versus experienced surgeons for dental implant placement with freehand, static guided and dynamic navigation approaches. Sci. Rep. 2023, 13, 2598.
26. Kunakornsawat, W.; Serichetaphongse, P.; Arunjaroensuk, S.; Kaboosaya, B.; Mattheos, N.; Pimkhaokham, A. Training of novice surgeons using dynamic computer assisted dental implant surgery: An exploratory randomized trial. Clin. Implant. Dent. Relat. Res. 2023, 25, 511–518.
27. Wang, X.-Y.; Liu, L.; Guan, M.-S.; Liu, Q.; Zhao, T.; Li, H.-B. The accuracy and learning curve of active and passive dynamic navigation-guided dental implant surgery: An in vitro study. J. Dent. 2022, 124, 104240.
28. Jaemsuwan, S.; Arunjaroensuk, S.; Kaboosaya, B.; Subbalekha, K.; Mattheos, N.; Pimkhaokham, A. Comparison of the accuracy of implant position among freehand implant placement, static and dynamic computer-assisted implant surgery in fully edentulous patients: A non-randomized prospective study. Int. J. Oral Maxillofac. Surg. 2023, 52, 264–271.
29. Wang, X.; Shaheen, E.; Shujaat, S.; Meeus, J.; Legrand, P.; Lahoud, P.; Gerhardt, M.D.N.; Politis, C.; Jacobs, R. Influence of experience on dental implant placement: An in vitro comparison of freehand, static guided and dynamic navigation approaches. Int. J. Implant. Dent. 2022, 8, 42.
30. Zhong, X.; Xing, Y.; Yan, J.; Chen, J.; Chen, Z.; Liu, Q. Surgical performance of dental students using computer-assisted dynamic navigation and freehand approaches. Eur. J. Dent. Educ. 2024, 28, 504–510.
31. Lysenko, A.V.; Yaremenko, A.I.; Ivanov, V.M.; Lyubimov, A.I.; Leletkina, N.A.; Prokofeva, A.A. Comparison of Dental Implant Placement Accuracy Using a Static Surgical Guide, a Virtual Guide and a Manual Placement Method—An In-Vitro Study. Ann. Maxillofac. Surg. 2023, 13, 158–162.
32. Kivovics, M.; Takács, A.; Pénzes, D.; Németh, O.; Mijiritsky, E. Accuracy of dental implant placement using augmented reality-based navigation, static computer assisted implant surgery, and the free-hand method: An in vitro study. J. Dent. 2022, 119, 104070.
33. Aydemir, C.A.; Arısan, V. Accuracy of dental implant placement via dynamic navigation or the freehand method: A split-mouth randomized controlled clinical trial. Clin. Oral Implant. Res. 2020, 31, 255–263.
34. Chen, J.; Bai, X.; Ding, Y.; Shen, L.; Sun, X.; Cao, R.; Yang, F.; Wang, L. Comparison the accuracy of a novel implant robot surgery and dynamic navigation system in dental implant surgery: An in vitro pilot study. BMC Oral Health 2023, 23, 179.
35. Feng, Y.; Su, Z.; Mo, A.; Yang, X. Comparison of the accuracy of immediate implant placement using static and dynamic computer-assisted implant system in the esthetic zone of the maxilla: A prospective study. Int. J. Implant. Dent. 2022, 8, 65.
36. Yotpibulwong, T.; Arunjaroensuk, S.; Kaboosaya, B.; Sinpitaksakul, P.; Arksornnukit, M.; Mattheos, N.; Pimkhaokham, A. Accuracy of implant placement with a combined use of static and dynamic computer-assisted implant surgery in single tooth space: A randomized controlled trial. Clin. Oral Implant. Res. 2023, 34, 330–341.
37. Kim, Y.-J.; Kim, J.; Lee, J.-R.; Kim, H.-S.; Sim, H.-Y.; Lee, H.; Han, Y.-S. Comparison of the accuracy of implant placement using a simple guide device and freehand surgery. J. Dent. Sci. 2024, 19, 2256–2261.
38. Jia, S.; Wang, G.; Zhao, Y.; Wang, X. Accuracy of an autonomous dental implant robotic system versus static guide-assisted implant surgery: A retrospective clinical study. J. Prosthet. Dent. 2023, 133, 771–779.
39. Shusterman, A.; Nashef, R.; Tecco, S.; Mangano, C.; Lerner, H.; Mangano, F.G. Accuracy of implant placement using a mixed reality-based dynamic navigation system versus static computer-assisted and freehand surgery: An in Vitro study. J. Dent. 2024, 146, 105052.
40. Yimarj, P.; Subbalekha, K.; Dhanesuan, K.; Siriwatana, K.; Mattheos, N.; Pimkhaokham, A. Comparison of the accuracy of implant position for two-implants supported fixed dental prosthesis using static and dynamic computer-assisted implant surgery: A randomized controlled clinical trial. Clin. Implant. Dent. Relat. Res. 2020, 22, 672–678.
41. Neuschitzer, M.; Toledano-Serrabona, J.; Jorba-García, A.; Bara-Casaus, J.; Figueiredo, R.; Valmaseda-Castellón, E. Comparative accuracy of dCAIS and freehand techniques for immediate implant placement in the maxillary aesthetic zone: An in vitro study. J. Dent. 2025, 153, 105472.
42. Hama, D.R.; Mahmood, B.J. Comparison of accuracy between free-hand and surgical guide implant placement among experienced and non-experienced dental implant practitioners: An in vitro study. J. Periodontal Implant. Sci. 2023, 53, 388–401.
43. Huang, L.; Liu, L.; Yang, S.; Khadka, P.; Zhang, S. Evaluation of the accuracy of implant placement by using implant positional guide versus freehand: A prospective clinical study. Int. J. Implant. Dent. 2023, 9, 45.
44. Rueda, J.R.G.; Ávila, I.G.; Hermoso, V.M.d.P.; Deglow, E.R.; Zubizarreta-Macho, Á.; Mourelo, J.P.; Martín, J.M.; Montero, S.H. Accuracy of a Computer-Aided Dynamic Navigation System in the Placement of Zygomatic Dental Implants: An In Vitro Study. J. Clin. Med. 2022, 11, 1436.
45. Stefanelli, L.V.; Mandelaris, G.A.; Franchina, A.; Pranno, N.; Pagliarulo, M.; Cera, F.; Maltese, F.; De Angelis, F.; Di Carlo, S. Accuracy of dynamic navigation system workflow for implant supported full arch prosthesis: A case series. Int. J. Environ. Res. Public Health 2020, 17, 5038.
46. Kang, Y.; Ge, Y.; Ding, M.; Liu-Fu, J.; Cai, Z.; Shan, X. A comparison of accuracy among different approaches of static-guided implant placement in patients treated with mandibular reconstruction: A retrospective study. Clin. Oral Implant. Res. 2024, 35, 251–257.
47. Yan, Q.; Wu, X.; Shi, J.; Shi, B. Does dynamic navigation assisted student training improve the accuracy of dental implant placement by postgraduate dental students: An in vitro study. BMC Oral Health 2024, 24, 600.
48. Jorba-Garcia, A.; Figueiredo, R.; Gonzalez-Barnadas, A.; Camps-Font, O.; Valmaseda-Castellon, E. Accuracy and the role of experience in dynamic computer guided dental implant surgery: An in-vitro study. Med. Oral Patol. Oral Cir. Bucal 2019, 24, E76–E83.
49. Mediavilla Guzmán, A.; Riad Deglow, E.; Zubizarreta-Macho, Á.; Agustín-Panadero, R.; Hernández Montero, S. Accuracy of computer-aided dynamic navigation compared to computer-aided static navigation for dental implant placement: An in vitro study. J. Clin. Med. 2019, 8, 2123.
50. Chen, J.; Ding, Y.; Cao, R.; Zheng, Y.; Shen, L.; Wang, L.; Yang, F. Accuracy of a Novel Robot-Assisted System and Dynamic Navigation System for Dental Implant Placement: A Clinical Retrospective Study. Clin. Oral Implant. Res. 2025, 36, 725–735.
51. Yu, X.; Tao, B.; Wang, F.; Wu, Y. Accuracy assessment of dynamic navigation during implant placement: A systematic review and meta-analysis of clinical studies in the last 10 years. J. Dent. 2023, 135, 104567.
52. Cosola, S.; Toti, P.; Peñarrocha-Diago, M.; Covani, U.; Brevi, B.C.; Peñarrocha-Oltra, D. Standardization of three-dimensional pose of cylindrical implants from intraoral radiographs: A preliminary study. BMC Oral Health 2021, 21, 100.

| Database | Search string |
|---|---|
| PubMed | ((“3D navigation” OR “computer-assisted surgery” OR “robotic-assisted surgery”) AND (“oral surgery” OR “dental surgery” OR “dental implant” OR “implantology” OR “implant”) AND (“static” OR “dynamic” OR “robot assisted” OR “freehand” OR “navigation”) AND (“accuracy” OR “deviation” OR “precision” OR “error”)) |
| Web of Science | TS = ((“3D navigation” OR “computer-assisted surgery” OR “robotic-assisted surgery”) AND (“oral surgery” OR “dental surgery” OR “dental implant” OR “implantology”) AND (“static” OR “dynamic” OR “robot assisted” OR “freehand” OR “navigation”) AND (“accuracy” OR “deviation” OR “precision” OR “error”)) |
| Scopus | TITLE-ABS-KEY (“dental implant” AND (“dynamic navigation” OR “static guide” OR “robotic surgery” OR “computer-assisted surgery”) AND (accuracy OR deviation OR precision OR error)) AND SUBJAREA (dent) AND PUBYEAR 2019 AND PUBYEAR < 2026 |

| Authors | Study Design | Navigation Type | Software/Hardware | Implant Type | Group No. | Sample | Apical Deviation (mm) | Coronal Deviation (mm) | Angular Deviation (°) |
|---|---|---|---|---|---|---|---|---|---|
| Tao et al. [9] | In vitro study | DCAIS, RCAIS | BrainLAB CMF v3.0.6 (Brainlab AG, Munich, Germany) | Conv | 2 | 480 | RS: 1.06 ± 0.59; DCAIS: 0.91 ± 0.56 | RS: 0.96 ± 0.57; DCAIS: 0.83 ± 0.55 | RS: 2.41 ± 1.42; DCAIS: 1 ± 0.48 |
| Pomares Puig et al. [21] | Proof of concept | DCAIS and SCAIS combined | X-Guide | Conv | 2 | 48 | Hybrid: 1.42 ± 0.19 | Hybrid: 1.25 ± 0.55 | Hybrid: 3.74 ± 0.58 |
| Wang et al. [22] | In vitro study | SCAIS, DCAIS | Iris-100 (Lizhi YBK Inc., Shanghai, China) | Conv | 2 | 75 | DCAIS: 1.59 ± 0.55; SCAIS: 1.94 ± 0.72 | DCAIS: 1.19 ± 0.47; SCAIS: 1.27 ± 0.45 | DCAIS: 3.38 ± 2.13; SCAIS: 3.74 ± 1.86 |
| Jorba Garcia et al. [11] | Clinical trial | DCAIS, Freehand | NaviDent v2.0 | Conv | 2 | 22 | DCAIS: 1.42 ± 0.52; FH: 2.49 ± 1.43 | DCAIS: 1.12 ± 0.38; FH: 1.70 ± 0.69 | DCAIS: 4.02 ± 2.80; FH: 7.97 ± 6.25 |
| Zhao et al. [16] | In vitro study | RCAIS, SCAIS, DCAIS, FH | RCAIS: Remebot (Beijing Baihui Weikang Technology Co., Ltd., Beijing, China); CoDiagnostiX | Conv | 4 | 120 | FH: 1.01 ± 0.14; SCAIS: 0.87 ± 0.07; DCAIS: 0.64 ± 0.05; RCAIS: 0.47 ± 0.03 | FH: 1.09 ± 0.33; SCAIS: 0.72 ± 0.33; DCAIS: 0.69 ± 0.29; RCAIS: 0.48 ± 0.18 | FH: 2.74 ± 0.84; SCAIS: 1.99 ± 0.76; DCAIS: 0.85 ± 0.46; RCAIS: 0.53 ± 0.20 |
| Du et al. [23] | In vitro study | Hybrid (s-CAIS + d-CAIS) | Yizhimei (Digital-Care Medical Technology Co., Ltd., Suzhou, China) | Zygo | 2 | 22 | Hybrid: 1.67 | Hybrid: 1.13 ± 0.09 | Hybrid: 1.99 ± 0.17 |
| Taheri Otaghsara et al. [10] | In vitro study | SCAIS, DCAIS | DENACAM (Mininavident AG, Liestal, Switzerland); coDiagnostiX v10.4.2 | Conv | 2 | 40 | SCAIS: 1.45 ± 0.37; DCAIS: 1.58 ± 0.56 | SCAIS: 0.88 ± 0.31; DCAIS: 0.97 ± 0.32 | SCAIS: 4.39 ± 1.17; DCAIS: 4.49 ± 1.88 |
| González Rueda et al. [24] | Clinical trial | SCAIS, DCAIS, Freehand | NaviDent | Zygo | 3 | 60 | SCAIS: 5.33 ± 2.14; DCAIS: 4.92 ± 1.89; FH: 3.20 ± 1.45 | SCAIS: 5.54 ± 1.72; DCAIS: 5.43 ± 2.13; FH: 4.75 ± 1.58 | SCAIS: 5.30 ± 2.80; DCAIS: 7.36 ± 4.12; FH: 8.47 ± 4.40 |
| Xu et al. [3] | In vitro study | RCAIS (ARG, PRG, SRG); DCAIS (ADG, PDG) | DCarer (Dcarer Medical Technology Co., Ltd., Suzhou, China); Active Implant Robot (Yekebot Technology Co., Ltd., Beijing, China); Polaris (NDI Inc., Waterloo, ON, Canada) | Conv | 2 | 216 | DCAIS: 1.11 ± 0.23; DCAIS: 1.07 ± 0.38; RCAIS: 0.29 ± 0.15; RCAIS: 0.50 ± 0.19; RCAIS: 0.36 ± 0.16 | DCAIS: 0.85 ± 0.17; DCAIS: 1.05 ± 0.42; RCAIS: 0.29 ± 0.15; RCAIS: 0.40 ± 0.16; RCAIS: 0.33 ± 0.14 | DCAIS: 1.78 ± 0.73; DCAIS: 1.99 ± 1.20; RCAIS: 0.61 ± 0.25; RCAIS: 1.04 ± 0.37; RCAIS: 0.42 ± 0.18 |
| X. Wang et al. [25] | Comparison study | Freehand, DCAIS | NaviDent | Conv | 2 | 72 | FH: 1.91 ± 1.06; FH: 2.54 ± 1.58; DCAIS: 1.70 ± 0.77; DCAIS: 1.54 ± 0.94 | FH: 1.11 ± 0.58; FH: 1.40 ± 1.01; DCAIS: 1.28 ± 0.55; DCAIS: 1.07 ± 0.52 | FH: 9.73 ± 4.29; FH: 8.15 ± 4.73; DCAIS: 4.03 ± 1.53; DCAIS: 2.88 ± 2.51 |
| Kunakornsawat et al. [26] | Clinical trial | DCAIS | Iris-100 (EPED Inc., Taipei, Taiwan) | Conv | 2 | 144 | DG: 1.23 ± 0.59; MG: 1.41 ± 0.54 | DG: 0.92 ± 0.58; MG: 1.28 ± 0.58 | DG: 2.80 ± 1.17; MG: 2.87 ± 1.67 |
| X.-Y. Wang et al. [27] | In vitro study | DCAIS (active and passive; M-PBR, F-PBR) | Yizhimei (Digital-Care Medical Technology Co., Ltd., Suzhou, China); Iris (EPED Inc., Taipei, Taiwan) | Conv | 4 | 704 | Active: 1.75 ± 0.81; Passive: 2.20 ± 1.68; F-PBR: 2.54 ± 1.60; M-PBR: 1.41 ± 0.62 | Active: 1.48 ± 0.60; Passive: 1.86 ± 1.12; F-PBR: 1.97 ± 1.08; M-PBR: 1.37 ± 0.56 | Active: 4.13 ± 2.39; Passive: 4.62 ± 3.32; F-PBR: 5.48 ± 2.99; M-PBR: 3.27 ± 2.33 |
| Jaemsuwan et al. [28] | Non-randomized prospective study | Freehand, SCAIS, DCAIS | Iris-100; coDiagnostiX v9.7 | Conv | 3 | 60 | FH: 3.60 ± 2.11; SCAIS: 1.66 ± 0.61; DCAIS: 1.86 ± 0.82 | FH: 3.48 ± 2.00; SCAIS: 1.40 ± 0.72; DCAIS: 1.73 ± 0.43 | FH: 10.09 ± 4.64; SCAIS: 4.98 ± 2.16; DCAIS: 5.75 ± 2.09 |
| X. Wang et al. [29] | In vitro study | Freehand, SCAIS, DCAIS | X-Guide X-Clip (X-Nav Technologies LLC, Lansdale, PA, USA) | Conv | 3 | 72 | FH: EXP 9.73 ± 4.29, NOV 8.15 ± 4.73; SCAIS: EXP 7.27 ± 3.82, NOV 7.07 ± 4.38; DCAIS: EXP 3.37 ± 1.56, NOV 3.19 ± 1.89 | FH: EXP 1.11 ± 0.58, NOV 1.40 ± 1.01; SCAIS: EXP 0.83 ± 0.65, NOV 0.92 ± 0.38; DCAIS: EXP 1.09 ± 0.41, NOV 1.14 ± 0.46 | FH: EXP 9.73 ± 4.29, NOV 8.15 ± 4.73; SCAIS: EXP 7.27 ± 3.82, NOV 7.07 ± 4.38; DCAIS: EXP 3.37 ± 1.56, NOV 3.19 ± 1.89 |
| González-Rueda et al. [17] | In vitro study | SCAIS, DCAIS, AR system, Freehand | NaviDent | Zygo | 4 | 80 | SCAIS: 5.33 ± 2.14; DCAIS: 4.92 ± 1.89; AR system: 4.88 ± 1.54; FH: 3.20 ± 1.45 | SCAIS: 5.54 ± 1.72; DCAIS: 5.43 ± 2.13; AR system: 5.64 ± 1.11; FH: 4.75 ± 1.58 | SCAIS: 5.30 ± 2.80; DCAIS: 7.36 ± 4.12; AR system: 9.60 ± 4.25; FH: 8.47 ± 4.40 |
| Zhong et al. [30] | Case study | Freehand, DCAIS | DHC DI2 (Dcarer Medical Technology Co., Ltd., Suzhou, China) | Conv | 2 | 20 | FH: 2.08 ± 0.19; DCAIS: 1.42 ± 0.11 | FH: 1.28 ± 0.14; DCAIS: 0.99 ± 0.06 | FH: 11.38 ± 0.98; DCAIS: 3.84 ± 0.47 |
| Zhou et al. [19] | In vitro study | SCAIS, DCAIS | Yizhimei | Conv | 2 | 80 | SCAIS: 1.37 ± 0.38; DCAIS: 0.34 ± 0.33 | SCAIS: 1.15 ± 0.34; DCAIS: 0.40 ± 0.41 | SCAIS: 2.60 ± 1.11; DCAIS: 0.97 ± 1.21 |
| Wu et al. [20] | Clinical analysis | Manual/automatic DCAIS | DHC-DI2 Dcarer | Conv | 2 | 58 | Manual: 0.99 ± 0.48; Auto: 1.11 ± 0.46 | Manual: 0.91 ± 0.45; Auto: 2.21 ± 1.19° | Manual: 2.82 ± 1.17; Auto: 2.21 ± 1.19 |
| Lysenko et al. [31] | In vitro study | SCAIS, DCAIS, Freehand | OptiTrack 13 Prime; Exoplan v3.0 (Exocad GmbH, Darmstadt, Germany) | Conv | 3 | 63 | NR | NR | SCAIS: 0.53 ± 0.49; DCAIS: 0.49 ± 0.17; FH: ≈ 1.44 ± 0.67 |
| Kivovics et al. [32] | In vitro study | AR-DCAIS, SCAIS, Freehand | Innooral System (Innoimplant Ltd., Budapest, Hungary); Magic Leap One (Magic Leap Inc., Miami, FL, USA) | Conv | 3 | 48 | AR-DCAIS: 1.34 ± 0.41; SCAIS: 1.38 ± 0.41; FH: 2.28 ± 0.74 | AR-DCAIS: 1.27 ± 0.40; SCAIS: 1.31 ± 0.42; FH: 1.93 ± 0.79 | AR-DCAIS: 4.09 ± 2.79; SCAIS: 3.21 ± 1.52; FH: 5.85 ± 2.60 |
| Aydemir & Arısan [33] | Clinical trial | DCAIS, Freehand | NaviDent v1.3; EvaluNav (ClaroNav Technology Inc., Toronto, ON, Canada) | Conv | 2 | 92 | DCAIS: 1.83 (±0.12); FH: 2.51 (±0.21) | DCAIS: 1.01 (±0.07); FH: 1.70 (±0.13) | DCAIS: 5.59 (±0.39); FH: 10.04 (±0.83) |
| Mampilly et al. [18] | In vitro study | DCAIS, Freehand | NaviDent; EvaluNav | Conv | 10 | 60 | DCAIS: 5.89 ± 1.08; FH: 6.95 ± 2.12 | DCAIS: 5.34 ± 1.45; FH: 6.19 ± 3.14 | DCAIS: 7.16 ± 1.76; FH: 9.06 ± 2.18 |
| Chen et al. [13] | In vitro study | RCAIS, DCAIS | Remebot; Yizhimei | Conv | 2 | 80 | RCAIS: 0.77 ± 0.34; DCAIS: 0.95 ± 0.38 | RCAIS: 0.86 ± 0.36; DCAIS: 0.70 ± 0.21 | RCAIS: 1.94 ± 0.66; DCAIS: 3.44 ± 1.38 |
| Chen et al. [34] | In vitro study | RCAIS, DCAIS | THETA Robot (Hangzhou Jianjia Robot Co., Ltd., Hangzhou, China); Yizhimei | Conv | 2 | 20 | RCAIS: 0.69 ± 0.28; DCAIS: 0.86 ± 0.33 | RCAIS: 0.58 ± 0.31; DCAIS: 0.73 ± 0.20 | RCAIS: 1.08 ± 0.66; DCAIS: 2.32 ± 0.71 |
| Feng et al. [35] | Prospective study | DCAIS, SCAIS | Dcarer | Conv | 2 | 40 | SCAIS: 1.50 ± 0.75; DCAIS: 1.18 ± 0.53 | SCAIS: 0.99 ± 0.63; DCAIS: 1.06 ± 0.55 | SCAIS: 3.07 ± 2.18; DCAIS: 3.23 ± 1.67 |
| Yotpibulwong et al. [36] | Clinical trial | SCAIS, DCAIS, Freehand | Iris-100; coDiagnostiX v9.7 | Conv | 4 | 120 | SD: 0.75 ± 0.57; S: 1.40 ± 0.71; D: 1.28 ± 0.50; FH: 2.18 ± 0.9 | SD: 0.62 ± 0.50; S: 1.06 ± 0.67; D: 1.02 ± 0.45; FH: 1.48 ± 0.68 | SD: 1.24 ± 1.41; S: 3.18 ± 2.04; D: 3.28 ± 1.57; FH: 7.50 ± 4.06 |
| Kim et al. [37] | Clinical study | SCAIS, Freehand | Simple Guide Device (custom-designed); Osstem TS III (Osstem Implant Co., Ltd., Seoul, Republic of Korea) | Conv | 4 | 124 | FH: 0.75 ± 0.39; SCAIS: 0.35 ± 0.23 | FH: 1.05 ± 0.55; SCAIS: 0.47 ± 0.26 | FH: 8.03 ± 4.34; SCAIS: 3.00 ± 1.65 |
| Zhang et al. [14] | Retrospective study | DCAIS, RCAIS | Remebot (Beijing Baihui Weikang Technology Co., Ltd., Beijing, China); Dcarer v1.0 | Conv | 2 | 124 | DCAIS: 1.39 ± 0.52; RCAIS: 0.69 ± 0.36 | DCAIS: 1.25 ± 0.54; RCAIS: 0.68 ± 0.36 | DCAIS: 4.09 ± 1.79; RCAIS: 1.37 ± 0.92 |
| Jia et al. [38] | Retrospective study | RCAIS, SCAIS | ADIR (YakeRobot Technology Ltd., Xi’an, China); coDiagnostiX | Conv | 2 | 60 | RCAIS: 0.52 ± 0.18; SCAIS: 1.47 ± 0.65 | RCAIS: 0.43 ± 0.18; SCAIS: 1.31 ± 0.62 | RCAIS: 1.48 ± 0.59; SCAIS: 2.42 ± 1.55 |
| Li et al. [15] | Retrospective study | Freehand, SCAIS, RCAIS | Remebot | Conv | 3 | 106 | FH: 1.78 ± 0.59; SCAIS: 1.24 ± 0.52; RCAIS: 0.65 ± 0.27 | FH: 0.82 ± 0.46; SCAIS: 0.74 ± 0.34; RCAIS: 0.35 ± 0.15 | FH: 6.46 ± 2.21; SCAIS: 2.94 ± 1.71; RCAIS: 1.46 ± 0.57 |
| Shusterman et al. [39] | In vitro study | MR-DCAIS, SCAIS, Freehand | HoloLens 2 (Microsoft Corp., Redmond, WA, USA); ANNA v1.8.5 (MARS Dental AI, Haifa, Israel); M-guide v2.19.0 (M-Soft, MIS Implant Technologies Ltd., HaZafon, Israel) | Conv | 3 | 135 | DCAIS: 0.40 ± 0.16; SCAIS: 0.49 ± 0.12; FH: 1.53 ± 0.66 | DCAIS: 0.33 ± 0.16; SCAIS: 0.36 ± 0.12; FH: 1.08 ± 0.41 | DCAIS: 0.85 ± 0.32; SCAIS: 1.20 ± 0.39; FH: 4.43 ± 1.72 |
| Yimarj et al. [40] | Clinical trial | SCAIS, DCAIS | Iris-100; coDiagnostiX v9.7 | Conv | 2 | 60 | SCAIS: 1.54 ± 0.79; DCAIS: 1.58 ± 0.56 | SCAIS: 1.04 ± 0.67; DCAIS: 1.24 ± 0.39 | SCAIS: 4.08 ± 1.69; DCAIS: 3.78 ± 1.84 |
| Neuschitzer et al. [41] | In vitro study | DCAIS, Freehand | NaviDent | Conv | 2 | 18 | FH: 1.39 ± 0.74; DCAIS: 1.58 ± 0.56 | FH: 1.73 ± 0.77; DCAIS: 1.18 ± 0.36 | FH: 5.17 ± 2.54; DCAIS: 1.29 ± 0.64 |
| Hama & Mahmood [42] | In vitro study | SCAIS, Freehand | CoDiagnostiX | Conv | 2 | 60 | FH: 2.81 ± 0.86; SCAIS: 1.91 ± 0.29 | FH: 0.34 ± 0.32; SCAIS: 0.17 ± 0.13 | FH: 3.67 ± 0.76; SCAIS: 0.87 ± 1.17 |
| Huang et al. [43] | Clinical study | SCAIS, Freehand | Materialise Magics | Conv | 2 | 48 | FH: 1.44 ± 0.63; SCAIS: 0.97 ± 0.39 | FH: 1.07 ± 0.44; SCAIS: 0.48 ± 0.26 | FH: 4.31 ± 3.37; SCAIS: 2.36 ± 1.70 |
| Rueda et al. [44] | In vitro study | DCAIS, Freehand | NaviDent; EvaluNav | Zygo | 2 | 39 | FH: 3.20 ± 1.45; DCAIS: 4.92 ± 1.89 | FH: 4.75 ± 1.58; DCAIS: 5.43 ± 2.13 | FH: 8.47 ± 4.40; DCAIS: 7.36 ± 4.12 |
| Stefanelli et al. [45] | Case study | DCAIS | NaviDent; EvaluNav | Conv | 2 | 77 | Group A: 1.17 ± 0.31; Group B: 0.88 ± 0.37 | Group A: 0.72 ± 0.32; Group B: 0.61 ± 0.33 | Group A: 3.10 ± 1.02; Group B: 2.41 ± 0.98 |
| Kang et al. [46] | Retrospective study | SCAIS | 3Shape Implant Studio (3Shape A/S, Copenhagen, Denmark) | Conv | 3 | 62 | SCAIS: 5.09 ± 0.99; SCAIS: 2.04 ± 0.60; SCAIS: 1.46 ± 1.09 | SCAIS: 4.64 ± 1.19; SCAIS: 2.08 ± 0.85; SCAIS: 0.95 ± 0.47 | SCAIS: 9.11 ± 3.43; SCAIS: 1.91 ± 1.61; SCAIS: 3.02 ± 1.59 |
| Yan et al. [47] | In vitro study | DCAIS | Yizhimei | Conv | 2 | 208 | DCAIS: 1.04 ± 0.47; DCAIS: 1.11 ± 0.46 | DCAIS: 0.91 ± 0.45; DCAIS: 0.89 ± 0.48 | DCAIS: 2.21 ± 1.19; DCAIS: 2.82 ± 1.17 |
| Jorba-Garcia et al. [48] | In vitro study | DCAIS, Freehand | NaviDent; EvaluNav | Conv | 2 | 36 | FH: 2.26 ± 1.11; DCAIS: 1.33 ± 0.50 | FH: 1.50 ± 0.58; DCAIS: 1.29 ± 0.46 | FH: 9.7 ± 5.2; DCAIS: 1.6 ± 1.3 |
| Guzman et al. [49] | In vitro study | SCAIS, DCAIS | CoDiagnostiX; NaviDent | Conv | 2 | 40 | SCAIS: 1.20 ± 0.48; DCAIS: 1.18 ± 0.60 | SCAIS: 0.78 ± 0.43; DCAIS: 0.85 ± 0.48 | SCAIS: 2.95 ± 1.48; DCAIS: 4.00 ± 1.41 |
| Chen et al. [50] | In vitro study | SCAIS, DCAIS, RCAIS | coDiagnostiX v10.4.2; Optitrack; sa-RASS (Hangzhou Jianjia Medical Technology Co., Ltd., Hangzhou, China) Prototype Robot | Conv | 3 | 90 | SCAIS: 0.98 ± 0.32; DCAIS: 0.85 ± 0.27; RCAIS: 0.58 ± 0.14 | SCAIS: 0.71 ± 0.28; DCAIS: 0.62 ± 0.22; RCAIS: 0.50 ± 0.18 | SCAIS: 3.12 ± 1.44; DCAIS: 2.63 ± 1.12; RCAIS: 0.95 ± 0.30 |
| Kaewsiri et al. [12] | Randomized controlled clinical trial | SCAIS, DCAIS | CoDiagnostiX v9.7; IRIS-100 | Conv | 2 | 48 | SCAIS: 1.28 ± 0.46; DCAIS: 1.29 ± 0.50 | SCAIS: 0.97 ± 0.44; DCAIS: 1.05 ± 0.44 | SCAIS: 2.84 ± 1.71; DCAIS: 3.06 ± 1.37 |
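
Each deviation cell in the table above lists one or more group results as a label followed by a mean ± SD. For readers who want to reuse these values, the following is a minimal parsing sketch. It assumes that the label and the mean are separated by a colon or a dash-like character, and it silently skips entries in other notations, such as values given as "(±0.12)" or split into EXP/NOV subgroups. The names `GroupResult` and `parse_deviation_cell` are hypothetical.

```python
import re
from typing import List, NamedTuple


class GroupResult(NamedTuple):
    label: str   # group label as printed in the table, e.g. "DCAIS"
    mean: float  # mean deviation (mm or degrees, depending on the column)
    sd: float    # standard deviation, same unit as the mean


# Label, then a colon or a minus/en-dash separator, then "mean ± SD".
_ENTRY = re.compile(
    r"(?P<label>[A-Za-z][A-Za-z \-]*?)\s*[:\u2212\u2013]\s*"
    r"(?P<mean>\d+(?:\.\d+)?)\s*±\s*(?P<sd>\d+(?:\.\d+)?)"
)


def parse_deviation_cell(cell: str) -> List[GroupResult]:
    """Extract every 'label: mean ± SD' entry found in one table cell."""
    return [
        GroupResult(m["label"].strip(), float(m["mean"]), float(m["sd"]))
        for m in _ENTRY.finditer(cell)
    ]


# Angular deviation cell reported for Zhang et al. [14].
results = parse_deviation_cell("DCAIS: 4.09 ± 1.79; RCAIS: 1.37 ± 0.92")
lowest = min(results, key=lambda r: r.mean)
```

Because unparsed entries are dropped rather than raising an error, a caller should compare the number of results with the number of groups listed for the study before relying on the output.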

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pisla, D.; Bulbucan, V.; Hedesiu, M.; Vaida, C.; Pusca, A.; Mocan, R.; Tucan, P.; Dinu, C.; Pisla, D. Accuracy of Navigation and Robot-Assisted Systems for Dental Implant Placement: A Systematic Review. Dent. J. 2025, 13, 537. https://doi.org/10.3390/dj13110537
