Abstract

Camouflaged target segmentation has been widely used in both civil and military applications, such as wildlife behaviour monitoring, crop pest control, and battle reconnaissance. However, it is difficult to distinguish camouflaged objects and natural backgrounds using traditional grey-level feature extraction. In this paper, a compressive bidirectional reflection distribution function-based feature extraction method is proposed for effective camouflaged object segmentation. First, multidimensional grey-level features are extracted from multiple images with different illumination angles in the same scene. Then, the multidimensional grey-level features are expanded based on Chebyshev polynomials. Next, the first several coefficients are integrated as a new optical feature, which is named the compressive bidirectional reflection distribution function feature. Finally, the camouflaged object can be effectively segmented from the background by compressive feature clustering. Both qualitative and quantitative experimental results prove that our method has remarkable advantages over conventional single-angle or multi-angle grey-level feature-based methods in terms of segmentation precision and running speed.

1. Introduction

Camouflaged object segmentation has been extensively applied in many fields, e.g., crop pest control [1,2,3,4], disaster rescue [5,6], and battlefield reconnaissance [7,8,9]. Conventional segmentation methods usually use one-dimensional or multidimensional grey-level features to distinguish the object of interest from a natural background. However, these algorithms face two technical difficulties. On the one hand, the segmentation precision is hard to guarantee, since the grey values of the camouflaged object and the background are close to each other and the boundary between them is blurred. On the other hand, multidimensional grey-level feature-based methods suffer from heavy computation and serious data redundancy, which makes it difficult to improve the computing speed of the segmentation algorithm. Therefore, it is necessary to design new camouflaged object segmentation methods that exploit other effective optical features.

Recent research progress has shown that there are two main types of camouflaged object segmentation methods based on grey-level features, including one-dimensional grey-level feature-based approaches and multidimensional grey-level feature-based approaches. Some methods use the one-dimensional grey-level feature for object segmentation. To solve the segmentation difficulties caused by brightness and contrast changes, varying inclination and shadow, Goccia et al. [10] optimised the feature weights and the prototype sets by a new gradient-based learning algorithm to achieve good recognition performances. To discriminate the individual objects in natural outdoor scenes, Zhao et al. [11] converted the obtained RGB image into an HSI image, and used the H grey-level threshold to segment out the object region in the image. For separating the camouflaged objects from the image with noise and shadow, Zheng et al. [12] introduced a mean-shift procedure into the segmentation algorithm based on multiple colour features. To overcome the difficulty of object detection caused by nonobvious shadow, Chen et al. [13] proposed a detection method based on the image grey value feature, which avoided the dependence of image on solar elevation. In order to achieve accurate segmentation of objects in a natural environment, Guo et al. [14] proposed an image segmentation method combining the crow search algorithm and K-means clustering algorithm, by which the segmentation accuracy of the proposed algorithm was significantly improved. However, these algorithms were conducted based on the considerable difference between object and background. When the grey values of object and background are close to each other, the accuracy of the algorithms will be reduced. Some other methods use multidimensional grey-level features extracted from a series of images to segment the object from background. 
To explore methods for rapid detection of different leaf objects, Liu [15] extracted feature bands from images with multiple light angles and multispectral bands to find the most suitable angle and band for distinguishing healthy leaves from diseased ones. To analyse the difference between the camouflaged object and different backgrounds in hyperspectral images, Yan et al. [16] evaluated the camouflage effect of a camouflaged object in the background from spectral reflectance, information theory, spectral angle, and spectral gradient, respectively. For avoiding the problem that some objects can only be detected at specific angles, Yang [17] proposed a method of object detection using multiple images taken under a multi-angle light source. To improve the accuracy of textile colour segmentation, Wu [18] proposed a method based on hyperspectral imaging to obtain the colour number of textiles by clustering the multidimensional features. Wang et al. [19] selected several distinct characteristic bands, based on which they set a threshold of the image to segment the objects from the similar background. Wang et al. [20] acquired many images of an object under different lighting conditions to obtain samples of the surface’s BRDF, which help to separate the material types by segmentation. Skaff [21] classified materials using illumination by spectral light from multiple different incident angles, where the incident angle and/or spectral content of each light source was selected based on a mathematical clustering analysis of training data. However, their algorithms required a lot of image collection work and a large amount of calculation to achieve the appropriate feature dimensions, which led to a low speed of computing.

In conclusion, the existing methods are deficient in accuracy and speed, mainly because (1) the one-dimensional grey-level feature of the camouflaged object is very close to background; (2) when the camouflaged object and background are segmented by multidimensional grey-level feature-based methods, there is serious data redundancy, which leads to excessive computation. To solve these problems, a compressive bidirectional reflection distribution function (BRDF)-based feature extraction method is proposed. First, the grey-level feature is extracted from a single frame image. The one-dimensional grey levels extracted from multiple images with different illumination angles constitute the multidimensional grey-level feature. Then, we expand the multidimensional grey-level features by Chebyshev polynomials. Next, the first several coefficients of the polynomials are used as the compressive BRDF feature. Finally, we segment the camouflaged object from background by clustering the compressive BRDF feature of each pixel. The method can be used for the detection of small camouflaged objects by near-earth UAVs and the detection of large ground camouflaged objects by geostationary satellites.

The main contributions of our study can be summarised as follows:

- A multidimensional feature extraction method using multi-angle illumination images is proposed to improve the accuracy of object segmentation. The influence of the illumination angle on reflectance is considered to help enrich the details of the object;

- We present a compression method for multidimensional BRDF features to improve the computing speed of feature clustering. In addition, the degree of retention of valid information during the compression process is also considered;

- Experimental results demonstrate the usefulness and effectiveness of the proposed compressive BRDF feature extraction method in both segmentation accuracy and execution time.

This paper is organised as follows: Section 2 introduces the background of our study; in Section 3, the camouflaged object segmentation method based on compressive BRDF feature extraction is introduced in detail; Section 4 discusses both the quantitative and qualitative experimental results; finally, a summary of this work is drawn in Section 5.

2. Background

A review of conventional object segmentation methods based on multiple features shows that these methods rely on differences in some physical properties between the object and the background. We focus on the differences in reflectance and roughness between the object and background [22]. In this section, the BRDF model is introduced. The BRDF describes the ratio of the radiance reflected in the emergent direction to the irradiance arriving from the incident direction, expressed as [23]

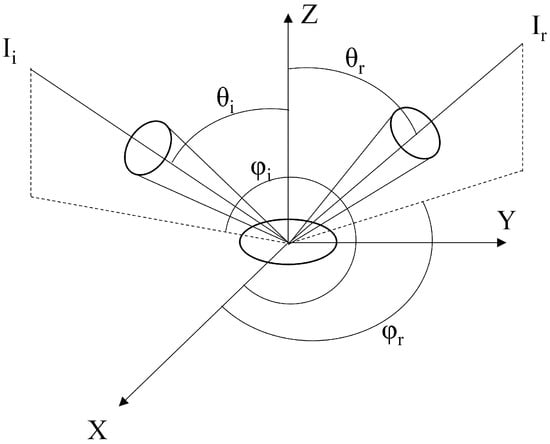

where $\theta_i$ is the incident zenith angle, $\theta_r$ is the emergent zenith angle, $\phi_i$ is the incident orientation angle, $\phi_r$ is the emergent orientation angle, and $\lambda$ means the wavelength of incident light. In Equation (1), $\mathrm{d}L_r$ represents the radiance in the emergent direction and $\mathrm{d}E_i$ represents the irradiance in the incident direction. Figure 1 shows the light angles in the BRDF model.
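For reference, the verbal definition above matches the standard BRDF form introduced in [23]. A sketch in that notation follows; the symbol names ($f_r$, $\theta_i$, $\phi_i$, $\theta_r$, $\phi_r$, $\lambda$, $L_r$, $E_i$) are assumptions chosen to agree with the definitions in this section and may differ from those of the original Equation (1):

$$
f_r(\theta_i, \phi_i, \theta_r, \phi_r, \lambda) = \frac{\mathrm{d}L_r(\theta_i, \phi_i, \theta_r, \phi_r, \lambda)}{\mathrm{d}E_i(\theta_i, \phi_i, \lambda)}
$$

Since radiance is measured in W·m⁻²·sr⁻¹ and irradiance in W·m⁻², the BRDF in this form has units of sr⁻¹.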

Figure 1.

Diagram of the light angles in BRDF model.

Researchers have proposed many BRDF models to describe different scenes. One of the classical models is the Cook-Torrance model [24], which assumes that the surface of the medium is composed of a series of plane elements whose direction vectors follow a Gaussian distribution. The Cook-Torrance model can be expressed as

where D denotes the facet slope distribution function and G represents the geometrical attenuation factor. The values of D and G are connected with the changes in incident angle and emergent angle of light.

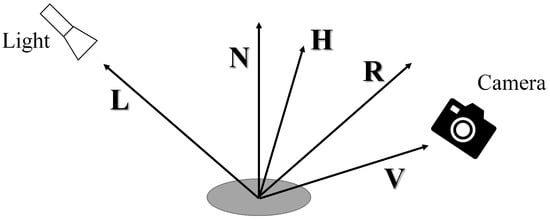

The surfaces of the object and background are observed as a mass of tiny elements. Figure 2 shows the reflection process occurring on the element of surface.

Figure 2.

Diagram of the reflection process.

As shown in Figure 2, L is the unit vector in the direction of light source. N is the unit surface normal. H is the unit internal bisector of V and L. R is the unit vector in the reflection direction of incident light. V is the unit vector in the direction of the viewer. The surface element is not an ideal smooth surface. The facet slope distribution function D is expressed as

where $\alpha$ is the angle between N and H, and $m$ is the root mean square slope of facets. The value of D is related to the roughness of the material surface.

The geometrical attenuation factor G is expressed as

In other words, G means the degree to which the small element is obscured from incident light; so, it is related to the reflectivity of the material surface.
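As a concrete illustration of the two factors, the sketch below evaluates D and G for given direction vectors. It assumes the Beckmann distribution and the min-based attenuation term from the Cook-Torrance paper [24]; the function names `beckmann_d` and `geometric_g` and the symbol `m` for the root mean square slope are illustrative choices, not taken from this paper.

```python
import numpy as np


def _unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def beckmann_d(n, l, v, m):
    """Beckmann facet slope distribution (assumed form from [24]).

    D = exp(-(tan(a) / m)^2) / (m^2 * cos(a)^4), where a is the angle between
    N and the bisector H of L and V, and m is the root mean square slope.
    """
    n, l, v = _unit(n), _unit(l), _unit(v)
    h = _unit(l + v)
    cos_a = np.clip(np.dot(n, h), 1e-12, 1.0)
    tan2_a = (1.0 - cos_a**2) / cos_a**2
    return float(np.exp(-tan2_a / m**2) / (m**2 * cos_a**4))


def geometric_g(n, l, v):
    """Geometrical attenuation factor (assumed form from [24]).

    G = min(1, 2(N.H)(N.V)/(V.H), 2(N.H)(N.L)/(V.H)).
    """
    n, l, v = _unit(n), _unit(l), _unit(v)
    h = _unit(l + v)
    nh, nv, nl, vh = np.dot(n, h), np.dot(n, v), np.dot(n, l), np.dot(v, h)
    return float(min(1.0, 2.0 * nh * nv / vh, 2.0 * nh * nl / vh))
```

With N, L and V all pointing along the surface normal, the sketch gives D = 1/m² and G = 1; a smaller `m` (a smoother surface) therefore produces a higher and narrower peak in D.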

In this paper, we conduct object segmentation on a scene in which both the camouflaged object and background exist. The Cook-Torrance model is applicable, as it considers both specular and diffuse reflectance components. Equation (2) has several input parameters. We examined the relationship between the reflectance and one of the input parameters, to make the feature of material easy to observe. The following assumptions are given:

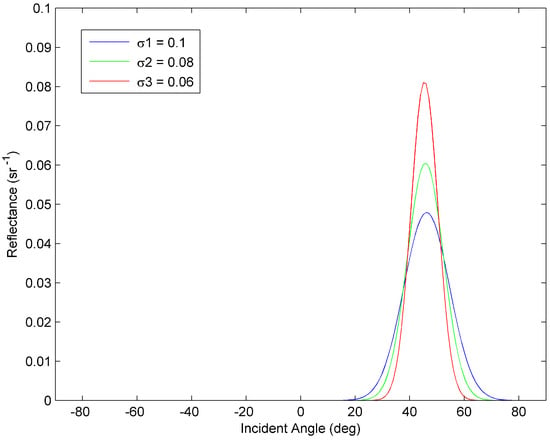

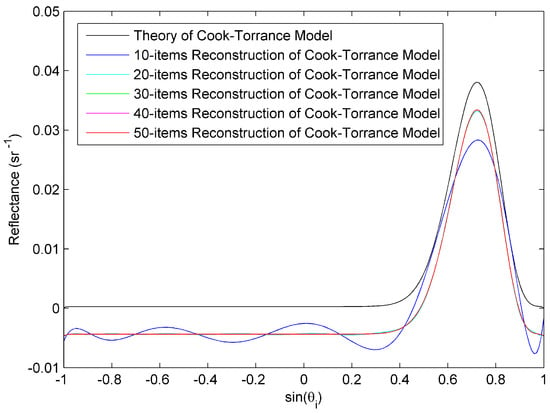

where C stands for a constant in the visible wavelength range. Equation (3) means the incident and emergent angles are in the same plane. Then, Equation (2) can be expressed as a function with the incident angle as the only independent variable. Figure 3 shows the Cook-Torrance model with the above assumptions.

Figure 3.

Diagram of the Cook-Torrance model (reflectance vs. incident angle).

As shown in Figure 3, the curve of reflectance against incident angle depends on the root mean square slope of facets, which affects the value of D. The camouflaged object is made of a different material from the background, so their BRDF curves are usually not consistent. Therefore, the object segmentation method can be implemented based on the BRDF feature.

3. Model Construction of Camouflaged Object Segmentation Method Based on Compressive BRDF Feature Extraction

In order to improve the accuracy and speed of camouflaged object segmentation, a compressive BRDF feature extraction method is introduced in this section. The compressive BRDF feature is used in image clustering to achieve the segmentation of the camouflaged object. It is obtained by reducing the dimension of the multidimensional grey-level features extracted from the multi-illumination-angle images, which improves the running speed of the method while preserving its accuracy.

3.1. Extraction of the Compressive BRDF Feature

When a continuous function is defined on the interval [−1, 1], it can be expanded in Chebyshev polynomials, expressed as [25]

where $T_n(x)$ is the $n$-th Chebyshev polynomial, which can be obtained by the recursive formulas, expressed as [26]

The Chebyshev polynomials are orthogonal, and the Runge phenomenon can be minimised when they are used for function interpolation. The relationship between the terms conforms to the following formula,

In Section 2, assumptions are proposed to transform the BRDF into a function of a single angular variable. Once this variable is mapped to the range [−1, 1] and taken as the independent variable of Equation (2), the function satisfies the condition for Chebyshev polynomial expansion. The coefficient of each expansion term is calculated as follows:
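For reference, the standard textbook forms of the relations described in this subsection are sketched below. The symbols $f$, $c_n$ and $T_n$, and the convention of using a separate factor for the $n = 0$ coefficient, are assumptions; the numbering and notation of the original equations may differ.

$$
\begin{aligned}
f(x) &= \sum_{n=0}^{\infty} c_n T_n(x), \qquad x \in [-1, 1], \\
T_0(x) &= 1, \quad T_1(x) = x, \quad T_{n+1}(x) = 2x\,T_n(x) - T_{n-1}(x), \\
\int_{-1}^{1} \frac{T_m(x)\,T_n(x)}{\sqrt{1 - x^2}}\,\mathrm{d}x &=
\begin{cases} 0, & m \neq n, \\ \pi, & m = n = 0, \\ \pi/2, & m = n \neq 0, \end{cases} \\
c_0 &= \frac{1}{\pi} \int_{-1}^{1} \frac{f(x)}{\sqrt{1 - x^2}}\,\mathrm{d}x, \qquad
c_n = \frac{2}{\pi} \int_{-1}^{1} \frac{f(x)\,T_n(x)}{\sqrt{1 - x^2}}\,\mathrm{d}x \quad (n \geq 1).
\end{aligned}
$$

The coefficient formula follows from the orthogonality relation: multiplying the expansion by $T_n(x)/\sqrt{1 - x^2}$ and integrating over [−1, 1] removes every term except the $n$-th.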

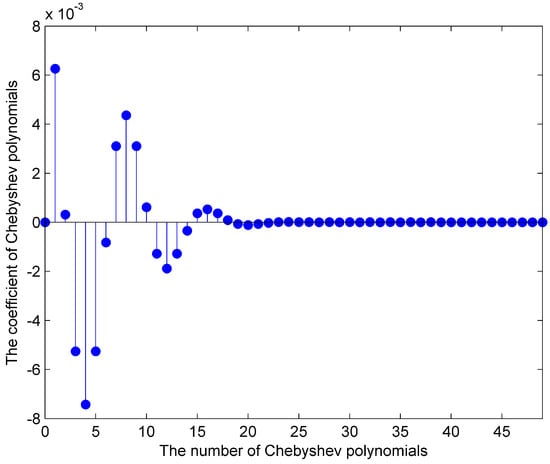

Figure 4 shows the coefficients of the first 50 Chebyshev polynomials. Figure 5 shows the reconstruction result of the Cook-Torrance model based on the first 20 coefficients and the Chebyshev polynomials.
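To make the coefficient calculation concrete, the following sketch approximates the first few Chebyshev coefficients of a function on [−1, 1] with Gauss-Chebyshev quadrature. It is a minimal illustration under stated assumptions: the function name `chebyshev_coefficients`, the number of quadrature nodes, and the convention $f(x) = \sum_n c_n T_n(x)$ with a halved $n = 0$ weight are choices made here, not details taken from this paper.

```python
import numpy as np


def chebyshev_coefficients(f, n_coeffs, n_nodes=256):
    """Approximate the first n_coeffs Chebyshev coefficients of f on [-1, 1].

    Gauss-Chebyshev quadrature: with nodes x_k = cos(t_k) and
    t_k = (2k - 1) * pi / (2M), the integral of g(x) / sqrt(1 - x^2) over
    [-1, 1] is approximated by (pi / M) * sum_k g(x_k), and
    T_n(x_k) = cos(n * t_k). f must accept a NumPy array.
    """
    k = np.arange(1, n_nodes + 1)
    t = (2 * k - 1) * np.pi / (2 * n_nodes)
    fx = f(np.cos(t))                     # samples of f at the nodes
    n = np.arange(n_coeffs)[:, None]      # column of orders 0 .. n_coeffs - 1
    c = 2.0 / n_nodes * np.sum(fx * np.cos(n * t), axis=1)
    c[0] /= 2.0                           # the n = 0 term uses 1/pi, not 2/pi
    return c
```

As a sanity check, passing `lambda x: 4 * x**3 - 3 * x` (which is $T_3$) returns a coefficient of one at index 3 and values near zero elsewhere.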

Figure 4.

Diagram of the coefficients of Chebyshev polynomial expansion.

Figure 5.

Diagram of the theory and reconstruction of the Cook-Torrance model.

As shown in Figure 5, the Cook-Torrance model can be reconstructed by using the first several terms of the Chebyshev expansion. When the number of terms is greater than 20, the curves in the x-coordinate range [0, 1] are not much different. The x-coordinate range [0, 1] is the range of incident angles in the experiment. When fewer than 20 terms are involved in the reconstruction, removing terms reduces the accuracy of the reconstructed curve but increases the computing speed of compressive BRDF feature extraction. Although there are differences between the reconstructed curves and the original curve, the reconstructed curves still reflect the trend of the original curve to some extent.
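The trade-off between the number of retained terms and reconstruction accuracy can be explored with a short sketch. The curve used here (`example_curve`) is a hypothetical smooth, single-lobed profile standing in for a BRDF curve; it is not the Cook-Torrance model or the authors' data, and the fit degree of 60 is an arbitrary choice.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def example_curve(x):
    """Hypothetical smooth, single-lobed curve standing in for a BRDF profile."""
    return np.exp(-((x - 0.3) / 0.2) ** 2)


def truncation_errors(f, term_counts, fit_degree=60, n_eval=1001):
    """Maximum reconstruction error on [0, 1] when keeping only the first terms.

    The reference coefficients come from Chebyshev interpolation of f on
    [-1, 1]; each entry of term_counts is the number of leading coefficients
    kept for the reconstruction.
    """
    coeffs = C.chebinterpolate(f, fit_degree)   # coefficients c_0 .. c_fit_degree
    x = np.linspace(0.0, 1.0, n_eval)
    exact = f(x)
    return {
        n: float(np.max(np.abs(C.chebval(x, coeffs[:n]) - exact)))
        for n in term_counts
    }
```

Calling `truncation_errors(example_curve, [5, 20, 40])` returns one maximum error per term count, which makes it easy to see how quickly the error falls as terms are added for a given curve shape.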

In this paper, we use a set of images with multiple illumination angles to extract the BRDF curves of the camouflaged object and background. The first several coefficients of the Chebyshev polynomial expansion of the BRDF curve are taken as the compressive BRDF feature. In the process of feature extraction, data loss is inevitable; however, the retained data can still reflect the difference between object and background.

3.2. Object Segmentation Based on the Compressive BRDF Feature

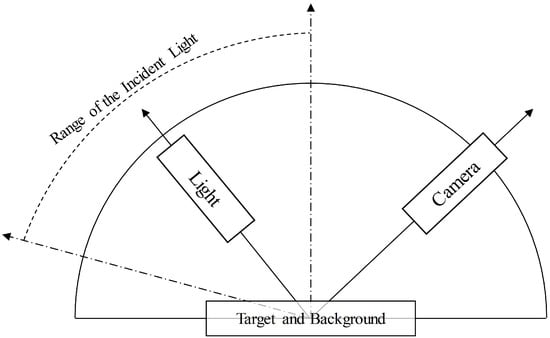

According to the above theory, the compressive BRDF feature can be extracted from multi-angle illumination images. The images can be taken with the experimental platform shown in Figure 6.

Figure 6.

Diagram of the BRDF model measuring equipment.

As shown in Figure 6, the incident angle of light varies between 0° and 76° in steps of 2°. The emergent angle is fixed at 45°. The incident light comes from a white LED light source. It is a broad-band source whose energy is mainly in the wavelength range from 450 nm to 500 nm. The divergence of the beam is about 120°. The camera is a single-channel camera with a focal length of 25 mm. The image size is 640 pixels × 512 pixels. With this testing device, 39 images of the same scene can be obtained. The same pixel in each image corresponds to the same position on the object or background, so a 39-dimensional grey-level feature can be obtained for each pixel.

By discretising Equations (15) and (16), the following formulas are obtained:

where N is the number of images; represents the grey level of images; and denotes the input parameter of BRDF, expressed as

Equations (17) and (18) are used to perform the same processing on each pixel to obtain the low-dimensional compressive BRDF feature. By clustering the pixels into two categories, the camouflaged object and background are segmented. The steps of the proposed camouflaged object segmentation method are listed in Table 1.

Table 1.

Steps of the proposed camouflaged object segmentation method.
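The per-pixel feature extraction step can be sketched as follows. This is not the authors' discretised formulas: the sketch swaps in a least-squares Chebyshev fit (`numpy.polynomial.chebyshev.chebfit`) to obtain the leading coefficients, and the mapping from incident angle to the variable x (here x = cos of the angle) is an assumption made only for illustration. The function name `compressive_features` is hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def compressive_features(stack, angles_deg, n_coeffs=7):
    """Fit each pixel's grey-level-vs-angle curve and keep the first coefficients.

    stack      : array of shape (N, H, W), one grey image per incident angle
    angles_deg : N incident angles in degrees
    returns    : array of shape (H, W, n_coeffs)
    """
    stack = np.asarray(stack, dtype=float)
    n_img, height, width = stack.shape
    # ASSUMPTION: map each incident angle to x = cos(angle), which lies in [-1, 1].
    x = np.cos(np.radians(np.asarray(angles_deg, dtype=float)))
    curves = stack.reshape(n_img, height * width)   # one column per pixel
    # Least-squares fit of degree n_coeffs - 1 for all pixels at once.
    coeffs = C.chebfit(x, curves, n_coeffs - 1)     # shape (n_coeffs, H * W)
    return coeffs.T.reshape(height, width, n_coeffs)
```

For the acquisition described above, `angles_deg` would be `np.arange(0, 78, 2)` (39 angles) and `stack` would hold the 39 registered grey images, so each pixel's 39-dimensional grey-level feature is reduced to `n_coeffs` values.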

The K-means clustering algorithm is an unsupervised clustering algorithm proposed by MacQueen [27], which divides the dataset into K data subsets by minimising the error function. In this paper, the error function is expressed as

where K = 2 denotes the number of clusters, $c_{i,j}$ represents the compressive BRDF feature of the pixel in row $i$ and column $j$, $C_r$ is the cluster of number $r$, and $\mu_r$ represents the central value of $C_r$.
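A minimal sketch of the clustering step is given below, assuming the standard K-means error function, i.e. the sum over both clusters of the squared Euclidean distance between each pixel feature and its cluster centre. The deterministic initialisation and the function name `kmeans_two_clusters` are assumptions made for reproducibility of the example, not details from this paper.

```python
import numpy as np


def kmeans_two_clusters(features, n_iter=50):
    """Cluster per-pixel feature vectors into K = 2 groups (Lloyd's algorithm).

    features : array of shape (H, W, D)
    returns  : (labels of shape (H, W), centres of shape (2, D), error E)
    """
    h, w, d = features.shape
    pts = features.reshape(-1, d).astype(float)

    def assign(centres):
        # Label each point with the index of its nearest centre.
        dist = np.linalg.norm(pts[:, None, :] - centres[None, :, :], axis=2)
        return np.argmin(dist, axis=1)

    # ASSUMPTION: deterministic start from the points with the smallest and
    # largest feature norm.
    norms = np.linalg.norm(pts, axis=1)
    centres = np.stack([pts[np.argmin(norms)], pts[np.argmax(norms)]])
    for _ in range(n_iter):
        labels = assign(centres)
        new_centres = centres.copy()
        for r in range(2):
            if np.any(labels == r):
                new_centres[r] = pts[labels == r].mean(axis=0)
        converged = np.allclose(new_centres, centres)
        centres = new_centres
        if converged:
            break
    labels = assign(centres)
    # Error function: sum of squared distances to the assigned centre.
    error = float(np.sum((pts - centres[labels]) ** 2))
    return labels.reshape(h, w), centres, error
```

The returned label image is a binary segmentation; which of the two labels corresponds to the camouflaged object is not decided by the clustering itself and has to be resolved separately.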

4. Experiment and Analysis

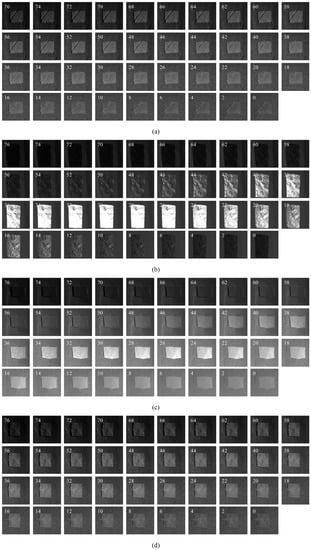

4.1. Analysis of Feature Compression of Different Dimensions

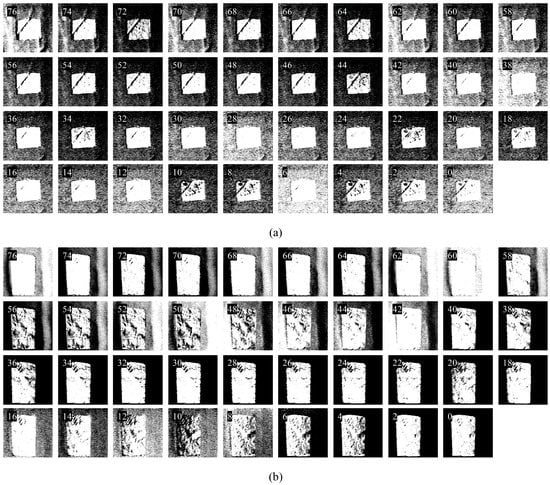

In order to verify that low-dimensional compression of the multi-angle grey-level feature achieves good accuracy and running speed, this section presents the results of the camouflaged object segmentation method using compressed features of different dimensions. All code in this work is implemented in MATLAB 2012b on a PC with an Intel i7-9700 CPU and 32.0 GB RAM. The program runs on one CPU core. There are five groups of original images, with 39 images in each group, as shown in Figure 7. In groups 1 to 4, the size of the single-channel grey images to be segmented is 250 pixels × 250 pixels, and the object and background are flat. The size of the images in group 5 is 300 pixels × 300 pixels, and the top-left corner of these images has uneven folds. The central region of each image contains leaves, and the background is camouflage cloth of the same colour.

Figure 7.

Diagram of all the images to be segmented: (a) Group 1; (b) Group 2; (c) Group 3; (d) Group 4; (e) Group 5. Note that the incident angles of light are marked on the images.

In each diagram of Figure 7, from left to right and from top to bottom, the light incident angles range from 76° to 0° with an interval of 2°. As shown in Figure 7, in some images the object and background can be easily distinguished by the human eye. In the other images, however, the grey values of the object and background are very close, which makes segmentation difficult.

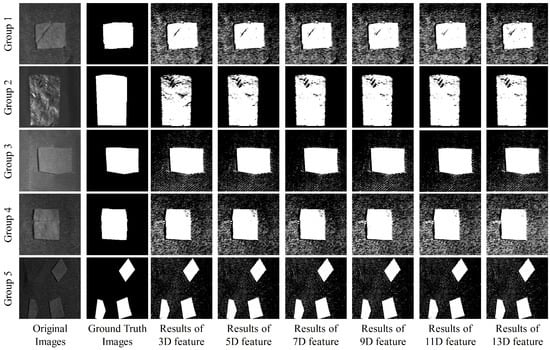

Figure 8 shows the effect of camouflaged object segmentation based on different dimensional compressive BRDF features.

Figure 8.

Diagram of the segmentation result images achieved by different dimensional features.

As shown in Figure 8, the rows from first to last show the experimental results of groups 1 to 5. The first column shows the original images with an incident angle of 44°. The second column shows the ground truth images with the object position manually labelled. The third to eighth columns show the segmentation results based on compressive BRDF features of different dimensions. In Figure 8, the objects are segmented well regardless of the number of feature dimensions. To evaluate the segmentation performance, the probability and the false alarm rate are defined as follows [28,29]:

where represents the pixel number of the true object area in the result images; denotes the pixel number of the ground truth object; is the pixel number of the false object area in the result images; and w and h are the width and height of images, respectively. The probability and the false alarm rate of segmentation results based on different dimensional compressive BRDF features are shown in Table 2. In addition, the running time of the experiments, recorded by MATLAB’s built-in function, is shown in Table 3.
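The two measures can be computed from binary masks as sketched below. The exact formulas are an assumption inferred from the verbal definitions above: the probability is taken as the number of true object pixels in the result divided by the number of ground-truth object pixels, and the false alarm rate as the number of false object pixels divided by the image area w × h. The function name `segmentation_metrics` is hypothetical.

```python
import numpy as np


def segmentation_metrics(result, ground_truth):
    """Return (probability, false_alarm_rate) for two binary object masks.

    probability      = true object pixels in result / ground-truth object pixels
    false_alarm_rate = false object pixels in result / (w * h)
    """
    result = np.asarray(result, dtype=bool)
    ground_truth = np.asarray(ground_truth, dtype=bool)
    if result.shape != ground_truth.shape:
        raise ValueError("masks must have the same shape")
    n_true = np.count_nonzero(result & ground_truth)    # correctly marked pixels
    n_gt = np.count_nonzero(ground_truth)               # labelled object pixels
    n_false = np.count_nonzero(result & ~ground_truth)  # background marked as object
    h, w = ground_truth.shape
    probability = n_true / n_gt if n_gt else float("nan")
    return probability, n_false / (w * h)
```

Under these definitions a result that marks the whole image as object reaches a probability of 1 but also a high false alarm rate, which is why the two measures are reported together.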

Table 2.

Probability and false alarm rate of segmentation results obtained by different dimensional features.

Table 3.

Running time of the proposed method obtained by different dimensional features.

As shown in Table 2 and Table 3, the difference in dimensions has little effect on the performance of the proposed compressive BRDF feature-based method. The running times of groups 1 to 4 are close to each other. Group 5 processed larger images than the first four groups; so, the running time is longer. Therefore, when comparing with other methods, we take the 7-dimensional compressive BRDF feature for the experiments.

4.2. Comparative Analysis of Different Camouflaged Object Segmentation Methods

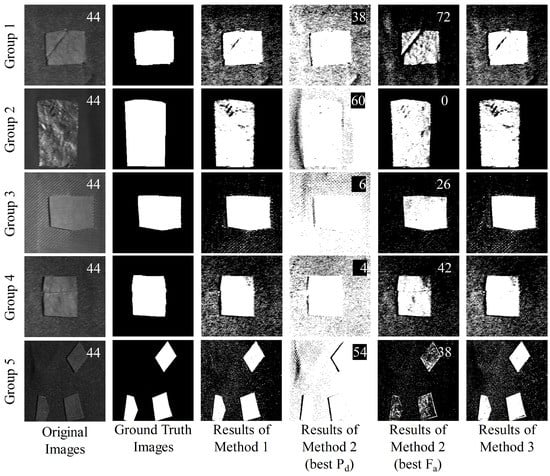

The comparisons between the compressive BRDF feature proposed in this paper, the grey-level feature used by Guo et al. [14], and the multi-dimensional grey-level feature constructed by Wang et al. [19] are conducted in this section. Method 1 is a clustering method using the 7-dimensional compressive BRDF features proposed in this paper. Method 2 uses the 1-dimensional grey feature for clustering. Method 3 uses the 39-dimensional grey-level feature for clustering. The performance of the three methods is shown in Figure 9.

Figure 9.

Diagram of the segmentation result images. Note that the incident angles of light are marked on the original images and the result images of Method 2.

In Figure 9, each row shows the result images of one group obtained by the three methods. The first and second columns show the original images and the ground truth images. The third column shows the result images achieved by Method 1. All the images in Figure 7 are segmented using Method 2, with the fourth column showing the result images with the highest probability and the fifth column showing the result images with the lowest false alarm rate. The numbers on them represent the incident light angle of the original images. The sixth column shows the result images obtained by Method 3. As shown in Figure 9, the performance of Method 2 is unstable, which means that the result images are easily affected by the incident light angle. The performance of Method 3 is better than that of Method 2 and close to that of Method 1; however, it needs more running time. The probability and false alarm rate of the segmentation results obtained by the three methods are shown in Table 4. The running times of the experiments are shown in Table 5.

Table 4.

Probability and false alarm rate of segmentation results obtained by the three methods.

Table 5.

Running time of the three methods.

As shown in Table 4, compared with Method 2, the probability of segmentation achieved by Method 1 is more stable. Whether the Method 2 result images with the highest probability or those with the lowest false alarm rate are considered, their visual segmentation effect is not as good as that of Method 1. Compared with Method 2, the probability of the proposed method is improved by 17.44% and the false alarm rate is reduced by 39.98%. Compared with Method 3, the probability of segmentation is improved by 2.26%, with a slightly higher false alarm rate. As shown in Table 5, the running time of Method 1 is reduced by 42.70% compared with Method 3. In conclusion, compared with one-dimensional and multidimensional grey-level features, the presented compressive BRDF feature offers remarkable advantages in the comprehensive performance of camouflaged object segmentation.

4.3. Comparison of Performance for Methods Based on Grey Images

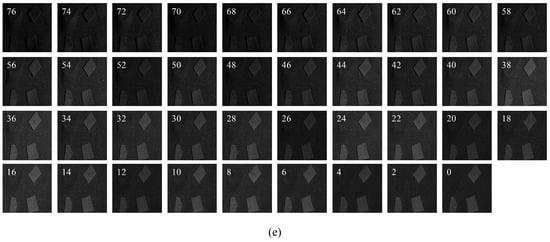

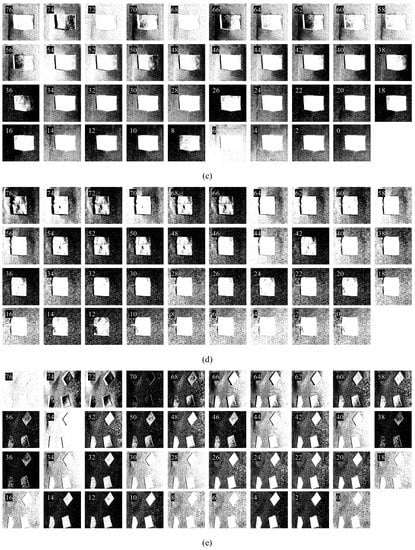

In Section 4.2, we compare the result images obtained by Method 1 with the best-performance result images obtained by Method 2. However, the highest probability and the lowest false alarm rate do not occur in the same resulting image. A detailed comparison of performance between the proposed Method 1 and Method 2 based on one-dimensional grey-level feature is shown in this section. The result images obtained by Method 2 are shown in Figure 10.

Figure 10.

Diagram of the segmentation result images obtained by Method 2: (a) Group 1; (b) Group 2; (c) Group 3; (d) Group 4; (e) Group 5. Note that the incident angles of light are marked on the images.

In each diagram of Figure 10, from left to right and from top to bottom, the light incident angles range from 76° to 0°. As shown in Figure 10, the segmentation effect is unstable. The probability and false alarm rate of the segmentation results obtained by Method 2 are shown in Table 6.

Table 6.

Probability and false alarm rate of segmentation results obtained by Method 2.

We compare the performance data of result images obtained by Method 2 with the performance data in the second column of Table 4 in Section 4.2. The result of the comparison is shown in Table 7.

Table 7.

Comparison of the performance of Method 1 and Method 2.

As shown in Table 6 and Table 7, the probability of Method 1 is higher than that of 61.03% of the result images obtained by Method 2, and the false alarm rate of Method 1 is lower than that of 85.64% of those images. No result image of Method 2 is better than Method 1 on both measures. The running times of the experiments are shown in Table 8.

Table 8.

Running time of the segmentation results obtained by Method 2.

5. Conclusions

5.1. Summary of Our Method

In this paper, a compressive BRDF-based feature extraction method for camouflaged object segmentation is proposed. First, multidimensional grey-level features are extracted from multiple images with different illumination incident angles in the same scene. Then, we conduct the Chebyshev polynomials expansion on the multidimensional grey-level features. Next, we use the first several coefficients as a new feature, which is named the compressive BRDF feature. Finally, by clustering the compressive BRDF feature of each pixel, the camouflaged object is segmented from the background. Both qualitative and quantitative experimental results verify that the comprehensive performance of the presented camouflaged object segmentation method has remarkable advantages over conventional methods.

5.2. Limitations

The proposed method has some limitations. It is suitable for situations where the multi-dimensional grey-level features of the camouflaged object can be extracted. However, it cannot be used in instances where the camouflaged object is in an image with a fixed illumination angle and a fixed shooting angle. In addition, the false alarm rate of the presented method needs to be further reduced by improving the method.

5.3. Application Discussion

The proposed method can be applied in drone reconnaissance when the detector revisits the same location multiple times. In this application, image registration is required before segmentation. Another application is the detection of large camouflaged targets on the ground using geostationary satellites. In this case, the loss of light intensity during atmospheric transmission needs to be considered. The proposed method is still at the stage of theoretical research, and further study is needed for practical application.

Author Contributions

Conceptualization, X.C.; data curation, Y.X. and X.K.; formal analysis, A.S.; funding acquisition, G.G. and M.W.; investigation, X.C.; methodology, X.C. and M.W.; project administration, Q.C.; resources, Q.C.; supervision, G.G. and M.W.; validation, G.G. and M.W.; visualization, X.K.; writing—original draft, X.C.; writing—review and editing, Y.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant (62001234, 62201260), the Natural Science Foundation of Jiangsu Province under Grant (BK20200487), the Shanghai Aerospace Science and Technology Innovation Foundation under Grant (SAST2020-071), the Fundamental Research Funds for the Central Universities under Grant (JSGP202102), the Equipment Pre-research Weapon Industry Application Innovation Project under Grant (627010402), and the Equipment Pre-research Key Laboratory Fund Project under Grant (6142604210501).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data that support the findings of this study are available at https://github.com/MinjieWan/Compressive-BRDF-based-Feature-Extraction-Method-for-Camouflaged-Object-Segmentation.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Shinde, R.C.; Jibu, M.C.; Patil, C. Segmentation Technique for Soybean Leaves Disease Detection. Int. J. Adv. Res. 2015, 5, 522–528.

2. Bukhari, H.R.; Mumtaz, R.; Inayat, S.; Shafi, U.; Haq, I.U.; Zaidi, S.M.H.; Hafeez, M. Assessing the Impact of Segmentation on Wheat Stripe Rust Disease Classification Using Computer Vision and Deep Learning. IEEE Access 2021, 9, 164986–165004.

3. Patil, R.; Hegadi, R.S. Segmentation on cotton insects and pests using image processing. In Proceedings of the National Conference on Current Trends in Advanced Computing and e-Learning, Uttar Pradesh, India, 6–7 October 2009.

4. Yan, J.; Le, T.; Nguyen, K.; Tran, M.; Do, T.; Nguyen, T.V. MirrorNet: Bio-Inspired Camouflaged Object Segmentation. IEEE Access 2021, 9, 43290–43300.

5. Chowdhury, T.; Rahnemoonfar, M. Attention based semantic segmentation on UAV dataset for natural disaster damage assessment. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021.

6. Ren, Y.; Liu, Y. Geological disaster detection from remote sensing image based on experts’ knowledge and image features. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016.

7. Ji, Z.; Meng, X. Automatic Identification of Targets Detected by Battlefield Scout Radar. J. Harbin Inst. Technol. 2011, 33, 830–833. (In Chinese)

8. Wan, M.; Gu, G.; Qian, W.; Ren, K.; Chen, Q. Stokes-vector-based Polarimetric Imaging System for Adaptive Target/Background Contrast Enhancement. Appl. Opt. 2016, 55, 5513–5519.

9. Xu, X.; Wan, M.; Ge, J.; Chen, H.; Zhu, X.; Zhang, X.; Chen, Q.; Gu, G. ColorPolarNet: Residual Dense Network-Based Chromatic Intensity-Polarization Imaging in Low Light Environment. IEEE Trans. Instrum. Meas. 2022, 71, 5025210.

10. Goccia, M.; Bruzzo, M.; Scagliola, C.; Dellepiane, S. Recognition of container code characters through gray–level feature extraction and gradient–based classifier optimization. In Proceedings of the International Conference on Document Analysis & Recognition, Edinburgh, UK, 3–6 August 2003.

11. Zhao, J.; Liu, M.; Yang, G. Discrimination of Mature Tomato Based on HIS Color Space in Natural Outdoor Scenes. Trans. Chin. Soc. Agric. Mach. 2004, 35, 122–125. (In Chinese)

12. Zheng, L.; Zhang, J.; Wang, Q. Mean-shift-based Color Segmentation of Images Containing Green Vegetation. Comput. Electron. Agric. 2009, 65, 93–98.

13. Chen, J.; Zhu, S.; Cui, H.; Cui, P. Automated Crater Detection Method Using Gray Value Features and Planet Landing Navigation Research. J. Astronaut. 2014, 35, 908–915. (In Chinese)

14. Guo, C.; Wang, X.; Shi, C.; Jin, H. Corn Leaf Image Segmentation Based on Improved Kmeans Algorithm. J. North Univ. China (Nat. Sci. Ed.) 2021, 42, 524–529. (In Chinese)

15. Liu, X. Multi-Angle Identification of Potato Late Blight Based on Multi-Spectral Imaging. Master’s Thesis, Yunnan Normal University, Kunming, China, 7 June 2017. (In Chinese)

16. Yan, Y.; Hua, W.; Zhang, Y.; Cui, Z.; Wu, X.; Liu, X. Hyperspectral camouflage object characteristic analysis. In Proceedings of the International Symposium on Advanced Optical Manufacturing and Testing Technologies: Optoelectronic Materials and Devices for Sensing and Imaging, Chengdu, China, 8 February 2019.

17. Yang, Y. Bearing Surface Defect Detection System Based on Multi-Angle Light Source Image. Master’s Thesis, Dalian University of Technology, Dalian, China, 3 June 2019. (In Chinese)

18. Wu, J. Research on Textiles Color Segmentation and Extraction Based on Hyperspectral Imaging Technology. Master’s Thesis, Zhejiang Sci-Tech University, Hangzhou, China, 24 December 2019. (In Chinese)

19. Wang, C.; Chen, W.; Lu, C.; Wang, Q. Segmentation Method for Maize Stubble Row Based on Hyperspectral Imaging. Trans. Chin. Soc. Agric. Mach. 2020, 51, 421–426. (In Chinese)

20. Wang, O.; Gunawardane, P.; Scher, S.; Davis, J. Material classification using BRDF slices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

21. Skaff, S. Material Classification Using BRDF Slices. U.S. Patent US2015/0012226 A1, 8 January 2015.

22. Zhao, H.; Nie, F.; Tao, H. Study on BRDF Feature Model of Rock Surface. J. Hubei Univ. Arts Sci. 2015, 36, 481–502. (In Chinese)

23. Nicodemus, F. Directional Reflectance and Emissivity of an Opaque Surface. Appl. Opt. 1965, 4, 767–773.

24. Cook, R.; Torrance, K. A Reflectance Model for Computer Graphics. ACM Trans. Graph. 1982, 1, 7–24.

25. Revers, M. On the Asymptotics of Polynomial Interpolation to |x|^α at the Chebyshev Nodes. J. Approx. Theory 2013, 165, 70–82.

26. Zhang, X.; Li, J.; Zhang, Z. Distribution of a Sequence of Chebyshev Polynomials. Math. Pract. Theory 2022, 52, 276–280. (In Chinese)

27. Anzai, Y. Pattern Recognition and Machine Learning; Elsevier: Amsterdam, The Netherlands, 2012.

28. Rivest, J. Detection of Dim Targets in Digital Infrared Imagery by Morphological Image Processing. Opt. Eng. 1996, 35, 1886–1893.

29. Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A.G. Infrared Patch-Image Model for Small Object Detection in a Single Image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).