3.1. Summary of Tests to Be Described

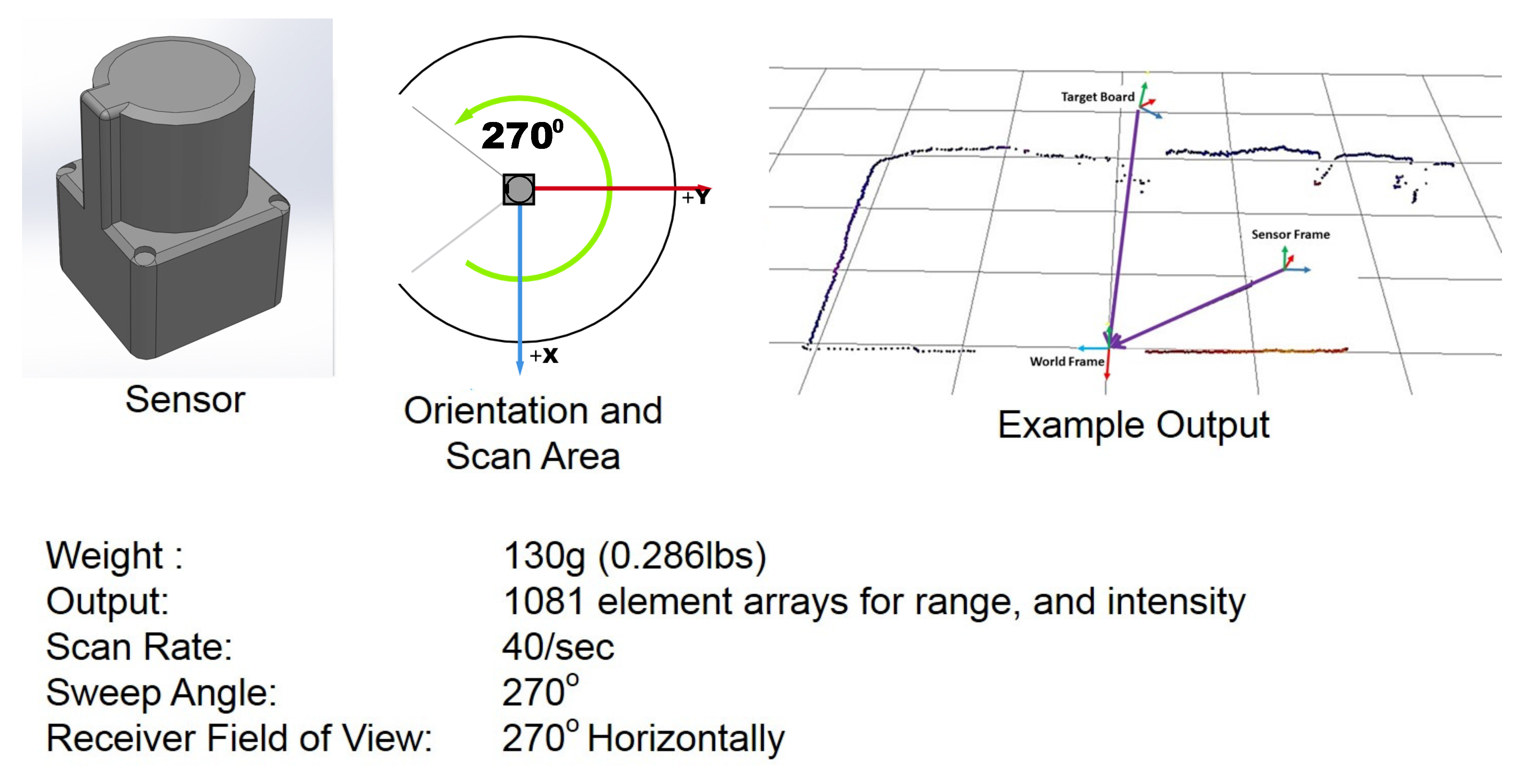

The basic testing set-up included the Hokuyo UST-20LX Scanning Laser Rangefinder and flat planar target boards, as shown in Figure 2 and Figure 3. Initial test placements were made with a measuring tape of 1/8 in resolution to obtain rough order-of-magnitude placement accuracy. As a secondary and more accurate measurement system, a VICON motion capture system (VICON, Oxford, UK) was employed to measure the actual positions of both the sensor and the target boards. These measurements were used as the set of “truth” data for evaluating measurement accuracy. An initial run was conducted to gain an understanding of the error introduced by the VICON motion capture system at different ranges within the motion capture chamber, as seen in Table 1. In this scenario, the full distance of 4.0 m was close to the full diagonal of the square testing chamber, and the distances represent moving the target board from one corner to the opposite corner. The standard deviation changes slightly as the position in the chamber is varied: it can reach up to 6 mm in the z-axis, 3.4 mm in the x-axis, and 1.6 mm in the y-axis. For this reason, the VICON data points were monitored during each of the following test scenarios. Additionally, the centroid of the target was calculated, along with the distance from that centroid to the origin of the chamber. It is interesting to note that the standard deviation of this distance was much lower than the per-axis values, which suggests that the noise on each axis can be considered statistically uncorrelated.
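One way to see why the centroid-to-origin distance can be less noisy than the worst single axis is first-order error propagation. The sketch below assumes uncorrelated axis noise and uses a hypothetical centroid position; only the per-axis standard deviations are taken from the text, and the function name is illustrative.

```python
import math

def range_std_from_axis_std(centroid_mm, axis_std_mm):
    """First-order std of the centroid-to-origin distance,
    assuming the noise on each axis is uncorrelated."""
    r = math.sqrt(sum(c * c for c in centroid_mm))
    # Each axis contributes its std scaled by the direction cosine c / r.
    variance = sum((c / r * s) ** 2 for c, s in zip(centroid_mm, axis_std_mm))
    return math.sqrt(variance)

# Hypothetical centroid (x, y, z) in mm; the std values are the
# per-axis upper bounds quoted in the text (x, y, z).
centroid = (2800.0, 2800.0, 300.0)
axis_std = (3.4, 1.6, 6.0)
sigma_r = range_std_from_axis_std(centroid, axis_std)
print(f"distance std ~ {sigma_r:.2f} mm")
```

Under these assumptions the noisiest axis contributes little when it is nearly perpendicular to the line from the origin to the centroid, so the distance standard deviation falls below the largest per-axis value.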

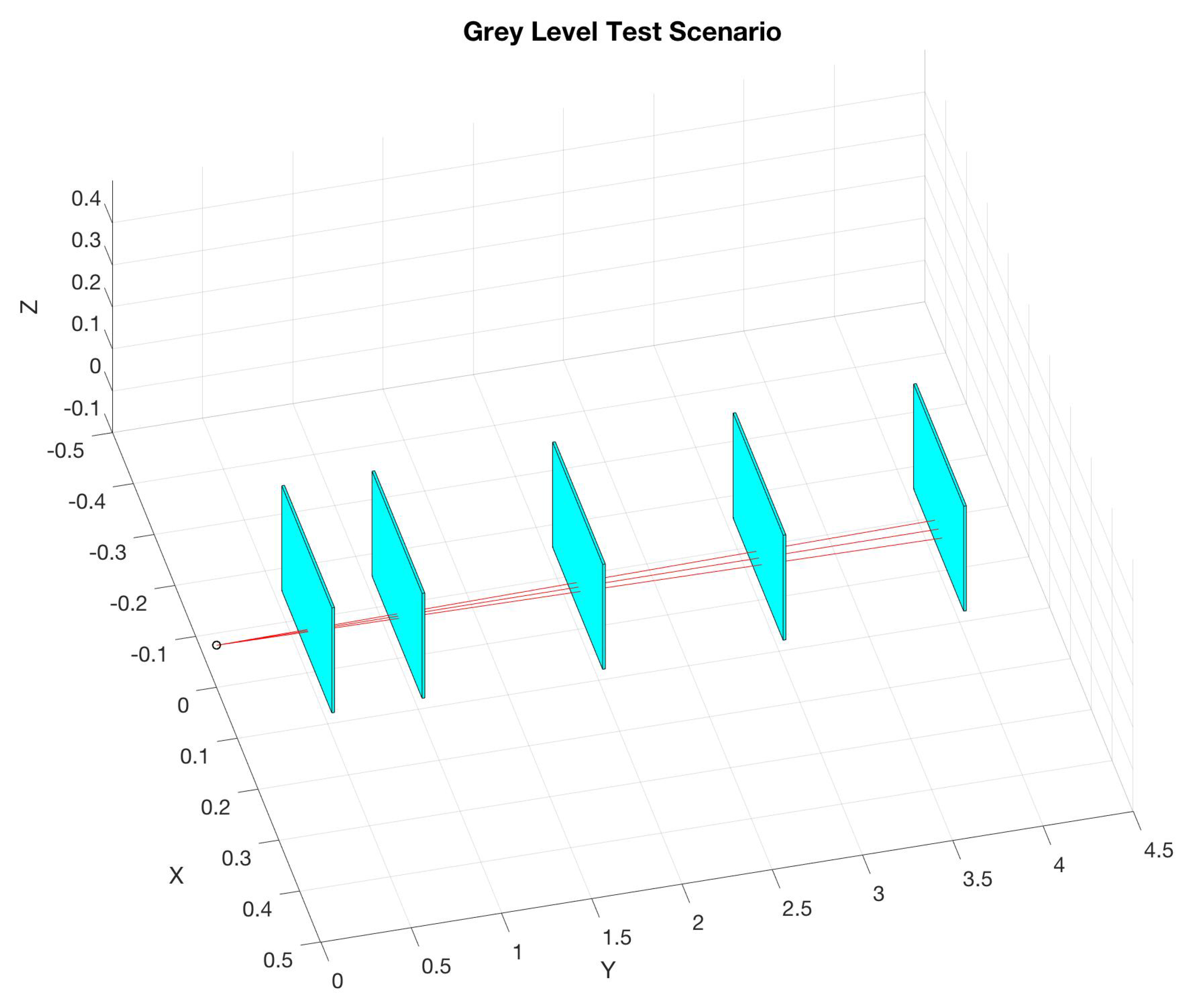

The first test, based on Figure 2, was to measure the nominal range returns across a typical range against a matte white target board. The sensor source is represented by the blue circle, the red lines represent the looking geometry of the active sensor array, and the blue squares are the locations of the target boards. The ranges tested were 0.5 m, 1.0 m, 2.0 m, 3.0 m, and 4.0 m. More than 10 k data points were collected and analyzed. Once this was completed, a test to measure the beam divergence was conducted at ranges of 0.5 m, 1.0 m, 2.0 m, 3.0 m, and 4.0 m. The next major test was the range test against a matte black and a matte white target at ranges of 1.0 m, 3.0 m, 10.0 m, and 20.0 m. Once this was completed, an investigation using the gray scale target set illustrated in Figure 4 was conducted at ranges of 0.5 m, 1.0 m, and 2.0 m.

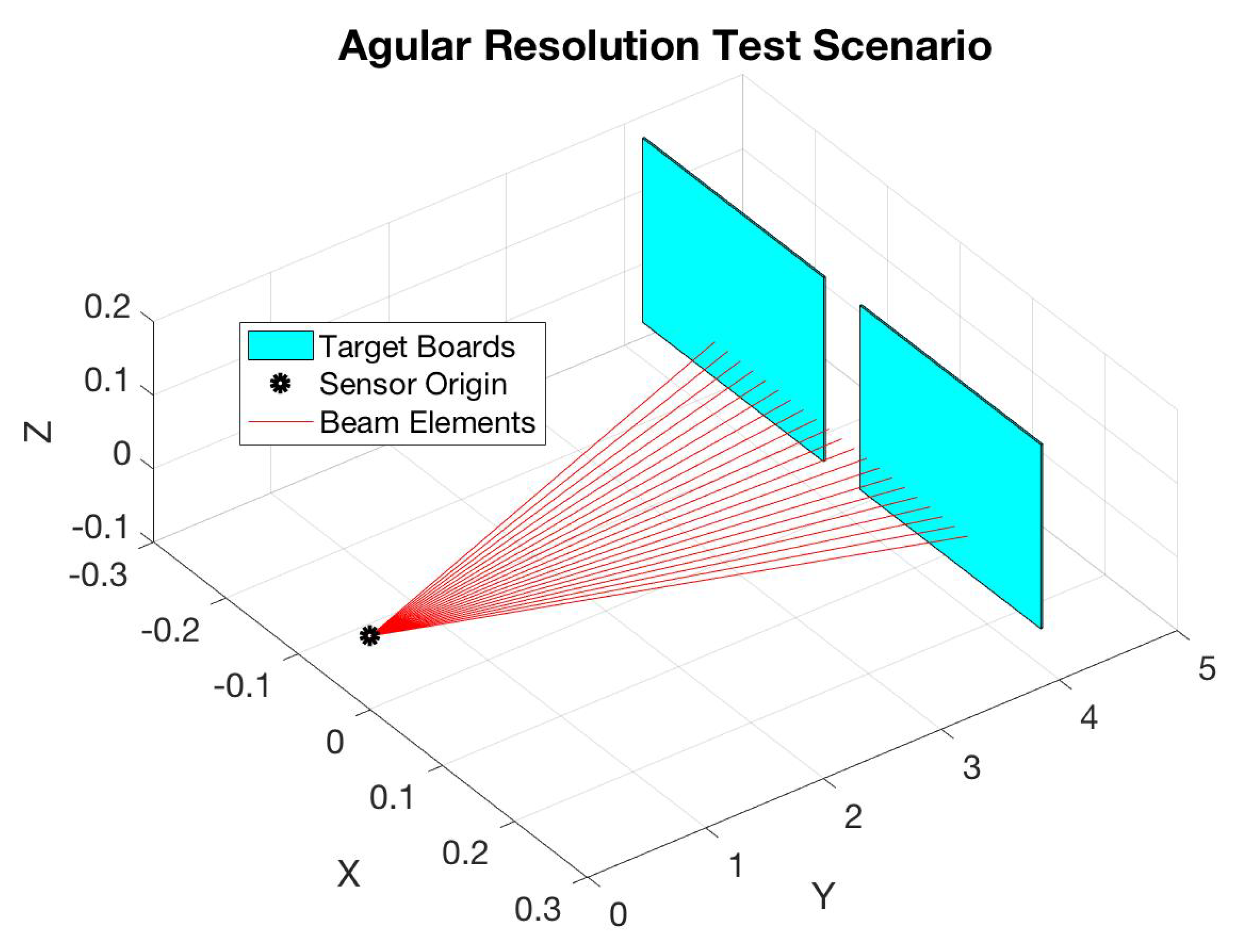

Once the basic ranging test was conducted, a test to investigate the angular resolution of the sensor was performed, as shown in Figure 3. The two target boards were placed at 4.0 m with gaps between them of 0.0°, 1/8°, 1/4°, 3/8°, and 1/2°.

The next major test determined the amount of range error caused by the target’s orientation. A target board was placed at 4.0 m, and the target angle was stepped incrementally between +80° and −80°.

3.2. Range Accuracy Measurements

This first test was designed to characterize the raw range returns of the LiDAR sensor, and was the first step in identifying any potential issues in pixel mapping the raw data to a usable stream. The statistical evaluation of the range returns enables confidence in the accuracy of the measurements. This confidence may be a function of distance, which made the investigation necessary.

For this test, the sensor was configured as an object within the VICON chamber and placed at the corner of the test chamber. The first target board was placed as close to 0.50 m as possible according to the measuring tape, with the normal vector of the target surface pointing directly towards the sensor source. This can be seen in Figure 2. The target was secured in place by small weights, and then both range data and VICON measurements were taken for the three active sensor elements centered around a zenith. A minimum of 10,000 data points were collected and analyzed to give reasonable statistical confidence.

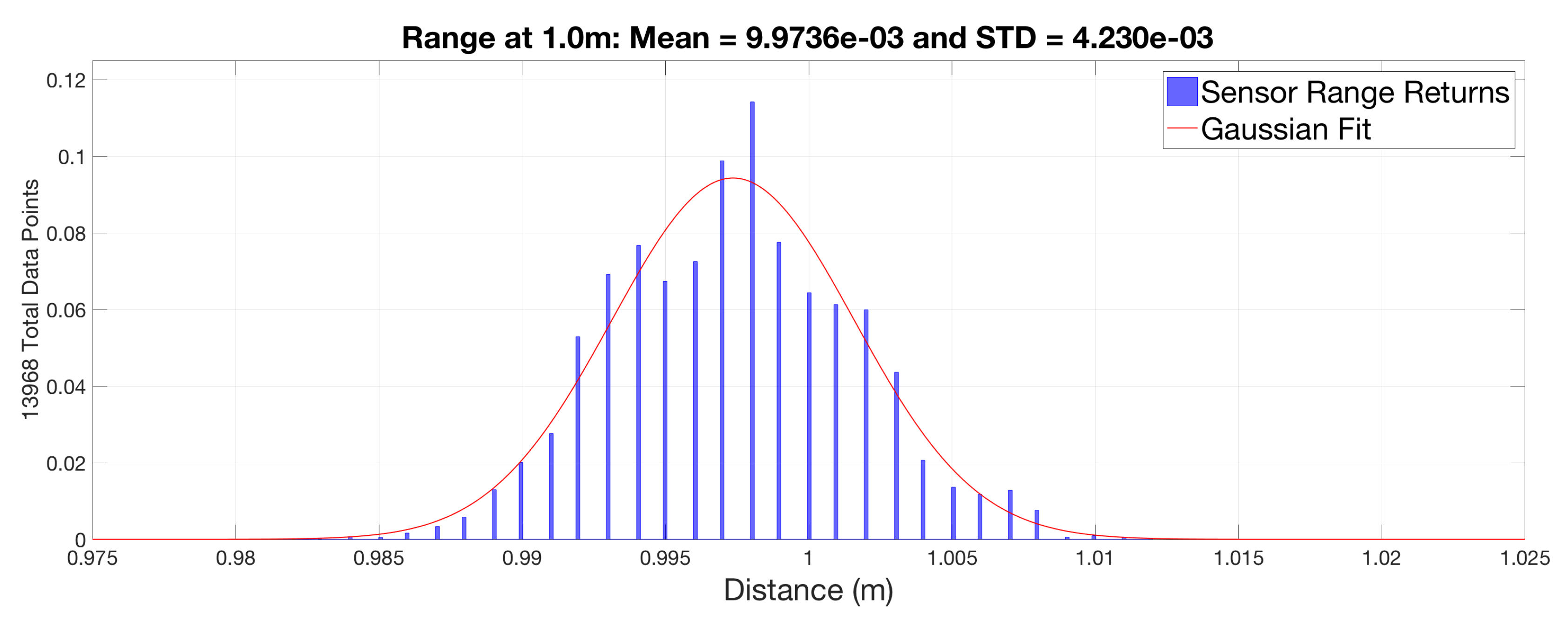

The first set of range data is shown in Table 2, which contains the data collected for the three center data points of the LiDAR sensor. This table shows the mean and standard deviation (STD) of the error with respect to the measured range. Recall that propagating three beams is necessary because the minimum beam count allowed by the internal software of the sensor is two, and using three active elements allows a more accurate estimation of the center of the center beam due to symmetry.

Figure 5 shows a histogram of the collected data points for the 0° sensor output at the 1.0 m distance. The actual sensor data is represented by the histogram in blue, and the Gaussian distribution describing it is in red. An item of note is that the sensor gates the range return into 1 mm increments. Therefore, the natural tolerance of the sensor is set by the 1 mm range gating of the internal electronics.
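The error statistics described for this test can be sketched in a few lines. The sample values and the function name are hypothetical and serve only to show the computation; the quantization term is the textbook standard deviation of a uniformly distributed rounding error and is included as a general reference point, not as a result from this study.

```python
import math
import statistics

def range_error_stats(measured_mm, truth_mm):
    """Mean and sample standard deviation of the range error (measured - truth)."""
    errors = [m - truth_mm for m in measured_mm]
    return statistics.fmean(errors), statistics.stdev(errors)

# Hypothetical returns, already gated to 1 mm increments by the sensor.
samples = [1001, 1003, 1002, 1004, 1002, 1001, 1003, 1002]
mean_err, std_err = range_error_stats(samples, truth_mm=1000.0)

# Std of an ideal uniform quantization error for a 1 mm step (step / sqrt(12)).
quantization_std_mm = 1.0 / math.sqrt(12.0)

print(f"mean error {mean_err:.2f} mm, std {std_err:.2f} mm, "
      f"quantization floor {quantization_std_mm:.2f} mm")
```

In practice the truth value would come from the VICON measurement for each placement rather than from a fixed constant.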

3.3. Black and White Range Measurements

In localization and mapping environments where typical small form factor LiDAR sensors are used, a somewhat neglected area is how the target interacts with the wavelength of the sensor and with the sensor’s ranging calculations. The target’s material can induce error in the range measurements because that interaction can change the signal-to-noise ratio (SNR) at the receiving sensor. An initial test of the Hokuyo sensor’s susceptibility to this effect was to identify whether a black or a white target board gives a noticeable difference in range returns. This is an interesting test case in that understanding the effect of the two extrema can provide an expected error bound for a real-world application that is not made up of an entirely black and white environment. There have been numerous error analysis studies on sensors of a similar concept, such as Time-of-Flight (ToF) cameras. In these studies, the authors used black and white checkerboards to essentially average out the effect of black and white targets and provide a more “accurate” range measurement [37,38,39]. This can provide a more accurate range estimate for operational use, especially if included as part of a calibration stage, but it does not investigate the error source of interest in this paper.

The test scenario for the Range Accuracy test was mimicked during this test. The significant difference was that the securing weights for the target board were marked to indicate the position for each distance under test, to ensure that the black target board was located as closely as possible to the same position as the white target board. Additionally, due to the distances involved in this test, the VICON chamber was not used, and the test was moved into an engineering hallway to secure the needed space. In this test, the measuring tape, with a 1/8 in tolerance, was the only measuring device. Due to this limitation, the distances were measured three times in each data collect. The test was limited to 20 m due to the sensor limitation at greater distances. Another run was attempted at 30 m, but the sensor returns were so weak in the ambient light that the range returns had to be disregarded.

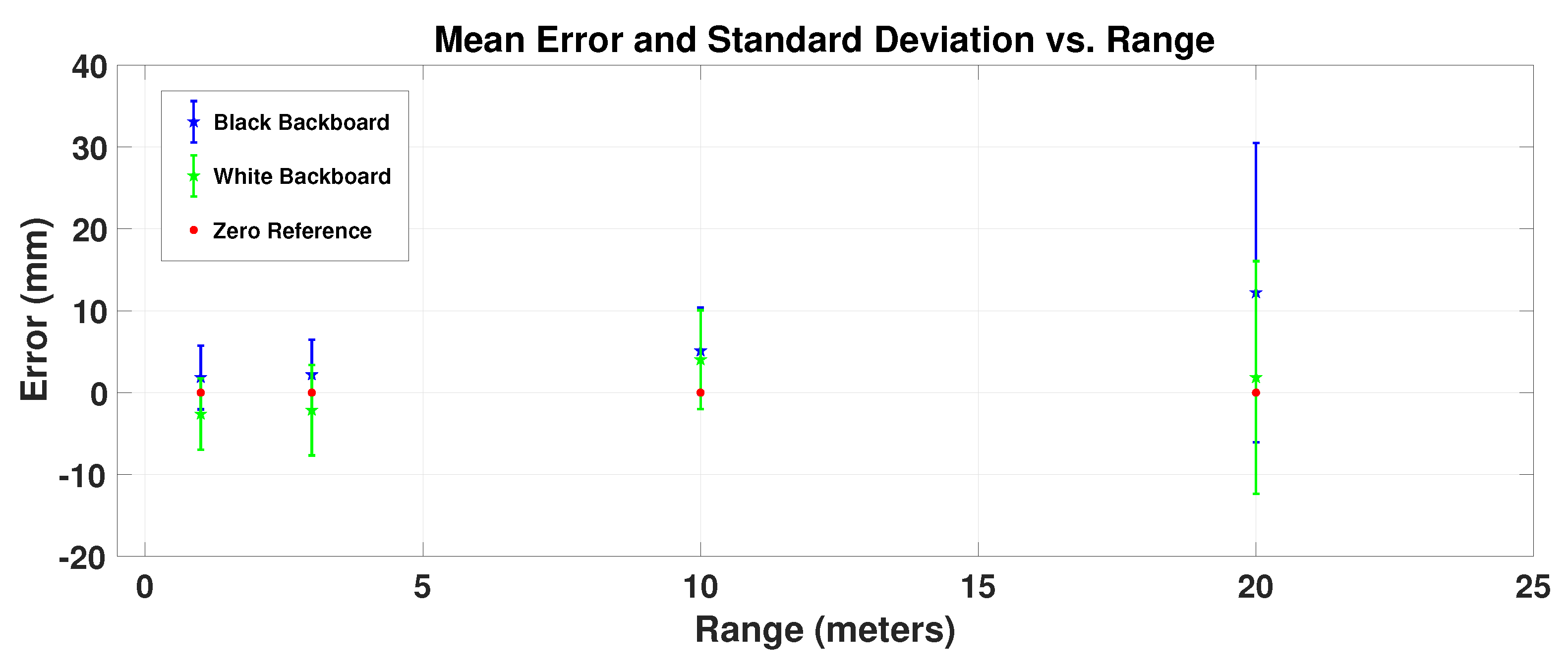

For the first scenario, comparing range returns of a black or white matte board, the range accuracy was measured using at least 10,000 samples at each desired distance of 1 m, 3 m, 10 m, and 20 m, and the results are illustrated in Figure 6. For this scenario, the range returns are indicated in blue for the black target board and in green for the white target board. The red circles are added to better visualize the zero-mean reference point. Doing this allows one to notice that the white target board consistently produces shorter range returns than the black target board. At the shorter distances of 1 m and 3 m, the average range return seems to straddle the zero-mean reference. At target distances of 10 m and 20 m, the mean error increases for both the black and white targets. The standard deviation at each test point is illustrated in Figure 6 by the vertical error bars. The magnitude of the standard deviation seems to be constant until the test point at 20 m, where it has almost quadrupled. It is suspected that there is a point between 10 m and 20 m where the standard deviation begins to increase from the seemingly constant value observed at distances below 10 m.

It is suspected that the lower intensity returns, and therefore lower SNR, from the matte black board fail to trigger the threshold-based sensor return at longer distances [40]. This may be the reason why the returns have a consistently longer measured distance when compared to a white matte target board.
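The suspected mechanism can be illustrated with a simple leading-edge threshold model. This is only a sketch under stated assumptions: the return pulse is taken to be Gaussian, detection is assumed to occur at the first threshold crossing, and the amplitudes, threshold, and pulse width are hypothetical. It is not a description of the sensor’s actual detection electronics.

```python
import math

C_MM_PER_NS = 299.792458  # speed of light in mm per ns

def lead_time_ns(amplitude, threshold, sigma_ns):
    """Time before the pulse peak at which a Gaussian pulse first crosses
    the threshold. Returns None if the pulse never reaches the threshold."""
    if amplitude <= threshold:
        return None
    return sigma_ns * math.sqrt(2.0 * math.log(amplitude / threshold))

def apparent_range_shift_mm(strong_amp, weak_amp, threshold, sigma_ns):
    """Extra range reported for the weak return relative to the strong one."""
    lead_strong = lead_time_ns(strong_amp, threshold, sigma_ns)
    lead_weak = lead_time_ns(weak_amp, threshold, sigma_ns)
    if lead_strong is None or lead_weak is None:
        return None  # at least one return is not detected at all
    # The weak pulse crosses the threshold later; halve for the two-way path.
    return 0.5 * C_MM_PER_NS * (lead_strong - lead_weak)

# Hypothetical values: a white-board return twice as strong as a black-board one.
shift = apparent_range_shift_mm(strong_amp=4.0, weak_amp=2.0,
                                threshold=1.0, sigma_ns=1.0)
print(f"weak return reads longer by {shift:.1f} mm in this toy model")
```

In this model a weaker return always triggers later and therefore reads as a longer range, which is qualitatively consistent with the black board measuring longer than the white board.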

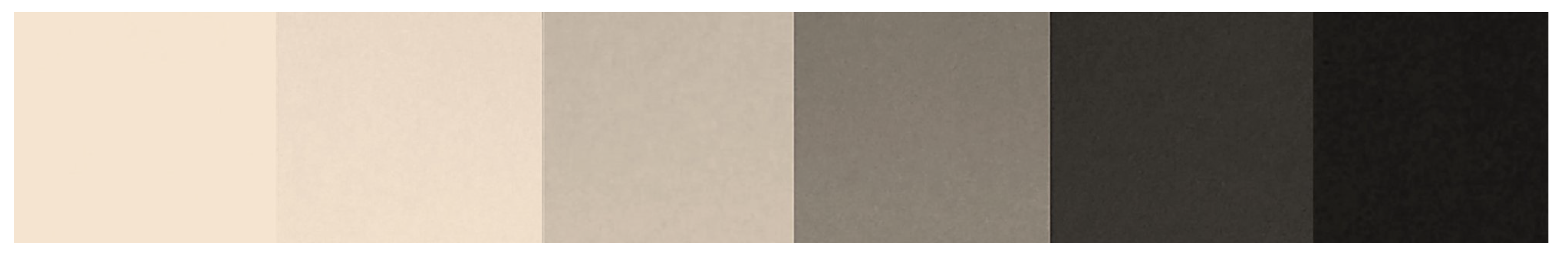

3.4. Gray Level Test

Once the comparison between matte black and matte white targets was analyzed, an additional gray level test was conducted to better characterize the effects of target gray level on range error. All of the colors chosen were from the same Sherwin-Williams water-based interior latex paint line. Figure 4 shows the gray variation in the painted targets. The illustration is an uncalibrated color image of the actual boards used, and is only included to give the reader an idea of the breadth of the grayscale colors used in this test. For reference, the darkest color is Jet Black and is shown on the far right, whereas the lightest color is Pure White and is seen on the far left. This test was conducted in almost exactly the same fashion as the first Range Accuracy test. The only difference was that the target boards were slightly smaller than the original white and black target boards, for logistical reasons. This also allowed for a more secure setting for the target placement within the VICON chamber, and ensured consistent placement as the six painted targets and two bare wood targets were put into place for each data collect.

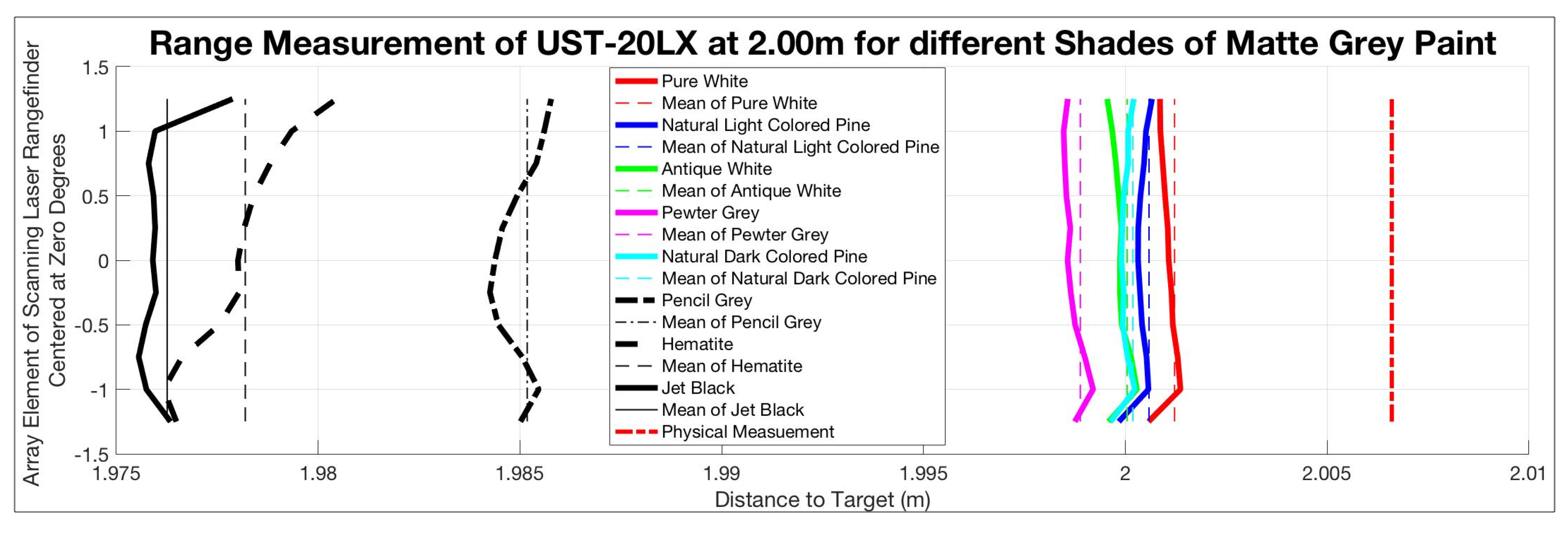

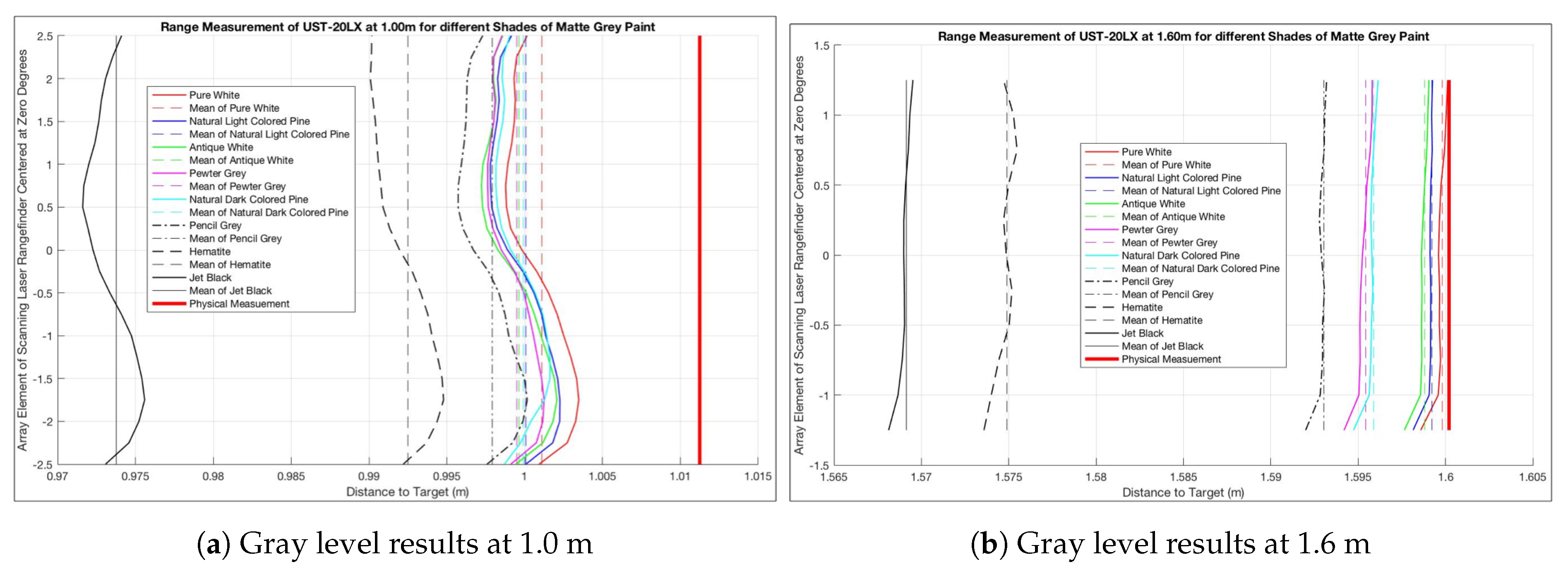

The results can be seen in Figure 7, Figure 8 and Figure 9. During this test, there was an anomaly at a test range of 1.5 m, so the data for that distance was collected at 1.6 m, as annotated in Figure 9b. For this scenario, the test began with the pure white target board, and that distance was used as the baseline for the remainder of the series. For example, the test at 0.50 m was started by placing the pure white target board in position, as indicated by the thick solid red line. The range data was then taken, and the remaining targets were placed in exactly the same location. The error in the range is given with respect to the solid red line.

When analyzing the four test ranges depicted in Figure 7, Figure 8 and Figure 9, it can be seen that the test at 0.50 m shows a different level of error. Figure 8 and Figure 9a show an actual target distance greater than any range return, whereas the test at 0.50 m shows the actual target distance in the middle of the set of measured ranges.

3.5. Beam Divergence

Once the effect of target color on the range return was understood, a test to measure the beam divergence was necessary. The beam divergence of the LiDAR sensor is an important characteristic because this phenomenon can play an important part in the usability of the sensor in terms of pixel range error. For this test, the common test scenario used to measure the Range Accuracy and the errors caused by different target gray levels was used once again. In this version of the test scene, only the matte white target board was used at each target distance. For each distance, a three-element pattern was used, and the target was placed such that the LiDAR beam landed on the center of the target. An infra-red optic was then used to “see” the beam spot as it landed on the target, and the spot was traced by hand onto a small piece of paper placed at the same location. The traced image was then measured with a metric ruler with a tolerance of ±0.5 mm. This step was repeated twice at each target distance to help ensure a consistent measure.

The beam spot size on target can play into a number of different error sources. The first error type is a “Mixed Pixel”, as described by [4]. A mixed pixel represents a range return where the sensor has illuminated multiple targets at different distance planes. The results of this can be seen in the Angular Resolution test discussed in the following section. Beam spot size can also introduce error due to the target orientation, as the LiDAR return may not follow a traditional Gaussian-shaped return. The return may in fact be skewed by the target orientation, with a closer edge of the target giving a return strong enough to trigger the sensor threshold but yielding an incorrect range. This is illustrated in the Target Angle Test section later.

Table 3 shows the beam spot size measurement using the three-beam pattern previously discussed. It can be seen that the “width” of the beam spot was proportionally much larger than the “height” of the beam. Combining this data with the difference equation in Equation (2) gives an average beam divergence of 0.413 mrad by 17.23 mrad. This divergence ratio gives a little insight into the construction of the laser source. It would appear that vertical edges encounter stronger spatial filtering, whereas the horizontal edges do not. This spatial filtering creates a disproportionate beam spot and helps visualize the difficulty of detecting features at long (greater than 2.0 m) distances. In Equation (2), h(n) is the height at the n-th distance and d(n) is the n-th distance; the resulting angle of divergence is averaged separately in the vertical and horizontal directions.
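As an illustration of a difference-based divergence estimate of the kind described, the sketch below assumes the full-angle divergence is the arctangent of the change in spot size over the change in distance between successive test ranges, averaged over all pairs. This form is an assumption and may differ from the exact expression used in Equation (2); the spot sizes are hypothetical and are not the values in Table 3.

```python
import math

def mean_divergence_mrad(distances_m, sizes_mm):
    """Average divergence (mrad) from successive differences of spot size
    over distance. Assumed form: atan((s[n] - s[n-1]) / (d[n] - d[n-1]))."""
    angles = []
    for n in range(1, len(distances_m)):
        delta_size_m = (sizes_mm[n] - sizes_mm[n - 1]) / 1000.0
        delta_dist_m = distances_m[n] - distances_m[n - 1]
        angles.append(math.atan2(delta_size_m, delta_dist_m) * 1000.0)
    return sum(angles) / len(angles)

# Test ranges from the text; spot widths are hypothetical placeholders.
distances = [0.5, 1.0, 2.0, 3.0, 4.0]
widths_mm = [10.0, 18.5, 35.5, 52.5, 69.5]
theta_h = mean_divergence_mrad(distances, widths_mm)
print(f"horizontal divergence ~ {theta_h:.2f} mrad")
```

The same function would be applied to the measured heights to obtain the vertical value. Using differences rather than the ratio of size to distance removes the unknown spot size at the sensor aperture from the estimate.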

3.6. Angular Resolution Measurements

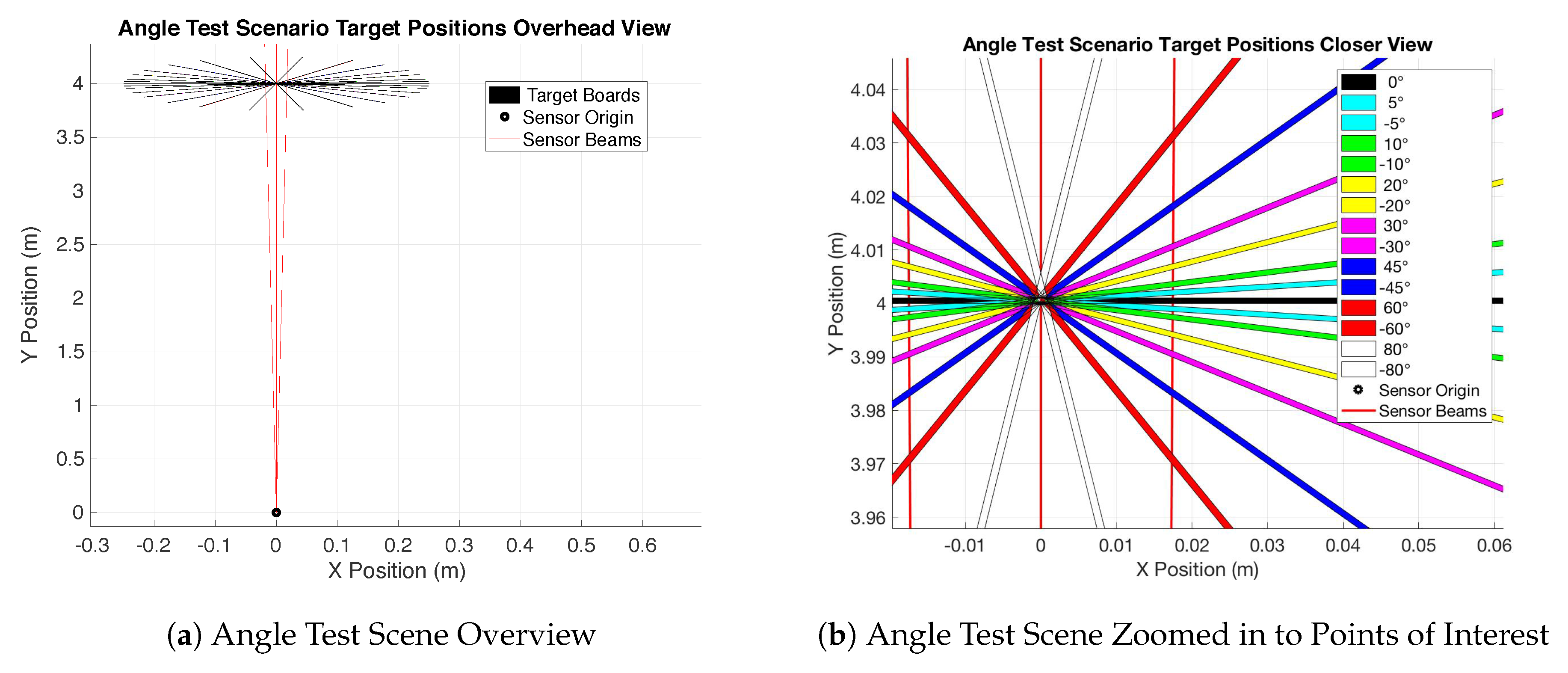

To continue the investigation of pixel range error begun with the beam divergence test, the angular resolution test was performed. The generalized test scenario is represented by Figure 3. Here, the sensor is placed at the black dot in Figure 3 representing the sensor origin. Two matte white target boards are then placed such that they share the same orientation, with the collective target set normal vector pointing back to the sensor origin. This creates a perpendicular “impact” point for the LiDAR sensor’s photons. The target distances measured are 3.0 m and 10.0 m. These distances did not allow for use of the truth data from the VICON chamber, and distances were again measured solely with a tape measure. Each measurement was performed three times to achieve reasonable accuracy. The goal of this test was not to evaluate distance, so the accuracy of the distance was not extremely critical, but rather to characterize effects near those representative distances and to identify how small a feature can be and still be detected with reasonable confidence. Once the test was set up, an initial data collect was performed as a baseline. Then, the target boards were adjusted laterally such that a 1/8° gap appeared between them with respect to the sensor origin. This was repeated in 1/8° increments up to 1/2°. Each data set contains at least 10 k data points. The whole procedure was then repeated at 10 m.

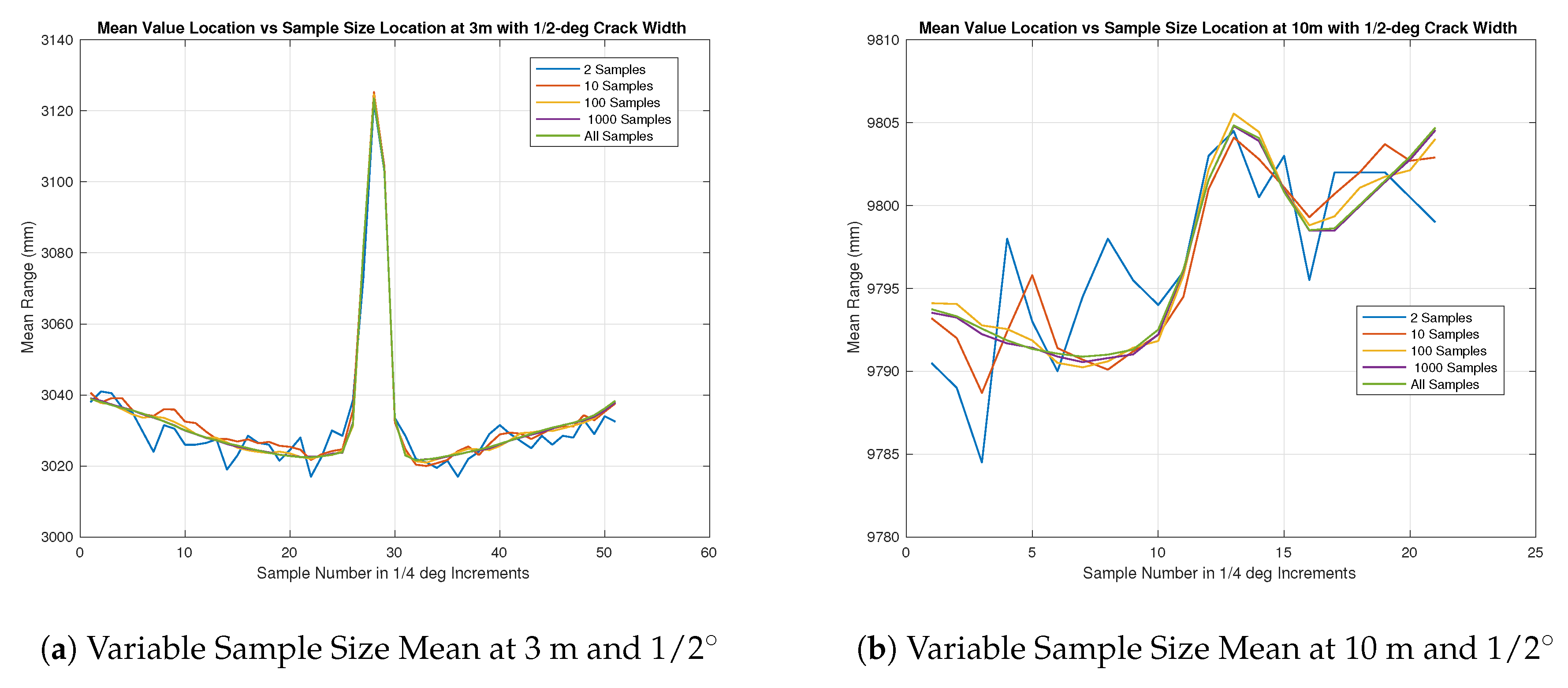

The results for the Angular Resolution test were much more challenging to determine with the collected data. During testing, alignment was initially checked in real time with the UrgBenri visualization tool provided by the sensor manufacturer, Hokuyo. The resolution of the visualization tool was such that it did not show any kind of feature when the hole depth of the angular gap was no deeper than 2 cm, so a much larger feature is required in order to visualize it with the UrgBenri software. In this case, both the UrgBenri software and the raw sensor data were used. For the set of measurements in Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14, the UrgBenri visualization tool did not show any gaps, but the gap was very evident in the mean of the collected raw data of 10 k samples, as seen in the summaries in Figure 10a,b. The output of the UrgBenri visualization tool did not show any relevant information not already captured in Figure 11a, Figure 12a, Figure 13a and Figure 14a and is therefore not included. In the single sample case, it was extremely difficult to properly identify the location of the target gap. Keep in mind that the data presented here was not corrected for the range error as the angle from the normal increases, which accounts for the appearance of a “curve” in the presented charts.

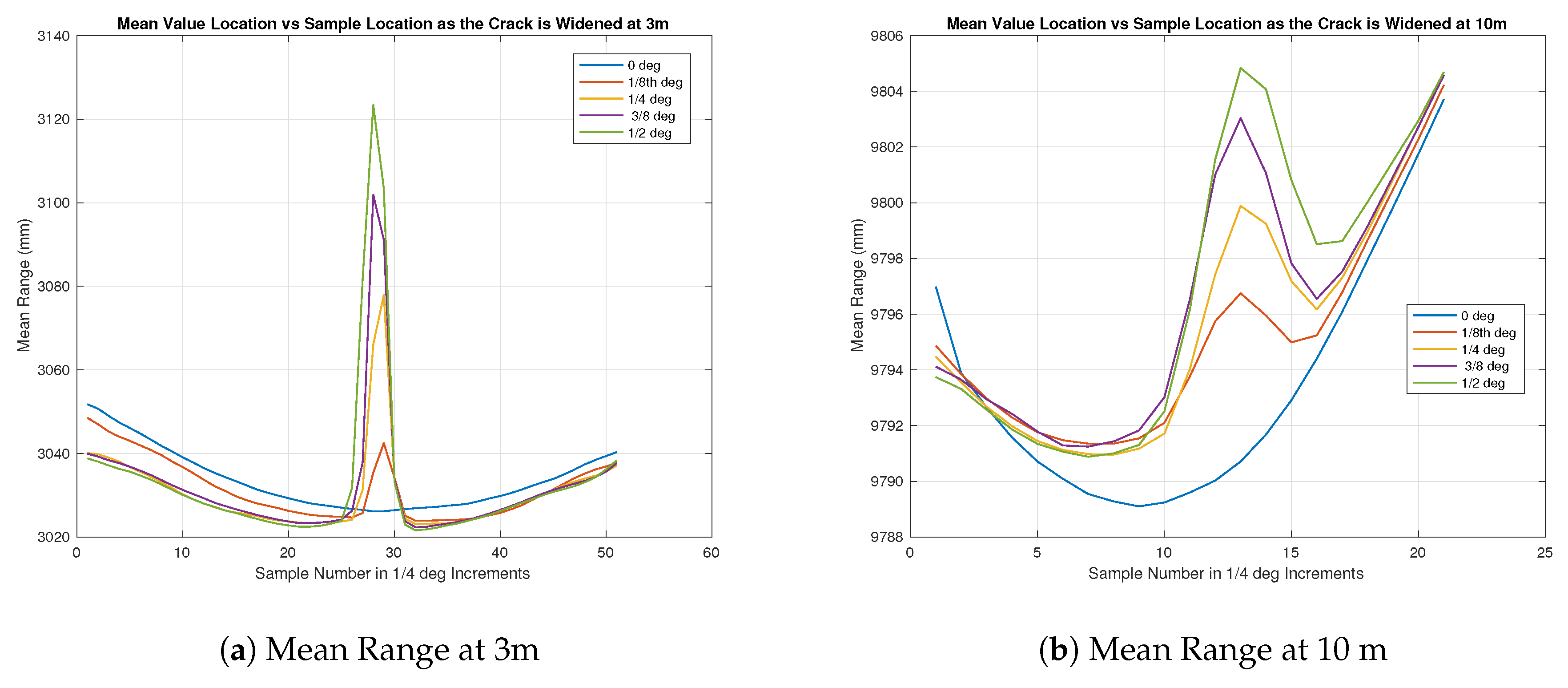

Figure 10a shows the test results at a distance of 3 m from the sensor as the gap increased according to Table 4. The mean was taken across 10 k samples to produce the given results, and it is very apparent that there is a discontinuity in the surface when the gap is as small as 6.65 mm, even though the angular separation between the beam samples is 1/4 degree according to the manufacturer’s specification [3].
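To put the 6.65 mm gap in context, the lateral spacing between adjacent beams can be computed from simple geometry. The sketch assumes a flat target at normal incidence and uses the 1/4 degree beam step quoted from the manufacturer’s specification; the function name is illustrative.

```python
import math

def subtended_width_mm(angle_deg, distance_m):
    """Lateral width subtended by angle_deg at distance_m on a flat target
    facing the sensor (angle centered on the target normal)."""
    half_angle = math.radians(angle_deg) / 2.0
    return 2.0 * distance_m * 1000.0 * math.tan(half_angle)

beam_pitch_3m = subtended_width_mm(0.25, 3.0)    # spacing of adjacent beams
eighth_deg_3m = subtended_width_mm(0.125, 3.0)   # width of a 1/8 degree gap

print(f"beam pitch at 3 m: {beam_pitch_3m:.2f} mm")
print(f"1/8 degree at 3 m: {eighth_deg_3m:.2f} mm")
```

Under these assumptions adjacent beam centers at 3 m are roughly 13 mm apart, so a gap of about 6.5 mm is narrower than the beam spacing and can only be seen because each beam footprint has finite width.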

In Figure 10b, results similar to those in Figure 10a can be seen, but across fewer samples overall in the figure. The gap indicated was proportionally the same size in Figure 10a,b, at about 5–6 samples.

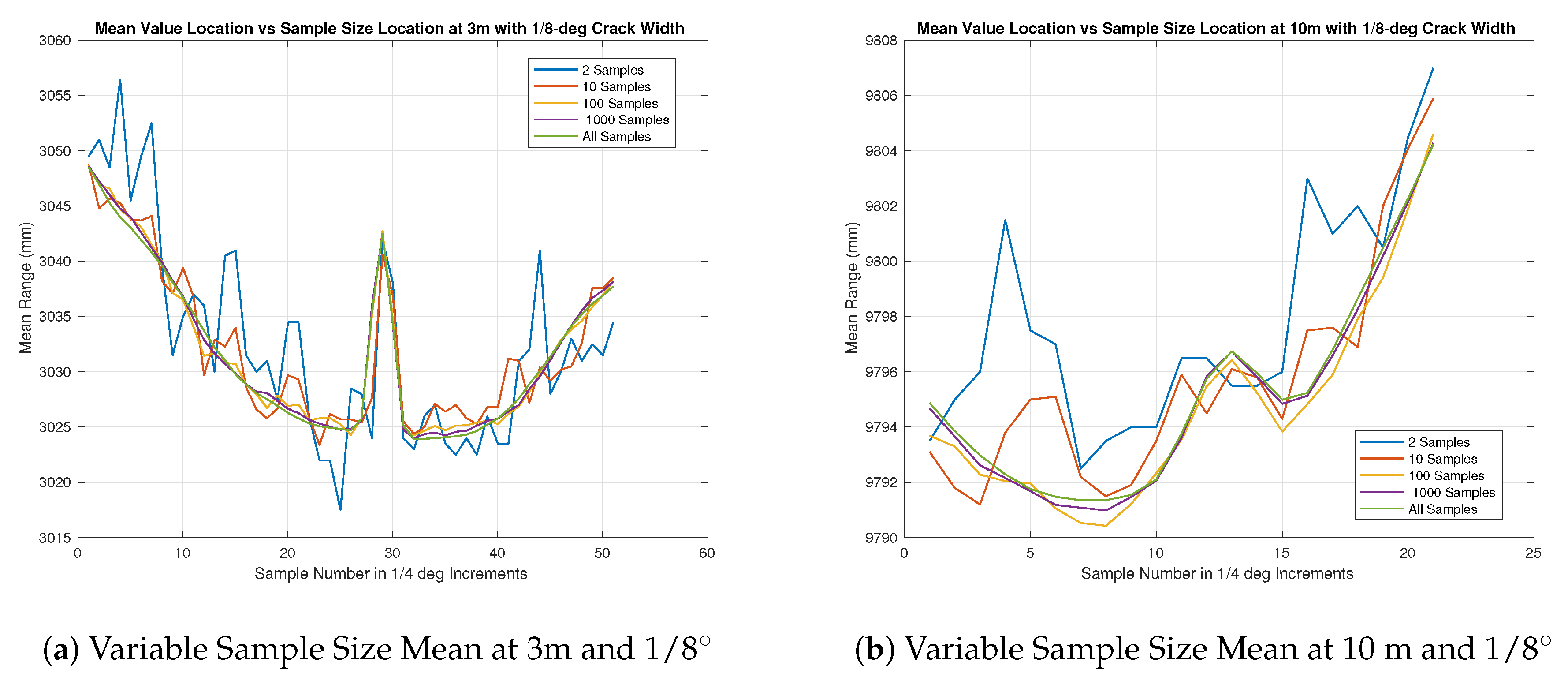

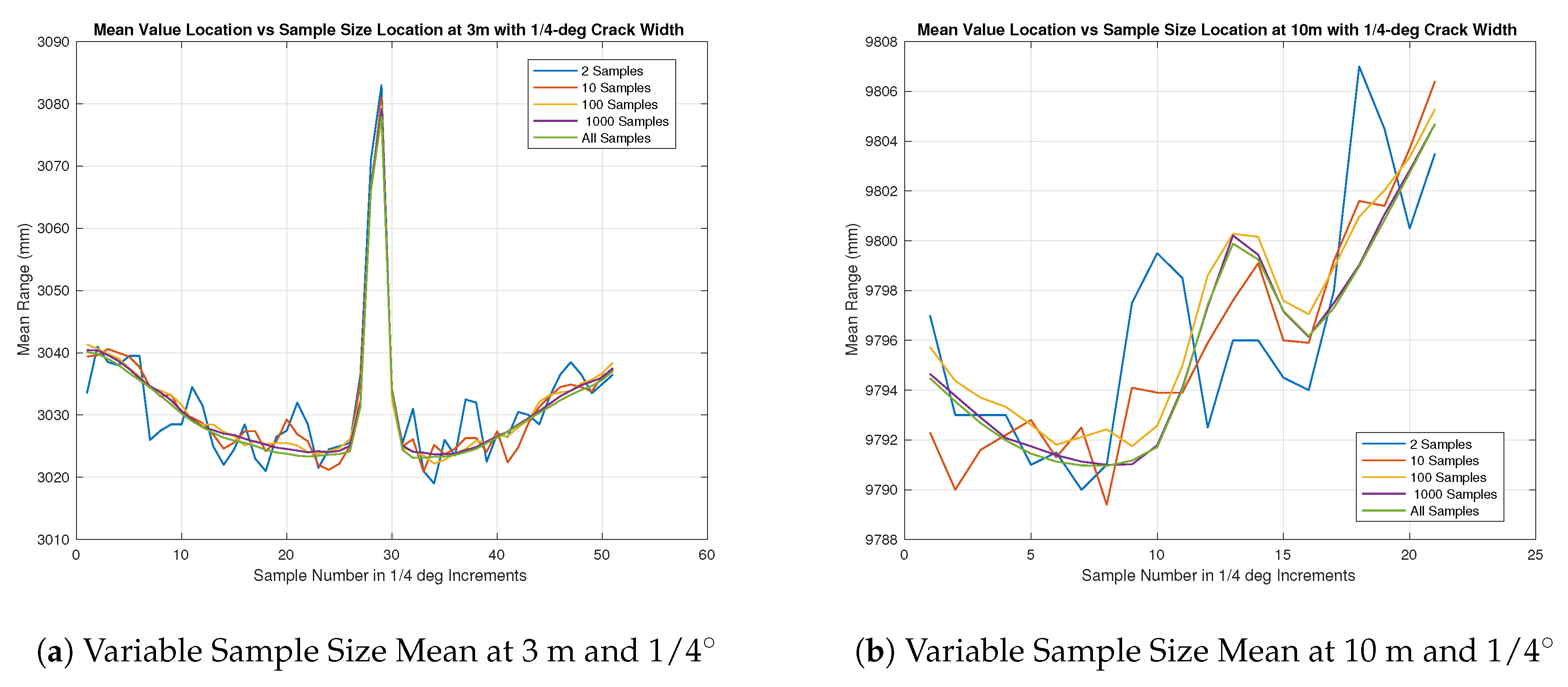

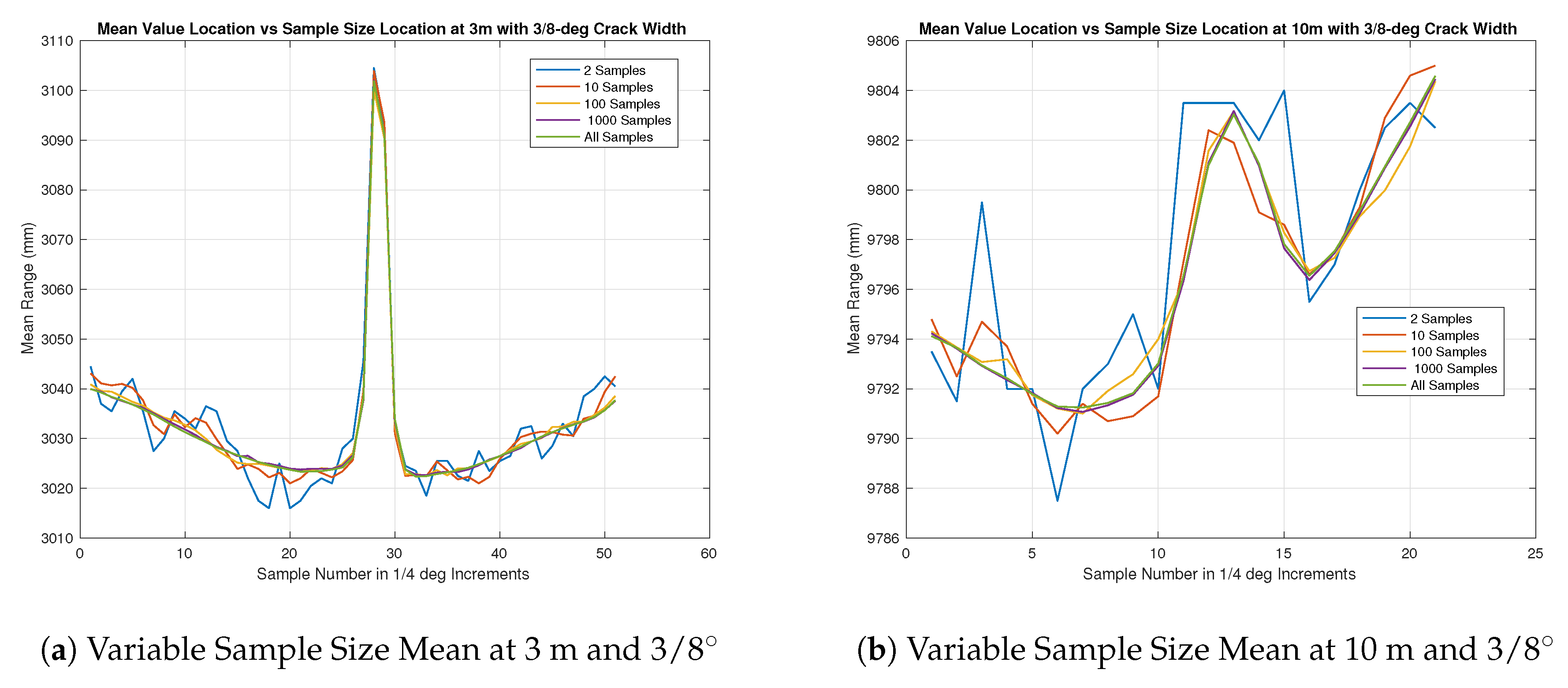

Figure 11a, Figure 12a, Figure 13a and Figure 14a show the progression of the gap size as the number of sample measurements increases. In the simplest case, using a 10-measurement mean seems plausible in all cases except for a flat surface, where the variance in the return was large enough to make it unclear whether something was there. In the rest of the cases at a distance of 3 m, it seems that a 10-sample mean gives “good enough” results to infer that there is a hole at that location.
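The N-sample averaging discussed here can be sketched as a per-beam mean followed by a simple outlier check. The scan values, the jump threshold, and the function names are hypothetical, and the median baseline ignores the range “curve” across beams, so this is an illustration of the idea rather than the analysis used to produce the figures.

```python
import statistics

def mean_scan(scans):
    """Per-beam mean over a list of scans (each scan is a list of ranges in mm)."""
    return [statistics.fmean(beam) for beam in zip(*scans)]

def find_gap_beams(mean_ranges_mm, jump_mm):
    """Indices of beams whose mean range exceeds the median by more than jump_mm."""
    baseline = statistics.median(mean_ranges_mm)
    return [i for i, r in enumerate(mean_ranges_mm) if r - baseline > jump_mm]

# Two hypothetical scans of four beams; the third beam looks through a gap.
scans = [
    [3000, 3001, 3030, 3000],
    [3002, 2999, 3028, 3001],
]
averaged = mean_scan(scans)
gap_beams = find_gap_beams(averaged, jump_mm=10.0)
print(averaged, gap_beams)
```

For independent samples the standard deviation of each per-beam mean falls as one over the square root of the number of scans, which is why a gap that is invisible in a single scan can become evident after averaging.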

In all cases except those calculated with the lowest number of samples, the mean sample waveform shows a constant gap width of about 5–6 sensor-beam elements. This corresponds to roughly 1–1.5 degrees. The largest gap under test had an angular width of only 1/2 degree, which is equivalent to two sensor beam elements. With this in mind, the results appear to render anything below 1/2 degree as about 1.5 degrees, and it could be inferred that this will hold true as the angular width of the gap increases. Another interesting note is the comparison between the “b” series in Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14 and the “a” series. In theory, the angular resolution is the same in each figure, so the results should be equal if no other factors are affecting the range returns. It can be seen that this is not the case. Combining this information with the previous insights gained through the beam divergence tests and the longer range black and white range test, it can be surmised that the longer distances influence the range returns. This influence distorts the features in the target plane, making them appear larger but not as pronounced as they should be.

3.7. Target Angle Test

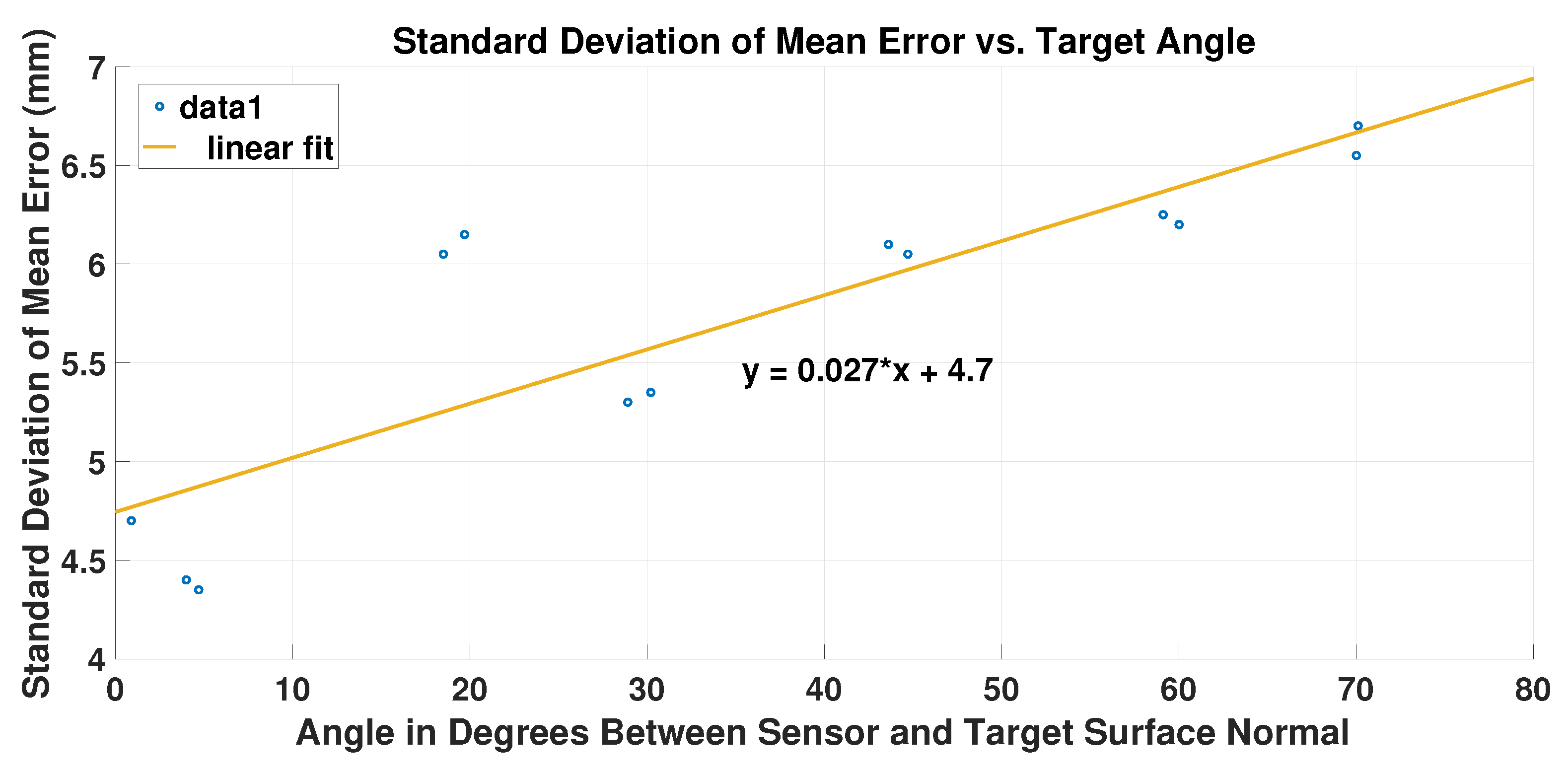

The last major test is what we call the target angle test. This test influences both the pixel range error and pixel mapping characteristics of the sensor. In this scenario, the LiDAR sensor is placed at the sensor origin, as seen in Figure 15a. The beam then propagates to a white matte target board placed at 4.0 m. The goal of this test is not to evaluate a specific characteristic due to distance but to evaluate pixel range error. The target distance of 4.0 m was chosen because it allowed more room to manipulate the target into extreme orientations. This was the farthest distance that could be achieved with high confidence in the VICON range measurements while simultaneously ensuring a beam spot large enough to force a potential error-inducing situation through extreme target orientation angles of up to ±80°. At a closer distance, the spot size becomes smaller and can limit the error-inducing potential during the test. The chosen distance also amplified the errors such that they were reasonably measurable while remaining within the confines of the VICON chamber.

Figure 15a,b presents the test scenario for evaluation. The target range was centered at 4.0 m, while the target board was oriented over a range of angles of ±80°, as referenced in Figure 16. The goal of this test was to gain an understanding of how the beam spot size affects the range error as the orientation from beam normal increases. Ideally, the spot size would be small enough that the target orientation does not affect the sensor range return.
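The geometric link between spot size and orientation can be sketched as follows. For a flat target tilted from the beam normal, the near and far edges of the beam footprint lie at different ranges. The sketch assumes a collimated beam of a given transverse width; the width used is a hypothetical value, and the result is the depth extent of the footprint, not a prediction of the measured range error.

```python
import math

def footprint_depth_mm(spot_width_mm, tilt_deg):
    """Range difference between the near and far edges of the beam footprint
    on a flat target tilted tilt_deg away from the beam normal."""
    return spot_width_mm * math.tan(math.radians(abs(tilt_deg)))

# Hypothetical 60 mm wide spot at several target orientations.
for tilt in (0, 20, 40, 60, 80):
    print(tilt, round(footprint_depth_mm(60.0, tilt), 1))
```

The depth extent grows with the tangent of the tilt angle, so it rises slowly at moderate angles and steeply near the extreme orientations, and it scales directly with the spot width.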

Figure 16 illustrates how the standard deviation of the error changes with respect to the magnitude of the target’s orientation relative to the sensor origin. As expected, the larger the orientation angle with respect to the sensor beam propagation vector, the larger the standard deviation of the range error. In this figure, a simple linear fit is used to show the general trend. The rough linear fit implies that there may be an overarching standard deviation of mean error across the whole span of angles on the order of about 4.5 mm. This can largely be accounted for by the range error previously discussed. If it is removed, the error variance decreases uniformly across Figure 16. Even with this potential decrease, the potential error caused by the target’s orientation can be over half a centimeter at a range of 4 m.
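A simple linear fit of this kind can be reproduced with ordinary least squares. The data points below are hypothetical and were chosen only so that the intercept resembles the roughly 4.5 mm offset mentioned in the text; they are not the measured values behind Figure 16.

```python
def linear_fit(x, y):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical |orientation| in degrees and std of range error in mm.
angle_deg = [0.0, 20.0, 40.0, 60.0, 80.0]
std_mm = [4.5, 4.7, 4.9, 5.1, 5.3]
slope, intercept = linear_fit(angle_deg, std_mm)
print(f"std ~ {slope:.3f} mm/deg * |angle| + {intercept:.2f} mm")
```

In such a fit the intercept plays the role of the angle-independent error floor, and the slope captures the growth attributable to target orientation.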

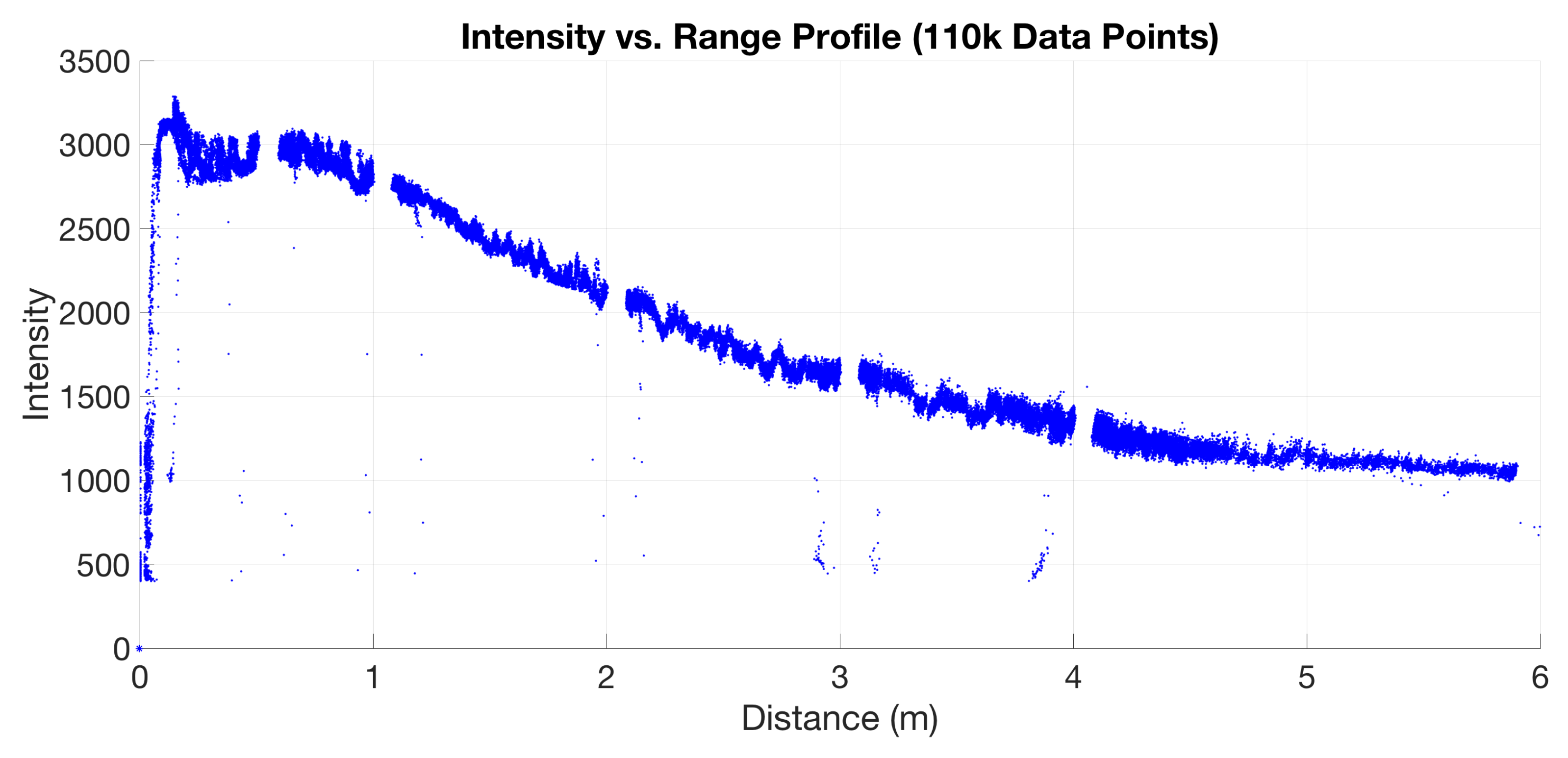

3.8. Intensity-Range Profile

The final experiment in this characterization of the range return data of the Hokuyo UST-20LX Scanning Laser Rangefinder was an intensity profile showing the returned intensity as a function of target distance. The experiment was conducted from a starting point similar to that referenced in many of the previous tests, as seen in Figure 2. After the sensor was warmed up, a matte white target board was placed perpendicular to the sensor beam path, and range data was collected. As the range data was collected, the target board was moved slowly along the propagation path, keeping the target board normal vector along the propagation path as well, which ensured that the sensor beam hit the flat front surface of the target board. At each meter increment (1, 2, 3, 4, 5, and 6), the target was removed from the beam path. This acts as a range check, as the voids in the collected data should coincide with the corresponding distances, as seen in Figure 17. The intensity profile seems to follow a decreasing exponential function, which may illustrate the expected SNR and the corresponding sensor threshold, estimated at about 1000. For this data collection, the intensity values are in the unknown units output by the sensor.
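If the profile is indeed close to a decreasing exponential, its parameters can be estimated with a log-linear least-squares fit. The sketch below uses synthetic data generated from an assumed model with hypothetical parameters, since the intensity units and the measured values are not given here.

```python
import math

def fit_exponential(ranges_m, intensities):
    """Fit I = a * exp(-b * r) by least squares on log(I). Returns (a, b)."""
    logs = [math.log(i) for i in intensities]
    n = len(ranges_m)
    mean_r = sum(ranges_m) / n
    mean_l = sum(logs) / n
    srr = sum((r - mean_r) ** 2 for r in ranges_m)
    srl = sum((r - mean_r) * (l - mean_l) for r, l in zip(ranges_m, logs))
    slope = srl / srr
    return math.exp(mean_l - slope * mean_r), -slope

# Synthetic intensities at the meter marks, generated from hypothetical a and b.
ranges = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
synthetic = [3000.0 * math.exp(-0.2 * r) for r in ranges]
a, b = fit_exponential(ranges, synthetic)
print(f"a ~ {a:.1f}, b ~ {b:.3f} per m")
```

With fitted values of a and b, the range at which the modeled intensity falls to a given threshold T follows from r = ln(a / T) / b, which offers one way to relate the intensity profile to the estimated sensor threshold.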