4.2.3. Hyperparameter Sensitivity Analysis

- (1) Loss function weight analysis

To address concerns regarding arbitrary weight selection and to validate the parameter choices, we conducted a sensitivity analysis on the two key loss-weight hyperparameters: the rotation loss weight and the temporal loss weight.

Rotation Loss Weight Analysis: Table 9 presents the sensitivity analysis results for different values of the rotation loss weight, with the temporal loss weight held fixed. Circular trajectory data are used for testing because they represent the most challenging motion pattern.

In this analysis, the composite score is calculated as , where higher values indicate better performance.

Results demonstrate that the selected rotation loss weight achieves the best balance between position and orientation accuracy. Values below 0.6 significantly degrade orientation estimation because the orientation term is under-weighted, while values above 1.0 gradually reduce position accuracy because the orientation term is over-emphasized.

Temporal Loss Weight Analysis: Table 10 presents the sensitivity analysis results for the temporal loss weight, with the rotation loss weight held fixed. Z-shaped trajectory data are used to test complex temporal variation characteristics.

In this analysis, trajectory smoothness represents the average inter-frame pose variation, where lower values indicate smoother trajectories. Temporal consistency is calculated as 1 − trajectory prediction variance, where higher values indicate better consistency. The composite score is computed as , using the baseline from circular trajectory experimental data.
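The two trajectory metrics defined above can be sketched as follows. This is a minimal illustration rather than the evaluation code used in the experiments: the function names are hypothetical, the inter-frame variation is assumed to be the Euclidean distance between consecutive pose vectors, and the prediction variance is assumed to be the population variance of per-frame prediction errors, since the text specifies neither.

```python
import math
from statistics import pvariance


def trajectory_smoothness(poses):
    """Mean distance between consecutive pose vectors (lower = smoother).

    Assumption: each pose is a tuple of already-normalized components,
    so position and orientation terms are on comparable scales.
    """
    steps = [math.dist(a, b) for a, b in zip(poses, poses[1:])]
    return sum(steps) / len(steps)


def temporal_consistency(frame_errors):
    """1 - variance of per-frame prediction errors (higher = more consistent).

    Assumption: the variance is the population variance of the errors.
    """
    return 1.0 - pvariance(frame_errors)


if __name__ == "__main__":
    # Hypothetical toy trajectory: three 2-D poses, each step has length 5.
    toy_poses = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
    print("smoothness:", trajectory_smoothness(toy_poses))
    # Hypothetical per-frame errors; identical errors mean zero variance.
    print("consistency:", temporal_consistency([0.1, 0.1, 0.1]))
```

If the poses mix centimetres and degrees, the components would need to be normalized first; otherwise one unit dominates the distance.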

Analysis confirms that the selected temporal loss weight provides the best baseline performance, while the tested values 0.05, 0.10, and 0.15 all perform robustly across different motion complexities. These results indicate that our parameter selection is empirically grounded rather than arbitrary.

Joint Parameter Optimization Validation: Table 11 presents the joint optimization results for the rotation and temporal loss weights, using a grid search over both parameters to check that the selected combination is optimal.

Joint optimization results confirm that the selected combination of rotation and temporal loss weights achieves the best baseline performance (score 2.000), indicating that the two parameters can be selected independently and that the configuration range is robust. The selected rotation loss weight remains optimal across all tested temporal loss weights, while temporal loss weights of 0.05, 0.10, and 0.15 all perform well at that rotation loss weight, demonstrating stable parameter selection.

Theoretical Validation Analysis: Position-orientation error scale analysis based on experimental data shows a position error range of 3.60–5.82 cm (average 4.71 cm) and an orientation error range of 6.31–8.02° (average 7.17°). Our experimental weight is slightly higher than the theoretical optimal weight obtained from this analysis. This is reasonable because orientation estimation is inherently more difficult: the choice conservatively gives orientation optimization moderate additional weight while staying within the stable interval of 0.6–1.0.
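The averages quoted above are the midpoints of the reported ranges. As an illustrative check only, the sketch below assumes that the theoretical weight is the ratio of mean position error to mean orientation error, so that both loss terms contribute at a comparable scale. That definition is an assumption, not something stated in the text, and the function name is hypothetical.

```python
def midpoint(low, high):
    """Midpoint of a reported error range."""
    return (low + high) / 2.0


# Error ranges quoted in the text.
mean_position_cm = midpoint(3.60, 5.82)      # about 4.71 cm
mean_orientation_deg = midpoint(6.31, 8.02)  # 7.165 deg, reported as 7.17 deg

# Assumed definition: weight that equalizes the two error scales.
scale_ratio = mean_position_cm / mean_orientation_deg

print(f"mean position error    : {mean_position_cm:.3f} cm")
print(f"mean orientation error : {mean_orientation_deg:.3f} deg")
print(f"position/orientation   : {scale_ratio:.3f}")
```

Under this assumption the ratio is close to 0.66, which falls inside the 0.6–1.0 interval discussed above.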

Thus, we demonstrate that STFI-Net’s loss function hyperparameter configuration represents scientifically validated optimal choices rather than arbitrary decisions, ensuring methodological rigor and reproducibility.

- (2) TCN kernel size analysis

The TCN kernel size configuration directly impacts the network’s temporal receptive field and multi-scale feature extraction capability. In VLP systems, RSS signals exhibit multi-timescale characteristics: short-term fluctuations (0.1–0.5 s) due to LED switching dynamics and long-term trends (1–3 s) reflecting AGV motion patterns. The kernel size selection must balance capturing these diverse temporal scales while maintaining computational efficiency.

To validate the TCN kernel size configuration [15, 31, 31, 63], we designed five kernel configuration schemes and evaluated them systematically on circular trajectory data.

Table 12 presents the performance comparison of the different TCN kernel configurations. The current configuration [15, 31, 31, 63] achieves the best balance between accuracy and computational cost through its progressive multi-scale design, with a position MAE of 5.82 cm and an orientation MAE of 8.02°. In contrast, the smaller kernel configuration [7, 15, 15, 31] suffers from an insufficient receptive field, which limits long-term temporal dependency modeling and causes a 9.1% performance degradation. The larger kernel configuration [31, 63, 63, 127] provides a larger receptive field but increases computational overhead by 68% for only a 2.6% performance improvement, showing clearly diminishing returns.

Uniform kernel designs ([15, 15, 31, 31] and [31, 31, 63, 63]) lack multi-scale modeling capability and cannot effectively capture both short-term signal fluctuations and long-term trajectory trends simultaneously. Their performance in complex circular trajectory dynamics is inferior to the current progressive configuration. Experimental results demonstrate that the current configuration [15, 31, 31, 63] achieves optimal balance between computational efficiency and modeling capability through reasonable multi-scale temporal modeling design.
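To make the receptive-field argument concrete, the sketch below computes the nominal receptive field of a stack of 1-D convolutions for three of the configurations named above. It assumes stride 1 and no dilation, which the text does not state, and the labels "small", "current", and "large" are illustrative names only.

```python
def receptive_field(kernel_sizes, dilations=None):
    """Nominal receptive field, in time steps, of stacked stride-1 1-D convs.

    Each layer with kernel size k and dilation d widens the field by (k - 1) * d.
    """
    if dilations is None:
        dilations = [1] * len(kernel_sizes)  # assumption: no dilation
    field = 1
    for k, d in zip(kernel_sizes, dilations):
        field += (k - 1) * d
    return field


# Kernel configurations quoted in the text; the dictionary keys are hypothetical labels.
configs = {
    "small": [7, 15, 15, 31],
    "current": [15, 31, 31, 63],
    "large": [31, 63, 63, 127],
}

for name, kernels in configs.items():
    print(f"{name:8s} {kernels} -> {receptive_field(kernels)} steps")
```

Under these assumptions the three stacks span 65, 137, and 281 time steps respectively, so each configuration roughly doubles the temporal context of the previous one.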

- (3) Dropout configuration analysis

Dropout regularization strategy is critical for preventing overfitting in deep neural networks, particularly in VLP applications where RSS signal patterns can exhibit complex spatial correlations. The layered dropout design [CNN: 0.2, TCN: 0.1, Output: 0.1] reflects the different overfitting susceptibilities of spatial feature extraction, temporal modeling, and final regression components.

We adopt a layered dropout strategy [0.2, 0.1, 0.1], differentiated according to the functional characteristics of the network layers. To validate this configuration, we evaluated five dropout configuration strategies.

Table 13 presents the sensitivity analysis results of different dropout configurations. The current layered configuration [0.2, 0.1, 0.1] achieves the optimal precision-generalization balance, with position MAE of 5.82 cm and orientation MAE of 8.02°. Insufficient dropout rates ([0.1, 0.05, 0.05]) result in spatial feature extraction layers exhibiting overfitting to RSS distribution patterns, causing 5.2% validation performance degradation. Excessive dropout rates ([0.3, 0.2, 0.2]), while enhancing generalization capability, compromise model fitting precision, resulting in 6.7% accuracy reduction.

Uniform dropout configurations ([0.15, 0.15, 0.15] and [0.25, 0.25, 0.25]) disregard the functional distinctions of different network layers and fail to address layer-specific overfitting issues, demonstrating inferior overall performance compared to the layered design. Experimental results validate the effectiveness of the layered dropout strategy, achieving optimal equilibrium between learning capability and generalization performance through differentiated regularization design for CNN spatial layers, TCN temporal layers, and output regression layers.

- (4) Window length analysis

The temporal window length T determines the amount of historical RSS information available for pose estimation. In VLP systems, this parameter must balance temporal information completeness with computational efficiency and real-time constraints. AGV motion characteristics typically exhibit correlation timescales of 1.5–2.5 s, requiring sufficient temporal context while avoiding excessive computational overhead.

We evaluated the impact of different window lengths on STFI-Net performance to determine the optimal temporal modeling configuration.

Table 14 presents the sensitivity analysis results of different temporal window lengths. The T = 30 configuration achieves optimal performance with position MAE of 5.82 cm and orientation MAE of 8.02°. The short window T = 20 suffers from insufficient temporal information, leading to limited modeling capability for continuous turning patterns in circular trajectories and 7.9% performance degradation. The long window T = 40, while providing richer temporal context with slight performance improvement (1.0%), increases memory usage by 44% and inference latency by 34%, exhibiting obvious diminishing marginal returns.

Excessive window length T = 50 introduces temporal information redundancy with negligible performance improvement (0.5%) but dramatically increased resource consumption, which is unsuitable for practical deployment requirements. Experimental results demonstrate that T = 30 window length precisely covers key temporal characteristics of typical AGV motion, achieving optimal balance between temporal information completeness and computational efficiency, meeting real-time VLP system deployment requirements.

Through systematic hyperparameter sensitivity analysis, we validate STFI-Net’s current configuration. Multi-configuration comparisons confirm each key hyperparameter as the best of the tested options, providing a solid theoretical foundation and experimental support for the network’s high performance and practical deployment.

4.2.4. Inference Time and Model Complexity Analysis

Computational efficiency is crucial for practical deployment. This section evaluates STFI-Net’s computational performance, combining a quantitative inference time analysis with a detailed model complexity assessment to evaluate scalability on embedded systems.

- (1) Inference time evaluation

We conducted a systematic inference time evaluation using the hardware setup specified in Table 2 to assess STFI-Net’s computational efficiency for both static point localization and dynamic trajectory estimation scenarios.

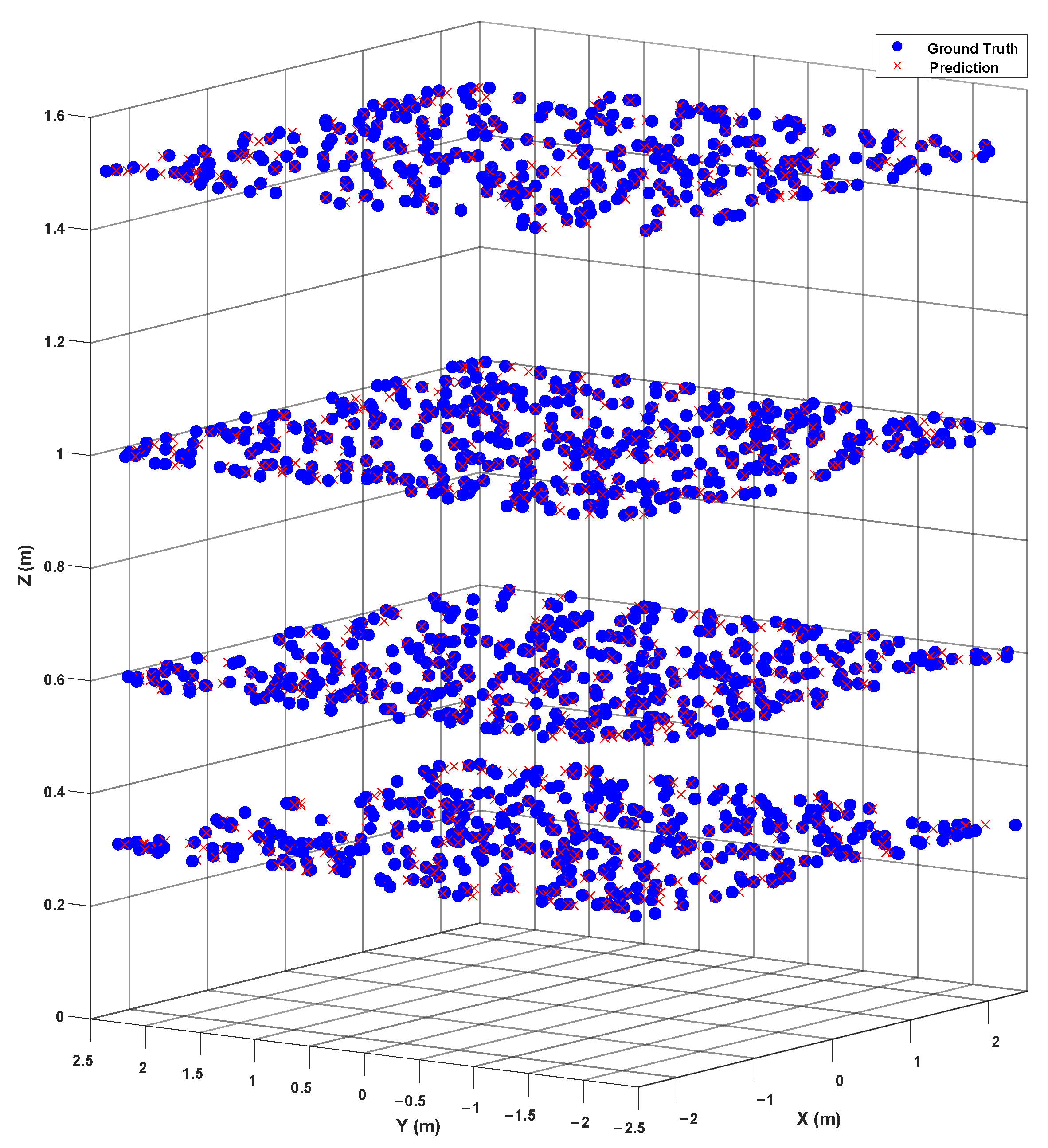

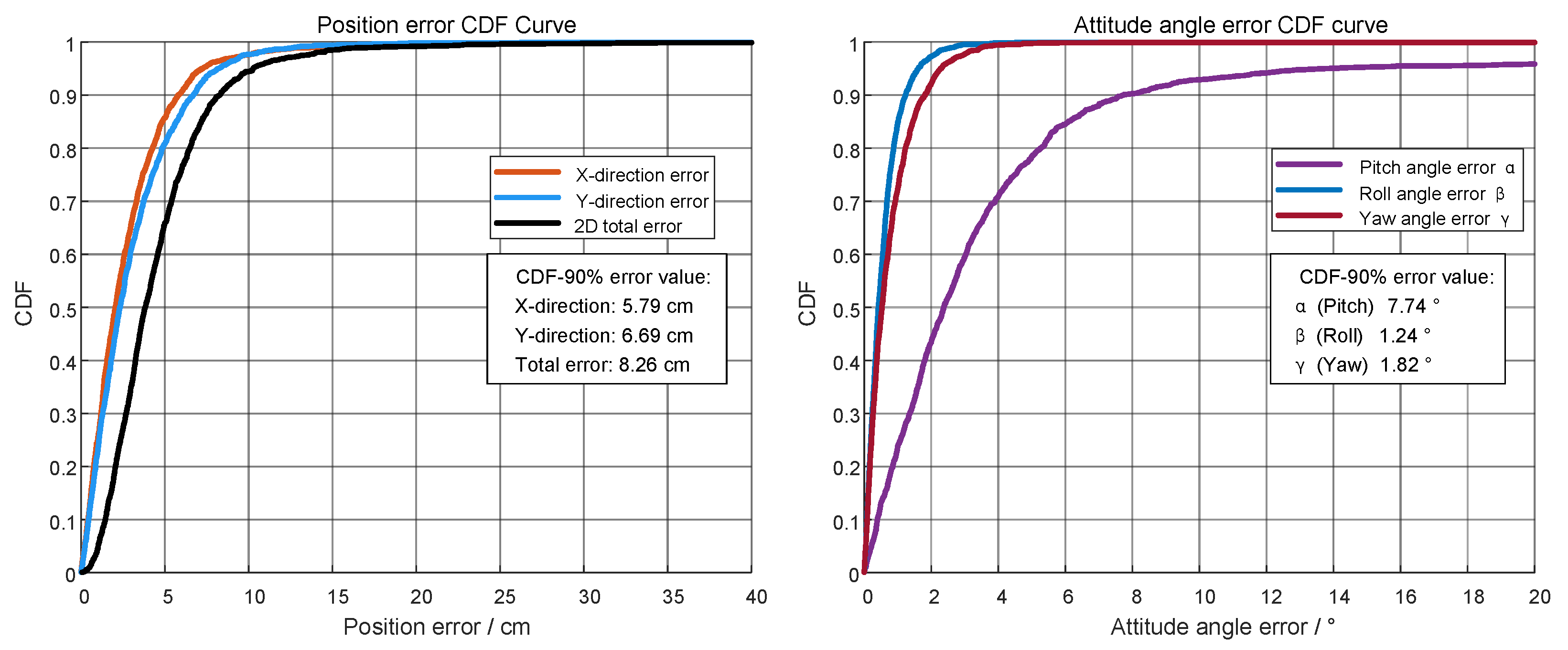

Static Localization Performance: For offline dataset generation, comprehensive spatial data creation (size: points) and corresponding pose vector computation using MATLAB required approximately 1 h 40 min 54 s. Subsequent deep learning model training using DataSpell took approximately 3 h 13 min 28 s. For online validation, inference on 1500 randomly sampled points from multiple height planes (0.3 m, 0.6 m, 1.0 m, 1.5 m) was completed in 11.4 s, yielding an average inference time of approximately 7.6 ms per sample for joint position and orientation estimation. This latency is well within acceptable ranges for real-time indoor localization systems.

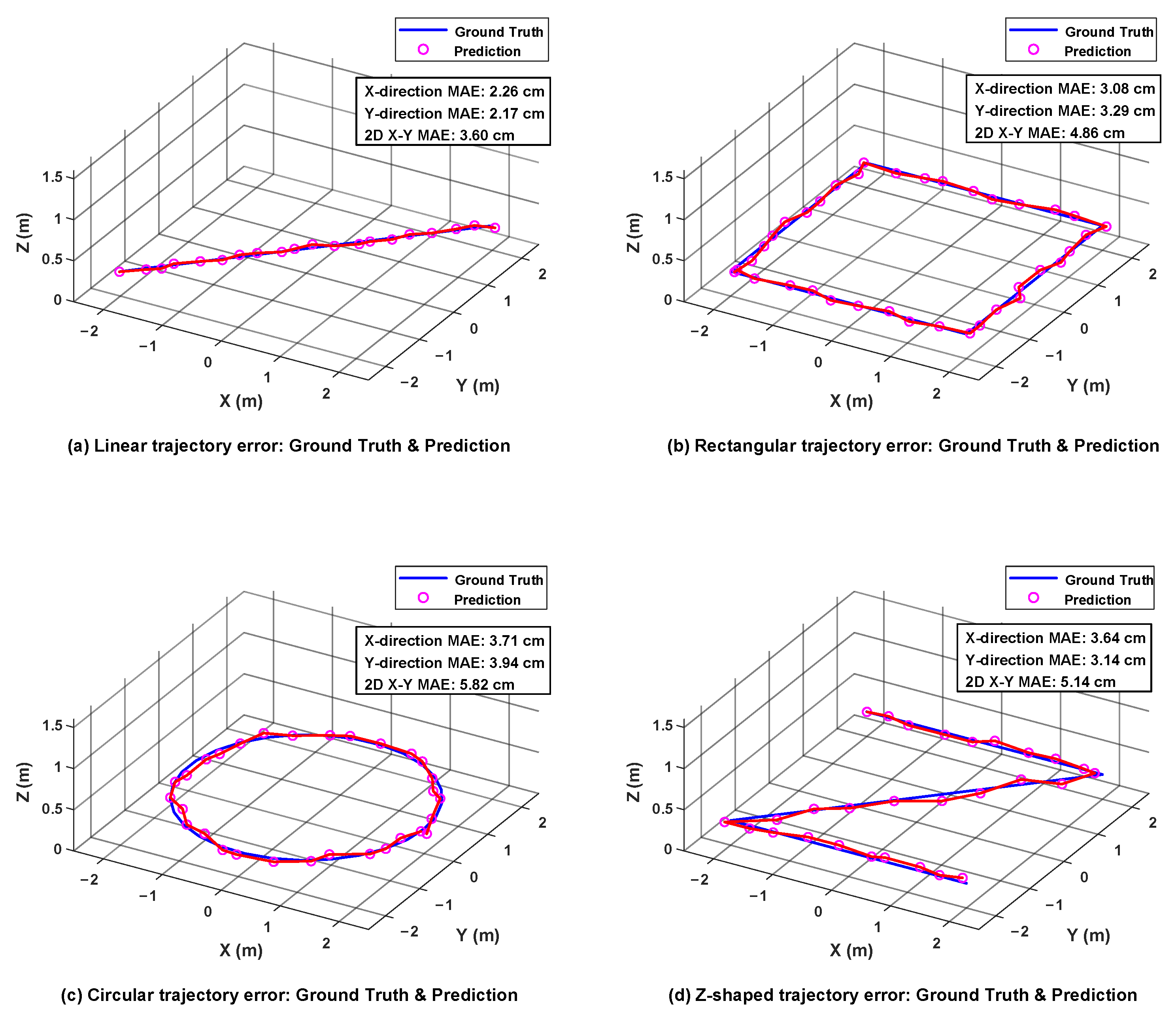

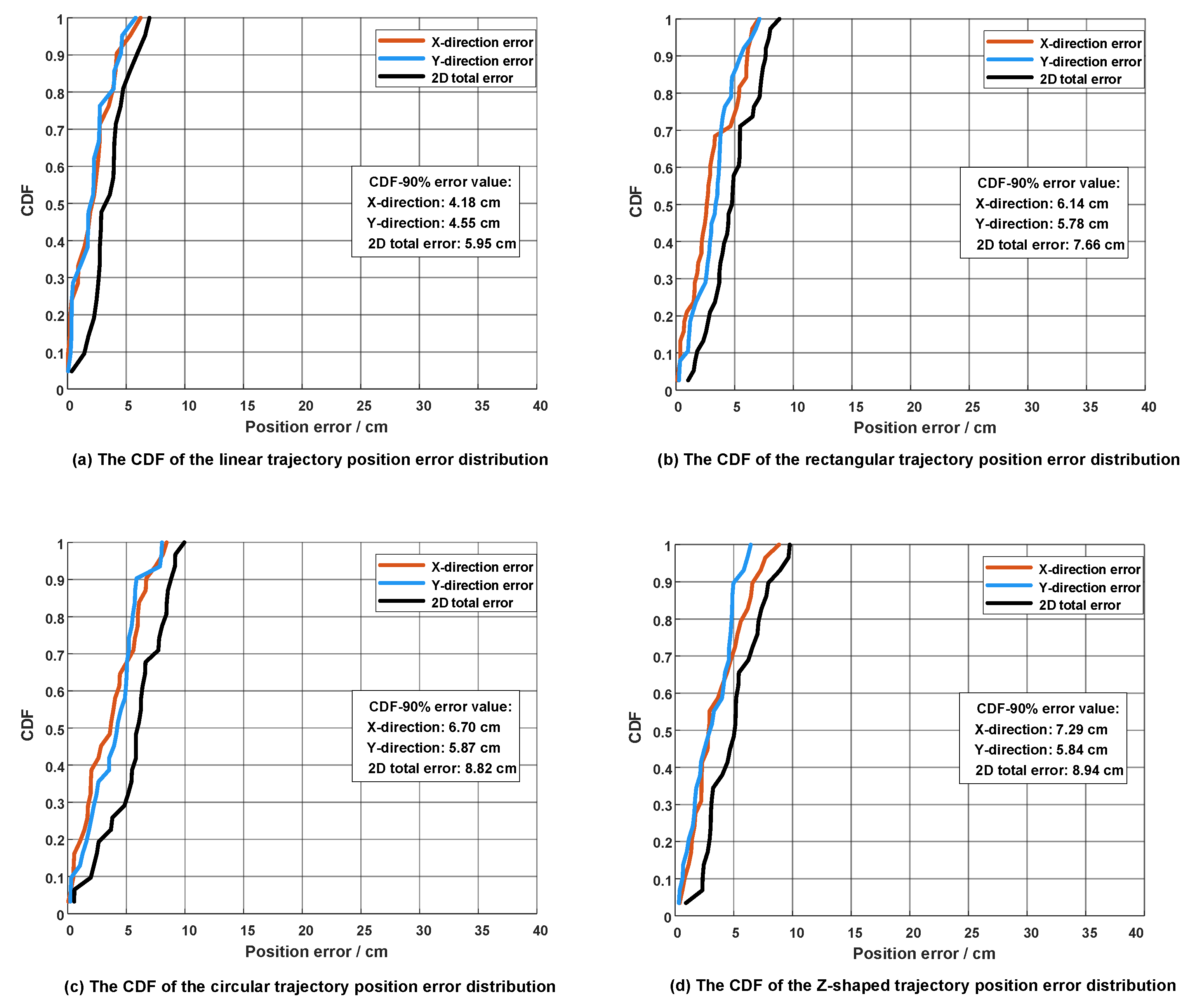

Dynamic Trajectory Estimation Performance: We further examined STFI-Net’s inference overhead under continuous operation by testing four dynamic trajectories with varying time-series lengths.

Table 15 presents the measured inference times for different trajectory types and lengths.

Notably, even the longest sequence (rectangular trajectory, 37 steps) was processed in under 0.3 s. The consistency between static localization (7.6 ms/sample) and dynamic trajectory estimation (average 7.96 ms/step) validates the architectural stability of STFI-Net across different operational modes.
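The per-sample and per-step figures follow from simple division of total time by item count. The sketch below reproduces that arithmetic; the helper name is hypothetical. The trajectory totals of 154 ms and 287 ms are the range endpoints reported later in this section, and pairing them with 20 and 37 steps respectively is an assumption based on that range.

```python
def per_item_ms(total_seconds, n_items):
    """Average latency per item in milliseconds."""
    return 1000.0 * total_seconds / n_items


# Static localization: 1500 points in 11.4 s (from the text).
static_ms = per_item_ms(11.4, 1500)

# Dynamic trajectories: assumed pairing of the reported range endpoints.
shortest_ms = per_item_ms(0.154, 20)
longest_ms = per_item_ms(0.287, 37)

print(f"static   : {static_ms:.2f} ms/sample")
print(f"20 steps : {shortest_ms:.2f} ms/step")
print(f"37 steps : {longest_ms:.2f} ms/step")
```

Both trajectory endpoints come out at roughly 7.7 to 7.8 ms per step, close to the 7.6 ms per sample measured in the static case.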

- (2) Model complexity analysis

Based on the STFI-Net architecture described in Section 3 (2-layer CNN + 4-layer Modern TCN + 2-layer TimeDistributed) and the parameter configurations specified in Table 4, we conduct a precise complexity analysis to understand the computational requirements underlying the observed inference performance.

Table 16 presents the detailed complexity breakdown across STFI-Net components for a typical trajectory inference configuration (T = 30, processing 16 AP RSS signals).

The computational bottleneck lies in TCN temporal modeling, specifically the ConvFFN components with expansion ratio of 4× (128→512→128 dimensions). However, the pure convolutional architecture enables complete parallelization, avoiding the sequential bottlenecks inherent in RNN-based approaches, which explains the consistent per-step inference time observed in our experiments.
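A rough estimate shows why the ConvFFN blocks dominate. The sketch below assumes that each ConvFFN consists of two ungrouped pointwise (1×1) convolutions with bias (128→512 and 512→128), applied at each of T = 30 time steps in each of the four TCN layers. These structural details are assumptions, so the result is an order-of-magnitude illustration and not a substitute for the breakdown in Table 16.

```python
def conv_ffn_cost(d_model=128, expansion=4, seq_len=30, bias=True):
    """Parameters and multiply-accumulates (MACs) of one ConvFFN block.

    Assumption: two dense pointwise convolutions, d_model -> hidden -> d_model.
    """
    hidden = d_model * expansion
    params = 2 * d_model * hidden
    if bias:
        params += hidden + d_model
    macs = 2 * d_model * hidden * seq_len  # both convolutions, every time step
    return params, macs


N_TCN_LAYERS = 4  # from the architecture summary in the text

block_params, block_macs = conv_ffn_cost()
total_params = N_TCN_LAYERS * block_params
total_macs = N_TCN_LAYERS * block_macs

print(f"per block : {block_params:,} params, {block_macs:,} MACs")
print(f"4 blocks  : {total_params:,} params, {total_macs:,} MACs")
```

Under these assumptions the four blocks account for about 0.53 M parameters and 15.7 M multiply-accumulates, a large share of the totals reported for the whole network.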

Table 17 summarizes the complete complexity characteristics of STFI-Net, providing essential metrics for embedded system deployment assessment.

- (3) Embedded system scalability assessment

Based on the empirically validated inference performance (154–287 ms for 20–37 step trajectories) and theoretical complexity analysis (1.1 M parameters, 33.1 M FLOPs, 11–15 MB memory), we evaluate deployment feasibility across mainstream embedded platforms.

Table 18 presents a comprehensive assessment considering hardware specifications, computational capabilities, and application scenarios.

The deployment feasibility analysis reveals that (1) the memory requirements (11–15 MB at inference) are fully satisfied by Raspberry Pi 4 and more capable platforms, (2) the computational demands (33.1 M FLOPs) are manageable for ARM Cortex-A72 and faster processors, and (3) the low-power design enables edge computing and mobile applications.

The integrated analysis demonstrates that STFI-Net achieves an optimal balance between computational efficiency and positioning accuracy. The empirically validated trajectory inference performance (under 0.3 s for all tested scenarios) combined with favorable complexity characteristics confirms its suitability for real-time VLP applications across diverse embedded deployment scenarios, thereby satisfying the real-time demands of AGV navigation and other dynamic positioning applications.