Adaptive Flow Timeout Management in Software-Defined Optical Networks

Abstract

1. Introduction

- Hard timeout: the flow will be deleted after a set number of seconds, regardless of its activity;

- Idle timeout: the flow will be deleted after a set number of seconds of inactivity.
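The difference between the two timeout types can be made concrete with a minimal sketch. The `FlowEntry` class and its field names are hypothetical and chosen for illustration only; they are not taken from any controller or switch API. The one assumption borrowed from OpenFlow is that a timeout value of 0 disables that timeout.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlowEntry:
    """Hypothetical flow-table entry used only to illustrate timeout semantics."""
    installed_at: float        # time the rule was installed, in seconds
    last_matched_at: float     # time of the most recent matching packet, in seconds
    hard_timeout: float = 0.0  # 0 disables the hard timeout
    idle_timeout: float = 0.0  # 0 disables the idle timeout

    def on_packet(self, now: float) -> None:
        # A matching packet resets the idle clock but never the hard clock.
        self.last_matched_at = now

    def expiry_reason(self, now: float) -> Optional[str]:
        # Hard timeout: counted from installation, regardless of activity.
        if self.hard_timeout and now - self.installed_at >= self.hard_timeout:
            return "hard"
        # Idle timeout: counted from the last matching packet.
        if self.idle_timeout and now - self.last_matched_at >= self.idle_timeout:
            return "idle"
        return None


# A flow that goes quiet after t = 2 s is removed by the idle timeout.
quiet = FlowEntry(installed_at=0.0, last_matched_at=0.0, hard_timeout=10.0, idle_timeout=3.0)
quiet.on_packet(2.0)
print(quiet.expiry_reason(4.0))   # None
print(quiet.expiry_reason(5.0))   # idle

# A flow that stays active is still removed once the hard timeout elapses.
busy = FlowEntry(installed_at=0.0, last_matched_at=0.0, hard_timeout=10.0, idle_timeout=3.0)
for t in range(1, 11):
    busy.on_packet(float(t))
print(busy.expiry_reason(10.0))   # hard
```

In this sketch the hard timeout is checked first, so an entry that satisfies both conditions at once is reported as a hard expiry; that ordering is a design choice of the example, not a property stated in the text.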

2. Literature Review

- Predictable flows (for example, flows for deterministic network services);

- Unpredictable flows (for example, spontaneous network traffic).

- The remaining space in the flow table is estimated;

- A flow lifetime value is set and assigned to newly arrived flows that are handled by the controller;

- An appropriate sampling period is selected based on the current network traffic.
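The three steps listed above can be sketched as a simple control loop. This is an illustrative sketch only: the function names, the linear lifetime rule, the sampling rule, and all constants are assumptions made for the example and do not reproduce the formulas of any reviewed scheme.

```python
def remaining_capacity(table_size: int, active_entries: int) -> float:
    """Step 1: estimate the free fraction of the flow table (0.0 to 1.0)."""
    if table_size <= 0:
        raise ValueError("table_size must be positive")
    used = min(max(active_entries, 0), table_size)
    return (table_size - used) / table_size


def flow_lifetime(free_fraction: float,
                  min_timeout: float = 1.0,
                  max_timeout: float = 30.0) -> float:
    """Step 2 (assumed linear rule): more free space gives a longer lifetime."""
    return min_timeout + free_fraction * (max_timeout - min_timeout)


def sampling_period(new_flows_per_second: float,
                    base_period: float = 5.0,
                    min_period: float = 0.5) -> float:
    """Step 3 (assumed rule): sample the table more often under heavier traffic."""
    return max(min_period, base_period / (1.0 + new_flows_per_second / 100.0))


# One iteration of the loop for a 1000-entry table holding 750 flows.
free = remaining_capacity(table_size=1000, active_entries=750)
print(free)                      # 0.25
print(flow_lifetime(free))       # 8.25
print(sampling_period(100.0))    # 2.5
```

A controller would repeat this loop once per sampling period, applying the newly computed lifetime only to flows installed after that point.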

- The time between packet arrivals (inter-arrival time);

- Controller bandwidth;

- Current flow table usage.

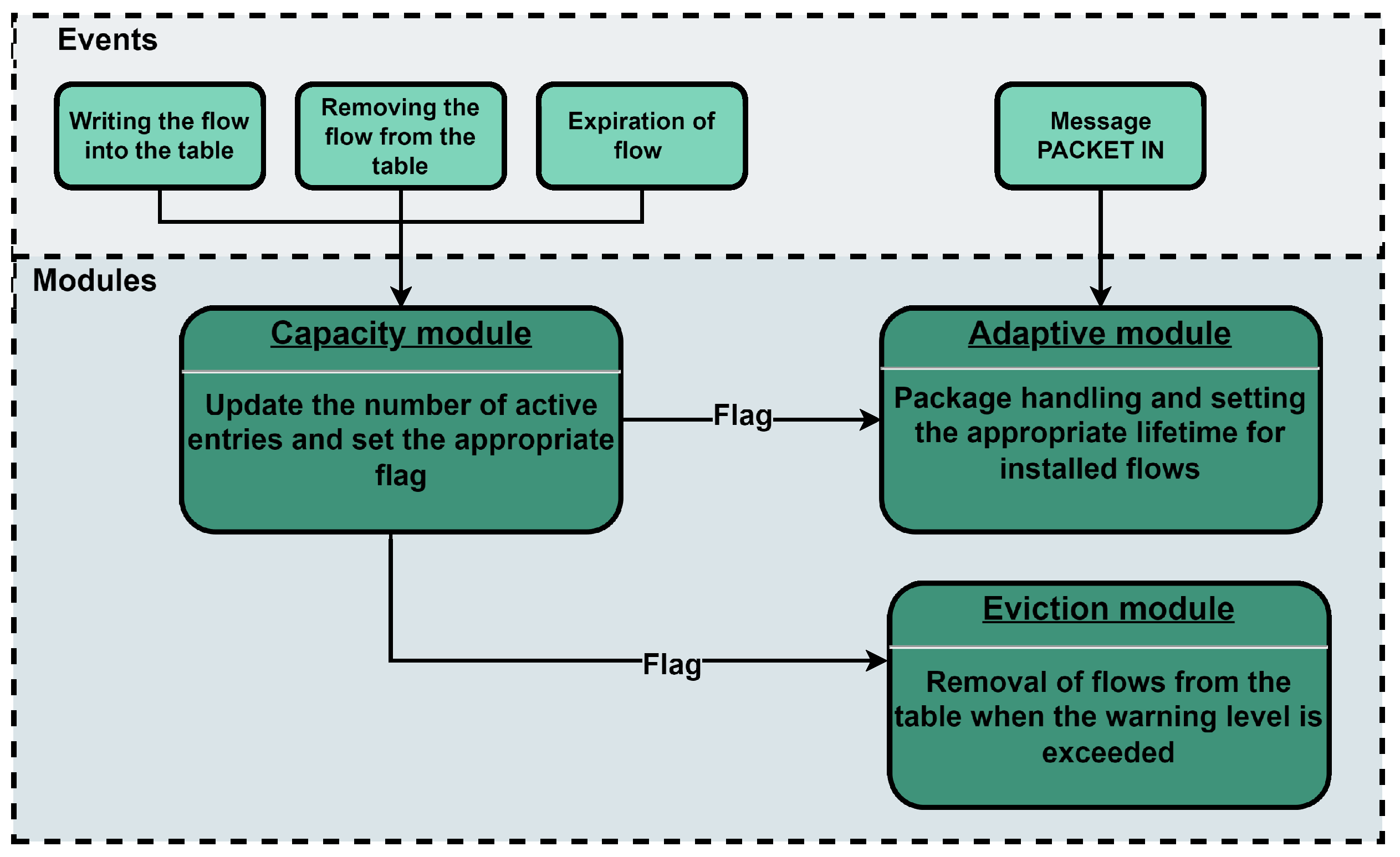

3. Adaptive Flow Timeout Management

3.1. Capacity Module

3.2. Adaptive Module

3.3. Eviction Module

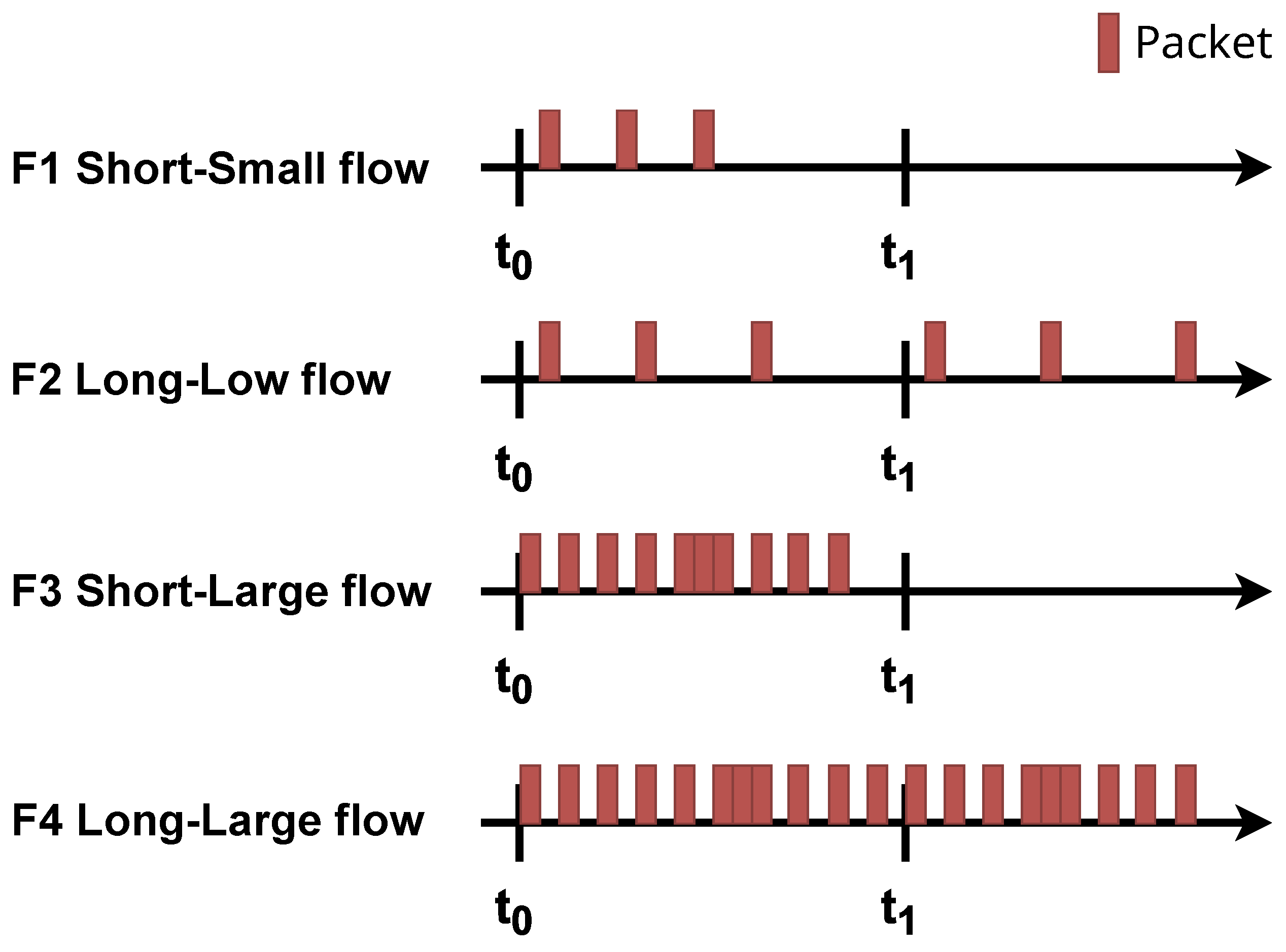

4. Simulations

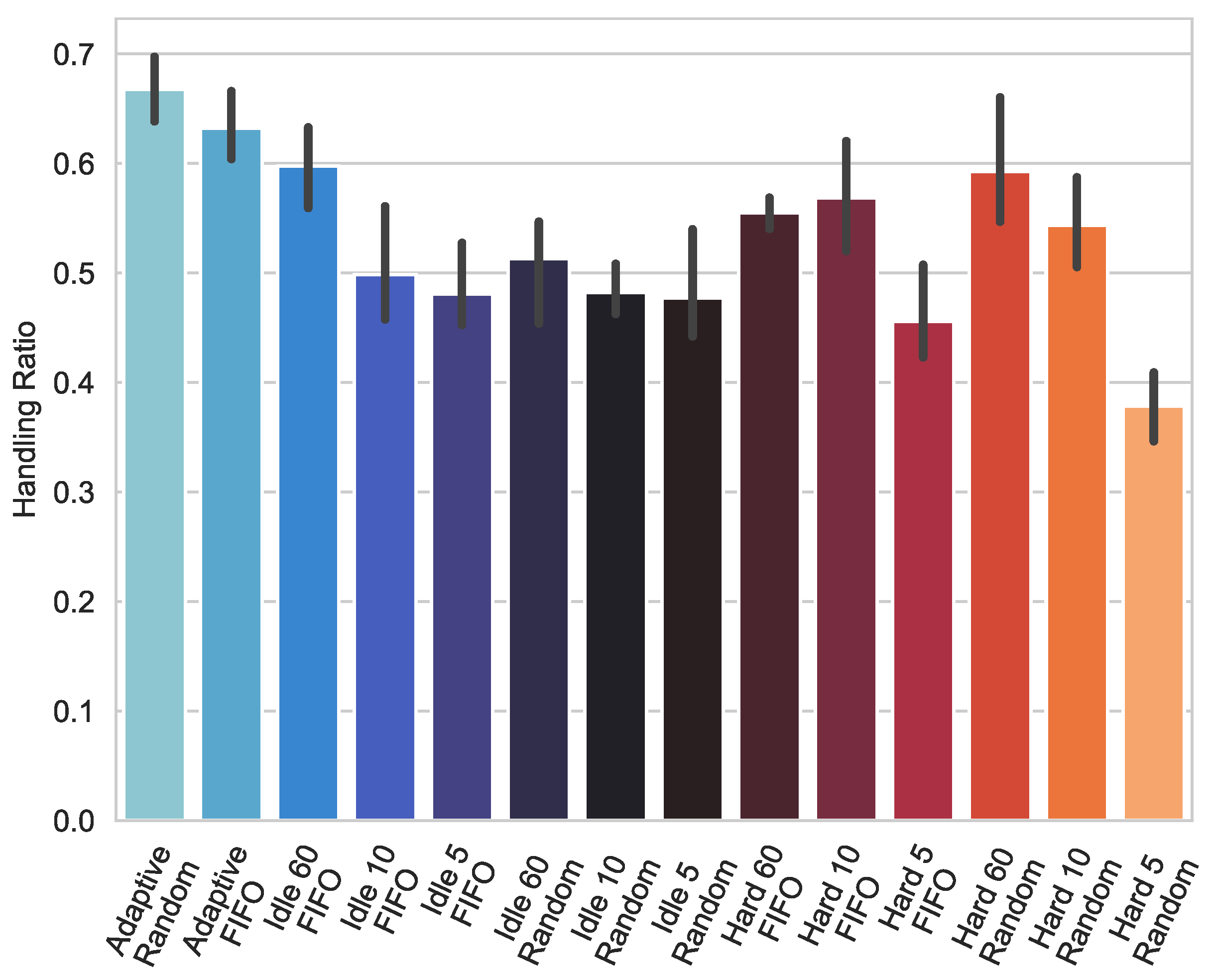

4.1. Packet Handling Ratio

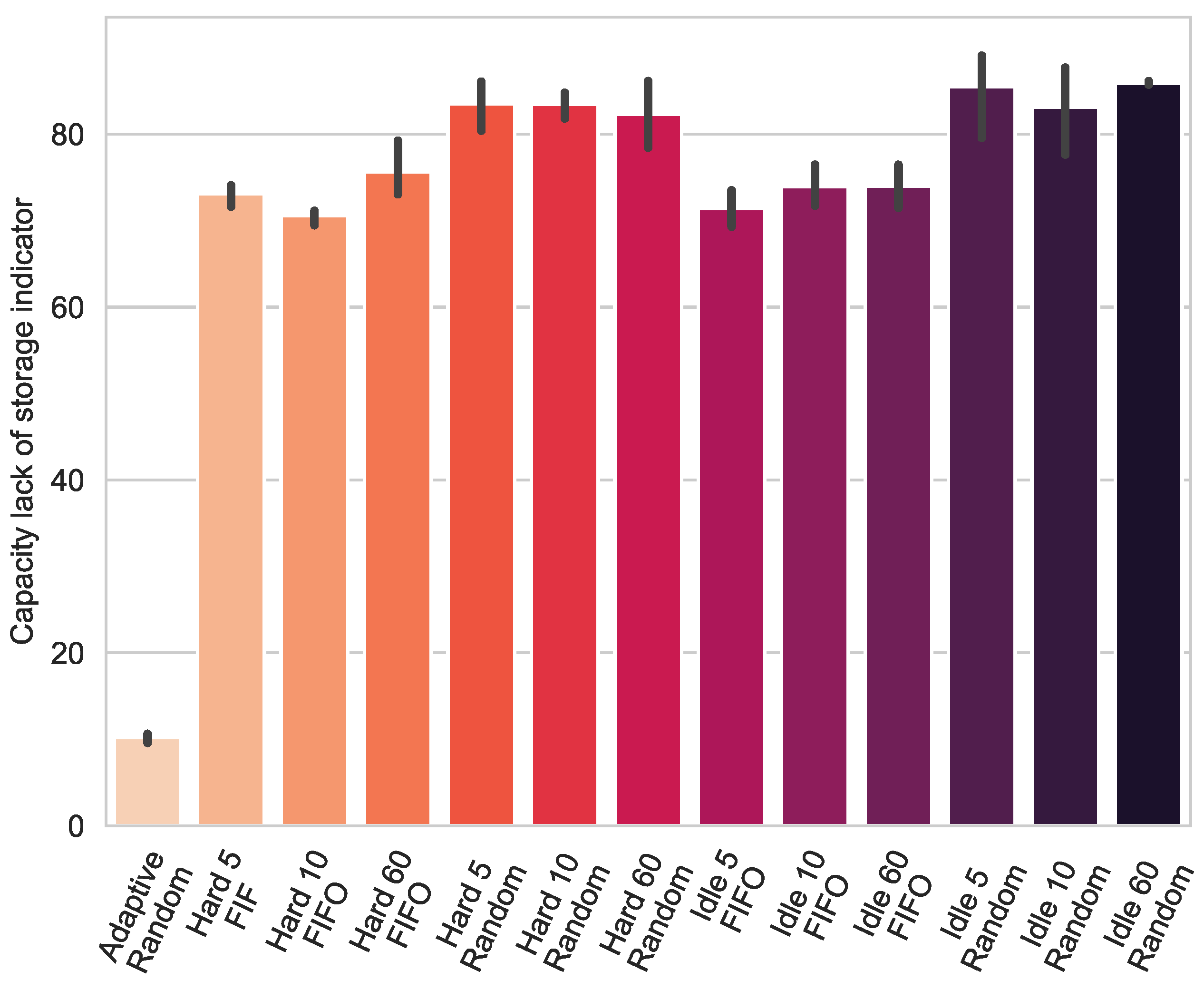

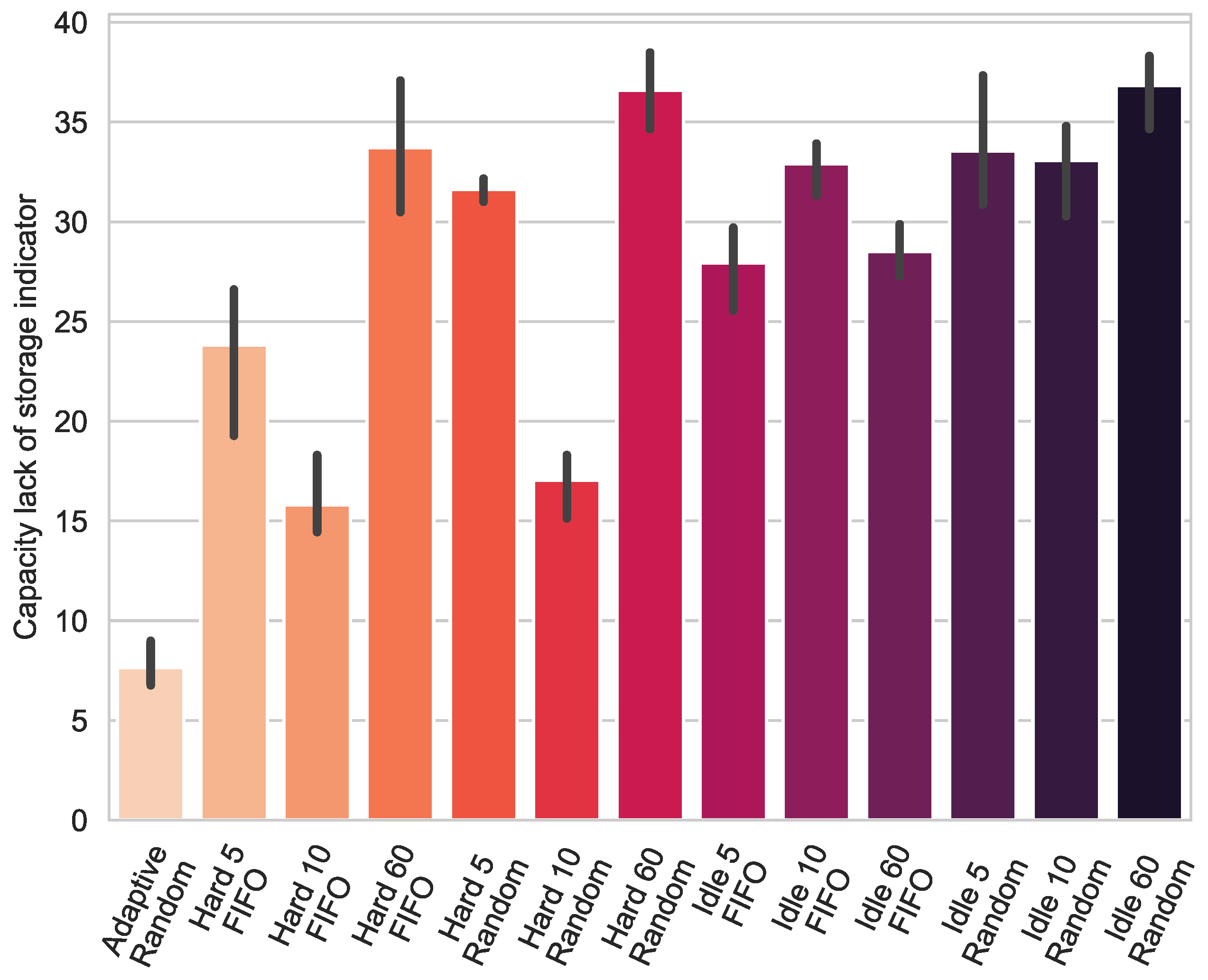

4.2. Capacity Lack of Storage Indicator

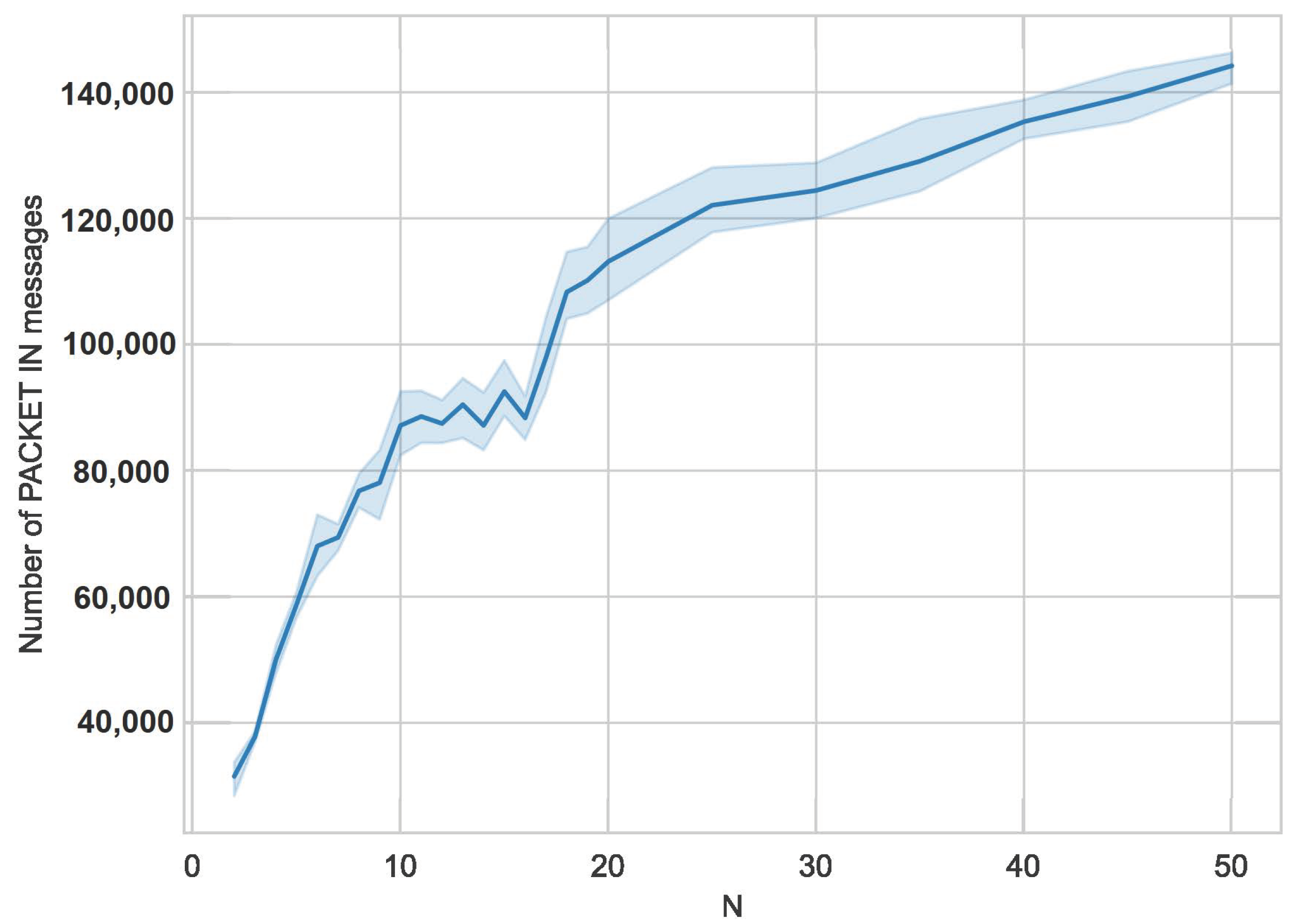

4.3. The Dependence of the Parameter N

4.4. Impact of Mechanism on Network Traffic

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Radamski, K.; Ząbek, W.; Domżał, J.; Wójcik, R. Adaptive Flow Timeout Management in Software-Defined Optical Networks. Photonics 2024, 11, 595. https://doi.org/10.3390/photonics11070595