1. Introduction

Because phase information is lost, reconstructing the underlying image from Fourier transform magnitudes is defined as a phase retrieval (PR) problem. Phase retrieval problems are ill-posed: different images can have the same Fourier transform magnitudes, so the solution is not unique. Prior information about the image, such as its support domain, nonnegativity or a sparse representation [1,2], is needed to constrain the numerical process so that it reaches the global saddle-point solution. Readers can consult [1,2,3] for applications in regularizing seismic images beyond aliasing via gradient and spectral techniques and for additional information.

Gerchberg and Saxton [4] proposed an error reduction (ER) method based on projection; later, in 1982, this method was improved by Fienup's hybrid input–output (HIO) algorithm [1]. Derived iterative projection algorithms include hybrid projection–reflection [3,5], the iterative difference map (DF) [6] and the relaxed averaged alternating reflection (RAAR) algorithm [7]. In addition, Marchesini adopted a saddle-point optimization method [8] to solve the PR problem. Alternating projection methods lack a convergence guarantee because the constraint sets they project onto are nonconvex. Thus, the iterates may converge to a local stationary point rather than the global optimal solution. Moreover, gradient-type methods have become popular; one example is the Wirtinger Flow (WF) method [9] proposed by Candès, Li and Soltanolkotabi, a gradient scheme with novel update rules that is carefully initialized by means of a spectral method. Gradient-based approaches usually have first-order convergence. Farrell solved the PR problem over a network in which every agent holds only a subset of the measurements [10]. Convex methods are characterized by the use of convex relaxations of the quadratic equations, typically through semidefinite programming (SDP). Candès, Strohmer and Voroninski proposed PhaseLift [11], which uses SDP lifting techniques to formulate a convex trace (nuclear) norm minimization. Moretta studied the impact of constraints in PhaseLift [12]. PhaseCut [13], proposed by Waldspurger, is a convex method that separates phase information and magnitudes. Yin and Xin proposed PhaseLiftOff [14], a nonconvex variant of PhaseLift that subtracts the Frobenius norm from the trace norm to retrieve phase information with fewer measurements. Xia studied a sparse phase retrieval method to recover a k-sparse signal [15]. Gao studied an adaptive sparse signal reconstruction algorithm [16].

In recent years, total variation (TV) regularization has been successfully used to solve blind image deblurring problems with certain specific blurring kernels. In addition, framelet-based regularization has been adopted to solve deblurring problems with motion blur kernels. However, their potential for phase retrieval from Fourier transform magnitudes remains largely unexplored. In this paper, we propose a phase retrieval algorithm based on TV regularization and the analysis-based sparsity framelet transform method to recover the image through an analysis matrix. We explicitly focus on the sparsity of the image representation in phase retrieval from Fourier transform magnitudes. Total variation regularization effectively enforces a sparsity prior in the gradient domain for ground truth images with a small TV semi-norm, and the framelet transform enforces a sparsity prior on target images under the redundant tight frame.

In image deblurring and phase recovery, Gaussian noise alone is usually used to test the robustness of algorithms against noise, while in image denoising, robustness to salt-and-pepper noise is studied. Gaussian noise and salt-and-pepper noise are both typical noise types and exist simultaneously in actual measurements. To simulate more realistic measurement conditions, this paper studies, for the first time, the robustness of the algorithm under complex noise, in which the two kinds of noise are present simultaneously. This is a very challenging problem.

Phase retrieval from Fourier transform magnitudes is an ill-posed problem, and it is more difficult than phase retrieval problems derived from motion blur. Readers can consult the work of E. J. Candès [17,18], who studied the relationship between the number of measurements and the possibility of reconstructing an image. For research on further applications for reconstructing images from partial measurements, please see the work of Chang Huibin [19]. On that basis, our research shows that when the illumination source meets a certain parameter setting, three sets of measured values can reconstruct an image.

2. Foundations of Phase Retrieval

2.1. Phase Retrieval Model

Phase retrieval is a branch of deconvolution problems and can be expressed as follows:

$$ b = |\mathcal{F}u| + \epsilon, $$

where $\mathcal{F}$ is the two-dimensional discrete Fourier transform and $b$ is the measured magnitude with noise $\epsilon$. For the convenience of calculation and implementation, we use vectors to represent two-dimensional discrete images in lexicographical order (stacked by columns). To keep the presentation simple, the symbols do not change. The image $u$ is defined on a discrete lattice $\Omega$ of size $n_1 \times n_2$, and $u$ also denotes the image stacked by columns. The corresponding discrete Fourier transform is expressed as follows:

$$ (\mathcal{F}u)(\omega_1,\omega_2) = \sum_{j_1=0}^{n_1-1}\sum_{j_2=0}^{n_2-1} u(j_1,j_2)\, e^{-2\pi i\left(\frac{\omega_1 j_1}{n_1} + \frac{\omega_2 j_2}{n_2}\right)}. $$
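The non-uniqueness behind this measurement model can be illustrated numerically. The following is a minimal sketch in Python, used here for illustration only. It assumes a naive one-dimensional DFT in place of the two-dimensional transform for brevity, and the signal values and helper names (`dft`, `magnitudes`) are hypothetical. It shows that a circular shift and a circular reversal of a real signal leave the Fourier magnitudes unchanged, so magnitudes alone cannot distinguish these candidates.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform of a 1-D sequence."""
    n = len(x)
    return [
        sum(x[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]


def magnitudes(x):
    """Fourier magnitudes |F x|; the phase is discarded."""
    return [abs(c) for c in dft(x)]


# Hypothetical nonnegative test signal with finite support.
u = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0]
n = len(u)

shifted = u[-2:] + u[:-2]                    # circular shift by two samples
flipped = [u[(-j) % n] for j in range(n)]    # circular reversal u[-j]

m_u = magnitudes(u)
m_shift = magnitudes(shifted)
m_flip = magnitudes(flipped)

# The three signals differ, yet their magnitude spectra coincide.
print(max(abs(a - b) for a, b in zip(m_u, m_shift)))
print(max(abs(a - b) for a, b in zip(m_u, m_flip)))
```

Ambiguities of this kind are one reason why additional priors or additional structured measurements are needed to single out one image.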

2.2. Total Variation

The total variation (TV) model of image processing, based on the partial differential equation (PDE) variational method, is known as the classic Rudin–Osher–Fatemi (ROF) model [20]. It is one of the most common models used for image restoration and generally consists of a fidelity term, a regularization penalty term and a regularization parameter. In recent years, the TV model has been widely used in image denoising and other fields; readers can consult [21,22,23] for details. Total variation regularization effectively enforces a sparsity prior in the gradient domain for underlying images that have a small TV semi-norm.

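In the following equations, $u$ denotes the image for phase retrieval, which is represented as a discrete two-dimensional matrix of size $n_1 \times n_2$. The total variation in the discrete domain is computed from the gradient of u, which is denoted by a gradient operator $\nabla$:

$$ (\nabla u)_{i,j} = \big((\nabla_x u)_{i,j},\ (\nabla_y u)_{i,j}\big), \qquad (\nabla_x u)_{i,j} = u_{i,j+1} - u_{i,j}, \qquad (\nabla_y u)_{i,j} = u_{i+1,j} - u_{i,j}, $$

where $u_{i,j}$ is an element of u, $\nabla_x u$ is the gradient in the horizontal direction and $\nabla_y u$ is the gradient in the vertical direction.

The total variation (TV) regularization term of u is indicated as follows:

$$ \mathrm{TV}(u) = \|\nabla u\|_1 . $$

For the restoration model with data $f$ polluted by additive noise, it can be indicated as follows:

$$ \min_u \ \frac{1}{2}\|u - f\|_2^2 + \lambda\,\mathrm{TV}(u), $$

where λ > 0 is the parameter of the total variation regularization term. The total variation regularization penalty term is the semi-norm of the image gradient. One type of TV variant, namely the isotropic TV, is defined by

$$ \mathrm{TV}_{\mathrm{iso}}(u) = \sum_{i,j} \sqrt{(\nabla_x u)_{i,j}^2 + (\nabla_y u)_{i,j}^2} . $$

Another type is anisotropic TV, which is defined by

$$ \mathrm{TV}_{\mathrm{aniso}}(u) = \sum_{i,j} \big( |(\nabla_x u)_{i,j}| + |(\nabla_y u)_{i,j}| \big). $$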
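As a small numerical sketch of the two TV variants, the following Python code evaluates the isotropic and anisotropic TV semi-norms of a tiny test image. The discretization is an assumption made for illustration: forward differences, with the difference set to zero at the last row and column. The function names and the 3 × 3 test image are hypothetical.

```python
import math


def gradients(u):
    """Forward differences; the difference is set to zero at the last row/column."""
    rows, cols = len(u), len(u[0])
    gx = [[u[i][j + 1] - u[i][j] if j + 1 < cols else 0.0 for j in range(cols)]
          for i in range(rows)]
    gy = [[u[i + 1][j] - u[i][j] if i + 1 < rows else 0.0 for j in range(cols)]
          for i in range(rows)]
    return gx, gy


def tv_anisotropic(u):
    """Sum over pixels of |horizontal difference| + |vertical difference|."""
    gx, gy = gradients(u)
    return sum(abs(a) + abs(b)
               for row_x, row_y in zip(gx, gy)
               for a, b in zip(row_x, row_y))


def tv_isotropic(u):
    """Sum over pixels of the Euclidean norm of the gradient vector."""
    gx, gy = gradients(u)
    return sum(math.hypot(a, b)
               for row_x, row_y in zip(gx, gy)
               for a, b in zip(row_x, row_y))


# Hypothetical test image: a single bright pixel on a dark background.
img = [[0.0, 0.0, 0.0],
       [0.0, 1.0, 0.0],
       [0.0, 0.0, 0.0]]

print(tv_anisotropic(img))  # 4.0
print(tv_isotropic(img))    # 2 + sqrt(2)
```

The isotropic value never exceeds the anisotropic one, since the Euclidean norm of each gradient vector is bounded by the sum of the absolute values of its components.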

2.3. Wavelet

Images have sparse representations or approximations under redundant transforms, such as the tight frame transform [24,25]. The wavelet frame [26] is one type of tight frame. A large number of studies have shown that sparsity prior and low-rank prior regularization enable the corresponding algorithm to produce a high-quality solution [27,28].

2.3.1. Tight Frame

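A tight frame in a Hilbert space is introduced as follows; interested readers can consult [27] for a more in-depth study. Let $\|\cdot\|$ denote the norm of a variable in a Hilbert space $\mathcal{H}$. The sequence $\{x_n\} \subset \mathcal{H}$ constructs a tight frame in $\mathcal{H}$ when

$$ \|f\|^2 = \sum_n |\langle f, x_n \rangle|^2 \quad \text{for all } f \in \mathcal{H}, $$

where $\langle \cdot, \cdot \rangle$ indicates an inner product and $\|\cdot\|$ indicates the norm of $\mathcal{H}$.

For the bounded sequence $\{x_n\}$, let $W$ denote the analysis operator and let $W^{*}$ denote its adjoint operator; they are defined by

$$ W: f \mapsto \{\langle f, x_n \rangle\}_n, \qquad W^{*}: \{a_n\}_n \mapsto \sum_n a_n x_n . $$

The sequence constructs a tight frame when $W^{*}W = I$, where $I$ is the identity operator.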

In the following equations, u denotes the image for phase retrieval.

where

g indicates the tight framelet transform coefficients.

The phase retrieval deconvolution optimization model based on synthetic sparsity representation is

The deconvolution optimization model based on analytical sparsity representation is

The deconvolution optimization model based on synthesis sparsity representation is

The tight frame generalizes the orthonormal basis; redundant frames have been found to be useful in image and signal processing [25,29,30]. The analysis operator W is redundant when its column dimension is smaller than its row dimension, in which case the two methods generate different results. The synthesis-based method seeks the sparsest result among all possible transform coefficient vectors, while the analysis-based method seeks the sparsest solution among the canonical framelet coefficient vectors only. Thus, the set of candidates considered by the analysis-based method is a strict subset of that considered by the synthesis-based method. The solutions of analysis-based methods are close to the underlying image and have increased smoothness. This has been observed empirically in many experiments. Since a solution with a certain smoothness has better visual quality, the analysis-based approach was chosen for this study. The two approaches are equivalent only if $WW^{*} = I$. Then, the tight frame becomes a canonical orthogonal transformation.
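The difference between a redundant tight frame and an orthogonal transform can be checked on a toy example. The sketch below is an illustration under stated assumptions, not the framelet system used in this work: it builds the three-vector "Mercedes-Benz" frame in the plane, scaled by √(2/3), as a hypothetical analysis operator `W` with three rows and two columns. Analysis followed by synthesis reproduces the input and preserves energy, while the product of `W` with its transpose is not the identity, so not every coefficient vector is the canonical coefficient vector of some signal.

```python
import math

# Three unit vectors 120 degrees apart, scaled so that W^T W = I (tight frame).
angles = [math.pi / 2 + 2 * math.pi * k / 3 for k in range(3)]
scale = math.sqrt(2.0 / 3.0)
W = [[scale * math.cos(a), scale * math.sin(a)] for a in angles]  # 3 rows, 2 columns


def analysis(W, u):
    """Canonical frame coefficients g = W u."""
    return [sum(row[j] * u[j] for j in range(len(u))) for row in W]


def synthesis(W, g):
    """Synthesis with the adjoint: u = W^T g."""
    return [sum(W[i][j] * g[i] for i in range(len(W))) for j in range(len(W[0]))]


u = [0.3, -1.2]                # hypothetical signal
g = analysis(W, u)             # three coefficients for a two-dimensional signal
u_rec = synthesis(W, g)

energy_u = sum(v * v for v in u)
energy_g = sum(v * v for v in g)

# W W^T is a 3 x 3 matrix of rank 2; its diagonal entries are 2/3, not 1.
WWt = [[sum(W[i][k] * W[j][k] for k in range(2)) for j in range(3)] for i in range(3)]

print(u_rec, energy_u, energy_g, WWt[0][0])
```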

2.3.2. Wavelet Tight Frame

A one-dimensional wavelet frame is constructed by a finite set of generators with shifts and dilations, , where is called the father wavelet and is called the wavelet. A refinable function is usually used to construct a tight wavelet frame, , which is also known as the scale function or mother wavelet. This satisfies the two-scale equation , where are periodic trigonometric polynomials, which satisfies .

The unitary extension principle (UEP) [

31] declares that

forms a tight frame when

Image processing uses two-dimensional information, so two-dimensional wavelet frames are needed. A two-dimensional wavelet is constructed as a tensor product of one-dimensional wavelets. S. Mallat and Meyer proposed multi-resolution analysis (MRA) theory [31,32,33], which studies the multi-resolution properties of wavelets from the perspective of function spaces, and provides a unified theory for constructing wavelet frames and a fast algorithm for the orthogonal wavelet transform. In this study, a two-level piecewise linear B-spline tight frame system with tensor product filters was adopted. The piecewise linear B-spline tight frame is the simplest system in this family; it employs the piecewise linear B-spline function as the refinable function $\phi$. The corresponding one-dimensional filters, from which the two-dimensional filters are obtained as tensor products $h_i^{T} h_j$, are:

$$ h_0 = \tfrac{1}{4}[1,\ 2,\ 1], \qquad h_1 = \tfrac{\sqrt{2}}{4}[1,\ 0,\ -1], \qquad h_2 = \tfrac{1}{4}[-1,\ 2,\ -1]. $$

MATLAB software R2016b was used for the calculations. We used the wavelet frame decomposition algorithm [25], whose construction varies with the boundary conditions. In this research, Neumann (symmetric) boundary conditions were adopted. Interested readers can refer to [27,31,34] for the principles of generating such matrices.
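The UEP conditions can be verified numerically for the piecewise linear B-spline system. The sketch below is for illustration only. It assumes the filter coefficients commonly quoted for this system in the framelet literature, h0 = [1, 2, 1]/4, h1 = √2[1, 0, −1]/4 and h2 = [−1, 2, −1]/4, with taps indexed −1, 0, 1; the function names are hypothetical. It checks on a frequency grid that the squared magnitudes of the filter responses sum to one and that the aliasing term vanishes.

```python
import cmath
import math

# Assumed one-dimensional filters of the piecewise linear B-spline framelet.
FILTERS = [
    [0.25, 0.5, 0.25],
    [math.sqrt(2) / 4, 0.0, -math.sqrt(2) / 4],
    [-0.25, 0.5, -0.25],
]
TAPS = (-1, 0, 1)


def freq_response(h, w):
    """Trigonometric polynomial sum_k h[k] * exp(-i k w)."""
    return sum(c * cmath.exp(-1j * k * w) for k, c in zip(TAPS, h))


def uep_residuals(filters, w):
    """Residuals of the two UEP conditions at frequency w (both should be zero)."""
    partition = sum(abs(freq_response(h, w)) ** 2 for h in filters) - 1.0
    alias = sum(freq_response(h, w) * freq_response(h, w + math.pi).conjugate()
                for h in filters)
    return partition, alias


grid = [2 * math.pi * t / 64 for t in range(64)]
max_partition = max(abs(uep_residuals(FILTERS, w)[0]) for w in grid)
max_alias = max(abs(uep_residuals(FILTERS, w)[1]) for w in grid)

print(max_partition, max_alias)  # both are at the level of rounding error
```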

3. Proposed Model and Numerical Algorithm

3.1. Formulation of Minimization Model

Our work is closely related to [17], where E. J. Candès discussed the possibility of reconstructing the target from incomplete sampling, and

measurements (the size of ground truth image is

) were suggested in order to uniquely recover real-valued images. Chang Huibin proposed a TV regularization model [19] that can recover images from noisy measurements. In this research, a model that combines TV and wavelet-based regularization is proposed to recover underlying images from $3n_1n_2$ measurements with noise. The measurements were obtained by structured light illumination, and the image values are subject to the box constraint

. The model is introduced as follows:

where

denotes the Fourier transform. Let

The data

R(

) are obtained using three-light-field illumination

where

The research in [19,35] showed that the least-squares method produces a unique result for the PR problem with these three sets of data. The model is referred to as the least-squares minimization problem with a box constraint (LSB) model [35].

This study focused on the sparse prior of objects; we incorporated TV and wavelet transform regularization into (17) to guarantee an exact solution. The analysis-based sparsity approach under wavelet tight frame decomposition was selected for this study. The proposed TV and framelet-based minimization problem of the least-square type with a box constraint (TFLSB) model is presented as follows:

where anisotropic TV regularization is adopted for the term TV(u),

is the wavelet decomposition of

, and

denotes the L-1 norm.

3.2. Uniqueness Analysis

Hayes [36] and Sanz [37] proved that doubling the number of measurements uniquely determines the solution of PR when the underlying signal is nonnegative and finitely supported. The results were further extended with random oversampling [38,39,40], where random illumination guarantees absolute uniqueness and resolves all types of ambiguity. We found that when $s_1 = s_2 = N + 0.5$ (where N is a positive integer), with $3n_1n_2$ measurements and additional constraints, such as the underlying object $u$ being real and non-negative, the algorithm can produce a unique solution.

Theorem 1. Assume that and the DFT of u and Ds u are nonvanishing, when s1 and s2 are prime with m and n, and s1 = s2 = N + 0.5 (N is a positive integer). Then, u can be recovered with 3 mn measurements in (15).

Proof. This theorem applies to one-dimensional and two-dimensional cases. The complete proof is shown in

Appendix A. □

3.3. Solution Existence Analysis

Theorem 2. Let Ω denote a bounded Lipschitz regular domain and let the data b = (b0, b1, b2) be non-negative; then, the TFLSB model (19) has at least one minimizer u* ∈ BV(Ω).

Proof. It should be noted that . A minimizing sequence {uk}0 ≤ k ≤ ∞ satisfies (s.t.) . It is known that . The set Ω is bounded in a Lipschitz regular domain, C is a positive constant and exists as the upper limit, and s.t. . As Rellich’s compactness theorem state that there is and a subsequence , which meet in the norm of when . Using the continuity of the fidelity term of and lower semi-continuity of TV and wavelet regularization, one obtains . Then, u* is the one solution of . □

3.4. Numerical Model

Several numerical methods are available for constrained optimization problems. At present, Projected Gradient Descent (PGD) and the Alternating Direction Method of Multipliers (ADMM) are widely used. ADMM was first proposed by Glowinski and Gabay, and was further developed by Boyd in 2011, who demonstrated that ADMM is applicable to large-scale distributed optimization problems [41]. ADMM is effective in solving distributed convex optimization problems, especially statistical learning problems [42,43]. Through a decomposition–coordination process, ADMM splits a large, complicated global problem into several smaller local sub-problems that are easier to solve, and the coordination of the sub-problems can drive the iterates to the global optimal solution. The Projected Gradient Descent method has the following disadvantages: (1) it may converge to a local optimum; (2) the gradient vanishes at a saddle point, which is not an optimal solution, so the iteration can stall there; (3) it is time-consuming because of its computational cost, especially for large-scale data. Thus, ADMM was selected to solve the constrained optimization problem in this paper.
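The decomposition–coordination idea can be sketched on a problem far simpler than the TFLSB model. The toy example below is an assumption made purely for illustration: it applies ADMM in scaled form to the problem of minimizing ½‖x − b‖² + λ‖z‖₁ subject to x = z, whose exact solution is the soft-thresholding of b. The function names, the penalty parameter `rho` and the iteration count are hypothetical choices. Each iteration solves a quadratic sub-problem in x, a shrinkage sub-problem in z, and then updates the dual variable.

```python
import math


def soft_threshold(v, t):
    """Proximal operator of t * |.|: shrink v toward zero by t."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def admm_l1_denoise(b, lam, rho=1.0, iters=200):
    """Scaled-form ADMM for min 0.5*||x - b||^2 + lam*||z||_1 subject to x = z."""
    n = len(b)
    x = [0.0] * n
    z = [0.0] * n
    y = [0.0] * n  # scaled dual variable
    for _ in range(iters):
        # x-subproblem: quadratic, solved in closed form.
        x = [(b[i] + rho * (z[i] - y[i])) / (1.0 + rho) for i in range(n)]
        # z-subproblem: soft-thresholding (shrinkage).
        z = [soft_threshold(x[i] + y[i], lam / rho) for i in range(n)]
        # Dual update: accumulate the constraint violation x - z.
        y = [y[i] + x[i] - z[i] for i in range(n)]
    return z


b = [3.0, -0.5, 1.2]           # hypothetical noisy data
solution = admm_l1_denoise(b, lam=1.0)
expected = [soft_threshold(v, 1.0) for v in b]

print(solution)
print(expected)
```

In this convex toy problem the iterates reach the known minimizer; for the nonconvex phase retrieval model no such closed-form reference is available, so this sketch only illustrates the structure of the iteration.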

The above minimum problem model can be described by the formula:

where

The augmented Lagrangian equation of

reads

where

,

is the position penalty term of the image, and

are positive weight parameters. The ADMM framework for the above saddle-point problem minimizes

with regard to

alternately and then updates the dual variables

,

,

,

and

. The algorithm is summarized in Algorithm 1.

According to the ADMM algorithm, the solution is decomposed into the following steps.

| Algorithm 1 ADMM method for solving the TFLSB model (22) |

| Initialization: |

| |

| While the loop stop conditions are not satisfied, do |

| |

| |

| |

| |

| |

| Update dual variables |

| |

| k = k + 1 |

| end while |

| output the solution |

The subproblem to solve the saddle point of u is

By calculating the derivative, the solution is

4. Numerical Experiments

The initialization method for the proposed Algorithm 1 is as follows.

The measurements bi containing noise are used directly for the calculations without any processing. The initial phase is given randomly without any specific request.

The initialization for variables

and

was chosen to be

and

, where initial phase

is derived from the standard uniform distribution in the open interval (0, 1).

The quality of a reconstructed image is assessed in two ways: by its visual effect and by evaluation indices that quantify the difference between the reconstructed image and the original image. Visual assessment varies from one observer to another, so objective evaluation indicators are very important. The evaluation indices commonly used in the field of image reconstruction were adopted in this study to compare the proposed algorithm with other algorithms.

The peak signal-to-noise ratio (PSNR), structural similarity (SSIM) and signal-to-noise ratio (SNR) were used to measure the quality of the reconstruction, and the relative error was used to measure the convergence speed. PSNR is a comprehensive evaluation index of reconstructed image quality:

$$ \mathrm{PSNR} = 10\log_{10}\frac{(2^{n}-1)^{2}}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{HV}\sum_{i=1}^{H}\sum_{j=1}^{V}\big(u_{i,j} - \tilde{u}_{i,j}\big)^{2}, $$

where MSE is the mean square error of the current calculated image $u$ compared to the ground truth image $\tilde{u}$, H and V represent the number of rows and columns, respectively, and n is the number of bits of storage per pixel.

SSIM (structural similarity) is a comprehensive evaluation of the image restoration quality in terms of brightness, contrast and image structure.

In general, let .

Signal-to-noise ratio (SNR) is calculated as

Relative-error is defined as follows:

In all the above formulas, u represents the current reconstructed image and represents the ground truth image.
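For readers who wish to reproduce the scalar indices, the following Python sketch computes MSE, PSNR, SNR and relative error for images stored as nested lists. The definitions are the common textbook ones and are stated here as assumptions: PSNR uses the peak value 2ⁿ − 1, SNR is the ratio of ground-truth energy to error energy in decibels, and the relative error is the ratio of the error norm to the ground-truth norm. SSIM is omitted because it requires local window statistics. The function names and the 2 × 2 test images are hypothetical.

```python
import math


def _pairs(u, ref):
    """Iterate over corresponding pixels of two equally sized images."""
    for row_u, row_r in zip(u, ref):
        for a, b in zip(row_u, row_r):
            yield a, b


def mse(u, ref):
    count = sum(len(row) for row in ref)
    return sum((a - b) ** 2 for a, b in _pairs(u, ref)) / count


def psnr(u, ref, bits=8):
    """PSNR in dB with peak value 2**bits - 1; infinite for identical images."""
    err = mse(u, ref)
    if err == 0.0:
        return math.inf
    return 10.0 * math.log10((2 ** bits - 1) ** 2 / err)


def snr_db(u, ref):
    """Assumed definition: ground-truth energy over error energy, in dB."""
    signal = sum(b * b for _, b in _pairs(u, ref))
    noise = sum((a - b) ** 2 for a, b in _pairs(u, ref))
    return math.inf if noise == 0.0 else 10.0 * math.log10(signal / noise)


def relative_error(u, ref):
    """Assumed definition: ||u - ref|| / ||ref||."""
    signal = sum(b * b for _, b in _pairs(u, ref))
    noise = sum((a - b) ** 2 for a, b in _pairs(u, ref))
    return math.sqrt(noise / signal)


truth = [[0.0, 255.0], [255.0, 0.0]]   # hypothetical ground truth
recon = [[0.0, 250.0], [255.0, 5.0]]   # hypothetical reconstruction

print(mse(recon, truth), psnr(recon, truth), snr_db(recon, truth),
      relative_error(recon, truth))
```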

4.1. Numerical Results

We compared the performance of the proposed TFLSB model with three other related phase retrieval algorithms: the error reduction (ER) algorithm [3], the TVB method [18] and the Wirtinger flow (WF) method [9]. The data were contaminated by the Gaussian noise

, where

is magnitudes of the original image,

represents the noisy weight,

represents the white Gaussian noise, and

is the measurement of the real object’s Fourier transform magnitude.

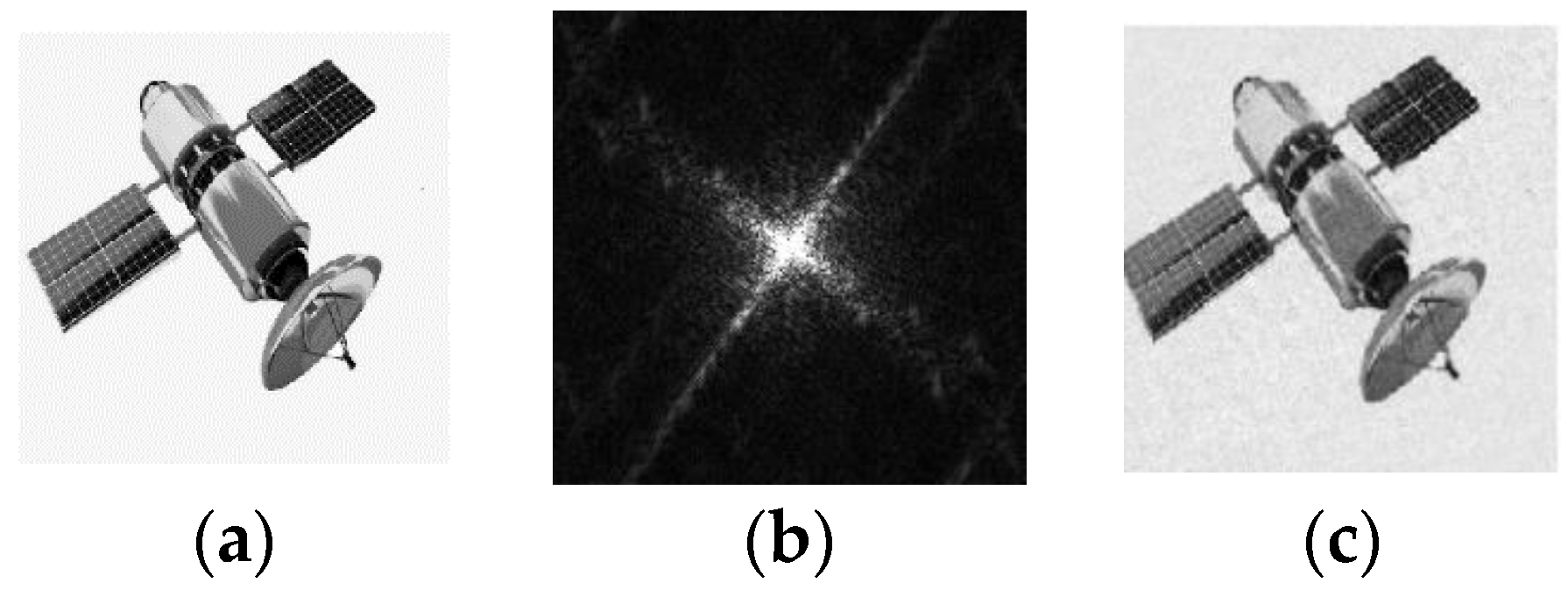

The test simulation images are available on the Internet (no copyright restrictions). Our research focused on the phase retrieval problem from Fourier transform magnitudes. Its application field covers remote target imaging. Therefore, the selected images have obvious geometric features and simple textures, with a size of 256 × 256.

To study the robustness of the algorithms to noise, 60 dB Gaussian noise was added to the measured values. The noise level was set according to the SNR formula and the rand function.
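One way to set a noise level from an SNR figure is sketched below. This is an assumption about the procedure, not a transcription of it: the 60 dB value is interpreted as the target SNR of the noisy magnitudes, white Gaussian noise is drawn with Python's `random` module, and the noise is rescaled so that the target SNR is met exactly. The function names, the seed and the synthetic magnitude vector are hypothetical.

```python
import math
import random


def add_gaussian_noise(b, snr_db, seed=0):
    """Add white Gaussian noise to b, scaled so the result has the target SNR (dB)."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in b]
    p_signal = sum(v * v for v in b)
    p_noise = sum(e * e for e in noise)
    # Choose the scale so that p_signal / (scale^2 * p_noise) = 10^(snr_db / 10).
    scale = math.sqrt(p_signal / (p_noise * 10.0 ** (snr_db / 10.0)))
    return [v + scale * e for v, e in zip(b, noise)]


def measured_snr_db(clean, noisy):
    """SNR in dB of a noisy vector with respect to its clean version."""
    p_signal = sum(v * v for v in clean)
    p_noise = sum((n - v) ** 2 for v, n in zip(clean, noisy))
    return 10.0 * math.log10(p_signal / p_noise)


# Hypothetical magnitude data standing in for the Fourier magnitudes.
clean = [1.0 + 10.0 * abs(math.sin(0.1 * k)) for k in range(256)]
noisy = add_gaussian_noise(clean, snr_db=60.0)

print(measured_snr_db(clean, noisy))  # close to 60 dB by construction
```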

The TVB method and the TFLSB algorithm used the same structured illumination pattern in this study; the relevant parameters were set as follows: the number of iterations was 1000,

,

,

and

. As

(

N is a positive integer) ensures that the underlying image has an optimal solution, $s_1$ and $s_2$ can be set to 0.5, 1.5 and 2.5. Here, in this experiment, we set

. Other value settings, such as 1.5, 2.5 and 3.5, could also produce visually good reconstructed images and can be adjusted for different images. The results are shown in Figure 1, Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6.

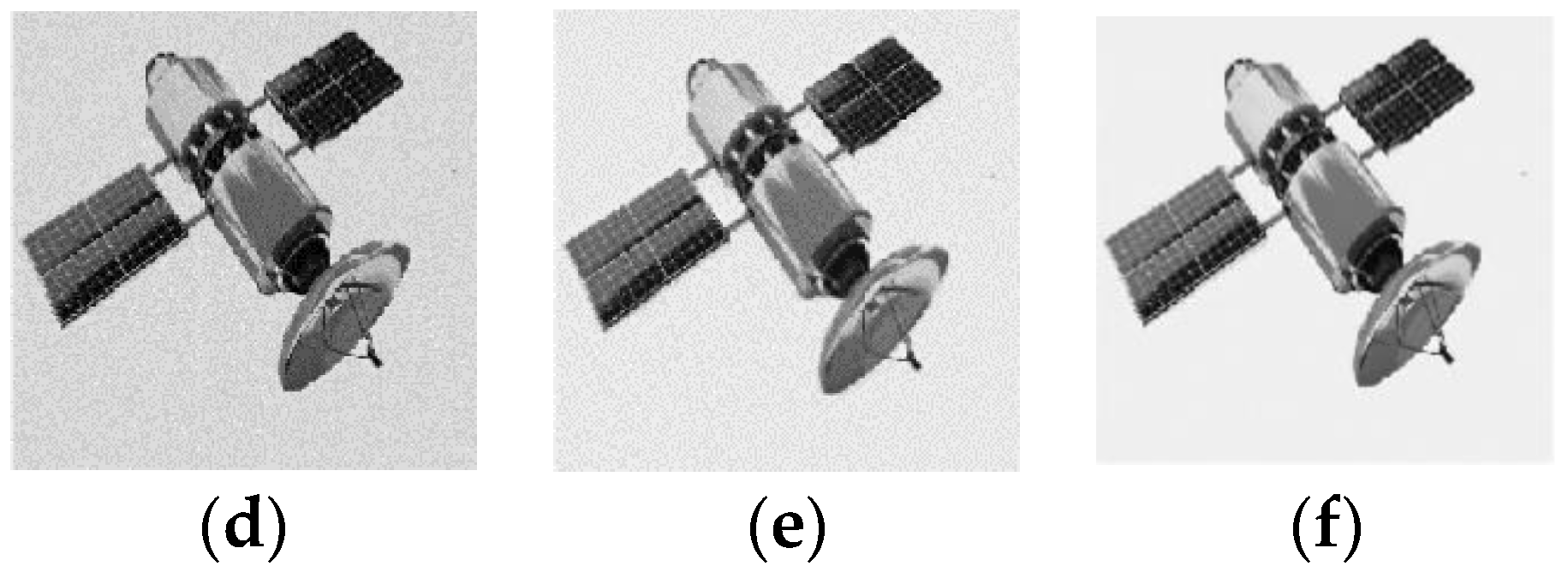

The numerical evaluation indices of a phase retrieval method reflect how closely the distribution characteristics of the calculated image approximate those of the real object. Visual quality includes sharpness, smoothness and similarity. The comparison of the reconstructed image quality for the three images in Figure 1, Figure 3 and Figure 5 is as follows. Firstly, the ER and TVB algorithms introduced obvious defects in the originally smooth background area. Secondly, the image reconstructed by the ER algorithm was blurry overall, while the image reconstructed by WF was sharper. This is because these algorithms do not strike a good balance between image smoothness and boundary sharpness. Although the TVB algorithm performed better because of its constraint, its staircase effect produced obvious defects that cannot be ignored. Overall, compared with the other algorithms, the proposed TFLSB model solved with the ADMM algorithm performed better, and the reconstructed images were more visually pleasing, with few noticeable artifacts.

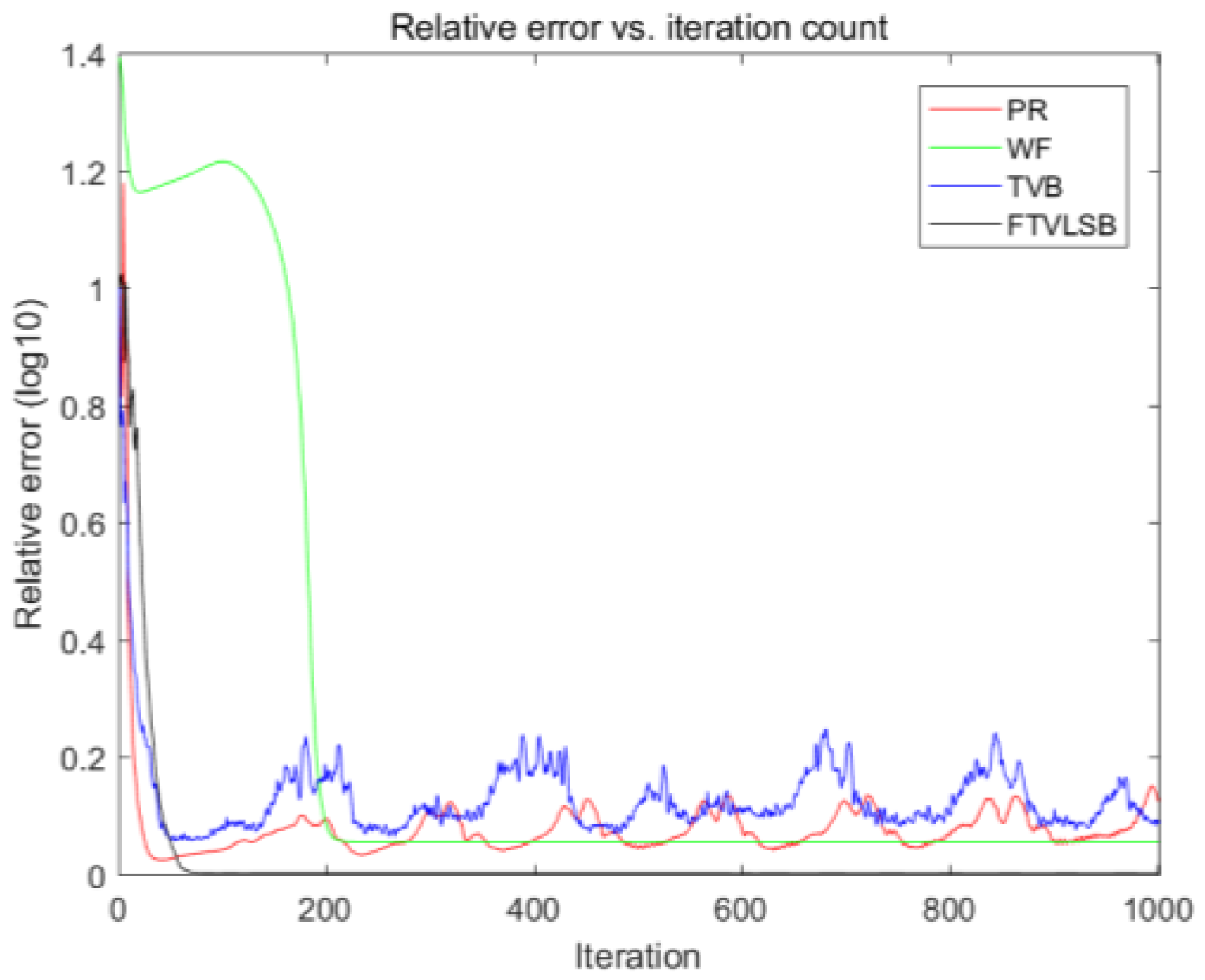

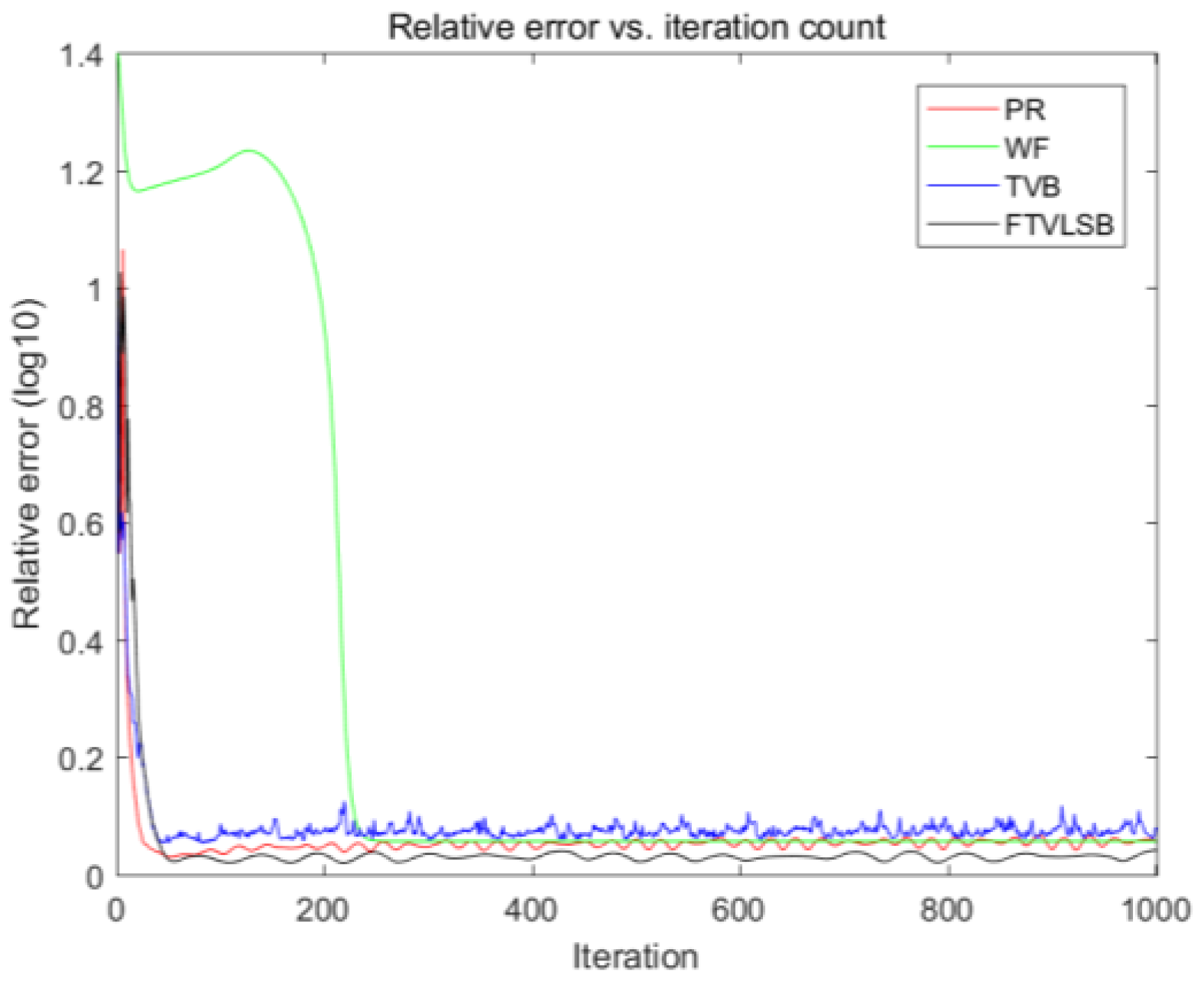

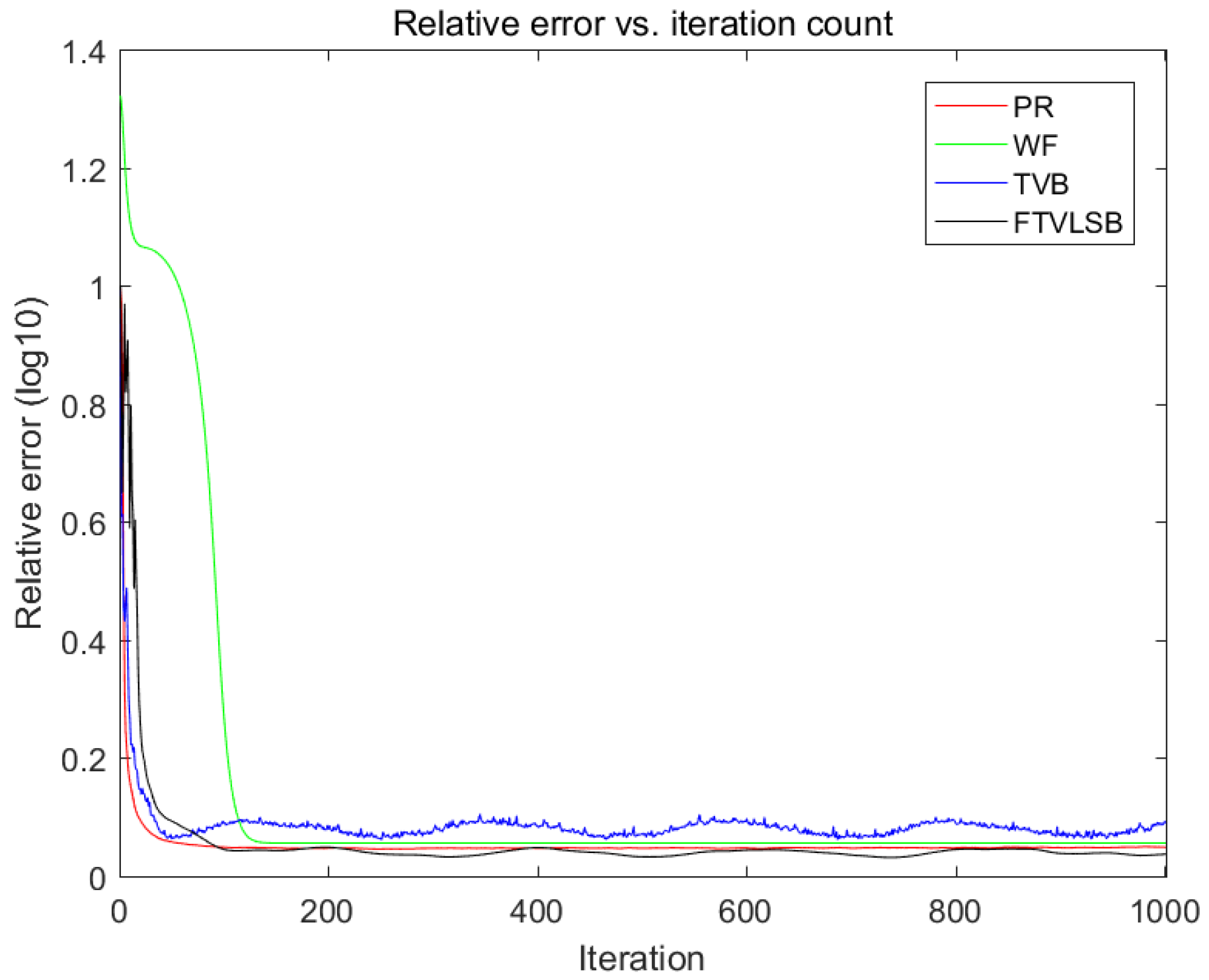

The comparison results in Figure 2, Figure 4 and Figure 6 show that the proposed algorithm was more stable, converged to the optimal value and always had the lowest relative error when dealing with images of different complexity.

Table 1, Table 2 and Table 3 show the image restoration quality in terms of the numerical evaluation indicators PSNR, SSIM and SNR, together with the processing time. With 1000 iterations, our algorithm required more time because of its computational complexity. However, given the speed of iterative convergence, the solving time could be shortened by reducing the number of iterations. The PSNR, SSIM and SNR indices directly reflect the pixel correspondence between the reconstructed image and the original image. According to the results for the three reconstructed images, compared with the ER, WF and TVB algorithms, the TFLSB model proposed in this paper improved the PSNR index by 111.59%, 108.87% and 57.14%, respectively, the SSIM index by 174.49%, 194.74% and 84.37%, respectively, and the SNR index by 674.89%, 517.72% and 241.47%, respectively. The comparison results in Table 1, Table 2 and Table 3 directly show that our proposed TFLSB model is more robust to noise and more effective in reconstructing images than the other methods.

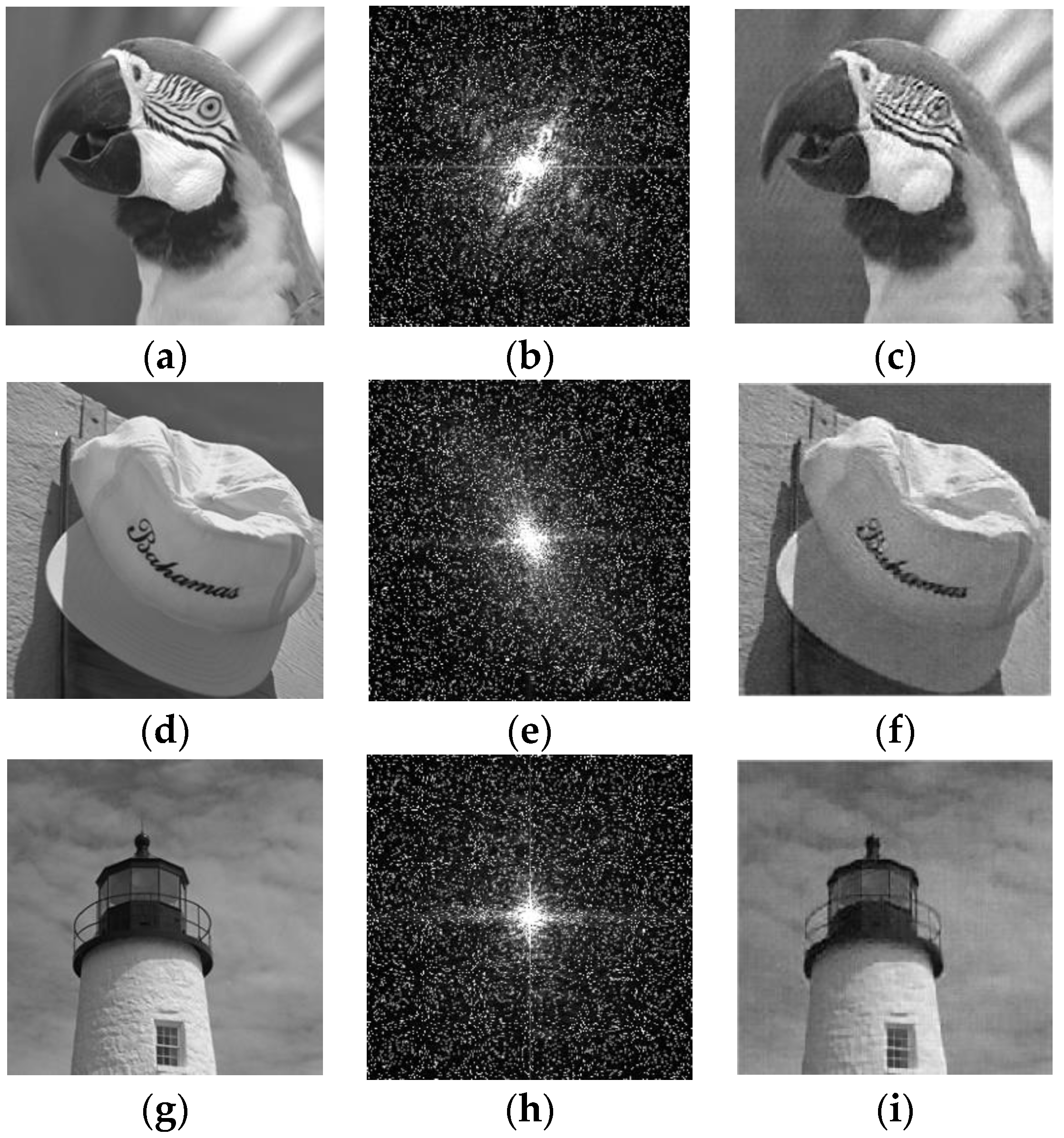

4.2. Sensitivity with Complex Noise

Image acquisition is affected not only by Gaussian noise but also by electromagnetic interference in the environment and internal sensor errors, which introduce salt–pepper noise. Salt–pepper noise, also known as impulse noise, appears in the image as discretely distributed pure white or black pixels. The image reconstruction ability of the TFLSB model was studied for images in which Gaussian noise and salt–pepper noise coexist. Given the good performance of the proposed algorithm in the previous task, in this experiment we challenged it with images with more complex textures to study its robustness in the presence of complex noise. The images are public images released by the Kodak Company. For consistency within this paper, the images used below are crops of the original Kodak images, with a size of 256 × 256. The images are named “Bird”, “Hat” and “Tower”.

The data were contaminated with Gaussian noise and salt–pepper noise, i.e., , where is the magnitudes of the spatial spectrum of the original image, represents the noisy weight, represents the white Gaussian noise, p denotes the salt–pepper noise, and is the measurement of the Fourier transform magnitude of the real object. The salt–pepper noise was generated by a random function and threshold setting. First, a random matrix was generated, whose values were derived from the standard uniform distribution in the open interval (0, 1). Then, using the threshold setting, the elements of a random matrix were converted to integers 0 or 1.

Thus, the complex noise is described using the following formula:

In this experiment,

= 1 and the threshold setting of salt–pepper noise is 0.9. When the value generated by the random function is greater than 0.9, white noise is obtained. The salt–pepper noise setting means that 10% of the measurements were corrupted, which is more serious than a real experiment. The results are shown in the following

Figure 7.
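The thresholding step that produces the salt–pepper pattern can be sketched as follows. This Python fragment is illustrative and rests on assumptions: Python's `random.random` draws from the half-open interval [0, 1) rather than the open interval, the seed and the 64 × 64 size are arbitrary, and the function name is hypothetical. How the resulting 0/1 pattern is combined with the Gaussian term and the noise weight is not reproduced here.

```python
import random


def salt_pepper_mask(rows, cols, threshold=0.9, seed=0):
    """Return a 0/1 matrix: 1 where a uniform random draw exceeds the threshold."""
    rng = random.Random(seed)
    return [[1 if rng.random() > threshold else 0 for _ in range(cols)]
            for _ in range(rows)]


mask = salt_pepper_mask(64, 64, threshold=0.9)

# With a threshold of 0.9, roughly 10% of the entries are flagged as corrupted.
corrupted_fraction = sum(sum(row) for row in mask) / (64 * 64)
print(corrupted_fraction)
```

Raising the threshold lowers the fraction of corrupted measurements, which gives a simple knob for testing robustness at different corruption levels.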

By comparing the reconstructed images with the original images, we can observe that the images reconstructed under complex noise remain visually clear. The numerical values in Table 4 show that the PSNR values of the restored images varied from 23.606 to 29.4759, the SSIM values varied from 0.355219 to 0.443401, and the SNR values varied from 24.715 dB to 27.188 dB. These data suggest that the proposed sparse prior regularization model TFLSB is robust even with complex noise, and it is also effective in processing images with complex textures. In the future, its application range could be determined by studying the relationship between noise standards, parameter settings and image complexity.

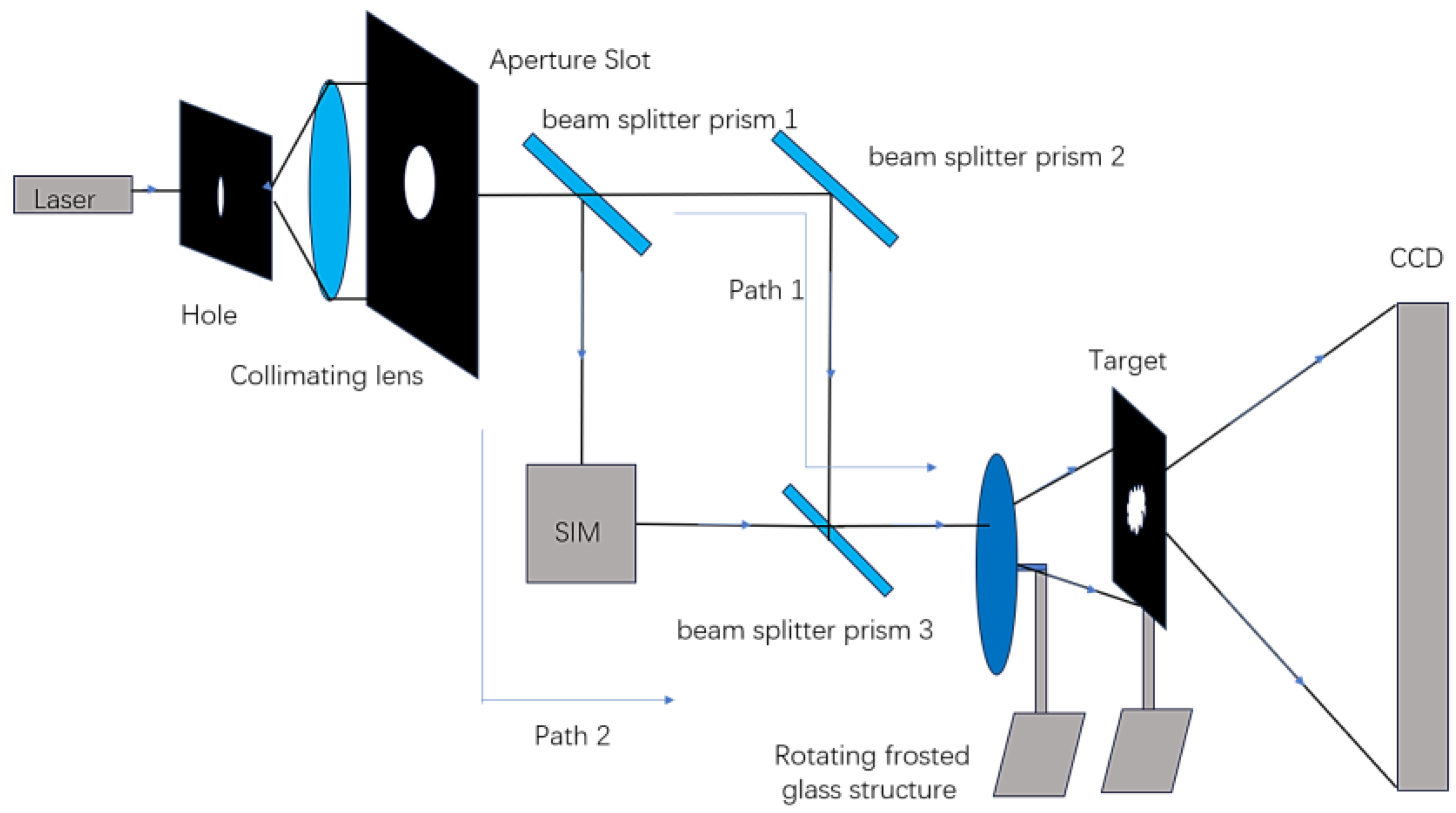

5. Experimental Results

Experimental equipment was set up to simulate reconstruction of the underlying image using Fourier transform magnitudes. The process consisted of two parts. The first part was data collection, during which the spatial spectrum modulus of the target was obtained by correlation computing. The second part was the use of the phase recovery algorithm to calculate the reconstructed image.

The schematic diagram of the experiment is shown in Figure 8. The working wavelength of the laser selected for the experiment was 532 nm. The laser became a pseudothermal light source after passing through the rotating glass. In order to reduce the extra stray light caused by reflection, this experiment adopted a transmission target, which was made by hollowing out a target pattern on a metal plate, with an image size of 3 × 3 mm. The structure is shown in Figure 9.

The pixel size of the CCD camera was 6.5 μm, and the number of detection units was 2048 × 2048. In the experiment, the CCD detection frequency was 20 Hz, and the exposure time was 30 ms. The glass rotation speed was 0.3°/s. A SIM structured light modulator was set up using the internal DMD array to control the structured light. The light field distribution of structured light mode 1 is . When , it is a two-dimensional sinusoidal distribution of phases in the box-constrained interval (0, π). The light field distribution of structured light mode 2 is , and compared to mode 1, it has a phase shift.

In order to reduce the influence of the environment on the phase retrieval experiment, data acquisition was divided into two steps. First, with the light source switched off, the detector recorded the measurement values of the experimental environment and the inherent defects of the measurement system, which represent the background noise. Then, with the light source illuminating the target, the measurement values were recorded again. Taking the difference between these two recordings offsets part of the noise.

The TFLSB algorithm proposed in this paper was used for phase retrieval, and the results are shown in Figure 10.

Owing to the limited experimental conditions, the acquisition frequency of the CCD camera was limited, as was the target spatial spectrum information that could be obtained with the pseudothermal light source; the obtained target information was therefore very scarce, which increased the difficulty of phase recovery. When structured light illuminates an area, the two optical paths should be at the same frequency with no phase delay, but in practical applications it is difficult to ensure that the optical path difference between the two optical paths is 0. This error reduces the reconstructed image quality. Visually, the resolution of the reconstructed image is lower than in the previous numerical simulation. The evaluation indices PSNR, SSIM and SNR are not ideal, and additional research on phase retrieval should be carried out in the future. Nevertheless, the numerical results in Table 5 show that our proposed TFLSB model could reconstruct images in practice.

6. Conclusions

In this paper, we introduced an innovative TV and framelet-based regularized minimization model for phase retrieval with a box constraint (TFLSB), which recovers images from magnitudes degraded by Gaussian and salt–pepper noise. The proposed model incorporates anisotropic TV and analysis-based wavelet regularization, which enforce sparse priors in the gradient domain and the spatial structure domain simultaneously. Through heuristic analysis, we identified the key parameters s1 = s2 = N + 0.5 (N being a positive integer) that contribute to stable phase recovery with 3n1n2 measurements. This structured light can be easily obtained by using a structured light modulator. The TFLSB model effectively reconstructs high-quality latent images from corrupted measurement data obtained with a structured lighting model. Comparative evaluations against the ER, WF and TVB algorithms demonstrate that the images reconstructed by the TFLSB model from degraded measurements exhibit superior image quality, with clearer edges and fewer artifacts. The evaluation indices PSNR, SSIM and SNR further confirm the significant enhancement in image quality achieved by the TFLSB model. Additionally, our study investigated the robustness of the TFLSB model against Gaussian and salt–pepper noise, revealing its resilience against complex noise. This provides a direction for the practical implementation of phase retrieval from Fourier transform magnitudes.

Furthermore, in practice, the proposed method is able to reconstruct the underlying image from Fourier transform magnitudes, helping to solve the phase retrieval problem in real environments. Under the existing experimental conditions, the images reconstructed with the TFLSB algorithm are not clear enough, but an optimal solution exists, which supports the stability and feasibility of the proposed algorithm.

It is important to note that phase retrieval remains a challenging deconvolution problem, and there are several open questions that require further exploration. The proposed algorithm was the most time-consuming of the algorithms compared, which is not conducive to real-time imaging applications. One potential improvement is the use of a more powerful industrial computer to reduce the computation time. Future work will focus on developing faster algorithms with a second-order convergence rate to reduce processing time. Additionally, we aim to incorporate more priors to enhance the quality of the reconstructed solutions for a broader range of image categories. While the proposed phase retrieval model is currently applicable to oversampling scenarios (structured illumination patterns), our future research will explore additional patterns to enable the exact recovery of latent images in various settings.