Inference-Optimized High-Performance Photoelectric Target Detection Based on GPU Framework

Abstract

1. Introduction

- To reduce the number of model parameters while preserving the accuracy of the neural network, we applied knowledge distillation [21]: a trained high-precision CNN model guided the learning of lightweight networks, yielding a lightweight network model with high accuracy.

- To reduce the amount of computation during model inference, we examined the inference acceleration principle of the TensorRT [22,23,24] engine in light of the characteristics of the GPU computing framework and built a computational graph from the existing network. Experiments verified the effectiveness and practicality of TensorRT inference acceleration.

- To address the time wasted on data replication and overlapping calculations during model inference, we optimized TensorRT to exploit CUDA (Compute Unified Device Architecture) control based on the kernel execution principle. The multi-stream [25] mode makes fuller use of the GPU, further shortening the inference time of deep learning models.
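The distillation step in the first contribution can be illustrated with a minimal sketch of a distillation loss in the spirit of [21]. This is not the authors' training code: the function names, the temperature of 4.0, and the weight `alpha` of 0.7 are assumptions chosen only for illustration, and the soft-target term is written as a KL divergence, which differs from the soft cross-entropy only by a term that does not depend on the student.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.7):
    """Weighted sum of a soft-target term and a hard-label term.

    temperature and alpha are illustrative values (assumptions),
    not settings reported in the paper.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions,
    # scaled by T^2 as suggested in [21].
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_term = (temperature ** 2) * kl
    # Ordinary cross-entropy against the ground-truth class at T = 1.
    hard_term = -math.log(softmax(student_logits)[label])
    return alpha * soft_term + (1.0 - alpha) * hard_term
```

With `alpha=1.0` the loss is zero whenever the student reproduces the teacher's logits exactly, which is a quick sanity check that the soft-target term only penalizes disagreement with the teacher.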

2. Materials

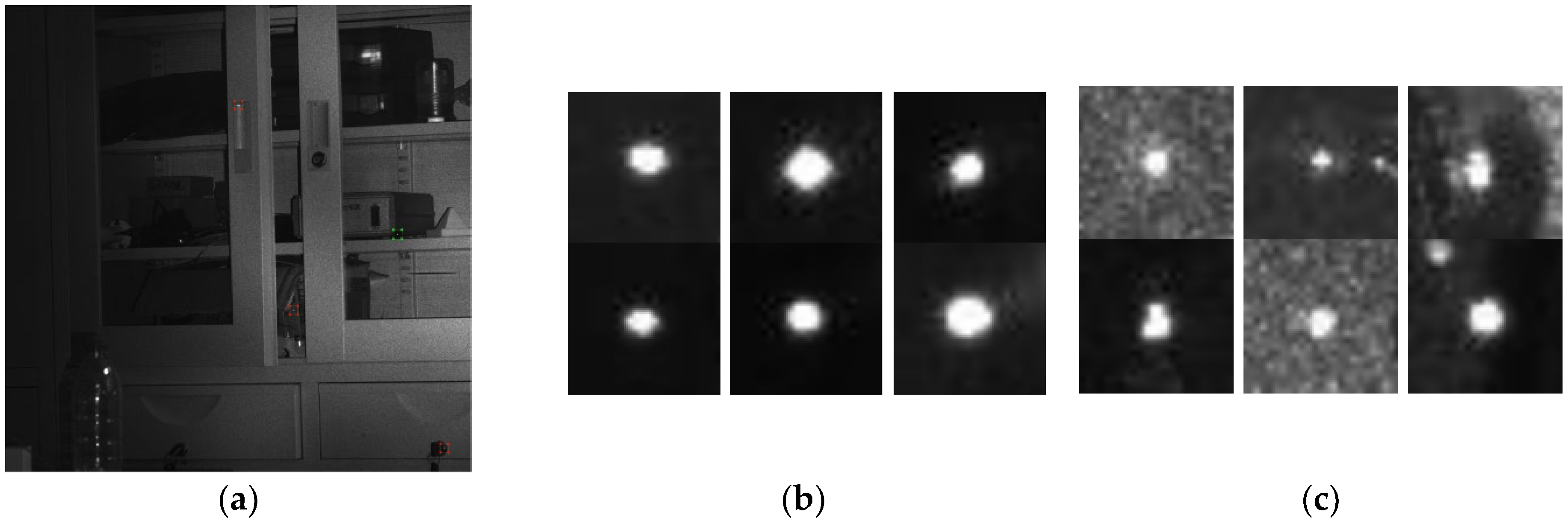

2.1. Dataset

2.2. Experimental Environment

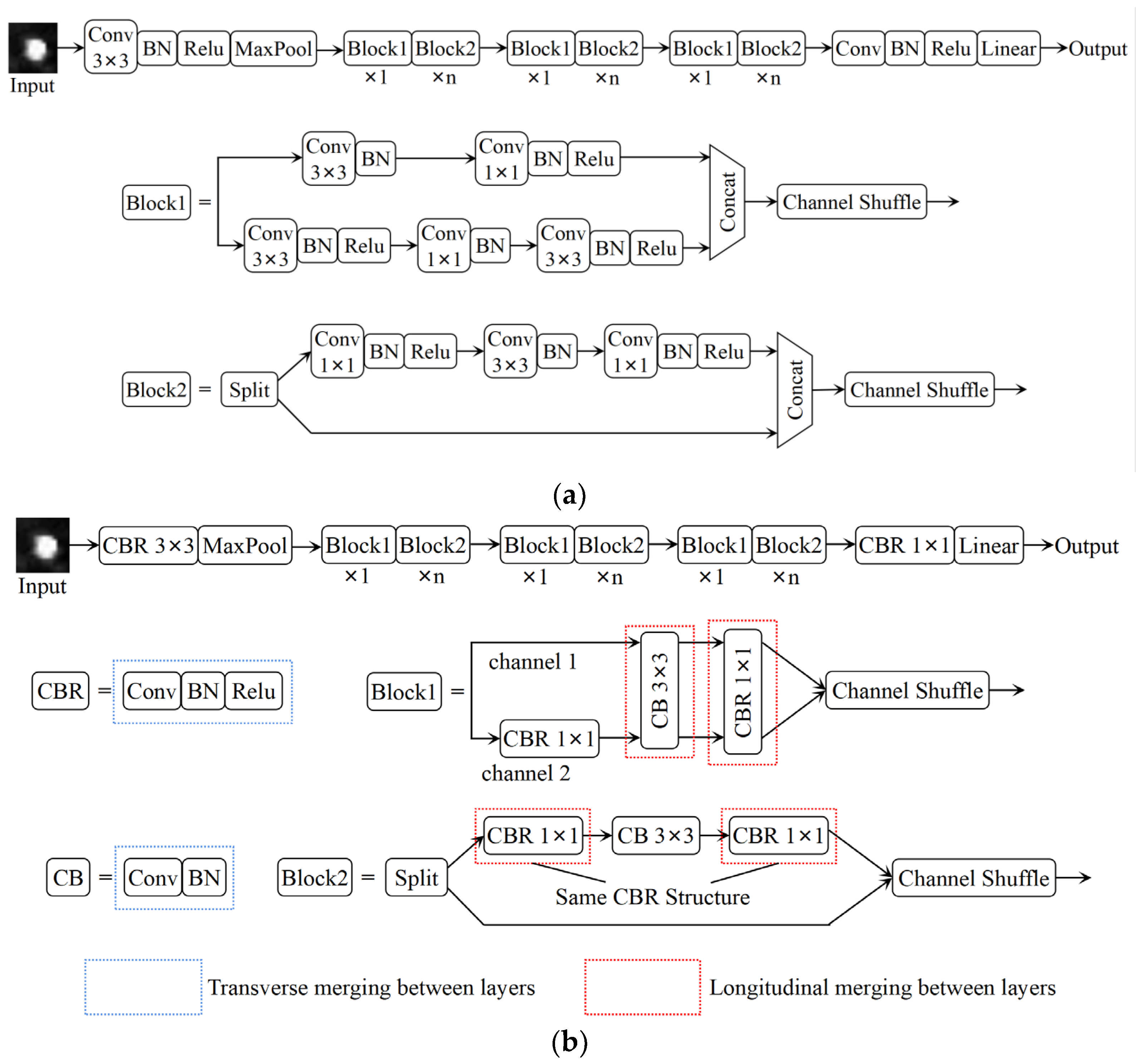

3. Methods

3.1. Knowledge Distillation

3.2. TensorRT Acceleration

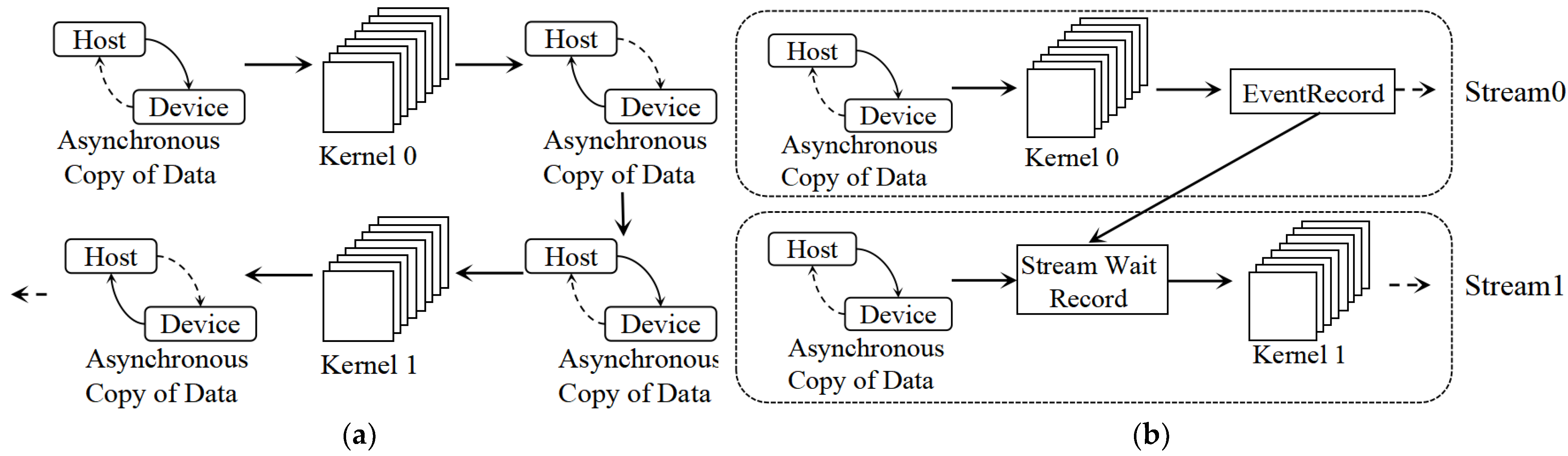

3.3. Multi-Stream Mode

4. Results

4.1. Analysis of Knowledge Distillation Results

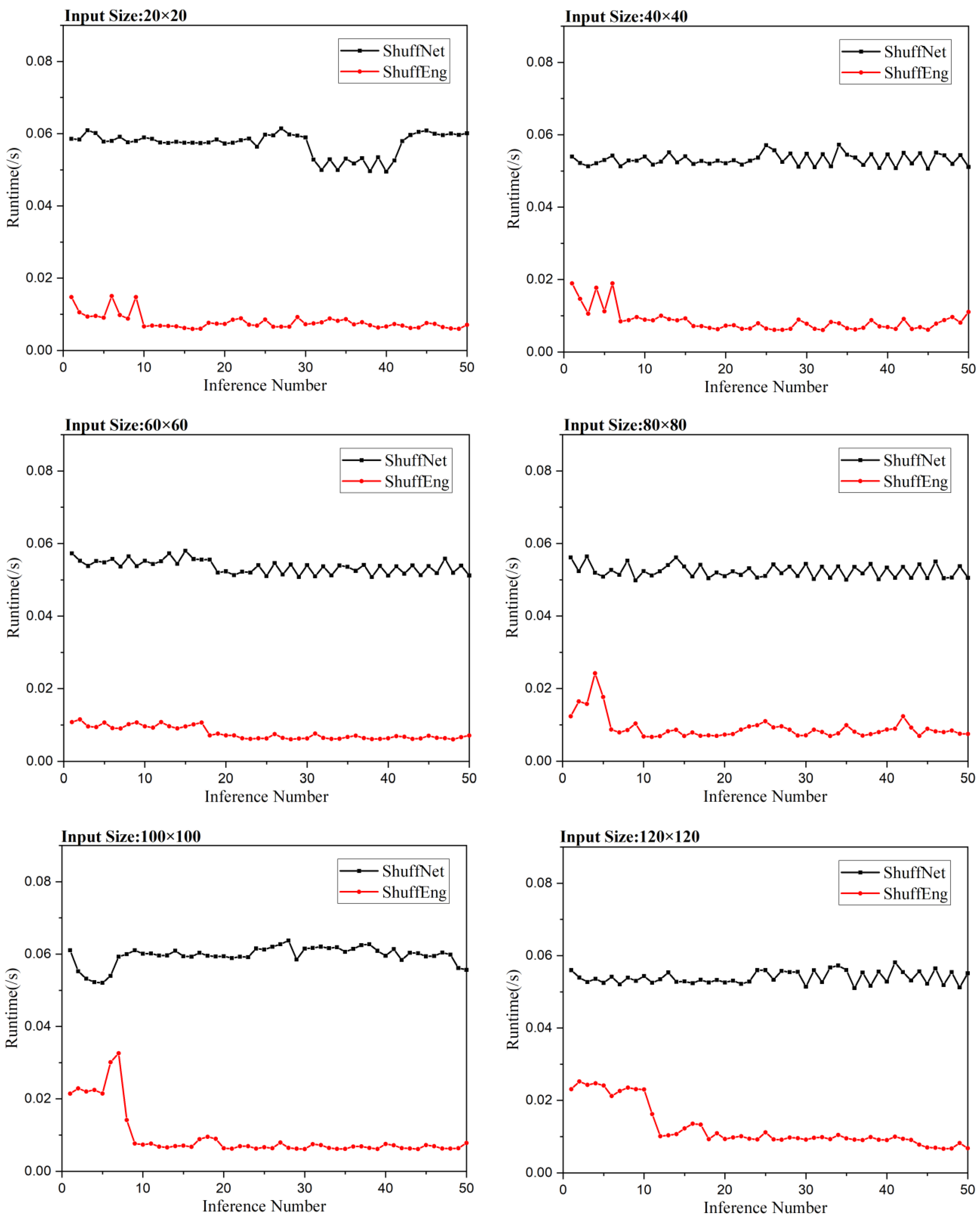

4.2. Analysis of MSIOT Results

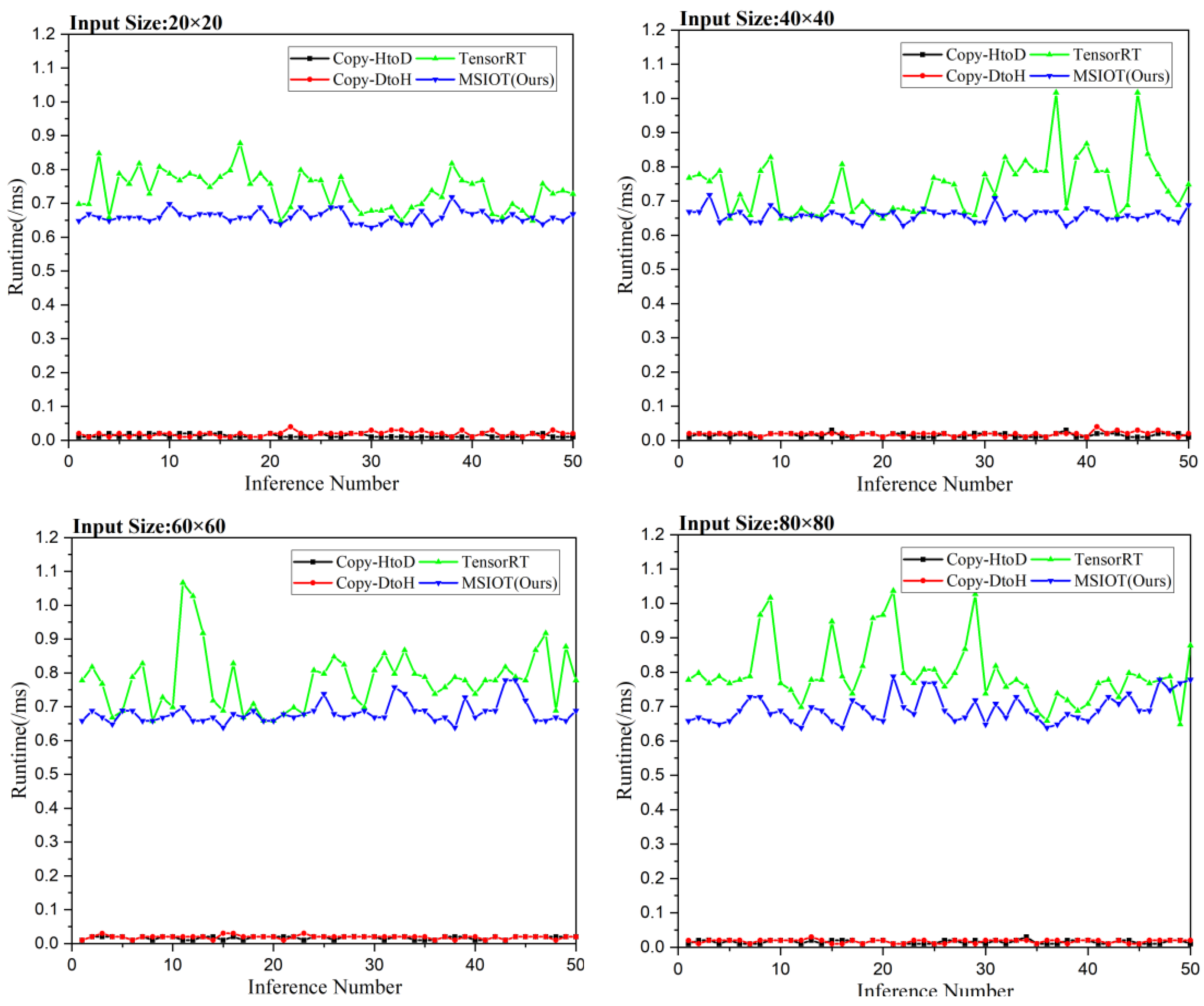

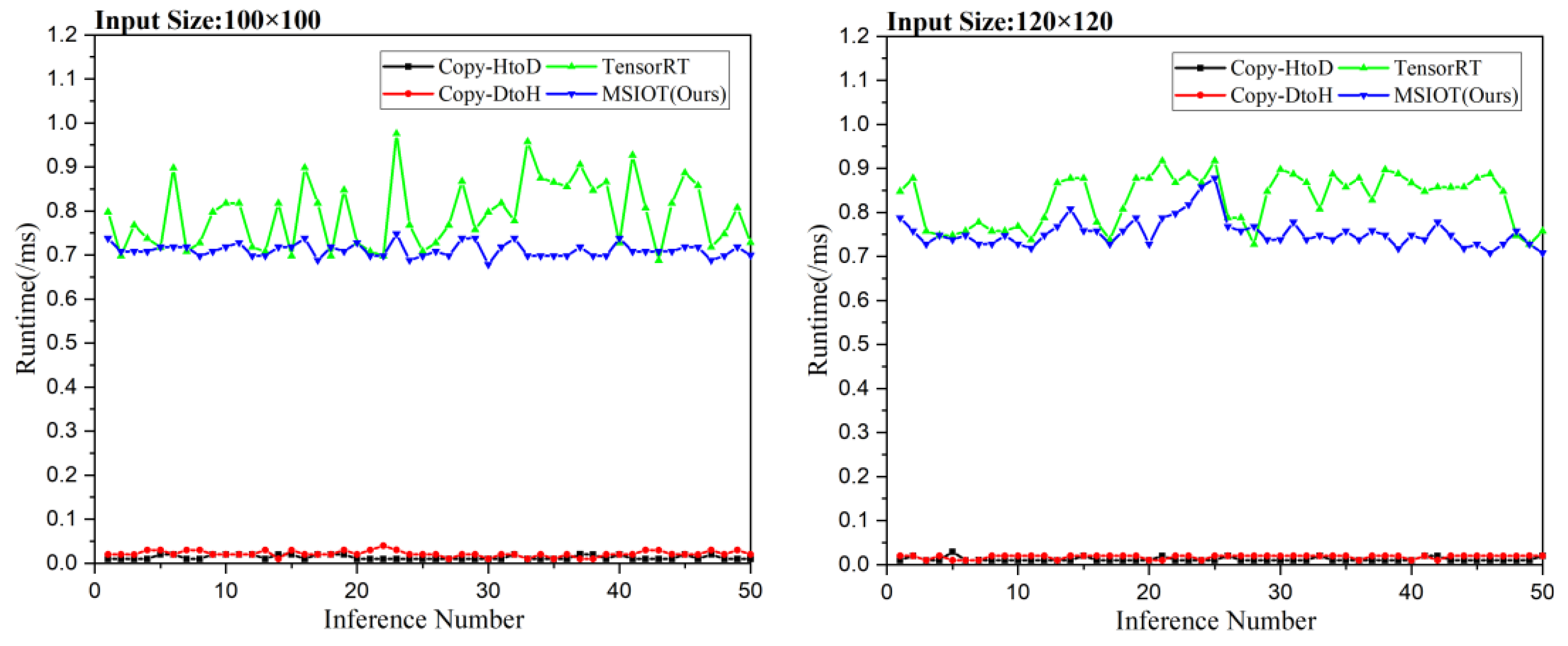

- Host-to-device (Copy-HtoD) and device-to-host (Copy-DtoH) copies cannot be avoided when using GPUs for inference. Figure 7 shows that each copy occupies a relatively small amount of time, but because the copies recur in every inference, their cumulative cost is non-negligible. Theoretically, over n inferences MSIOT saves n–1 Copy-HtoD and n–1 Copy-DtoH operations compared with TensorRT, so MSIOT copes better with the repeated inference tasks of real-time photoelectric target detection.

- MSIOT uses multiple threads within the same CUDA to perform calculations simultaneously and calls the CUDA cores more fully than TensorRT, which improves hardware utilization and stabilizes the inference speed in the photoelectric target detection task.
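The copy-count argument in the first point above can be made concrete with a small sketch. It assumes an idealized model in which the baseline performs one Copy-HtoD and one Copy-DtoH per inference while the multi-stream variant performs each copy once for the whole run; the function names and the per-copy timings passed to `estimated_time_saved` are hypothetical and are not measurements from the paper.

```python
def copy_operations(n_inferences, copies_once):
    """Return (HtoD copies, DtoH copies) over n inferences.

    copies_once=False: one copy in each direction per inference.
    copies_once=True: a single copy in each direction for the whole run
    (the idealized behaviour the text attributes to MSIOT).
    """
    if n_inferences < 1:
        raise ValueError("n_inferences must be at least 1")
    per_direction = 1 if copies_once else n_inferences
    return per_direction, per_direction

def copies_saved(n_inferences):
    """Copies avoided in each direction relative to per-inference copying."""
    base_h2d, base_d2h = copy_operations(n_inferences, copies_once=False)
    once_h2d, once_d2h = copy_operations(n_inferences, copies_once=True)
    return base_h2d - once_h2d, base_d2h - once_d2h

def estimated_time_saved(n_inferences, t_h2d, t_d2h):
    """Time saved given hypothetical per-copy durations (same unit in and out)."""
    saved_h2d, saved_d2h = copies_saved(n_inferences)
    return saved_h2d * t_h2d + saved_d2h * t_d2h

print(copies_saved(10))  # prints (9, 9)
```

Under this model the saving grows linearly with the number of inferences, which is why a copy that looks small in a single profile can still matter in a long-running detection loop.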

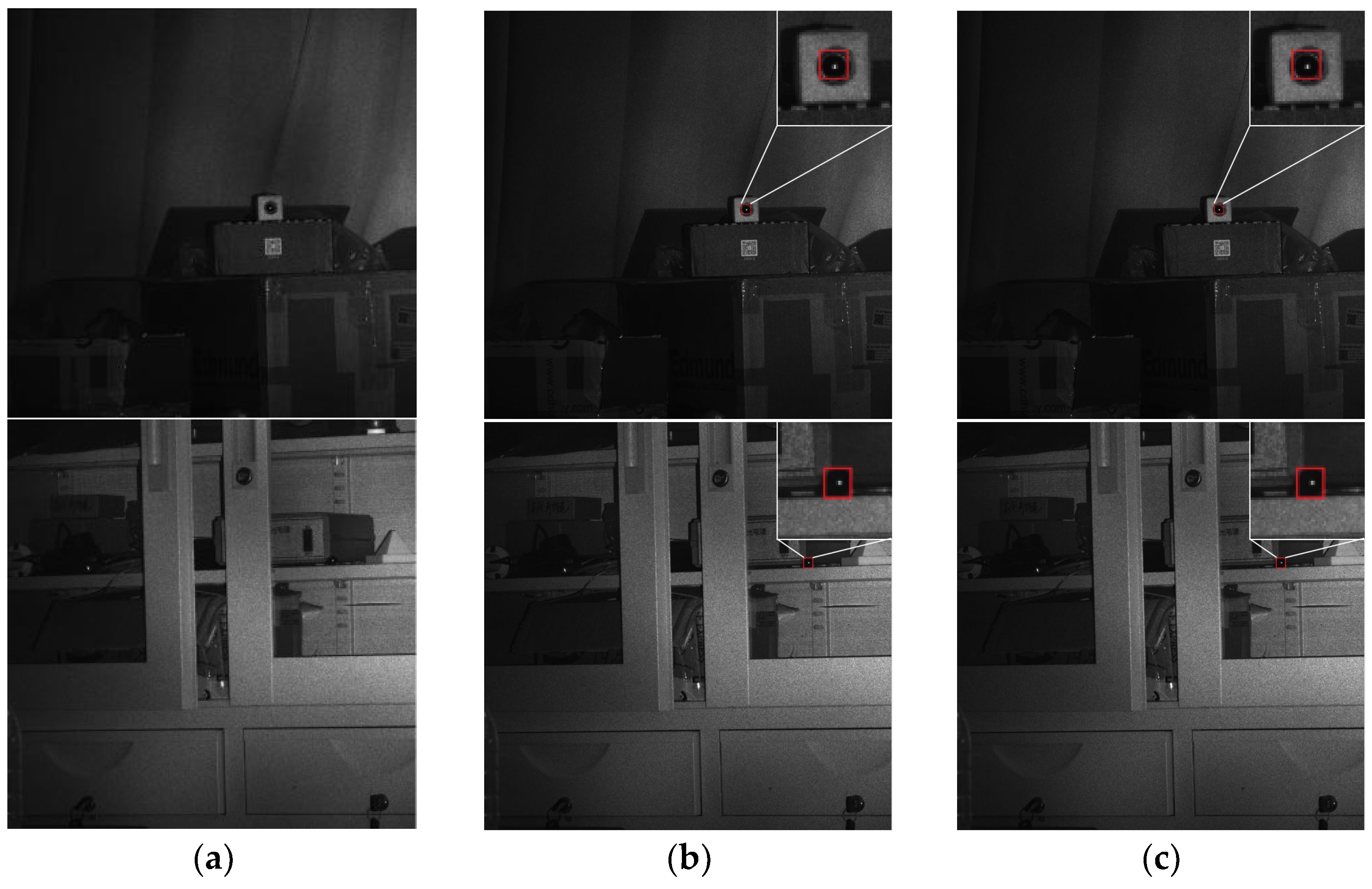

4.3. System Experimental Verification

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Huayan, S.; Laixian, Z.; Yanzhong, Z.; Yonghui, Z. Progress of Free-Space Optical Communication Technology Based on Modulating Retro-Reflector. Laser Optoelectron. Prog. 2013, 50, 040004.

- Laixian, Z.; Hua-yan, S.; Guihua, F.; Yanzhong, Z.; Yonghui, Z. Progress in free space optical communication technology based on cat-eye modulating retro-reflector. Chin. J. Opt. Appl. Opt. 2013, 6, 681–691.

- Mieremet, A.-L.; Schleijpen, R.-M.-A.; Pouchelle, P.-N. Modeling the detection of optical sights using retroreflection. Proc. SPIE 2008, 6950, 69500.

- Auclair, M.; Sheng, Y.; Fortin, J. Identification of Targeting Optical Systems by Multiwavelength Retroreflection. Opt. Eng. 2013, 52, 54301.

- Anna, G.; Goudail, F.; Dolfi, D. General state contrast imaging: An optimized polarimetric imaging modality insensitive to spatial intensity fluctuations. J. Opt. Soc. Am. A 2012, 29, 892–900.

- Sjöqvist, L.; Grönwall, C.; Henriksson, M.; Jonsson, P.; Steinvall, O. Atmospheric turbulence effects in single-photon counting time-of-flight range profiling. Technol. Opt. Countermeas. V. SPIE 2008, 7115, 118–129.

- Zhou, B.; Liu, B.; Wu, D. Research on echo energy of ‘cat-eye’ target based on laser’s character of polarization. In Proceedings of the 2011 International Conference on Electronics and Optoelectronics, Dalian, China, 29–31 July 2011; Volume 2, pp. V2-302–V2-305.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Neural Information Processing Systems Conference, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Li, H.; Xiong, P.; An, J.; Wang, L. Pyramid Attention Network for Semantic Segmentation. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

- Wang, X.; Jun, Z.; Wang, S. The Cat-Eye Effect Target Recognition Method Based on Visual Attention. Chin. J. Electron. 2019, 28, 1080–1086.

- Zhang, S.; Zhang, L.; Sun, H.; Guo, H. Photoelectric Target Detection Algorithm Based on NVIDIA Jeston Nano. Sensors 2022, 22, 7053.

- Ke, X. Research on Hidden Camera Detection and Recognization Method Based on Machine Vision; Huazhong University of Science and Technology: Wuhan, China, 2019.

- Liu, C.; Zhao, C.M.; Zhang, H.Y.; Zhang, Z.; Zhai, Y.; Zhang, Y. Design of an Active Laser Mini-Camera Detection System using CNN. IEEE Photonics J. 2019, 11, 1.

- Huang, J.H.; Zhang, H.Y.; Wang, L.; Zhang, Z.; Zhao, C. Improved YOLOv3 Model for miniature camera detection. Opt. Laser Technol. 2021, 142, 107133.

- Narayanan, D.; Santhanam, K.; Phanishayee, A.; Zaharia, M. Accelerating deep learning workloads through efficient multi-model execution. NeurIPS Workshop Syst. Mach. Learn. 2018, 20, 1.

- Tokui, S.; Okuta, R.; Akiba, T.; Niitani, Y.; Ogawa, T.; Saito, S.; Yamazaki Vincent, H. Chainer: A deep learning framework for accelerating the research cycle. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2002–2011.

- NVIDIA Tesla V100 GPU Architecture. Available online: https://images.nvidia.com/content/volta-architecture/pdf/volta-architecture-whitepaper.pdf (accessed on 14 June 2021).

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv.org. Available online: https://arxiv.org/abs/1503.02531 (accessed on 3 May 2021).

- NVIDIA A100 Tensor Core GPU Architecture. Available online: https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/nvidia-ampere-architecture-whitepaper.pdf (accessed on 24 June 2022).

- NVIDIA TensorRT. Available online: https://developer.nvidia.com/tensorrt (accessed on 21 October 2020).

- Lijun, Z.; Yu, L.; Lu, B.; Fei, L.; Yawei, W. Using TensorRT for deep learning and inference applications. J. Appl. Opt. 2020, 41, 337–341.

- Kwon, W.; Yu, G.I.; Jeong, E.; Chun, B.G. Nimble: Lightweight and parallel GPU task scheduling for deep learning. Adv. Neural Inf. Process. Syst. 2020, 33, 8343–8354.

- Chetlur, S.; Woolley, C.; Vandermersch, P.; Cohen, J.; Tran, J.; Catanzaro, B.; Shelhamer, E. cuDNN: Efficient primitives for deep learning. arXiv 2014, arXiv:1410.0759.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- ONNX: Open neural network exchange. Available online: https://github.com/onnx/onnx (accessed on 15 February 2021).

- Ningning, M.A.; Xiangyu, Z.; Haitao, Z.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the Computer Vision-ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 116–131.

- Iandola, F.N.; Song, H.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Yang, L.; Jiang, H.; Cai, R.; Wang, Y.; Song, S.; Huang, G.; Tian, Q. CondenseNet V2: Sparse feature reactivation for deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3569–3578.

- Bolon-Canedo, V.; Remeseiro, B. Feature selection in image analysis: A survey. Artif. Intell. Rev. 2020, 53, 2905–2931.

- Kabir, H.; Garg, N. Machine learning enabled orthogonal camera goniometry for accurate and robust contact angle measurements. Sci. Rep. 2023, 13, 1497.

| Network | Top-1 Accuracy | Parameters (M) | Computation (M) | Inference Time (s) |
|---|---|---|---|---|
| VGG16 | 99.18% | 134.27 | 1368.74 | - |
| AlexNet | 98.75% | 57.00 | 90.61 | 0.2152 |
| ResNet18 | 98.27% | 23.51 | 82.27 | 0.1343 |

| Network | Top-1 Accuracy | KD-VGG16 Top-1 | KD-AlexNet Top-1 | KD-ResNet18 Top-1 | Parameters (M) | Computation (M) | Inference Time (s) |
|---|---|---|---|---|---|---|---|
| Shuffv2_x0_5 | 97.31% | 96.47% | 96.08% | 97.72% | 0.34 | 2.95 | 0.0759 |
| Shuffv2_x1_0 | 97.87% | 97.22% | 97.74% | 98.09% | 1.26 | 11.62 | 0.0893 |
| Shuffv2_x1_5 | 98.23% | 97.37% | 97.89% | 98.18% | 2.48 | 24.07 | 0.0982 |
| Shuffv2_x2_0 | 98.39% | 97.93% | 98.14% | 98.21% | 5.35 | 47.62 | 0.1176 |
| SqueezeNet1_0 | 96.06% | 86.83% | 66.72% | 95.97% | 0.73 | 41.74 | 0.0734 |
| SqueezeNet1_1 | 90.04% | 93.47% | 76.79% | 92.29% | 0.72 | 16.05 | 0.0697 |
| GhostNet | 96.22% | 97.72% | 97.60% | 97.67% | 3.90 | 14.26 | 0.0928 |
| CondenseNetv2 | 93.87% | 96.94% | 95.02% | 97.23% | 7.26 | 169.0 | - |

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| ShuffNet | 94.2% | 93.16% | 95.4% | 94.27% |
| ShuffEng | 94.2% | 93.16% | 95.4% | 94.27% |
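The F1 column in the table above can be cross-checked from the precision and recall columns using the standard definition of F1 as their harmonic mean. The sketch below is only a consistency check on the reported figures; the function name is illustrative and no additional data is assumed.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same unit, here percent)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)

# Precision and recall as reported for both ShuffNet and ShuffEng.
f1 = f1_score(93.16, 95.4)
print(f"{f1:.2f}%")  # prints 94.27%
```

The recomputed value agrees with the reported 94.27% for both rows.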

| Input Size | 20 × 20 | 40 × 40 | 60 × 60 | 80 × 80 | 100 × 100 | 120 × 120 |
|---|---|---|---|---|---|---|
| Speedup Ratio | 7.2323 | 6.1883 | 6.9185 | 5.7292 | 6.2322 | 4.3423 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Zhang, L.; Guo, H.; Zheng, Y.; Ma, S.; Chen, Y. Inference-Optimized High-Performance Photoelectric Target Detection Based on GPU Framework. Photonics 2023, 10, 459. https://doi.org/10.3390/photonics10040459
