Using Non-Lipschitz Signum-Based Functions for Distributed Optimization and Machine Learning: Trade-Off Between Convergence Rate and Optimality Gap

Abstract

1. Introduction

1.1. Literature Review

1.2. Contributions

1.3. Paper Organization

2. Preliminaries

2.1. Notations

2.2. Algebraic Graph Theory

2.3. Background on Signum-Based Consensus

3. The Framework: Distributed Regression Problem

4. The Proposed Signum-Based Learning Dynamics

4.1. The Algorithm

| Algorithm 1. GT-based distributed ML algorithm |

| randomly initialized; |

| While termination criteria do NOT hold; |

| do |

| ; |

| ); |

| via dynamics (11)-(12); |

| ; |
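The structure of a gradient-tracking (GT) iteration such as Algorithm 1 can be sketched in a few lines. This is only a minimal illustration under stated assumptions and does not reproduce dynamics (11)-(12): the scalar quadratic local costs, the 4-node ring, the weight `w`, the step size `alpha`, and the helper names `sgn_pow` and `gradient_tracking` are all hypothetical. The map `phi` acts on the state-consensus differences; the identity gives the usual linear GT update, and a signum-type map sgn(z)|z|^mu can be plugged in for experimentation.

```python
import math

def sgn_pow(z, mu):
    """Signum-type map sgn(z)|z|^mu (assumed form); mu = 1 is the identity."""
    return math.copysign(abs(z) ** mu, z)

def gradient_tracking(c, neighbors, w, alpha, iters, phi=lambda z: z):
    """Gradient tracking for the toy local costs f_i(x) = 0.5 * (x - c_i)^2.

    x_i is the local estimate and y_i tracks the network-average gradient.
    """
    n = len(c)

    def grad(i, xi):
        return xi - c[i]

    x = [0.0] * n  # deterministic start (Algorithm 1 uses a random one)
    y = [grad(i, x[i]) for i in range(n)]
    for _ in range(iters):
        # consensus on the states (through phi) plus descent along y
        x_new = [
            x[i] + w * sum(phi(x[j] - x[i]) for j in neighbors[i]) - alpha * y[i]
            for i in range(n)
        ]
        # linear consensus on y plus the local gradient increment
        y = [
            y[i] + w * sum(y[j] - y[i] for j in neighbors[i])
            + grad(i, x_new[i]) - grad(i, x[i])
            for i in range(n)
        ]
        x = x_new
    return x

# Hypothetical 4-node ring; the minimizer of the sum of the f_i is mean(c) = 3.
c = [1.0, 2.0, 3.0, 6.0]
ring = {0: [3, 1], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
print(gradient_tracking(c, ring, w=0.25, alpha=0.1, iters=300))
print(gradient_tracking(c, ring, w=0.25, alpha=0.1, iters=300,
                        phi=lambda z: sgn_pow(z, 0.5)))
```

With the identity map every node approaches the common minimizer mean(c). The second call only shows how a non-Lipschitz map can be substituted; its behavior depends on the chosen exponent and step size.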

4.2. Practical Implementations and Applications

5. Simulations

5.1. Academic Example

- Case (i):

- Case (ii):

- Case (iii):

- Case (iv):

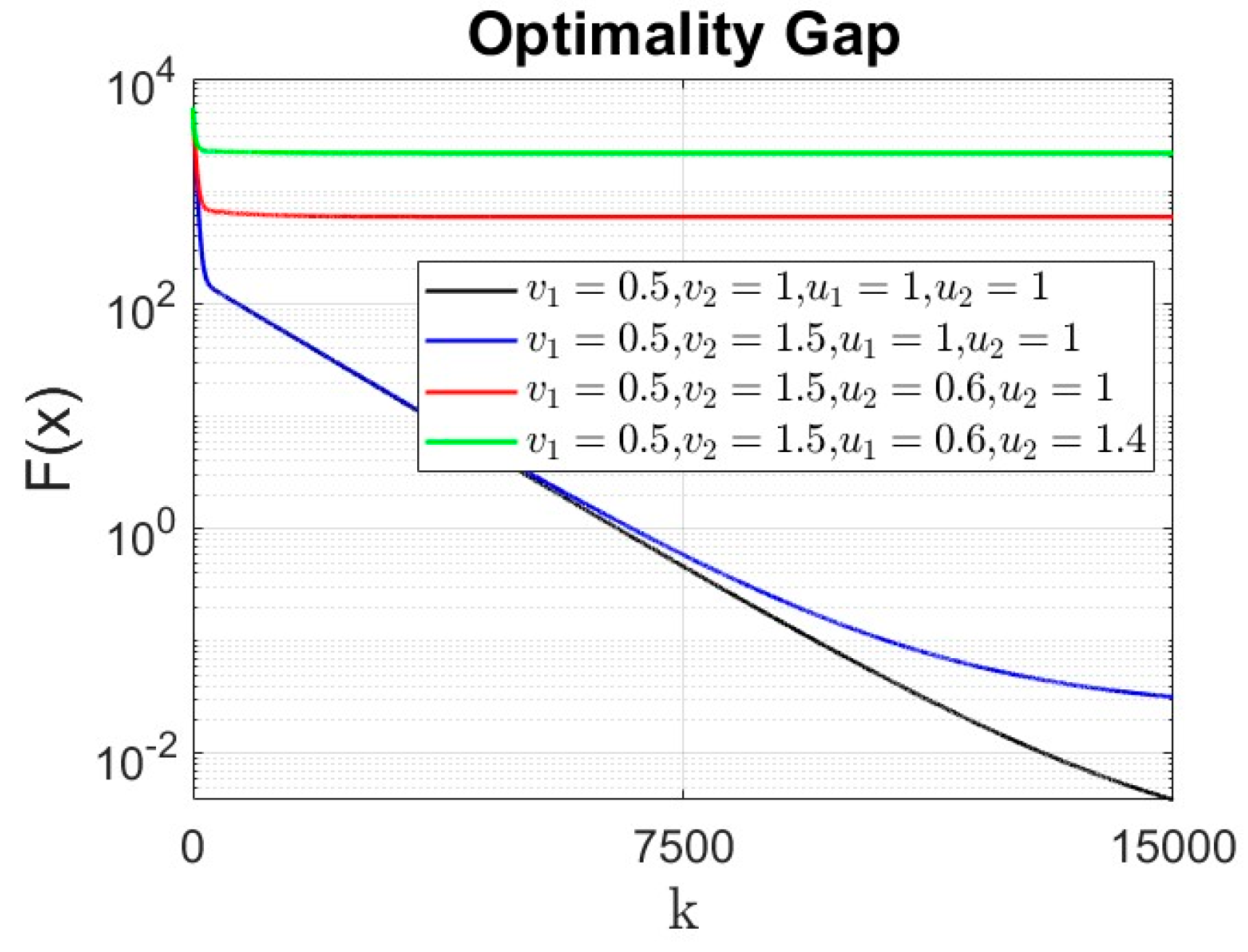

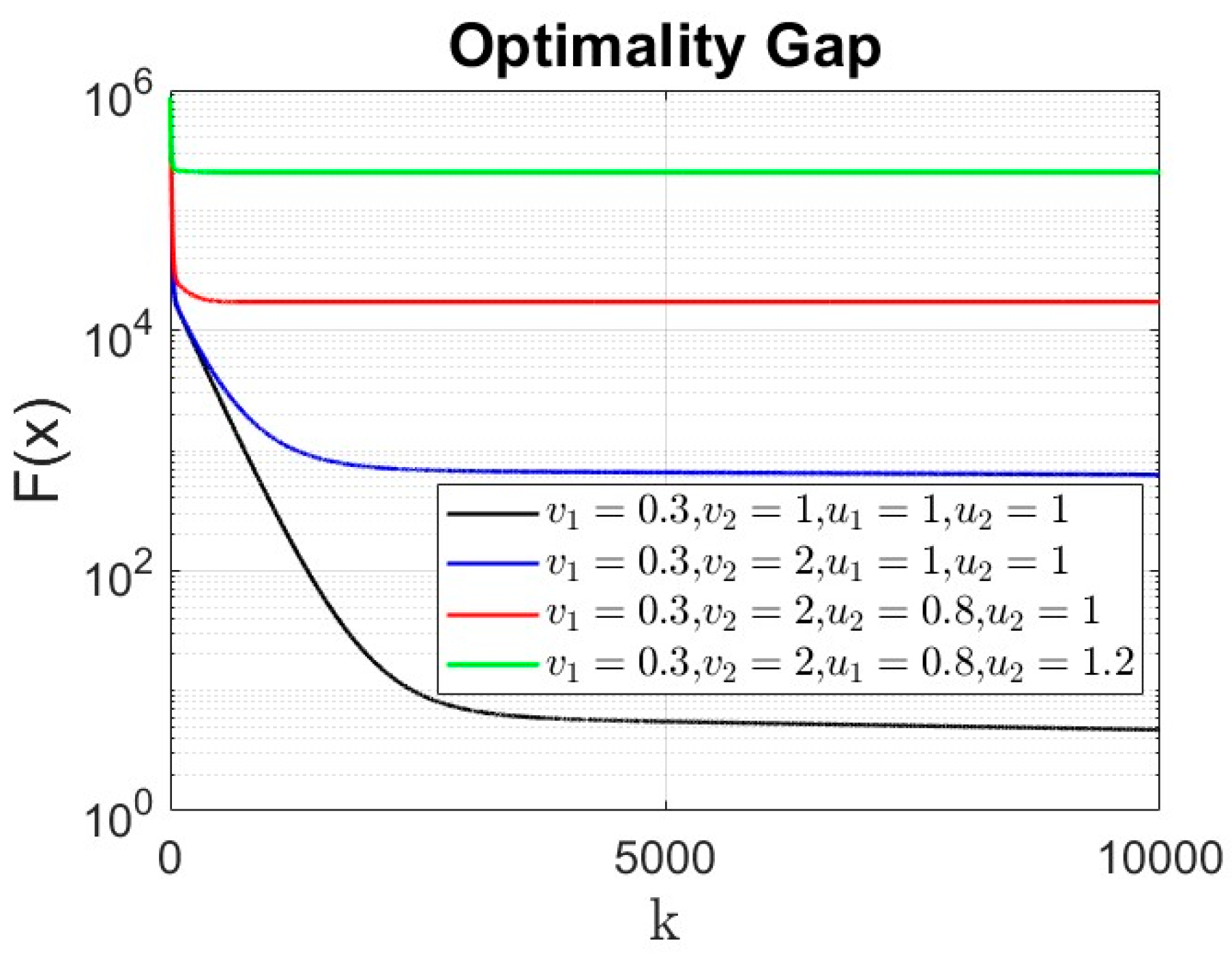

- Figure 2 compares the time evolution of the cost functions. Although the signum-based dynamics may achieve finite-time stability, as claimed in the literature, they leave a steady-state optimization residual whose size depends on the parameters of the signum functions.
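The trade-off described above can be reproduced on a scalar toy problem. The sketch below is not the paper's experiment: it assumes the cost f(x) = x^2/2, a fixed step size of 0.1, and a signum-type map sgn(z)|z|^mu applied to the gradient, with hypothetical helper names `sgn_pow` and `run`.

```python
import math

def sgn_pow(z, mu):
    """Signum-type map sgn(z)|z|^mu (assumed form); mu = 1 is the identity."""
    return math.copysign(abs(z) ** mu, z)

def run(step, mu, iters, x0=1.0):
    """Iterate x <- x - step * sgn(x)|x|^mu and return the history of |x_k|.

    For f(x) = x^2 / 2 the gradient is x, so mu = 1 is plain gradient descent.
    """
    x, hist = x0, [abs(x0)]
    for _ in range(iters):
        x -= step * sgn_pow(x, mu)
        hist.append(abs(x))
    return hist

linear = run(step=0.1, mu=1.0, iters=300)   # Lipschitz (linear) dynamics
signum = run(step=0.1, mu=0.5, iters=300)   # non-Lipschitz at the origin

print("after 20 iterations :", linear[20], signum[20])
print("after 300 iterations:", linear[-1], signum[-1])
```

In this toy setting the signum-based iteration gets close to the minimizer in far fewer steps, but for mu = 1/2 it then settles into a two-point oscillation of amplitude step^2/4 rather than converging exactly, while the linear iteration keeps decaying toward zero.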

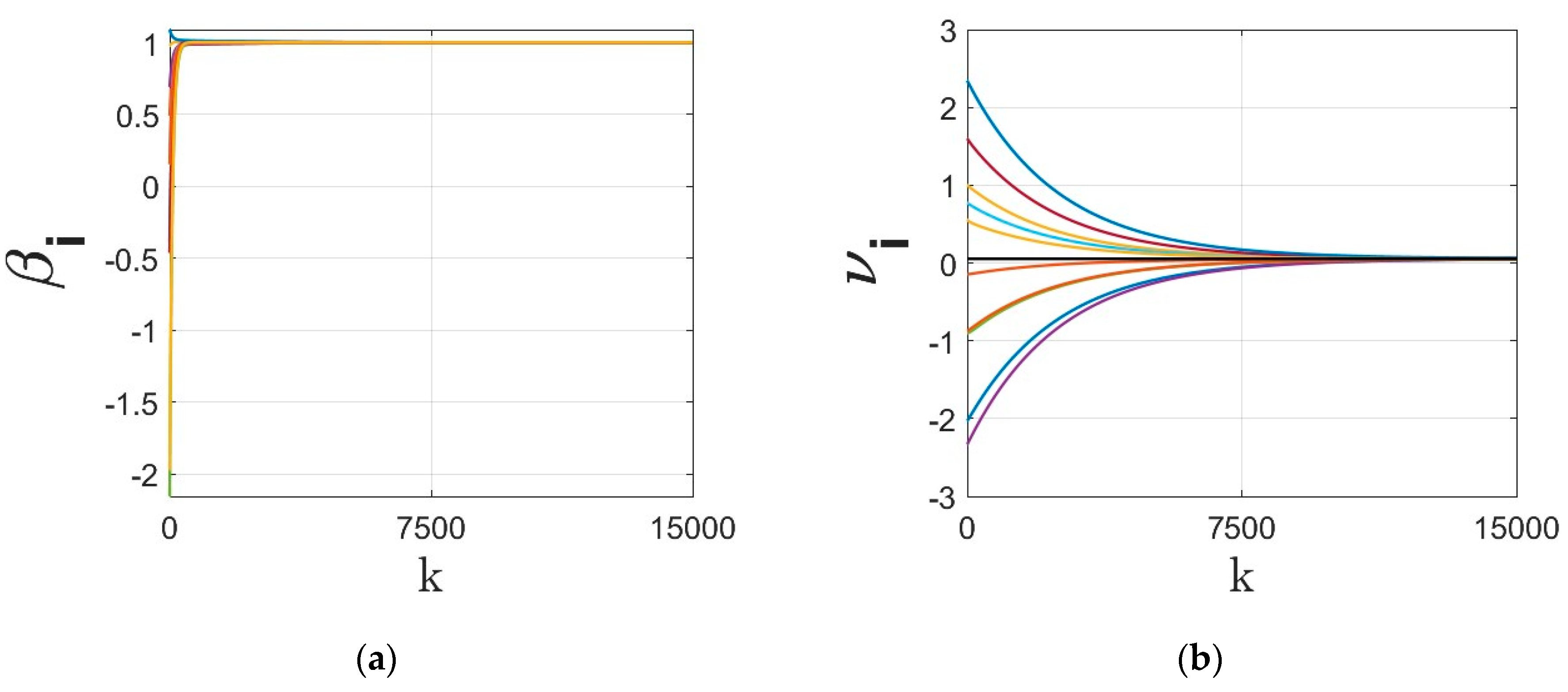

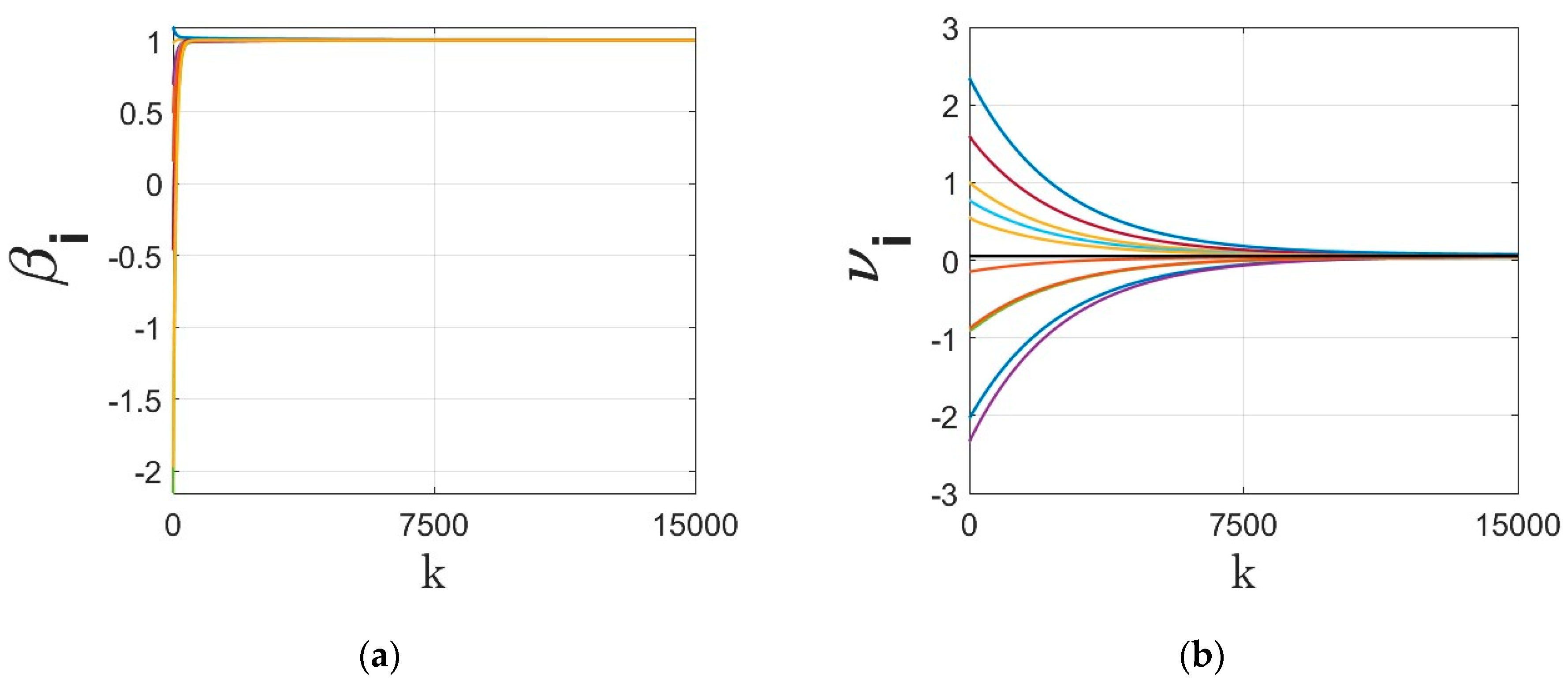

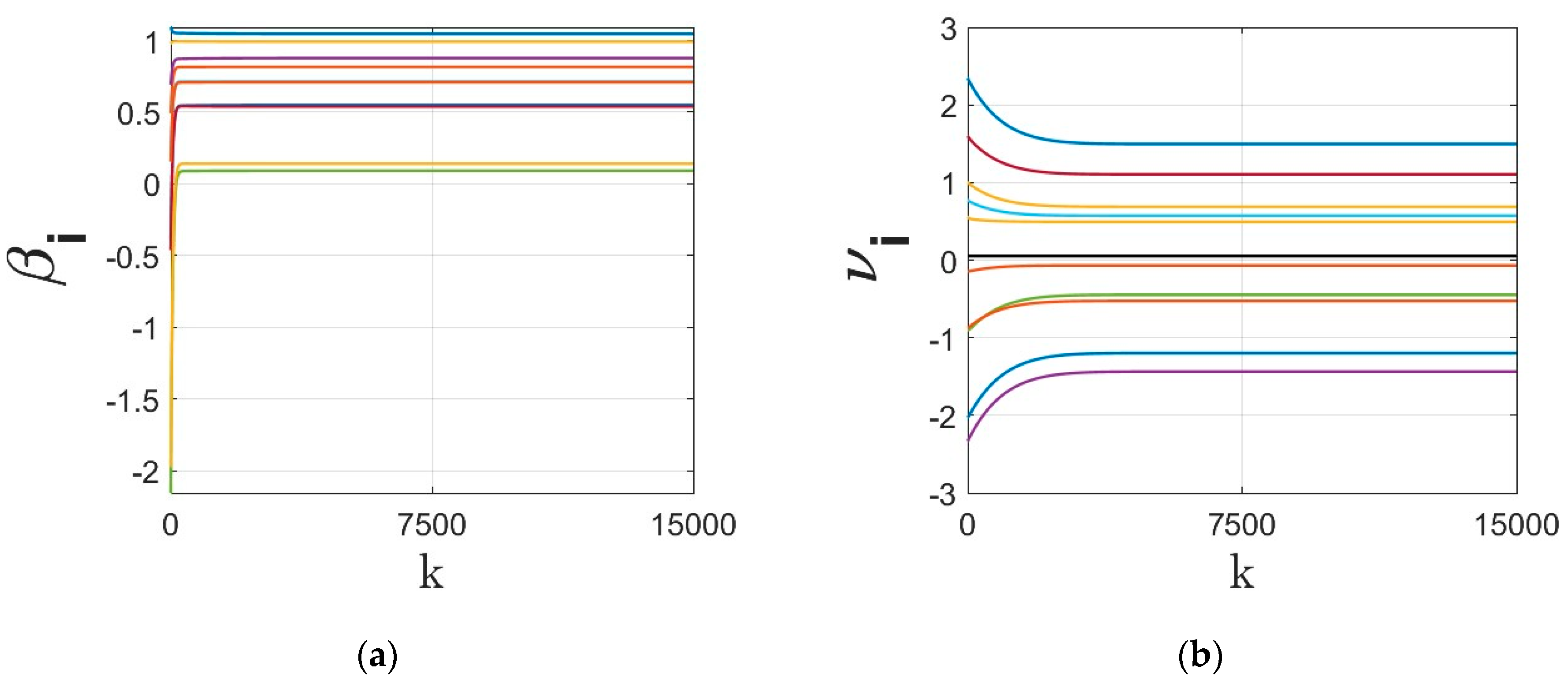

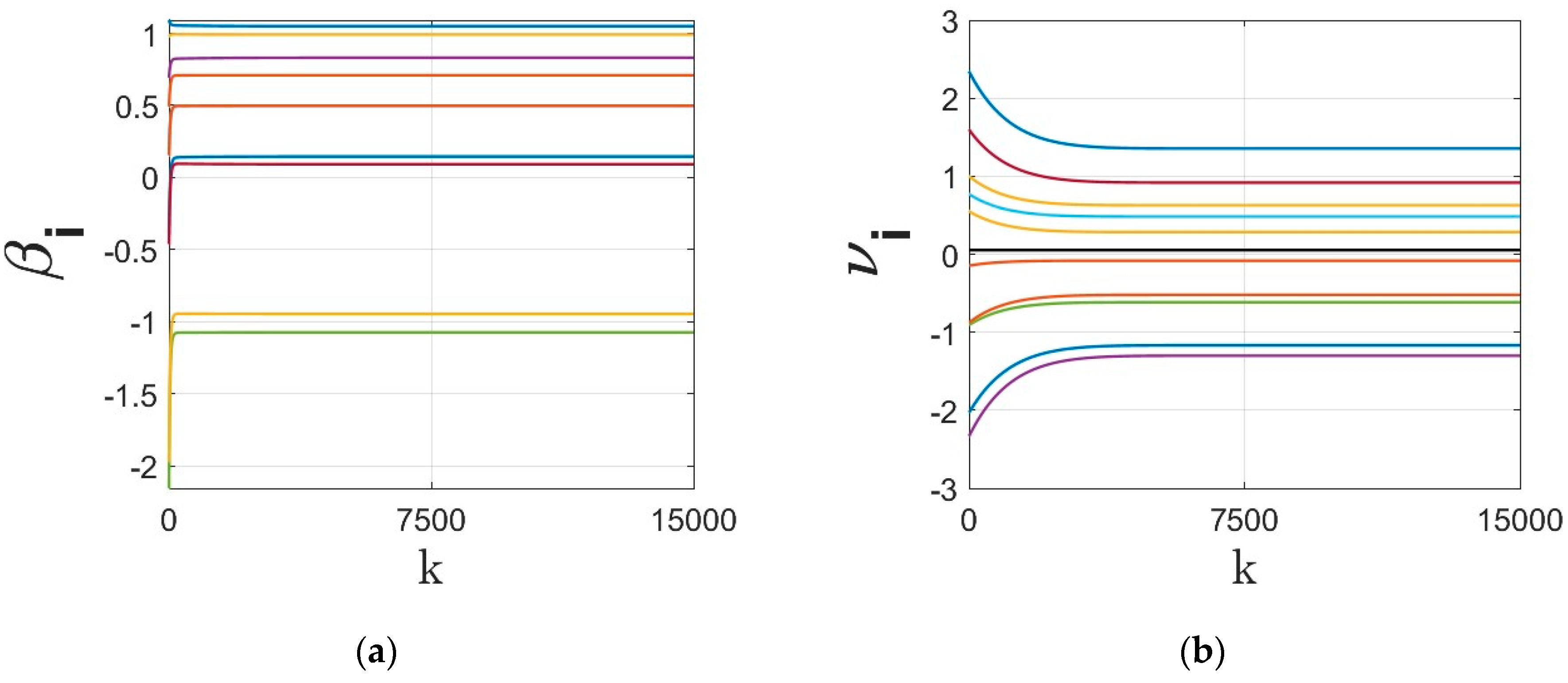

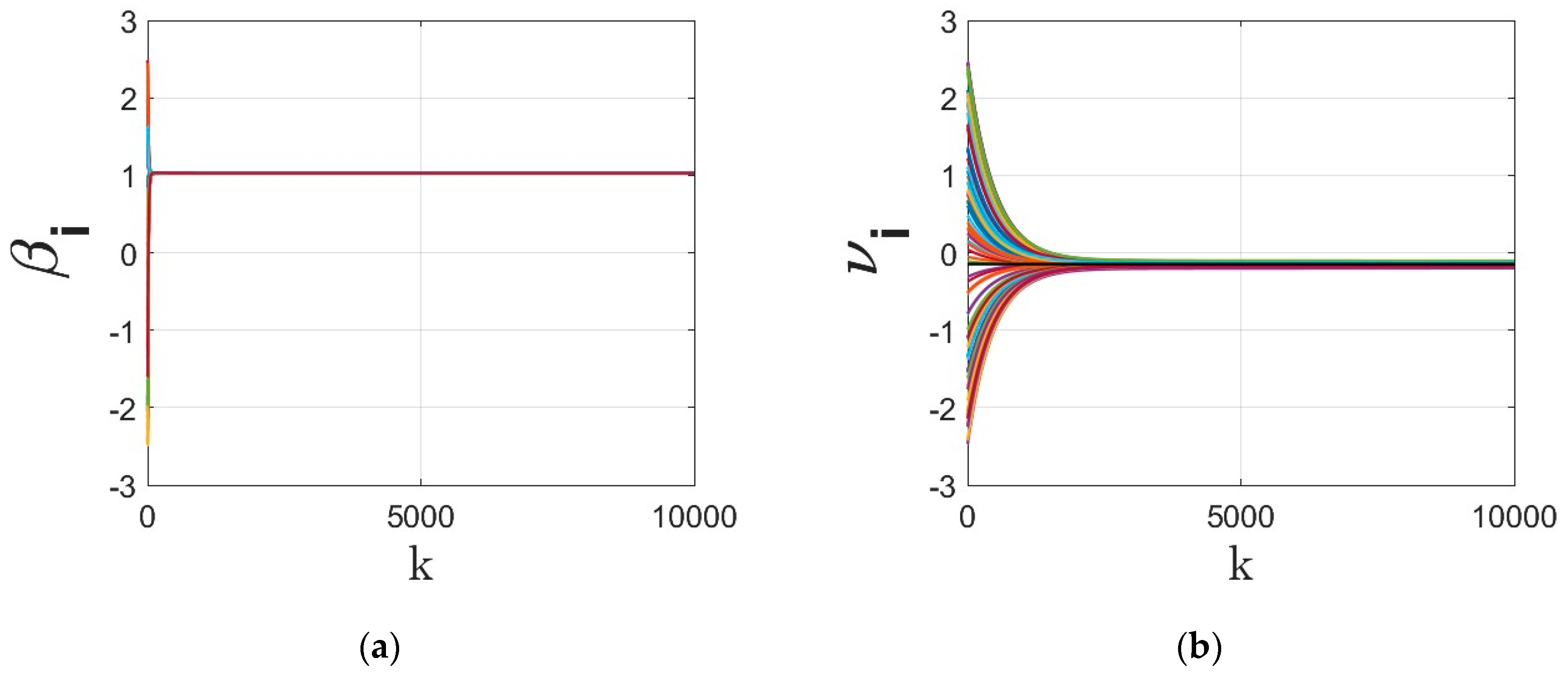

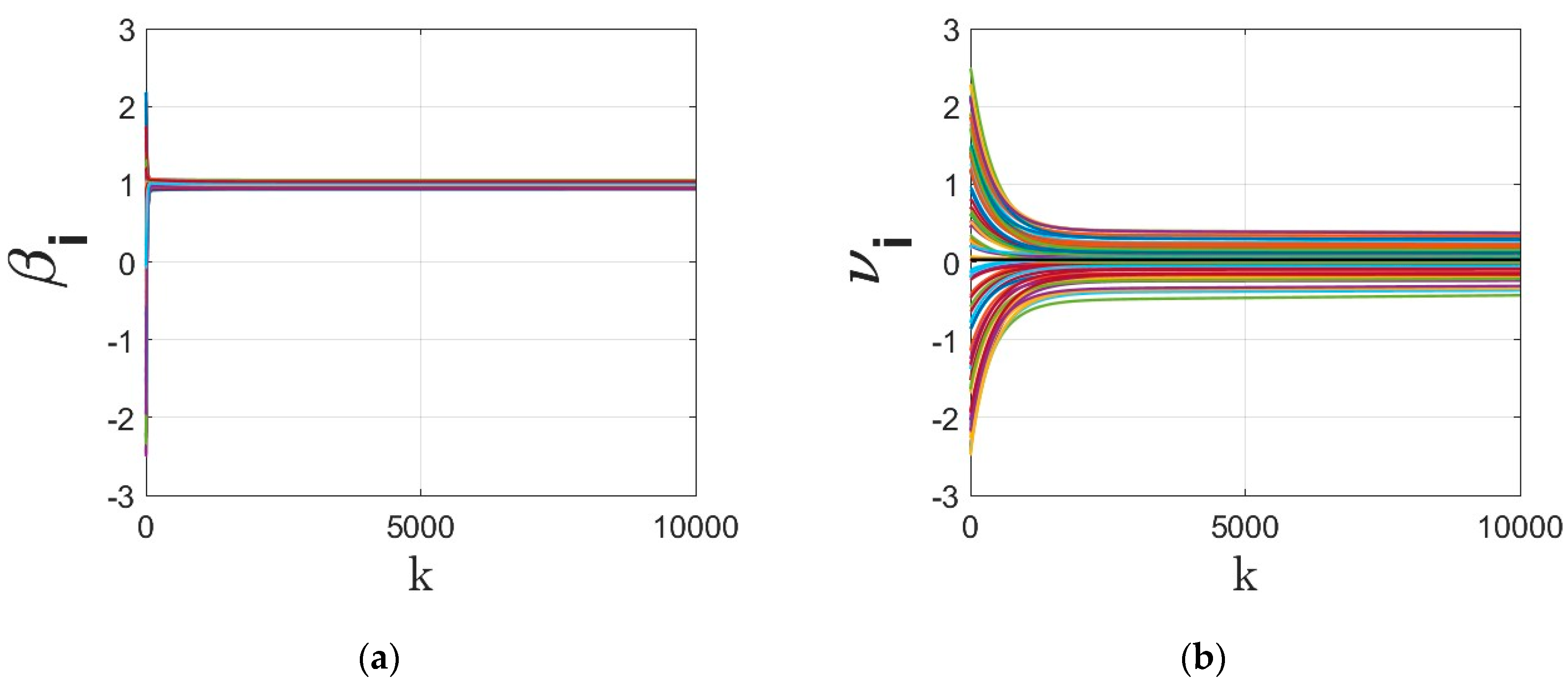

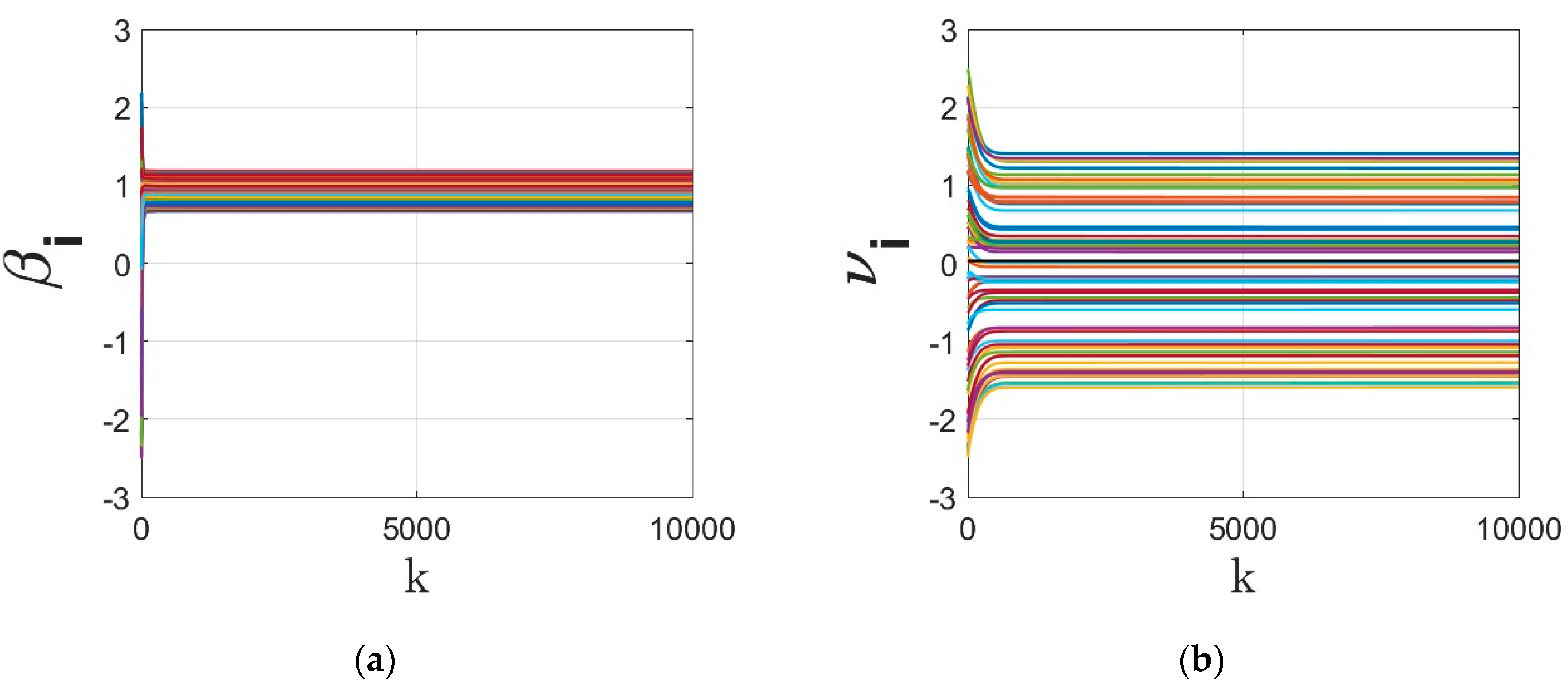

- Figures 3–6 show the regressor parameters at the different agents under the different dynamics. The regressor line parameters are computed at every node/agent, and each color in these figures corresponds to one computing node/agent. As the figures show, applying the sign function may lead to inexact convergence and a steady-state error, especially when it is added to the gradient-tracking part of the dynamics (Figures 5 and 6).

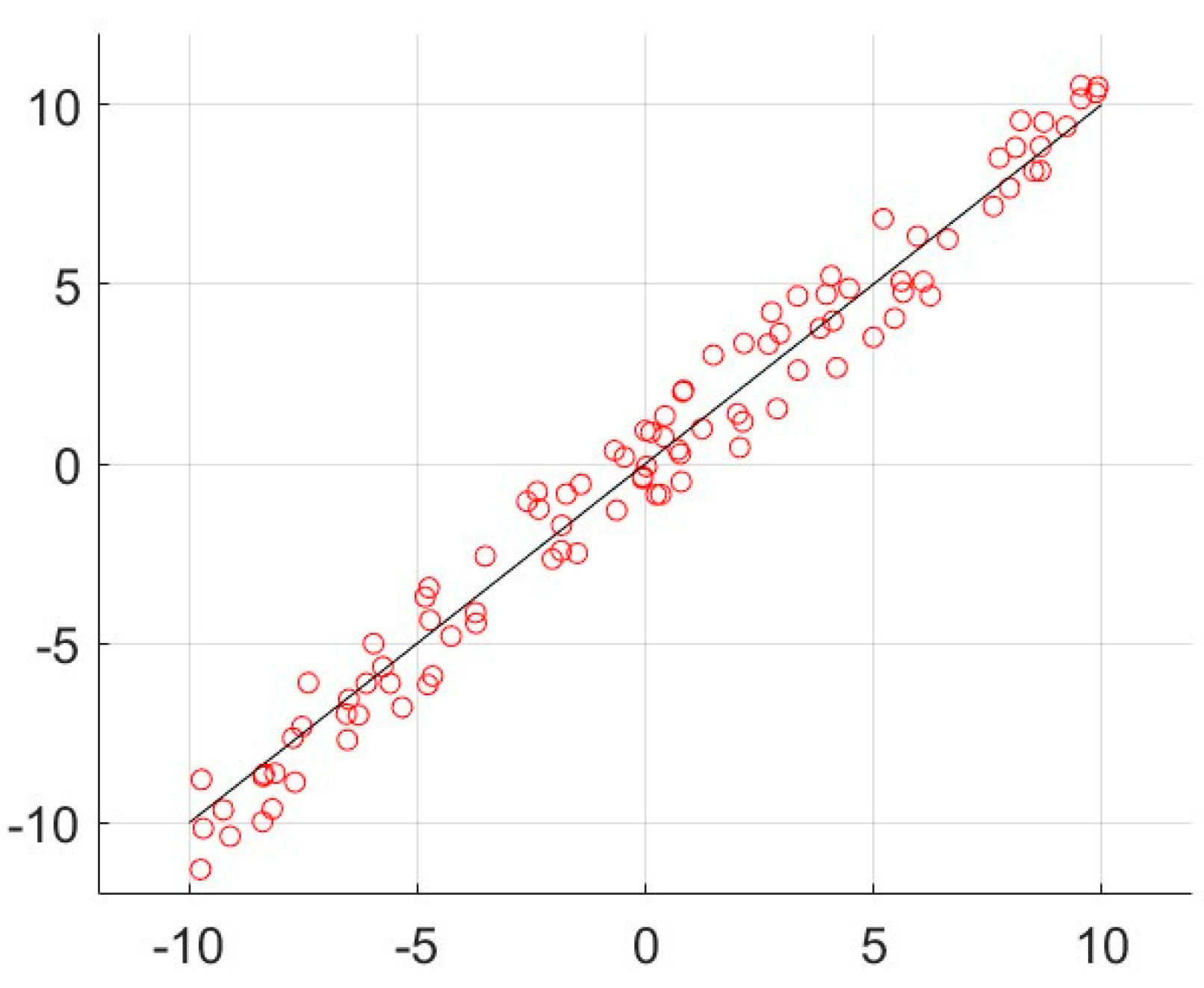

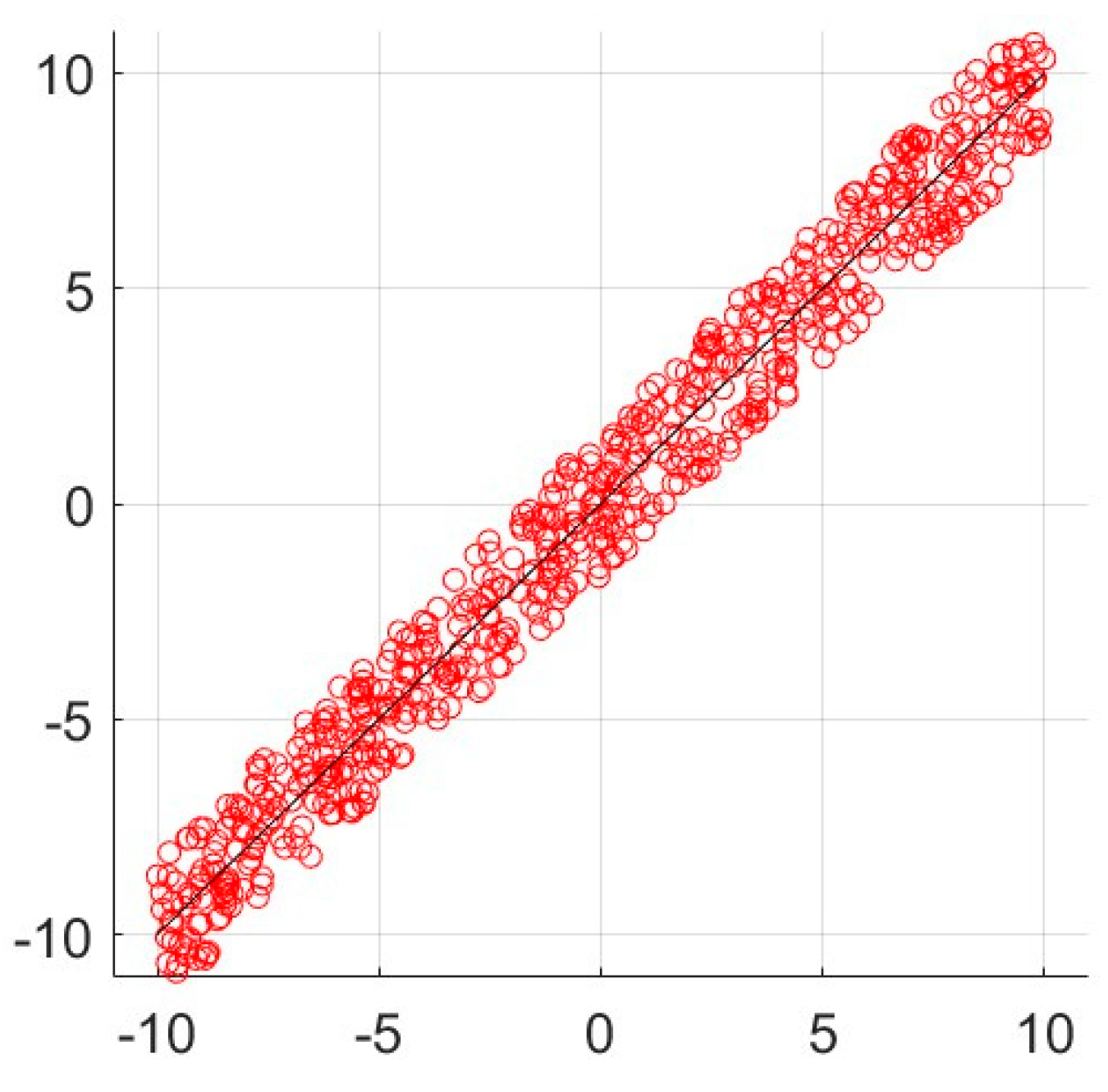

- Next, to test how well the results generalize, we repeat the simulations on a large-scale example in which every agent in the network has access to a randomly chosen subset of the data points. The large dataset is shown in Figure 7. We repeat the local regression simulation with the objective function (6)–(7) over an Erdős–Rényi (ER) random network with a fixed linking probability. For this simulation, we consider different values of the step size and of the gradient-tracking rate. Note that a large step size may speed up convergence but may also enlarge the optimality gap of the signum-based dynamics, and a very large step size may cause the solution to diverge. We compare the optimality gap under four signum-based models derived from (18)–(19):

- Case (1):

- Case (2):

- Case (3):

- Case (4):
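The network and data setup for this large-scale example can be sketched as follows. All numbers here (20 agents, linking probability 0.3, 200 data points, a 25% share per agent) are hypothetical placeholders rather than the values used in the simulation, and the Metropolis weight rule is an assumed choice for obtaining a symmetric, doubly stochastic weight matrix.

```python
import random
from collections import deque

def erdos_renyi(n, p, rng):
    """Undirected ER graph: each pair of nodes is linked with probability p."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].add(j)
                adj[j].add(i)
    return adj

def is_connected(adj):
    """Breadth-first search from node 0."""
    seen, queue = {0}, deque([0])
    while queue:
        u = queue.popleft()
        for v in adj[u] - seen:
            seen.add(v)
            queue.append(v)
    return len(seen) == len(adj)

def metropolis_weights(adj):
    """Symmetric doubly stochastic weights (Metropolis rule, an assumption)."""
    n = len(adj)
    W = [[0.0] * n for _ in range(n)]
    for i in adj:
        for j in adj[i]:
            W[i][j] = 1.0 / (1 + max(len(adj[i]), len(adj[j])))
        W[i][i] = 1.0 - sum(W[i])
    return W

def split_data(num_points, n_agents, share, rng):
    """Give every agent a random subset holding `share` of the data indices."""
    k = max(1, round(share * num_points))
    return [rng.sample(range(num_points), k) for _ in range(n_agents)]

rng = random.Random(0)
adj = erdos_renyi(20, 0.3, rng)
while not is_connected(adj):          # resample until the network is connected
    adj = erdos_renyi(20, 0.3, rng)
W = metropolis_weights(adj)
local_indices = split_data(200, 20, 0.25, rng)
print(len(local_indices[0]), sum(W[0]))
```

Resampling until the graph is connected is a design choice of this sketch; consensus-based methods generally need a connected network for the agents to agree on a common solution.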

- Figure 8 compares the residual cost function over the iterations. Despite the finite-time convergence, the solution may retain a steady-state residual (optimality gap) that depends on the four signum function parameters. To highlight the effect of this gap on the regressor line parameters, Figures 9–12 show these parameters for the different cases under the signum-based dynamics. Applying the sign function may cause a steady-state error, especially in the cases where the gradient tracking itself is subject to the signum-based nonlinearity (see, e.g., Figures 11 and 12).

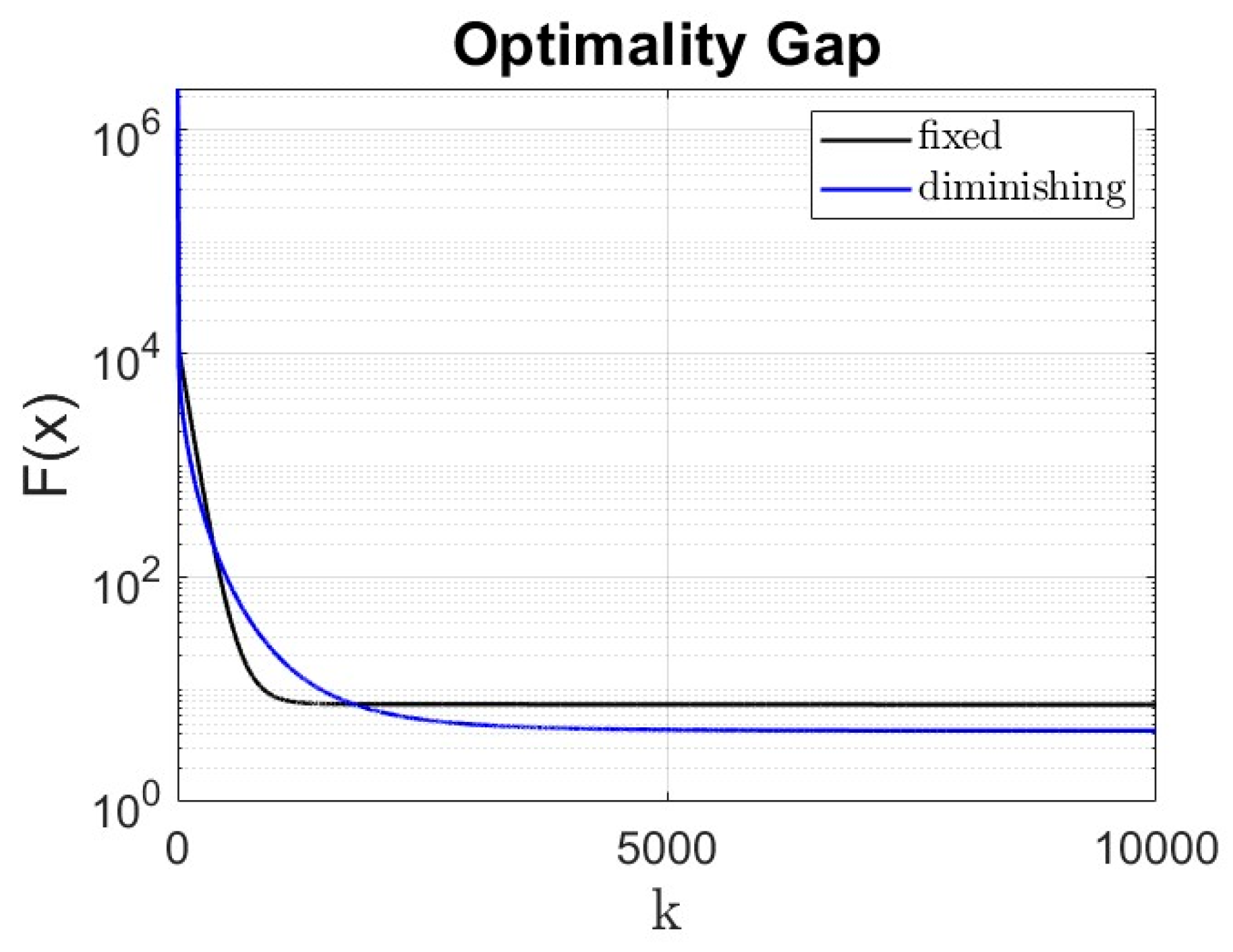

- Next, we repeat the simulation to compare the optimality gap under fixed and diminishing step sizes, with the signum function parameters held fixed. As Figure 13 shows, the diminishing step size yields a smaller optimality gap than the fixed step size but converges more slowly. A diminishing step size is therefore one remedy for reducing the optimality gap, at the cost of a slower convergence rate.
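The effect of a diminishing step size can be illustrated on a scalar toy problem. This is a sketch under assumptions, not the simulation behind Figure 13: the cost is f(x) = x^2/2, the signum exponent is 1/2, the fixed step is 0.1, and the diminishing rule 0.1/sqrt(k+1) is a hypothetical choice. The helper names `sgn_pow` and `descend` are illustrative only.

```python
import math

def sgn_pow(z, mu):
    """Signum-type map sgn(z)|z|^mu (assumed form)."""
    return math.copysign(abs(z) ** mu, z)

def descend(step_rule, mu=0.5, iters=2000, x0=1.0, tol=1e-2):
    """Run x <- x - a_k * sgn(x)|x|^mu with a_k = step_rule(k).

    Returns the final |x| and the first iteration at which |x| < tol.
    """
    x, hit = x0, None
    for k in range(iters):
        x -= step_rule(k) * sgn_pow(x, mu)
        if hit is None and abs(x) < tol:
            hit = k + 1
    return abs(x), hit

fixed_gap, fixed_hit = descend(lambda k: 0.1)
dim_gap, dim_hit = descend(lambda k: 0.1 / math.sqrt(k + 1))

print("fixed step      : residual", fixed_gap, "first below tol at", fixed_hit)
print("diminishing step: residual", dim_gap, "first below tol at", dim_hit)
```

Under these assumptions the diminishing rule ends with a much smaller residual, because the oscillation amplitude shrinks with the step size, but it needs more iterations to first reach the tolerance.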

5.2. Real Dataset Example

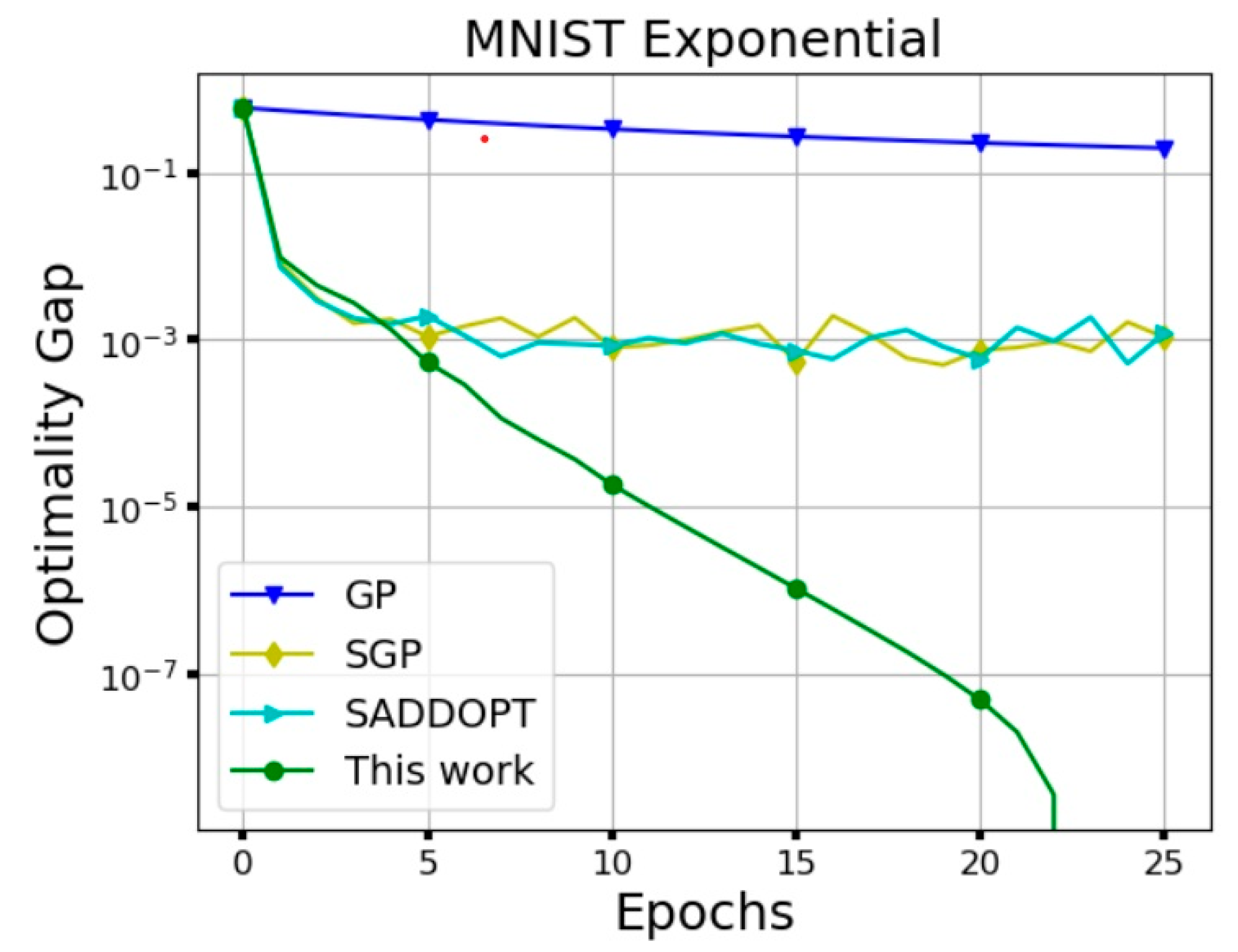

- For the next simulation, we consider the MNIST dataset and the optimization data from [63]. We randomly select labelled images from this dataset and classify them via logistic regression with a convex regularizer over an exponential network of computing nodes. The global cost function for this problem is defined over the whole network, with each computing node taking a batch of the sample images. Every node then locally minimizes its own objective function, whose parameters define the separating hyperplane for classification. The optimization residual (or optimality gap) of the signum-based Algorithm 1 is compared with the following algorithms from the literature: GP [64], SGP [65], and S-ADDOPT [66]. The comparison results are shown in Figure 14. As the figure shows, with suitably chosen signum function parameters, Algorithm 1 converges fast with a sufficiently low optimality gap.
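A local objective of this kind can be sketched as below. The exact cost used in the simulation is not reproduced here: the sketch assumes the common form of an averaged logistic loss plus an L2 regularizer (lam/2)||w||^2, labels in {-1, +1}, and a hypothetical helper name `local_cost_and_grad`. It returns the cost together with its gradient, which is what a node would feed into a gradient-tracking update.

```python
import math

def local_cost_and_grad(w, b, X, y, lam):
    """Regularized logistic loss of one node and its gradient (assumed form).

    w, b : hyperplane parameters; X : list of feature vectors;
    y : labels in {-1, +1}; lam : weight of the L2 regularizer on w.
    """
    m, d = len(X), len(w)
    cost, grad_w, grad_b = 0.0, [0.0] * d, 0.0
    for xj, yj in zip(X, y):
        margin = yj * (sum(wi * xi for wi, xi in zip(w, xj)) + b)
        # log(1 + exp(-margin)), evaluated without overflow
        cost += max(0.0, -margin) + math.log1p(math.exp(-abs(margin)))
        # derivative of the loss w.r.t. the score: -y / (1 + exp(margin))
        if margin > 0:
            e = math.exp(-margin)
            s = -yj * e / (1.0 + e)
        else:
            s = -yj / (1.0 + math.exp(margin))
        for t in range(d):
            grad_w[t] += s * xj[t]
        grad_b += s
    cost = cost / m + 0.5 * lam * sum(wi * wi for wi in w)
    grad_w = [g / m + lam * wi for g, wi in zip(grad_w, w)]
    return cost, grad_w, grad_b / m

# Tiny hypothetical batch with two features per sample.
X = [[1.0, 2.0], [-1.0, 0.5], [0.3, -1.2]]
y = [1, -1, 1]
print(local_cost_and_grad([0.2, -0.1], 0.05, X, y, lam=0.1))
```

The L2 term keeps the local cost strongly convex in `w`; a different convex regularizer would only change the last two lines of the function.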

6. Conclusions

6.1. Concluding Remarks

6.2. Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Necoara, I.; Nedelcu, V.; Dumitrache, I. Parallel and distributed optimization methods for estimation and control in networks. J. Process Control 2011, 21, 756–766.

2. Verma, A.; Butenko, S. A distributed approximation algorithm for the bottleneck connected dominating set problem. Optim. Lett. 2012, 6, 1583–1595.

3. Veremyev, A.; Boginski, V.; Pasiliao, E.L. Potential energy principles in networked systems and their connections to optimization problems on graphs. Optim. Lett. 2015, 9, 585–600.

4. Qureshi, M.I.; Rikos, A.I.; Charalambous, T.; Learning, U.A. Learning and Optimization in Wireless Sensor Networks. In Wireless Sensor Networks in Smart Environments: Enabling Digitalization from Fundamentals to Advanced Solutions; Wiley: Hoboken, NJ, USA, 2025; Volume 10, pp. 35–64.

5. Gabidullina, Z.R. Design of the best linear classifier for box-constrained data sets. In Mesh Methods for Boundary-Value Problems and Applications, Proceedings of the 13th International Conference, Kazan, Russia, 20–25 October 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 109–124.

6. Alzubi, J.; Nayyar, A.; Kumar, A. Machine learning from theory to algorithms: An overview. J. Phys. Conf. Ser. 2018, 1142, 012012.

7. Doostmohammadian, M.; Aghasi, A.; Charalambous, T.; Khan, U.A. Distributed support vector machines over dynamic balanced directed networks. IEEE Control Syst. Lett. 2021, 6, 758–763.

8. Cui, L.; Yang, S.; Chen, F.; Ming, Z.; Lu, N.; Qin, J. A survey on application of machine learning for internet of things. Int. J. Mach. Learn. Cybern. 2018, 9, 1399–1417.

9. Fourati, H.; Maaloul, R.; Chaari, L. A survey of 5g network systems: Challenges and machine learning approaches. Int. J. Mach. Learn. Cybern. 2021, 12, 385–431.

10. Xie, Z.; Wu, Z. Event-triggered consensus control for dc microgrids based on MKELM and state observer against false data injection attacks. Int. J. Mach. Learn. Cybern. 2024, 15, 775–793.

11. Doostmohammadian, M.; Rabiee, H.R.; Khan, U.A. Cyber-social systems: Modeling, inference, and optimal design. IEEE Syst. J. 2019, 14, 73–83.

12. Stankovic, S.S.; Beko, M.L.; Stanković, M.S. Nonlinear robustified stochastic consensus seeking. Syst. Control Lett. 2020, 139, 104667.

13. Jakovetic, D.; Vukovic, M.; Bajovic, D.; Sahu, A.K.; Kar, S. Distributed recursive estimation under heavy-tail communication noise. SIAM J. Control Optim. 2023, 61, 1582–1609.

14. Dai, L.; Chen, X.; Guo, L.; Zhang, J.; Chen, J. Prescribed-time group consensus for multiagent system based on a distributed observer approach. Int. J. Control Autom. Syst. 2022, 20, 3129–3137.

15. Zhang, B.; Mo, S.; Zhou, H.; Qin, T.; Zhong, Y. Finite-time consensus tracking control for speed sensorless multi-motor systems. Appl. Sci. 2022, 12, 5518.

16. Doostmohammadian, M. Single-bit consensus with finite-time convergence: Theory and applications. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 3332–3338.

17. Ge, C.; Ma, L.; Xu, S. Distributed fixed-time leader-following consensus for multi-agent systems: An event-triggered mechanism. Actuators 2024, 13, 40.

18. Yang, J.; Li, R.; Gan, Q.; Huang, X. Zero-sum-game-based fixed-time event-triggered optimal consensus control of multi-agent systems under FDI attacks. Mathematics 2025, 13, 543.

19. Doostmohammadian, M.; Aghasi, A.; Pirani, M.; Nekouei, E.; Khan, U.A.; Charalambous, T. Fast-convergent anytime-feasible dynamics for distributed allocation of resources over switching sparse networks with quantized communication links. In Proceedings of the IEEE European Control Conference, London, UK, 12–15 July 2022; pp. 84–89.

20. Doostmohammadian, M.; Meskin, N. Finite-time stability under denial of service. IEEE Syst. J. 2020, 15, 1048–1055.

21. Budhraja, P.; Baranwal, M.; Garg, K.; Hota, A. Breaking the convergence barrier: Optimization via fixed-time convergent flows. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 6115–6122.

22. Mishra, J.; Yu, X. On fixed-time convergent sliding mode control design and applications. In Emerging Trends in Sliding Mode Control: Theory and Application; Springer: Singapore, 2021; pp. 203–237.

23. Ríos, H.; Efimov, D.; Moreno, J.A.; Perruquetti, W.; Rueda-Escobedo, J.G. Time-varying parameter identification algorithms: Finite and fixed-time convergence. IEEE Trans. Autom. Control 2017, 62, 3671–3678.

24. Malaspina, G.; Jakovetic, D.; Krejić, N. Linear convergence rate analysis of a class of exact first-order distributed methods for weight-balanced time-varying networks and uncoordinated step sizes. Optim. Lett. 2023, 18, 825–846.

25. Zuo, Z.; Han, Q.; Ning, B. Fixed-Time Cooperative Control of Multi-Agent Systems; Springer: Berlin/Heidelberg, Germany, 2019.

26. Acho, L.; Buenestado, P.; Pujol, G. A finite-time control design for the discrete-time chaotic logistic equations. Actuators 2024, 13, 295.

27. Basin, M. Finite-and fixed-time convergent algorithms: Design and convergence time estimation. Annu. Rev. Control 2019, 48, 209–221.

28. Xi, C.; Khan, U.A. Distributed subgradient projection algorithm over directed graphs. IEEE Trans. Autom. Control 2016, 62, 3986–3992.

29. Szabo, Z.; Sriperumbudur, B.K.; Póczos, B.; Gretton, A. Learning theory for distribution regression. J. Mach. Learn. Res. 2016, 17, 5272–5311.

30. Xin, R.; Khan, U.; Kar, S. A hybrid variance-reduced method for decentralized stochastic non-convex optimization. In Proceedings of the International Conference on Machine Learning, PMLR, 2021, Virtual, 18–24 July 2021; pp. 11459–11469.

31. Du, B.; Zhou, J.; Sun, D. Improving the convergence of distributed gradient descent via inexact average consensus. J. Optim. Theory Appl. 2020, 185, 504–521.

32. Simonetto, A.; Jamali-Rad, H. Primal recovery from consensus-based dual decomposition for distributed convex optimization. J. Optim. Theory Appl. 2016, 168, 172–197.

33. Liu, Z.; Jahanshahi, H.; Volos, C.; Bekiros, S.; He, S.; Alassafi, M.O.; Ahmad, A.M. Distributed consensus tracking control of chaotic multi-agent supply chain network: A new fault-tolerant, finite-time, and chatter-free approach. Entropy 2021, 24, 33.

34. Yu, Z.; Yu, S.; Jiang, H.; Mei, X. Distributed fixed-time optimization for multi-agent systems over a directed network. Nonlinear Dyn. 2021, 103, 775–789.

35. Shi, X.; Wen, G.; Yu, X. Finite-time convergent algorithms for time-varying distributed optimization. IEEE Control Syst. Lett. 2023, 7, 3223–3228.

36. Tang, W.; Daoutidis, P. Fast and stable nonconvex constrained distributed optimization: The ellada algorithm. Optim. Eng. 2022, 23, 259–301.

37. Li, S.; Nian, X.; Deng, Z.; Chen, Z. Predefined-time distributed optimization of general linear multi-agent systems. Inf. Sci. 2022, 584, 111–125.

38. Gong, X.; Cui, Y.; Shen, J.; Xiong, J.; Huang, T. Distributed optimization in prescribed-time: Theory and experiment. IEEE Trans. Netw. Sci. Eng. 2021, 9, 564–576.

39. Deng, C.; Ge, M.G.; Liu, Z.; Wu, Y. Prescribed-time stabilization and optimization of cps-based microgrids with event-triggered interactions. Int. J. Dyn. Control 2023, 12, 2522–2534.

40. Wen, X.; Qin, S. A projection-based continuous-time algorithm for distributed optimization over multi-agent systems. Complex Intell. Syst. 2022, 8, 719–729.

41. Li, Q.; Wang, M.; Sun, H.; Qin, S. An adaptive finite-time neurodynamic approach to distributed consensus-based optimization problem. Neural Comput. Appl. 2023, 35, 20841–20853.

42. Slotine, J.J.; Li, W. Applied Nonlinear Control; Prentice-Hall: Englewood Cliffs, NJ, USA, 1991.

43. Wu, H.; Tao, F.; Qin, L.; Shi, R.; He, L. Robust exponential stability for interval neural networks with delays and non-lipschitz activation functions. Nonlinear Dyn. 2011, 66, 479–487.

44. Yu, H.; Wu, H. Global robust exponential stability for hopfield neural networks with non-lipschitz activation functions. J. Math. Sci. 2012, 187, 511–523.

45. Balcan, M.; Blum, A.; Sharma, D.; Zhang, H. An analysis of robustness of non-lipschitz networks. J. Mach. Learn. Res. 2023, 24, 1–43.

46. Li, W.; Bian, W.; Xue, X. Projected neural network for a class of non-lipschitz optimization problems with linear constraints. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3361–3373.

47. Olfati-Saber, R.; Murray, R.M. Consensus problems in networks of agents with switching topology and time-delays. IEEE Trans. Autom. Control 2004, 49, 1520–1533.

48. Wang, F.; Zhang, Y.; Zhang, L.; Zhang, J.; Huang, Y. Finite-time consensus of stochastic nonlinear multi-agent systems. Int. J. Fuzzy Syst. 2020, 22, 77–88.

49. Zhang, B.; Jia, Y. Fixed-time consensus protocols for multi-agent systems with linear and nonlinear state measurements. Nonlinear Dyn. 2015, 82, 1683–1690.

50. Liu, J.; Yu, Y.; Wang, Q.; Sun, C. Fixed-time event-triggered consensus control for multi-agent systems with nonlinear uncertainties. Neurocomputing 2017, 260, 497–504.

51. Zuo, Z.; Tian, B.; Defoort, M.; Ding, Z. Fixed-time consensus tracking for multiagent systems with high-order integrator dynamics. IEEE Trans. Autom. Control 2017, 63, 563–570.

52. Yan, K.; Han, T.; Xiao, B.; Yan, H. Distributed fixed-time and prescribed-time average consensus for multi-agent systems with energy constraints. Inf. Sci. 2023, 647, 119471.

53. Yu, Y.; Liu, C.; Li, Y.; Li, H. Practical fixed-time distributed average-tracking with input delay based on event-triggered method. Int. J. Control Autom. Syst. 2023, 21, 845–853.

54. Doostmohammadian, M.; Kharazmi, S.; Rabiee, H.R. How clustering affects the convergence of decentralized optimization over networks: A monte-carlo-based approach. Soc. Netw. Anal. Min. 2024, 14, 135.

55. Doostmohammadian, M.; Aghasi, A. Accelerated distributed allocation. IEEE Signal Process. Lett. 2024.

56. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends® Mach. Learn. 2011, 3, 1–122.

57. Song, C.; Yoon, S.; Pavlovic, V. Fast ADMM algorithm for distributed optimization with adaptive penalty. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

58. Lin, Z.; Li, H.; Fang, C. ADMM for distributed optimization. In Alternating Direction Method of Multipliers for Machine Learning; Springer: Berlin/Heidelberg, Germany, 2022; pp. 207–240.

59. Ma, M.; Nikolakopoulos, A.N.; Giannakis, G.B. Hybrid admm: A unifying and fast approach to decentralized optimization. EURASIP J. Adv. Signal Process. 2018, 2018, 1–17.

60. Gautam, M.; Pati, A.; Mishra, S.; Appasani, B.; Kabalci, E.; Bizon, N.; Thounthong, P. A comprehensive review of the evolution of networked control system technology and its future potentials. Sustainability 2021, 13, 2962.

61. Zhang, L.; Hua, L. Major issues in high-frequency financial data analysis: A survey of solutions. Mathematics 2025, 13, 347.

62. Wei, Y.; Xie, C.; Qing, X.; Xu, Y. Control of a new financial risk contagion dynamic model based on finite-time disturbance. Entropy 2024, 26, 999.

63. Qureshi, M.I.; Khan, U.A. Stochastic first-order methods over distributed data. In Proceedings of the IEEE 12th Sensor Array and Multichannel Signal Processing Workshop (SAM), Trondheim, Norway, 20–23 June 2022; pp. 405–409.

64. Nedic, A.; Olshevsky, A. Distributed optimization over time-varying directed graphs. IEEE Trans. Autom. Control 2014, 60, 601–615.

65. Spiridonoff, A.; Olshevsky, A.; Paschalidis, I. Robust asynchronous stochastic gradient-push: Asymptotically optimal and network-independent performance for strongly convex functions. J. Mach. Learn. Res. 2020, 21, 1–47.

66. Qureshi, M.I.; Xin, R.; Kar, S.; Khan, U.A. S-ADDOPT: Decentralized stochastic first-order optimization over directed graphs. IEEE Control Syst. Lett. 2020, 5, 953–958.

67. Noah, Y.; Shlezinger, N. Distributed learn-to-optimize: Limited communications optimization over networks via deep unfolded distributed ADMM. IEEE Trans. Mob. Comput. 2025, 24, 3012–3024.

68. Zhang, N.; Sun, Q.; Yang, L.; Li, Y. Event-triggered distributed hybrid control scheme for the integrated energy system. IEEE Trans. Ind. Inform. 2022, 18, 835–846.

| Parameter | Faster Convergence |
|---|---|
| | smaller |
| | larger |
| | smaller |
| | larger |

| Parameter | Faster Convergence |
|---|---|
| | larger |
| | smaller |
| | larger |
| | smaller |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Doostmohammadian, M.; Ghods, A.A.; Aghasi, A.; Gabidullina, Z.R.; Rabiee, H.R. Using Non-Lipschitz Signum-Based Functions for Distributed Optimization and Machine Learning: Trade-Off Between Convergence Rate and Optimality Gap. Math. Comput. Appl. 2025, 30, 108. https://doi.org/10.3390/mca30050108
