Abstract

The decision-making process can be complex and is often underestimated; mismanaging it can lead to poor results and excessive spending. This situation arises in highly complex multi-criteria problems such as the project portfolio selection (PPS) problem, where a recommender system becomes crucial to guide the solution search process. To our knowledge, most recommender systems that use argumentation theory have not been proposed for multi-criteria optimization problems. Moreover, most current recommender systems focused on PPS problems do not attempt to justify their recommendations. This work characterizes the cognitive tasks involved in the decision-aiding process in order to propose a framework for the Decision Aid Interactive Recommender System (DAIRS). The proposed system focuses on a user-system interaction that guides the search towards the best solution given a decision-maker’s preferences. The developed framework uses argumentation theory, supported by argumentation schemes, dialogue games, proof standards, and two state transition diagrams (STDs), to generate its recommendations and explain them to the user. This work presents a DAIRS prototype and evaluates the user experience on multiple real-life case simulations through a usability measurement. The prototype and both STDs received satisfactory scores and were accepted overall by most of the test users.

1. Introduction

The decision-making process consists of selecting the best solution from a set of possible alternatives, which often involves difficult and complicated decisions [1]. Finding efficient strategies or techniques to aid this process is challenging because of the complexity of the problems involved.

In decision-making processes, such as solving optimization problems, the decision-maker (DM) is the person or group whose preferences are decisive for choosing an adequate solution to problems with multiple (sometimes conflicting) objectives and multiple efficient solutions [2]. The DM makes the final decision, choosing the solution that seems most appropriate according to the previously established preferences.

There is growing interest in techniques that incorporate the DM’s preferences into a methodology, heuristic, or metaheuristic for solving an optimization problem [3]. Among the available preference incorporation techniques, a weight vector that defines the importance of each objective is one of the most commonly used and accepted approaches.
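To make the weight-vector idea concrete, the following Python sketch aggregates the objective values of each candidate solution into a single weighted score and picks the best candidate. It is a minimal illustration of weighted-sum preference incorporation, assuming maximization and objectives already expressed on comparable scales; the function names and the candidate data are hypothetical.

```python
from typing import Dict, Sequence

def weighted_sum(objectives: Sequence[float], weights: Sequence[float]) -> float:
    """Aggregate maximization objectives into a single score with a weight vector."""
    if len(objectives) != len(weights):
        raise ValueError("exactly one weight per objective is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    # Normalizing makes only the relative importance of the weights matter.
    return sum(w / total * f for w, f in zip(weights, objectives))

def best_by_weights(candidates: Dict[str, Sequence[float]],
                    weights: Sequence[float]) -> str:
    """Return the name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: weighted_sum(candidates[name], weights))

# Hypothetical solutions evaluated on three objectives (same scale assumed).
candidates = {"s1": [80.0, 40.0, 10.0], "s2": [50.0, 60.0, 30.0]}
print(best_by_weights(candidates, [0.5, 0.3, 0.2]))  # s1 (54 vs. 49)
print(best_by_weights(candidates, [0.1, 0.6, 0.3]))  # s2 (35 vs. 50)
```

Changing only the weights changes the recommended solution, which is why the weight vector acts as a compact encoding of the DM’s preferences.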

The project portfolio selection (PPS) problem is a challenging optimization problem that presents several conditions to consider. First, these problems are usually multi-objective, seeking the best possible outcome for each objective. However, these objectives usually conflict with one another under the constraints that the problem imposes. Second, the number of constraints in a PPS problem can make the decision-making process difficult, since many solutions within the search space may be infeasible.

Usually, PPS problems define a limited amount of resources to be distributed to improve each objective, subject to minimum and maximum thresholds on those resources for each of the elements defined in the constraints, which limits the gain in each objective. Under these circumstances, it is most likely that no single optimal solution can be determined; instead, there is a set of optimal solutions representing different balances among the objectives of the problem, which can be identified using different strategies [4]. Therefore, it is crucial to select the solution that best reflects the DM’s preferences. Multi-criteria decision analysis (MCDA) methods are among the most widely used tools for solving PPS problems because they can handle complex problems with multiple (usually conflicting) objectives [5].
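The "set of optimal solutions" mentioned above is usually understood as the set of Pareto non-dominated solutions. The following Python sketch shows the standard dominance test under maximization and a simple quadratic filter that keeps the non-dominated objective vectors; the solution vectors are hypothetical.

```python
from typing import List, Sequence, Tuple

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b when every objective is maximized:
    a is no worse on all objectives and strictly better on at least one."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))

def non_dominated(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep the points that no other point dominates (simple O(n^2) filter)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical objective vectors (objective 1, objective 2) of five solutions.
solutions = [(3, 1), (2, 2), (1, 3), (1, 1), (2, 1)]
print(non_dominated(solutions))  # [(3, 1), (2, 2), (1, 3)]
```

None of the three surviving solutions is better than the others on both objectives, so choosing among them requires information about the DM’s preferences.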

A practical tool for solving PPS problems is the decision support system (DSS), which allows the DM to analyze a PPS problem under the current set of preferences and facilitates the decision-making process. However, choosing the best solution is complex because of the problem’s subjective nature and the DM’s preferences, which may be specific to a person or group and may change during the solution process. An interactive DSS shows the DM the best solutions under the current preferences, receives new information from the DM, and updates its search to adapt to changes. As the name implies, such a system establishes a user-system interaction during the solution process.

This paper proposes the Decision Aid Interactive Recommender System (DAIRS), a multi-criteria DSS (MCDSS) framework that integrates cognitive tasks into the user-system interaction. DAIRS performs several tasks aimed at aiding the DM during the decision-making process, such as evaluating alternatives, interacting with the DM, and recommending a solution while presenting arguments that justify this selection. The most relevant and novel feature of DAIRS is that it not only obtains information from the DM and adapts to it to present an appropriate recommendation, but can also present new information to the DM or defend its current recommendation. In other words, DAIRS establishes a dialogue game with the user instead of merely receiving information.

This paper addresses the characterization of cognitive tasks involved in the decision support process and their integration into recommender systems to develop more robust DSSs. These systems should allow a precise analysis of possible solutions and provide solutions that optimize the results while satisfying the preferences established by a DM. The MCDSS framework of DAIRS includes different MCDA methods supported by argumentation theory in the form of argumentation schemes and proof standards.

This proposal presents a recommender system capable of bidirectional interaction: both the user and the system provide and obtain new information based on the knowledge gained during a dialogue. For this purpose, this work uses concepts from argumentation theory, which allow both participants (user and system) to establish a well-structured dialogue.

DAIRS uses bidirectional interaction under the assumption that the user can satisfactorily carry out a decision-making process even without extensive knowledge of the problem. Through the dialogue game, DAIRS provides information to the DM, seeking to enhance and accelerate learning about the problem and thus aid in selecting a suitable solution.

This work has three main objectives. First, to develop a recommender system, called DAIRS, that suggests a solution to a multi-objective optimization problem (MOP), specifically a PPS problem, through deep interaction between the DM and the system. Second, to simplify the DM’s interaction with the proposed recommender system. Third, to achieve a high level of DM satisfaction. For this last objective, this proposal validates the developed recommender system by evaluating the effects of argumentation theory and bidirectional dialogue on several usability-related properties of an MCDSS.

The main contributions of this work, proposed to meet the above objectives, can be summarized in three elements, whose originality is shown in Section 2:

- The development of an interactive MCDSS framework prototype, called DAIRS, supported by argumentation theory, to study the effects of the proposed state transition diagrams (STDs), which regulate the flow of interaction with real users solving a real-life optimization problem. In this paper, the focus is on PPS problems.

- The incorporation of argumentation schemes (reasoning patterns) and proof standards (MCDA methods to compare solutions) in an interactive MCDSS to evaluate and analyze their effects on the decision-making process.

- The proposal, design, and implementation of two STDs, which determine the evolution of a dialogue game established between the DM and DAIRS.

The remaining part of this paper is structured as follows: Section 2 shows a brief review of works related to the proposal in this paper. Section 3 presents the necessary concepts on recommender systems employed in this work. Section 4 describes the proposed methodology and the developed prototype. Section 5 presents the experimental design and the results and analysis regarding the proposed prototype’s performance when used to solve a test case study, which simulates a real-life scenario of a PPS problem. Finally, Section 6 addresses conclusions regarding the usability and effectiveness of the proposal and possible future work.

2. Related Work

This section reviews some of the most relevant works related to three topics in particular: (i) DSS frameworks used to solve PPS problems, (ii) interactive systems used for optimization problems, and (iii) proposals that use the characterization of cognitive tools to improve the interaction between the user and the recommender system.

2.1. DSS Frameworks Used to Solve PPS Problems

Multiple DSSs have been proposed for solving PPS problems. These works use different strategies to incorporate the preferences established by the DM and to select the most appropriate solution based on the current preference set or a preference weight vector.

The proposal presented in this paper focuses on a system that performs project portfolio selection reflecting the DM’s preferences as closely as possible and that interacts with the user through dialogue. To the best of the authors’ knowledge, this bidirectional interaction has not been considered for solving PPS problems. Nevertheless, it is important to understand some of the most relevant approaches to this problem.

Chu et al. [6] present one of the first DSSs proposed to solve PPS problems. Their DSS is based on a cost/benefit model for research and development (R&D) project management. Their work considers monetary and time costs, as well as each project’s probability of success, to determine the optimal sequence of R&D projects to execute. The impact of each element on the selection of a solution is controlled by a pair of weights that let the user define how important it is to save money or time. More recently, Hummel et al. proposed a DSS framework based on the Measuring Attractiveness by a Categorical Based Evaluation Technique (MACBETH) approach [7] to solve R&D project portfolio management problems interactively [8].

Archer and Ghasemzadeh [9] propose a framework for designing decision support systems to solve PPS problems. Their work simplifies the PPS process into three main phases: strategic consideration, individual project evaluation, and portfolio selection. In each phase, users are free to select the techniques they find most suitable. This framework is used to develop a DSS named Project Analysis and Selection System (PASS) [10], which performs tasks such as data entry, pre-screening, project evaluation, screening, and optimization without the involvement of the DM. PASS is used to successfully solve a single-objective PPS problem.

DSS frameworks have proven to be suitable alternatives for solving multi-objective PPS problems. Hu et al. [11] propose a multi-criteria DSS (MCDSS) framework that solves PPS problems by implementing the Lean and Six Sigma concepts [12] and considering the cost and benefit of each project. Their framework uses flexible weight vectors that can be modified during the solution process, and its output is a Pareto optimal portfolio set from which the DM selects the portfolio best suited to their preferences.

Khalili-Damghani’s work [13] shows how flexible frameworks for PPS problems can be. In this case, an evolutionary algorithm (EA) is combined with a data envelopment analysis (DEA) model to create the structure of a fuzzy rule-based (FRB) system that measures the suitability of all available candidate project portfolios.

Mira et al. [14] evaluate the performance of a DSS framework by solving a real-life simulation of a PPS problem and comparing the cumulative controlled risk value obtained by the DSS with that obtained by a manual portfolio selection method. The results show a 10% improvement of the DSS framework over manual selection.

Mohammed [15] proposes several strategies for finding appropriate solutions to PPS problems in fuzzy environments. For this purpose, his work relies on the Analytic Hierarchy Process (AHP) [16] and TOPSIS [17], both adapted to fuzzy settings, which use a set of relative criteria weight vectors. In this case, the strategies incorporate preferences into the decision-making process before the system generates a recommendation.

Recently, DSS frameworks have proven to be an adequate alternative for solving PPS problems focused on sustainability. Dobrovolskiene and Tamosiuniene [18] propose integrating the analysis of each project’s sustainability index into a Markowitz risk-return scheme [19]. This integration aims to find better portfolios through a risk-return assessment that also accounts for the DM’s responsibility towards the surrounding environment, with a long-term focus on the well-being of society.

Debnath et al. [20] propose a DSS framework supported by a hybrid multi-criteria decision support method. This hybrid system combines strategies such as sensitivity analysis with grey-based Decision-Making Trial and Evaluation Laboratory [21] and Multi-Attributive Border Approximation area Comparison [22] to solve PPS problems focused on the development, quality, and distribution of genetically modified agricultural products considering sustainability under social, beneficial, and differential criteria.

Verdecho et al. [23] present another proposal focused on sustainability. In this case, AHP is used to solve a PPS problem related to supply chains, whose objectives focus on financial, environmental, and social sustainability. Their framework also seeks to optimize supply-related processes and customer satisfaction.

2.2. Interactive Systems for Optimization Problems

A common scenario when DSS frameworks are used to solve an optimization problem, such as the PPS problem, is that they present a solution based on the preferences defined by a DM but do not consider the next step in the decision-making process. This step consists of determining whether the DM accepts (or rejects) the recommended solution and updating the DM’s preferences. The DM’s preferences may change during the solution process, since the DM may be a single agent or a group whose preferences are susceptible to social, political, or economic changes surrounding the problem. Therefore, it is desirable that the framework be able to adapt to new preferences.

In this situation, an interactive process between the DM and the recommender system is advisable for decision-making support. The proposal of this work focuses on bidirectional interaction, a feature absent from both the PPS approaches and the optimization approaches mentioned in this subsection.

Miettinen et al. [24] present a study on solving multi-objective optimization problems (MOPs) with an interactive system in which the system and the user exchange information. According to their study, these systems have three main stopping criteria: the DM accepts the solution, the DM stops the process manually, or an algorithmic stopping criterion is reached. Also, according to their work and [25], the interactive process can be divided into two phases. First, in the learning phase, the DM gains knowledge about the problem. Second, in the decision phase, the system identifies the most suitable solution according to the current information, and the DM must accept or reject it.

The Flexible and Interactive Tradeoff method [26] is an approach implemented in a DSS to solve MOPs. It assumes that it is easier for the DM to compare alternatives based on strict preferences between criteria rather than on indifference. This approach requires the DM to establish a lexicographic preference order over all criteria.

The InDM2 algorithm [27] is a recent work that enables interaction between the DM and the recommender system. The DM initially establishes a reference point that reflects his/her preferences. During the solution process, InDM2 shows the user the best candidate solutions it has found that match the current preferences. The DM can accept the solutions obtained, wait for the system to provide new solutions, or stop the process at any time. InDM2 also allows the DM to update the current reference point or propose a new one, allowing the system to obtain new information based on the DM’s new preferences.

Azabi et al. [28] propose an interactive optimization framework supported by a low-fidelity flow solver and an interactive Multi-objective Particle Swarm Optimization (MOPSO) for the aerodynamic shape design of aerial vehicle platforms. As in InDM2, the DM can incorporate preferences before and during the solution process. This interaction allows their framework to define and update a region of interest, accelerating the process. The results of their experiments show that the interactive MOPSO outperforms a non-interactive MOPSO, indicating that constant user-system interaction can provide better results.

There are also interactive framework proposals focused on solving PPS problems. Strummer et al. [29] propose an interactive framework that identifies Pareto optimal solutions to determine a set of optimal portfolios to present to the DM. The system first solves a PPS problem and presents the best solutions under the current preferences and constraints defined by the DM. The DM can then interact with the system to express a preference for a particular criterion or to set new constraints.

A study by Nowak et al. [30] states that several frameworks in the literature assume that the DM has high-level knowledge of the problem and of the methods used to solve it, and has a well-defined set of preferences. These assumptions do not always hold. Therefore, a recommender system has to be as user-friendly as possible and account for the possibility that the user has little knowledge of the problem prior to using the system. Their study also notes a lack of consideration of dynamic elements, such as changes in preferences or in the problem environment. The authors propose a general structure for developing frameworks to solve PPS problems. This structure considers the criteria to be evaluated, the data needed to evaluate the projects, an analysis and evaluation of the projects, and the construction of project portfolios. The proposed structure can also obtain new information from the DM in each iteration, allowing the DSS to adapt to every change and focus its search on the new preferences.

Interactive systems can use graphical visualization to support the decision-making process of optimization problems. Haara et al. [31] evaluate several interactive data visualization techniques to support the solution of multi-objective forest planning problems. The DM uses visual elements to ease the process of correctly identifying and defining his/her preferences. The authors mention that these interactive systems can be used for management problems. Since PPS problems are management problems, it is reasonable to expect that these proposals could also work for PPS problems.

The Your Own Decision Aid (YODA) framework is an interactive recommender system proposed by Kurttila et al. [32] to solve PPS problems. YODA focuses on DMs composed of multiple people, where each user defines his/her preferences and acceptable candidate projects. All available projects are separated into subsets based on the level of group acceptance of each project. Projects with the highest level of acceptance have priority when the system defines a candidate project portfolio. Each user can update their preferences or define a project acceptance threshold, so that rejected projects close enough to their acceptance standards are considered acceptable alternatives.

2.3. Characterization of Cognitive Tools to Improve User-System Interaction

The interactive process between the user and the DSS should not be limited to a series of commands simulating a master-slave structure. The interaction requires characterizing cognitive tasks so that the system becomes an entity that not only receives information but also provides the user with new knowledge about the problem.

The problem of characterizing cognitive tasks in the decision support process has been addressed with different approaches. This section presents some representative works that provide explanations accompanying the recommended solution in each interaction. Some of the works described below use argumentation to model human argumentation and dialogue processes; their limitations are noted as well.

Proposals using artificial intelligence provide a recommendation by learning the user’s preferences for particular products. They do not seek to recommend a solution to an optimization problem; instead, they use artificial intelligence methods to optimize the recommender systems themselves (e.g., to identify similar users). Other related works use queries to obtain information from the DM about the currently presented solution, but they do not explain the result [33].

Labreuche [34] describes how to use argumentation theory to perform a pairwise comparison between alternatives using an MCDA method based on a weight vector. The work establishes four situations involving two candidate solutions x and y and a weight vector w over six criteria that represents the DM’s preferences. In these situations, pairwise evaluations based on criteria weights are used to present an argument in favor of or against the statement “x is preferred over y”.
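The underlying idea can be illustrated with a weighted-sum model, where each criterion’s weighted difference w_i(x_i − y_i) acts as an argument for or against “x is preferred over y”. The Python sketch below shows only this idea; it is not Labreuche’s exact formulation and does not reproduce the four situations. It assumes that higher scores are better on every criterion, and all names and data are hypothetical.

```python
from typing import List, Sequence, Tuple

Argument = Tuple[str, float]  # (criterion name, weighted contribution)

def compare_with_arguments(x: Sequence[float], y: Sequence[float],
                           w: Sequence[float], names: Sequence[str]
                           ) -> Tuple[bool, List[Argument], List[Argument]]:
    """Decide "x is preferred over y" with a weighted sum and list the criteria
    that argue for the claim (positive contribution) and against it (negative)."""
    contrib = [(n, wi * (xi - yi)) for n, wi, xi, yi in zip(names, w, x, y)]
    preferred = sum(v for _, v in contrib) > 0
    # Strongest arguments first in each list; zero contributions are ignored.
    pros = sorted((a for a in contrib if a[1] > 0), key=lambda a: -a[1])
    cons = sorted((a for a in contrib if a[1] < 0), key=lambda a: a[1])
    return preferred, pros, cons

# Hypothetical weights and scores on six criteria (higher is better).
names = ["c1", "c2", "c3", "c4", "c5", "c6"]
w = [0.3, 0.2, 0.2, 0.1, 0.1, 0.1]
x = [8, 6, 5, 4, 7, 3]
y = [6, 7, 5, 6, 4, 3]
preferred, pros, cons = compare_with_arguments(x, y, w, names)
print("x preferred over y:", preferred)
print("for:", [n for n, _ in pros], "against:", [n for n, _ in cons])
```

Here x wins overall (net contribution of about 0.5), criteria c1 and c5 support the claim, and c2 and c4 oppose it; a dialogue could present the former as justification and acknowledge the latter as counterarguments.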

Ouerdane [35] extends Labreuche’s work, presenting an approach that provides the underlying reasons supporting an alternative selected by a recommender system. For justification, argumentation theory and decision support are combined with an established language that enables communication. The work also proposes a hierarchical structure of argument schemes that decomposes the decision process into steps whose underlying premises are made explicit, making it possible to identify when information should be incorporated into the dialogue with the DM. This structure has only been tested on a low-dimensional choice problem (CHP), where the decision options are known from the beginning. The proposal analyzed the elements required to carry out a dialogue game with the user, not only to defend a recommendation established by the system but also to obtain new preferences and statements from the DM. The new information could change dialogue-related elements, leading the system to provide a new recommendation if necessary.

The work presented by Cruz-Reyes et al. [36] proposes a framework design for generating DSSs for the PPS problem by characterizing arguments and dialogue using argumentation theory and rough set theory. The framework has a justification module for the recommended solution shown to the user; the justification is supported by argument schemes and decision rules generated with rough sets. The process starts by obtaining the available preferential information from the DM and selecting the appropriate multi-criteria method to evaluate the available portfolios. It then generates a recommendation and its justification interactively. This work focuses mainly on the decision rules generated through rough sets; argumentation theory is a complementary element of the architectural design and is presented only conceptually.

Sassoon et al. [37] apply argumentation theory through argumentation schemes within a chatbot that establishes a dialogue between the user and a DSS for medical consultation. It considers the user’s symptoms, medical history, and a list of available treatments to recommend the most appropriate medication, and it can attempt to justify its response through arguments. However, this system only considers currently feasible information and does not use the user’s preferences.

The studies conducted by Morveli-Espinoza et al. [38,39,40] focus on the solution of goal selection problems. Their research uses artificial intelligence and argumentation semantics to select goals that are not in conflict and produce the best results considering a set of premises added before carrying out the solution process. The interface developed in their study is able to answer the “Why?” and “Why not?” questions for each goal, generating arguments based on the semantics used.

Recommender systems for e-commerce often rely on artificial intelligence (AI). Advanced AI methods make the user-system interaction easier and allow the system to recommend higher-quality e-services and online products that more closely match the DM’s preferences [33].

Recently, interactive DSS frameworks that accept arguments from the DM have been proposed to solve PPS problems. Vayanos et al. [41] present a framework that focuses on obtaining the DM’s preferences before and during the solution of the problem. The system builds a set of preferences from a moderate number of queries presented to the DM, each of which is a pairwise comparison between two solutions. The system is based on the concept of weak preference: even if the user shows a preference for a certain criterion, it is not treated as an absolute factor for determining dominance between solutions but is instead used as support by the framework when performing project portfolio selection.

This query-based interactive approach is extended in another study [42], which considers two-stage and multi-stage robust optimization problems, including R&D PPS problems. The DSS framework interacts with the user before making a recommendation through a series of queries in which the DM must assign a value reflecting how attractive a particular item is. The system uses these values to elicit preferences under one of two models: maximizing the worst-case utility or minimizing the worst-case regret of the recommended item.
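The two decision criteria can be illustrated on a small example. Vayanos et al. work with uncertainty sets and robust optimization formulations; the Python sketch below only assumes a hypothetical finite set of weight vectors still consistent with the DM’s answers and a linear utility, and shows how max-min utility and min-max regret can recommend different items.

```python
from typing import Dict, List, Sequence

def utility(item: Sequence[float], w: Sequence[float]) -> float:
    """Linear utility of an item's attribute vector under weight vector w."""
    return sum(wi * xi for wi, xi in zip(w, item))

def max_min_utility(items: Dict[str, Sequence[float]],
                    ws: List[Sequence[float]]) -> str:
    """Item whose worst-case utility over the plausible weights is largest."""
    return max(items, key=lambda k: min(utility(items[k], w) for w in ws))

def min_max_regret(items: Dict[str, Sequence[float]],
                   ws: List[Sequence[float]]) -> str:
    """Item whose worst-case regret (utility lost against the best item
    for the same weights) is smallest."""
    def worst_regret(k: str) -> float:
        return max(max(utility(v, w) for v in items.values()) - utility(items[k], w)
                   for w in ws)
    return min(items, key=worst_regret)

# Hypothetical: two items and two weight vectors consistent with the answers.
items = {"A": [5.0, 5.0], "B": [9.0, 4.0]}
ws = [[1.0, 0.0], [0.0, 1.0]]
print(max_min_utility(items, ws))  # A: worst-case utility 5 vs. 4 for B
print(min_max_regret(items, ws))   # B: worst-case regret 1 vs. 4 for A
```

The max-min criterion protects the absolute outcome, while min-max regret limits how far the recommendation can fall behind the best choice for whichever weights turn out to be correct.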

Another interactive DSS framework was recently proposed by Nowak and Trzaskalik [43]. Their work presents an MCDSS that interacts with the DM in each iteration and allows the user to redefine his/her preferences and constraints to solve dynamic PPS problems. Their DSS considers two possible sources of change in the problem environment: a time-dependent variable and a change in the DM’s preferences and constraints.

These last three proposals allow the user to provide new preferences through queries. The proposal in this paper aims to provide an interactive recommender system that receives new preferential information from the DM and adapts its recommendation accordingly. The proposed system can also provide the DM with new information based on the knowledge obtained. In addition, the system can argue for and defend its proposed project portfolio selection, so that the DM understands, through dialogue, why the recommendation presented by the system is the most appropriate given the current information.

Allowing the proposed DSS framework to defend its recommendation through arguments is intended to let the user learn in detail the characteristics and properties of the problem being solved, and to let the DM see thoroughly the reasons behind the portfolio selected by the DSS. This paper focuses on using argumentation theory not only to support the solution process of a PPS problem but also to allow the system to defend the recommended solution. Additionally, this paper incorporates two newly proposed STDs, as well as argumentation schemes and a proof standard (TOPSIS [17]) different from those proposed by Ouerdane [35].
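As a reference for how TOPSIS itself ranks alternatives, the following Python sketch implements the classical steps: vector normalization, weighting, ideal and anti-ideal points, and relative closeness. How DAIRS turns these scores into arguments is not shown here, and the portfolios, weights, and criteria are hypothetical.

```python
import math
from typing import List, Sequence

def topsis(matrix: List[Sequence[float]], weights: Sequence[float],
           benefit: Sequence[bool]) -> List[float]:
    """Closeness coefficient in [0, 1] for each alternative (row); higher is better.
    benefit[j] is True when criterion j is maximized, False when minimized."""
    n = len(weights)
    # 1) Vector normalization of each criterion column.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    # 2) Weighted normalized matrix.
    v = [[weights[j] * row[j] / norms[j] if norms[j] else 0.0 for j in range(n)]
         for row in matrix]
    # 3) Ideal and anti-ideal points, respecting each criterion's direction.
    cols = list(zip(*v))
    ideal = [max(col) if benefit[j] else min(col) for j, col in enumerate(cols)]
    anti = [min(col) if benefit[j] else max(col) for j, col in enumerate(cols)]
    # 4) Relative closeness: distance to anti-ideal over total distance.
    scores = []
    for row in v:
        d_pos, d_neg = math.dist(row, ideal), math.dist(row, anti)
        scores.append(d_neg / (d_pos + d_neg) if d_pos + d_neg > 0 else 0.0)
    return scores

# Hypothetical portfolios scored on profit (maximize) and risk (minimize).
portfolios = [[10.0, 1.0], [5.0, 5.0], [7.0, 3.0]]
scores = topsis(portfolios, weights=[0.6, 0.4], benefit=[True, False])
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
print(scores, ranking)
```

The first portfolio coincides with the ideal point and scores 1.0, the second coincides with the anti-ideal point and scores 0.0, and the third falls in between, giving the ranking [0, 2, 1].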

3. Background

This section reviews the concepts most relevant to the proposed work, which are necessary to understand how it operates. These concepts concern the decision-making problem and several of its most relevant approaches, recommender systems, and argumentation theory.

3.1. Multi-Objective Optimization Problem

As mentioned in Section 1, many decision problems involve multiple objectives that must be satisfied and that usually conflict with each other. Equation (1) presents the definition of a multi-objective optimization problem (MOP). This particular example is a maximization MOP, which seeks the decision variable vector that yields the highest possible values for the M objectives in the function set F. It is also possible, however, to define minimization MOPs or to combine maximization and minimization for different subsets of objectives.
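In standard notation, a maximization MOP with the inequality constraints g and equality constraints h described below can be written as follows. The decision space Ω and the constraint counts J and K are notation assumed here for illustration.

```latex
\begin{aligned}
\max_{\mathbf{x} \in \Omega} \quad & F(\mathbf{x}) = \big(f_1(\mathbf{x}), f_2(\mathbf{x}), \dots, f_M(\mathbf{x})\big) \\
\text{subject to} \quad & g_j(\mathbf{x}) \le 0, \quad j = 1, \dots, J, \\
& h_k(\mathbf{x}) = 0, \quad k = 1, \dots, K.
\end{aligned}
```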

Each MOP has a set of inequality (g) and equality (h) constraints that define the feasibility of solutions. In this scenario, there are cases in which no single solution can be defined as optimal over all other candidates. At this point, it falls to the decision-maker to select the most appropriate solution (or set of solutions) based on his/her preferences.

3.1.1. The Decision Making Problem

In real-life situations, the DM may be a person or group seeking to improve their profits. However, the DM might not have enough resources to support all available alternatives simultaneously. This leads to what can be defined as a decision-making problem: it is necessary to search for actions that meet the current goals as well as possible with the available resources, maximizing profit.

Decision-making problems present four basic elements [44]: a set of one or more objectives to achieve; a set of candidate solutions for achieving the objectives within that set; a set of factors that define the environment surrounding the problem; and a set of utility values associated with each solution as it interacts with the current environment.

In these cases, DMs may use multi-criteria decision support systems (MCDSSs) to support their decisions. MCDSSs use computational techniques to analyze highly complex decision problems in a reasonable computational time [45]. Multi-criteria decision analysis (MCDA) is a collection of concepts, methods, and techniques that seek to help individuals or groups make decisions involving conflicting points of view and multiple stakeholders [46]. MCDA methods are key components of MCDSSs. Five elements are involved in these methods: the goal, the decision-maker, alternatives or actions, preferences, and a solution set based on those preferences.

3.1.2. Project Portfolio Selection Problem

An example of a decision-making problem can be seen in the project portfolio selection (PPS) problem. A project is defined as a temporary, unique, and unrepeatable process that pursues a specific set of objectives [47]. A project portfolio is a set of projects selected for future implementation.

In this case, a person or organization has a set of projects to carry out. These projects share the currently available resources, and several of them may complement each other because they have an effect on the same area. Therefore, it is necessary to determine which project portfolio best meets the organization’s demands while maximizing its profit.

Equations (2)–(4) present a formal definition of the PPS problem. Let N be the number of available projects and M the number of objectives. A project portfolio is a binary vector x = (x_1, …, x_N) of size N: selected projects are given a value of 1, while non-selected projects are given a value of 0. The value of a project portfolio for an objective i is the sum of the profits that its selected projects contribute to that objective. The profit matrix p contains the profit $p_{ij}$ obtained by the jth project for the ith objective.

Two main constraints restrict the PPS problem. The first is the budget constraint, presented in Equation (3). The cost vector c defines how much each project costs, while B defines the maximum budget currently available. The sum of the costs of all selected projects must be equal to or lower than B.

The second constraint refers to the areas involved in the problem. A set of A areas is considered, together with a binary project-area matrix a that defines which projects are assigned to each area. Each area k has a lower investment threshold $L_k$ and an upper investment threshold $U_k$. For a portfolio to be feasible, the sum of the costs of its selected projects in each area must lie between those two thresholds, as stated in Equation (4).

$$\max_{x \in \{0,1\}^N} \; z(x) = \big(z_1(x), \ldots, z_M(x)\big), \qquad z_i(x) = \sum_{j=1}^{N} p_{ij}\, x_j \tag{2}$$

subject to

$$\sum_{j=1}^{N} c_j\, x_j \le B \tag{3}$$

$$L_k \le \sum_{j=1}^{N} a_{kj}\, c_j\, x_j \le U_k, \qquad k = 1, \ldots, A \tag{4}$$
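As an illustration of the model above, the following Python sketch computes a portfolio’s value on each objective and checks the budget and per-area constraints. The function names, the toy instance, and the representation of the area thresholds as two lists (`lower`, `upper`) are hypothetical choices for this example, and it assumes all objectives are profits to be maximized.

```python
from typing import List, Sequence


def objective_values(x: Sequence[int], p: Sequence[Sequence[float]]) -> List[float]:
    """Value of portfolio x on each objective i: sum over j of p[i][j] * x[j]."""
    return [sum(p_ij * x_j for p_ij, x_j in zip(row, x)) for row in p]


def is_feasible(
    x: Sequence[int],
    c: Sequence[float],
    B: float,
    a: Sequence[Sequence[int]],
    lower: Sequence[float],
    upper: Sequence[float],
) -> bool:
    """Check the budget constraint (3) and the per-area constraints (4)."""
    # Budget: the total cost of the selected projects must not exceed B.
    if sum(c_j * x_j for c_j, x_j in zip(c, x)) > B:
        return False
    # Areas: the cost of the selected projects assigned to area k
    # must lie between that area's lower and upper thresholds.
    for k, row in enumerate(a):
        area_cost = sum(a_kj * c_j * x_j for a_kj, c_j, x_j in zip(row, c, x))
        if not lower[k] <= area_cost <= upper[k]:
            return False
    return True


# Toy instance (invented numbers): 4 projects, 2 objectives, 2 areas.
p = [[3, 1, 4, 2], [1, 5, 2, 2]]
c = [10, 20, 15, 5]
a = [[1, 1, 0, 0], [0, 0, 1, 1]]
print(objective_values([1, 0, 1, 1], p))                      # [9, 5]
print(is_feasible([1, 0, 1, 1], c, 40, a, [5, 5], [25, 20]))  # True
```

Portfolio [1, 1, 1, 0] would be rejected by the budget check (cost 45 > 40), and [1, 1, 0, 1] by the first area’s upper threshold (cost 30 > 25).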

3.2. Recommender System

By solving a PPS problem using a method such as a genetic or an exact algorithm, it is possible to generate a set of good-quality candidate solutions. However, a prevalent issue at this step is that the DM is presented with more potential solutions than can be analyzed using human capability alone. It is also necessary to consider that the DM’s preferences might change while the problem is being solved, making the decision-making process even more difficult.

A recommender system is a potential alternative for this situation. This system relies on the DM’s preferences and a set of various heuristics to direct its search and define which solutions from the set may be more attractive to the DM [48]. Specifically, in the PPS problem, a set of solutions, global and area budget constraints, and DM preferences can be used to determine the most appropriate project portfolios.

However, the DM may not be entirely convinced and may need to know the reasons behind the recommender system’s decision. Other situations the system might face when presenting a solution to the DM are related to the human factor. For example, the DM may not know how to express his preferences correctly, may not fully know the details of the problem, and may even reject the system’s recommendation outright without waiting for a justification. For these reasons, it is desirable to quickly establish a relationship with the DM. Argumentation theory offers a way to build this relationship.

3.3. Argumentation Theory in Decision Making

Argumentation theory is a field within artificial intelligence. Argumentation can be defined as the process of constructing and evaluating arguments to justify conclusions, which allows decisions to be made in a justified manner. This theory is based on non-monotonic reasoning, meaning that the conclusions obtained may be modified or even rejected when new information is presented [35].

The most relevant elements to consider within the argumentation theory are cognitive artifacts, proof standards, and argumentation schemes.

3.3.1. Cognitive Artifact

Cognitive artifacts are human-made objects that seek to help or enhance cognition. Their use is not only focused on supporting memory but also on guiding reasoning towards classifications and comparisons among several alternatives [49]. The decision-aiding support offered by argumentation theory can be seen as a set of cognitive artifacts used sequentially. This sequence results from an interaction between an expert and a client. According to [50], this process uses four cognitive artifacts: a representation of the problem, a formulation of the problem, an evaluation model, and a final recommendation. This work addresses the last two artifacts.

3.3.2. Proof Standard

In argumentation theory, every statement must be analyzed to determine its truthfulness and its effect on a possible conclusion the DM desires to reach [35]. Proof standards are methods and techniques that aggregate a set of arguments for and against a certain conclusion. They analyze each argument’s strength and value to resolve the conflict between the arguments, accepting or rejecting the established conclusion.

A basic example of a proof standard is the simple majority. This standard evaluates a statement such as “project x is better than project y”. All M objectives are considered, and the values obtained by both projects on each objective are compared. If x is better than y on more objectives than y is better than x, the conclusion is accepted. This rule is formally defined in Equation (5), where $\delta_i(x, y)$ represents the dominance factor for objective i, equal to 1 if x is strictly better than y on objective i and 0 otherwise:

$$x \succ y \iff \sum_{i=1}^{M} \delta_i(x, y) > \sum_{i=1}^{M} \delta_i(y, x) \tag{5}$$
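The simple majority rule can be sketched in a few lines of Python. This is a minimal illustration, assuming every objective is maximized and the dominance factor is a 0/1 indicator of strict improvement; the function names are hypothetical.

```python
from typing import Sequence


def dominance(x: Sequence[float], y: Sequence[float], i: int) -> int:
    """Dominance factor for objective i: 1 if x is strictly better than y, else 0.

    Assumes every objective is maximized.
    """
    return 1 if x[i] > y[i] else 0


def simple_majority(x: Sequence[float], y: Sequence[float]) -> bool:
    """Accept "x is better than y" if x wins on more objectives than y does."""
    m = len(x)
    wins_x = sum(dominance(x, y, i) for i in range(m))
    wins_y = sum(dominance(y, x, i) for i in range(m))
    return wins_x > wins_y


x = (9, 5, 3)
y = (7, 6, 2)
print(simple_majority(x, y))  # True: x wins on 2 objectives, y on 1
print(simple_majority(y, x))  # False
```

Ties on every objective leave both statements rejected, so the standard does not force a conclusion when the evidence is balanced.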

3.3.3. Argumentation Scheme

Argumentation schemes can be defined as argumentative structures capable of detecting common and stereotypical patterns of human reasoning [51]. They are based on a set of inference rules in which the existence of certain premises can lead to a conclusion. Because the schemes are based on non-monotonic reasoning, new information can be introduced that alters the status of the conclusion.

An argumentation scheme is composed of three main elements:

- Premises: arguments for or against the conclusion. Each premise may be presumed true or false until proven otherwise, or may require further evidence before it is considered;

- Conclusion: statement to be confirmed or rejected based on the premises and a proof standard;

- Critical questions: questions related to the structure of the argumentation scheme that, if not answered adequately, can undermine the validity of an argument built on it.

Argumentation schemes are not necessarily complex. For example, the cause to effect scheme [52] is based on two premises: if event A occurs, event B occurs as a consequence; and A has occurred. Therefore, the conclusion states that B will occur. Critical questions focus on the strength of the relationship between A and B, on whether the evidence is strong enough to warrant the conclusion, and on whether other relevant factors could also cause B to occur.
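The three elements of a scheme map naturally onto a small data structure. The Python sketch below encodes the cause to effect scheme; the class name, its fields, and the rule that a critical question not yet raised does not block the conclusion are assumptions made for illustration, not part of the DAIRS implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ArgumentationScheme:
    name: str
    premises: Dict[str, bool]          # premise -> currently accepted?
    conclusion: str
    critical_questions: List[str]
    # Critical questions raised so far -> answered adequately?
    # Questions not yet raised do not block the conclusion (assumption).
    raised: Dict[str, bool] = field(default_factory=dict)

    def raise_question(self, question: str, answered: bool) -> None:
        if question not in self.critical_questions:
            raise ValueError(f"not a critical question of {self.name}: {question}")
        self.raised[question] = answered

    def conclusion_holds(self) -> bool:
        # Non-monotonic: new information (a failed critical question)
        # can withdraw a conclusion that held before.
        return all(self.premises.values()) and all(self.raised.values())


cause_to_effect = ArgumentationScheme(
    name="cause to effect",
    premises={"If A occurs, B occurs": True, "A has occurred": True},
    conclusion="B will occur",
    critical_questions=[
        "How strong is the causal link between A and B?",
        "Is the evidence strong enough to warrant the conclusion?",
        "Are there other factors that also cause B?",
    ],
)
print(cause_to_effect.conclusion_holds())  # True
cause_to_effect.raise_question("Are there other factors that also cause B?", answered=False)
print(cause_to_effect.conclusion_holds())  # False
```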

3.4. Dialogue Game

One possible way to represent argumentation theory within decision-making problems is through dialogue games. These games model the verbal or written interaction between two or more individuals, called players. The purpose of a dialogue game is for the players to exchange arguments for and against a statement to reach a satisfactory conclusion [53].

Multiple elements must be considered for the dialogue game, such as the players and their respective roles, objectives, limitations, etc. Like any game, a set of rules must be established that defines which actions are acceptable or not during the dialogue. Also, it is necessary to define a system to determine the movements that each participant is allowed to perform at the different stages of the dialogue game.

3.4.1. Dialogue Game Rules

The dialogue game rules establish how the game is played, defining criteria such as the starting and ending points of the game and the movements allowed for each player. These rules also define the criteria necessary for a coherent dialogue between the players: each player can provide statements, arguments, and premises that the other participants consider acceptable, avoiding fallacies and dialogue loops that would stall the dialogue at a certain point [53].

There are four different types of dialogue game rules.

- Locution rules: define the set of movements allowed for the entire dialogue game;

- Compromise rules: define the set of statements and arguments each player is committed to defend until they are proven right or wrong;

- Dialogue rules: define the set of available movements a player has during the current state of the dialogue;

- Termination rules: define the scenario or state that needs to be reached for the dialogue game to end.

3.4.2. State Transition Diagram

Based on the defined dialogue game rules, it is possible to identify which movements are allowed for each player and when he/she can use them. A state transition diagram (STD) can represent the evolution of the dialogue game graphically. An STD allows the players to visualize each state the dialogue can be in, which player currently holds the turn, and which movements are available to that player. An STD also represents the starting and ending points of the game. In this way, all four types of dialogue game rules are effectively represented.
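An STD can be encoded as a transition table, which makes the correspondence with the rule types concrete: the union of all moves plays the role of the locution rules, the moves listed per state are the dialogue rules, and reaching the terminal state is the termination rule. The diagram below is a hypothetical toy example for illustration only; it is not STD1 or STD2 from this work.

```python
from typing import Dict, List, Union

State = Union[int, str]

# Hypothetical toy diagram for illustration only (not STD1 or STD2).
# Each state maps the moves allowed there to the state they lead to.
TRANSITIONS: Dict[State, Dict[str, State]] = {
    1: {"recommend": 2},                                   # start state
    2: {"accept": "END", "reject": "END", "question": 3},
    3: {"justify": 1},                                     # back to 1: one cycle
}
TURN: Dict[State, str] = {1: "system", 2: "user", 3: "system"}


def allowed_moves(state: State) -> List[str]:
    """Dialogue rule: moves available in the current state."""
    return sorted(TRANSITIONS.get(state, {}))


def step(state: State, move: str) -> State:
    """Apply a move, rejecting anything the diagram does not allow."""
    if move not in TRANSITIONS.get(state, {}):
        raise ValueError(f"{TURN.get(state, '?')} cannot play {move!r} in state {state!r}")
    return TRANSITIONS[state][move]


def run(moves: List[str], start: State = 1) -> State:
    state = start
    for move in moves:
        state = step(state, move)
    return state


print(allowed_moves(2))  # ['accept', 'question', 'reject']
print(run(["recommend", "question", "justify", "recommend", "accept"]))  # END
```

Raising an error on a disallowed move is one way to enforce the rules; an interface could instead simply offer only the moves returned by `allowed_moves`.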

4. Proposed Work

This section describes the methodology and the different cognitive components defined for DAIRS. It then presents a prototype that implements this methodology and allows a user-system interaction through a dialogue game. This work focuses on two cognitive tasks: the evaluation model of the alternatives, based on proof standards, and the construction of arguments supporting the recommendations of the proposed recommender system, using argumentation schemes and a dialogue game.

4.1. DAIRS Methodology

The evaluation of alternatives is the process of assessing a set of alternatives based on their attributes, indicators, or dimensions [50]. In this case, the alternatives are the feasible project portfolios for the PPS problem. Each portfolio is evaluated considering its performance on each objective and the set of constraints. A criteria weight vector or a criteria hierarchy order is commonly used to evaluate alternatives. Therefore, DAIRS also considers these two elements when evaluating portfolios to create a recommendation.

Using the information about the properties of the problem provided by the DM, a proof standard that matches these properties is selected and used to evaluate all the feasible portfolios. Then, the recommender system defines an initial recommendation supported by the information provided by the DM and by an abductive inference argumentation scheme based on the results of the proof standard. Therefore, before the dialogue game begins, the system already has an initial portfolio recommendation to present to the user according to his/her preferences, together with arguments to defend it.

The recommender system presented in this work requires a set of crucial elements for its operation: a set of proof standards, argumentation schemes, and a dialogue structure that defines how the user and the system perform a bidirectional interaction using a dialogue game.

4.1.1. Proof Standards

To carry out a proper dialogue game between the user and the system, it is necessary to define methods for correctly collecting and analyzing the arguments for and against the current statement to reach a reasonable conclusion. Proof standards perform this collection and analysis.

The recommender system is capable of using a large number of proof standards. For this work, the set of proof standards was selected with the aim of solving the PPS problem and is based on Ouerdane’s work [35].

DAIRS considers proof standards that use a criteria preference hierarchy. These standards allow the user to define strict preferences between objectives. The recommender system focuses its search on the criteria defined as most relevant by the DM.

Simple majority: As explained in Section 3.3.2 and presented in Equation (5), this standard evaluates the truth of the statement “x is better than y” based on the number of objectives for which this statement holds.

Lexicographic order: This proof standard uses a hierarchical order established on the criteria. A project x is better than a project y if, and only if, x has a better value than y on the highest-priority criterion on which the two differ. The criteria hierarchy establishes that a higher-order criterion is infinitely more important than those in a lower position. Therefore, once a criterion decides the comparison, this method disregards the values of all lower-priority criteria.
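A minimal Python sketch of the lexicographic comparison follows, assuming the hierarchy is given as a list of criterion indices from most to least important and that all criteria are maximized; the function name is hypothetical.

```python
from typing import Sequence


def lexicographic_better(
    x: Sequence[float], y: Sequence[float], hierarchy: Sequence[int]
) -> bool:
    """True if x beats y on the highest-priority criterion where they differ.

    `hierarchy` lists criterion indices from most to least important;
    every criterion is assumed to be maximized.
    """
    for i in hierarchy:
        if x[i] != y[i]:
            # This criterion decides; lower-priority criteria are ignored.
            return x[i] > y[i]
    return False  # identical on every criterion: neither is better


x = (5, 1, 9)
y = (5, 3, 0)
print(lexicographic_better(x, y, [0, 1, 2]))  # False: tie on 0, y wins on 1
print(lexicographic_better(x, y, [2, 0, 1]))  # True: x wins on criterion 2
```

The example shows the “infinitely more important” property: with the order [0, 1, 2], x’s large advantage on criterion 2 cannot compensate for its loss on criterion 1.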

In some cases, even when the DM prefers specific criteria, this preference might not be strict: a worse value on a higher-priority criterion may be acceptable if the improvement on a lower-priority criterion is significant. Therefore, DAIRS also considers proof standards that analyze each project portfolio using a criteria weight vector, which determines each objective’s relevance. These standards allow the system to identify possible significant improvements in criteria with different levels of importance for the DM.

Weighted majority: This method follows a strategy similar to simple majority, but it relies on the weight of each criterion. In this case, a criteria weight vector w assigns a weight $w_i$ to each criterion i. Portfolio x is preferred over portfolio y if the sum of the weights of the criteria where x is better than y is greater than the sum of the weights of the criteria where y is better than x.

Weighted sum: This method defines a single fitness value for each portfolio based on w and the fitness value $f_i$ obtained on each objective i. Let M be the number of criteria for the current problem. Equation (7) presents a formal definition of this value:

$$S(x) = \sum_{i=1}^{M} w_i\, f_i(x) \tag{7}$$

Portfolio x is preferred over portfolio y if, and only if, $S(x) > S(y)$.

TOPSIS: This proof standard is based on the method proposed in [17], which considers both the distance to the ideal solution, also known as the utopia point, and the distance to the negative ideal solution, or nadir point. The solution that is closest to the former and farthest from the latter takes precedence.
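The three weight-based standards can be sketched together in Python. This is an illustration under stated assumptions: all criteria are maximized, and the TOPSIS function follows the classical formulation (vector normalization, ideal and anti-ideal points taken per criterion, ranking by relative closeness), which may differ in detail from the variant used in DAIRS. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def weighted_majority(x: Sequence[float], y: Sequence[float], w: Sequence[float]) -> bool:
    """x is preferred if the weights of the criteria it wins outweigh those y wins."""
    w_x = sum(wi for xi, yi, wi in zip(x, y, w) if xi > yi)
    w_y = sum(wi for xi, yi, wi in zip(x, y, w) if yi > xi)
    return w_x > w_y


def weighted_sum(f: Sequence[float], w: Sequence[float]) -> float:
    """Single fitness value: sum over criteria of w_i * f_i."""
    return sum(wi * fi for wi, fi in zip(w, f))


def topsis(matrix: Sequence[Sequence[float]], w: Sequence[float]) -> List[float]:
    """Relative closeness of each alternative (row) to the ideal solution.

    Assumes all criteria are maximized and uses vector normalization,
    as in the classical TOPSIS formulation.
    """
    n_crit = len(w)
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0 for j in range(n_crit)]
    v = [[w[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix]
    ideal = [max(col) for col in zip(*v)]   # best weighted value per criterion
    anti = [min(col) for col in zip(*v)]    # worst weighted value per criterion
    scores = []
    for row in v:
        d_plus = math.dist(row, ideal)
        d_minus = math.dist(row, anti)
        total = d_plus + d_minus
        scores.append(d_minus / total if total > 0 else 0.0)
    return scores


x, y = (9, 5, 3), (7, 6, 2)
print(weighted_majority(x, y, (0.4, 0.2, 0.4)))  # True
print(weighted_sum(x, (0.25, 0.5, 0.25)), weighted_sum(y, (0.25, 0.5, 0.25)))  # 5.5 5.25
scores = topsis([[9, 5], [7, 6], [1, 1]], [0.5, 0.5])
print(sorted(range(3), key=lambda k: -scores[k]))  # [0, 1, 2]
```

With weights (0.25, 0.5, 0.25), weighted majority finds no preference between x and y (0.5 against 0.5), while weighted sum still prefers x because it uses the magnitude of each value and not only which portfolio wins each criterion.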

The selection of the proper proof standard is essential to obtain a successful recommendation that reflects both the DM’s preferences and the quality of the solution itself, and it has a very relevant impact on the dialogue game. Each proof standard is characterized by a set of properties that distinguishes it from the other standards. During the dialogue game, both the user and the system can define, based on the information provided by both players, which properties should or should not be considered in the discussion to obtain better recommendations or enhance the quality of the dialogue game. The properties considered for the proof standard selection are:

- Ordinality: Only the ordinal information about the performance is relevant.

- Anonymity: There is no specific preference order for the criteria.

- Additivity with respect to coalitions: It is possible to formulate additive values regarding the importance of a criteria subset.

- Additivity with respect to values: The value of a solution is obtained by the sum of each criterion’s values.

- Veto: A solution must improve another over a certain veto threshold to be accepted.

- Distance to the worst solution: The best solution is determined not only by its closeness to the best possible solution but also by how far it is from the worst possible solution.

This set of properties is based on the recommendations provided by multiple works in the literature [35,52]. Table 1 shows the properties of each proof standard. Note that both the simple majority and weighted majority methods can be used with or without a veto threshold.

Table 1.

Proof Standards used for DAIRS and their properties.

4.1.2. Argumentation Schemes

In addition to determining the proof standards to be used, it is necessary to define which human behavior patterns to consider in a dialogue with the system. Identifying these patterns allows the system to regulate its responses and to establish boundaries in the dialogue that avoid situations such as infinite dialogue loops or loss of focus. For this reason, it is necessary to establish a set of argumentation schemes that allow these behavioral patterns to be identified.

This work seeks to incorporate the proof standards selected in the previous subsection into argumentation schemes to strengthen and facilitate premise analysis and to define a conclusion for the current statement in the dialogue. These schemes were chosen based on proposals from previous related works [35,52]:

Abductive reasoning argument: This argumentation scheme allows the system to select the most suitable proof standard according to the current properties identified from the information provided by the user and the system.

Argument from position to know: The system uses this argumentation scheme to make an initial recommendation after it chooses a proof standard. This scheme also provides recommendations for the first cycles of the dialogue game. At this point, the system does not yet consider itself an expert, as it has only obtained the initial information given by the list of available projects, the DM’s preferences, and the budget threshold.

Argument from an expert opinion: Once the dialogue game has surpassed a certain number of cycles, defined as the cycle threshold, the system considers that it has obtained enough information from the user to position itself as an expert on the problem analyzed. Under this scheme, the system is more assertive in its arguments, as it has more information to defend them instead of just expecting to obtain new data from the user.

Multi-criteria pairwise comparison: The system compares the current recommendation against other alternatives, as well as solutions picked by the user that might attract his/her interest. The proof standard currently being used supports this scheme to form arguments to either defend the recommendation or select the user-picked solution if the new information provided proves that the DM’s selection outperforms the system’s recommendation under the current proof standard.

Practical argument from analogy: Sometimes, two solutions might be highly similar. Therefore, it is necessary to consider whether solutions previously considered by either the system or the user can serve as recommendations at the current stage of the dialogue game.

Ad ignorantiam: The system cannot make inferences beyond its current state of knowledge. All the information known by the system is considered valid, while all unknown information is considered false. The user can provide the system with new information regarding the problem under discussion at any point during the dialogue game.

Cause to effect: A change in the state of a proof standard property or the value of a criterion affects the current state of the system’s recommendation. Whenever a change is detected, the system performs a reevaluation of the current solutions based on the new information. Then, it provides the user with a new recommendation, and the dialogue game continues.

From bias: The system considers this fallacy because the user might be biased towards a particular solution. While one of the recommender system’s objectives is to provide the most suitable solution, user satisfaction is also a very relevant factor that a system must consider. Therefore, the system allows the user to set the alternative he/she picks as the recommended solution. However, the system constantly reminds the DM that his/her choice might be biased and not the best available.

During the dialogue game, the system uses an argumentation scheme selected depending on the activities carried out in its current state by either the system or the user. Therefore, it is necessary to properly establish the dialogue game structure to use the correct argumentation scheme to characterize the arguments and premises used in the dialogue’s current state.
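One way to picture this selection is as a dispatch from the current dialogue situation to a scheme. The Python sketch below is hypothetical: the event labels and the precedence given to events over the cycle count are illustrative assumptions, while the switch from position to know to expert opinion after the cycle threshold follows the scheme descriptions above.

```python
from typing import Optional

# Hypothetical event labels mapping dialogue situations to the schemes
# described above; the labels are illustrative, not DAIRS identifiers.
EVENT_SCHEMES = {
    "criteria_or_property_changed": "cause to effect",
    "user_picked_portfolio": "multi-criteria pairwise comparison",
    "similar_portfolio_found": "practical argument from analogy",
    "user_insists_on_own_choice": "from bias",
    "unknown_information": "ad ignorantiam",
}


def select_scheme(cycle: int, cycle_threshold: int, event: Optional[str] = None) -> str:
    """Pick the argumentation scheme for the current dialogue state."""
    if event in EVENT_SCHEMES:
        return EVENT_SCHEMES[event]
    # Otherwise, the system's stance depends on how much it has learned:
    # it only speaks as an expert after surpassing the cycle threshold.
    if cycle > cycle_threshold:
        return "argument from an expert opinion"
    return "argument from position to know"


print(select_scheme(cycle=1, cycle_threshold=3))  # argument from position to know
print(select_scheme(cycle=4, cycle_threshold=3))  # argument from an expert opinion
```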

The system relies on argumentation schemes to accept or reject a statement and obtain information, leading to changes in the problem’s criteria values or the state of the proof standard properties. As previously mentioned, argumentation schemes can define the most suitable proof standard according to the current information provided.
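The abductive choice of a proof standard can be sketched as matching the active properties against each standard’s property set. In the Python sketch below, the property assignment is an illustrative placeholder, not a reproduction of Table 1, and scoring the match with Jaccard similarity is an assumption of the example.

```python
from typing import Dict, Set

# Illustrative property assignment for this sketch only; Table 1 gives
# the actual properties of each proof standard used in DAIRS.
EXAMPLE_PROPERTIES: Dict[str, Set[str]] = {
    "simple majority": {"ordinality", "anonymity"},
    "lexicographic order": {"ordinality"},
    "weighted majority": {"ordinality", "additivity_coalitions"},
    "weighted sum": {"additivity_coalitions", "additivity_values"},
    "TOPSIS": {"additivity_coalitions", "distance_to_worst"},
}


def select_proof_standard(active: Set[str], table: Dict[str, Set[str]]) -> str:
    """Return the standard whose properties best match the active ones.

    Uses Jaccard similarity as the matching score (an assumption of this
    sketch); max() keeps the first standard in table order on ties.
    """
    def score(props: Set[str]) -> float:
        union = active | props
        return len(active & props) / len(union) if union else 1.0

    return max(table, key=lambda name: score(table[name]))


print(select_proof_standard({"ordinality"}, EXAMPLE_PROPERTIES))  # lexicographic order
print(select_proof_standard({"additivity_coalitions", "distance_to_worst"}, EXAMPLE_PROPERTIES))  # TOPSIS
```

When the user or the system toggles a property during the dialogue, calling the selector again with the updated active set yields the new standard.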

4.1.3. Dialogue Game Rules

DAIRS aims to use a dialogue game to establish a bidirectional interaction between the user and the system. This interaction allows both participants to provide statements that strengthen the available information and ease the decision-making process.

Before carrying out a dialogue game between the user and the system, it is necessary to define the set of rules that the players will follow in the game. As previously mentioned in Section 3.4, there are four types of dialogue game rules: locution, compromise, dialogue, and termination.

The compromise, dialogue, and termination rules followed in this work are established in [35]. However, the locution rules provide two main additions. First, the system can reject an argument presented by the user if it does not satisfy the current evaluation criteria. Second, the user is allowed to reject the system’s recommendation at multiple points during the dialogue. These additions focus on the system’s capability to defend its recommendation and user’s satisfaction. Table 2 presents the locution rules used in this work. Let be the current statement, C a critical question, and “type” refers to C being an assumption or exception.

Table 2.

Locution rules for the dialogue game used in this work.

4.1.4. State Transition Diagrams

Once the dialogue game rules are defined, it is possible to design state transition diagrams (STDs). An STD graphically represents how the dialogue will unfold. A notable advantage of STDs is that they offer an easy way to identify and regulate how the dialogue transpires. STDs also show the movements available to both players at each stage of the interaction.

The proposed recommender system uses two STDs. Before a dialogue game begins, the system selects one of these diagrams to establish the user-system interaction for the current instance. The deciding factor is whether the DM establishes a criteria preference hierarchy before the dialogue game begins. Depending on which scenario occurs, the system uses a particular STD and a different proof standard according to which properties are considered active.

The reasoning for using different STDs based on the DM’s preferences is to take advantage of the amount of information and knowledge the user has regarding the problem. DAIRS provides a learning-focused dialogue if the DM has little knowledge of the problem. Meanwhile, the system provides a more assertive and portfolio selection-driven dialogue if the DM has an acceptable level of knowledge and it is possible to skip or shorten the learning phase.
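The STD choice described above reduces to a single test on the initial information. The Python sketch below is a minimal illustration; the function name and the returned fields are hypothetical, and an empty hierarchy is treated as absent.

```python
from typing import Dict, Optional, Sequence, Union


def configure_dialogue(hierarchy: Optional[Sequence[int]]) -> Dict[str, Union[str, bool]]:
    """Choose the STD from whether the DM supplied a criteria preference hierarchy."""
    if hierarchy:
        # Explicit hierarchy: exploit it from the start (STD2).
        return {"std": "STD2", "dialogue": "selection-driven", "early_critical_questions": True}
    # No explicit hierarchy (None or empty): learning-focused dialogue (STD1).
    return {"std": "STD1", "dialogue": "learning-focused", "early_critical_questions": False}


print(configure_dialogue([2, 0, 1])["std"])  # STD2
print(configure_dialogue(None)["std"])       # STD1
```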

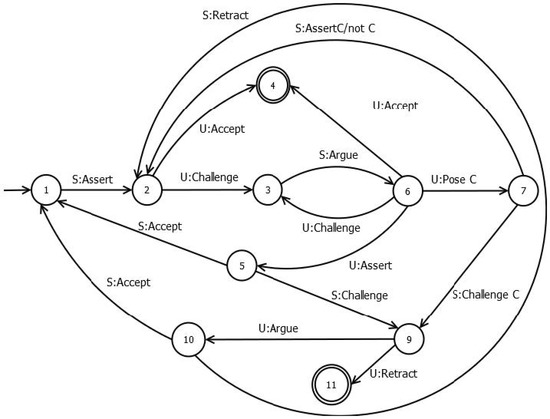

State Transition Diagram 1 (STD1): This diagram is chosen whenever the initial information available about the problem does not provide an explicit preference hierarchy for the problem criteria. This STD follows the structure defined in [35] while adding the locution rules mentioned previously. In particular, it adds a move that allows the system to reject the user’s suggestion if, after a certain number of dialogue cycles, the user has provided no additional reasons to support his or her statement. A dialogue cycle is completed each time the dialogue game returns to the initial state (1). The system explains to the user that the purpose of this rejection is to avoid a dialogue loop and continue the recommendation process. Figure 1 shows the structure of STD1.

Figure 1.

State Transition Diagram 1, used when there is not an explicit criteria preference hierarchy defined on the initial information.
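The loop-avoidance move in STD1 requires counting cycles and tracking whether the user’s suggestion brings new reasons. The Python sketch below shows one way to do this; the class, its fields, and in particular the hypothetical `max_stale_cycles` parameter (the number of consecutive cycles without a new reason that triggers rejection) are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class LoopGuard:
    """Tracks dialogue cycles to decide when the system may reject a suggestion.

    `max_stale_cycles` is a hypothetical parameter: the number of consecutive
    cycles the user may repeat a suggestion without giving a new reason.
    """
    max_stale_cycles: int
    cycles: int = 0
    stale_cycles: int = 0
    seen_reasons: Set[str] = field(default_factory=set)

    def end_cycle(self, reasons: Set[str]) -> None:
        """Call each time the dialogue returns to the initial state (1)."""
        self.cycles += 1
        new_reasons = reasons - self.seen_reasons
        self.seen_reasons |= reasons
        self.stale_cycles = 0 if new_reasons else self.stale_cycles + 1

    def should_reject(self) -> bool:
        return self.stale_cycles >= self.max_stale_cycles


guard = LoopGuard(max_stale_cycles=2)
guard.end_cycle({"higher profit in area 1"})   # new reason
guard.end_cycle({"higher profit in area 1"})   # repeated: 1 stale cycle
guard.end_cycle(set())                         # no reason: 2 stale cycles
print(guard.cycles, guard.should_reject())    # 3 True
```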

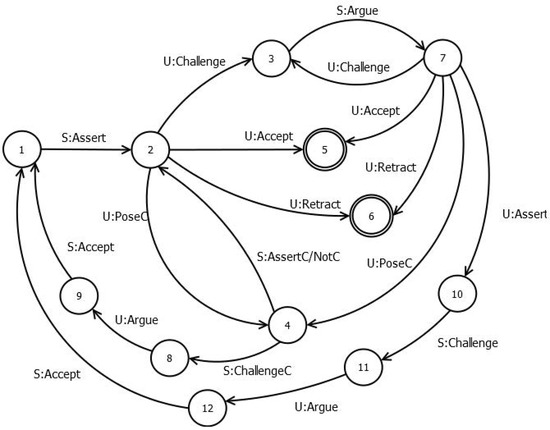

State Transition Diagram 2 (STD2): The second STD is used when there is an explicit user-defined preference hierarchy for the criteria before the dialogue game begins. The system exploits this situation to use and obtain as much information as possible from the early stages of the dialogue game. It also allows the user to present critical questions from the beginning, which is not allowed when using STD1. STD2 provides more flexibility for the user by allowing him/her to reject the recommendation from the initial states of the dialogue. Figure 2 shows the structure of STD2.

Figure 2.

State Transition Diagram 2, used when there is an explicit criteria preference hierarchy defined on the initial information.

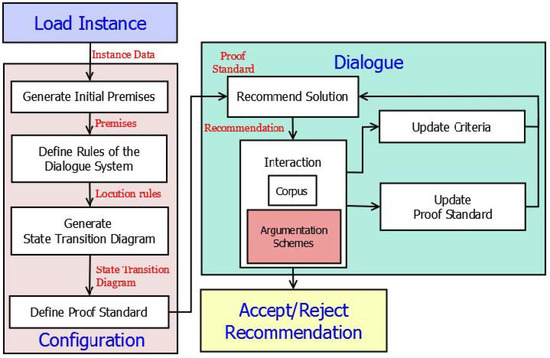

4.1.5. System Modules

The next step is to incorporate the dialogue game and all the procedures it requires into DAIRS. Previously, four main processes were identified as necessary for the system to execute a recommendation process properly [36]. Figure 3 presents the structure of these modules.

Figure 3.

Module diagram of the proposed recommender system; argumentation schemes are used within the dialogue module.

Load instance module: Reads the information concerning an instance to be solved by the recommender system. The DM uploads a file containing initial instance data to the system. This file contains information such as the number of candidate solutions and criteria, a criteria weight vector, a solution/objective matrix, budget threshold, veto threshold (if required), and criteria hierarchy. For the PPS problem, it is also necessary to insert additional data, such as the project portfolio matrix, representing the projects selected by each portfolio.

Configuration module: The system analyzes the information from the instance obtained in the load instance module to determine the initial configuration of all the elements required to start a dialogue game, such as the dialogue game rules, the state transition diagram, and the initial proof standard. This setup will allow the system to provide an initial recommendation to start the dialogue with the user.

Dialogue module: The user and the system start the dialogue game. The system’s main objective is to convince the user to accept the recommendation provided by it. However, the user can reject the current recommendation or add new information and modify the initial configuration. This process will provide new information to the system, which the system will use to generate a new recommendation.

Recommendation acceptance/rejection module: The user can accept or reject the system’s recommendation. This module represents the final step of the dialogue. The proposed recommender system attempts to consider the human factor by allowing the user to reject the solution at several stages of the dialogue, even if the recommended solution is the most suitable according to the current information provided by both the instance and the user. This option aims at the user’s satisfaction. As previously mentioned, while the recommender system’s objective is to provide a high-quality recommendation, it is also desirable that the user feels satisfied with his/her final decision, and any recommender system should pursue this objective as well.

These modules adequately represent a recommender system’s structure supported by concepts related to argumentation theory, such as argumentation schemes. For this reason, the development of the recommender system presented in this paper uses the previously mentioned structure.

4.2. Interactive Prototype

The next step in developing the proposed recommender system is the implementation of a prototype, which incorporates all the previously mentioned elements (argumentation schemes, proof standards, dialogue games, and STDs). The proposed methodology intends to properly carry out a dialogue game, following the dialogue structures defined and represented in the STDs. The development of this prototype allows a user to interact directly with the recommender system and to evaluate the usability of the framework designed in the previous subsection.

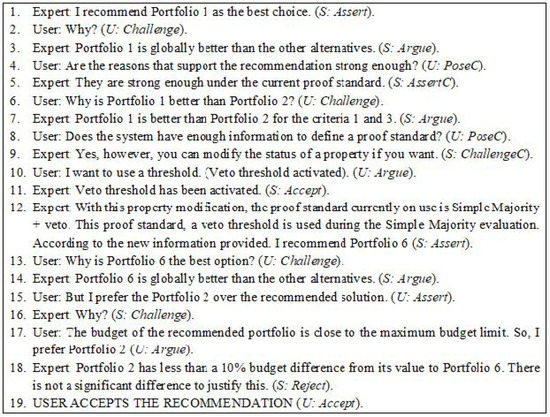

Figure 4 shows a dialogue game carried out between two players following the proposed structure. This dialogue shows an interaction between a recommender system (which plays the role of an expert) and the DM (who plays the role of the user). While the system presents recommendations, the user can question them, challenge them, or argue. The end of the dialogue depends on the user’s final decision to accept or reject the system’s recommendations. Note that the system can evolve its recommendation into a new one when the information provided presents valid arguments to justify the change.

Figure 4.

Example of a dialogue game between user and system following the defined STDs.

4.2.1. Bidirectional Interaction Algorithm

Algorithm 1 corresponds to the proposed method for bidirectional interaction between the user and DAIRS. The objective is to present the user with recommended solutions and an explanation of each recommendation while receiving the DM’s preferences. The system must define several argumentation elements before the user-system interaction within the prototype can begin: a set of proof standards and their properties, the initial set of premises, the set of argumentation schemes used for the dialogue game, the dialogue game rules D, and the set of available state transition diagrams. The output of this algorithm is a portfolio recommendation.

This algorithm also requires an instance file (file). This file must contain a set of elements as part of the initial input: an alternative/criteria value matrix (C), a criteria preference hierarchy, a criteria weight vector (W), a veto threshold vector for all criteria (V), a set of available project portfolios (P), their respective costs, and the maximum allowed budget (B). Appendix A shows in more detail the information that this file should contain.

The algorithm begins using the Load instance module to load an instance in step 1, obtaining all the data necessary to proceed to the Configuration module. From steps 2 to 7, this module defines each element’s values for the cognitive decision tasks and dialogue game, according to the information provided by the instance.

Then, the Dialogue module is used from step 8 to step 18, establishing an interaction with the user in step 9. This interaction may modify the values of the alternative/criterion value matrix, the active set of proof standard properties, or the selected proof standard, and it updates the set of premises according to the new information given by the user during that step.

Algorithm 1.

Bidirectional interaction of DAIRS.

Then, the system checks whether there was a change that could affect the current recommendation. Steps 11 and 12 are executed if the values of the alternative/criterion matrix have changed; these steps update the matrix and use the current proof standard to reevaluate all the available portfolios. Steps 14 to 16 are performed if either the user or the system has modified the proof standard’s properties; these steps update the set of active proof standard properties, select the most appropriate proof standard, and reevaluate the set of portfolios. To offer the user a more flexible system, the proof standard can also be changed directly if the user so desires. In that case, step 18 is executed, using the chosen proof standard to generate a new recommendation.

The algorithm repeats this process until the user reaches a final state of acceptance or rejection of the system’s recommendation. When that happens, DAIRS reaches the Recommendation acceptance/rejection module, considering the dialogue game finished and ending the interaction.
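The loop described for Algorithm 1 can be sketched in Python. This is not the authors’ algorithm: the callback signatures, the move dictionary format, and the rule that the recommendation is the portfolio winning the most pairwise comparisons under the current standard are all assumptions made for illustration; the comments only loosely mirror the step numbers in the text.

```python
from typing import Callable, Dict, List, Optional, Sequence, Set

Better = Callable[[Sequence[float], Sequence[float]], bool]


def recommend(C: List[List[float]], better: Better) -> int:
    """Index of the portfolio that beats the most others (ties: lowest index).

    Counting pairwise wins is an assumption of this sketch; it also works
    for standards such as majority rules that need not be transitive.
    """
    wins = [sum(better(C[a], C[b]) for b in range(len(C)) if b != a) for a in range(len(C))]
    return max(range(len(C)), key=wins.__getitem__)


def dairs_loop(
    C: List[List[float]],
    standards: Dict[str, Better],
    select_standard: Callable[[Set[str]], str],
    active: Set[str],
    get_user_move: Callable[[int, str], dict],
) -> Optional[int]:
    C = [list(row) for row in C]            # work on a copy of the matrix
    standard = select_standard(active)      # configuration (cf. steps 2-7)
    rec = recommend(C, standards[standard])
    while True:
        move = get_user_move(rec, standard)  # user interaction (cf. step 9)
        if move["type"] == "accept":
            return rec
        if move["type"] == "reject":
            return None
        if "criteria_update" in move:        # cf. steps 11-12
            row, col, value = move["criteria_update"]
            C[row][col] = value
        if "properties" in move:             # cf. steps 14-16
            active = set(move["properties"])
            standard = select_standard(active)
        if "standard" in move:               # user picks a standard directly
            standard = move["standard"]
        rec = recommend(C, standards[standard])  # reevaluate (cf. step 18)


def pick(active: Set[str]) -> str:
    return "weighted sum" if "additivity_values" in active else "simple majority"


standards: Dict[str, Better] = {
    "weighted sum": lambda x, y: 0.5 * x[0] + 0.5 * x[1] > 0.5 * y[0] + 0.5 * y[1],
    "simple majority": lambda x, y: sum(p > q for p, q in zip(x, y)) > sum(q > p for p, q in zip(x, y)),
}
moves = iter([{"type": "update", "criteria_update": (1, 0, 10)}, {"type": "accept"}])
print(dairs_loop([[9, 5], [7, 6], [1, 1]], standards, pick, {"additivity_values"},
                 lambda rec, std: next(moves)))  # 1
```

In the usage example, raising portfolio 1’s first criterion value from 7 to 10 moves the recommendation from portfolio 0 to portfolio 1 before the scripted user accepts it.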

4.2.2. Graphical User Interface

The graphical user interface of the proposed prototype seeks to allow the user to interact with the system in multiple ways: defining the instance to work with, establishing a dialogue with the system, editing the profit values of each available project, and manipulating the status of the proof standard properties considered by the system to better match the user’s preferences. This interface is composed of a set of windows that allow the user to perform these activities.

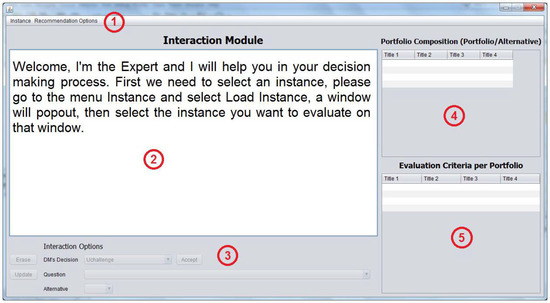

Figure 5.

Main window of the DAIRS GUI. The GUI allows the user to read the dialogue, perform actions and see the available portfolios.

Figure 5 presents the graphical user interface (GUI) of DAIRS; the primary areas in this interface are:

- The menu bar. A set of menus that allow the user to perform actions related to the instance and its properties. It contains two sub-menus. The first, named Instance, allows the user to read, start, and restart instances. The second, named Recommendation Options, lets the user update criteria values and view information about all available portfolios and the current state of the dialogue game; it also provides a Help window with additional information about the GUI.

- The dialogue area. Displays the recommendations and arguments presented by DAIRS, the questions posed, and changes in the user’s information. This section of the window presents the arguments provided by both players during the current and previous steps of the dialogue game.

- The interaction area. This area allows the user to carry out a dialogue with the system. The user can choose his/her next move in the dialogue game, the statement or question that accompanies that move, and, if necessary, an alternative portfolio to accompany the selected statement.

- The portfolio composition area. Shows information about the portfolios and their selected projects by presenting a portfolio/alternative binary matrix.

- The evaluation criteria area. Shows the information regarding each criterion’s values for every portfolio by presenting a portfolio/criteria matrix.

As previously mentioned, the recommender system prototype proposed in this work focuses on the PPS problem, and its GUI is intended to let the user interact with a recommender system built on the proposed structure. The Load instance module reads the information about a PPS problem instance from a file, which contains all the information required to initiate a dialogue game between the user and the system in DAIRS, as explained in Algorithm 1.

The Configuration module allows the user to select suitable parameters to start the dialogue game, with the aim of aiding the DM in his/her decision on the loaded PPS problem. The initial premises and arguments available to both the user and the system are determined from the instance information, and the dialogue game rules are defined. Then, DAIRS generates an STD following the structure of these rules: if the instance file does not define a criteria hierarchy, STD1 is used; if it defines a preference order, STD2 is used. Finally, a proof standard is selected based on the information provided by the instance.

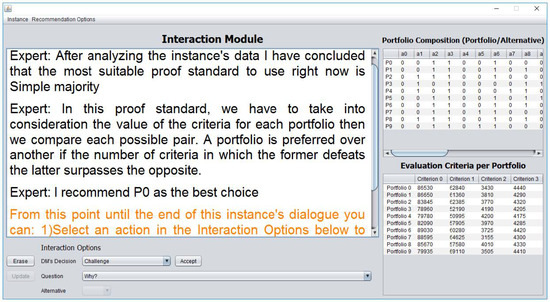

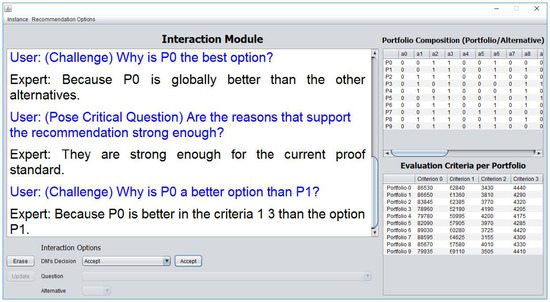

After this, the Dialogue module is reached. In the first step, the system provides an initial recommendation to the user based on the selected proof standard and all available information about the candidate portfolios. Figure 6 shows this process. From this point, the dialogue game begins: the user can accept or reject the recommendation, provide his/her own arguments to counter the system’s proposal, or introduce additional information that affects the weights or veto thresholds of the criteria, the impact of an alternative on a criterion, or the proof standard. Figure 7 presents a screenshot reflecting these moves.

Figure 6.

Initial recommendation from the system. DAIRS analyzes all the information provided by the instance file and provides a recommendation.

Figure 7.

Advanced stage of the dialogue game. The user questions the reasoning behind the system’s recommendation, and the system responds.

During the dialogue game, the prototype’s interface allows changes to the instance: the user can change the value of any criterion for each available project. The user can do so until the process reaches the Recommendation acceptance/rejection module, that is, until the DM accepts or rejects the current recommendation as a final decision. At that point, the dialogue game ends.

4.2.3. Definition of the Dialogue Game Rules

Within the prototype, once the instance containing the PPS problem’s information has been read, DAIRS must determine how to carry out the dialogue game between the user and the system. For this purpose, the system must define the dialogue game rules.

For this prototype, the commitment, dialogue, and termination rules are identical in every scenario in which the players interact to aid the decision-making process of a PPS problem, as shown in Section 4.1.3. However, the locution rules of each dialogue game must be defined depending on whether a hierarchical order has been established for the criteria.

As mentioned in Section 4.1.4, DAIRS uses two STDs. The first, STD1, does not require an initial preference hierarchy and focuses on obtaining information about the PPS problem during the initial cycles of the dialogue game. The second, STD2, is used when the DM defines preferences before the dialogue game begins. In this case, the system is more flexible toward the user, since DAIRS considers that both players understand the problem better when there is enough information to determine a hierarchy.

Once the dialogue game rules and STD are defined and the system has presented an initial recommendation, the user can communicate with the system through the interaction area of the main window. As shown in Figure 5, the user has a set of actions that allow him/her to interact with the system before and during the dialogue game. These actions enable bidirectional interaction between the user and the system:

- DM’s Decision. Dialogue move performed by the DM.

- Question. Statement related to the decision taken by the DM.

- Alternative. This option is enabled so that the user can select a portfolio from the available candidates when the selected statement requires an alternative (for example, comparing the recommendation with another portfolio).

- Accept button. Executes the DM’s decision.

- Erase button. Deletes the text from the dialogue text box.

- Update button. Allows the user to update the values of the project/criteria matrix.

The options available for DM’s decision depend on the current state of the dialogue within the STD and the locution rules defined. The user can accept the current recommendation (Accept) or reject it (Retract), ending the dialogue game. He/She can also present an argument to challenge the system’s actions (Challenge), create an argument for or against the recommendation (Argue), suggest a recommendation for the system to analyze (Assert), or present a critical question that can modify the current proof standard used and its properties (Pose Critical Question).
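The set of DM moves can be modeled as an enumeration, with Accept and Retract as the moves that end the dialogue game. The sketch below is illustrative only: the `Move` names follow the move labels in the text, but the state names and the `locution_rules` table mapping STD states to permitted moves are hypothetical, since the actual STD1/STD2 locution rules are defined elsewhere.

```python
from enum import Enum
from typing import Dict, List


class Move(Enum):
    ACCEPT = "Accept"
    RETRACT = "Retract"
    CHALLENGE = "Challenge"
    ARGUE = "Argue"
    ASSERT = "Assert"
    POSE_CRITICAL_QUESTION = "Pose Critical Question"


# Accepting or rejecting (Retract) the recommendation ends the dialogue game.
TERMINAL_MOVES = {Move.ACCEPT, Move.RETRACT}


def ends_dialogue(move: Move) -> bool:
    return move in TERMINAL_MOVES


def available_moves(state: str, locution_rules: Dict[str, List[str]]) -> List[Move]:
    """Moves offered to the DM in an STD state; `locution_rules` is a hypothetical state -> labels table."""
    return [Move(label) for label in locution_rules.get(state, [])]


# Hypothetical locution table for illustration only.
rules = {"q0": ["Accept", "Retract", "Challenge"], "q1": ["Argue", "Pose Critical Question"]}
options = available_moves("q0", rules)
```

Looking moves up by their displayed label (`Move("Accept")`) keeps the GUI text and the internal representation aligned.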

4.2.4. Use of Argumentation Schemes

DAIRS uses an argumentation scheme based on the current state of the STD and the DM’s action in the interaction area. The argumentation schemes used in this prototype are those reviewed in Section 4.1.2. This subsection briefly explains the conditions and events that trigger the use of each scheme.

The prototype uses the abductive reasoning argument scheme in four situations: when the system reads an instance before generating an initial recommendation, when the user starts a dialogue game through the GUI, after the user poses a critical question, and when the user argues for a preference toward a particular criterion.

DAIRS uses the argument from position to know scheme after setting the initial proof standard, when the dialogue game is started through the GUI, and when the system provides a new recommendation, provided the number of dialogue cycles has not surpassed the cycle threshold. The system also uses this scheme when the user challenges one of the system’s arguments or poses a critical question.

Meanwhile, the recommender system uses the argument from expert opinion scheme in the same scenarios as the argument from position to know. However, it is only used once the number of dialogue cycles has surpassed the cycle threshold, which implies that the system has deeper knowledge of the instance.

Whenever the user wishes to compare the profit or budget of two portfolios, DAIRS uses the multi-criteria pairwise comparison scheme. When the two compared portfolios show no significant difference, DAIRS uses the practical argument from analogy scheme to support its decision.
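The comparison rule can be sketched as a function that compares two portfolios on one measure and adds the analogy scheme when the difference is not significant. The text does not state how significance is judged, so the relative-difference test and the `tolerance` value of 0.05 are assumptions, as is the function name `compare_portfolios`. The `higher_is_better` flag reflects that a larger profit is preferable while a larger cost is not.

```python
def compare_portfolios(a: float, b: float, higher_is_better: bool = True,
                       tolerance: float = 0.05) -> dict:
    """Compare portfolios A and B on one measure (e.g., profit or cost).

    `tolerance` is a hypothetical relative threshold for "no significant difference".
    """
    scale = max(abs(a), abs(b))
    rel_diff = 0.0 if scale == 0 else abs(a - b) / scale
    schemes = ["multi-criteria pairwise comparison"]
    if rel_diff <= tolerance:
        # No significant difference: support the decision by analogy instead of ranking.
        schemes.append("practical argument from analogy")
        preferred = None
    elif (a > b) == higher_is_better:
        preferred = "A"
    else:
        preferred = "B"
    return {"relative_difference": rel_diff, "preferred": preferred, "schemes": schemes}


profit_cmp = compare_portfolios(100.0, 98.0)                       # 2% apart: treated as equivalent
cost_cmp = compare_portfolios(10.0, 12.0, higher_is_better=False)  # A is cheaper
```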

Among the fallacy-based argumentation schemes, the system uses the ad ignorantiam scheme at all times, since it considers false any information that has not been introduced into it. Meanwhile, DAIRS uses the from bias scheme when a criteria hierarchy is defined or when the user decides to define one.

Lastly, the prototype uses the cause to effect argumentation scheme when the user poses a critical question, asserts a preference towards a specific portfolio, or defines a preference towards a particular criterion as an argument to justify his/her preference for a specific portfolio.
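The triggering conditions described in this subsection can be collected into a single lookup. In the sketch below, the event names (`instance_read`, `game_started`, and so on) and the function `schemes_for` are hypothetical labels for the situations in the text; "has not surpassed the cycle threshold" is read as `cycles <= cycle_threshold`, which is an interpretation. The pairwise comparison and analogy schemes are only flagged here, since choosing between them depends on the comparison itself.

```python
from typing import List

# Hypothetical event labels for the situations described in the text.
ABDUCTIVE = {"instance_read", "game_started", "critical_question_posed", "criterion_preference_argued"}
KNOWLEDGE = {"initial_standard_set", "game_started", "new_recommendation", "challenge",
             "critical_question_posed"}
CAUSE_TO_EFFECT = {"critical_question_posed", "portfolio_preference_asserted",
                   "criterion_preference_argued"}


def schemes_for(event: str, cycles: int, cycle_threshold: int, has_hierarchy: bool) -> List[str]:
    """Argumentation schemes triggered by an event (sketch of the rules in Section 4.2.4)."""
    schemes = ["ad ignorantiam"]            # used at all times (unstated information is false)
    if has_hierarchy:                       # hierarchy in the instance or defined by the user
        schemes.append("from bias")
    if event in ABDUCTIVE:
        schemes.append("abductive reasoning")
    if event in KNOWLEDGE:                  # same scenarios, switched by the cycle threshold
        schemes.append("position to know" if cycles <= cycle_threshold else "expert opinion")
    if event in CAUSE_TO_EFFECT:
        schemes.append("cause to effect")
    if event == "comparison_requested":
        schemes.append("multi-criteria pairwise comparison")
    return schemes


early = schemes_for("new_recommendation", cycles=2, cycle_threshold=5, has_hierarchy=False)
late = schemes_for("new_recommendation", cycles=6, cycle_threshold=5, has_hierarchy=True)
```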

4.2.5. Proof Standard Selection

The last step in the Configuration module before presenting an initial recommendation is to select the initial proof standard. To do so, the system performs a two-stage method. The first stage defines the proof standard properties before the dialogue game starts.

In DAIRS, different considerations determine whether a property is set as active or inactive. Ordinality is always active unless one of the values in the normalized weight vector is equal to or greater than 0.6. Anonymity is active when no explicit criteria preference hierarchy is defined. Additivity with respect to coalitions and additivity with respect to values can only be active if ordinality is active as well. Veto and distance to the worst solution are inactive by default.

The veto property is inactive by default when defining the initial proof standard, since the simple majority and weighted majority proof standards can have both veto and non-veto versions; the user can therefore choose to activate this property during the dialogue game. Distance to the worst solution is inactive because the system seeks to use basic comparisons among all portfolios for the initial recommendation. The user can activate this property during the dialogue to access more complex proof standards.
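The activation rules above translate into a short function. It is a sketch under two assumptions: the property keys are hypothetical names, and since the text only says the two additivity properties require ordinality, the sketch simply sets them equal to ordinality. Weights are assumed to be positive so that they can be normalized by their sum.

```python
from typing import Dict, List, Optional


def initial_properties(weights: List[float], hierarchy: Optional[List[int]]) -> Dict[str, bool]:
    """Initial status of the proof standard properties (sketch of the rules in Section 4.2.5)."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights are assumed to be positive")
    normalized = [w / total for w in weights]
    ordinality = all(w < 0.6 for w in normalized)  # inactive if any normalized weight >= 0.6
    return {
        "ordinality": ordinality,
        "anonymity": not hierarchy,                 # active only without an explicit hierarchy
        # Assumption: the additivity properties follow ordinality exactly.
        "additivity_coalitions": ordinality,
        "additivity_values": ordinality,
        "veto": False,                              # inactive by default; user may activate it
        "distance_to_worst": False,                 # inactive by default; user may activate it
    }


balanced = initial_properties([0.3, 0.3, 0.4], hierarchy=None)
dominant = initial_properties([6, 2, 2], hierarchy=[0, 1, 2])  # normalized weight 0.6 disables ordinality
```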

After setting up the proof standard properties, the system selects the most suitable standard based on the active properties: the DAIRS prototype analyzes each proof standard and chooses the one with the largest number of related properties active at that time.
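The second stage, choosing the standard with the most active related properties, is a simple arg-max. The mapping from each proof standard to its related properties is not given in this section, so `STANDARD_PROPERTIES` below is a hypothetical example that reuses standard names mentioned in the text; only the counting rule reflects the description. Ties are resolved in favor of the first listed standard, which is a design choice of the sketch (Python's `max` returns the first maximal element).

```python
from typing import Dict, Set

# Hypothetical mapping; the actual property sets of each DAIRS proof standard are defined elsewhere.
STANDARD_PROPERTIES: Dict[str, Set[str]] = {
    "simple majority": {"ordinality", "anonymity"},
    "weighted majority": {"ordinality", "additivity_coalitions", "additivity_values"},
    "weighted majority with veto": {"ordinality", "additivity_coalitions", "additivity_values", "veto"},
}


def select_standard(active: Set[str], standards: Dict[str, Set[str]] = STANDARD_PROPERTIES) -> str:
    """Return the standard with the most related properties currently active (first one on ties)."""
    return max(standards, key=lambda name: len(standards[name] & active))


choice = select_standard({"ordinality", "additivity_coalitions", "additivity_values"})
```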

Following the proof standard selection, the process moves towards the dialogue module and performs an interaction between the user and the system using the DAIRS prototype. In this module, the user can modify the current state of all properties during the argument exchange between him/her and the system. Providing new information can also cause said properties to become active or inactive. There are three conditions in which the system can modify the status of each proof standard property:

- If the user explicitly indicates he/she wishes to modify the state of a property (see steps 9 to 11 in Figure 4).

- If the user indicates that he/she has a preference for a particular criterion.

- If the user directly selects the proof standard by posing a critical question asking whether the current proof standard is the best available option, to which the system responds by allowing the user to edit the status of a property or to choose a new standard directly.

If any of these scenarios occurs, the recommender system selects the new proof standard based on the active properties, or uses the standard that the user chose directly. It then analyzes the available portfolios under the selected proof standard and presents a new recommendation. The system considers this process a dialogue loop.

5. Experimentation and Analysis

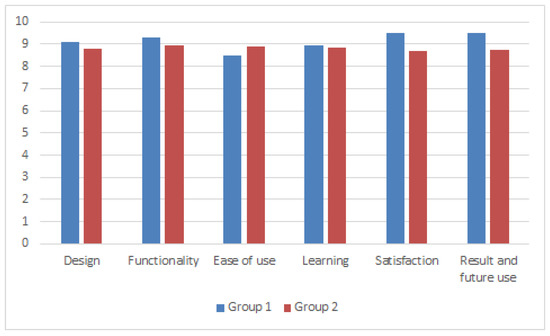

This section defines an experimental design to evaluate the effect of the developed prototype on various users. Recommender systems are generally evaluated through quantitative measures. However, in interactive systems, human factors that affect the acceptance of a recommendation, such as user satisfaction and confidence in the results, must also be evaluated [54].

This experiment seeks to analyze the usability of the recommender system under a real-life simulation of a PPS problem in a controlled environment, where users interact with the system. Under these considerations, this work performs a usability test to evaluate the proposed prototype.

The analysis presented in this section makes it possible to study the effects on overall user satisfaction of using argumentation theory concepts, such as argumentation schemes, proof standards, and dialogue games, in an MCDSS. This study also compares user satisfaction under the two STDs presented in this proposal.

5.1. Experimental Design

To evaluate the performance of the DAIRS prototype, a study is conducted on two groups of seven individuals. Each group includes people with different degrees of computational and mathematical knowledge, ranging from people with basic computer knowledge to master’s and Ph.D. students.

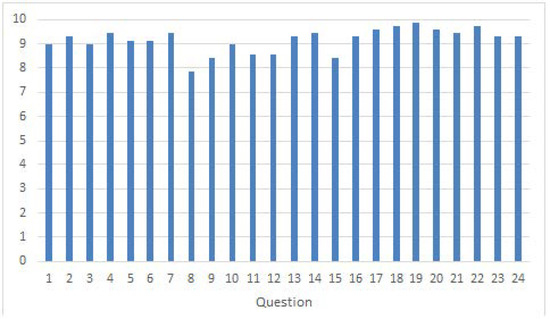

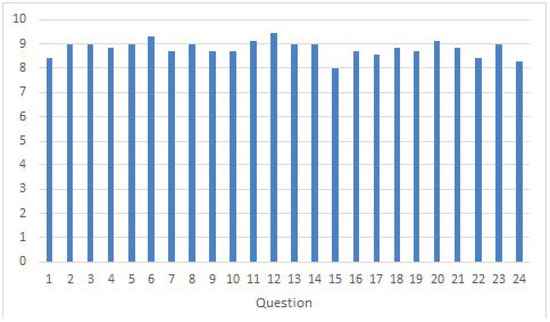

Each member plays the role of a user and interacts with the system in a dialogue game. Users are given at most 50 min to work with the prototype. The experimentation process performed by each group consists of the following steps: