Abstract

The planar 3-index assignment problem (P3AP) of size n is NP-complete. Its global optimal solution can be determined by a branch and bound algorithm, whose efficiency depends on the quality of the lower and upper bounds of the problem. The subgradient optimization method, an iterative method, can provide a good lower bound. This method can be applied to the root node or a leaf of the branch and bound tree. Some conditions used in this method may lead to an optimal solution. The formulas used in this method contain constants whose values can be determined by computational experiments. In this paper, we examine a variety of initial step length constants and show that their values affect the lower bound of the problem. The results show that, for small problem sizes (n < 20), the most suitable constants lie in the interval [0.1, 1], whereas for larger problem sizes (n ≥ 20), the interval [0.05, 0.1] is best.

1. Introduction

Consider a machine scheduling problem with $n$ machines, $n$ tasks, and $n$ time slots. Let $c_{ijk}$ be the cost of having machine $i$ perform task $j$ in time slot $k$. The problem is to find an assignment that minimizes the total cost and satisfies the following three conditions:

- (i) at every fixed time slot, every machine works in parallel;

- (ii) each machine does a different task on a different time slot; and

- (iii) after the last time slot, all machines have completed all tasks.

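Let $x_{ijk}$ for $i, j, k = 1, \dots, n$ be the decision variables, where $x_{ijk} = 1$ if machine $i$ is assigned to do task $j$ at time slot $k$, and $x_{ijk} = 0$ otherwise. The problem can be represented by the following mathematical program:

$$
\begin{aligned}
\min \quad & \sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{k=1}^{n} c_{ijk}\,x_{ijk} \\
\text{s.t.} \quad & \sum_{i=1}^{n} x_{ijk} = 1, \quad j, k = 1, \dots, n, \\
& \sum_{j=1}^{n} x_{ijk} = 1, \quad i, k = 1, \dots, n, \\
& \sum_{k=1}^{n} x_{ijk} = 1, \quad i, j = 1, \dots, n, \\
& x_{ijk} \in \{0, 1\}, \quad i, j, k = 1, \dots, n.
\end{aligned}
\tag{1}
$$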

This problem is called the planar 3-index assignment problem (P3AP). The problem was shown to be an NP-complete problem by Frieze in 1983 [1]. Tabu search heuristics and several approximation algorithms have been developed to solve the problem. The first approximation was presented by Kumar et al. [2]. The algorithm had a performance guarantee of . This was improved to and 0.669 by Gomes et al. [3] and Katz-Rogozhnikov and Sviridenko [4], respectively. Subgradient optimization procedures have been developed in order to create a good lower bound in an exact branch and bound algorithm for solving the problem (see Magos and Miliotis [5]). The idea and general steps of the subgradient optimization methods are presented in Section 2. In Section 3 and Section 4, a modified subgradient optimization method for solving P3AP is presented and its computational results are given, respectively.
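As an illustration of the three conditions, the following minimal sketch checks whether a 0/1 array is a feasible P3AP assignment and evaluates its cost. It assumes the convention that `x[i][j][k] = 1` when machine `i` does task `j` at time slot `k`; the function names and the small cyclic schedule are hypothetical and chosen only for the example.

```python
from itertools import product

def is_feasible(x):
    """Check the three planar constraint families for a 0/1 array x[i][j][k]."""
    n = len(x)
    idx = range(n)
    # Every (task j, slot k) pair is served by exactly one machine.
    ok_i = all(sum(x[i][j][k] for i in idx) == 1 for j, k in product(idx, idx))
    # Every (machine i, slot k) pair carries exactly one task.
    ok_j = all(sum(x[i][j][k] for j in idx) == 1 for i, k in product(idx, idx))
    # Every (machine i, task j) pair is scheduled in exactly one slot.
    ok_k = all(sum(x[i][j][k] for k in idx) == 1 for i, j in product(idx, idx))
    return ok_i and ok_j and ok_k

def total_cost(c, x):
    """Sum of c[i][j][k] over all selected triples."""
    n = len(x)
    return sum(c[i][j][k] * x[i][j][k] for i, j, k in product(range(n), repeat=3))

def from_latin_square(square):
    """Build x from square[i][k] = task done by machine i at slot k."""
    n = len(square)
    x = [[[0] * n for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for k in range(n):
            x[i][square[i][k]][k] = 1
    return x

# Illustrative 3 x 3 instance: cyclic schedule, cost c[i][j][k] = i + j + k.
n = 3
cyclic = [[(i + k) % n for k in range(n)] for i in range(n)]
x = from_latin_square(cyclic)
c = [[[i + j + k for k in range(n)] for j in range(n)] for i in range(n)]
feasible = is_feasible(x)
cost = total_cost(c, x)
```

Under this convention, a feasible assignment corresponds to a Latin square of order n, which is why the schedule is built from one.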

2. Subgradient Optimization Methods and Modifications

The subgradient optimization method was first developed in 1985 [6]. The method aimed to solve non-differentiable optimization problems. One such problem is mathematical programming. The problem optimizes a non-differentiable function with some constraints as follows:

where is an -column matrix, is a -row matrix, is an -column matrix, is an -row matrix, is an -coefficient matrix, and is an -coefficient matrix. The structure of Problem (2) is suitable for applying Lagrangean relaxation [7] in order to construct an easier solvable problem. We can propose that the constraints will be incorporated into the objective function using the Lagrangean multiplier vector where for . Then, the corresponding Lagrangean relaxation problem can be written as follows:

Since for all , the objective value for all . Assume that . Then, is the best lower bound found by the Lagrangean relaxation method. In order to find , the successive values of need to be determined. The “subgradient optimization method” is the most useful iterative method to do so.

At the -th iteration of the method, we suppose that is an optimal solution to Problem (3) with the Lagrangean multiplier vector . The vector provides a subgradient direction of at the point . At the -th iteration, the Lagrangean multiplier vector can be determined as follows:

where is a step length, commonly determined as

where is the optimal objective value of Problem (3), and is a step length constant of the method at the -th iteration. In general, the method will converge to the optimal solution if one of the following conditions holds [8]:

- (i) and ;

- (ii) and is bounded for .
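The iteration described above can be sketched on a toy problem. This is a minimal illustration, not the procedure used in this paper: it maximizes a concave piecewise-linear function (the typical shape of a Lagrangean dual function) with a step length of the form constant × (target − current value) / squared norm of the subgradient. The names `pieces`, `target`, and `lam` are assumptions made for the example, and the multipliers are left unconstrained in sign.

```python
def subgradient_ascent(pieces, u0, target, lam, iterations):
    """Maximize f(u) = min_p (a_p . u + b_p) by a plain subgradient method.

    pieces: list of (a_p, b_p) with a_p a tuple of coefficients.
    target: value used in the step length formula (here the known optimum).
    lam:    step length constant, kept fixed in this sketch.
    """
    u = list(u0)
    best = float("-inf")
    for _ in range(iterations):
        # Evaluate every affine piece; the smallest one defines f(u).
        values = [sum(ai * ui for ai, ui in zip(a, u)) + b for a, b in pieces]
        p = min(range(len(pieces)), key=values.__getitem__)
        f_u, g = values[p], pieces[p][0]   # g is a subgradient of f at u
        best = max(best, f_u)
        norm_sq = sum(gi * gi for gi in g)
        if norm_sq == 0 or f_u >= target:
            break
        t = lam * (target - f_u) / norm_sq  # step length
        u = [ui + t * gi for ui, gi in zip(u, g)]
    return best, u

# f(u) = min(2u, 6 - u) has its maximum 4 at u = 2.
pieces = [((2.0,), 0.0), ((-1.0,), 6.0)]
best, u = subgradient_ascent(pieces, (0.0,), target=4.0, lam=0.5, iterations=60)
```

With the constant set to 0.5, the gap to the optimum halves at every iteration in this example; other constants and target values lead to different trajectories.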

The step length plays a crucial role in any subgradient optimization method. Different choices of step length and target values for the method have been presented [9]. Moreover, the step length constant strongly influences the value of the step length. Many choices of this constant have been proposed; some authors have defined [7], but most others have used [5,8,10]. Held and others [10] reported, on the basis of their computational results, the following rules for choosing the step length constant:

- (i) set for iterations where is a measure of the size of the problem; and

- (ii) successively halve both the values of and the number of iterations until is sufficiently small.
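The halving rule above can be sketched as a generator of step length constants. This is an illustrative reading of the rule, not a reproduction of the cited implementation: the starting value, the initial number of iterations, and the floor `min_period` are hypothetical parameters, and the termination test ("until sufficiently small") is left to the caller.

```python
from itertools import islice

def halving_schedule(initial_value, initial_period, min_period=1):
    """Yield a step length constant per iteration.

    The constant is held for `period` iterations, then both the constant
    and the period are halved (the period never drops below min_period).
    """
    value, period = initial_value, initial_period
    while True:
        for _ in range(period):
            yield value
        value /= 2.0
        period = max(min_period, period // 2)

# First nine constants for an illustrative start of 2.0 held for 4 iterations.
first_values = list(islice(halving_schedule(2.0, 4), 9))
```

A caller would typically stop drawing from the generator once the resulting step length falls below a chosen tolerance.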

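These rules were applied in the subgradient optimization algorithm for solving the classical assignment problem:

$$
\begin{aligned}
\min \quad & \sum_{i=1}^{n}\sum_{j=1}^{n} c_{ij}\,x_{ij} \\
\text{s.t.} \quad & \sum_{i=1}^{n} x_{ij} = 1, \quad j = 1, \dots, n, \\
& \sum_{j=1}^{n} x_{ij} = 1, \quad i = 1, \dots, n, \\
& x_{ij} \in \{0, 1\}, \quad i, j = 1, \dots, n.
\end{aligned}
\tag{6}
$$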

A modified subgradient optimization method for solving the classical assignment problem was presented in 1981 by Bazaraa and Sherali [11]. In this method, a simple subgradient is used to identify the search direction. Another modified subgradient method was proposed by Fumero in 2001 [8]. The main difference between these two methods lies in the employment of the search direction. These two methods, as well as the method proposed by Held and others, were compared for efficiency by Fumero in 2001 [8]. The results showed that the modified method proposed by Fumero provided higher objective values in the initial steps.

In 1981, Fisher [12] supported the rules proposed by Held and others for choosing the step length constant. He confirmed that the constant must lie between 0 and 2 for the subgradient procedure to converge to the optimum. Unfortunately, no literature provides an exact value for the step length constant. However, a common practice is to use a decreasing sequence of values.

Since the optimal objective function value of Problem (3) is unknown, most proposed applications have adopted a known upper bound in the step length formula, Equation (5). Thus, the formula becomes

To avoid the zigzagging behavior that arises from the Markov nature of the subgradient method and to improve its convergence rate, modified subgradient techniques have been proposed [13,14,15,16]. These techniques use a suitable combination of the current and previous subgradients, called the search direction:

where and are search direction constants at the -th iteration. Moreover, in the ()-th iteration, the Lagrangean multiplier vector is determined as follows:

These subgradient schemes have been analyzed for their convergence properties by Kim and Ahn [17]. It was shown that the method with these schemes has stronger convergence properties than the standard one.
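One widely used way to combine the current subgradient with the previous direction can be sketched as follows. It is shown only as an example of such a scheme, under the assumption that the previous direction is added with a weight that is non-zero only when it forms an obtuse angle with the current subgradient; the parameter `gamma` and its default value of 1.5 are assumptions for the illustration, not values taken from this paper.

```python
def deflected_direction(g, d_prev, gamma=1.5):
    """Return d = g + beta * d_prev.

    beta is positive only when g and d_prev point in conflicting
    directions (negative inner product), which damps zigzagging.
    """
    dot = sum(gi * di for gi, di in zip(g, d_prev))
    norm_sq = sum(di * di for di in d_prev)
    if dot < 0 and norm_sq > 0:
        beta = -gamma * dot / norm_sq
    else:
        beta = 0.0
    return [gi + beta * di for gi, di in zip(g, d_prev)]

# Conflicting directions: the previous direction is blended in.
d_conflict = deflected_direction([1.0, -1.0], [-1.0, 0.0])
# Compatible directions: the plain subgradient is returned.
d_plain = deflected_direction([1.0, 2.0], [1.0, 0.0])
```

In the first call the resulting direction still has a positive inner product with the current subgradient, so it remains an ascent-compatible direction while deviating less sharply from the previous one.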

3. Subgradient Optimization Method for the Planar 3-Index Assignment Problem

The structure of P3AP is suitable for applying the Lagrangean relaxation [5]. The relaxation can be formed by incorporating the constraints of type into the objective function. Hence, Problem (1) becomes a Lagrangean relaxation problem as follows:

For any vector , is a lower bound of Problem (1). Then, the best lower bound can be found by solving the following problem: for all possible vectors . A solution to the problem can be approximated by solving Problem (10) for a sequence of obtained through a “subgradient optimization method”. In 1980, Burkard and Froehlich [18] reported that the method produced good bounds for Problem (1), but they provided no details of the procedure used. Later, in 1994, Magos and Miliotis [5] implemented a subgradient optimization procedure modified from a subgradient scheme proposed by Camerini et al. [13]. The procedure employed many choices of the step length constant, but no details of the choices were given.

In this paper, we examine various values of the step length constant in the subgradient optimization method at the root node of the branch and bound tree for solving the problem. The procedure is modified from the one proposed by Magos and Miliotis [5]. The main idea is unchanged, but it differs in (1) the stopping criteria; (2) the rules for decreasing the step length constant; and (3) the step length formula. The stopping criterion and the rule for decreasing the step length constant in the modified procedure are much simpler, as shown in Table 1.

Table 1.

The stopping criteria, the condition rules for decreasing the step length constant, and the step length formulas in the two subgradient optimization procedures.

For any fixed index , Problem (10) becomes a classical assignment problem. It can be solved by the Hungarian method. A solution for every fixed index forms a subgradient direction vector, where . Then, a search direction vector at the current iteration , denoted by , is defined based on the subgradient direction, , and the previous search direction, . This vector can be formulated as

where

This idea is adopted from Camerini et al. [13]. The current step length, , is defined as

where UB is the current upper bound of Problem (1), and is the objective function value of Problem (10) with the current Lagrangean multiplier vector . The step length constant value, , is discussed in Section 4. Finally, the Lagrangean multiplier vector for the next iteration is updated as in Formula (9).
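The overall procedure can be sketched in code. The following is a minimal illustration under stated assumptions, not the author's implementation: it assumes that the relaxed constraints are those requiring each machine to perform each task in exactly one time slot, it uses the plain subgradient as the direction (no combination with the previous direction), it keeps the step length constant `lam` fixed, and it replaces the Hungarian method by brute-force enumeration, which is viable only for very small n. All function and parameter names are hypothetical.

```python
from itertools import permutations, product
import random

def lagrangean_value(c, u):
    """Return the relaxed objective value and one permutation per slot k."""
    n = len(c)
    value = sum(sum(row) for row in u)
    perms = []
    for k in range(n):
        # For fixed k the relaxed problem is a classical assignment problem;
        # brute force stands in for the Hungarian method on tiny instances.
        best = min(permutations(range(n)),
                   key=lambda p: sum(c[i][p[i]][k] - u[i][p[i]] for i in range(n)))
        value += sum(c[i][best[i]][k] - u[i][best[i]] for i in range(n))
        perms.append(best)
    return value, perms

def subgradient_bound(c, upper_bound, lam=1.0, iterations=50):
    """Best lower bound found over a fixed number of subgradient iterations."""
    n = len(c)
    u = [[0.0] * n for _ in range(n)]
    best = float("-inf")
    for _ in range(iterations):
        value, perms = lagrangean_value(c, u)
        best = max(best, value)
        # g[i][j] = 1 - (number of slots in which machine i does task j).
        g = [[1.0] * n for _ in range(n)]
        for p in perms:
            for i in range(n):
                g[i][p[i]] -= 1.0
        norm_sq = sum(v * v for row in g for v in row)
        if norm_sq == 0 or value >= upper_bound:
            break  # relaxed solution is feasible, or the bound is tight
        t = lam * (upper_bound - value) / norm_sq
        u = [[u[i][j] + t * g[i][j] for j in range(n)] for i in range(n)]
    return best

def brute_force_optimum(c):
    """Exact optimum by enumerating all Latin squares (tiny n only)."""
    n = len(c)
    best = float("inf")
    for perms in product(permutations(range(n)), repeat=n):
        if all(len({p[i] for p in perms}) == n for i in range(n)):
            cost = sum(c[i][p[i]][k] for k, p in enumerate(perms) for i in range(n))
            best = min(best, cost)
    return best

rng = random.Random(0)
n = 3
c = [[[rng.randint(0, 100) for _ in range(n)] for _ in range(n)] for _ in range(n)]
optimum = brute_force_optimum(c)
initial = lagrangean_value(c, [[0.0] * n for _ in range(n)])[0]
lower = subgradient_bound(c, upper_bound=optimum)
```

In this toy run the exact optimum serves as the upper bound; for realistic sizes the upper bound would come from a heuristic solution, and the assignment subproblems would be solved by a polynomial-time method.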

4. Computational Results and Conclusions

In this section, we present the computational results from applying the modified subgradient optimization procedure with several values of the initial step length constant. The procedure was run on 1500 instances of P3AP of sizes 5, 6, 7, 8, 9, 10, 12, 14, 16, 18, 20, 30, 40, 60, and 80, with 100 instances for each problem size. All cost coefficients are integers sampled from a uniform distribution between 0 and 100. The dataset is available at www.math.psu.ac.th/html/th/component/content/article/75-person_detial/124-sutitar-m.html.
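Test instances of this kind can be generated along the following lines. This is a sketch rather than the generator used for the published dataset: the function name, the seeding, and the assumption that both endpoints 0 and 100 are included are choices made for the illustration.

```python
import random

# Problem sizes used in the experiments; 100 instances per size.
SIZES = [5, 6, 7, 8, 9, 10, 12, 14, 16, 18, 20, 30, 40, 60, 80]
INSTANCES_PER_SIZE = 100

def random_instance(n, low=0, high=100, seed=None):
    """Return an n x n x n cost array with integer entries in [low, high]."""
    rng = random.Random(seed)
    return [[[rng.randint(low, high) for _ in range(n)]
             for _ in range(n)]
            for _ in range(n)]

example = random_instance(5, seed=1)
total_instances = len(SIZES) * INSTANCES_PER_SIZE
```

Passing a different seed per instance keeps every instance reproducible.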

The purpose of the modified subgradient optimization procedure is to generate the best lower bound for Problem (1). The procedure yields different lower bounds for different values of the initial step length constant, even when the problems have the same size. The best lower bound found by this procedure is much better than the initial lower bound generated by the admissible transformations (see Burkard [19]). However, in the procedure in Magos and Miliotis’s paper [5], the authors considered that at the root node of the branch and bound tree. For other nodes, the authors considered two factors affecting the value: the magnitude of the duality gap, , and the location of the node, denoted by with respect to the root of the tree. After several tests, their results are presented in Table 2.

Table 2.

The initial step length constant presented by Magos and Miliotis [5].

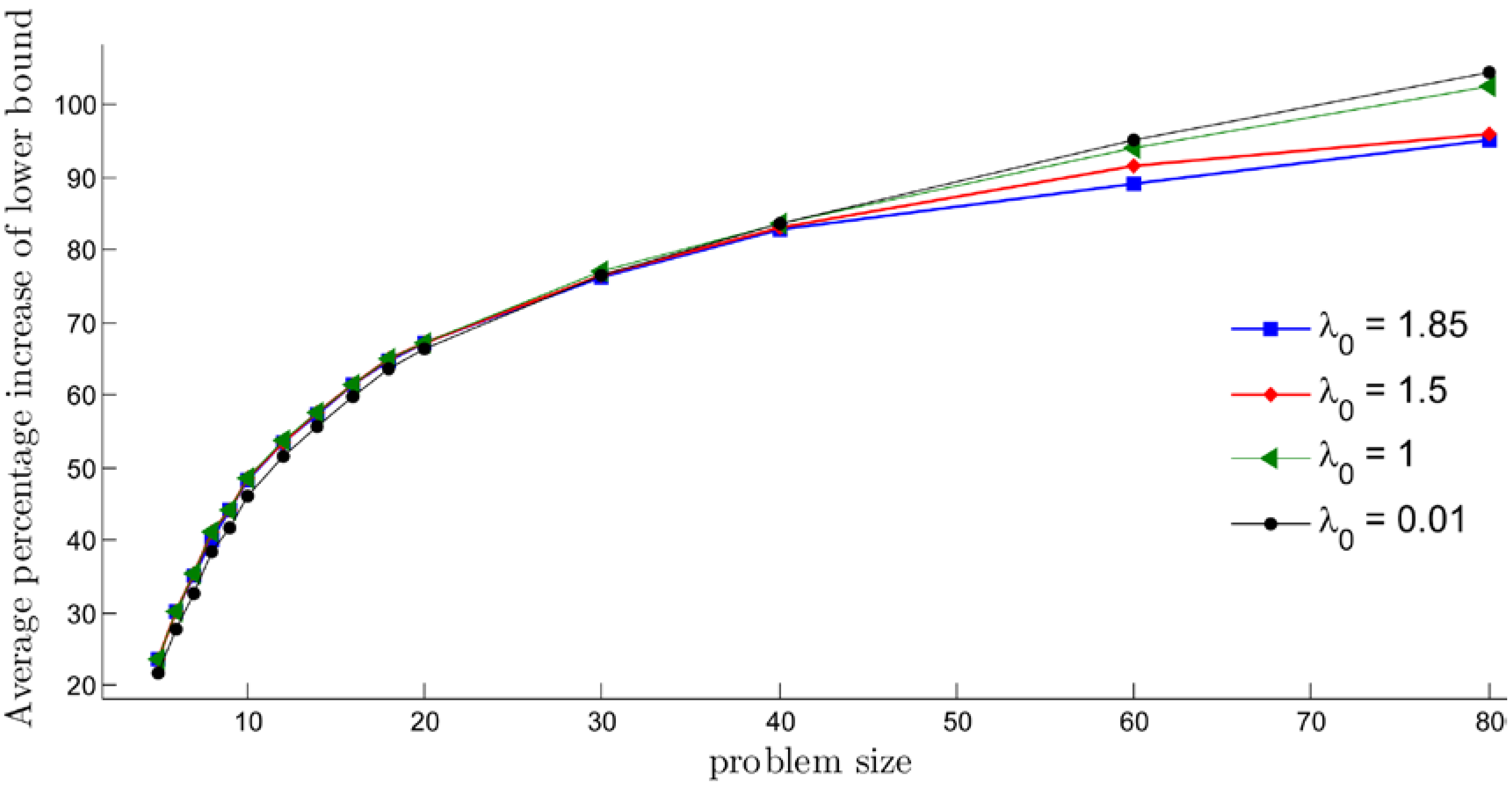

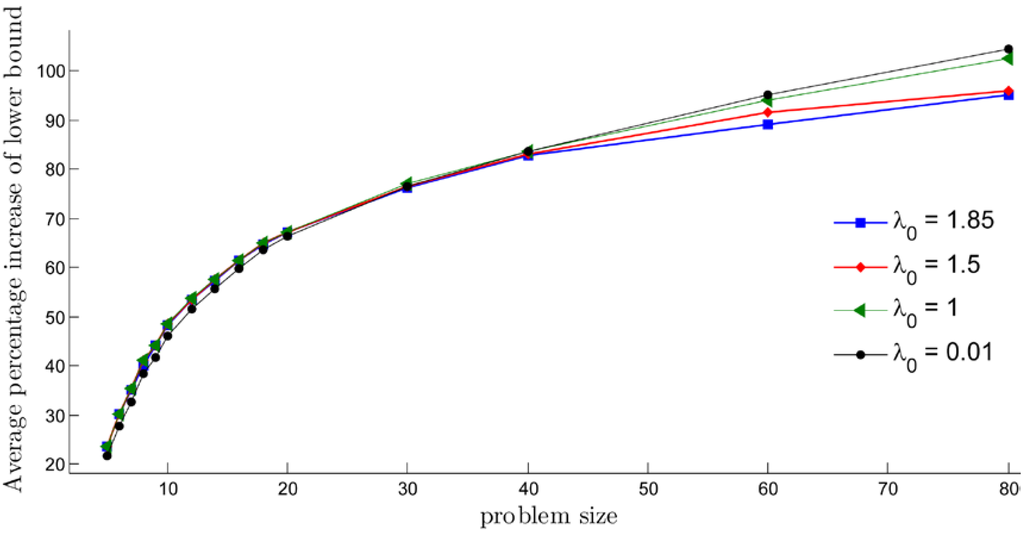

In our implementation, we examine the behavior of the lower bound for different values of the initial step length constant. Figure 1 depicts the average percentage increase of the lower bound when the values 1.85, 1.5, 1, and 0.01 are employed. After applying the modified subgradient optimization procedure, the lower bounds of all instances increased relative to the initial lower bound generated by an admissible transformation. The figure also indicates that a higher value should be employed for smaller problem sizes, whereas a smaller value should be used for larger problem sizes.

Figure 1.

The average percentage increase of the lower bound after applying the modified subgradient optimization method with different values of the initial step length constant.

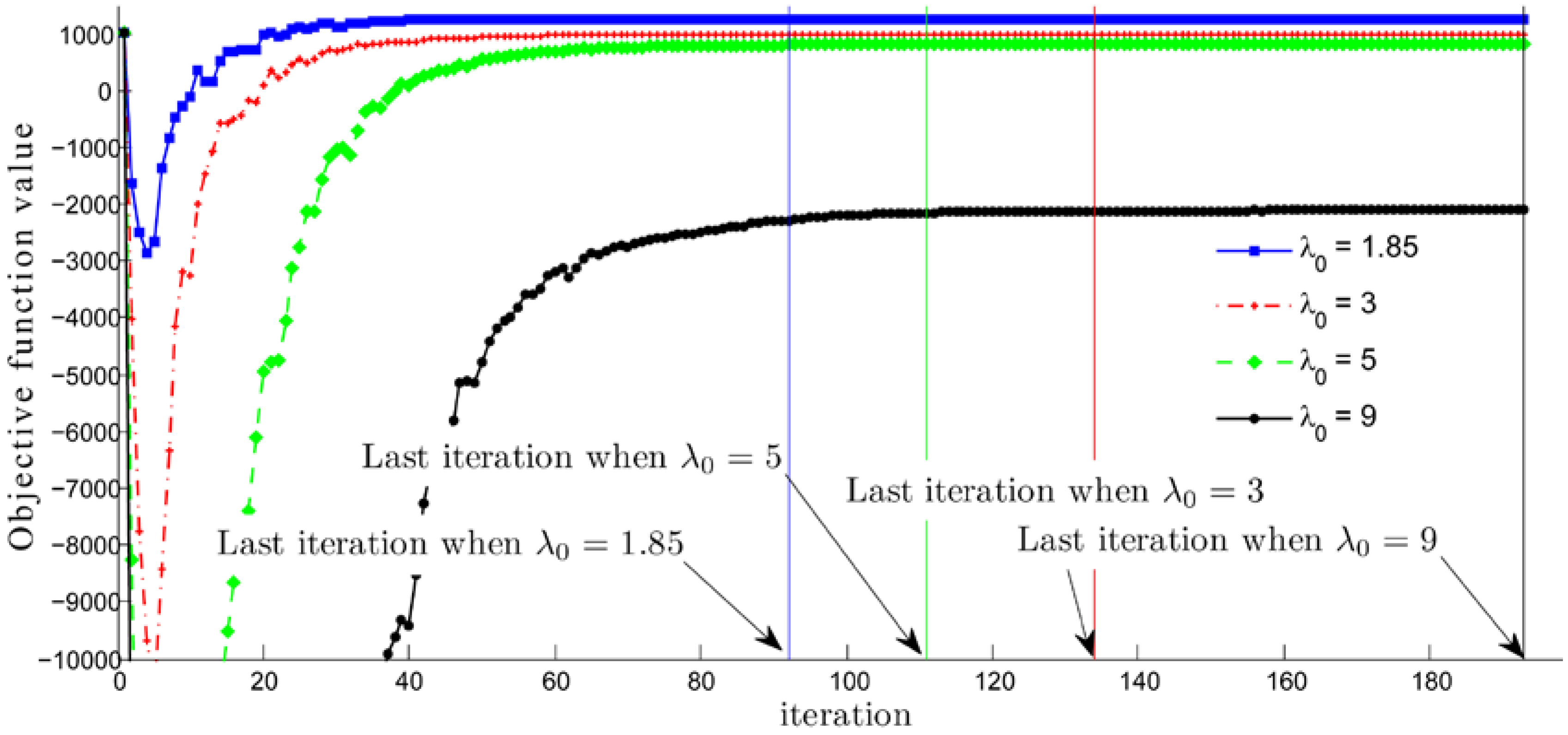

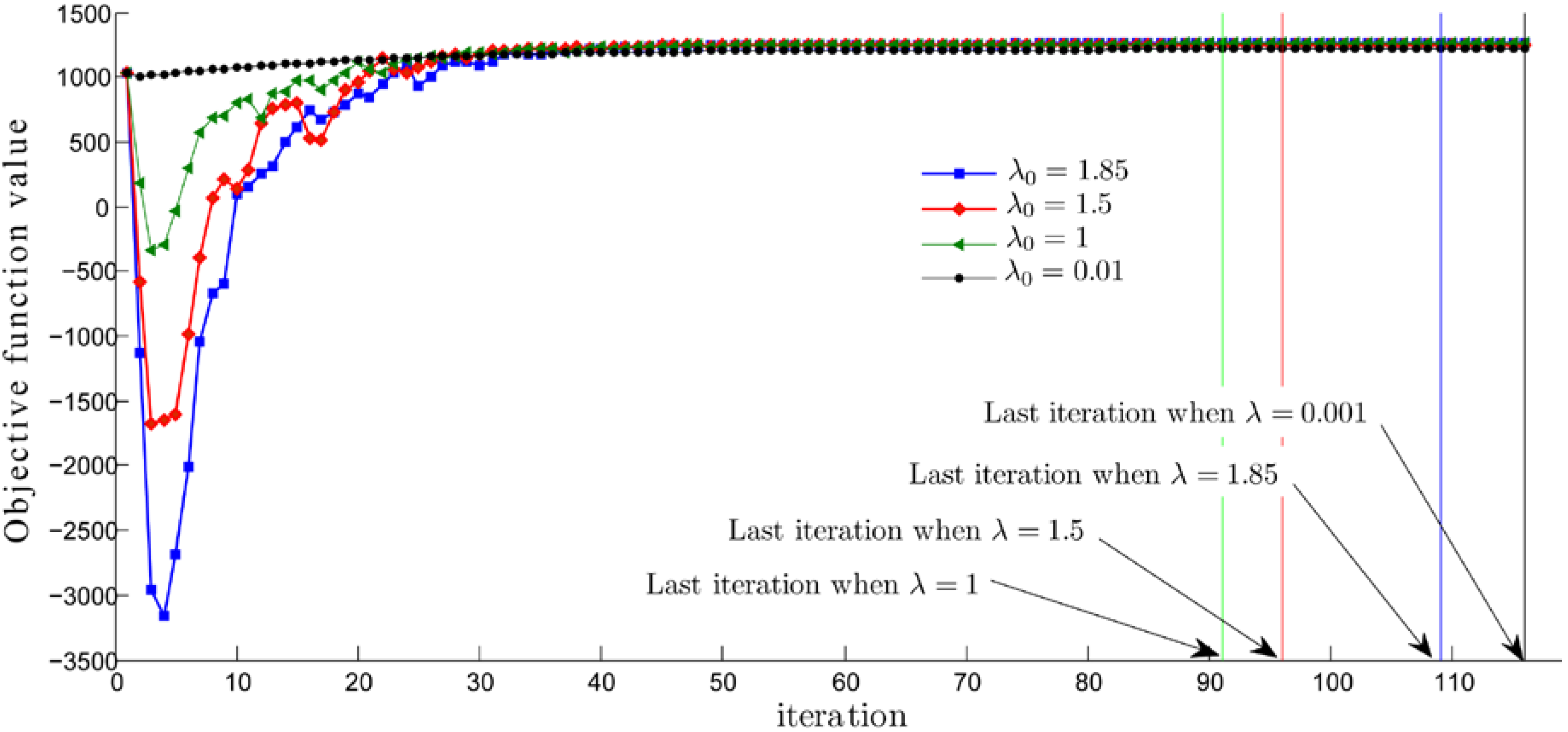

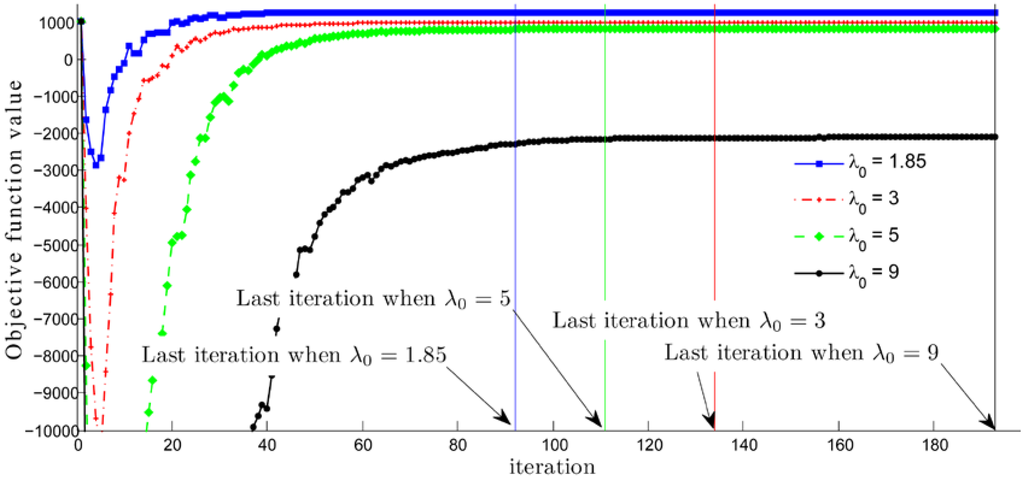

In practice, most authors set the value of the step length constant between 0 and 2, as discussed in Section 2. However, our computational results have shown that choosing causes the minimum objective function value of Problem (10), the sink point in Figure 2 and Figure 3, to be deeper in accordance with the bigger . This value is extremely low, even for a small problem size. This especially occurs for a large problem size and is a major problem. Furthermore, the objective function value at the last iteration is much lower than the value at the earlier iterations, as seen in Figure 2. Consequently, it is not necessary to run the procedure until the stopping criteria are met. Moreover, in terms of lower bound behavior, , in our implementation , dominates the other cases with (see Figure 2 and Figure 3). Therefore, it is not necessary to choose values greater than 2.

Figure 2.

Objective function values, , at each iteration , generated by the modified subgradient optimization method for solving an instance of size 8 when .

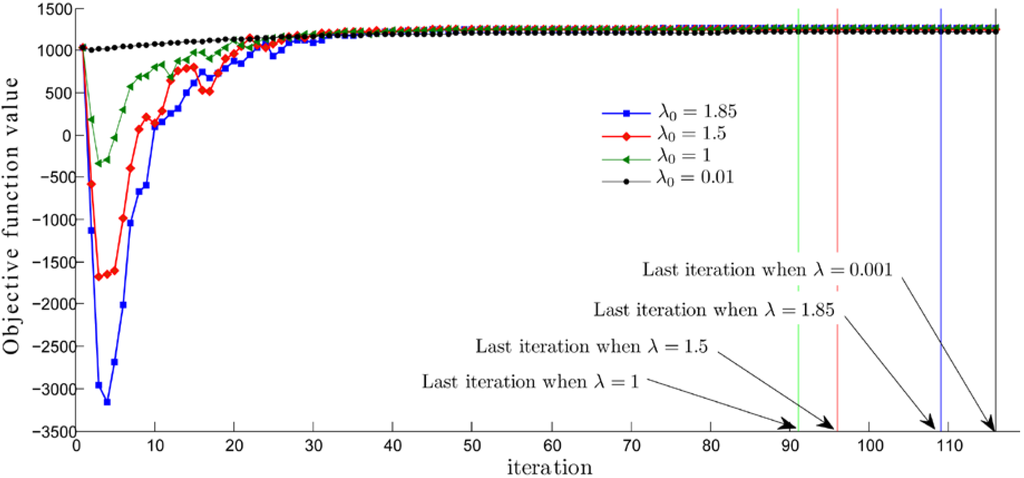

Figure 3.

Objective function values, , at each iteration , generated by the modified subgradient optimization method for solving an instance of size 8 when .

Consider the sequence of objective function values for the instances considered, an example of which can be seen in Figure 3. The values decrease during some early iterations and then, after hitting the minimum value (a sink point in the figure), increase quickly for some iterations. After that (mostly after about 30 iterations), the values increase slightly until the stopping criteria are met. The objective function value in some iterations may be lower than in previous iterations, but this does not affect the overall trend of the sequence, as shown in Figure 3.

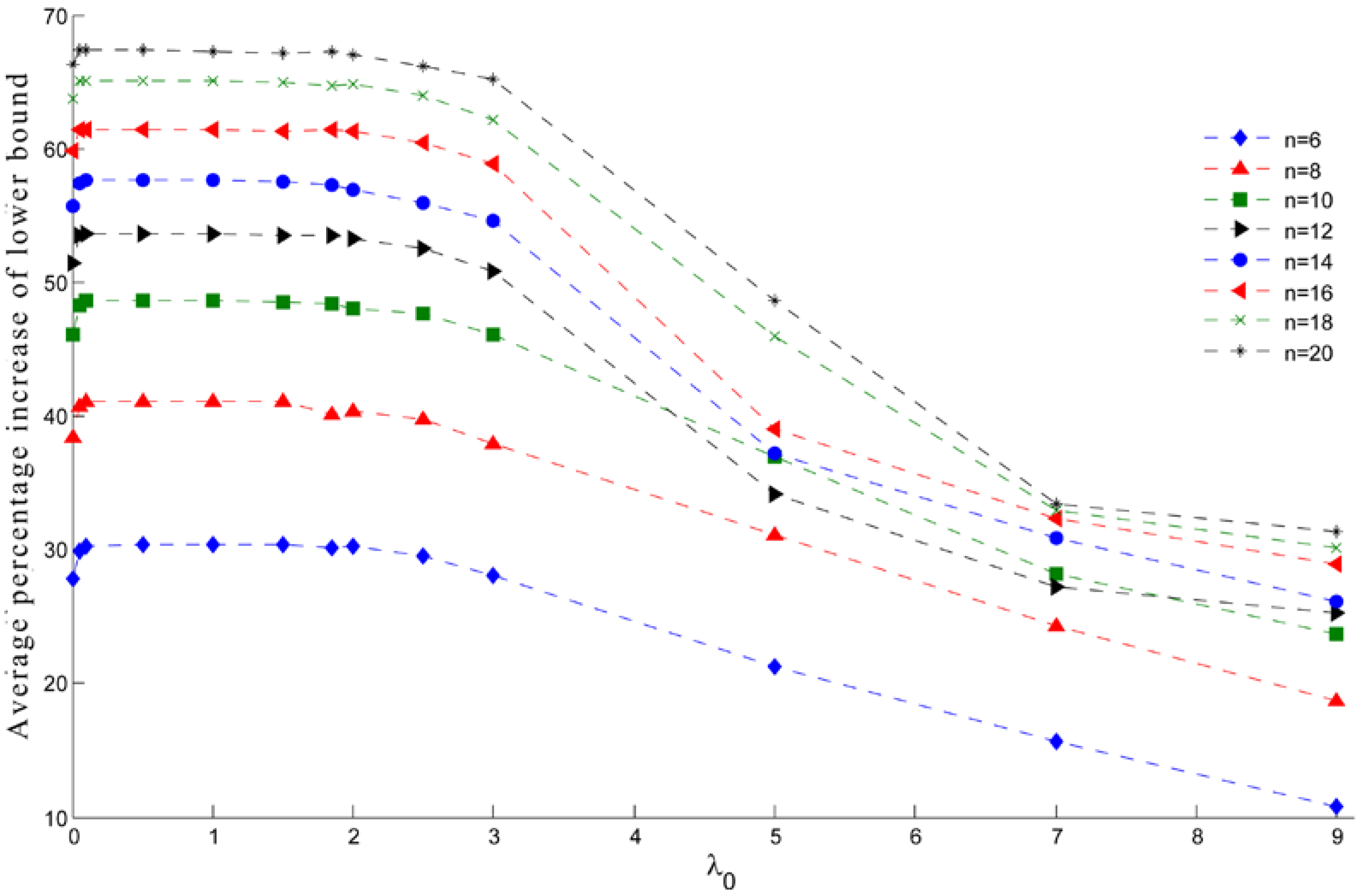

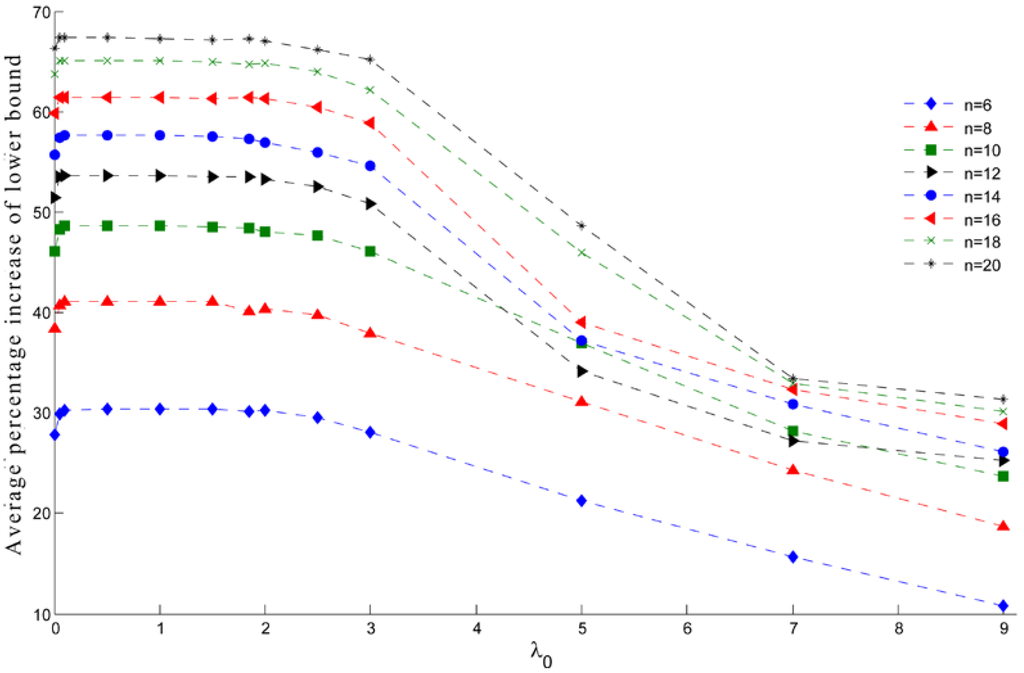

For the instances of problem sizes 6, 8, 10, 12, 14, 16, 18, and 20, the highest average percentage increase of the lower bound occurs when the initial step length constant lies between 0.1 and 1, as shown in Figure 4 and Table 3. These results support the idea of choosing a value between 0 and 2. However, for the instances of the large problem sizes 30, 40, 60, and 80, the highest average percentage increase of the lower bound occurs when (see Table 3). Furthermore, our computational results show that setting the constant between 0.05 and 0.1 requires the fewest iterations of the procedure (see Table 4).

Figure 4.

The average percentage of increase of the lower bound for the P3AP instants of size 6, 8, 10, 12, 14, 16, 18, 20 generated by the modified subgradient optimization method with 0.001, 0.001, 0.05, 0.075, 0.1, 0.3, 0.5, 0.75, 1.

Table 3.

The average percentage increase of the lower bound for the P3AP generated by the modified subgradient optimization method with different values of the initial step length constant.

Table 4.

The average number of iterations in the modified subgradient optimization method for each problem size with different values of the initial step length constant.

Since the P3AP is NP-complete [1], finding a global optimal solution is not easy, and each instance has its own properties. The only known algorithm for finding the global optimal solution for this problem is a branch and bound algorithm. The efficiency of the algorithm depends on the best lower and upper bounds it finds. The subgradient optimization method is one of the methods used to generate a good lower bound for the problem. The method can be applied to the root node or a leaf of the branch and bound tree. Some conditions used in the method may lead to an optimal solution. The values of some constants in the method must be determined by computational experiments. In our experiments with various values of the initial step length constant, the most suitable values lie in the interval [0.1, 1] for small problem sizes (n < 20) and in [0.05, 0.1] for larger problem sizes (n ≥ 20).

Conflicts of Interest

The author declares no conflict of interest.

References

1. Frieze, A.M. Complexity of a 3-dimensional assignment problem. Eur. J. Op. Res. 1983, 13, 161–164.

2. Kumar, S.; Russell, A.; Sundaram, R. Approximating Latin square extensions. Algorithmica 1999, 24, 128–138.

3. Gomes, C.; Regis, R.; Shmoys, D. An improved approximation algorithm for the partial Latin square extension problem. Oper. Res. Lett. 2004, 32, 479–484.

4. Katz-Rogozhnikov, D.; Sviridenko, M. Planar Three-Index Assignment Problem via Dependent Contention Resolution; IBM Research Report; Thomas J. Watson Research Center: Yorktown Heights, NY, USA, 2010.

5. Magos, D.; Miliotis, P. An algorithm for the planar three-index assignment problem. Eur. J. Op. Res. 1994, 77, 141–153.

6. Shor, N. Minimization methods for non-differentiable functions. In Springer Series in Computational Mathematics; Springer-Verlag: Berlin/Heidelberg, Germany, 1985.

7. Sherali, H.D.; Choi, G. Recovery of primal solutions when using subgradient methods to solve Lagrangian duals of linear programs. Oper. Res. Lett. 1996, 19, 105–113.

8. Fumero, F. A modified subgradient algorithm for Lagrangean relaxation. Comput. Op. Res. 2001, 28, 33–52.

9. Goffin, J.L. On convergence rate of subgradient optimization methods. Math. Program. 1977, 13, 329–347.

10. Held, M.; Wolfe, P.; Crowder, H.P. Validation of subgradient optimization. Math. Program. 1974, 6, 62–88.

11. Bazaraa, M.S.; Sherali, H.D. On the choice of step size in subgradient optimization. Eur. J. Op. Res. 1981, 7, 380–388.

12. Fisher, M.L. The Lagrangean relaxation method for solving integer programming problems. Manag. Sci. 1981, 27, 1–18.

13. Camerini, P.; Fratta, L.; Maffioli, F. On improving relaxation methods by modified gradient techniques. Math. Program. Study 1975, 3, 26–34.

14. Kim, S.; Koh, S.; Ahn, H. Two-direction subgradient method for non-differentiable optimization problems. Oper. Res. Lett. 1987, 6, 43–46.

15. Kim, S.; Koh, S. On Polyak’s improved subgradient method. J. Optim. Theory Appl. 1988, 57, 355–360.

16. Norkin, V.N. Method of nondifferentiable function minimization with average of generalized gradients. Kibernetika 1980, 6, 86–89.

17. Kim, S.; Ahn, H. Convergence of a generalized subgradient method for non-differentiable convex optimization. Math. Program. 1991, 50, 75–80.

18. Burkard, R.E.; Froehlich, K. Some remarks on 3-dimensional assignment problems. Methods Op. Res. 1980, 36, 31–36.

19. Burkard, R.E. Admissible transformations and assignment problems. Vietnam J. Math. 2007, 35, 373–386.

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).