From Senses to Memory During Childhood: A Systematic Review and Bayesian Meta-Analysis Exploring Multisensory Processing and Working Memory Development

Abstract

1. Introduction

1.1. Multisensory Development

1.2. Working Memory and Multisensory Processing

1.3. The Current Review

- (i) We aimed to examine the associations of motor reaction times (MRTs) and of verbal multisensory processing with both visual and auditory WM measures. On the basis of past findings, studies were grouped according to the type of multisensory task and the parameter being assessed (i.e., MRTs or verbal tasks). We therefore hypothesized that accurate responses on verbal multisensory tasks (Constantinidou et al., 2011) and faster MRTs (Alhamdan et al., 2023b) would be significantly associated with better visual and auditory WM performance in children old enough to speak.

- (ii) We sought to compare the contributions of unisensory (auditory-alone and/or visual-alone) and multisensory (audiovisual) stimuli to WM capacity across childhood. In line with past research in adults (Matusz et al., 2017; Tovar et al., 2020), we hypothesized that WM capacity (i.e., the number of items that could be recalled) would be higher for audiovisual stimuli (i.e., cross-modal objects) than for unisensory stimuli (visual and/or auditory alone).

2. Method

2.1. Protocol and Registration

2.2. Literature Search Strategy

2.3. Eligibility Criteria and Study Selection

- Studies not published in English;

- Study designs that did not meet the criteria (e.g., case studies, reviews, qualitative papers, training programs, book chapters, or dissertations);

- Samples that did not meet the inclusion criteria (e.g., participants over 15 years old and/or individuals with neurodevelopmental disorders, hearing impairments, or visual impairments);

- No measure of multisensory processing (i.e., audiovisual stimuli);

- No measure of WM;

- Required data/statistics not reported (e.g., no correlation coefficient reported, or the standard deviation for each group could not be extracted from the provided data);

- Study did not report the result on typically developing children separately from atypically developing children.

2.4. Risk of Bias and Quality Assessment

2.5. Data Extraction

- Motor reaction times (i.e., measuring how quickly participants press a button);

- Verbal multisensory tasks (i.e., assessing accuracy through the number of correct responses reported verbally, without any time-related data);

- Motor non-timed tasks (i.e., tasks involving manual motor actions but focusing on the accuracy of responses rather than response time).

2.6. Data Analysis

3. Results

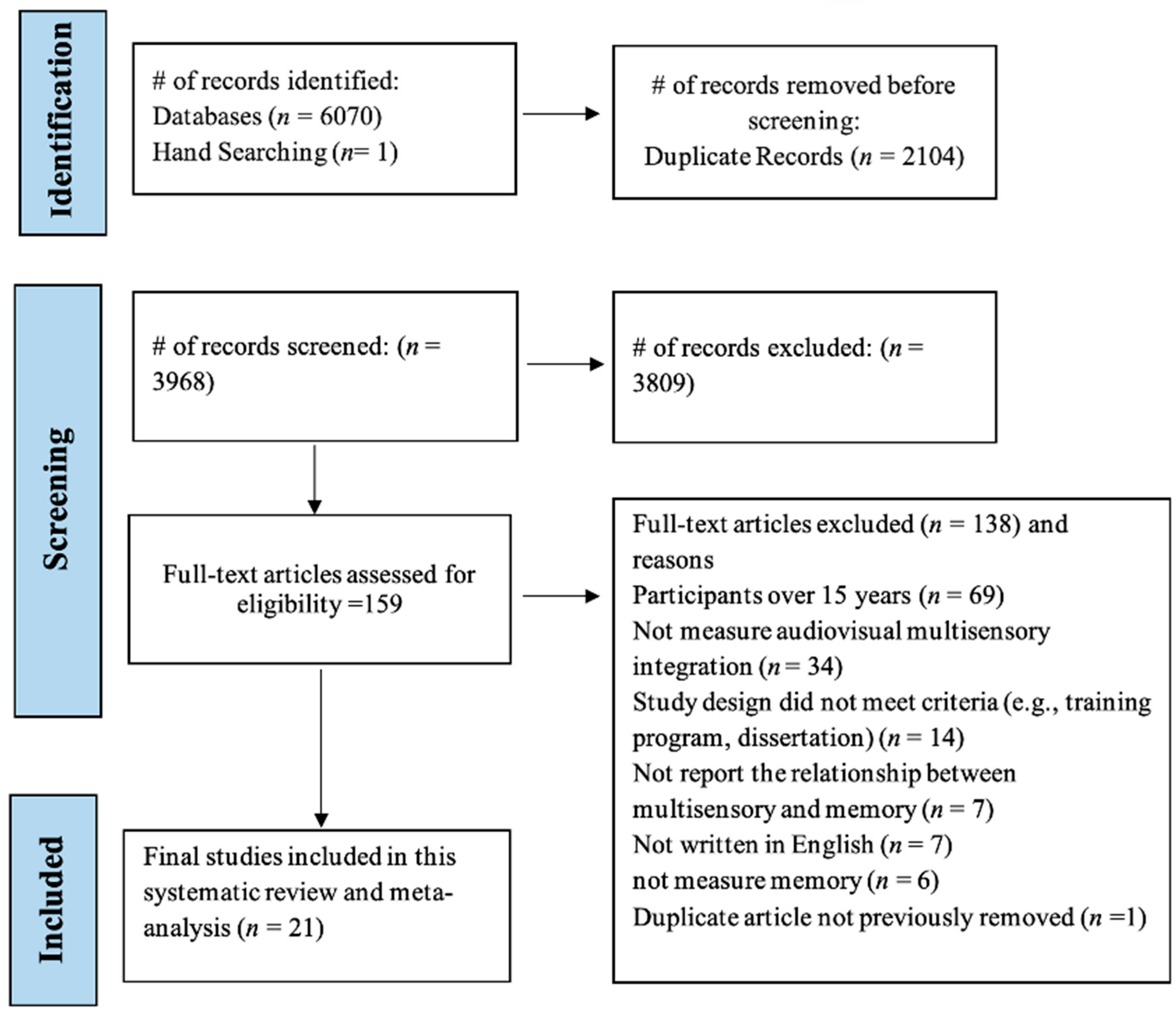

3.1. Study Selection

3.2. Quality Assessment and Risk of Bias

| Study Details | | | Intro | Method | | | | | | | | | | Results | | | | | Discussion | | Other | | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| # | (Author(s), Year) | Study Design Contributed to This Meta-Analysis | 1: Aims | 2: Study Design | 3: Sample Size Justification | 4: Target Population | 5: Sampling Frame | 6: Sample Selection | 7: Non-Responders | 8: Appropriate Measurement | 9: Reliable Measurement | 10: Statistical Significance | 11: Repeatability | 12: Basic Data | 13: Response Rate | 14: Non-Responders | 15: Internal Consistency | 16: All Results Presented | 17: Conclusions Justified | 18: Limitations Discussed | 19: Funding & COI Declared | 20: Ethics & Consent | % |

| 1 | (Alhamdan et al., 2023b) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 94% |

| 2 | (Badian, 1977) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 69% |

| 3 | (Barutchu et al., 2011) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 82% |

| 4 | (Barutchu et al., 2019) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 87% |

| 5 | (Barutchu et al., 2020) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 84% |

| 6 | (Broadbent et al., 2018) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 79% |

| 7 | (Choudhury et al., 2007) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 85% |

| 8 | (Cleary et al., 2001) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 70% |

| 9 | (Constantinidou et al., 2011) | Both | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 78% |

| 10 | (Constantinidou & Evripidou, 2012) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 84% |

| 11 | (Crawford & Fry, 1979) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 60% |

| 12 | (Davies et al., 2019) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 80% |

| 13 | (Denervaud et al., 2020b) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 84% |

| 14 | (Field & Anderson, 1985) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 76% |

| 15 | (Gillam et al., 1998) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 81% |

| 16 | (Hatchette & Evans, 1983) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 73% |

| 17 | (Montgomery et al., 2009) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 76% |

| 18 | (Magimairaj et al., 2009) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 84% |

| 19 | (Ortiz-Mantilla et al., 2008) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 89% |

| 20 | (Pillai & Yathiraj, 2017a) | Correlation | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 79% |

| 21 | (Pillai & Yathiraj, 2017b) | Group Differences | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | 72% |

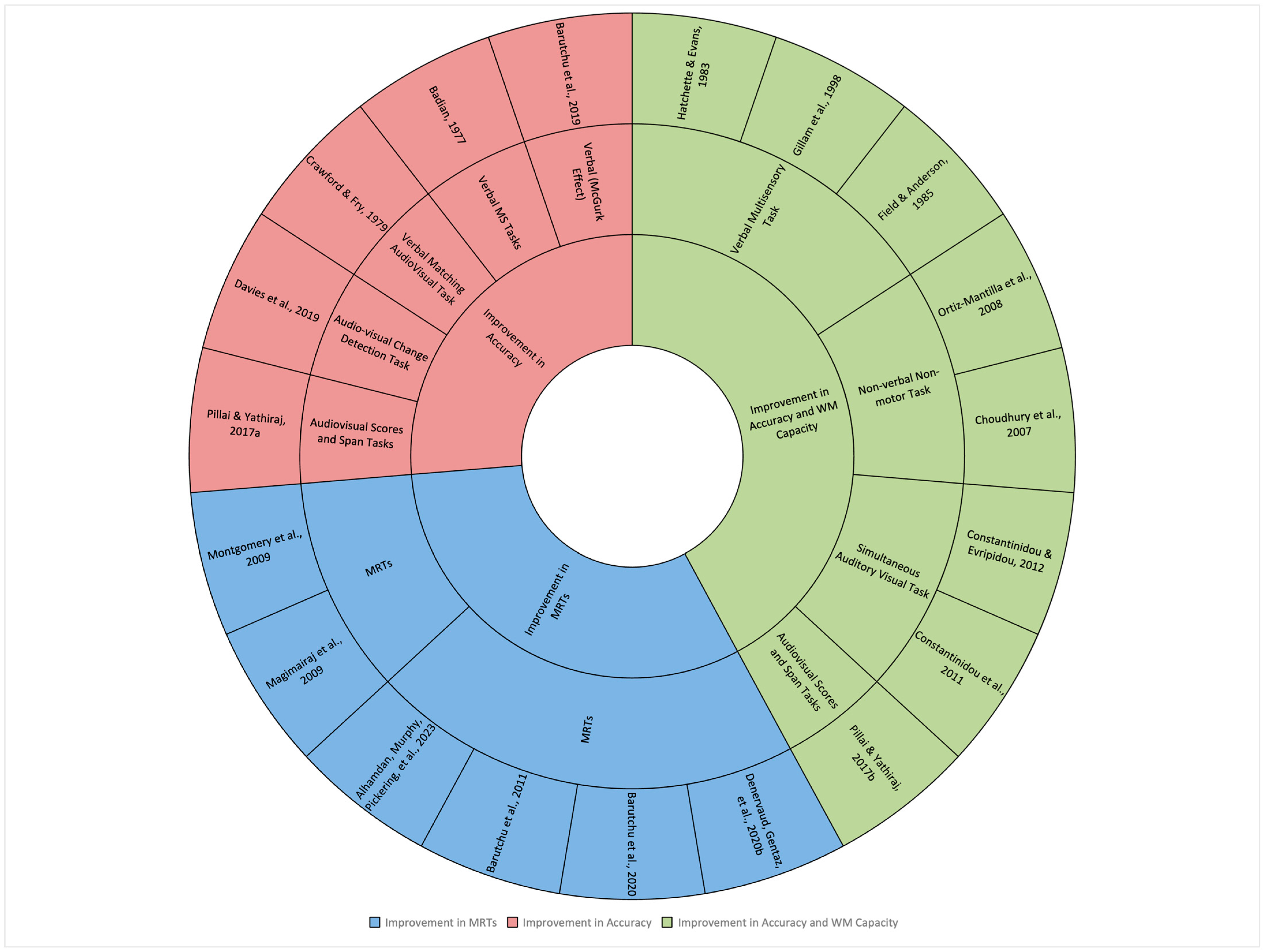

3.3. Study Characteristics and Data Synthesis

| # | (Author(s), Year) | Type of MS Task | Task Description | Aim of MS Task |

|---|---|---|---|---|

| 1 | (Alhamdan et al., 2023b) | MRTs Non-verbal motor task (Simple AV Task) | Multisensory processing was measured using motor reaction times in a target detection task. The targets included three types of stimuli: an auditory stimulus (AS; beep), a visual stimulus (VS; grey circle), and an audiovisual stimulus (AVS; beep and grey circle presented simultaneously). Children aged 5–10 years were instructed to press a button as rapidly as possible | Multisensory gain seen as measure of improvement in MRT (the time it took participants to respond in milliseconds) |

| 2 | (Barutchu et al., 2011) | MRTs Non-verbal motor task (Simple AV Task) | Participants aged 7–11 years were randomly presented with AS, VS, AVS, and blank stimuli and instructed to press a button when they saw a flash, when they heard a tone burst, or when both happened simultaneously | Multisensory gain seen as measure of improvement in MRT (the time it took participants to respond in milliseconds) |

| 3 | (Barutchu et al., 2020) | MRTs Non-verbal motor task (Simple AV Task and Associative Learning Tasks; ALT) | The simple detection task was similar to the task used by the same authors in 2011, while the ALTs required participants aged 8–11 years to learn an association between novel black symbols and either a novel auditory sound (novel-AV) or a consonant–vowel–consonant verbal sound (verbal-AV) | Multisensory gain seen as measure of improvement in MRT (the time it took participants to respond in milliseconds) |

| 4 | (Denervaud et al., 2020b) | MRTs Non-verbal motor task (Simple AV Task) | Participants aged 4–15 years were randomly presented with AS, VS, AVS, and blank stimuli and instructed to press a button when they saw a flash, when they heard a tone burst, or when both happened simultaneously | Multisensory gain seen as measure of improvement in MRT (the time it took participants to respond in milliseconds) |

| 5 & 6 | (Montgomery et al., 2009) & (Magimairaj et al., 2009) | MRTs Non-verbal motor task (Colour–word Recognition) | Participants aged 6–12 years were asked to touch a coloured shape (e.g., a green square) as quickly as possible in response to hearing a familiar colour word (e.g., “green”). The task required a simple, speeded decision about which visual object matched the spoken colour name. | Multisensory gain seen as measure of improvement in MRT (the time it took participants to respond in milliseconds) |

| 7 | (Badian, 1977) | Verbal MS Tasks | In the verbal MS task, participants aged 8–11 years were presented with grouped sequences of the names of four geometric forms: square, circle, triangle, and cross. The auditory stimuli involved orally presenting these names, while the corresponding visual stimuli consisted of sequences of the geometric forms drawn in black outline on white cards. Participants were required to match or select the correct visual response based on the auditory input | Multisensory gain seen as measure of improvement in accuracy (the proportion of correct responses on trials) |

| 8 | (Barutchu et al., 2019) | Verbal (McGurk Effect) | Participants aged 8–11 years were shown auditory and visual syllables in four different videos (i.e., three congruent videos and one incongruent audiovisual video with /ba-ba/ sounds and /ga-ga/ lip movements) | Multisensory gain seen as the number of perceptions of the fused percept (e.g., “da” or “tha”) |

| 9 | (Broadbent et al., 2018) | Motor non-timed Task (Multisensory Attention Learning Task; MALT) | The Multisensory Attention Learning Task (MALT) studies how sensory cues affect attention and learning. Participants aged 6–10 years view images (e.g., of frogs) paired with sounds (e.g., frog sounds) under three cue conditions for frog categorization: visual, auditory, and combined. They respond to target frogs while disregarding other stimuli, pressing the space bar whenever a target frog appears on the screen | Improvement in accuracy |

| 10 | (Cleary et al., 2001) | Motor non-timed Task (Auditory-plus-visual–spatial memory game) | Participants aged 8–9 years were instructed to press appropriate buttons on the response box when hearing sounds through the loudspeaker and seeing the buttons light up. For example, if they heard ‘blue’ followed by ‘green’ (indicating two buttons consecutively), they were expected to press the blue button first and then the green button | Improvement in accuracy |

| 11 & 12 | (Constantinidou et al., 2011) & (Constantinidou & Evripidou, 2012) | Verbal Auditory, Visual, and Simultaneous Auditory visual Task | Participants aged 7–13 years were presented with words and pictures under three conditions: (a) auditory, (b) visual, and (c) auditory plus visual, and were asked to recall the items after each presentation | Multisensory gain seen as measure of improvement in accuracy (the proportion of correct responses on trials) |

| 13 | (Crawford & Fry, 1979) | Verbal (Matching Auditory and Visual trigrams) | Participants aged 5–9 years completed four matching tasks: two within the same sense (visual-to-visual, auditory-to-auditory) and two between senses (visual-to-auditory, auditory-to-visual). They matched cards with sounds and images, following instructions based on names given through cards or a tape recorder | Multisensory gain seen as measure of improvement in accuracy (the proportion of correct responses on trials) |

| 14 | (Davies et al., 2019) | Audiovisual Change Detection Task | Participants aged 5–6 years were presented with two sequential memory displays, each of which contained one shape and one sound. They were asked whether the test shape–sound pairing was the same as or different from either of the original pairings in the memory displays | Multisensory gain seen as measure of improvement in accuracy (the proportion of correct responses on trials) |

| 15 & 16 | (Choudhury et al., 2007) & (Ortiz-Mantilla et al., 2008) | Habituation Recognition Memory Task Non-verbal non-motor task | Infants (6–9 months) participated in a series of habituation–recognition memory tasks, including one task with visual stimuli (familiarized and novel faces) and two tasks combining auditory and visual stimuli. These tasks featured abstract visual slides paired with tone pairs (with durations of 70 ms and 300 ms, as described in Choudhury et al., 2007), as well as consonant–vowel (CV) syllables, as reported in Ortiz-Mantilla et al., 2008. During the habituation phase, infants underwent consecutive discrete trials based on their individual looking times | Improvement in looking time |

| 17 | (Field & Anderson, 1985) | Cued and Free Recall Measures of Television Program Verbal multisensory task | Participants aged 5 and 9 years were presented with a 35-min colour videotape containing six short story segments that included three types of content (visual, auditory, and audiovisual). Participants were then asked cued- and free-recall questions about each story | Improvement in accuracy and capacity of WM |

| 18 | (Gillam et al., 1998) | Audiovisual recall task-digits Verbal multisensory task | Participants aged 8–12 years were presented with (a) auditory digits spoken in a male voice, (b) visual digits appearing on a computer screen, and (c) audiovisual digits appearing on a computer screen in synchrony with the auditory presentation. They were asked to recall the digits in the same order as they appeared in the presentation | Improvement in accuracy and capacity of WM |

| 19 | (Hatchette & Evans, 1983) | Multisensory temporal/spatial Pattern Matching Task Verbal multisensory task | Participants aged 7–10 years were presented with four to nine black-dot pictures with either one or two discontinuities in the patterns, together with auditory stimuli. In the multisensory condition, the visual and auditory stimuli were presented simultaneously, and participants were asked to determine whether the items presented were the same or different | Improvement in accuracy and capacity of WM |

| 20 & 21 | (Pillai & Yathiraj, 2017a) & (Pillai & Yathiraj, 2017b) | Visual and Audiovisual Scores and Span Tasks | Participants aged 7–9 years were instructed to repeat word sequences presented in auditory, visual, and combined auditory–visual modalities, and each memory skill was calculated using two different scoring procedures (score and span) | Improvement in accuracy and capacity of WM |
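Most MRT rows above describe the outcome as multisensory gain, an improvement in reaction time for audiovisual over unisensory targets, without giving a formula. The sketch below assumes one common operationalisation: the percentage speed-up of the mean audiovisual MRT relative to the faster of the two unisensory mean MRTs. The function name and the reaction times are hypothetical, and individual studies may have used medians, race-model comparisons, or other indices instead.

```python
from statistics import mean


def multisensory_gain(rt_auditory, rt_visual, rt_audiovisual):
    """Percentage MRT gain of audiovisual over the faster unisensory condition.

    Assumed operationalisation (hypothetical, for illustration only):
        gain = 100 * (min(mean_A, mean_V) - mean_AV) / min(mean_A, mean_V)
    Positive values mean audiovisual responses were faster than the
    fastest unisensory responses; negative values mean they were slower.
    """
    fastest_unisensory = min(mean(rt_auditory), mean(rt_visual))
    return 100.0 * (fastest_unisensory - mean(rt_audiovisual)) / fastest_unisensory


# Hypothetical reaction times (ms) for one child.
auditory = [430, 410, 420]     # mean 420 ms
visual = [390, 400, 410]       # mean 400 ms (faster unisensory condition)
audiovisual = [350, 360, 370]  # mean 360 ms
print(round(multisensory_gain(auditory, visual, audiovisual), 1))  # 10.0
```

Taking the faster unisensory condition as the baseline makes the index conservative: a positive gain cannot arise simply because one modality is slow.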

| Study Details | | | | Participants | | Measures | | Meta-Analysis Outcomes |

|---|---|---|---|---|---|---|---|---|

| # | Citation | Aim as in Study | Study Design | N | Age M (SD); Range | Multisensory Task/s | Memory Task/s | Meta-Analysis Category: Fisher’s z / Cohen’s d [95% CI] |

| 1 | (Alhamdan et al., 2023b) | To investigate developmental changes in the associations between age, and visual and auditory short-term and WM, and multisensory processing | Correlation | 75 | 7.95 (1.70) 5–10 years | Simple Audiovisual Detection Task | “VWM and AWM” using (Digit Span Tasks) | 1. AV−MRTs vs. VWM (Forwards) −0.40 [−0.63, −0.17] 2. AV−MRTs vs. VWM (Backwards) −0.55 [−0.78, −0.32] 3. AV−MRTs vs. AWM (Forwards) −0.38 [−0.65, −0.15] 4. AV−MRTs vs. AWM (Backwards) −0.45 [−0.68, −0.22] |

| 2 | (Badian, 1977) | To explore the nature of auditory–visual processing and memory performance | Correlation | 30 | 9.71 (11.95) 8–11 years 0 | Verbal Multisensory Tasks | “AWM” Auditory Memory Task (AMT) and the Auditory Sequential Memory subtest (ITPA) | 1. MS (verbal) vs. AMT 0.21 [−0.16, 0.59] 2. MS (verbal) vs. ITPA 0.29 [−0.09, 0.66] |

| 3 | (Barutchu et al., 2011) | To investigate the relationships between multisensory integration, auditory background noise, and the general intellectual abilities and AWM | Correlation | 85 | 9.7 7–11 years | Simple Audiovisual Detection Task | “AWM” WISC–IV WMI | AV−MRTs vs. AWM −0.13 [−0.35, 0.09] |

| 4 | (Barutchu et al., 2019) | To investigate developmental relationships between attention, multisensory processes, and children’s general intellectual abilities including AWM | Correlation | 51 | 9.18 (1.61) 7–13 years | McGurk Effect/Illusion Task | “AWM” WISC–IV WMI | MS (McGurk) vs. AWM −0.13 [−0.41, 0.15] |

| 5 | (Barutchu et al., 2020) | To investigate the developmental profile and relationships between uni- and multisensory processes and children’s intellectual abilities including AWM | Correlation | 41 | 9.85 8–11 years | Simple Audiovisual Detection Task Associative Learning Tasks (ALT) | “AWM” WISC–IV WMI | 1. AV−MRTs vs. AWM 0.33 [0.00, 0.66] 2. AV−MRTs (ALT−Novel) vs. AWM 0.17 [−0.16, 0.50] 3. AV−MRTs (ALT−Verbal) vs. AWM −0.06 [−0.39, 0.27] |

| 6 | (Crawford & Fry, 1979) | To explore the contributions of auditory and visual WM to audiovisual multisensory stimuli | Correlation | 52 | 6.75 5–9 years | Matching Auditory and Visual trigrams | “VWM” Visual-Sequential Memory subtest (ITPA), and “AWM” WISC (Digit Span) | 1. Verbal (A−V) vs. Total−WM 0.55 [0.27, 0.83] 2. Verbal (V−A) vs. Total−WM 0.69 [0.41, 0.97] 3. (A−V) vs. VWM 0.52 [0.24, 0.80] |

| 7 | (Davies et al., 2019) | To test the developmental increase in audiovisual binding ability and its influence on retrieving bound audiovisual information and measures of verbal and visual complex WM span | Correlation | 49 | 5.6 (3.25) 5–6 years | Audiovisual Change Detection Task | “AWM” Listening Recall Task and “VWM” Odd One Out Task | 1. Verbal (A−V binding) vs. AWM 0.65 [0.36, 0.94] 2. Verbal (A−V binding) vs. VWM 0.60 [0.32, 0.89] |

| 8 | (Denervaud et al., 2020b) | To investigate the relationship between multisensory processing and higher-level cognition such as WM, and fluid intelligence in school children | Correlation | 77 | 8.1 (3.0) 4–15 years | Simple Audiovisual Detection Task | “AWM” WISC (Digit Span) | AV−MRTs vs. AWM −0.56 [−0.79, −0.33] |

| 9 | (Montgomery et al., 2009) | To examine the potential influence of three main components of WM, and processing speed (AV reaction time) on children’s ability to understand spoken narrative | Correlation | 67 | 8.39 (1.69) 6–11 years | Auditory–visual MRTs Task (colour word recognition) | “AWM” WISC (Digit Span), and Concurrent processing-storage (CPS) | 1. AV−MRTs vs. AWM (DS) −0.46 [−0.70, −0.21] 2. AV−MRTs vs. AWM (CPS) −0.51 [−0.76, −0.27] |

| 10 | (Magimairaj et al., 2009) | To investigate the relative contribution of storage, processing speed (AV reaction time), and attentional allocation on digits and complex span tests | Correlation | 65 | 8.6 (1.8) 6–12 years | Auditory–visual MRTs Task (colour word recognition) | “AWM” WISC (Digit Span), and Complex Memory Span Task (CMST) | 1. AV−MRTs vs. AWM (CMST) −0.44 [−0.68, −0.19] 2. AV−MRTs vs. AWM −0.24 [−0.49, 0.00] |

| 11 | (Pillai & Yathiraj, 2017a) | To test whether there are associations between different memory skills and the auditory, visual, and combined modalities | Correlation | 28 | 8.1 7–8 years | Auditory, Visual and Audiovisual Scores and Span Tasks | Revised Auditory Memory and Sequencing Test (RAMST-IE) | 1. Verbal MS vs. AWM (RAMST−Span) 0.42 [0.03, 0.82] 2. Verbal MS vs. AWM (RAMST−Score) 0.89 [0.50, 1.28] |

| 12 | (Constantinidou et al., 2011) | To investigate the effects of stimulus presentation modality (i.e., auditory, visual and audiovisual) on WM performance | Correlation & Group differences | 40 | 9.85 (1.03) 7–13 years | Auditory, Visual, and Simultaneous Auditory visual Task | “AWM” CVLT-C (Listening Recall Task) | Correlation: Verbal (A−V) vs. AWM 0.38 [0.06, 0.69] Group Differences: 1. WMC−AVS vs. WMC−AS (M = 8 years) 0.32 [−0.28, 0.91] 2. WMC−AVS vs. WMC−AS (M = 10 years) 0.75 [0.07, 1.42] 3. WMC−AVS vs. WMC−VS (M = 8 years) 0.07 [−0.52, 0.67] 4. WMC−AVS vs. WMC−VS (M = 10 years) −0.19 [−0.84, 0.47] |

| 13 | (Constantinidou & Evripidou, 2012) | To investigate the effects of stimulus presentation modality on WM performance in children | Group differences | 20 | 11.5 (5.07) 10–12 years | Auditory, Visual, and Simultaneous Auditory visual Task using the AVLT (Auditory Verbal Learning Test) paradigm | | 1. WMC-AVS vs. WMC-AS 0.23 [−0.39, 0.86] 2. WMC-AVS vs. WMC-VS −0.28 [−0.91, 0.34] |

| 14 | (Choudhury et al., 2007) | To determine whether performance on infant information processing measures differs as a function of family history of specific language impairment or of the particular demand characteristics of the two different Rapid Auditory Processing paradigms used | Group differences | 29 | 8.97 mo (1.0) 6–9 mo | Auditory Visual Habituation Recognition Memory Task | | 1. AVH/RM (70 ms) vs. VH/RM 0.25 [−0.27, 0.76] 2. AVH/RM (300 ms) vs. VH/RM 0.12 [−0.39, 0.64] |

| 15 | (Ortiz-Mantilla et al., 2008) | To determine whether performance differences on language and cognitive tasks were due to poorer global cognitive performance or to deficits in specific processing abilities | Group differences | 32 | 8.97 mo (0.58) 6 & 9 mo | Auditory Visual Habituation Recognition Memory Task | | 1. AVH/RM (6 mo) vs. VH/RM 0.20 [−0.29, 0.70] 2. AVH/RM (9 mo) vs. VH/RM −0.12 [−0.61, 0.37] |

| 16 | (Field & Anderson, 1985) | To investigate how children’s visual orientation and memory recall of a video clip are influenced by different factors, such as the emphasis on different modalities (visual, auditory, or both) | Group differences | 80 | 5.1 & 9.1 5 & 9 years | Cued and Free Recall Measures of Television Program | | 1. WMC-AVS vs. WMC-AS (cued; 5 years) 0.67 [0.35, 0.98] 2. WMC-AVS vs. WMC-AS (cued; 9 years) 0.23 [−0.08, 0.54] 3. WMC-AVS vs. WMC-AS (free; 5 years) 0.25 [−0.06, 0.56] 4. WMC-AVS vs. WMC-AS (free; 9 years) 0.64 [0.33, 0.96] 5. WMC-AVS vs. WMC-VS (cued; 5 years) 0.46 [0.14, 0.77] 6. WMC-AVS vs. WMC-VS (cued; 9 years) 1.20 [0.86, 1.53] 7. WMC-AVS vs. WMC-VS (free; 5 years) 0.12 [−0.19, 0.43] 8. WMC-AVS vs. WMC-VS (free; 9 years) 0.79 [0.47, 1.12] |

| 17 | (Gillam et al., 1998) | To test the immediate recall of digits presented visually, auditorily, or audiovisually | Group differences | 16 | 9.8 (1.2) 8–12 years | Audio-Visual Digit Recall Task | | 1. WMC-AVS vs. WMC-AS (WM) −0.26 [−0.95, 0.44] 2. WMC-AVS vs. WMC-AS (STM) −0.21 [−0.90, 0.49] 3. WMC-AVS vs. WMC-VS (WM) −0.19 [−0.89, 0.50] 4. WMC-AVS vs. WMC-VS (STM) 0.18 [−0.52, 0.87] |

| 18 | (Hatchette & Evans, 1983) | To determine whether audiovisual processing (temporal and spatial integration) is the critical factor in accounting for performance on pattern matching tasks | Group differences | 18 | 8.6 7.3–10 years | Multisensory temporal/spatial Pattern Matching Task | | 1. WMC-AVS vs. WMC-AS (WM) 0.46 [−0.20, 1.12] 2. WMC-AVS vs. WMC-VS (spatial) 0.49 [−0.18, 1.16] 3. WMC-AVS vs. WMC-VS (temporal) −0.14 [−0.79, 0.51] |

| 19 | (Pillai & Yathiraj, 2017b) | To assess whether a correlation exists between two scoring methods for a memory task (score and span) across three sensory conditions (auditory, visual, and auditory–visual) | Group differences | 28 | 8.1 7–9 years | Visual and Audiovisual Scores and Span Tasks | | 1. WMC-AVS vs. WMC-AS (Score) 0.34 [−0.18, 0.87] 2. WMC-AVS vs. WMC-AS (Span) −0.44 [−0.97, 0.09] 3. WMC-AVS vs. WMC-VS (Score) 0.88 [0.33, 1.42] 4. WMC-AVS vs. WMC-VS (Span) 0.46 [−0.07, 0.99] |
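The group-differences entries in Table 2 are reported as Cohen’s d with a 95% CI, but the computation is not described in this section. As a sketch, the code below assumes the standard independent-groups formulas for a standardised mean difference (Borenstein, 2009): d is the mean difference divided by the pooled SD, and its variance is (n1 + n2)/(n1·n2) + d²/(2(n1 + n2)). The function name and the example scores are hypothetical. Because several studies compared conditions within the same children, a variance that accounts for the correlation between conditions would be more exact for those designs.

```python
import math


def cohens_d_ci(m1, sd1, n1, m2, sd2, n2, z_crit=1.959964):
    """Cohen's d (pooled SD) with an approximate normal-theory 95% CI.

    Assumes two independent groups; the variance of d is
        V_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    """
    sd_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / sd_pooled
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    return d, d - z_crit * se, d + z_crit * se


# Hypothetical WM capacity scores: audiovisual (AVS) vs. auditory-only (AS) condition.
d, lo, hi = cohens_d_ci(m1=12.0, sd1=2.0, n1=20, m2=11.0, sd2=2.0, n2=20)
print(f"d = {d:.2f} [{lo:.2f}, {hi:.2f}]")  # d = 0.50 [-0.13, 1.13]
```

With 20 children per condition the interval half-width is about 0.63, which is why many of the group-difference intervals in Table 2 cross zero.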

| Study Details | | | | Participants | | Measures | | Results | |

|---|---|---|---|---|---|---|---|---|---|

| # | Citation | Aim of Study | Study Design | N | Age M (SD); Range | Multisensory Task/s | Memory Task/s | Correlation, p | Fisher’s z [95% CI] |

| 1 | (Broadbent et al., 2018) | To examine the role of multisensory information on incidental category learning during an attentional vigilance task | Correlation | 185 | 8.17 (0.41) 6–10 years | Multisensory Attention Learning Task (MALT) | “AWM” WISC (Digit Span Backward) | r = 0.118, ns r = 0.009 a, ns | 0.12 [−0.03, 0.26] 0.01 [−0.14, 0.16] |

| 2 | (Cleary et al., 2001) | To examine the relationship between verbal digit span and each of the three different presentation conditions (visual, auditory and audiovisual) | Correlation | 44 | 8.10 8–9 years | Auditory-plus-visual–spatial memory game | “AWM” WISC (Digit Span Forward & Backward) | r = 0.58, <0.01 r = 0.36, <0.05 | 0.66 [0.36, 0.97] 0.38 [0.07, 0.68] |

| 3 | (Barutchu et al., 2020) | See Table 2, study #5 for further details | | | | Associative Learning Tasks (ALT) | “AWM” WISC–IV WMI | r = 0.09, ns r = 0.17, ns | 0.09 [−0.24, 0.42] 0.18 [−0.16, 0.50] |
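The Fisher’s z column above can be checked against the reported r and N with the usual transformation z = atanh(r) and standard error 1/√(N − 3). With the listed sample sizes, the sketch below reproduces the Broadbent et al. (2018) and Cleary et al. (2001) entries to two decimals. Not every row matches exactly (e.g., the Barutchu et al., 2020 intervals are slightly wider than this formula gives with N = 41), so a different effective N may have been used for some analyses. The function name is hypothetical.

```python
import math


def fisher_z_ci(r, n, z_crit=1.959964):
    """Fisher's z transform of a Pearson r with a normal-theory 95% CI.

    z = atanh(r) = 0.5 * ln((1 + r) / (1 - r)),  SE = 1 / sqrt(n - 3)
    """
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return z, z - z_crit * se, z + z_crit * se


# r and N as listed in the table above.
for study, r, n in [("Broadbent et al., 2018", 0.118, 185),
                    ("Cleary et al., 2001", 0.58, 44),
                    ("Cleary et al., 2001", 0.36, 44)]:
    z, lo, hi = fisher_z_ci(r, n)
    print(f"{study}, r = {r}: {z:.2f} [{lo:.2f}, {hi:.2f}]")
# Broadbent et al., 2018, r = 0.118: 0.12 [-0.03, 0.26]
# Cleary et al., 2001, r = 0.58: 0.66 [0.36, 0.97]
# Cleary et al., 2001, r = 0.36: 0.38 [0.07, 0.68]
```

The transformation makes the sampling distribution approximately normal with a variance that depends only on N, which is what allows correlations from different studies to be pooled.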

3.3.1. Participant Characteristics

3.3.2. Task Characteristics in Correlational Studies

3.3.3. Task Characteristics in Group Differences Studies

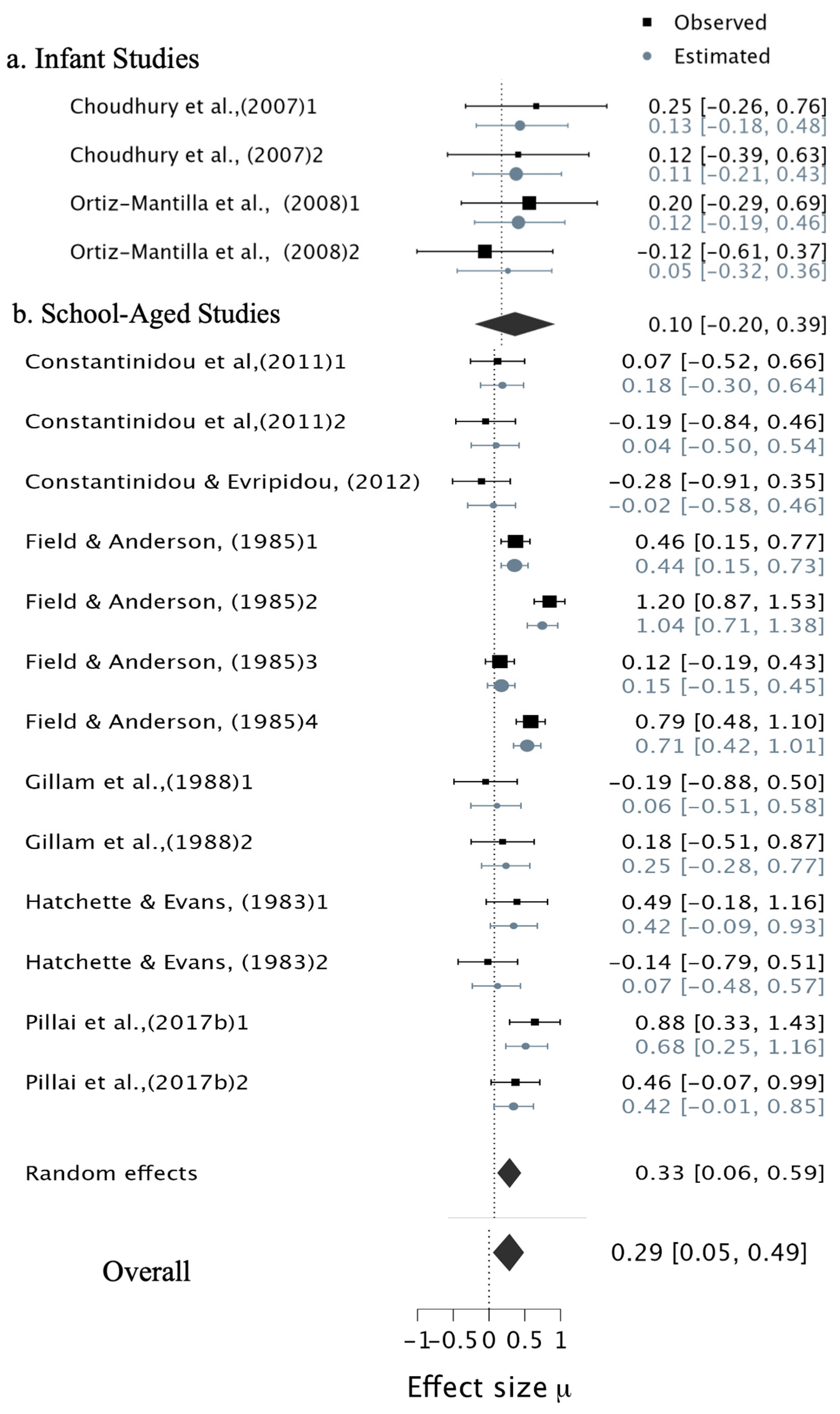

3.4. Result of Bayesian Meta-Analyses

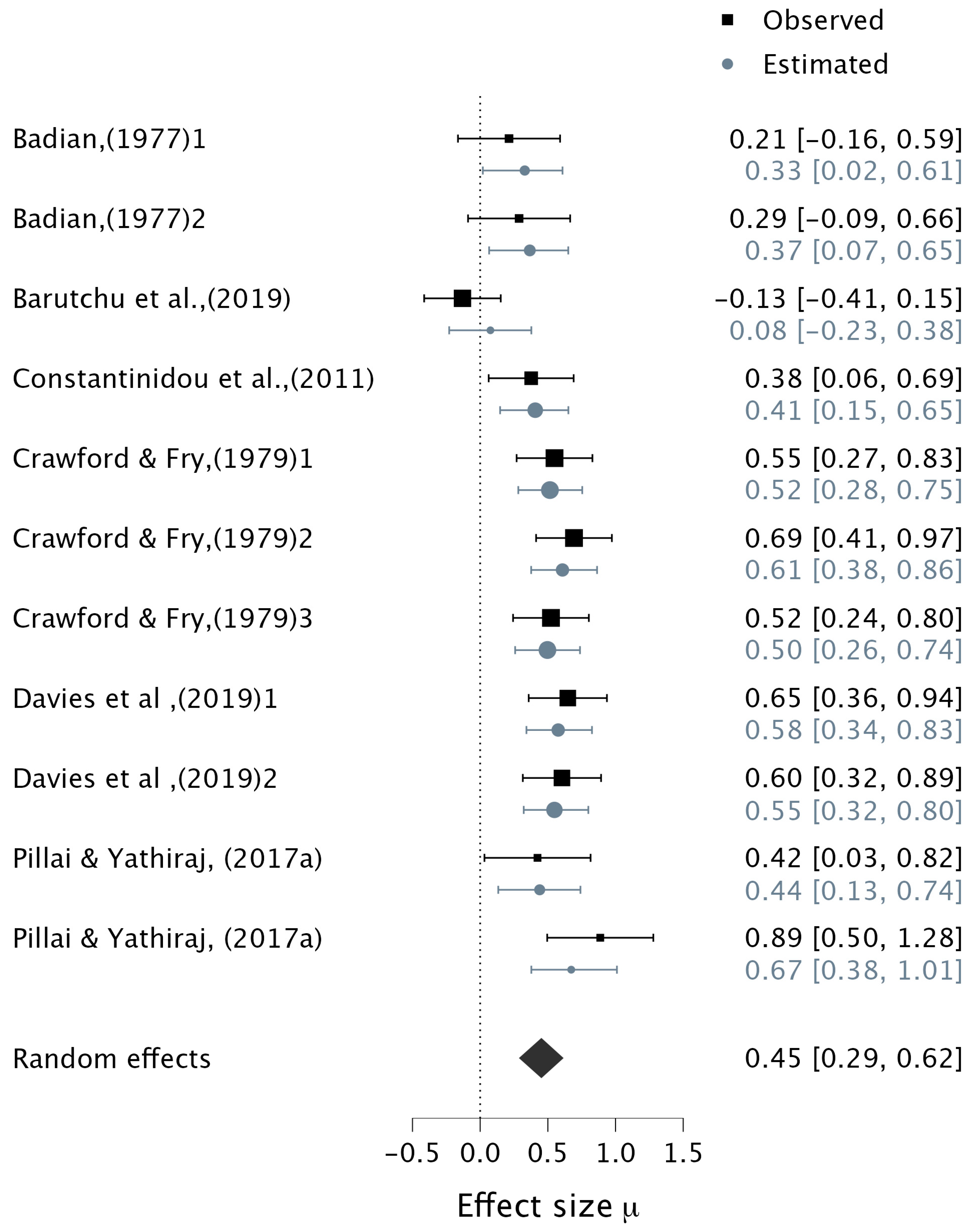
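The review cites robust Bayesian meta-analysis (Bartoš et al., 2021, 2023), which additionally model-averages across publication-bias adjustment methods. The sketch below shows only the core building block: a normal random-effects model, y_i ~ N(μ, se_i² + τ²), whose posterior and Bayes factor BF10 (μ ≠ 0 versus μ = 0) are approximated on a grid. The priors (μ ~ N(0, 1); τ ~ half-Cauchy(0, 0.5), truncated at 2), the function names, and the choice of inputs are assumptions for illustration. The sketch models neither publication bias nor dependence among multiple outcomes from the same sample, so its output should not be read as reproducing the reported results.

```python
import numpy as np


def normal_logpdf(x, mean, sd):
    """Log density of a normal distribution (broadcasts over arrays)."""
    return -0.5 * np.log(2.0 * np.pi * sd**2) - 0.5 * ((x - mean) / sd) ** 2


def bayes_re_meta(y, se, mu_prior_sd=1.0, tau_scale=0.5, n_grid=401):
    """Grid approximation to a Bayesian normal random-effects meta-analysis.

    Assumed model (illustrative priors, not the review's):
        y_i ~ Normal(mu, se_i**2 + tau**2)
        H1: mu ~ Normal(0, mu_prior_sd**2)   H0: mu = 0
        tau ~ Half-Cauchy(0, tau_scale), truncated to [0, 2]
    """
    y = np.asarray(y, dtype=float)
    se = np.asarray(se, dtype=float)
    mu = np.linspace(-3.0, 3.0, n_grid)
    tau = np.linspace(0.0, 2.0, n_grid)
    d_mu, d_tau = mu[1] - mu[0], tau[1] - tau[0]

    # Priors normalised on the grid, so H0 and H1 share the same tau prior.
    p_mu = np.exp(normal_logpdf(mu, 0.0, mu_prior_sd))
    p_mu /= p_mu.sum() * d_mu
    p_tau = 1.0 / (1.0 + (tau / tau_scale) ** 2)
    p_tau /= p_tau.sum() * d_tau

    # H1: likelihood on the (mu, tau) grid, summed over studies -> (n_grid, n_grid).
    sd1 = np.sqrt(se[:, None, None] ** 2 + tau[None, None, :] ** 2)
    loglik1 = normal_logpdf(y[:, None, None], mu[None, :, None], sd1).sum(axis=0)
    joint1 = np.exp(loglik1) * p_mu[:, None] * p_tau[None, :]
    evidence1 = joint1.sum() * d_mu * d_tau

    # H0: mu fixed at 0, so the likelihood depends on tau only.
    sd0 = np.sqrt(se[:, None] ** 2 + tau[None, :] ** 2)
    loglik0 = normal_logpdf(y[:, None], 0.0, sd0).sum(axis=0)
    evidence0 = (np.exp(loglik0) * p_tau).sum() * d_tau

    post = joint1 / joint1.sum()  # normalised posterior weights under H1
    return {
        "mu_mean": float(post.sum(axis=1) @ mu),
        "tau_mean": float(post.sum(axis=0) @ tau),
        "bf10": float(evidence1 / evidence0),
    }


# Illustrative input: one AV-MRT vs. AWM Fisher's z per study from Table 2
# (Barutchu et al., 2011; Barutchu et al., 2020; Denervaud et al., 2020b;
# Montgomery et al., 2009, Digit Span), with SE = CI width / (2 * 1.96).
y = [-0.13, 0.33, -0.56, -0.46]
se = [0.44 / 3.92, 0.66 / 3.92, 0.46 / 3.92, 0.49 / 3.92]
print(bayes_re_meta(y, se))
```

A grid is used instead of MCMC because the model has only two parameters, which keeps the example dependency-light and deterministic.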

3.4.1. Multisensory MRTs Tasks

3.4.2. Verbal Multisensory Tasks

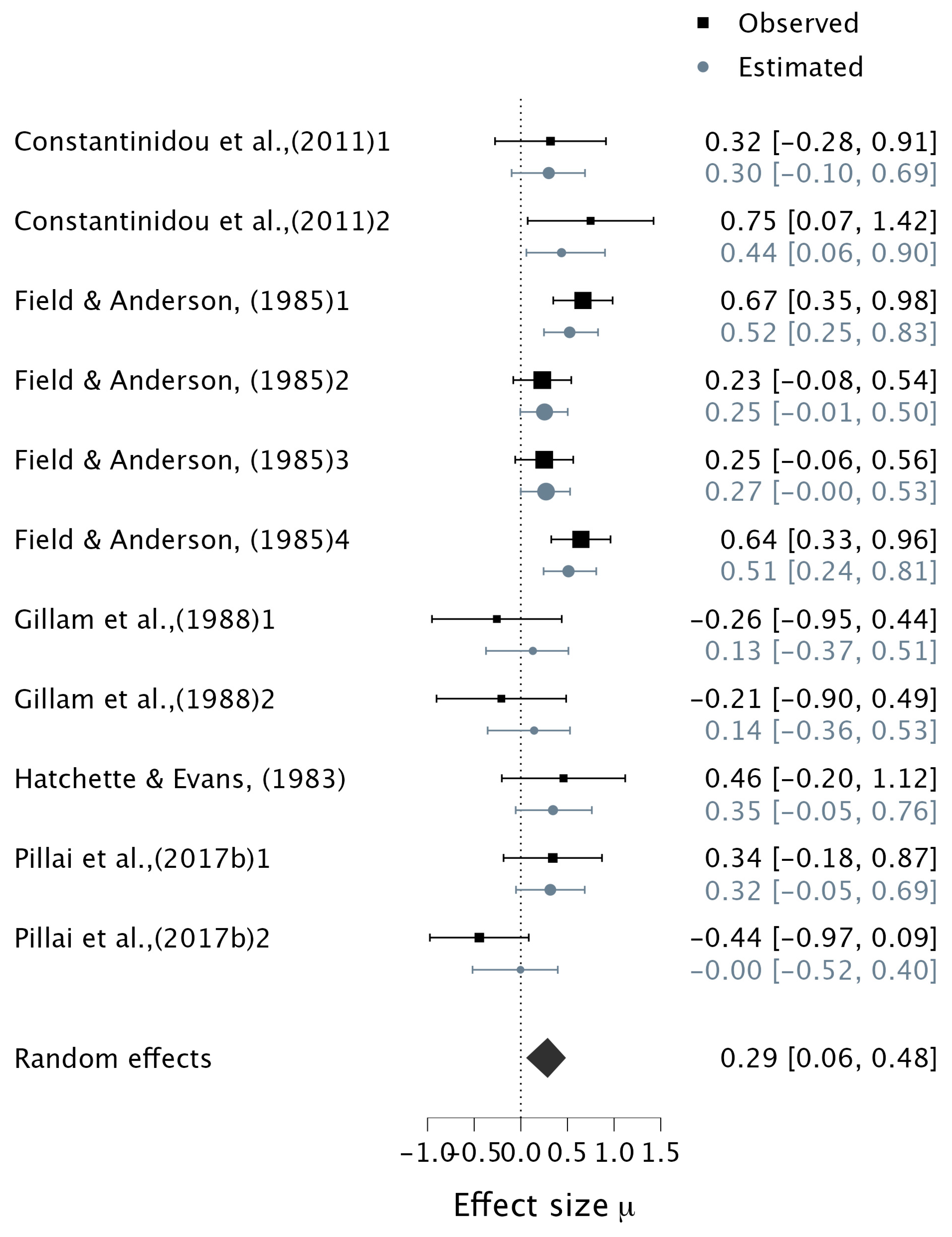

3.4.3. The Contribution of Multisensory vs. Unisensory Stimuli to WM Capacity

3.5. Moderator Meta-Regression Analysis
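Age is reported as a moderator of the multisensory–WM association. A minimal, frequentist sketch of the underlying idea is an inverse-variance weighted meta-regression of effect sizes on mean sample age, with weights 1/(se_i² + τ²). Here τ² is treated as known, which is a simplifying assumption. The review’s own moderator model is not specified in this section and may differ (e.g., a Bayesian meta-regression). The function name and all inputs are hypothetical.

```python
import numpy as np


def meta_regression(y, se, moderator, tau2=0.0):
    """Inverse-variance weighted meta-regression: y_i = b0 + b1 * moderator_i + error.

    Weights are 1 / (se_i**2 + tau2). tau2, the between-study variance, is
    treated as known here (a simplifying assumption). Returns the coefficient
    vector [b0, b1] and its standard errors.
    """
    y = np.asarray(y, dtype=float)
    w = 1.0 / (np.asarray(se, dtype=float) ** 2 + tau2)
    X = np.column_stack([np.ones_like(y), np.asarray(moderator, dtype=float)])
    XtW = X.T * w                  # X'W without building the diagonal matrix
    cov = np.linalg.inv(XtW @ X)   # (X'WX)^-1, sampling covariance of the coefficients
    beta = cov @ (XtW @ y)
    return beta, np.sqrt(np.diag(cov))


# Hypothetical effect sizes (Fisher's z), standard errors, and mean ages in years.
z = [-0.20, -0.35, -0.30, -0.50, -0.55]
se = [0.12, 0.15, 0.11, 0.13, 0.12]
age = [6.0, 7.5, 8.0, 9.7, 11.0]
beta, beta_se = meta_regression(z, se, age, tau2=0.01)
print("intercept, slope:", beta.round(3), "SEs:", beta_se.round(3))
```

The slope b1 is the expected change in effect size per year of mean sample age; a credible non-zero slope is what "age as a moderator" means in this framework.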

3.6. Results of Narrative Synthesis

4. Discussion

- First, verbal multisensory processing was extremely (decisively) associated with visual and auditory WM in children, whereas non-verbal motor reaction time (MRT) multisensory tasks showed strong correlations with auditory and visual WM. This suggests that verbal and MRT multisensory processing contribute differently to WM performance and might follow different developmental trajectories, as suggested by recent brain imaging studies (Gunaydin et al., 2025; Sydnor et al., 2025).

- Furthermore, as expected, age was a significant moderator of the association between multisensory processing and visual and auditory WM, highlighting the developmental maturation of both cognitively complex WM and multisensory processing abilities.

- Lastly, we found strong evidence that WM capacity for both verbal and MRT multisensory stimuli was higher than for either visual or auditory stimuli alone. These results are discussed first with reference to age and development, then in terms of the associations of MRT and verbal multisensory tasks with WM, and finally with respect to the contribution of multisensory stimuli to WM capacity.
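The Discussion grades evidence with terms such as “extremely/decisively” and “strong”, which match conventional Bayes factor categories (Jeffreys’ original scale labels BF10 > 100 “decisive”, and later adaptations call the same range “extreme”). The review’s exact cut-offs are not stated in this section, so the sketch assumes the common 3/10/30/100 thresholds; the function name is hypothetical.

```python
def bf_evidence_label(bf10):
    """Verbal label for a Bayes factor BF10 (assumed Jeffreys-style cut-offs).

    Assumed thresholds: 1-3 anecdotal, 3-10 moderate, 10-30 strong,
    30-100 very strong, above 100 extreme. BF10 below 1 is graded as
    evidence for H0 using 1 / BF10.
    """
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf10 == 1:
        return "no evidence either way"
    hypothesis = "H1" if bf10 > 1 else "H0"
    strength = bf10 if bf10 > 1 else 1.0 / bf10
    for cutoff, label in [(100, "extreme"), (30, "very strong"), (10, "strong"), (3, "moderate")]:
        if strength > cutoff:
            return f"{label} evidence for {hypothesis}"
    return f"anecdotal evidence for {hypothesis}"


print(bf_evidence_label(250))     # extreme evidence for H1
print(bf_evidence_label(1 / 15))  # strong evidence for H0
```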

4.1. Age Development in Motor and Verbal Multisensory Tasks and Their Relationship with WM

4.2. Contribution of Multisensory Stimuli to WM Capacity

4.3. Limitations and Future Direction

4.4. Conclusion and Implications

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Correction Statement

References

- Akoglu, H. (2018). User’s guide to correlation coefficients. Turkish Journal of Emergency Medicine, 18(3), 91–93. [Google Scholar] [CrossRef]

- Alais, D., Newell, F., & Mamassian, P. (2010). Multisensory processing in review: From physiology to behaviour. Seeing and Perceiving, 23(1), 3–38. [Google Scholar] [CrossRef]

- Alhamdan, A. A., Murphy, M., & Crewther, S. G. (2022). Age-related decrease in motor contribution to multisensory reaction times in primary school children. Frontiers in Human Neuroscience, 16, 967081. [Google Scholar] [CrossRef]

- Alhamdan, A. A., Murphy, M. J., & Crewther, S. G. (2023a). Visual motor reaction times predict receptive and expressive language development in early school-age children. Brain Sciences, 13(6), 965. [Google Scholar] [CrossRef]

- Alhamdan, A. A., Murphy, M. J., Pickering, H. E., & Crewther, S. G. (2019). A systematic review of the contribution of multisensory processing to memory performance in children (0–12-years). PROSPERO international prospective register of systematic reviews. Available online: https://www.crd.york.ac.uk/PROSPERO/display_record.php?RecordID=148110 (accessed on 29 July 2025).

- Alhamdan, A. A., Murphy, M. J., Pickering, H. E., & Crewther, S. G. (2023b). The contribution of visual and auditory working memory and non-verbal IQ to motor multisensory processing in elementary school children. Brain Sciences, 13(2), 270. [Google Scholar] [CrossRef] [PubMed]

- Anderson, J. D., & Wagovich, S. A. (2010). Relationships among linguistic processing speed, phonological working memory, and attention in children who stutter. Journal of Fluency Disorders, 35(3), 216–234. [Google Scholar] [CrossRef] [PubMed]

- Baddeley, A. (2003a). Working memory and language: An overview. Journal of Communication Disorders, 36(3), 189–208. [Google Scholar] [CrossRef] [PubMed]

- Baddeley, A. (2003b). Working memory: Looking back and looking forward. Nature Reviews Neuroscience, 4(10), 829–839. [Google Scholar] [CrossRef]

- Baddeley, A. (2007). Working memory, thought, and action (Vol. 45). OuP Oxford. [Google Scholar]

- Baddeley, A. (2012). Working memory: Theories, models, and controversies. Annual Review of Psychology, 63(1), 1–29. [Google Scholar] [CrossRef]

- Baddeley, A., Gathercole, S., & Papagno, C. (1998). The phonological loop as a language learning device. Psychological Review, 105(1), 158–173. [Google Scholar] [CrossRef]

- Baddeley, A., & Warrington, E. K. (1973). Memory coding and amnesia. Neuropsychologia, 11(2), 159–165. [Google Scholar] [CrossRef] [PubMed]

- Badian, N. A. (1977). Auditory-visual integration, auditory memory, and reading in retarded and adequate readers. Journal of Learning Disabilities, 10(2), 108–114. [Google Scholar] [CrossRef]

- Bahrick, L. E., & Lickliter, R. (2000). Intersensory redundancy guides attentional selectivity and perceptual learning in infancy. Developmental Psychology, 36(2), 190. [Google Scholar] [CrossRef]

- Bahrick, L. E., & Lickliter, R. (2012). The role of intersensory redundancy in early perceptual, cognitive, and social development. In A. J. Bremner, D. J. Lewkowicz, & C. Spence (Eds.), Multisensory development (pp. 183–206). Oxford University Press. [Google Scholar]

- Bartoš, F., Maier, M., Wagenmakers, E.-J., Doucouliagos, H., & Stanley, T. (2021). No need to choose: Robust Bayesian meta-analysis with competing publication bias adjustment methods. PsyArXiv Prepr. Available online: https://www.researchgate.net/publication/352502561_No_Need_to_Choose_Robust_Bayesian_Meta-Analysis_with_Competing_Publication_Bias_Adjustment_Methods (accessed on 28 July 2025).

- Bartoš, F., Maier, M., Wagenmakers, E.-J., Doucouliagos, H., & Stanley, T. D. (2023). Robust Bayesian meta-analysis: Model-averaging across complementary publication bias adjustment methods. Research Synthesis Methods, 14(1), 99–116.

- Barutchu, A., Crewther, D. P., & Crewther, S. G. (2009). The race that precedes coactivation: Development of multisensory facilitation in children. Developmental Science, 12(3), 464–473.

- Barutchu, A., Crewther, S. G., Fifer, J., Shivdasani, M. N., Innes-Brown, H., Toohey, S., Danaher, J., & Paolini, A. G. (2011). The relationship between multisensory integration and IQ in children. Developmental Psychology, 47(3), 877–885.

- Barutchu, A., Fifer, J. M., Shivdasani, M. N., Crewther, S. G., & Paolini, A. G. (2020). The interplay between multisensory associative learning and IQ in children. Child Development, 91(2), 620–637.

- Barutchu, A., Toohey, S., Shivdasani, M. N., Fifer, J. M., Crewther, S. G., Grayden, D. B., & Paolini, A. G. (2019). Multisensory perception and attention in school-age children. Journal of Experimental Child Psychology, 180, 141–155.

- Bates, E., O’Connell, B., Vaid, J., Sledge, P., & Oakes, L. (1986). Language and hand preference in early development. Developmental Neuropsychology, 2(1), 1–15.

- Beauchamp, M. S., Lee, K. E., Argall, B. D., & Martin, A. (2004). Integration of auditory and visual information about objects in superior temporal sulcus. Neuron, 41(5), 809–823.

- Blau, V., Reithler, J., van Atteveldt, N., Seitz, J., Gerretsen, P., Goebel, R., & Blomert, L. (2010). Deviant processing of letters and speech sounds as proximate cause of reading failure: A functional magnetic resonance imaging study of dyslexic children. Brain, 133(3), 868–879.

- Borenstein, M. (2009). Effect sizes based on means. In Introduction to meta-analysis (pp. 21–32). John Wiley & Sons.

- Borenstein, M., Hedges, L. V., Higgins, J. P., & Rothstein, H. R. (2021). Introduction to meta-analysis. John Wiley & Sons.

- Braddick, O., & Atkinson, J. (2011). Development of human visual function. Vision Research, 51(13), 1588–1609.

- Bradley, L., & Bryant, P. E. (1983). Categorizing sounds and learning to read—A causal connection. Nature, 301(5899), 419–421.

- Brandwein, A. B., Foxe, J. J., Russo, N. N., Altschuler, T. S., Gomes, H., & Molholm, S. (2011). The development of audiovisual multisensory integration across childhood and early adolescence: A high-density electrical mapping study. Cerebral Cortex, 21(5), 1042–1055.

- Bremner, A. J., Lewkowicz, D. J., & Spence, C. (2012). The multisensory approach to development. In Multisensory development (pp. 1–26). Oxford University Press.

- Broadbent, H. J., White, H., Mareschal, D., & Kirkham, N. Z. (2018). Incidental learning in a multisensory environment across childhood. Developmental Science, 21(2), e12554.

- Bucci, W., & Freedman, N. (1978). Language and hand: The dimension of referential competence. Journal of Personality, 46(4), 594–622.

- Buschman, T. J., & Miller, E. K. (2022). Working memory is complex and dynamic, like your thoughts. Journal of Cognitive Neuroscience, 35(1), 17–23.

- Buss, A. T., Fox, N., Boas, D. A., & Spencer, J. P. (2014). Probing the early development of visual working memory capacity with functional near-infrared spectroscopy. NeuroImage, 85, 314–325.

- Buss, A. T., Ross-Sheehy, S., & Reynolds, G. D. (2018). Visual working memory in early development: A developmental cognitive neuroscience perspective. Journal of Neurophysiology, 120(4), 1472–1483.

- Chartier, J., Anumanchipalli, G. K., Johnson, K., & Chang, E. F. (2018). Encoding of articulatory kinematic trajectories in human speech sensorimotor cortex. Neuron, 98(5), 1042–1054.e4.

- Choudhury, N., Leppanen, P. H., Leevers, H. J., & Benasich, A. A. (2007). Infant information processing and family history of specific language impairment: Converging evidence for RAP deficits from two paradigms. Developmental Science, 10(2), 213–236.

- Christian, K. T., Megan, M. H., Anne-Lise, G., Rosa, M., Sarah-Jayne, B., Ronald, E. D., Berna, G., Armin, R., Elizabeth, R. S., Eveline, A. C., & Kathryn, L. M. (2017). Development of the cerebral cortex across adolescence: A multisample study of inter-related longitudinal changes in cortical volume, surface area, and thickness. The Journal of Neuroscience, 37(12), 3402.

- Cleary, M., Pisoni, D. B., & Geers, A. E. (2001). Some measures of verbal and spatial working memory in eight- and nine-year-old hearing-impaired children with cochlear implants. Ear and Hearing, 22(5), 395.

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Taylor & Francis.

- Constantinidou, F., Danos, M. A., Nelson, D., & Baker, S. (2011). Effects of modality presentation on working memory in school-age children: Evidence for the pictorial superiority hypothesis. Child Neuropsychology, 17(2), 173–196.

- Constantinidou, F., & Evripidou, C. (2012). Stimulus modality and working memory performance in Greek children with reading disabilities: Additional evidence for the pictorial superiority hypothesis. Child Neuropsychology, 18(3), 256–280.

- Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3(3), 201–215.

- Corbetta, M., Shulman, G. L., Miezin, F. M., & Petersen, S. E. (1995). Superior parietal cortex activation during spatial attention shifts and visual feature conjunction. Science, 270(5237), 802–805.

- Crawford, J. H., & Fry, M. A. (1979). Trait-task interaction in intra- and intermodal matching of auditory and visual trigrams. Contemporary Educational Psychology, 4(1), 1–10.

- Crewther, S. G., Crewther, D. P., Klistorner, A., & Kiely, P. M. (1999). Development of the magnocellular VEP in children: Implications for reading disability. Electroencephalography and Clinical Neurophysiology. Supplement, 49, 123–128. Available online: http://europepmc.org/abstract/MED/10533097.

- Cuturi, L. F., Cappagli, G., Yiannoutsou, N., Price, S., & Gori, M. (2022). Informing the design of a multisensory learning environment for elementary mathematics learning. Journal on Multimodal User Interfaces, 16, 155–171.

- Davies, S., Bourke, L., & Harrison, N. (2019). Does audio-visual binding as an integrative function of working memory influence the early stages of learning to write? Reading and Writing, 33(4), 835–857.

- Denervaud, S., Gentaz, E., Matusz, P. J., & Murray, M. M. (2020a). An fMRI study of error monitoring in Montessori and traditionally-schooled children. NPJ Science of Learning, 5(1), 11.

- Denervaud, S., Gentaz, E., Matusz, P. J., & Murray, M. M. (2020b). Multisensory gains in simple detection predict global cognition in schoolchildren. Scientific Reports, 10(1), 1394.

- D’Esposito, M. (2007). From cognitive to neural models of working memory. Philosophical Transactions of the Royal Society B: Biological Sciences, 362(1481), 761–772.

- Dionne-Dostie, E., Paquette, N., Lassonde, M., & Gallagher, A. (2015). Multisensory integration and child neurodevelopment. Brain Sciences, 5(1), 32–57.

- Dobinson, K. L., & Dockrell, J. E. (2021). Universal strategies for the improvement of expressive language skills in the primary classroom: A systematic review. First Language, 41(5), 527–554.

- Downes, M. J., Brennan, M. L., Williams, H. C., & Dean, R. S. (2016). Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ Open, 6(12), e011458.

- Dunlap, W. P., Cortina, J. M., Vaslow, J. B., & Burke, M. J. (1996). Meta-analysis of experiments with matched groups or repeated measures designs. Psychological Methods, 1(2), 170–177.

- Ebaid, D., & Crewther, S. G. (2020). The contribution of oculomotor functions to rates of visual information processing in younger and older adults. Scientific Reports, 10(1), 10129.

- Elhassan, Z., Crewther, S. G., & Bavin, E. L. (2017). The contribution of phonological awareness to reading fluency and its individual sub-skills in readers aged 9- to 12-years. Frontiers in Psychology, 8, 533.

- Eroğlu, G., Teber, S., Ertürk, K., Kırmızı, M., Ekici, B., Arman, F., Balcisoy, S., Özcan, Y. Z., & Çetin, M. (2022). A mobile app that uses neurofeedback and multi-sensory learning methods improves reading abilities in dyslexia: A pilot study. Applied Neuropsychology: Child, 11(3), 518–528.

- Eysenck, M. W., Derakshan, N., Santos, R., & Calvo, M. G. (2007). Anxiety and cognitive performance: Attentional control theory. Emotion, 7(2), 336.

- Field, D. E., & Anderson, D. R. (1985). Instruction and modality effects on children’s television attention and comprehension. Journal of Educational Psychology, 77(1), 91.

- Fifer, J. M., Barutchu, A., Shivdasani, M. N., & Crewther, S. G. (2013). Verbal and novel multisensory associative learning in adults. F1000Research, 2, 34.

- Flom, R., & Bahrick, L. E. (2007). The development of infant discrimination of affect in multimodal and unimodal stimulation: The role of intersensory redundancy. Developmental Psychology, 43(1), 238.

- Foxe, J. J., & Simpson, G. V. (2005). Biasing the brain’s attentional set: II. Effects of selective intersensory attentional deployments on subsequent sensory processing. Experimental Brain Research, 166(3), 393–401.

- Froyen, D., Willems, G., & Blomert, L. (2011). Evidence for a specific cross-modal association deficit in dyslexia: An electrophysiological study of letter–speech sound processing. Developmental Science, 14(4), 635–648.

- Gathercole, S. E., & Baddeley, A. D. (1990). Phonological memory deficits in language disordered children: Is there a causal connection? Journal of Memory and Language, 29(3), 336–360.

- Gathercole, S. E., Willis, C. S., Emslie, H., & Baddeley, A. D. (1992). Phonological memory and vocabulary development during the early school years: A longitudinal study. Developmental Psychology, 28(5), 887–898.

- Gillam, R. B., Cowan, N., & Marler, J. A. (1998). Information processing by school-age children with specific language impairment: Evidence from a modality effect paradigm. Journal of Speech, Language, and Hearing Research, 41(4), 913–926.

- Gkintoni, E., Vassilopoulos, S. P., & Nikolaou, G. (2025). Brain-inspired multisensory learning: A systematic review of neuroplasticity and cognitive outcomes in adult multicultural and second language acquisition. Biomimetics, 10(6), 397.

- Goldberg, M. E., & Wurtz, R. H. (1972). Activity of superior colliculus in behaving monkey. II. Effect of attention on neuronal responses. Journal of Neurophysiology, 35(4), 560–574.

- Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15(1), 20–25.

- Grady, C. L. (2012). The cognitive neuroscience of ageing. Nature Reviews Neuroscience, 13(7), 491–505.

- Gronau, Q. F., Heck, D. W., Berkhout, S. W., Haaf, J. M., & Wagenmakers, E.-J. (2021). A primer on Bayesian model-averaged meta-analysis. Advances in Methods and Practices in Psychological Science, 4(3), 25152459211031256.

- Guenther, F. H., & Hickok, G. (2016). Chapter 58—Neural models of motor speech control. In G. Hickok, & S. L. Small (Eds.), Neurobiology of language (pp. 725–740). Academic Press.

- Gunaydin, G., Moran, J. K., Rohe, T., & Senkowski, D. (2025). Causal inference shapes crossmodal postdictive perception within the temporal window of multisensory integration. bioRxiv.

- Hackenberger, B. K. (2020). Bayesian meta-analysis now—let’s do it. Croatian Medical Journal, 61(6), 564–568.

- Hahn, N., Foxe, J. J., & Molholm, S. (2014). Impairments of multisensory integration and cross-sensory learning as pathways to dyslexia. Neuroscience & Biobehavioral Reviews, 47, 384–392.

- Haier, R. J., Siegel, B. V., Nuechterlein, K. H., Hazlett, E., Wu, J. C., Paek, J., Browning, H. L., & Buchsbaum, M. S. (1988). Cortical glucose metabolic rate correlates of abstract reasoning and attention studied with positron emission tomography. Intelligence, 12(2), 199–217.

- Hatchette, R. K., & Evans, J. R. (1983). Auditory-visual and temporal-spatial pattern matching performance of two types of learning-disabled children. Journal of Learning Disabilities, 16(9), 537–541.

- Hedman, A. M., van Haren, N. E. M., Schnack, H. G., Kahn, R. S., & Hulshoff Pol, H. E. (2012). Human brain changes across the life span: A review of 56 longitudinal magnetic resonance imaging studies. Human Brain Mapping, 33(8), 1987–2002.

- Heikkilä, J., Alho, K., Hyvönen, H., & Tiippana, K. (2015). Audiovisual semantic congruency during encoding enhances memory performance. Experimental Psychology, 62(2), 123–130.

- Heikkilä, J., & Tiippana, K. (2016). School-aged children can benefit from audiovisual semantic congruency during memory encoding. Experimental Brain Research, 234(5), 1199–1207.

- Higgins, J. P., & Thompson, S. G. (2002). Quantifying heterogeneity in a meta-analysis. Statistics in Medicine, 21(11), 1539–1558.

- Hitch, G. J., & Baddeley, A. (1976). Verbal reasoning and working memory. The Quarterly Journal of Experimental Psychology, 28(4), 603–621.

- Hocking, J., & Price, C. J. (2009). Dissociating verbal and nonverbal audiovisual object processing. Brain and Language, 108(2), 89–96.

- Hopfinger, J. B., & Slotnick, S. D. (2020). Attentional control and executive function. Cognitive Neuroscience, 11(1–2), 1–4.

- Horowitz, F. D., Paden, L., Bhana, K., & Self, P. (1972). An infant-control procedure for studying infant visual fixations. Developmental Psychology, 7(1), 90.

- Hubel, D. H., & Wiesel, T. N. (1963). Receptive fields of cells in striate cortex of very young, visually inexperienced kittens. Journal of Neurophysiology, 26(6), 994–1002.

- Hullett, C. R., & Levine, T. R. (2003). The overestimation of effect sizes from F values in meta-analysis: The cause and a solution. Communication Monographs, 70(1), 52–67.

- JASP Team. (2022). JASP (Version 0.16.3) [Computer Software]. Available online: https://jasp-stats.org/ (accessed on 30 August 2022).

- Johnson, M. H. (1990). Cortical maturation and the development of visual attention in early infancy. Journal of Cognitive Neuroscience, 2(2), 81–95.

- Kanaka, N., Matsuda, T., Tomimoto, Y., Noda, Y., Matsushima, E., Matsuura, M., & Kojima, T. (2008). Measurement of development of cognitive and attention functions in children using continuous performance test. Psychiatry and Clinical Neurosciences, 62(2), 135–141.

- Kent, R. D. (2000). Research on speech motor control and its disorders: A review and prospective. Journal of Communication Disorders, 33(5), 391–428.

- Kocsis, Z., Jenison, R. L., Taylor, P. N., Calmus, R. M., McMurray, B., Rhone, A. E., Sarrett, M. E., Deifelt Streese, C., Kikuchi, Y., Gander, P. E., Berger, J. I., Kovach, C. K., Choi, I., Greenlee, J. D., Kawasaki, H., Cope, T. E., Griffiths, T. D., Howard, M. A., & Petkov, C. I. (2023). Immediate neural impact and incomplete compensation after semantic hub disconnection. Nature Communications, 14(1), 6264.

- Kopp, F. (2014). Audiovisual temporal fusion in 6-month-old infants. Developmental Cognitive Neuroscience, 9, 56–67.

- Langan, D., Higgins, J. P. T., Jackson, D., Bowden, J., Veroniki, A. A., Kontopantelis, E., Viechtbauer, W., & Simmonds, M. (2019). A comparison of heterogeneity variance estimators in simulated random-effects meta-analyses. Research Synthesis Methods, 10(1), 83–98.

- Laycock, R., Stein, J. F., & Crewther, S. G. (2022). Pathways for rapid visual processing: Subcortical contributions to emotion, threat, biological relevance, and motivated behavior. Frontiers in Behavioral Neuroscience, 16, 960448.

- Lewkowicz, D. J., & Bremner, A. J. (2020). Chapter 4—The development of multisensory processes for perceiving the environment and the self. In K. Sathian, & V. S. Ramachandran (Eds.), Multisensory perception (pp. 89–112). Academic Press.

- Lewkowicz, D. J., & Ghazanfar, A. A. (2009). The emergence of multisensory systems through perceptual narrowing. Trends in Cognitive Sciences, 13(11), 470–478.

- Lewkowicz, D. J., & Lickliter, R. (1994). The development of intersensory perception: Comparative perspectives. Psychology Press.

- Mabbott, D. J., Noseworthy, M., Bouffet, E., Laughlin, S., & Rockel, C. (2006). White matter growth as a mechanism of cognitive development in children. NeuroImage, 33(3), 936–946.

- Macaluso, E., & Driver, J. (2005). Multisensory spatial interactions: A window onto functional integration in the human brain. Trends in Neurosciences, 28(5), 264–271.

- Maegherman, G., Nuttall, H. E., Devlin, J. T., & Adank, P. (2019). Motor imagery of speech: The involvement of primary motor cortex in manual and articulatory motor imagery. Frontiers in Human Neuroscience, 13, 195.

- Magimairaj, B., Montgomery, J., Marinellie, S., & McCarthy, J. (2009). Relation of three mechanisms of working memory to children’s complex span performance. International Journal of Behavioral Development, 33(5), 460–469.

- Maitre, N. L., Key, A. P., Slaughter, J. C., Yoder, P. J., Neel, M. L., Richard, C., Wallace, M. T., & Murray, M. M. (2020). Neonatal multisensory processing in preterm and term infants predicts sensory reactivity and internalizing tendencies in early childhood. Brain Topography, 33(5), 586–599.

- Marsman, M., & Wagenmakers, E.-J. (2017). Bayesian benefits with JASP. European Journal of Developmental Psychology, 14(5), 545–555.

- Mason, G. M., Goldstein, M. H., & Schwade, J. A. (2019). The role of multisensory development in early language learning. Journal of Experimental Child Psychology, 183, 48–64.

- Massaro, D. W., Thompson, L. A., Barron, B., & Laren, E. (1986). Developmental changes in visual and auditory contributions to speech perception. Journal of Experimental Child Psychology, 41(1), 93–113.

- Mathias, B., Andrä, C., Schwager, A., Macedonia, M., & von Kriegstein, K. (2022). Twelve- and fourteen-year-old school children differentially benefit from sensorimotor- and multisensory-enriched vocabulary training. Educational Psychology Review, 34(3), 1739–1770.

- Matusz, P. J., Wallace, M. T., & Murray, M. M. (2017). A multisensory perspective on object memory. Neuropsychologia, 105, 243–252.

- McCulloch, D. L., & Skarf, B. (1991). Development of the human visual system: Monocular and binocular pattern VEP latency. Investigative Ophthalmology & Visual Science, 32(8), 2372–2381.

- Moeskops, P., Benders, M. J. N. L., Kersbergen, K. J., Groenendaal, F., de Vries, L. S., Viergever, M. A., & Išgum, I. (2015). Development of cortical morphology evaluated with longitudinal MR brain images of preterm infants. PLoS ONE, 10(7), e0131552.

- Molholm, S., Murphy, J. W., Bates, J., Ridgway, E. M., & Foxe, J. J. (2020). Multisensory audiovisual processing in children with a sensory processing disorder (I): Behavioral and electrophysiological indices under speeded response conditions. Frontiers in Integrative Neuroscience, 14, 4.

- Montgomery, J. W., Polunenko, A., & Marinellie, S. A. (2009). Role of working memory in children’s understanding spoken narrative: A preliminary investigation. Applied Psycholinguistics, 30(3), 485–509.

- Morris, S. B., & DeShon, R. P. (2002). Combining effect size estimates in meta-analysis with repeated measures and independent-groups designs. Psychological Methods, 7(1), 105.

- Muir, D., & Field, J. (1979). Newborn infants orient to sounds. Child Development, 431–436. Available online: https://www.jstor.org/stable/1129419 (accessed on 5 July 2021).

- Murray, M. M., Thelen, A., Thut, G., Romei, V., Martuzzi, R., & Matusz, P. J. (2016). The multisensory function of the human primary visual cortex. Neuropsychologia, 83, 161–169.

- Nardini, M., Bales, J., & Mareschal, D. (2016). Integration of audio-visual information for spatial decisions in children and adults. Developmental Science, 19(5), 803–816.

- Nardini, M., Jones, P., Bedford, R., & Braddick, O. (2008). Development of cue integration in human navigation. Current Biology, 18(9), 689–693.

- Neil, P. A., Chee-Ruiter, C., Scheier, C., Lewkowicz, D. J., & Shimojo, S. (2006). Development of multisensory spatial integration and perception in humans. Developmental Science, 9(5), 454–464.

- Nelson, C. A. (1995). The ontogeny of human memory: A cognitive neuroscience perspective. Developmental Psychology, 31(5), 723–738.

- Nijakowska, J. (2008). An experiment with direct multisensory instruction in teaching word reading and spelling to Polish dyslexic learners of English. In Language learners with special needs: An international perspective (pp. 130–157). Available online: https://scholar.google.com/scholar_lookup?title=An+Experiment+with+Direct+Multisensory+Instruction+in+Teaching+Word+Reading+and+Spelling+to+Polish+Dyslexic+Learners+of+English&author=Nijakowska,+Joanna&publication_year=2008&pages=130–57 (accessed on 28 July 2025).

- Nijakowska, J. (2013). Multisensory structured learning approach in teaching foreign languages to dyslexic learners. In D. Gabryś-Barker, E. Piechurska-Kuciel, & J. Zybert (Eds.), Investigations in teaching and learning languages: Studies in honour of Hanna Komorowska (pp. 201–215). Springer International Publishing.

- Okada, K., & Hickok, G. (2009). Two cortical mechanisms support the integration of visual and auditory speech: A hypothesis and preliminary data. Neuroscience Letters, 452(3), 219–223.

- Ortiz-Mantilla, S., Choudhury, N., Leevers, H., & Benasich, A. A. (2008). Understanding language and cognitive deficits in very low birth weight children. Developmental Psychobiology: The Journal of the International Society for Developmental Psychobiology, 50(2), 107–126.

- Padilla, N., Alexandrou, G., Blennow, M., Lagercrantz, H., & Ådén, U. (2015). Brain growth gains and losses in extremely preterm infants at term. Cerebral Cortex, 25(7), 1897–1905.

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., & Moher, D. (2021). Updating guidance for reporting systematic reviews: Development of the PRISMA 2020 statement. Journal of Clinical Epidemiology, 134, 103–112.

- Park, D. C., & Reuter-Lorenz, P. (2009). The adaptive brain: Aging and neurocognitive scaffolding. Annual Review of Psychology, 60, 173–196.

- Pickering, H. E., Peters, J. L., & Crewther, S. G. (2022). A role for visual memory in vocabulary development: A systematic review and meta-analysis. Neuropsychology Review, 33(4), 803–833.

- Pickering, S. J. (2001). The development of visuo-spatial working memory. Memory, 9(4–6), 423–432.

- Pillai, R., & Yathiraj, A. (2017a). Auditory, visual and auditory-visual memory and sequencing performance in typically developing children. International Journal of Pediatric Otorhinolaryngology, 100, 23–34.

- Pillai, R., & Yathiraj, A. (2017b). Two scoring procedures to evaluate memory and sequencing in auditory, visual and auditory-visual combined modalities. Hearing, Balance and Communication, 15(4), 214–220.

- Postle, B. R. (2006). Working memory as an emergent property of the mind and brain. Neuroscience, 139(1), 23–38.

- Quak, M., London, R. E., & Talsma, D. (2015). A multisensory perspective of working memory. Frontiers in Human Neuroscience, 9, 197.

- Richards, J. E. (1985). The development of sustained visual attention in infants from 14 to 26 weeks of age. Psychophysiology, 22(4), 409–416.

- Rossi, C., Vidaurre, D., Costers, L., Akbarian, F., Woolrich, M., Nagels, G., & Van Schependom, J. (2023). A data-driven network decomposition of the temporal, spatial, and spectral dynamics underpinning visual-verbal working memory processes. Communications Biology, 6(1), 1079.

- Shams, L., & Seitz, A. R. (2008). Benefits of multisensory learning. Trends in Cognitive Sciences, 12(11), 411–417.

- Shimi, A., Nobre, A. C., Astle, D., & Scerif, G. (2014). Orienting attention within visual short-term memory: Development and mechanisms. Child Development, 85(2), 578–592.

- Silver, N. C., & Dunlap, W. P. (1987). Averaging correlation coefficients: Should Fisher’s z transformation be used? Journal of Applied Psychology, 72(1), 146.

- Simonyan, K., & Horwitz, B. (2011). Laryngeal motor cortex and control of speech in humans. Neuroscientist, 17(2), 197–208.

- Smith, S. E., & Chatterjee, A. (2008). Visuospatial attention in children. Archives of Neurology, 65(10), 1284–1288.

- Snowling, M. J., Hulme, C., & Nation, K. (2022). The science of reading: A handbook. John Wiley & Sons.

- Soto-Faraco, S., Calabresi, M., Navarra, J., Werker, J. F., & Lewkowicz, D. J. (2012). The development of audiovisual speech perception. In Multisensory development (pp. 207–228). Oxford University Press.

- Squire, L. R. (1992). Declarative and nondeclarative memory: Multiple brain systems supporting learning and memory. Journal of Cognitive Neuroscience, 4(3), 232–243.

- Stein, B. E., Burr, D., Constantinidis, C., Laurienti, P. J., Alex Meredith, M., Perrault, T. J., Jr., Ramachandran, R., Röder, B., Rowland, B. A., & Sathian, K. (2010). Semantic confusion regarding the development of multisensory integration: A practical solution. European Journal of Neuroscience, 31(10), 1713–1720.

- Stein, B. E., & Stanford, T. R. (2008). Multisensory integration: Current issues from the perspective of the single neuron. Nature Reviews Neuroscience, 9(4), 255–266.

- Stokes, T. L., & Bors, D. A. (2001). The development of a same–different inspection time paradigm and the effects of practice. Intelligence, 29(3), 247–261.

- Sydnor, V. J., Bagautdinova, J., Larsen, B., Arcaro, M. J., Barch, D. M., Bassett, D. S., Alexander-Bloch, A. F., Cook, P. A., Covitz, S., Franco, A. R., Gur, R. E., Gur, R. C., Mackey, A. P., Mehta, K., Meisler, S. L., Milham, M. P., Moore, T. M., Müller, E. J., Roalf, D. R., … Satterthwaite, T. D. (2025). Human thalamocortical structural connectivity develops in line with a hierarchical axis of cortical plasticity. Nature Neuroscience. Advance online publication.

- Teller, D. Y. (1997). First glances: The vision of infants. The Friedenwald lecture. Investigative Ophthalmology & Visual Science, 38(11), 2183–2203.

- Teller, D. Y., McDonald, M. A., Preston, K., Sebris, S. L., & Dobson, V. (1986). Assessment of visual acuity in infants and children: The acuity card procedure. Developmental Medicine & Child Neurology, 28(6), 779–789.

- Thierry, G., & Price, C. J. (2006). Dissociating verbal and nonverbal conceptual processing in the human brain. Journal of Cognitive Neuroscience, 18(6), 1018–1028.

- Thompson, S. G., & Higgins, J. P. T. (2002). How should meta-regression analyses be undertaken and interpreted? Statistics in Medicine, 21(11), 1559–1573.

- Todd, J. W. (1912). Reaction to multiple stimuli. Science Press.

- Tovar, D. A., Murray, M. M., & Wallace, M. T. (2020). Selective enhancement of object representations through multisensory integration. The Journal of Neuroscience, 40(29), 5604–5615.

- Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136.

- Tremblay, C., Champoux, F., Voss, P., Bacon, B. A., Lepore, F., & Théoret, H. (2007). Speech and non-speech audio-visual illusions: A developmental study. PLoS ONE, 2(8), e742.

- Tzarouchi, L. C., Astrakas, L. G., Xydis, V., Zikou, A., Kosta, P., Drougia, A., Andronikou, S., & Argyropoulou, M. I. (2009). Age-related grey matter changes in preterm infants: An MRI study. NeuroImage, 47(4), 1148–1153.

- Verhagen, J., Boom, J., Mulder, H., de Bree, E., & Leseman, P. (2019). Reciprocal relationships between nonword repetition and vocabulary during the preschool years. Developmental Psychology, 55(6), 1125–1137.

- Veritas Health Innovation. (2019). Covidence systematic review software. Available online: www.covidence.org (accessed on 11 November 2020).

- Wang, D., Marcantoni, E., Shapiro, K. L., & Hanslmayr, S. (2025). Pre-stimulus alpha power modulates trial-by-trial variability in theta rhythmic multisensory entrainment strength and theta-induced memory effect. bioRxiv.

- Wang, R., Chen, X., Khalilian-Gourtani, A., Yu, L., Dugan, P., Friedman, D., Doyle, W., Devinsky, O., Wang, Y., & Flinker, A. (2023). Distributed feedforward and feedback cortical processing supports human speech production. Proceedings of the National Academy of Sciences of the United States of America, 120(42), e2300255120.

- Weaver, B. P., & Hamada, M. S. (2016). Quality quandaries: A gentle introduction to Bayesian statistics. Quality Engineering, 28(4), 508–514.

- Weisberg, R. W., & Reeves, L. M. (2013). Cognition: From memory to creativity. John Wiley & Sons.

- Wiesel, T. N., & Hubel, D. H. (1963). Single-cell responses in striate cortex of kittens deprived of vision in one eye. Journal of Neurophysiology, 26(6), 1003–1017.

- Yi, Y. J., & Heidari Matin, N. (2025). Perception and experience of multisensory environments among neurodivergent people: Systematic review. Architectural Science Review, 1–19.

- Zackon, D. H., Casson, E. J., Stelmach, L., Faubert, J., & Racette, L. (1997). Distinguishing subcortical and cortical influences in visual attention. Subcortical attentional processing. Investigative Ophthalmology & Visual Science, 38(2), 364–371.

| No. | Search Term(s) |
|---|---|
| 1. | (sound* ADJ2 (vision OR visual OR sight*)).mp |
| 2. | (multisensory ADJ2 (integration OR perspective OR processing)).mp |
| 3. | (sensory ADJ2 (integration OR cross-modal OR multiple)).mp |
| 4. | Intersensory processing/ OR sensory integration/ |
| 5. | ((Audiovisual OR audio-visual) ADJ2 (synchrony OR information)).mp |
| 6. | Visual auditory integration.mp |
| 7. | Visual perception.mp OR exp Visual perception/ |
| 8. | Auditory perception.mp OR exp Auditory perception/ |
| 9. | 7 and 8 |
| 10. | 1 or 2 or 3 or 4 or 5 or 6 or 7 or 8 or 9 |
| 11. | ((working OR short-term OR long-term OR verbal OR visual OR spatial) ADJ2 memory*).mp |
| 12. | memory/ or long-term memory/ or short-term memory/ or spatial memory/ or verbal memory/ or visual memory/ |
| 13. | 11 or 12 |
| 14. | (Child* ADJ2 (development OR early OR school age* OR pre-school OR young)).mp |
| 15. | ((preschool OR pre-school OR school) ADJ2 student*).mp |
| 16. | Birth OR infant OR toddler OR newborn OR child* |
| 17. | elementary school students/ or preschool students/ or childhood development/ or early childhood development/ or exp Infant Development/ |
| 18. | 14 or 15 or 16 or 17 |
| 19. | 10 and 13 and 18 |
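Lines 9, 10, 13, 18 and 19 of the search strategy do not search the database directly. They combine the results of earlier lines with Boolean operators, and these combinations can be read as set operations on the records each line retrieves. The sketch below illustrates this under stated assumptions. It reads "or" as set union and "and" as set intersection, which is the usual reading of Ovid-style combination lines. The record IDs in `hits` are invented toy data, not results from the actual search. The helper names `union` and `intersect` are hypothetical.

```python
from functools import reduce


def union(*sets):
    """Boolean OR across search lines: records matched by any of the lines."""
    return set().union(*sets)


def intersect(*sets):
    """Boolean AND across search lines: records matched by every one of the lines."""
    return reduce(set.intersection, sets)


# Hypothetical hit sets (invented record IDs) for the lines that query the database.
hits = {
    1: {1, 2}, 2: {2, 3}, 3: {4}, 4: {5}, 5: {6}, 6: {7},
    7: {1, 8, 9}, 8: {8, 10},
    11: {1, 3, 8, 11}, 12: {2, 12},
    14: {1, 8}, 15: {3}, 16: {1, 2, 3, 13}, 17: {14},
}

# Combination lines, mirroring the structure of the table.
hits[9] = intersect(hits[7], hits[8])                     # 7 and 8
hits[10] = union(*(hits[n] for n in range(1, 10)))        # 1 or 2 or ... or 9 (multisensory concept)
hits[13] = union(hits[11], hits[12])                      # 11 or 12 (memory concept)
hits[18] = union(hits[14], hits[15], hits[16], hits[17])  # 14 or ... or 17 (child concept)
hits[19] = intersect(hits[10], hits[13], hits[18])        # 10 and 13 and 18 (final set)

# An intersection is always contained in each of its operands.
assert hits[9] <= hits[7]

print(sorted(hits[19]))  # → [1, 2, 3, 8]
```

Under this set reading, a record reaches line 19 only if it matches at least one term from each of the three concept blocks (multisensory processing, memory, and childhood). Because line 10 also ORs lines 7 and 8 individually, line 9 (7 AND 8) is a subset of line 7 and so cannot add records to line 10.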

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the University Association of Education and Psychology. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alhamdan, A.A.; Pickering, H.E.; Murphy, M.J.; Crewther, S.G. From Senses to Memory During Childhood: A Systematic Review and Bayesian Meta-Analysis Exploring Multisensory Processing and Working Memory Development. Eur. J. Investig. Health Psychol. Educ. 2025, 15, 157. https://doi.org/10.3390/ejihpe15080157

Alhamdan AA, Pickering HE, Murphy MJ, Crewther SG. From Senses to Memory During Childhood: A Systematic Review and Bayesian Meta-Analysis Exploring Multisensory Processing and Working Memory Development. European Journal of Investigation in Health, Psychology and Education. 2025; 15(8):157. https://doi.org/10.3390/ejihpe15080157

Chicago/Turabian Style: Alhamdan, Areej A., Hayley E. Pickering, Melanie J. Murphy, and Sheila G. Crewther. 2025. "From Senses to Memory During Childhood: A Systematic Review and Bayesian Meta-Analysis Exploring Multisensory Processing and Working Memory Development." European Journal of Investigation in Health, Psychology and Education 15, no. 8: 157. https://doi.org/10.3390/ejihpe15080157

APA Style: Alhamdan, A. A., Pickering, H. E., Murphy, M. J., & Crewther, S. G. (2025). From Senses to Memory During Childhood: A Systematic Review and Bayesian Meta-Analysis Exploring Multisensory Processing and Working Memory Development. European Journal of Investigation in Health, Psychology and Education, 15(8), 157. https://doi.org/10.3390/ejihpe15080157