Assessing the Usability of ChatGPT for Formal English Language Learning

Abstract

1. Introduction

2. Literature Review

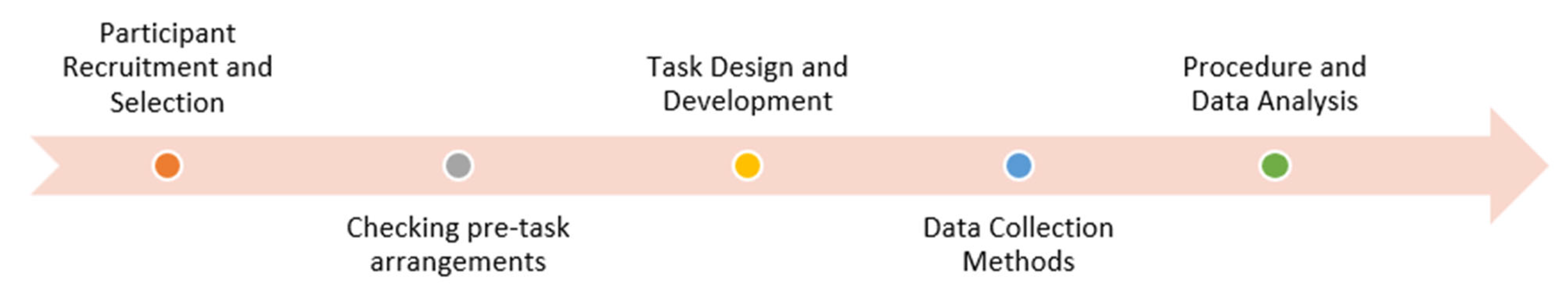

3. Methodology

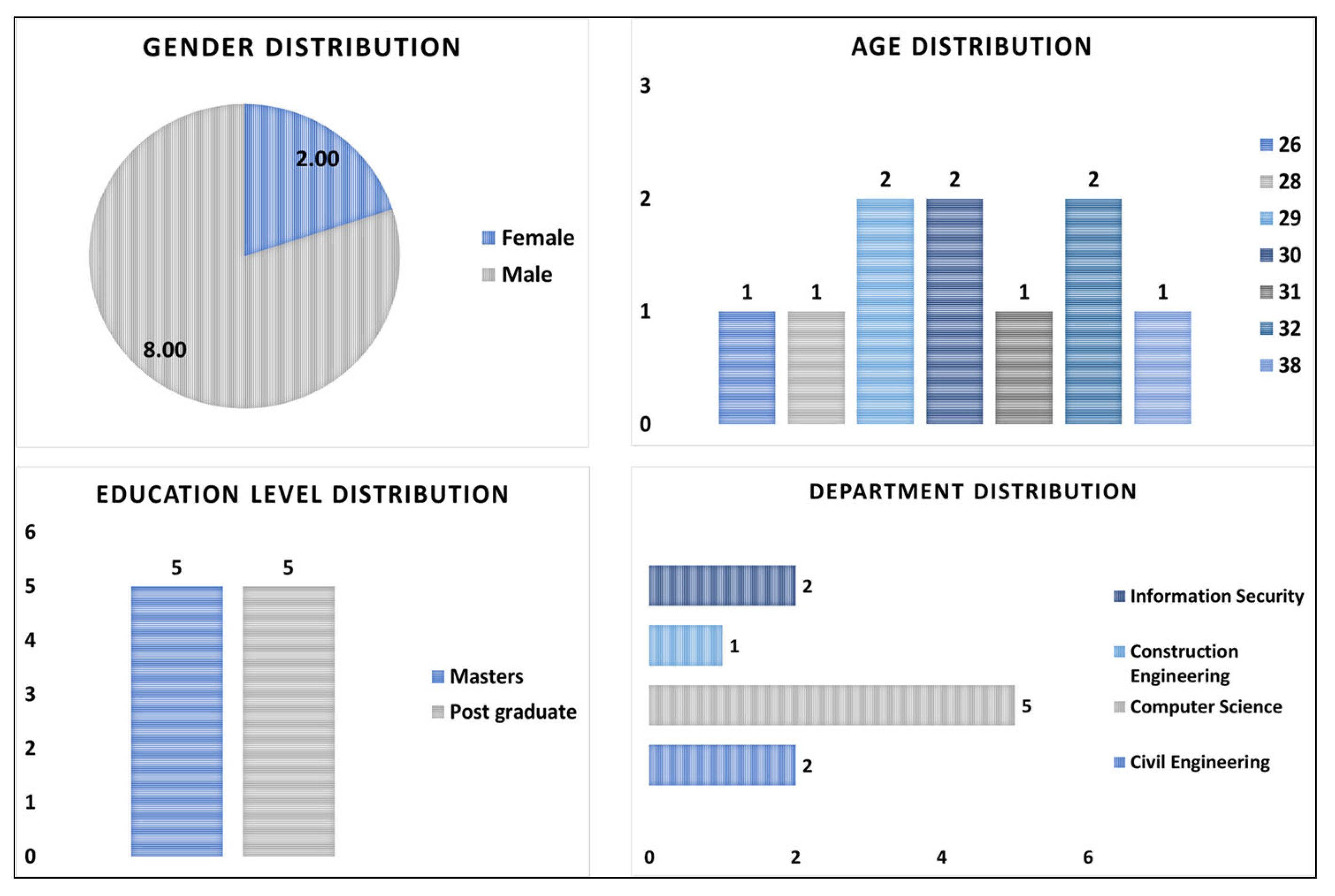

3.1. Participants

3.2. Data Collection

3.3. Data Analysis

4. Results

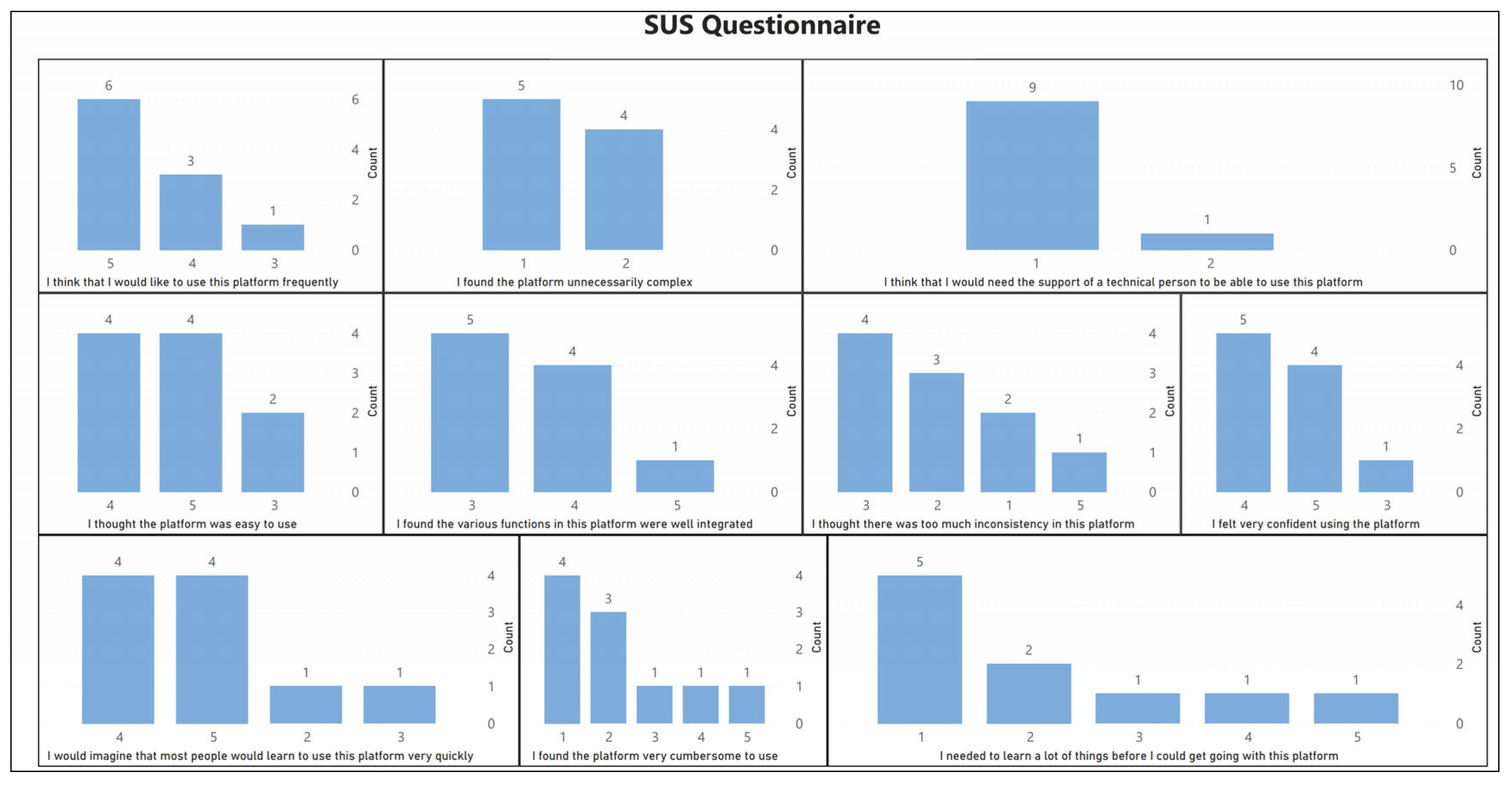

- For the SUS questionnaire, we sum the scores a participant gave across all 10 questions. This sum is computed separately for each participant's response and used as the base feedback score.

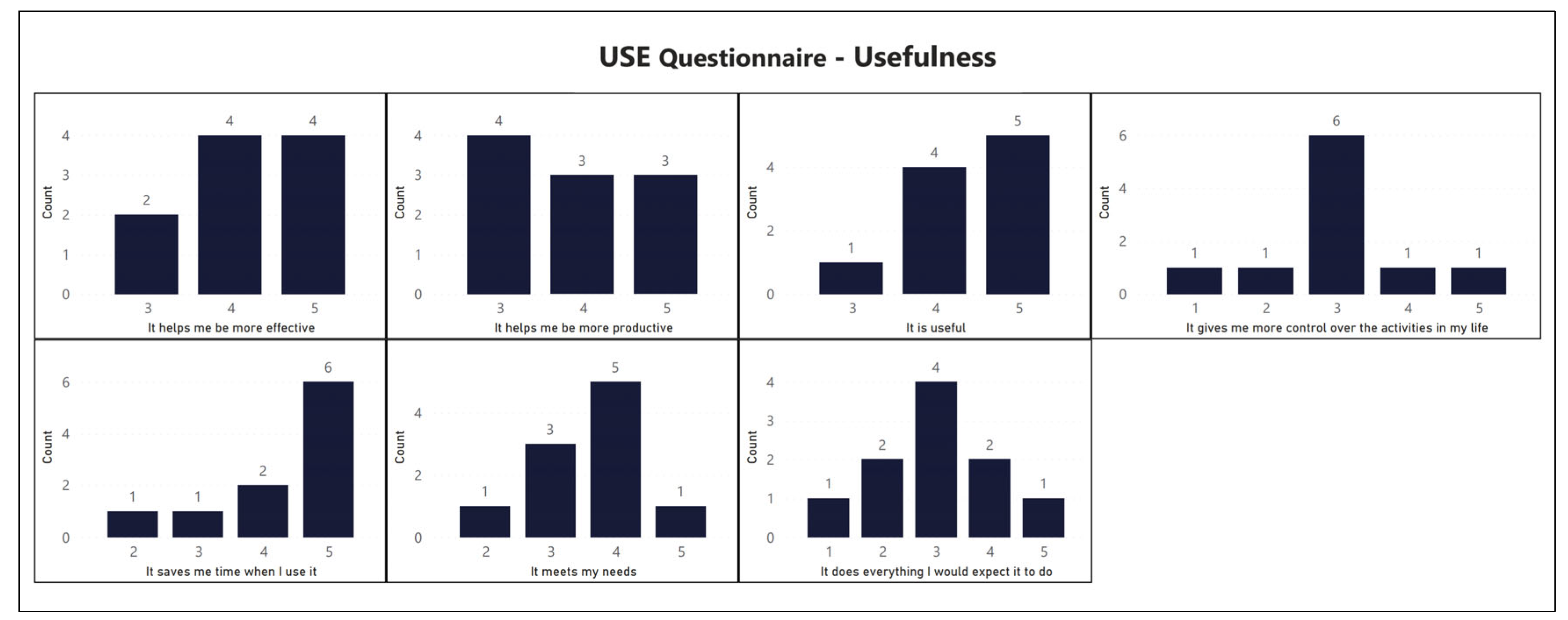

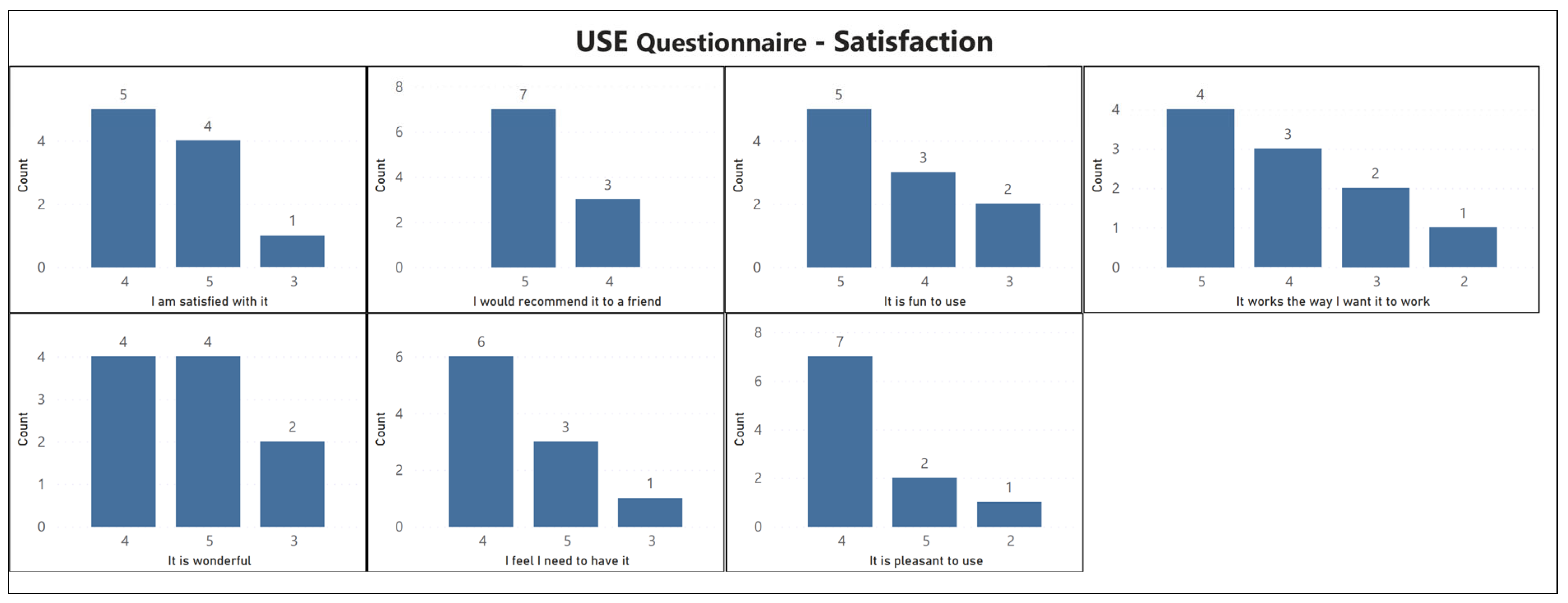

- For the USE questionnaire, we sum the scores a participant gave to the questions within each aspect (i.e., ease of use, ease of learning, usefulness, and satisfaction). The sum is computed separately for each participant and each aspect, and it serves as the base feedback score for the data analysis.

- Hence, we assess the usability of ChatGPT for English language learning in terms of five aspects in total: (1) SUS (System Usability Scale), (2) Usefulness, (3) Ease of Learning, (4) Ease of Use, and (5) Satisfaction.
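The scoring described in the list above amounts to a per-participant sum. The following is a minimal Python sketch of it, assuming each response is stored as a list of 1–5 ratings in questionnaire order. The function names and the sample ratings are hypothetical, and the number of items per USE aspect (8, 11, 4, 7) is an assumption inferred from the order of the items in Appendix A.2.

```python
from typing import Dict, List

# Number of items per USE aspect, in questionnaire order.
# Assumption: inferred from the item order in Appendix A.2 (30 items in total).
USE_ASPECT_SIZES = {
    "Usefulness": 8,
    "Ease of Use": 11,
    "Ease of Learning": 4,
    "Satisfaction": 7,
}


def base_score(ratings: List[int]) -> int:
    """Sum one participant's 1-5 ratings into a base feedback score."""
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    return sum(ratings)


def use_aspect_scores(ratings: List[int]) -> Dict[str, int]:
    """Split 30 USE ratings into the four aspects and sum each aspect."""
    if len(ratings) != sum(USE_ASPECT_SIZES.values()):
        raise ValueError("expected 30 USE ratings")
    scores: Dict[str, int] = {}
    start = 0
    for aspect, size in USE_ASPECT_SIZES.items():
        scores[aspect] = base_score(ratings[start:start + size])
        start += size
    return scores


# Hypothetical participant (illustrative values, not data from the study).
sus_ratings = [4, 2, 4, 1, 4, 2, 5, 2, 4, 2]
use_ratings = [4] * 30

print("SUS base score:", base_score(sus_ratings))
print("USE aspect scores:", use_aspect_scores(use_ratings))
```

This is the raw sum described above, so the SUS base score ranges from 10 to 50; it is not the standard 0–100 SUS scoring.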

5. Discussions

- Language learners and educators should explore new methods of integrating this tool to enhance the language learning experience.

- The language learners should try to integrate this tool into instructional practices.

- The developers of these tools should also apply user-centered design principles so that language learners can accomplish their tasks in as few steps as possible. This recommendation is based on the participant responses on the ease-of-use aspect for ChatGPT.

- The developers should continuously improve the user interface and add more interactive elements to increase end-user satisfaction.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Task Design Details

- For the writing task, please bring two or three English language paragraph texts originally written by you.

- Suggest some topics suitable for the discussion.

- Let’s start the conversation for [topic].

- Let’s have a job interview questions where I will act as a person applying for the job.

- Generate a conversation at a police station.

- Re-write the conversation and add a conflict into it.

- Rewrite the following paragraph in more formal/informal way.

- Rewrite the following paragraph in a more polite way.

- Rewrite the following text like a formal email.

- Simplify the vocabulary in the following text.

- Write an email regarding requesting for a fee refund.

- Write an email about asking for rescheduling an email.

- Identify and correct the possible mistakes in the following text.

- Generate a short/simple conversation in a specific [tense].

- Rewrite the following text in passive voice.

- Explain the meaning of [word] in a simple way.

- Generate 20 sentences using the specific [word].

- Explain different contexts of this specific [word].

- Generate a list of most widely used phrasal verbs along with their definitions and examples.

- Generate a list of most commonly used phrases in the business domain.

- Generate a possible list of vocabulary used in the meeting context.

Appendix A.2. Data Collection Form for Collecting Participants Feedback

- 1. Demographics Information

- Gender (Male, Female, or Other)

- Age

- Current Education Level

- Department

- Previous English language knowledge level

- 2.

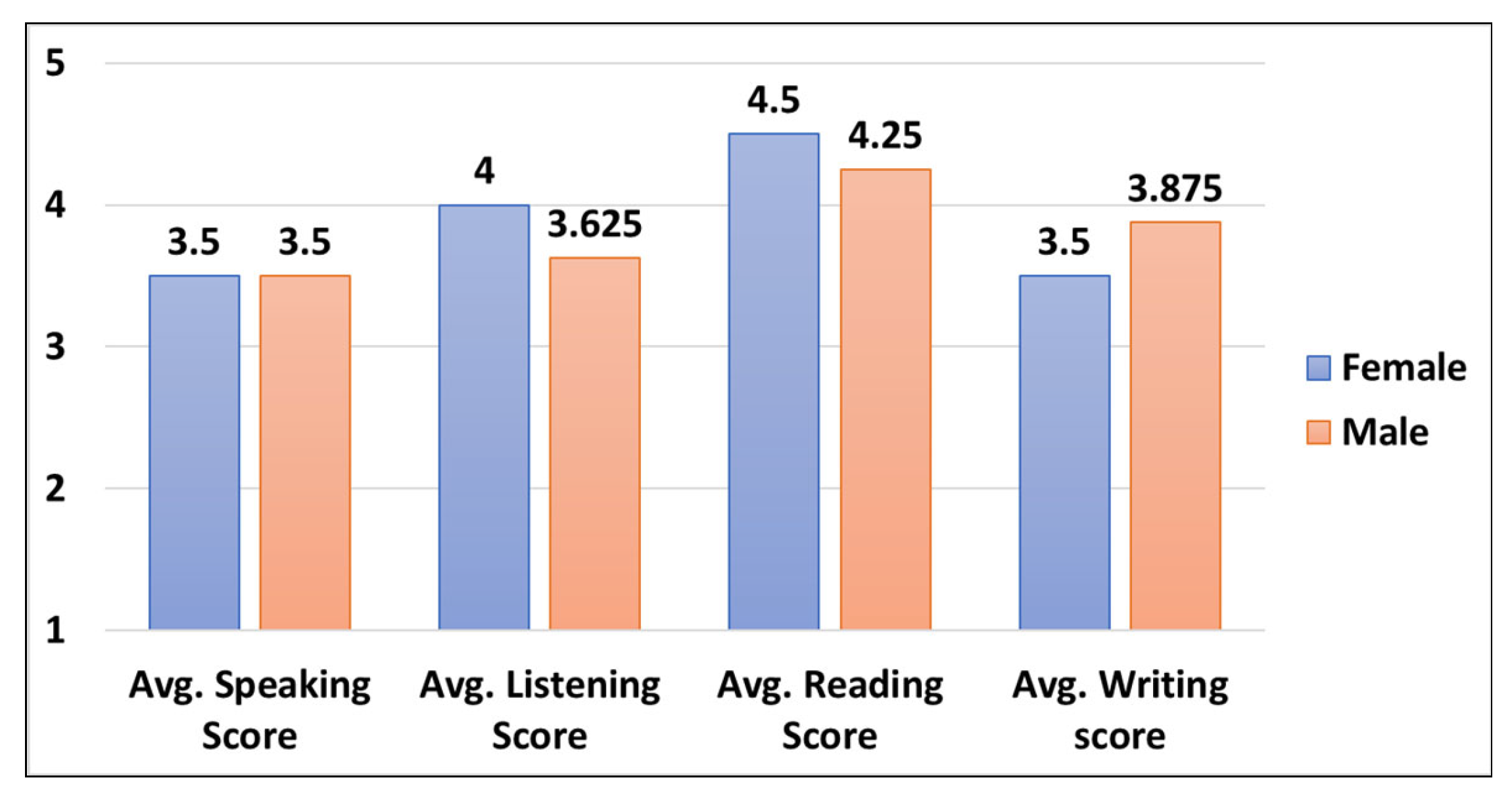

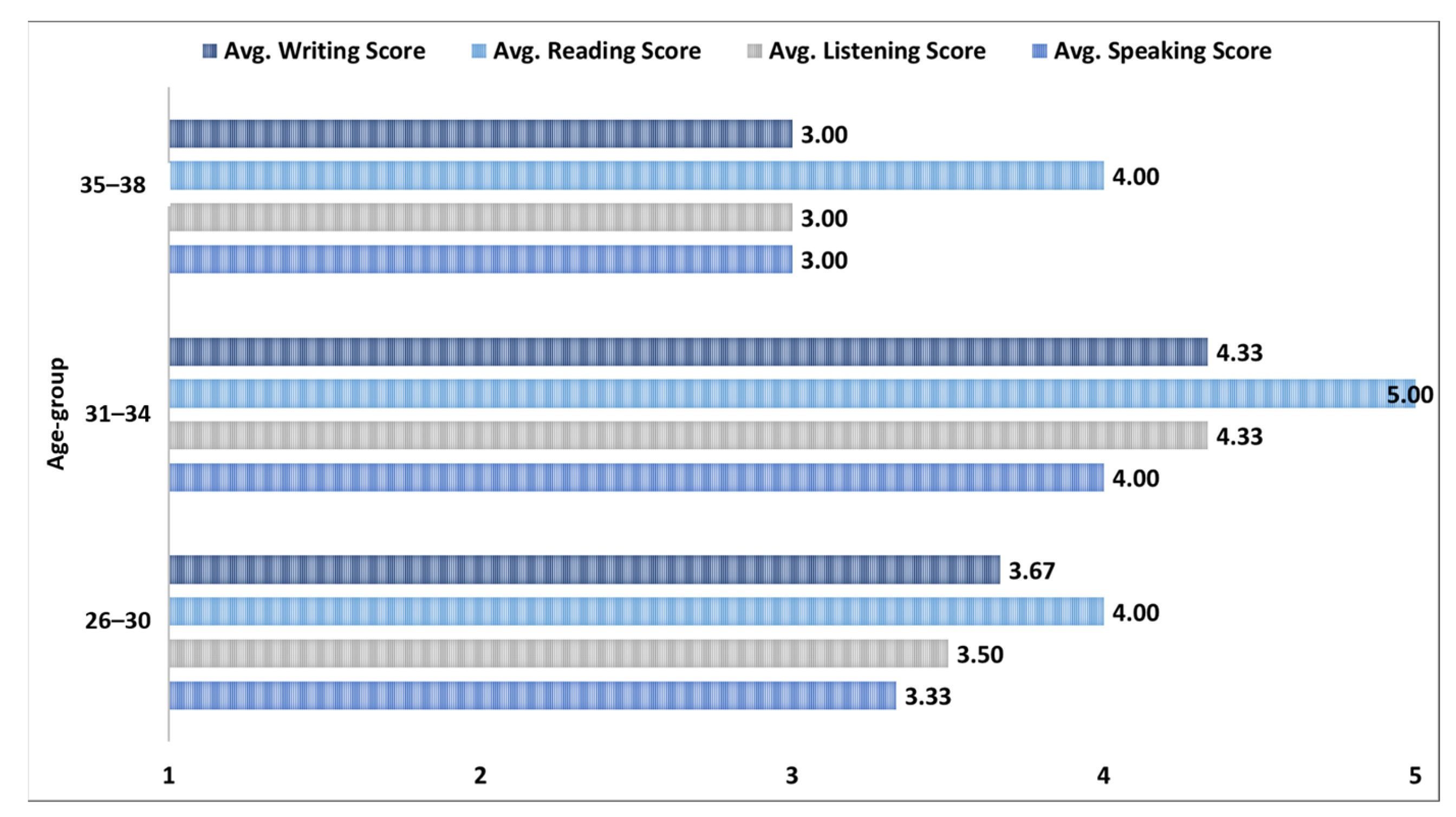

- Please rate your previous English language knowledge level (1–5) for below categories

- Speaking (1–5)

- Listening (1–5)

- Reading (1–5)

- Writing (1–5)

- 3. Please rate the following items (1–5) based on your experience with ChatGPT (USE Questionnaire)

- It helps me be more effective. (1–5)

- It helps me be more productive. (1–5)

- It is useful. (1–5)

- It gives me more control over the activities in my life. (1–5)

- It makes the things I want to accomplish easier to get done. (1–5)

- It saves me time when I use it. (1–5)

- It meets my needs. (1–5)

- It does everything I would expect it to do. (1–5)

- It is easy to use. (1–5)

- It is simple to use. (1–5)

- It is user friendly. (1–5)

- It requires the fewest steps possible to accomplish what I want to do with it. (1–5)

- It is flexible. (1–5)

- Using it is effortless. (1–5)

- I can use it without written instructions. (1–5)

- I do not notice any inconsistencies as I use it. (1–5)

- Both occasional and regular users would like it. (1–5)

- I can recover from mistakes quickly and easily. (1–5)

- I can use it successfully every time. (1–5)

- I learned to use it quickly. (1–5)

- I easily remember how to use it. (1–5)

- It is easy to learn to use it. (1–5)

- I quickly became skillful with it. (1–5)

- I am satisfied with it. (1–5)

- I would recommend it to a friend. (1–5)

- It is fun to use. (1–5)

- It works the way I want it to work. (1–5)

- It is wonderful. (1–5)

- I feel I need to have it. (1–5)

- It is pleasant to use. (1–5)

- 4. Please rate the following items (1–5) based on your experience with ChatGPT

- I think that I would like to use this platform frequently. (1–5)

- I found the platform unnecessarily complex. (1–5)

- I thought the platform was easy to use. (1–5)

- I think that I would need the support of a technical person to be able to use this platform. (1–5)

- I found the various functions in this platform were well integrated. (1–5)

- I thought there was too much inconsistency in this platform. (1–5)

- I would imagine that most people would learn to use this platform very quickly. (1–5)

- I found the platform very cumbersome to use. (1–5)

- I felt very confident using the platform. (1–5)

- I needed to learn a lot of things before I could get going with this platform. (1–5)

- 5.

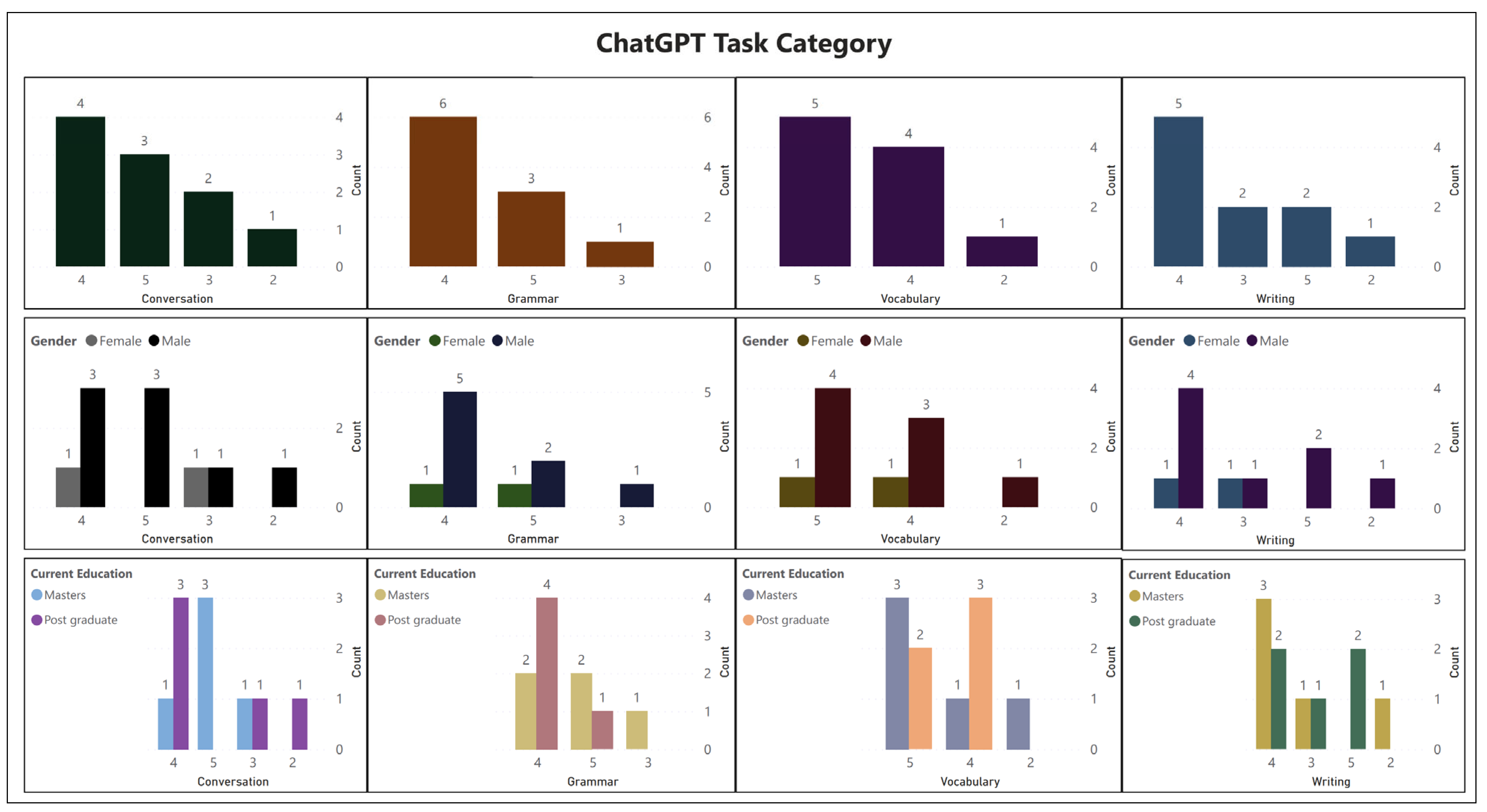

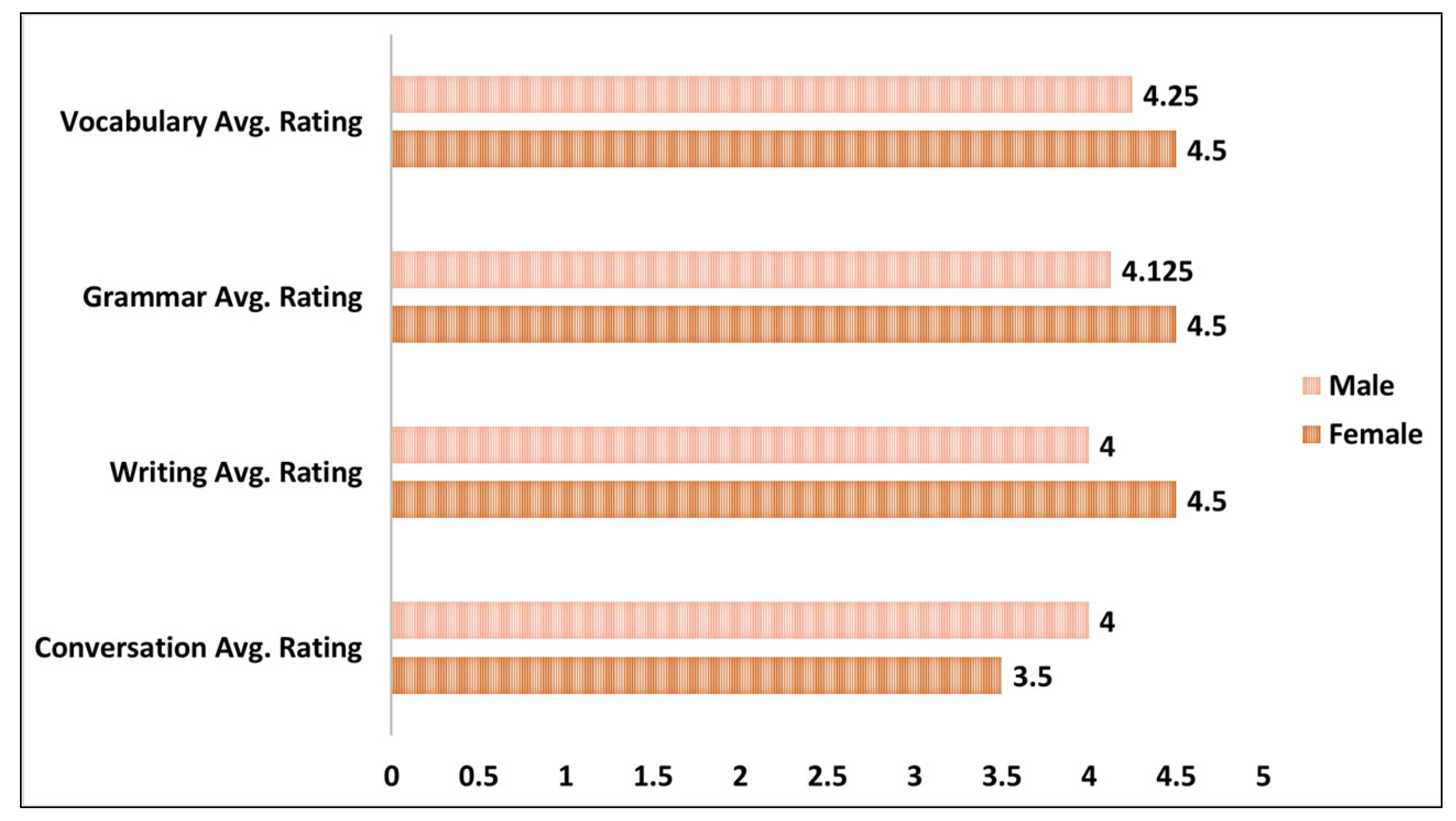

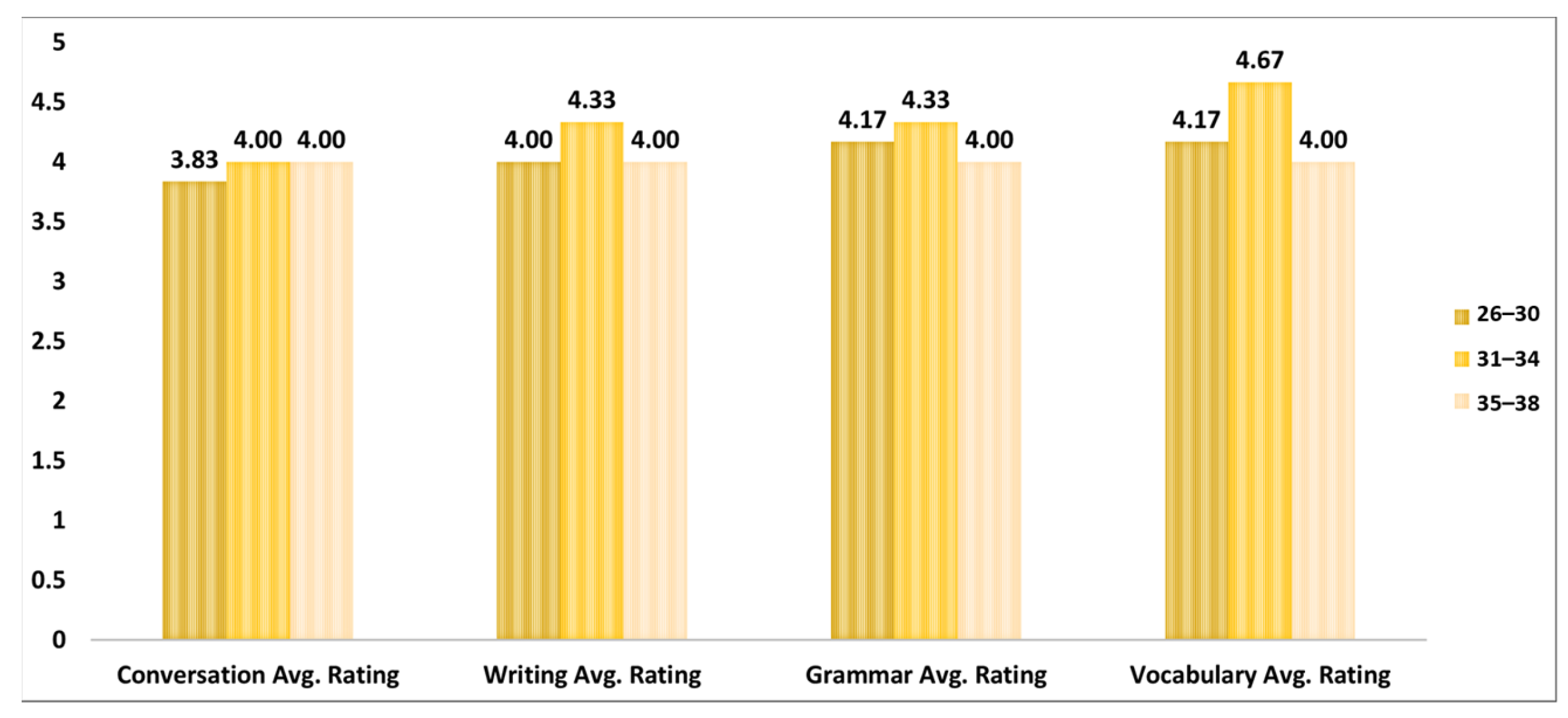

- Please rate your satisfaction (1–5) for different group of tasks you performed with ChatGPT

- Conversation (1–5)

- Writing (1–5)

- Grammar (1–5)

- Vocabulary (1–5)

Appendix B. Individual Participants’ Feedback Results

References

- Epp, C.D. English language learner experiences of formal and informal learning environments. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016. [Google Scholar]

- Herrera, P. English and Students with Limited or Interrupted Formal Education; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar]

- Malyuga, E.N. Professional language in formal and business style. Glob. J. Hum. Soc. Sci. 2012, 12, 7–10. [Google Scholar]

- Kia, L.S.; Cheng, X.; Yee, T.K.; Ling, C.W. Code-mixing of English in the entertainment news of Chinese newspapers in Malaysia. Int. J. Engl. Linguist. 2011, 1, 3. [Google Scholar] [CrossRef]

- Klimova, B.; Pikhart, M.; Polakova, P.; Cerna, M.; Yayilgan, S.Y.; Shaikh, S. A Systematic Review on the Use of Emerging Technologies in Teaching English as an Applied Language at the University Level. Systems 2023, 11, 42. [Google Scholar] [CrossRef]

- Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2022, 38, 237–257. [Google Scholar] [CrossRef]

- Jeon, J. Chatbot-assisted dynamic assessment (CA-DA) for L2 vocabulary learning and diagnosis. Comput. Assist. Lang. Learn. 2021, 30, 1–27. [Google Scholar] [CrossRef]

- Haristiani, N. Artificial Intelligence (AI) chatbot as language learning medium: An inquiry. J. Phys. Conf. Ser. 2019, 1387, 012020. [Google Scholar] [CrossRef]

- Godwin-Jones, R. Chatbots in language learning: AI systems on the rise. In Intelligent CALL, Granular Systems, and Learner Data: Short Papers from EUROCALL 2022; Arnbjörnsdóttir, B., Bédi, B., Bradley, L., Friðriksdóttir, K., Garðarsdóttir, H., Thouësny, S., Whelpto, M.J., Eds.; Research-publishing.net: Voillans, France, 2022; pp. 124–128. [Google Scholar] [CrossRef]

- Ren, R.; Castro, J.W.; Acuña, S.T.; de Lara, J. Evaluation techniques for chatbot usability: A systematic mapping study. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 1673–1702. [Google Scholar] [CrossRef]

- Petersen, K.; Feldt, R.; Mujtaba, S.; Mattsson, M. Systematic mapping studies in software engineering. In Proceedings of the 12th International Conference on Evaluation and Assessment in Software Engineering (EASE), Bari, Italy, 26–27 June 2008; Volume 12. [Google Scholar]

- Fitria, T.N. Artificial intelligence (AI) technology in OpenAI ChatGPT application: A review of ChatGPT in writing English essay. ELT Forum J. Engl. Lang. Teach. 2023, 12, 44–58. [Google Scholar] [CrossRef]

- Klimova, B.F. Motivation for learning English at a university level. Procedia-Soc. Behav. Sci. 2011, 15, 2599–2603. [Google Scholar] [CrossRef][Green Version]

- Chun, D.; Kern, R.; Smith, B. Technology in language use, language teaching, and language learning. Mod. Lang. J. 2016, 100 (Suppl. 1), 64–80. [Google Scholar] [CrossRef]

- Bajorek, J.P. L2 pronunciation in CALL: The unrealized potential of Rosetta stone, Duolingo, Babbel, and mango languages. Issues Trends Educ. Technol. 2017, 5, 24–51. [Google Scholar]

- Guillén, G.; Sawin, T.; Avineri, N. Zooming out of the crisis: Language and human collaboration. Foreign Lang. Ann. 2020, 53, 320–328. [Google Scholar] [CrossRef]

- Costuchen, A.L. Gamified Curriculum and Open-Structured Syllabus in Second-Language Teaching. In Challenges and Opportunities in Global Approaches to Education; IGI Global: Hershey, PA, USA, 2020; pp. 35–55. [Google Scholar]

- Nushi, M.; Eqbali, M.H. Babbel: A mobile language learning app. TESL Report. 2018, 51, 13. [Google Scholar]

- Shortt, M.; Tilak, S.; Kuznetcova, I.; Martens, B.; Akinkuolie, B. Gamification in mobile-assisted language learning: A systematic review of Duolingo literature from public release of 2012 to early 2020. Comput. Assist. Lang. Learn. 2023, 36, 517–554. [Google Scholar] [CrossRef]

- Reknasari, A.; Putro, N. Using the Memrise mobile application to assist foreign language learning. In Teacher Education and Professional Development in Industry 4.0; CRC Press: Boca Raton, FL, USA, 2020; pp. 324–328. [Google Scholar]

- Berns, A. A review of virtual reality-bassed language learning apps. RIED Rev. Iberoam. Educ. Distancia 2021. [Google Scholar]

- Van Lieshout, C.; Cardoso, W. Google Translate as a tool for self-directed language learning. Lang. Learn. Technol. 2022, 26, 1–19. [Google Scholar]

- Kiliçkaya, F. Learning English Vocabulary by Hyperglossing and Narrow Reading: Readlang. Online Submission 2017. [Google Scholar]

- Prathyusha, N. Role of gamification in language learning. Int. J. Res. Anal. Rev. (IJRAR) 2020, 7, 577–583. [Google Scholar]

- Sayers, D.; Sousa-Silva, R.; Höhn, S.; Ahmedi, L.; Allkivi-Metsoja, K.; Anastasiou, D. The Dawn of the Human-Machine Era: A Forecast of New and Emerging Language Technologies; Hal Open Science: Lyon, France, 2021. [Google Scholar]

- Kholis, A. Elsa speak app: Automatic speech recognition (ASR) for supplementing English pronunciation skills. Pedagog. J. Engl. Lang. Teach. 2021, 9, 1–14. [Google Scholar] [CrossRef]

- Haryadi Haryadi, S.; Aprianoto, A. Integrating “English Pronunciation” App into Pronunciation Teaching: How It Affects Students’ Participation and Learning. J. Lang. Lang. Teach. 2020, 8, 202–212. [Google Scholar] [CrossRef]

- Jeon, J.; Lee, S.; Choi, S. A systematic review of research on speech-recognition chatbots for language learning: Implications for future directions in the era of large language models. Interact. Learn. Environ. 2023, 1–19. [Google Scholar] [CrossRef]

- Jeon, J. Exploring AI chatbot affordances in the EFL classroom: Young learners’ experiences and perspectives. Comput. Assist. Lang. Learn. 2022, 1–26. [Google Scholar] [CrossRef]

- Jia, J.; Chen, Y.; Ding, Z.; Ruan, M. Effects of a vocabulary acquisition and assessment system on students’ performance in a blended learning class for English subject. Comput. Educ. 2012, 58, 63–76. [Google Scholar] [CrossRef]

- Chen, H. Developing and evaluating an oral skills training website supported by automatic speech recognition technology. ReCALL 2011, 23, 59–78. [Google Scholar] [CrossRef]

- Yang, H.; Kim, H.; Lee, J.; Shin, D. Implementation of an AI chatbot as an English conversation partner in EFL speaking classes. ReCALL 2022, 34, 327–343. [Google Scholar] [CrossRef]

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033. [Google Scholar] [CrossRef]

- Akcora, D.E.; Belli, A.; Berardi, M.; Casola, S.; Di Blas, N.; Falletta, S.; Faraotti, A.; Lodi, L.; Diaz, D.N.; Paolini, P. Conversational support for education. In Proceedings of the Artificial Intelligence in Education: 19th International Conference, AIED 2018, London, UK, 27–30 June 2018. [Google Scholar]

- Chen, L.; Chen, P.; Lin, Z. Artificial intelligence in education: A review. IEEE Access 2020, 8, 75264–75278. [Google Scholar] [CrossRef]

- Hobert, S. Say hello to ‘coding tutor’! design and evaluation of a chatbot-based learning system supporting students to learn to program. In Proceedings of the Fortieth International Conference on Information Systems, Munich, Germany, 15–18 December 2019. [Google Scholar]

- Garzón, J.; Lampropoulos, G.; Burgos, D. Effects of Mobile Learning in English Language Learning: A Meta-Analysis and Research Synthesis. Electronics 2023, 12, 1595. [Google Scholar] [CrossRef]

- Luo, Z. The Effectiveness of Gamified Tools for Foreign Language Learning (FLL): A Systematic Review. Behav. Sci. 2023, 13, 331. [Google Scholar] [CrossRef]

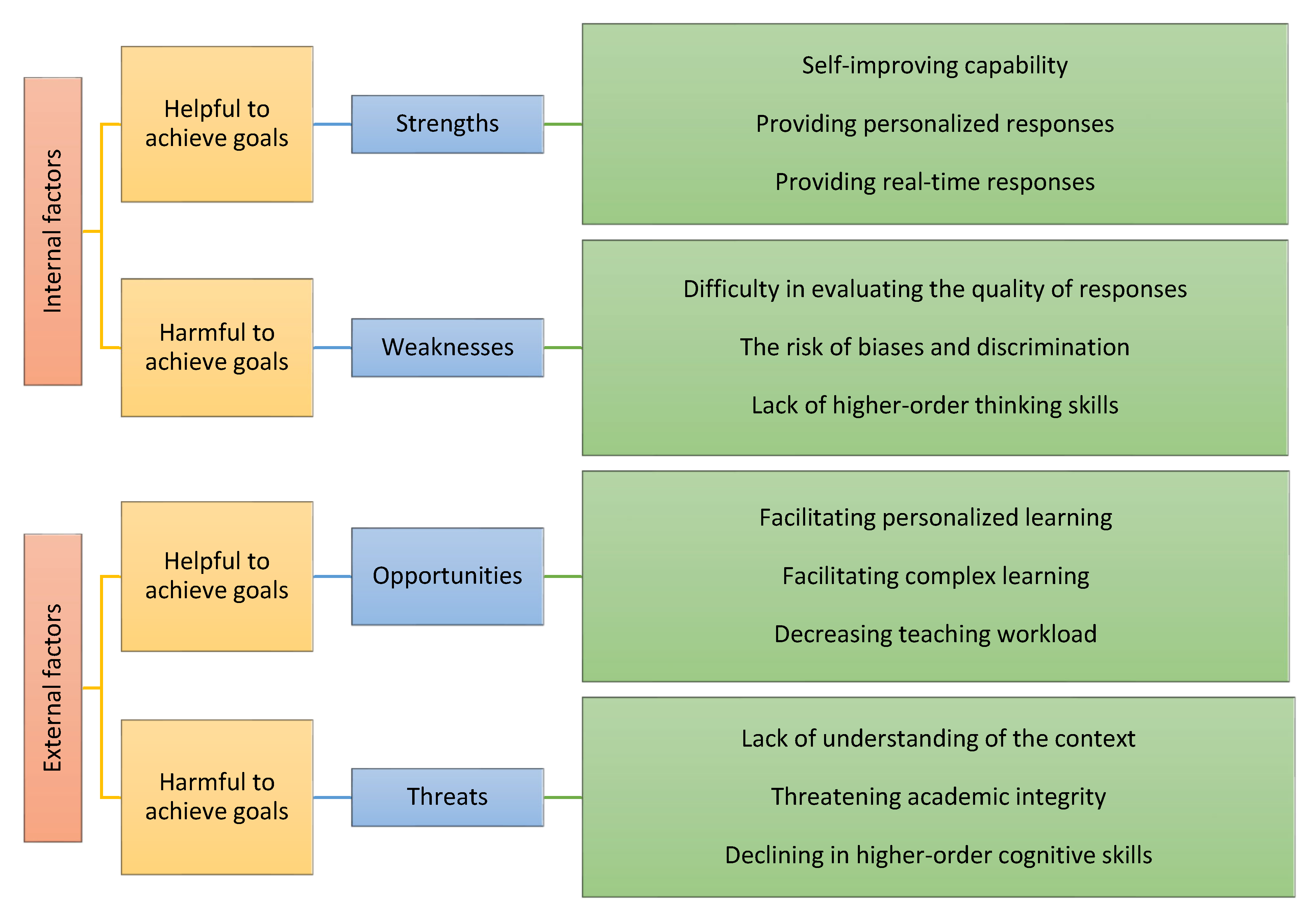

- Farrokhnia, M.; Banihashem, S.K.; Noroozi, O.; Wals, A. A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 2023, 1–5. [Google Scholar] [CrossRef]

- Gao, M.; Kortum, P.; Oswald, F. Psychometric evaluation of the use (usefulness, satisfaction, and ease of use) questionnaire for reliability and validity. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Philadelphia, PA, USA, 1–5 October 2018. [Google Scholar]

- Barrot, J.S. Using ChatGPT for second language writing: Pitfalls and potentials. Assess. Writ. 2023, 57, 100745. [Google Scholar] [CrossRef]

- Montenegro-Rueda, M.; Fernández-Cerero, J.; Fernández-Batanero, J.M.; López-Meneses, E. Impact of the Implementation of ChatGPT in Education: A Systematic Review. Computers 2023, 12, 153. [Google Scholar] [CrossRef]

- Bin-Hady, W.R.A.; Al-Kadi, A.; Hazaea, A.; Ali, J.K.M. Exploring the dimensions of ChatGPT in English language learning: A global perspective. Library Hi Tech. 2023. ahead-of-print. [Google Scholar] [CrossRef]

| Category | Description | Example of Software/Application | Purpose |
|---|---|---|---|
| Language learning software | These are standalone computer/mobile software programs. | Rosetta Stone, Duolingo, Babbel [15] | This software usually offers a comprehensive language learning experience through interactive lessons, vocabulary, exercises, pronunciation practice, grammar explanations, etc. |
| Language learning websites | These are online platforms dedicated to language learning with a wide range of resources, features, etc. | Memrise, Busuu, FluentU | These websites usually offer organized lessons, interactive tasks, quizzes, forums for language learners to interact with each other, etc. |
| Language exchange websites | These websites create an exchange platform for language learners. A learner of a specific target language can practice and learn the language together with a native speaker of that language. | Tandem or HelloTalk [16] | These websites provide different ways for language learners to connect with each other using messaging, video or voice calls, etc. |
| Flashcard apps | These applications help learners memorize vocabulary and phrases through repetition. | Anki, Quizlet [17] | These applications let users create their own flashcards as well as use pre-made sets. |

| Category | Description | Example of Software/Application | Purpose |
|---|---|---|---|
| AI-based language learning apps | These language learning apps offer a personalized learning experience by incorporating artificial intelligence (AI) techniques. | Babbel [18], Duolingo [19], Memrise [20] | The apps analyze users’ performance and progress. Based on the patterns they detect, these apps provide feedback and recommendations and adapt the content and difficulty level of different language learning tasks. |
| VR-based language learning tools | The VR-based tools immerse learners in a simulated language learning environment by providing a more interactive and realistic learning experience. | Mondly VR, VR Speech, Flashcard VR for Google Cardboard, VR-House [21] | VR-based applications simulate real-life scenarios such as conversation, classroom teaching, group communications, etc. This enables learners to practice their language skills. |
| NLP-based language learning platforms | The NLP-based tools help users learn and enhance their language skills through NLP-powered use cases such as exercises in multiple languages, vocabulary learning, etc. | Google Translate [22], Readlang [23] | NLP-based applications provide interactive exercises and features that support effective language learning. |
| Gamification-based language learning | The gamification-based apps are a distinct type of tool that makes the language learning experience more engaging and enjoyable. They are developed using game design techniques such as point systems, rewards, badges, and challenges to encourage learners to practice daily. | FluentU, MindSnacks [24], Panolingo [25] | The apps offer a variety of gamified tasks for language learners, such as translating words and phrases, listening to a phrase and typing what is heard, pronouncing target-language words, etc. |
| Speech recognition-based language learning | The speech recognition-based apps are used to assess learners’ pronunciation accuracy. | Elsa Speak [26], Pronunciation App [27] | These language learning apps analyze the learner’s speech patterns and compare them with the patterns of native speakers. The apps then provide feedback on pronunciation errors. |
| Chatbots and conversational AI | The chatbot and conversational AI-based language learning tools let learners engage in dialogue/conversation with an AI-powered language assistant. | Chatterbug, ChatGPT, TalkyLand, ELSA Speak, HiNative, Mitsuku | These tools allow learners to practice their conversational skills as well as other skills such as reading and writing. |

| Skill | Mean | SD | Min | Max | Skewness | Kurtosis |
|---|---|---|---|---|---|---|
| Speaking | 3.50 | 0.84 | 2 | 5 | 0 | 0.10 |
| Listening | 3.70 | 0.94 | 2 | 5 | −0.23 | −0.34 |
| Reading | 4.30 | 0.94 | 2 | 5 | −1.71 | 3.53 |
| Writing | 3.80 | 0.91 | 2 | 5 | −0.60 | 0.39 |

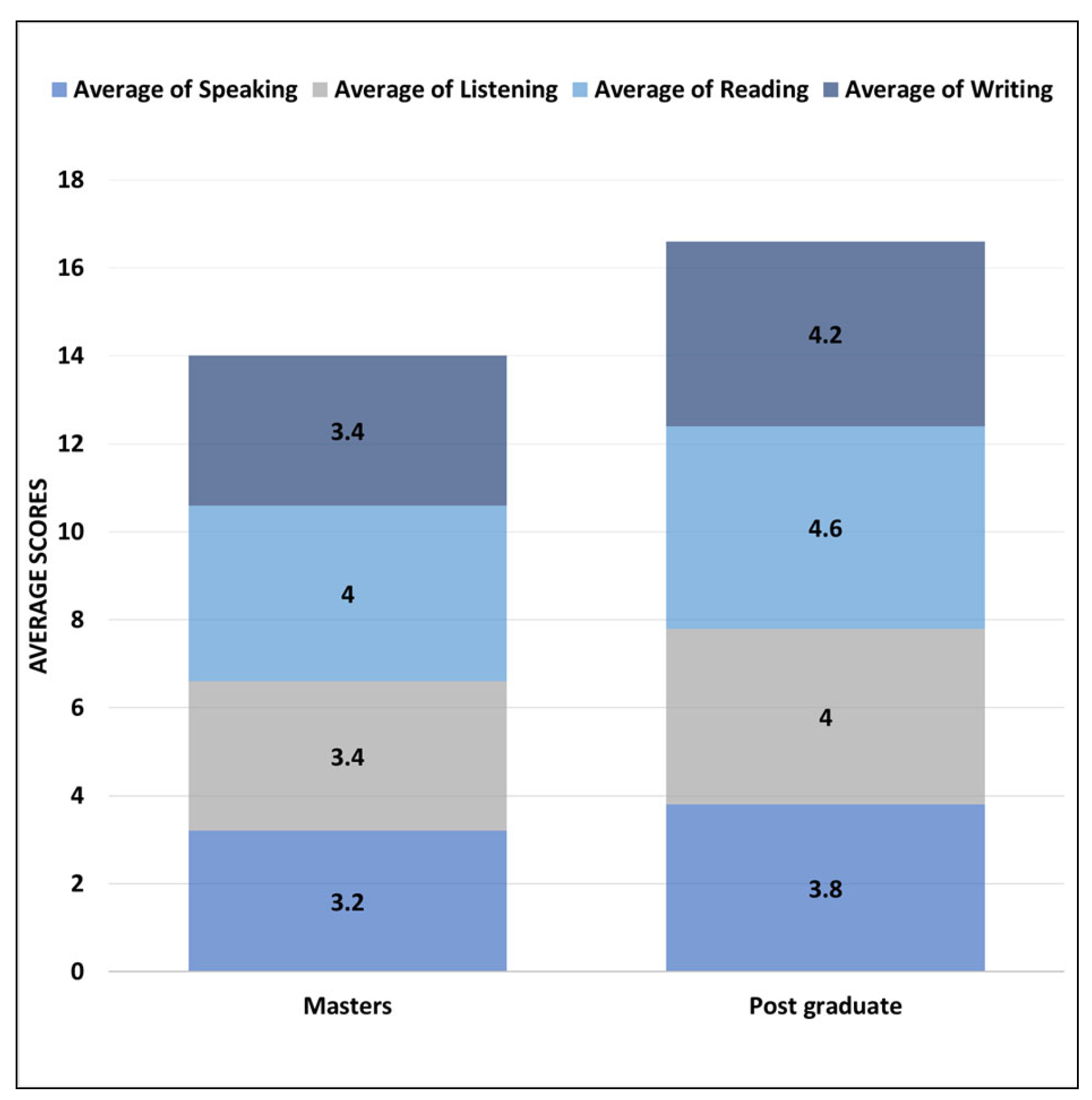

| Mean | SD | Min | Max | Skewness | Kurtosis | |

|---|---|---|---|---|---|---|

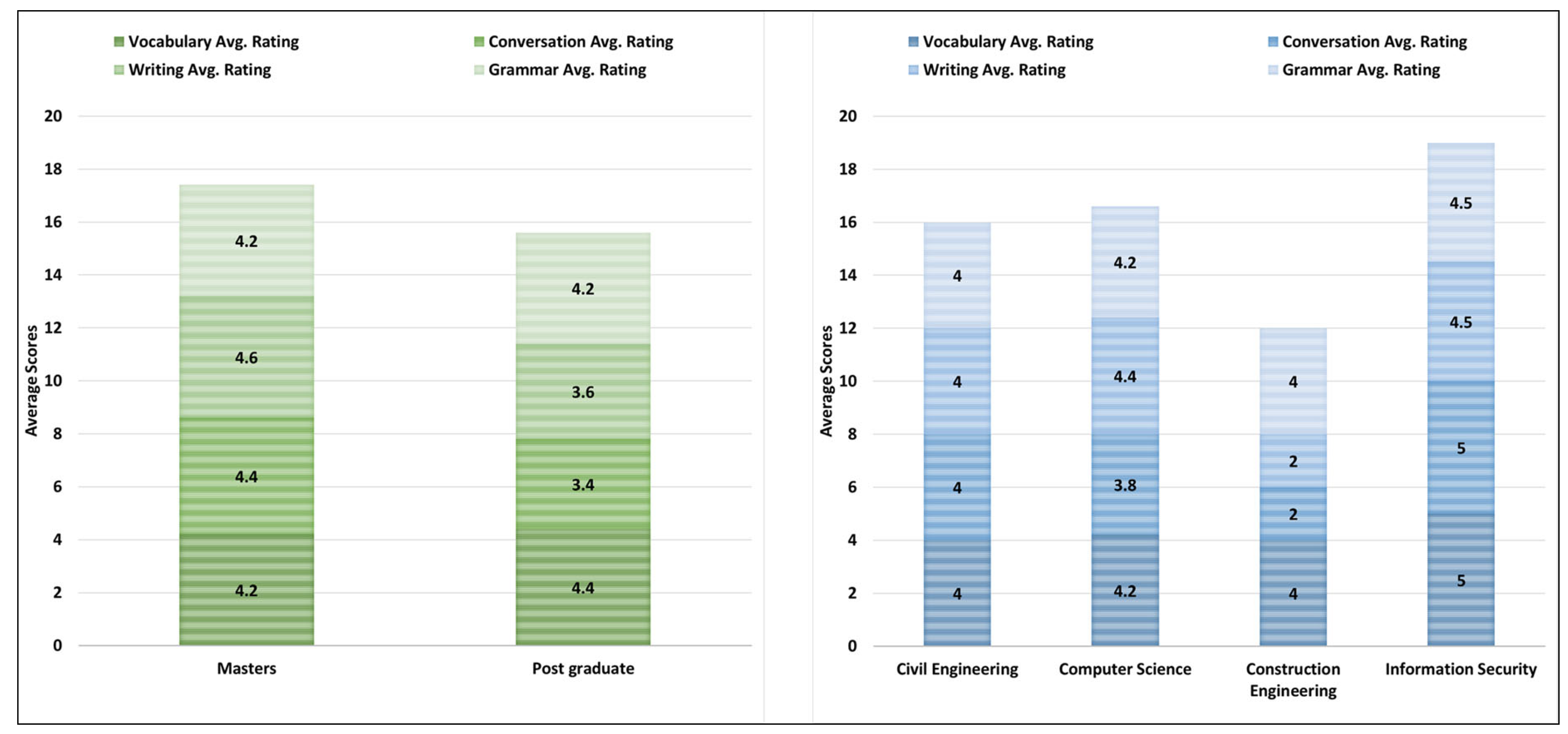

| Conversation | 3.90 | 0.99 | 2 | 5 | −0.61 | −0.15 |

| Writing | 4.10 | 0.99 | 2 | 5 | −1.08 | 0.91 |

| Grammar | 4.20 | 0.63 | 3 | 5 | −0.13 | 0.17 |

| Vocabulary | 4.30 | 0.94 | 2 | 5 | −1.71 | 3.53 |

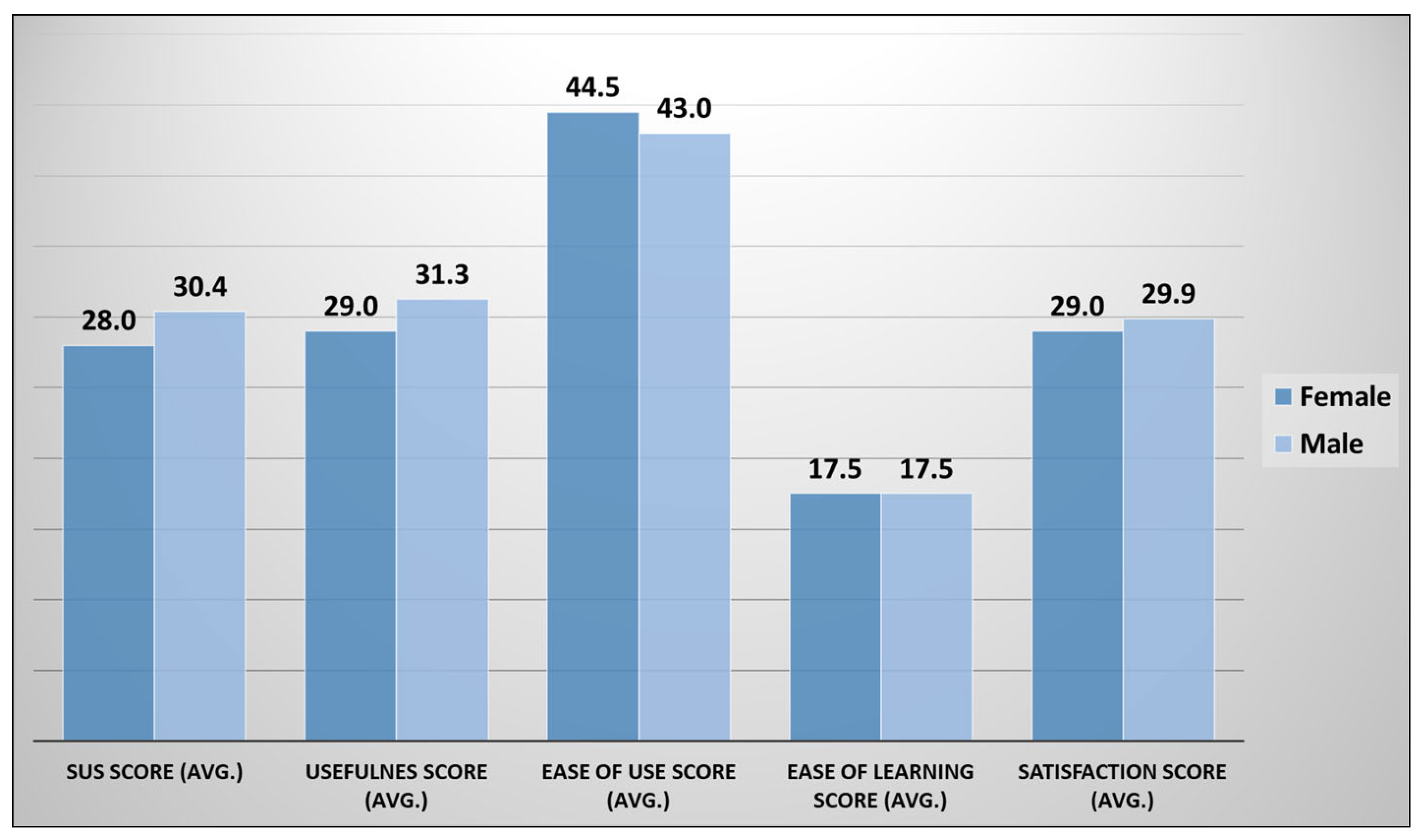

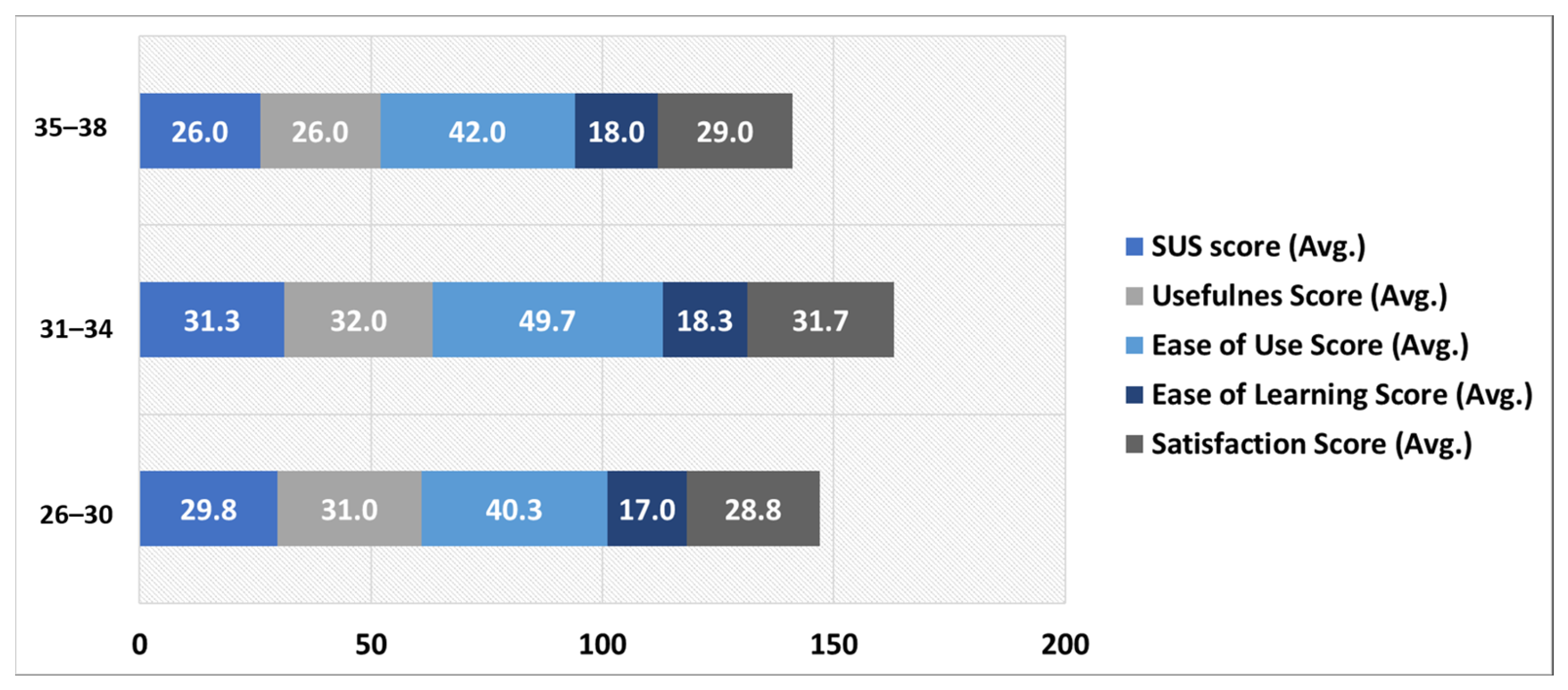

| Aspect | Mean | SD | Min | Max | Skewness | Kurtosis |
|---|---|---|---|---|---|---|
| SUS | 29.90 | 3.00 | 26 | 35 | 0.15 | −0.76 |
| Usefulness | 30.80 | 5.35 | 19 | 38 | −1.12 | 1.85 |
| Ease of Use | 43.30 | 7.60 | 31 | 54 | −0.52 | −0.91 |
| Ease of Learning | 17.50 | 2.36 | 13 | 20 | −1.00 | 0.15 |
| Satisfaction | 29.70 | 3.88 | 21 | 35 | −1.07 | 2.16 |
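The columns reported in the tables above (mean, SD, min, max, skewness, kurtosis) can be computed from a list of per-participant scores. The following is a minimal Python sketch under stated assumptions: the source does not say which estimators were used, so the sample standard deviation and the bias-adjusted sample skewness and excess kurtosis (the variants common in spreadsheet and statistics packages) are assumed here. The function name `describe` and the sample scores are hypothetical.

```python
import math
from typing import Dict, List


def describe(scores: List[float]) -> Dict[str, float]:
    """Descriptive statistics for one column of participant scores.

    Assumption: sample SD (n - 1 denominator) and bias-adjusted sample
    skewness and excess kurtosis; the source does not state its formulas.
    """
    n = len(scores)
    if n < 4:
        raise ValueError("need at least 4 scores for adjusted kurtosis")
    mean = sum(scores) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in scores) / (n - 1))
    if sd == 0:
        raise ValueError("skewness and kurtosis are undefined when SD is 0")
    z3 = sum(((x - mean) / sd) ** 3 for x in scores)
    z4 = sum(((x - mean) / sd) ** 4 for x in scores)
    skewness = n / ((n - 1) * (n - 2)) * z3
    kurtosis = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * z4
                - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    return {
        "mean": mean,
        "sd": sd,
        "min": min(scores),
        "max": max(scores),
        "skewness": skewness,
        "kurtosis": kurtosis,
    }


# Hypothetical 1-5 ratings from ten participants (not data from the study).
ratings = [2, 4, 4, 4, 4, 5, 5, 5, 5, 5]
stats = describe(ratings)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

A strongly negative skewness, as in this hypothetical sample, indicates that most ratings cluster at the high end of the scale with a few low outliers.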

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shaikh, S.; Yayilgan, S.Y.; Klimova, B.; Pikhart, M. Assessing the Usability of ChatGPT for Formal English Language Learning. Eur. J. Investig. Health Psychol. Educ. 2023, 13, 1937-1960. https://doi.org/10.3390/ejihpe13090140

Shaikh S, Yayilgan SY, Klimova B, Pikhart M. Assessing the Usability of ChatGPT for Formal English Language Learning. European Journal of Investigation in Health, Psychology and Education. 2023; 13(9):1937-1960. https://doi.org/10.3390/ejihpe13090140

Chicago/Turabian Style: Shaikh, Sarang, Sule Yildirim Yayilgan, Blanka Klimova, and Marcel Pikhart. 2023. "Assessing the Usability of ChatGPT for Formal English Language Learning" European Journal of Investigation in Health, Psychology and Education 13, no. 9: 1937-1960. https://doi.org/10.3390/ejihpe13090140

APA Style: Shaikh, S., Yayilgan, S. Y., Klimova, B., & Pikhart, M. (2023). Assessing the Usability of ChatGPT for Formal English Language Learning. European Journal of Investigation in Health, Psychology and Education, 13(9), 1937-1960. https://doi.org/10.3390/ejihpe13090140