Validation of the Persian Translation of the Children’s Test Anxiety Scale: A Multidimensional Rasch Model Analysis

Abstract

1. Introduction

2. Children’s Test Anxiety Scale (CTAS)

3. Method

3.1. Translation Procedure

3.2. Participants

3.3. Data Analysis

4. Findings

4.1. Rating Scale Structure

4.2. Unidimensionality Analysis

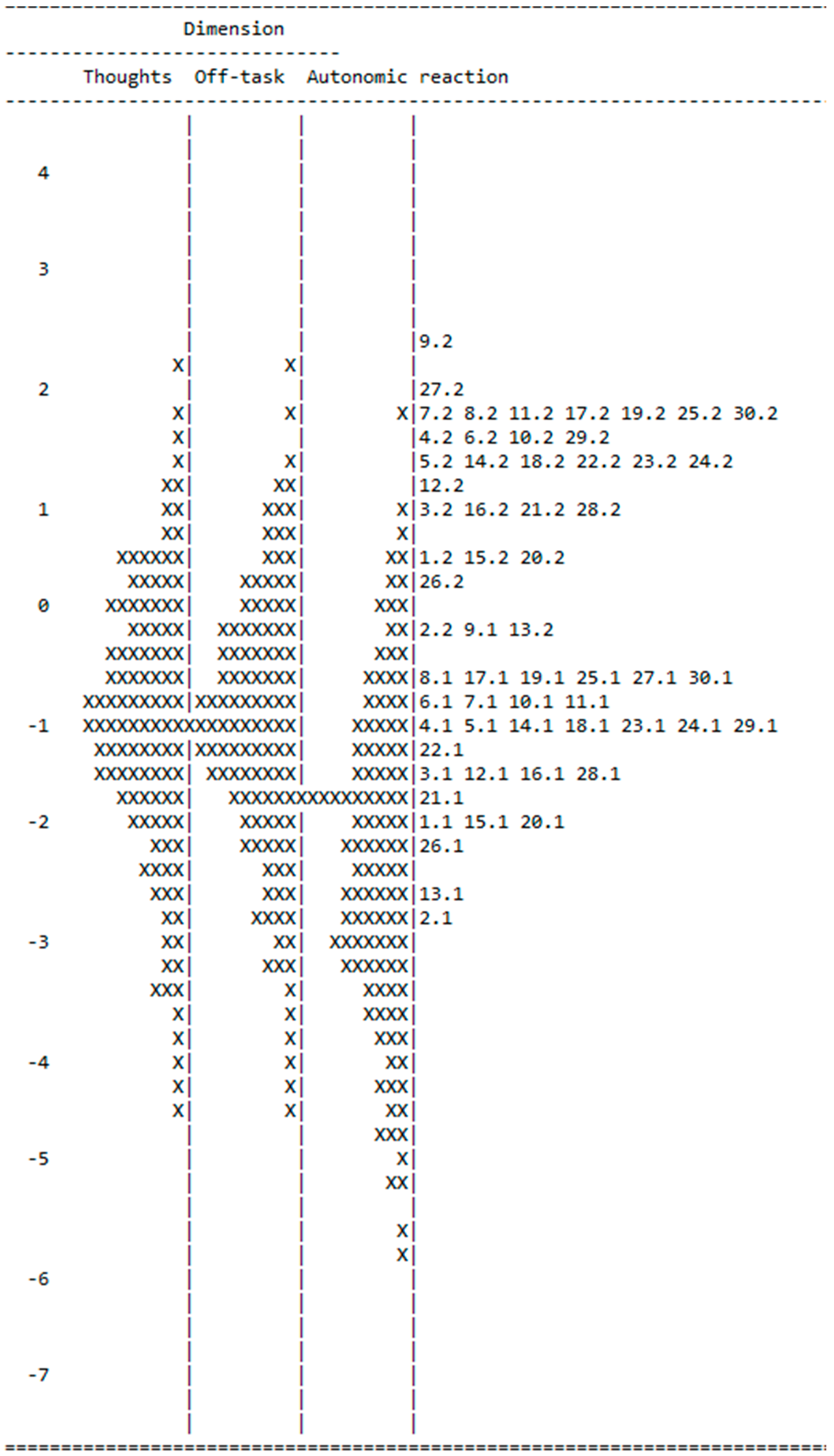

4.3. Consecutive Analysis

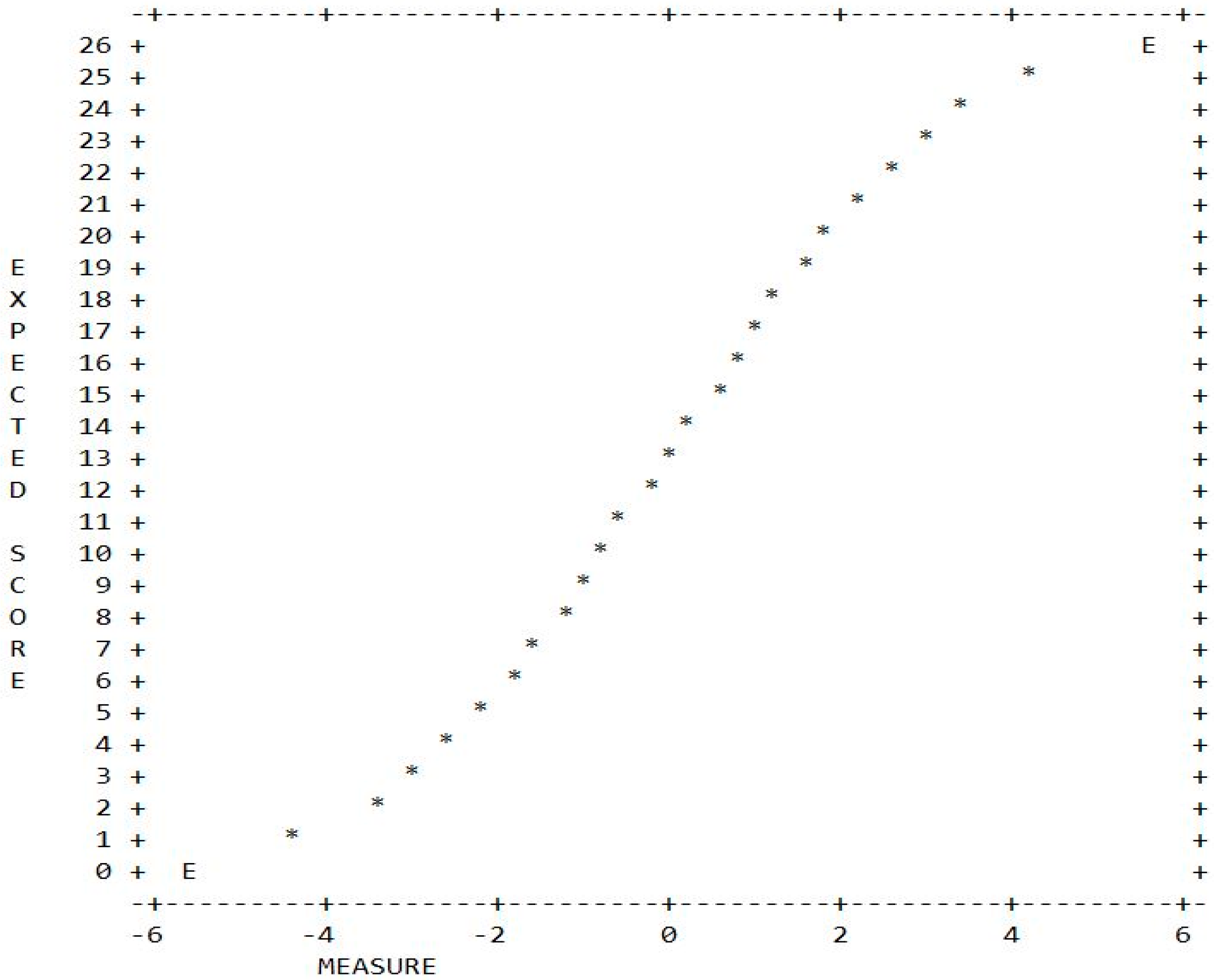

4.4. Raw Score to Interval Measure Conversion

4.5. External Validity

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Model Scoring | Thresholds | G² | Reliability | No. Parameters | Misfitting Items | AIC | CAIC | BIC |
|---|---|---|---|---|---|---|---|---|
| 1-dim 0123 | −0.39, 0.35, −0.04 | 9543.13 | 0.95 | 33 | 22, 25 | 9609.13 | 9743.44 | 9710.44 |
| 1-dim 0122 | −0.18, 0.18 | 8028.20 | 0.94 | 32 | 22, 25 | 8092.20 | 8222.44 | 8190.44 |
| 1-dim 0112 | −1.01, 1.01 | 7439.54 | 0.93 | 32 | 25 | 7503.54 | 7633.78 | 7601.78 |
| 3-dim 0112 | −1.15, 1.15 | 7307.87 | 0.87, 0.86, 0.85 | 37 | 25 | 7381.87 | 7532.46 | 7495.46 |
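
The information criteria in the table above can be recomputed from the deviance G² and the number of estimated parameters. The following is a minimal sketch, assuming the conventional definitions AIC = G² + 2p, BIC = G² + p·ln N, and CAIC = G² + p·(ln N + 1); these formulas are an assumption, since the table does not state them. The sample-size term ln N is not given in the table either, so it is backed out from the reported BIC. The names `models`, `aic`, `implied_log_n`, and `caic` are illustrative only.

```python
# (label, deviance G^2, number of parameters p, reported BIC), copied from the table above
models = [
    ("1-dim 0123", 9543.13, 33, 9710.44),
    ("1-dim 0122", 8028.20, 32, 8190.44),
    ("1-dim 0112", 7439.54, 32, 7601.78),
    ("3-dim 0112", 7307.87, 37, 7495.46),
]


def aic(deviance, n_params):
    """Assumed definition: AIC = G^2 + 2p."""
    return deviance + 2 * n_params


def implied_log_n(deviance, n_params, bic):
    """Back out ln N from the assumed definition BIC = G^2 + p * ln N."""
    return (bic - deviance) / n_params


def caic(deviance, n_params, log_n):
    """Assumed definition: CAIC = G^2 + p * (ln N + 1)."""
    return deviance + n_params * (log_n + 1)


for label, g2, p, bic in models:
    log_n = implied_log_n(g2, p, bic)
    print(f"{label}: AIC={aic(g2, p):.2f}  ln N={log_n:.2f}  CAIC={caic(g2, p, log_n):.2f}")
```

Under these assumptions the recomputed AIC and CAIC values agree with the table, and all four rows imply the same ln N. The 3-dim 0112 row has the smallest AIC, CAIC, and BIC of the four.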

| Item | Estimate | Error | Infit MNSQ | Outfit MNSQ |
|---|---|---|---|---|
| 1 | −0.733 | 0.101 | 0.86 | 0.88 |
| 2 | −1.446 | 0.109 | 0.97 | 0.99 |
| 3 | −0.274 | 0.105 | 0.81 | 0.79 |
| 4 | 0.214 | 0.120 | 1.07 | 1.04 |
| 5 | 0.125 | 0.104 | 0.81 | 0.79 |
| 6 | 0.367 | 0.106 | 0.85 | 0.85 |
| 7 | 0.471 | 0.106 | 0.97 | 0.92 |
| 8 | 0.585 | 0.124 | 1.23 | 1.14 |
| 9 | 1.107 | 0.111 | 1.08 | 0.97 |
| 10 | 0.396 | 0.122 | 1.15 | 1.02 |
| 11 | 0.424 | 0.106 | 1.05 | 1.04 |
| 12 | −0.233 | 0.105 | 1.05 | 1.13 |
| 13 | −1.422 | 0.102 | 1.04 | 1.05 |
| 14 | 0.190 | 0.107 | 1.25 | 1.17 |
| 15 | −0.781 | 0.102 | 0.65 | 0.64 |
| 16 | −0.268 | 0.102 | 1.04 | 1.04 |
| 17 | 0.615 | 0.124 | 0.91 | 0.76 |
| 18 | 0.168 | 0.106 | 0.98 | 0.90 |
| 19 | 0.548 | 0.107 | 0.90 | 0.81 |
| 20 | −0.708 | 0.113 | 1.24 | 1.32 |
| 21 | −0.435 | 0.102 | 0.82 | 0.84 |
| 22 | 0.028 | 0.106 | 1.35 | 1.38 |
| 23 | 0.102 | 0.119 | 1.10 | 0.92 |
| 24 | 0.186 | 0.104 | 0.89 | 0.81 |
| 25 | 0.542 | 0.124 | 1.67 | 1.55 |
| 26 | −0.904 | 0.103 | 0.99 | 0.98 |
| 27 | 0.653 | 0.108 | 0.72 | 0.68 |
| 28 | −0.300 | 0.338 | 0.81 | 0.67 |
| 29 | 0.229 | 0.362 | 1.23 | 1.13 |
| 30 | 0.553 | 0.280 | 1.30 | 1.25 |
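
The item fit statistics above can be screened mechanically. The sketch below flags any item whose infit or outfit mean square falls outside an acceptance range. The range 0.6 to 1.4 is an assumption (a commonly used guideline for rating-scale data), not a criterion stated in the table, and the names `fit` and `misfitting` are illustrative.

```python
# (item, infit MNSQ, outfit MNSQ), copied from the table above
fit = [
    (1, 0.86, 0.88), (2, 0.97, 0.99), (3, 0.81, 0.79), (4, 1.07, 1.04),
    (5, 0.81, 0.79), (6, 0.85, 0.85), (7, 0.97, 0.92), (8, 1.23, 1.14),
    (9, 1.08, 0.97), (10, 1.15, 1.02), (11, 1.05, 1.04), (12, 1.05, 1.13),
    (13, 1.04, 1.05), (14, 1.25, 1.17), (15, 0.65, 0.64), (16, 1.04, 1.04),
    (17, 0.91, 0.76), (18, 0.98, 0.90), (19, 0.90, 0.81), (20, 1.24, 1.32),
    (21, 0.82, 0.84), (22, 1.35, 1.38), (23, 1.10, 0.92), (24, 0.89, 0.81),
    (25, 1.67, 1.55), (26, 0.99, 0.98), (27, 0.72, 0.68), (28, 0.81, 0.67),
    (29, 1.23, 1.13), (30, 1.30, 1.25),
]

LOWER, UPPER = 0.6, 1.4  # assumed acceptance range, not taken from the table


def misfitting(rows, lower=LOWER, upper=UPPER):
    """Return the items whose infit or outfit MNSQ lies outside [lower, upper]."""
    return [
        item
        for item, infit, outfit in rows
        if not (lower <= infit <= upper and lower <= outfit <= upper)
    ]


print(misfitting(fit))
```

With the assumed range, only item 25 (infit 1.67, outfit 1.55) is flagged. Narrower bounds would flag additional items, so the cutoff should be chosen before inspecting the results.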

| Dimension | Thresholds | Reliability | Eigenvalue (First Contrast) | Misfitting Items | Variance |
|---|---|---|---|---|---|
| Thoughts | −1.52, 1.52 | 0.82 | 1.8 | - | 3.22 |
| Off-task | −1.14, 1.14 | 0.65 | 1.5 | - | 2.54 |
| Autonomic | −1.11, 1.11 | 0.64 | 1.5 | 25 | 2.97 |
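
To illustrate what a pair of thresholds implies for a three-category item, the sketch below computes category probabilities under the Andrich rating scale model, in which the probability of category k is proportional to exp of the sum over j ≤ k of (θ − δ − τ_j). It assumes the reported thresholds are rating scale thresholds in logits. The person measure `theta` and item location `delta` are hypothetical values chosen for illustration, and the function name is not from the source.

```python
import math


def category_probabilities(theta, delta, thresholds):
    """Rating scale model probabilities for categories 0..len(thresholds)."""
    # Category 0 has an empty sum (0); category k adds (theta - delta - tau_k).
    logits = [0.0]
    for tau in thresholds:
        logits.append(logits[-1] + (theta - delta - tau))
    weights = [math.exp(v) for v in logits]
    total = sum(weights)
    return [w / total for w in weights]


thoughts_thresholds = [-1.52, 1.52]  # "Thoughts" row of the table above

# theta = delta = 0 is a hypothetical case: a person located exactly at the item.
probs = category_probabilities(theta=0.0, delta=0.0, thresholds=thoughts_thresholds)
expected_score = sum(k * p for k, p in enumerate(probs))

print([round(p, 3) for p in probs], round(expected_score, 3))
```

Because the two thresholds are symmetric about zero, a person located at the item has equal probabilities for the lowest and highest categories, and the middle category is the most likely response.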

| Dimension | 1 | 2 | 3 |
|---|---|---|---|
| Thoughts | - | 0.62 | 0.62 |
| Off-task | 0.73 | - | 0.56 |
| Autonomic | 0.79 | 0.72 | - |
| Variance | 1.92 | 1.67 | 2.51 |

| Scales | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Thoughts | 0.89 | | | | | | | | | | |
| Off-Task | 0.58 ** | 0.79 | | | | | | | | | |
| Autonomic | 0.69 ** | 0.57 ** | 0.83 | | | | | | | | |
| PTASC | 0.91 ** | 0.80 ** | 0.85 ** | 0.92 | | | | | | | |
| Separation | 0.26 ** | 0.19 * | 0.24 ** | 0.27 ** | 0.65 | | | | | | |
| Social | 0.53 ** | 0.40 ** | 0.42 ** | 0.53 ** | 0.41 ** | 0.62 | | | | | |
| Obsessive | 0.45 ** | 0.36 ** | 0.39 ** | 0.47 ** | 0.42 ** | 0.38 ** | 0.60 | | | | |
| Panic | 0.38 ** | 0.24 ** | 0.45 ** | 0.42 ** | 0.43 ** | 0.31 ** | 0.45 ** | 0.73 | | | |
| Physical | 0.17 * | 0.12 | 0.12 | 0.16 * | 0.47 ** | 0.31 ** | 0.19 * | 0.24 ** | 0.57 | | |
| Generalized | 0.54 ** | 0.42 ** | 0.59 ** | 0.60 ** | 0.47 ** | 0.55 ** | 0.52 ** | 0.50 ** | 0.39 ** | 0.60 | |
| SCAS | 0.55 ** | 0.41 ** | 0.53 ** | 0.58 ** | 0.75 ** | 0.69 ** | 0.70 ** | 0.70 ** | 0.60 ** | 0.81 ** | 0.81 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shoahosseini, R.; Baghaei, P. Validation of the Persian Translation of the Children’s Test Anxiety Scale: A Multidimensional Rasch Model Analysis. Eur. J. Investig. Health Psychol. Educ. 2020, 10, 59-69. https://doi.org/10.3390/ejihpe10010006
