A Framework in Calibration Process for Line Structured Light System Using Image Analysis

Abstract

1. Introduction

2. Literature Review

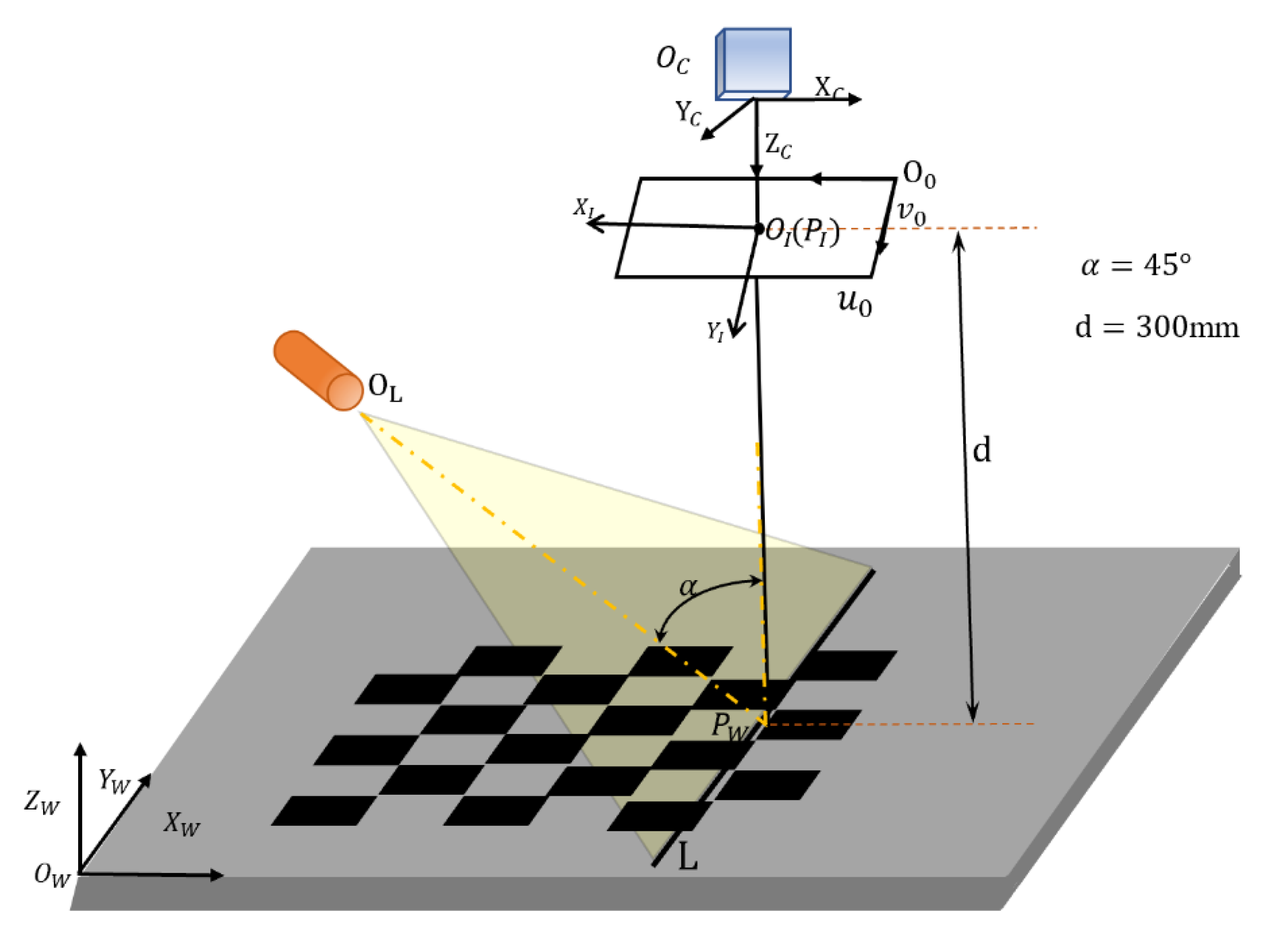

3. System Model of Line Structured Light

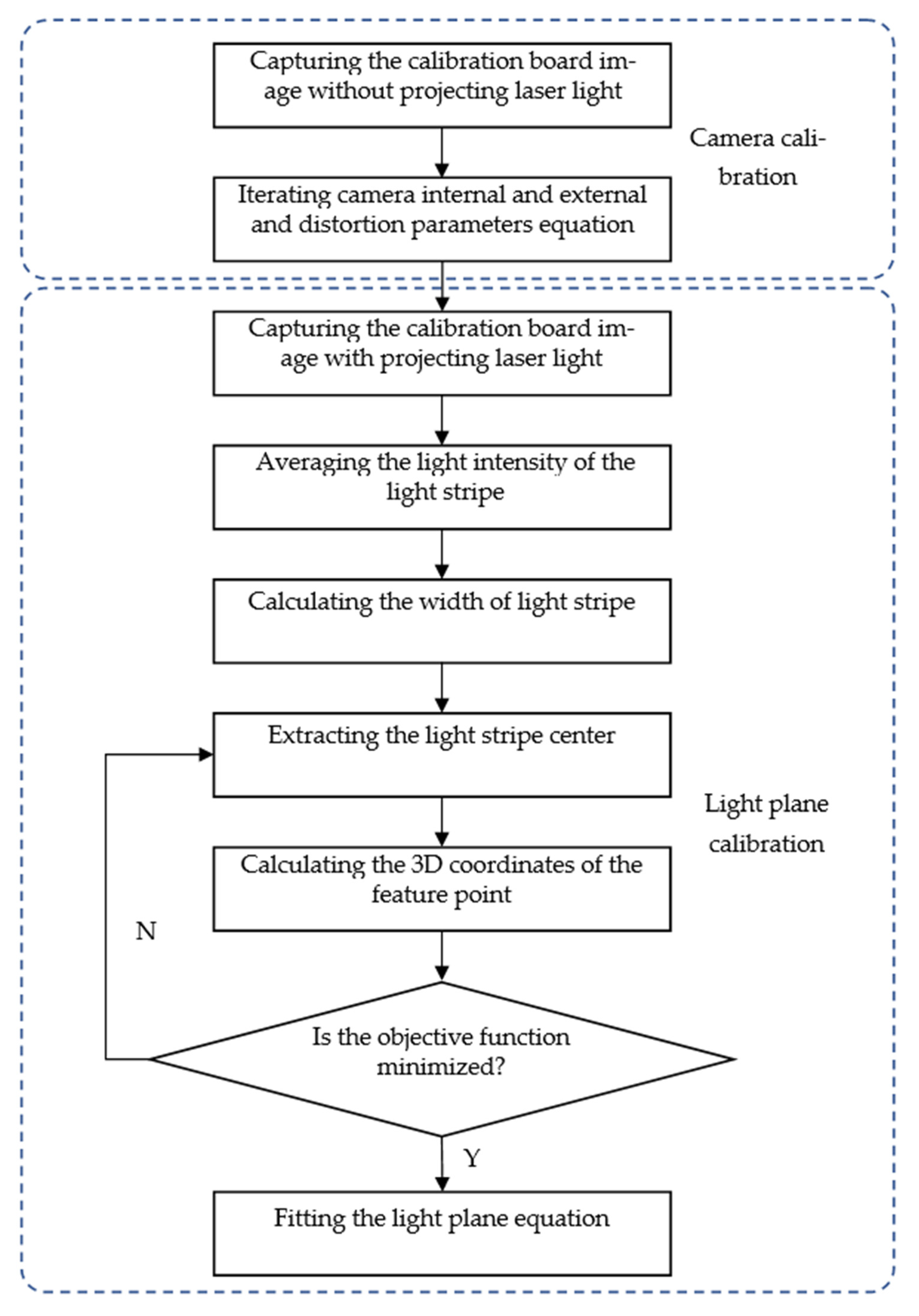

4. Calibration Process

4.1. Overview of Calibration Process

4.2. Camera Calibration

4.3. Light Plane Calibration

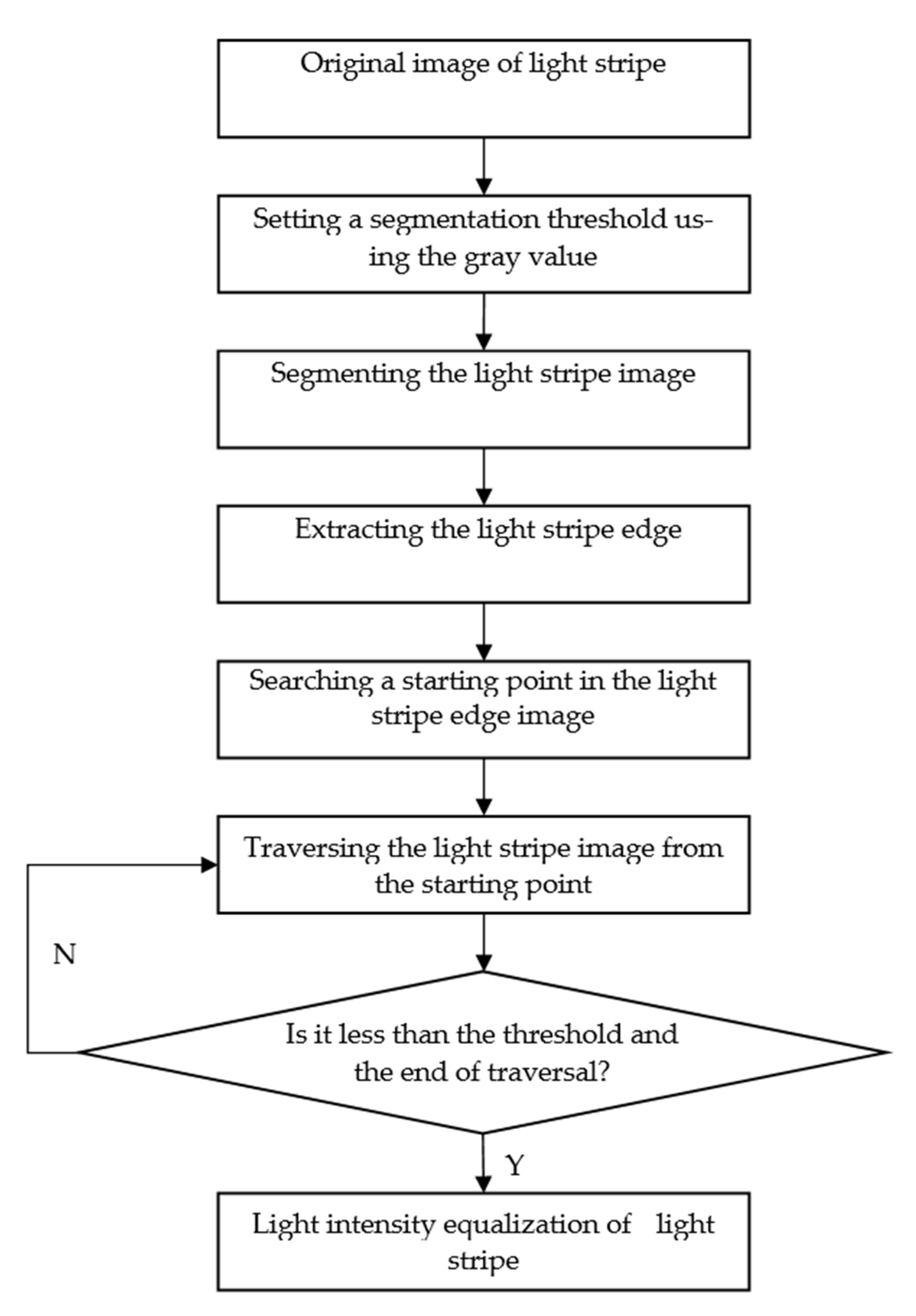

4.3.1. Light Intensity Equalization of Light Stripe

Algorithm 1: Pseudo-code of Light Intensity Equalization Algorithm

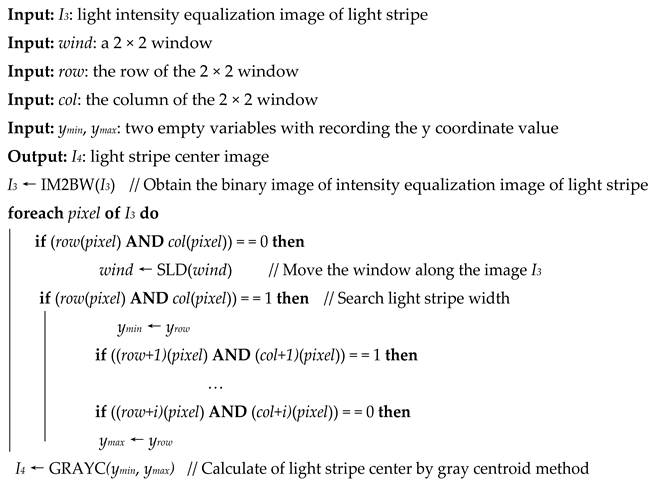

4.3.2. Adaptive Window-Based Extraction of Light Stripe Center

- Step 1: The light-intensity-equalized image is converted into a binary image. A 2 × 2 window is constructed whose upper left corner coincides with the upper left corner of the binary image, and the window is then moved across the binary image from left to right and from top to bottom.

- Step 2: A logical AND operation is applied between the 2 × 2 window and the underlying pixels of the binary image to determine whether the detected pixels are noise points or points on the light stripe. The specific algorithm is as follows:

- Step 3: All recorded y values are compared to determine their maximum and minimum. The M value in Equation (6) is calculated from the difference between the maximum and minimum y values.

- Step 4: The light stripe center is calculated using Equation (6), and the light stripe center image I4 is obtained by labelling the light stripe center in the light stripe image.

Algorithm 2: Pseudo-code of Adaptive Window Algorithm of Light Stripe Center Extraction
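The four steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the binarization threshold is a free parameter, Step 2 is assumed to keep a pixel only when all four pixels of some 2 × 2 window covering it are foreground, M is taken to be the plain difference max(y) - min(y), and because Equation (6) is not reproduced in this section, Step 4 substitutes an ordinary intensity-weighted centroid over rows min(y) to max(y) in each column. The function names `and_window_filter` and `extract_stripe_centers` are hypothetical.

```python
import numpy as np


def and_window_filter(binary: np.ndarray) -> np.ndarray:
    """Step 2 (assumed rule): keep pixels covered by a fully foreground 2x2 window.

    both[y, x] is the logical AND of the four pixels in the 2x2 window whose
    upper left corner is (y, x); evaluating every window position with slicing
    is equivalent to moving the window left-to-right, top-to-bottom.
    """
    both = binary[:-1, :-1] & binary[:-1, 1:] & binary[1:, :-1] & binary[1:, 1:]
    kept = np.zeros_like(binary)
    h, w = both.shape
    for dy in (0, 1):
        for dx in (0, 1):
            # Mark all four pixels of every window that passed the AND test.
            kept[dy:dy + h, dx:dx + w] |= both
    return kept


def extract_stripe_centers(img: np.ndarray, threshold: float):
    """Hypothetical sketch of Steps 1-4; returns (M, {column: center_row})."""
    # Step 1: binarize the equalized stripe image (threshold is an assumption).
    binary = (img >= threshold).astype(np.uint8)
    # Step 2: reject isolated noise pixels with the 2x2 AND window.
    kept = and_window_filter(binary)
    # Step 3: record the y (row) values of stripe points and take their range.
    ys = np.nonzero(kept)[0]
    if ys.size == 0:
        return 0, {}
    y_min, y_max = int(ys.min()), int(ys.max())
    m = y_max - y_min
    # Step 4 (stand-in for Equation (6), NOT the authors' formula):
    # intensity-weighted centroid per column over kept pixels in rows y_min..y_max.
    rows = np.arange(y_min, y_max + 1, dtype=float)
    band = img[y_min:y_max + 1].astype(float) * kept[y_min:y_max + 1]
    centers = {}
    for x in range(img.shape[1]):
        total = band[:, x].sum()
        if total > 0:
            centers[x] = float((rows * band[:, x]).sum() / total)
    return m, centers
```

Calling `extract_stripe_centers(img, threshold)` on a grayscale array returns M together with a dictionary mapping each column index to a sub-pixel center row; an isolated bright pixel that cannot fill a 2 × 2 window is rejected by the AND step. Evaluating all window positions with array slicing gives the same mask as moving the window pixel by pixel, while avoiding a Python-level double loop.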

4.3.3. Light Plane Fitting

5. Experiment and Results

5.1. Camera Calibration Results

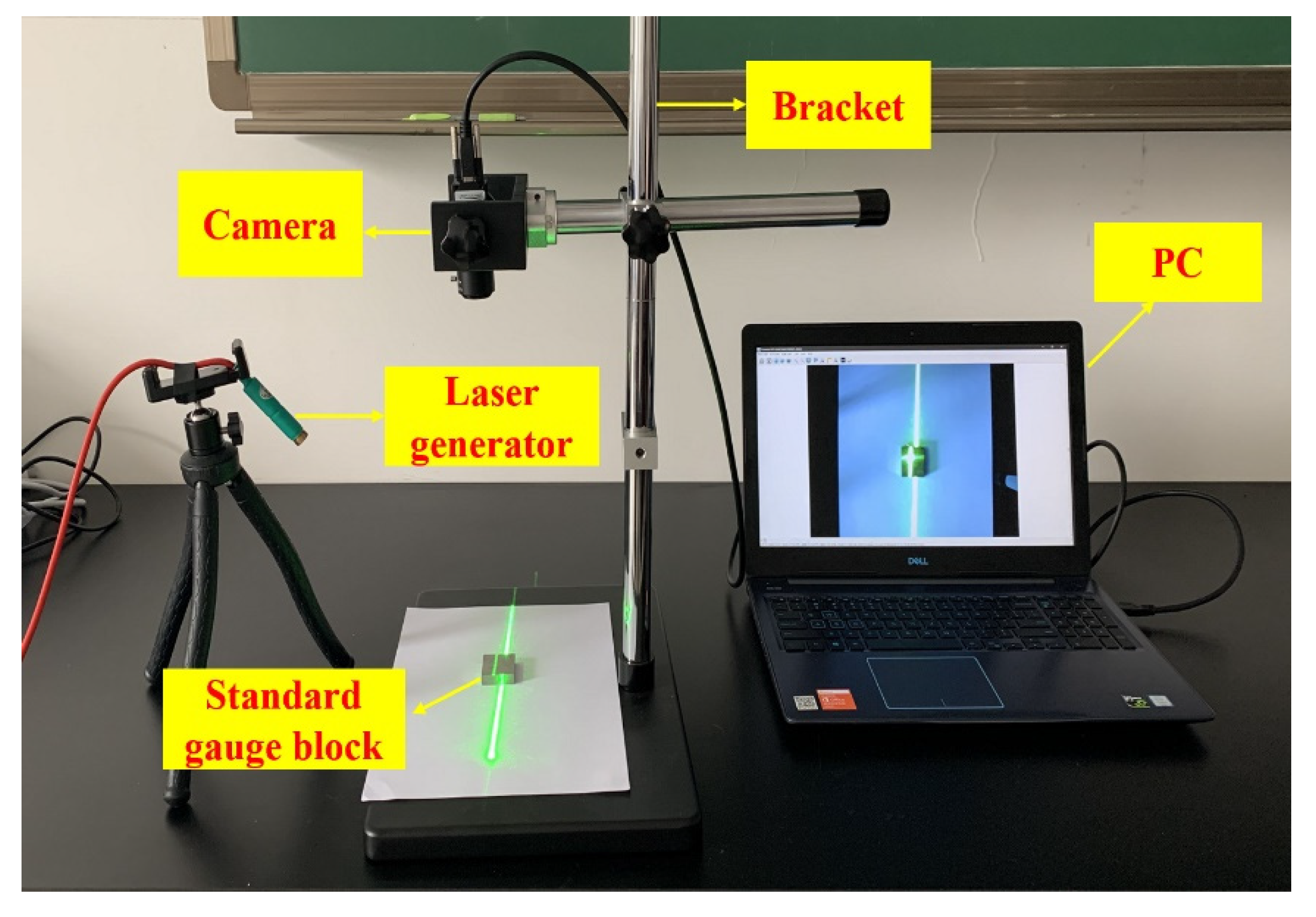

5.2. Light Plane Calibration Results

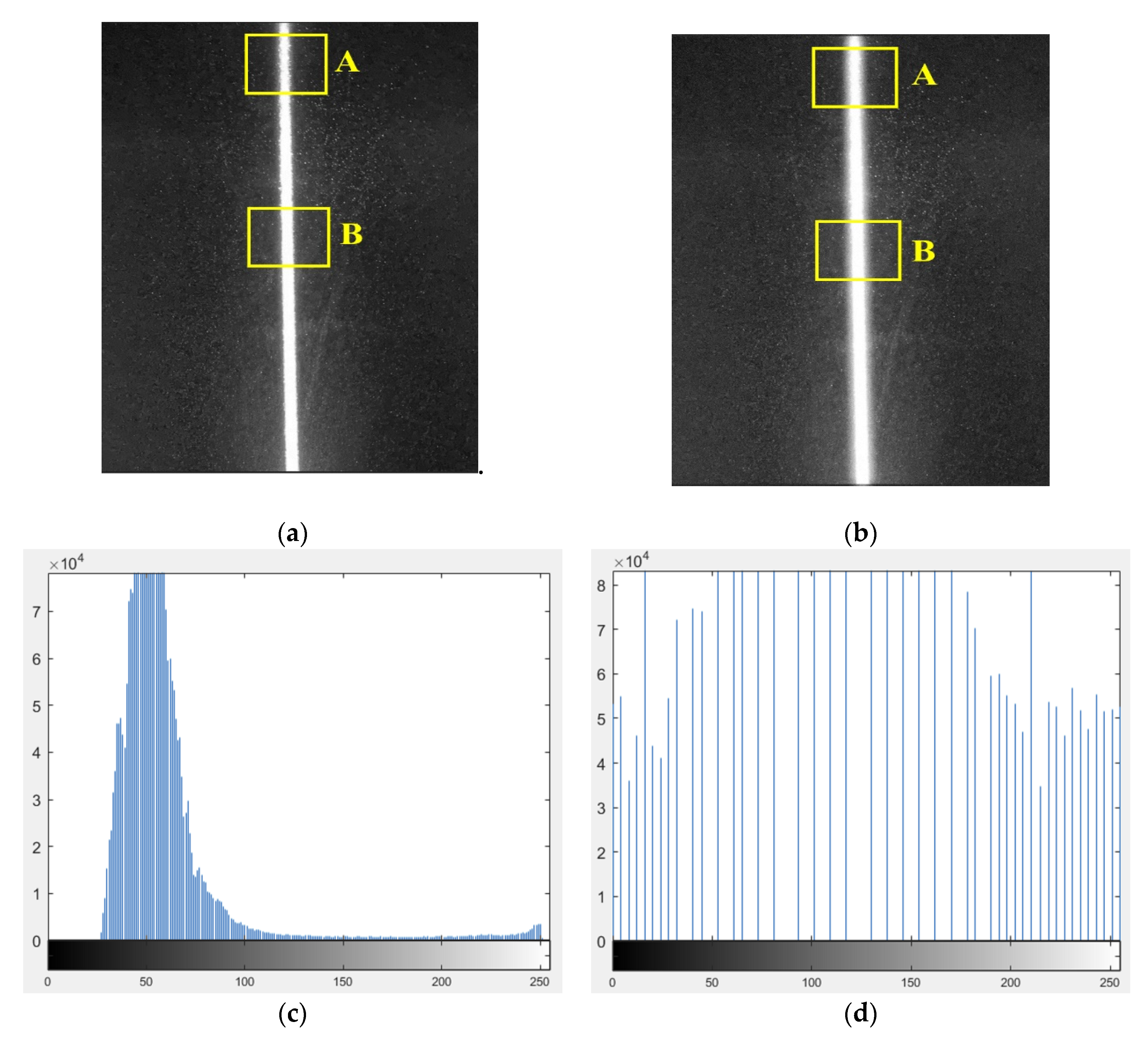

5.2.1. Light Intensity Averaging Results of Light Stripe Image

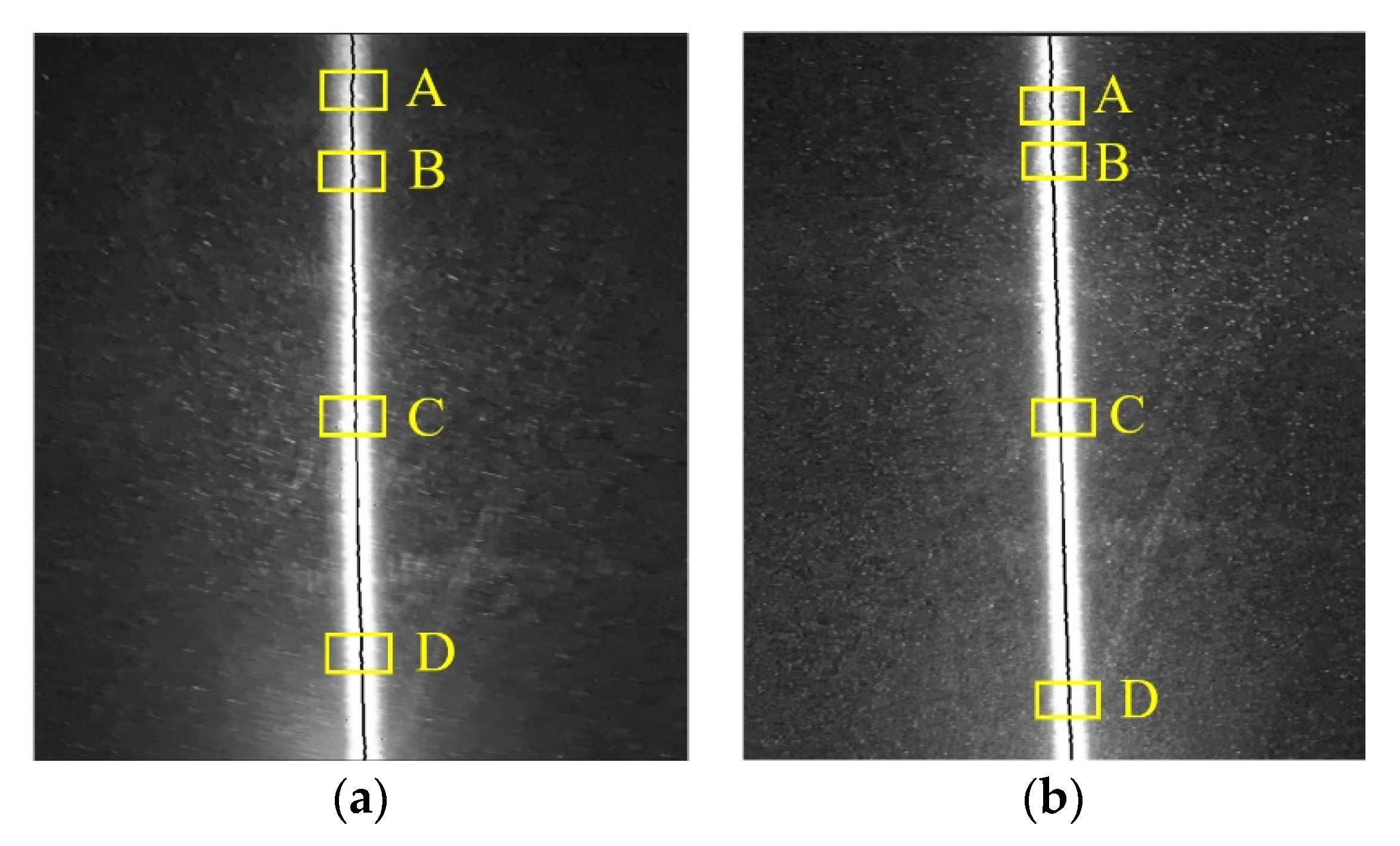

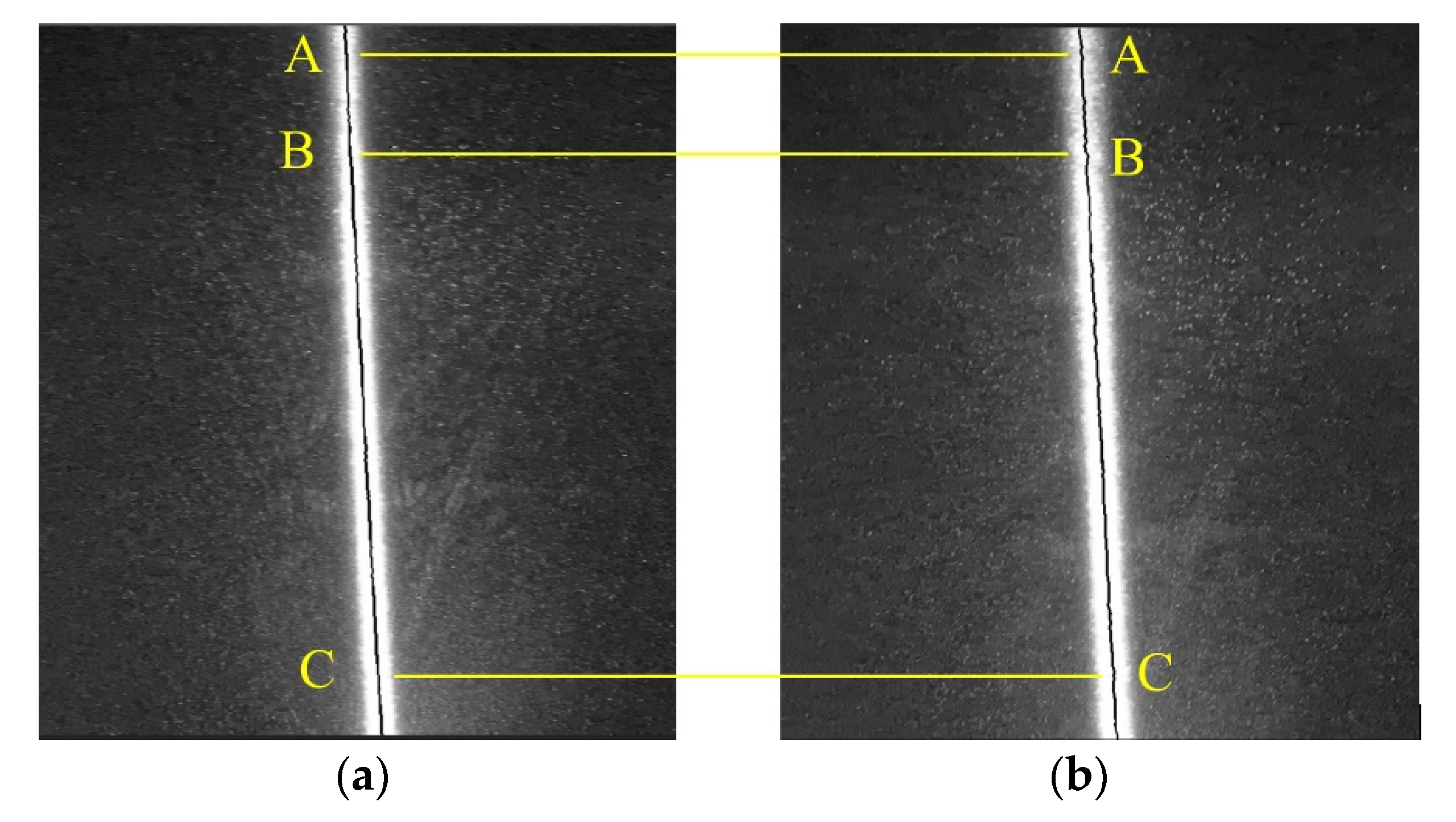

5.2.2. Results of Light Stripe Center Extraction

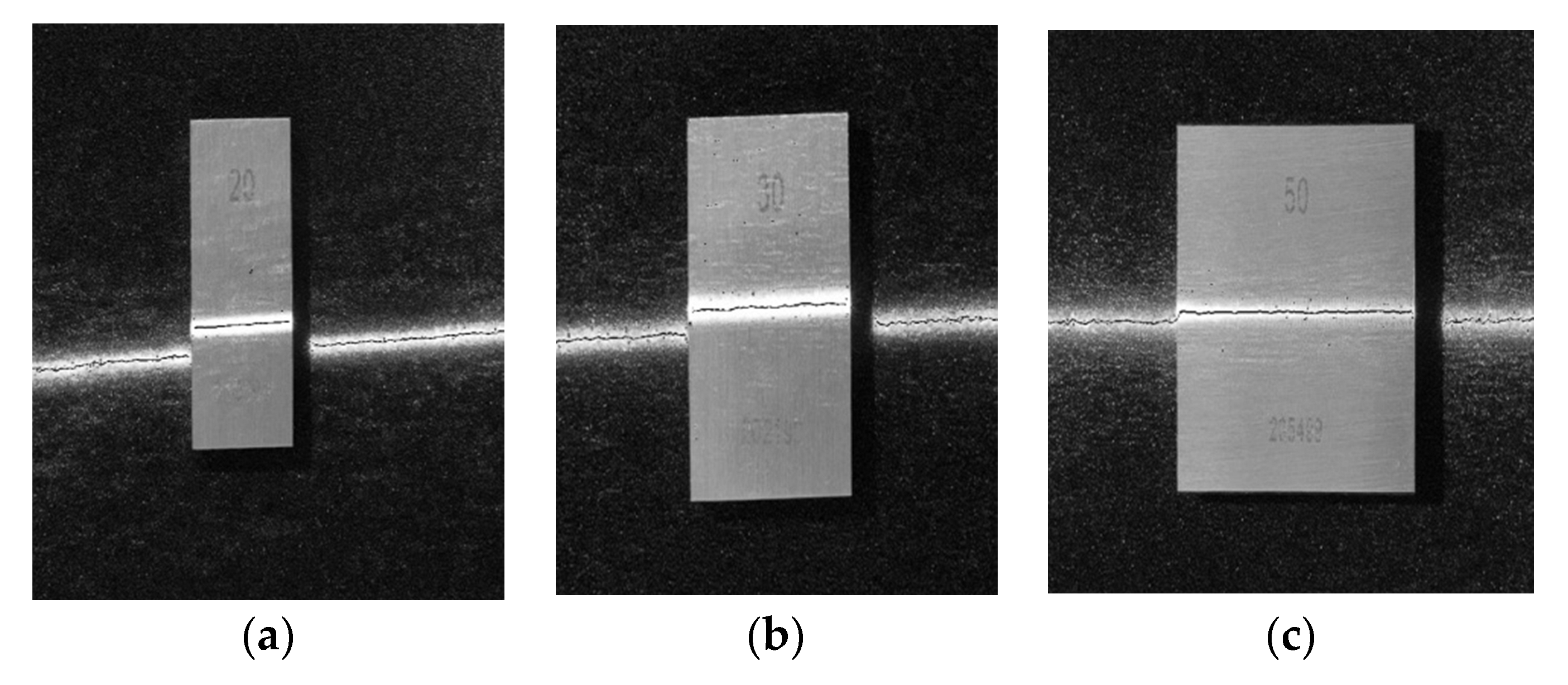

5.3. Measurement Experiment and Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Group | Statistic | Proposed Method | Skeleton Method | Gray Centroid Method |
|---|---|---|---|---|
| Group 1 | max | 0.024 | 0.054 | 0.076 |
| | min | 0.015 | 0.025 | 0.044 |
| Group 2 | max | 0.031 | 0.105 | 0.098 |
| | min | 0.014 | 0.036 | 0.053 |
| Group 3 | max | 0.029 | 0.075 | 0.104 |
| | min | 0.020 | 0.036 | 0.073 |
| Group 4 | max | 0.045 | 0.084 | 0.083 |
| | min | 0.015 | 0.034 | 0.054 |
| Group 5 | max | 0.019 | 0.118 | 0.098 |
| | min | 0.016 | 0.058 | 0.067 |

| Standard Gauge Blocks (mm) | Statistic | Proposed Method | Skeleton Method | Gray Centroid Method |
|---|---|---|---|---|
| 20 | max | 0.014 | 0.094 | 0.085 |
| | min | 0.008 | 0.017 | 0.047 |
| 30 | max | 0.021 | 0.089 | 0.083 |
| | min | 0.008 | 0.042 | 0.057 |
| 50 | max | 0.016 | 0.105 | 0.092 |
| | min | 0.009 | 0.056 | 0.054 |

| Standard Gauge Blocks (mm) | Statistic | Proposed Method | Skeleton Method | Gray Centroid Method |
|---|---|---|---|---|
| 20 | max | 0.013 | 0.102 | 0.130 |
| | min | 0.009 | 0.064 | 0.052 |
| 30 | max | 0.023 | 0.092 | 0.104 |
| | min | 0.015 | 0.043 | 0.042 |
| 50 | max | 0.018 | 0.113 | 0.110 |
| | min | 0.012 | 0.062 | 0.040 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, T.; Liu, S.; Wang, C.; Fu, Q.; Zheng, X. A Framework in Calibration Process for Line Structured Light System Using Image Analysis. Processes 2021, 9, 917. https://doi.org/10.3390/pr9060917
