A Systematic Model for Process Development Activities to Support Process Intelligence

Abstract

1. Introduction

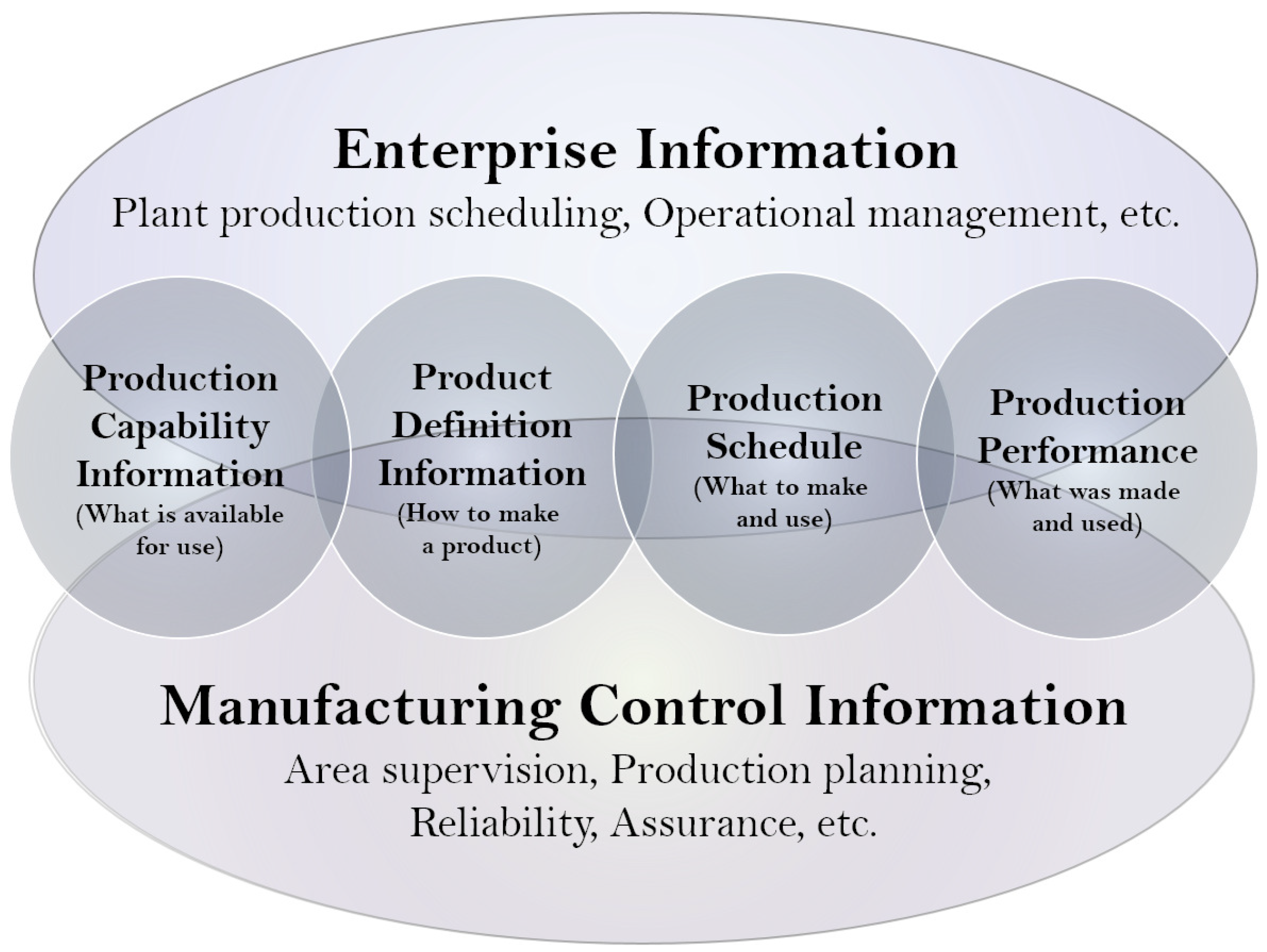

1.1. Decision-Making in the Enterprise

1.2. Enterprise Integration

- Level 6 Enterprise: Corporate Management (External Influences)

- Level 5 Facility: Planning Production

- Level 4 Section: Material/Resource Supervision

- Level 3 Cell: Coordinate Multiple Machines

- Level 2 Station: Command Machine Sequences

- Level 1 Equipment: Activate Sequences of Motion (Plant Machinery and Equipment)
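
The six levels listed above form an ordered hierarchy, which can be sketched as an ordered enumeration. The following is a minimal illustration only: the names `EnterpriseLevel`, `RESPONSIBILITY`, and `levels_above` are assumptions made for this sketch and are not part of the reference model.

```python
from enum import IntEnum
from typing import List


class EnterpriseLevel(IntEnum):
    """Hierarchy levels from the list above; member names are illustrative."""
    EQUIPMENT = 1
    STATION = 2
    CELL = 3
    SECTION = 4
    FACILITY = 5
    ENTERPRISE = 6


# Responsibility of each level, copied from the list above.
RESPONSIBILITY = {
    EnterpriseLevel.EQUIPMENT: "Activate sequences of motion",
    EnterpriseLevel.STATION: "Command machine sequences",
    EnterpriseLevel.CELL: "Coordinate multiple machines",
    EnterpriseLevel.SECTION: "Material/resource supervision",
    EnterpriseLevel.FACILITY: "Planning production",
    EnterpriseLevel.ENTERPRISE: "Corporate management",
}


def levels_above(level: EnterpriseLevel) -> List[EnterpriseLevel]:
    """Return every level strictly above `level`, lowest first."""
    return [lvl for lvl in EnterpriseLevel if lvl > level]


if __name__ == "__main__":
    # Walk upward from the cell level to the enterprise level.
    for lvl in levels_above(EnterpriseLevel.CELL):
        print(lvl.value, lvl.name.title(), "-", RESPONSIBILITY[lvl])
```

Using an `IntEnum` keeps the level numbers comparable, so a query such as "which levels supervise this one" reduces to an integer comparison.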

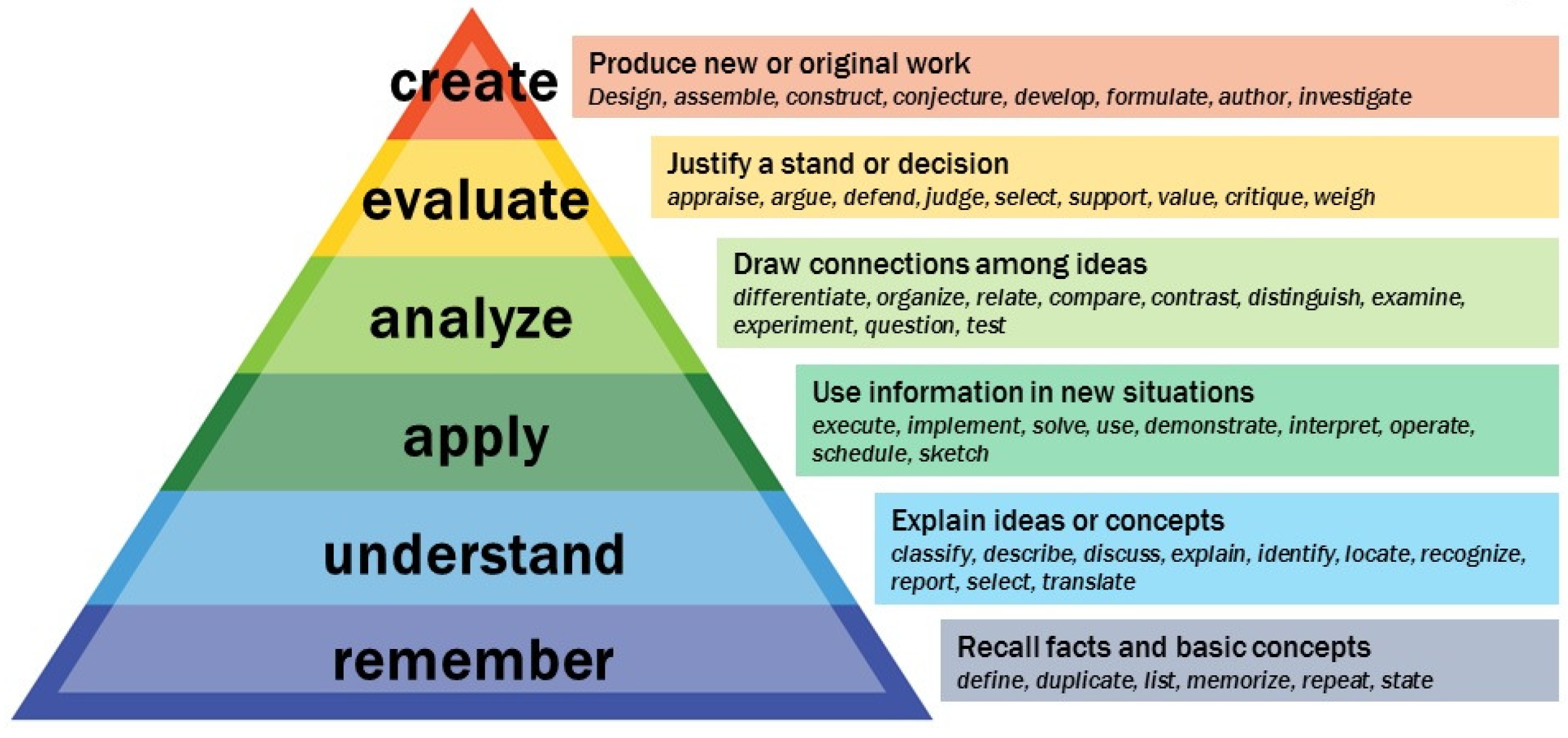

1.3. Knowledge Management

- a shared and common understanding of a domain among people and across application systems and

- an explicit conceptualization that describes the semantics of the data.

1.3.1. Bloom’s Cognition Taxonomy

- Remember: Recognizing, Recalling.

- Understand: Interpreting, Exemplifying, Classifying, Summarizing, Inferring, Comparing, Explaining.

- Apply: Executing, Implementing.

- Analyze: Differentiating, Organizing, Attributing.

- Evaluate: Checking, Critiquing.

- Create: Generating, Planning, Producing.

- Factual Knowledge
  - Knowledge of terminology
  - Knowledge of specific details & elements
- Conceptual Knowledge
  - Knowledge of classifications and categories
  - Knowledge of principles and generalizations
  - Knowledge of theories, models, and structures
- Procedural Knowledge
  - Knowledge of subject-specific skills and algorithms
  - Knowledge of subject-specific techniques and methods
  - Knowledge of criteria for determining when to use appropriate procedures
- Metacognitive Knowledge
  - Strategic Knowledge
  - Knowledge about cognitive tasks (appropriate contextual and conditional knowledge)
  - Self-knowledge

1.4. Transactional System and Data Management

1.4.1. Transactional System

1.4.2. Data Management

2. Materials and Methods

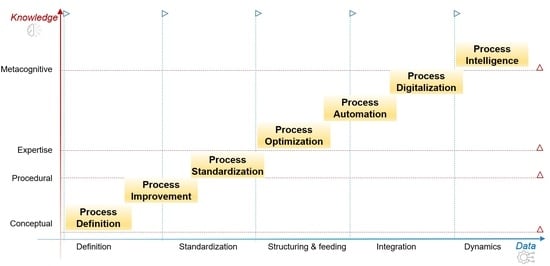

2.1. Process System Model

2.1.1. Process Definition

2.1.2. Process Improvement

2.1.3. Process Standardization

2.1.4. Process Optimization

2.1.5. Process Automation

2.1.6. Process Digitalization

2.1.7. Process Intelligence

2.2. Data System Model

2.2.1. Definition

2.2.2. Improvement

2.2.3. Standardization

2.2.4. Integration and Feeding

2.2.5. Dynamics

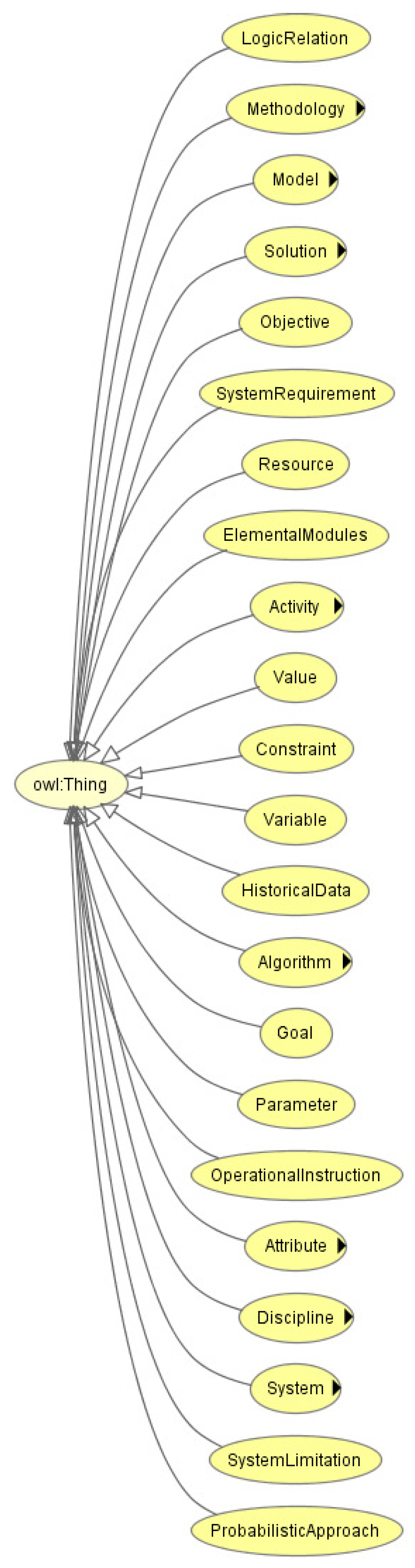

2.3. Knowledge System Model

2.3.1. Conceptual

2.3.2. Procedural

2.3.3. Expertise

2.3.4. Metacognitive

3. Results

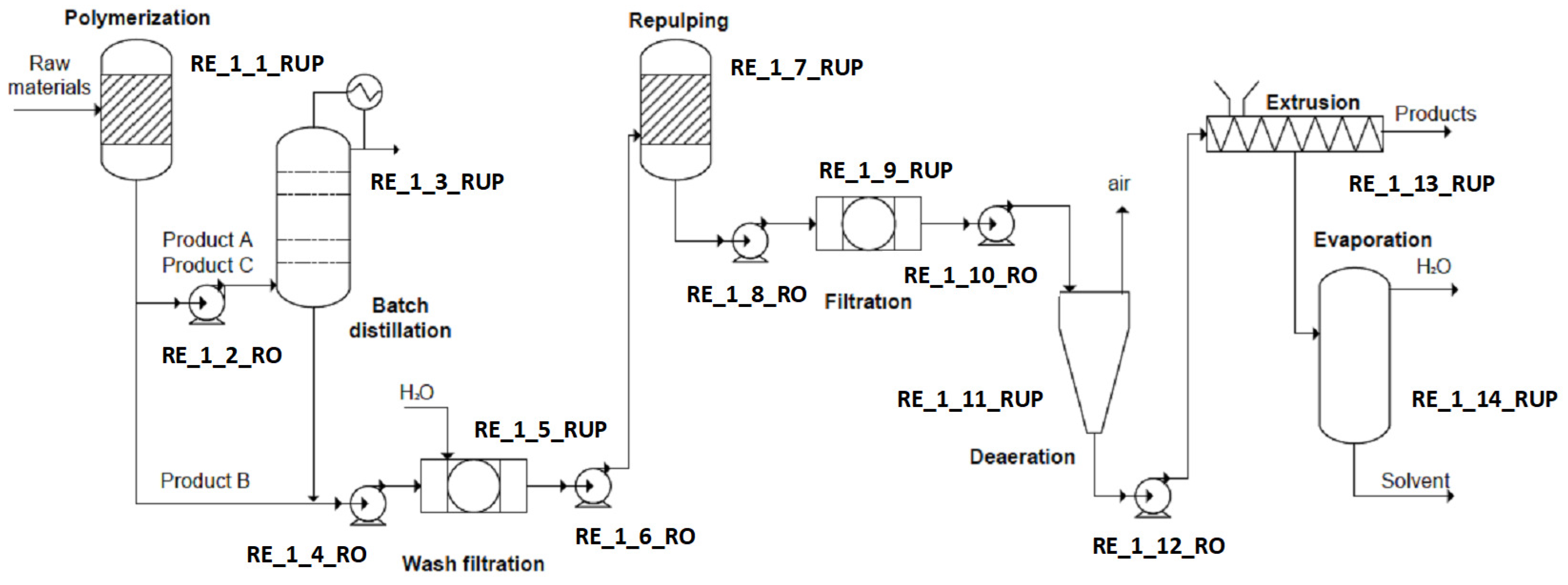

3.1. Case Study

- Level one (L1) regarding process definition, conceptual knowledge (process principles, process standards, and data standards), and data definition.

- Level three (L3) regarding process standardization and data standardization.

- Level four (L4) regarding process optimization, procedural knowledge, and integration and feeding data.

- Level seven (L7) regarding process intelligence, metacognitive knowledge, and data dynamics.

3.1.1. Process Model of Polymer Plant

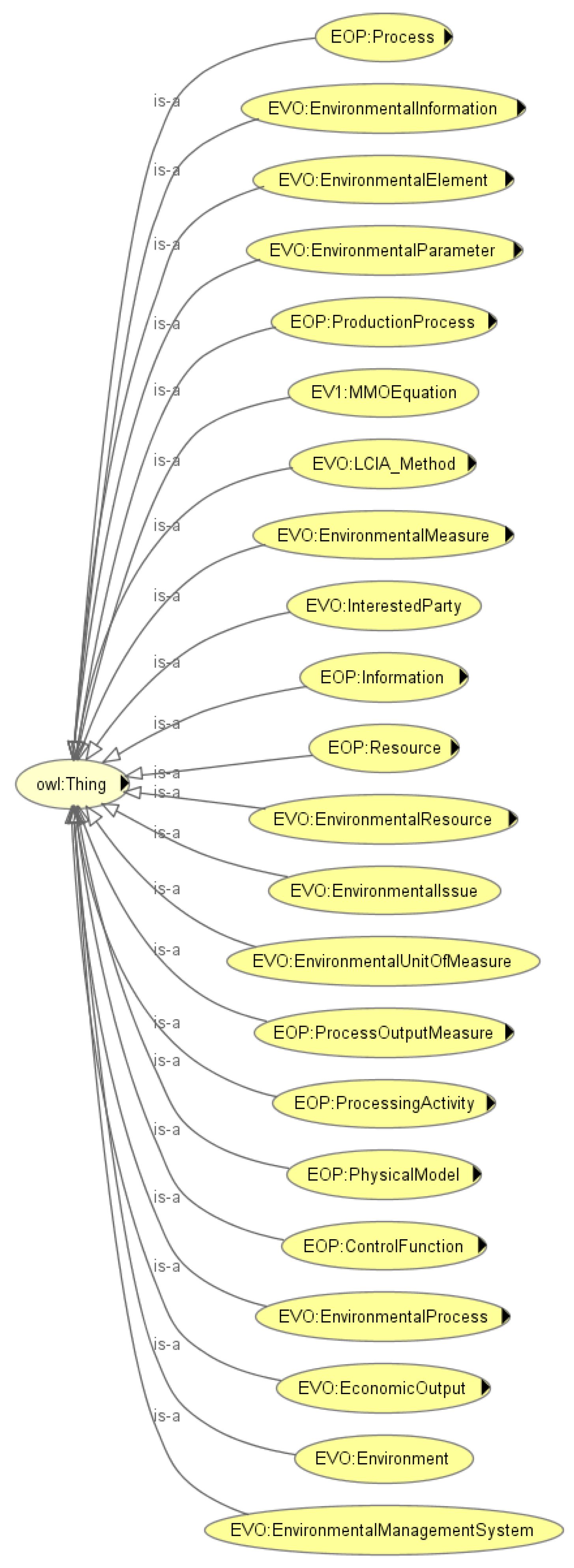

3.1.2. A Knowledge Model of the Polymer Plant

- Production system characterization: comprising physical, procedural, and recipe (site and general) models.

- Products contemplated: determined by the processing order activity, with the industry recipes defining the production requirements and production path for the products in the physical, process, and recipe (master and control) models.

- Resource availability and plant status: provided by the process management and production information management activities.

3.1.3. The Data Model of the Polymer Plant

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Level | Process Model | Knowledge Model | Transactional Model |
|---|---|---|---|
| L1 | Process Definition | Conceptual | Definition |
| | Current process matter | Chemical principles; Physics principles; Mechanics principles | Data definition; Data collection |
| L2 | Improvement | | Improvement |
| | Benchmarking | Good manufacturing practices; Standard operational procedures | Data refining; Database |
| L3 | Standardization | | Standardization |
| | Tier levels definition; World-class process | Process standards; Quality standards; Data standards; Security standards | Data metrics; Data language; Structured data |
| L4 | Optimization | Procedural | Integration & Feeding |
| | Better performance; Key process variables; Key process parameters | Analytic algorithms knowledge; Analytical methods knowledge | Data to parameters; Data to sets; Planning systems |
| L5 | Automation | Expertise | |
| | Fixed parameters; Fixed variables; Key indicators; Set values ranges | Good & bad habits algorithms | Fixed data collection; Fixed data structuring |
| L6 | Digitalization | | |
| | Virtual twin processes; New process scenarios | Knowledge-based scenarios | Virtual feeding |
| L7 | Intelligence | Metacognitive | Dynamics |
| | Problem characterization; Problem classification; Intelligent systems; Intelligent agents; Autonomous decision-making | Model knowledge characterization; Model knowledge classification; Knowledge reasoning; Knowledge creation | Automated data collection; Automated data structuring; Intelligent database |
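
The level-by-level alignment of the three models in the table above can be sketched as a small lookup structure. This is only an illustration under one stated assumption: where the table names no new knowledge or data stage at a level, the most recently named stage is taken to remain in force. The dictionary and function names are hypothetical.

```python
# Stage headings per maturity level, taken from the table above.
PROCESS = {
    1: "Definition", 2: "Improvement", 3: "Standardization",
    4: "Optimization", 5: "Automation", 6: "Digitalization",
    7: "Intelligence",
}
KNOWLEDGE = {1: "Conceptual", 4: "Procedural", 5: "Expertise", 7: "Metacognitive"}
DATA = {
    1: "Definition", 2: "Improvement", 3: "Standardization",
    4: "Integration & Feeding", 7: "Dynamics",
}


def stage_at(stages: dict, level: int) -> str:
    """Return the stage named at `level`, or the latest one named below it.

    Assumption of this sketch: a stage persists until the next stage starts.
    """
    if not 1 <= level <= 7:
        raise ValueError("level must be between 1 and 7")
    named = [lvl for lvl in stages if lvl <= level]
    return stages[max(named)]


def profile(level: int) -> dict:
    """Collect the process, knowledge, and data stage for one level."""
    return {
        "process": stage_at(PROCESS, level),
        "knowledge": stage_at(KNOWLEDGE, level),
        "data": stage_at(DATA, level),
    }


if __name__ == "__main__":
    for level in range(1, 8):
        print(f"L{level}", profile(level))
```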

| General Feature | Value |

|---|---|

| Production capacity | Medium |

| Company size | Medium |

| Supply chain type | Good availability |

| Production type | Multi-stage |

| Market competition type | Low |

| Environmental regulations | Defined |

| Demand levels | High volume |

| Tactic Features | Value |

|---|---|

| Transport type | Land |

| Supply chain objective | Economic |

| Production policies | Defined |

| Customer features | - |

| Suppliers features | - |

| Process flow type | Forward |

| Material storage type | Limited |

| Strategic Features | Value |

|---|---|

| Production processing | Sequential |

| Technology | Multitask |

| Material storage | Limited |

| Material resource | Not perishable |

| Processing resources | Limited |

| Scheduling objective | Timing |

| Scheduling mode | On-line |

| Recipe ID | Recipe Type | Element ID | Procedural Element Type | Parameter ID | Parameter Name |

|---|---|---|---|---|---|

| MR-01 | Master | RE-3 | Unit procedure | I15 | Output3_2 |

| MR-01 | Master | RE-14 | Unit procedure | I56 | Output14_1 |

| MR-01 | Master | RE-14 | Unit procedure | I57 | Output14_2 |

| MR-01 | Master | RE-2 | Operation | I61 | CleaningWater_total |

| MR-01 | Master | RE-1 | Unit procedure | I62 | CoolingWater_total |

| MR-01 | Master | RE-1 | Unit procedure | I63 | Electricity_total |

| MR-01 | Master | RE-13 | Unit procedure | I50 | Output13_1 |

| MR-01 | Master | RE-1 | Unit procedure | I1 | Input1_1 |

| MR-01 | Master | RE-1 | Unit procedure | I2 | Input1_2 |

| MR-01 | Master | RE-1 | Unit procedure | I3 | Input1_3 |

| MR-01 | Master | RE-1 | Unit procedure | I4 | Input1_4 |

| MR-01 | Master | RE-1 | Unit procedure | I5 | Input1_5 |

| MR-01 | Master | RE-7 | Unit procedure | I26 | Output7_2 |

| MR-01 | Master | RE-3 | Unit procedure | I65 | Steam_total |

| Resource Type | Subtype | Resource Name | Procedural Information | Value | Unit |

|---|---|---|---|---|---|

| Material | By-product | ByProduct1 | Output Parameter | 1750 | kg |

| Material | By-product | ByProduct3 | Output Parameter | 1217 | kg |

| Material | By-product | ByProduct4 | Output Parameter | 1734 | kg |

| Material | | CleaningWaterT1 | Process Parameter | 240,000 | kg |

| Energetic | Cooling water | CoolingWaterT1 | Process Parameter | 8,613,983 | kg |

| Energetic | Electricity | ElectricityT1 | Process Parameter | 458,979 | kWh |

| Material | Final product | FinalProduct1 | Output Parameter | 1000 | kg |

| Material | Raw material | RawMaterial1 | Input Parameter | 100 | kg |

| Material | Raw material | RawMaterial2 | Input Parameter | 50 | kg |

| Material | Raw material | RawMaterial3 | Input Parameter | 25 | kg |

| Material | Raw material | RawMaterial4 | Input Parameter | 0 | kg |

| Material | Raw material | RawMaterial5 | Input Parameter | 0 | kg |

| Material | Residue | Residue1 | Output Parameter | 1974 | kg |

| Energetic | Steam | SteamP1 | Process Parameter | 441,323 | kg |

| Number Batches (A/B/C) | Objective Function | Solution Approach | Solution Value | Optimal Solution Value |

|---|---|---|---|---|

| 4/4/4 | Productivity | Mathematical programming | 2170 | 2174 |

| 4/4/4 | Environmental impact | Mathematical programming | 48,200 | 48,200 |

| 4/4/4 | Makespan | Mathematical programming | 48,000 | 48,000 |

| 17/10/13 | Productivity | Hybrid approach | 1301 | 1302 |

| 17/10/13 | Environmental impact | Mathematical programming | 218,814 | 217,236 |

| 17/10/13 | Makespan | Genetic algorithm | 199,507 | 197,686 |

| 20/18/15 | Productivity | Hybrid approach | 1354 | 1356 |
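
One way to read the table above is to compare each solution value with the corresponding optimal solution value. The sketch below computes a relative gap for every row. The gap formula and the names `ROWS` and `relative_gap` are assumptions of this example, not a metric reported by the authors.

```python
# (batches A/B/C, objective, solution value, optimal solution value),
# copied from the table above.
ROWS = [
    ("4/4/4", "Productivity", 2170, 2174),
    ("4/4/4", "Environmental impact", 48200, 48200),
    ("4/4/4", "Makespan", 48000, 48000),
    ("17/10/13", "Productivity", 1301, 1302),
    ("17/10/13", "Environmental impact", 218814, 217236),
    ("17/10/13", "Makespan", 199507, 197686),
    ("20/18/15", "Productivity", 1354, 1356),
]


def relative_gap(value: float, optimum: float) -> float:
    """Absolute difference from the optimum, as a fraction of the optimum."""
    return abs(value - optimum) / abs(optimum)


if __name__ == "__main__":
    for batches, objective, value, optimum in ROWS:
        gap = relative_gap(value, optimum)
        print(f"{batches:>9}  {objective:<21} gap = {gap:.4%}")
```

Taking the absolute value lets the same function serve both maximized objectives (productivity) and minimized ones (environmental impact, makespan).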

| SWOT | Indicator | Related Feature | Metric | UBV | LBV |

|---|---|---|---|---|---|

| DataString | EOP_Class | EOP_Class | EOP_DataProperty | EOP_DataProperty | EOP_DataProperty |

| W | Time delivered hauler | Tardiness finish orders | u/month | 15 | 45 |

| W | Demand unaccomplishment | Unfinished orders | u/month | 5 | 15 |

| Enumeration Set | Enumeration Value | Enumeration String | Description |
|---|---|---|---|
| Boolean | 0 | FALSE | Definition of a Boolean value. |
| | 1 | TRUE | |
| Direction Type | 0 | Invalid | Entry not valid |
| | 1 | Internal | Identifies how a parameter is handled. Internal = only available within the Recipe Element. Defined at creation or created as an intermediate value. |
| | 2 | Input | The Recipe Element receives the Value from an external source. |
| | 3 | Output | The Recipe Element creates the Value and makes it available for external use. |
| | 4 | Input/Output | The Recipe Element and external element exchange the Value, and may change its Value. |
| | 5–99 | Reserved | |
| | 100+ | User defined | |
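
The Direction Type enumeration above maps naturally onto an integer enumeration with two open ranges. The following is a minimal sketch, assuming that the reserved (5–99) and user-defined (100+) ranges apply to Direction Type as the table layout suggests. `DirectionType` and `classify_direction` are names invented for this example.

```python
from enum import IntEnum


class DirectionType(IntEnum):
    """Named Direction Type values from the enumeration table above."""
    INVALID = 0
    INTERNAL = 1
    INPUT = 2
    OUTPUT = 3
    INPUT_OUTPUT = 4


def classify_direction(code: int) -> str:
    """Map an enumeration value to its name or to its range label."""
    if code < 0:
        raise ValueError("enumeration values are non-negative")
    try:
        return DirectionType(code).name
    except ValueError:
        # Not one of the five named values: fall back to the range rows.
        return "RESERVED" if code <= 99 else "USER_DEFINED"


if __name__ == "__main__":
    for code in (0, 2, 4, 50, 100):
        print(code, classify_direction(code))
```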

| Object/Data Property | Range |

|---|---|

| hasParameterSource | Resource |

| hasID_ParameterID | ParameterID |

| parameter_type | constant; variable |

| hasEquationAsReferenceValue | MathematicalElement |

| value | float |

| engineering_units | string |

| description | string |

| scaled | float |
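
The object and data properties listed above can be pictured as the fields of a simple record. The sketch below is an assumption-laden illustration rather than the ontology itself: object properties are reduced to plain strings, the field names are adapted to Python style, and the example pairing of parameter `I62` with resource `CoolingWaterT1` is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_TYPES = ("constant", "variable")  # range of parameter_type


@dataclass
class Parameter:
    """Illustrative record mirroring the property table above."""
    parameter_id: str                 # hasID_ParameterID -> ParameterID
    source: str                       # hasParameterSource -> Resource
    parameter_type: str               # "constant" or "variable"
    value: float
    engineering_units: str
    description: str = ""
    scaled: Optional[float] = None
    reference_equation: Optional[str] = None  # hasEquationAsReferenceValue

    def __post_init__(self) -> None:
        # Enforce the two-valued range given for parameter_type.
        if self.parameter_type not in ALLOWED_TYPES:
            raise ValueError(f"parameter_type must be one of {ALLOWED_TYPES}")


if __name__ == "__main__":
    # Hypothetical instance; the ID/resource pairing is assumed.
    cooling = Parameter(
        parameter_id="I62",
        source="CoolingWaterT1",
        parameter_type="constant",
        value=8613983.0,
        engineering_units="kg",
    )
    print(cooling)
```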

| ID_R1 | 4000 |

| ID_P1 | 13,000 |

| ID_C1 | 4000 |

| ID_P2 | 5000 |

| IN001B | 5000 |

| IN002A | 5000 |

| IN002B | 11,000 |

| ID-FP00A | 36,000 |

| ID_FP00B | 36,000 |

| ID_R001 | 36,000 |

| ID_R002 | 36,000 |

| 1 | ID_R1 | 0.20 | 0.00 | 2.00 | 0.30 | 0.25 |

| 2 | ID_P1 | 0.20 | 0.00 | 0.30 | 0.75 | 0.25 |

| 3 | ID_C1 | 0.50 | 0.30 | 2.50 | 0.00 | 0.75 |

| 4 | ID_P2 | 0.20 | 0.00 | 0.75 | 0.00 | 0.25 |

| 5 | IN001B | 0.50 | 0.00 | 0.75 | 0.00 | 0.50 |

| 6 | IN002A | 0.20 | 0.00 | 0.75 | 0.75 | 0.25 |

| 7 | IN002B | 0.30 | 0.75 | 1.00 | 0.00 | 0.25 |

| 8 | ID-FP00A | 0.20 | 0.00 | 0.75 | 0.00 | 0.25 |

| 9 | ID_FP00B | 0.30 | 0.00 | 0.75 | 0.00 | 0.25 |

| 10 | ID_R001 | 0.20 | 0.00 | 0.75 | 0.00 | 0.50 |

| 11 | ID_R002 | 0.20 | 0.00 | 0.75 | 0.00 | 0.25 |

| 1 | ID_R1 | 0.20 | 0.00 | 3.00 | 0.75 | 0.25 |

| 2 | ID_P1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| 3 | ID_C1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| 4 | ID_P2 | 0.50 | 0.00 | 0.75 | 0.00 | 0.25 |

| 5 | IN001B | 0.20 | 0.00 | 0.75 | 0.00 | 0.50 |

| 6 | IN002A | 0.30 | 0.00 | 0.75 | 0.00 | 0.25 |

| 7 | IN002B | 0.20 | 0.75 | 0.74 | 0.74 | 0.25 |

| 8 | ID-FP00A | 0.20 | 0.00 | 0.74 | 0.00 | 0.25 |

| 9 | ID_FP00B | 0.50 | 0.00 | 0.74 | 0.00 | 0.25 |

| 10 | ID_R001 | 0.20 | 0.00 | 0.74 | 0.00 | 0.50 |

| 11 | ID_R002 | 0.20 | 0.00 | 0.74 | 0.00 | 0.25 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muñoz, E.; Capon-Garcia, E.; Muñoz, E.M.; Puigjaner, L. A Systematic Model for Process Development Activities to Support Process Intelligence. Processes 2021, 9, 600. https://doi.org/10.3390/pr9040600
