A Dual-Norm Support Vector Machine: Integrating L1 and L∞ Slack Penalties for Robust and Sparse Classification

Abstract

1. Introduction

2. Preliminaries

2.1. Support Vector Machine (SVM)

2.2. Least Squares Support Vector Machine (LSSVM)

- (1) Lack of sparsity: Unlike classical SVMs, where only a subset of the training samples (the support vectors) contributes to the decision function, LSSVM typically yields dense solutions because all training samples are involved in the final model. This not only increases storage and evaluation cost but also reduces model interpretability.

- (2) Sensitivity to outliers: The squared error loss on the slack variables penalizes large deviations disproportionately, making the model less robust to noisy data or outliers.
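The density issue in item (1) can be illustrated with a minimal sketch. It assumes the standard LSSVM classifier linear system of Suykens and Vandewalle with a linear kernel; the toy data, the regularization value `gamma`, and the function name `lssvm_fit` are illustrative assumptions and are not taken from this paper.

```python
import numpy as np

def lssvm_fit(X, y, gamma=1.0):
    """Solve the LSSVM classifier linear system (linear kernel, assumed form).

    [[0, y^T], [y, Omega + I/gamma]] [b; alpha] = [0; 1],
    with Omega_ij = y_i * y_j * <x_i, x_j>.
    """
    n = X.shape[0]
    omega = (y[:, None] * y[None, :]) * (X @ X.T)
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = y
    A[1:, 0] = y
    A[1:, 1:] = omega + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], np.ones(n)))
    solution = np.linalg.solve(A, rhs)
    return solution[0], solution[1:]  # bias b, dual coefficients alpha

# Toy two-class data (hypothetical, for illustration only).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, (20, 2)), rng.normal(2, 1, (20, 2))])
y = np.concatenate([-np.ones(20), np.ones(20)])

b, alpha = lssvm_fit(X, y)
nonzero = int(np.sum(np.abs(alpha) > 1e-8))
print(f"{nonzero} of {len(alpha)} coefficients are non-zero")
```

Because each coefficient is proportional to the residual of its sample, essentially every training sample ends up with a non-zero coefficient, which is the dense behavior described above.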

2.3. Motivation for Dual-Norm Regularization

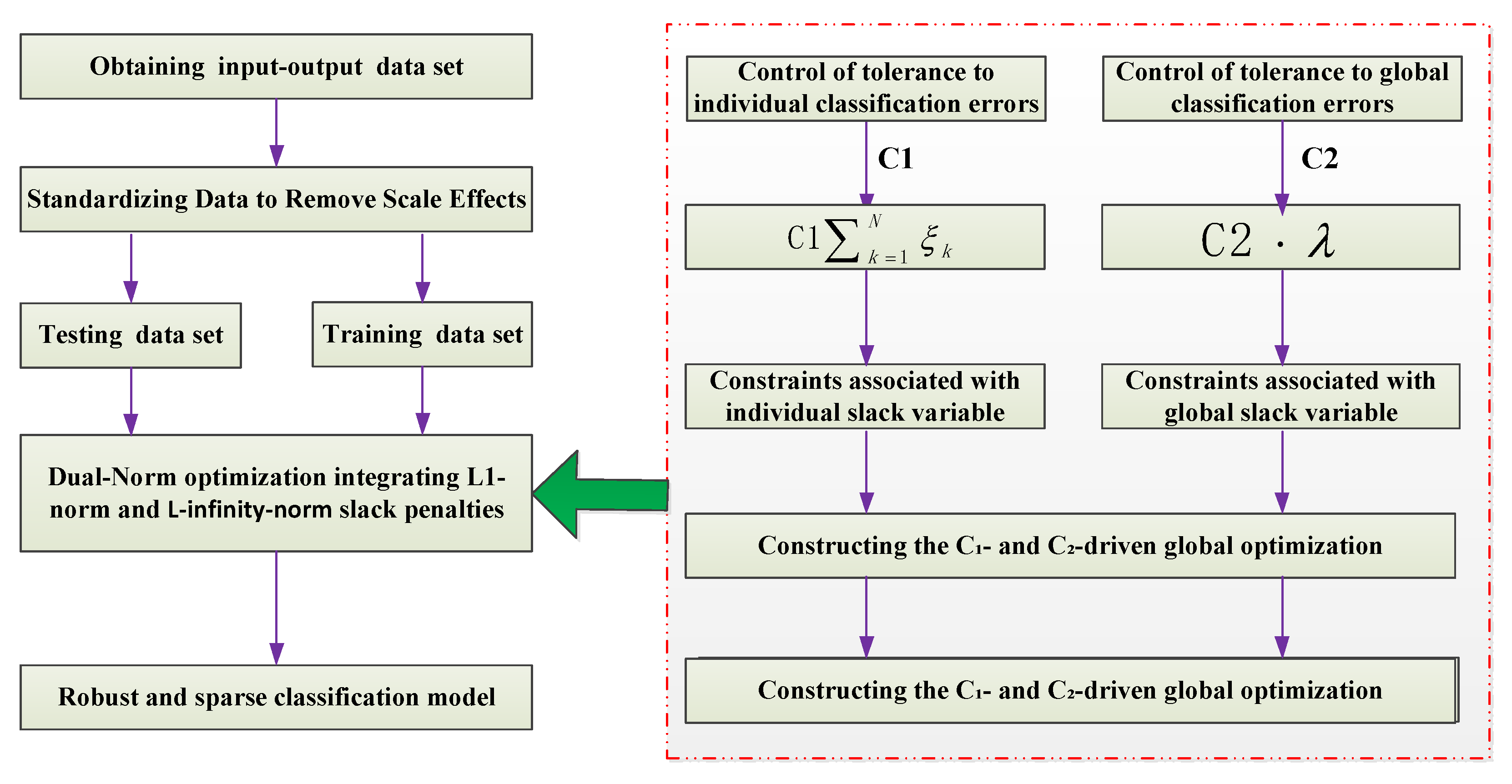

3. Dual-Norm Regularization for Support Vector Classification

3.1. Construction of Support Vector Classification Model Under Dual-Norm Regularization

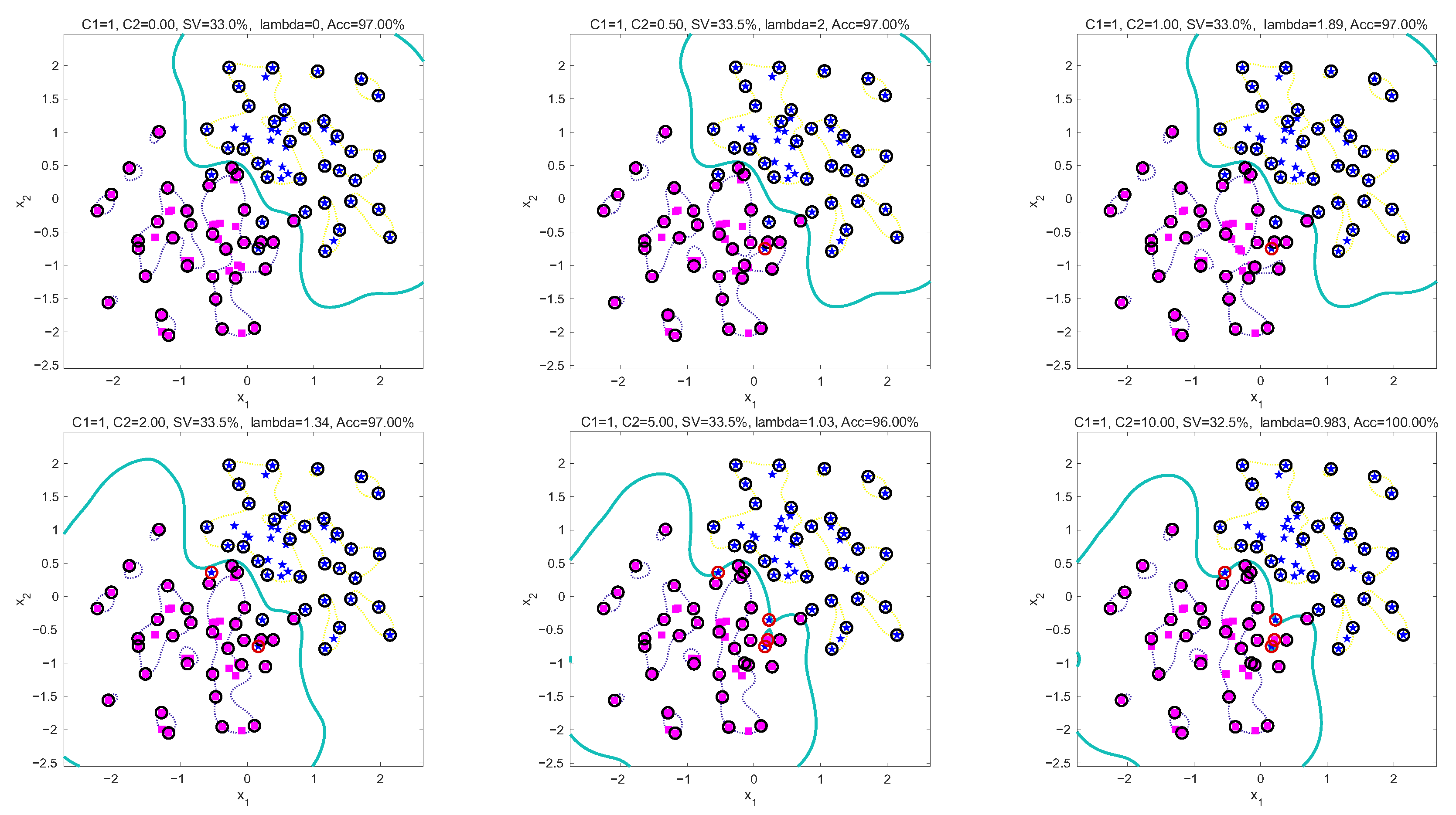

3.2. Hyperparameter Roles, Interaction, and Selection Strategy

- (1) The first penalty term acts on the individual (local) slack variables, thereby controlling sample-level accuracy. A larger local penalty weight enforces stricter adherence to the training samples, which may reduce misclassification but increases the risk of overfitting.

- (2) The second penalty term acts on the global slack variable, thereby regulating global sparsity and robustness. Increasing the global penalty weight enforces tighter margin consistency across all samples, resulting in sparser solutions that are less sensitive to noise.

Thus, the local penalty weight emphasizes local accuracy, whereas the global penalty weight emphasizes global structure and robustness. The performance of the optimization model depends on the relative magnitudes of the two weights:

- (1) When the local penalty weight dominates, the model behaves similarly to a standard SVM, focusing primarily on minimizing sample-level errors.

- (2) When the global penalty weight dominates, the optimization emphasizes global sparsity, producing more compact models that are robust to outliers, albeit at the potential cost of reduced accuracy.

- (3) A balanced configuration achieves a trade-off between classification accuracy and sparsity, which is the central motivation for introducing both hyperparameters.
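To make the interplay of the two weights concrete, the following is a minimal sketch under stated assumptions. It evaluates a hinge-type objective in which a local weight multiplies the sum of per-sample slacks (an L1 penalty) and a global weight multiplies the largest slack (an L∞ penalty). The names `c_local`, `c_global`, and `dual_norm_objective`, as well as this exact functional form, are assumptions for illustration and may differ from the model constructed in Section 3.1.

```python
import numpy as np

def dual_norm_objective(w, b, X, y, c_local, c_global):
    """Assumed objective: 0.5*||w||^2 + c_local*sum(xi) + c_global*max(xi),
    where xi_i = max(0, 1 - y_i*(w.x_i + b)) is the hinge slack of sample i."""
    slack = np.maximum(0.0, 1.0 - y * (X @ w + b))
    return 0.5 * (w @ w) + c_local * slack.sum() + c_global * slack.max()

# Hypothetical toy data; the last sample sits on the wrong side (an outlier).
X = np.array([[2.0, 0.0], [0.5, 0.0], [-2.0, 0.0], [1.0, 0.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
w, b = np.array([1.0, 0.0]), 0.0

# Slacks are [0, 0.5, 0, 2]: their sum is 2.5 and their maximum is 2.0.
local_only = dual_norm_objective(w, b, X, y, c_local=1.0, c_global=0.0)
global_only = dual_norm_objective(w, b, X, y, c_local=0.0, c_global=1.0)
print(local_only, global_only)
```

With only the local weight active, every violating sample adds to the cost; with only the global weight active, the cost is driven by the single worst violator.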

- (1) Hyperparameter Search Strategy: The two hyperparameters are optimized using a two-stage grid search procedure consisting of coarse tuning and fine tuning. In the coarse tuning stage, both hyperparameters are explored over a wide logarithmic grid. This stage aims to roughly identify the region in which the optimal hyperparameters lie. The fine-tuning stage then refines the search within a narrower range centered on the best pair found during coarse tuning, using smaller incremental steps in the exponent of the logarithmic scale. This hierarchical strategy keeps the search computationally efficient while maintaining a high likelihood of locating the optimal hyperparameters.

- (2) Cross-Validation: A grid search with 5-fold cross-validation is applied to jointly optimize the two hyperparameters.

- (3) Selection Criterion: The optimal pair is determined by a composite evaluation index that accounts for the proportion of support vectors (SV_Ratio). Unless otherwise specified, the weighting in this index is set to balance accuracy and sparsity.
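The coarse-to-fine procedure above can be sketched as follows. This is an illustration under assumptions rather than the exact procedure used in the experiments: the grid bounds, the step sizes, the form of `composite_score` (a weighted sum of accuracy and one minus the support-vector ratio), and the `evaluate` callback (assumed to return the 5-fold cross-validated accuracy and the support-vector ratio for a given pair) are all hypothetical.

```python
import numpy as np

def log_grid(low_exp, high_exp, step):
    """Return 10**e for exponents e = low_exp, low_exp + step, ..., high_exp."""
    n = int(round((high_exp - low_exp) / step)) + 1
    return 10.0 ** np.linspace(low_exp, high_exp, n)

def composite_score(accuracy, sv_ratio, weight=0.5):
    """Assumed index: reward accuracy and reward sparsity (1 - SV ratio)."""
    return weight * accuracy + (1.0 - weight) * (1.0 - sv_ratio)

def two_stage_search(evaluate, coarse_exps=(-3.0, 3.0), half_width=1.0, fine_step=0.25):
    """evaluate(c_local, c_global) -> (cv_accuracy, sv_ratio); hypothetical callback."""
    def best_on(grid_a, grid_b):
        scored = [(composite_score(*evaluate(a, b)), a, b)
                  for a in grid_a for b in grid_b]
        return max(scored)

    # Stage 1: coarse grid with unit steps in the exponent.
    coarse = log_grid(coarse_exps[0], coarse_exps[1], 1.0)
    _, a0, b0 = best_on(coarse, coarse)

    # Stage 2: finer grid centered on the coarse optimum.
    fine_a = log_grid(np.log10(a0) - half_width, np.log10(a0) + half_width, fine_step)
    fine_b = log_grid(np.log10(b0) - half_width, np.log10(b0) + half_width, fine_step)
    return best_on(fine_a, fine_b)

def toy_evaluate(c_local, c_global):
    """Synthetic stand-in for cross-validation, peaked at exponents 0.75 and -0.25."""
    accuracy = 1.0 - 0.05 * (np.log10(c_local) - 0.75) ** 2
    sv_ratio = min(1.0, 0.5 + 0.1 * abs(np.log10(c_global) + 0.25))
    return accuracy, sv_ratio

score, c_local, c_global = two_stage_search(toy_evaluate)
print(f"best score {score:.4f} at c_local={c_local:.4g}, c_global={c_global:.4g}")
```

In practice, `toy_evaluate` would be replaced by a routine that trains the classifier on each fold and averages the validation results; the coarse stage here costs 49 evaluations and the fine stage 81, instead of a single dense grid over the full range.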

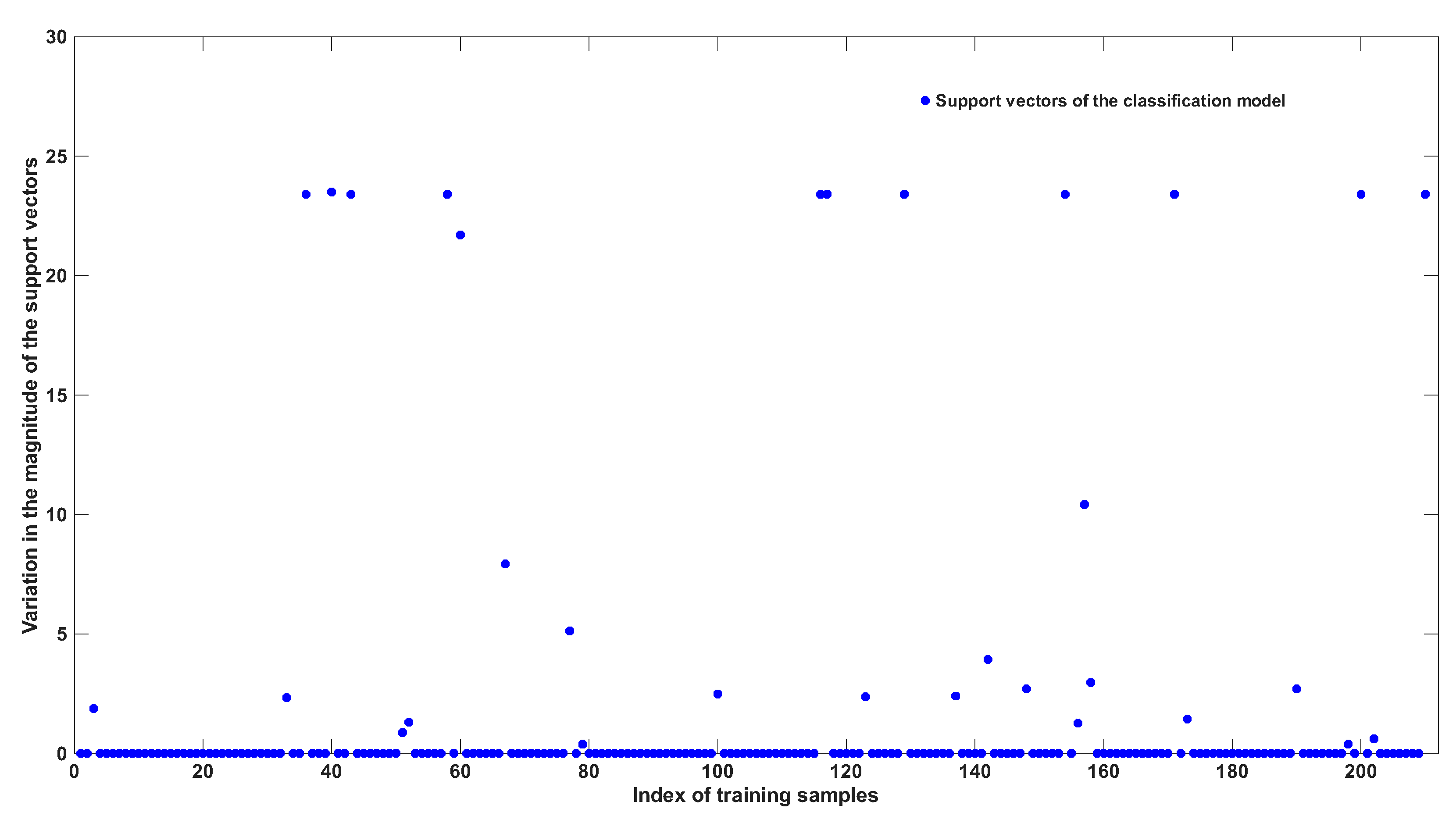

3.3. Sparsity Analysis Under Dual-Norm Regularization

- (1) The primal problem remains a convex quadratic program (QP) with d + N + 2 variables: d weights (d: feature dimension), N local slack variables (N: number of samples), one bias term, and one additional global slack variable.

- (2) The dual problem introduces an additional dual variable corresponding to the global slack variable, but the kernelized QP structure remains intact.

- (3) The complexity of solving the dual remains O(N^3) in the worst case for standard QP solvers, similar to standard SVM, because the Hessian structure is still dominated by the kernel matrix.

- (4) The only overhead is one additional constraint and one additional variable, which is negligible compared to the N slack variables already present in classical SVM.

- (1) Sparse solutions induced by the L1-norm slack variables. The L1-norm penalty on the local slack variables contributes to sparsity through the stationarity relationship (derived from the Lagrangian derivatives) together with complementary slackness. Three cases arise:

  - Case 1: The local slack variable is zero (by complementary slackness), and the first constraint reduces to the requirement that the sample lies on or within the correct side of the margin. Because the associated dual variable is zero, the sample does not contribute to the model parameter; it is a non-support vector, which enhances sparsity.

  - Case 2: The local slack variable is again zero (by complementary slackness), but the first constraint is active, so the sample is a support vector lying exactly on the margin. Although its dual variable is non-zero, such samples are sparse in practice because only margin-boundary samples satisfy this condition.

  - Case 3: The local slack variable is allowed to be positive. The first constraint may be inactive, meaning that the sample is misclassified or lies outside the margin. However, the associated dual variable is bounded, and such samples are rare in well-regularized models, which prevents dense solutions.

- (2) Sparse solutions induced by the L∞-norm slack variable. The L∞-norm penalty on the global slack variable contributes to sparsity through its stationarity condition together with complementary slackness. Two cases arise:

  - Case 1: The second constraint may be inactive or active, but the dual variable of the sample for that constraint is zero, so the sample does not contribute to the worst-case margin violation. Such samples do not affect the model parameter, which reinforces sparsity.

  - Case 2: The dual variable of the sample for the second constraint is non-zero, so the sample is associated with the maximum slack variable, i.e., it is a worst-case violator. However, the constraint on these dual variables limits the number of such samples: only a few worst-case violators can have a non-zero dual variable, which ensures sparsity.

- (3) Joint sparsity from the fusion of the L1-norm and the L∞-norm. The combination of the two penalties amplifies sparsity through two mechanisms. Mutual exclusion of non-support vectors: samples for which both dual variables are zero are completely excluded from the model parameter, as they contribute nothing to the model; these samples form the majority in sparse solutions. Bounded dual variables: the bound constraints on both sets of dual variables prevent excessive non-zero dual variables, ensuring that only critical samples (margin-boundary samples or worst-case violators) retain a non-zero dual variable.

- (1) Local sparsity: arising from the selective activation of the local dual variables for margin-violating samples or support vectors, regulated by the L1-norm.

- (2) Extreme-point sparsity: due to the activation of only the most significant margin violator(s) through the global dual variables, regulated by the L∞-like component.
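The case analysis in this subsection can be sketched as a small routine that partitions samples by their dual variables. This is an illustration under assumptions: the names `alpha` (dual variables of the margin constraints), `beta` (dual variables tied to the global slack), the upper bound `c_local`, and the tolerance `tol` are hypothetical, since the paper's exact notation is not reproduced here.

```python
import numpy as np

def categorize_samples(alpha, beta, c_local, tol=1e-8):
    """Label each sample from its (assumed) dual variables.

    non-support : alpha == 0 and beta == 0 (excluded from the model)
    margin      : 0 < alpha < c_local      (lies on the margin)
    bounded     : alpha == c_local         (margin violator)
    worst-case  : beta > 0                 (tied to the largest slack)
    """
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    labels = np.full(alpha.shape, "non-support", dtype=object)
    labels[(alpha > tol) & (alpha < c_local - tol)] = "margin"
    labels[alpha >= c_local - tol] = "bounded"
    labels[beta > tol] = "worst-case"  # takes precedence over the alpha-based labels
    return labels

# Hypothetical dual solution for five samples.
alpha = [0.0, 0.3, 1.0, 0.0, 0.0]
beta = [0.0, 0.0, 0.0, 0.7, 0.0]
labels = categorize_samples(alpha, beta, c_local=1.0)
sparsity = float(np.mean(labels == "non-support"))
print(list(labels), sparsity)
```

Only samples labeled `non-support` are dropped from the decision function, so the fraction of such samples is the quantity that the joint L1 and L∞ mechanism is meant to increase.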

3.4. Discussion

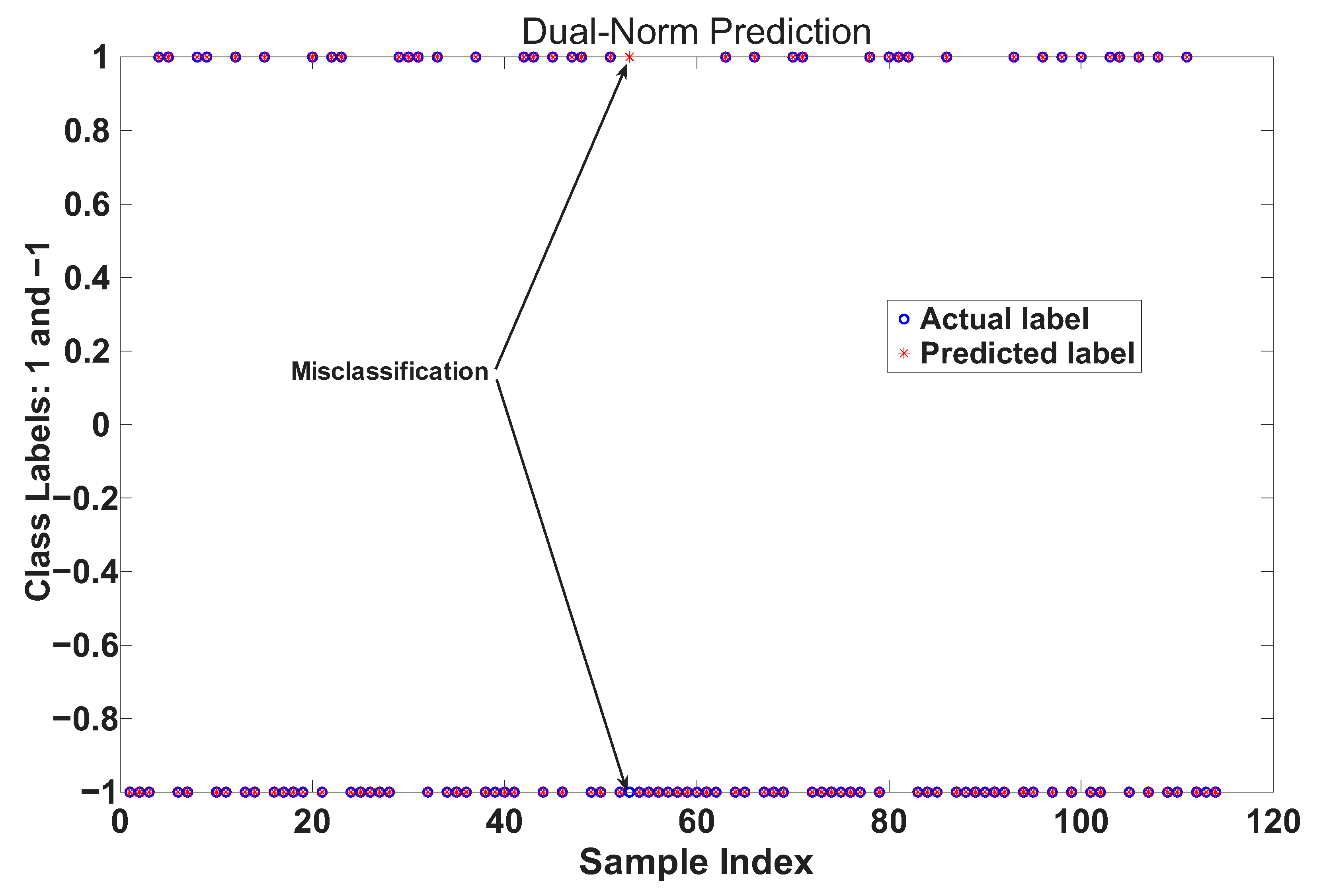

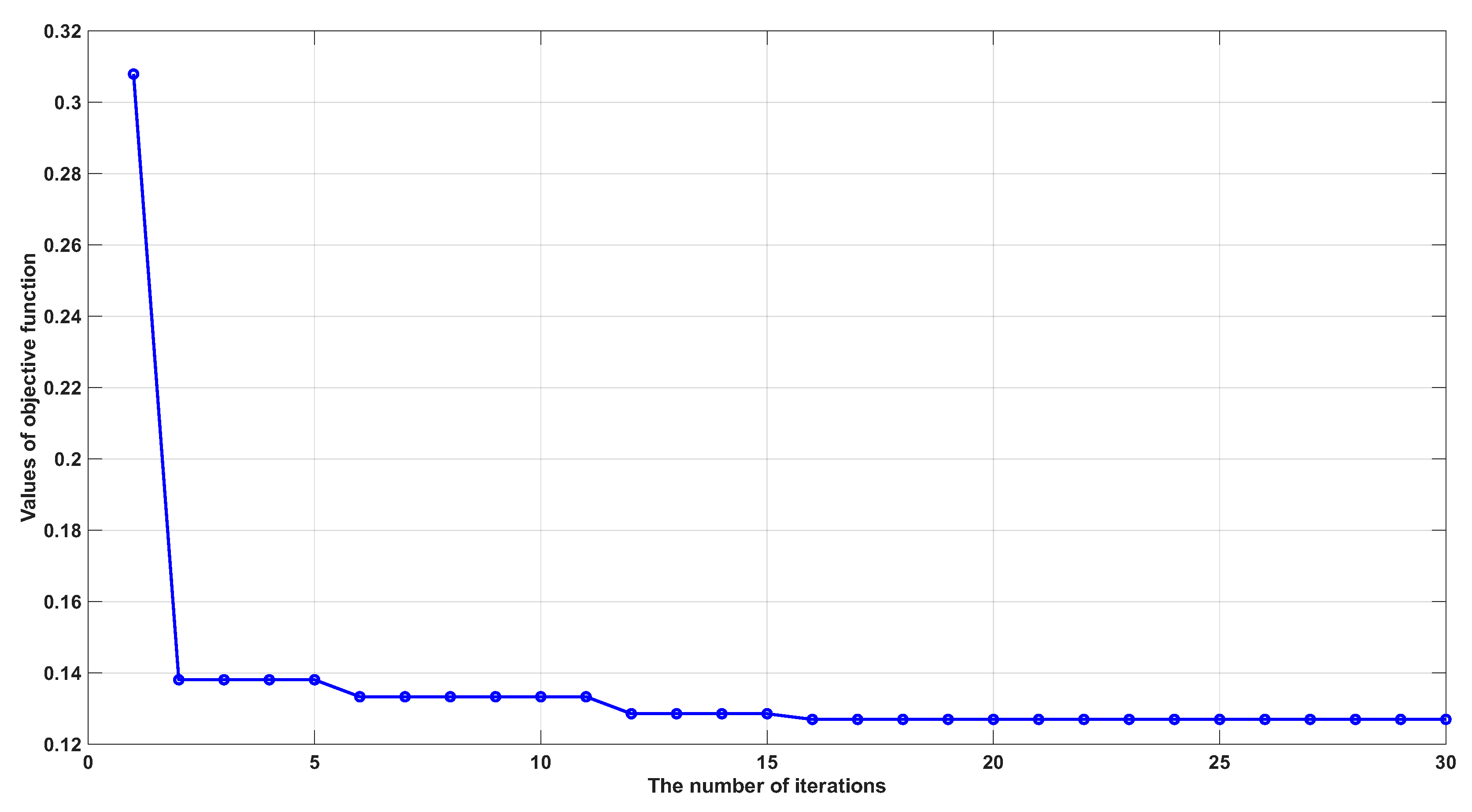

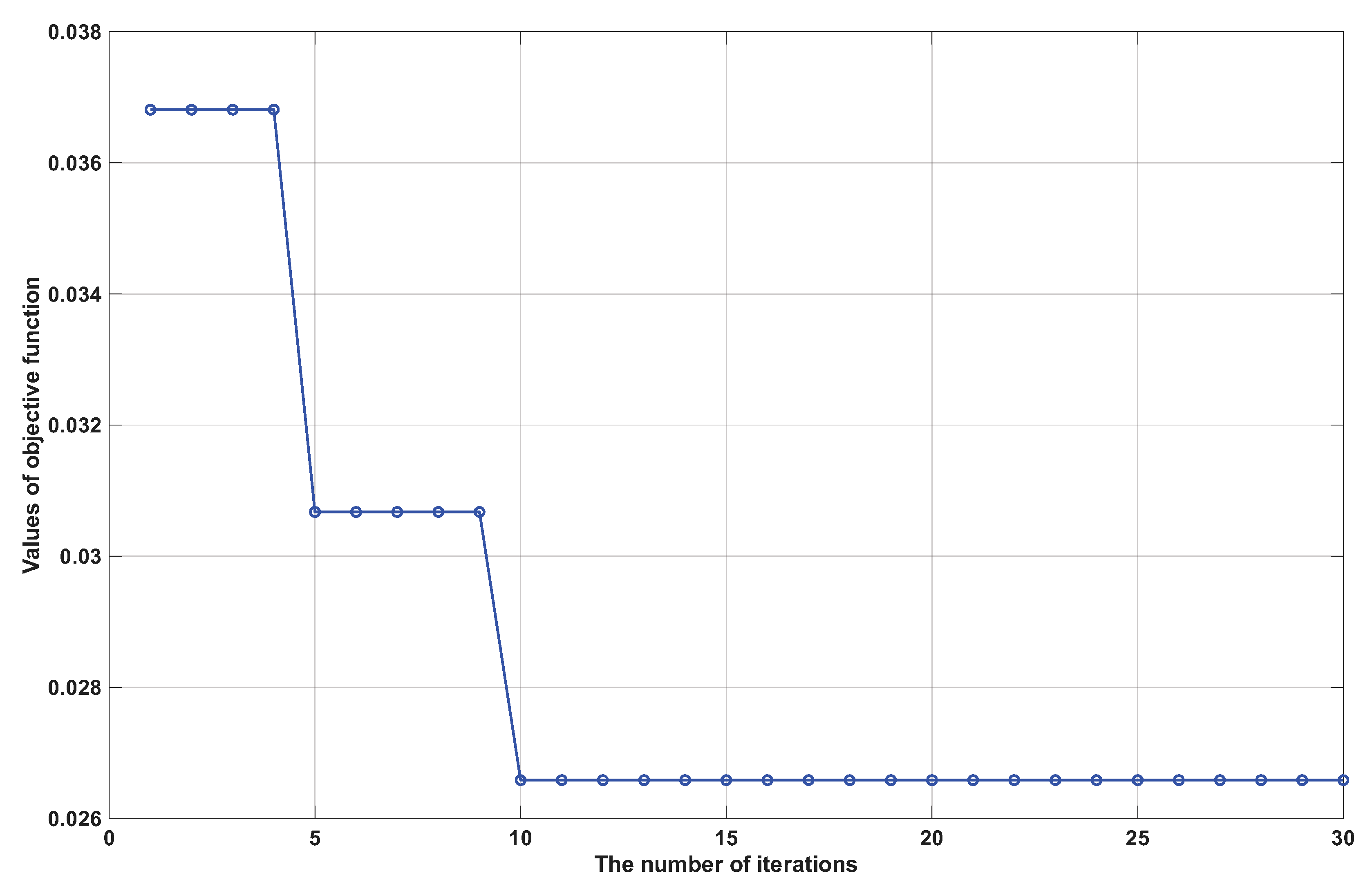

4. Experimental Studies

- Training Accuracy: The classification accuracy achieved on the training set, reflecting the model’s capacity to fit the training data.

- Testing Accuracy: The accuracy measured on the testing set, indicating the model’s generalization ability to unseen data.

- Model Sparsity: Defined as the proportion of zero-valued coefficients in the model's weight representation, i.e., the count of non-support vectors relative to the total number of training samples. This quantity has a fundamental influence on the model's structural composition. In the optimization of classification models, a training sample is deemed a non-support vector if the absolute value of its model coefficient is below a predefined threshold. Specifically, for the dual-norm model, sparsity is computed as the ratio of zero-valued coefficients to the total number of training samples. In standard SVM, sparsity is evaluated by the proportion of non-support vectors. A higher sparsity value corresponds to a more compact model, which is favorable for interpretability and computational efficiency. Hyperparameters are selected using cross-validation on the training set to ensure a fair comparison.
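As an illustration of the sparsity metric, the sketch below counts the coefficients whose magnitude falls below a threshold. The function name `zero_sparsity` and the default threshold `1e-6` are assumptions made for this example; the threshold used in the experiments is not restated here.

```python
import numpy as np

def zero_sparsity(coefficients, threshold=1e-6):
    """Fraction of training samples whose coefficient is numerically zero."""
    coefficients = np.asarray(coefficients, dtype=float)
    return float(np.mean(np.abs(coefficients) < threshold))

# Hypothetical coefficient vector for eight training samples.
coeffs = np.array([0.0, 1e-9, 0.42, -0.8, 0.0, 3e-7, 1.3, 0.0])
print(f"sparsity = {100 * zero_sparsity(coeffs):.2f}%")
```

The same function applies to any of the compared models once their per-sample coefficients are available, which keeps the sparsity comparison on a common footing.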

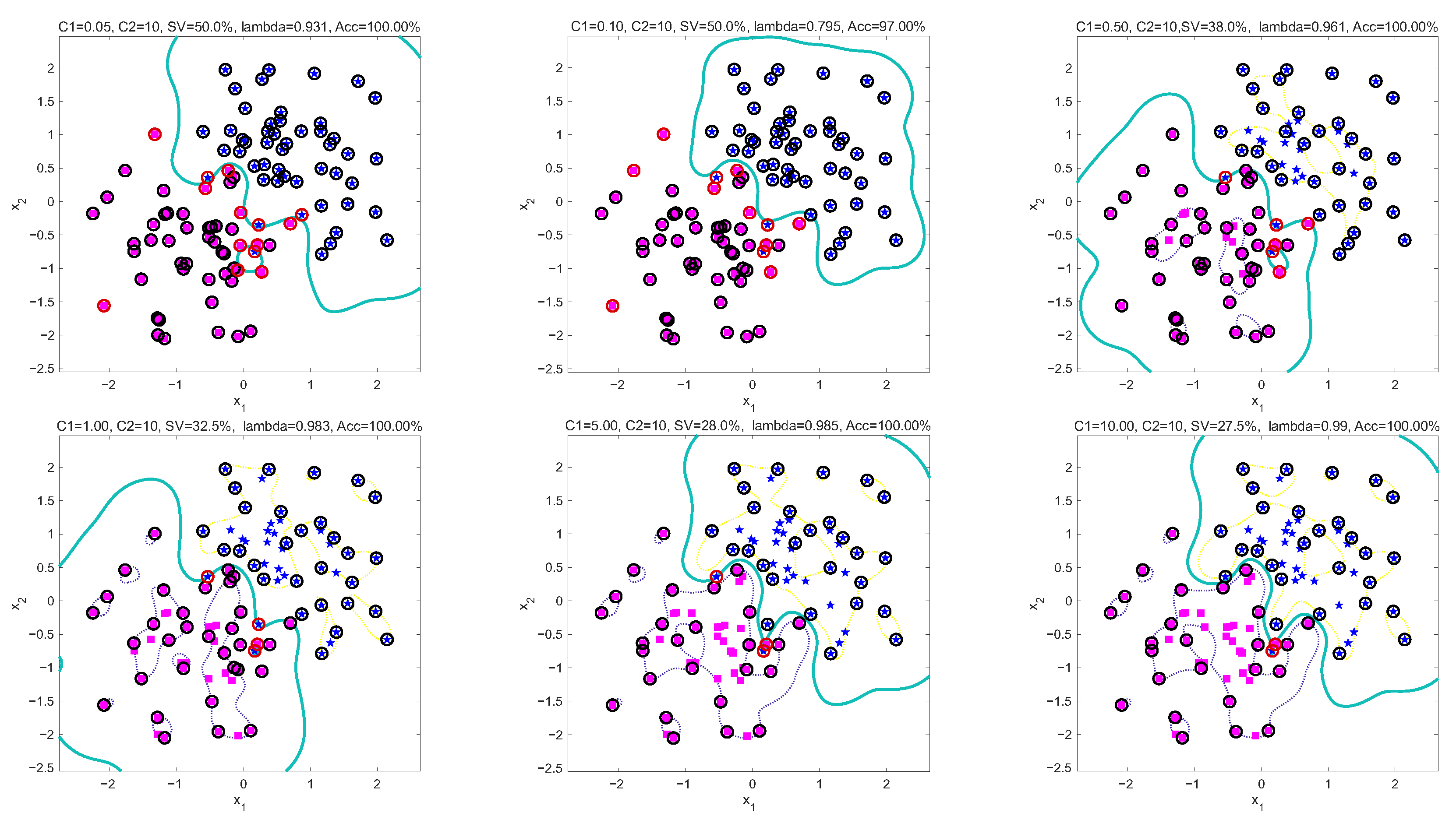

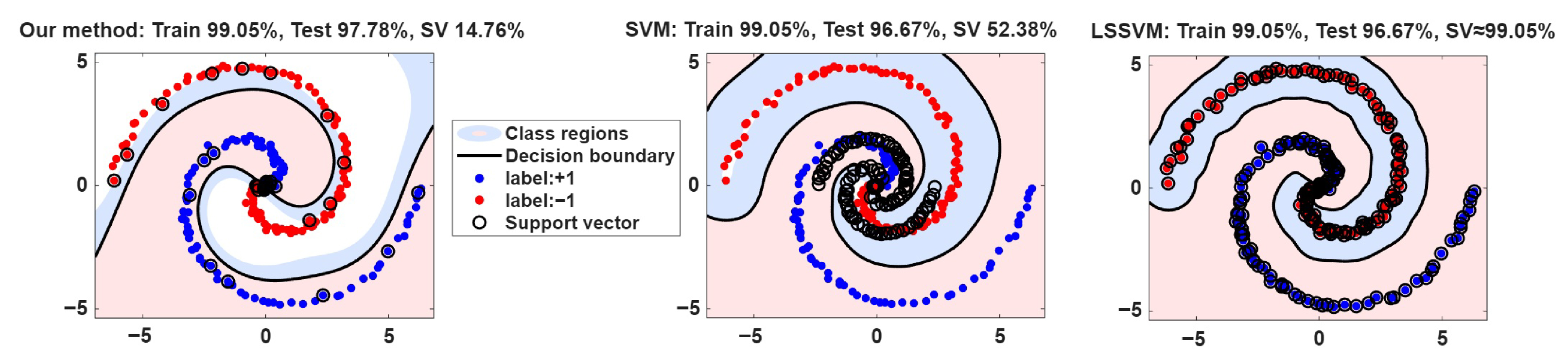

4.1. Spiral Dataset

4.2. UCI Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Term | Parameter | Function | Effect on Model | Problem Addressed |
|---|---|---|---|---|
| L1 slack penalty | Local slack variables | Allows individual samples to violate the margin | Controls classification accuracy vs. robustness: a larger penalty weight reduces misclassification but increases the number of support vectors | Handles local noise and outliers, stabilizes the decision boundary |
| L∞ slack penalty | Global slack variable | Penalizes the global relaxation | Controls sparsity: a larger penalty weight suppresses non-critical samples and reduces the number of support vectors | Enhances sparsity, reduces model complexity, ensures that the decision boundary is determined by key samples |

|  |  | SV_Ratio |  |  | Training Accuracy | Test Accuracy |
|---|---|---|---|---|---|---|
| 0.1 | 0.0 | 100.00% | 0.0 | 0.00 | 96.0% | 95.00% |
| 0.1 | 0.5 | 100.00% | 0.5 | 1.044 | 92.00% | 92.00% |
| 0.1 | 1.0 | 100.00% | 1.0 | 1.010 | 91.00% | 93.00% |
| 0.1 | 2.0 | 100.00% | 2.0 | 1.003 | 90.00% | 92.00% |
| 0.1 | 5.0 | 100.00% | 5.0 | 0.978 | 100.00% | 92.00% |
| 0.1 | 10.0 | 100.00% | 10.0 | 0.795 | 97.00% | 93.00% |
| 0.5 | 0.0 | 76.00% | 0.0 | 0.0 | 97.00% | 95.00% |
| 0.5 | 0.5 | 74.00% | 0.5 | 1.718 | 96.00% | 95.00% |
| 0.5 | 1.0 | 74.00% | 1.0 | 1.411 | 96.00% | 95.00% |
| 0.5 | 2.0 | 73.00% | 2.0 | 1.050 | 94.00% | 98.00% |
| 0.5 | 5.0 | 77.00% | 5.0 | 1.015 | 95.00% | 97.00% |
| 0.5 | 10.0 | 76.00% | 10.0 | 0.961 | 100.00% | 96.00% |
| 1.0 | 0.0 | 66.00% | 0.0 | 0.0 | 97.00% | 95.00% |
| 1.0 | 0.5 | 67.00% | 0.5 | 2.003 | 97.00% | 95.00% |
| 1.0 | 1.0 | 66.00% | 1.0 | 1.886 | 97.00% | 95.00% |
| 1.0 | 2.0 | 67.00% | 2.0 | 1.342 | 97.00% | 95.00% |
| 1.0 | 5.0 | 67.00% | 5.0 | 1.029 | 96.00% | 98.00% |
| 1.0 | 10.0 | 65.00% | 10.0 | 0.983 | 100.00% | 97.00% |
| 5.0 | 0.0 | 60.00% | 0.0 | 0.0 | 98.00% | 95.00% |
| 5.0 | 0.5 | 60.00% | 0.5 | 1.712 | 98.00% | 95.00% |
| 5.0 | 1.0 | 60.00% | 1.0 | 1.644 | 98.00% | 95.00% |
| 5.0 | 2.0 | 59.00% | 2.0 | 1.506 | 98.00% | 95.00% |
| 5.0 | 5.0 | 57.00% | 5.0 | 1.104 | 98.00% | 96.00% |
| 5.0 | 10.0 | 56.00% | 10.0 | 0.985 | 100.00% | 96.00% |
| 10.0 | 0.0 | 56.00% | 0.0 | 0.0 | 99.00% | 97.00% |
| 10.0 | 0.5 | 57.00% | 0.5 | 1.499 | 99.00% | 97.00% |
| 10.0 | 1.0 | 57.00% | 1.0 | 1.435 | 99.00% | 98.00% |
| 10.0 | 2.0 | 57.00% | 2.0 | 1.306 | 99.00% | 98.00% |
| 10.0 | 5.0 | 56.00% | 5.0 | 1.017 | 98.00% | 98.00% |
| 10.0 | 10.0 | 55.00% | 10.0 | 0.990 | 100.00% | 98.00% |

| Method | Training Accuracy (%) | Testing Accuracy (%) | Zero Sparsity (%) |
|---|---|---|---|
| SVM | 99.52 | 100.00 | 22.38 |
| LSSVM | 99.52 | 100.00 | 0.00 |
| Dual-Norm | 99.52 | 100.00 | 84.76 |

| Method | Training Accuracy (%) | Testing Accuracy (%) | Zero Sparsity (%) |
|---|---|---|---|
| SVM | 99.05 | 97.78 | 47.62 |
| LSSVM | 99.05 | 96.67 | 0.00 |
| Dual-Norm | 99.05 | 97.78 | 85.24 |

| Method | Training Accuracy | Test Accuracy | Zero Sparsity |
|---|---|---|---|
| LSSVM | 99.56% | 99.12% | 0.00% |
| SVM | 98.46% | 98.25% | 80.66% |
| Dual-Norm | 98.02% | 99.12% | 90.55% |

| Dataset | Method | Training Accuracy | Test Accuracy | Non-Zero Sparsity |
|---|---|---|---|---|
| Balance | LSSVM | 91.78% (402/438) | 91.44% (314/483) | 100.00% (438/438) |
| | SVM | 100.00% (483/483) | 99.47% (186/187) | 20.40% (89/438) |
| | Proposed | 100.00% (438/438) | 100.00% (187/187) | 20.40% (89/438) |
| Fisheriris | LSSVM | 98.10% (104/105) | 93.33% (42/45) | 100% (100/100) |
| | SVM | 99.05% (104/105) | 95.56% (43/45) | 32.38% (34/105) |
| | Proposed | 99.05% (104/105) | 95.56% (43/45) | 28.57% (30/105) |
| Australian | LSSVM | 87.37% (422/483) | 90.34% (187/207) | 99.59% (481/483) |
| | SVM | 86.34% (417/483) | 89.86% (186/207) | 65.01% (314/483) |
| | Proposed | 86.54% (418/483) | 89.86% (186/207) | 64.18% (310/483) |
| ILPD | LSSVM | 100.00% (409/409) | 71.84% (125/174) | 100.00% (409/409) |
| | SVM | 100.00% (409/409) | 71.84% (125/174) | 65.01% (404/409) |
| | Proposed | 90.22% (369/409) | 73.00% (127/174) | 64.18% (394/409) |
| Hepatitis | LSSVM | 89.91% (98/109) | 80.44% (37/46) | 100.00% (109/109) |
| | SVM | 89.91% (98/109) | 80.44% (37/46) | 41.28% (45/109) |
| | Proposed | 96.33% (105/109) | 84.78% (39/46) | 56.88% (62/109) |
| Ionosphere | LSSVM | 80.92% (424/524) | 76.79% (172/224) | 100.00% (524/524) |
| | SVM | 81.30% (426/524) | 73.66% (165/224) | 70.42% (369/524) |
| | Proposed | 94.72% (233/246) | 77.68% (174/224) | 60.69% (318/524) |
| Transfusion | LSSVM | 100.00% (246/246) | 96.19% (101/105) | 100.00% (246/246) |
| | SVM | 96.34% (237/246) | 91.43% (96/105) | 72.36% (178/246) |
| | Proposed | 84.35% (442/524) | 94.29% (99/105) | 32.93% (81/246) |
| Wholesale | LSSVM | 72.08% (222/308) | 71.21% (94/132) | 100.00% (308/308) |
| | SVM | 100.00% (308/308) | 71.32% (94/132) | 66.88% (206/308) |
| | Proposed | 72.08% (222/308) | 71.97% (95/132) | 73.05% (222/308) |
| Cancer | LSSVM | 97.14% (475/489) | 96.19% (202/210) | 88.98% (440/489) |
| | SVM | 97.14% (475/489) | 96.19% (202/210) | 19.22% (94/489) |
| | Proposed | 97.34% (476/489) | 97.14% (204/210) | 10.63% (52/489) |
| Banknote | LSSVM | 100.00% (960/960) | 100.00% (412/412) | 100.00% (960/960) |
| | SVM | 99.90% (959/960) | 99.51% (410/412) | 100.00% (960/960) |
| | Proposed | 100.00% (960/960) | 100.00% (412/412) | 36.15% (347/960) |
| Haberman | LSSVM | 76.64% (164/214) | 70.65% (65/92) | 100.00% (214/214) |
| | SVM | 98.13% (210/214) | 66.30% (61/92) | 94.86% (203/214) |
| | Proposed | 77.10% (165/214) | 69.57% (64/92) | 88.32% (189/214) |
| Raisin | LSSVM | 87.14% (549/630) | 87.78% (237/270) | 100.00% (630/630) |
| | SVM | 86.67% (546/630) | 88.52% (239/270) | 50.79% (320/630) |
| | Proposed | 77.10% (165/214) | 90.00% (243/270) | 48.41% (305/630) |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Liu, Q.; Liu, S.; Yan, G.; Zhang, F.; Zeng, C.; Yang, X. A Dual-Norm Support Vector Machine: Integrating L1 and L∞ Slack Penalties for Robust and Sparse Classification. Processes 2025, 13, 2858. https://doi.org/10.3390/pr13092858
