Research on a Lightweight Textile Defect Detection Algorithm Based on WSF-RTDETR

Abstract

1. Introduction

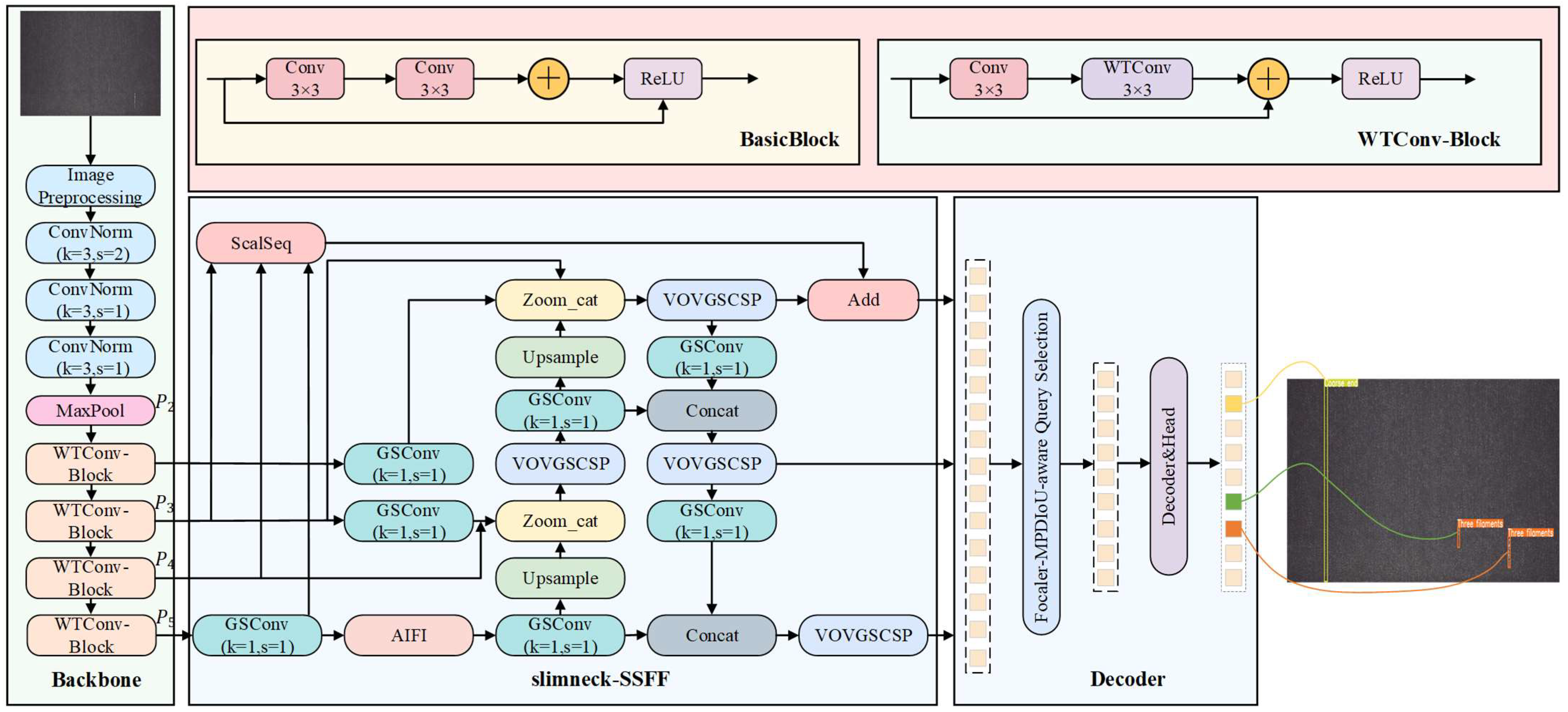

- WTConv convolution is combined with residual blocks to form a lightweight WTConv-Block module, which improves feature extraction capability while keeping computational complexity low;

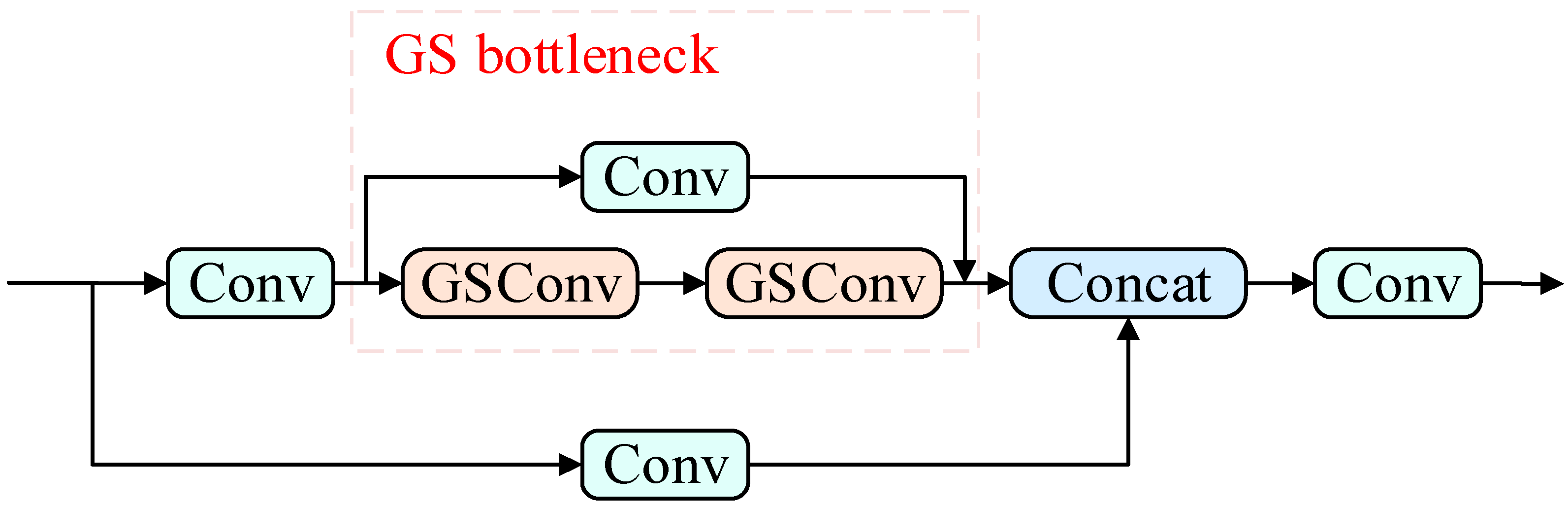

- A lightweight slimneck-SSFF architecture is constructed by integrating Scale Sequence Feature Fusion (SSFF), GSConv, and VoVGSCSP, which improves the detection efficiency for tiny defects while reducing computational cost;

- By incorporating dynamic weight adjustment and a multi-scale perception mechanism, the Focaler-MPDIoU loss function is proposed to balance the regression errors of targets at different scales, thereby improving detection accuracy.
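
The idea behind the Focaler-MPDIoU loss in the last contribution can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes the published MPDIoU definition (IoU minus the squared top-left and bottom-right corner distances, normalized by the squared input image size) and the published Focaler-IoU linear remapping of IoU onto an interval `[d, u]`, combined as `L_MPDIoU + IoU - IoU_focaler`. The function names and the default values `d=0.0` and `u=0.95` are assumptions chosen for illustration.

```python
def iou(box_a, box_b):
    """Plain IoU for axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mpdiou(pred, target, img_w, img_h):
    """MPDIoU: IoU penalized by normalized squared corner distances."""
    norm = img_w ** 2 + img_h ** 2
    d1 = (pred[0] - target[0]) ** 2 + (pred[1] - target[1]) ** 2  # top-left corners
    d2 = (pred[2] - target[2]) ** 2 + (pred[3] - target[3]) ** 2  # bottom-right corners
    return iou(pred, target) - d1 / norm - d2 / norm


def focaler_iou(iou_value, d=0.0, u=0.95):
    """Focaler-IoU: linearly remap IoU from [d, u] to [0, 1], clamped at both ends."""
    return min(1.0, max(0.0, (iou_value - d) / (u - d)))


def focaler_mpdiou_loss(pred, target, img_w, img_h, d=0.0, u=0.95):
    """Assumed combination: L_MPDIoU + IoU - IoU_focaler."""
    plain = iou(pred, target)
    mpdiou_loss = 1.0 - mpdiou(pred, target, img_w, img_h)
    return mpdiou_loss + plain - focaler_iou(plain, d, u)


if __name__ == "__main__":
    # A slightly shifted prediction on a 640 x 640 input, matching the training image size.
    print(focaler_mpdiou_loss((100, 100, 200, 200), (110, 110, 210, 210), 640, 640))
```

Narrowing the interval `[d, u]` changes which IoU range contributes gradient, which is how the Focaler term shifts attention between easy and hard samples; the corner-distance term still separates predictions that share the same IoU.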

2. Related Work

2.1. Traditional Algorithms for Textile Defect Detection

2.2. Deep Learning Methods for Textile Defect Detection

3. Methods

3.1. WSF-RTDETR Model Architecture

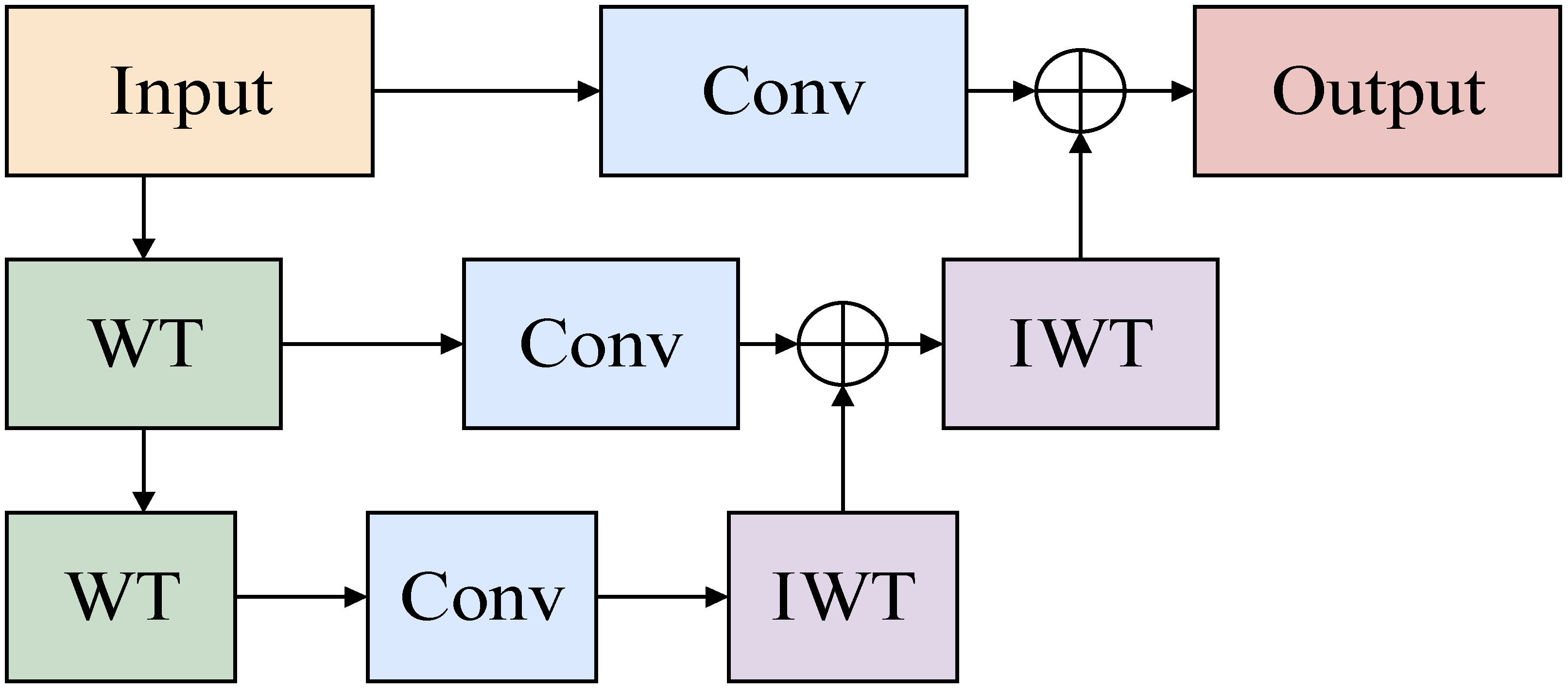

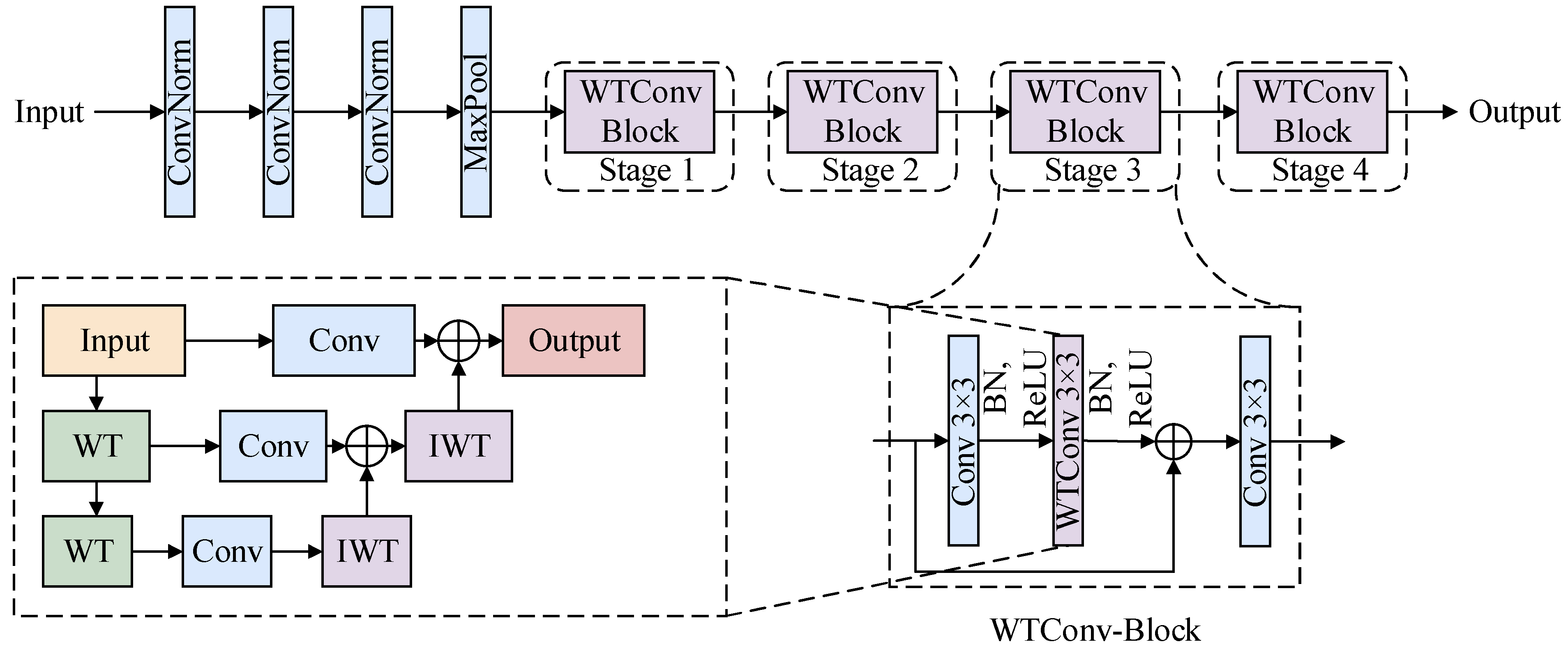

3.2. Improvement of Feature Extraction Network

3.3. Optimization of Cross-Scale Feature Fusion Network

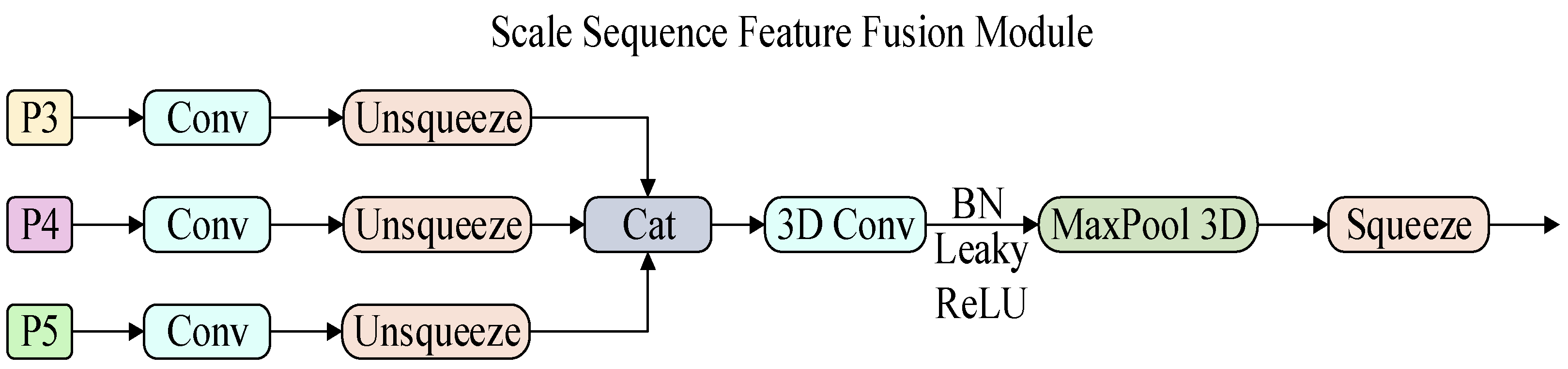

3.4. Optimization of the Loss Function

4. Experimental Results and Analyses

4.1. Experimental Environment

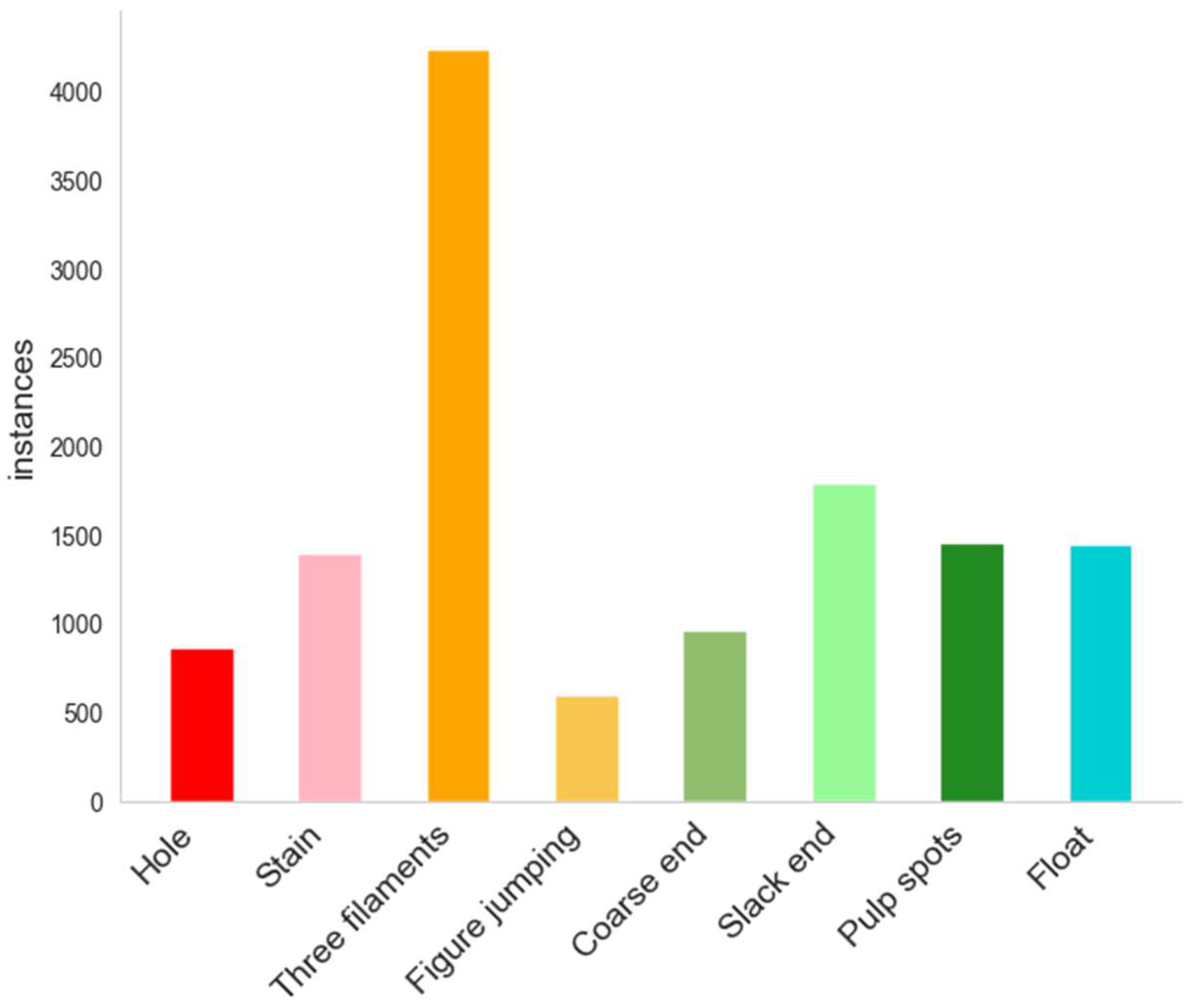

4.2. Datasets

4.3. Evaluation Indicators

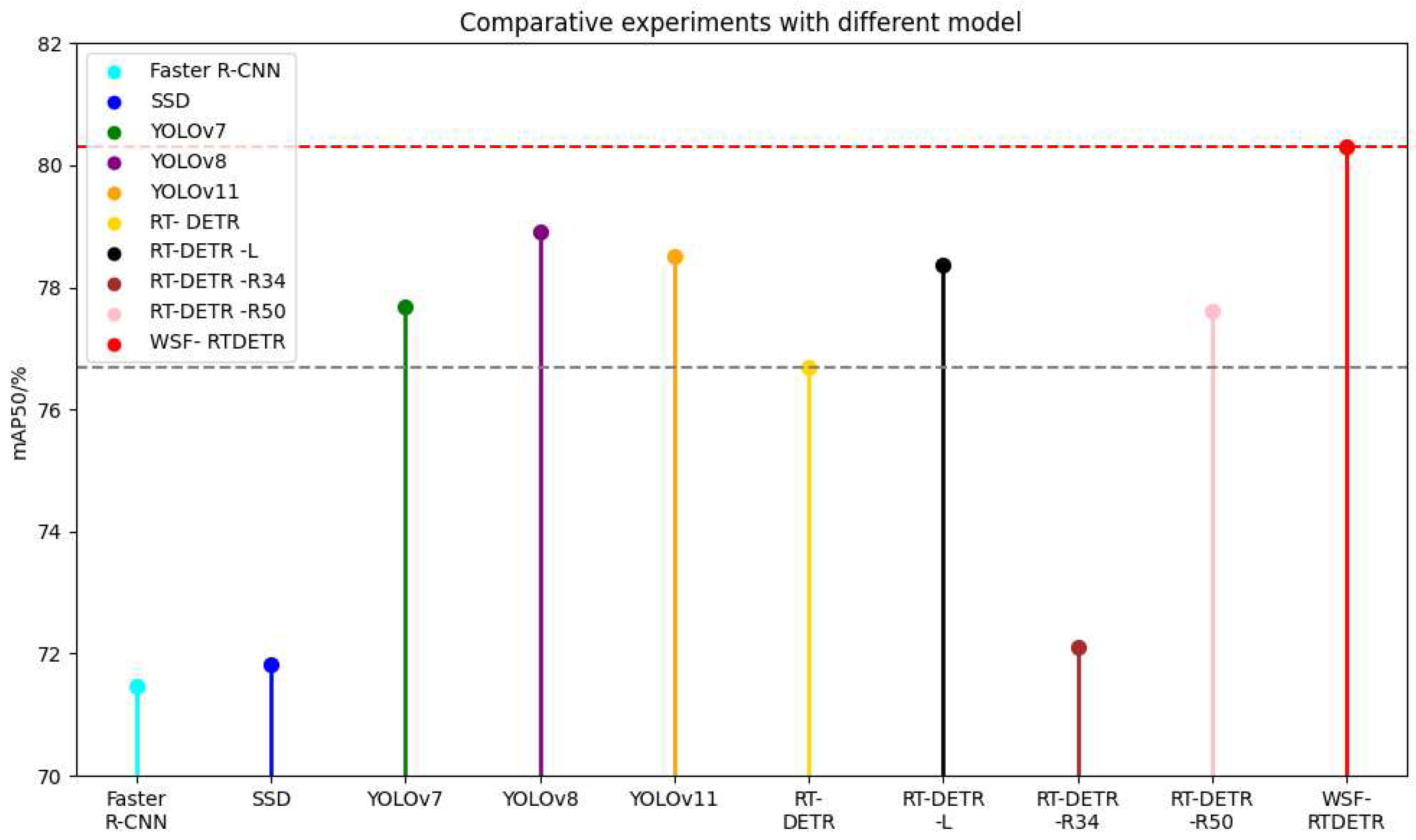

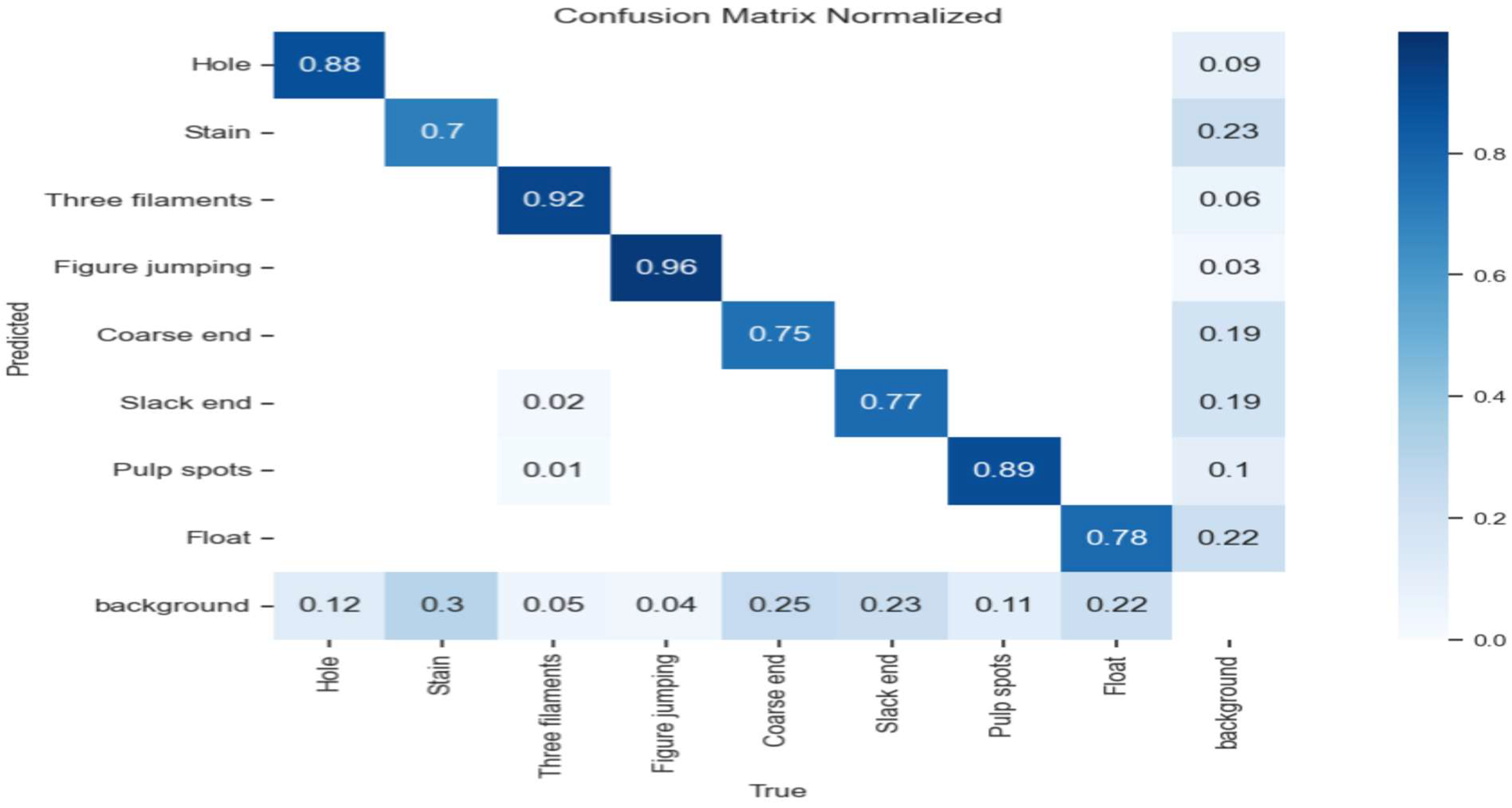

4.4. Comparative Experiments

4.5. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Environmental Parameters | Value |
|---|---|
| Operating system | Windows 10.0 |
| Deep Learning Framework | PyTorch 1.13.1 |
| Programming language | Python 3.8.0 |
| CPU | Intel(R) Core(TM) i5-11400F |
| GPU | GeForce RTX 3060 |

| Parameters | Value |
|---|---|
| Learning Rate | 0.0001 |
| Image Size | 640 × 640 |
| Momentum | 0.9 |
| Optimizer Type | AdamW |
| Weight Decay | 0.0001 |
| Batch Size | 4 |
| Epochs | 100 |

| Number | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| Classifications | Hole | Stain | Three Filaments | Figure Jumping | Coarse End | Slack End | Pulp Spots | Float |
| Quantities | 876 | 1409 | 4251 | 606 | 973 | 1796 | 1462 | 1454 |

| Model | Precision (%) | Recall (%) | mAP50 (%) | mAP50:95 (%) | F1 (%) | FPS (f/s) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 78.62 | 68.35 | 71.46 | 41.21 | 73.14 | 48 |
| SSD | 80.21 | 68.52 | 71.82 | 42.05 | 73.91 | 102 |
| YOLOv7 | 79.64 | 74.76 | 77.68 | 46.27 | 77.12 | 98 |
| YOLOv8 | 82.37 | 79.23 | 78.92 | 48.13 | 80.77 | 118 |
| YOLOv11 | 81.50 | 78.11 | 78.52 | 47.81 | 79.77 | 120 |
| RT-DETR | 86.04 | 75.11 | 76.69 | 51.20 | 80.20 | 112 |
| RT-DETR-L | 84.11 | 77.56 | 78.38 | 49.49 | 80.70 | 110 |
| RT-DETR-R34 | 81.75 | 74.06 | 72.10 | 48.95 | 77.72 | 115 |
| RT-DETR-R50 | 86.23 | 77.08 | 77.62 | 51.10 | 80.95 | 108 |
| WSF-RTDETR | 89.39 | 77.16 | 80.30 | 52.81 | 81.51 | 128 |
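
The F1 column in the comparison table is the harmonic mean of precision and recall. As a small worked example, the sketch below recomputes it for the RT-DETR baseline row; the helper name `f1_score` is hypothetical and the inputs are the percentages reported in the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale, here percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall of the RT-DETR baseline row, in percent.
rt_detr_f1 = f1_score(86.04, 75.11)
print(round(rt_detr_f1, 2))
```

Because the harmonic mean is pulled toward the smaller of the two inputs, a model with high precision but lower recall gains less in F1 than its precision alone would suggest.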

| Model / Defect Category | Hole | Stain | Three Filaments | Figure Jumping |
|---|---|---|---|---|
| RT-DETR |  |  |  |  |
| WSF-RTDETR |  |  |  |  |

| Model / Defect Category | Coarse End | Slack End | Pulp Spots | Float |
|---|---|---|---|---|
| RT-DETR |  |  |  |  |
| WSF-RTDETR |  |  |  |  |

| ID | RT-DETR | WTConv-Block | Slimneck-SSFF | Focaler-MPDIoU | Params (M) | GFLOPs | mAP50 (%) | mAP50:95 (%) | FPS (f/s) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | √ |  |  |  | 19.83 | 57.0 | 76.69 | 51.20 | 112 |
| 2 | √ | √ |  |  | 12.83 | 40.3 | 77.74 | 51.81 | 122 |
| 3 | √ |  | √ |  | 19.62 | 57.6 | 78.33 | 52.19 | 115 |
| 4 | √ | √ | √ |  | 13.66 | 43.1 | 79.27 | 53.47 | 124 |
| 5 | √ | √ | √ | √ | 13.66 | 43.1 | 80.30 | 52.81 | 128 |
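
To make the ablation numbers easier to read, the following sketch computes how the full model (ID 5) compares with the RT-DETR baseline (ID 1), using only the values from those two rows. The dictionary keys and the helper `relative_change` are hypothetical names introduced for illustration.

```python
def relative_change(baseline, value):
    """Signed change of value relative to baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# Values copied from ablation rows ID 1 (baseline) and ID 5 (full model).
baseline = {"params_m": 19.83, "gflops": 57.0, "fps": 112, "map50": 76.69}
full = {"params_m": 13.66, "gflops": 43.1, "fps": 128, "map50": 80.30}

changes = {
    key: relative_change(baseline[key], full[key])
    for key in ("params_m", "gflops", "fps")
}
# mAP50 is already a percentage, so report its gain in absolute points.
map50_gain = full["map50"] - baseline["map50"]

for key, pct in changes.items():
    print(f"{key}: {pct:+.1f}%")
print(f"mAP50: {map50_gain:+.2f} points")
```

Reporting mAP50 as a difference in points rather than a relative percentage avoids confusing a percentage of a percentage with the absolute gain shown in the table.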

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Zhang, S.; Yang, Y.; Li, W.; Wang, G. Research on a Lightweight Textile Defect Detection Algorithm Based on WSF-RTDETR. Processes 2025, 13, 2851. https://doi.org/10.3390/pr13092851