1. Introduction

Classification prediction [1] is a significant technique within the areas of pattern recognition and data mining. Its objective is to build a model or function (i.e., a classifier) that describes and distinguishes data classes or concepts based on the characteristics of the dataset, which enables the system to predictively label unknown objects and make autonomous decisions. Numerous classification prediction methods have been proposed, such as Bayes [2], Naive Bayes [3], K-Nearest Neighbor (KNN) [4], Support Vector Machine (SVM) [5] and Neural Network [6]. However, some studies [7,8,9] demonstrate that no single classifier is optimal for all datasets. In contrast to standard classification problems such as image or text classification, process classification prediction, as a specific classification task, requires dealing with large volumes of high-dimensional, nonlinear and label-ambiguous data. These unique challenges lead to suboptimal performance of traditional classification methods for process data [10,11]. Therefore, investigating more efficient classification methods tailored to different process datasets is particularly essential.

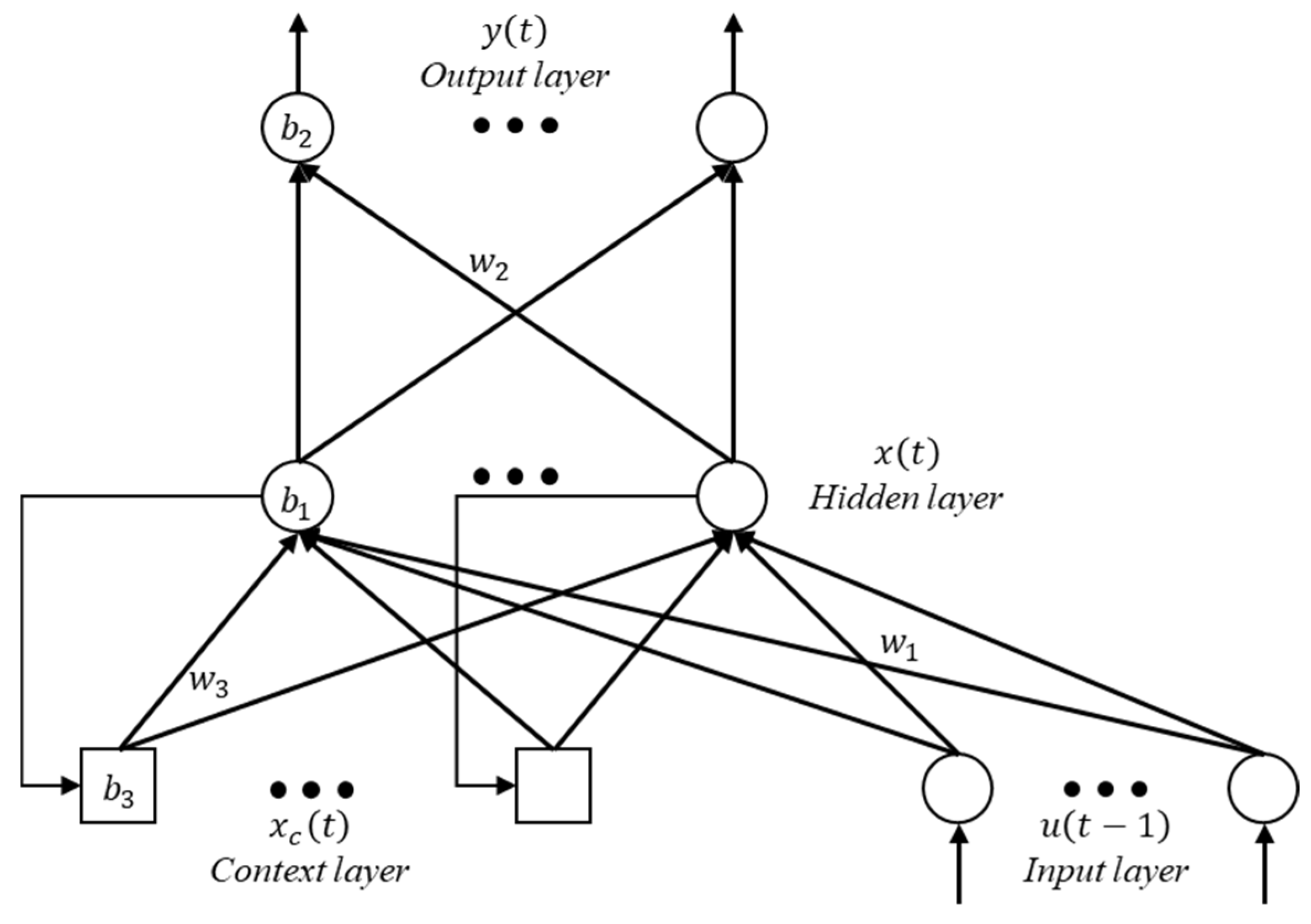

As a variant of Artificial Neural Network (ANN), the Elman neural network (ENN) is inspired by neurobiological principles. It is a locally recurrent, delayed-feedback neural network that offers better stability and adaptability than ordinary neural networks, which makes it particularly adept at solving nonlinear problems. Consequently, ENN has attracted significant research attention in many engineering and scientific fields. Zhang et al. [12] utilize ENN to calculate the synthetic efficiency of a hydro turbine and find that it possesses superior nonlinear mapping abilities. In [13], an enhanced ENN is presented to solve the problem of quality prediction during product design. Li et al. [14] apply ENN to predict sectional passenger flow in urban rail transit, and the results highlight the accuracy and usefulness of the method. Gong et al. [15] employ ENN and wavelet decomposition to predict wind power generation and achieve good results. A modified ENN [16] is suggested for establishing predictive relationships between the compressive and flexural strength of jarosite mixed concrete. A modified Elman network based on hidden recurrent feedback [17] is proposed to predict the absolute gas emission quantity, resulting in improved accuracy and efficiency.

ENN is also widely applied to classification prediction problems. Chiroma et al. [18] propose the Jordan-ENN classifier to help medical practitioners quickly detect malaria and determine its severity. Boldbaatar et al. [19] develop an intelligent classification system, based on a recurrent wavelet ENN, to distinguish between benign and malignant breast tumors. An improved Elman-AdaBoost algorithm [20] is proposed for fault diagnosis of rolling bearings operating under random noise conditions, achieving better accuracy and practicability. Arun et al. [21] introduce a deep ENN classifier for static sign language recognition. Zhang et al. [22] propose a hybrid classifier that combines a convolutional neural network (CNN) and ENN for radar waveform recognition, improving the overall successful recognition ratio. A fusion model that integrates Radial Basis Function (RBF) and ENN is suggested for residential load identification, and the results indicate that this method improves identification performance [23].

However, ENN also exhibits inherent limitations, particularly difficulty in escaping from local optima and slow convergence, which ultimately result in low accuracy [24,25]. The primary factor contributing to this problem is the difficulty in obtaining suitable weights and thresholds [26]. To overcome these weaknesses, it has become increasingly popular to combine neural networks with Swarm Intelligence (SI), a class of nature-inspired stochastic optimization algorithms, in order to optimize the weights and thresholds. In [27], the flamingo search algorithm is utilized to refine an enhanced Elman Spike neural network, thereby enabling it to effectively classify lung cancer in CT images. In [28], the weights, thresholds and numbers of hidden layer neurons of ENN are optimized by a genetic algorithm. Wang et al. [29] utilize the adaptive ant colony algorithm to optimize ENN and demonstrate its efficacy in compensating a drilling inclinometer sensor. Although the aforementioned methods of optimizing ENN via SI algorithms have demonstrated significant advantages across various fields, traditional classification methods still dominate the problem of process classification in Selective Laser Melting (SLM). Barile et al. [30,31] employed CNN to classify the deformation behavior of AlSi10Mg specimens fabricated under different SLM processes. Ji et al. [32] achieved effective classification of spatter features under different laser energy densities using SVM and Random Forest (RF). Li et al. [33] classified extracted melt pool feature data with Backpropagation neural network (BPNN), SVM and Deep Belief Network (DBN), thereby reducing the likelihood of porosity.

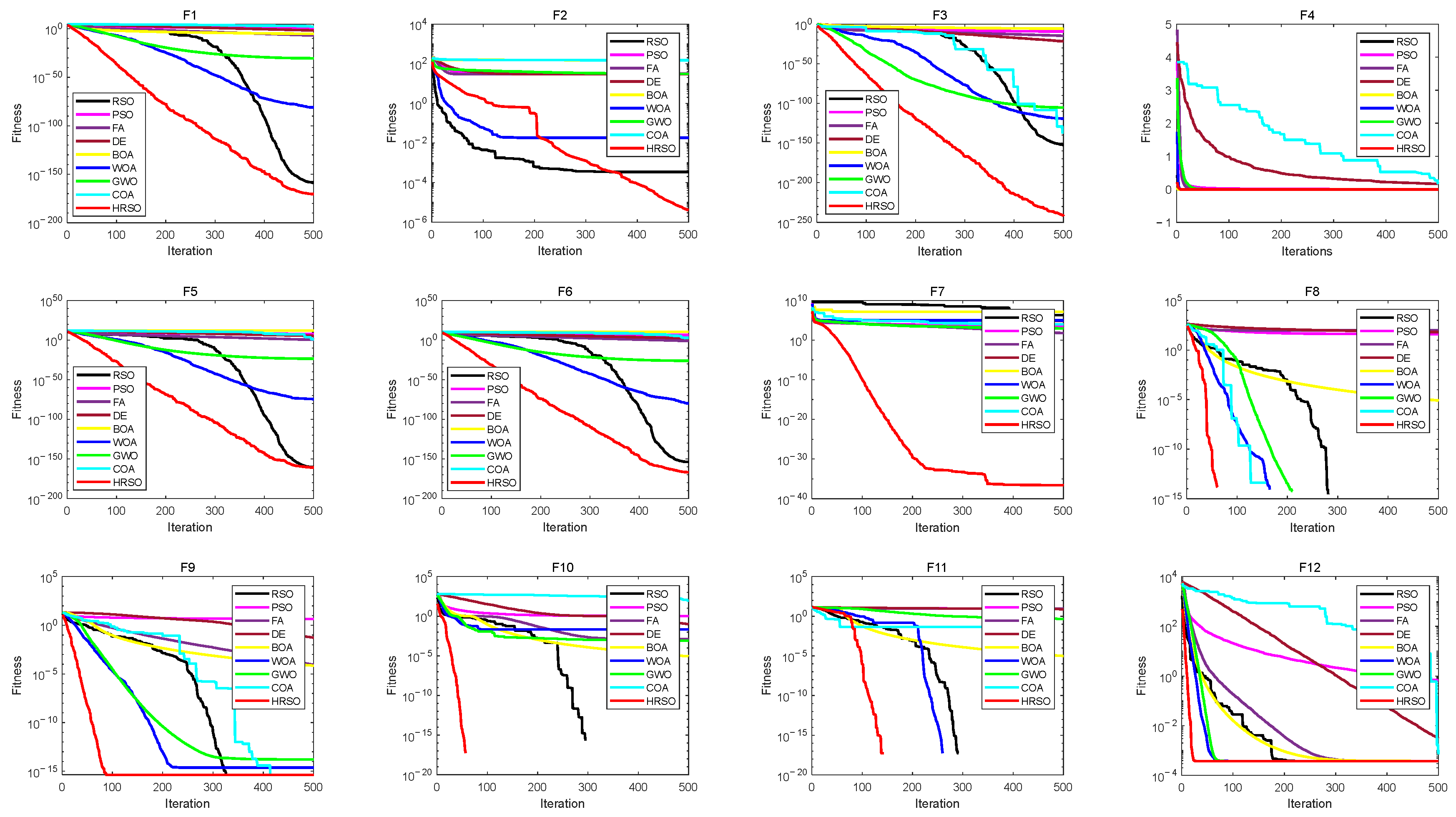

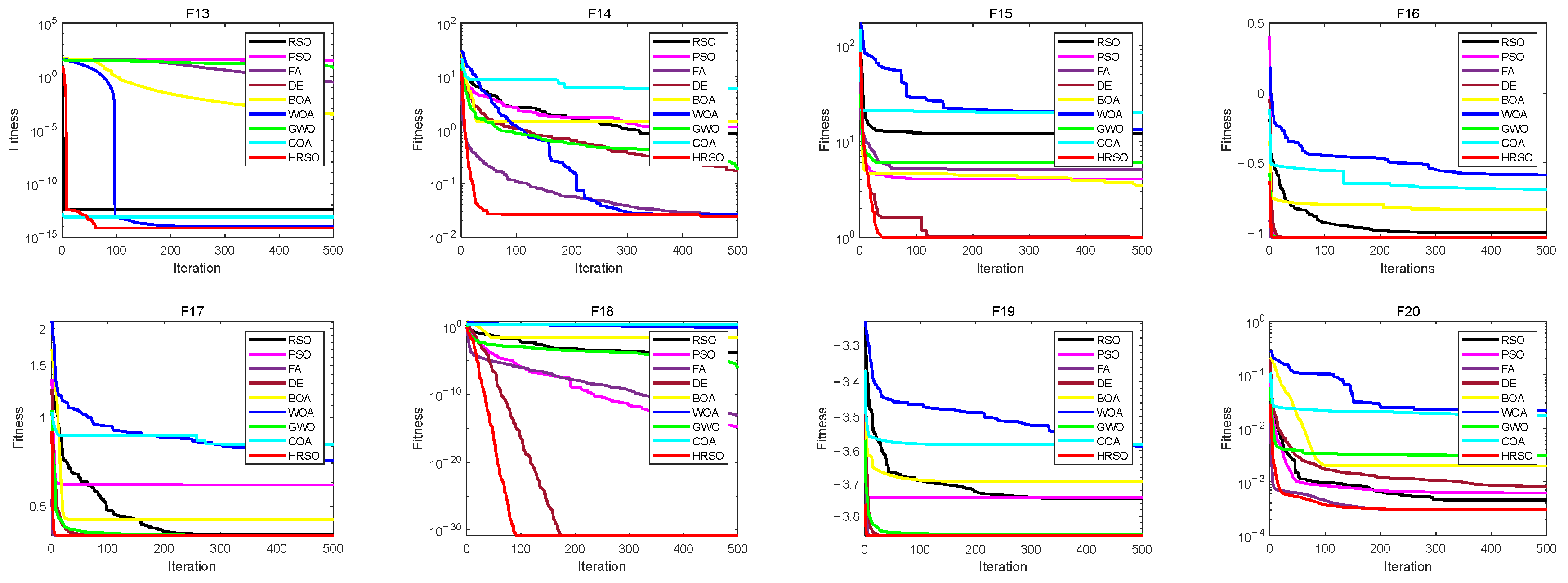

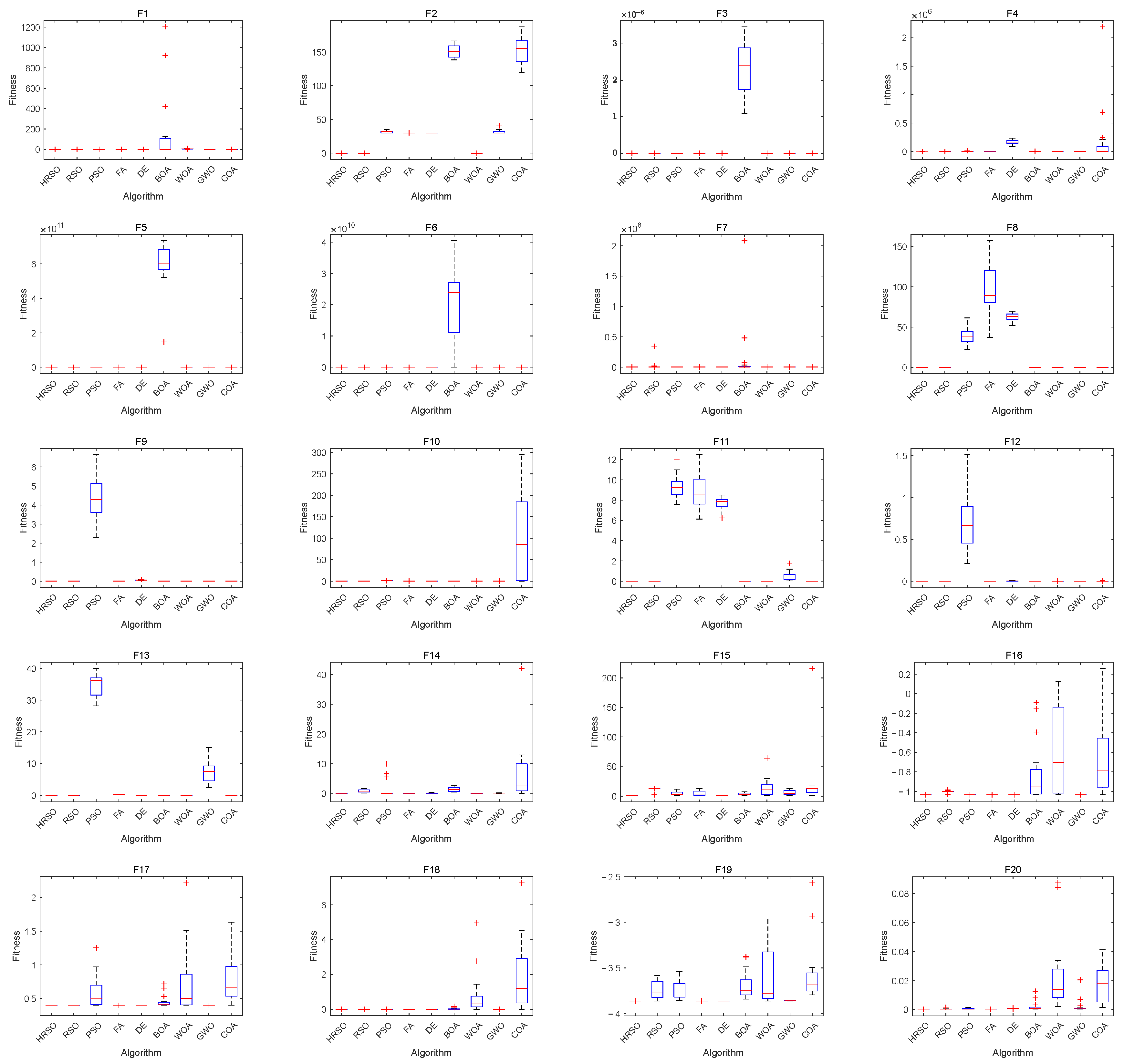

Based on the literature review above, a two-phase Rat Swarm Optimizer (RSO) consisting of five search actions is proposed in this paper, named Hyperactive Rat Swarm Optimizer (HRSO). As stated by Moghadam et al. [34], RSO offers a simple structure and fast convergence. However, similar to other SI algorithms, RSO has difficulty escaping from local optima when handling complex objective functions or a large number of variables. For this reason, the algorithm outlined in this study is built upon the following four aspects.

First, a nonlinear adaptive parameter is introduced to regulate the balance between the exploration and exploitation parts of the search phase. Second, the center point search and cosine search are introduced to enhance the effectiveness of global search in the exploration part. Third, three methods, namely rush search, contrast search and random search, are introduced into the exploitation part to improve convergence speed and local search ability. Fourth, a stochastic wandering strategy is introduced to enhance the ability to jump out of local extreme values.

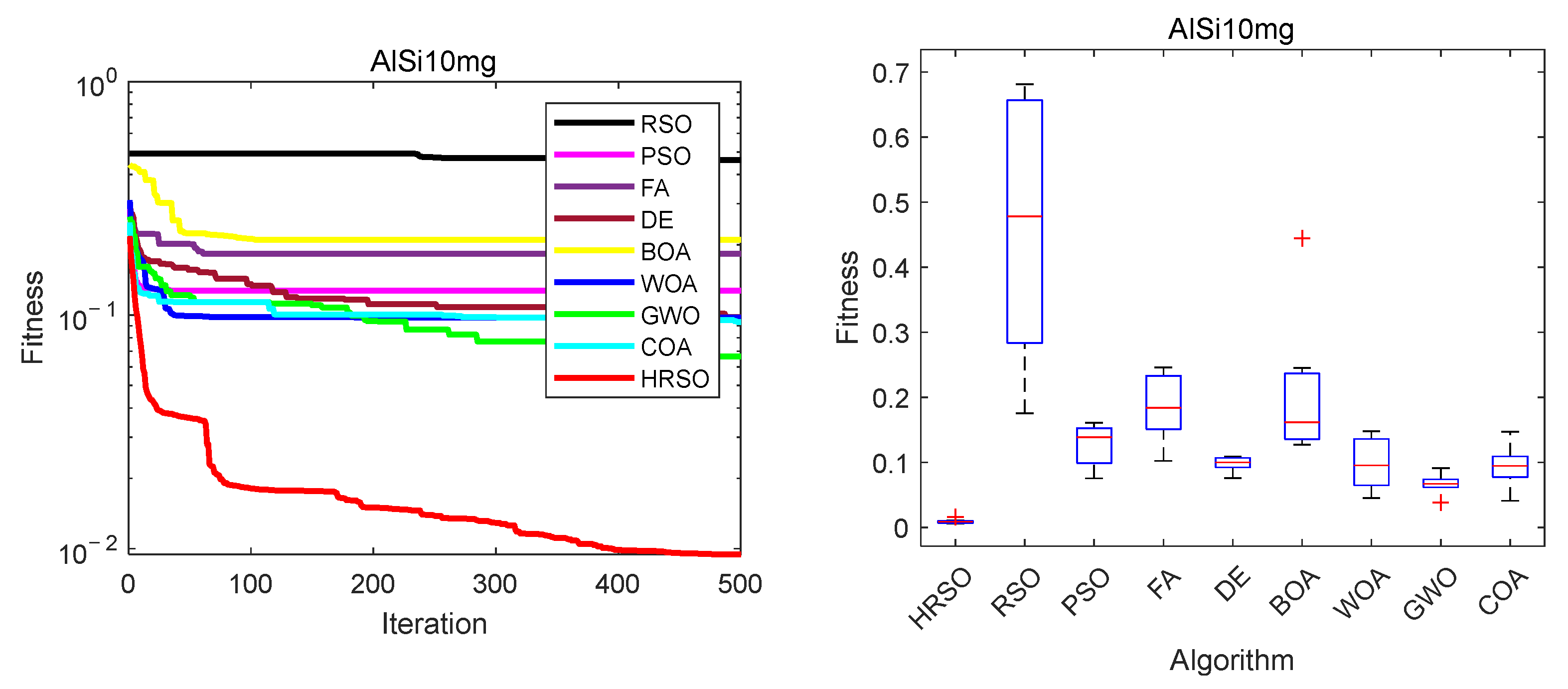

Another theme related to this paper is data classification prediction. To elevate the classification prediction capabilities of ENN, a classifier based on HRSO is proposed, named HRSO-ENNC. Unlike the traditional iterative training method for neural networks, the proposed classifier utilizes HRSO to adjust the weights and thresholds. Experimental results on benchmark functions, classification datasets and a practical AlSi10Mg process classification problem demonstrate the accuracy and stability of HRSO-ENNC.

The structure of this manuscript is arranged in the following manner.

Section 2 provides an overview of the ENN and the original RSO.

Section 3 elaborates on the proposed HRSO and the design of an ENN classifier.

Section 4 presents the experiments conducted and the corresponding analysis of the results.

Section 5 outlines the conclusions drawn from the research presented in this paper.

3. Methods

3.1. Improved Algorithm

In this section, a Hyperactive Rat Swarm Optimizer (HRSO) based on multiple types of chase search and attack actions is proposed to address the shortcomings of RSO. HRSO implements a two-phase calculation process, comprising a search phase and a mutation phase. The search phase is further divided into two parts, exploration and exploitation, by means of a nonlinear adaptive parameter. There are five search actions: center point search and cosine search belong to the exploration part, while rush search, contrast search and random search belong to the exploitation part. The mutation phase employs a stochastic wandering strategy. Algorithm 2 presents the pseudo-algorithm for HRSO.

| Algorithm 2 HRSO |

| 1 | Initialization: |

| 2 | Generate the initial population Xi of the HRSO |

| 3 | Calculate fitness scores and identify X*best |

| 4 | while t ≤ T do |

| 5 | | Execute Search Phase |

| 6 | | for i = 1, 2, …, N do |

| 7 | | | Update parameters using Formulas (4) and (5) |

| 8 | | | if |E| ≥ 1 then |

| 9 | | | Execute Exploration Search using Algorithm 3 |

| 10 | | | elseif |E| < 1 then |

| 11 | | | Execute Exploitation Search using Algorithm 4 |

| 12 | | | end if |

| 13 | | end for |

| 14 | | Calculate fitness scores and identify X*best |

| 15 | | Execute Mutation Phase using Algorithm 5 |

| 16 | | Calculate fitness scores and identify X*best |

| 17 | end while |
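The control flow of Algorithm 2 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `hrso_skeleton` and the callables `e_param`, `explore`, `exploit` and `mutate` are hypothetical placeholders for Formulas (4) and (5), Algorithm 3, Algorithm 4 and Algorithm 5, respectively. It also assumes a minimization problem and a synchronous update, in which all individuals move before the fitness scores are recomputed.

```python
def hrso_skeleton(fitness, population, max_iter, e_param, explore, exploit, mutate):
    """Two-phase loop of Algorithm 2; the phase functions are supplied by the caller."""
    pop = [list(x) for x in population]
    fits = [fitness(x) for x in pop]
    b = min(range(len(pop)), key=lambda i: fits[i])
    best, best_fit = list(pop[b]), fits[b]

    def refresh(pop, best, best_fit):
        # Recompute fitness scores and keep the best position seen so far.
        fits = [fitness(x) for x in pop]
        b = min(range(len(pop)), key=lambda i: fits[i])
        if fits[b] < best_fit:
            best, best_fit = list(pop[b]), fits[b]
        return fits, best, best_fit

    for t in range(1, max_iter + 1):
        # Search phase: |E| >= 1 selects exploration, |E| < 1 selects exploitation.
        new_pop = []
        for i in range(len(pop)):
            e = e_param(t, max_iter)
            step = explore if abs(e) >= 1 else exploit
            new_pop.append(step(pop, fits, i, best, t, max_iter))
        pop = new_pop
        fits, best, best_fit = refresh(pop, best, best_fit)

        # Mutation phase (stochastic wandering), followed by a second evaluation.
        pop = mutate(pop, fits, best)
        fits, best, best_fit = refresh(pop, best, best_fit)
    return best, best_fit
```

Passing the phases as callables keeps the branching on |E| visible without committing to a particular form of the update formulas.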

3.2. Nonlinear Adaptive Parameter

A nonlinear adaptive parameter E is employed to enhance RSO; it regulates the proportion of the exploration and exploitation parts during the search phase. Depending on the absolute value of E, the algorithm performs either the exploration part (when |E| ≥ 1) or the exploitation part (when |E| < 1). The mathematical expression for E is given in Formula (4).

In Formula (4), E0 is a random number from –1 to 1, and cp is an adaptive parameter that decreases progressively with iterations. Its representation is given as Formula (5):

According to Formulas (4) and (5), the range of values for parameter E can be determined as −2 to 2.

3.3. Exploration Search

To enable RSO to explore unvisited regions of the search space more thoroughly and thus locate the global optimum more efficiently, center point search and cosine search are introduced in the exploration part of the proposed algorithm.

Center point search is a linear search method based on the average position of the population, which uses cp to control the extended search. This search method is given as Formula (6).

In Formula (6), mean(Xti) denotes the average position of the population, and r2 is constrained to [0, 1].

The cosine search is a search pattern characterized by a wide range of oscillatory variation. A set of adaptive random numbers z is used to control the cosine fluctuation. This search method is given as Formula (7).

In Formula (7), the parameter z is defined by Formula (8):

In Formula (8), r3 and r5 are random numbers, and r6 is a Gaussian-distributed random number whose retained part is controlled by a random number r4: when r4 ≥ cp, r6 takes the part greater than 0.5; otherwise, it takes the part less than 0.5.

To maximize the exploration performance of HRSO, a random number r1, with values between 0 and 1, is used to select the search method. Specifically, if r1 ≥ 0.5, the search iteration is performed using Formula (6); otherwise, Formula (7) is used. The pseudo-algorithm for the exploration search is presented in Algorithm 3.

| Algorithm 3 Exploration Search |

| 1 | Update parameters using Formulas (5) and (8) |

| 2 | Calculate mean(Xti) based on the current population |

| 3 | when |E| ≥ 1 then |

| 4 | | if r1 ≥ 0.5 then |

| 5 | | | Update population positions using Formula (6) |

| 6 | | else then |

| 7 | | | Update population positions using Formula (7) |

| 8 | | end if |

| 9 | end when |

3.4. Exploitation Search

To enhance the convergence speed and local search capability, three search methods, namely rush search, contrast search and random search, are introduced into the exploitation part of the proposed algorithm.

The rush search is the same as in the original algorithm, which is Equation (1), but the ranges of the parameters R and C are set to [1, 3] and [–1, 1], respectively, so that the algorithm focuses on the neighborhood and local optimization is enhanced.

The contrast search randomly selects two individuals, j1 and j2, from the population and compares them with the i-th individual; the i-th individual then moves in the direction of the best fitness value among the three. The mathematical expression is given in Formulas (9) and (10):

In Formulas (9) and (10), r7 denotes a random number that lies between 0 and 1. m is a constant with the value 1 or 2.

The random search also selects two individuals, j1 and j2, and the current individual searches in the direction of the better fitness value of the two. It can be expressed by Formulas (11) and (12):

In Formulas (11) and (12), r8 is constrained to [0, 1].

To maximize the exploitation performance of the proposed algorithm, a random constant k, which takes the values 1, 2 and 3, is introduced to select the search method. Specifically, k = 1 employs Formula (2) for the rush search, k = 2 utilizes Formulas (9) and (10) for the contrast search and k = 3 applies Formulas (11) and (12) for the random search. The pseudo-algorithm for the exploitation search is presented in Algorithm 4.

| Algorithm 4 Exploitation Search |

| 1. | Update random parameters k, j1, and j2 |

| 2. | when |E| < 1 then |

| 3. | | if k = 1 then |

| 4. | | | Update population positions using Formula (2) |

| 5. | | elseif k = 2 then |

| 6. | | | Update random parameters jm |

| 7. | | | if fit(jm) < fit(i) then |

| 8. | | | | Update population positions using Formula (9) |

| 9. | | | else then |

| 10. | | | | Update population positions using Formula (10) |

| 11. | | | end if |

| 12. | | elseif k = 3 then |

| 13. | | | if fit(j1) < fit(j2) then |

| 14. | | | | Update population positions using Formula (11) |

| 15. | | | else then |

| 16. | | | | Update population positions using Formula (12) |

| 17. | | | end if |

| 18. | | end if |

| 19. | end when |
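The branching in Algorithm 4 can be made concrete with a small Python sketch that reports which formula would be applied, without committing to the update rules themselves. Several points are assumptions rather than statements from the paper: the function names are hypothetical, the fitness is minimized, k and m are drawn uniformly, j1 and j2 are distinct and differ from i, and the comparison in step 7 is read as fit(jm) < fit(i).

```python
import random

def draw_exploitation_params(n, i, rng):
    """Draw k in {1, 2, 3}, two partner indices j1 != j2 (both != i) and m in {1, 2}."""
    k = rng.randint(1, 3)
    j1, j2 = rng.sample([j for j in range(n) if j != i], 2)
    m = rng.randint(1, 2)
    return k, j1, j2, m

def exploitation_rule(fits, i, k, j1, j2, m):
    """Return the number of the formula that Algorithm 4 selects for individual i."""
    if k == 1:
        return 2                      # rush search
    if k == 2:                        # contrast search: compare individual i with j_m
        jm = j1 if m == 1 else j2
        return 9 if fits[jm] < fits[i] else 10
    if k == 3:                        # random search: compare j1 with j2
        return 11 if fits[j1] < fits[j2] else 12
    raise ValueError("k must be 1, 2 or 3")
```

For example, with `fits = [3.0, 1.0, 2.0, 5.0]`, the call `exploitation_rule(fits, 0, 2, 1, 3, 1)` selects Formula (9), because individual j1 = 1 has a lower fitness than individual 0.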

3.5. Stochastic Wandering Strategy

In the mutation phase, a stochastic wandering strategy is employed to improve the algorithm's capability to jump out of local extreme values. A part of the population is randomly selected, compared with the global optimum and updated iteratively, and the individual with the best fitness is retained. This strategy is mathematically expressed as in Formulas (13) and (14):

In Formulas (13) and (14), randn is a set of normally distributed random numbers, and X*worst denotes the worst individual in the population at the t-th iteration. It is worth noting that a constant NS is used to control the number of individuals selected in this phase, with NS set to 0.2 in this paper. The pseudo-algorithm for the mutation phase is presented in Algorithm 5.

| Algorithm 5 Mutation Phase |

| 1. | for i = 1, 2, …, N do |

| 2. | | if fit(i) > fit(best) then |

| 3. | | | Update population positions using Formula (13) |

| 4. | | elseif fit(i) = fit(best) then |

| 5. | | | Update population positions using Formula (14) |

| 6. | | end if |

| 7. | end for |
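The selection and retention logic of the mutation phase can be sketched as below. The sketch rests on explicit assumptions: NS is interpreted as the fraction of the population that is selected, the fitness is minimized, and `gaussian_candidate` is a hypothetical Gaussian perturbation standing in for Formulas (13) and (14), not the paper's update. Only the random selection and the greedy rule of retaining the fitter individual follow the description above.

```python
import random

def gaussian_candidate(x, best, worst, is_best, rng):
    """Hypothetical stand-in for Formulas (13)/(14): Gaussian step relative to a reference."""
    ref = worst if is_best else best
    return [v + rng.gauss(0.0, 1.0) * (r - v) for v, r in zip(x, ref)]

def mutation_phase(pop, fits, fitness, ns=0.2, rng=None, propose=gaussian_candidate):
    """Perturb a random share `ns` of the population and keep only improvements."""
    rng = rng or random.Random()
    n = len(pop)
    best_i = min(range(n), key=lambda i: fits[i])
    worst_i = max(range(n), key=lambda i: fits[i])
    best, worst = list(pop[best_i]), list(pop[worst_i])
    chosen = rng.sample(range(n), max(1, round(ns * n)))
    new_pop, new_fits = [list(x) for x in pop], list(fits)
    for i in chosen:
        cand = propose(pop[i], best, worst, fits[i] == fits[best_i], rng)
        f = fitness(cand)
        if f < fits[i]:               # greedy retention: the fitter individual survives
            new_pop[i], new_fits[i] = cand, f
    return new_pop, new_fits, chosen
```

Because a candidate replaces an individual only when it is strictly better, no fitness value in the population can get worse during this phase.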

3.6. Classifier Design

For constructing a complete HRSO-ENNC, the structural design of the hidden layer is also a key factor, because the neuron count of the hidden layer has a significant influence on the ENN’s overall performance. An insufficient number of neurons can cause feature information to be lost during the propagation process, preventing the desired accuracy from being achieved. Conversely, an excessive number of neurons may lead to a more complex system prone to overfitting. It is important to highlight that the configuration of the hidden layer also affects the optimization of the weights and thresholds. Therefore, it is necessary to select a reasonable number of hidden-layer neurons. The method presented in [38] is employed in this research, mathematically formalized as in Formula (15):

Formula (15) represents a common empirical equation for determining the number of hidden-layer neurons in a neural network, where a represents an integer within the range of [1, 10].
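To illustrate how the hidden-layer size determines the size of the optimization problem, the sketch below assumes that Formula (15) takes the widely used empirical form h = sqrt(n + m) + a, where n and m are the numbers of input and output neurons; this form, the rounding convention and the function names are assumptions. The second function counts the weights and thresholds of a standard Elman layout (input-to-hidden, context-to-hidden and hidden-to-output weights plus hidden and output thresholds), which is likewise an assumed layout.

```python
import math

def hidden_neurons(n_in, n_out, a):
    """Assumed empirical rule h = round(sqrt(n_in + n_out)) + a, with a in [1, 10]."""
    if not 1 <= a <= 10:
        raise ValueError("a must be an integer in [1, 10]")
    return int(round(math.sqrt(n_in + n_out))) + a

def enn_dimension(n_in, n_hid, n_out):
    """Number of weights and thresholds the optimizer has to tune (assumed layout)."""
    weights = n_hid * n_in + n_hid * n_hid + n_out * n_hid
    thresholds = n_hid + n_out
    return weights + thresholds

h = hidden_neurons(4, 3, 3)       # sqrt(7) is about 2.65, rounded to 3, plus a = 3
print(h, enn_dimension(4, h, 3))  # prints: 6 87
```

Under these assumptions, a dataset with 4 features and 3 classes and a = 3 gives 6 hidden neurons and an 87-dimensional search vector, which shows how quickly the search space grows with the hidden-layer size.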

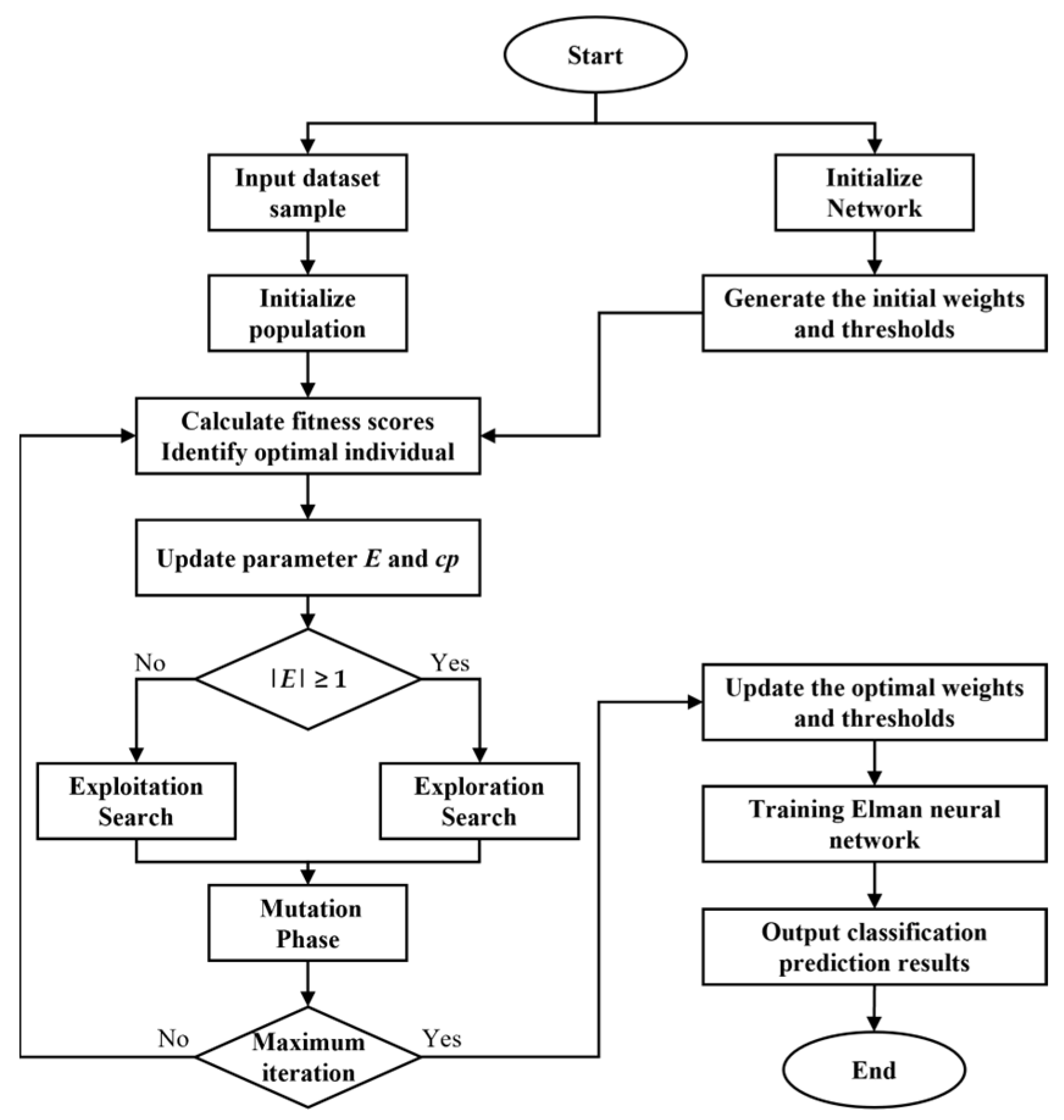

Additionally, the gradient descent method is commonly employed to determine suitable weights and thresholds in the traditional ENN, but it is easily trapped in a local optimum, ultimately resulting in low accuracy for the system. As described in the previous sections, the proposed HRSO algorithm balances the proportions of exploration and exploitation and shows a strong ability to jump out of local optima. It therefore serves as an adaptive global training method: the proposed algorithm replaces the traditional training process to overcome the shortcomings of the ENN during the optimization of weights and thresholds. Algorithm 6 presents the pseudo-algorithm for HRSO-ENNC, and Figure 2 represents a specific flowchart.

| Algorithm 6 HRSO-ENNC |

| 1. | Input: Dataset sample |

| 2. | Normalize dataset |

| 3. | Selection of training set by the stratified k-fold cross validation method |

| 4. | for i = 1, 2, …, k do |

| 5. | | Initialize ENN and HRSO algorithm parameters |

| 6. | | Calculate fitness scores and identify X*best |

| 7. | | while t ≤ T do |

| 8. | | | Update network parameters using Algorithm 2 |

| 9. | | | Calculate fitness scores and identify X*best |

| 10. | | end while |

| 11. | | Get X*best for the i-th fold |

| 12. | | Update network parameters and train ENN |

| 13. | | Output classification prediction results |

| 14. | end for |
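The pipeline of Algorithm 6 can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions rather than the authors' implementation: class labels are integers 0, …, m−1; the flat position vector is decoded in the order input weights, context weights, output weights, hidden thresholds, output thresholds; the hidden activation is tanh; the context state is carried from one sample to the next in presentation order; and the fitness is the training error rate. The `optimize` argument is a placeholder for HRSO, and `random_search` is only a stand-in that makes the example runnable.

```python
import math
import random
from collections import defaultdict

def min_max_normalize(rows):
    """Scale every feature column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*rows))
    lo, hi = [min(c) for c in cols], [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(r, lo, hi)]
            for r in rows]

def stratified_folds(labels, k, rng):
    """Deal the shuffled indices of each class round-robin into k folds."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(k)]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            folds[pos % k].append(idx)
    return folds

def decode(vec, n_in, n_hid, n_out):
    """Split a flat position vector into ENN weights and thresholds (assumed order)."""
    it = iter(vec)
    def take(r, c):
        return [[next(it) for _ in range(c)] for _ in range(r)]
    w_in, w_ctx, w_out = take(n_hid, n_in), take(n_hid, n_hid), take(n_out, n_hid)
    b_hid = [next(it) for _ in range(n_hid)]
    b_out = [next(it) for _ in range(n_out)]
    return w_in, w_ctx, w_out, b_hid, b_out

def error_rate(vec, rows, labels, n_in, n_hid, n_out):
    """Fitness: share of misclassified samples for the network encoded by vec."""
    w_in, w_ctx, w_out, b_hid, b_out = decode(vec, n_in, n_hid, n_out)
    ctx, wrong = [0.0] * n_hid, 0
    for x, y in zip(rows, labels):
        hid = [math.tanh(sum(w * v for w, v in zip(w_in[j], x))
                         + sum(w * c for w, c in zip(w_ctx[j], ctx)) + b_hid[j])
               for j in range(n_hid)]
        out = [sum(w * h for w, h in zip(w_out[o], hid)) + b_out[o] for o in range(n_out)]
        wrong += max(range(n_out), key=lambda o: out[o]) != y
        ctx = hid                     # the context layer stores the previous hidden state
    return wrong / len(labels)

def random_search(fitness, dim, rng, trials=200):
    """Stand-in optimizer (NOT HRSO): keep the best of `trials` random vectors."""
    cands = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(trials)]
    return min(cands, key=fitness)

def cross_validate(rows, labels, k, n_hid, optimize, rng):
    """Normalize, split into stratified folds, tune on each training part, test on the rest."""
    n_in, n_out = len(rows[0]), len(set(labels))
    dim = n_hid * (n_in + n_hid + n_out + 1) + n_out
    rows = min_max_normalize(rows)
    accs = []
    for test_idx in stratified_folds(labels, k, rng):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(rows)) if i not in held_out]
        tr_x, tr_y = [rows[i] for i in train_idx], [labels[i] for i in train_idx]
        te_x, te_y = [rows[i] for i in test_idx], [labels[i] for i in test_idx]
        best = optimize(lambda v: error_rate(v, tr_x, tr_y, n_in, n_hid, n_out), dim)
        accs.append(1.0 - error_rate(best, te_x, te_y, n_in, n_hid, n_out))
    return accs
```

A call such as `cross_validate(rows, labels, 5, 6, lambda f, d: random_search(f, d, rng), rng)` returns one test accuracy per fold; substituting an HRSO routine for the stand-in only requires a function that accepts a fitness function and a dimension and returns the best vector found.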