Abstract

Predictive maintenance (PdM) is a cornerstone of smart manufacturing, enabling the early detection of equipment degradation and reducing unplanned downtimes. This study proposes an advanced machine learning framework that integrates the Extreme Learning Machine (ELM) with a novel hybrid metaheuristic optimization algorithm, the Polar Lights Salp Cooperative Optimizer (PLSCO), to enhance predictive modeling in manufacturing processes. PLSCO combines the strengths of the Polar Light Optimizer (PLO), Competitive Swarm Optimization (CSO), and Salp Swarm Algorithm (SSA), utilizing a cooperative strategy that adaptively balances exploration and exploitation. In this mechanism, particles engage in a competitive division process, where winners intensify search via PLO and losers diversify using SSA, effectively avoiding local optima and premature convergence. The performance of PLSCO was validated on CEC2015 and CEC2020 benchmark functions, demonstrating superior convergence behavior and global search capabilities. When applied to a real-world predictive maintenance dataset, the ELM-PLSCO model achieved a high prediction accuracy of 95.4%, outperforming baseline and other optimization-assisted models. Feature importance analysis revealed that torque and tool wear are dominant indicators of machine failure, offering interpretable insights for condition monitoring. The proposed approach presents a robust, interpretable, and computationally efficient solution for predictive maintenance in intelligent manufacturing environments.

1. Introduction

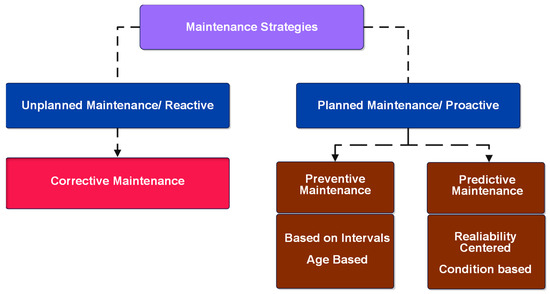

The significance of maintenance has increased over the years with extensive mechanization and automation. Maintenance now plays a crucial role in the industrial sector, and its expenses account for a substantial portion of an enterprise’s manufacturing costs [1]. The literature broadly distinguishes three primary maintenance strategies: Corrective Maintenance (CM), Preventive Maintenance (PM), and PdM [2]. CM is the simplest and consists of repairing equipment only after it fails. Although simple, CM often results in expensive production interruptions and unscheduled component replacements. PM schedules regular maintenance tasks for specified equipment to reduce the probability of failure. Maintenance is performed while the machine is still operational, to prevent unforeseen malfunctions and the resulting downtime and expenses [3,4]. Although PM can reduce repair expenses and unanticipated downtime, it leads to unnecessary maintenance, because the assessment of when equipment will wear out relies on an assumed failure rate rather than on empirical data about the state of the particular equipment. This frequently results in costly and unnecessary maintenance activities, increased planned downtime, and complex inventory management [5]. Figure 1 illustrates the hierarchy of maintenance strategies.

Figure 1.

Hierarchy of maintenance strategies [6].

Introduced as a more advanced approach, PdM, or condition-based maintenance, is designed to anticipate equipment failures and determine the appropriate maintenance actions, optimizing the balance between maintenance frequency and its associated costs. PdM relies on predictive analysis of data gathered from sensors and meters attached to equipment, historical maintenance records, and operational logs to determine when equipment requires maintenance or removal from service. PdM enables firms to perform maintenance only when it is actually needed [7]. As industrial systems grow in complexity and generate more data, Machine Learning (ML) has been increasingly applied to PdM and has notably improved its effectiveness. ML algorithms can handle high-dimensional and multivariate data, uncover hidden relationships in complex and dynamic settings, and deliver high accuracy in failure prediction [8,9]. Common ML algorithms include Support Vector Machines (SVM) [10], K-Nearest Neighbors (KNN) [11], Recurrent Neural Networks (RNNs) [12], Decision Trees (DT) [13], Bayesian Networks (BN) [14], Long Short-Term Memory networks (LSTMs) [15], Convolutional Neural Networks (CNNs) [16], Gradient Boosting Machines (GBM) [17], Random Forests (RF) [18], Naive Bayes (NB) [19], K-means clustering [20], and the Extreme Learning Machine (ELM) [21]. ELM stands out among ML algorithms for its rapid training and versatility. Unlike traditional neural networks trained by iterative gradient-based learning, ELM computes its output weights analytically in a single step, which reduces training time and computational complexity [22]. A few studies have explored the application of ELM to PdM in various domains, including electrical systems [23], power transformers [24], aero-engines [25], lithium-ion batteries [26], and steam turbines [27].

However, ELM’s effectiveness depends heavily on parameter tuning. Manual tuning is time-intensive and often fails to achieve high-precision outcomes. To address this challenge, Metaheuristic Algorithms (MAs) are used to tune parameters automatically through extensive simulation tests, driving the model parameters toward near-optimal settings [28]. Moreover, integrating MAs notably improves the model’s stability and generalization capacity. Van Thieu et al. combined ELM with the weighted mean of vectors (INFO) algorithm, Pareto-like Sequential Sampling (PSS), and the Runge–Kutta optimizer (RUN) [29]. Their comparative analysis on streamflow data demonstrated improved forecast accuracy, strong convergence, and consistent stability, especially for PSS-ELM. Li et al. developed a forecasting model for supercapacitor capacity that combines ELM with the Heuristic Kalman Filter (HKF) technique [30]. Their experiments showed that HKF-ELM requires fewer parameters to be set, has a lower time cost, and achieves higher prediction accuracy. Similarly, Boriratrit et al. proposed three hybrid forecasting models by integrating ELM with the Jellyfish Search (JS-ELM), Flower Pollination (FP-ELM), and Harris Hawks (HH-ELM) algorithms to improve precision and reduce overfitting [31]. JS-ELM outperformed the other models, attaining the lowest Root Mean Square Error (RMSE) with efficient processing times. Sun and Huang presented the Whale Optimization Algorithm-ELM (WOA-ELM) for forecasting carbon emission intensity [32]. WOA-ELM handled extensive datasets well while also accommodating smaller samples, and the incorporation of WOA significantly improved ELM’s accuracy and overall predictive efficacy.
Tan introduced an alternative ELM variant, a hybrid method that uses the Improved Multi-Objective Particle Swarm Optimization (IMOPSO) algorithm to optimize multiple competing objectives [33]. This technique constrains the ELM network’s input weights and hidden biases to appropriate ranges, improving generalization performance and resolving instability. Hua et al. proposed a hybrid framework combining Partial Least Squares (PLS), Variational Mode Decomposition (VMD), Improved Atom Search Optimization (IASO), and ELM [34]. VMD decomposes wind speed data into frequency-based sub-series, PLS refines the dataset, and IASO, enhanced with simulated annealing, optimizes the ELM. The model outperformed the compared models in accuracy and reliability. Kardani et al. [35] integrated two MAs, the Firefly Algorithm (FFA) and the Improved Firefly Algorithm (IFFA), with ELM, the Adaptive Neuro-Fuzzy Inference System (ANFIS), and Artificial Neural Networks (ANN) to optimize prediction performance; among these, the ELM-IFFA model delivered the most accurate and reliable results. Gao et al. [36] developed a hybrid prediction model in which RF is used to extract parameters and the ELM is then optimized for prediction using the Improved Parallel Whale Optimization Algorithm (IPWOA). Simulation experiments demonstrated that the RF-IPWOA-ELM model achieves superior prediction accuracy [36]. Shariati et al. combined ELM with the Grey Wolf Optimizer (GWO) to predict the compressive strength of concrete with partial cement replacement [37] and reported notable performance improvements for the ELM-GWO model.

Despite the advances in integrating MAs with ELM across various applications, the adoption of ELM in PdM remains limited. Although ELM has shown significant potential, its applications in other fields reveal challenges of limited accuracy and generalizability. These limitations arise primarily from the random initialization of weights and biases, which, although it contributes to ELM’s computational speed, hinders its ability to achieve optimal performance. Furthermore, the single hidden layer and the absence of a robust optimization strategy exacerbate these issues, particularly in complex industrial scenarios where accurate predictions are critical. Although prior studies have demonstrated the benefits of integrating MAs with ELM, the existing literature lacks approaches that incorporate competitive learning mechanisms and dynamic adaptive strategies. Such strategies help the optimizer balance exploitation and exploration of the problem space, which is needed to identify good weights and biases and thereby improve ELM’s accuracy and robustness. This gap forms the core motivation for this research, which addresses these challenges by developing an optimization framework tailored for ELM in PdM applications.

Therefore, this study integrates PLSCO to optimize the weights and biases of ELM for PdM prediction. PLO was introduced by Yuan et al. and draws inspiration from the aurora phenomenon [38]. It models the behavior of high-energy particles interacting with the Earth’s magnetic field and atmosphere. PLO has the advantage of balancing local and global search effectively through adaptive weights. However, like other MAs, PLO can become trapped in local optima, and its convergence can slow in high-dimensional spaces. PLSCO addresses these drawbacks by introducing two mechanisms into the standard PLO. The first is a CSO strategy, in which the population is dynamically split into two groups, winners and losers, based on their fitness values. This structured division of optimization roles enhances both exploration and exploitation. The winner group, comprising the top-performing individuals, focuses on refining local searches. These particles are updated using the PLO mechanism, which excels at fine-tuning solutions and exploiting promising regions of the search space, so high-quality solutions are further improved through focused exploitation. The second mechanism applies to the loser group, consisting of the lower-performing individuals, which adopts the SSA update. SSA is well suited to exploration because its salp-chain dynamics allow broader coverage of the solution space. Unlike existing hybrid metaheuristic algorithms for ELM tuning, which frequently operate by sequentially invoking their constituent algorithms, PLSCO introduces a co-evolutionary control layer that unifies the core mechanisms of PLO, CSO, and SSA into a single adaptive search framework.
The collision-based exploitation mechanism from PLO is embedded directly into the swarm update equations, enabling localized refinements to occur concurrently with global exploration. The competitive learning dynamics of CSO are adapted to act as a real-time regulator of exploration–exploitation balance, informed by SSA’s leader–follower hierarchy. This integration allows PLSCO to adapt its parameter settings dynamically as the search progresses. Consequently, PLSCO offers a more synergistic and responsive search process, improving convergence behavior. A detailed comparison of the architectural components, parameter adaptation strategies, and optimization mechanisms of the proposed ELM-PLSCO framework with other benchmark ELM-based hybrid models is presented in Table 1.

Table 1.

Comparative Summary of the Proposed ELM-PLSCO Model and Prior ELM-Based Hybrid Optimization Models.

The remainder of this paper is structured as follows. Section 2 presents background information on the PLO and the proposed PLSCO. Section 3 describes the novel ELM-PLSCO model, while Section 4 presents the experiments conducted and discusses their results. Section 5 provides a conclusion and outlines potential directions for future research.

2. Methodology

2.1. Polar Light Optimizer (PLO)

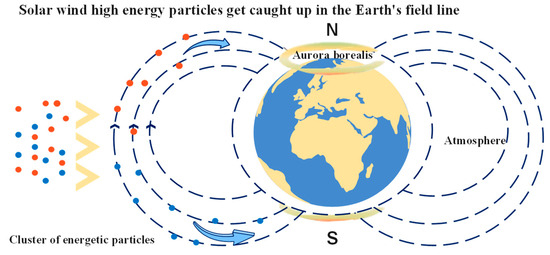

The PLO draws inspiration from the aurora borealis, where charged particles (solar wind) interact with the Earth’s magnetic field, creating luminous patterns in the sky as shown in Figure 2.

Figure 2.

Solar wind energetic particles enter the Earth’s magnetic field lines [38].

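The algorithm incorporates three primary mechanisms: gyration motion, the auroral oval walk, and particle collision, which are designed to simulate global exploration and local exploitation in the optimization process [38]. The algorithm starts with a randomly generated population of candidate solutions, represented by the population matrix $X$ in Equation (1).

$$
X = \begin{bmatrix}
x_{1,1} & x_{1,2} & \cdots & x_{1,D} \\
x_{2,1} & x_{2,2} & \cdots & x_{2,D} \\
\vdots & \vdots & \ddots & \vdots \\
x_{N,1} & x_{N,2} & \cdots & x_{N,D}
\end{bmatrix}, \qquad x_{i,j} = LB_j + R\,\left(UB_j - LB_j\right) \tag{1}
$$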

where $N$ is the number of particles, $D$ is the dimension of the solution space, $UB$ and $LB$ denote the upper and lower boundaries of the search space, respectively, and $R$ denotes a random value in $[0, 1]$. To search for optimal solutions, PLO imitates gyration motion, which simulates the spiraling motion of charged particles under the influence of the Earth’s magnetic field and is characterized by the Lorentz force in Equation (2).

$$
\mathbf{F} = q\,\mathbf{v} \times \mathbf{B} \tag{2}
$$

where $q$ is the particle’s charge, $\mathbf{v}$ is its velocity, and $\mathbf{B}$ is the magnetic field vector. The motion is further modeled using Newton’s second law in Equation (3).

$$
\mathbf{F} = m\,\frac{d\mathbf{v}}{dt} \tag{3}
$$

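Here, $m$ represents the mass of the charged particle. Combining Equations (2) and (3), and treating the velocity and the magnetic field as scalar magnitudes, yields the first-order ordinary differential equation in Equation (4).

$$
m\,\frac{dv}{dt} = q\,v\,B \quad\Longrightarrow\quad \frac{dv}{dt} = \frac{qB}{m}\,v \tag{4}
$$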

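Integrating Equation (4) over time from $0$ to $t$, and over velocity from $v_0$ to $v$, yields Equation (5).

$$
\int_{v_0}^{v} \frac{dv'}{v'} = \int_{0}^{t} \frac{qB}{m}\,dt' \tag{5}
$$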

Solving Equation (5) yields Equation (6).

$$
\ln\frac{v}{v_0} = \frac{qB}{m}\,t \quad\Longrightarrow\quad v(t) = v_0\,e^{qBt/m} \tag{6}
$$

By accounting for atmospheric damping, the equation is rewritten as expressed in Equation (7).

$$
m\,\frac{dv}{dt} = q\,v\,B - \alpha\,v \tag{7}
$$

where $\alpha$ is the damping factor. This modification yields a first-order linear differential equation with constant coefficients, which can be written in the standard form of Equation (8).

$$
\frac{dv}{dt} - \frac{qB - \alpha}{m}\,v = 0 \tag{8}
$$

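Seeking a solution of the form $v(t) = C\,e^{kt}$, where $C$ and $k$ are constants, substitution yields Equation (9).

$$
C k\,e^{kt} - \frac{qB - \alpha}{m}\,C\,e^{kt} = 0 \quad\Longrightarrow\quad k = \frac{qB - \alpha}{m} \tag{9}
$$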

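Substituting this value of $k$ into the assumed solution gives the velocity in Equation (10).

$$
v(t) = C\,e^{(qB - \alpha)\,t/m} \tag{10}
$$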

where $C$ is the integration constant. The parameters $C$, $q$, and $B$ are set to 1, while $m$ is assigned a value of 100. The damping factor $\alpha$ is a random variable drawn from a fixed range. The time-dependent velocity of Equation (10) enters the position update of Equation (14) as the gyration term.

The auroral oval walk is the PLO mechanism that enhances global exploration of the solution space. Auroras typically form along an elliptical region known as the auroral oval, whose size and boundaries vary with geomagnetic activity and the interplanetary magnetic field. The dynamic fluctuations of the auroral oval introduce irregular, unpredictable movement, which benefits global search. To model this behavior, Lévy flight (LF) is employed because of its heavy-tailed, non-Gaussian random-walk characteristics. The LF step distribution is defined in Equation (11).

$$
\mathrm{Levy}(d) \sim |d|^{-1-\beta}, \qquad 0 < \beta \le 2 \tag{11}
$$

where $\beta$ is the stability index and $d$ is the step size. The auroral oval walk $A_o$ is described in Equation (12).

Here, $\mathrm{Levy}(d)$ models the dynamic expansion and contraction of the auroral oval, and $X_{avg}$ is the population’s center of mass, expressed in Equation (13).

$$
X_{avg} = \frac{1}{N}\sum_{i=1}^{N} X_i \tag{13}
$$

where $X_i$ is the current position of the $i$-th particle. PLO combines gyration motion for local exploitation with the auroral oval walk for global exploration: gyration motion simulates particles spiraling along the Earth’s magnetic field lines to make fine local adjustments, while the auroral oval walk takes larger exploratory steps to discover valuable regions of the search space. The combined position update rule is expressed in Equation (14).

$$
X_i^{new} = X_i + r_2 \left( W_1\, v(t) + W_2\, A_o \right) \tag{14}
$$

where $r_2$ is a random factor accounting for environmental randomness, and $W_1$ and $W_2$ are adaptive weights balancing the gyration motion $v(t)$ and the auroral oval walk $A_o$. $W_1$ and $W_2$ are given in Equations (15) and (16).

As iterations progress, $W_1$ increases, emphasizing local exploitation, while $W_2$ decreases, so that global exploration is prioritized early on. This dynamic balance allows PLO to explore and exploit the solution space efficiently and reach optimal or near-optimal solutions. However, robust global exploration and local exploitation alone are insufficient for a well-rounded optimization algorithm; the ability to escape local optima is equally critical. To address this, PLO incorporates a particle collision strategy inspired by the dynamic interactions of charged particles in the solar wind as they travel toward Earth. When entering the Earth’s atmosphere and magnetic field, high-energy particles such as electrons and protons collide frequently, altering their velocity and trajectory. These collisions transfer energy, trigger excitation and ionization of other particles, and contribute to the complex, evolving shapes of auroras. In PLO, particle collisions are modeled to induce chaotic movement, enabling particles to escape local optima. The position of a particle after a collision is calculated in Equation (17).

Here, $X_a$ represents a randomly selected particle. In Equation (17), $r_3$ is a uniformly distributed random variable in $[0, 1]$ that controls the phase shift of the sine function and thus the displacement magnitude after a collision, and $r_4$ is a uniformly distributed random variable in $[0, 1]$ that determines, by comparison with the collision probability $K$, whether a collision occurs. $K$, given in Equation (18), increases monotonically with the iteration count, raising the probability of collision-induced perturbations in later search stages and enhancing local search diversity. Finally, $r_5$ is an independent random variable that adds stochastic variability to collision activation.

As the algorithm progresses, collisions occur more frequently, enhancing the ability of particles to explore new regions of the solution space. This strategy plays a crucial role in maintaining diversity and preventing premature convergence.
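The gyration, Lévy-flight, and combined-update steps of this section can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the reference PLO implementation: Lévy steps are sampled with Mantegna’s algorithm (a common sampler that the text does not specify), $r_2$ is drawn per dimension, the weights $W_1$ and $W_2$ of Equations (15) and (16) are passed in by the caller, and the usage lines substitute a simplified auroral oval walk, $\mathrm{Levy}(d)\,(X_{avg} - X_i)$, built only from the terms named in the text; Equation (12) may contain additional terms. All function names and numeric values in the usage lines are hypothetical.

```python
import math
import numpy as np

def gyration_velocity(t, alpha, C=1.0, q=1.0, B=1.0, m=100.0):
    """Damped gyration velocity, Eq. (10): v(t) = C * exp((q*B - alpha) * t / m)."""
    return C * math.exp((q * B - alpha) * t / m)

def levy_step(dim, beta=1.5, rng=None):
    """Levy-flight step via Mantegna's algorithm (assumed sampler; needs 0 < beta < 2)."""
    rng = np.random.default_rng() if rng is None else rng
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, dim)   # numerator draws, scaled by sigma_u
    v = rng.normal(0.0, 1.0, dim)       # standard normal denominator draws
    return u / np.abs(v) ** (1 / beta)

def center_of_mass(X):
    """Eq. (13): mean position of the population (one particle per row)."""
    return X.mean(axis=0)

def plo_position_update(x_i, v_t, A_o, w1, w2, rng):
    """Eq. (14): x_i + r2 * (W1 * v(t) + W2 * A_o); r2 drawn per dimension (assumption)."""
    r2 = rng.random(x_i.shape)
    return x_i + r2 * (w1 * v_t + w2 * A_o)

# Usage with illustrative values (alpha, w1 and w2 are placeholders, not the paper's settings).
rng = np.random.default_rng(0)
X = rng.uniform(-10.0, 10.0, size=(6, 3))
v_t = gyration_velocity(t=50, alpha=1.2)
A_o = levy_step(3, rng=rng) * (center_of_mass(X) - X[0])   # simplified auroral oval walk
x_new = plo_position_update(X[0], v_t, A_o, w1=0.3, w2=0.7, rng=rng)
```

Because $\alpha$ exceeds $qB = 1$ in this example, the gyration term decays over time, which matches the damped solution of Equation (10).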

2.2. Proposed PLSCO

2.2.1. Salp Swarm Algorithm (SSA)

A notable limitation of PLO is its relatively weak exploration. Although PLO relies on the auroral oval walk for exploration, and this walk introduces randomness through LF, it concentrates on specific candidate solutions within a limited region, reducing the likelihood of escaping local optima. Its ability to maintain a diverse population and explore uncharted regions of the solution space is therefore relatively constrained. This limitation becomes especially pronounced in complex, high-dimensional problems, where premature convergence to local optima degrades overall performance. Integrating SSA into PLSCO addresses this shortcoming directly by adding a dynamic mechanism that strengthens global exploration. SSA is inspired by the collective swarming behavior of salps, marine organisms that navigate and forage in a structured formation called a salp chain. In SSA, the population of salps is divided into two roles: leaders and followers [39]. The leader, positioned at the head of the chain, sets the primary movement direction relative to the food source, which represents the best solution found so far. The followers occupy subsequent positions in the chain and adapt their movements based on the leader’s trajectory. The leader’s position is updated using Equation (19) [40].

$$
x_j^1 = \begin{cases}
F_j + c_1\left( (ub_j - lb_j)\,c_2 + lb_j \right), & c_3 \ge 0.5 \\
F_j - c_1\left( (ub_j - lb_j)\,c_2 + lb_j \right), & c_3 < 0.5
\end{cases} \tag{19}
$$

where $x_j^1$ is the updated position of the leader salp in the $j$-th dimension, $F_j$ is the position of the best solution (food source) in the $j$-th dimension, and $ub_j$ and $lb_j$ are the upper and lower bounds of the search space in the $j$-th dimension. The coefficient $c_1$ controls the step size, $c_2$ adds randomness within the bounds, and $c_3$ selects the sign of the offset; $c_2$ and $c_3$ are random numbers drawn uniformly from $[0, 1]$. When $c_3 \ge 0.5$, the offset $c_1\left((ub_j - lb_j)c_2 + lb_j\right)$ is added to $F_j$; when $c_3 < 0.5$, it is subtracted. For example, with $c_3 = 0.7$ the leader is placed at $F_j$ plus the offset, and with $c_3 = 0.4$ at $F_j$ minus the offset, that is, on the opposite side of the best-known solution. This bidirectional mechanism scatters the leader around the food source rather than along a single direction, which balances the search. The critical parameter $c_1$ governs the balance between exploration and exploitation and is defined in Equation (20).

$$
c_1 = 2\,e^{-\left(4t/T\right)^2} \tag{20}
$$

where $T$ is the total number of iterations and $t$ is the current iteration. Each follower’s position is updated as the average of its current position and the position of the preceding salp in the chain, as given in Equation (21).

$$
x_j^i = \frac{1}{2}\left( x_j^i + x_j^{i-1} \right), \qquad i \ge 2 \tag{21}
$$

SSA addresses this exploration weakness by introducing a stochastic, hierarchical leader–follower dynamic that promotes broader, adaptive exploration. Its leader update in Equation (19) counteracts the comparatively localized behavior of PLO. Furthermore, the adaptive coefficient $c_1$ decreases gradually over the iterations, so the algorithm focuses on exploration in the early stages and transitions smoothly to exploitation as the search progresses.
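A minimal NumPy sketch of one SSA move, Equations (19) to (21), is given below. It assumes that the first row of the chain acts as the leader, that each follower averages with its already-updated predecessor (a common implementation choice), and that positions are clipped to the bounds afterwards; which PLSCO particles form the chain is not modeled here. The names `ssa_c1` and `ssa_step` are hypothetical.

```python
import math
import numpy as np

def ssa_c1(t, T):
    """Eq. (20): coefficient that shrinks from 2 toward 0 over the run."""
    return 2.0 * math.exp(-(4.0 * t / T) ** 2)

def ssa_step(S, food, lb, ub, t, T, rng):
    """One SSA move for a chain of salps S (rows); row 0 is treated as the leader."""
    S = S.copy()
    dim = S.shape[1]
    c1 = ssa_c1(t, T)
    c2 = rng.random(dim)
    c3 = rng.random(dim)
    offset = c1 * ((ub - lb) * c2 + lb)
    S[0] = np.where(c3 >= 0.5, food + offset, food - offset)   # Eq. (19)
    for i in range(1, S.shape[0]):
        S[i] = 0.5 * (S[i] + S[i - 1])                          # Eq. (21)
    return np.clip(S, lb, ub)                                   # keep salps inside bounds

rng = np.random.default_rng(0)
lb, ub = np.full(4, -5.0), np.full(4, 5.0)
S = rng.uniform(lb, ub, size=(6, 4))
S_new = ssa_step(S, food=np.zeros(4), lb=lb, ub=ub, t=0, T=100, rng=rng)
```

Updating followers in place means each one averages with a predecessor that has already moved this iteration, so the leader’s displacement propagates down the chain with geometrically shrinking influence.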

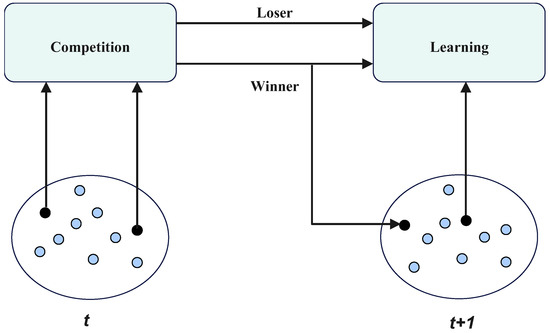

2.2.2. Competitive Swarm Optimizer (CSO)

Integrating CSO into PLSCO restructures the algorithm’s exploitation phase to address the inherent limitations of PLO. CSO introduces a structured, adaptive approach to local refinement that keeps improving high-quality solutions, as shown in Figure 3: the best solutions are refined continuously, while the worst-performing particles are used to explore other candidate regions. This refinement complements the global exploration capabilities introduced by SSA, creating a dynamic balance between exploration and exploitation. CSO is built on a competitive mechanism. The population of search agents (of even size) is randomly divided into pairs, and in each pair one agent is designated the winner and the other the loser, based on their fitness values. In standard CSO, winners are carried over to the next generation without modification, while losers update their velocity and position by learning from the winners, so half of the population is updated in each iteration. In PLSCO, this pairing is retained to decide which update rule each agent receives. The velocity and position of the loser in the $k$-th pair at iteration $t$ are updated using Equations (22) and (23) [41].

$$
v_{l,d}^{k}(t+1) = R_1\, v_{l,d}^{k}(t) + R_2 \left( x_{w,d}^{k}(t) - x_{l,d}^{k}(t) \right) + \varphi\, R_3 \left( \bar{x}_d(t) - x_{l,d}^{k}(t) \right) \tag{22}
$$

$$
x_{l,d}^{k}(t+1) = x_{l,d}^{k}(t) + v_{l,d}^{k}(t+1) \tag{23}
$$

Figure 3.

Competitive Swarm Optimizer (CSO) process.

Here, $x_{w,d}^{k}$ is the position of the winner in the $d$-th dimension; $x_{l,d}^{k}$ and $v_{l,d}^{k}$ are the position and velocity of the loser in the $d$-th dimension, respectively; and $\bar{x}_d$ is the average position of the entire population in the $d$-th dimension. $R_1$, $R_2$, and $R_3$ are random vectors in $[0, 1]^D$ that shape the learning behavior, and $\varphi$ is a predefined parameter regulating the influence of the mean position on the velocity. For winner and loser selection, a random permutation of the population indices, denoted $P$, defines the pairing of agents. The loop index $k = 1, \dots, N/2$ identifies the pair being compared: $P(2k-1)$ is the first agent in the pair and $P(2k)$ is the second. For a minimization problem with objective $f$, the winner and loser are determined using Equations (24) and (25) [42].

$$
w_k = \begin{cases}
P(2k-1), & f\left(X_{P(2k-1)}\right) \le f\left(X_{P(2k)}\right) \\
P(2k), & \text{otherwise}
\end{cases} \tag{24}
$$

$$
l_k = \begin{cases}
P(2k), & f\left(X_{P(2k-1)}\right) \le f\left(X_{P(2k)}\right) \\
P(2k-1), & \text{otherwise}
\end{cases} \tag{25}
$$
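The random pairing of Equations (24) and (25) and the standard CSO loser update of Equations (22) and (23) can be sketched as follows, assuming minimization and an even population size. In PLSCO the losers are moved by SSA instead, so only the hypothetical `cso_pairs` helper corresponds to the PLSCO role split; the equally hypothetical `cso_loser_update` illustrates plain CSO.

```python
import numpy as np

def cso_pairs(fitness, rng):
    """Random pairing and winner/loser split, Eqs. (24)-(25), for minimization."""
    n = len(fitness)
    if n % 2:
        raise ValueError("CSO pairing assumes an even population size")
    P = rng.permutation(n)
    first, second = P[0::2], P[1::2]          # P(2k-1) and P(2k) in 1-based notation
    first_wins = fitness[first] <= fitness[second]
    winners = np.where(first_wins, first, second)
    losers = np.where(first_wins, second, first)
    return winners, losers

def cso_loser_update(X, V, winners, losers, phi, rng):
    """Eqs. (22)-(23): each loser learns from its winner and the population mean."""
    X, V = X.copy(), V.copy()
    x_mean = X.mean(axis=0)
    R1, R2, R3 = rng.random((3, len(losers), X.shape[1]))
    V[losers] = (R1 * V[losers]
                 + R2 * (X[winners] - X[losers])
                 + phi * R3 * (x_mean - X[losers]))
    X[losers] = X[losers] + V[losers]
    return X, V

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))
V = np.zeros_like(X)
fit = np.sum(X ** 2, axis=1)                   # sphere objective as a stand-in
winners, losers = cso_pairs(fit, rng)
X2, V2 = cso_loser_update(X, V, winners, losers, phi=0.1, rng=rng)
```

Winners are left untouched by `cso_loser_update`, mirroring standard CSO, where only half of the swarm moves per generation.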

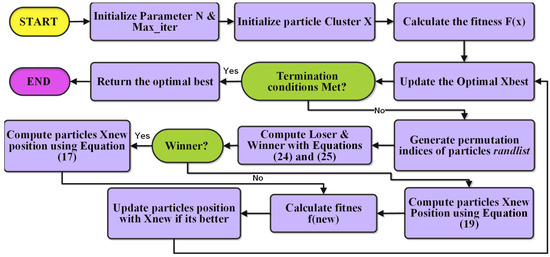

The integration of PLO, CSO, and SSA in PLSCO is a deliberate design choice based on both theoretical complementarity and empirical success reported in the recent metaheuristic literature. PLO contributes strong local exploitation capabilities through its gyration motion and auroral oval walk, supported by adaptive weighting to fine-tune solutions within promising regions. SSA introduces leader–follower dynamics that enable broad, adaptive exploration while maintaining population diversity, a feature particularly advantageous in multimodal landscapes. CSO adds a structured competition mechanism, dividing the population into winners and losers to create an adaptive feedback loop that reinforces exploitation for top-performing solutions while stimulating exploration among underperformers. The algorithm begins by initializing a population of high-energy particles, each representing a candidate solution within the search space. The fitness of these particles is evaluated with the problem’s objective function, and the global best solution, $X_{best}$, is identified. In each iteration, the population is randomly divided into $N/2$ pairs, where $N$ is the population size. Fitness comparisons within each pair classify agents as winners or losers according to Equations (24) and (25). Winners, which have better fitness, update their positions using the PLO mechanism described in Equation (17). PLO emphasizes exploitation by leveraging gyration motion, auroral oval walks, and particle collisions to refine solutions in promising regions. Losers, which have inferior fitness, update their positions using SSA as described in Equation (19), which focuses on exploration. The SSA mechanism employs leader–follower dynamics to encourage exploration of unexplored regions, preventing stagnation in local optima and maintaining population diversity.
After the winners’ and losers’ positions are updated, each particle’s fitness is re-evaluated, and a new position is retained only if it improves fitness. The global best solution, $X_{best}$, is updated whenever a better solution is found. The process iterates until the maximum number of iterations is reached or a predefined convergence criterion is satisfied, at which point the best solution, $X_{best}$, is returned, as shown in Algorithm 1. This competitive division incorporates an adaptive learning mechanism in which winners continue exploiting the local search space while losers enhance exploration by following the SSA leader–follower dynamics around the best solution found so far. By dynamically pairing agents and employing distinct update strategies, PLSCO reduces the risk of premature convergence and adaptively balances global and local search efforts. This design addresses the limitations of standalone PLO and SSA, making PLSCO a robust and efficient approach for complex, high-dimensional, and multimodal optimization problems. The flow chart of PLSCO is illustrated in Figure 4.

| Algorithm 1: PLSCO Pseudo Code |
|---|
| Initialize parameters: t = 0, Max_t (maximum number of iterations) |
| Initialize the high-energy particle population X |
| Evaluate the fitness value of each particle in X |
| Set the current best solution X_best |
| While t < Max_t: |
| Identify winners and losers in the population using Equations (24) and (25) |
| For each particle i: |
| If particle i is a winner: update its position using the PLO update rule (Equation (17)) |
| Else: update its position using the Salp Swarm Algorithm rule (Equation (19)) |
| Calculate the fitness f(X_i_new) |
| If f(X_i_new) is better than f(X_i): replace the current position with the updated position, X_i = X_i_new |
| Else: retain the current position of the particle |
| End For |
| Update X_best based on the best fitness value in the population |
| t = t + 1 |
| End While |
| Return X_best |

Figure 4.

PLSCO flow chart.
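The control flow of Algorithm 1 can be sketched as below. This is a hypothetical skeleton, not the authors’ implementation: it assumes minimization, clips positions to the bounds, and takes the PLO (winner) and SSA (loser) operators as callables. The `jitter` and `toward_best` functions in the usage lines are placeholder operators chosen only to make the sketch runnable; they are not the PLO and SSA rules.

```python
import numpy as np

def plsco_skeleton(f, lb, ub, n, dim, max_t, winner_update, loser_update, seed=0):
    """Algorithm 1 control flow (minimization). Operators have the signature
    update(X_subset, X_best, t, max_t, rng) -> new X_subset."""
    if n % 2:
        raise ValueError("pairing assumes an even population size")
    rng = np.random.default_rng(seed)
    X = lb + rng.random((n, dim)) * (ub - lb)               # random initial population
    fit = np.array([f(x) for x in X])
    best, best_fit = X[fit.argmin()].copy(), fit.min()
    history = [best_fit]
    for t in range(max_t):
        P = rng.permutation(n)                              # random pairing
        a, b = P[0::2], P[1::2]
        a_wins = fit[a] <= fit[b]                           # Eqs. (24)-(25)
        winners, losers = np.where(a_wins, a, b), np.where(a_wins, b, a)
        X_new = X.copy()
        X_new[winners] = winner_update(X[winners], best, t, max_t, rng)  # PLO role
        X_new[losers] = loser_update(X[losers], best, t, max_t, rng)     # SSA role
        X_new = np.clip(X_new, lb, ub)
        fit_new = np.array([f(x) for x in X_new])
        improved = fit_new < fit                            # greedy selection
        X[improved] = X_new[improved]
        fit[improved] = fit_new[improved]
        if fit.min() < best_fit:
            best, best_fit = X[fit.argmin()].copy(), fit.min()
        history.append(best_fit)
    return best, best_fit, history

def jitter(Xs, best, t, max_t, rng):        # hypothetical stand-in for the PLO update
    return Xs + 0.1 * (1 - t / max_t) * rng.normal(size=Xs.shape)

def toward_best(Xs, best, t, max_t, rng):   # hypothetical stand-in for the SSA update
    return Xs + rng.random(Xs.shape) * (best - Xs)

def sphere(x):
    return float(np.sum(x ** 2))

best, best_fit, hist = plsco_skeleton(sphere, -5.0, 5.0, 20, 3, 50, jitter, toward_best)
```

Passing the two operators in as functions keeps the competitive split and the greedy selection independent of operator details, so full PLO and SSA update rules can be substituted without changing the loop.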

2.3. Computational Complexity of PLSCO

This section analyzes the computational complexity of PLSCO. During initialization, generating a population of $N$ particles with $D$ dimensions costs $O(N \cdot D)$, and computing the fitness values of all particles requires $O(N \cdot F)$, where $F$ represents the cost of one fitness function evaluation. Within each iteration, classifying the particles into winners and losers through pairwise fitness comparisons costs $O(N)$. The position updates for the winners and losers, governed by the PLO and SSA mechanisms, each act on $N/2$ particles and require $O(N \cdot D / 2)$, giving $O(N \cdot D)$ overall. Re-evaluating the fitness of all particles after the updates contributes $O(N \cdot F)$, and updating the global best solution adds $O(N)$. The per-iteration complexity is therefore $O(N \cdot (D + F))$. Over $T$ iterations, and including the initialization phase, the total complexity is $O(N \cdot (D + F) + T \cdot N \cdot (D + F)) = O(T \cdot N \cdot (D + F))$. Since the fitness cost $F$ typically dominates $D$, the algorithm’s complexity is effectively $O(T \cdot N \cdot F)$, with the fitness evaluation being the most computationally intensive component.
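Because the fitness evaluation dominates, the number of objective evaluations is a practical proxy for PLSCO’s running time: $N$ evaluations at initialization plus $N$ in every iteration. The hypothetical helper below makes that arithmetic explicit for the population size and iteration budget used in Section 4.1.

```python
def plsco_fitness_evaluations(n_particles, max_iter):
    """Objective evaluations: N at initialization plus N after every iteration."""
    return n_particles + max_iter * n_particles

# Settings from Section 4.1: N = 30 particles and T = 2000 iterations per run.
evals_per_run = plsco_fitness_evaluations(30, 2000)
print(evals_per_run)  # 60030
```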

3. Machine Learning Hyper-Parameter Optimization with PLSCO

3.1. Extreme Learning Machine (ELM)

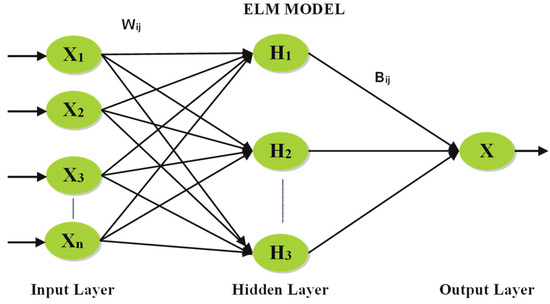

The ELM addresses two limitations of traditional feedforward neural networks, extensive parameter tuning and long training times, by using a single-hidden-layer architecture with gradient-free learning. ELM is widely applied because of its rapid learning and its ability to model both linear and nonlinear systems [43]. For $n$ pairs of observations $(\mathbf{x}_j, y_j)$, where $\mathbf{x}_j$ is the input and $y_j$ is the target, ELM randomly initializes the input weights $\mathbf{w}_i$ and hidden biases $b_i$. The output weights $\beta$ are then computed by a direct matrix solution. The output of the ELM is expressed in Equation (26).

$$
o_j = \sum_{i=1}^{L} \beta_i\, g\!\left( \mathbf{w}_i \cdot \mathbf{x}_j + b_i \right), \qquad j = 1, \dots, n \tag{26}
$$

Here, $L$ represents the number of hidden neurons, $\beta_i$ are the output weights, $g(\cdot)$ is the activation function, $\mathbf{w}_i$ are the input weights, and $b_i$ is the bias of the $i$-th hidden neuron. Equation (26) can be written in matrix form as Equation (27).

$$
H\beta = Y \tag{27}
$$

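where $H$ is the hidden-layer output matrix, $\beta = [\beta_1, \dots, \beta_L]^{\top}$ is the vector of output weights, and $Y = [y_1, \dots, y_n]^{\top}$ is the training target. The hidden-layer matrix $H$ is defined in Equation (28).

$$
H = \begin{bmatrix}
g(\mathbf{w}_1 \cdot \mathbf{x}_1 + b_1) & \cdots & g(\mathbf{w}_L \cdot \mathbf{x}_1 + b_L) \\
\vdots & \ddots & \vdots \\
g(\mathbf{w}_1 \cdot \mathbf{x}_n + b_1) & \cdots & g(\mathbf{w}_L \cdot \mathbf{x}_n + b_L)
\end{bmatrix}_{n \times L} \tag{28}
$$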

Each element of $H$ is the output of one hidden neuron for one input sample: row $j$ holds the responses of all $L$ hidden neurons to $\mathbf{x}_j$.

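The parameters $\mathbf{w}_i$, $b_i$, and $\beta$ are optimized by minimizing the deviation between the predicted output and the training target, as expressed in Equation (29).

$$
\left\| H(\hat{\mathbf{w}}, \hat{\mathbf{b}})\,\hat{\beta} - Y \right\| = \min_{\mathbf{w},\, \mathbf{b},\, \beta} \left\| H(\mathbf{w}, \mathbf{b})\,\beta - Y \right\| \tag{29}
$$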

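The cost function for this optimization is given in Equation (30).

$$
E = \sum_{j=1}^{n} \left( \sum_{i=1}^{L} \beta_i\, g\!\left( \mathbf{w}_i \cdot \mathbf{x}_j + b_i \right) - y_j \right)^2 \tag{30}
$$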

With the input weights and biases fixed, the minimizing output weights $\hat{\beta}$ are obtained using the Moore–Penrose generalized inverse of $H$, as in Equation (31).

$$
\hat{\beta} = H^{\dagger} Y \tag{31}
$$

Here, $H^{\dagger}$ represents the Moore–Penrose pseudo-inverse of $H$. Training an ELM therefore amounts to solving $H\beta = Y$ in the least-squares sense to determine the output weights $\hat{\beta}$, which minimizes the error between the model output and the target $Y$. This direct, matrix-based approach makes ELM computationally efficient and suitable for modeling complex systems. ELM is illustrated in Figure 5.

Figure 5.

Extreme Learning Machine.
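The closed-form training of Equations (26) to (31) fits in a few lines of NumPy. This is a minimal sketch: the tanh activation and the uniform $[-1, 1]$ initialization of the input weights and biases are assumptions, since the text does not fix them, and the names `elm_train` and `elm_predict` are hypothetical. `np.linalg.pinv` computes the Moore–Penrose pseudo-inverse through a singular value decomposition.

```python
import numpy as np

def elm_train(X, Y, n_hidden, rng, activation=np.tanh):
    """Basic ELM: random input weights and biases, output weights via Eq. (31)."""
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # input weights w_i (columns)
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # hidden biases b_i
    H = activation(X @ W + b)                                 # Eq. (28): n x L
    beta = np.linalg.pinv(H) @ Y                              # Eq. (31)
    return W, b, beta

def elm_predict(X, W, b, beta, activation=np.tanh):
    """Eqs. (26)-(27): network output H @ beta."""
    return activation(X @ W + b) @ beta

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
Y = X[:, 0] - 2.0 * X[:, 1]                   # toy regression target
W, b, beta = elm_train(X, Y, n_hidden=50, rng=rng)
pred = elm_predict(X, W, b, beta)
```

Only $\beta$ is learned here; $W$ and $b$ stay random, which is exactly the part that ELM-PLSCO hands over to the optimizer.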

3.2. ELM-PLSCO Model

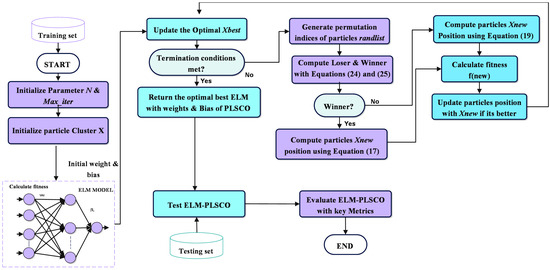

PLSCO is employed to optimize the weights and biases of ELM, enhancing its predictive accuracy and robustness. In this model, PLSCO identifies the ELM parameters by iteratively refining the input weight matrix $W$ and the hidden bias vector $b$ to minimize the error between the predicted output and the target values $Y$. Integrating PLSCO provides an adaptive, balanced search that leverages both exploration and exploitation. The process begins by initializing a population of high-energy particles, where each particle represents a candidate solution comprising randomly generated $W$ and $b$ values for the ELM. The fitness of each particle is the classification accuracy of the ELM built from its $W$ and $b$. The global best solution, $X_{best}$, is the particle with the highest accuracy score.
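One way to turn a PLSCO particle into an ELM, as described above, is to flatten $W$ and $b$ into a single vector of length $D = (\text{number of features} \times L) + L$ and score it on held-out data. The sketch below assumes a validation split, one-hot class targets, a tanh activation, and fitness $= 1 - \text{accuracy}$ so that a minimizing optimizer can be used; `decode_particle` and `elm_plsco_fitness` are hypothetical names, and the toy data are synthetic.

```python
import numpy as np

def decode_particle(particle, n_features, n_hidden):
    """Split a flat particle into input weights W (n_features x n_hidden) and biases b."""
    split = n_features * n_hidden
    W = particle[:split].reshape(n_features, n_hidden)
    b = particle[split:split + n_hidden]
    return W, b

def elm_plsco_fitness(particle, X_tr, y_tr, X_val, y_val, n_hidden, n_classes):
    """Fitness = 1 - validation accuracy, so lower is better (assumed convention)."""
    W, b = decode_particle(particle, X_tr.shape[1], n_hidden)
    H_tr = np.tanh(X_tr @ W + b)                 # Eq. (28) with the particle's W and b
    Y_tr = np.eye(n_classes)[y_tr]               # one-hot training targets
    beta = np.linalg.pinv(H_tr) @ Y_tr           # Eq. (31)
    scores = np.tanh(X_val @ W + b) @ beta
    accuracy = np.mean(np.argmax(scores, axis=1) == y_val)
    return 1.0 - accuracy

rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 4, 10, 2
dim = n_features * n_hidden + n_hidden        # particle length D
X = rng.normal(size=(120, n_features))
y = (X[:, 0] + X[:, 1] > 0).astype(int)       # synthetic binary labels
particle = rng.uniform(-1.0, 1.0, size=dim)
fitness = elm_plsco_fitness(particle, X[:80], y[:80], X[80:], y[80:], n_hidden, n_classes)
```

Scoring on a held-out split rather than on the training data keeps the optimizer from rewarding weight settings that merely memorize the training set.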

In each iteration, the PLSCO framework divides the population into winners and losers through competitive learning. Winners, which are the candidate solutions with better fitness, are updated using the PLO mechanism, which emphasizes exploitation: PLO’s gyration motion and auroral oval walk refine the $\mathbf{W}$ and $\mathbf{b}$ values within high-potential regions of the search space, providing the precise adjustments needed for convergence. Losers are updated using the SSA, which focuses on exploration: it directs particles toward unexplored areas, preventing premature convergence and maintaining population diversity. After the winners and losers are updated, the fitness of each particle is re-evaluated from the resulting ELM predictions. Updates are greedy: a particle keeps its new position only if its fitness improves; otherwise, its previous position is retained. The global best solution is updated whenever a better fitness is found, and the process repeats until the maximum number of iterations is reached. By integrating PLSCO into the ELM framework, the optimization benefits from an adaptive balance between local exploitation and global exploration, allowing efficient and accurate tuning of $\mathbf{W}$ and $\mathbf{b}$. This combination is intended to improve the ELM’s ability to model complex systems and to reduce the risk of overfitting, and it achieves higher prediction accuracy than the compared optimization methods (Section 4.2.3). The flowchart of ELM-PLSCO is illustrated in Figure 6.
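The cooperative winner/loser loop described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the published PLSCO: winners and losers are formed by random pairing as in CSO; losers follow the standard SSA leader/follower update with $c_1 = 2e^{-(4t/T)^2}$, treating the global best as the food source; and, because PLO’s gyration-motion and auroral-oval-walk equations are not reproduced in this section, the winner update is a hypothetical stand-in (a random pull toward the global best plus shrinking Gaussian noise). Greedy selection and the global-best update follow the text.

```python
import numpy as np


def plsco_sketch(fitness, dim, lb, ub, pop_size=30, max_iter=100, seed=0):
    """Maximize `fitness` with a simplified winner/loser cooperative loop."""
    rng = np.random.default_rng(seed)
    pop = rng.uniform(lb, ub, (pop_size, dim))
    fit = np.array([fitness(p) for p in pop])
    best = int(np.argmax(fit))
    gbest, gbest_fit = pop[best].copy(), fit[best]

    for t in range(1, max_iter + 1):
        c1 = 2.0 * np.exp(-(4.0 * t / max_iter) ** 2)   # SSA coefficient, decays over time
        idx = rng.permutation(pop_size)                  # CSO-style random pairing
        half = pop_size // 2
        a, b = idx[:half], idx[half:2 * half]
        a_wins = fit[a] >= fit[b]
        winners = np.where(a_wins, a, b)
        losers = np.where(a_wins, b, a)

        new_pop = pop.copy()
        # Winners: hypothetical exploitation step (stand-in for PLO), shrinking with c1.
        for i in winners:
            step = rng.normal(0.0, 0.1 * c1 + 1e-3, dim) * (ub - lb)
            new_pop[i] = pop[i] + rng.random() * (gbest - pop[i]) + step
        # Losers: SSA chain; the first loser leads around the food source (gbest).
        for k, i in enumerate(losers):
            if k == 0:
                c2, c3 = rng.random(dim), rng.random(dim)
                move = c1 * ((ub - lb) * c2 + lb)
                new_pop[i] = np.where(c3 >= 0.5, gbest + move, gbest - move)
            else:
                new_pop[i] = 0.5 * (pop[i] + new_pop[losers[k - 1]])
        new_pop = np.clip(new_pop, lb, ub)

        # Greedy selection: keep a new position only if it improves fitness.
        for i in range(pop_size):
            f = fitness(new_pop[i])
            if f > fit[i]:
                pop[i], fit[i] = new_pop[i], f
        best = int(np.argmax(fit))
        if fit[best] > gbest_fit:
            gbest, gbest_fit = pop[best].copy(), fit[best]
    return gbest, gbest_fit


# Usage: maximize the negative sphere function (optimum 0 at the origin).
def sphere(x):
    return -float(np.sum(x ** 2))


best_x, best_f = plsco_sketch(sphere, dim=10, lb=-10.0, ub=10.0)
```

In an ELM-PLSCO setting, `fitness` would be the particle-scoring function (ELM accuracy) with `lb=-10.0` and `ub=10.0`. With an odd population size, one particle is left unpaired in each iteration and simply keeps its position.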

Figure 6.

ELM-PLSCO flow chart.

4. Experiment and Discussion

4.1. Analysis of PLSCO Search Capability

In this section, the performance of the proposed optimization algorithm is evaluated and compared against existing and improved algorithms before it is applied to the predictive maintenance problem. The evaluation uses two established benchmark suites: CEC2015 and CEC2020. The CEC2015 suite comprises unimodal functions (F1–F2), multimodal functions (F3–F5), hybrid functions (F6–F8), and composite functions (F9–F15) [44]. The CEC2020 suite includes a unimodal function (F16), basic multimodal functions (F17–F19), hybrid functions (F20–F22), and composition functions (F23–F25) [45]. The parameter settings for each algorithm are detailed in Table 2. Other key experimental parameters are a maximum of 2000 iterations, 30 independent runs, a problem dimension of 30, and a population size of 30. The proposed algorithm is compared with several state-of-the-art optimization algorithms: Adaptive Chaotic Gaussian RIME optimizer (ACGRIME) [46], Aquila Optimizer (AO) [47], African Vulture Optimization Algorithm (AVOA) [48], Opposition Based Learning Path Finder Algorithm (OBLPFA) [49], PathFinder Algorithm (PFA) [50], Parrot Optimizer (PO) [51], Pelican Optimization Algorithm (POA) [52], Sine Cosine Algorithm (SCA) [53], and Polar Light Optimizer (PLO) [38]. Among these, ACGRIME and OBLPFA are recent improved optimizers included as representatives of hybrid and enhanced methods, while AO, AVOA, PFA, PO, POA, SCA, and PLO are original (non-hybridized) optimizers. PLSCO is distinct in its cooperative competition mechanism, where winners are refined using PLO’s exploitation dynamics and losers explore via SSA’s leader–follower strategy. This structure, combined with adaptive parameter adjustment, enables a dynamic balance between exploration and exploitation throughout the optimization process.

Table 2.

Parameter Settings.

4.1.1. Analysis of CEC 2015

In the experiments on the CEC2015 test functions, two metrics were evaluated: the average (AVG) and standard deviation (STD) of the results, as presented in Table 3. The unimodal functions were used specifically to assess the exploitation capabilities of the algorithms. For F1, PLSCO achieved the lowest mean value of all compared algorithms, whereas for F2, ACGRIME performed best; AO, PO, POA, and SCA performed poorly on these functions. For the multimodal functions (F3–F5), PLSCO achieved the best average values on F3 and F4, highlighting its accuracy on multimodal optimization problems; on F5, its results were similar to those of POA, which was the most accurate optimizer on that function. On the hybrid functions (F6–F8), PLSCO’s performance varied: it was less accurate than ACGRIME on F6, but it achieved better mean values and greater stability, reflected in lower standard deviations, on F7 and F8. These results indicate that PLSCO handles hybrid problems with diverse characteristics effectively. The stronger performance of ACGRIME on F2 and F6 may be attributed to its adaptive chaotic perturbations, which improve precision on specific unimodal landscapes and on hybrid functions with narrow optima. This is also consistent with the no free lunch theorem, which states that no single algorithm can be efficient for all optimization problems [54]. For the composite functions (F9–F15), PLSCO performed strongly, particularly on F11, F13, F14, and F15, where it achieved the solutions closest to the global optimum. These results support the algorithm’s ability to optimize complex, high-dimensional problems with composite structures.

Table 3.

Results of PLSCO and other optimizers on CEC2015 30 dimensions.

The experimental results with the CEC2015 test functions demonstrate that PLSCO consistently performs well across unimodal, multimodal, hybrid, and composite functions. This superior performance can be attributed to the integration of SSA operators, which enhance the algorithm’s exploration capabilities by encouraging global search and maintaining diversity within the population. Additionally, the competitive learning framework plays a crucial role in dynamically balancing exploration and exploitation by classifying particles into winners and losers. Winners refine their solutions through the PLO mechanism, which emphasizes precise local search, while losers adopt the SSA approach to explore unvisited regions, preventing premature convergence. The synergy between CSO’s competitive learning and SSA operators ensures that PLSCO effectively navigates complex search spaces and adapts to the characteristics of diverse optimization problems. These findings underscore PLSCO’s potential as a robust, adaptive, and versatile optimization algorithm for solving a wide range of challenging optimization tasks.

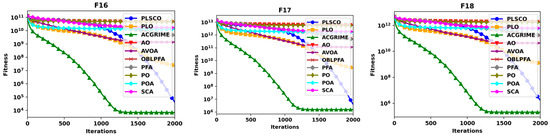

4.1.2. Analysis of CEC2020

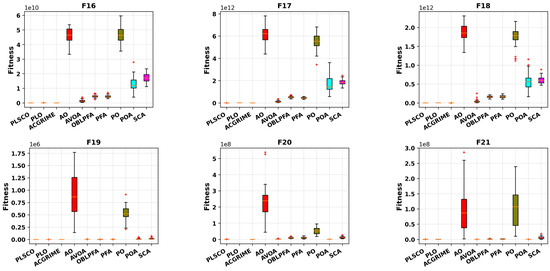

The experimental results for the CEC2020 benchmark functions, presented in Table 4, show that PLSCO performs competitively or better across the categories of optimization problems. For the unimodal function (F16), PLSCO achieved an average fitness value substantially better than that of the standard PLO, reflecting its enhanced exploitation capabilities, although the best performance on F16 was achieved by ACGRIME. On the basic multimodal functions (F17–F19), PLSCO showed strong exploration ability and resistance to local optima, particularly on F19, where it achieved the best average fitness, matching that of the improved algorithm ACGRIME. Although PLSCO performed slightly worse than ACGRIME on F17 and F18, it remained competitive in navigating multimodal landscapes.

Table 4.

Results of PLSCO and other Optimizers on CEC2020 30 dimensions.

The results for the hybrid functions (F20–F22) further underscore PLSCO’s adaptability and robustness. PLSCO achieved better average fitness than other algorithms, such as PO and PFA, across F20–F22, and its relatively low standard deviation reflects a high degree of stability, including relative to recently developed algorithms such as POA. For the composition functions (F23–F25), PLSCO maintained an effective balance between exploration and exploitation and achieved the best average fitness on these functions, outperforming both improved algorithms such as ACGRIME and OBLPFA and recently proposed methods such as AVOA and PO. These results highlight PLSCO’s capacity to explore complex, high-dimensional solution spaces effectively. Overall, Table 4 shows that PLSCO delivers strong optimization performance, robustness, and stability across most function categories, with ACGRIME remaining better on F16–F18. By integrating competitive learning and SSA operators, PLSCO achieves a dynamic balance between exploration and exploitation, which translates into improved convergence, stronger exploration, and better overall performance than the competing algorithms. These findings indicate that PLSCO is a versatile and reliable optimization algorithm for a wide range of challenging problems.

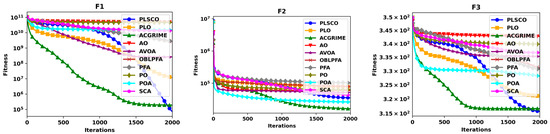

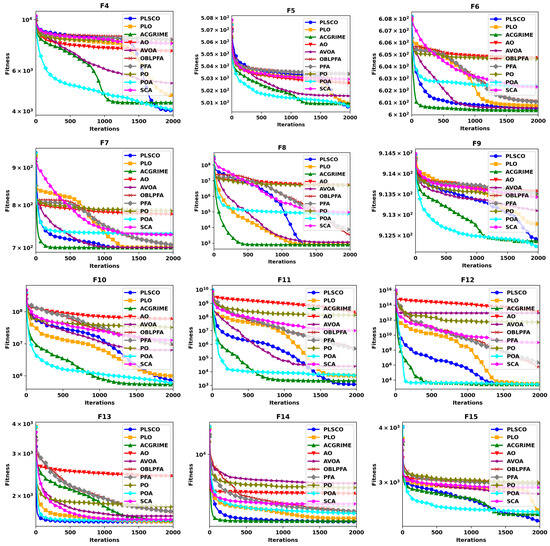

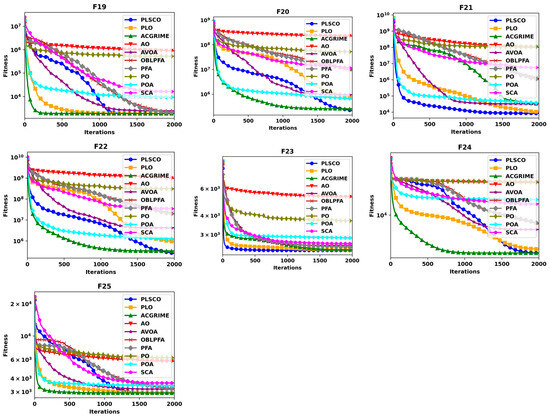

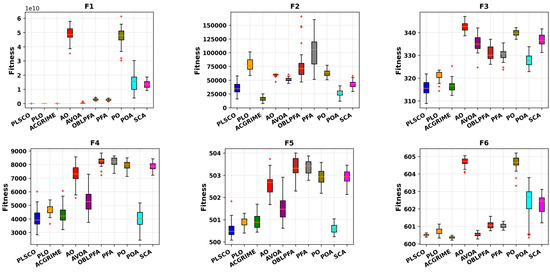

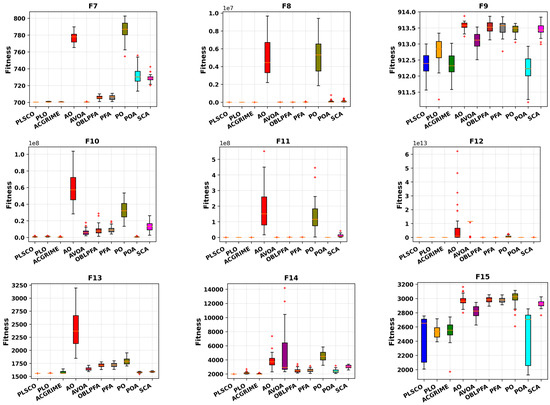

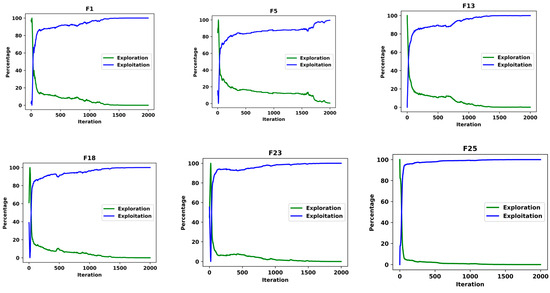

4.1.3. Convergence and Box Plot Analysis

Figure 7, Figure 8, Figure 9 and Figure 10 compare PLSCO against nine state-of-the-art algorithms using convergence curves (Figure 7 and Figure 8) and box plots (Figure 9 and Figure 10) on the CEC2015 and CEC2020 benchmark functions. The convergence curves show how the average best fitness of each algorithm evolves as the number of iterations increases, while the box plots show the distribution of the final optimal solutions attained by each algorithm. The box plots display the minimum, maximum, lower quartile (Q1), median, upper quartile (Q3), and any outliers, which are marked as red “+” symbols. Whereas the convergence curves evaluate how effectively each algorithm approaches optimal solutions over the iterations, the box plots highlight the stability and consistency of the results, with smaller boxes indicating greater robustness. The convergence curves show that PLSCO converges rapidly in the early stages of the optimization process. Unlike other algorithms, whose curves tend to flatten as they stagnate in local optima, PLSCO continues to explore high-quality regions of the search space. Notably, PLSCO converges faster on all functions except F1 (one of the two unimodal functions, F1–F2), underscoring its effective exploitation capabilities. For the multimodal functions (F3–F5), PLSCO achieves higher accuracy than the other optimizers, particularly on F3 and F4. On the hybrid functions (F6–F8), PLSCO converges strongly on F6 and F8, with results comparable to those of other advanced algorithms, indicating its adaptability to diverse problem characteristics.
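The box-plot elements listed above can be computed as in the following sketch. It assumes the common convention (the default in both MATLAB’s `boxplot` and Matplotlib) in which whiskers extend to the most extreme points within 1.5 × IQR of the quartiles and points beyond them are drawn as outliers. Quartiles are taken with NumPy’s default linear interpolation, which can differ slightly from MATLAB’s method. `box_stats` and the sample values are hypothetical.

```python
import numpy as np


def box_stats(values, whis=1.5):
    """Quartiles, whisker ends, and outliers for one algorithm's final results."""
    v = np.asarray(values, dtype=float)
    q1, med, q3 = np.percentile(v, [25, 50, 75])
    iqr = q3 - q1                                 # interquartile range (box height)
    lo, hi = q1 - whis * iqr, q3 + whis * iqr     # whisker limits
    inside = v[(v >= lo) & (v <= hi)]
    return {
        "q1": q1, "median": med, "q3": q3,
        "whisker_low": inside.min(),              # plotted "minimum"
        "whisker_high": inside.max(),             # plotted "maximum"
        "outliers": v[(v < lo) | (v > hi)],       # drawn as red "+" markers
    }


# Hypothetical final errors from ten runs, one of which diverged.
stats = box_stats([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
```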

Figure 7.

Convergence Curves of PLSCO and Compared Algorithms on CEC2015 30 dimension.

Figure 8.

Convergence Curves of PLSCO and Compared Algorithms on CEC2020 30 dimension.

Figure 9.

Box Plots of PLSCO and Compared Algorithms on CEC2015 30 dimension.

Figure 10.

Box Plots of PLSCO and Compared Algorithms on CEC2020 30 dimension.

In composite functions F11 and F15, PLSCO surpasses advanced algorithms such as ACGRIME, OBLPFA, and PLO by maintaining an optimal balance between exploration and exploitation. The findings depicted in Figure 7 further validate PLSCO’s ability to achieve superior convergence accuracy compared to other techniques. This highlights the effectiveness of the enhancements introduced in PLSCO, which not only improve the trade-off between exploration and exploitation but also enhance its ability to avoid local optima and approach the global optimum with greater precision.

The integration of the SSA and CSO strategies within PLSCO is central to its performance. The competitive learning framework fosters diversity by dynamically classifying particles as winners and losers, encouraging both local refinement and global exploration, while SSA operators keep exploring the solution space, maintaining diversity and preventing premature convergence. These mechanisms also enable PLSCO to perform well on the shifted, rotated, and hybrid functions of the CEC2020 benchmark, particularly F20, F21, F22, F23, and F25, as shown in Figure 8. The box plots in Figure 9 and Figure 10 further confirm PLSCO’s stability, with tight distributions and few outliers.

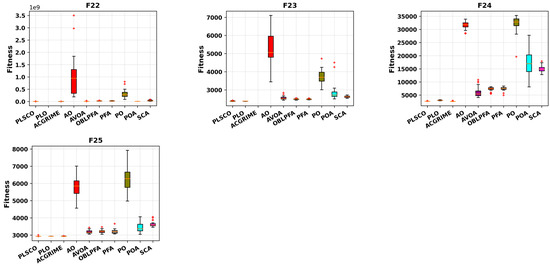

4.1.4. Diversity Analysis

To examine the enhancements that PLSCO introduces over the standard PLO, a diversity analysis is conducted to evaluate how the population is distributed throughout the optimization process [55]. Population diversity is measured as the average distance between individuals at each iteration. A decline in diversity indicates that the population is aggregating, which increases the risk of premature convergence to suboptimal solutions. The population radius, defined as the maximum distance between any two individuals, is expressed in Equation (32) [56].

where $N$ is the population size, $D$ is the dimensionality of the problem space, and $x_{ij}$ represents the position of the $i$-th individual in the $j$-th dimension. From this, the diversity of the population is calculated as expressed in Equation (33).

where $\bar{x}_j$ denotes the center of the population in the $j$-th dimension. Figure 11 illustrates the diversity trajectory for selected benchmark functions, specifically F4, F5, and F15 from CEC2015 and F18, F23, and F25 from CEC2020. The results reveal that during the early stages of the optimization process, PLSCO exhibits significantly higher diversity than the standard PLO. This higher diversity is attributed to the SSA operators within PLSCO, which keep the search agents dispersed and thus ensure a broad exploration of the search space. Consequently, the risk of premature convergence to suboptimal solutions is reduced, allowing more effective global exploration.
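Because Equations (32) and (33) are not reproduced here, the sketch below assumes the widely used normalized form: the radius $R = \max_{i,k} \sqrt{\sum_{j=1}^{D} (x_{ij} - x_{kj})^2}$ and the diversity $\mathrm{Div} = \frac{1}{N R} \sum_{i=1}^{N} \sqrt{\sum_{j=1}^{D} (x_{ij} - \bar{x}_j)^2}$, where $\bar{x}_j$ is the mean position in dimension $j$. The function name is hypothetical, and the exact normalization used in [56] may differ.

```python
import numpy as np


def population_diversity(pop):
    """Mean distance to the population center, normalized by the population radius.

    pop: (N, D) array of positions. Returns a value in [0, 1]; 0 means collapsed.
    """
    pop = np.asarray(pop, dtype=float)
    diffs = pop[:, None, :] - pop[None, :, :]            # pairwise differences, (N, N, D)
    radius = np.sqrt((diffs ** 2).sum(axis=-1)).max()    # largest pairwise distance
    if radius == 0.0:
        return 0.0                                       # all individuals coincide
    center = pop.mean(axis=0)                            # population center
    return float(np.linalg.norm(pop - center, axis=1).mean() / radius)


print(population_diversity([[0.0, 0.0], [2.0, 0.0]]))   # 0.5
```

Calling this function on the population stored at each iteration yields a diversity trajectory of the kind plotted in Figure 11. The pairwise step uses $O(N^2 D)$ memory, which is negligible for a population of 30.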

Figure 11.

Diversity Plots of PLSCO and PLO.

In contrast, PLSCO demonstrates reduced population diversity in the later stages of the search compared to PLO. This indicates that the search agents in PLSCO have transitioned from global exploration to focused exploitation, progressively converging toward the global optimum. This behavior is driven by the CSO strategy, which dynamically refines the solutions by guiding the population to optimal regions while avoiding local optima. The interplay between SSA and CSO ensures a balanced and adaptive search process. SSA maintains diversity during the initial phases, enabling PLSCO to escape local optima and explore diverse regions of the solution space. CSO, on the other hand, enhances exploitation in the later stages, concentrating the search around high-potential areas and ensuring convergence to near-optimal solutions. This dynamic balance, as evidenced by the diversity trajectory analysis, underscores PLSCO’s superiority over PLO, enabling it to achieve higher optimization performance, robustness, and stability across a wide range of benchmark functions.

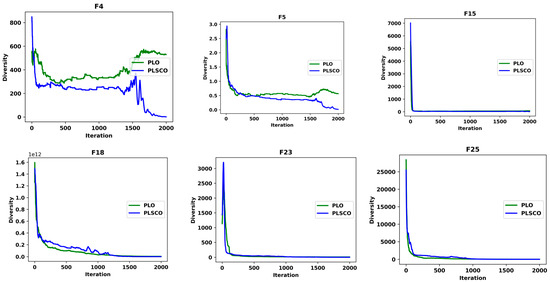

4.1.5. Exploration vs. Exploitation

This section analyzes PLSCO’s local and global search capabilities, focusing on how it balances exploration and exploitation, the two foundational components of optimization algorithms. The exploration and exploitation proportions are computed and plotted over the iterations to assess how effectively PLSCO adapts its search strategy. They are calculated using Equations (34)–(37) [57].

Here, $x_{ij}^{t}$ represents the $j$-th element of the $i$-th agent in the population during the $t$-th iteration, and $\mathrm{median}(\cdot)$ computes the median of the $j$-th dimension across the population, reflecting its central tendency. $\mathrm{Div}^{t}$ measures the average deviation of the population’s dimensions from their medians at the $t$-th iteration, while $\mathrm{Div}_{\max}$ is the maximum observed value of $\mathrm{Div}^{t}$. The exploration and exploitation proportions, denoted ERP and ETP, respectively, are expressed as percentages indicating the relative focus of the algorithm during each phase of the search.
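The exploration and exploitation percentages can be computed from stored population snapshots as sketched below. This assumes the dimension-wise diversity measure commonly used for this analysis, $\mathrm{Div}_j = \frac{1}{N}\sum_{i=1}^{N} \lvert \mathrm{median}(x_j) - x_{ij} \rvert$ averaged over the $D$ dimensions, with $\mathrm{ERP} = 100\,\mathrm{Div}/\mathrm{Div}_{\max}$ and $\mathrm{ETP} = 100\,\lvert \mathrm{Div} - \mathrm{Div}_{\max} \rvert / \mathrm{Div}_{\max}$. The function names are hypothetical.

```python
import numpy as np


def median_diversity(pop):
    """Div: mean absolute deviation from the per-dimension median, averaged over dimensions."""
    pop = np.asarray(pop, dtype=float)
    med = np.median(pop, axis=0)               # median of each dimension across agents
    return float(np.mean(np.abs(pop - med)))   # (1/D) sum_j (1/N) sum_i |med_j - x_ij|


def exploration_exploitation(history):
    """Return ERP and ETP (in %) for a sequence of (N, D) population snapshots."""
    div = np.array([median_diversity(p) for p in history])
    div_max = div.max()
    erp = 100.0 * div / div_max
    etp = 100.0 * np.abs(div - div_max) / div_max
    return erp, etp


# Usage: a hypothetical population that contracts over three iterations.
rng = np.random.default_rng(0)
base = rng.uniform(-1.0, 1.0, size=(30, 5))
erp, etp = exploration_exploitation([base * s for s in (1.0, 0.5, 0.1)])
```

Since $\mathrm{Div}^{t} \le \mathrm{Div}_{\max}$ at every iteration, ERP and ETP always sum to 100%, which is why the two curves in such plots mirror each other.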

Figure 12 visualizes the exploration and exploitation rates of PLSCO for selected benchmark functions from CEC2015 and CEC2020. The curves show that PLSCO adaptively balances these two phases throughout the optimization process. In the initial stages, ERP dominates, reflecting the algorithm’s emphasis on global exploration of diverse regions of the solution space. As the search progresses, ERP gradually decreases and ETP becomes dominant, signaling a shift toward refined exploitation of promising regions. This adaptive behavior is attributed to the integration of SSA and CSO within PLSCO. The SSA operators enhance global exploration in the early iterations by maintaining population diversity and preventing premature convergence, while the CSO strategy guides the search toward high-potential regions by refining local exploitation, particularly in the later stages. Together, these mechanisms let PLSCO move smoothly between exploration and exploitation and navigate complex optimization landscapes effectively.

Figure 12.

Exploration vs. Exploitation.

The findings depicted in Figure 12 highlight PLSCO’s ability to maintain a dynamic equilibrium between exploration and exploitation, steering agents toward the global optimum. By emphasizing exploration in the initial phases and transitioning toward exploitation as the search progresses, PLSCO demonstrates its capability to adaptively balance these phases, resulting in efficient convergence and superior optimization performance. This methodological refinement positions PLSCO as a robust and versatile optimization algorithm capable of solving high-dimensional and complex problems with precision.

4.1.6. Non-Parametric Analysis

To evaluate the experimental results rigorously and mitigate the effect of randomness, the outcomes of 30 independent runs of each of the 10 algorithms on the 25 test functions were analyzed using the Wilcoxon rank-sum test at a significance level of 0.05. This test identifies significant differences between the results of PLSCO and each comparison algorithm: a p-value greater than 0.05 indicates no significant difference, whereas a p-value less than or equal to 0.05 indicates a statistically significant difference. The pairwise Wilcoxon rank-sum test results for PLSCO and the other algorithms are summarized in Table 5, where “-” denotes cases with no results available for pairwise comparison.

Table 5.

Friedman and Wilcoxon Non-Parametric Analysis Results.

The results on the CEC2015 and CEC2020 benchmark functions show that PLSCO differs significantly from most of the evaluated algorithms, particularly the standard PLO. This underscores the enhancements introduced in PLSCO, including the integration of SSA and CSO strategies. On some test functions, algorithms such as ACGRIME and POA performed as well as or better than PLSCO, and the corresponding p-values exceed 0.05, indicating no statistically significant difference from PLSCO. However, PLSCO achieved the best results on most benchmark functions, reaffirming its robustness and effectiveness across diverse optimization problems. The Friedman mean rank test was also conducted to further validate the relative efficiency of the algorithms. PLSCO obtained the best mean rank on both the CEC2015 and CEC2020 test suites, making it the most efficient of the compared methods. This performance can be attributed to the synergistic integration of the SSA and CSO mechanisms: SSA enhances global exploration by maintaining diversity and preventing premature convergence, while CSO fosters local refinement, guiding the population toward optimal solutions in the later stages. These results position PLSCO as a versatile and effective optimization algorithm across a wide range of challenging optimization landscapes.
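Both tests can be sketched without external statistics libraries, as shown below. `ranksum_test` uses the large-sample normal approximation of the Wilcoxon rank-sum statistic without tie or continuity correction, which is also what `scipy.stats.ranksums` computes. `friedman_mean_ranks` ranks the algorithms on each function (rank 1 = best, i.e., lowest mean error) and averages the ranks, which is how Friedman mean ranks are usually reported. All names and sample data are hypothetical.

```python
import math

import numpy as np


def average_ranks(values):
    """1-based ranks of `values`; tied values share their average rank."""
    v = np.asarray(values, dtype=float)
    order = np.argsort(v, kind="mergesort")
    ranks = np.empty(len(v))
    i = 0
    while i < len(v):
        j = i
        while j + 1 < len(v) and v[order[j + 1]] == v[order[i]]:
            j += 1                                     # extend the block of ties
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def ranksum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation); returns (z, p)."""
    n1, n2 = len(x), len(y)
    r = average_ranks(np.concatenate([x, y]))
    s = r[:n1].sum()                                   # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (s - mean) / sd
    return z, math.erfc(abs(z) / math.sqrt(2.0))      # p = 2 * (1 - Phi(|z|))


def friedman_mean_ranks(results):
    """results: (n_functions, n_algorithms) mean errors; lower error gets rank 1."""
    rows = np.asarray(results, dtype=float)
    return np.vstack([average_ranks(row) for row in rows]).mean(axis=0)


# Usage with hypothetical final errors from 30 runs of two algorithms.
z, p = ranksum_test(np.arange(30.0), np.arange(30.0) + 100.0)
mean_ranks = friedman_mean_ranks([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [2.0, 1.0, 3.0]])
```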

4.2. Predictive Maintenance Prediction Using ELM-PLSCO Model

4.2.1. Dataset

In this study, the AI4I 2020 Predictive Maintenance Dataset from the UCI Machine Learning Repository was utilized [58]. This dataset was selected because predictive maintenance data from real-world industrial environments are difficult to acquire [59]. Although synthetic, it is designed to reflect the predictive maintenance scenarios encountered in industrial applications. The dataset comprises 10,000 data points with seven distinct features and a machine failure label, making it a useful resource for evaluating predictive maintenance models [60]. The features of the dataset are as follows:

- Type: This categorical variable classifies products into three categories based on performance levels: high (H, representing 20% of all products), medium (M, representing 30% of all products), and low (L, representing 50% of all products).

- Air Temperature: Measured in kelvin (K), this feature is normalized to a standard deviation of 2 K around 300 K, reflecting the air temperature during operation.

- Process Temperature: Also measured in kelvin (K), this feature is normalized to a standard deviation of 1 K and represents the temperature within the machine’s operational process.

- Rotational Speed: Expressed in revolutions per minute (rpm), this value is calculated based on a machine power output of 2860 W, indicating the tool’s operational speed.

- Torque: Measured in Newton meters (N m), torque values are distributed around 40 N m with a standard deviation of 10 N m, with no negative values recorded.

- Tool Wear Time: This feature, measured in minutes, captures the cumulative operational time of the tool, representing its wear level over time.

- Failure type: Machine failures are categorized into five distinct modes:

  - i. Tool Wear Failure: The tool fails or is replaced at a randomly selected tool wear time between 200 and 240 min of operation.

  - ii. Heat Dissipation Failure: Failure occurs when the difference between the air temperature and the process temperature falls below 8.6 K and the rotational speed is below 1380 rpm.

  - iii. Power Failure: Failure occurs when the power, computed as the product of torque and rotational speed (converted to rad/s), exceeds 9000 W or drops below 3500 W.

  - iv. Overstrain Failure: Failure occurs when the product of torque and tool wear exceeds the threshold for the product type: 11,000 min N m for L products, 12,000 min N m for M products, and 13,000 min N m for H products.

  - v. Random Failure: Each process has a 0.1% probability of failing, irrespective of its process parameters.
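The three deterministic failure rules above can be expressed as a short check, as in the sketch below. It assumes that power is computed as torque times angular speed (rpm converted to rad/s) and that the temperature difference is taken as an absolute value. Tool wear failure and random failure are stochastic and are omitted. The function name and the sample readings are hypothetical.

```python
import math

# Overstrain thresholds in min N m per product type (from the list above).
OSF_LIMIT = {"L": 11_000, "M": 12_000, "H": 13_000}


def rule_based_failures(ptype, air_k, process_k, rpm, torque_nm, wear_min):
    """Flag heat dissipation (HDF), power (PWF), and overstrain (OSF) failures."""
    power_w = torque_nm * rpm * 2.0 * math.pi / 60.0     # N m * rad/s = W
    return {
        "HDF": abs(process_k - air_k) < 8.6 and rpm < 1380,
        "PWF": power_w < 3500.0 or power_w > 9000.0,
        "OSF": torque_nm * wear_min > OSF_LIMIT[ptype],
    }


# Usage with two hypothetical readings.
cool_slow = rule_based_failures("L", 300.0, 308.0, 1300, 40.0, 100)
high_load = rule_based_failures("H", 300.0, 310.5, 1500, 70.0, 200)
```

In the first reading, the 8 K temperature gap and 1300 rpm trigger a heat dissipation failure, while the power (about 5445 W) stays within limits. In the second, about 10,996 W exceeds the power limit and 14,000 min N m exceeds the H-type overstrain threshold.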

These characteristics make the dataset a suitable benchmark for evaluating predictive maintenance models. By integrating PLSCO with ELM, the weight and bias parameters of ELM are optimized to improve its accuracy in identifying machine faults. The detailed feature set and failure labels make it possible to test whether PLSCO’s balance of exploration and exploitation lets the optimized ELM learn and generalize across diverse failure scenarios. The failure type is used as the target variable, and the remaining features are used as input variables. Although the AI4I 2020 dataset provides a well-structured platform for evaluating predictive maintenance models, it does not fully replicate the noise levels, class imbalance, and heterogeneous data distributions of real industrial environments. It was selected because few publicly accessible PdM datasets offer comprehensive feature sets and accurate labels. Nonetheless, the proposed ELM-PLSCO framework incorporates mechanisms such as competitive learning and adaptive parameter adjustment that are intended to help it cope with the variability and noise of more complex datasets.

4.2.2. Evaluation Metrics

The evaluation criteria used to assess the performance of the PLSCO-enhanced ELM are summarized in Table 6, providing a comprehensive framework for evaluating the model’s predictive accuracy and reliability. In addition to the metrics in the table, the area under the receiver operating characteristic curve (ROC-AUC) is reported. Derived from the ROC curve, it reflects the classifier’s ability to discriminate between classes. ROC-AUC scores are generally interpreted as follows: 0.5–0.6 indicates poor or failed classification, 0.6–0.7 weak or marginal class separability, 0.7–0.8 fair performance, 0.8–0.9 good discrimination, and above 0.9 excellent classification ability [61].
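For a binary fault/no-fault view, ROC-AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it from that pairwise definition and maps the result onto the interpretation bands above. The band boundaries (each lower bound inclusive) and the label for scores below 0.5 are assumptions, and the multi-class failure-type target would need a one-vs-rest average. Function names are hypothetical.

```python
import numpy as np


def roc_auc(y_true, scores):
    """Pairwise AUC: P(score of a positive > score of a negative), ties count 0.5."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y], s[~y]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((wins + 0.5 * ties) / (len(pos) * len(neg)))


def interpret_auc(auc):
    """Map an AUC onto the qualitative bands quoted in the text."""
    for lower, label in [(0.9, "excellent"), (0.8, "good"), (0.7, "fair"),
                         (0.6, "weak"), (0.5, "poor")]:
        if auc >= lower:
            return label
    return "below chance"


auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc, interpret_auc(auc))   # 0.75 fair
```

The pairwise comparison uses memory proportional to the number of positive-negative pairs, which is acceptable for a test set of a few thousand samples; a sort-based implementation scales better for larger data.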

Table 6.

Evaluation Metrics.

These metrics quantify how accurately and reliably the optimized ELM identifies machine faults in the PdM dataset, and thus allow the effect of PLSCO’s optimization of ELM’s weights and biases to be assessed rigorously.
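Table 6 is not reproduced here. Assuming it uses the standard binary definitions with a fault as the positive class, the main metrics follow from the confusion-matrix counts as sketched below. The counts in the usage line are hypothetical, not results from this study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics; a zero denominator yields 0.0."""
    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)             # sensitivity / true positive rate
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),  # true negative rate
        "f1": ratio(2 * precision * recall, precision + recall),
    }


m = binary_metrics(tp=80, fp=20, tn=880, fn=20)
```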

4.2.3. Prediction Results and Discussion

In this section, the parameters of all optimization algorithms are kept as specified in Table 2. For all optimization algorithms, the number of iterations is set to 100, the population size is fixed at 30, and the search range for the ELM weights and biases is [−10,10]. The AI4I 2020 dataset was partitioned into training and testing sets using a randomized 70:30 split. To make the evaluation robust to the variability introduced by random data partitioning, the metrics in Table 7 and Table 8 report the average, standard deviation, and best performance over 30 independent runs, providing a statistically reliable measure of predictive accuracy and stability. The number of hidden neurons in ELM was fixed at 15 for all models. This choice aligns with existing applications of ELM, where similar configurations have shown a favorable trade-off between model complexity and generalization while mitigating overfitting [62]. Because the central focus of this work is the impact of PLSCO on the optimization of ELM’s weights and biases, the number of neurons is kept constant so that observed performance differences stem from the optimization mechanism rather than from architectural variability. The results show that PLSCO substantially improves the ability of ELM to identify machine faults compared with the other nature-inspired optimization techniques. The performance of the algorithms was assessed using several metrics on both the training and testing datasets, as shown in Table 7 and Table 8. PLSCO consistently outperformed its counterparts, achieving the highest average accuracy (0.95682 for training and 0.95465 for testing) and recall (0.86951 for training and 0.86587 for testing), alongside superior F1 score, precision, and specificity. This performance underscores the algorithm’s robustness and effectiveness in optimizing the ELM model for PdM tasks.
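The evaluation protocol can be sketched as repeated random 70:30 hold-out splits. This sketch assumes that a fresh, non-stratified split is drawn for each of the 30 runs; the text does not state whether the split was stratified. `train_and_score` is a hypothetical callback that would wrap ELM-PLSCO training and return a test metric, and the majority-class scorer below is only a toy stand-in.

```python
import numpy as np


def repeated_holdout(X, y, train_and_score, runs=30, test_frac=0.3, seed=0):
    """Evaluate over `runs` random train/test splits; return mean, std, best test score."""
    rng = np.random.default_rng(seed)
    n_test = int(round(test_frac * len(y)))
    scores = []
    for _ in range(runs):
        perm = rng.permutation(len(y))                 # new random split each run
        test, train = perm[:n_test], perm[n_test:]
        scores.append(train_and_score(X[train], y[train], X[test], y[test]))
    scores = np.asarray(scores)
    return scores.mean(), scores.std(), scores.max()   # std with ddof=0


def majority_baseline(X_tr, y_tr, X_te, y_te):
    """Toy stand-in scorer: predict the most frequent training class."""
    return float(np.mean(y_te == np.bincount(y_tr).argmax()))


X = np.zeros((1000, 6))
y = (np.arange(1000) % 10 == 0).astype(int)            # 10% hypothetical faults
mean_acc, std_acc, best_acc = repeated_holdout(X, y, majority_baseline)
```

The majority-class baseline is a useful sanity check on imbalanced fault data, because its accuracy equals the share of non-fault samples and any useful model must exceed it.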

Table 7.

Training Results of ELM-PLSCO and Compared Optimizers on Predictive Maintenance Predictions.

Table 8.

Test Results of ELM-PLSCO and Compared Optimizers on Predictive Maintenance Predictions.

Compared with other algorithms, such as ACGRIME, AO, AVOA, and POA, PLSCO showed a clear advantage in accuracy; as seen in Table 8, its test accuracy exceeds that of all competing algorithms. A closer look at the metrics shows that, although most algorithms achieved high specificity, several of them did so at the expense of precision and F1 score. PLSCO, in contrast, achieved the highest specificity (0.97409 for training and 0.97280 for testing) while maintaining balanced and superior precision and F1 score, reflecting its ability to minimize false positives while accurately identifying true machine faults. This balance is crucial for predictive maintenance, where both overestimating and underestimating machine faults can lead to operational inefficiencies or unexpected downtime.

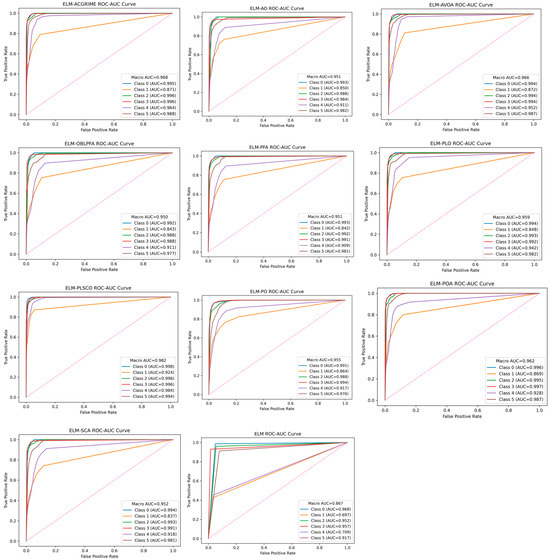

The consistency of PLSCO’s performance is further reflected in its low standard deviations across all metrics. PLSCO also achieved the best average ROC-AUC, with a test score of 0.97224, indicating that it discriminates effectively between the classes of the predicted value. However, the baseline ELM showed even lower standard deviations for all metrics. This is attributed to its closed-form training: once the input weights and biases are drawn, the output weights are computed directly with the Moore–Penrose pseudo-inverse, without an iterative search. In contrast, the performance of optimization-based methods such as PLSCO is additionally influenced by randomness in population initialization and search trajectories. This makes ELM exceptionally stable across repeated trials. Stability of this kind matters most with noisy or imbalanced data, where other algorithms may struggle to remain reliable, and the results indicate that PLSCO combines good stability with higher predictive performance, making it a robust choice for real-world predictive maintenance tasks. Its clear margin over the baseline ELM underscores the value of integrating advanced optimization techniques into machine learning models.

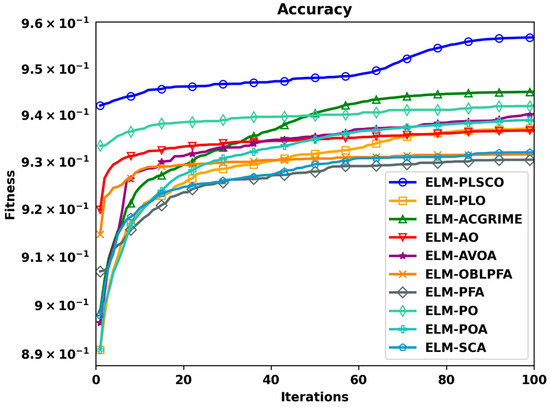

Furthermore, the convergence behavior depicted in Figure 13 shows that PLSCO reaches higher fitness and stabilizes faster than the other optimization methods. Its fitness (accuracy) values remain the highest throughout the iterations, demonstrating its ability to adapt quickly toward good solutions, whereas algorithms such as AO and AVOA converge more slowly and still end below the fitness level reached by PLSCO. PLSCO’s convergence curve rises smoothly and steadily, indicating that it navigates the solution space without stalling in local optima. This is a critical advantage in predictive maintenance applications, where timely and accurate predictions are essential for minimizing downtime and optimizing operational efficiency, and it suggests that PLSCO may also be applicable to other complex optimization scenarios.

Figure 13.

Accuracy Plot of ELM-PLSCO and Compared Models.
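Convergence plots of this kind commonly record, at each iteration, the best fitness found so far, which makes the curves monotonically non-decreasing for a maximization objective such as accuracy. The sketch below illustrates that bookkeeping only, assuming this convention. Plain random sampling stands in for one optimizer iteration (it is not PLSCO), and the quadratic `fitness` function is a hypothetical stand-in for ELM validation accuracy.

```python
import numpy as np

def fitness(x):
    # Hypothetical stand-in for ELM validation accuracy: peaks at 1.0 when every x_i = 0.3
    return 1.0 - np.sum((x - 0.3) ** 2)

def run_with_curve(n_iter=50, pop_size=20, dim=4, seed=0):
    rng = np.random.default_rng(seed)
    best = -np.inf
    curve = []
    for _ in range(n_iter):
        # Random sampling stands in for one optimizer iteration (NOT PLSCO)
        pop = rng.uniform(-1.0, 1.0, size=(pop_size, dim))
        scores = np.array([fitness(p) for p in pop])
        best = max(best, scores.max())  # best fitness found so far
        curve.append(best)
    return np.array(curve)

curve = run_with_curve()
print(curve[0], curve[-1])
```

Because each point is a running maximum, a steeper early rise and a higher plateau, as reported for PLSCO, indicate that better solutions were found sooner.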

Overall, the results show the substantial benefit of using the PLSCO algorithm to optimize ELM for PdM prediction. Its accuracy, stability, and convergence speed make it an effective tool for machine fault prediction. The comparative analysis and convergence trends highlight its advantages over the other nature-inspired algorithms and support its adoption in industrial settings. These findings demonstrate the potential of integrating advanced nature-inspired optimization techniques into predictive maintenance frameworks for more reliable and efficient industrial applications.

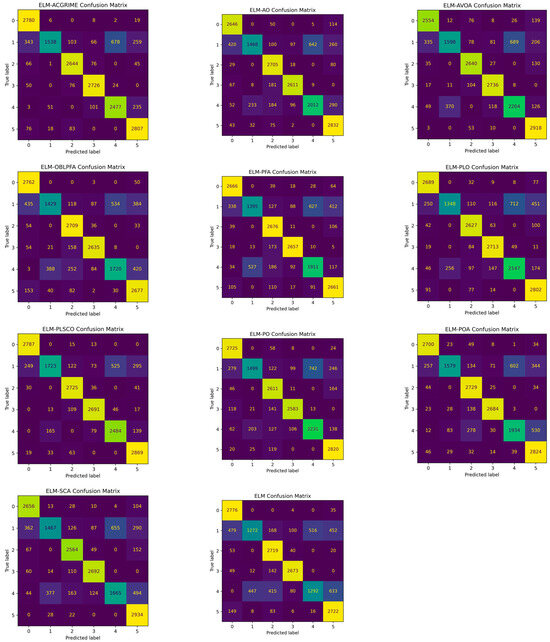

Figure 14 shows, for each model, the confusion matrix from the run with the best test accuracy out of 30 independent runs. ELM-PLSCO maintains a high true positive rate while minimizing misclassifications, indicating its suitability for predictive maintenance tasks where accurate fault detection is critical. The corresponding ROC–AUC plots for the same best runs, shown in Figure 15, illustrate that ELM-PLSCO attains a higher area under the curve than the other models, reflecting strong discriminative capability across decision thresholds. This improvement can plausibly be attributed to the combination of CSO's competitive pairing, PLO's intensified search by the winners, and SSA's diversification of the losers, which together help the optimizer balance exploration and exploitation. These findings suggest that the hybridization strategy not only accelerates convergence but also strengthens classification stability.

Figure 14.

Confusion Matrix of ELM-PLSCO and Compared Models.

Figure 15.

ROC–AUC plots of ELM-PLSCO and Compared Models.
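The two evaluation tools above can be computed directly from a model's outputs. The sketch below is a minimal NumPy-only illustration, assuming a binary failure label (1 = machine failure), continuous failure scores, and a 0.5 decision threshold; the labels and scores are hypothetical. The AUC is computed in its rank (Mann-Whitney) form, as the probability that a randomly chosen failure scores higher than a randomly chosen non-failure, with ties counted as one half.

```python
import numpy as np

def confusion_matrix_binary(y_true, y_pred):
    """Rows = actual class (0, 1); columns = predicted class (0, 1)."""
    cm = np.zeros((2, 2), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def roc_auc(y_true, scores):
    """P(random positive outscores random negative), ties counted as 0.5."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]  # all positive-negative score pairs
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (len(pos) * len(neg))

# Hypothetical labels and failure scores
y_true = np.array([0, 0, 0, 0, 1, 1, 0, 1])
scores = np.array([0.1, 0.3, 0.2, 0.6, 0.8, 0.7, 0.4, 0.35])
y_pred = (scores >= 0.5).astype(int)  # threshold-dependent hard predictions

cm = confusion_matrix_binary(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(cm)   # [[4 1]
            #  [1 2]]
print(auc)  # 13 of 15 positive-negative pairs ranked correctly
```

The confusion matrix depends on the chosen threshold, whereas the AUC summarizes ranking quality over all thresholds. This is why Figures 14 and 15 give complementary views of the same runs.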

Table 9 reports the computation times (in seconds) of the ELM-based hybrid optimization models, which differ notably. The proposed ELM-PLSCO required a moderate 287.21 s. This is higher than ELM-PLO, which uses the unhybridized PLO optimizer, but remains competitive given ELM-PLSCO's improved exploration–exploitation balance and convergence characteristics. Although ELM-SCA and ELM-AVOA had the lowest computation times among the optimized models, their shorter runtimes came at the cost of lower predictive accuracy in the earlier evaluations. Conversely, ELM-PO and ELM-POA exhibited substantially longer runtimes, likely due to their more complex update strategies and less efficient convergence. The plain ELM, at only 3.37 s, remains the fastest. However, it has no optimizer to tune its parameters, which leads to inferior predictive performance. Overall, ELM-PLSCO offers a favorable trade-off between computational cost and prediction accuracy, making it suitable for predictive maintenance applications where both accuracy and timeliness are critical.

Table 9.

Computation Time Comparison of ELM-PLSCO and other Models.

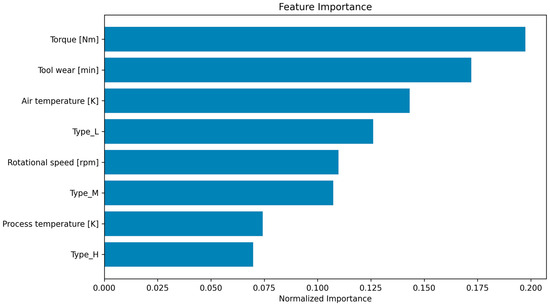
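To put this trade-off in proportion, the two runtimes quoted for Table 9 imply that ELM-PLSCO takes roughly 85 times as long as the plain ELM:

```latex
\frac{t_{\text{ELM-PLSCO}}}{t_{\text{ELM}}} = \frac{287.21\ \text{s}}{3.37\ \text{s}} \approx 85.2
```

This overhead is the price of the optimizer's repeated model evaluations, and it is the cost that the accuracy gain must justify.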

4.2.4. Feature Importance Score Analysis

The feature importance analysis provides insight into the predictive power of each variable in the dataset for machine failure prediction. Importance was evaluated using permutation importance, which measures the decrease in model performance when the values of one feature are randomly shuffled while all other features remain unchanged. A larger drop indicates that the model relies more heavily on that feature. As shown in Figure 16, torque is the most important feature, with the highest importance score. This suggests that torque plays a pivotal role in determining machine health, as variations in torque can directly reflect changes in mechanical load and operational efficiency. Tool wear and air temperature follow closely, and both contribute substantially to the prediction model. The high importance of tool wear is expected given its direct relationship to equipment degradation, while the importance of air temperature highlights the influence of environmental conditions on machine performance. Type_L (the low-quality product variant) shows a comparable level of importance. This may reflect characteristics or operating conditions associated with these products, such as lower durability thresholds or greater susceptibility to wear. Rotational speed and Type_M make moderate contributions, reflecting their role in characterizing operating conditions.

Figure 16.

Feature Importance Score of ELM-PLSCO Model.
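The permutation procedure described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation. It assumes accuracy as the performance metric, 10 shuffles per feature, and a fixed, hypothetical `predict` function standing in for a trained classifier. The toy label depends strongly on column 0, weakly on column 1, and not at all on column 2.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link to y but keeps its marginal distribution
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances

# Hypothetical data: label driven strongly by column 0, weakly by column 1, not by column 2
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = (2.0 * X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

def predict(X):
    # Stand-in for a trained classifier's prediction step
    return (2.0 * X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

imp = permutation_importance(predict, X, y)
print(imp.round(3))
```

A feature the model ignores, such as column 2 here, scores exactly zero. Correlated features can share or mask each other's importance, which is one reason a feature may rank low despite being physically relevant.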

In contrast, process temperature and Type_H show lower importance, indicating that they contribute less to the model's predictions. Their weaker influence may stem from weaker correlations with the target variable or from redundancy with other features. Combined with the performance metrics of the improved ELM-PLSCO model, the feature importance analysis indicates that the model's predictions rest on domain-relevant variables. The prominence of torque and tool wear is consistent with domain knowledge and supports the practical relevance of the proposed approach. Moreover, the feature ranking points to specific variables for targeted monitoring and intervention in real-world maintenance practice.

The feature importance findings also complement the accuracy trends observed in the fitness plots of the optimization algorithms. Specifically, the superior performance of ELM-PLSCO may be attributable, in part, to its ability to better capture the relationships among these influential features. This link between feature importance and algorithmic performance demonstrates the potential of ELM-PLSCO to uncover meaningful patterns in complex datasets and to advance predictive maintenance practice.

5. Conclusions

This study proposes an advanced PdM model that integrates ELM with an enhanced optimization algorithm, termed PLSCO. PLSCO combines mechanisms from CSO and SSA within the PLO framework. This hybridization addresses the critical challenge of balancing exploration and exploitation during optimization. A novel competitive division mechanism enables adaptive learning, enhancing both local refinement and global search and thereby substantially improving the overall optimization performance of the algorithm. The ELM-PLSCO model was evaluated on the AI4I 2020 Predictive Maintenance dataset, where it achieved higher predictive accuracy than the baseline ELM and conventional optimization-based approaches, together with stable performance across runs. Feature importance analysis revealed that torque and tool wear play a pivotal role in fault prediction, while product type and other operational parameters provide insight into product-specific vulnerabilities. These findings highlight the model's ability to extract meaningful and actionable knowledge from industrial data.
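The competitive division mechanism is described here only at a high level. The sketch below illustrates the general idea under explicit assumptions and is not the authors' implementation. Individuals are randomly paired in CSO style, and the fitter member of each pair becomes a winner (maximization assumed). Winners take a small Gaussian step that merely stands in for the PLO update, which is not reproduced here. Losers take a Salp Swarm Algorithm leader-style step around the current best solution, with the usual shrinking coefficient c1. The function names, the 0.05 step scale, and the bounds are hypothetical choices.

```python
import numpy as np

def competitive_division(fit, rng):
    """Randomly pair individuals; return (winner, loser) index arrays (maximization)."""
    idx = rng.permutation(len(fit))
    half = len(fit) // 2  # with an odd population, one individual is left unpaired
    a, b = idx[:half], idx[half:2 * half]
    a_wins = fit[a] >= fit[b]
    return np.where(a_wins, a, b), np.where(a_wins, b, a)

def one_iteration(pop, fit_fn, t, t_max, lb, ub, rng):
    fit = np.array([fit_fn(x) for x in pop])
    best = pop[np.argmax(fit)].copy()
    winners, losers = competitive_division(fit, rng)
    new_pop = pop.copy()
    # Winners intensify: small step around their own position (placeholder, NOT the PLO update)
    new_pop[winners] += rng.normal(scale=0.05 * (ub - lb), size=new_pop[winners].shape)
    # Losers diversify: SSA leader-style move around the best, coefficient c1 shrinks over time
    c1 = 2.0 * np.exp(-(4.0 * t / t_max) ** 2)
    c2 = rng.random(new_pop[losers].shape)
    c3 = rng.random(new_pop[losers].shape)
    step = c1 * ((ub - lb) * c2 + lb)
    new_pop[losers] = np.where(c3 >= 0.5, best + step, best - step)
    return np.clip(new_pop, lb, ub), winners, losers

def sphere_fitness(x):
    # Hypothetical fitness to maximize (best at the origin)
    return -np.sum(x ** 2)

rng = np.random.default_rng(0)
lb, ub = -1.0, 1.0
pop = rng.uniform(lb, ub, size=(10, 3))
fit = np.array([sphere_fitness(x) for x in pop])
new_pop, winners, losers = one_iteration(pop, sphere_fitness, t=1, t_max=50, lb=lb, ub=ub, rng=rng)
print(winners, losers)
```

Splitting the population this way lets each update rule act only on the individuals it suits. Fitter individuals refine locally, while weaker ones are relocated to widen the search, which is the exploration–exploitation balance the conclusion refers to.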

Despite these promising results, this study has several limitations. First, the evaluation used only a single publicly available dataset, AI4I 2020, because industry-reported PdM datasets are rarely available in the public domain. Industrial predictive maintenance data are often proprietary and protected by confidentiality agreements, which restricts access for research purposes. While the AI4I 2020 dataset offers a well-structured benchmark for controlled experimentation, it may not fully capture the complexities of real-world industrial environments, such as high noise levels, severe class imbalance, and heterogeneous operating conditions. Second, although PLSCO exhibits strong optimization performance, it requires considerably more computation time than the baseline ELM, so its computational cost in large-scale or real-time applications needs further investigation. The scalability and efficiency of the algorithm under dynamic operating conditions also warrant deeper analysis.

Future research will address these limitations by extending the proposed framework to dynamic, real-time environments and by evaluating its performance on multiple PdM datasets obtained through industrial collaborations. This will enable a more comprehensive assessment of the model's ability to generalize across diverse industrial domains. Future work will also incorporate domain-specific constraints and real-world operational dynamics into the optimization process to further improve the robustness and practical applicability of the model. Further experiments will include comparisons with other high-performance hybrid optimizers and emerging metaheuristic–machine learning integration techniques. The framework will also be systematically evaluated with respect to the number of hidden neurons and different dataset partitioning strategies to identify optimal configurations and improve model reliability.

Author Contributions

A.R.M.A.B.: Methodology, Validation; O.S.O.: Writing (original draft preparation), Supervision; T.O.: Resources, Supervision, Editing; O.A.: Conceptualization, Writing, Methodology, Validation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study did not involve human participants, animals, or data requiring ethical review.

Informed Consent Statement

No human subjects were involved, and as such, no informed consent was required for this study.

Data Availability Statement

The data obtained through the experiments are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Nunes, P.; Santos, J.; Rocha, E. Challenges in predictive maintenance – A review. CIRP J. Manuf. Sci. Technol. 2023, 40, 53–67.

- Ahmad, R.; Kamaruddin, S. An overview of time-based and condition-based maintenance in industrial application. Comput. Ind. Eng. 2012, 63, 135–149.

- Bouabdallaoui, Y.; Lafhaj, Z.; Yim, P.; Ducoulombier, L.; Bennadji, B. Predictive Maintenance in Building Facilities: A Machine Learning-Based Approach. Sensors 2021, 21, 1044.

- Abidi, M.H.; Mohammed, M.K.; Alkhalefah, H. Predictive Maintenance Planning for Industry 4.0 Using Machine Learning for Sustainable Manufacturing. Sustainability 2022, 14, 3387.

- A Survey of Predictive Maintenance: Systems, Purposes and Approaches. Available online: https://www.researchgate.net/publication/337971929_A_Survey_of_Predictive_Maintenance_Systems_Purposes_and_Approaches (accessed on 21 January 2025).

- Hamasha, M.; Bani-Irshid, A.H.; Almashaqbeh, S.; Shwaheen, G.; Qadri, L.; Shbool, M.; Muathen, D.; Ababneh, M.; Harfoush, S.; Albedoor, Q.; et al. Strategical selection of maintenance type under different conditions. Sci. Rep. 2023, 13, 15560.

- Carvalho, T.P.; Soares, F.A.A.M.N.; Vita, R.; Francisco, R.d.P.; Basto, J.P.; Alcalá, S.G.S. A systematic literature review of machine learning methods applied to predictive maintenance. Comput. Ind. Eng. 2019, 137, 106024.

- Theissler, A.; Pérez-Velázquez, J.; Kettelgerdes, M.; Elger, G. Predictive maintenance enabled by machine learning: Use cases and challenges in the automotive industry. Reliab. Eng. Syst. Saf. 2021, 215, 107864.

- Vithi, N.L.; Chibaya, C. Advancements in Predictive Maintenance: A Bibliometric Review of Diagnostic Models Using Machine Learning Techniques. Analytics 2024, 3, 493–507.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Schölkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Zhang, Z. Introduction to machine learning: K-nearest neighbors. Ann. Transl. Med. 2016, 4, 218.

- Grossberg, S. Recurrent Neural Networks. Scholarpedia 2013, 8, 1888.

- Costa, V.G.; Pedreira, C.E. Recent advances in decision trees: An updated survey. Artif. Intell. Rev. 2023, 56, 4765–4800.

- Friedman, N.; Geiger, D.; Goldszmidt, M. Bayesian Network Classifiers. Mach. Learn. 1997, 29, 131–163.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Touzani, S.; Granderson, J.; Fernandes, S. Gradient boosting machine for modeling the energy consumption of commercial buildings. Energy Build. 2018, 158, 1533–1543.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Yang, F.-J. An Implementation of Naive Bayes Classifier. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018; pp. 301–306.

- Hartigan, J.A.; Wong, M.A. Algorithm AS 136: A K-Means Clustering Algorithm. J. R. Stat. Soc. Ser. C (Appl. Stat.) 1979, 28, 100–108.

- Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2012, 42, 513–529.

- Wang, J.; Lu, S.; Wang, S.-H.; Zhang, Y.-D. A review on extreme learning machine. Multimed. Tools Appl. 2022, 81, 41611–41660.